Abstract

Research has focused on increasing the treatment integrity of school-based interventions by utilizing performance feedback. The purpose of this study was to extend this literature by increasing special education teachers' treatment integrity for implementing antecedent and consequence procedures in ongoing behavior support plans. A multiple baseline design across teacher–student dyads (in two classrooms) was used to evaluate the effects of performance feedback on the percentage of antecedent and consequence components implemented correctly during 1-hr observation sessions. Performance feedback was provided every other week for 8 to 22 weeks after a stable or decreasing trend was observed in the percentage of antecedent or consequence components implemented correctly. Results suggested that performance feedback increased the treatment integrity of antecedent components for 4 of 5 teachers and of consequence components for all 5 teachers. These results were maintained after feedback ended for all teachers across antecedent and consequence components. Teachers rated performance feedback favorably with respect to its purpose, procedures, and outcome, as indicated by a social validity rating measure.

Keywords: treatment integrity, performance feedback, behavior support plans, special education

Treatment integrity, the implementation of an intervention as intended, is a topic of interest among researchers and change agents (Gresham, Gansle, & Noell, 1993; Watson, Sterling, & McDade, 1997). This interest in treatment integrity is accompanied by school systems' recent focus on accountability following the enactment of No Child Left Behind (U.S. Department of Education, 2001). Consequently, researchers have attempted to identify parsimonious, effective, and time-efficient strategies to ensure the integrity of school-based interventions (Cossairt, Hall, & Hopkins, 1973; Gillat & Sulzer-Azaroff, 1994; Mortenson & Witt, 1998; Noell, Duhon, Gatti, & Connell, 2002; Noell, Witt, Gilbertson, Ranier, & Freeland, 1997; Witt, Noell, LaFleur, & Mortenson, 1997). One successful intervention strategy for enhancing treatment integrity is performance feedback (Mortenson & Witt, 1998; Noell et al., 1997, 2000, 2002; Witt et al., 1997). In the literature, performance feedback has encompassed several components, including (a) review of data, (b) praise for correct implementation, (c) corrective feedback, and (d) addressing questions or comments.

Performance feedback has been used in regular education settings to improve the implementation of academic interventions (Mortenson & Witt, 1998; Noell et al., 2000; Witt et al., 1997), peer tutoring (Noell et al., 2000), the use of contingent praise (Jones, Wickstrom, & Friman, 1997; Martens, Hiralall, & Bradley, 1997), and behavior-management interventions (Noell et al., 2002). Noell et al. (2002) examined the integrity with which 4 general education teachers employed interventions to decrease out-of-seat behavior and talking out in 8 elementary school students. Integrity was measured with permanent products, which consisted of data recorded either by the students (self-report) or by the teachers. This study illustrates the effects of performance feedback on one aspect of behavior interventions: data collection. In other situations, it may be important to investigate the integrity with which specific components of complex individualized behavior support plans are implemented.

Two other aspects of plan implementation that deserve consideration are (a) the antecedent interventions implemented to decrease the likelihood of problem behavior and (b) the teacher's response to students' target behaviors. Teachers may not implement both types of procedures with equal integrity, because antecedent and consequence procedures may require different skills. Implementation of antecedent procedures requires teachers to plan for and prevent the occurrence of behavior (Luiselli & Cameron, 1998), whereas consequence procedures require teachers to impose contingencies after problem behaviors occur (Kern, Choutka, & Sokol, 2002).

The frequency and structure of performance feedback have been a focus of several studies. For example, research has demonstrated that weekly feedback (Mortenson & Witt, 1998) leads to increases in treatment integrity and may be more practical for supervisors, clinicians, and consultants than daily performance feedback. The schedule and immediacy of performance feedback may also influence the maintenance of treatment integrity. For example, in many studies performance feedback has consisted of reviewing data from the previous day (Noell et al., 1997, 2002; Witt et al., 1997) or week (Mortenson & Witt, 1998), rather than providing feedback on the same day.

The purpose of the present investigation was to extend the current research on treatment integrity by (a) directly examining the effects of performance feedback on special education teachers' implementation of antecedent and consequence procedures of ongoing individualized behavior support plans, (b) administering performance feedback every other week and during the same day as the observation, and (c) assessing short-term maintenance effects of performance feedback.

Method

Participants and Setting

The present study was conducted in a private school for students with acquired brain injury who exhibited significant behavior problems. All students were male and ranged in age from 10 to 19 years. Three of the students had nontraumatic acquired brain injuries, and 2 had been diagnosed with a traumatic brain injury. The students observed comprised approximately 10% of the school's total population.

Observational data were collected in two special education classrooms on a total of 5 teacher–student dyads. Two dyads (i.e., Mr. Canton and Seth; Mr. Martin and Philip) were identified from Classroom 1, and 3 dyads (i.e., Ms. Lowell and Brian; Ms. Malden and Jason; Mr. Mack and Darrin) were identified from Classroom 2. All teachers participating in the study had earned a bachelor's degree and were enrolled in a master's level program in special education. Teachers' experience working in this environment ranged between 6 and 30 months.

Materials

Behavior support plans

Individualized behavior support plans were previously created for each student and were ongoing at the time of the investigation. The plans had been in place for an average of 4 months at the time of the study. These plans consisted of individualized multicomponent interventions that prescribed both antecedent and consequence procedures for students' targeted problem behaviors. Teachers were expected to employ these behavior support plans under specific conditions (e.g., activities, times of day, and contingent on student behaviors). Six behaviors were targeted for Seth (tantrums, perseverative speech, inappropriate speech, inappropriate social behavior, teasing, and noncompliance), Philip (major aggression, minor aggression, inappropriate speech, inappropriate touching, public exposure, and instigation), and Brian (aggression, destruction, peer instigation, inappropriate speech, inappropriate sexual behavior, and noncompliance). Four behaviors were targeted for Jason (tantrums, inappropriate speech, inappropriate social behavior, and invasion of space), and six behaviors were targeted for Darrin (physical aggression, property destruction, wandering, inappropriate verbalizations, mimicking, and noncompliance).

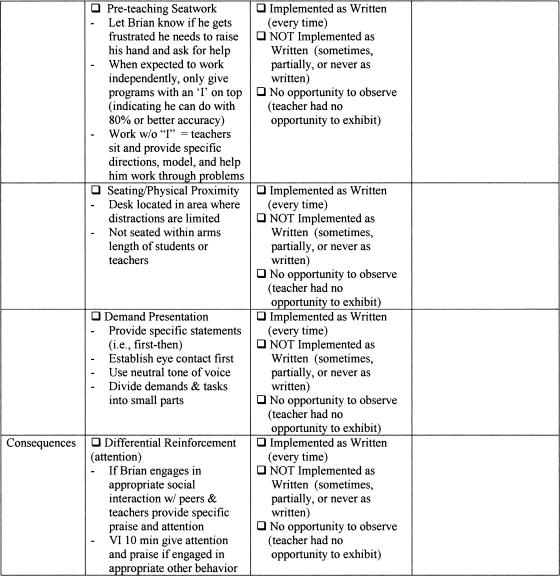

Integrity data sheet

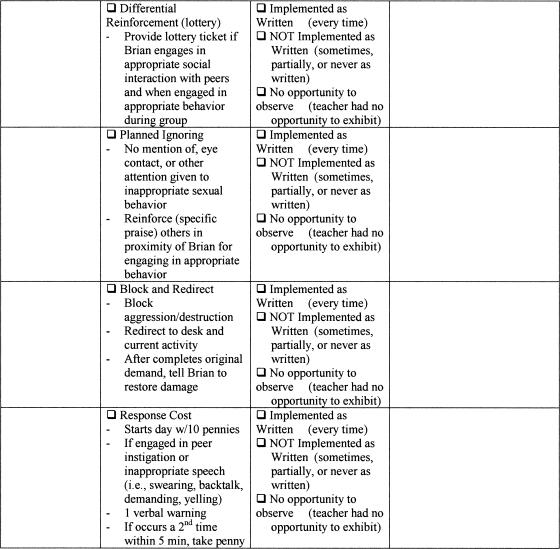

The integrity data sheet was three pages long and included (a) the type of procedure (i.e., antecedent or consequence) prescribed for that particular student, (b) an operational definition of each component of the intervention (copied directly from the student's behavior support plan), (c) observer ratings of the teacher's implementation of each component (see below), and (d) space for the observer to record comments or examples. Each component consisted of specific behaviors that teachers were instructed to engage in, either because of the activity a student was required to perform or in response to the student's behavior. For example, when Jason was asked to change from a preferred to a nonpreferred activity, Ms. Malden was instructed to provide him with three transition warnings (i.e., at 2 min, 1 min, and 30 s). Another antecedent component specified that Mr. Mack explain all expectations to Darrin using first-then statements for every activity in which Darrin engaged. Consequence components included reinforcement, such as social attention (e.g., pat on the shoulder, praise, conversation) delivered contingent on appropriate social behavior (e.g., sharing, complimenting peers), and planned ignoring following inappropriate speech. Table 1 lists all the antecedent and consequence components that were prescribed in the students' behavior support plans.

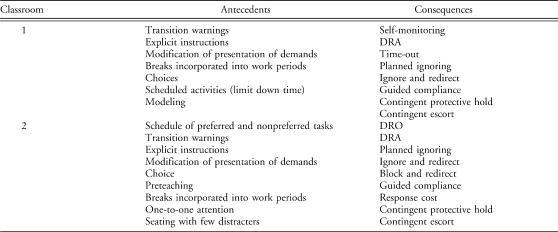

Table 1. Behavior Support Plan Components Classified as Antecedents and Consequences.

Note. DRO = differential reinforcement of other behavior; DRA = differential reinforcement of alternative behavior.

The integrity data sheet divided each plan into 10 to 13 individualized components (see the Appendix for Brian's integrity data sheet; other participants' data sheets are available from the authors on request). One of three levels of implementation integrity was scored under the implementation rating section of the integrity data sheet: (a) implemented as written (i.e., the entire component was implemented every time the target behavior occurred or when the situation required an antecedent component), (b) not implemented as written (i.e., sometimes implemented the entire component as written, implemented part of the component as written, or did not employ the component as written), and (c) no opportunity to observe (i.e., the target behavior did not occur, or the antecedent was not present). For example, Ms. Malden received a rating of implemented as written if she provided all three transition warnings every time Jason was required to change from a preferred to a nonpreferred activity during the observation period. However, she received a rating of not implemented as written if she provided the three warnings for only two of three opportunities that Jason had to change from a preferred to a nonpreferred activity or if she provided only two transition warnings. Mr. Mack earned a rating of implemented as written if he blocked Darrin from leaving the classroom, redirected him to the scheduled activity, and provided a warning that Darrin would lose a penny for every time that he attempted to elope from his classroom. If Mr. Mack did not carry out all parts of this component every time that Darrin attempted to elope from his classroom, he received a rating of not implemented as written. In the comments and examples section of the integrity data sheet, consultants could provide an example of how the teacher implemented the component.

Percentage of correct implementation was used as the measure of treatment integrity. It was calculated by dividing the number of plan components implemented as written by the total number of plan components that teachers had the opportunity to exhibit and multiplying by 100%. Across teacher–student dyads, teachers had the opportunity to exhibit an average of 61% (range, 50% to 90%) of the plan components in Classroom 1 and 57% (range, 40% to 77%) in Classroom 2. In no single observation period did teachers have the opportunity to exhibit all the components of the behavior support plan. Consultants also took notes on specific deviations from the written procedures.
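The scoring rule described above can be expressed as a short calculation. The following Python sketch is illustrative only: it assumes each component on the integrity data sheet receives one of the three ratings described earlier, and the `Rating` and `Component` types, the component labels, and the example session are hypothetical rather than drawn from any participant's data sheet. Components rated as having no opportunity to observe are excluded from the denominator, as the text specifies.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Rating(Enum):
    """The three implementation levels scored on the integrity data sheet."""
    AS_WRITTEN = "implemented as written"
    NOT_AS_WRITTEN = "not implemented as written"
    NO_OPPORTUNITY = "no opportunity to observe"


@dataclass
class Component:
    name: str      # hypothetical label for a plan component
    kind: str      # "antecedent" or "consequence"
    rating: Rating


def percent_correct(components: list[Component], kind: Optional[str] = None) -> Optional[float]:
    """Percentage of components implemented as written, out of the components
    the teacher had an opportunity to exhibit (optionally one procedure type).
    Returns None when there were no opportunities, because the ratio is undefined."""
    observed = [
        c for c in components
        if (kind is None or c.kind == kind) and c.rating is not Rating.NO_OPPORTUNITY
    ]
    if not observed:
        return None
    correct = sum(c.rating is Rating.AS_WRITTEN for c in observed)
    return 100.0 * correct / len(observed)


def percent_opportunity(components: list[Component]) -> float:
    """Percentage of all plan components the teacher had an opportunity to exhibit."""
    observed = sum(c.rating is not Rating.NO_OPPORTUNITY for c in components)
    return 100.0 * observed / len(components)


# Hypothetical observation session with seven plan components (not study data).
session = [
    Component("A1", "antecedent", Rating.AS_WRITTEN),
    Component("A2", "antecedent", Rating.AS_WRITTEN),
    Component("A3", "antecedent", Rating.NOT_AS_WRITTEN),
    Component("A4", "antecedent", Rating.NO_OPPORTUNITY),
    Component("C1", "consequence", Rating.AS_WRITTEN),
    Component("C2", "consequence", Rating.NOT_AS_WRITTEN),
    Component("C3", "consequence", Rating.NO_OPPORTUNITY),
]

print(round(percent_correct(session, "antecedent"), 1))   # 66.7
print(percent_correct(session, "consequence"))            # 50.0
print(round(percent_opportunity(session), 1))             # 71.4
```

Reporting antecedent and consequence percentages separately, as in Figures 1 and 2, amounts to calling the function once per procedure type for each session.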

Social validity

A 10-item questionnaire that examined the acceptability of the performance feedback and the corresponding integrity observations was administered to each teacher after performance feedback was terminated. Three items addressed the purpose of performance feedback, four items asked about the procedures of the observation and feedback sessions, and three items asked teachers to rate the outcomes of the feedback sessions (e.g., whether feedback helped increase the integrity of plan implementation; Schwartz & Baer, 1991). Respondents rated each item on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Teachers were instructed not to write their names on the questionnaires, and a box was placed in a common area for teachers to return the forms.
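As an illustration of how the questionnaire could be summarized, the Python sketch below computes mean ratings per item and per subscale across anonymous forms. The assignment of items 1 to 3 to purpose, 4 to 7 to procedures, and 8 to 10 to outcomes is an assumption (the item order is not reported), and the two example forms are hypothetical.

```python
from statistics import fmean

# Assumed item order: the text reports only how many items fall in each subscale.
SUBSCALES = {
    "purpose": range(0, 3),      # items 1-3
    "procedures": range(3, 7),   # items 4-7
    "outcomes": range(7, 10),    # items 8-10
}
N_ITEMS = 10


def check_form(form: list[int]) -> None:
    """Reject forms that do not contain ten ratings on the 1-5 Likert scale."""
    if len(form) != N_ITEMS or any(r not in range(1, 6) for r in form):
        raise ValueError("each form needs 10 ratings between 1 and 5")


def item_means(forms: list[list[int]]) -> list[float]:
    """Mean rating for each item across all returned forms."""
    for form in forms:
        check_form(form)
    return [fmean(form[i] for form in forms) for i in range(N_ITEMS)]


def subscale_means(forms: list[list[int]]) -> dict[str, float]:
    """Mean of the item means within each subscale."""
    means = item_means(forms)
    return {name: fmean(means[i] for i in items) for name, items in SUBSCALES.items()}


# Two hypothetical forms (not the study's data).
forms = [
    [5, 5, 5, 5, 5, 4, 5, 5, 5, 5],
    [5, 4, 5, 5, 4, 5, 5, 5, 5, 4],
]
print({k: round(v, 2) for k, v in subscale_means(forms).items()})
# {'purpose': 4.83, 'procedures': 4.75, 'outcomes': 4.83}
```

When every form answers every item, averaging the item means within a subscale gives the same result as averaging all ratings in that subscale.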

Training

All teachers had received both general and student-specific formal training in implementing behavior support plans before this investigation. After being hired at the school, teachers received 4 hr of training in basic principles of applied behavior analysis. Teachers also received ongoing in-service training on general behavior principles four times annually. One in-service session was conducted during the course of this project, and its topic (i.e., task analysis) was unrelated to these students' behavior support plans. Teachers received 2 weeks of training specific to each student's plan at the time the plan was developed. This training included reviewing the written plan with a consultant (i.e., one of the authors), modeling by the consultant in the classroom with the target student, prompting the teacher in training to employ the components as written, and immediate performance feedback from the consultant. Teachers were not trained to a standardized criterion; however, training continued until each teacher could verbally report every component of the plan and reported being able to implement it. In addition, consultants were present in the classrooms daily and provided informal feedback to teachers.

Procedure

Observation sessions

Observations were conducted on average every 2.1 weeks for each dyad (range, 1 to 3 weeks). Variations in the time between observation sessions reflected teachers' earned leave time and school breaks. Each observation was planned to be 60 min long; on five occasions, observations were shorter (range, 45 to 55 min). Session length and the time between observation sessions remained consistent throughout the baseline, intervention, and maintenance phases of data collection. As recommended by Gresham et al. (1993), we attempted to reduce reactivity by conducting observations on a variable-time schedule and by using consultants who routinely worked in the classrooms as primary observers. The consultant assigned to Classroom 1 spent 50% of his total time conducting observations, and 9% of his time in Classroom 1 was devoted to this project. The consultant assigned to Classroom 2 devoted 33% of her time to conducting observations, and 6% of her time in Classroom 2 was devoted to this project.

Baseline

Baseline consisted of observing each student–teacher dyad and completing the integrity data sheet without the teachers' knowledge of the observation. No feedback was provided following baseline observations. Teachers were not told the purpose of the observations during baseline. This was not unusual, because observations were a routine part of the experimenters' duties.

Intervention

Performance feedback was implemented after stable or decreasing performance in baseline was demonstrated by either the percentage of antecedent components or consequence components implemented as written. The first feedback session was provided to each teacher after the last baseline session to address inadequate plan implementation as soon as possible for the benefit of the student. On the same day as each observation, the experimenter spent an average of 12 min with the target teacher outside the classroom. At this time, components in the behavior support plan were reviewed, and feedback was provided on all the components that were observed. Feedback included providing praise for components followed as written and constructive feedback for those components that were followed sometimes or not at all. Constructive feedback consisted of reviewing the specific components observed and explaining how the component should have been implemented.

Performance feedback was terminated after improved performance had stabilized. Two maintenance sessions were conducted for Mr. Canton and Mr. Martin, three maintenance sessions were conducted for Ms. Lowell and Ms. Malden, and one maintenance session was conducted for Mr. Mack. Maintenance sessions were identical to baseline sessions. The first maintenance session occurred 5 weeks after the last feedback session. Each subsequent follow-up session occurred at 5-week intervals across dyads.

Experimental design

A concurrent multiple baseline design across teacher–student dyads was used within each classroom to evaluate the efficacy of performance feedback. Maintenance sessions were conducted to examine the short-term effects of the intervention after feedback was no longer provided.

Interobserver Agreement

Interobserver agreement data were collected during 20% of the sessions. A second observer was present and independently used the same treatment integrity form to observe the teachers directly. The second observer's ratings were compared with the primary observer's ratings on a component-by-component basis. Agreement was calculated by dividing the number of component agreements by the number of agreements plus disagreements and multiplying by 100%. Mean agreement across dyads was 95% (range, 91% to 100%).
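The component-by-component agreement calculation can be sketched as follows. This is a hypothetical illustration: the single-letter rating codes are invented for the example, and the sketch assumes that every component on the data sheet, including those both observers rated as having no opportunity to observe, counts toward agreements; the text does not say how such components were handled.

```python
from statistics import fmean

# Hypothetical codes: W = implemented as written, N = not implemented as written,
# O = no opportunity to observe.


def component_agreement(primary: list[str], secondary: list[str]) -> float:
    """Percentage agreement between two observers' ratings of the same plan
    components in one session: agreements / (agreements + disagreements) x 100."""
    if len(primary) != len(secondary) or not primary:
        raise ValueError("both observers must rate the same, nonempty set of components")
    agreements = sum(p == s for p, s in zip(primary, secondary))
    disagreements = len(primary) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Two hypothetical sessions with ten components each (not study data).
session_1 = component_agreement(list("WWNOWWNOWW"), list("WWNOWWNOWW"))  # all agree
session_2 = component_agreement(list("WWNOWWNOWW"), list("WWNOWNNOWN"))  # two disagreements
print(session_1, session_2, fmean([session_1, session_2]))  # 100.0 80.0 90.0
```

Per-session percentages can then be averaged across sessions or dyads. This rating-level index is less conservative than the deviation-level comparison described among the limitations in the Discussion.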

Results

Teacher Implementation of the Behavior Support Plan

Classroom 1

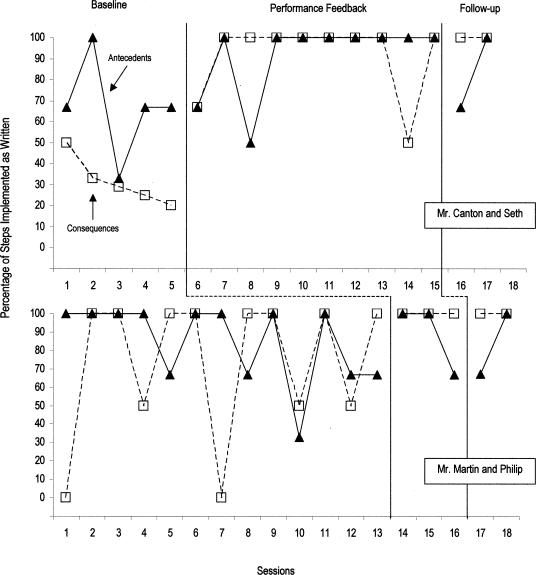

Figure 1 presents the percentage of antecedent and consequence components implemented as written (correctly implemented) for the 2 student–teacher dyads in Classroom 1. During baseline, a decreasing trend was evident in the percentage of consequence components that Mr. Canton implemented as written (M = 31%), whereas the percentage of antecedent components he implemented correctly was stable (M = 69%). Following performance feedback, there was an immediate increase in the percentage of consequence components correctly implemented (M = 92%) and a gradual increase in the percentage of antecedent components correctly implemented (M = 92%).

Figure 1. Percentage of antecedent and consequence components implemented as written across teacher–student dyads for Classroom 1.

During baseline, Mr. Martin's performance was variable for the correct implementation of antecedent and consequence components. Mr. Martin correctly implemented 100% (eight sessions), 50% (three sessions), or none (two sessions) of the consequence components during baseline, which makes these data difficult to interpret. The mean percentage of components correctly implemented during baseline was 85% for antecedents and 73% for consequences. Following performance feedback, Mr. Martin demonstrated 100% correct implementation across consequence components for three consecutive sessions (M = 100%). There was little change in Mr. Martin's correct implementation of antecedent components (M = 89%).
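As a check on the reported baseline mean for Mr. Martin's consequence components, the session counts above combine as a weighted mean:

```latex
\bar{x}_{\text{consequence}}
  = \frac{8(100\%) + 3(50\%) + 2(0\%)}{8 + 3 + 2}
  = \frac{950\%}{13}
  \approx 73\%
```

This agrees with the reported M = 73%, assuming that the 13 sessions listed make up all of Mr. Martin's baseline sessions.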

Classroom 2

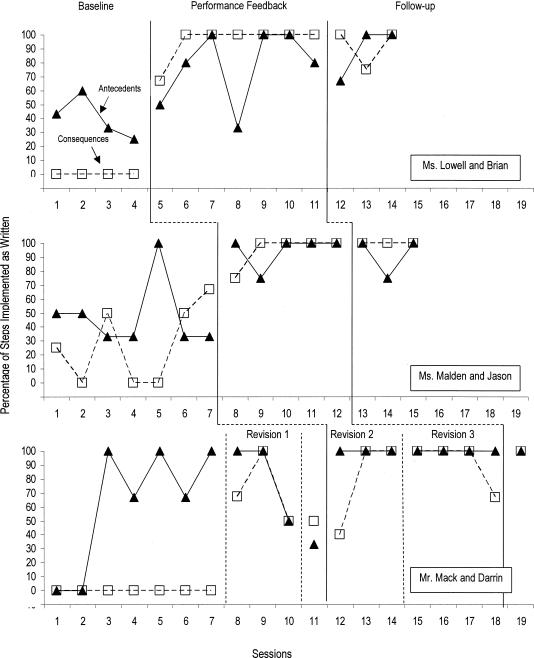

Figure 2 presents the percentage of antecedent and consequence components implemented as written for 3 student–teacher dyads in Classroom 2. During baseline, a decreasing trend was evident for the percentage of antecedent components correctly implemented by Ms. Lowell (M = 40%). Ms. Lowell did not correctly implement any consequence components during baseline (M = 0%). Following performance feedback, substantial improvements were demonstrated in the percentage of correctly implemented antecedent (M = 78%) and consequence components (M = 95%).

Figure 2. Percentage of antecedent and consequence components implemented as written across teacher–student dyads for Classroom 2.

During baseline, Ms. Malden's correct implementation of antecedent components was stable (M = 47%). Correct implementation of consequence components appeared to be increasing in baseline (M = 27%). Every attempt was made to have stable or decreasing trends in baseline prior to implementation of performance feedback. However, a clinical decision was made to employ performance feedback when the implementation of antecedent components was stable. Following performance feedback, correct implementation of antecedent (M = 95%) and consequence (M = 95%) components improved.

The sequence of baseline and performance feedback was varied for Mr. Mack and Darrin. During the initial baseline phase, Mr. Mack displayed stable performance for the implementation of antecedent components as written. Mr. Mack did not correctly implement any consequence components. Following the initial baseline, an increase in the frequency and intensity of Darrin's physical aggression required several revisions in his behavior support plan. Following Revision 1, there was an increase in the correct implementation of consequence components, and antecedent performance remained at baseline levels. Revision 2 encompassed both baseline and performance feedback. Performance feedback resulted in an increase in the percentage of antecedent and consequence components correctly implemented. Improvements in performance were maintained during Revision 3 across antecedent and consequence components.

Maintenance observations

Treatment integrity remained high during the follow-up phase for all teachers (Figures 1 and 2). For Mr. Canton, the percentages of antecedent (M = 83%) and consequence (M = 100%) components correctly implemented were maintained 5 and 10 weeks after performance feedback was terminated. For Mr. Martin, high levels of correct implementation were maintained across antecedent (M = 83%) and consequence (M = 100%) components 5 and 10 weeks after feedback. For Ms. Lowell, high percentages of correctly implemented antecedent (M = 89%) and consequence (M = 92%) components were maintained at 5, 10, and 15 weeks without performance feedback. Ms. Malden also implemented high percentages of antecedent (M = 92%) and consequence (M = 100%) components as written during three follow-up observations (i.e., 5, 10, and 15 weeks after intervention). Five weeks after performance feedback was terminated, Mr. Mack correctly implemented 100% of antecedent and consequence components.

Social Validity

Mean ratings for individual items across all teachers ranged from 4.5 to 5.0. Mean ratings of 4.8, 4.7, and 4.9 were obtained for the purpose of the feedback sessions, the intervention procedures, and the intervention outcomes, respectively, indicating that teachers strongly agreed with items addressing the purpose and procedures of performance feedback and its benefits for their skills and, in turn, for their students.

Discussion

The results revealed that accurate implementation of ongoing behavior support plans improved across the 5 student–teacher dyads following performance feedback. This research extends the literature by demonstrating the efficacy of performance feedback on the treatment integrity of individualized multicomponent behavior support plans in a special education setting. This study also showed that the effects of performance feedback were maintained for up to 15 weeks. In contrast to other studies (Mortenson & Witt, 1998; Noell et al., 1997, 2000; Witt et al., 1997), treatment integrity was assessed using direct observation, and performance feedback was provided every other week, rather than daily (Noell et al., 1997, 2000; Witt et al., 1997) or weekly (Mortenson & Witt, 1998), and on the same day that the observation occurred.

Performance feedback resulted in greater percentages of both antecedent and consequence components correctly implemented for 4 of 5 teachers. For the 5th teacher (Mr. Martin), performance feedback increased correct implementation of consequence components. These data also illustrate that during baseline, 4 of the 5 teachers correctly implemented more antecedent than consequence components, although implementation was variable in some cases and lower than desirable. In the absence of performance feedback, teachers may have implemented antecedent components more successfully because these interventions were similar across students. For example, in Classroom 2, 3 students had daily schedules in which preferred activities followed nonpreferred activities and choices were built into all tasks, 2 students needed demands to be presented in individualized ways, and 2 students needed transition warnings. As a result, these antecedent procedures may have become part of the daily routine. In addition, the complexity (e.g., various schedules and types of reinforcement) and number of consequence procedures may account for the lower treatment integrity of consequence components in baseline. We did not examine which consequence components were more likely to be employed correctly (e.g., positive vs. negative reinforcement); however, previous research has demonstrated that teachers tend to focus their attention on students' inappropriate behavior (Cooper, Thomson, & Baer, 1970). Future research might assess teachers' implementation of specific consequence components.

Differences in implementation of antecedent and consequence procedures evident in this study may have interesting implications for the types of interventions used to increase teachers' treatment integrity. These findings suggest that future studies should continue to examine the integrity of individual treatment components (Gresham et al., 1993). Analysis of whether treatment integrity is a function of teacher skill or motivational deficits is also a worthy topic for investigation. For example, exploring whether treatment integrity increases as a function of performance feedback, teachers' role in intervention planning, contingent reinforcement, training, or a combination of these may begin to address whether treatment integrity is a result of a skill or motivational deficit.

Despite its documented success, performance feedback has been criticized for several reasons: (a) results have not been maintained at high levels (Mortenson & Witt, 1998; Witt et al., 1997), (b) it may be time consuming (Mortenson & Witt, 1998), (c) it may lack acceptability among change agents such as teachers (Mortenson & Witt, 1998), and (d) its apparent effects may reflect reactivity to observers (Mortenson & Witt, 1998; Witt et al., 1997). To increase the sophistication of treatment-integrity interventions, and of performance feedback specifically, the current study included attempts to address these concerns.

Improvements in the integrity of behavior support plan implementation were maintained in the current study. This may be due to the length of time performance feedback was employed and the latency between sessions. Previous research implemented performance feedback for approximately 6 weeks (Mortenson & Witt, 1998; Noell et al., 2000), compared with 8 to 22 weeks in our study. Whereas other studies used daily (Noell et al., 1997, 2002; Witt et al., 1997) or weekly (Mortenson & Witt, 1998) performance feedback, we provided feedback every other week. Importantly, performance feedback was provided on the same days that direct observations occurred, so recent examples of teachers' behavior could be reviewed during feedback sessions. This schedule of performance feedback may promote short-term maintenance of treatment effects and is time efficient and practical for clinicians to implement. However, it is unlikely that these results would be maintained over the long term. We suspect that periodic collection of treatment integrity data and subsequent performance feedback are necessary for high levels of intervention integrity to persist.

The importance of investigating the social validity of performance feedback has evolved from suggestions (Mortenson & Witt, 1998) that feedback may serve as a negatively reinforcing event for some teachers. All of the teachers in the current study rated performance feedback favorably with respect to the purpose, procedures, and outcome; this is consistent with the findings of Noell et al. (2002). Assessing the acceptability of performance feedback provides a preliminary attempt to identify how teachers perceive treatment integrity interventions. Future researchers may consider experimentally analyzing the function that performance feedback serves for teachers, rather than relying on verbal report.

The current investigation has several limitations. First, treatment effects may have been confounded by reactivity to being observed. Consistent with recommendations by Gresham et al. (1993), we attempted to reduce reactivity by conducting observations on a variable-time schedule and by using consultants who had been assigned to these classrooms as primary observers; it was therefore common for teachers to see these consultants conducting observations in the classroom. Although we attempted to make these observations unobtrusive, teachers were aware during the intervention phase that they were being observed. Second, performance feedback was introduced when either antecedent or consequence components were stable or decreasing. As a result, experimental control may be weaker for Ms. Malden, whose correct implementation of consequence components appeared to be improving in baseline. Every attempt was made to obtain stable or decreasing trends in baseline before feedback, but a clinical decision was made to begin performance feedback when the implementation of antecedent components was stable. Third, interobserver agreement was based on consistency between consultants' ratings of each plan component; a more conservative assessment would have been to determine whether the consultants recorded the same type and number of deviations from each plan component relative to the number of opportunities the teacher had to implement it. Fourth, social validity was assessed through verbal report, which may be subject to rater bias given how frequently teachers worked with the consultants. Fifth, the functions of the individual components of performance feedback were not analyzed; for example, praise and corrective feedback may be differentially effective. Sixth, we categorized interventions as antecedent or consequence procedures. Although we used the available literature to make these determinations, some procedures (e.g., providing breaks from work and self-monitoring) could be categorized as either antecedent or consequence procedures. It may be fruitful to explore how interventions are classified as antecedents or consequences. Finally, the impact of increased treatment integrity on students' maladaptive behavior was not examined. Ultimately, interest in developing procedures to increase treatment integrity stems from its impact on treatment efficacy. Therefore, future studies should provide corresponding information on students' behavior.

In summary, the results of this study suggest that performance feedback is an effective and acceptable intervention for increasing the treatment integrity with which special education teachers implement antecedent and consequence components of an ongoing behavior support plan. Although this intervention was employed for longer than in previous performance feedback studies, feedback was provided for an average of 12 min every other week, which is both practical and time efficient. This study also introduced a method for using direct observation, rather than permanent products, to assess treatment integrity.

Appendix A

Total components in the behavior support plan: 11

Total plan components teachers had the opportunity to exhibit: __________

Antecedent plan components teachers had the opportunity to exhibit: __________

Antecedent plan components teachers did not have the opportunity to exhibit: __________

Antecedent plan components teachers had the opportunity to exhibit:

where implementation was as written: _____ %_________

where implementation was not as written: _____ %_________

Consequence plan components teachers had the opportunity to exhibit: __________

Consequence plan components teachers did not have the opportunity to exhibit: __________

Consequence plan components teachers had the opportunity to exhibit:

where implementation was as written: _____ %_________

where implementation was not as written: _____ %_________
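The summary form records, separately for antecedent and consequence components, how many components the teacher had an opportunity to exhibit and how many of those were implemented as written. The following is a minimal sketch of the corresponding percentages, assuming (as the layout of the form suggests, though the formula is not stated) that components with no opportunity are excluded from the denominator and that antecedent and consequence counts do not overlap. The class name and the session counts are hypothetical; only the 11-component total comes from the form.

```python
from dataclasses import dataclass

PLAN_COMPONENTS = 11  # total components in the behavior support plan (Appendix A)


@dataclass
class ComponentTally:
    """Session counts for one component type (antecedent or consequence)."""
    had_opportunity: int  # components the teacher had the opportunity to exhibit
    as_written: int       # of those, components implemented as written

    def __post_init__(self):
        if not 0 <= self.as_written <= self.had_opportunity:
            raise ValueError("as_written must be between 0 and had_opportunity")

    def percent_as_written(self):
        """Percentage implemented as written, or None if there was no opportunity."""
        if self.had_opportunity == 0:
            return None
        return 100 * self.as_written / self.had_opportunity

    def percent_not_as_written(self):
        """Percentage not implemented as written, or None if there was no opportunity."""
        if self.had_opportunity == 0:
            return None
        return 100 * (self.had_opportunity - self.as_written) / self.had_opportunity


# Hypothetical session: counts are invented for illustration.
antecedent = ComponentTally(had_opportunity=5, as_written=4)
consequence = ComponentTally(had_opportunity=4, as_written=3)

# Components with an opportunity cannot exceed the plan total.
total_opportunities = antecedent.had_opportunity + consequence.had_opportunity
assert total_opportunities <= PLAN_COMPONENTS

print(antecedent.percent_as_written(), antecedent.percent_not_as_written())    # 80.0 20.0
print(consequence.percent_as_written(), consequence.percent_not_as_written())  # 75.0 25.0
```

Returning `None` rather than 0% when no component of a type had an opportunity keeps sessions without opportunities from being read as implementation failures.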

Study Questions

What are the typical components of a performance feedback system?

How did the authors attempt to minimize observer reactivity?

Briefly summarize the effects observed during the baseline and feedback conditions.

Given that performance feedback contains a number of elements, what types of behavioral processes may have accounted for the observed results?

To what did the authors attribute higher levels of treatment integrity for antecedent versus consequence procedures during baseline?

What type of data, not collected in the study, might have strengthened the authors' conclusions about improvements in teacher performance?

The authors discussed treatment integrity failures as a function of deficits related to skills versus motivation. Why might feedback as an intervention be unable to distinguish between the two types of deficits?

What are some potential advantages and disadvantages of biweekly observation and performance feedback?

Questions prepared by Natalie Rolider and Sarah E. Bloom, University of Florida

References

- Cooper M.L, Thomson C.L, Baer D.M. The experimental modification of teacher attending behavior. Journal of Applied Behavior Analysis. 1970;3:153–157. doi: 10.1901/jaba.1970.3-153.

- Cossiart A, Hall R.V, Hopkins B.L. The effects of experimenter's instructions, feedback and praise on teacher praise and student attending behavior. Journal of Applied Behavior Analysis. 1973;6:89–100. doi: 10.1901/jaba.1973.6-89.

- Gillat A, Sulzer-Azaroff B. Promoting principals' managerial involvement in instructional improvement. Journal of Applied Behavior Analysis. 1994;27:115–129. doi: 10.1901/jaba.1994.27-115.

- Gresham F.M, Gansle K.A, Noell G.H. Treatment integrity in applied behavior analysis with children. Journal of Applied Behavior Analysis. 1993;26:257–263. doi: 10.1901/jaba.1993.26-257.

- Jones K.M, Wickstrom K.F, Friman P.C. The effects of observational feedback on treatment integrity in school-based behavioral consultation. School Psychology Quarterly. 1997;12:316–326.

- Kern L, Choutka C.M, Skol N.G. Assessment-based antecedent interventions used in natural settings to reduce challenging behavior: An analysis of the literature. Education & Treatment of Children. 2002;25:113–130.

- Luiselli J.K, Cameron M.J, editors. Antecedent control: Innovative approaches to behavioral support. Baltimore: Paul H. Brookes; 1998.

- Martens B.K, Hiralall A.S, Bradley T.A. A note to teacher: Improving student behavior through goal setting and feedback. School Psychology Quarterly. 1997;12:33–41.

- Mortenson B.P, Witt J.C. The use of weekly performance feedback to increase teacher implementation of a pre-referral academic intervention. School Psychology Review. 1998;27:613–627.

- Noell G.H, Duhon G.J, Gatti S.L, Connell J.E. Consultation, follow-up, and implementation of behavior management interventions in general education. School Psychology Review. 2002;31:217–234.

- Noell G.H, Witt J.C, Gilbertson D.N, Ranier D.D, Freeland J.T. Increasing teacher intervention implementation in general education settings through consultation and performance feedback. School Psychology Quarterly. 1997;12:77–88.

- Noell G.H, Witt J.C, LaFleur L.H, Mortenson B.P, Ranier D.D, LeVelle J. Increasing intervention implementation in general education following consultation: A comparison of two follow-up strategies. Journal of Applied Behavior Analysis. 2000;33:271–284. doi: 10.1901/jaba.2000.33-271.

- Schwartz I.S, Baer D.M. Social validity assessments: Is current practice state of the art? Journal of Applied Behavior Analysis. 1991;24:189–204. doi: 10.1901/jaba.1991.24-189.

- U.S. Department of Education. The No Child Left Behind Act. Washington, DC: Author; 2001.

- Watson T.S, Sterling H.M, McDade A. Demystifying behavioral consultation. School Psychology Review. 1997;26:467–474.

- Witt J.C, Noell G.H, LaFleur L.H, Mortenson B.P. Teacher use of interventions in general education settings: Measurement and analysis of the independent variable. Journal of Applied Behavior Analysis. 1997;30:693–696. doi: 10.1901/jaba.1997.30-693.