Abstract

EyeMap is a method for visualizing and classifying eye movement patterns using scanpaths, fixation heatmaps, and gridded Areas of Interest (AOIs). EyeMap combines the predictions of modality-specific machine learning and deep learning models through late fusion to produce interpretable gaze representations. By capturing the spatial, temporal, and regional aspects of gaze data, the method improves diagnostic interpretability and supports the detection of Parkinsonian symptoms. The three representations offer complementary perspectives on gaze behavior: spatial focus, temporal scan order, and the allocation of attention across regions of interest. To develop and validate the method, a dataset of gaze visualizations was created from structured visual tasks completed by patients with Parkinson's disease (PD) and healthy controls. EyeMap shows that vision-driven models may detect PD-specific gaze anomalies without manual feature engineering. All implementation steps, from data acquisition to model fusion, are described in full to enable reproducibility and adaptation to other gaze-based analysis contexts.

1. A structured method was developed to visualize eye-tracking data in three distinct formats
2. Classification outputs from separate gaze visualizations were combined using softmax-level fusion
3. A new eye-tracking dataset was generated to support method development and reproducibility

Keywords: Eye tracking, Gaze visualization, Classification, Human-computer interaction, Visual attention, Machine learning, Deep learning

Graphical abstract

Specifications table

| Subject area | Computer Science |
| More specific subject area | Eye-tracking, Computer Vision |
| Name of your method | EyeMap |
| Name and reference of original method | Akshay, S., Amudha, J., Narmada, N., Bhattacharya, A., Kamble, N., Pal, P.K. (2023). iAOI: An Eye Movement Based Deep Learning Model to Identify Areas of Interest. In: Morusupalli, R., Dandibhotla, T.S., Atluri, V.V., Windridge, D., Lingras, P., Komati, V.R. (eds) Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2023. Lecture Notes in Computer Science, vol 14078. Springer, Cham. https://doi.org/10.1007/978-3-031-36402-0_61 |
| Resource availability | None |

Background

Eye gaze data provide valuable insights into human visual attention, cognition, and behavior. However, turning raw inputs, such as coordinate-based gaze points or image sequences captured by tracking devices, into meaningful visualizations remains challenging. This paper introduces a structured methodology for processing image-based eye-tracking data and converting it into informative visual formats for further analysis. Eye movement visualization techniques have been widely studied, with early methods such as fixation maps, heatmaps, and gaze plots serving as foundational tools [[1], [2], [3]]. Fixation maps highlight the points where the viewer's gaze remains stationary, while heatmaps provide a color-coded representation of gaze intensity over a visual stimulus [4,16]. Gaze plots trace the sequence of eye movements, showing the path taken by an observer's gaze [9,10]. Recent advances integrate artificial intelligence (AI) and machine learning to improve the interpretation of gaze data. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) have been used to predict gaze patterns and automate gaze analysis [[5], [6], [7]]. AI-driven gaze clustering techniques improve the segmentation of eye-tracking data, making it easier to identify user intent and attention shifts [8]. Moreover, deep learning-based models such as iAOI offer promising improvements in identifying areas of interest in eye-tracking data [7]. Saliency-based visualization approaches further enrich gaze representations by embedding attention cues [11,15]. Despite these advances, challenges remain. One major issue is the visualization of multi-user gaze data, which can lead to ambiguous interpretations in collaborative settings [13]. In addition, handling large-scale gaze datasets efficiently while maintaining real-time performance remains a critical research area [12,14].
This approach is particularly useful for developers and researchers building systems that react to user gaze in real time [17], such as adaptive learning systems, attention-aware user interfaces [18], or virtual environments that mimic human interaction. It can also be applied in clinical diagnostics, where eye movements may serve as early indicators of neurological disease.

EyeMap Method Details

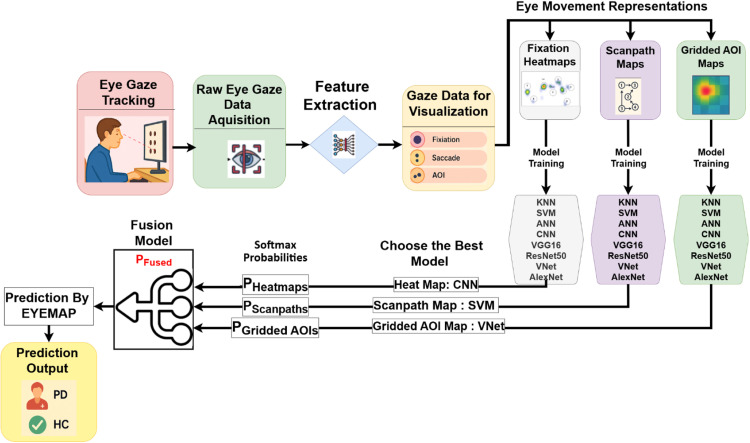

EyeMap (Fig. 1) is a reproducible method designed to transform raw eye-tracking data into three structured visual formats: fixation heatmaps, scanpath trajectories, and gridded Areas of Interest (AOIs). Each visualization type captures a different aspect of gaze behavior, allowing complementary classification using separate models. The final output is obtained by applying softmax-level late fusion across predictions from each visual stream.

Fig. 1.

EyeMap: Graphical abstract.

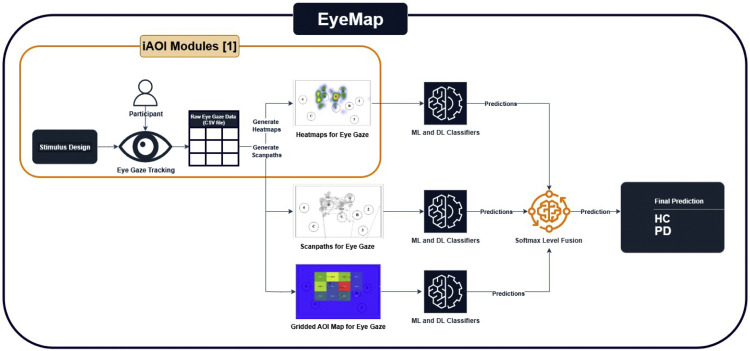

Fig. 2 details the EyeMap fusion architecture, which combines several gaze-based visualisations to improve discrimination between healthy controls (HC) and patients with Parkinson's disease (PD). To elicit distinct patterns of visual attention, participants first perform a visual search task on a set of structured stimuli (Stimuli 1, 2, and 3). The method is inspired by and extends the previously published iAOI framework [1] (Fig. 3), which introduced the generation of fixation heatmaps and AOI-based features for classifying visual attention patterns. EyeMap builds on this foundation by adding further gaze visualization types, namely scanpath trajectories and structured gridded AOIs, and by fusing model outputs to make classification more robust. This extension yields a broader representation of gaze behavior that integrates spatial, temporal, and regional characteristics.

Fig. 2.

Detailed EyeMap architecture.

Fig. 3.

Architecture diagram of the EyeMap method showing incorporation of heatmap generation from the previously published iAOI method. EyeMap extends the pipeline with scanpath and AOI-based streams and combines predictions using softmax-level fusion.

Raw eye movement data, such as fixations, saccades, and gaze trajectories, are recorded with an eye-tracking device. Three visualisation modes are then generated from these data: heatmaps, scanpaths, and gridded Areas of Interest (AOIs). Each modality is processed separately by a set of machine learning (ML) and deep learning (DL) models tailored to its input properties. Each model outputs a class prediction (PD or HC) and is evaluated with accuracy, precision, recall, and F1-score.

To exploit the strengths of each modality, the framework aggregates model outputs through late fusion at the softmax probability level. Because the final decision draws on both spatial and temporal gaze information, this step increases robustness and reduces reliance on any single visual representation. The fused decision output yields a more accurate and dependable prediction of whether the subject shows Parkinsonian symptoms or healthy control behaviour, which supports the use of EyeMap as a clinically feasible, non-invasive screening tool.

Participants

The experiments used data from 28 patients with PD and 12 healthy controls. The mean age of the PD group was 55.06 ± 7.81 years, with subgroup means ranging approximately from 55 to 60 years. Healthy control participants had a mean age of 56.00 ± 4.24 years, closely matching the PD group and reducing age-related bias in model training.

Materials and tools

- Eye-tracking device: SensoMotoric Instruments (SMI) iView X Hi-Speed 1250
- Sampling rate: 1250 Hz
- Accuracy: 0.5° of visual angle
- Calibration mode: 5-point calibration
- Software: Python 3.9, OpenCV 4.5, Matplotlib 3.4, Seaborn 0.11, NumPy 1.20
- Data format: CSV files with timestamps, fixation duration, saccades, and gaze coordinates

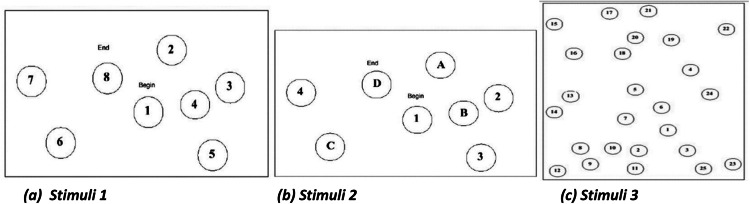

Task and stimuli

Participants performed structured visual search tasks on three stimulus images. The stimuli consisted of trail-making sequences of numbers and letters designed to elicit controlled, goal-directed eye movements, and were adapted from previously validated stimuli [17]. As shown in Figs. 4(a), 4(b), and 4(c), each image presents a trail-making task in which the participant must trace the items in the prescribed order.

Fig. 4.

Eye-tracking image stimuli used in the experiment: (a) Numerical tracing stimulus with numbers to be followed in a prescribed sequential order, (b) Alphabetical tracing stimulus with letters arranged for sequential tracing, and (c) A complex numerical tracing stimulus with a higher density and irregular spatial distribution of numbers, designed to increase task difficulty.

Input Data Representations: Heatmaps, AOIs, and Scanpaths

In the EyeMap framework, three categories of input images were derived from eye-tracking data and subsequently used for classification: (i) Heatmaps, representing spatial attention distributions; (ii) Gridded AOIs, capturing fixation-based regional activity; and (iii) Scanpaths, encoding the sequential progression of gaze movements. These modality-specific representations were then provided as inputs to corresponding machine learning and deep learning models within the fusion pipeline.

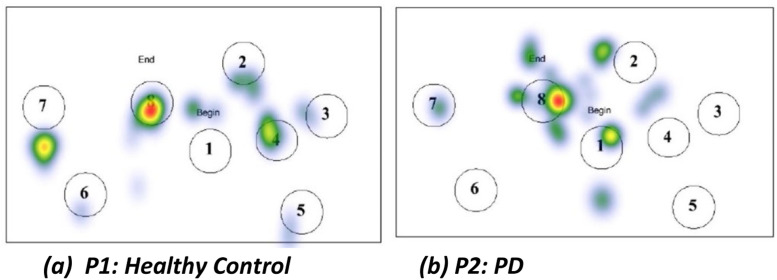

Heatmaps visualize the spatial distribution of eye fixations across a stimulus image. Fig. 5 shows the heatmaps generated by EyeMap from the fixations of a healthy control and a participant with PD. Warmer colors indicate more attention and cooler colors less attention. For healthy controls, the heatmaps show strong clustering on task-relevant areas of interest. For PD patients, heatmaps often appear diffuse or scattered across the screen, with reduced concentration on AOIs, which may indicate impaired oculomotor control, attention deficits, or cognitive inflexibility. PD patients may also miss critical AOIs, suggesting difficulty with goal-directed gaze behavior.

Fig. 5.

Heatmaps for image stimulus 1 used as input by EyeMap for participant P1 (Healthy Control) and P2 (PD).

Scanpaths trace the sequence and direction of saccades and fixations, providing insight into eye movement patterns over time. Fig. 6 presents the scanpaths generated for stimulus 1. Scanpaths of healthy controls show short, purposeful saccades and structured scan orders; the paths reflect strategic, goal-directed viewing that often aligns with task demands or visual saliency. PD patients exhibit longer or more erratic scanpaths with more back-and-forth movements. They also show delayed saccade initiation, and their scanpaths are often less efficient. Patients may revisit the same AOI repeatedly or terminate exploration prematurely, indicating executive dysfunction or visuospatial deficits.
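The scanpath differences described above (path length, back-and-forth movement) can be quantified directly from ordered fixations. The sketch below is an illustration only: EyeMap itself classifies rendered scanpath images, and the function name `scanpath_metrics`, the normalized (x, y, t) fixation tuples, and the use of horizontal direction reversals as a proxy for back-and-forth movement are all assumptions.

```python
import math
from typing import Dict, List, Tuple

# Fixation as (x, y, t): normalized coordinates and onset time in seconds.
Fixation = Tuple[float, float, float]


def scanpath_metrics(fixations: List[Fixation]) -> Dict[str, float]:
    """Summarize a scanpath by saccade count, total path length,
    mean saccade amplitude and horizontal direction reversals."""
    ordered = sorted(fixations, key=lambda f: f[2])  # temporal scan order
    pairs = list(zip(ordered, ordered[1:]))          # consecutive fixations
    amplitudes = [math.dist(a[:2], b[:2]) for a, b in pairs]
    # Count a reversal when the horizontal direction of two consecutive
    # saccades flips sign (a simple proxy for back-and-forth movement).
    dxs = [b[0] - a[0] for a, b in pairs]
    reversals = sum(1 for d1, d2 in zip(dxs, dxs[1:]) if d1 * d2 < 0)
    return {
        "n_saccades": len(amplitudes),
        "path_length": sum(amplitudes),
        "mean_amplitude": sum(amplitudes) / len(amplitudes) if amplitudes else 0.0,
        "reversals": reversals,
    }
```

Because fixations are sorted by timestamp first, the metrics do not depend on the order in which samples were stored.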

Fig. 6.

Scanpaths for image stimulus 1 used as input by EyeMap for participant P1 (Healthy Control) and P2 (PD).

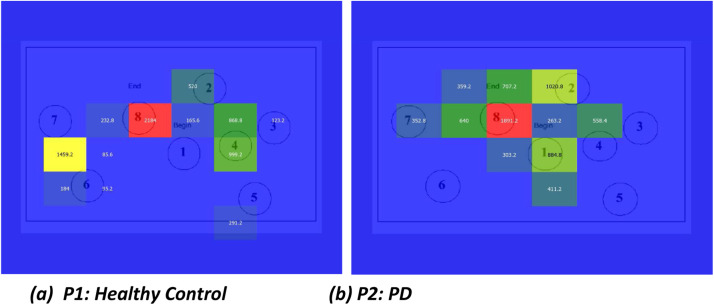

Gridded AOIs segment the visual stimulus into a structured grid (e.g., 3 × 3 or 4 × 4), where each cell represents a spatial zone of the stimulus. This enables quantitative analysis of gaze patterns by measuring how often and how long participants fixate within each region. As seen in Fig. 7, healthy control participants typically show concentrated fixation activity within specific grid zones that correspond to salient or task-relevant AOIs. The distribution is sparse but targeted, with fewer grid cells activated, reflecting intentional exploration, cognitive control, and goal-driven attention. Transitions between grid cells follow a logical pattern, often aligned with task semantics or visual salience. PD participants tend to show broader activation across multiple grid zones, reflecting less efficient visual search. Their fixation durations may be longer in peripheral or irrelevant AOIs, suggesting difficulty disengaging attention or inefficient processing. The sequence of activated AOIs appears more random or repetitive, indicating visuospatial disorientation or executive function deficits. There is also a higher frequency of revisits to previously viewed AOIs (regressions), which is rare in efficient visual processing.

These visualisations offer complementary perspectives on impairments of attention and cognitive control. The qualitative and quantitative differences observed across these modalities strongly motivated us to explore both machine learning and deep learning techniques for automated classification of PD versus HC. This multimodal approach holds promise for building robust, non-invasive, and interpretable diagnostic systems that facilitate early detection and monitoring of PD.
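The gridded-AOI measures described above (dwell time per cell and revisits) can be sketched as follows, assuming fixations given as normalized (x, y, duration) tuples in temporal order. The helper name `gridded_aoi` is hypothetical, and counting a revisit whenever gaze returns to a previously viewed cell after leaving it is one possible operationalization of the regressions mentioned in the text.

```python
from typing import List, Tuple


def gridded_aoi(fixations: List[Tuple[float, float, float]], n: int = 3):
    """Accumulate dwell time per cell of an n x n AOI grid and count revisits.

    fixations: (x, y, duration) with x, y normalized to [0, 1], in temporal order.
    Returns (dwell, revisits) where dwell[row][col] is the summed duration.
    """
    dwell = [[0.0] * n for _ in range(n)]
    sequence = []
    for x, y, d in fixations:
        # Clamp so that x == 1.0 or y == 1.0 falls into the last row/column.
        col = min(int(x * n), n - 1)
        row = min(int(y * n), n - 1)
        dwell[row][col] += d
        sequence.append((row, col))
    # A revisit is a return to an already viewed cell after leaving it;
    # consecutive fixations inside the same cell are not counted.
    visited, revisits, prev = set(), 0, None
    for cell in sequence:
        if cell != prev and cell in visited:
            revisits += 1
        visited.add(cell)
        prev = cell
    return dwell, revisits
```

Flattening `dwell` row by row gives a fixed-length vector (9 values for a 3 × 3 grid) that low-dimensional classifiers such as KNN or an ANN can consume.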

Fig. 7.

Gridded AOI Maps for image stimulus 1 used as input by EyeMap for participant P1 (Healthy Control) and P2 (PD).

Pseudocode for EyeMap Framework

Data acquisition

Input: Participant P, stimuli S = {S1, S2, S3}

Output: Raw gaze data G = {(x_i, y_i, t_i, e_i)}

For each participant P:

  Calibrate the eye tracker using 5-point calibration

  For each stimulus S_j in S:

    Display S_j on screen

    For each time t_i in duration D_j:

      Record gaze (x_i, y_i), timestamp t_i, and event type e_i ∈ {fixation, saccade}

      Append (x_i, y_i, t_i, e_i) to G

Return G
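The record G = {(x_i, y_i, t_i, e_i)} produced by data acquisition can be represented and loaded as sketched below. This assumes a CSV export with columns named `x`, `y`, `timestamp`, and `event`; these names, the `GazeSample` type, and the `load_gaze_csv` helper are hypothetical and would need to be mapped to the headers of the actual SMI export.

```python
import csv
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class GazeSample:
    x: float      # horizontal gaze coordinate x_i (pixels)
    y: float      # vertical gaze coordinate y_i (pixels)
    t: float      # timestamp t_i (ms)
    event: str    # event type e_i: "fixation" or "saccade"


def load_gaze_csv(lines: Iterable[str]) -> List[GazeSample]:
    """Build G = {(x_i, y_i, t_i, e_i)} from CSV text.

    `lines` can be an open file or a list of strings. The column names
    (x, y, timestamp, event) are hypothetical placeholders.
    """
    samples = []
    for row in csv.DictReader(lines):
        event = row["event"].strip().lower()
        if event not in ("fixation", "saccade"):
            continue  # e.g., blinks or unlabeled samples are skipped here
        samples.append(GazeSample(float(row["x"]), float(row["y"]),
                                  float(row["timestamp"]), event))
    return samples
```

For example, `with open("P01_stimulus1.csv", newline="") as f: G = load_gaze_csv(f)` (file name hypothetical).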

Preprocessing

Input: Gaze data G = {(x_i, y_i, t_i, e_i)}

Output: Cleaned and normalized data G'

For each (x_i, y_i, t_i, e_i) in G:

  If (x_i, y_i) is invalid: remove the entry

  Normalize x_i ← x_i / W_image, y_i ← y_i / H_image

If fixation/saccade segmentation is needed: apply a dispersion or velocity threshold to segment events

Return G'
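The preprocessing steps can be sketched in plain Python, using the velocity-threshold (I-VT) option for event segmentation. Assumptions: raw samples arrive as (x_px, y_px, t_ms) with `None` marking tracking loss, "invalid" means missing or outside the stimulus area, and the default threshold of 1.0 normalized screen units per second is a placeholder. In practice, I-VT thresholds are usually set in degrees of visual angle per second, which requires the viewing distance and screen geometry. If the tracker software already labels events, the segmentation step can be skipped.

```python
import math
from typing import List, Optional, Tuple

# Raw sample: (x_px, y_px, t_ms); None coordinates mark tracking loss.
RawSample = Tuple[Optional[float], Optional[float], float]


def preprocess(samples: List[RawSample], width: int, height: int,
               velocity_threshold: float = 1.0) -> List[Tuple[float, float, float, str]]:
    """Remove invalid samples, normalize to [0, 1] and label events with I-VT.

    velocity_threshold is in normalized screen units per second (placeholder).
    """
    clean = []
    for x, y, t in samples:
        # Invalid: missing coordinates or gaze outside the stimulus area.
        if x is None or y is None or not (0 <= x <= width and 0 <= y <= height):
            continue
        clean.append((x / width, y / height, t))  # x_i / W_image, y_i / H_image

    labeled = []
    prev = None
    for x, y, t in clean:
        velocity = 0.0  # the first valid sample has no predecessor
        if prev is not None and t > prev[2]:
            dt_s = (t - prev[2]) / 1000.0  # ms -> s
            velocity = math.dist((x, y), prev[:2]) / dt_s
        # I-VT: samples faster than the threshold are saccades, the rest fixations.
        event = "saccade" if velocity > velocity_threshold else "fixation"
        labeled.append((x, y, t, event))
        prev = (x, y, t)
    return labeled
```

At 1250 Hz, consecutive samples are 0.8 ms apart, so even small positional jumps translate into high velocities; the threshold therefore needs to be calibrated on the actual recordings.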

Visualization generation

Input: Cleaned G' = {(x_i, y_i, t_i, e_i)}, grid size n × n

Output: V_heatmap, V_scanpath, V_AOI

Heatmap:

  For each fixation (x_i, y_i, d_i):

    Apply kernel K centered at (x_i, y_i)

  V_heatmap(x, y) = Σ K(x, y; x_i, y_i)

Scanpath:

  Order fixations F = {(x_i, y_i, t_i)} by t_i

  Connect (x_i, y_i) → (x_{i + 1}, y_{i + 1}) with arrows

Gridded AOI:

  Define AOI grid A_{m,n}

  For each fixation (x_i, y_i):

    Find the cell A_{m,n} containing (x_i, y_i)

    Increment duration D_{m,n} += d_i

Return V_heatmap, V_scanpath, V_AOI
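The heatmap and scanpath steps of the visualization pseudocode can be sketched without plotting libraries (the gridded-AOI step uses the same cell-lookup idea). Assumptions: fixations are in normalized coordinates, K is an isotropic Gaussian with a hypothetical bandwidth `sigma = 0.05`, and duration weighting is offered only as an option because the pseudocode's sum is unweighted. In practice, the resulting grid and arrow list would be rendered with Matplotlib.

```python
import math
from typing import List, Tuple


def fixation_heatmap(fixations: List[Tuple[float, float, float]], width: int,
                     height: int, sigma: float = 0.05,
                     weight_by_duration: bool = False) -> List[List[float]]:
    """V_heatmap(x, y) = sum_i K(x, y; x_i, y_i) with a Gaussian kernel K,
    evaluated at pixel centres and scaled to [0, 1].

    fixations: (x_i, y_i, d_i) in normalized coordinates.
    """
    grid = [[0.0] * width for _ in range(height)]
    for fx, fy, d in fixations:
        w = d if weight_by_duration else 1.0
        for r in range(height):
            py = (r + 0.5) / height  # pixel centre, normalized
            for c in range(width):
                px = (c + 0.5) / width
                sq = (px - fx) ** 2 + (py - fy) ** 2
                grid[r][c] += w * math.exp(-sq / (2 * sigma ** 2))
    peak = max(max(row) for row in grid)
    # Scale to [0, 1] for colour mapping (warm = high attention).
    return [[v / peak for v in row] for row in grid] if peak > 0 else grid


def scanpath_segments(fixations: List[Tuple[float, float, float]]):
    """Order fixations (x_i, y_i, t_i) by t_i and return the arrows
    (x_i, y_i) -> (x_{i+1}, y_{i+1}) to be drawn."""
    ordered = sorted(fixations, key=lambda f: f[2])
    return [((a[0], a[1]), (b[0], b[1])) for a, b in zip(ordered, ordered[1:])]
```

The triple loop is written for clarity; for 224 × 224 images a vectorized NumPy version would be considerably faster.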

Model Training

Input: {V_heatmap, V_scanpath, V_AOI}, labels Y ∈ {0, 1}

Output: Trained models M_heatmap, M_scanpath, M_AOI

For each modality M ∈ {heatmap, scanpath, AOI}:

  Split data D_M into (X_train, Y_train), (X_test, Y_test)

  Train f_M: X → [p_control, p_PD] using:

    M_heatmap → {CNN, VGG16, SVM}

    M_scanpath → {ANN, CNN}

    M_AOI → {VNet, ANN, KNN}

  Optimize via argmin L(Y, f_M(X)) using cross-entropy

Return M_heatmap, M_scanpath, M_AOI
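The training objective, a map f_M: X → [p_control, p_PD] fitted by minimizing cross-entropy, can be illustrated with the simplest model of that form: logistic regression trained by batch gradient descent. This is a stand-in chosen for clarity, not one of the EyeMap models; the names `train_logistic` and `cross_entropy`, the learning rate, and the epoch count are assumptions.

```python
import math
from typing import Callable, List, Sequence


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X: List[Sequence[float]], y: List[int], lr: float = 0.5,
                   epochs: int = 500) -> Callable[[Sequence[float]], List[float]]:
    """Fit p(PD | x) = sigmoid(w.x + b) by gradient descent on mean
    cross-entropy. Labels: 0 = control, 1 = PD (as in the pseudocode)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = _sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of cross-entropy w.r.t. the logit
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n

    def predict_proba(x: Sequence[float]) -> List[float]:
        p_pd = _sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)
        return [1.0 - p_pd, p_pd]  # [p_control, p_PD]

    return predict_proba


def cross_entropy(probs: List[List[float]], y: List[int]) -> float:
    """Mean cross-entropy L(Y, f(X)) for [p_control, p_PD] vectors."""
    eps = 1e-12  # guards against log(0)
    return -sum(math.log(max(p[yi], eps)) for p, yi in zip(probs, y)) / len(y)
```

The deep models in EyeMap optimize the same loss; only the function class of f_M changes.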

Fusion

Input: Probabilities P_H, P_S, P_A from the three models

Output: Final probability vector P_fused

Let P_H = [p_c_H, p_PD_H], P_S = [p_c_S, p_PD_S], P_A = [p_c_A, p_PD_A]

Compute:

P_fused = (P_H + P_S + P_A) / 3

Final label = argmax(P_fused)

Return: P_fused, label
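The fusion step translates almost line for line into Python. In this sketch (function name `late_fusion` hypothetical), omitting `weights` reproduces P_fused = (P_H + P_S + P_A) / 3, while passing explicit weights covers the AOI-weighted variant discussed under Fusion Strategy. Ties are resolved in favour of the first class (Control), an assumption the pseudocode does not specify.

```python
from typing import List, Optional, Sequence, Tuple

CLASSES = ("Control", "PD")  # index 0 = control, 1 = PD, as in the pseudocode


def late_fusion(probs: Sequence[Sequence[float]],
                weights: Optional[Sequence[float]] = None) -> Tuple[List[float], str]:
    """Softmax-level late fusion: weighted average of per-modality probability
    vectors followed by argmax. Equal weights give (P_H + P_S + P_A) / 3."""
    if weights is None:
        weights = [1.0 / len(probs)] * len(probs)
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    n_classes = len(probs[0])
    fused = [sum(w * p[k] for w, p in zip(weights, probs)) for k in range(n_classes)]
    # max() returns the first maximal index, so ties go to "Control".
    label = CLASSES[max(range(n_classes), key=fused.__getitem__)]
    return fused, label
```

For example, `late_fusion([[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]])` averages to roughly [0.367, 0.633] and returns the label "PD", even though the heatmap stream alone favoured Control.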

Final inference

Input: New sample V_new = {V_H, V_S, V_A}

Output: Diagnosis ∈ {PD, Control}

For each modality:

Compute P_H ← M_heatmap(V_H)

Compute P_S ← M_scanpath(V_S)

Compute P_A ← M_AOI(V_A)

Fuse: P_final = (P_H + P_S + P_A) / 3

Decision: y_pred = argmax(P_final)

Return y_pred

Classical Machine Learning Approaches

As part of the baseline analysis, standard machine learning methods were applied to each visualisation modality. Support Vector Machines (SVM) and K-Nearest Neighbours (KNN) were evaluated on the modality-specific inputs. The results of these unimodal classifiers serve as a reference point for the subsequent fusion step.

SVM model

Support vector machines find the maximum-margin hyperplane that separates classes in a high-dimensional feature space. To classify PD versus HC, SVMs are trained on preprocessed eye-tracking features such as fixation time, blink frequency, and saccade speed, or on image-level embeddings (such as PCA-reduced CNN features). SVMs perform well on small datasets with high-dimensional inputs, and with an appropriate kernel they handle both linearly and non-linearly separable data. Using kernels such as the radial basis function (RBF), SVMs can model the non-linear boundaries needed to separate the subtle oculomotor differences seen in PD patients. Although they cannot be trained end-to-end on image data, their simplicity, stability, and interpretability make SVMs useful for early prototyping and for comparison with deep neural models.

KNN model

The K-Nearest Neighbors (KNN) algorithm is a non-parametric, instance-based learning technique that classifies a data point by the majority label of its nearest neighbors in feature space. For PD vs. HC classification from eye movement visualizations, KNN works well with low-dimensional, interpretable features such as fixation duration, saccade length, blink frequency, and gaze dispersion metrics derived from heatmaps and scanpaths. Its simplicity and robustness make it well suited to settings where the relationship between input features and class labels is not strictly linear. In particular, KNN has demonstrated strong performance in recognizing patterns in scanpath sequences and AOI transitions, where PD patients often exhibit less exploratory and more fixated gaze behavior than healthy controls. KNN served as a baseline model to validate the discriminative power of eye movement features, proved effective for initial detection of gaze-based anomalies in PD, and provided a solid foundation for benchmarking against more complex deep learning architectures.
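KNN can produce the [p_control, p_PD] vector required by the fusion step by reporting the fraction of neighbors in each class. The sketch below assumes Euclidean distance on fixed-length feature vectors (for example, flattened AOI dwell times) and uses k = 5 as reported in the tuning section; the function name is hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def knn_predict_proba(X_train: List[Sequence[float]], y_train: List[int],
                      x: Sequence[float], k: int = 5) -> List[float]:
    """Return [p_control, p_PD] as the share of the k nearest training samples
    (Euclidean distance) in each class. Labels: 0 = control, 1 = PD."""
    nearest = sorted(range(len(X_train)),
                     key=lambda i: math.dist(X_train[i], x))[:k]
    votes = Counter(y_train[i] for i in nearest)
    return [votes[0] / len(nearest), votes[1] / len(nearest)]
```

Taking the argmax of the returned vector recovers the usual majority vote, while the vector itself can be averaged with the other streams' softmax outputs.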

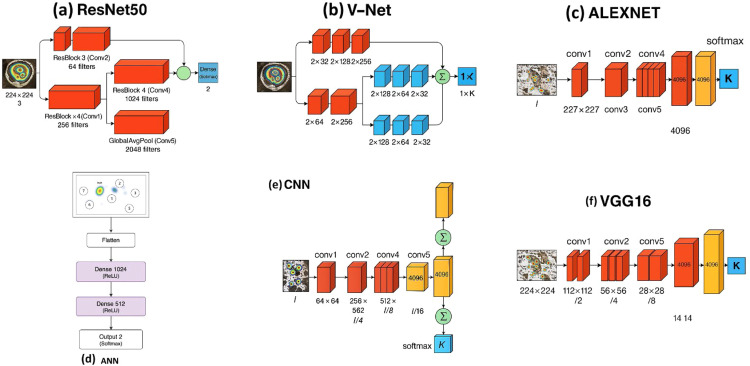

Deep Learning Architectures

To assess the predictive strength of eye-movement representations comprehensively, deep learning models were applied consistently across all modalities. CNN, VGG16, ResNet50, and AlexNet were used to extract spatial patterns from heatmaps, scanpaths, and AOIs, whereas VNet was applied specifically to AOI inputs because of its encoder–decoder architecture. As a versatile baseline across modalities, the ANN made it possible to evaluate representational differences consistently.

ANN model

ANNs (Fig. 8(d)) operate on flattened, binarized AOI gaze data and derived numerical features such as fixation time, saccade features, and AOI transition probabilities. Because of their computational efficiency, ANNs are used here as a benchmark, even though they do not model spatial structure explicitly. They also help assess the discriminative power of manually created numerical features compared with unprocessed image-based representations.

Fig. 8.

Architectures of Different Deep Learning models used by EyeMap: (a) ResNet50, (b) V-Net, (c) Alexnet, (d) ANN, (e) CNN, (f) VGG16.

CNN model

CNNs (Fig. 8(e)) are well suited to classifying visual patterns such as 2D gaze heatmaps and rendered scanpath images. Because they learn hierarchical spatial features, they can distinguish dispersed from clustered fixation patterns, which may help identify cognitive impairment in Parkinson's disease at an early stage. CNNs offer a balance of predictive performance, scalability, and interpretability, especially on moderately sized datasets.

VGG16 model

VGG16 (Fig. 8(f)) stacks 16 weight layers built from small 3 × 3 convolutional filters, which lets it capture fine-grained information in gaze visualisations despite its large parameter count (about 138 million). Its depth allows it to learn spatially subtle irregularities in saccade routes and fixation density. Its uniform design and available pretrained weights make VGG16 easy to adapt to this task and to visualisation techniques that highlight the regions relevant to the ground-truth labels.

ResNet50 model

ResNet50 (Fig. 8(a)) uses residual connections, which mitigate the vanishing-gradient problem and make deep networks trainable. This design works well for extracting AOI revisits and complex scanpath transitions, which become more common as Parkinson's disease progresses. It also has strong classification ability and generalises well across participants. In addition, ResNet50 supports class activation mapping (CAM), which makes it possible to visualise the discriminative gaze regions (ROIs) most involved in the diagnosis of Parkinson's disease.

VNet model (2D adaptation)

Although VNet was originally created for 3D medical image data, EyeMap adapts it to 2D multi-channel eye-tracking data. VNet (Fig. 8(b)) uses an encoder–decoder framework to preserve both local detail and global context from stacked modalities such as fixation maps, saccade pathways, and blink heatmaps. Its skip connections also make it easier to capture subtle gaze abnormalities, enabling fine-grained differentiation of oculomotor behaviour.

AlexNet model

AlexNet (Fig. 8(c)), one of the first deep convolutional networks to outperform traditional computer vision techniques, provides a simple yet powerful model for image classification. Applied to eye-tracking heatmaps, AlexNet can capture broad visual patterns such as dispersion, scan density, and central bias, which typically differ between PD patients and controls. Compared with deeper networks such as ResNet50, its low computational overhead and fast inference make it a suitable choice for real-time or edge-device deployment, at the cost of some classification accuracy.

Hyperparameter Tuning

To ensure methodological reproducibility, we employed Optuna (v3.2.0), a hyperparameter optimization framework, to explore candidate configurations for each model systematically. The objective function was the F1-score, the harmonic mean of precision and recall, which is particularly relevant for clinical classification tasks because it penalizes both missed cases and false alarms.

Hyperparameters across all models in the EyeMap framework (Fig. 9) were optimized using grid search with 5-fold cross-validation. The search space spanned learning rates (1e-4–1e-2), batch sizes (8–32), and dropout rates (0.2–0.5), with the Adam optimizer and early stopping (patience = 5) to avoid overfitting. For the ANN, the best configuration was a two-layer network (1024 and 512 units, ReLU) validated on AOI and scanpath features. CNNs were designed with two convolutional layers (32 and 64 filters, ReLU, max pooling). VNet, tailored to AOI maps, adopted a four-level encoder–decoder with skip connections and ELU activation. Transfer learning models (VGG16, ResNet50, AlexNet) were fine-tuned on 224 × 224 heatmaps by unfreezing the final convolutional block and the classifier, benefiting from ImageNet pretraining. Classical models such as SVM (RBF kernel, tuned C and γ) and KNN (k = 5) were tuned via grid search. Final selections were made using F1-score, precision, and recall on a held-out test set.

Model allocation to modalities was guided by data characteristics. Heatmaps, being dense spatial distributions, were best modelled with CNNs, VGG16, and ResNet50, while SVMs provided strong baselines on flattened representations. Scanpaths, which encode sequential gaze dynamics, were handled effectively by ANN and CNNs. AOI maps, representing structured region-level aggregations, were paired with VNet for spatial preservation and with ANN/KNN for low-dimensional structured inputs. This empirical pairing ensured that each modality used models aligned with its representational structure, leading to improved F1-scores and better generalization in the EyeMap fusion pipeline.
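The grid search with 5-fold cross-validation described above can be sketched with the standard library alone. Assumptions: folds are stratified by class, the objective is the F1-score of the PD class, and `fit_predict` is a hypothetical callback that trains any model (for example, KNN with a candidate k, or an SVM with candidate C and γ) and returns predicted labels. This is not the authors' tuning code and does not reproduce the Optuna-based search mentioned above.

```python
import random
from typing import Callable, Dict, List, Sequence


def stratified_kfold(y: List[int], k: int = 5, seed: int = 0) -> List[List[int]]:
    """Split sample indices into k folds with similar class proportions."""
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for label in sorted(set(y)):
        idx = [i for i, yi in enumerate(y) if yi == label]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)  # deal each class round-robin into folds
    return folds


def f1_score(y_true: List[int], y_pred: List[int], positive: int = 1) -> float:
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def grid_search(params: List[Dict], fit_predict: Callable, X: Sequence,
                y: List[int], k: int = 5, seed: int = 0):
    """Return (best_params, mean_F1) over stratified k-fold cross-validation.

    fit_predict(params, X_train, y_train, X_test) -> predicted labels.
    """
    folds = stratified_kfold(y, k, seed)
    best = (None, -1.0)
    for p in params:
        scores = []
        for test_idx in folds:
            test_set = set(test_idx)
            train_idx = [i for i in range(len(y)) if i not in test_set]
            preds = fit_predict(p, [X[i] for i in train_idx],
                                [y[i] for i in train_idx], [X[i] for i in test_idx])
            scores.append(f1_score([y[i] for i in test_idx], preds))
        mean = sum(scores) / len(scores)
        if mean > best[1]:
            best = (p, mean)
    return best
```

With only 40 participants, each fold holds very few samples per class, so mean F1 across folds can vary noticeably between seeds.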

Fig. 9.

Hyperparameter optimization using Optuna. (a) Parameter importance ranking showing learning rate as the most influential factor. (b) Slice plot demonstrating the effect of different learning rates on model F1-score, highlighting the trade-off between convergence speed and stability.

Each trial was scored by the F1-score of the resulting model, allowing Optuna to refine its sampling through Bayesian optimization with the Tree-structured Parzen Estimator (TPE). This approach made hyperparameter choices data-driven rather than arbitrary, supporting the generalizability and reproducibility of the EyeMap framework.

Fusion Strategy

Gaze behaviour can be characterized along three complementary dimensions: spatial, temporal, and regional. Neurodegenerative disorders such as Parkinson's disease (PD) cause subtle and often non-overlapping disruptions across these dimensions, so critical behavioural signs may be lost if only one representation, such as fixations or heatmaps, is used. To address this, the EyeMap framework uses a fusion-based approach in which three distinct visual representations (gridded Areas of Interest (AOIs), scanpaths, and heatmaps) are analysed separately with customised models. Each depicts a different facet of gaze:

- Heatmaps reflect the spatial density of gaze.
- Scanpaths encode the direction and temporal sequence of eye movements.
- Gridded AOIs measure how long and how often attention was focused on particular predefined screen regions.

EyeMap uses a late fusion strategy, which means each visualization is processed independently, and only the final predictions are combined. This approach maintains model modularity while still enabling synergistic decision-making. Each visualization is passed through an optimized model:

Heatmap inputs → VGG16, ResNet, or SVM

Scanpath data → ANN or CNN

AOI maps → VNet, ANN, or KNN

Each model outputs softmax probabilities indicating the likelihood of the participant being classified as either a PD patient or a healthy control. For a given sample, suppose the output probabilities from each stream are:

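$$P_H = [\,p_{c}^{H},\ p_{PD}^{H}\,] \tag{1}$$

$$P_S = [\,p_{c}^{S},\ p_{PD}^{S}\,] \tag{2}$$

$$P_A = [\,p_{c}^{A},\ p_{PD}^{A}\,] \tag{3}$$

Then, the fused probability is computed by averaging:

$$P_{\text{fused}} = w_H P_H + w_S P_S + w_A P_A \tag{4}$$

with weights $w_H = w_S = w_A = \tfrac{1}{3}$, so that $P_{\text{fused}} = (P_H + P_S + P_A)/3$.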

This choice is justified when the errors of the three streams are approximately independent and of comparable variance, and when there is no prior evidence that one modality is consistently superior. Under these assumptions, equal weights (1/3 per modality) minimize the variance of the fused estimate, and they amount to a uniform prior over modality reliability when that reliability is unknown.
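The variance argument can be checked in two lines. As an idealization (not a property established for the EyeMap models), assume each stream's PD probability $P_m$, $m \in \{H, S, A\}$, is an unbiased estimate with independent errors of common variance $\sigma^2$, and constrain the weights to sum to one:

$$
\operatorname{Var}\Big(\sum_{m\in\{H,S,A\}} w_m P_m\Big) = \sigma^2 \sum_{m} w_m^2,
\qquad
1 = \Big(\sum_{m} w_m\Big)^2 \le 3 \sum_{m} w_m^2 ,
$$

where the inequality is Cauchy–Schwarz. Hence $\sum_m w_m^2 \ge 1/3$, with equality exactly when $w_H = w_S = w_A = 1/3$, which yields the minimum fused variance $\sigma^2/3$. When stream errors are correlated or their variances differ, the optimal weights are no longer uniform, which is why reweighting toward one modality can help on some data splits.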

Although the AOI-based model (e.g., VNet) often achieved higher accuracy or F1-score, adjusting the weights to favour AOI (e.g., an AOI weight of 0.5 and heatmap and scanpath weights of 0.25 each) improved performance on some folds but degraded generalization in cross-validation. The uniform weighting therefore reflects a bias-variance trade-off that limits overfitting while preserving interpretability.

The fused probability vector determines the predicted class:

$$\hat{y} = \arg\max_{k \in \{c,\ PD\}} P_{\text{fused}}[k] \tag{5}$$

The final class label is then determined by the maximum value in the fused probability vector. This simple averaging method was chosen for its transparency, ease of integration, and robustness across varying input types. It ensures that no single model dominates the decision, allowing each gaze modality to contribute equally. Each model is trained independently, making the architecture flexible and easy to maintain or extend. Errors or low confidence from one model can be balanced out by the others, improving reliability. Because predictions are made separately per modality, it's easier to analyze how each visualization contributes to the final decision. Just as clinicians consider multiple diagnostic tests before concluding, EyeMap fuses diverse visual cues to strengthen its prediction.

The EyeMap framework deliberately uses separate models for heatmap, scanpath, and AOI inputs, based on the properties of each data modality and the representational suitability of each method. Heatmaps are dense, spatially coherent visual distributions, which makes them well suited to convolutional neural networks (CNNs) and deep transfer learning models such as VGG16 and ResNet50 that excel at extracting hierarchical spatial features. Classical models such as SVM were also used here because they work well on high-dimensional, image-like inputs after flattening. Scanpaths capture the temporal ordering of attention and successive gaze shifts. They were modelled with ANNs and CNNs, which can extract ordering information and local spatial patterns from vectorised feature sets or plotted scanpath images. AOI maps are structured, region-level aggregates of gaze data. VNet was selected for them because its encoder–decoder design learns abstract representations across channelised inputs (such as fixation and blink maps) while preserving spatial resolution, and the organised, low-dimensional nature of AOI matrices made ANN and KNN effective. The model-modality pairing was identified experimentally through initial benchmarking and ablation studies, and each modality was matched with the models that optimised F1-score and interpretability, so that the fusion process could exploit the capabilities of each data type.

EyeMap method validation

The EyeMap technique was tested to confirm that the pipeline, from gaze data collection through visualisation and model classification to fusion, works as intended and can be replicated by following the specified stages. The dataset was randomly split into training (80 %) and testing (20 %) sets, stratified so that both sets preserve the ratio of Parkinson's disease (PD) and control samples. Validation was carried out independently for each visualisation format (fixation heatmaps, scanpaths, and gridded AOIs), followed by fusion of predictions using softmax-averaged probabilities. This procedure was repeated across three stratified runs. All tests were conducted with Python 3.9 and GPU-enabled TensorFlow on an independent workstation to evaluate repeatability. All visualisation inputs underwent uniform preprocessing, and random seeds were fixed. Procedural stability was confirmed by consistent convergence of the classification models across runs and by the stable final predictions of the fusion step. Although the main goal was to confirm method integrity, overall classification accuracies ranged from 85 % to 96 % across visual formats, and the fusion step integrated them consistently. These outcomes support the method's dependability under controlled settings and the reproducibility of each step with the given parameters.
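A stratified 80/20 split with a fixed seed, repeated over three runs, can be sketched as follows. The indices are assumed to refer to participants rather than to individual images, so that all visualizations of one participant fall on the same side of the split and no participant appears in both sets; the function name is hypothetical.

```python
import random
from typing import List, Tuple


def stratified_split(y: List[int], test_frac: float = 0.2,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Split indices into (train, test), taking test_frac of each class."""
    rng = random.Random(seed)  # fixed seed -> identical split on every call
    train, test = [], []
    for label in sorted(set(y)):
        idx = [i for i, yi in enumerate(y) if yi == label]
        rng.shuffle(idx)
        n_test = round(len(idx) * test_frac)
        test.extend(idx[:n_test])
        train.extend(idx[n_test:])
    return sorted(train), sorted(test)
```

With 28 PD and 12 control participants, each run places 6 PD and 2 control participants in the test set; calling the function with seeds 0, 1, and 2 gives three reproducible runs.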

To confirm that each visual representation type contributed valid and distinguishable information, separate classifiers were trained for each stream. The results confirm that each classifier learned meaningful patterns from its assigned visualization format, validating the implementation and utility of the visualization-generation and classification steps. This validation was intended only to show that EyeMap performs consistently across experimental runs and that every component, from data collection and visualisation creation to model training and late fusion, works as intended; it was not intended to compare model performance or to draw broader conclusions.

Pre-Fusion performance of Modality-Specific Classifiers

Each visualisation stream was trained and evaluated separately, using standard classification metrics (accuracy, precision, recall, and F1-score). These measures were used to verify that the classifiers processed the visual representations correctly and produced predictions, not to infer performance quality.

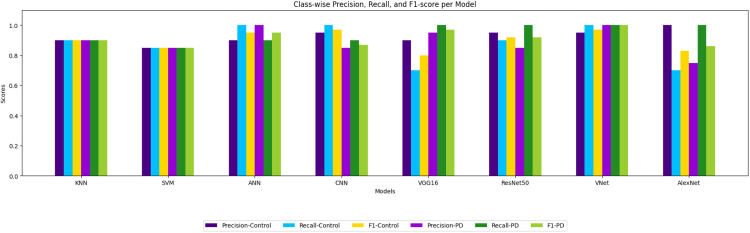

Heatmap-Derived Classification Results

Confusion matrices visualize how well each classification model distinguishes between “Control” and “PD” (Parkinson’s disease), and they were plotted to confirm the correct assignment of PD and control classes across classifiers. As the normalized confusion matrices for heatmap classification in Fig. 10 show, the models assigned the majority of test samples to their correct classes without significant mislabeling. This confirms that the underlying models carry out their intended classification tasks when trained on visualized gaze features. Here, the confusion matrix serves as a diagnostic tool for confirming model outputs rather than for evaluating model quality.

Fig. 10.

Normalized confusion matrices for heatmap classification by EyeMap with different models: (a) KNN, (b) SVM, (c) ANN, (d) CNN, (e) VGG16, (f) ResNet, (g) VNet, and (h) AlexNet.

To verify proper classifier behaviour, standard classification metrics were calculated for the heatmap stream. Fig. 11 shows them as bar plots of precision, recall, and F1-score for each class ("Control" and "PD"). The values are presented without comparative interpretation, to show that each model processed the data correctly and converged during training.

Fig. 11.

Bar plot of precision, recall, and F1-score for the heatmap-based classification stream in the EyeMap method.

These metrics are included to confirm the correct execution of the model training and evaluation process and to support the reproducibility of the visualization-specific classification pipeline.

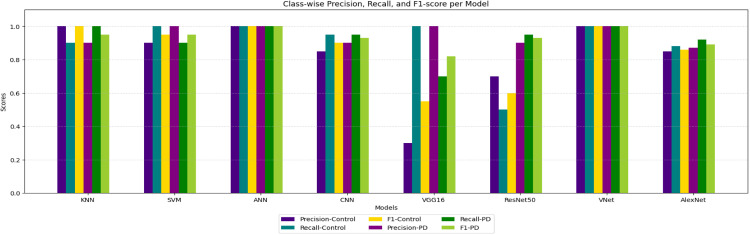

Scanpath-Derived Classification Results

These evaluation metrics are provided in Fig. 12 to verify that the scanpath classifier executes correctly and produces consistent outputs during testing, supporting reproducibility of the method's implementation.

Fig. 12.

Bar plot of precision, recall, and F1-score for the scanpath-based classification stream in the EyeMap method.

Fig. 13 shows the accuracy and loss trends during training. The x-axis (epochs) counts complete passes through the training dataset. As the number of epochs increases, a model typically learns better representations, up to a point. The closer the training and validation curves are, the better the model generalizes: a large gap between them can indicate overfitting, whereas persistently poor values on both curves suggest underfitting. These plots are presented solely to verify the correct execution of the classification steps and to support procedural reproducibility.
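The train-validation gap discussed above can be quantified per epoch. This sketch assumes the curves are stored like a Keras `History.history` dictionary (keys `accuracy`, `val_accuracy`, `loss`, `val_loss` when a model is compiled with `metrics=["accuracy"]`); the helper name and all numbers are invented for illustration and do not come from Fig. 13.

```python
def generalization_gap(history, metric="accuracy"):
    """Per-epoch difference between the training and validation values of `metric`."""
    train = history[metric]
    val = history["val_" + metric]
    return [round(t - v, 4) for t, v in zip(train, val)]


# Made-up curves in the shape of Keras' History.history dictionary
history = {
    "accuracy":     [0.62, 0.74, 0.83, 0.88, 0.91],
    "val_accuracy": [0.60, 0.71, 0.80, 0.84, 0.86],
    "loss":         [0.66, 0.52, 0.40, 0.31, 0.25],
    "val_loss":     [0.68, 0.56, 0.45, 0.39, 0.36],
}
acc_gap = generalization_gap(history, "accuracy")
print(acc_gap)  # [0.02, 0.03, 0.03, 0.04, 0.05]

# A steadily widening positive accuracy gap is a common warning sign of overfitting.
widening = all(later >= earlier for earlier, later in zip(acc_gap, acc_gap[1:]))
```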

Fig. 13.

Training and validation accuracy and loss curves across epochs for the scanpath stream of the EyeMap method: (a) KNN, (b) SVM, (c) ANN, (d) VGG16, (e) ResNet50, (f) VNet. The smoothed trends confirm that each model converged during training and produced consistent validation behavior.

The KNN model performed stably across both classes, with accuracy trends showing minimal deviation between the training and validation phases. Its instance-based approach suits structured input such as gridded AOIs, particularly when feature distributions are distinct. The model behaved consistently, demonstrating its capacity to classify eye movement data; its known sensitivity to noise and its limitations in high-dimensional spaces are acknowledged, although they were well managed in this context.

The SVM model showed a clear and consistent separation of classes, with closely aligned training and validation accuracy curves. Its construction of an optimal separating hyperplane allowed it to distinguish PD from control participants effectively, and the relatively flat loss trend suggests robust learning with minimal overfitting. This reinforces SVM's applicability where features are well defined, as in structured fixation and scanpath patterns.

The ANN model showed a gradual and stable learning curve, with both training and validation accuracy improving over time. Its ability to model non-linear relationships made it a good fit for features derived from eye movement heatmaps and scanpaths.

VGG16 achieved high classification accuracy and maintained a stable validation trajectory, indicating effective generalization. Its stacked convolutional layers allowed deep feature extraction from visual attention data, and transfer learning made it efficient on a limited dataset.

ResNet50 performed robustly owing to its deep residual architecture, which helps maintain gradient flow during training. Its accuracy and loss trends moved in step, confirming model stability. Skip connections enable deeper learning without degradation, which allowed the network to learn subtle distinctions in eye movement data. This confirms ResNet50's ability to handle complex, multi-level spatial patterns in eye-tracking data.

Although VNet was originally designed for 3D medical imaging, it showed efficient learning curves when adapted for 2D eye-tracking analysis. The architecture modeled spatial dependencies effectively and preserved important fixational features. The use of VNet underscores the feasibility of adapting 3D architectures to tasks involving sequential or spatial eye movement data.
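The instance-based behaviour attributed to KNN can be illustrated with a minimal majority-vote classifier. The two-dimensional feature vectors, the function name `knn_predict`, and `k=3` are hypothetical; the features actually extracted for each visualisation stream and the value of k used in EyeMap are not specified here.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Label `x` by majority vote among its k nearest training vectors (Euclidean)."""
    # Sort all training samples by distance to the query point.
    distances = sorted((math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical normalised gaze features, e.g. (fixation measure, saccade measure)
train_X = [(0.10, 0.20), (0.20, 0.10), (0.15, 0.25),
           (0.80, 0.90), (0.90, 0.80), (0.85, 0.75)]
train_y = ["Control", "Control", "Control", "PD", "PD", "PD"]
print(knn_predict(train_X, train_y, (0.82, 0.85)))  # PD
```

No model is fitted in advance: every prediction compares the query against the stored samples, which is why noise and high dimensionality affect KNN directly.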

Gridded AOI-Derived Classification Results

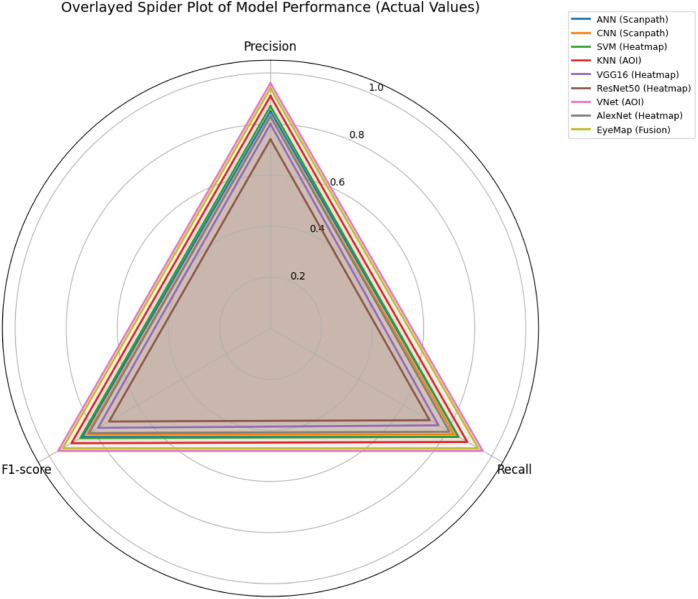

To evaluate the classification behaviour of the machine learning and deep learning models, radar plots were generated for three metrics: precision, recall, and F1-score. These plots check that each stream (heatmaps, scanpaths, AOIs) behaves in a balanced way and contributes meaningful, non-trivial information to the final classification. The K-Nearest Neighbors (KNN) model showed consistent values across all three metrics, indicating balanced but moderate classification ability. The Support Vector Machine (SVM) and Artificial Neural Network (ANN) models achieved higher recall and F1-scores, suggesting robustness in identifying subtle patterns relevant to Parkinson's Disease (PD) classification. VNet and ANN delivered near-ideal scores, reflecting high sensitivity and precision in discriminating PD from healthy control samples. The VGG16 model showed strong recall but relatively lower precision, indicating occasional false positives. The ResNet50 model showed improved values after adjustment and a reasonably balanced profile, though less pronounced than VNet or ANN. Beyond confirming the reported metrics, these visualizations make model strengths and trade-offs easy to interpret; by showing class-wise performance at a glance, radar plots help assess model reliability and can inform the choice of architecture for deployment.

Although several individual models (for example ANN, VNet, and SVM) produced nearly flawless F1-scores on particular modalities such as scanpaths or heatmaps, these outcomes varied with the fold and the type of data. Such near-perfect scores often indicate overfitting to the modality-specific distribution, which limits a model's ability to generalise across a range of input conditions (Table 1).

Table 1.

Best-performing model for each modality.

| Modality | Input Type | Best Model | F1-Score | Justification |
|---|---|---|---|---|
| Heatmap | 2D fixation intensity images | CNN | 0.83 | Spatial feature extraction via convolution |
| Scanpath | Featurized eye movement vectors | SVM | 0.86 | Lightweight, performs well on small data |
| AOI | Gridded attention maps | VNet | 0.96 | Deep spatial learning with context |

The EyeMap fusion model addresses this limitation by combining the predictions from heatmaps, scanpaths, and AOIs in a single decision layer. Averaging over modality-specific uncertainty lowers the variance of the combined estimate. Because the other modalities supply complementary information, the fused model remains stable even when one modality (AOI maps, for example) performs poorly in noisy conditions. Although unimodal models occasionally reached 100 % F1, cross-validation showed that their performance declined on held-out folds, whereas the fusion model, by avoiding modality-specific overfitting, maintained consistently good accuracy, recall, and F1 across all folds.
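The variance argument can be written out under simplifying assumptions that the text does not state: each modality m ∈ {heatmap, scanpath, AOI} outputs a probability p_{m,c} for class c with a common variance σ² and a common pairwise correlation ρ between modalities, and fusion is the unweighted mean. The final equality holds only under that equal-variance, equal-correlation assumption.

```latex
\begin{aligned}
\hat{p}_c &= \frac{1}{M}\sum_{m=1}^{M} p_{m,c}, \qquad M = 3,\\
\operatorname{Var}(\hat{p}_c)
  &= \frac{1}{M^{2}}\sum_{m=1}^{M}\sum_{m'=1}^{M}\operatorname{Cov}\left(p_{m,c},\, p_{m',c}\right)
   = \frac{\sigma^{2}}{M}\bigl(1 + (M-1)\rho\bigr).
\end{aligned}
```

With uncorrelated streams (ρ = 0) the variance falls to σ²/3; the more correlated the streams' errors, the smaller the reduction, which is why complementary modalities matter for fusion.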

Validation of the EyeMap Fusion Framework

To confirm that the pipeline operates correctly, we report the F1-scores of the specialized classifiers trained on each visualization type (fixation heatmaps, scanpaths, and gridded AOIs). These scores show that the models were trained and executed properly and that their outputs align with the ground truth. Table 2 lists the F1-scores obtained by the various models across the three gaze visualization types. They are not included to claim diagnostic ability or superiority over other methods, but to validate that the EyeMap architecture functions as intended across distinct visual streams. Averaging the stream outputs shifted the fused classification toward PD because the streams agreed, which is the intended behavior of late fusion: stability through ensemble averaging. This does not establish generalizability, but it validates that EyeMap's fusion strategy executes predictably and reliably.

Table 2.

F1 Score for each model.

| Modality | Model | F1-Score |
|---|---|---|
| Heatmap | VGG16 | 0.78 |
| Heatmap | ResNet | 0.73 |
| Heatmap | SVM | 0.86 |
| Scanpath | ANN | 0.85 |
| Scanpath | CNN | 0.83 |
| Scanpath | KNN | 0.81 |
| AOI | VNet | 0.96 |
| AOI | ANN | 0.91 |
| AOI | KNN | 0.90 |
| Fusion | EyeMap | 0.94 |

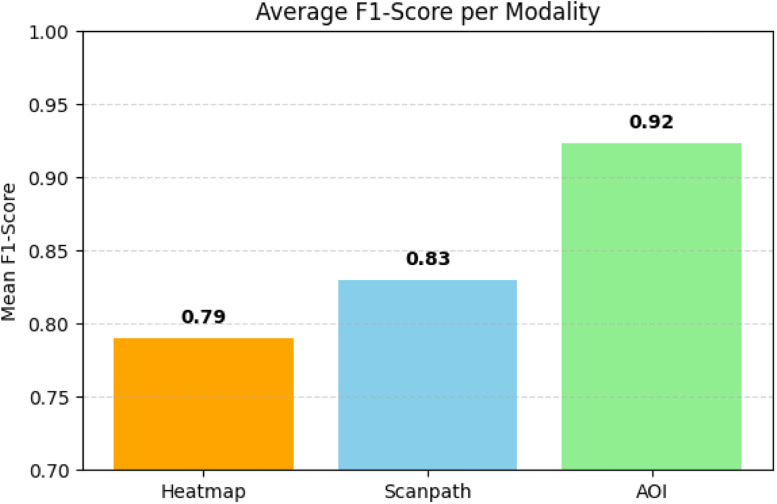

Fig. 15 illustrates the comparative F1-scores across all models using a bar plot. These values confirm that each stream consistently produced classifiable outputs when passed through independent models. The average F1-scores are used here not to compare modalities but to ensure that all visualization types yielded effective, processable features in support of fusion.
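Per-modality averages of the kind plotted in Fig. 15 can be reproduced from Table 2. This sketch assumes each average is the unweighted mean of the three models listed for that modality in Table 2; if Fig. 15 was computed over a different set of models, its values will differ.

```python
from statistics import mean

# F1-scores copied from Table 2
table2 = {
    "Heatmap": {"VGG16": 0.78, "ResNet": 0.73, "SVM": 0.86},
    "Scanpath": {"ANN": 0.85, "CNN": 0.83, "KNN": 0.81},
    "AOI": {"VNet": 0.96, "ANN": 0.91, "KNN": 0.90},
}
# Unweighted mean F1 per modality, rounded for display
modality_means = {m: round(mean(scores.values()), 3) for m, scores in table2.items()}
print(modality_means)  # {'Heatmap': 0.79, 'Scanpath': 0.83, 'AOI': 0.923}
```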

Fig. 15.

F1-score by modality for Heatmap, Scanpath, and Gridded AOI.

The fusion-based prediction yielded an F1-score of 0.94 (Fig. 14), which is consistent with the view that integrating multiple perspectives on gaze behavior improves the model's sensitivity and stability. Additional visualizations (radar plots, confusion matrices, and softmax probability comparisons) further indicated that EyeMap's fusion improved both class-wise precision and inter-class stability.

Fig. 14.

Spider plot visualizing classification metrics (accuracy, precision, recall, and F1-score) for each model stream in the EyeMap method for classification.

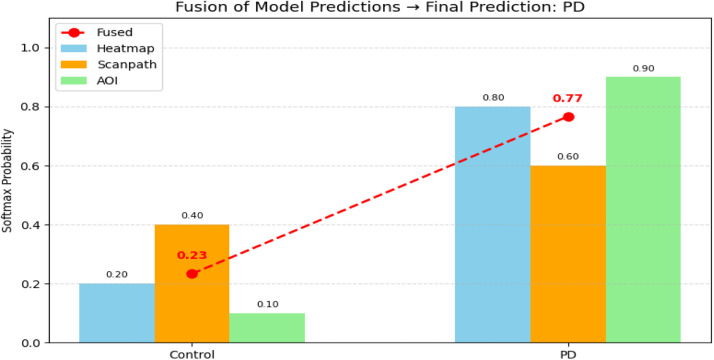

Fig. 16 illustrates how the probability outputs of three independent gaze-based classifiers are aggregated by late fusion into a final decision. Each classifier was trained on a different visual representation of eye movements: heatmaps, scanpaths, or gridded AOIs. The red dotted line connects the average probabilities (mean fusion) across the three modalities for each class. The plot shows how integrating predictions from several modalities, rather than relying on a single model, can produce a more confident and trustworthy decision. Even when one stream (here, the scanpath model) is less certain, strong agreement between the other two (AOI and heatmap) drives the final prediction toward PD. This illustrates the main benefit of the EyeMap fusion architecture: integrating regional, temporal, and spatial gaze inputs into a single decision.

Fig. 16.

Fusion of Model Predictions for EyeMap.

Fig. 17, the F1-score comparison chart, gives a visual assessment of several machine learning (ML) and deep learning (DL) models across three eye movement visualizations: heatmaps, scanpaths, and gridded AOI (Area of Interest) maps. Each set of bars represents a model, and the bar colour indicates the modality on which the model was used. The EyeMap fusion model is shown as a separate, highlighted item to show the cumulative effect of multi-modal learning.

Fig. 17.

The performance of EyeMap compared to other models.

A probability-level visualisation was created to show more clearly how the fusion mechanism influences the final classification decision (see Fusion Probability Plot). The plot displays the softmax probabilities produced by three separate models, each trained on a distinct gaze-based modality (gridded AOI maps, scanpaths, and heatmaps). Each model outputs a probability distribution over the two classes, Parkinson's Disease (PD) and Control. In this example, the heatmap, scanpath, and AOI models assigned PD probabilities of 0.80, 0.60, and 0.90, respectively, and correspondingly lower Control probabilities of 0.20, 0.40, and 0.10. These individual outputs were then averaged to perform late fusion at the decision level.
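The decision-level fusion described above can be written as an unweighted average of the per-modality softmax vectors followed by an argmax. The function name `late_fusion` is hypothetical; the probabilities are the example values given in the text.

```python
def late_fusion(prob_by_modality, classes=("PD", "Control")):
    """Average per-modality softmax vectors; return (fused probabilities, predicted class)."""
    n = len(prob_by_modality)
    fused = [sum(p[i] for p in prob_by_modality.values()) / n for i in range(len(classes))]
    predicted = classes[max(range(len(classes)), key=fused.__getitem__)]  # argmax
    return fused, predicted


# Example softmax outputs from the text, ordered as [PD, Control]
probs = {
    "heatmap": [0.80, 0.20],
    "scanpath": [0.60, 0.40],
    "aoi": [0.90, 0.10],
}
fused, label = late_fusion(probs)
print([round(p, 3) for p in fused], label)  # [0.767, 0.233] PD
```

Because each input vector sums to 1, the fused vector also sums to 1, so it can be read directly as a probability distribution over PD and Control.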

When predictions from all three streams are merged, EyeMap's fused output consistently matches the expected class label, even when individual modality classifiers give only moderate confidence (such as the scanpath model's PD probability of 0.60). The visualisation of softmax-level fusion shows how EyeMap incorporates the heatmap, scanpath, and AOI outputs into a final classification. Averaging several independent predictions makes the process more robust and reduces the impact of uncertainty in any single stream. By making explicit how modality-specific outputs are processed and aggregated within the pipeline, the illustration supports repeatability.

From a clinical perspective, the fusion technique mirrors diagnostic practice, in which data from several sources are combined to reach a sound decision. Whereas separate models highlight the strengths of individual modalities, EyeMap's fusion produces predictions that are accurate, resilient, and interpretable, and therefore better suited to clinical decision support for Parkinsonism.

Limitations

KNN may not generalize well to high-dimensional or complex visual patterns, leading to confusion between subtle features of Control and PD samples. A linear SVM may struggle with complex non-linear boundaries, especially where feature distributions overlap or class differences are subtle. A standard ANN may lack the capacity to capture spatial hierarchies or fine-grained distinctions, leading to misclassification in edge cases. Although better at feature extraction, AlexNet may overfit or be sensitive to noise, causing instability in borderline predictions. Despite its depth, VGG16 might still focus on irrelevant features because of dataset biases or insufficient regularization. VNet may lack discriminative power or generalize poorly because of architectural or training limitations (Fig. 18). While the proposed method for eye gaze data visualization offers a structured and effective pipeline, several limitations were identified:

- Feature Confusion: Models often confuse subtle signs in images, leading to incorrect Control vs. PD predictions.
- Absence of Semantic Context: The method focuses purely on spatial visualization of gaze points and does not incorporate semantic understanding of image content (e.g., identifying objects or meaningful regions).
- Poor Localization: Heatmaps show that many models focus on irrelevant or noisy regions, indicating poor interpretability.
- Limited Temporal Analysis: Although timestamps are used, the method does not deeply analyze gaze sequences over time (e.g., temporal fixation patterns or saccade dynamics in motion-based stimuli).
- Insufficient Generalization: Misclassifications point to challenges in generalizing to complex or edge-case patterns.

Fig. 18.

False classification instances for different models in EyeMap: (a) KNN, (b) SVM, (c) ANN, (d) AlexNet, (e) VGG16, and (f) VNet.

CRediT author statement

Akshay S*: Conceptualization, Formal analysis, Methodology, Visualization, Writing – original draft.

Amudha J: Supervision, Conceptualization, Investigation, Writing – review and editing.

Amitabh Bhattacharya: Data curation, Resources.

Nitish Kamble: Validation, Project administration.

Pramod Kumar Pal: Supervision, Writing – review and editing.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

We gratefully acknowledge the Department of Neurology – Movement Disorders Laboratory at NIMHANS, Bengaluru, for their crucial support of this research. We are deeply grateful for the provision of eye-tracking facilities, ethical clearance, and assistance with participant coordination, which were essential to the data collection process.

We also acknowledge the valuable contributions of the Eye Tracking and Computer Vision Laboratory at Amrita Vishwa Vidyapeetham, Bengaluru, for their support in data processing and analysis.

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics statements

This study was approved by the Institutional Ethics Committee at the National Institute of Mental Health and Neurosciences (NIMHANS), Bengaluru, under approval number NIMHANS/IEC (BS & NS DIV.)/2019, dated 17–09–2019. Written informed consent was obtained from all participants before their inclusion in the study. The research involved only non-invasive procedures, utilizing an eye tracker as the primary tool for data collection.

Dataset Availability

The gaze visualizations and associated metadata form a new dataset created to support the development and validation of the EyeMap method. This dataset includes labeled examples from PD and control participants and is available from the corresponding author upon reasonable request.

Related research article

Akshay, S., Amudha, J., Narmada, N., Bhattacharya, A., Kamble, N., Pal, P.K. (2023). iAOI: An Eye Movement Based Deep Learning Model to Identify Areas of Interest. In: Morusupalli, R., Dandibhotla, T.S., Atluri, V.V., Windridge, D., Lingras, P., Komati, V.R. (eds) Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2023. Lecture Notes in Computer Science, vol 14078. Springer, Cham. https://doi.org/10.1007/978-3-031-36402-0_61

Contributor Information

Akshay S, Email: s_akshay@my.amrita.edu.

Amudha J, Email: j_amudha@blr.amrita.edu.

Amitabh Bhattacharya, Email: amitabhbhattacharya28@gmail.com.

Nitish Kamble, Email: nitishlk@gmail.com.

Pramod Kumar Pal, Email: palpramod@hotmail.com.

Data availability

Data will be made available on request.

References

- 1. Duchowski A. A breadth-first survey of eye-tracking applications. Behav. Res. Methods. 2007. doi: 10.3758/bf03195475.

- 2. Holmqvist K., et al. Eye Tracking: A Comprehensive Guide to Methods and Measures. Oxford University Press; 2011.

- 3. Tobii Technology. Eye Tracking Data Visualization Methods. White Paper; 2020.

- 4. Tatler B. The effects of visual saliency on eye movements. J. Vis. 2009.

- 5. Kummerer M., Wallis T.S., Bethge M. Deep gaze: saliency prediction using deep neural networks. J. Vis. 2015.

- 6. Pan J., et al. Predicting human eye fixations using convolutional neural networks. IEEE Trans. Image Process. 2017. doi: 10.1109/TIP.2017.2710620.

- 7. Akshay S., Amudha J., Narmada N., Bhattacharya A., Kamble N., Pal P.K. iAOI: an eye movement based deep learning model to identify areas of interest. In: Morusupalli R., Dandibhotla T.S., Atluri V.V., Windridge D., Lingras P., Komati V.R., editors. Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2023. Lecture Notes in Computer Science, Vol 14078. Springer; Cham: 2023.

- 8. Akshay S., et al. Machine learning algorithm to identify eye movement metrics using raw eye tracking data. ICSSIT. IEEE; 2020.

- 9. Blignaut P., Beelders T. Eye-tracking Data Visualization: Heatmaps, Gaze Plots, and Gaze Clouds. Springer; 2020.

- 10. Kurzhals K., et al. ISeeCube: visual analysis of gaze data for video. ETRA. 2014.

- 11. Kumar Akshay. Deep learning approaches for real-time gaze tracking. IJCV. 2023.

- 12. Stellmach S., Dachselt R. Designing gaze-based user interfaces. CHI. 2012.

- 13. Ehinger K., et al. Multi-User Eye Tracking: Challenges and Solutions. ACM; 2019.

- 14. Bulling A., et al. A survey of eye tracking and visual attention. IEEE Trans. Affect. Comput. 2016.

- 15. Chandrasekharan J., Joseph A., Ram A., Nollo G. ETMT: a tool for eye-tracking-based trail-making test to detect cognitive impairment. Sensors. 2023;23:6848. doi: 10.3390/s23156848.

- 16. Akshay S., Amudha J., Kulkarni N., Prashanth L.K. iSTIMULI: prescriptive stimulus design for eye movement analysis of patients with Parkinson's disease. In: Morusupalli R., Dandibhotla T.S., Atluri V.V., Windridge D., Lingras P., Komati V.R., editors. Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2023. Lecture Notes in Computer Science, Vol 14078. Springer; Cham: 2023.

- 17. Chandrasekharan J., Joseph A., Ram A., Nollo G. ETMT: a tool for eye-tracking-based trail-making test to detect cognitive impairment. Sensors. 2023;23(15):6848. doi: 10.3390/s23156848.

- 18. Akshay S., Dhanush S., Nath Aswin G, Amudha J. EyeHelp: predicting an AOI based on eye gaze for patient assistance. Procedia Comput. Sci. 2025;258:1486–1495. doi: 10.1016/j.procs.2025.04.381. ISSN 1877-0509.
