Abstract

The concept of contrastive explanations originating from human reasoning is used in explainable artificial intelligence. In machine learning, contrastive explanations relate alternative prediction outcomes to each other involving the identification of features leading to opposing model decisions. We introduce a methodological framework for deriving contrastive explanations for machine learning models in chemistry to systematically generate intuitive explanations of predictions in high-dimensional feature spaces. The molecular contrastive explanations (MolCE) methodology explores alternative model decisions by generating virtual analogues of test compounds through replacements of molecular building blocks and quantifies the degree of “contrastive shifts” resulting from changes in model probability distributions. In a proof-of-concept study, MolCE was applied to explain selectivity predictions of ligands of D2-like dopamine receptor isoforms.

Keywords: Explainable artificial intelligence, Human reasoning, Contrastive explanations, Molecular features, Analogue comparison, Selectivity prediction

Scientific contribution

We introduce the first approach for generating contrastive explanations of machine learning models in chemistry. The methodology generates explanations of predictions that are readily accessible, chemically intuitive, and interpretable at the molecular level of detail.

Introduction

With the rise of artificial intelligence (AI), machine learning (ML) is increasingly applied in different fields to address many different prediction tasks. Typically, contemporary ML models are “black boxes”, excluding the possibility to explain their decisions based on human considerations [1–3]. This general limitation poses a problem for the acceptance of ML predictions in interdisciplinary research areas such as drug discovery [4, 5]. Given the black box nature of deep ML models, it comes as no surprise that the field of explainable AI (XAI) is substantially gaining in attention [5–8]. In XAI, computational approaches are investigated to provide explanations of ML predictions, either as a part of the modeling processes or retroactively, which is more often the case. To this end, a multitude of concepts and methods have been proposed to provide insights into the inner workings of ML models [8, 9]. These approaches aim to provide explanations for solitary model decisions (local explanations) or strive for global explainability by generalizing internal process patterns as indicators of model behavior [9]. Computational explanations then provide a basis for model interpretation, human understanding, and causal reasoning [10]. Accordingly, explanation methods predominantly focus on accurate predictions, rather than weakly predictive models and their output.

XAI approaches for generating local explanations have received particular attention. For instance, feature attribution methods [9, 11], such as local interpretable model-agnostic explanations (LIME) [12] or SHapley Additive exPlanations (SHAP) [13], quantify the individual contribution of each feature to a model decision. In chemistry, feature attribution analysis is usually combined with mapping of features onto molecular structures to help explain and interpret prediction outcomes [14]. Other XAI approaches adapted for ML in chemistry include, for instance, anchors, a rule-based methodology [15, 16], or counterfactuals [17–19]. Feature attribution and rule-based methods have in common that they attempt to explain a particular prediction without taking alternative prediction outcomes (such as different class labels) into account. In other words, these methods explore the question “why was prediction P obtained?”. Notably, humans often try to explain a given event by considering not only the event itself, but also alternative outcomes [20]. For predictions, this leads to the question “why was prediction P obtained but not Q?” [20]. For instance, counterfactual thinking reflects the tendency of gaining insights into decisions by comparing different outcomes [17]. In molecular ML, counterfactuals are defined as closely related test compounds (analogues) yielding different predictions (class labels) [18, 19]. Moreover, the underlying question why prediction P and not Q was obtained is not only addressed by comparing alternative outcomes, but also by attempting to contrast potential origins of different outcomes, leading to contrastive explanations [21]. In this case, a given event (prediction) P is formally defined as the fact and the alternative event Q as the foil [21]. Contrasting these events aims to reduce cognitive load (with available and potentially contributing features) and increase clarity of ensuing explanations.
In XAI, both of the related yet distinct concepts of counterfactuals and contrastive explanations are utilized [22, 23]. In human and artificial intelligence, contrastive explanations are especially sought after if a different event (prediction) than the observed one was anticipated [21, 23]. In ML, extending the comparison of closely related test instances yielding opposing predictions via counterfactuals [19, 22], contrastive explanations aim to capture features having the greatest impact on differentiating the fact from the foil [22–24]. Therefore, subsets of features responsible for distinct model decisions are determined.

In this work, we have adapted and modified the concept of contrastive explanations for molecular ML and applications in chemistry. A theoretical framework was established to generate chemically intuitive contrastive explanations that are interpretable at the level of molecular structure. In a proof-of-concept investigation, the molecular contrastive explanations (MolCE) approach was applied to analyze selectivity predictions for receptor ligands based on a new molecular test system.

Methods

Theoretical background

In ML, the principal goal of contrastive explanations is obtaining subsets of features present or absent in test instances that are minimally required for reaching opposing predictions [23–25]. This is referred to as the identification of pertinent positive or pertinent negative feature sets, representing minimal sets of features that must be present or absent to yield a particular model decision, respectively [23–25]. Contrastive explanations can be searched for by systematic reduction and re-assembly of feature subsets and determining the impact on predictions. Such explanations can also be generated by perturbing feature sets for test instances to obtain increasingly contrasting outcomes compared to an original prediction [26], that is, generating perturbed feature sets of foils increasingly departing from the fact [26]. Notably, this represents a continuum of increasing feature perturbations that may or may not invert a class label prediction [26].

Formally, a model assigning test instances to different classes is applied to an original instance, yielding an output probability for the class that represents the fact. The model is then applied to the instance obtained by feature perturbation, yielding an output probability for a foil class selected from all available classes. The contrastive behavior is calculated as the normalized shift in the probability distribution of the foil relative to the fact:

| 1 |

The two probability distributions that are compared are derived from the predictions for the original and the perturbed instance, respectively. Each term represents the relative prediction probability of the fact class compared to the foil class, that is, for the original test instance and after perturbation of the feature set, respectively. The values range from −1 to 1, with a value of 1 accounting for a complete probability shift from the fact to the foil class and a value of 0 indicating no shift. Thus, following feature perturbation, positive values reflect “contrastive shifts” towards the foil class and negative values reflect contrastive shifts towards the fact class, corresponding to increasing probability of the original prediction.
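As a minimal sketch, the calculation described above can be written in a few lines. Because the exact form of Eq. 1 is not reproduced in this text, the code assumes one reading that matches the stated properties: the relative probability of the fact class versus the foil class, p_fact / (p_fact + p_foil), is determined before and after perturbation, and the contrastive behavior is the difference between the two. The function names and the handling of a zero denominator are illustrative assumptions.

```python
def relative_fact_probability(p_fact, p_foil):
    """Probability of the fact class relative to fact plus foil (assumed form)."""
    total = p_fact + p_foil
    if total == 0.0:
        # Assumption: no probability mass on either class is treated as undecided.
        return 0.5
    return p_fact / total


def contrastive_behavior(original_probs, analogue_probs, fact_class, foil_class):
    """Shift of relative fact probability from original to perturbed instance.

    Both arguments map class labels to predicted probabilities.
    Positive values mean a shift towards the foil class, negative values
    a shift towards the fact class; the result lies between -1 and 1.
    """
    q_original = relative_fact_probability(
        original_probs[fact_class], original_probs[foil_class]
    )
    q_analogue = relative_fact_probability(
        analogue_probs[fact_class], analogue_probs[foil_class]
    )
    return q_original - q_analogue
```

Under this reading, a virtual analogue that moves all probability from the fact class to the foil class scores 1, and an analogue with unchanged probabilities scores 0.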

Molecular contrastive explanations

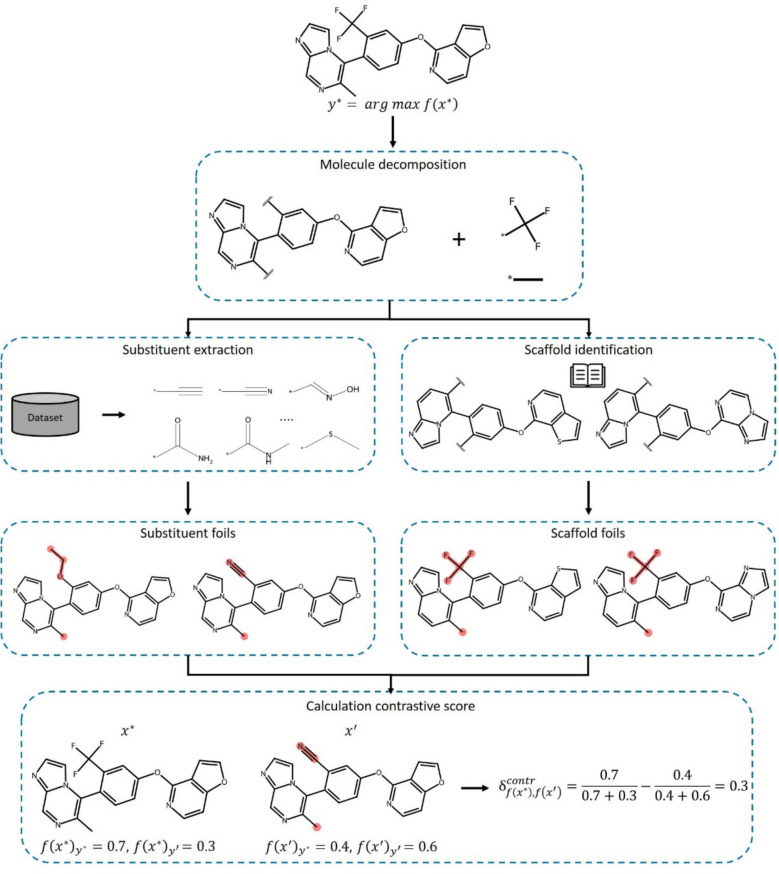

The MolCE approach adapts these principles for assessing contrastive shifts as a consequence of “molecular feature perturbation” resulting from the exchange of substituents or scaffolds (core structures) in given test instances, as illustrated in Fig. 1. To this end, a test compound is decomposed into its substituents and scaffold according to Bemis and Murcko [27]. Then, virtual analogues are produced by replacing substituents or the scaffold (with a similar one, as detailed below). These virtual compounds represent foils. From a given data set used for ML, all unique substituents are extracted and iteratively added to the scaffold of a test instance at existing substitution sites, preserving one original substituent at a time while systematically replacing the others. For example, for a given scaffold with two substituents, each virtual analogue would include the original scaffold, one of the original substituents, and a new substituent from the data set, producing a wealth of substituent foils. Additionally, virtual compounds are generated by combining the original substituents with selected scaffolds (see below), producing scaffold foils.
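The enumeration of substituent foils can be illustrated with a small combinatorial sketch. It works on plain labels rather than molecular structures, and several details are assumptions made for illustration: replaced sites receive every combination of different substituents from the library, and for a compound with a single substitution site the only substituent is replaced. The function name and the substituent labels are hypothetical.

```python
from itertools import product


def substituent_foils(original, library):
    """Enumerate substituent assignments for virtual analogues.

    original: substituents of the test compound, one per substitution site.
    library:  unique substituents extracted from the data set.
    """
    n_sites = len(original)
    if n_sites == 1:
        # Assumption: with a single site, the only substituent is replaced.
        kept_options = [None]
    else:
        kept_options = range(n_sites)

    foils = set()
    for kept in kept_options:
        # The kept site retains its original substituent; every other site
        # receives each library substituent that differs from its original.
        choices = [
            [original[site]]
            if site == kept
            else [s for s in library if s != original[site]]
            for site in range(n_sites)
        ]
        foils.update(product(*choices))
    return sorted(foils)
```

For a scaffold with two sites carrying "F" and "OC" and a library of four substituents, this yields six assignments, each retaining exactly one original substituent at its original site.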

Fig. 1.

MolCE methodology. The application of the MolCE approach to an exemplary compound is summarized. Initially, the compound is decomposed into its scaffold and substituents. Alternative substituents are extracted from a reference data set and equivalent scaffolds are identified based on a reference dictionary of reduced carbon skeletons. Next, substituent foils and scaffold foils are generated through iterative substituent and scaffold replacement, respectively. Finally, the contrastive behavior is calculated for each foil

To identify alternative scaffolds for the generation of scaffold foils, a reference dictionary was generated. For the creation of this dictionary, 1,115,950 unique compounds were extracted from BindingDB [28] and ChEMBL (version 35) [29], yielding a total of 658,659 unique scaffolds. These scaffolds were converted into carbon skeletons by replacing all heteroatoms with carbons and setting all bond orders to one. These carbon skeletons were further reduced (and generalized) by removing all linker atoms having two bonded neighbors. Accordingly, each reduced carbon skeleton represented a set of skeletons with varying linker lengths. The resulting 43,899 reduced carbon skeletons were stored in the dictionary, which can be queried with a given reduced carbon skeleton extracted from a scaffold of interest to identify a set of alternative scaffolds. By design, these scaffolds are topologically closely related to the scaffold of interest.

For a test compound yielding a particular reduced skeleton, a variety of scaffolds were obtained. To ensure high similarity of original and alternative scaffolds, an atom-based size cut-off of 85% was applied, meaning that the number of atoms in an alternative scaffold could differ by at most 15% from the number of atoms in the original scaffold, thus focusing on very similar scaffolds. Then, in a test compound, the scaffold was systematically replaced with other qualifying candidates while retaining the original substituents.
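The dictionary query and size filter can be sketched as follows. The data structures are assumptions for illustration: the dictionary maps a reduced carbon skeleton key to a set of scaffold identifiers, atom counts are supplied separately, and the 15% boundary is treated as inclusive. All names and identifiers are hypothetical, and the chemistry (skeleton generation) is outside the scope of this sketch.

```python
def alternative_scaffolds(query, skeleton_key, skeleton_index, atom_counts,
                          max_diff_percent=15):
    """Return scaffolds sharing the query's reduced skeleton and similar in size.

    skeleton_index: maps a reduced carbon skeleton key to a set of scaffolds.
    atom_counts:    maps each scaffold to its number of atoms.
    """
    candidates = skeleton_index.get(skeleton_key, set())
    n_query = atom_counts[query]
    return sorted(
        scaffold
        for scaffold in candidates
        # Exclude the query itself and apply the size cut-off with integer
        # arithmetic to avoid floating-point boundary effects.
        if scaffold != query
        and abs(atom_counts[scaffold] - n_query) * 100 <= max_diff_percent * n_query
    )
```

With a query scaffold of 20 atoms, candidates of 17 to 23 atoms qualify, whereas a candidate of 24 atoms is rejected.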

The generation of substituent foils and scaffold foils ensured that feature perturbations led to chemically meaningful changes of test compounds that remained within the applicability domain. Therefore, contrastive shifts resulted from chemically relevant differences in molecular features (instead of artificial feature perturbations).

For a predicted test compound (fact), the most contrastive virtual analogues (foils) were identified by calculating the contrastive behavior, as detailed above. In the exemplary calculation at the bottom of Fig. 1, the model was applied to the original test instance and a virtual analogue to obtain prediction probabilities for the fact class and the foil class. The resulting probabilities were then used to calculate the contrastive behavior as defined in (1). Increasingly positive values indicate contrastive shifts of increasing magnitude towards the foil class.

The application of MolCE does not depend on specific classification or regression models and there is no intrinsic link between model performance and contrastive explanations of individual predictions.

Selectivity data sets

For our proof-of-concept application of MolCE, compound selectivity data sets were generated. Selectivity for a given target over others is generally more difficult to predict than compound activity versus inactivity, and selectivity data sets are typically much smaller than compound activity classes (if they can be obtained at all). Thus, selectivity predictions represent a low-data application, which we deliberately selected for MolCE analysis.

For our study, a compound test system for multi-class predictions comprising compounds selective for different D2-like dopamine receptors and non-selective compounds was assembled. Dopamine receptors are relevant pharmaceutical targets implicated in various neurological disorders such as Parkinson’s disease [30]. We selected the D2-like dopamine receptor family members D2R, D3R, and D4R, for which we could identify sufficient numbers of selective ligands for ML. While these receptor isoforms have high sequence similarity, their localization and function differ significantly [31]. For instance, D2R is primarily associated with antipsychotic drugs where common side effects include motor and endocrine irregularities [32]. Conversely, targeting D4R is a promising strategy to treat schizophrenia and pathologies resulting from opioid usage [32]. Hence, dopamine receptor isoform-selective compounds are sought after for the treatment of isoform-specific diseases [32, 33].

Receptor isoform pair-based selectivity data sets were generated using antagonists having pre-defined potency differences. A shared compound was classified as selective if the potency difference was at least 100-fold and as non-selective if the potency difference was at most tenfold. These criteria ensured that ligand selectivity was properly accounted for based on experimental potency differences.
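The labeling rule can be sketched as a small function. It assumes potency values given in nM for both receptors (lower values meaning higher potency) and assumes that compounds with intermediate potency differences, between 10- and 100-fold, receive no label; the text does not state how such compounds were handled. The function and argument names are hypothetical.

```python
def selectivity_label(potency_a, potency_b, name_a, name_b):
    """Label a compound tested against two receptors by fold potency difference.

    potency_a, potency_b: potency values in the same unit, lower = more potent.
    Returns a selectivity label, "non-selective", or None (assumed unlabeled).
    """
    fold = max(potency_a, potency_b) / min(potency_a, potency_b)
    if fold >= 100:
        # At least 100-fold difference: selective for the more potent target.
        preferred = name_a if potency_a < potency_b else name_b
        return f"{preferred}-selective"
    if fold <= 10:
        # At most tenfold difference: non-selective.
        return "non-selective"
    return None
```

For example, a compound with 5 nM potency for D2R and 500 nM for D4R would be labeled D2R-selective under these criteria.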

Ligands of D2R, D3R, and D4R were extracted from BindingDB and ChEMBL, filtering out potential assay interference compounds and colloidal aggregators [34–36]. In addition, availability of a numerically specified potency value (IC50, Ki, or Kd) corresponding to a potency of at least 10 µM (that is, a value of 10 µM or lower) was required. Accordingly, compounds with activity in the low micromolar to low nanomolar range were selected. If a compound had multiple potency values falling into the same order of magnitude (that is, one log unit or less), the average of the values was calculated. By contrast, a compound was disregarded if the measurements differed by more than one log unit.
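The handling of multiple measurements can be sketched as follows. Two details are assumptions: "differing by one log unit or less" is implemented as a ratio of largest to smallest value of at most 10, and the average is taken as the arithmetic mean of the raw values rather than of their logarithms. The function name is hypothetical.

```python
def aggregate_potency(values):
    """Combine repeated potency measurements of one compound for one target.

    values: potency measurements in the same unit (for example, nM).
    Returns the mean value, or None if the measurements span more than
    one log unit and the compound is therefore disregarded.
    """
    if not values:
        return None
    if max(values) / min(values) > 10:
        # Measurements differ by more than one order of magnitude.
        return None
    return sum(values) / len(values)
```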

Two selectivity data sets were assembled for the target pairs D2R–D4R and D3R–D4R. As expected, these data sets contained more non-selective (142–735) than selective compounds (18–230), as reported in Table 1. In addition, the numbers of unique analogue series (AS; compounds having the same core structure and different substituents) and singletons identified using the compound-core relationship algorithm [37] are reported. The distribution of AS and singletons relative to the total number of compounds reflected a comparable degree of chemical diversity across all selectivity classes.

Table 1.

D2-like dopamine receptor selectivity data sets

| Isoform pair | Label | # Compounds | # AS | # Singletons |
|---|---|---|---|---|
| D2R–D4R | Non-selective | 735 | 101 | 86 |
| D2R–D4R | D2R-selective | 29 | 6 | 5 |
| D2R–D4R | D4R-selective | 230 | 25 | 10 |
| D3R–D4R | Non-selective | 142 | 25 | 42 |
| D3R–D4R | D3R-selective | 18 | 4 | 3 |
| D3R–D4R | D4R-selective | 37 | 5 | 6 |

Balanced random forest models

To distinguish between selective and non-selective compounds, balanced random forest (BRF) models [38] controlling data imbalance were generated using scikit-learn [39]. For each prediction task, 10 independent trials were carried out by partitioning compounds into training sets (70%) and test sets (30%) using the “StratifiedShuffleSplit” class of scikit-learn. BRF hyperparameters included the minimal number of samples for a leaf node (1, 2, 5, 10), the minimal number of samples required for a split (2, 3, 5, 10), and the number of decision trees (25, 50, 100, 200, 400). These hyperparameters were optimized with GridSearchCV [39], using the training set further partitioned into training and validation subsets (70%/30%). Compounds were represented using a folded extended-connectivity fingerprint with bond diameter 4 (ECFP4) [40].
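To make the size of the hyperparameter search explicit, the grid can be written out and enumerated. The parameter names follow scikit-learn's random forest conventions; mapping the three hyperparameters described above onto `min_samples_leaf`, `min_samples_split`, and `n_estimators` is an assumption, and the helper function is hypothetical.

```python
from itertools import product

# Value ranges as given in the text; the parameter names are assumed.
PARAM_GRID = {
    "min_samples_leaf": [1, 2, 5, 10],
    "min_samples_split": [2, 3, 5, 10],
    "n_estimators": [25, 50, 100, 200, 400],
}


def grid_candidates(grid):
    """Expand a parameter grid into a list of individual settings."""
    names = list(grid)
    return [
        dict(zip(names, values))
        for values in product(*(grid[name] for name in names))
    ]
```

The grid expands to 4 × 4 × 5 = 80 candidate settings per trial. A dictionary of this shape is also what `GridSearchCV` accepts as its `param_grid` argument.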

Performance measures

To assess the ability of models to distinguish between non-selective and selective ligands, several performance metrics were applied including balanced accuracy (BA) [41], precision, recall, F1 score (F1) [42], and Matthews correlation coefficient (MCC) [43].
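For reference, these metrics have the following standard forms for a binary classification. These are the textbook definitions; which multi-class variants were applied to the three-class selectivity models is not specified here, so the binary forms are shown as an assumption.

$$
\begin{aligned}
\mathrm{TPR} &= \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FN}}, \qquad
\mathrm{TNR} = \frac{\mathrm{TN}}{\mathrm{TN} + \mathrm{FP}}, \qquad
\mathrm{BA} = \frac{\mathrm{TPR} + \mathrm{TNR}}{2} \\
\mathrm{Precision} &= \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}}, \qquad
\mathrm{Recall} = \mathrm{TPR}, \qquad
\mathrm{F1} = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \\
\mathrm{MCC} &= \frac{\mathrm{TP} \cdot \mathrm{TN} - \mathrm{FP} \cdot \mathrm{FN}}{\sqrt{(\mathrm{TP} + \mathrm{FP})(\mathrm{TP} + \mathrm{FN})(\mathrm{TN} + \mathrm{FP})(\mathrm{TN} + \mathrm{FN})}}
\end{aligned}
$$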

TP abbreviates true positive predictions; FP false positives; TN, true negatives and FN, false negatives. TPR refers to the true positive rate and TNR to the true negative rate.

Macro-averaging was applied to the F1 score, precision, and recall. Accordingly, the metrics were calculated separately for each class and the arithmetic mean was determined as the final value.
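Macro-averaging as described above can be sketched in plain Python. Each class is treated in turn as the positive class, and the per-class values are averaged with equal weight. Returning 0 for an undefined ratio (for example, a class that is never predicted) is an assumption, and the function name is hypothetical.

```python
def macro_scores(y_true, y_pred):
    """Macro-averaged precision, recall, and F1 over all observed classes."""
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1_scores = [], [], []
    for cls in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == cls and p == cls for t, p in pairs)
        fp = sum(t != cls and p == cls for t, p in pairs)
        fn = sum(t == cls and p != cls for t, p in pairs)
        # Assumption: undefined ratios are set to 0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)
    n = len(classes)
    return {
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1_scores) / n,
    }
```

Because every class contributes equally, small selective classes influence the final value as much as the large non-selective class.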

Results and discussion

Classification performance

For both selectivity data sets, the ability of BRF models to distinguish between non-selective and selective compounds was determined. Figure 2 summarizes the performance of the BRF models across 10 independent trials.

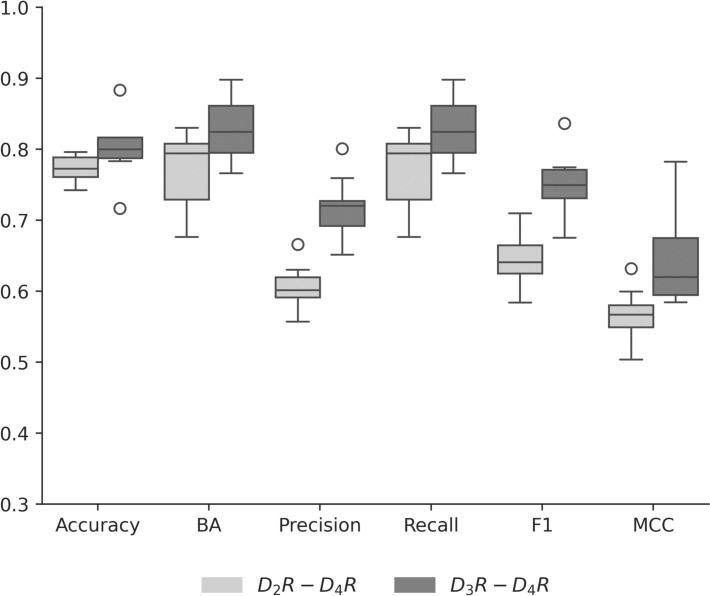

Fig. 2.

Model performance. Boxplots report the performance of BRF models on dopamine receptor selectivity data sets across 10 independent trials as based on different metrics

BRF models adequately distinguished selective from non-selective compounds with a global accuracy of ~80%. Overall performance based on MCC values was marginally higher for the D3R–D4R compared to the D2R–D4R data set, with median values of 0.62 and 0.57, respectively. Precision values were consistently lower than recall, with median values of 0.60 and 0.72 for precision and 0.79 and 0.82 for recall, respectively. Thus, most positive instances were correctly predicted for the non-selective and selective classes. For comparison, other ML methods we tested, including support vector machines with different kernels, had lower performance for selective classes, with median balanced accuracy values ranging from 0.64 to 0.71.

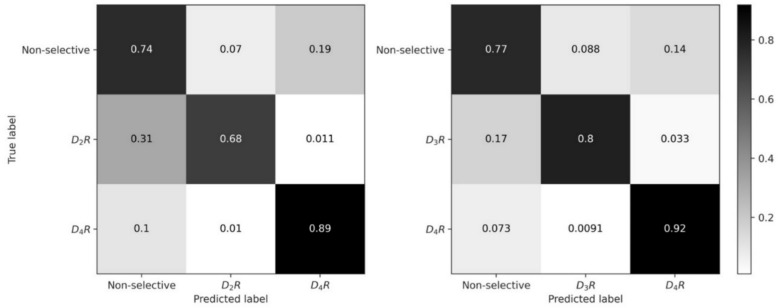

To further evaluate the performance of the BRF models, confusion matrices were generated, as shown in Fig. 3. The matrices revealed that the models had similar performance for selective and non-selective test compounds, with normalized accuracy values ranging from 0.68 to 0.92 and from 0.74 to 0.77, respectively. Importantly, if selective compounds were incorrectly predicted, they were mostly predicted to be non-selective, rather than belonging to the other selectivity class. Similarly, for both selectivity data sets, incorrectly predicted non-selective compounds were mostly predicted to be D4R-selective. In this low-data application (see above), the overall high prediction accuracy for selective and non-selective test compounds provided a solid basis for subsequent MolCE analysis focusing on correctly predicted test compounds.

Fig. 3.

Class-dependent prediction accuracy. For the two selectivity data sets, confusion matrices report BRF model prediction accuracy over 10 independent trials. The matrices were normalized based on true labels

MolCE statistics

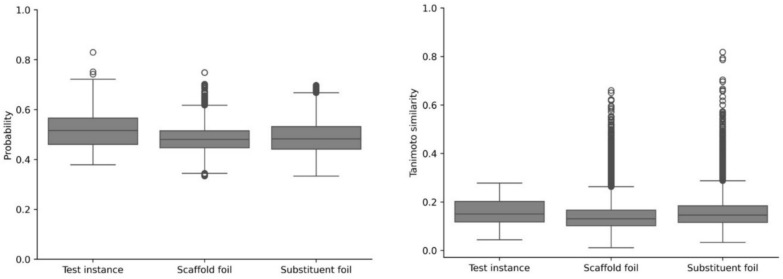

MolCE analysis was carried out for all correctly predicted non-selective and incorrectly predicted selective test instances. Correctly predicted non-selective compounds were of particular interest because determination of contrastive shifts enabled the identification of features driving the predictions of these compounds towards isoform selectivity. For this purpose, each set of selective compounds was considered a foil class. Conversely, for incorrectly predicted selective test instances, the predicted non-selective class represented the fact and the correct selectivity class served as the foil class. This set-up enabled the identification of chemical features directing the incorrect prediction of non-selectivity (the fact) towards the correct prediction of selectivity (the foil). Additionally, it provided the basis for the analysis of chemical features determining correct predictions of compound selectivity that were not captured by incorrect predictions. First, it was established whether the contrastive examples fell into the applicability domain of the model. Figure 4 (left) shows the highest class probability following the prediction of test instances and generated scaffold or substituent foils. For BRF models, the maximal probability serves as a direct measure of model confidence. The probability distributions are similar for test instances and the generated foils, indicating a high degree of confidence in the test predictions and contrastive examples. Next, it was determined whether the generated contrastive examples were similar to the training compounds. To this end, pairwise Tanimoto similarity calculations between scaffold and substituent foils and all training instances were carried out (Fig. 4, right). The similarity distributions of the contrastive examples are consistent with the distribution of the test instances, revealing that the contrastive examples fell into the applicability domain.
Furthermore, to verify that contrastive shifts were not the result of large feature changes to compounds, the correlation between the contrastive shifts and Tanimoto similarity of the contrastive examples relative to test instances was determined. At most a weak negative correlation was observed for scaffold or substituent foils (with Pearson correlation coefficients ranging from −0.24 to −0.08). Thus, the observed contrastive shifts were the result of defined chemical changes and not cumulative feature perturbations.
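The two quantities used in this control can be sketched in plain Python: Tanimoto similarity between fingerprints represented as sets of on-bit positions, and the Pearson correlation coefficient between two lists of values. The set-based fingerprint representation and the function names are assumptions for illustration; they do not reproduce the ECFP4 calculation itself.

```python
import math


def tanimoto(bits_a, bits_b):
    """Tanimoto similarity of two fingerprints given as on-bit positions."""
    a, b = set(bits_a), set(bits_b)
    union = len(a | b)
    # Assumption: two empty fingerprints are assigned a similarity of 0.
    return len(a & b) / union if union else 0.0


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long value lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    norm_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    norm_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if norm_x == 0.0 or norm_y == 0.0:
        # Correlation is undefined if either list has no variance.
        return float("nan")
    return cov / (norm_x * norm_y)
```

In this setting, `xs` would hold the contrastive behavior values of the foils and `ys` their Tanimoto similarity to the respective test compound.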

Fig. 4.

Applicability domain analysis. For an exemplary trial, all test set predictions and resulting scaffold and substituent foils were analyzed. Boxplots report the distribution of the highest class probability following model prediction of test instances or foils (left). Additionally, the distributions of pairwise Tanimoto similarity calculations for test instances or foils and all training instances are shown (right)

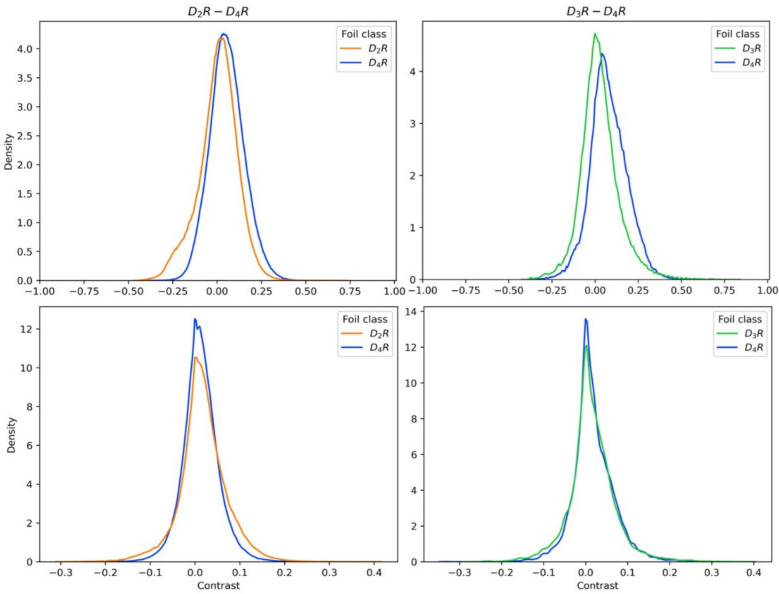

Figure 5 reports the contrastive behavior of all scaffold foils (top) and substituent foils (bottom) for the D2R–D4R (left) and D3R–D4R (right) data sets. The distributions were similar for both selectivity data sets. Most scaffold foils showed only marginal contrastive behavior relative to the original prediction. This finding was attributable to the high similarity between the original and exchanged scaffolds. However, in each case, extreme values of the distributions identified a number of similar but highly contrastive scaffold foils, with scores ranging from 0.46 to 0.83, indicating large contrastive shifts towards the fact or foil class. While corresponding observations were made for substituent foils, they generally caused contrastive shifts of smaller magnitude, with scores ranging from −0.33 to 0.41.

Fig. 5.

Contrastive behavior. Shown are the distributions of contrastive behavior values of all scaffold foils (top) and substituent foils (bottom) generated over 10 independent trials for data sets D2R–D4R (left) and D3R–D4R (right)

Following our approach, the design of scaffold foils and substituent foils was structurally conservative, focusing on structural analogues, as it should be in order to arrive at chemically intuitive contrastive explanations. Importantly, the score distributions consistently included values indicating substantial contrastive shifts. Since sampling of our foils through scaffold or substituent replacements was computationally efficient, informative foils were consistently identified among large numbers of candidates. These scaffold foils and substituent foils captured small chemical modifications that disproportionately affected model decisions, revealing chemical features that were critically important for the ability of the BRF models to distinguish between fact and foil classes, as discussed in the following.

Contrastive explanations

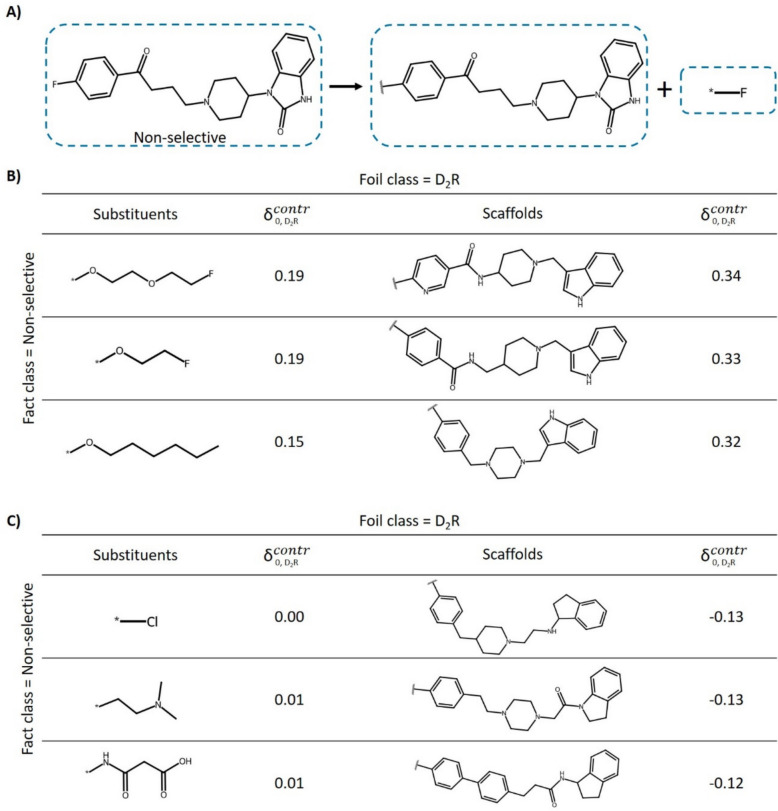

Figure 6a illustrates the application of MolCE to an exemplary correctly predicted non-selective test compound. In this case, D2R-selective compounds represented the foil class. Figure 6b shows the most contrastive alternative substituents and scaffolds that shifted the prediction towards D2R selectivity. Comparably large substituents containing ether moieties displayed the highest contrastive behavior. Moreover, small modifications of the original scaffold significantly impacted the prediction, with contrastive behavior scores ranging from 0.32 to 0.34. In the two most contrastive scaffolds, the original linker fragment was replaced with an amide linker. In all three contrastive scaffolds, the original imidazolidinone was replaced with a pyrroline moiety, revealing confined structural modifications that consistently caused contrastive shifts, indicating their relevance for the prediction of D2R selectivity. This observation was verified by determining the relative occurrence of the substructure across the data set. The pyrroline moiety was detected in 31% of the D2R-selective ligands compared to 9% and 10% of D4R- and non-selective ligands, respectively. The least contrastive substituents and scaffolds shown in Fig. 6c either had a negligible effect on the predictions or further shifted the probability in support of the fact class prediction. The original fluorine substituent was freely interchangeable with the least contrastive substituent, chlorine, as indicated by a score of 0. Furthermore, several similar scaffolds with negative scores were identified, thus further increasing the probability of the original prediction. Hence, on the one hand, different substituent and scaffold modifications were found to further support the correct prediction of non-selectivity; on the other, specific modifications were identified to cause substantial contrastive shifts towards the foil class.

Fig. 6.

Contrastive explanation for a non-selective test compound. Shown is the application of MolCE to a correctly predicted non-selective test instance, with D2R-selective compounds representing the foil class. A The compound is decomposed into its scaffold and substituent. In B and C, the most and least contrastive substituents and scaffolds are shown, respectively

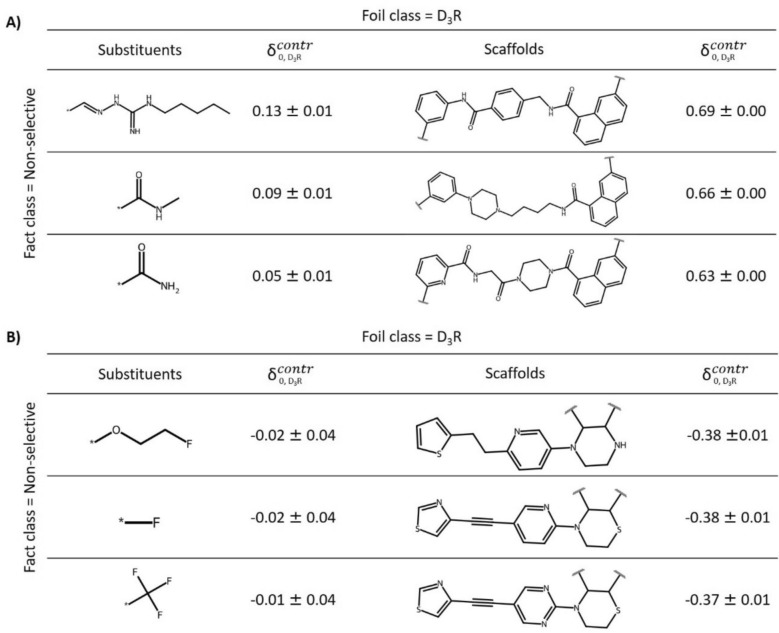

Next, we aggregated contrastive behavior for test compounds to search for global explanations. To this end, contrastive behavior was calculated for all correctly predicted non-selective test compounds of the D3R–D4R data set over 10 independent trials, with D3R-selective compounds serving as the foil class. The values were averaged for all scaffolds from scaffold foils and substituents from substituent foils with single substitution sites to identify the most and least contrastive scaffolds and substituents, respectively (Fig. 7).
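The aggregation step can be sketched as a grouping of individual scores by scaffold or substituent followed by averaging and ranking. The input format, pairs of a fragment label and a contrastive behavior value pooled over test compounds and trials, is an assumption, as are the function name and labels.

```python
from collections import defaultdict


def mean_contrastive_behavior(records):
    """Average contrastive behavior per fragment, ranked from most to least.

    records: iterable of (fragment, score) pairs pooled over test compounds
             and trials.
    Returns a list of (fragment, mean score) sorted by decreasing mean.
    """
    scores = defaultdict(list)
    for fragment, score in records:
        scores[fragment].append(score)
    means = {frag: sum(vals) / len(vals) for frag, vals in scores.items()}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)
```

The top of the ranking then corresponds to the most contrastive fragments, and entries with negative means at the bottom correspond to fragments that reinforce the original prediction.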

Fig. 7.

Global MolCE analysis of correct predictions. For all correctly predicted non-selective test compounds of the D3R–D4R data set across 10 independent trials, with D3R-selective compounds serving as the foil class, A and B show the most and least contrastive substituents and scaffolds, respectively, together with their mean contrastive behavior values

Figure 7a shows the most contrastive scaffolds and substituents. Mean contrastive shifts were much larger for scaffolds than substituents, indicating a key role of specific core structure modifications for distinguishing between non-selective and D3R-selective compounds. Highly-contrastive scaffolds contained amide linker and naphthalene moieties. In addition, consistently contrastive substituents were amine or amide derivatives. Conversely, Fig. 7b shows the least contrastive substituents and scaffolds, reinforcing correct predictions of non-selective compounds (with negative mean scores). These scaffolds contained heterocyclic amines combined with thiophene or thiazole moieties, which were not observed in highly-contrastive scaffolds. The least contrastive substituents contained fluorine substituents (including a single fluorine and trifluoromethyl group). Encouragingly, MolCE analysis identified global contrastive explanations for distinguishing between non-selective and D3R-selective test compounds. As an additional control, selectivity labels were randomized and the models re-trained using randomized data. For the resulting random predictions, the original contrastive shifts were no longer detectable.

Furthermore, global MolCE analysis was carried out for all D4R-selective test compounds from the D2R–D4R data set that were incorrectly predicted to be non-selective, hence representing a prediction scenario different from the one discussed above. In this case, the fact class was the incorrect prediction of non-selectivity and the foil class the correct prediction of D4R selectivity. Accordingly, the most contrastive substituents and scaffolds shifted the probability distribution furthest towards the correct prediction outcome. As shown in Fig. 8a, contrastive substituents comprised carbonitrile, ether, and amine groups. Given their small positive scores, these substituents did not substantially affect the predictions. By contrast, contrastive shifts were much larger for scaffold foils. Notably, D4R-selective compounds contained different core structure variants, which was also reflected by the corresponding scaffold foils causing the largest contrastive shifts. The common feature of these scaffolds was the presence of a piperazine linked to an N-containing heterocyclic ring. In light of these observations, we determined the occurrence of the piperazine moiety in the core structures of the D2R–D4R data set. This substructure occurred in 65% of the D4R-selective ligands compared to 31% and 43% of D2R- and non-selective ligands, respectively. Figure 8b shows exemplary D4R-selective compounds containing a piperazine moiety. In all compounds, the piperazine substructure was also linked to an N-containing heterocyclic ring. These observations provided an intuitive explanation for the incorrect predictions, as the ML model preferentially associated D4R selectivity with this signature structural motif. However, the piperazine-heterocycle motif was not contained in all D4R-selective compounds including the incorrectly predicted instances. Figure 8c shows mapping of SHAP feature importance values on the D4R-selective compounds containing a piperazine moiety.
On the basis of SHAP analysis, the piperazine motif also strongly contributed to the correct prediction of D4R selectivity, consistent with the contrastive shifts for incorrect predictions of non-selectivity. However, SHAP analysis prioritizes large substructures for individual predictions, which is arguably more complex and less clear than the focus on the piperazine motif resulting from the analysis of contrastive shifts.

Fig. 8.

Global MolCE analysis of incorrect predictions. A For all D4R-selective test compounds from the D2R–D4R data set incorrectly predicted to be non-selective over 10 trials, with correctly predicted D4R-selective compounds serving as the foil class, the most contrastive substituents and scaffolds are shown. B Exemplary D4R-selective compounds containing the piperazine moiety (red) are shown. C For comparison, SHAP values were calculated with TreeExplainer [44] to quantify feature contributions. Values of features present in the D4R-selective test compounds were projected on their structures by atom-based mapping. Positive SHAP values (red) support the prediction of D4R-selectivity while negative values (blue) oppose this prediction

Conclusions

The black box nature of contemporary ML models works against the acceptance of predictions for experimental design and thus limits the potential impact of ML in interdisciplinary research and development. Therefore, the field of XAI has steadily gained attention in recent years. In general, XAI approaches aim to provide computational explanations for predictions or general insights into model behavior or internal processes. In the natural sciences, intuitive accessibility and interpretability of computational explanations are critically important for potential experimental follow-up. Due to their computational heterogeneity, not all XAI approaches are well suited for generating intuitive explanations of predictions that can be appreciated by an interdisciplinary audience. As a consequence, concepts from fields such as psychology or the social sciences are sought that can be adapted for XAI and have the potential to closely interface human and artificial intelligence. Among these is contrastive reasoning, reflecting a human tendency to explain non-anticipated events by concentrating on opposing outcomes. In XAI, contrastive explanations have thus far mostly focused on identifying feature subsets that are minimally required to ensure or prevent a particular prediction. In successful cases, this yields sound computational explanations for model decisions, but does not necessarily ensure interpretability and causal reasoning, especially in the natural sciences.

We have devised the first adaptation of contrastive explanations for applications in chemistry, aiming to generate explanations that can be readily appreciated from a chemical perspective and further explored at the molecular level of detail. To this end, the MolCE methodology generates virtual candidate molecules (instead of exploring ML feature sets) and quantifies their contrastive behavior relative to “factual” predictions. This makes it possible to determine contrastive shifts, select preferred candidate molecules, and analyze structural features contributing to contrastive explanations, as shown herein. The “fact-foil” framework for assessing contrastive behavior is versatile and capable of accounting for various prediction settings, making it possible to center the analysis in different ways. Intuitive accessibility and interpretability of predictions also play a key role in medicinal chemistry-driven early-phase drug discovery. In support of candidate selection and compound synthesis, contrastive explanations focusing on compound structures and minimal changes are readily accessible to practicing chemists, without the need to consider theoretical foundations in detail. While complementary to feature attribution methods, contrastive explanations generated with MolCE often provide a clearer chemical picture of the origins of prediction outcomes than the quantification of feature contributions, because they can be interpreted in chemical terms by comparing structural analogues. This is particularly attractive for introducing and evaluating compound predictions in the practice of medicinal chemistry.

Taken together, the findings of our proof-of-concept study suggest that the MolCE approach will be of considerable interest for molecular ML and practical applications in chemistry. The approach should also be applicable to further advance the analysis of molecular counterfactuals, for instance, by determining contrastive shifts as a consequence of minimal structural modifications, providing an opportunity for future research. Moreover, the method can be easily adapted to regression tasks by calculating contrastive shifts as a function of predicted values instead of class probabilities. As a part of our study, the new selectivity data sets and the MolCE method are made freely available.
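To make the notion of a contrastive shift between a test compound and its virtual analogues more concrete, the following minimal Python sketch ranks analogues by how far a structural replacement moves the model output from the fact class toward the foil class. It is an illustration under stated assumptions and not the MolCE implementation: the shift formula (change in the foil-minus-fact probability margin), the function names `contrastive_shift` and `rank_analogues`, the class labels, and all probability values are hypothetical and were chosen only for this example.

```python
from typing import Dict, List, Tuple

# Class probabilities of one compound, e.g. taken from a classifier's
# probability output. All numbers used below are made up for illustration.
Probs = Dict[str, float]


def contrastive_shift(p_original: Probs, p_analogue: Probs,
                      fact: str, foil: str) -> float:
    """Change in the foil-minus-fact probability margin.

    ASSUMPTION: this margin-based definition is a stand-in chosen for the
    sketch; it is not claimed to be the formula used by MolCE.
    """
    margin_original = p_original[foil] - p_original[fact]
    margin_analogue = p_analogue[foil] - p_analogue[fact]
    return margin_analogue - margin_original


def rank_analogues(p_original: Probs, analogues: Dict[str, Probs],
                   fact: str, foil: str) -> List[Tuple[str, float]]:
    """Sort virtual analogues by decreasing contrastive shift."""
    shifts = {
        name: contrastive_shift(p_original, probs, fact, foil)
        for name, probs in analogues.items()
    }
    return sorted(shifts.items(), key=lambda item: item[1], reverse=True)


# Hypothetical test compound predicted to be non-selective (the fact).
p_test = {"non-selective": 0.70, "D4R-selective": 0.30}

# Hypothetical virtual analogues obtained by replacing a building block.
virtual_analogues = {
    "analogue_1": {"non-selective": 0.40, "D4R-selective": 0.60},
    "analogue_2": {"non-selective": 0.65, "D4R-selective": 0.35},
}

ranking = rank_analogues(p_test, virtual_analogues,
                         fact="non-selective", foil="D4R-selective")
for name, shift in ranking:
    print(f"{name}: contrastive shift = {shift:+.2f}")
```

In this toy setting, the analogue with the largest positive shift would be the preferred candidate for a contrastive explanation. For a regression task, the same ranking could in principle be based on the difference between predicted values of the analogue and the test compound instead of on class probabilities.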

Abbreviations

- AI: Artificial intelligence
- AS: Analogue series
- BA: Balanced accuracy
- BRF: Balanced random forest
- CPDS: Compounds
- ECFP4: Extended-connectivity fingerprint with bond diameter 4
- FN: False negative
- FP: False positive
- LIME: Local interpretable model-agnostic explanations
- MCC: Matthews correlation coefficient
- ML: Machine learning
- MolCE: Molecular contrastive explanations
- SHAP: Shapley additive explanations
- TN: True negative
- TNR: True negative rate
- TP: True positive
- TPR: True positive rate
- XAI: Explainable AI

Author contributions

Alec Lamens: Conceptualization, Methodology, Software, Data curation, Investigation, Formal analysis, Writing—original draft, Writing—review and editing. Jürgen Bajorath: Conceptualization, Methodology, Investigation, Formal analysis, Writing—original draft, Writing—review and editing.

Funding

Open Access funding enabled and organized by Projekt DEAL. There are no external funders of this work.

Data availability

All data and code are available via the following link: https://uni-bonn.sciebo.de/s/UZmmShkogZrMbGQ.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Castelvecchi D (2016) Can we open the black box of AI? Nature 538:20–23. 10.1038/538020a

- 2. Rudin C (2022) Why black box machine learning should be avoided for high-stakes decisions, in brief. Nat Rev Meth Primers 2:81. 10.1038/s43586-022-00172-0

- 3. Liang Y, Li S, Yan C, Li M, Jiang C (2021) Explaining the black-box model: a survey of local interpretation methods for deep neural networks. Neurocomputing 419:168–182. 10.1016/j.neucom.2020.08.011

- 4. Vamathevan J, Clark D, Czodrowski P, Dunham I, Ferran E, Lee G, Li B, Madabhushi A, Shah P, Spitzer M, Zhao S (2019) Applications of machine learning in drug discovery and development. Nat Rev Drug Discov 18:463–477. 10.1038/s41573-019-0024-5

- 5. Vijayan RSK, Kihlberg J, Cross JB, Poongavanam V (2022) Enhancing preclinical drug discovery with artificial intelligence. Drug Discov Today 27:967–984. 10.1016/j.drudis.2021.11.023

- 6. Gunning D, Stefik M, Choi J, Miller T, Stumpf S, Yang GZ (2019) XAI—explainable artificial intelligence. Sci Robot 4:eaay7120. 10.1126/scirobotics.aay7120

- 7. Vilone G, Longo L (2021) Notions of explainability and evaluation approaches for explainable artificial intelligence. Inf Fusion 76:89–106. 10.1016/j.inffus.2021.05.009

- 8. Angelov PP, Soares EA, Jiang R, Arnold NI, Atkinson PM (2021) Explainable artificial intelligence: an analytical review. Wiley Interdiscip Rev Data Min Knowl Discov 11:e1424. 10.1002/widm.1424

- 9. Linardatos P, Papastefanopoulos V, Kotsiantis S (2020) Explainable AI: a review of machine learning interpretability methods. Entropy 23:18. 10.3390/e23010018

- 10. Bajorath J (2025) From scientific theory to duality of predictive artificial intelligence models. Cell Rep Phys Sci 6:102516. 10.1016/j.xcrp.2025.102516

- 11. Wang Z, Huang C, Li Y, Yao X (2024) Multi-objective feature attribution explanation for explainable machine learning. ACM Trans Evol Comput Optim 4:1–32. 10.1145/3617380

- 12. Ribeiro MT, Singh S, Guestrin C (2016) Why should I trust you? Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp 1135–1144. 10.1145/2939672.293977

- 13. Chen H, Covert IC, Lundberg SM, Lee S (2023) Algorithms to estimate Shapley value feature attributions. Nat Mach Intell 5:590–601. 10.1038/s42256-023-00657-x

- 14. Rodríguez-Pérez R, Miljković F, Bajorath J (2022) Machine learning in chemoinformatics and medicinal chemistry. Annu Rev Biomed Data Sci 5:43–65. 10.1146/annurev-biodatasci-122120-124216

- 15. Ribeiro MT, Singh S, Guestrin C (2018) Anchors: high-precision model-agnostic explanations. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 32, pp 1727–1735. 10.1609/aaai.v32i1.11491

- 16. Lamens A, Bajorath J (2024) MolAnchor method for explaining compound predictions based on substructures. Eur J Med Chem Rep 12:100230. 10.1016/j.ejmcr.2024.100230

- 17. Byrne RM (2016) Counterfactual thought. Annu Rev Psychol 67:135–157. 10.1146/annurev-psych-122414-033249

- 18. Wellawatte GP, Seshadri A, White AD (2022) Model agnostic generation of counterfactual explanation for molecules. Chem Sci 13:3697–3705. 10.1039/D1SC05259D

- 19. Lamens A, Bajorath J (2024) Generation of molecular counterfactuals for explainable machine learning based on core-substituent recombination. ChemMedChem 19:e202300586. 10.1002/cmdc.202300586

- 20. Miller T (2019) Explanation in artificial intelligence: insights from the social sciences. Artif Intell 267:1–38. 10.1016/j.artint.2018.07.007

- 21. Lipton P (1990) Contrastive explanation. R Inst Philos Suppl 27:247–266. 10.1017/S1358246100005130

- 22. Stepin I, Alonso JM, Catala A, Pereira-Fariña M (2021) Survey of contrastive and counterfactual explanation generation methods for explainable artificial intelligence. IEEE Access 9:11974–12001. 10.1109/ACCESS.2021.3051315

- 23. Miller T (2021) Contrastive explanation: a structural-model approach. Knowl Eng Rev 36:e14. 10.1017/S0269888921000102

- 24. Dhurandhar A, Pedapati T, Balakrishnan A, Chen PY, Shanmugam K, Puri A (2024) Model agnostic contrastive explanations for classification models. IEEE J Emerg Sel Top Circ Syst 14:789–798. 10.1109/JETCAS.2024.3486114

- 25. Dhurandhar A, Chen PY, Luss R, Tu CC, Ting P, Shanmugam K, Das P (2018) Explanations based on the missing: towards contrastive explanations with pertinent negatives. Neur Inf Proc Sys 32:592–603. 10.48550/arXiv.1802.07623

- 26. Jacovi A, Swayamdipta S, Ravfogel S, Elazar Y, Choi Y, Goldberg Y (2021) Contrastive explanations for model interpretability. Preprint at arXiv:2103.01378

- 27. Bemis GW, Murcko MA (1996) The properties of known drugs. 1. Molecular frameworks. J Med Chem 39:2887–2893. 10.1021/jm9602928

- 28. Liu T, Lin Y, Wen X, Jorissen R, Gilson MK (2007) BindingDB: a web-accessible database of experimentally determined protein–ligand binding affinities. Nucleic Acids Res 35:D198–D201. 10.1093/nar/gkl999

- 29. Bento AP, Gaulton A, Hersey A, Bellis LJ, Chambers J, Davies M, Krüger FA, Light Y, Mak L, McGlinchey S, Nowotka M, Papadatos G, Santos R, Overington JP (2014) The ChEMBL bioactivity database: an update. Nucleic Acids Res 42:D1083–D1090. 10.1093/nar/gkt1031

- 30. Mishra A, Singh S, Shukla S (2018) Physiological and functional basis of dopamine receptors and their role in neurogenesis: possible implication for Parkinson’s disease. J Exp Neurosci 12:1–8. 10.1177/1179069518779829

- 31. Beaulieu JM, Gainetdinov RR (2011) The physiology, signaling, and pharmacology of dopamine receptors. Pharmacol Rev 63:182–217. 10.1124/pr.110.002642

- 32. Keck TM, Free RB, Day MM, Brown SL, Maddaluna MS, Fountain G, Cooper C, Fallon B, Holmes M, Stang CT, Burkhardt R, Bonifazi A, Ellenberger MP, Newman AH, Sibley DR, Wu C, Boateng CA (2019) Dopamine D4 receptor-selective compounds reveal structure–activity relationships that engender agonist efficacy. J Med Chem 62:3722–3740. 10.1021/acs.jmedchem.9b00231

- 33. Xiao J, Free RB, Barnaeva E, Conroy JL, Doyle T, Miller B, Bryant-Genevier M, Taylor MK, Hu X, Dulcey AE, Southall N, Ferrer M, Titus S, Zheng W, Sibley DR, Marugan JJ (2014) Discovery, optimization, and characterization of novel D2 dopamine receptor selective antagonists. J Med Chem 57:3450–3463. 10.1021/jm500126s

- 34. Baell JB, Holloway GA (2010) New substructure filters for removal of pan assay interference compounds (PAINS) from screening libraries and for their exclusion in bioassays. J Med Chem 53:2719–2740. 10.1021/jm901137j

- 35. Irwin JJ, Duan D, Torosyan H, Doak AK, Ziebart KT, Sterling T, Tumanian G, Shoichet BK (2015) An aggregation advisor for ligand discovery. J Med Chem 58:7076–7087. 10.1021/acs.jmedchem.5b01105

- 36. Bruns RF, Watson IA (2012) Rules for identifying potentially reactive or promiscuous compounds. J Med Chem 55:9763–9772. 10.1021/jm301008n

- 37. Naveja JJ, Vogt M, Stumpfe D, Medina-Franco JL, Bajorath J (2019) Systematic extraction of analogue series from large compound collections using a new computational compound-core relationship method. ACS Omega 4:1027–1032. 10.1021/acsomega.8b03390

- 38. Breiman L (2001) Random forests. Mach Learn 45:5–32. 10.1023/A:1010933404324

- 39. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay É (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

- 40. Rogers D, Hahn M (2010) Extended-connectivity fingerprints. J Chem Inf Model 50:742–754. 10.1021/ci100050t

- 41. Brodersen KH, Ong CS, Stephan KE, Buhmann JM (2010) The balanced accuracy and its posterior distribution. In: 20th International Conference on Pattern Recognition (ICPR), pp 3121–3124. 10.1109/ICPR.2010.764

- 42. Van Rijsbergen CJ (1979) Information retrieval. Butterworth-Heinemann. 10.1002/asi.4630300621

- 43. Matthews BW (1975) Comparison of the predicted and observed secondary structure of T4 phage lysozyme. Biochim Biophys Acta Protein Struct 405:442–451. 10.1016/0005-2795(75)90109-9

- 44. Lundberg SM, Erion G, Chen H, DeGrave A, Prutkin JM, Nair B, Katz R, Himmelfarb J, Bansal N, Lee S (2020) From local explanations to global understanding with explainable AI for trees. Nat Mach Intell 2:56–67. 10.1038/s42256-019-0138-9
