Abstract

The rapid development of high-throughput sequencing technologies has generated vast amounts of omics data, making multi-omics integration a crucial approach for understanding complex diseases. Despite the introduction of various multi-omics integration methods in recent years, existing approaches still have limitations, primarily in their reliance on manual feature selection, restricted applicability, and inability to comprehensively capture both inter-sample and cross-omics interactions. To address these challenges, we propose mmMOI, an end-to-end multi-omics integration framework that incorporates multi-label guided learning and multi-scale attention fusion. mmMOI directly processes raw high-dimensional omics data without requiring manual feature selection, thereby enhancing model interpretability and eliminating biases introduced by feature preselection. First, we introduce a multi-label guided multi-view graph neural network, which enables the model to adaptively learn omics data representations across different datasets, thereby improving generalizability and stability. Second, we design a multi-scale attention fusion network, which integrates global attention and local attention. This dual-attention mechanism allows mmMOI to more accurately integrate multi-omics data, enhance cross-omics feature representations, and improve classification performance. Experimental results demonstrate that mmMOI significantly outperforms state-of-the-art methods in classification tasks, exhibiting high stability and adaptability across diverse biological contexts and sequencing technologies. Additionally, mmMOI successfully identifies key disease-associated biomarkers, further enhancing its biological interpretability and practical relevance. The source code, datasets, and detailed hyperparameter configurations for mmMOI are available at https://github.com/mlcb-jlu/mmMOI.

Keywords: multi-omics integration, multi-label guided learning, multi-scale fusion, graph neural network

Introduction

Cancer is an extremely complex genomic disease that can occur in most human organs [1]. Previous studies have shown that genomic alterations such as somatic mutations, copy number changes, epigenetic aberrations, chromatin rearrangements, and gene fusions can all lead to cancer [2]. The treatment methods and processes for different cancer patients vary significantly. Moreover, even patients with the same type of cancer can have significantly different responses to the same drug and survival risks, suggesting a high degree of heterogeneity among subtypes of the same cancer. Therefore, clinical approaches should consider these differences to implement appropriate diagnostic and therapeutic measures [3]. The ability to accurately identify and classify these cancers and their subtypes will directly impact patients’ precision diagnosis and personalized treatment [4, 5].

With the advent of high-throughput sequencing technology, scientists have accumulated vast amounts of omics data [6, 7], including genomics [8], transcriptomics [9], epigenomics [10], metabolomics [11], and proteomics [12]. Early cancer classification predominantly relied on single-omics data, which failed to capture the intricate relationships between gene mutation, expression, and regulation, resulting in less accurate classifications [13, 14]. Integrating various omics data offers a comprehensive understanding of complex diseases and can be approached in three ways: early, late, and intermediate integrations [15]. Early integration combines all omics data into a single feature set, which can increase redundancy and decrease model performance. Late integration models each data type separately and combines the results but misses inter-omics correlations. Intermediate integration merges different omics data during model construction, reducing redundancy while preserving biological correlations, thus yielding better results. These methods need to address two main challenges: the feature representation of single-omics data and the effective integration of different types of omics data.

The most common method for representing single-omics data is the autoencoder (AE). Ma et al. [16] proposed the Multi-Omics Cancer Subtype Classification (MOCSC), which uses stacked sparse denoising AEs to extract features. These features are then provided to a single-layer neural network to obtain initial predictions, which are integrated into a view-related discovery network for final training and prediction. Benkirane et al. [17] introduced the CustOmics method, a staged fusion framework. In the first stage, an AE is provided for each type of omics data to create subrepresentations, which are input into a central variational AE in the second stage. Graph neural network (GNN)-based methods have also been proposed to capture the similarity relationships between samples. Wang et al. [18] developed the Multi-Omics Graph Convolutional Network (MOGONET), which uses three independent graph convolutional networks to analyze different types of omics data and integrates the learned label spaces into tensors for final classification. Chen et al. [19] proposed the Supervised graph contrastive learning for cancer subtype identification (MCRGCN), which employs supervised graph contrastive learning on multi-omics data using various data augmentation methods to effectively learn the unique feature distributions and interactions of different omics data, thus achieving cancer subtype classification. Liang et al. [20] introduced the Explainable multi-omics integration for disease prediction and module detection (GREMI), which uses graph attention networks to learn biomolecular interaction information for feature representation. The subsequent fusion process employs a real-class probability method to adaptively classify at both the feature and omics levels.

Attention mechanisms are widely applied in multi-omics problems. Gong et al. [21] proposed the Multi-omics integration via attention-based deep learning for biomedical classification (MOADLN), which uses a self-attention mechanism for dimensionality reduction and classifies through a multi-omics correlation discovery network. Pang et al. [22] proposed the attentionMOI method, which uses a distribution-based feature denoising algorithm for feature selection and then uses multi-omics attention fusion to predict cancer prognosis and identify cancer subtypes. Li et al. [23] proposed the Multi-omics integration via graph convolutional networks for cancer subtyping (MoGCN), combining AE and GCN for cancer subtype analysis. Ouyang et al. [24] proposed the Multi-omics integration via adaptive graph learning and attention mechanism (MOGLAM), combining GCN and attention mechanisms for cancer classification and biomarker identification. This method uses a dynamic graph convolutional network with feature selection to identify important biomarkers and applies a multi-omics attention mechanism to weight the embedded representations of different omics, capturing complex common and complementary information.

Despite recent advances in multi-omics integration, existing methods still face several limitations. First, omics data preprocessing depends heavily on feature selection, so the final integration outcome is influenced more by the initial feature selection than by the model’s intrinsic representation learning capability. This reliance not only restricts model interpretability but also introduces the risk of label leakage. Second, many current methods are tailored to specific datasets and require extensive parameter tuning or structural modifications when applied to different data types, which limits their generalizability. Third, most existing approaches employ single-level attention mechanisms, which fail to sufficiently capture both inter-sample and cross-omics interactions. This deficiency reduces the comprehensiveness and granularity of multi-omics data integration.

To address these challenges, we propose mmMOI, a multi-omics integration framework using multi-label guided learning and multi-scale fusion. The framework has two components: (i) the single-omics data representation learning module with a multi-view GNN based on multi-label guided learning and (ii) the multi-omics data fusion module with a multi-scale attention fusion network. Unlike traditional methods, we treat feature representation learning as an auxiliary training task. Under the guidance of partial true labels and pseudo-labels extracted via a graph convolution module, the model evaluates predictions from each view and adjusts the fusion weights accordingly. This allows the model to adaptively integrate multi-view graphs, producing a consensus graph representation enriched with global information. Next, each learned omics representation is fed into the global attention module. This module assigns weights to different omics features while preserving the intra-omics relationships between patients. Finally, the local attention module refines the fused representations by capturing shared and complementary information across different omics types. In classification tasks across different cancer subtypes, mmMOI demonstrates superior performance compared to state-of-the-art approaches, consistently exhibiting high stability and adaptability across diverse biological contexts and sequencing technologies. Additionally, mmMOI effectively identifies key disease-associated biomarkers, further enhancing its biological interpretability and clinical applicability.

The primary contributions of the proposed model are summarized as follows: (i) We introduce a novel multi-omics integration framework that combines multi-label guided learning with multi-scale attention fusion, enhancing both interpretability and generalizability. (ii) A multi-label guided multi-view GNN is employed to adaptively learn representations from omics data, mitigating the risk of overfitting to specific labels. (iii) A multi-scale attention fusion network is designed to dynamically integrate different omics layers, enabling the model to accurately capture complex biological interactions.

Materials and methods

Overview of mmMOI

We present a multi-omics integration framework using multi-label guided learning and multi-scale fusion (mmMOI for short), as illustrated in Fig. 1A. For single-omics representation learning (Fig. 1B), the framework uses a multi-view GNN guided by multi-label learning to learn representations from each omics data type. Unlike traditional methods, this module treats representation learning as an auxiliary training task, enhancing feature extraction without overfitting to specific labels. For multi-omics data fusion (Fig. 1C), the framework employs a multi-scale attention fusion network that adaptively integrates representations from different omics layers. This allows the model to capture complex interactions between various biological data types.

Figure 1. Overview of mmMOI: (A) the overall flow of the multi-omics integration framework, (B) the framework of the multi-view GNN guided by multi-label learning, and (C) the framework of the multi-scale attention fusion network.

Single-omics data representation

Cancer multi-omics datasets typically comprise only a few hundred samples, while feature dimensions can reach the thousands, often containing significant noise and redundant features. Consequently, representation learning is crucial for handling such high-dimensional omics data. In our study, we propose a multi-view GNN model based on multi-label auxiliary training for single-omics data representation learning. This model adaptively reduces the dimensionality of multi-omics data across different datasets and downstream tasks during the learning process. The corresponding pseudocode is shown as Algorithm 1.

Dimensionality reduction autoencoder

For any type of omics data $X \in \mathbb{R}^{n \times d}$, $n$ represents the number of patients and $d$ represents the original feature dimensionality. Define $f_{enc}$ and $f_{dec}$ as the encoder and decoder of the AE, respectively. The encoder $f_{enc}$ maps the input omics data $X$ to the latent space $H$, and the decoder $f_{dec}$ reconstructs $H$ into data $\hat{X}$ with the same dimensionality as the input $X$. Specifically, $H = f_{enc}(X) \in \mathbb{R}^{n \times d'}$ (where $d'$ is the reduced dimension) and $\hat{X} = f_{dec}(H) \in \mathbb{R}^{n \times d}$. The AE thus reduces the dimensionality of the omics data from $d$ to $d'$, and the latent representation $H$ is extracted as the low-dimensional features. Based on $H$, we derive the node relationship matrix $S$ as follows:

$$S_{ij} = \begin{cases} 1, & \text{if } \mathrm{sim}(h_i, h_j) \ge \epsilon \\ 0, & \text{otherwise} \end{cases} \tag{2}$$

where $h_i$ is the $i$th row of $H$, $\mathrm{sim}(\cdot,\cdot)$ is the similarity between the latent features of two patients, $\epsilon$ is a predefined threshold, and $i, j \in \{1, \dots, n\}$.
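As a minimal sketch of the thresholding step described above, the following plain-Python snippet builds a binary node relationship matrix from toy latent features. The use of cosine similarity, the function names `cosine_similarity` and `node_relationship_matrix`, and the toy values are assumptions made for illustration; the text only states that a threshold is applied to derive the matrix.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def node_relationship_matrix(latent, threshold):
    """Binary n x n matrix with 1 where the pairwise similarity reaches the threshold."""
    n = len(latent)
    return [
        [1 if cosine_similarity(latent[i], latent[j]) >= threshold else 0 for j in range(n)]
        for i in range(n)
    ]


# Toy latent features for three patients (hypothetical values, reduced dimension 2).
H = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
S = node_relationship_matrix(H, threshold=0.8)
for row in S:
    print(row)
```

With these toy values, patients 1 and 2 are connected and patient 3 is linked only to itself. A higher threshold yields a sparser graph, which is the main knob this step exposes.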

Multi-label guided graph fusion

In multi-omics problems, complex interdependencies and associations exist between different samples. Although AEs have certain nonlinear fitting capabilities, they cannot capture these relationships well. GNNs, on the other hand, learn from graph-structured data and can use both node features and the similarity relationships between nodes to extract more effective representations. Therefore, we feed the low-dimensional representations $H$ output by the dimensionality reduction AE into a GNN for further feature representation learning. To model the relationships between nodes, we use multiple similarity metrics to generate view-specific adjacency matrices $A^{v}$ for $V$ views. Since the $A^{v}$ from different views contain complementary information, integrating them into a consensus graph is crucial. Intuitively, weighting and summing each refined $A^{v}$ is a simple yet effective method, so the adjacency matrix of the consensus graph is defined as follows:

$$A = \sum_{v=1}^{V} w^{v} A^{v} \tag{5}$$

where the weights $w^{v}$ are determined by multi-label guidance. Using the actual labels $Y_{tr}$ from the training dataset and the pseudo-labels $\hat{Y}$ obtained from clustering the consensus graph, the graph encoding module computes a score $score^{v}$ for each view $v$, from which the weight $w^{v}$ is calculated:

$$w^{v} = \left(\frac{score^{v}}{\max(score^{1}, \dots, score^{V})}\right)^{\rho} \tag{6}$$

where $V$ denotes the number of views, and $\rho$ represents the smooth-sharp parameter. When $\rho \in (0, 1)$, it has a smoothing effect; when $\rho > 1$, it amplifies the differences between views.
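The effect of the smooth-sharp parameter can be illustrated with a small sketch. It assumes that each view weight is the view score divided by the best score, raised to the power of the smooth-sharp parameter, and that the consensus adjacency matrix is the weighted sum of the view matrices. The function names `view_weights` and `consensus_adjacency` and all numbers are hypothetical.

```python
def view_weights(scores, rho):
    """Weight each view by (score / best score) ** rho (assumed form)."""
    best = max(scores)
    if best <= 0:
        raise ValueError("at least one view score must be positive")
    return [(s / best) ** rho for s in scores]


def consensus_adjacency(adjacencies, weights):
    """Weighted element-wise sum of same-shaped adjacency matrices."""
    n = len(adjacencies[0])
    return [
        [sum(w * A[i][j] for w, A in zip(weights, adjacencies)) for j in range(n)]
        for i in range(n)
    ]


# Two views with hypothetical scores; the first view is the better one.
scores = [0.9, 0.6]
smooth = view_weights(scores, rho=0.5)  # exponent below 1 pulls weights together
sharp = view_weights(scores, rho=2.0)   # exponent above 1 pushes weights apart

A1 = [[1, 1], [1, 1]]
A2 = [[1, 0], [0, 1]]
A = consensus_adjacency([A1, A2], sharp)
print(smooth, sharp, A)
```

The best view always receives weight 1, so the parameter only controls how strongly the weaker views are discounted.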

Graph encoding and view evaluation

As shown in Fig. 1B, the view-specific graph encoder takes as input the low-dimensional features $H$ from the AE and the view-specific adjacency matrices $A^{v}$, while the consensus graph encoder takes as input $H$ and the consensus adjacency matrix $A$. The purpose of this module is to encode the shared low-dimensional features $H$ together with the graph structure information to generate latent representations of the omics data. Specifically, we train a parameter-shared GNN that operates on both the view-specific and consensus graphs:

$$Z^{v} = \mathrm{GNN}(H, \tilde{A}^{v}) \tag{10}$$

$$Z = \mathrm{GNN}(H, \tilde{A}) \tag{11}$$

where GNN denotes the graph convolution operation, $H$ represents the low-dimensional features obtained from the AE, and $\tilde{A}^{v}$ and $\tilde{A}$ are the normalized forms of $A^{v}$ and $A$, respectively.

Since the latent embedding $Z$ of the consensus graph contains the global common information from all views, we use it as a pseudo-label $\hat{Y}$ to evaluate the clustering score of each view $v$. Additionally, we use the actual labels $Y_{tr}$ from the training dataset to assist in learning the fusion parameters. Specifically, the view score $score^{v}$ is calculated as follows:

$$score^{v} = \mathrm{Eva}(\mathrm{Kmeans}(Z^{v}), \hat{Y}) + \mathrm{Eva}(\mathrm{MLP}(Z^{v}_{tr}), Y_{tr}) \tag{12}$$

where Eva computes evaluation metrics (e.g. accuracy), Kmeans represents the k-means algorithm, MLP represents the linear classifier, $Z^{v}_{tr}$ denotes the training set embeddings of view $v$, and $score^{v}$ is the view score.
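To make the graph encoding step concrete, the sketch below applies one graph convolution to a toy graph. It assumes the common symmetric normalization with self-loops and a single ReLU layer; the text does not specify the normalization or the layer design, so `normalize_adjacency`, `gcn_layer`, and the weight matrix `W` are illustrative choices only.

```python
import math


def normalize_adjacency(A):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 with added self-loops."""
    n = len(A)
    A_hat = [[A[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in A_hat]
    return [[A_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def gcn_layer(A_norm, H, W):
    """One propagation step: ReLU(A_norm @ H @ W)."""
    return [[max(0.0, v) for v in row] for row in matmul(matmul(A_norm, H), W)]


# Two connected patients, 2-dimensional features, hypothetical shared weights W.
A = [[0.0, 1.0], [1.0, 0.0]]
H = [[1.0, 0.0], [0.0, 1.0]]
W = [[1.0, -1.0], [0.5, 0.5]]

A_norm = normalize_adjacency(A)
Z = gcn_layer(A_norm, H, W)
print(A_norm, Z)
```

Parameter sharing in this setting means that the same `W` would be reused for every view-specific adjacency matrix and for the consensus one, so differences between the resulting embeddings come only from the graph structure.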

Multi-omics data fusion

Using the method proposed in the previous section, we can obtain feature representations for single-omics data. Next, we need to fuse different types of omics data to obtain a unified feature representation. We propose a multi-scale attention fusion network that focuses on both the global attention information among different omics data and the local attention information among different samples, thereby effectively fusing multiple types of omics data.

Global attention fusion network

Before fusing multi-omics data, it is crucial to address the fact that different types of omics data contribute differently to the final classification outcome [24]. The current feature representations only contain the correlation information between different patients within each type of omics data. Direct fusion may negatively impact the final classification accuracy; therefore, it is necessary to learn the contributions of different omics and adjust the features before fusion. Inspired by Hu et al. [25] in their research on channel attention in the computer vision field, we propose a Global Attention Fusion Network (GAFN) for multi-omics data. We treat different omics data as different channels and adaptively learn the importance of each channel using an attention mechanism. Based on this importance, we assign different weight values to different omics data, thereby enhancing the representation capabilities of the subsequent fusion network. First, we perform global pooling operations to compress each feature channel into a single point $g_m$, which contains all the information of the $m$th omics feature. The specific calculation is as follows:

$$g_m = \frac{1}{n \times d_f} \sum_{i=1}^{n} \sum_{j=1}^{d_f} F_m(i, j) \tag{13}$$

where $F_m \in \mathbb{R}^{n \times d_f}$ is the $m$th omics representation, and $d_f$ represents the dimensionality of the features after compression. For $M$ omics types, we can obtain the global feature vector $g = [g_1, \dots, g_M]$ describing each feature channel.

Then, we use two bottleneck fully connected layers to learn from the global feature vector $g$, allowing it to comprehensively capture channel attention information. The final omics channel attention vector $a$ is calculated as follows:

$$a = \sigma(W_2\, \delta(W_1 g)) \tag{14}$$

where $W_1$ and $W_2$ are learnable weights, $\delta$ is the Rectified Linear Unit (ReLU) activation function, and $\sigma$ is the Sigmoid activation function.

By this point, we have learned the attention weights of each channel. However, if we only weight the initial omics features by the obtained omics channel attention vector $a$, the resulting feature vector will inevitably overemphasize the omics with richer information while neglecting the roles of other omics. Therefore, we introduce a residual mechanism [26], which considers the contributions of different omics while preserving their inherent specific information. We calculate the representation $\hat{F}_m$ of the $m$th omics after passing through the channel attention residual network as follows:

$$\hat{F}_m = \delta(F_m + a_m \otimes F_m) \tag{15}$$

where $F_m$ is the $m$th omics representation, $a_m$ is the $m$th entry of $a$, $\otimes$ denotes channel-wise scaling and $\delta$ represents the ReLU activation function.
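The squeeze, bottleneck, and residual rescaling steps of the global attention network can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: it uses global average pooling, bias-free fully connected layers, and hypothetical weights `W1` and `W2`, with each omics representation given as a small nested list.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def global_average_pool(F):
    """Mean over all entries of an n x d matrix (one value per omics channel)."""
    return sum(sum(row) for row in F) / (len(F) * len(F[0]))


def channel_attention(omics, W1, W2):
    """Return one attention weight in (0, 1) per omics type."""
    g = [global_average_pool(F) for F in omics]                         # squeeze
    hidden = [relu(sum(w * x for w, x in zip(row, g))) for row in W1]   # bottleneck + ReLU
    return [sigmoid(sum(w * x for w, x in zip(row, hidden))) for row in W2]


def residual_rescale(F, a_m):
    """ReLU(F + a_m * F): rescaled features added back to the original ones."""
    return [[relu(v + a_m * v) for v in row] for row in F]


# Three omics types, two patients, two features each (hypothetical values).
omics = [
    [[1.0, 2.0], [3.0, 4.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[2.0, 2.0], [2.0, 2.0]],
]
W1 = [[0.5, 0.5, 0.5]]        # 3 channels -> 1 hidden unit
W2 = [[1.0], [0.0], [-1.0]]   # 1 hidden unit -> 3 channels

a = channel_attention(omics, W1, W2)
fused = [residual_rescale(F, a_m) for F, a_m in zip(omics, a)]
print(a)
```

Because of the residual term, an omics type with a small attention weight still passes its original features through, which is the behaviour the residual mechanism is meant to guarantee.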

Local attention fusion network

The GAFN learns attention weights from a global perspective of all patients and all omics data, helping us assign different importance to different omics features. However, we have not yet performed representation learning from the perspective of all omics data for each patient, which may result in the loss of important common and complementary information between different omics in the final fused features. Inspired by Vaswani et al. [27] in their research on self-attention and multi-head attention in the natural language processing (NLP) field, we propose a Local Attention Fusion Network (LAFN) for multi-omics data. For patient $i$, we form the omics feature matrix:

$$E_i = [\hat{f}_i^{1}; \hat{f}_i^{2}; \dots; \hat{f}_i^{M}] \in \mathbb{R}^{M \times d_f} \tag{16}$$

where $\hat{f}_i^{m} \in \mathbb{R}^{d_f}$ is the $m$th omics feature of patient $i$, $M$ is the number of omics types, and $d_f$ represents the dimensionality of the omics features.

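Then, we can compute query, key, and value projections of the omics feature matrix $E_i$ of patient $i$ as follows:

$$Q_i = E_i W^{Q} \tag{17}$$

$$K_i = E_i W^{K} \tag{18}$$

$$V_i = E_i W^{V} \tag{19}$$

where $W^{Q}$, $W^{K}$, and $W^{V}$ are learnable weights.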

After that, we use $Q_i$ and $K_i$ combined with the softmax function to calculate the similarity weight $\alpha_i$ for patient $i$ as follows:

$$\alpha_i = \mathrm{softmax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) \tag{20}$$

where $\sqrt{d_k}$, with $d_k$ the dimensionality of the keys, is used as a scaling factor to ensure that the calculated similarity weights do not become too large.

We can then calculate the feature matrix $O_i$ that describes each omics for patient $i$ as follows:

$$O_i = \alpha_i V_i \tag{21}$$
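The single-head computation above can be sketched in plain Python for one patient. The rows of the input matrix play the role of omics types, and attention is computed between omics rather than between patients. The identity projection matrices and toy features are assumptions chosen so that the result is easy to inspect.

```python
import math


def matmul(X, Y):
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def transpose(X):
    return [list(col) for col in zip(*X)]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(E, Wq, Wk, Wv):
    """Scaled dot-product self-attention over the omics rows of one patient."""
    Q, K, V = matmul(E, Wq), matmul(E, Wk), matmul(E, Wv)
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))
    alpha = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return alpha, matmul(alpha, V)


# One patient, three omics types, two features per omics (hypothetical values).
E = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for the learnable projections

alpha, O = self_attention(E, identity, identity, identity)
print(alpha)
print(O)
```

Each row of `alpha` sums to one and states how much the corresponding omics type draws on every other omics type of the same patient. A multi-head variant would run this function several times with different projection matrices.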

Following the concept of multi-head attention, we set up multiple self-attention blocks, with each self-attention block acting as an attention head. Through the mutual attention of different heads, we identify various associations between omics from different angles, enhancing the representation learning capabilities for different omics. We concatenate the $h$ attention heads, obtaining the new feature matrix $\tilde{O}_i$ as follows:

$$\tilde{O}_i = \mathrm{Concat}(O_i^{1}, O_i^{2}, \dots, O_i^{h}) \tag{22}$$

where $O_i^{k}$ is the output of the $k$th attention head for patient $i$ and Concat denotes concatenation.

We then input the concatenated feature matrix $\tilde{O}_i$ into a feedforward neural network (NN) composed of linear layers. This allows us to learn the final representation $r_i$ of patient $i$, which integrates the features of the various omics data, calculated as follows:

$$r_i = \mathrm{Flatten}(\tilde{O}_i W^{O}) \tag{23}$$

where $W^{O}$ is learnable, and the flatten operation transforms the matrix into a 1D vector to obtain the final representation $r_i$ of patient $i$. The features of all patients form the final feature representation matrix $R$, which is used for downstream tasks.

Model optimization

Dimensionality reduction autoencoder

During training, we optimize two objectives: (i) a reconstruction loss, which measures the difference between the original features $X$ and the reconstructed features $\hat{X}$ using mean squared error (MSE); and (ii) an auxiliary classification loss, which makes the extracted low-dimensional representations $H$ more suitable for the actual classification task, using cross-entropy loss on the training set. The combined loss function is:

$$\mathcal{L}_{AE} = \frac{1}{nd} \| X - \hat{X} \|_F^2 - \lambda \frac{1}{n_{tr}} \sum_{i=1}^{n_{tr}} \sum_{c=1}^{C} y_{ic} \log p_{ic} \tag{24}$$

where $\| \cdot \|_F$ denotes the Frobenius norm (element-wise MSE), $y_{ic}$ is the ground truth label of sample $i$ on class $c$, $p_{ic}$ is the predicted probability of sample $i$ on class $c$, $C$ is the number of classes, $n_{tr}$ is the number of training samples ($n_{tr} \le n$), and $\lambda$ is a hyperparameter to balance feature reconstruction and classification tasks.
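A small numerical sketch of this combined objective may help. It assumes the reconstruction term is the mean squared error over all entries and that the balancing hyperparameter multiplies the cross-entropy term; both placements are assumptions, as are the function names and toy values.

```python
import math


def mse(X, X_hat):
    """Mean squared error over all entries of two same-shaped matrices."""
    n, d = len(X), len(X[0])
    total = sum((a - b) ** 2 for row, row_hat in zip(X, X_hat) for a, b in zip(row, row_hat))
    return total / (n * d)


def cross_entropy(labels, probs):
    """Average negative log-probability of the true class (labels are class indices)."""
    return -sum(math.log(p[y]) for y, p in zip(labels, probs)) / len(labels)


def autoencoder_loss(X, X_hat, labels, probs, lam):
    """Reconstruction loss plus lam times the auxiliary classification loss."""
    return mse(X, X_hat) + lam * cross_entropy(labels, probs)


# Toy batch: two patients, two features, two classes (hypothetical values).
X = [[1.0, 2.0], [3.0, 4.0]]
X_hat = [[1.0, 2.0], [3.0, 2.0]]       # one entry is off by 2
labels = [0, 1]                         # training labels only
probs = [[0.5, 0.5], [0.5, 0.5]]        # an uninformative classifier

loss = autoencoder_loss(X, X_hat, labels, probs, lam=0.5)
print(loss)
```

Setting `lam` to zero recovers a purely unsupervised autoencoder, while larger values push the latent features toward class separability at the expense of reconstruction quality.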

Multi-view graph neural network

To effectively represent different omics data, we aim to learn the intrinsic distribution information of different data. Referring to existing multi-view clustering research [28], we use the KL divergence loss to optimize the model, which is defined as follows:

$$\mathcal{L}_{KL} = \mathrm{KL}(P \,\|\, Q) + \sum_{v=1}^{V} \mathrm{KL}(P \,\|\, Q^{v}) \tag{25}$$

where KL denotes Kullback–Leibler divergence, used to measure the distance between two distributions. The view distributions $Q^{v}$ and consensus $Q$ are computed via Student’s t-distribution:

$$q_{ij} = \frac{(1 + \| z_i - \mu_j \|^2)^{-1}}{\sum_{j'} (1 + \| z_i - \mu_{j'} \|^2)^{-1}} \tag{26}$$

where $q_{ij}$ is the probability of assigning sample $i$ to cluster $j$, $z_i$ is the embedding of sample $i$ (a row of $Z$ for $Q$, or of $Z^{v}$ for $Q^{v}$), and $\mu_j$ are cluster centroids initialized by k-means on $Z$. The target distribution $P$ is derived from $Q$ as follows:

$$p_{ij} = \frac{q_{ij}^2 / \sum_{i} q_{ij}}{\sum_{j'} \left( q_{ij'}^2 / \sum_{i} q_{ij'} \right)} \tag{27}$$
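The soft assignment and target distribution used in this kind of clustering loss can be sketched as follows. The snippet assumes the standard form with one degree of freedom in the Student's t kernel and fixed toy centroids instead of a k-means initialization; the names `soft_assign`, `target_distribution`, and `kl_divergence` are illustrative.

```python
import math


def soft_assign(Z, centroids):
    """Student's t soft assignment of each embedding to each cluster centroid."""
    Q = []
    for z in Z:
        kernel = [1.0 / (1.0 + sum((a - b) ** 2 for a, b in zip(z, mu))) for mu in centroids]
        total = sum(kernel)
        Q.append([k / total for k in kernel])
    return Q


def target_distribution(Q):
    """Sharpen Q: square each entry, divide by the cluster frequency, renormalize rows."""
    freq = [sum(q[j] for q in Q) for j in range(len(Q[0]))]
    P = []
    for q in Q:
        weighted = [q[j] ** 2 / freq[j] for j in range(len(q))]
        total = sum(weighted)
        P.append([w / total for w in weighted])
    return P


def kl_divergence(P, Q):
    """Sum over samples and clusters of p * log(p / q)."""
    return sum(p * math.log(p / q) for pr, qr in zip(P, Q) for p, q in zip(pr, qr) if p > 0)


# Three embeddings and two centroids (hypothetical values).
Z = [[0.0, 0.0], [0.2, 0.0], [3.0, 3.0]]
centroids = [[0.0, 0.0], [3.0, 3.0]]

Q = soft_assign(Z, centroids)
P = target_distribution(Q)
print(kl_divergence(P, Q))
```

The target distribution is more confident than the soft assignment it is derived from, so minimizing the divergence pulls each embedding toward its most likely cluster.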

Additionally, we use the labeled data from the training set to provide auxiliary constraints to the multi-view GNN, with the specific loss function as follows:

$$\mathcal{L}_{CE} = -\frac{1}{n_{tr}} \sum_{i=1}^{n_{tr}} \sum_{c=1}^{C} y_{ic} \log \hat{y}_{ic} \tag{28}$$

where $y_{ic}$ is the ground truth label for sample $i$ for class $c$, $\hat{y}_{ic}$ is the predicted probability from classifier $f_{cls}(z_i)$, $z_i$ represents the $i$th row of feature matrix $Z$, and $n_{tr}$ is the number of training samples. The complete loss function of the multi-view GNN is as follows:

$$\mathcal{L}_{GNN} = \mathcal{L}_{KL} + \mathcal{L}_{CE} \tag{29}$$

Multi-omics data fusion network

We employ a classifier to predict the class label from the final feature representation $H$ obtained through the multi-scale attention fusion network, and update the parameters of the fusion network by minimizing the following loss:

$$\mathcal{L}_{fusion} = -\frac{1}{N_{tr}} \sum_{i=1}^{N_{tr}} \sum_{c=1}^{C} y_{ic} \log g(h_i)_c \tag{30}$$

where $y_{ic}$ is the ground truth label for sample $i$ for class $c$, $g(h_i)_c$ is the predicted probability from classifier $g$, $h_i$ represents the $i$th row of the fused feature matrix $H$, and $N_{tr}$ is the number of training samples.

Results

Evaluation datasets and baselines

In this study, we validated the mmMOI method using four cancer datasets: GBM (glioblastoma multiforme subtypes), BRCA (breast invasive carcinoma subtypes), OV (ovarian serous cystadenocarcinoma subtypes), and KIPAN (kidney cancer classification). For each cancer type, we collected classification labels and three types of omics data: mRNA expression, miRNA expression, and DNA methylation. The omics data for GBM, BRCA, and OV were sourced from benchmark datasets compiled by Rappoport et al. using TCGA data [29]. Class labels for GBM, BRCA, and OV, together with the KIPAN dataset, were obtained from the UCSC cancer database [30]. The details of the four datasets are shown in Table 1.

Table 1.

Summary of datasets in our study

| Dataset | Categories (number of samples) | Number of features for training (mRNA, methy, miRNA) |
|---|---|---|
| GBM | Proneural:72, Classical:71, Mesenchymal:84, Neural:47 | 12 042, 5000, 534 |
| BRCA | Basal-like:92, HER2-enriched:37, Luminal A:278, Luminal B:110 | 15 551, 5000, 390 |
| OV | Mesenchymal:68, Proliferative:76, Differentiated:66, Immunoreactive:81 | 15 789, 5000, 349 |
| KIPAN | KICH:65, KIRC:201, KIRP:294 | 15 148, 5000, 533 |

To evaluate the performance of our method on the cancer classification task, we compared it with several traditional machine learning methods and the latest deep learning methods. The comparison methods include: NN [31], RF [32], SVM [33], XGBoost [34], MOGONET [18], MoGCN [23], MOGLAM [24], CustOmics [17], AttentionMOI [22], GREMI [20], and MCRGCN [19].

Comparative experiment

As shown in Tables 2–5, we compared the proposed mmMOI model with several baseline methods across four datasets. mmMOI outperforms the best baseline in all classification tasks, with notable improvements in Accuracy, F1-macro, Precision, and Recall. To quantify these performance gains, we conducted paired t-tests to compute the statistical significance ($p$-values) of the improvements for each metric. Across the four datasets, the majority of the improvements achieved by the proposed method over the best baseline reached statistical significance ($p < .05$).
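For readers who want to see how such a paired comparison works, here is a minimal sketch of the paired t statistic. The per-fold accuracies are made up for illustration and are NOT values from the paper; the number of folds and the function name `paired_t_statistic` are likewise assumptions. In practice, `scipy.stats.ttest_rel` returns the $p$-value directly.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(a, b):
    """Return the t statistic and degrees of freedom for paired samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-fold accuracies of two methods evaluated on the same folds.
proposed = [0.84, 0.81, 0.86, 0.80, 0.83]
baseline = [0.81, 0.80, 0.83, 0.78, 0.82]

t, df = paired_t_statistic(proposed, baseline)

# Two-sided critical value of Student's t for df = 4 at alpha = 0.05.
T_CRITICAL_DF4 = 2.776
significant = df == 4 and abs(t) > T_CRITICAL_DF4
```

Pairing matters here: because both methods are scored on the same splits, the test works on per-split differences, which removes the variance shared between splits.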

Table 2.

Classification performance of all methods on GBM dataset

| Method | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| NN | 0.7520 ± 0.036 | 0.7324 ± 0.025 | 0.7795 ± 0.037 | 0.7383 ± 0.022 |
| RF | 0.6873 ± 0.074 | 0.6494 ± 0.084 | 0.6838 ± 0.082 | 0.6563 ± 0.078 |
| SVM | 0.7636 ± 0.016 | 0.7291 ± 0.028 | 0.8048 ± 0.016 | 0.7307 ± 0.023 |
| XGBoost | 0.6546 ± 0.098 | 0.6296 ± 0.099 | 0.6579 ± 0.087 | 0.6301 ± 0.104 |
| MOGONET | 0.6742 ± 0.061 | 0.6428 ± 0.072 | 0.6616 ± 0.087 | 0.6491 ± 0.064 |
| MoGCN | 0.7512 ± 0.015 | 0.7233 ± 0.023 | 0.7271 ± 0.018 | 0.7454 ± 0.033 |
| MOGLAM | 0.6868 ± 0.065 | 0.6370 ± 0.072 | 0.6610 ± 0.070 | 0.6534 ± 0.072 |
| CustOmics | 0.7902 ± 0.056 | 0.7697 ± 0.066 | 0.8005 ± 0.069 | 0.7750 ± 0.066 |
| AttentionMOI | 0.7925 ± 0.044 | 0.7818 ± 0.044 | 0.8033 ± 0.050 | 0.7862 ± 0.044 |
| GREMI | 0.7866 ± 0.036 | 0.7736 ± 0.043 | 0.8159 ± 0.048 | 0.7767 ± 0.045 |
| MCRGCN | 0.8086 ± 0.050 | 0.7926 ± 0.052 | 0.8020 ± 0.052 | 0.8030 ± 0.052 |
| mmMOI | 0.8293 ± 0.053 | 0.8160 ± 0.056 | 0.8400 ± 0.053 | 0.8195 ± 0.059 |
| Gain for best | 2.07% (0.0338) | 2.34% (0.0206) | 2.41% (0.0134) | 1.65% (0.0245) |
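The "Gain for best" row can be reproduced from the mean scores in the table. The sketch below assumes that the gain is the absolute difference, in percentage points, between mmMOI and the strongest baseline on a metric, and that the parenthesised value is the $p$-value of the paired t-test; the helper name `gain_over_best` is hypothetical.

```python
# Mean ACC values copied from Table 2 (GBM dataset).
acc = {
    "NN": 0.7520, "RF": 0.6873, "SVM": 0.7636, "XGBoost": 0.6546,
    "MOGONET": 0.6742, "MoGCN": 0.7512, "MOGLAM": 0.6868,
    "CustOmics": 0.7902, "AttentionMOI": 0.7925, "GREMI": 0.7866,
    "MCRGCN": 0.8086, "mmMOI": 0.8293,
}


def gain_over_best(scores, ours="mmMOI"):
    """Return the strongest baseline and our gain over it in percentage points."""
    baselines = {name: s for name, s in scores.items() if name != ours}
    best = max(baselines, key=baselines.get)
    gain = round((scores[ours] - baselines[best]) * 100, 2)
    return best, gain


best, gain = gain_over_best(acc)
```

For ACC on GBM this selects MCRGCN as the strongest baseline and yields a gain of 2.07 percentage points, matching the first entry of the row.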

Table 3.

Classification performance of all methods on BRCA dataset

| Method | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| NN | 0.8472 ± 0.039 | 0.8091 ± 0.036 | 0.8513 ± 0.059 | 0.7918 ± 0.02 |
| RF | 0.8410 ± 0.030 | 0.7217 ± 0.069 | 0.8223 ± 0.116 | 0.7011 ± 0.051 |
| SVM | 0.8282 ± 0.021 | 0.7597 ± 0.052 | 0.8789 ± 0.044 | 0.7236 ± 0.047 |
| XGBoost | 0.8564 ± 0.027 | 0.7778 ± 0.080 | 0.8347 ± 0.109 | 0.7512 ± 0.064 |
| MOGONET | 0.8446 ± 0.027 | 0.7854 ± 0.062 | 0.8291 ± 0.050 | 0.7777 ± 0.063 |
| MoGCN | 0.8041 ± 0.002 | 0.6866 ± 0.005 | 0.6847 ± 0.003 | 0.7331 ± 0.017 |
| MOGLAM | 0.8590 ± 0.030 | 0.8334 ± 0.036 | 0.8544 ± 0.045 | 0.8433 ± 0.034 |
| CustOmics | 0.8713 ± 0.008 | 0.8478 ± 0.017 | 0.8895 ± 0.019 | 0.8306 ± 0.026 |
| AttentionMOI | 0.8525 ± 0.027 | 0.8076 ± 0.049 | 0.8229 ± 0.043 | 0.8084 ± 0.055 |
| GREMI | 0.8692 ± 0.035 | 0.8036 ± 0.042 | 0.8732 ± 0.064 | 0.7854 ± 0.040 |
| MCRGCN | 0.8882 ± 0.024 | 0.8540 ± 0.021 | 0.8860 ± 0.014 | 0.8454 ± 0.039 |
| mmMOI | 0.9010 ± 0.018 | 0.8747 ± 0.028 | 0.8947 ± 0.028 | 0.8667 ± 0.034 |
| Gain for best | 1.28% (0.0314) | 2.07% (0.0289) | 0.87% (0.0329) | 2.12% (0.0469) |

Among the compared methods, RF, SVM, and XGBoost are classical machine learning methods, while the others are based on deep learning. Interestingly, machine learning methods performed comparably to deep learning methods on some datasets. For instance, SVM achieved the second-best Precision score on the OV dataset, indicating that machine learning methods can still be viable for specific tasks. However, RF and XGBoost performed poorly on the GBM and OV datasets, highlighting the instability of machine learning methods. Among the deep learning methods, a simple three-layer fully connected NN struggled to learn effective feature representations from the complex multi-omics data, resulting in comparatively poor performance across all datasets. The CustOmics method, although not outstanding among the compared methods, consistently ranked among the top performers on each dataset, demonstrating good robustness. CustOmics' two-stage training, in which each omics data type is first fed into an AE to learn intermediate representations, supports the efficacy of mmMOI's AE-based pretraining strategy.

The performance of MOGONET, MoGCN, MOGLAM, and AttentionMOI varied significantly across datasets. MOGONET and MOGLAM performed well on the easily distinguishable KIPAN and BRCA datasets but poorly on the more challenging GBM and OV datasets. Conversely, AttentionMOI excelled on the GBM and OV datasets but underperformed on the BRCA and KIPAN datasets. This suggests that these methods are highly sensitive to data quality. Among the latest methods, GREMI achieved the second-best performance on the KIPAN dataset, while MCRGCN was second-best on the other three datasets. Both methods employ GNN structures, confirming the suitability of GNNs for cancer classification tasks. Notably, no baseline method achieved outstanding results across all datasets, which underscores the superior stability of mmMOI.

Table 4.

Classification performance of all methods on OV dataset

| Method | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| NN | 0.7391 ± 0.063 | 0.7298 ± 0.062 | 0.8026 ± 0.033 | 0.7326 ± 0.066 |
| RF | 0.7182 ± 0.031 | 0.7064 ± 0.036 | 0.7230 ± 0.035 | 0.7115 ± 0.029 |
| SVM | 0.8091 ± 0.055 | 0.8027 ± 0.062 | 0.8309 ± 0.056 | 0.8023 ± 0.060 |
| XGBoost | 0.6636 ± 0.055 | 0.6501 ± 0.062 | 0.6691 ± 0.049 | 0.6545 ± 0.060 |
| MOGONET | 0.6891 ± 0.019 | 0.6785 ± 0.019 | 0.7169 ± 0.024 | 0.6821 ± 0.019 |
| MoGCN | 0.7364 ± 0.009 | 0.7290 ± 0.009 | 0.7308 ± 0.008 | 0.7454 ± 0.011 |
| MOGLAM | 0.7546 ± 0.042 | 0.7469 ± 0.047 | 0.7831 ± 0.047 | 0.7508 ± 0.041 |
| CustOmics | 0.8118 ± 0.034 | 0.8059 ± 0.039 | 0.8271 ± 0.035 | 0.8079 ± 0.037 |
| AttentionMOI | 0.8175 ± 0.033 | 0.8093 ± 0.038 | 0.8176 ± 0.038 | 0.8215 ± 0.036 |
| GREMI | 0.8045 ± 0.105 | 0.7981 ± 0.106 | 0.8200 ± 0.097 | 0.7993 ± 0.103 |
| MCRGCN | 0.8364 ± 0.011 | 0.8239 ± 0.013 | 0.8272 ± 0.015 | 0.8272 ± 0.023 |
| mmMOI | 0.8518 ± 0.041 | 0.8497 ± 0.040 | 0.8722 ± 0.023 | 0.8488 ± 0.042 |
| Gain for best | 1.54% (0.0610) | 2.58% (0.0387) | 2.58% (0.0387) | 2.16% (0.0287) |


Table 5.

Classification performance of all methods on KIPAN dataset

| Method | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| NN | 0.9314 ± 0.048 | 0.9322 ± 0.044 | 0.9451 ± 0.045 | 0.9248 ± 0.042 |
| RF | 0.9452 ± 0.025 | 0.9454 ± 0.022 | 0.9565 ± 0.023 | 0.9389 ± 0.026 |
| SVM | 0.9524 ± 0.013 | 0.9509 ± 0.016 | 0.9620 ± 0.021 | 0.9420 ± 0.015 |
| XGBoost | 0.9262 ± 0.014 | 0.9298 ± 0.011 | 0.9413 ± 0.119 | 0.9218 ± 0.016 |
| MOGONET | 0.9562 ± 0.020 | 0.9598 ± 0.017 | 0.9648 ± 0.023 | 0.9566 ± 0.014 |
| MoGCN | 0.9305 ± 0.009 | 0.9387 ± 0.007 | 0.9410 ± 0.007 | 0.9394 ± 0.007 |
| MOGLAM | 0.9505 ± 0.018 | 0.9544 ± 0.013 | 0.9542 ± 0.013 | 0.9569 ± 0.017 |
| CustOmics | 0.9562 ± 0.016 | 0.9554 ± 0.015 | 0.9547 ± 0.016 | 0.9582 ± 0.020 |
| AttentionMOI | 0.9303 ± 0.011 | 0.9281 ± 0.009 | 0.9254 ± 0.009 | 0.9442 ± 0.002 |
| GREMI | 0.9619 ± 0.019 | 0.9612 ± 0.022 | 0.9661 ± 0.028 | 0.9591 ± 0.019 |
| MCRGCN | 0.9571 ± 0.014 | 0.9419 ± 0.018 | 0.9398 ± 0.023 | 0.9453 ± 0.014 |
| mmMOI | 0.9724 ± 0.016 | 0.9721 ± 0.017 | 0.9728 ± 0.016 | 0.9729 ± 0.022 |
| Gain for best | 1.05% (0.0587) | 1.09% (0.0278) | 0.67% (0.0192) | 1.38% (0.0480) |

Ablation experiments

To evaluate the effectiveness of each module and of integrating multiple data types, we conducted a two-part ablation study. The first part is module-based and verifies whether each module in our model contributes to the overall performance. The second part ablates omics data types and confirms whether fusing multiple omics data types improves cancer classification.

Ablation study of different modules

Tables 6–9 report the results of the module-based ablation experiments on the four datasets. In the w/o ML-GNN setting, the multi-view GNN guided by multi-label learning is removed and a simple AE is used for representation learning, which tests the contribution of this component to single-omics representation learning. In the w/o GA-FN and w/o LA-FN settings, the global attention fusion module and the local attention fusion module, respectively, are removed from the multi-omics data fusion part, which tests the contribution of each attention scale to fusion. Each ablation removes one module while keeping the others intact. On every dataset, the best classification performance is obtained with all modules. Removing single-omics representation learning has the greatest impact on accuracy on most datasets (BRCA, OV, and KIPAN; on GBM, removing the local attention module causes the largest drop). This indicates that a simple AE cannot capture the association information between samples, whereas the multi-view GNN guided by multi-label learning extracts it effectively. Removing either attention fusion module also reduces performance, which indicates that multi-scale attention fusion better extracts the specific and complementary information across multi-omics data.

Table 6.

The results of ablation study on GBM dataset

| Setting | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| w/o ML-GNN | 0.7941 ± 0.058 | 0.7655 ± 0.070 | 0.7986 ± 0.074 | 0.7747 ± 0.066 |
| w/o GA-FN | 0.8029 ± 0.062 | 0.7892 ± 0.064 | 0.8067 ± 0.067 | 0.7949 ± 0.066 |
| w/o LA-FN | 0.7766 ± 0.051 | 0.7381 ± 0.065 | 0.7836 ± 0.047 | 0.7459 ± 0.058 |
| All modules | 0.8293 ± 0.053 | 0.8160 ± 0.056 | 0.8400 ± 0.053 | 0.8195 ± 0.059 |

Table 7.

The results of ablation study on BRCA dataset

| Setting | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| w/o ML-GNN | 0.8733 ± 0.040 | 0.8344 ± 0.050 | 0.8692 ± 0.067 | 0.8217 ± 0.040 |
| w/o GA-FN | 0.8938 ± 0.019 | 0.8665 ± 0.032 | 0.8872 ± 0.032 | 0.8557 ± 0.035 |
| w/o LA-FN | 0.8815 ± 0.024 | 0.8342 ± 0.037 | 0.8920 ± 0.040 | 0.8148 ± 0.038 |
| All modules | 0.9010 ± 0.018 | 0.8747 ± 0.028 | 0.8947 ± 0.028 | 0.8667 ± 0.034 |

Table 8.

The results of ablation study on OV dataset

| Setting | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| w/o ML-GNN | 0.8173 ± 0.038 | 0.8133 ± 0.038 | 0.8289 ± 0.034 | 0.8139 ± 0.040 |
| w/o GA-FN | 0.8455 ± 0.041 | 0.8421 ± 0.040 | 0.8650 ± 0.027 | 0.8419 ± 0.042 |
| w/o LA-FN | 0.8273 ± 0.045 | 0.8225 ± 0.048 | 0.8470 ± 0.032 | 0.8223 ± 0.049 |
| All modules | 0.8518 ± 0.041 | 0.8497 ± 0.040 | 0.8722 ± 0.023 | 0.8488 ± 0.042 |

Table 9.

The results of ablation study on KIPAN dataset

| Setting | ACC | F1-macro | Precision | Recall |
|---|---|---|---|---|
| w/o ML-GNN | 0.9619 ± 0.014 | 0.9603 ± 0.015 | 0.9647 ± 0.015 | 0.9582 ± 0.023 |
| w/o GA-FN | 0.9695 ± 0.012 | 0.9704 ± 0.014 | 0.9716 ± 0.013 | 0.9704 ± 0.019 |
| w/o LA-FN | 0.9624 ± 0.015 | 0.9630 ± 0.016 | 0.9686 ± 0.018 | 0.9597 ± 0.019 |
| All modules | 0.9724 ± 0.016 | 0.9721 ± 0.017 | 0.9728 ± 0.016 | 0.9729 ± 0.022 |

Ablation study of different omics data

mmMOI integrates three types of omics data for cancer classification. To explore the contribution of each omics type to the final classification and to verify the necessity of multi-omics fusion, we conducted ablation experiments over different omics data types. We tested the classification performance of mmMOI using single-omics data (mRNA, methy, and miRNA) and combinations of two omics types (mRNA+methy, mRNA+miRNA, and methy+miRNA). The experimental results on the GBM, BRCA, OV, and KIPAN datasets are shown in Fig. 2. In all classification tasks, models trained with all three omics types outperformed those trained with one or two types. This shows that mmMOI fuses multi-omics data effectively and that using all three omics types is worthwhile. In the three cancer subtype classification datasets (GBM, BRCA, and OV), mRNA expression data consistently outperformed DNA methylation and miRNA expression data. Notably, on the BRCA and OV datasets, models using only mRNA expression data surpassed models using two omics types and came close to models using all three. In the KIPAN dataset, however, the results were better using miRNA expression data. We therefore conclude that mRNA expression contributes most to distinguishing cancer subtypes in the three subtype classification tasks, where DNA methylation and miRNA expression data contain more noise, whereas in kidney cancer classification miRNA expression data play the more important role and the other two omics types contain more noise. In all datasets, effective information can still be extracted after feature fusion. This indicates that our model can capture important information from omics data while minimizing the impact of redundant and noisy information.
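The set of configurations in this ablation (three single-omics runs, three pairs, and the full combination) can be enumerated programmatically. The sketch below is only an illustration: `evaluate` is a hypothetical callback standing in for training and scoring a model on the chosen omics subset, and is not part of the mmMOI code base.

```python
from itertools import combinations

OMICS = ("mRNA", "methy", "miRNA")


def omics_subsets(omics=OMICS):
    """All non-empty subsets, ordered from single-omics to the full set."""
    return [combo
            for size in range(1, len(omics) + 1)
            for combo in combinations(omics, size)]


def run_ablation(evaluate, omics=OMICS):
    """Map each subset name (e.g. 'mRNA+methy') to the score from evaluate(subset)."""
    return {"+".join(subset): evaluate(subset) for subset in omics_subsets(omics)}


# Placeholder scorer: returns the number of omics types instead of a real metric.
results = run_ablation(lambda subset: len(subset))
```

Replacing the placeholder lambda with a function that trains and evaluates a model on the selected omics types would yield one score per configuration, in the same order as the single, paired, and full settings described above.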

Figure 2.

Comparison of classification results across different omics data via mmMOI model.

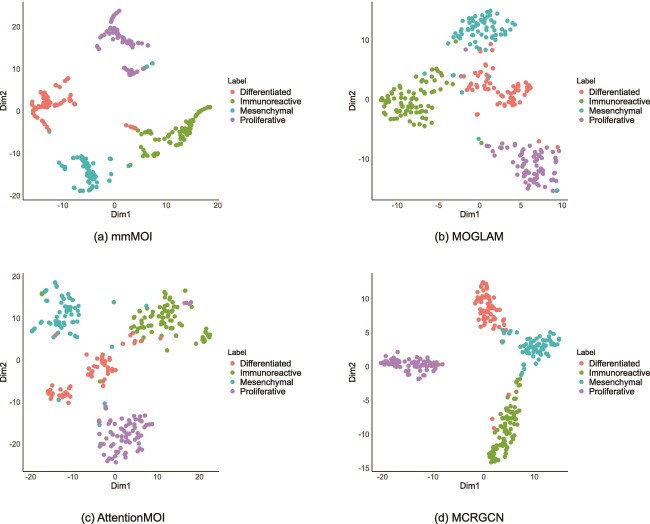

t-SNE visualization

To evaluate the representation learning ability of our model more intuitively, we used t-distributed Stochastic Neighbor Embedding (t-SNE) [35] to perform dimensionality reduction and visualization on the GBM, BRCA, OV, and KIPAN datasets. The features visualized are the final embeddings learned by the model, which are the ones used for the final classification. To demonstrate the performance of our model in multi-omics fusion representation learning, we compared its t-SNE visualization with those of three other models with good classification performance: MOGLAM, AttentionMOI, and MCRGCN. Figures 3–6 show the visualization results of each method on the four datasets. The embeddings learned by our method cluster well on all four datasets. Compared with MOGLAM, AttentionMOI, and MCRGCN, the embeddings learned by our method form clusters with smaller intra-class dispersion and larger inter-class dispersion after dimensionality reduction. This indicates that the strategies adopted by our model for multi-omics data fusion representation are successful.
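The visual impression of compact, well-separated clusters can also be expressed numerically. The sketch below computes a simple intra-class and inter-class dispersion on 2-D points; it is an illustrative measure chosen here, not a metric reported in the paper, and the function names and toy coordinates are hypothetical.

```python
import math


def _distance(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def _centroid(points):
    """Coordinate-wise mean of a list of points."""
    return [sum(coords) / len(points) for coords in zip(*points)]


def dispersion(embeddings, labels):
    """Return (mean distance to own class centroid, mean distance between centroids).

    Requires at least two distinct classes.
    """
    groups = {}
    for point, label in zip(embeddings, labels):
        groups.setdefault(label, []).append(point)
    centers = {label: _centroid(points) for label, points in groups.items()}
    intra = sum(_distance(p, centers[l])
                for p, l in zip(embeddings, labels)) / len(embeddings)
    names = list(centers)
    pairs = [(a, b) for i, a in enumerate(names) for b in names[i + 1:]]
    inter = sum(_distance(centers[a], centers[b]) for a, b in pairs) / len(pairs)
    return intra, inter


# Toy 2-D points standing in for t-SNE coordinates (made up for illustration).
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
labels = ["A", "A", "B", "B"]
intra, inter = dispersion(points, labels)
```

A lower intra-class value together with a higher inter-class value corresponds to the tighter, better-separated clusters described above. Distances in t-SNE space are not faithful to the original feature space, so such numbers are only a rough aid for comparing plots.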

Figure 3.

Visualization of embedding representations of four methods on the GBM dataset using t-SNE: (a) mmMOI, (b) MOGLAM, (c) AttentionMOI, (d) MCRGCN.

Figure 4.

Visualization of embedding representations of four methods on the BRCA dataset using t-SNE: (a) mmMOI, (b) MOGLAM, (c) AttentionMOI, (d) MCRGCN.

Figure 5.

Visualization of embedding representations of four methods on the OV dataset using t-SNE: (a) mmMOI, (b) MOGLAM, (c) AttentionMOI, (d) MCRGCN.

Figure 6.

Visualization of embedding representations of four methods on the KIPAN dataset using t-SNE: (a) mmMOI, (b) MOGLAM, (c) AttentionMOI, (d) MCRGCN.

Prognostic analysis of selected biomarkers

To further evaluate the clinical utility of the biomarkers identified by our model, we leveraged the International Cancer Genome Consortium dataset as an independent clinical cohort to systematically validate their prognostic significance. Figure 7 illustrates representative prognostic biomarkers across different cancer types. Among them, podoplanin (PDPN) was found to be positively correlated with tumor malignancy, mainly expressed in the mesenchymal subtype with the worst GBM prognosis [36]. Maguire et al. found that ACSBG1 is significantly upregulated in breast cancer cells, thereby promoting the progression of obesity-related breast cancer. This effect is mediated through the regulation of long-chain fatty acid metabolism, which enhances the energy reserves of cancer cells and supports tumor growth [37]. Elsharkawi et al. demonstrated that, in ovarian cancer tissues, the methylation levels at multiple CpG sites within the PCDH17 promoter were significantly higher than those in normal or benign lesions. This finding suggests that gene silencing of PCDH17 through promoter methylation may be involved in the initiation and progression of ovarian cancer [38]. Zhao et al. demonstrated that SHC1 regulates polymerase I and transcript release factor (PTRF) expression through the epidermal growth factor receptor (EGFR) signaling pathway and can be detected in the exosomes present in the urine of patients with clear cell renal cell carcinoma. Analysis of urinary exosomes indicated that aberrant expression of PTRF/CAVIN1 is closely related to the development of clear cell renal cell carcinoma, suggesting that it may not only function intracellularly but also serve as a noninvasive diagnostic biomarker for early screening of the disease [39]. Refer to the supplementary data for additional prognostic biomarker analysis results.

Figure 7.

The Kaplan–Meier survival curve of representative biomarkers in different datasets.

Enrichment analysis of selected biomarkers

To systematically evaluate the potential roles of the biomarkers automatically identified by our model in tumor initiation and progression, we first performed Kyoto Encyclopedia of Genes and Genomes (KEGG) pathway and Gene Ontology (GO) enrichment analyses on the sets of differentially expressed genes output by the model for each dataset. Figure 8 summarizes the rankings of the top 10 enriched KEGG pathways across the four tumor types (GBM, BRCA, OV, and KIPAN). The three most significant pathways for each dataset are outlined below.

Figure 8.

The pathway enrichment of selected biomarkers in different datasets.

In the GBM dataset, the top three enriched pathways were Neuroactive ligand–receptor interaction, Neuroactive ligand signaling, and the cAMP signaling pathway. Each of these pathways is closely associated with extracellular signal recognition by neuronal cells and downstream second-messenger regulation, suggesting pivotal roles in glioma cell proliferation, migration, and resistance to therapy.

For the BRCA dataset, differentially expressed genes were predominantly enriched in the PI3K–Akt signaling pathway, the MAPK signaling pathway, and the Ras signaling pathway. These canonical oncogenic cascades are well established as key drivers of breast cancer, mediating cell proliferation, inhibition of apoptosis, and control of the cell cycle, thereby supporting the model’s capacity to capture core tumorigenic mechanisms.

In the OV dataset, the most significantly enriched pathways were human papillomavirus (HPV) infection, neuroactive ligand–receptor interaction, and proteoglycans in cancer. The enrichment of proteoglycan-related processes highlights their established roles in stromal remodeling, cell adhesion, and migration within the ovarian tumor microenvironment. In contrast, the enrichment of the HPV infection pathway suggests a possible, yet underexplored, viral-associated mechanism in particular ovarian cancer subtypes.

Finally, in the KIPAN dataset, enriched pathways included the MAPK signaling pathway, Salmonella infection, and Chemical carcinogenesis–receptor activation. The central role of the MAPK cascade in mediating cellular responses to extracellular stress and growth factors is well documented. In contrast, the enrichment of infection-related pathways may reflect the influence of the immune or inflammatory microenvironment on renal tumor biology.

Overall, these KEGG enrichment results not only align with the established pathogenetic mechanisms of each cancer type but also substantiate the biological relevance and translational potential of the biomarkers identified by our model. The full results of the GO enrichment analysis are provided in the supplementary data.
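Pathway over-representation of the kind summarized above is commonly scored with a hypergeometric test. The source does not state which enrichment tool or test was used, so the following is only a sketch of that common approach; the function name `enrichment_p_value` and the toy counts are hypothetical.

```python
from math import comb


def enrichment_p_value(n_background, n_pathway, n_selected, n_overlap):
    """Hypergeometric upper-tail probability P(X >= n_overlap).

    n_background: number of genes in the background set
    n_pathway:    number of background genes annotated to the pathway
    n_selected:   number of genes selected as biomarkers
    n_overlap:    number of selected genes that fall in the pathway
    """
    upper = min(n_pathway, n_selected)
    total = comb(n_background, n_selected)
    tail = sum(comb(n_pathway, k) * comb(n_background - n_pathway, n_selected - k)
               for k in range(n_overlap, upper + 1))
    return tail / total


# Toy counts (made up): 20 background genes, 5 in the pathway, 5 selected.
p_all_overlap = enrichment_p_value(20, 5, 5, 5)
p_no_requirement = enrichment_p_value(20, 5, 5, 0)
```

A small value means the observed overlap is unlikely under random selection. With many pathways tested at once, such values are normally corrected for multiple testing before pathways are ranked.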

Discussion and conclusion

In recent years, the rapid advancement of high-throughput sequencing technologies has made the integration of multi-omics data an essential tool for unraveling the complexities of diseases. However, existing methods still face several limitations, including a heavy reliance on feature selection, restricted applicability across different datasets, and the inability to effectively capture intricate interactions at both the sample and omics levels. To address these challenges, we propose mmMOI, a multi-omics fusion framework for cancer classification based on multi-label guided learning and multi-scale fusion learning. mmMOI is an end-to-end supervised learning method comprising two main components: the single-omics representation learning module and the multi-omics data fusion module. The single-omics representation learning part uses a multi-view GNN module based on multi-label guided learning. Its multi-view adaptive aggregation design, guided by real labels, captures sample similarity relationships better than traditional methods. The multi-omics fusion part comprises a global attention module and a local attention module, which enable the fused features to better retain the complementary information between different omics and the internal relationships between different patients.

Comparative experiments on four cancer datasets demonstrate that mmMOI achieves superior classification accuracy and stability compared to state-of-the-art methods. Furthermore, the model exhibits high stability and adaptability across different biological contexts and sequencing technologies. Additional visual dimensionality reduction analyses further validate the strong discriminative power of mmMOI’s learned omics features across various data types, confirming its effectiveness in multi-omics integration. Ablation experiments reveal that each core component of mmMOI makes a positive contribution to the final classification outcomes. Most importantly, mmMOI successfully identifies key disease-associated biomarkers, reinforcing its biological interpretability and highlighting its potential applicability in disease diagnosis and treatment. In future research, we aim to expand the applicability of mmMOI to a broader range of cancer datasets and other multi-omics classification tasks.

Key Points

- We presented a novel framework to integrate multi-omics data by combining multi-label guided learning and multi-scale fusion techniques.

- A multi-view graph neural network guided by multi-label learning was utilized to learn representations from each omics data type, enhancing feature extraction without overfitting specific labels.

- A multi-scale attention fusion network was employed to adaptively integrate representations from different omics layers, which allows the model to capture complex interactions between various biological data types.

- The method has been tested on benchmark datasets, showing consistent outperformance compared to other state-of-the-art methods.

- Ablation experiments were conducted to assess the validity of each component and the impact of different fusion methods.

Conflict of interest: None declared.

Supplementary Material

Contributor Information

Yuze Li, Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, College of Computer Science and Technology, Jilin University, Qianjin Street 2699, 130012 Jilin, China.

Yinghe Wang, Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, College of Computer Science and Technology, Jilin University, Qianjin Street 2699, 130012 Jilin, China.

Tao Liang, Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, College of Computer Science and Technology, Jilin University, Qianjin Street 2699, 130012 Jilin, China.

Ying Li, Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, College of Computer Science and Technology, Jilin University, Qianjin Street 2699, 130012 Jilin, China.

Wei Du, Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, College of Computer Science and Technology, Jilin University, Qianjin Street 2699, 130012 Jilin, China.

Funding

The authors acknowledge financial support from the National Natural Science Foundation of China (grant no. 62372494); Natural Science Foundation of Jilin Province (grant no. 20240302086GX).

Data availability

The source code, datasets, and detailed hyperparameter configurations for mmMOI are available at https://github.com/mlcb-jlu/mmMOI.

References

- 1. Garraway LA, Lander ES. Lessons from the cancer genome. Cell 2013;153:17–37. 10.1016/j.cell.2013.03.002

- 2. Chakravarthi BV, Nepal S, Varambally S. Genomic and epigenomic alterations in cancer. Am J Pathol 2016;186:1724–35. 10.1016/j.ajpath.2016.02.023

- 3. Liu Z, Zhang XS, Zhang S. Breast tumor subgroups reveal diverse clinical prognostic power. Sci Rep 2014;4:4002. 10.1038/srep04002

- 4. Duan R, Gao L, Gao Y. et al. Evaluation and comparison of multi-omics data integration methods for cancer subtyping. PLoS Comput Biol 2021;17:1–33. 10.1371/journal.pcbi.1009224

- 5. Hasin Y, Seldin M, Lusis A. Multi-omics approaches to disease. Genome Biol 2017;18:83. 10.1186/s13059-017-1215-1

- 6. Van VA. Next generation sequencing of microbial transcriptomes: challenges and opportunities. FEMS Microbiol Lett 2010;302:1–7.

- 7. Subramanian I, Verma S, Kumar S. et al. Multi-omics data integration, interpretation, and its application. Bioinform Biol Insights 2020;14:117793221989905–24. 10.1177/1177932219899051

- 8. Hu W, Lin D, Cao S. et al. Adaptive sparse multiple canonical correlation analysis with application to imaging (epi) genomics study of schizophrenia. IEEE Trans Biomed Eng 2018;65:390–9. 10.1109/TBME.2017.2771483

- 9. Sugnet CW, Kent WJ, Ares M. et al. Transcriptome and genome conservation of alternative splicing events in humans and mice. Pac Symp Biocomput 2004;9:66–77. 10.1142/9789812704856_0007

- 10. Wang YP, Lei QY. Metabolic recoding of epigenetics in cancer. Cancer Commun 2018;38:25. 10.1186/s40880-018-0302-3

- 11. Hu L, Liu J, Zhang W. et al. Functional metabolomics decipher biochemical functions and associated mechanisms underlie small-molecule metabolism. Mass Spectrom Rev 2020;39:417–33. 10.1002/mas.21611

- 12. Uhlén M, Fagerberg L, Hallström BM. et al. Tissue-based map of the human proteome. Science 2015;347:1260419. 10.1126/science.1260419

- 13. Huang Z, Zhan X, Xiang S. et al. SALMON: survival analysis learning with multi-omics neural networks on breast cancer. Front Genet 2019;10:166. 10.3389/fgene.2019.00166

- 14. Chen Y, Wen Y, Xie C. et al. MOCSS: multi-omics data clustering and cancer subtyping via shared and specific representation learning. iScience 2023;26:107378. 10.1016/j.isci.2023.107378

- 15. Rappoport N, Shamir R. Multi-omic and multi-view clustering algorithms: review and cancer benchmark. Nucleic Acids Res 2019;47:1044. 10.1093/nar/gky1226

- 16. Ma Y, Guan J. MOCSC: a multi-omics data based framework for cancer subtype classification. In: IEEE (ed.), Proceedings of the 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Piscataway, NJ, USA: IEEE; 2022. pp. 2853–2856.

- 17. Benkirane H, Pradat Y, Michiels S. et al. CustOmics: a versatile deep-learning based strategy for multi-omics integration. PLoS Comput Biol 2023;19:1–19.

- 18. Wang T, Shao W, Huang Z. et al. MOGONET integrates multi-omics data using graph convolutional networks allowing patient classification and biomarker identification. Nat Commun 2021;12:3445. 10.1038/s41467-021-23774-w

- 19. Chen F, Peng W, Dai W. et al. Supervised graph contrastive learning for cancer subtype identification through multi-omics data integration. Health Inform Sci Syst 2024;12:1–12. 10.1007/s13755-024-00274-x

- 20. Liang H, Luo H, Sang Z. et al. GREMI: an explainable multi-omics integration framework for enhanced disease prediction and module identification. IEEE J Biomed Health Inform 2024;28:6983–96. 10.1109/JBHI.2024.3439713

- 21. Gong P, Cheng L, Zhang Z. et al. Multi-omics integration method based on attention deep learning network for biomedical data classification. Comput Methods Programs Biomed 2023;231:107377. 10.1016/j.cmpb.2023.107377

- 22. Pang J, Liang B, Ding R. et al. A denoised multi-omics integration framework for cancer subtype classification and survival prediction. Brief Bioinform 2023;24:1–12. 10.1093/bib/bbad304

- 23. Li X, Ma J, Leng L. et al. MoGCN: a multi-omics integration method based on graph convolutional network for cancer subtype analysis. Front Genet 2022;13:806842. 10.3389/fgene.2022.806842

- 24. Ouyang D, Liang Y, Li L. et al. Integration of multi-omics data using adaptive graph learning and attention mechanism for patient classification and biomarker identification. Comput Biol Med 2023;164:107303. 10.1016/j.compbiomed.2023.107303

- 25. Hu J, Shen L, Sun G. Squeeze-and-excitation networks. IEEE Trans Pattern Anal Mach Intell 2019;42:2011–23. 10.1109/TPAMI.2019.2913372

- 26. Xie S, Girshick R, Dollar P. et al. Aggregated residual transformations for deep neural networks. In: IEEE (ed.), Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Piscataway, NJ, USA: IEEE; 2017. pp. 1492–1500.

- 27. Vaswani A, Shazeer N, Parmar N. et al. Attention is all you need. In: Guyon I, von Luxburg U, Bengio S, Wallach H, Fergus R, Vishwanathan S, Garnett R (eds.), Advances in Neural Information Processing Systems 30 (NeurIPS 2017). Red Hook, NY, USA: Curran Associates, Inc.; 2017. pp. 6000–6010.

- 28. Ling Y, Chen J, Ren Y. et al. Dual label-guided graph refinement for multi-view graph clustering. In: Chien L, Kambhampati S, Liu Q (eds.), Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence (AAAI-23). Palo Alto, CA, USA: AAAI Press; 2023. pp. 8791–8798.

- 29. Weinstein JN, Collisson EA, Mills GB. et al. The Cancer Genome Atlas Pan-Cancer analysis project. Nat Genet 2013;45:1113–20. 10.1038/ng.2764

- 30. Goldman M, Craft B, Swatloski T. et al. The UCSC Cancer Genomics Browser: update 2015. Nucleic Acids Res 2015;43:D812–7. 10.1093/nar/gku1073

- 31. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature 2015;521:436–44. 10.1038/nature14539

- 32. Breiman L. Random forests. Mach Learn 2001;45:5–32. 10.1023/A:1010933404324

- 33. Platt JC. Sequential Minimal Optimization: A Fast Algorithm for Training Support Vector Machines. Microsoft Research, 1998.

- 34. Chen TQ, Guestrin C. XGBoost: a scalable tree boosting system. In: Bontempi G, Horvath T, Luo J, Motoda H, Papadimitriou S (eds.), Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '16). New York, NY, USA: ACM; 2016. pp. 785–794.

- 35. van der Maaten L, Hinton G. Visualizing data using t-SNE. J Mach Learn Res 2008;9:2579–605.

- 36. Shiina S, Ohno M, Ohka F. et al. CAR T cells targeting podoplanin reduce orthotopic glioblastomas in mouse brains. Cancer Immunol Res 2016;4:259–68. 10.1158/2326-6066.CIR-15-0060

- 37. Maguire OA, Ackerman SE, Szwed SK. et al. Creatine-mediated crosstalk between adipocytes and cancer cells regulates obesity-driven breast cancer. Cell Metab 2021;33:499–512.e6. 10.1016/j.cmet.2021.01.018

- 38. Elsharkawi SM, Elkaffash D, Moez P. et al. PCDH17 gene promoter methylation status in a cohort of Egyptian women with epithelial ovarian cancer. BMC Cancer 2023;23:89. 10.1186/s12885-023-10549-3

- 39. Zhao Y, Wang Y, Zhao E. et al. PTRF/CAVIN1, regulated by SHC1 through the EGFR pathway, is found in urine exosomes as a potential biomarker of ccRCC. Carcinogenesis 2020;41:274–83. 10.1093/carcin/bgz147
