Abstract

Background

The quality of assessment in undergraduate medical colleges remains underexplored, particularly concerning the availability of validated instruments for its measurement. Bridging the gap between established assessment standards and their practical application is crucial for improving educational outcomes. To address this, the ‘Assessment Implementation Measure’ (AIM) tool was designed to evaluate the perception of assessment quality among undergraduate medical faculty members. While the content validity of the AIM questionnaire has been established, limitations in sample size have precluded the determination of construct validity and a statistically defined cutoff score.

Objective

To establish the construct validity of the Assessment Implementation Measure (AIM) tool. To determine the cutoff scores of the AIM tool and its domains statistically for classifying assessment implementation quality.

Methods

This study employed a cross-sectional validation design to establish the construct validity of the AIM tool and a statistically valid cutoff score that classifies the quality of assessment implementation as either high or low. Responses from a sample of 347 undergraduate medical faculty members were analyzed. Construct validity was established through exploratory factor analysis (EFA), reliability was confirmed via Cronbach's alpha, and cutoff scores were calculated via receiver operating characteristic (ROC) curve analysis.

Results

EFA of the AIM tool revealed seven factors accounting for 63.961% of the total variance. One item was removed, resulting in 29 items with factor loadings above 0.40. The tool’s overall reliability was excellent (Cronbach’s alpha = 0.930). Six of the seven domains had alpha values ranging from 0.719 to 0.859; however, the ‘Ensuring Fair Assessment’ domain demonstrated a weak Cronbach’s alpha of 0.570. The cutoff score for differentiating high and low assessment quality was calculated as 77 out of 116 using the ROC curve. The cutoff scores for the seven domains ranged from 5.5 to 18.5. The tool's area under the curve (AUC) was 0.994, and for the seven factors, it ranged from 0.701 to 0.924.

Conclusion

The validated AIM tool and statistically established cutoff score provide a standardized measure for institutions to evaluate and improve their assessment programs. EFA grouped 29 of the 30 items into 7 factors, demonstrating good construct validity. The tool demonstrated good reliability via Cronbach’s alpha, and a cutoff score of 77 was calculated through ROC curve analysis. This tool can guide faculty development initiatives and support quality assurance processes in medical schools.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12909-025-07862-9.

Keywords: Undergraduate medical education, Assessment, Construct validity, Exploratory factor analysis, Receiver operating characteristic, Area under the curve, Cutoff score

Background

Every teaching institution encounters challenges in establishing high-quality assessment programs, and undergraduate medical education is no exception. Medical schools strive to gain approval from accrediting bodies and increase the quality of their assessment programs to produce graduates with the clinical competence and knowledge necessary to meet global standards. Regular evaluations are essential to ensure that the assessment system remains relevant and effective, allowing stakeholders to identify lapses and propose solutions related to faculty development and student learning.

Assessment in medical education ensures that students gain the necessary competencies for clinical practice by measuring knowledge, skills, and attitudes. This allows educators to identify knowledge gaps and customize learning experiences. Assessments also ensure public accountability by guaranteeing that graduates are competent and compassionate clinicians [1]. They are essential for program evaluation, maintaining quality standards, and fulfilling accreditation requirements [2]. The data collected from assessments also informs innovation in educational practices and adapts to advancements in medical knowledge, thereby preparing graduates for their professional roles [3].

Student assessment is a comprehensive decision-making process with implications beyond measuring students’ success. It is linked to program evaluation by providing important data to determine program effectiveness and improve teaching methods. Although no single assessment tool is perfect, a combination of assessments tailored to the learning level can be effective. Assessments collect, analyze, and interpret evidence to determine how well a student meets the learning objectives. Furthermore, timely and formative feedback allows students to improve well before a final grade is given [4].

Measuring the quality of assessments is essential for maintaining the credibility and reputation of medical programs by ensuring that only competent individuals enter the medical profession. Ineffective assessment practices can allow underqualified individuals to enter the field, posing risks to healthcare quality. Regular evaluation of assessments enables the alignment of assessments with learning objectives and current medical practices, thereby promoting the development of skilled healthcare professionals capable of delivering excellent patient care [5].

Studies by Van Der Vleuten et al. [3] and Norcini [6] emphasize the need for robust assessment systems to maintain and ensure the quality of education. Maintaining quality assurance in assessment requires the participation of the entire academic team, particularly those well-versed in student assessment principles. However, despite their critical role in assessment implementation, faculty contributions and perspectives on assessment quality have often been overlooked in the medical education literature. While other stakeholders may provide valuable insights into the assessment process, they may lack the expertise necessary to provide a comprehensive evaluation of student performance. Therefore, faculty members are best positioned to offer comprehensive judgments, ensuring that graduates are prepared to meet the complex demands of medical practice. They are responsible for designing, implementing, and evaluating assessments, selecting suitable assessment types for specific learning objectives, and analyzing assessment data to inform their teaching practices and improve student learning outcomes [7]. Their experience enables them to identify gaps in assessment methods and discrepancies between accepted standards and actual practices. As subject matter experts, with comprehensive knowledge of the curriculum, learning objectives, and assessment principles, faculty are best equipped to assess the validity, feasibility, and reliability of assessments [6].

The quality of assessment in undergraduate medical colleges remains underexplored, due to the limited availability of validated instruments for its measurement. Tools measuring the educational environment are available, but none of them estimate the quality of assessment in the context of medical education [8]. Bridging the gap between established assessment standards and their practical application is crucial for improving educational outcomes. To address this, the ‘Assessment Implementation Measure’ (AIM) tool was designed to evaluate the perception of assessment quality among undergraduate medical faculty members [8].

Although primarily intended for use by teachers, the AIM tool can also be utilized by other stakeholders with an adequate understanding of the assessment program. In its original form, the tool demonstrated good internal consistency, reliability, and content validity index (CVI) values at both the item and scale levels. However, owing to the small sample size, construct validity could not be established, and the scoring system was not statistically determined, limiting its practical utility.

The most overlooked aspect of scale development is how to interpret the scores and report the values obtained from these tools [9]. An assessment tool with high validity and scientifically established cutoff scores will enable medical schools to conduct self-assessments and identify areas for improvement. This will improve the general standard of medical education and ensure that students are fully equipped to handle the requirements of the healthcare profession.

Objective of the study

To establish the construct validity of the Assessment Implementation Measure (AIM) tool.

To determine the cutoff scores of the AIM tool and its domains statistically for classifying assessment implementation quality.

Methods

Study design

This study employed a cross-sectional validation design and a statistical approach to establish a cutoff score in a two-step process:

Assessing construct validity via exploratory factor analysis (EFA).

Determining the cutoff scores via receiver operating characteristic (ROC) curve analysis.

The study received ethical approval from the institutional review board of Riphah International University (appl: Riphah/IRC/24/1015) (Annexure 1).

Step 1: Construct Validity using Exploratory Factor Analysis

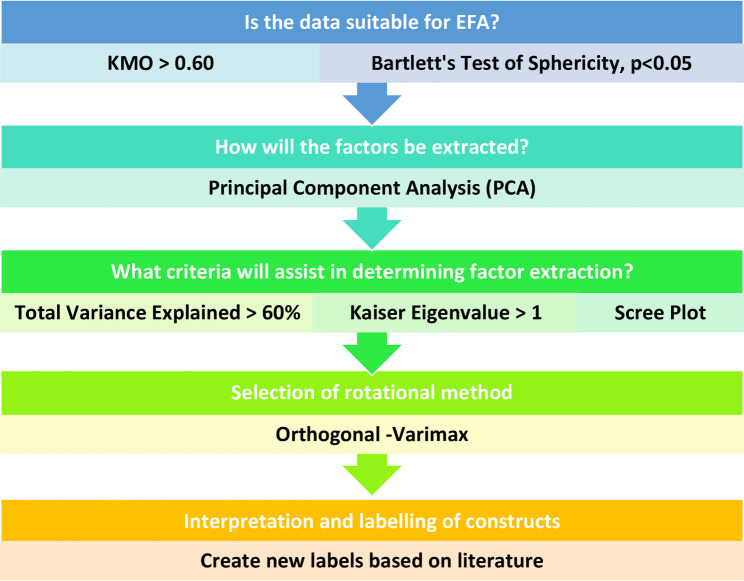

The purpose of EFA is to identify the relationships between observed and latent variables, reducing the data into smaller groups. Using IBM SPSS Version 26, EFA was conducted via principal component analysis with varimax rotation to determine the construct validity of the AIM tool, following the five-step protocol of Williams [10] (Fig. 1).

Fig. 1.

5-Step exploratory factor analysis protocol [10]

Sampling and sample size

Participants were recruited using convenience sampling from medical and dental colleges across Pakistan. Recruitment was conducted via email, WhatsApp, and LinkedIn. Participation was voluntary, and informed consent was obtained from all respondents.

Faculty were considered eligible if they were currently teaching in a medical or dental college and had a minimum of one year of teaching experience. Incomplete or partially filled responses were excluded from the study.

The sample size was calculated based on a participant-to-item ratio of 10:1 for the 30-item AIM tool, which is widely recommended for EFA [10]. Based on this guideline, a minimum of 300 participants was required. The survey was distributed to approximately 700 eligible faculty members. A total of 360 responses were received, of which 13 were excluded due to incompleteness, resulting in 347 complete responses retained for analysis. This final sample exceeded the required threshold for robust factor analysis.
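The sample-size reasoning above is simple arithmetic. The sketch below restates it using only the figures reported in this section; the variable names are illustrative and not part of the study.

```python
# Figures taken from the text; variable names are illustrative.
n_items = 30      # items in the original AIM tool
ratio = 10        # participants per item (10:1 guideline)
invited = 700     # approximate number of faculty surveyed
received = 360    # responses received
excluded = 13     # incomplete responses removed

required = ratio * n_items           # minimum sample for EFA
analysed = received - excluded       # complete responses retained
response_rate = received / invited   # based on all responses received

print(required, analysed, round(100 * response_rate))
```

The retained sample (347) exceeds the minimum of 300 implied by the 10:1 guideline.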

Instrument/tool used in the study

The AIM tool was used in the study (Annexure 2). This is a 30-item self-reporting questionnaire with a 5-point Likert scale ranging from strongly disagree (0) to strongly agree (4). The tool demonstrated a strong content validity index (CVI) of 0.98 and good internal consistency, with a Cronbach’s alpha of 0.9. The items were assigned to four subdomains by experts, with scores allocated according to quartiles rather than being derived from statistical analysis [8].

Data collection

Data collection was performed via Google Forms, which were sent to prospective participants via email, WhatsApp, and LinkedIn to maximize accessibility (Annexure 2). The survey included the AIM tool items as well as demographic variables such as gender, academic rank, and teaching experience to contextualize the findings. Care was taken to ensure data anonymity and confidentiality.

Data analysis

Descriptive statistics, including frequency, percentage, mean, and standard deviation, were calculated.

Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy/Bartlett’s test of sphericity

The Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s Test of Sphericity were used to assess the suitability of the data for factor analysis. The KMO index ranges from 0 to 1, and a KMO value above 0.60 indicates an adequate sample size [10]. Bartlett’s test evaluates whether the correlations between variables are strong enough for factor analysis, with a p-value < 0.05 suggesting statistical significance [10].
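For reference, Bartlett's test is usually written in the following textbook form, where $n$ is the number of respondents, $p$ the number of items, and $|R|$ the determinant of the item correlation matrix. This form is assumed here to match what the software reports; the source does not state the formula.

$$
\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert, \qquad df = \frac{p(p-1)}{2}
$$

For the 30-item tool, $df = 30 \times 29 / 2 = 435$.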

Communality

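Communality is the proportion of each variable's variance that is explained by the extracted factors, and it helps determine the suitability of the data for EFA. A value closer to 1 means that the variable is well represented by the extracted factors [11]. The acceptable range lies between 0.4 and 0.7.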

Principal component analysis (PCA)/Factor extraction

PCA is a statistical technique used to simplify and reduce the number of variables in a dataset while preserving as much information as possible [12]. The criterion for factor retention was Kaiser’s criterion of eigenvalues greater than or equal to 1.

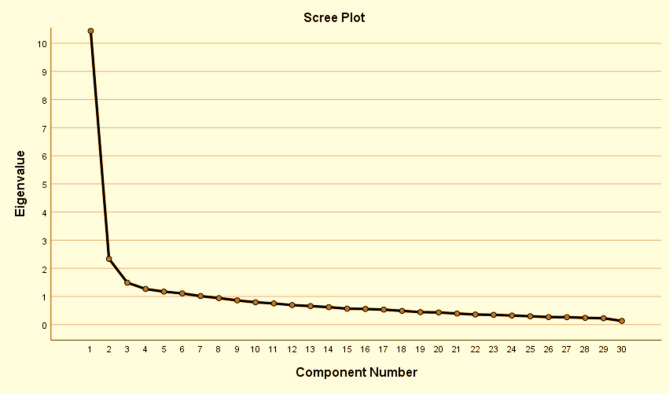

Scree plot

The scree plot is a graph used in factor analysis to determine the number of factors to retain. The eigenvalues are plotted on the y-axis, and the component number is plotted on the x-axis. The “elbow” point, where the slope changes, indicates significant factors to be kept on the left, whereas those on the right likely represent random errors.

Factor rotation

Factor rotation is used to make the final output easier to understand by simplifying the factor structure [10]. Items with factor loadings greater than 0.4 on a single factor were retained, whereas items with low loadings or substantial cross-loadings were removed.

Construct labeling

The factors were named based on the common themes of the items loading onto them.

Reliability and internal consistency

Cronbach’s alpha, ranging from 0 to 1, describes the extent to which all the items measure the same construct [13]. The acceptable values range from 0.70 to 0.95.
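As a minimal sketch of how Cronbach's alpha is computed from raw item responses, the function below applies the standard formula (number of items, item variances, variance of the total score). The function name and the demo responses are hypothetical; the study itself used SPSS.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent (all the same length)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 0-4 Likert responses from five respondents to three items.
demo = [[4, 3, 4], [2, 2, 3], [3, 3, 3], [1, 0, 1], [4, 4, 3]]
alpha = cronbach_alpha(demo)
print(round(alpha, 3))
```

Because the factor $k/(k-1)$ and the ratio of variances both depend on the number of items, domains with very few items tend to yield lower alpha values.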

Step 2: Cut-off score using Receiver Operating Characteristic curve analysis

In this phase, the ROC curve was used to determine the cutoff values for acceptable and unacceptable assessment quality of each item, domain, and overall tool. This statistical test utilized the dataset from Step 1. The ROC analysis was conducted with IBM SPSS Version 26. Each participant’s mean score for each item was compared to a criterion measure based on their perceptions of assessment program quality as high or low. The sensitivity (true positive rate) and specificity (true negative rate) were calculated for each potential cutoff score, leading to an ROC curve with sensitivity on the y-axis and 1-specificity on the x-axis. The AUC was calculated to assess the overall discriminatory power of the AIM tool.

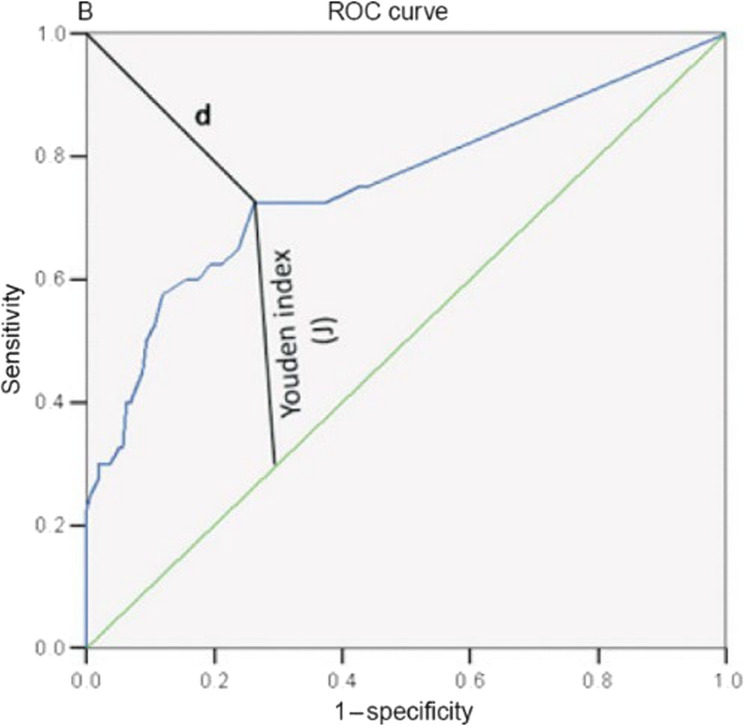

To optimize the cutoff score and balance sensitivity and specificity, two methods were used:

1. Youden’s index (J = sensitivity + specificity − 1): The optimal cutoff point is defined as the point on the ROC curve that is farthest from the diagonal chance line (AUC = 0.5).

2. Distance Method: The optimal cutoff point is defined as the shortest distance between the ROC curve and the upper left corner (Fig. 2).

Fig. 2.

Optimal cut-off point on the ROC curve
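The two selection rules above can be sketched as follows. This is an illustration under stated assumptions, not the SPSS procedure used in the study: candidate cutoffs are taken as midpoints between consecutive observed scores, a respondent is classified as "high" when the score exceeds the cutoff, and the scores and labels are hypothetical.

```python
from math import hypot


def roc_points(scores, labels):
    """Return (cutoff, sensitivity, specificity) for each candidate cutoff.

    labels: 1 = criterion rating 'high quality', 0 = 'low quality'.
    Assumption: a respondent is classified as high when score > cutoff.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    values = sorted(set(scores))
    # Midpoints between consecutive observed scores (assumed convention).
    cutoffs = [(a + b) / 2 for a, b in zip(values, values[1:])]
    points = []
    for c in cutoffs:
        sens = sum(s > c for s in pos) / len(pos)    # true positive rate
        spec = sum(s <= c for s in neg) / len(neg)   # true negative rate
        points.append((c, sens, spec))
    return points


def best_cutoffs(points):
    """Return the cutoff chosen by Youden's index and by minimum distance."""
    youden = max(points, key=lambda p: p[1] + p[2] - 1)
    closest = min(points, key=lambda p: hypot(1 - p[1], 1 - p[2]))
    return youden[0], closest[0]


# Hypothetical total scores and criterion labels for ten respondents.
scores = [60, 65, 70, 72, 75, 78, 80, 85, 90, 95]
labels = [0, 0, 0, 1, 0, 0, 1, 1, 1, 1]
j_cut, d_cut = best_cutoffs(roc_points(scores, labels))
print(j_cut, d_cut)
```

In this toy dataset both rules select the same cutoff; with real data they can disagree, in which case one rule has to be given priority.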

Results

Participant demographics

A total of 347 participants from across Pakistan were included in the study. With 360 responses received from approximately 700 invited faculty members, the response rate was 51%. The participant demographics are shown in Table 1.

Table 1.

Participant demographics

| Participant Demographic | Number | Percentage % |

|---|---|---|

| Gender | ||

| Male | 152 | 44 |

| Female | 195 | 56 |

| Basic Qualification | ||

| MBBS | 266 | 77 |

| BDS | 81 | 23 |

| Institute Type | ||

| Government | 110 | 32 |

| Private | 237 | 68 |

| Department | ||

| Basic | 130 | 37 |

| Para Clinical | 67 | 19 |

| Clinical | 150 | 43 |

| Designation | ||

| Senior Registrar/Lecturer | 53 | 15 |

| Consultant | 6 | 2 |

| Assistant Professor | 130 | 37 |

| Associate Professor | 79 | 23 |

| Professor | 79 | 23 |

| Teaching Experience | ||

| Less than 2 years | 22 | 6 |

| 2–5 | 58 | 17 |

| 6–10 | 103 | 29 |

| 11–15 | 82 | 23 |

| 16–20 | 40 | 11 |

| 21–25 | 27 | 7 |

| 26–30 | 8 | 2 |

| More than 31 years | 7 | 2 |

| Medical Education Qualification | ||

| None | 23 | 6 |

| Workshop | 45 | 13 |

| Certificate | 158 | 45 |

| Diploma | 18 | 5 |

| Master | 93 | 26 |

| PhD | 10 | 3 |

Descriptive statistics of domains

After EFA, the AIM tool was divided into 7 domains (Table 2). The mean score ranged from 2.52 to 3.10, and the standard deviation varied from 0.041 to 0.128. The skewness and kurtosis scores indicated a normal distribution (Table 2; Fig. 3-A-H).

Table 2.

Summary statistics of the 7 domains of the AIM tool

| AIM Tool Domains | Number of Items | Cronbach Alpha | Mean | St Dev | Skew | Kurtosis |

|---|---|---|---|---|---|---|

| Assessment Methods | 7 | 0.859 | 2.68 | 0.128 | −0.59 | 0.11 |

| Assessment Quality Practices | 4 | 0.822 | 2.52 | 0.094 | −0.20 | −4.82 |

| Purpose of Assessment | 4 | 0.783 | 2.68 | 0.124 | −0.02 | −2.69 |

| Assessment Policies | 5 | 0.719 | 3.10 | 0.062 | 0.43 | 0.36 |

| Assessment Design | 3 | 0.809 | 2.71 | 0.068 | −1.65 | - |

| Assessment Transparency | 4 | 0.742 | 2.89 | 0.123 | −0.51 | - |

| Ensuring Fair Assessment | 2 | 0.570 | 2.59 | 0.041 | - | - |

| OVERALL RELIABILITY | 29 | 0.930 | 2.75 | 0.118 | −0.227 | −0.710 |

Fig. 3.

Response distribution; A AIM tool; B Methods domain; C Quality practices domain; D Purpose domain; E Policy domain; F Design domain; G Transparency domain; H Fairness domain

Step one: construct validity using exploratory factor analysis

Construct validity was determined via EFA following the protocol of Williams [10] (Fig. 1). The KMO measure (0.920) indicated an adequate sample size, and Bartlett’s test of sphericity was highly significant (p < 0.001, reported by SPSS as 0.000) (Table 3).

Table 3.

KMO and Bartlett’s tests

| Test | Statistic | Value |
|---|---|---|
| Kaiser-Meyer-Olkin Measure of Sampling Adequacy | | 0.920 |
| Bartlett’s Test of Sphericity | Approx. Chi-Square | 5036.437 |
| | df | 435 |
| | Sig. | 0.000 |

An EFA utilizing orthogonal varimax rotation revealed that 7 factors accounted for 63.96% of the total variance (Table 4). One item (item 8) was removed because of a low factor loading [11], resulting in a 29-item tool. During rotation, certain items were aligned with different factors, as shown in red (Table 5). Four factors maintained item groupings comparable to those of the original tool, and their names were retained. Additionally, three new factors were identified and designated as follows: Assessment design, Assessment transparency, and Ensuring fair assessment. Some authors advocate eliminating a factor that contains fewer than two or three variables. Although the “fairness” domain consists of only two items, both are essential to the assessment process, so this domain was retained [11].

Table 4.

Total Variance – 29 items

| Component | Initial Eigenvalue (Total) | % of Variance | Cumulative % |
|---|---|---|---|
| 1 | 10.263 | 35.389 | 35.389 |
| 2 | 2.330 | 8.034 | 43.423 |
| 3 | 1.488 | 5.130 | 48.553 |
| 4 | 1.239 | 4.271 | 52.824 |
| 5 | 1.165 | 4.019 | 56.843 |
| 6 | 1.058 | 3.647 | 60.498 |
| 7 | 1.007 | 3.471 | 63.961 |
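The percentages in Table 4 follow directly from the eigenvalues: in a principal component analysis of standardized items, the total variance equals the number of items, so each component explains its eigenvalue divided by 29. The sketch below recomputes the figures from the published eigenvalues; small differences in the third decimal place are expected because the eigenvalues themselves are rounded.

```python
# Eigenvalues of the seven retained components, copied from Table 4.
eigenvalues = [10.263, 2.330, 1.488, 1.239, 1.165, 1.058, 1.007]
n_items = 29  # items retained after removal of item 8

# Kaiser's criterion: keep components with eigenvalue >= 1.
retained = [ev for ev in eigenvalues if ev >= 1.0]

# Percentage of total variance explained by each retained component.
pct = [100 * ev / n_items for ev in retained]

# Running (cumulative) percentage.
cumulative = []
running = 0.0
for p in pct:
    running += p
    cumulative.append(running)

for i, (p, c) in enumerate(zip(pct, cumulative), start=1):
    print(f"component {i}: {p:.2f}% of variance, {c:.2f}% cumulative")
```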

Table 5.

EFA: rotated component matrix after removal of item 8

| Item No | Component/Domain | ||||||

|---|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | |

| 11. Appropriate assessment methods are used to assess the affective domain. | 0.78 | ||||||

| 12. Appropriate weightage is given to knowledge, skills, and attitude domains | 0.73 | ||||||

| 10. Appropriate assessment methods are used to assess the psychomotor domain. | 0.65 | ||||||

| 17. The assessment system promotes student learning | 0.57 | ||||||

| 13. The assessment methods used are feasible | 0.55 | ||||||

| 20. Assessments encourage integrated learning | 0.51 | 0.48 | |||||

| 14. Use of new assessment methods is encouraged | 0.45 | 0.41 | |||||

| 28. Regular post-exam item analysis is conducted | 0.83 | ||||||

| 29. Post-exam item analysis results are communicated | 0.82 | ||||||

| 27. There is an item bank | 0.55 | ||||||

| 30. Regular faculty development workshops are conducted | 0.51 | . | |||||

| 18. Regular formative assessments are done | 0.64 | ||||||

| 21. Prompt feedback is given to students | 0.61 | ||||||

| 19. Appropriate mix of formative and summative assessments | 0.43 | 0.60 | |||||

| 25. Teachers are trained to provide feedback | 0.46 | 0.51 | |||||

| 3. Clear assessment procedures | 0.71 | ||||||

| 4. Clear criteria for student progression to the next class | 0.69 | ||||||

| 5. Exam retakes are clearly documented | 0.61 | ||||||

| 2. Oriented about the assessment policy | 0.59 | ||||||

| 1. Clearly defined assessment policy | 0.57 | ||||||

| 15. Clear blueprints | 0.76 | ||||||

| 16. Clear checklists/rubrics for performance assessments | 0.41 | 0.67 | |||||

| 26. Assessments represent the exam blueprints | 0.55 | ||||||

| 24. Adequate role of external examiners in summative exams | 0.66 | ||||||

| 22. The assessment system ensures fair conduct | 0.55 | ||||||

| 23. Adequate resources for assessments | 0.55 | ||||||

| 9. Appropriate assessment methods are used to assess the cognitive domain | 0.43 | 0.48 | |||||

| 6. Appeal against assessment results | 0.72 | ||||||

| 7. External scrutiny of assessment by experts | 0.71 | ||||||

A scree plot revealed an “elbow” after the seventh component, indicating that the first seven factors should be retained, as they account for most of the variance in the data (Fig. 4).

Fig. 4.

Scree plot with 7 eigenvalues

Reliability and internal consistency

The AIM tool demonstrated excellent internal consistency, with an overall Cronbach’s alpha of 0.93 [13]. The domain-specific reliability indices ranged from 0.719 (acceptable) to 0.859 (good), but the “Ensuring Fair Assessment” domain had a suboptimal alpha of 0.57 (Table 2).

Step two: cut-off score using receiver operating characteristic curve analysis

Two methods were used to identify the cutoff score:

Youden index: a point on the ROC curve that is farthest from the diagonal line.

Distance method: the shortest distance between the upper left corner and the ROC curve (Fig. 2).

The analysis identified a cutoff score of 77 out of 116, distinguishing high- from low-quality assessment practices with a sensitivity of 0.964 and specificity of 0.960 (Table 6). The domain-specific cutoff scores ranged from 5.5 to 18.5, but the transparency domain demonstrated two separate cutoff scores (10.5/11.5). When such an inconsistency occurs, the Youden index value is recommended [14].

Table 6.

Area under the curve and cutoff scores

| AIM Domain | AUC | Sensitivity | Specificity | Youden Index (J) | Min. Distance | 95% CI | No. of Items | Domain Total Score | Domain Cut-Off Score |

|---|---|---|---|---|---|---|---|---|---|

| Assessment Methods | 0.924 | 0.842 | 0.880 | 0.722 | 0.198 | 0.895-0.953 | 7 | 28 | 18.5 |

| Assessment Quality Practices | 0.882 | 0.730 | 0.888 | 0.618 | 0.293 | 0.847-0.918 | 4 | 16 | 10.5 |

| Purpose of Assessment | 0.902 | 0.802 | 0.848 | 0.650 | 0.250 | 0.870-0.934 | 4 | 16 | 10.5 |

| Assessment Policies | 0.724 | 0.595 | 0.744 | 0.339 | 0.479 | 0.669-0.779 | 5 | 20 | 15.5 |

| Assessment Design | 0.865 | 0.802 | 0.840 | 0.642 | 0.255 | 0.822-0.908 | 3 | 12 | 8.5 |

| Assessment Transparency | 0.864 | 0.919/0.811 | 0.688/0.784 | 0.607 | 0.287 | 0.822-0.906 | 4 | 16 | 10.5–11.5 |

| Ensuring Fair Assessment | 0.701 | 0.563 | 0.736 | 0.299 | 0.510 | 0.644-0.758 | 2 | 8 | 5.5 |

| AIM Tool | 0.994 | 0.964 | 0.960 | 0.924 | 0.054 | 0.990-0.999 | 29 | 116 | 77 |
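As a consistency check, Youden's index and the distance to the upper left corner can be recomputed from the sensitivity and specificity values in Table 6. The sketch below does this for every row and for the two candidate operating points reported for the transparency domain. The helper names are illustrative, and results may differ from the table in the third decimal place because the inputs are rounded.

```python
from math import hypot

# (sensitivity, specificity) pairs copied from Table 6.
table6 = {
    "Assessment Methods": (0.842, 0.880),
    "Assessment Quality Practices": (0.730, 0.888),
    "Purpose of Assessment": (0.802, 0.848),
    "Assessment Policies": (0.595, 0.744),
    "Assessment Design": (0.802, 0.840),
    "Ensuring Fair Assessment": (0.563, 0.736),
    "AIM Tool": (0.964, 0.960),
}
# The two candidate operating points reported for Assessment Transparency.
transparency = [(0.919, 0.688), (0.811, 0.784)]


def youden(sens, spec):
    """Youden's index J = sensitivity + specificity - 1."""
    return sens + spec - 1


def distance(sens, spec):
    """Euclidean distance from the ROC point to the upper left corner."""
    return hypot(1 - sens, 1 - spec)


for name, (se, sp) in table6.items():
    print(f"{name}: J = {youden(se, sp):.3f}, d = {distance(se, sp):.3f}")

best_j = max(transparency, key=lambda p: youden(*p))    # Youden's choice
best_d = min(transparency, key=lambda p: distance(*p))  # distance choice
print(best_j, best_d)
```

For the transparency domain, the first pair maximizes Youden's index while the second pair minimizes the distance, which is why the two methods yield different cutoffs for that domain.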

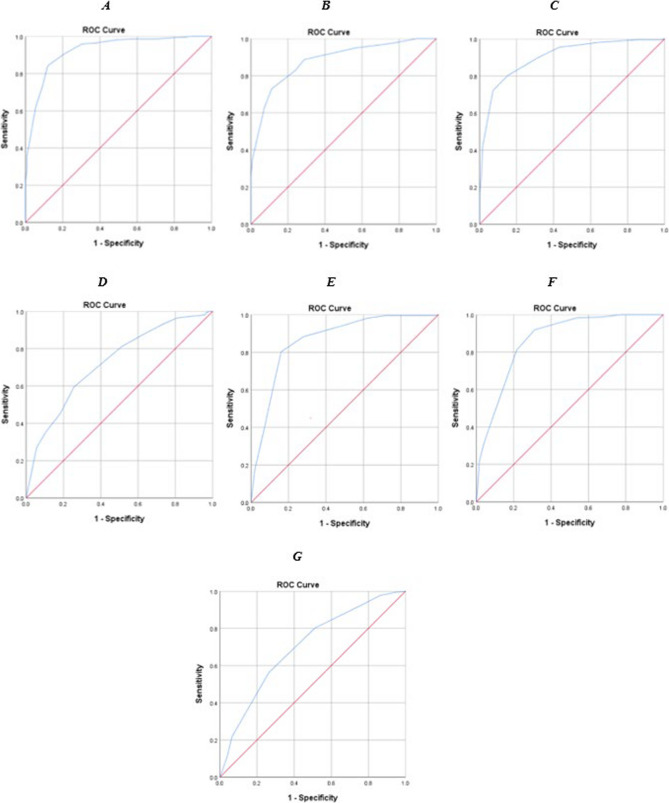

The overall AUC of 0.994 reflects the tool’s excellent ability to differentiate between good and poor assessment implementation (Table 6; Fig. 5-A). All the tool items had an AUC of more than 0.5, with domain AUCs ranging from 0.701 to 0.924 (Table 6; Figs. 5-B-C and 6).

Fig. 5.

A- AUC of the AIM tool; B- AUC of 29 items; C- AUC of seven factors

Fig. 6.

AUC of each domain. A: Assessment Methods; B: Assessment Quality Practices; C: Purpose of Assessment; D: Assessment Policies; E: Assessment Design; F: Assessment Transparency; G: Ensuring Fair Assessment

Table 7 displays the final AIM tool after EFA and ROC analysis, comprising 29 items grouped into seven factors.

Table 7.

Final AIM tool with cutoff scores

| AIM Domain | Items | No. of Items | Domain Total Score | Domain Cut-Off Score |
|---|---|---|---|---|
| Assessment Methods | Appropriate assessment methods are used to assess the affective domain; Appropriate weightage is given to knowledge, skills, and attitude domains; Appropriate assessment methods are used to assess the psychomotor domain; The assessment system promotes student learning; The assessment methods used are feasible; Assessments encourage integrated learning; Use of new assessment methods is encouraged | 7 | 28 | 18.5 |
| Assessment Quality Practices | Regular post-exam item analysis is conducted; Post-exam item analysis results are communicated; There is an item bank; Regular faculty development workshops are conducted | 4 | 16 | 10.5 |
| Purpose of Assessment | Regular formative assessments are done; Prompt feedback is given to students; Appropriate mix of formative and summative assessments; Teachers are trained to provide feedback | 4 | 16 | 10.5 |
| Assessment Policies | Clear assessment procedures; Clear criteria for student progression to the next class; Exam retakes are clearly documented; Oriented about the assessment policy; Clearly defined assessment policy | 5 | 20 | 15.5 |
| Assessment Design | Clear blueprints; Clear checklists/rubrics for performance assessments; Assessments represent the exam blueprints | 3 | 12 | 8.5 |
| Assessment Transparency | Adequate role of external examiners in summative exams; Assessment system ensures fair conduct; Adequate resources for assessments; Appropriate assessment methods are used to assess the cognitive domain | 4 | 16 | 10.5–11.5 |
| Ensuring Fair Assessment | Appeal against assessment results; External scrutiny of assessment by experts | 2 | 8 | 5.5 |
| AIM Tool | | 29 | 116 | 77 |

Discussion

Establishing high-quality assessment practices in undergraduate medical education is crucial for ensuring the effectiveness of educational programs and the training of qualified health professionals. By determining the construct validity and cutoff score of the Assessment Implementation Measure (AIM) tool, the current study builds on the original work by Sajjad et al. [8], in which tool development and content validity were verified through expert opinion. This study, therefore, provides statistical evidence of the utility and reliability of the AIM tool and contributes meaningfully to assessment quality assurance in health professions education.

Construct validity is essential to ensure that an instrument accurately measures its intended construct. During the original AIM tool development by Sajjad et al. [8], construct validity could not be confirmed due to a limited sample size, and the authors assigned items to four conceptual domains that were not statistically established [8]. In contrast, our study addressed this shortcoming by employing exploratory factor analysis (EFA), a standard psychometric technique, which simplifies data by reducing the variables into a smaller group of factors [15, 16]. EFA generated a seven-factor structure covering the domains of assessment methods, quality practices, policies, purpose, design, transparency, and fairness. One item from the original 30-item AIM tool was removed due to a low factor loading, resulting in a final 29-item instrument with strong loadings and explained variance, providing psychometric evidence of the tool’s construct validity [16]. These findings are consistent with scale refinement practices in educational research [16].

In this study, the internal consistency, as measured by Cronbach’s alpha, remained high overall (α = 0.93), indicating excellent consistency [13]. However, the ‘Ensuring Fair Assessment’ domain demonstrated suboptimal reliability (α = 0.570), likely because it contains only two items. This finding signals the need to refine and potentially expand this domain in future versions [13]. Similar limitations have been observed in domains with low item representation in other validated tools [17–20].

Once a tool has been developed, it is important to know how to interpret and report the scores [9]. To enhance the interpretability and decision utility of the AIM tool, this study employed the Receiver Operating Characteristic (ROC) analysis, a method seldom used in educational tool validation. Traditional standard-setting techniques rely heavily on expert consensus and can be subjective, inconsistent, and context-dependent [21]. In contrast, ROC analysis offers an objective method of determining performance thresholds, thereby minimizing human bias and enhancing reproducibility. Using both Youden’s index and the Distance method, this study identified an optimal overall score of 77 for the AIM tool, with a high Area Under the Curve (AUC = 0.994), indicating excellent discriminatory capacity [22]. These results confirm the tool’s ability to precisely differentiate between high and low-quality assessment practices [23]. Moreover, the agreement between the two methods across most domains supports the validity of the selected cutoff scores, providing useful reference points for educators and decision makers.

The AIM tool’s conceptual focus is on the implementation quality of assessments, and its psychometric rigor positions it uniquely among other instruments in medical education. While tools such as HELES, DREEM, AMEET, and JHLES focus on the broader aspects of the learning environment, none are specifically designed to assess features of assessment [17–20]. The only comparable reference found in the literature explored student perceptions of assessment environments [24], which, though insightful, does not address faculty responsibilities and practices. Given that faculty members are responsible for designing, delivering, and analyzing assessments, their input is critical for any meaningful evaluation of assessment quality [6]. The AIM tool, therefore, fills an important gap by focusing on faculty-led assessment processes through a multidimensional lens.

Ensuring the assessment quality of a medical college is essential for producing skilled medical professionals who possess the expertise and critical thinking skills required to deliver reliable and efficient patient care, thereby maintaining public trust. This study presents a psychometrically validated and statistically grounded tool for evaluating the quality of assessments in undergraduate medical education. The validated AIM tool now presents institutions with a data-driven mechanism to improve their assessment practices and ensure that both curricular objectives and international quality standards are achieved. It permits self-audit and evidence-based reforms in assessment strategy and holds promise for institutional benchmarking, accreditation readiness, and ultimately, improved learner outcomes and patient care.

Conclusion

This study addresses a significant gap in the medical education literature by providing a standardized, validated instrument to evaluate the quality of assessment implementation. This study’s strength lies in its robust methodology, the application of advanced validation techniques, and the inclusion of both validity and reliability indices. Through exploratory factor analysis, the AIM tool was refined into a seven-factor structure characterized by strong psychometric indices, thereby confirming its construct validity. In addition, ROC analysis statistically established cutoff scores to distinguish between high and low-quality assessment practices. Together, these findings support the utility of the AIM tool for faculty development, institutional quality assurance, and research in medical education assessment.

Practical implications

The validated AIM tool can discriminate between effective and ineffective assessment implementation, thereby assisting medical institutions in identifying specific strengths and weaknesses that enable targeted resource allocation. The statistically derived scores represent the minimum threshold required for effective assessment; scores below the threshold indicate areas requiring improvement. The routine use of the AIM tool facilitates continuous quality improvement within assessment programs, allowing institutions to monitor their progress over time, establish improvement objectives, and measure the effect of interventions or changes in their assessment quality.
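A minimal sketch of how an institution might apply the cutoffs from Table 7 to one respondent's domain totals is given below. Several choices are assumptions made purely for illustration and are not stated in the source: the function name, treating a total of exactly 77 as "high", and using 10.5 for the transparency domain, which is reported as 10.5–11.5.

```python
# Domain cutoffs from Table 7. Transparency is reported as 10.5-11.5;
# 10.5 is an assumption made here for illustration only.
CUTOFFS = {
    "Assessment Methods": 18.5,
    "Assessment Quality Practices": 10.5,
    "Purpose of Assessment": 10.5,
    "Assessment Policies": 15.5,
    "Assessment Design": 8.5,
    "Assessment Transparency": 10.5,
    "Ensuring Fair Assessment": 5.5,
}
OVERALL_CUTOFF = 77  # out of a maximum of 116


def review(domain_scores):
    """Return (overall label, list of domains scoring below their cutoff)."""
    total = sum(domain_scores.values())
    # Assumption: a total equal to the cutoff counts as 'high'.
    overall = "high" if total >= OVERALL_CUTOFF else "low"
    flagged = [d for d, s in domain_scores.items() if s < CUTOFFS[d]]
    return overall, flagged


# Hypothetical domain totals for a single respondent.
example = {
    "Assessment Methods": 20,
    "Assessment Quality Practices": 9,
    "Purpose of Assessment": 12,
    "Assessment Policies": 16,
    "Assessment Design": 9,
    "Assessment Transparency": 12,
    "Ensuring Fair Assessment": 4,
}
overall, flagged = review(example)
print(overall, flagged)
```

In this hypothetical case the overall score is above the cutoff, yet two domains fall below their thresholds, which illustrates how domain-level cutoffs can direct targeted improvement even when the overall rating is high.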

An established cutoff score provides a yardstick for measuring assessment practices, promoting a culture of excellence in medical education. Assessment programs may be categorized based on AUC values as follows [23]: (i) good (AUC = 0.8–0.9); (ii) fair (AUC = 0.6–0.7); and (iii) poor (AUC = 0.5–0.6). Programs classified as poor can benefit from targeted interventions, which are particularly valuable when resources are limited.
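For readers less familiar with ROC analysis, the sketch below shows one common way to obtain an AUC and a cutoff from total scores and a binary reference classification. It assumes the Youden index (sensitivity + specificity − 1) as the selection criterion, which is only one of several criteria and is not necessarily the one applied in this study. The scores and reference labels are hypothetical and are not study data.

```python
def split_by_group(scores, labels):
    """Separate scores into reference-'high' (1) and reference-'low' (0) groups."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one case in each group")
    return pos, neg


def roc_auc(scores, labels):
    """AUC as the probability that a random 'high' case outscores a random
    'low' case, with ties counted as one half."""
    pos, neg = split_by_group(scores, labels)
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def youden_cutoff(scores, labels):
    """Return (cutoff, sensitivity, specificity) maximizing Youden's J.

    Candidate cutoffs are midpoints between adjacent distinct scores;
    a case is predicted 'high' when its score exceeds the cutoff.
    """
    pos, neg = split_by_group(scores, labels)
    distinct = sorted(set(scores))
    if len(distinct) < 2:
        raise ValueError("need at least two distinct scores")
    best = None
    for low, high in zip(distinct, distinct[1:]):
        cutoff = (low + high) / 2
        sens = sum(s > cutoff for s in pos) / len(pos)
        spec = sum(s <= cutoff for s in neg) / len(neg)
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cutoff, sens, spec)
    return best[1], best[2], best[3]


# Hypothetical total scores and reference labels, for illustration only.
toy_scores = [60, 65, 70, 72, 74, 76, 78, 80, 85, 90, 95]
toy_labels = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

auc = roc_auc(toy_scores, toy_labels)
cutoff, sens, spec = youden_cutoff(toy_scores, toy_labels)
print(f"AUC = {auc:.3f}, cutoff = {cutoff}, "
      f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

Midpoint cutoffs explain why a statistically derived threshold can be a half-point value even when individual scores are whole numbers.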

Limitations and recommendations

Despite the strengths of this study, several limitations must be recognized. While the sample size was sufficient for EFA, future studies with larger and more diverse institutional participation may further enhance the generalizability and cultural applicability of the tool. Additionally, Confirmatory Factor Analysis (CFA) was not conducted, so the factor structure identified during EFA remains to be confirmed. Longitudinal studies could investigate how the tool adapts to changes in assessment practices over time. The low reliability of the ‘Ensuring Fair Assessment’ domain (Cronbach’s alpha = 0.570) is also an area for potential refinement.
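When refining a low-reliability domain, it may help to recompute Cronbach’s alpha after revising or dropping items. The sketch below implements the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), in plain Python. The response matrix is hypothetical and does not reproduce any domain of the AIM tool.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    if len(responses) < 2:
        raise ValueError("need at least two respondents")
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every respondent must answer the same number of items")

    # Variance of each item across respondents (columns of the matrix).
    item_variance_sum = sum(variance(column) for column in zip(*responses))
    # Variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical responses (5 respondents x 3 items), for illustration only.
toy_responses = [
    [3, 4, 3],
    [2, 2, 1],
    [4, 4, 4],
    [1, 2, 2],
    [3, 3, 4],
]
print(f"alpha = {cronbach_alpha(toy_responses):.3f}")
```

Running the function on a domain with each item removed in turn gives a simple alpha-if-item-deleted check.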

Acknowledgements

Not applicable.

Abbreviations

- AIM: Assessment Implementation Measure

- AUC: Area Under the Curve

- CFA: Confirmatory Factor Analysis

- CVI: Content Validity Index

- EFA: Exploratory Factor Analysis

- KMO: Kaiser-Meyer-Olkin measure

- PCA: Principal Component Analysis

- ROC: Receiver Operating Characteristic

Authors’ contributions

Concept of research, objective, research proposal, data collection, data analysis, article writing, and proofreading by KM. Concept of research, data analysis, article writing, and proofreading by MS. Concept of research and proofreading by RAK. All the authors read and approved the final manuscript.

Funding

This research project received no funding.

Data availability

All the data generated and analyzed during this study are provided in the article.

Declarations

Ethics approval and consent to participate

The study was approved by the institutional review board of Riphah International University (appl: Riphah/IRC/24/1025) (Annex 1).

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. General Medical Council. Outcomes for graduates. 2018. p. 28–30.

- 2. Greenfield J, Traboulsi EI, Lombardo-Klefos K, Bierer SB. Best practices for building and supporting effective ACGME-mandated program evaluation committees. MedEdPORTAL. 2020;16:11039. doi:10.15766/mep_2374-8265.11039.

- 3. Schuwirth LWT, van der Vleuten CPM. A history of assessment in medical education. Adv Health Sci Educ. 2020;25(5):1045–56.

- 4. Harden RM, Lilley P. Best practice for assessment. Med Teach. 2018;40(11):1088–90.

- 5. Dent J, Harden R, Hunt D, editors. A practical guide for medical teachers. 6th ed. Elsevier Health Sciences; 2021. p. 271–278.

- 6. Norcini J, Anderson MB, Bollela V, Burch V, Costa MJ, Duvivier R, et al. 2018 consensus framework for good assessment. Med Teach. 2018;40(11):1102–9.

- 7. Al-Ismail MS, Naseralallah LM, Hussain TA, Stewart D, Alkhiyami D, Abu Rasheed HM, et al. Learning needs assessments in continuing professional development: A scoping review. Med Teach. 2023;45(2):203–11.

- 8. Sajjad M, Khan RA, Yasmeen R. Measuring assessment standards in undergraduate medical programs: development and validation of AIM tool. Pak J Med Sci. 2018;34(1):164–9.

- 9. Bahar Şahin Sarkın D, Deniz Gülleroğlu H. Anxiety in prospective teachers: determining the cut-off score with different methods in multi-scoring scales. Kuram Ve Uygulamada Egitim Bilimleri. 2019;19(1):3–21.

- 10. Williams B, Onsman A, Brown T. Exploratory factor analysis: A five-step guide for novices. J Emerg Prim Health Care (JEPHC). 2010;8(3):990399.

- 11. Samuels P. Advice on Exploratory Factor Analysis [Internet]. 2017. Available from: https://www.researchgate.net/publication/319165677.

- 12. Jolliffe IT, Cadima J. Principal component analysis: a review and recent developments. Philos Trans A Math Phys Eng Sci [Internet]. 2016 Apr 4 [cited 2024 Jun 23];374(2065). Available from: pmc/articles/PMC4792409/.

- 13. Sharma B. A focus on reliability in developmental research through Cronbach’s alpha among medical, dental and paramedical professionals. Asian Pac J Health Sci [Internet]. 2016;3(4):271–8. Available from: www.apjhs.com.

- 14. Perkins NJ, Schisterman EF. The inconsistency of optimal cutpoints obtained using two criteria based on the receiver operating characteristic curve. Am J Epidemiol. 2006;163(7):670–5.

- 15. Goretzko D, Pham TTH, Bühner M. Exploratory factor analysis: current use, methodological developments and recommendations for good practice. Curr Psychol. 2021;40(7):3510–21.

- 16. Tavakol M, Wetzel A. Factor Analysis: a means for theory and instrument development in support of construct validity. Int J Med Educ [Internet]. 2020 Nov 6 [cited 2024 Jul 9];11:245. Available from: /pmc/articles/PMC7883798/.

- 17. Rusticus SA, Wilson D, Casiro O, Lovato C. Evaluating the Quality of Health Professions Learning Environments: Development and Validation of the Health Education Learning Environment Survey (HELES). Eval Health Prof [Internet]. 2020 Sep 1 [cited 2024 Jun 20];43(3):162–8. Available from: https://pubmed.ncbi.nlm.nih.gov/30832508/.

- 18. Salih KMA, Idris MEA, Elfaki OA, Osman NMN, Nour SM, Elsidig HA, et al. Measurement of the educational environment in MBBS teaching program, according to DREEM in College of Medicine, University of Bahri, Khartoum, Sudan. Adv Med Educ Pract. 2018;9:617–22.

- 19. Shahid R, Khan RA, Yasmeen R. Establishing construct validity of Ameet (Assessment of medical educational environment by the teachers) inventory. J Pak Med Assoc. 2019;69(1):34–43.

- 20. Shochet RB, Colbert-Getz JM, Wright SM. The Johns Hopkins learning environment scale: measuring medical students’ perceptions of the processes supporting professional formation. Acad Med. 2015;90(6):810–8.

- 21. Lane AS, Roberts C, Khanna P. Do we know who the person with the borderline score is, in Standard-Setting and Decision-Making. Health Professions Educ. 2020;6(4):617–25.

- 22. Foley CS, Moore EC, Milas M, Berber E, Shin J, Siperstein AE. Receiver operating characteristic analysis of intraoperative parathyroid hormone monitoring to determine optimum sensitivity and specificity: analysis of 896 cases. Endocr Pract. 2019;25(11):1117–26.

- 23. Schaufeli WB, De Witte H, Hakanen JJ, Kaltiainen J, Kok R. How to assess severe burnout? Cutoff points for the burnout assessment tool (BAT) based on three European samples. Scand J Work Environ Health. 2023;49(4):293–302.

- 24. Sim JH, Tong WT, Hong WH, Vadivelu J, Hassan H. Development of an instrument to measure medical students’ perceptions of the assessment environment: initial validation. Med Educ Online. 2015;20:28612. doi:10.3402/meo.v20.28612.
