Abstract

Objective

To evaluate the diagnostic accuracy of 4 multimodal large language models (MLLMs) in detecting and grading diabetic retinopathy (DR) using their new image analysis features.

Design

A single-center retrospective study.

Subjects

Patients diagnosed with prediabetes and diabetes.

Methods

Ultra-widefield fundus images from patients seen at the University of California, San Diego, were graded for DR severity by 3 retina specialists using the ETDRS classification system to establish ground truth. Four MLLMs (ChatGPT-4o, Claude 3.5 Sonnet, Google Gemini 1.5 Pro, and Perplexity Llama 3.1 Sonar/Default) were tested using 4 distinct prompts. These assessed multiple-choice disease diagnosis, binary disease classification, and disease severity. Multimodal large language models were assessed for accuracy, sensitivity, and specificity in identifying the presence or absence of DR and relative disease severity.

Main Outcome Measures

Accuracy, sensitivity, and specificity of diagnosis.

Results

A total of 309 eyes from 188 patients were included in the study. The average patient age was 58.7 (56.7–60.7) years, with 55.3% being female. After specialist grading, 70.2% of eyes had DR of varying severity, and 29.8% had no DR. For disease identification with multiple choices provided, Claude and ChatGPT scored significantly higher (P < 0.0006, per Bonferroni correction) than other MLLMs for accuracy (0.608–0.566) and sensitivity (0.618–0.641). In binary DR versus no DR classification, accuracy was the highest for ChatGPT (0.644) and Perplexity (0.602). Sensitivity varied (ChatGPT [0.539], Perplexity [0.488], Claude [0.179], and Gemini [0.042]), whereas specificity for all models was relatively high (range: 0.870–0.989). For the DR severity prompt with the best overall results (Prompt 3.1), no significant differences between models were found in accuracy (Perplexity [0.411], ChatGPT [0.395], Gemini [0.392], and Claude [0.314]). All models demonstrated low sensitivity (Perplexity [0.247], ChatGPT [0.229], Gemini [0.224], and Claude [0.184]). Specificity ranged from 0.840 to 0.866.

Conclusions

Multimodal large language models are powerful tools that may eventually assist retinal image analysis. Currently, however, there is variability in the accuracy of image analysis, and diagnostic performance falls short of clinical standards for safe implementation in DR diagnosis and grading. Further training and optimization of common errors may enhance their clinical utility.

Financial Disclosure(s)

Proprietary or commercial disclosure may be found in the Footnotes and Disclosures at the end of this article.

Keywords: Diabetic retinopathy, Ultra-widefield fundus photography, Multimodal large language model, Artificial intelligence, Image analysis

Diabetes mellitus is a common disease estimated to affect over 10% of the global population aged 20 to 79 years.1 Diabetic retinopathy (DR) is an associated microvascular disease of the retina. Of those with type 2 diabetes mellitus, approximately 60% develop DR within 25 years of diabetes mellitus. Diabetic retinopathy can result in severe vision loss and is a leading cause of blindness in working-age adults.2 Because primary prevention and effective treatments are available, screening can drastically improve outcomes.3

Regular screening for DR represents a critical opportunity for saving vision but requires immense resources.4 In the United States, DR screening rates fall well short of recommendations.5 Poor access to care, out-of-pocket expenses, and lack of time for providers all contribute to this shortfall. In minority groups, various social determinants of health disproportionately reduce screening rates further. In low- and lower-middle-income countries, the most significant barriers to screening are related to a lack of infrastructure and human resources, including a relative shortage of ophthalmologists.6 As the global population and incidence of diabetes continue to rise, resource demands are anticipated to grow even larger in the coming decades.1 New technology offers potential solutions to these escalating demands and could expand screening access and viability.

Amidst a landscape of rapidly developing artificial intelligence (AI) technologies are large language models (LLMs). These generative AI models use deep learning algorithms and vast amounts of training data to interpret and provide seemingly human responses to user prompts.7 Since the release of ChatGPT (OpenAI) in 2022, the popularity of LLMs has grown rapidly,8,9 and there is a growing body of research into the potential applications of this technology in medicine.10, 11, 12 Although originally confined to text-only inputs, the capabilities of LLMs have recently expanded with the introduction of multimodal large language models (MLLMs) that can interpret other inputs such as ophthalmic imaging.13, 14, 15 These models use visual encoders to identify image features such as objects, textures, and colors, which are transformed into other formats that can be fused with text information and processed by the LLM.16 Beginning in late 2023, ChatGPT gained the ability to interpret images with GPT-4 with vision,17 which has since been incorporated into the GPT-4o model.10,18 Other LLM platforms, including Claude (Anthropic), Copilot (Microsoft Corporation), Gemini (Google LLC), Llama (Meta Platforms, Inc), and Perplexity (Perplexity AI), have followed suit and are now multimodal generalists capable of processing queries with both imaging and text, as well as other input modalities. Although many studies have been published on LLM performance in text-based ophthalmology queries,10 to date there appears to be only limited research19 evaluating how accurately widely available MLLMs can identify DR from retinal images.

In this study, we compare the abilities of 4 leading MLLMs to detect and grade DR severity using ultra-widefield (UWF) fundus images. Four current models from different companies were tested: ChatGPT-4o, Claude 3.5 Sonnet, Google Gemini 1.5 Pro, and Perplexity Llama 3.1 Sonar/Default. Because MLLM prompt input can strongly influence output,9,20 the study also explores variability in diagnostic accuracy across different prompting styles.

Methods

This is a comparative analysis study using retrospective images of patients seen at the University of California, San Diego, from July 2021 to August 2024. Informed consent was waived by the Institutional Review Board of the University of California, San Diego, due to the retrospective nature of the work. Besides the anonymized fundus photograph, no additional patient information was provided to the MLLM or human graders, including image metadata. The research was conducted according to the principles of the Declaration of Helsinki.

Image Database

The study used an anonymized dataset of images acquired at our institution to avoid overlap with online data, which could potentially be used to train MLLMs. Eligible DR images were identified first by a search of the University of California, San Diego, electronic health record for patients who had completed UWF imaging and had a diagnosis of either diabetes or prediabetes. Eyes with any history of the following were excluded: retinal pathology besides DR, history of retinal surgery, history of ocular trauma, central retinal artery or vein occlusion, pathologic myopia, glaucoma, or intraocular surgery completed within 6 months of imaging. Images without visible third-order arterioles were deemed of poor quality and also excluded. A maximum of 1 image per eye was included to ensure heterogeneity of the dataset. This process was continued until various levels of DR severity (per preliminary grading by a senior retina fellow [N.N.M.]) were well-represented in the dataset. Ultra-widefield images had been obtained from patients approximately 15 minutes after dilation with tropicamide 1% and phenylephrine 2.5% (Optos P200DTx, Optos PLC). Demographic information was collected for each patient, including age, sex, and self-reported race.

Image Processing

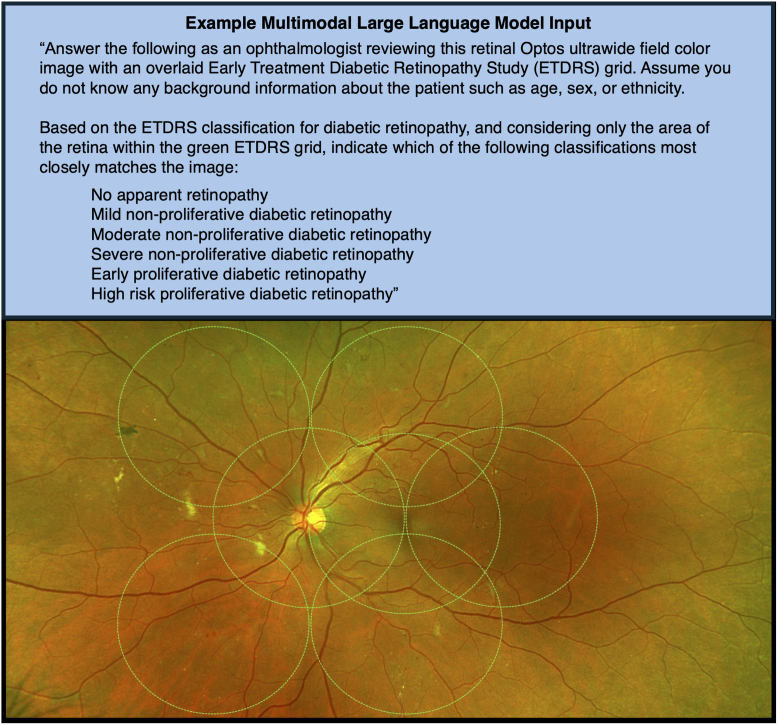

Images were downloaded from Zeiss Forum Viewer (version 4.2.4.15, Carl Zeiss) after zooming in to the innermost UWF borders to maximize quality of the ETDRS area. This method was chosen as this system is commonly used to view clinical images. Images were saved in portable network graphic format and fully anonymized. Two versions of each image were then saved: one with an ETDRS 7-standard field grid overlay for grading DR severity and a second version without the grid for all prompts unrelated to disease severity. The grid overlay was designed and positioned per the previously published methods21,22 to adapt UWF images for ETDRS grading (Fig 1). The grid overlay was manually added using Microsoft PowerPoint (version 16.89, Microsoft Corporation).

Figure 1.

Example MLLM input, MLLM prompt (3.1) text, and ultra-widefield fundus image input. The ETDRS 7-field grid overlay (green) was applied for grading of disease severity using the ETDRS criteria. MLLM = multimodal large language model.

Expert Review

Each image was then independently graded for disease severity by 3 retina attending physicians (J.F.R., N.L.S., and S.B.) to establish ground truth. Grades were assessed using the ETDRS classification system of DR, per the American Academy of Ophthalmology Preferred Practice Pattern.23 The possible severity grades were: no DR, mild nonproliferative diabetic retinopathy (NPDR), moderate NPDR, severe NPDR, early proliferative diabetic retinopathy (PDR), and high-risk PDR. Graders held equal weight, and at least a two-thirds consensus was required to establish a ground truth severity grade. Any images without consensus following independent grading were adjudicated by all graders together to establish a final grade.
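The consensus rule described above can be sketched in a few lines. This is an illustrative sketch only, not the authors' procedure as implemented; the function name `consensus_grade` and the exact label strings are assumptions introduced here.

```python
from collections import Counter

# Severity grades in increasing order, as listed in the text.
GRADES = [
    "no DR", "mild NPDR", "moderate NPDR",
    "severe NPDR", "early PDR", "high-risk PDR",
]

def consensus_grade(grades):
    """Return the grade chosen by at least 2 of 3 graders, else None.

    None signals that the image needs group adjudication.
    (Hypothetical helper, for illustration only.)
    """
    if len(grades) != 3:
        raise ValueError("expected exactly 3 independent grades")
    for grade in grades:
        if grade not in GRADES:
            raise ValueError(f"unknown grade: {grade}")
    grade, count = Counter(grades).most_common(1)[0]
    return grade if count >= 2 else None

print(consensus_grade(["mild NPDR", "mild NPDR", "moderate NPDR"]))  # mild NPDR
print(consensus_grade(["no DR", "mild NPDR", "moderate NPDR"]))      # None
```

A `None` result corresponds to the images that were adjudicated by all graders together.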

MLLM Prompting

Four MLLMs with image analysis capabilities were compared: ChatGPT-4o, Claude 3.5 Sonnet, Google Gemini 1.5 Pro, and Perplexity Llama 3.1 Sonar/Default. The paid versions of each were used to access the most up-to-date models at the time of data collection (August 2024 to October 2024). The same series of images and prompts was entered into each model in identical fashion. For each UWF patient image, 4 unique prompts (labeled 1, 2, 3.1, and 3.2) were entered into the MLLM along with the uploaded image, one at a time. A new chat was started for every image and prompt to maintain the independence of each query and to avoid MLLMs interpreting separate queries as interrelated follow-up questions. Multimodal LLM responses were recorded in a spreadsheet.

Prompts were crafted with common principles of prompt engineering in mind.9,20 Because of the length of some prompts, we have presented these in Figure S1 (available at www.ophthalmologyscience.org) for reference. Each prompt included a preface instructing the MLLM to “think like an ophthalmologist,” to assume no other background information was known about the patient, and to provide the most likely answer based on the image alone. Prompts tested the MLLMs using a multiple-choice (MC) format: Prompt 1 (MC diagnosis from 8 common retina conditions), Prompt 2 (MC diagnosis, DR or no DR), and Prompt 3 (MC disease severity, 6 choices). Prompt 3 requested grading of disease severity using the same classification system as the human graders. Because of the complexity of the question and to explore the effects of prompt engineering, this prompt was tested with 2 versions (3.1 and 3.2) that provided variable information about the grading criteria. Prompt 3.1 instructed the MLLM to use the ETDRS DR severity classification but provided no rubric or criteria details. Prompt 3.2 instructed the same and listed the full ETDRS criteria, including standard photographs that are part of the grading system. This was the same material shown to the human graders. For Prompts 1 and 2, the images without an ETDRS grid were used to avoid potentially biasing results, as the grid itself is associated with DR diagnosis. For both versions of Prompt 3, the images with the grid were used to facilitate using the grading criteria, and the MLLM was instructed to grade only within the ETDRS 7-field grid.

Statistical Analysis

Subject-level demographic and eye-level diagnostic characteristics are presented as count (%) and mean (95% confidence interval) for categorical and continuous parameters, respectively. Comparisons of subject-level demographic characteristics were performed between the DR and non-DR cohorts using chi-squared tests and t tests for categorical and continuous parameters, respectively. Multimodal LLM performance was evaluated for accuracy, sensitivity, and specificity. Additionally, Cohen kappa was reported for Prompt 2 to assess agreement between MLLM predictions and the actual diagnosis. For Prompts 1 and 3, we provided overall sensitivity and specificity metrics, as well as per-class estimates to evaluate individual DR and non-DR model-based predictive performances. Nonparametric bootstrap resampling, conducted at the subject level, was utilized to compute 95% confidence intervals of model performance metrics, as well as derive P values depicting significant differences across MLLM predictive performance. The statistical analysis was conducted using the R programming language for statistical computation, version 4.4.0 (R Core Team 2024, R Foundation for Statistical Computing). P values <0.05 were considered statistically significant, except for multiple comparisons between MLLM models, which used a significance level of 0.0006 per Bonferroni adjustment.
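Because some patients contributed 2 eyes, resampling at the subject level keeps both eyes of a patient together in each bootstrap replicate. The sketch below illustrates that idea for a binary DR versus no DR task in Python rather than R. It is not the authors' code: the percentile interval, the number of replicates, the function names, and the toy data are all assumptions made for illustration.

```python
import random

def binary_metrics(rows):
    """rows: iterable of (truth, pred) booleans, True = DR.

    Returns (accuracy, sensitivity, specificity); NaN where undefined.
    """
    tp = fn = fp = tn = 0
    for truth, pred in rows:
        if truth and pred:
            tp += 1
        elif truth:
            fn += 1
        elif pred:
            fp += 1
        else:
            tn += 1
    n = tp + fn + fp + tn
    nan = float("nan")
    accuracy = (tp + tn) / n if n else nan
    sensitivity = tp / (tp + fn) if tp + fn else nan
    specificity = tn / (tn + fp) if tn + fp else nan
    return accuracy, sensitivity, specificity

def subject_bootstrap_ci(eyes, metric_index, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI, resampling subjects (not eyes) with replacement.

    eyes: list of (subject_id, truth, pred). metric_index: 0 = accuracy,
    1 = sensitivity, 2 = specificity. All defaults are illustrative.
    """
    by_subject = {}
    for subject_id, truth, pred in eyes:
        by_subject.setdefault(subject_id, []).append((truth, pred))
    subjects = list(by_subject)
    rng = random.Random(seed)
    stats = []
    for _ in range(n_boot):
        sampled = rng.choices(subjects, k=len(subjects))
        rows = [row for sid in sampled for row in by_subject[sid]]
        value = binary_metrics(rows)[metric_index]
        if value == value:  # skip replicates where the metric is NaN
            stats.append(value)
    stats.sort()
    lower = stats[int((alpha / 2) * (len(stats) - 1))]
    upper = stats[int((1 - alpha / 2) * (len(stats) - 1))]
    return lower, upper

# Toy data (hypothetical): 5 subjects, 7 eyes.
eyes = [
    ("s1", True, True), ("s1", True, False),
    ("s2", False, False),
    ("s3", True, True), ("s3", False, False),
    ("s4", True, False),
    ("s5", False, True),
]
print(binary_metrics([(t, p) for _, t, p in eyes]))
print(subject_bootstrap_ci(eyes, metric_index=0))
```

Resampling eyes independently would treat fellow eyes as unrelated observations and could understate the width of the interval.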

Results

Cohort Summary

A total of 309 eyes from 188 patients were included, after removing eyes with excluded ocular history or poor UWF image quality preventing accurate grading. Average patient age was 58.7 (95% confidence interval: 56.7–60.7) years, and 55.3% were female (Table 1). There were no significant differences between the no DR and DR groups in terms of sex or self-reported race. Patients in the no DR group were older on average, with a mean age of 62.8 (58.9–66.6) years versus 57.2 (54.9–59.6) years in the DR group (P = 0.017). Of the included eyes, 305 (98.7%) were from patients with diabetes, and 4 (1.3%) were from patients with prediabetes who underwent screening.

Table 1.

Cohort Demographic Summary of Age, Sex, and Self-Identified Race, Stratified by Disease Status

| | Total Cohort (n = 188 Subjects) | DR (n = 139 Subjects) | No DR (n = 49 Subjects) | P Value |
|---|---|---|---|---|
| Age, mean | 58.7 (56.7–60.7) | 57.2 (54.9–59.6) | 62.8 (58.9–66.6) | 0.017 |
| Sex | | | | |
| Female | 104 (55.3%) | 77 (55.4%) | 27 (55.1%) | >0.999 |
| Male | 84 (44.7%) | 62 (44.6%) | 22 (44.9%) | |
| Race | | | | 0.467 |
| American Indian or Alaska Native | 1 (0.5%) | 1 (0.7%) | 0 (0.0%) | |
| Asian | 21 (11.2%) | 14 (10.1%) | 7 (14.3%) | |
| Black or African American | 9 (4.8%) | 5 (3.6%) | 4 (8.2%) | |
| Native Hawaiian or Other Pacific Islander | 2 (1.1%) | 2 (1.4%) | 0 (0.0%) | |
| Other race or mixed race | 65 (34.6%) | 52 (37.4%) | 13 (26.5%) | |
| Unknown or not reported | 13 (6.9%) | 8 (5.8%) | 5 (10.2%) | |
| White | 77 (41.0%) | 57 (41.0%) | 20 (40.8%) | |

DR = diabetic retinopathy.

Means are presented with 95% confidence intervals.

In terms of diagnosis, 92 (29.8%) eyes had no DR per human grader consensus, and 217 (70.2%) had DR of varying severity (Table 2). In binary disease classification, independent consensus grades (2 or more graders in agreement) were reached for all eyes with no adjudication necessary, with a Fleiss' kappa of 0.819 indicating near-perfect agreement. For disease severity, independent consensus grades (2 or more graders in agreement) were reached for 292 (94.5%) eyes, and group adjudication was required for 17 (5.5%) eyes with disagreement. Consensus among graders was reached for all images. Intraclass correlation factoring relative nearness of severity grades between graders was 0.826, indicating good reliability.
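For readers unfamiliar with the agreement statistic, the sketch below shows how Fleiss' kappa is computed for a fixed number of raters per image. It is a generic textbook implementation written for illustration, not the authors' analysis code, and the toy ratings are hypothetical.

```python
def fleiss_kappa(ratings):
    """Fleiss' kappa for items each rated by the same number of raters.

    ratings: list of per-item lists of category labels,
    e.g. [["DR", "DR", "no DR"], ...] for 3 graders.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if any(len(item) != n_raters for item in ratings):
        raise ValueError("every item needs the same number of ratings")
    categories = sorted({label for item in ratings for label in item})
    # counts[i][j] = number of raters assigning item i to category j
    counts = [[item.count(c) for c in categories] for item in ratings]
    # Overall proportion of all assignments that fall in each category.
    p_j = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(len(categories))
    ]
    # Per-item agreement: share of rater pairs that agree on that item.
    p_i = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_i) / n_items          # mean observed agreement
    p_e = sum(p * p for p in p_j)       # agreement expected by chance
    if p_e == 1.0:
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_e) / (1 - p_e)

# Toy example: 3 graders, perfect agreement on both images.
print(fleiss_kappa([["DR", "DR", "DR"], ["no DR", "no DR", "no DR"]]))  # 1.0
```

A value of 1 indicates complete agreement, and values at or below 0 indicate agreement no better than chance.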

Table 2.

Cohort Ground Truth Disease Status and Severity: Number of Eyes and Percentage of the Cohort per Diagnosis, as Determined by Expert Grader Consensus

| | Total Cohort (n = 309 Eyes) |
|---|---|
| Disease status | |
| DR | 217 (70.2%) |
| No DR | 92 (29.8%) |
| Disease severity | |
| No DR | 92 (29.8%) |
| Mild NPDR | 89 (28.8%) |
| Moderate NPDR | 83 (26.9%) |
| Severe NPDR | 4 (1.3%) |
| Early PDR | 22 (7.1%) |
| High-risk PDR | 19 (6.1%) |

DR = diabetic retinopathy; NPDR = nonproliferative diabetic retinopathy; PDR = proliferative diabetic retinopathy.

MLLM Multiple Choice Disease Classification (Prompt 1)

Table 3 summarizes the performance of all MLLMs in choosing the correct diagnosis (out of 8 choices) in terms of accuracy, mean multiclass sensitivity and specificity, as well as the sensitivity and specificity on a per-class basis (DR vs. no DR). P values are included to indicate significant differences in these metrics between MLLMs. For accuracy, Claude (0.608 [0.547–0.668]) and ChatGPT (0.566 [0.506–0.624]) scored highest, with Perplexity (0.369 [0.311–0.433]) and Gemini (0.339 [0.283–0.399]) scoring significantly lower (P < 0.0006 for all comparisons). Sensitivity varied between models, with significantly higher mean sensitivities for ChatGPT (0.641 [0.557–0.659]) and Claude (0.618 [0.564–0.676]). Specificity of roughly 0.75 was seen for ChatGPT, Gemini, and Perplexity.

Table 3.

Multimodal Large Language Model Performance for Multiple-Choice (8 Retina Conditions) Identification of Diabetic Retinopathy (Prompt 1)

| Model | Accuracy | Sensitivity | Specificity | Sensitivity (DR) | Sensitivity (No DR) | Specificity (DR) | Specificity (No DR) |
|---|---|---|---|---|---|---|---|
| ChatGPT 4o | 0.566 (0.506–0.624)†,‡ | 0.641 (0.557–0.659)†,‡ | 0.779 (0.733–0.825)∗ | 0.456 (0.383–0.525) | 0.826 (0.732–0.904) | 0.881 (0.795–0.941) | 0.677 (0.609–0.745) |
| Claude Sonnet 3.5 | 0.608 (0.547–0.668)§,\|\| | 0.618 (0.564–0.676)§,\|\| | 0.636 (0.568–0.689)§,\|\| | 0.594 (0.517–0.668) | 0.641 (0.522–0.752) | 0.663 (0.545–0.774) | 0.608 (0.527–0.682) |
| Gemini 1.5 Pro | 0.339 (0.283–0.399) | 0.447 (0.422–0.478) | 0.771 (0.726–0.808) | 0.177 (0.127–0.226) | 0.717 (0.613–0.817) | 0.978 (0.940–1.000) | 0.563 (0.491–0.636) |
| Perplexity Llama 3.1/Default | 0.369 (0.311–0.433) | 0.476 (0.438–0.514) | 0.758 (0.722–0.785) | 0.212 (0.158–0.273) | 0.739 (0.635–0.830) | 0.935 (0.879–0.977) | 0.581 (0.507–0.649) |

DR = diabetic retinopathy; MLLM = multimodal large language model.

Accuracy, mean multiclass sensitivity and specificity, as well as sensitivity and specificity on a per-class basis (DR vs. no DR), were presented for each MLLM model. Multimodal large language models were given the following choices: age-related macular degeneration, diabetic retinopathy, hypertensive retinopathy, sickle cell retinopathy, post-traumatic retinopathy, retinal vein occlusion, normal retina, and other pathology. Means are presented with 95% confidence intervals. Significant differences (P < 0.0006, per Bonferroni correction) between models for overall accuracy, sensitivity, and specificity are denoted by the following: ∗ChatGPT vs. Claude; †ChatGPT vs. Gemini; ‡ChatGPT vs. Perplexity; §Claude vs. Gemini; ||Claude vs. Perplexity.
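The mean multiclass values in Table 3 match the unweighted average of the 2 per-class estimates. For ChatGPT, for example, (0.456 + 0.826) / 2 = 0.641 for sensitivity and (0.881 + 0.677) / 2 = 0.779 for specificity. The sketch below shows one way such one-vs-rest estimates can be computed when the model may answer with any of 8 diagnoses but the ground truth is only DR or no DR. The short labels and the toy data are hypothetical, and this is not the authors' code.

```python
def one_vs_rest(truths, preds, cls):
    """Sensitivity and specificity for one class against all other answers."""
    tp = sum(1 for t, p in zip(truths, preds) if t == cls and p == cls)
    fn = sum(1 for t, p in zip(truths, preds) if t == cls and p != cls)
    tn = sum(1 for t, p in zip(truths, preds) if t != cls and p != cls)
    fp = sum(1 for t, p in zip(truths, preds) if t != cls and p == cls)
    return tp / (tp + fn), tn / (tn + fp)

def macro_average(truths, preds, classes):
    """Unweighted mean of the per-class sensitivities and specificities."""
    pairs = [one_vs_rest(truths, preds, c) for c in classes]
    mean_sens = sum(s for s, _ in pairs) / len(pairs)
    mean_spec = sum(s for _, s in pairs) / len(pairs)
    return mean_sens, mean_spec

# Toy example (hypothetical labels). "RVO" is a wrong answer that is
# neither DR nor no DR, so it counts as a miss for DR but not as a
# false positive for no DR.
truths = ["DR", "DR", "DR", "no DR", "no DR"]
preds = ["DR", "RVO", "no DR", "no DR", "DR"]
print(one_vs_rest(truths, preds, "DR"))
print(one_vs_rest(truths, preds, "no DR"))
print(macro_average(truths, preds, ["DR", "no DR"]))

# Consistency check against the ChatGPT row of Table 3.
print(round((0.456 + 0.826) / 2, 3), round((0.881 + 0.677) / 2, 3))
```

Because answers outside the DR and no DR classes are possible, sensitivity for one class and specificity for the other need not be equal, which is also visible in Table 3.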

MLLM Binary Diabetic Retinopathy Classification (Prompt 2)

Table 4 summarizes MLLM performance in classifying images as more likely to have DR or no DR. Here, ChatGPT accuracy was highest (0.644 [0.583–0.703]), followed by Perplexity (0.602 [0.542–0.659]), Claude (0.417 [0.347–0.482]), and Gemini (0.324 [0.259–0.391]). ChatGPT's accuracy was significantly greater than that of Claude (P < 0.0006) and Gemini (P < 0.0006). ChatGPT also scored highest in terms of F1 score (0.644 [0.621–0.701]), which combines model precision and recall, and Cohen kappa (κ; 0.326 [0.257–0.434]), which indicated only minimal agreement with the expert graders. There was substantial variation in sensitivity, and the highest scores were modest at best (ChatGPT [0.539], Perplexity [0.488], Claude [0.179], and Gemini [0.042]). Specificity was universally high, led by Gemini (0.989) and Claude (0.978), followed by ChatGPT (0.891) and Perplexity (0.870).

Table 4.

Multimodal Large Language Model Performance in Binary Classification of Diabetic Retinopathy vs. No Diabetic Retinopathy (Prompt 2)

| Model | Accuracy | Sensitivity | Specificity | Cohen Kappa (κ) | F1 Score |
|---|---|---|---|---|---|
| ChatGPT 4o | 0.644 (0.583–0.703)∗,† | 0.539 (0.478–0.592)∗,† | 0.891 (0.837–0.917) | 0.326 (0.257–0.434)∗,† | 0.644 (0.621–0.701)∗,† |
| Claude Sonnet 3.5 | 0.417 (0.347–0.482)‡,§ | 0.179 (0.144–0.266)‡,§ | 0.978 (0.957–0.989)§ | 0.102 (0.078–0.149)‡,§ | 0.274 (0.247–0.314)‡,§ |
| Gemini 1.5 Pro | 0.324 (0.259–0.391)\|\| | 0.042 (0.031–0.096)\|\| | 0.989 (0.978–1.000)\|\| | 0.019 (0.011–0.046)\|\| | 0.050 (0.045–0.061)\|\| |
| Perplexity Llama 3.1/Default | 0.602 (0.542–0.659) | 0.488 (0.407–0.532) | 0.870 (0.815–0.925) | 0.273 (0.246–0.362) | 0.614 (0.548–0.657) |

MLLM = multimodal large language model.

Accuracy, sensitivity, specificity, Cohen kappa, and F1 results (95% confidence interval) for each MLLM model. Multimodal large language models were asked to identify whether diabetic retinopathy or no diabetic retinopathy was more likely based on the ultra-widefield fundus image. Significant differences (P < 0.0006, per Bonferroni correction) in accuracy, sensitivity, and specificity between models are denoted by the following: ∗ChatGPT vs. Claude; †ChatGPT vs. Gemini; ‡Claude vs. Gemini; §Claude vs. Perplexity; ||Gemini vs. Perplexity.
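The binary metrics in Table 4 all derive from a 2 × 2 confusion matrix. The sketch below gives the standard textbook definitions, using hypothetical counts chosen for round numbers; it is not the authors' code, and their reported point estimates may have been obtained differently (for example, through the bootstrap procedure). The last lines illustrate why low sensitivity limits accuracy in this cohort: with 217 of 309 eyes having DR, accuracy is a prevalence-weighted mix of sensitivity and specificity.

```python
def binary_summary(tp, fn, fp, tn):
    """Standard metrics from confusion-matrix counts (DR = positive class)."""
    n = tp + fn + fp + tn
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn)   # recall for DR
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    # Cohen kappa: observed agreement corrected for chance agreement,
    # where chance agreement comes from the row and column totals.
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    kappa = (p_o - p_e) / (1 - p_e)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
        "kappa": kappa,
    }

# Hypothetical counts, for illustration only.
summary = binary_summary(tp=40, fn=10, fp=5, tn=45)
print(summary)

# Accuracy as a prevalence-weighted mix, using the cohort prevalence and
# the ChatGPT sensitivity and specificity reported for Prompt 2.
prevalence = 217 / 309
implied_accuracy = prevalence * 0.539 + (1 - prevalence) * 0.891
print(round(implied_accuracy, 3))  # 0.644
```

With roughly 70% of eyes having DR, a model that rarely answers "DR" can show very high specificity while still achieving low overall accuracy.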

MLLM Diabetic Retinopathy Severity Classification (Prompts 3.1 and 3.2)

Table 5 summarizes MLLM performance in classifying images based on severity of DR (including no DR) using both versions of Prompt 3. On average, accuracy was highest for the simpler prompt with the least specific grading criteria (Prompt 3.1) and lower with increased prompt specificity (Prompt 3.2). For this reason, we will focus on the results from Prompt 3.1. Highest accuracy was attained by Perplexity (0.411 [0.351–0.473]), followed by ChatGPT (0.395 [0.338–0.454]), Gemini (0.392 [0.329–0.453]), and Claude (0.314 [0.258–0.375]). There were no significant differences in accuracy between MLLM models for Prompt 3.1. Cohen kappa with equal distance weighting indicated weak agreement with expert graders for all MLLMs (Prompt 3.1; highest κ = 0.178, Gemini), which was also the case with kappa scores weighted by squared distances to account for relative nearness of severity grades to each other (Prompt 3.1: highest weighted κ = 0.213, Gemini). Average sensitivity was universally low for Prompt 3.1 (Perplexity [0.247], ChatGPT [0.229], Gemini [0.224], and Claude [0.184]), with Claude significantly lower than the others. Specificity was roughly 0.85 for all MLLMs, with small significant differences between Claude (0.840) and the other models.

Table 5.

Multimodal Large Language Model Performance for Grading Diabetic Retinopathy Disease Severity (Prompts 3.1 and 3.2)

| Model | Prompt 3.1 Accuracy | Prompt 3.1 Sensitivity | Prompt 3.1 Specificity | Prompt 3.2 Accuracy | Prompt 3.2 Sensitivity | Prompt 3.2 Specificity |
|---|---|---|---|---|---|---|
| ChatGPT 4o | 0.395 (0.338–0.454) | 0.229 (0.214–0.269) | 0.866 (0.861–0.882)∗ | 0.372 (0.315–0.432)† | 0.277 (0.231–0.299) | 0.863 (0.860–0.874)∗,† |
| Claude Sonnet 3.5 | 0.314 (0.258–0.375) | 0.184 (0.173–0.189)§ | 0.840 (0.836–0.842)‡,§ | 0.272 (0.213–0.330) | 0.168 (0.163–0.176) | 0.834 (0.832–0.838) |
| Gemini 1.5 Pro | 0.392 (0.329–0.453) | 0.224 (0.201–0.232) | 0.858 (0.850–0.866) | — | — | — |
| Perplexity Llama 3.1/Default | 0.411 (0.351–0.473) | 0.247 (0.228–0.269) | 0.864 (0.855–0.871) | 0.217 (0.164–0.273) | 0.181 (0.117–0.219) | 0.831 (0.829–0.834) |

MLLM = multimodal large language model.

Accuracy, sensitivity, and specificity (95% confidence interval) are provided for each MLLM model. Prompt 3.1 named the ETDRS grading criteria, whereas Prompt 3.2 provided the full ETDRS grading criteria. Prompt 3.1 yielded the best results on average. Gemini was incompatible with Prompt 3.2, as it did not accept multiple image uploads per query; therefore, these results are not shown. Significant differences (P < 0.0006, per Bonferroni correction) in accuracy, sensitivity, and specificity between models are denoted by the following: ∗ChatGPT vs. Claude; †ChatGPT vs. Perplexity; ‡Claude vs. Gemini; §Claude vs. Perplexity.
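The 2 weighted kappa variants mentioned in the text differ only in how strongly they penalize distant disagreements on the ordinal severity scale. The sketch below is a generic implementation for illustration, with grades encoded as integers 0 (no DR) to 5 (high-risk PDR). It is not the authors' code, and the toy data are hypothetical.

```python
def weighted_kappa(truths, preds, n_classes, power=1):
    """Weighted Cohen kappa for ordinal grades coded 0..n_classes-1.

    power=1 gives equal-distance (linear) weights;
    power=2 gives squared-distance (quadratic) weights.
    """
    n = len(truths)
    observed = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(truths, preds):
        observed[t][p] += 1
    row_totals = [sum(row) for row in observed]
    col_totals = [
        sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)
    ]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            # Disagreement weight grows with the distance between grades.
            weight = (abs(i - j) / (n_classes - 1)) ** power
            weighted_observed += weight * observed[i][j] / n
            weighted_expected += weight * row_totals[i] * col_totals[j] / n ** 2
    return 1 - weighted_observed / weighted_expected

# Toy example: one eye graded one step too high, all others correct.
truths = [0, 1, 2, 3, 4, 5]
preds = [1, 1, 2, 3, 4, 5]
print(weighted_kappa(truths, preds, n_classes=6, power=1))  # linear weights
print(weighted_kappa(truths, preds, n_classes=6, power=2))  # quadratic weights
```

In this toy case the quadratic version is higher than the linear version because a one-step error is penalized less under squared-distance weights.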

Error Analysis

For all prompts, error analyses were performed to identify patterns associated with incorrect answers and, where possible, to scrutinize the justifications provided by MLLMs for given answers.

The first analysis for Prompts 1 and 2 stratified false negative answers by ground truth DR severity to reveal whether certain DR severities were more prone to being missed. Marked variations were found between MLLMs. On average, however, they frequently missed both mild and higher severity cases (Table S1, available at www.ophthalmologyscience.org). False positives were then assessed for hallucinated features named in the MLLM text answers. The most frequently hallucinated features were found to be microaneurysms and hemorrhages, followed by exudates, neovascularization, and other features not included in the ETDRS criteria (Table S2, available at www.ophthalmologyscience.org).

The results of Prompts 3.1 and 3.2 were first stratified by ground truth disease severity, which showed that sensitivity varied considerably (Table S3, available at www.ophthalmologyscience.org). In some cases, sensitivity and specificity were highly unbalanced, such as in Claude's strong overprediction of moderate NPDR in Prompt 3.2 (sensitivity: 96.4%; specificity: 4.9%). All models struggled to identify both types of PDR across all prompts, a critical shortcoming for a clinical screening tool. Sensitivity was higher for no DR, mild NPDR, and moderate NPDR, but still only exceeded 50% in a minority of cases. Results could also vary dramatically between prompts, as seen, for example, in the decrease in Claude's sensitivity for mild NPDR from 0.933 in Prompt 3.1 to 0.045 in Prompt 3.2.

For Prompts 3.1 and 3.2, incorrect answers were then assessed for error type to understand the causes for incorrect severity grades. For this analysis, 200 incorrectly graded eyes (50 from each MLLM) were randomly selected for each prompt based on patient visit date. After reviewing the MLLM text outputs (Fig S2, available at www.ophthalmologyscience.org), error categories were found to include DR feature hallucinations, DR feature omissions, incorrect usage of the ETDRS grading system, or a combination of hallucinations/omissions and incorrect application of the grading system. At least 90% of errors in this sample were attributable to feature hallucinations or omissions (Table S4, available at www.ophthalmologyscience.org). In rare errors attributable to the grading system, grading logic appeared intact, but an alternative grading system was used. This typically appeared to be the International Clinical Disease Severity Scale,24 despite the required grading scale being specified in the prompt. This was evident, for example, when the MLLM referred to the “4-2-1 Rule” in its explanation for a grade, which is part of the International scale but not ETDRS. Prompt 3.2 had no errors attributable to the apparent grading system used.

A final error analysis for Prompts 3.1 and 3.2 looked at errors where disease severity was underclassified or overclassified and then stratified these errors by ground truth DR disease severity (Table S5, available at www.ophthalmologyscience.org). This found that underclassification errors, tending to represent omissions, were most common in Prompt 3.1 and frequently occurred across most severity levels. Overclassifications, tending to represent hallucinations, were more common in Prompt 3.2. Because no DR cases cannot be underclassified and high-risk PDR cases cannot be overclassified by definition, these were not included.
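The under- and overclassification tally can be sketched as a comparison of grade ranks on the ordinal severity scale. The function names, label strings, and toy data below are hypothetical, and this is not the authors' code.

```python
from collections import Counter

# Severity grades in increasing order, as listed in the Methods.
GRADES = [
    "no DR", "mild NPDR", "moderate NPDR",
    "severe NPDR", "early PDR", "high-risk PDR",
]
RANK = {grade: index for index, grade in enumerate(GRADES)}

def classify_error(truth, pred):
    """Label a prediction as 'correct', 'under', or 'over' relative to truth."""
    diff = RANK[pred] - RANK[truth]
    if diff == 0:
        return "correct"
    return "over" if diff > 0 else "under"

def tally_errors_by_truth(pairs):
    """Count under- and overclassifications per ground truth grade."""
    tally = {grade: Counter() for grade in GRADES}
    for truth, pred in pairs:
        label = classify_error(truth, pred)
        if label != "correct":
            tally[truth][label] += 1
    return tally

# Toy example (hypothetical predictions).
pairs = [
    ("moderate NPDR", "mild NPDR"),      # underclassified
    ("mild NPDR", "moderate NPDR"),      # overclassified
    ("early PDR", "no DR"),              # underclassified
    ("no DR", "mild NPDR"),              # overclassified (no DR cannot be under)
    ("high-risk PDR", "high-risk PDR"),  # correct
]
for grade, counts in tally_errors_by_truth(pairs).items():
    print(grade, dict(counts))
```

Because no DR is the lowest grade and high-risk PDR the highest, the first can only ever be overclassified and the second only underclassified.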

Discussion

This study evaluated the capabilities of 4 MLLMs in detecting DR and grading disease severity from UWF fundus images. From our review, this may address gaps in the literature associated with MLLM image analysis, which is a relatively recent feature. Our findings demonstrate the potential utility of MLLMs in DR screening, with diagnostic accuracy from fundus imaging alone exceeding 60% in some cases. However, at present, sensitivity and specificity remain below those achieved by human graders screening for DR, which reach at least 75% sensitivity and 95% specificity in many studies.25, 26, 27 Multimodal LLM performance also falls short of that achieved by other AI-based algorithms for DR detection, which have achieved upward of 90% sensitivity and specificity.25,28,29 A 2024 systematic review by Joseph et al28 found AI algorithms could correctly detect DR from fundus images on average 94% of the time and exclude eyes without DR 89% of the time. In addition, our study found no MLLM was able to reliably identify PDR, which would be critical for a screening tool given the high risk of vision loss associated with untreated PDR. In general, the observed sensitivity rates were inadequate and could lead to missed diagnoses, and suboptimal specificities could lead to overdiagnosis and resource inefficiencies. Combining MLLM screening with human grading of flagged images offers 1 path to more balanced sensitivity and specificity,30 but improvement to sensitivity at the levels we observed would be needed regardless. The MLLMs tested have only been developed recently, however, and performance is likely to continue to improve with refinement and training.10

Among the models we tested, ChatGPT-4o and Claude 3.5 Sonnet often performed significantly better than Gemini 1.5 Pro and Perplexity Llama 3.1 Sonar/Default, indicating that the choice of model matters. This varied, however, with different prompts and prompting styles, as seen, for example, in Perplexity outperforming Claude in binary disease classification. This highlights the effects of prompt engineering and that accuracy achieved for 1 prompt should not be assumed for another. As a form of prompt engineering, MC disease identification (Prompt 1) was also tested as a prompt variant to binary DR classification (Prompt 2) and to gauge baseline performance in a query unspecific to DR. Nevertheless, results remained well below the standard for clinical implementation. Our results from testing different versions of disease severity prompts (Prompts 3.1 and 3.2) suggested that prompt simplicity may have benefits or at least is unlikely to be detrimental. Research has shown the accuracy of ChatGPT-4 with vision image analysis can be improved with “few-shot training.”31 However, when testing this for disease severity by providing 1 to 2 labeled examples with prompts, a marked decline in accuracy was observed with a single answer given repeatedly (data not presented), so this was not used for further analyses.

To provide a baseline understanding of the errors driving poor performance and diagnostic decisions, we performed error analyses for all prompts, finding that errors were most often due to DR feature omissions and hallucinations rather than logical errors or poor interpretations of the grading system. Poor performance was not limited to certain disease severities but was most pronounced in the identification of severe NPDR and both forms of PDR. The most commonly hallucinated features in Prompt 2 false positives were microaneurysms, hemorrhages, and exudates. For disease severity, either underclassification errors (likely feature omissions) or overclassification errors (hallucinations) were largely responsible for misses, depending on the prompt. Errors attributable to the grading system were rare in Prompt 3.1, and there were no errors attributable to the apparent grading system used by MLLMs in Prompt 3.2. This suggests possibly better adherence to the rubric when further details were provided in the prompt, although larger sample sizes would be needed to confirm this. Insights into MLLM reasoning may help guide further training that could improve the models.

Although our results suggest these MLLMs are not sufficiently accurate at present for safe clinical implementation in DR screening, there is potential for the technology to eventually have an impact in this space. As mentioned above, other AI tools specifically trained for DR detection have been shown to accurately assist in diagnosis. In practical terms, it has been estimated that various automated DR screening tools can reduce workloads by 26% to 70%.4,32,33 Cost-effectiveness has also been found in many, although not all, comparisons of automated versus manual DR screening.27,29,33,34 In countries with higher costs associated with physician graders, such as the United States, data suggest automated DR screening to be particularly cost-effective,29 reducing costs by an estimated 23.3% in 1 analysis.35 An Australian analysis found a benefit–cost ratio of approximately 7.5 for a scenario in which manual DR screening was replaced by an automated AI system.27 Because automated screening accuracy meets and even surpasses that of human graders,28 it is likely that AI tools can improve both practical and economic efficiency of DR screening in many settings.

Multimodal LLMs offer several potential advantages over other automated DR screening tools, which have been estimated to cost anywhere from $9 to upward of $66 per patient screening.27,33,34,36,37 These costs can include fixed fees per patient, as is the case for the United States Food and Drug Administration-approved IDx-DR diagnostic tool for automated DR detection.34 Certain MLLMs, on the other hand, can be accessed for free or, currently, for as little as $20 per month.38 Enterprise pricing for clinical DR screening with MLLMs is not available, and a direct cost-comparison study would be beneficial. However, the potential cost savings of an MLLM charging a flat monthly fee, which could be used to screen hundreds of patients per month, are evident. In addition, whereas other AI tools are often specially trained and designed to detect specific pathologies, MLLMs are generalized tools with wide-ranging applications not limited to specific use cases, such as screening for a single disease.10 A variety of clinical support could theoretically be accessible to any provider with an internet connection and a smartphone, tablet, or computer. This accessibility could help expand screening to low-resource areas and settings beyond ophthalmology offices, such as primary care and endocrinology clinics, potentially increasing early detection of DR in otherwise undiagnosed patients.28 With the greatest relative increase in diabetes prevalence expected to occur in middle-income countries over the next 2 decades,39 economic viability and access will be critical in providing adequate DR screening globally. Multimodal LLMs may be uniquely positioned to support providers in meeting growing economic and human resource demands.
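The flat-fee argument above can be made concrete with simple arithmetic. The following is a minimal sketch, not a cost-effectiveness analysis: the dollar figures are the ones cited in this paragraph, whereas the monthly screening volumes and the function names are hypothetical and chosen only for illustration. It ignores imaging hardware, staffing, enterprise pricing (which is not available), and, critically, diagnostic accuracy.

```python
# Illustrative cost arithmetic only; not a cost-effectiveness analysis.
# Dollar figures come from the paragraph above; screening volumes are assumed.

FLAT_MONTHLY_FEE_USD = 20.0        # lowest paid MLLM subscription cited in the text
PER_SCREENING_FEE_LOW_USD = 9.0    # low end of estimates for other automated tools
PER_SCREENING_FEE_HIGH_USD = 66.0  # high end of estimates for other automated tools


def flat_fee_cost_per_screening(monthly_fee: float, screenings: int) -> float:
    """Spread one flat monthly fee evenly across the month's screenings."""
    if screenings <= 0:
        raise ValueError("screenings must be a positive integer")
    return monthly_fee / screenings


def per_screening_monthly_total(fee_per_screening: float, screenings: int) -> float:
    """Total monthly spend when every screening is billed separately."""
    if screenings < 0:
        raise ValueError("screenings must not be negative")
    return fee_per_screening * screenings


if __name__ == "__main__":
    for screenings in (100, 300, 500):  # hypothetical monthly volumes
        flat = flat_fee_cost_per_screening(FLAT_MONTHLY_FEE_USD, screenings)
        low = per_screening_monthly_total(PER_SCREENING_FEE_LOW_USD, screenings)
        high = per_screening_monthly_total(PER_SCREENING_FEE_HIGH_USD, screenings)
        print(
            f"{screenings} screenings/month: flat fee ${flat:.2f} per screening; "
            f"per-screening billing ${low:,.0f} to ${high:,.0f} per month"
        )
```

Under these assumptions the flat fee amounts to cents per screening at any plausible volume, which is why a direct cost-comparison study that also accounts for accuracy would be needed before drawing conclusions.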

With these advantages, there has been great interest in using LLMs in clinical practice. Within retinal disease, ChatGPT-4 has generated appropriate responses to some text-based diagnostic questions upward of 80% to 90% of the time, though results vary by study and application.40,41 In assessing DR risk from text-based clinical and biochemical data, 1 study found the sensitivity and specificity of ChatGPT-4 to be 67% and 68%, respectively.42 The diagnostic accuracy of MLLMs for image analysis, however, has been assessed in only a very limited number of studies relating to ophthalmic conditions. The accuracy of ChatGPT-4 with vision in diagnosing glaucoma from fundus images was found to be 68%, 70%, and 81% for 3 different image sets,13 and ChatGPT-4 accurately diagnosed various ophthalmic diseases from fundus images in 4 of 12 cases.43 Our results fall between these ranges and are somewhat similar to those achieved in text-only-based DR risk assessment with ChatGPT-4.42 Results are likely to improve with additional training and development, much as text-based models have.

Although we tested a total of 4 prompts, prompt engineering and other prompting styles could be explored further. The models we tested were also not fine-tuned for a particular application. This was a deliberate choice to assess baseline performance of the standard models, but additional studies using fine-tuning could elucidate the maximum attainable performance of these MLLMs for DR screening. Although few-shot learning did not appear helpful in our study, additional research using other prompts and example images, as investigated previously,31 is warranted.

As AI technologies are explored and considered for eventual clinical use, additional research into ethical considerations such as accountability, transparency, patient privacy, and equity in health care delivery should be prioritized. Although this study used only anonymized data, clinical use of MLLMs with identifying patient information can carry additional ethical and future regulatory implications that will need to be addressed. Hallucinations and inaccuracies will also pose safety concerns if these technologies are used to augment or replace human work, and proper oversight and rigorous validation will be required. These issues will be central amidst economic pressures as AI becomes integrated into health care.

This study was limited by its retrospective nature with a relatively small number of cases for certain DR severity levels, and the population was limited to a single academic center. Providing only UWF imaging to MLLMs and comparing grading using ETDRS grids may limit generalizability because DR diagnosis often benefits from multimodal imaging and other clinical context. Our results do, however, provide a baseline for the accuracy achievable from fundus imaging alone. The exclusion of patients with other retinal pathology may also limit generalizability. Finally, because MLLMs provided categorical answers without confidence estimates, receiver operating characteristic areas under the curve were not calculated.
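To illustrate the final limitation, the sketch below shows how accuracy, sensitivity, and specificity follow from categorical DR versus no DR answers alone. It is a minimal illustration, not the analysis code used in this study; the function name and the toy labels are hypothetical. Because each image yields only a yes/no answer, the result is a single operating point rather than a curve, so a receiver operating characteristic area under the curve cannot be traced without per-image confidence scores.

```python
from typing import Dict, Sequence


def binary_screening_metrics(
    truth: Sequence[bool], predicted: Sequence[bool]
) -> Dict[str, float]:
    """Accuracy, sensitivity, and specificity from categorical answers.

    True means "DR present"; False means "no DR".
    """
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    if not truth:
        raise ValueError("at least one graded image is required")

    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)          # DR correctly flagged
    tn = sum(1 for t, p in pairs if not t and not p)  # no DR correctly cleared
    fp = sum(1 for t, p in pairs if not t and p)      # DR reported but absent
    fn = sum(1 for t, p in pairs if t and not p)      # DR present but missed

    nan = float("nan")  # returned when a class is absent from the ground truth
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn) if (tp + fn) else nan,
        "specificity": tn / (tn + fp) if (tn + fp) else nan,
    }


# Toy labels for illustration only; these are NOT study data.
toy_truth = [True, True, True, True, False, False]
toy_predicted = [True, False, False, True, False, False]
toy_metrics = binary_screening_metrics(toy_truth, toy_predicted)

if __name__ == "__main__":
    for name, value in toy_metrics.items():
        print(f"{name}: {value:.3f}")
```

In the toy example, half of the DR eyes are missed while no healthy eye is flagged, a low-sensitivity, high-specificity pattern of the kind reported for several models in the binary classification prompt.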

In conclusion, this study provides a foundational assessment of 4 widely accessible MLLMs in image-based detection and grading of DR. At present, performance falls short of expected clinical standards for safe implementation for screening. As models continue to develop, further research into performance following additional training and fine-tuning will be useful to provide ongoing assessment of their clinical potential.

Manuscript no. XOPS-D-25-00169.

Footnotes

Supplemental material available at www.ophthalmologyscience.org.

The study was conducted at the University of California San Diego, La Jolla, CA.

Disclosures:

All authors have completed and submitted the ICMJE disclosures form.

The authors made the following disclosures:

I.D.N.: Grants – Unrestricted grants from UCSD Jacobs Retina Center and RPB inc. (payments were made to the institution [Jacobs Retina Center, Shiley Eye Center, UCSD]), Grant from the Jacobs Family Retina Fellowship Fund (payments were made to the institution [Jacobs Retina Center, Shiley Eye Center, UCSD]); Travel expenses – travel cost reimbursement through UCSD (payments were made through UCSD after submitting travel cost receipts).

J.S.C.: Honoraria – AbbVie, Genentech, Glaukos, Immunocore, Mallinckrodt, Alcon.

J.F.R.: Consultant – Carl Zeiss Meditec (1/2024 $500 consulting fee regarding surgical microscope with payment made to author. No relation to this project and no conflict to disclose).

N.L.S.: Grants – Robert Winn Foundation, NIH K12; Travel expenses – ABO 2025 Oral Board Examination – Examiner.

S.B.: Grants – NIH (CO-1 ON 2 grants ROI), Nixon Visions Foundation (support for research time), Ocugen (P1 – clinical trial x2), MeiraGTx (P1 – ON 3 trials), Janssen (P1 – ON 2 trials), Belite (P1 – ON 1 trial), IONIS (P1 – ON 1 trial); Royalties – JP Medical, Elsevier (books); Honoraria – American Academy of Optometry; Participation on a Data Safety Monitoring Board – AAVantgarde (DSMB).

HUMAN SUBJECTS: Human subjects were included in this study. Informed consent was waived by the institutional review board of the University of California, San Diego due to the retrospective nature of the work. Besides the anonymized fundus photograph, no additional patient information was provided to the multimodal large language model or human graders, including image metadata. The research was conducted according to the principles of the Declaration of Helsinki.

No animal subjects were used in this study.

Author Contributions:

Conception and design: Most, Chen, Borooah

Data collection: Most, Mehta, Nagel, Russell, Scott, Borooah

Analysis and interpretation: Most, Walker, Borooah

Obtained funding: N/A

Overall responsibility: Most, Borooah

Supplementary Data

References

- 1. Hossain M.J., Al-Mamun M., Islam M.R. Diabetes mellitus, the fastest growing global public health concern: early detection should be focused. Health Sci Rep. 2024;7

- 2. Antonetti D.A., Silva P.S., Stitt A.W. Current understanding of the molecular and cellular pathology of diabetic retinopathy. Nat Rev Endocrinol. 2021;17:195–206. doi: 10.1038/s41574-020-00451-4.

- 3. Wong T.Y., Sun J., Kawasaki R., et al. Guidelines on diabetic eye care: the international council of ophthalmology recommendations for screening, follow-up, referral, and treatment based on resource settings. Ophthalmology. 2018;125:1608–1622. doi: 10.1016/j.ophtha.2018.04.007.

- 4. Nørgaard M.F., Grauslund J. Automated screening for diabetic retinopathy – a systematic review. Ophthalmic Res. 2018;60:9–17. doi: 10.1159/000486284.

- 5. Lu Y. Divergent perceptions of barriers to diabetic retinopathy screening among patients and care providers, Los Angeles, California, 2014–2015. Prev Chronic Dis. 2016;13:E140. doi: 10.5888/pcd13.160193.

- 6. Piyasena M.M.P.N., Murthy G.V.S., Yip J.L.Y., et al. Systematic review on barriers and enablers for access to diabetic retinopathy screening services in different income settings. PLoS One. 2019;14

- 7. Gilson A., Safranek C.W., Huang T., et al. How does ChatGPT perform on the United States Medical Licensing Examination (USMLE)? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2023;9

- 8. Mohammadi S.S., Nguyen Q.D. A user-friendly approach for the diagnosis of diabetic retinopathy using ChatGPT and automated machine learning. Ophthalmol Sci. 2024;4

- 9. Meskó B. Prompt engineering as an important emerging skill for medical professionals: tutorial. J Med Internet Res. 2023;25

- 10. Agnihotri A.P., Nagel I.D., Artiaga J.C.M., et al. Large language models in ophthalmology: a review of publications from top ophthalmology journals. Ophthalmol Sci. 2024;5

- 11. Chen J.S., Reddy A.J., Al-Sharif E., et al. Analysis of ChatGPT responses to ophthalmic cases: can ChatGPT think like an ophthalmologist? Ophthalmol Sci. 2025;5

- 12. Bellanda V.C.F., Santos M.L.D., Ferraz D.A., et al. Applications of ChatGPT in the diagnosis, management, education, and research of retinal diseases: a scoping review. Int J Retina Vitr. 2024;10:79.

- 13. Jalili J., Jiravarnsirikul A., Bowd C., et al. Glaucoma detection and feature identification via GPT-4V fundus image analysis. Ophthalmol Sci. 2025;5

- 14. Chia M.A., Antaki F., Zhou Y., et al. Foundation models in ophthalmology. Br J Ophthalmol. 2024;108

- 15. Mihalache A., Huang R.S., Cruz-Pimentel M., et al. Artificial intelligence chatbot interpretation of ophthalmic multimodal imaging cases. Eye. 2024;38:2491–2493. doi: 10.1038/s41433-024-03074-5.

- 16. Guo R., Wei J., Sun L., et al. A survey on advancements in image–text multimodal models: from general techniques to biomedical implementations. Comput Biol Med. 2024;178

- 17. GPT-4V(ision) system card. https://openai.com/index/gpt-4v-system-card/

- 18. Xu P., Chen X., Zhao Z., Shi D. Unveiling the clinical incapabilities: a benchmarking study of GPT-4V(ision) for ophthalmic multimodal image analysis. Br J Ophthalmol. 2024;108:1384–1389. doi: 10.1136/bjo-2023-325054.

- 19. Aftab O., Khan H., VanderBeek B.L., et al. Evaluation of ChatGPT-4 in detecting referable diabetic retinopathy using single fundus images. AJO Int. 2025;2

- 20. Wu J.H., Nishida T., Moghimi S., Weinreb R.N. Effects of prompt engineering on large language model performance in response to questions on common ophthalmic conditions. Taiwan J Ophthalmol. 2024;14:454–457. doi: 10.4103/tjo.TJO-D-23-00193.

- 21. Silva P.S., Cavallerano J.D., Sun J.K., et al. Nonmydriatic ultrawide field retinal imaging compared with dilated standard 7-Field 35-mm photography and retinal specialist examination for evaluation of diabetic retinopathy. Am J Ophthalmol. 2012;154:549–559.e2. doi: 10.1016/j.ajo.2012.03.019.

- 22. Silva P.S., Cavallerano J.D., Sun J.K., et al. Peripheral lesions identified by mydriatic ultrawide field imaging: distribution and potential impact on diabetic retinopathy severity. Ophthalmology. 2013;120:2587–2595. doi: 10.1016/j.ophtha.2013.05.004.

- 23. Flaxel C.J., Adelman R.A., Bailey S.T., et al. Diabetic retinopathy preferred practice pattern. Ophthalmology. 2020;127:P66–P145. doi: 10.1016/j.ophtha.2019.09.025.

- 24. Wu L., Fernandez-Loaiza P., Sauma J., et al. Classification of diabetic retinopathy and diabetic macular edema. World J Diabetes. 2013;4:290–294. doi: 10.4239/wjd.v4.i6.290.

- 25. Alqahtani A.S., Alshareef W.M., Aljadani H.T., et al. The efficacy of artificial intelligence in diabetic retinopathy screening: a systematic review and meta-analysis. Int J Retina Vitr. 2025;11:48.

- 26. Tahir H.N., Ullah N., Tahir M., et al. Artificial intelligence versus manual screening for the detection of diabetic retinopathy: a comparative systematic review and meta-analysis. Front Med. 2025;12

- 27. Hu W., Joseph S., Li R., et al. Population impact and cost-effectiveness of artificial intelligence-based diabetic retinopathy screening in people living with diabetes in Australia: a cost effectiveness analysis. EClinicalMedicine. 2024;67

- 28. Joseph S., Selvaraj J., Mani I., et al. Diagnostic accuracy of artificial intelligence-based automated diabetic retinopathy screening in real-world settings: a systematic review and meta-analysis. Am J Ophthalmol. 2024;263:214–230. doi: 10.1016/j.ajo.2024.02.012.

- 29. Rajesh A.E., Davidson O.Q., Lee C.S., Lee A.Y. Artificial intelligence and diabetic retinopathy: AI framework, prospective studies, head-to-head validation, and cost-effectiveness. Diabetes Care. 2023;46:1728–1739. doi: 10.2337/dci23-0032.

- 30. Krause J., Gulshan V., Rahimy E., et al. Grader variability and the importance of reference standards for evaluating machine learning models for diabetic retinopathy. Ophthalmology. 2018;125:1264–1272. doi: 10.1016/j.ophtha.2018.01.034.

- 31. Ono D., Dickson D.W., Koga S. Evaluating the efficacy of few-shot learning for GPT-4Vision in neurodegenerative disease histopathology: a comparative analysis with convolutional neural network model. Neuropathol Appl Neurobiol. 2024;50

- 32. Hansen M., Tang H.L. Automated detection of diabetic retinopathy in three European populations - university of surrey. J Clin Exp Ophthalmol. 2016;7:4.

- 33. Rizvi A., Rizvi F., Lalakia P., et al. Is artificial intelligence the cost-saving lens to diabetic retinopathy screening in low- and middle-income countries? Cureus. 2023;15

- 34. Ruamviboonsuk P., Chantra S., Seresirikachorn K., et al. Economic evaluations of artificial intelligence in ophthalmology. Asia Pac J Ophthalmol. 2021;10:307–316.

- 35. Fuller S.D., Hu J., Liu J.C., et al. Five-year cost-effectiveness modeling of primary care-based, nonmydriatic automated retinal image analysis screening among low-income patients with diabetes. J Diabetes Sci Technol. 2020;16:415–427. doi: 10.1177/1932296820967011.

- 36. Xie Y., Nguyen Q.D., Hamzah H., et al. Artificial intelligence for teleophthalmology-based diabetic retinopathy screening in a national programme: an economic analysis modelling study. Lancet Digit Health. 2020;2:e240–e249. doi: 10.1016/S2589-7500(20)30060-1.

- 37. Tufail A., Rudisill C., Egan C., et al. Automated diabetic retinopathy image assessment software: diagnostic accuracy and cost-effectiveness compared with human graders. Ophthalmology. 2017;124:343–351. doi: 10.1016/j.ophtha.2016.11.014.

- 38. ChatGPT pricing. https://openai.com/chatgpt/pricing/

- 39. Sun H., Saeedi P., Karuranga S., et al. IDF diabetes atlas: global, regional and country-level diabetes prevalence estimates for 2021 and projections for 2045. Diabetes Res Clin Pract. 2022;183

- 40. Cheong K.X., Zhang C., Tan T.E., et al. Comparing generative and retrieval-based chatbots in answering patient questions regarding age-related macular degeneration and diabetic retinopathy. Br J Ophthalmol. 2024;108:1443–1449. doi: 10.1136/bjo-2023-324533.

- 41. Ferro D.L., Roth J., Zinkernagel M., Anguita R. Application and accuracy of artificial intelligence-derived large language models in patients with age related macular degeneration. Int J Retina Vitr. 2023;9:71.

- 42. Raghu K., S T., S Devishamani C., et al. The utility of ChatGPT in diabetic retinopathy risk assessment: a comparative study with clinical diagnosis. Clin Ophthalmol Auckl NZ. 2023;17:4021–4031.

- 43. Gupta A., Al-Kazwini H. Evaluating ChatGPT's diagnostic accuracy in detecting fundus images. Cureus. 2024;16
