Abstract

Extensive research over the past two decades has focused on identifying a preictal period in scalp as well as intracranial EEG (iEEG). This has led to a plethora of seizure prediction and forecasting algorithms, which have reached only moderate success on curated and pre-segmented EEG datasets (accuracy/AUC ≳ 0.8). Furthermore, when tested on their ability to pseudo-prospectively predict seizures from continuous EEG recordings, all existing algorithms suffer from low sensitivity (many false negatives), high time in warning (many false positives), or both. In this study we provide pilot evidence that predictive modeling of the dynamics of iEEG features (biomarkers), seizure risk, or both, at the scale of tens of minutes, can significantly improve the pseudo-prospective accuracy of almost any state-of-the-art seizure forecasting model. In contrast to the bulk of prior research, which has focused on designing better features and classifiers, we start from off-the-shelf features and classifiers and shift the focus to learning how iEEG features (classifier input) and seizure risk (classifier output) evolve over time. Using iEEG from patients undergoing presurgical evaluation at the Hospital of the University of Pennsylvania and six state-of-the-art baseline models, we first demonstrate that a wide array of iEEG features are highly predictable over time. Over 99% and 35% of studied features have positive $R^2$ for 10-second- and 10-minute-ahead prediction, respectively (mean $R^2$ of 0.85 and 0.2). Furthermore, in almost all patients and baseline models, we observe a strong correlation between feature predictability (with some features remaining predictable up to 30 minutes) and classification-based feature importance.
Building on this, we demonstrate that adding an autoregressive model that predicts iEEG features 12 ± 4 minutes into the future is almost universally beneficial, with a mean improvement of 28% in the area under the pseudo-prospective sensitivity-time in warning curve (PP-AUC). Adding a second autoregressive predictive model at the level of seizure risk further improved accuracy, bringing the total mean improvement in PP-AUC to 51%. Our results provide pioneering evidence for the long-term predictability of seizure-relevant iEEG features and for the broad utility of time series predictive modeling in improving seizure forecasting from continuous intracranial EEG.

1. Introduction

Despite continuous advancements in antiseizure medications, over one-fifth of epilepsy patients remain refractory to pharmacological interventions1–3. Seizure prediction and forecasting have been the subject of intensive research efforts over the past two and a half decades5–12. This work has been motivated by the potential to improve the lives of patients with drug-resistant epilepsy and supported by evidence that epileptic seizures may begin well in advance of clinical onset4. All of these efforts share the same goal: reliably identifying the preictal period with increasing temporal and statistical accuracy. The field has attracted considerable interest, as evidenced by various international initiatives and competitions13–16, which have collectively propelled substantial innovation in algorithmic approaches to seizure prediction. These efforts have produced a diverse range of methodologies, from statistical models to deep learning frameworks. Yet, despite this progress, accurate seizure forecasting remains an elusive goal. Existing algorithms universally suffer from low sensitivity, high false positive rates, or both, particularly when tested pseudo-prospectively on continuous EEG. This gap highlights a pressing need for novel approaches that go beyond the commonly pursued goals of improved feature extraction and advanced classification techniques.

Existing seizure detection, prediction, and forecasting algorithms commonly operate in three steps: they split the time series into (possibly overlapping) segments, extract certain features (biomarkers) from each, and train a classifier on those features5–12, 17–20. The features may be purely signal-based20–25 or based on dynamical systems theory26–33. They may be derived from the time domain24, 34–36, the frequency domain34, 37–40, or both21, 23, 25, 41, 42. The classification may be done via a simple threshold crossing43, 44 or various state-of-the-art machine learning algorithms23, 24, 41, 45–57. In all cases, however, this approach is critically limited, as it treats the features extracted from different segments as independent and identically distributed (i.i.d.) observations from some underlying distribution. This independence assumption is often implicit in the statistical and machine learning algorithms employed. Ironically, it ignores mounting knowledge of the multiple-timescale dynamics of ictogenesis itself58, 59. It also ignores the various slow and ultra-slow dynamics of circadian and multidien rhythms5, 17, 21, 60, 61, sleep-wake states62, 63, and seizure clustering64, 65, all of which have well-documented modulatory effects on ictogenesis. As such, the standard approach misses a great opportunity for true “forecasting”. In true forecasting, the risk of a seizure occurring minutes later is determined not from the brain’s current state but from what we predict its state to be minutes into the future.

In this paper, we seek to improve the performance of existing machine learning-based seizure forecasting algorithms by augmenting them with two layers of predictive modeling. The first operates on the iEEG features used as input for classification, and the second on the seizure risk produced as output by the classification models. Our approach leverages long-range temporal correlations in classification features to expand the contextual information available to the classifier. It then refines its estimates of seizure risk by filtering historical trends in its past risk estimates. Instead of proposing a new classifier, feature set, or model architecture, we introduce a general framework that operates independently of the specific choice of algorithm or features. It adds layers on top of existing seizure prediction methods in a way that requires no retraining or fine-tuning of the baseline model. By integrating this framework with various baseline models, we demonstrate its capacity to consistently improve performance across a wide range of models, from support vector machines (SVMs) and random forests to convolutional neural networks (CNNs) and transformers.

2. Results

Multi-level predictive modeling for improved seizure prediction.

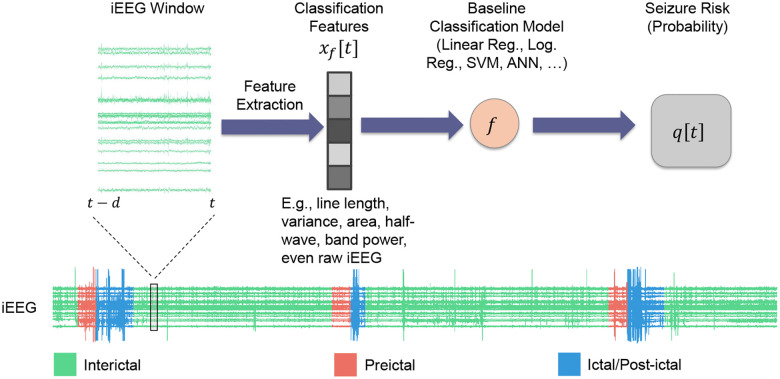

Significant prior work has pursued the design of seizure prediction algorithms from scalp and intracranial EEG5–12. Instead of designing another such algorithm, in this work we take the orthogonal path of choosing a seizure prediction algorithm off the shelf and augmenting it with rich temporal statistical relationships that existing algorithms largely overlook. A typical seizure prediction/forecasting algorithm can be abstracted into a classifier $f$ that maps a vector of features $\mathbf{x}(t)$, extracted from a moving window of (i)EEG up to some time $t$, to the probability $p_B(t)$ that the given window is preictal (Figure 1):

$$p_B(t) = f\big(\mathbf{x}(t)\big) \tag{1}$$

In what follows we will refer to the function $f$ as the baseline model and to the time-varying probability $p_B(t)$ as the seizure risk at time $t$ generated by this model. If needed, $p_B(t)$ may subsequently be thresholded to obtain a binary interictal/preictal decision.
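The baseline pipeline in Eq. (1) can be sketched in a few lines of Python. This is a minimal, hypothetical illustration and does not reproduce any of the six baseline models. It assumes a single-channel signal, two toy features (line length and variance), and a 60-second window; the actual window size is given in Methods. A logistic function with made-up weights stands in for the trained classifier $f$.

```python
import math
from typing import Callable, Iterator, List, Sequence, Tuple

def sliding_windows(signal: Sequence[float], fs: float, win_s: float,
                    step_s: float) -> Iterator[Tuple[float, Sequence[float]]]:
    """Yield (window end time in seconds, samples) for a moving window."""
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    for start in range(0, len(signal) - win + 1, step):
        yield (start + win) / fs, signal[start:start + win]

def line_length(w: Sequence[float]) -> float:
    # Mean absolute first difference, a simple time-domain iEEG feature.
    return sum(abs(b - a) for a, b in zip(w, w[1:])) / (len(w) - 1)

def variance(w: Sequence[float]) -> float:
    m = sum(w) / len(w)
    return sum((v - m) ** 2 for v in w) / len(w)

def make_logistic(weights: Sequence[float], bias: float) -> Callable[[Sequence[float]], float]:
    """Hypothetical stand-in for the trained baseline model f: x(t) -> p_B(t)."""
    def f(x: Sequence[float]) -> float:
        z = bias + sum(w * xi for w, xi in zip(weights, x))
        return 1.0 / (1.0 + math.exp(-z))
    return f

def baseline_risk(signal, fs, f, win_s=60.0, step_s=10.0) -> Tuple[List[float], List[float]]:
    """Compute p_B(t) = f(x(t)) for every window; one step = step_s seconds."""
    times, risks = [], []
    for t, w in sliding_windows(signal, fs, win_s, step_s):
        times.append(t)
        risks.append(f([line_length(w), variance(w)]))
    return times, risks

# Usage on a synthetic 2-minute signal sampled at 10 Hz (toy values only).
fs = 10.0
signal = [math.sin(0.1 * i) for i in range(1200)]
f = make_logistic(weights=[2.0, -1.0], bias=-0.5)
times, p_B = baseline_risk(signal, fs, f)
alarms = [p >= 0.5 for p in p_B]  # optional thresholding into preictal/interictal
```

The classifier only ever sees the current window's features. This is the "reactive" property that the predictive layers below are meant to address.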

Figure 1: Standard seizure prediction pipeline.

Existing seizure prediction/forecasting algorithms process raw iEEG data through a series of steps to classify brain states as either preictal (seizure-impending) or interictal (baseline). The pipeline often starts with preprocessing to remove artifacts. This is followed by extracting relevant features $\mathbf{x}(t)$, applying a classification model $f$ that uses these features to predict the preictal probability $p_B(t)$ of the given window, and an optional final thresholding step to obtain a binary decision. While the same scheme may be used for any electrophysiological data, in this work we use pre-surgical iEEG from five patients obtained from the iEEG.org database (see Methods). Throughout, we compute features over windows of fixed duration (in minutes; see Methods), shifted each time by 1 step = 10 seconds.

By nature, the above procedure is reactive rather than predictive. A positive (i.e., preictal) detection, even if made correctly, says something about a time window in the past (i.e., the window ending at time $t$) rather than about a potentially impending seizure in the future. We therefore augment the above approach with two predictive, autoregressive dynamical models placed before and after the classifier, respectively (Figure 2). The former takes the general form

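$$\hat{\mathbf{x}}(t+h) = \phi\big(\mathbf{x}(t), \mathbf{x}(t-1), \ldots, \mathbf{x}(t-L+1)\big) \tag{2}$$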

where $\phi$ is a generally nonlinear dynamical model. It takes as input the past $L$ lags of classification features, from $\mathbf{x}(t-L+1)$ to $\mathbf{x}(t)$, and predicts the iEEG features $h$ steps into the future. We tune the structure and hyper-parameters of $\phi$ (particularly $L$ and $h$) and train Eq. (2) as a regression model. Training minimizes the error between predicted and true features over a patient-specific training set, separately for each patient and each feature (see Methods). Once $\phi$ is learned, we feed the predictions of Eq. (2) at test time to the same classifier $f$, giving the predicted risk

$$p_{B+F}(t) = f\big(\hat{\mathbf{x}}(t+h)\big) \tag{3}$$

which is then used for pseudo-prospective seizure forecasting on separate, within-patient test data (see Methods). The subscript $B+F$ reflects the addition of feature dynamics in Eq. (2) to the baseline classifier $f$, and distinguishes $p_{B+F}(t)$ from the baseline risk $p_B(t)$ in Eq. (1).
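Eqs. (2) and (3) can be made concrete for the simplest case of a linear $\phi$. The sketch below is an illustrative assumption, not the paper's implementation. It fits one least-squares AR model per feature (an intercept plus $L$ lags, with the target $h$ steps ahead) using only the standard library. It then passes the vector of predicted features to the unchanged classifier $f$. The tiny ridge term is an added assumption for numerical stability.

```python
import math
from typing import Callable, List, Sequence

def _solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A @ beta = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    beta = [0.0] * n
    for r in range(n - 1, -1, -1):
        beta[r] = (M[r][n] - sum(M[r][c] * beta[c] for c in range(r + 1, n))) / M[r][r]
    return beta

def fit_linear_ar(x: Sequence[float], L: int, h: int, ridge: float = 1e-8) -> List[float]:
    """Least-squares fit of x(t+h) ~ b0 + sum_k b_{k+1} * x(t-k), k = 0..L-1."""
    rows = [[1.0] + [x[t - k] for k in range(L)] for t in range(L - 1, len(x) - h)]
    y = [x[t + h] for t in range(L - 1, len(x) - h)]
    p = L + 1
    AtA = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    Aty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return _solve(AtA, Aty)

def predict_ahead(beta: Sequence[float], recent: Sequence[float]) -> float:
    """recent = [x(t), x(t-1), ..., x(t-L+1)]  ->  x_hat(t+h)."""
    return beta[0] + sum(b * v for b, v in zip(beta[1:], recent))

def risk_with_feature_dynamics(f: Callable[[Sequence[float]], float],
                               histories: Sequence[Sequence[float]],
                               models: Sequence[Sequence[float]]) -> float:
    """Eq. (3): p_{B+F}(t) = f(x_hat(t+h)), with one AR model per feature.

    histories[i] holds feature i's most recent values, newest first."""
    x_hat = [predict_ahead(beta, hist[:len(beta) - 1]) for beta, hist in zip(models, histories)]
    return f(x_hat)

# Usage: a noise-free oscillation is exactly predictable by a 2-lag linear AR model.
x = [math.sin(0.3 * t) for t in range(100)]
beta = fit_linear_ar(x, L=2, h=3)
x_hat = predict_ahead(beta, [x[99], x[98]])  # forecast of x(102)
```

Only the input to $f$ changes; the classifier itself is reused as is. This mirrors the "no retraining" property of the framework.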

Figure 2: Proposed multi-level predictive modeling for improved seizure forecasting.

Unlike the bulk of prior research, we start with an off-the-shelf seizure forecasting model $f$ (cf. Figure 1) and augment it with one or both of two autoregressive predictive models. The first, $\phi$, operates on iEEG features (inputs to $f$); the second, $\psi$, operates on seizure risk (outputs from $f$). All models and modeling choices, including the structure, parameters, and hyper-parameters of $\phi$ and $\psi$, are tuned separately for each patient (see Methods). This results in 4 estimates of seizure risk $p_D(t)$, where $D = B$ (baseline), $B+F$ (baseline + feature dynamics), $B+R$ (baseline + risk dynamics), or $B+F+R$ (baseline + both dynamics). Each $p_D(t)$ is used for pseudo-prospective seizure forecasting on a held-out continuous test duration of each patient’s own iEEG.

A second autoregressive dynamical model $\psi$ is in turn built to capture temporal dynamics at the output of the classifier. This model generalizes the commonsense approach of looking at a window of seizure risks to make an interictal/preictal decision. Similar to Eq. (2), it takes the general form (Figure 2)

$$p_{B+R}(t) = \psi\big(p_B(t), p_B(t-1), \ldots, p_B(t-M+1)\big) \tag{4}$$

The function $\psi$ and the lag hyper-parameter $M$ are trained separately for each patient (see Methods). Each term $p_B(t-k)$ depends on the underlying features $\mathbf{x}(t-k)$. This approach can therefore also be considered a way to indirectly increase the iEEG context available for seizure forecasting, and to refine the seizure risk at time $t$ based on its historical trends. Finally, we allow for the combination of feature and risk dynamics, giving rise to the combined estimate

$$p_{B+F+R}(t) = \psi\big(p_{B+F}(t), p_{B+F}(t-1), \ldots, p_{B+F}(t-M+1)\big) \tag{5}$$
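Below is a minimal sketch of Eq. (4) and of the combined estimate. It assumes $\psi$ is a linear filter over the last $M$ risk values, with output clipped to $[0, 1]$. With equal weights, it reduces to the commonsense moving average of recent risks. In the paper, $\psi$ and $M$ are learned per patient, whereas here the weights are simply given. Applying the same function to a $p_{B+F}$ series instead of $p_B$ yields the combined feature-and-risk estimate.

```python
from typing import List, Optional, Sequence

def risk_filter(risks: Sequence[float], weights: Sequence[float],
                bias: float = 0.0) -> List[Optional[float]]:
    """psi as a linear AR filter: clip(bias + sum_k w_k * p(t-k)) for k = 0..M-1.

    Returns None for the first M-1 steps, where the history is too short."""
    M = len(weights)
    out: List[Optional[float]] = []
    for t in range(len(risks)):
        if t < M - 1:
            out.append(None)
            continue
        z = bias + sum(w * risks[t - k] for k, w in enumerate(weights))
        out.append(min(1.0, max(0.0, z)))
    return out

def moving_average(M: int) -> List[float]:
    """Equal weights: the 'look at a window of recent risks' special case."""
    return [1.0 / M] * M

# Usage: an isolated one-step spike in p_B(t) is attenuated below a 0.5 threshold.
p_B = [0.0, 0.0, 1.0, 0.0, 0.0]
p_BR = risk_filter(p_B, moving_average(3))
```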

In general, the choice of dynamical model (feature, risk, or combined) that leads to best pseudo-prospective accuracy varies from patient to patient and needs to be optimized per patient.

iEEG features follow predictable dynamics up to 30 minutes into the future.

We first investigated the temporal predictability of various iEEG-based features that are commonly used for seizure forecasting. This is important because the core of the predictive scheme described above (Figure 2) is the autoregressive model $\phi$ in Eq. (2), which predicts the evolution of iEEG features into the future.† The viability of our scheme is therefore contingent on the presence of predictable dynamics in the iEEG features $\mathbf{x}(t)$.

Using an autoregressive (AR) form for feature dynamics as in Eq. (2), we examined how accurately each iEEG feature can be predicted into the future, focusing on whether features remain predictable over extended periods. In general, the AR function $\phi$ can (and often should, cf. Figure 5) be nonlinear. For this analysis, however, we restricted $\phi$ to be linear for simplicity and computational feasibility. We then parametrically varied the horizon length $h$ for every patient and every feature, and measured the cross-validated (out-of-sample) regression accuracy $R^2$ as a function of $h$.
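The horizon sweep described above can be sketched as follows. To keep it self-contained, the sketch makes several simplifying assumptions: a single-lag linear AR model (so the least-squares fit has a closed form), a synthetic AR(1) series in place of a real iEEG feature, and one chronological train/test split instead of the paper's cross-validation. Out-of-sample $R^2$ is computed against the test-set mean, so a value below zero means the forecast is worse than predicting that constant.

```python
import random
from typing import Dict, List, Sequence, Tuple

def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination; negative when worse than predicting the mean."""
    mean = sum(y_true) / len(y_true)
    sse = sum((a - b) ** 2 for a, b in zip(y_true, y_pred))
    sst = sum((a - mean) ** 2 for a in y_true)
    return 1.0 - sse / sst

def fit_one_lag(x: Sequence[float], h: int) -> Tuple[float, float]:
    """Closed-form least squares for x(t+h) ~ a + b * x(t)."""
    u, v = x[:-h], x[h:]
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    b = sum((p - mu) * (q - mv) for p, q in zip(u, v)) / sum((p - mu) ** 2 for p in u)
    return mv - b * mu, b

def horizon_sweep(x: Sequence[float], horizons: Sequence[int],
                  train_frac: float = 0.8) -> Dict[int, float]:
    """Fit on the first part of the series; report out-of-sample R^2 per horizon h."""
    split = int(len(x) * train_frac)
    train, test = x[:split], x[split:]
    scores = {}
    for h in horizons:
        a, b = fit_one_lag(train, h)
        y_pred = [a + b * v for v in test[:-h]]
        scores[h] = r2_score(test[h:], y_pred)
    return scores

# Usage: a synthetic AR(1) "feature"; with one step = 10 s, h = 30 is 5 minutes.
rng = random.Random(0)
x: List[float] = [0.0]
for _ in range(19_999):
    x.append(0.9 * x[-1] + rng.gauss(0.0, 1.0))
scores = horizon_sweep(x, horizons=[1, 5, 30])
```

For this toy process, $R^2$ decays roughly like $0.9^{2h}$ and reaches about zero by $h = 30$. Real iEEG features in Figure 3 decay far more slowly.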

Figure 5: Pseudo-prospective seizure forecasting accuracy improves after incorporating predictive dynamical modeling of iEEG features.

(a) Area under the pseudo-prospective sensitivity-time in warning curve (PP-AUC; see Figure 8 and Methods for details) for the baseline model (left, gray) and the best dynamics-augmented alternative (right, colored) across five patients and six baseline models. The color of the right bar in each panel indicates the best-performing model and matches the color code in (b). Each bar shows mean ± 2 s.e.m. over randomized train-test splits. (b) Summary of the results in panel (a) across patients and baseline models. The use of dynamics results in higher PP-AUC (top), higher sensitivity at 30% time in warning (TiW) (middle), and lower TiW at 60% sensitivity (bottom). (c) Distribution of AR models that achieved the highest PP-AUC across all patients and baseline models. Note the major differences from the similar distribution in Figure 3. (d) Distribution (histogram) of the relative improvement in PP-AUC across all patient-baseline combinations in (a). Relative improvement is measured as $(\text{PP-AUC}_{B+D} - \text{PP-AUC}_{B})/\text{PP-AUC}_{B}$, where $B+D$ denotes the best dynamics-augmented model.

Figure 3a shows the distribution of regression $R^2$ values as a function of the prediction horizon $h$. These values are combined across a wide range of seizure-relevant iEEG features, separately for each patient (see Methods). As expected, predictive accuracy generally decreases with the prediction horizon $h$. Interestingly, however, most features maintain above-chance predictability even over long horizons, up to 2–10 minutes depending on the patient. Beyond the 10-minute mark, more than half of the features often exhibit a negative $R^2$ (lower than chance predictability). Yet, in each patient, some features remain predictable even up to 30 minutes. These results support the hypothesis that iEEG features hold intrinsic temporal structure that persists over long horizons, providing a basis for long-term seizure forecasting.
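The two summaries used above can be computed from a table of out-of-sample $R^2$ values. The first is the fraction of features with above-chance predictability ($R^2 > 0$) at each horizon. The second is the longest horizon up to which a feature stays above chance. The sketch below is illustrative: the feature names and $R^2$ values are invented toy numbers, and horizons are counted in 10-second steps, so $h = 60$ corresponds to 10 minutes and $h = 180$ to 30 minutes.

```python
from typing import Dict, Optional

STEP_SECONDS = 10  # one step = 10 s, matching the windowing described in the text

def steps_to_minutes(h: int) -> float:
    return h * STEP_SECONDS / 60

def fraction_positive(r2: Dict[str, Dict[int, float]]) -> Dict[int, float]:
    """Fraction of features with R^2 > 0 (above chance) at each horizon.

    Assumes every feature was evaluated at the same set of horizons."""
    horizons = sorted({h for curve in r2.values() for h in curve})
    return {h: sum(curve[h] > 0 for curve in r2.values()) / len(r2) for h in horizons}

def longest_positive_horizon(curve: Dict[int, float]) -> Optional[int]:
    """Largest h such that R^2 > 0 at every tested horizon up to and including h."""
    best = None
    for h in sorted(curve):
        if curve[h] <= 0:
            break
        best = h
    return best

# Toy R^2 table: feature -> {horizon in steps: out-of-sample R^2} (invented values).
r2 = {
    "f1": {1: 0.90, 60: 0.30, 180: 0.05},
    "f2": {1: 0.80, 60: -0.10, 180: -0.40},
}
```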

Figure 3: Long-horizon predictability of seizure-relevant iEEG features using autoregressive (AR) dynamical models.

(a) Each panel corresponds to one patient, indicated at the top. It shows the distribution of the cross-validated $R^2$ of a linear $h$-step-ahead AR model, as in Eq. (2), as a function of the prediction horizon $h$. The vertical range in all panels is limited to [−1, 1] for better visualization. Each violin plot combines all features, which are themselves a union of various sets of features used by different seizure classification models in the literature (see Table 2 for a categorical breakdown and Methods for details). Green (resp., gray) indicates positive (negative) $R^2$, i.e., better (worse) than chance performance. Most features remain predictable above chance up to 2–10 minutes depending on the patient, and across all patients some features remain predictable above chance up to 30 minutes. (b) Predictability of iEEG features by category. The data are the same as in panel (a) but are combined across patients and separated by feature category. Average numbers of features across patients are: power features ≃ 2800, power correlation features ≃ 8000, time correlation features ≃ 8000, statistical features ≃ 1600, eigenvalue features ≃ 250. For a complete list of features in each category, see Methods. The bottom right sub-panel overlays the medians of all distributions, color-coded by feature category. Power features clearly have the longest predictability compared to other categories. (c) Distribution of AR models that explain the most variance (have maximum $R^2$) across all features and patients. For each patient-feature, we fit five different AR models (1 linear, 4 nonlinear) and selected the one with the highest cross-validated $R^2$. MLP: multi-layer perceptron, KNN: k-nearest neighbor. See Methods for details on each model.

Importantly, the long-horizon predictability of iEEG features varies significantly across feature categories (time-domain, frequency-domain, etc.). As shown in Figure 3b, power features remain predictable over the longest horizons. By contrast, the predictability of eigenvalue features (eigenvalues of channel cross-correlation matrices) drops to chance level most quickly (see Methods for details). Yet frequency-domain features are not necessarily more predictable than time-domain features; power correlation features, for example, decay to chance level more quickly than time correlation features. Though beyond the scope of this work, these differences in predictability could be valuable for improved feature engineering and classifier design for seizure forecasting.
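A per-category summary in the spirit of Figure 3b can be sketched in three steps. Features are grouped by category, the median $R^2$ is taken at each horizon, and the first horizon at which that median is no longer above zero is recorded. The category labels and values below are invented toy inputs. Horizons are in 10-second steps, and using the median only loosely mirrors the overlaid medians in the figure.

```python
from statistics import median
from typing import Dict, List, Optional

def category_medians(r2: Dict[str, Dict[int, float]],
                     category_of: Dict[str, str]) -> Dict[str, Dict[int, float]]:
    """Median R^2 across the features of each category, per horizon."""
    groups: Dict[str, List[Dict[int, float]]] = {}
    for name, curve in r2.items():
        groups.setdefault(category_of[name], []).append(curve)
    return {cat: {h: median([c[h] for c in curves]) for h in sorted(curves[0])}
            for cat, curves in groups.items()}

def chance_crossing(median_curve: Dict[int, float]) -> Optional[int]:
    """First horizon whose median R^2 is <= 0, or None if it stays above chance."""
    for h in sorted(median_curve):
        if median_curve[h] <= 0:
            return h
    return None

# Toy inputs: horizons in 10-second steps (60 steps = 10 minutes).
r2 = {
    "p1": {1: 0.9, 60: 0.4, 180: 0.10},
    "p2": {1: 0.8, 60: 0.3, 180: 0.05},
    "e1": {1: 0.5, 60: -0.1, 180: -0.5},
    "e2": {1: 0.4, 60: -0.2, 180: -0.6},
}
category_of = {"p1": "power", "p2": "power", "e1": "eigenvalue", "e2": "eigenvalue"}
med = category_medians(r2, category_of)
```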

Using potentially nonlinear AR models can further improve the long-horizon predictability of iEEG features, but only marginally. For each patient and iEEG feature, we compared five different functional forms for $\phi$ in Eq. (2): one linear and four nonlinear. We then identified the most accurate model for each patient-feature pair and computed the win percentage of each model across all patient-features. As shown in Figure 3c, the linear model achieves the highest $R^2$ in about three quarters of cases. It is followed by the multi-layer perceptron (MLP) artificial neural network, which covers approximately the remaining quarter of patient-features. Notably, this result shows that the dynamic linearity we found earlier at the level of raw iEEG potentials66 approximately holds also for a wide range of iEEG features. Although weak, the nonlinearity of iEEG features is not negligible, and it will in fact prove critical for dynamics-augmented seizure forecasting (cf. Figure 5).
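The win-percentage tally behind Figure 3c reduces to an argmax per patient-feature pair followed by a count. In this sketch, the patient and feature identifiers and all $R^2$ values are hypothetical placeholders. Only three model names are used, because only the linear model, MLP, and KNN are named in the text. Ties are broken by dictionary order.

```python
from collections import Counter
from typing import Dict, Tuple

def win_percentages(r2_by_pair: Dict[Tuple[str, str], Dict[str, float]]) -> Dict[str, float]:
    """r2_by_pair[(patient, feature)][model] = cross-validated R^2.

    Returns the percentage of patient-feature pairs on which each model is best."""
    wins = Counter(max(scores, key=scores.get) for scores in r2_by_pair.values())
    return {model: 100.0 * n / len(r2_by_pair) for model, n in wins.items()}

toy = {  # hypothetical values
    ("P1", "feat_a"): {"linear": 0.61, "MLP": 0.58, "KNN": 0.40},
    ("P1", "feat_b"): {"linear": 0.20, "MLP": 0.27, "KNN": 0.10},
    ("P2", "feat_a"): {"linear": 0.75, "MLP": 0.70, "KNN": 0.66},
    ("P2", "feat_b"): {"linear": 0.05, "MLP": 0.01, "KNN": -0.20},
}
result = win_percentages(toy)  # {'linear': 75.0, 'MLP': 25.0}
```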

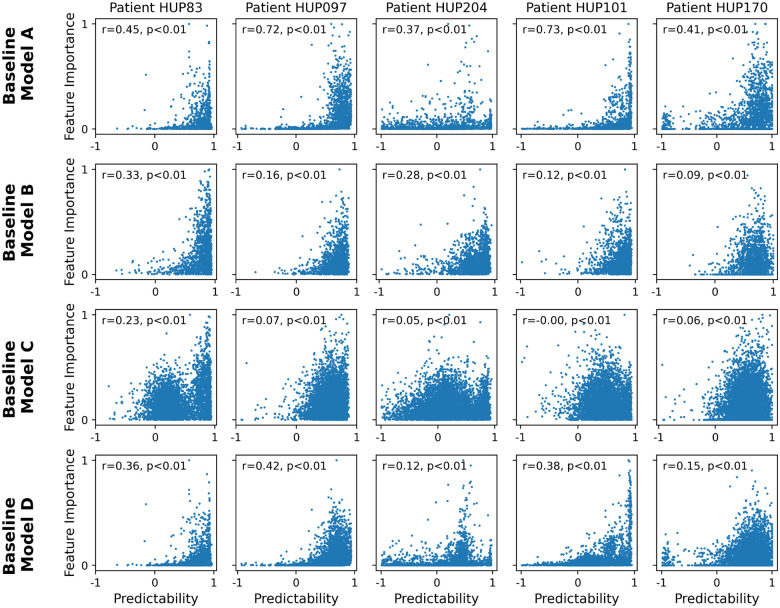

iEEG features with higher predictability tend to also have higher importance in seizure classification.

We next examined the importance of each iEEG feature for seizure classification across several state-of-the-art seizure forecasting models, and compared the importance of each feature with its long-horizon predictability (Figure 4). The exact definition of feature importance varies between classification models (see Methods). In general, it quantifies the sensitivity of the classifier’s output to variations in each input (feature), and measures how relevant each feature is for seizure classification based on the training data. Note that the importance and predictability of features are a priori independent quantities. They come from two orthogonal approaches to the iEEG time series: cross-sectional, seizure-focused classification versus temporal, seizure-independent regression. Therefore, the relationship between feature importance and feature predictability is critical for the success of integrating feature dynamics (Eq. (2)) into seizure forecasting. In particular, it is the conjunction of feature importance and predictability that can make dynamics augmentation beneficial, whereas either alone is unlikely to be sufficient. A predictable but unimportant feature barely affects the classifier’s output, while an important but unpredictable feature feeds it noisy predictions.

Figure 4: Presence of significant positive correlation between feature predictability and feature importance across various state-of-the-art seizure classification models.

Each panel shows the relationship between feature predictability and feature importance for one patient and one classification model. Each dot represents one iEEG feature, and predictability is computed as the cross-validated $R^2$ of a linear AR model with a prediction horizon of 2 minutes. The horizontal range of each panel is limited to [−1, 1] for better visualization. Feature importance is computed based on the respective baseline model; see Methods for details. The Spearman correlation coefficient and associated p-value are shown in each panel.

As seen in Figure 4, in nearly all baseline models and all patients, there is a strong positive correlation between feature importance and feature predictability. As with most aspects of seizure forecasting, there is strong heterogeneity among patients as well as baseline models. In general, the positive correlation between feature importance and predictability is strongest for baseline model B and weakest for baseline model C. Furthermore, except for model C, this relationship is generally monotonic and approximately exponential, with a rapid and strong increase in feature importance as predictability approaches 1. These results thus strongly support the potential benefit of dynamics augmentation for seizure forecasting (Figure 2), which we examine directly next.
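The Spearman coefficient reported in Figure 4 is the Pearson correlation of rank-transformed values. This is why it captures a monotonic (for instance, approximately exponential) relationship without assuming linearity. A dependency-free sketch, using average ranks for ties, follows. It omits the p-value, which in practice can be obtained from a library routine such as `scipy.stats.spearmanr`. The predictability and importance values in the usage example are invented.

```python
import math
from typing import List, Sequence

def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks

def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def spearman(a: Sequence[float], b: Sequence[float]) -> float:
    return pearson(average_ranks(a), average_ranks(b))

# Toy example: importance grows exponentially with predictability (R^2).
predictability = [-0.3, 0.1, 0.4, 0.7, 0.95]
importance = [math.exp(5 * r) for r in predictability]
rho = spearman(predictability, importance)  # 1.0: perfectly monotonic
```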

Incorporating feature dynamics improves accuracy of pseudo-prospective seizure forecasting.

Building on the established predictability of seizure-relevant iEEG features over extended time intervals, we used the estimated seizure risk $p_{B+F}(t)$ in Eq. (3) for continuous pseudo-prospective seizure forecasting. We used iEEG data from five patients from the Hospital of the University of Pennsylvania (HUP), available on the iEEG.org portal. To demonstrate that our approach is robust and can adapt to various baseline models, we implemented six different state-of-the-art seizure forecasting models as the baseline model (Figure 1 and Eq. (1)) for each patient (see Methods). These models reflect a wide range of effective strategies in seizure forecasting. They include models recognized for their strong performance in seizure research (Baseline Models A and B), models that have excelled in Kaggle-hosted crowd-sourced competitions (Models C and D), and approaches using state-of-the-art machine learning (Models E and F). In each case, we measure seizure forecasting accuracy by the area under the pseudo-prospective sensitivity-time in warning curve (PP-AUC, Figure 8; see Methods for details).

Figure 8: Illustrative example of performance metric used for comparison between methods, namely, the pseudo-prospective area under the curve (PP-AUC).

This metric closely resembles the standard area under the receiver operating characteristic curve, the main difference being that the false positive rate is replaced by the time in warning.
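Below is a simplified sketch of how such a curve and its area might be computed from a risk series $p(t)$. The exact definitions are given in Methods, so the following rules are assumptions, also labeled in the code comments. A warning is active whenever $p(t)$ is at or above a threshold, and time in warning is the fraction of all steps spent in warning. A seizure counts as forecast if at least one warning step falls inside its preictal window. Sweeping the threshold traces the sensitivity-versus-TiW curve, whose area is taken with the trapezoidal rule. Times are in 10-second steps, so a 65-to-5-minute window would be `pre_start=390`, `pre_end=30`.

```python
from typing import List, Sequence, Tuple

def sens_tiw(risks: Sequence[float], onsets: Sequence[int], threshold: float,
             pre_start: int, pre_end: int) -> Tuple[float, float]:
    """(time in warning, sensitivity) at one threshold; all times in steps.

    Assumption: seizure s is forecast if any warning falls in [s - pre_start, s - pre_end)."""
    warning = [p >= threshold for p in risks]
    tiw = sum(warning) / len(warning)
    hits = sum(any(warning[max(0, s - pre_start):max(0, s - pre_end)]) for s in onsets)
    return tiw, hits / len(onsets)

def pp_auc(risks: Sequence[float], onsets: Sequence[int], pre_start: int, pre_end: int) -> float:
    """Area under the sensitivity vs time-in-warning curve (trapezoidal rule)."""
    thresholds = sorted(set(risks)) + [float("inf")]  # inf -> no warnings at all
    pts: List[Tuple[float, float]] = sorted(
        sens_tiw(risks, onsets, th, pre_start, pre_end) for th in thresholds)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))

# Usage: 100 steps, one seizure at step 60, preictal window 20 to 5 steps before onset.
perfect = [1.0 if 40 <= t < 55 else 0.0 for t in range(100)]
flat = [0.5] * 100
```

Under these assumptions, even a perfect forecaster scores below 1 (0.925 here), because its warnings still occupy the preictal window. An uninformative constant risk scores 0.5.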

The results, summarized in Figure 5, demonstrate a strong improvement in seizure forecasting accuracy across nearly all patients and baseline models. For each patient-baseline combination, we evaluated the same five candidates for feature dynamical modeling ($\phi$ in Eq. (2)) as in Figure 3c and selected the one with the highest PP-AUC. Figure 5a breaks down the average PP-AUC of the baseline and of the best-performing dynamics-augmented alternative across all patients and baseline models. The success rate of each dynamical model and the distribution of relative improvement in PP-AUC are summarized in panels c and d, respectively. In one case, the baseline model alone is slightly better than all dynamical alternatives. In all other patient-baseline combinations, augmenting the baseline model with AR feature dynamics results in a higher PP-AUC. Across all patient-baseline combinations, we obtain a mean increase in PP-AUC of 28%, signifying the substantial but untapped potential of simple AR modeling for improved seizure forecasting.
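For concreteness, the relative improvement in Figure 5d is $(\text{PP-AUC}_{B+D} - \text{PP-AUC}_B)/\text{PP-AUC}_B$, averaged over patient-baseline combinations. The numbers below are invented for illustration only and are not results from this study.

```python
from statistics import mean
from typing import Sequence, Tuple

def relative_improvement(auc_augmented: float, auc_baseline: float) -> float:
    """(PP-AUC_{B+D} - PP-AUC_B) / PP-AUC_B."""
    return (auc_augmented - auc_baseline) / auc_baseline

def mean_relative_improvement(pairs: Sequence[Tuple[float, float]]) -> float:
    """pairs = [(baseline PP-AUC, best augmented PP-AUC), ...], one per patient-baseline."""
    return mean(relative_improvement(aug, base) for base, aug in pairs)

toy_pairs = [(0.30, 0.40), (0.50, 0.55), (0.40, 0.38)]  # hypothetical values
```

Because the measure is relative, the same absolute gain counts more for a weak baseline than for a strong one.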

Besides improving the accuracy of seizure forecasting, predictive dynamical models can provide insights into the nature of seizure dynamics. In particular, the optimal lengths of “past” and “future” that maximize seizure forecasting accuracy are important markers of the timescale at which seizures arise in each patient (Figure 6). These values are based on optimizing seizure forecasting accuracy (PP-AUC). They therefore differ from the values shown in Figure 3, which reflect pure feature autoregressive accuracy. Figure 6 shows three properties of both the optimal number of autoregressive lags $L$ (past) and the optimal prediction horizon $h$ (future): they are stable within each patient, they vary across patients, and they are on the order of ~10 minutes for all patients. These values are consistent with the earliest reports of the preictal period4. They also provide detailed measures of temporal structure in iEEG that is robust and reproducible enough within each patient to be used for automated seizure forecasting.
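Selecting the lag count $L$ and horizon $h$ by maximizing PP-AUC amounts to a grid search. In this sketch, `score_fn` is a hypothetical callable standing in for "fit $\phi$ with these settings on training data and return the validation PP-AUC". The toy score below simply peaks at $L = 60$ and $h = 72$ steps (10 and 12 minutes at 10 s per step) to show the mechanics; it is not derived from any data.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

STEP_SECONDS = 10

def grid_search(score_fn: Callable[[int, int], float], lags: Sequence[int],
                horizons: Sequence[int]) -> Tuple[int, int]:
    """Return the (L, h) pair with the highest score; ties go to the first in grid order."""
    return max(product(lags, horizons), key=lambda lh: score_fn(*lh))

def toy_score(L: int, h: int) -> float:
    # Hypothetical smooth objective, not real PP-AUC values.
    return -((L - 60) ** 2) - (h - 72) ** 2

best_L, best_h = grid_search(toy_score, lags=[6, 30, 60, 120], horizons=[6, 36, 72, 120])
best_minutes = (best_L * STEP_SECONDS / 60, best_h * STEP_SECONDS / 60)  # (10.0, 12.0)
```

In a per-patient setting, the search would be repeated for each patient. This is one way the patient-specific optimal values summarized in Figure 6 could arise.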

Figure 6: Optimal temporal timescale of iEEG feature dynamics for seizure forecasting.

The left and right panels show the optimal number of autoregressive lags $L$ (history length) and the optimal prediction horizon $h$ (step size), respectively. Both values are obtained using the linear model by maximizing the PP-AUC score. They are reported separately per patient as the median (across all features for that patient) ± 1 s.e.m. Note that both the optimal number of lags and the optimal prediction horizon vary considerably across patients. This reflects the vast heterogeneity among epilepsy patients and the need for patient-specific modeling.

Modeling risk dynamics further enhances seizure predictability.

Next we examined the effects on pseudo-prospective seizure forecasting of adding a second layer of predictive dynamics at the level of seizure risk, Eq. (4). As above, for each patient-baseline combination we used a training portion of iEEG data to fit the baseline model $f$, five feature-predictive models $\phi$, and one risk-predictive model $\psi$. This yields 12 possible combinations: the baseline alone or the baseline with any of the 5 forms of $\phi$, each with or without $\psi$. We then evaluated the PP-AUC of each of these 12 possibilities on patient-specific test data, repeated over all train-test splits. Finally, we selected the model with the highest mean PP-AUC for each patient-baseline combination.
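The 12-way comparison can be organized as a small enumeration. Six choices for feature dynamics (none, or one of five forms of $\phi$) are crossed with risk dynamics on or off. The configuration with the highest mean PP-AUC over train-test splits is then selected. Only the linear, MLP, and KNN forms are named in the text; the other two labels and all scores below are hypothetical placeholders.

```python
from itertools import product
from statistics import mean
from typing import Dict, List, Optional, Sequence, Tuple

# None = no feature dynamics (baseline f alone); "nonlinear_4/5" are placeholder names.
FEATURE_FORMS: List[Optional[str]] = [None, "linear", "MLP", "KNN", "nonlinear_4", "nonlinear_5"]
# (form of phi, whether psi is applied): (None, False) -> p_B, (None, True) -> p_{B+R},
# (form, False) -> p_{B+F}, (form, True) -> p_{B+F+R}.
Config = Tuple[Optional[str], bool]

def candidate_configs() -> List[Config]:
    return list(product(FEATURE_FORMS, (False, True)))

def select_best(pp_auc_by_split: Dict[Config, Sequence[float]]) -> Config:
    """Configuration with the highest mean test PP-AUC across train-test splits."""
    return max(pp_auc_by_split, key=lambda cfg: mean(pp_auc_by_split[cfg]))

configs = candidate_configs()
toy_scores = {cfg: [0.30, 0.32] for cfg in configs}  # hypothetical PP-AUCs
toy_scores[("MLP", True)] = [0.45, 0.41]
```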

The results, shown in Figure 7, demonstrate the additional benefit of including risk dynamics, over both the baseline and the combination of baseline and feature dynamics. As in Figure 5, inclusion of dynamic predictive models improves PP-AUC in all except one patient-baseline combination (Figure 7a). Moreover, the addition of risk dynamics further improved PP-AUC in half of the cases (Figure 7c), bringing the total mean improvement in PP-AUC over baseline to 51% (Figure 7d).

Figure 7: Further improvements in seizure forecasting accuracy with incorporation of feature and risk dynamics.

(a) Side-by-side breakdown of improvements in PP-AUC resulting from incorporation of both feature (Eq. (2)) and risk (Eq. (4)) dynamics. Details are similar to Figure 5a. In each sub-panel, the color of the bar on the right still shows the form of feature dynamics that resulted in highest PP-AUC, and the dotted hatches show the presence of risk dynamics in the best-performing model. (b) Summary comparisons of PP-AUC, sensitivity at 30% TiW, and TiW at 60% sensitivity between baseline and dynamics-augmented forecasters across all patients and baseline models. Details parallel those in Figure 5b. (c) Distribution of models that achieved the highest PP-AUC across all patients and baseline models. Details are similar to Figure 5c with the addition of dotted hatches showing the presence of risk dynamics in the best-performing model as in (a). (d) Distribution of relative improvement in PP-AUC across all patient-baseline combinations similar to Figure 5d. (e) Distributions of raw PP-AUCs for all four possible combinations of baseline models with feature and/or risk dynamics. Each distribution combines across all 5 patients and 6 baseline models.

Figure 7e shows the overall distribution of PP-AUC across all patient-baseline combinations using $p_B$, $p_{B+F}$, $p_{B+R}$, and $p_{B+F+R}$. Risk dynamics, whether added with or without feature dynamics, play a mainly regulatory role. They reduce outliers and increase the robustness and reliability of seizure forecasting by dynamically filtering the seizure risk. This effect is also visible in the detailed breakdown of seizure forecasting with and without risk dynamics alone (Supplementary Figure 1). Without feature prediction, however, risk dynamics yield only a marginal gain. Feature prediction is the main driver of improved PP-AUC, and the combination of the two dynamic layers yields the best seizure forecasting overall.

3. Discussion

Over the past two decades, seizure prediction and forecasting research has largely reduced these problems to statistical classification and/or regression. It has relied primarily on advancements in machine learning and data acquisition to improve outcomes. In this study we provided pioneering evidence for the utility of an orthogonal dimension of improvement: explicitly modeling the temporal dynamics of classification features and seizure risk. We used publicly available pre-surgical iEEG data from five patients at the Hospital of the University of Pennsylvania (HUP) and six state-of-the-art seizure forecasting models as baselines. With these, we showed that adding simple autoregressive (AR) predictive models at the level of iEEG features can increase pseudo-prospective forecasting accuracy by an average of 28%. This improvement increased to 51% when we added a second AR model at the level of seizure risk, even though the latter alone had marginal benefit. Additional analyses revealed that these benefits primarily stem from the convergence of two factors. The first is the long-term predictability of many iEEG features up to tens of minutes. The second is the strong positive correlation between the predictability of iEEG features and their importance for seizure classification. These results reveal a new dimension of temporal dynamics in the context of ictogenesis. They also open the door to various future studies into the benefits of predictive modeling in specific applications of seizure prediction and forecasting.

In general, the benefits of predictive modeling in a context such as seizure forecasting depend on a trade-off between accuracy and latency. On the one hand, predictive modeling (Eq. (2)) uses temporal statistical correlations to predict iEEG features several minutes before they occur, which creates an advantage in terms of latency. On the other hand, any predictive model inevitably introduces errors, due to the stochastic nature of the underlying time series, suboptimal modeling, and similar factors. The resulting trade-off is between using “future” features that are only approximate and using present features that are exact (observed rather than predicted). It becomes stronger as the prediction horizon increases. As such, it is neither trivial nor guaranteed that adding predictive modeling improves seizure forecasting. Which effect dominates is in general patient-, baseline classifier-, and horizon-dependent. In this context, our findings are significant because they show that the gain in latency outweighs the loss in accuracy for almost all patient-baseline combinations, at least at some prediction horizons.

Our study further addresses an important gap in seizure forecasting research. It moves beyond curated and pre-segmented preictal-interictal data and benchmarks algorithms based on pseudo-prospective analysis (PPA) on continuous data. As Figure 7e shows, the median pseudo-prospective AUC across all the models and patients we tested is about 0.35 at baseline, and about 0.5 even after the addition of predictive modeling. This is alarmingly low compared with AUCs commonly reported on curated data (see, e.g., Ref.67). The latter values are likely overly optimistic and may not accurately reflect how each algorithm would perform in a clinical setting. One major reason for this gap is the additional difficulty of avoiding false positives when an algorithm monitors continuous iEEG. Such recordings have long interictal durations, which can contain numerous “seizure-looking” motifs. This is a serious challenge that needs more focused attention in future research. A seizure forecasting algorithm may be used to deliver advisory warnings to the patient or closed-loop neurostimulation to the brain. In either setting, the additional challenges of PPA are crucial and unavoidable.

In the context of PPA, the differences between acutely and chronically recorded iEEG are also notable. In this study we used pre-surgical iEEG data recorded acutely at the HUP epilepsy monitoring unit (EMU). By nature, however, EMU conditions are highly non-stationary, and recorded seizures are likely more heterogeneous in their mechanisms and dynamics than each patient’s typical seizures outside the hospital. The number of recorded seizures is also very limited in acute data: only a few for most patients, and rarely more than a dozen for any patient. Both factors (abnormally high heterogeneity and very few recorded seizures) are major bottlenecks in using acute data, particularly for data-driven algorithms like those investigated here. We therefore expect seizure forecasting algorithms to reach (potentially significantly) higher PP-AUCs on chronically recorded data. On the other hand, chronically recorded iEEG has significantly lower spatiotemporal coverage than acute iEEG, and future work is needed to rigorously test and benchmark the algorithms studied here on chronically recorded data. Another promising avenue for future research is testing the benefits of predictive modeling for seizure forecasting using scalp EEG and even other physiological time series (heart rate, seizure occurrence, etc.).

A long-standing question in epilepsy research is what exactly constitutes the “preictal” period [68]. In this work we initially used the common one-hour preseizure window (precisely, 65 to 5 min before seizure onset) to label data for training each classifier. However, our predictive modeling results yielded optimal prediction horizons (i.e., the horizon that resulted in maximum PP-AUC) that varied widely across patients, ranging from approximately 7 to 20 minutes (Figure 6). Interestingly, these values are consistent with the earliest reports of the preictal period [4], and they are promising from a clinical standpoint, as even a five-minute warning could give patients enough time to reach a safe place or administer medication to reduce the risk of seizure occurrence. At the same time, these results highlight the importance of accurate patient-specific characterization of the preictal period, and suggest the optimal prediction horizon as a potential data-driven biomarker for this purpose.

An interesting finding of our study was a major disagreement between the choice of iEEG feature-predictive model that directly maximized regression accuracy (Figure 3c) and the choice that maximized downstream pseudo-prospective forecasting accuracy (Figure 5c). As far as the former is concerned, a linear AR model best described iEEG feature dynamics in most cases. However, complex, nonlinear models (particularly those based on Koopman operator theory) led to greater accuracy when used for seizure forecasting. This is interesting from at least two perspectives. The first is the importance of end-to-end (rather than piece-by-piece) optimization when multiple analyses/models are combined within a larger pipeline. The second is the additional complexity of seizure forecasting compared to pure iEEG feature prediction, which may explain why a linear model can be optimal for pure feature prediction while a more expressive nonlinear model gives predictions that are more useful for seizure forecasting. Future work is needed to examine more deeply, and mechanistically explain, the dynamics of iEEG features and seizure risk.

This study has a number of limitations. The exploratory nature of our work required us to implement and test a combinatorially large number of structural and parametric choices for each patient, each of which required running computationally expensive algorithms on continuous iEEG from tens to hundreds of channels over days to weeks of recording. Therefore, in this work we only used data from 5 patients who had a (relatively) large number of lead seizures (Table 1). In this selection we did not further restrict patients by epilepsy type, in order to maximize the number of lead seizures per patient, a choice that also showcases the promise of our approach across diverse epilepsies. Future work will aim to extend our findings to larger cohorts through more focused, hypothesis-driven investigations. Another limitation of this work is that our dynamical models, similar to the baseline classifiers they augment, are data-driven and do not provide a mechanistic understanding of the relationship between each individual’s pathophysiology and their predictive dynamics. This, in particular, makes it harder to generalize models across individuals and ties the success of seizure forecasting to the quality and quantity of available data. A further constraint is that the seizure annotations we used (i.e., the “ground truth”) were performed manually by a board-certified epileptologist, limiting our analyses to seizures documented in clinical records. Human errors and inter-rater variability in such annotations have been documented and studied extensively, but manual annotations still remain the gold standard, due largely to the complexities of automated seizure detection [69, 70]. Finally, our current implementation does not impose limits on CPU or memory usage. This flexibility is advantageous for exploratory work but will need to be addressed for future translation to implantable devices.

Table 1:

Data characteristics of the patients used in this study. “Other cortex” refers to seizure onset zones outside the mesial temporal or temporal neocortical structures.

| Patient ID | Seizure Onset Zone | # Channels | Data duration (days) | # Lead Seizures |
|---|---|---|---|---|
| HUP083 | Other Cortex | 92 | 13.7 | 11 |
| HUP097 | Multifocal | 102 | 13.1 | 12 |
| HUP101 | Multifocal | 102 | 12.3 | 10 |
| HUP170 | Other Cortex | 228 | 6.7 | 13 |
| HUP204 | Mesial Temporal Lobe | 117 | 6.1 | 10 |

In sum, our findings underscore the importance of slow dynamics in seizure forecasting, provide an innovative approach for using slow dynamics at the levels of iEEG features and seizure risk, and illustrate a largely untapped potential for improved seizure forecasting. These insights pave the way for future work focused on enhancing model robustness and generalizability, meta-learning across large cohorts of patients, and prospective clinical testing in both acute and chronic contexts.

4. Methods

Data.

All analyses and experiments presented in this paper were conducted using publicly available iEEG data from the International Epilepsy Electrophysiology Portal (iEEG.org). The data for all patients consisted of stereoelectroencephalography (sEEG) recordings. We present results on five patients, each with different seizure characteristics, numbers of electrode channels, and seizure durations, as summarized in Table 1. Patient selection was based on the availability of a sufficient number of lead seizures (≥ 10), which is critical for enabling a reliable train-test split in the seizure prediction framework. While additional patients were available in the database, only a small subset satisfied this criterion, and we further restricted our analyses to five patients in order to manage the extensive computational experiments required for this study. We down-sampled all recordings to 200 Hz for uniformity and to lower the computational complexity of feature extraction. For each patient, seizure onset and offset times were manually annotated by a trained epileptologist and accompanied the raw data on iEEG.org.

Using the inter-seizure intervals, we labeled a seizure as a lead seizure if it occurred at least 8 hours after the previous seizure offset. Interictal periods were defined as periods at least 3 hours away from any seizure in both temporal directions, whereas the interval from 65 to 5 minutes before each lead seizure was labeled as preictal. For each patient, we constructed the training set using the preictal periods from the first three lead seizures, together with three neighboring 1-hour interictal periods. To further ensure that the baseline classifier had sufficient training data, we also randomly selected one or two additional lead seizures (with matched interictal segments) for inclusion in the training set. Limiting this number to at most two strikes a balance between providing enough data for classifier training and retaining the majority of seizures for unbiased pseudo-prospective evaluation. The corresponding segments were excluded from testing, and all remaining iEEG data after the third lead seizure were reserved for evaluation.
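The labeling rules above can be sketched as follows. This is a minimal illustration, assuming seizure onsets and offsets are given in minutes and sorted in time, and treating the first seizure of a recording as a lead seizure (an assumption the text does not state). The function names `find_lead_seizures` and `label_times` are hypothetical.

```python
import numpy as np

def find_lead_seizures(onsets_min, offsets_min, min_gap_min=8 * 60):
    """Indices of lead seizures: onset at least min_gap_min after the previous seizure's offset.
    Assumes sorted seizures; the first seizure is treated as a lead seizure (assumption)."""
    lead = []
    for i, onset in enumerate(onsets_min):
        if i == 0 or onset - offsets_min[i - 1] >= min_gap_min:
            lead.append(i)
    return lead

def label_times(t_min, onsets_min, offsets_min, lead_idx,
                pre_start=65.0, pre_end=5.0, interictal_gap=180.0):
    """Label each time point: 1 = preictal, 0 = interictal, -1 = unused."""
    t = np.asarray(t_min, dtype=float)
    labels = np.full(t.shape, -1, dtype=int)
    # Distance from each time point to the nearest seizure (0 inside a seizure)
    dist = np.full(t.shape, np.inf)
    for on, off in zip(onsets_min, offsets_min):
        dist = np.minimum(dist, np.maximum(np.maximum(on - t, t - off), 0.0))
    labels[dist >= interictal_gap] = 0  # at least 3 h from any seizure, both directions
    # Preictal: from 65 to 5 minutes before each lead seizure onset
    for i in lead_idx:
        on = onsets_min[i]
        labels[(t >= on - pre_start) & (t <= on - pre_end)] = 1
    return labels

# Example with four hypothetical seizures (times in minutes)
onsets, offsets = [100, 300, 1000, 1600], [101, 301, 1001, 1601]
lead = find_lead_seizures(onsets, offsets)                  # seizure 1 is too close to seizure 0
labels = label_times([50, 97, 500, 1550], onsets, offsets, lead)
```

Because a preictal window always lies within 65 minutes of a seizure, it can never also satisfy the 3-hour interictal rule, so the two labels do not conflict.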

Baseline classification models.

As noted earlier, throughout this work we started from a given (off-the-shelf) “baseline” seizure forecasting model (Eq. (1)) and studied the benefits of augmenting it with predictive models at the level of its input (iEEG features x) and/or output (seizure risk). Our approach is therefore agnostic to the choice of baseline model and its iEEG features x, and we evaluated it using a diverse set of baseline models and features. Specifically, we used six different baseline models, as summarized in Table 2. We selected these models based on the following criteria: models employed in influential papers on seizure forecasting (Models A and B), models shown to be effective in crowd-sourced Kaggle competitions (Models C and D), and models representative of modern machine learning approaches (Models E and F). For the latter four, publicly available code facilitated implementation, whereas for the first two we replicated the classifiers using custom code based on our best understanding of the corresponding papers and their supplementary material.

Table 2:

List of baseline seizure forecasting models used in this work.

| Baseline Model | Classifier | Features (x) | Reference |
|---|---|---|---|
| A | k-Nearest Neighbor | Power features, Statistical features (line length, signal average) | Based on Ref. 71 |
| B | Logistic Regression | Power features | Based on Ref. 72 |
| C | Support Vector Machine | Power features, Power correlation, Eigenvalues | ‘Birchwood’ model in Ref. 73 |
| D | Random Forest | Power features, Time correlation, Eigenvalues, Statistical features (channel kurtosis, standard deviation, line length) | Team B in Ref. 15 |
| E | Convolutional Neural Network | Power features | Based on Ref. 47 |
| F | Transformer | Power features | Model in Ref. 57 |

For each baseline model, we computed its features as originally proposed on a 2-minute moving window. The window was shifted by 50 ms on training data and by 10 s on test data. After feature extraction, we trained each baseline model as a supervised binary classifier and extracted its seizure risk before the final thresholding step. The resulting continuous seizure risk trajectory was computed on both the training and test samples. The former was used to train the risk-predictive models in Eq. (4), while the latter was used to compute each baseline model’s PP-AUC (see below).
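A minimal sketch of the moving-window feature extraction and the continuous (pre-threshold) risk, assuming a (channels × samples) array at 200 Hz. The helper names and the toy line-length feature are illustrative stand-ins for each baseline's own features, and logistic regression stands in for an arbitrary baseline classifier; for scikit-learn classifiers, `predict_proba` returns the class probability before any decision threshold is applied.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

FS = 200  # Hz, sampling rate after down-sampling

def window_starts(n_samples, win_s=120.0, step_s=10.0, fs=FS):
    """Start indices of win_s-second windows advanced by step_s seconds."""
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    return np.arange(0, n_samples - win + 1, step)

def line_length(window):
    """Toy per-channel feature (mean absolute first difference), standing in for a baseline's features."""
    return np.abs(np.diff(window, axis=1)).mean(axis=1)

def extract_features(eeg, feature_fn=line_length, win_s=120.0, step_s=10.0, fs=FS):
    """eeg: (n_channels, n_samples) -> (n_windows, n_features), one row per window position."""
    win = int(round(win_s * fs))
    starts = window_starts(eeg.shape[1], win_s, step_s, fs)
    if len(starts) == 0:
        raise ValueError("recording is shorter than one window")
    return np.stack([feature_fn(eeg[:, s:s + win]) for s in starts])

def continuous_risk(clf, X):
    """Risk before thresholding: predicted probability of the preictal class (label 1)."""
    return clf.predict_proba(X)[:, list(clf.classes_).index(1)]

# Example: 10 minutes of synthetic 4-channel data and placeholder labels
rng = np.random.default_rng(0)
eeg = rng.standard_normal((4, 10 * 60 * FS))
X = extract_features(eeg)                              # 49 windows x 4 features
y = (np.arange(len(X)) >= len(X) // 2).astype(int)
risk = continuous_risk(LogisticRegression().fit(X, y), X)
```

Passing `step_s=0.05` reproduces the 50 ms training-time step described above, at a correspondingly higher memory cost.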

Feature categories.

The features used for model training were grouped into five categories to better illustrate their differences in dynamics (Figure 3). Power features capture the spectral content of the iEEG by computing band-limited power for each channel, either in canonical frequency bands (delta, theta, alpha, beta, gamma) or in the equally spaced frequency bins used in Baseline Model C. This yields one power feature per channel and frequency band, so their total number for each patient is proportional to the number of iEEG channels (see Table 1). Power correlation features measure the interdependence of spectral activity across channels by computing correlation matrices between band power time series, e.g., correlating the power in band 1 of channel 12 with the power in band 3 of channel 4; their number therefore grows quadratically with the number of channel-band pairs. Time correlation features similarly compute pairwise correlations, but directly on the raw iEEG time series rather than on band powers, quantifying linear relationships between channels in the time domain; like power correlation features, their number grows quadratically with the number of channels. Eigenvalue features summarize the global structure of these correlation matrices through their eigenvalues, which capture overall network synchrony and connectivity patterns. Finally, statistical features are simple time-domain statistics computed from each channel, which may vary slightly across baseline models. Typical examples include line length, mean, standard deviation, and the standard deviation of first differences. These features capture basic amplitude and variability characteristics of the signals, with one value per channel and statistic.
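The five feature categories can be illustrated on a single 2-minute window. This sketch assumes band edges (delta 1–4, theta 4–8, alpha 8–13, beta 13–30, gamma 30–70 Hz), a plain periodogram for band power, and 12 sub-epochs for building band-power time series; none of these choices are specified in the text, and each baseline model uses its own variants.

```python
import numpy as np

FS = 200  # Hz
# Assumed band edges in Hz (delta, theta, alpha, beta, gamma); baselines differ in their exact bands/bins
BANDS = [(1, 4), (4, 8), (8, 13), (13, 30), (30, 70)]

def band_power(x, fs=FS, bands=BANDS):
    """x: (n_channels, n_samples) -> (n_channels, n_bands) mean periodogram power per band."""
    freqs = np.fft.rfftfreq(x.shape[1], d=1.0 / fs)
    psd = np.abs(np.fft.rfft(x, axis=1)) ** 2
    return np.stack([psd[:, (freqs >= lo) & (freqs < hi)].mean(axis=1) for lo, hi in bands], axis=1)

def upper_triangle(c):
    """Unique off-diagonal entries of a symmetric matrix."""
    i, j = np.triu_indices(c.shape[0], k=1)
    return c[i, j]

def window_features(x, fs=FS, n_sub=12):
    """One feature vector per category for a single window x of shape (n_channels, n_samples)."""
    # Power: one value per channel and band
    power = band_power(x, fs)
    # Power correlation: band-power time series over n_sub sub-epochs, correlated across channel-band pairs
    bp_series = np.stack([band_power(sub, fs).ravel() for sub in np.array_split(x, n_sub, axis=1)])
    power_corr = np.corrcoef(bp_series, rowvar=False)
    # Time correlation: correlation between the raw channel signals
    time_corr = np.corrcoef(x)
    diffs = np.diff(x, axis=1)
    return {
        "power": power.ravel(),
        "power_correlation": upper_triangle(power_corr),
        "time_correlation": upper_triangle(time_corr),
        # Eigenvalues of the channel correlation matrix (network synchrony)
        "eigenvalues": np.linalg.eigvalsh(time_corr),
        # Per-channel statistics: line length, mean, standard deviation, std of first differences
        "statistical": np.concatenate([np.abs(diffs).sum(axis=1), x.mean(axis=1),
                                       x.std(axis=1), diffs.std(axis=1)]),
    }

# Example: one 2-minute window of synthetic 4-channel data
x = np.random.default_rng(0).standard_normal((4, 120 * FS))
feats = window_features(x)
```

With 4 channels and 5 bands this gives 20 power features, 190 power correlations (all pairs of 20 channel-band series), 6 time correlations, 4 eigenvalues, and 16 statistical features, illustrating the linear versus quadratic growth described above.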

Computation of feature importance for baseline models.

To relate feature predictability (from the feature dynamical model) to relevance for classification, we computed feature importance scores for the baseline classifiers using model-appropriate approaches. For k-nearest neighbors (Model A), where importance is not explicitly defined, we used a permutation approach: the values of each feature were shuffled across samples, and the resulting drop in accuracy was recorded. For logistic regression and linear SVM (Models B and C), importance was taken as the absolute value of the learned coefficients (weight vector), reflecting the strength of association between each feature and the decision boundary. For the random forest (Model D), importance was quantified by the mean decrease in Gini impurity, as implemented in the scikit-learn package in Python. For the deep learning models (Models E and F), we did not compute feature importance, since attribution methods (e.g., saliency maps) are computationally intensive and beyond the scope of this study.
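The model-specific importance rules can be sketched with scikit-learn. `permutation_importance`, `coef_`, and `feature_importances_` are standard scikit-learn APIs, while the dispatch function `feature_importance` and the synthetic data are illustrative assumptions; computing the permutation score on the training data is also a simplification.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import KNeighborsClassifier

def feature_importance(model, X, y, n_repeats=10, seed=0):
    """Importance scores for a fitted classifier, following the model-specific rules above."""
    if isinstance(model, KNeighborsClassifier):
        # No built-in importance: mean drop in accuracy after shuffling each feature
        result = permutation_importance(model, X, y, scoring="accuracy",
                                        n_repeats=n_repeats, random_state=seed)
        return result.importances_mean
    if hasattr(model, "feature_importances_"):
        # Tree ensembles: mean decrease in Gini impurity
        return model.feature_importances_
    if hasattr(model, "coef_"):
        # Linear models (logistic regression, linear SVM): absolute weights
        return np.abs(model.coef_).ravel()
    raise TypeError(f"no importance rule for {type(model).__name__}")

# Example: only feature 0 carries label information
rng = np.random.default_rng(0)
X = rng.standard_normal((300, 3))
y = (X[:, 0] > 0).astype(int)
models = {"knn": KNeighborsClassifier(), "logreg": LogisticRegression(),
          "rf": RandomForestClassifier(random_state=0)}
importance = {name: feature_importance(m.fit(X, y), X, y) for name, m in models.items()}
```

An `SVC` fitted with `kernel="linear"` also exposes `coef_`, so it falls into the same branch as logistic regression.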

Predictive modeling of iEEG feature dynamics.

For each patient we learned the feature-predictive model in Eq. (2) as a nonlinear regression problem. We considered five different model forms, as detailed in Table 3, and tuned the parameters of each model by minimizing its sum of squared prediction errors over the training dataset. In all cases, the model was learned element by element, such that the future values of each iEEG feature were predicted using its own past history. The hyper-parameters of each model were fixed as listed in Table 3, except for the two main hyper-parameters (both expressed in minutes), which were optimized over a grid of values separately for each patient.
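For the linear entry of Table 3, fitting by sum of squared errors reduces to ordinary least squares on a lagged design matrix. The sketch below assumes that the two main hyper-parameters are the history length and the prediction horizon, gives them hypothetical names (`n_lags`, `horizon`) expressed in samples rather than minutes, assumes regularly sampled features, and fits one model per feature column, matching the element-by-element scheme described above.

```python
import numpy as np

def lagged_design(series, n_lags, horizon):
    """Pairs (x_t, y_t): x_t = the n_lags values ending at t, y_t = the value horizon steps after t."""
    s = np.asarray(series, dtype=float)
    ends = range(n_lags - 1, len(s) - horizon)
    X = np.array([s[t - n_lags + 1:t + 1] for t in ends])
    y = np.array([s[t + horizon] for t in ends])
    return X, y

def fit_linear_ar(series, n_lags, horizon):
    """Least-squares (minimum sum of squared errors) weights plus intercept, returned as [w..., b]."""
    X, y = lagged_design(series, n_lags, horizon)
    A = np.column_stack([X, np.ones(len(X))])
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef

def predict_linear_ar(coef, recent):
    """Forecast horizon steps ahead from the n_lags most recent values (oldest first)."""
    return float(np.dot(coef[:-1], recent) + coef[-1])

def fit_all_features(F, n_lags, horizon):
    """Element-by-element fitting: one independent model per feature column of F (time x features)."""
    return [fit_linear_ar(F[:, j], n_lags, horizon) for j in range(F.shape[1])]

# Example: a linear trend, for which the 3-step-ahead value is exactly s_t + 6
series = 2.0 * np.arange(50) + 1.0
coef = fit_linear_ar(series, n_lags=1, horizon=3)
pred = predict_linear_ar(coef, series[-1:])
models = fit_all_features(np.column_stack([series, np.sin(series)]), n_lags=4, horizon=2)
```

Fitting each horizon directly (rather than iterating a one-step model) is one design choice among several; the text does not state which the authors used.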

Table 3:

List of autoregressive dynamical models used for multi-step-ahead prediction of iEEG features in Eq. (2).

| Model | Form |
|---|---|
| Linear | Linear function of the past values of each feature (linear AR model) |
| Quadratic | Quadratic polynomial of the past values of each feature |
| KNN | Average of the future feature values following the k nearest neighbors of the current feature history among the training data; the same k was used for all patients. |
| MLP | Feedforward network with a nonlinear activation function and learned weight matrices; the same architecture was used for all patients. |
| Koopman autoencoder [74] | An encoder maps the feature history to a latent state of dimension 64, a learnable Koopman operator evolves the latent state forward by one step, and a decoder maps the latent state back to the feature space. |

KNN: k-nearest neighbor; MLP: multi-layer perceptron.
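The KNN row of Table 3 can be read as an analog forecast: find the training histories most similar to the current one and average what followed them. This is a sketch under that reading, with Euclidean distance, a univariate series, and `k=5` as assumptions; `knn_forecast` is a hypothetical name.

```python
import numpy as np

def knn_forecast(train_series, recent, horizon, k=5):
    """Average the values observed `horizon` steps after the k training histories closest to `recent`."""
    s = np.asarray(train_series, dtype=float)
    recent = np.asarray(recent, dtype=float)
    p = len(recent)
    ends = np.arange(p - 1, len(s) - horizon)              # end index of each candidate history
    histories = np.stack([s[t - p + 1:t + 1] for t in ends])
    dist = np.linalg.norm(histories - recent, axis=1)      # Euclidean distance to the query
    nearest = ends[np.argsort(dist)[:k]]
    return float(s[nearest + horizon].mean())

# Example: a periodic signal, where every matching phase is followed by the same value
t = np.arange(200)
s = np.sin(2 * np.pi * t / 20)
pred = knn_forecast(s, s[:5], horizon=3)
```

Unlike the linear model, this forecaster has no fitted parameters; all of its expressiveness comes from the stored training trajectory.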

Predictive modeling of seizure risk.

The second-level autoregressive (AR) model implements more holistic decision making by looking at historical trends in seizure risk instead of only the instantaneous risk. Further, in contrast to simpler techniques such as majority voting or ensemble methods, which aggregate decisions from multiple classifiers or time points, the AR model learns sequential trends in the data, which can lead to more nuanced decision making. For each patient and baseline model (Eq. (1)), we trained the risk-predictive model as a standard supervised classification problem using the same training data used to learn the baseline model and the feature-predictive model. The recent history of seizure risk values is provided as input, and the preictal/interictal labels as target output. In all cases we used a random forest classifier with a lag of 15 minutes, which we empirically observed to perform better than alternatives such as logistic regression and KNN. Once the classifier is trained, for each test sample we obtain the refined seizure risk as the fraction of decision trees in the random forest that classify the sample as positive (preictal).
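The second-level risk model can be sketched with scikit-learn's `RandomForestClassifier`. The sketch assumes a 10-s risk sampling step (so the 15-minute lag corresponds to 90 samples), labels coded 1 = preictal, 0 = interictal, -1 = unused, and 100 trees; the helper names are hypothetical. `predict_proba` averages per-tree class probabilities, which coincides with the vote fraction only when leaves are pure, so the votes are counted explicitly here.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

LAG = 15 * 6  # 15 minutes of history at an assumed 10-s step (90 samples)

def lagged_risk(risk, lag=LAG):
    """Row i holds risk[i : i + lag], i.e., the history ending at index i + lag - 1."""
    return np.lib.stride_tricks.sliding_window_view(np.asarray(risk, dtype=float), lag)

def fit_risk_model(risk, labels, lag=LAG, n_trees=100, seed=0):
    """Train the risk-predictive classifier on baseline-risk histories (labels: 1, 0, or -1 = unused)."""
    X = lagged_risk(risk, lag)
    y = np.asarray(labels)[lag - 1:]          # label of the last sample in each history
    keep = y >= 0
    return RandomForestClassifier(n_estimators=n_trees, random_state=seed).fit(X[keep], y[keep])

def refined_risk(rf, risk, lag=LAG):
    """Fraction of trees voting preictal for each full-length history.
    Sub-estimators predict encoded class indices, which equal the labels when classes are {0, 1}."""
    X = lagged_risk(risk, lag)
    votes = np.stack([tree.predict(X) for tree in rf.estimators_])
    return votes.mean(axis=0)

# Example: a baseline risk that jumps from low (interictal) to high (preictal)
rng = np.random.default_rng(0)
risk = np.concatenate([0.2 + 0.05 * rng.standard_normal(300), 0.8 + 0.05 * rng.standard_normal(300)])
labels = np.repeat([0, 1], 300)
rf = fit_risk_model(risk, labels)
refined = refined_risk(rf, risk)
```

The first `LAG - 1` samples have no full history and therefore no refined risk, which is why the output is shorter than the input.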

Pseudo-prospective analysis.

In comparison to the more common approach of evaluating a seizure forecasting model on pre-segmented, often balanced datasets, pseudo-prospective analysis (PPA) measures the accuracy of a seizure forecasting model by its ability to continuously monitor an iEEG stream over multiple days and correctly predict clinically marked seizures while minimizing false alarms during extended interictal periods. Following [72, 75], we implement PPA as follows. Given a test period, we first evaluate the seizure risk time series produced by each model variant (the baseline alone or augmented with predictive modeling). For the variants without a feature-predictive component, this time series is directly shifted by the prediction horizon to compensate for the lack of a multi-step-ahead predictive component. We then sweep a threshold between 0 and 1, and for each fixed threshold value we calculate sensitivity (true positive rate) and time in warning as follows. At any time, a warning is triggered when the seizure risk exceeds the threshold, and the warning remains active for a fixed duration of 30 minutes following the threshold crossing. This blocking is important in PPA for the stability of the warnings raised by the algorithm, as an otherwise “flickering” warning would have little utility and/or cause major confusion in decision making. Sensitivity is then computed as the fraction of seizures that occur during an active warning period, and time in warning as the percentage of total test time spent in warning. These measures, both functions of the threshold, capture the burdens of false negatives and false positives, respectively.
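The warning logic for a single threshold can be sketched as follows, assuming the risk series is already time-aligned (shifted where needed) and sampled every 10 s, so that a 30-minute warning spans 180 samples. How a crossing during an active warning is handled is not specified in the text; here it neither starts nor extends a warning (an assumption). The function names are hypothetical.

```python
import numpy as np

def warnings_from_risk(risk, threshold, block_steps):
    """Boolean warning trace: when risk exceeds the threshold and no warning is active,
    a warning starts and stays on for block_steps samples."""
    warn = np.zeros(len(risk), dtype=bool)
    active_until = -1  # last index covered by the current warning
    for t, r in enumerate(risk):
        if t > active_until and r > threshold:
            active_until = t + block_steps - 1
        warn[t] = t <= active_until
    return warn

def sensitivity_and_tiw(risk, seizure_idx, threshold, block_steps=180):
    """Sensitivity: fraction of seizure onsets inside a warning; TIW: fraction of time in warning."""
    warn = warnings_from_risk(risk, threshold, block_steps)
    sensitivity = float(np.mean(warn[np.asarray(seizure_idx, dtype=int)]))
    return sensitivity, float(warn.mean())

# Example: one risk spike at sample 10, seizures at samples 20 and 60
risk = np.zeros(100)
risk[10] = 0.9
sens, tiw = sensitivity_and_tiw(risk, [20, 60], threshold=0.5, block_steps=30)
```

A threshold sweep is then a loop such as `[sensitivity_and_tiw(risk, onsets, th) for th in np.linspace(0, 1, 101)]`, where `onsets` holds the sample indices of seizure onsets.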

By varying the threshold we obtain the pseudo-prospective sensitivity-time in warning curve, as exemplified in Figure 8. The bottom-left and top-right corners of this curve are achieved trivially by all models, corresponding to the highest and lowest thresholds, respectively. We measure the accuracy of each seizure forecaster by the area under this curve, denoted the pseudo-prospective area under the curve, or PP-AUC. PP-AUC quantifies the trade-off between sensitivity and time in warning (TIW), allowing an analysis of how well the model balances early detection against false alarms. PP-AUC ≃ 1 denotes a near-perfect model that correctly predicts all seizures with no false positives, PP-AUC ≃ 0 denotes the opposite, and a PP-AUC of about 0.3 to 0.4 denotes an approximately at-chance model (chance is often lower than 0.5 because of the temporal blocking in PPA).
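Given (time in warning, sensitivity) pairs from a threshold sweep, PP-AUC can be computed with the trapezoidal rule. This sketch anchors the curve at the trivial corners (0, 0) and (1, 1) described above and sorts points by time in warning; the trapezoidal rule and the name `pp_auc` are assumptions about how the area is integrated.

```python
import numpy as np

def pp_auc(tiw, sensitivity):
    """Area under the sensitivity vs. time-in-warning curve, anchored at (0, 0) and (1, 1)."""
    x = np.concatenate([[0.0], np.asarray(tiw, dtype=float), [1.0]])
    y = np.concatenate([[0.0], np.asarray(sensitivity, dtype=float), [1.0]])
    order = np.lexsort((y, x))        # sort by TIW, ties broken by sensitivity
    x, y = x[order], y[order]
    return float(np.sum(np.diff(x) * (y[1:] + y[:-1]) / 2.0))

# A model catching every seizure with 10% time in warning, and one on the diagonal
good = pp_auc([0.1], [1.0])
diagonal = pp_auc([0.5], [0.5])
```

Sorting makes the result independent of the order in which thresholds were swept, which matters because blocking can make the curve non-monotonic in the threshold.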

Computing chance-level PP-AUC.

Unlike standard AUC, for which the chance level is 0.5 even with imbalanced data, chance-level PP-AUC is often less than 0.5 because of the temporal (here, 30-min) blocking in PPA. Further, we cannot simply shuffle the test samples to obtain the chance level, as seizures typically cluster in time and shuffling would destroy this clustering, which benefits any seizure forecaster (a chance-level one included). Therefore, to obtain chance-level PP-AUC we randomize the baseline model by training it on a training set in which the preictal/interictal labels are randomly shuffled. This randomized model is then tested in PPA as usual, yielding a fair chance level for PP-AUC that reflects a randomized decision maker without altering the structure of PPA.
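The label-shuffling control can be sketched as below: a copy of the baseline is fitted on permuted training labels, and the test data (with its temporal seizure clustering) is left untouched for the usual PPA. `sklearn.base.clone` is a standard scikit-learn utility; the function name `chance_level_model` and the logistic-regression baseline in the example are illustrative.

```python
import numpy as np
from sklearn.base import clone
from sklearn.linear_model import LogisticRegression

def chance_level_model(model, X_train, y_train, seed=0):
    """Fit an unfitted copy of `model` on randomly permuted training labels."""
    rng = np.random.default_rng(seed)
    y_shuffled = rng.permutation(np.asarray(y_train))   # same class balance, no feature-label link
    return clone(model).fit(X_train, y_shuffled)

# Example with a logistic-regression stand-in for the baseline
rng = np.random.default_rng(1)
X = rng.standard_normal((200, 3))
y = (X[:, 0] > 0).astype(int)
base = LogisticRegression()
chance = chance_level_model(base, X, y)
# Risk from the randomized model; in practice it is computed on the test stream and passed to PPA
chance_risk = chance.predict_proba(X)[:, 1]
```

Cloning keeps the original baseline untouched, so the real and randomized models can be evaluated side by side with identical PPA settings.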

Statistical comparison.

To allow for statistical comparisons between models, we repeated the classifier training multiple times, each time using the preictal periods from the first three lead seizures together with three neighboring interictal segments, and additionally including one or two randomly selected lead seizures (with matched interictal segments) to augment the training set. The corresponding segments were excluded from evaluation, and the remaining iEEG data after the third lead seizure were used for testing. For each random draw of training segments, seizure likelihood estimates from the trained classifier were used to calculate the corresponding PP-AUCs.

Supplementary Material

Acknowledgements.

The research performed in this study was supported in part by the National Science Foundation Award Number 2239654 (to E.N.) and the National Institutes of Health Award Number R01-NS-116504 (to K.A.D.).

Footnotes

Additional Information. The iEEG data used in this study is publicly available from the iEEG.org Portal at https://www.ieeg.org/.

The autoregressive model, while important, is secondary and plays a filtering (smoothing) role.

References

- [1].Picot M.-C., Baldy-Moulinier M., Daurès J.-P., Dujols P., and Crespel A., “The prevalence of epilepsy and pharmacoresistant epilepsy in adults: a population-based study in a western european country,” Epilepsia, vol. 49, no. 7, pp. 1230–1238, 2008. [DOI] [PubMed] [Google Scholar]

- [2].Fattorusso A., Matricardi S., Mencaroni E., Dell’Isola G. B., Di Cara G., Striano P., and Verrotti A., “The pharmacoresistant epilepsy: an overview on existent and new emerging therapies,” Frontiers in neurology, vol. 12, p. 674483, 2021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [3].Berg A. T., “Identification of pharmacoresistant epilepsy,” Neurologic clinics, vol. 27, no. 4, pp. 1003–1013, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [4].Litt B., Esteller R., Echauz J., D’Alessandro M., Shor R., Henry T., Pennell P., Epstein C., Bakay R., Dichter M. et al. , “Epileptic seizures may begin hours in advance of clinical onset: a report of five patients,” Neuron, vol. 30, no. 1, pp. 51–64, 2001. [DOI] [PubMed] [Google Scholar]

- [5].Baud M. O., Proix T., Gregg N. M., Brinkmann B. H., Nurse E. S., Cook M. J., and Karoly P. J., “Seizure forecasting: bifurcations in the long and winding road,” Epilepsia, vol. 64, pp. S78–S98, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Andrzejak R. G., Zaveri H. P., Schulze-Bonhage A., Leguia M. G., Stacey W. C., Richardson M. P., Kuhlmann L., and Lehnertz K., “Seizure forecasting: Where do we stand?” Epilepsia, vol. 64, pp. S62–S71, 2023. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Cheng J. C. and Goldenholz D. M., “Seizure prediction and forecasting: a scoping review,” Current Opinion in Neurology, vol. 38, no. 2, pp. 135–139, 2025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [8].Shoeibi A., Ghassemi N., Khodatars M., Jafari M., Hussain S., Alizadehsani R., Moridian P., Khosravi A., Hosseini-Nejad H., Rouhani M., Zare A., Khadem A., Nahavandi S., Atiya A. F., and Acharya U. R., “Epileptic seizure detection using deep learning techniques: A review,” arXiv preprint arXiv:2007.01276, 2020. [Google Scholar]

- [9].Kim T., Nguyen P., Pham N., Bui N., Truong H., Ha S., and Vu T., “Epileptic seizure detection and experimental treatment: A review,” Frontiers in Neurology, vol. 11, p. 701, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Sharmila A. and Geethanjali P., “A review on the pattern detection methods for epilepsy seizure detection from eeg signals,” Biomedical Engineering/Biomedizinische Technik, vol. 64, no. 5, pp. 507–517, 2019. [DOI] [PubMed] [Google Scholar]

- [11].Paul Y., “Various epileptic seizure detection techniques using biomedical signals: a review,” Brain informatics, vol. 5, no. 2, p. 6, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Leijten F. S., Consortium D. T., van Andel J., Ungureanu C., Arends J., Tan F., van Dijk J., Petkov G., Kalitzin S., Gutter T., de Weerd A., Vledder B., Thijs R., van Thiel G., Roes K., Hofstra W., Lazeron R., Cluitmans P., Ballieux M., and de Groot M., “Multimodal seizure detection: A review,” Epilepsia, vol. 59, pp. 42–47, 2018. [DOI] [PubMed] [Google Scholar]

- [13].bbrinkm and Cukierski W., “American epilepsy society seizure prediction challenge,” https://kaggle.com/competitions/seizure-prediction, 2014, kaggle. [Google Scholar]

- [14].Kuhlmann L., LizLopez, O’Connell M., rudyno5, Wang S., and Cukierski W., “Melbourne university aes/mathworks/nih seizure prediction,” https://kaggle.com/competitions/melbourne-university-seizure-prediction, 2016, kaggle. [Google Scholar]

- [15].Kuhlmann L., Karoly P., Freestone D. R., Brinkmann B. H., Temko A., Barachant A., Li F., Titericz G. Jr, Lang B. W., Lavery D. et al. , “Epilepsyecosystem. org: crowd-sourcing reproducible seizure prediction with long-term human intracranial eeg,” Brain, vol. 141, no. 9, pp. 2619–2630, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].E. E. S. Laboratory, “Seizure detection challenge (2025),” Epilepsy Benchmarks website, 2025. [Online]. Available: https://epilepsybenchmarks.com/challenge/ [Google Scholar]

- [17].Stirling R. E., Cook M. J., Grayden D. B., and Karoly P. J., “Seizure forecasting and cyclic control of seizures,” Epilepsia, vol. 62, pp. S2–S14, 2021. [DOI] [PubMed] [Google Scholar]

- [18].Costa G., Teixeira C., and Pinto M. F., “Comparison between epileptic seizure prediction and forecasting based on machine learning,” Scientific Reports, vol. 14, no. 1, p. 5653, 2024. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Dümpelmann M., “Early seizure detection for closed loop direct neurostimulation devices in epilepsy,” Journal of neural engineering, vol. 16, no. 4, p. 041001, 2019. [DOI] [PubMed] [Google Scholar]

- [20].Kassiri H., Tonekaboni S., Salam M. T., Soltani N., Abdelhalim K., Velazquez J. L. P., and Genov R., “Closed-loop neurostimulators: A survey and a seizure-predicting design example for intractable epilepsy treatment,” IEEE transactions on biomedical circuits and systems, vol. 11, no. 5, pp. 1026–1040, 2017. [DOI] [PubMed] [Google Scholar]

- [21].Khambhati A. N., Chang E. F., Baud M. O., and Rao V. R., “Hippocampal network activity forecasts epileptic seizures,” Nature Medicine, vol. 30, no. 10, pp. 2787–2790, 2024. [Google Scholar]

- [22].Skarpaas T. L., Jarosiewicz B., and Morrell M. J., “Brain-responsive neurostimulation for epilepsy (rns® system),” Epilepsy Research, vol. 153, pp. 68–70, 2019. [DOI] [PubMed] [Google Scholar]

- [23].Yang H., Mueller J., Eberlein M., Kalousios S., Leonhardt G., Duun-Henriksen J., Kjaer T., and Tetzlaff R., “Seizure forecasting with ultra long-term eeg signals,” Clinical Neurophysiology, vol. 167, pp. 211–220, 2024. [DOI] [PubMed] [Google Scholar]

- [24].Halimeh M., Jackson M., Loddenkemper T., and Meisel C., “Training size predictably improves machine learning-based epileptic seizure forecasting from wearables,” Neuroscience Informatics, vol. 5, no. 1, p. 100184, 2025. [Google Scholar]

- [25].Liang S.-F., Liao Y.-C., Shaw F.-Z., Chang D.-W., Young C.-P., and Chiueh H., “Closed-loop seizure control on epileptic rat models,” Journal of neural engineering, vol. 8, no. 4, p. 045001, 2011. [DOI] [PubMed] [Google Scholar]

- [26].Ashourvan A., Pequito S., Khambhati A. N., Mikhail F., Baldassano S. N., Davis K. A., Lucas T. H., Vettel J. M., Litt B., Pappas G. J., and Bassett D. S., “Model-based design for seizure control by stimulation,” Journal of Neural Engineering, vol. 17, no. 2, p. 026009, 2020. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Rafik D. and Larbi B., “Autoregressive modeling based empirical mode decomposition (emd) for epileptic seizures detection using eeg signals.” Traitement du Signal, vol. 36, no. 3, 2019. [Google Scholar]

- [28].Solaija M. S. J., Saleem S., Khurshid K., Hassan S. A., and Kamboh A. M., “Dynamic mode decomposition based epileptic seizure detection from scalp eeg,” IEEE Access, vol. 6, pp. 38 683–38 692, 2018. [Google Scholar]

- [29].Chang W.-C., Kudlacek J., Hlinka J., Chvojka J., Hadrava M., Kumpost V., Powell A. D., Janca R., Maturana M. I., Karoly P. J., Freestone D. R., Cook M. J., Palus M., Otahal J., Jefferys J. G. R., and Jiruska P., “Loss of neuronal network resilience precedes seizures and determines the ictogenic nature of interictal synaptic perturbations,” Nature neuroscience, vol. 21, no. 12, pp. 1742–1752, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [30].Li A., Inati S., Zaghloul K., and Sarma S., “Fragility in epileptic networks: the epileptogenic zone,” in 2017 American Control Conference (ACC). IEEE, 2017, pp. 2817–2822. [Google Scholar]

- [31].Aarabi A. and He B., “Seizure prediction in hippocampal and neocortical epilepsy using a model-based approach,” Clinical Neurophysiology, vol. 125, no. 5, pp. 930–940, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [32].Chisci L., Mavino A., Perferi G., Sciandrone M., Anile C., Colicchio G., and Fuggetta F., “Real-time epileptic seizure prediction using ar models and support vector machines,” IEEE Transactions on Biomedical Engineering, vol. 57, no. 5, pp. 1124–1132, 2010. [DOI] [PubMed] [Google Scholar]

- [33].Kiymik M. K., Subasi A., and Ozcalık H. R., “Neural networks with periodogram and autoregressive spectral analysis methods in detection of epileptic seizure,” Journal of Medical Systems, vol. 28, no. 6, pp. 511–522, 2004. [DOI] [PubMed] [Google Scholar]

- [34].Logesparan L., Casson A. J., and Rodriguez-Villegas E., “Optimal features for online seizure detection,” Medical & biological engineering & computing, vol. 50, no. 7, pp. 659–669, 2012. [DOI] [PubMed] [Google Scholar]

- [35].Gotman J., “Automatic seizure detection: improvements and evaluation,” Electroencephalography and clinical Neurophysiology, vol. 76, no. 4, pp. 317–324, 1990. [DOI] [PubMed] [Google Scholar]

- [36].Esteller R., Echauz J., Tcheng T., Litt B., and Pless B., “Line length: an efficient feature for seizure onset detection,” in 2001 Conference Proceedings of the 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, vol. 2. IEEE, 2001, pp. 1707–1710. [Google Scholar]

- [37].Zhang Z. and Parhi K. K., “Low-complexity seizure prediction from ieeg/seeg using spectral power and ratios of spectral power,” IEEE transactions on biomedical circuits and systems, vol. 10, no. 3, pp. 693–706, 2015. [DOI] [PubMed] [Google Scholar]

- [38].Hopfengärtner R., Kasper B. S., Graf W., Gollwitzer S., Kreiselmeyer G., Stefan H., and Hamer H., “Automatic seizure detection in long-term scalp eeg using an adaptive thresholding technique: a validation study for clinical routine,” Clinical Neurophysiology, vol. 125, no. 7, pp. 1346–1352, 2014. [DOI] [PubMed] [Google Scholar]

- [39].Saab M. and Gotman J., “A system to detect the onset of epileptic seizures in scalp eeg,” Clinical Neurophysiology, vol. 116, no. 2, pp. 427–442, 2005. [DOI] [PubMed] [Google Scholar]

- [40].Bandarabadi M., Rasekhi J., Teixeira C., and Dourado A., “Sub-band mean phase coherence for automated epileptic seizure detection,” in The International Conference on Health Informatics. Springer, 2014, pp. 319–322. [Google Scholar]

- [41].Hills M., “Seizure detection using fft, temporal and spectral correlation coefficients, eigenvalues and random forest,” Github, San Fr. CA, USA, Tech. Rep, pp. 1–10, 2014. [Google Scholar]

- [42].Bartolomei F., Chauvel P., and Wendling F., “Epileptogenicity of brain structures in human temporal lobe epilepsy: a quantified study from intracerebral eeg,” Brain, vol. 131, no. 7, pp. 1818–1830, 2008. [DOI] [PubMed] [Google Scholar]

- [43].Sun F. T. and Morrell M. J., “The rns system: responsive cortical stimulation for the treatment of refractory partial epilepsy,” Expert review of medical devices, vol. 11, no. 6, pp. 563–572, 2014. [DOI] [PubMed] [Google Scholar]

- [44].Berényi A., Belluscio M., Mao D., and Buzsáki G., “Closed-loop control of epilepsy by transcranial electrical stimulation,” Science, vol. 337, no. 6095, pp. 735–737, 2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [45].Feng T., Ni J., Gleichgerrcht E., and Jin W., “Seizureformer: A transformer model for ieabased seizure risk forecasting,” arXiv preprint arXiv:2504.16098, 2025. [Google Scholar]

- [46].Gleichgerrcht E., Dumitru M., Hartmann D. A., Munsell B. C., Kuzniecky R., Bonilha L., and Sameni R., “Seizure forecasting using machine learning models trained by seizure diaries,” Physiological Measurement, vol. 43, no. 12, p. 124003, 2022.

- [47].Truong N. D., Nguyen A. D., Kuhlmann L., Bonyadi M. R., Yang J., Ippolito S., and Kavehei O., “Convolutional neural networks for seizure prediction using intracranial and scalp electroencephalogram,” Neural Networks, vol. 105, pp. 104–111, 2018.

- [48].Kiral-Kornek I., Roy S., Nurse E., Mashford B., Karoly P., Carroll T., Payne D., Saha S., Baldassano S., O’Brien T., Grayden D., Cook M., Freestone D., and Harrer S., “Epileptic seizure prediction using big data and deep learning: toward a mobile system,” EBioMedicine, vol. 27, pp. 103–111, 2018.

- [49].Chen W., Chiueh H., Chen T., Ho C., Jeng C., Ker M., Lin C., Huang Y., Chou C., Fan T., Cheng M., Hsin Y., Liang S., Wang Y., Shaw F., Huang Y., Yang C., and Wu C., “A fully integrated 8-channel closed-loop neural-prosthetic CMOS SoC for real-time epileptic seizure control,” IEEE Journal of Solid-State Circuits, vol. 49, no. 1, pp. 232–247, 2013.

- [50].Tzallas A. T., Tsipouras M. G., and Fotiadis D. I., “Epileptic seizure detection in EEGs using time–frequency analysis,” IEEE Transactions on Information Technology in Biomedicine, vol. 13, no. 5, pp. 703–710, 2009.

- [51].Zheng Y.-x., Zhu J.-m., Qi Y., Zheng X.-x., and Zhang J.-m., “An automatic patient-specific seizure onset detection method using intracranial electroencephalography,” Neuromodulation: Technology at the Neural Interface, vol. 18, no. 2, pp. 79–84, 2015.

- [52].Shoeb A., Edwards H., Connolly J., Bourgeois B., Treves S. T., and Guttag J., “Patient-specific seizure onset detection,” Epilepsy & Behavior, vol. 5, no. 4, pp. 483–498, 2004.

- [53].Heller S., Hügle M., Nematollahi I., Manzouri F., Dümpelmann M., Schulze-Bonhage A., Boedecker J., and Woias P., “Hardware implementation of a performance and energy-optimized convolutional neural network for seizure detection,” in 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC). IEEE, 2018, pp. 2268–2271.

- [54].Manzouri F., Heller S., Dümpelmann M., Woias P., and Schulze-Bonhage A., “A comparison of machine learning classifiers for energy-efficient implementation of seizure detection,” Frontiers in Systems Neuroscience, vol. 12, p. 43, 2018.

- [55].Hügle M., Heller S., Watter M., Blum M., Manzouri F., Dümpelmann M., Schulze-Bonhage A., Woias P., and Boedecker J., “Early seizure detection with an energy-efficient convolutional neural network on an implantable microcontroller,” in 2018 International Joint Conference on Neural Networks (IJCNN). IEEE, 2018, pp. 1–7.

- [56].Donos C., Dümpelmann M., and Schulze-Bonhage A., “Early seizure detection algorithm based on intracranial EEG and random forest classification,” International Journal of Neural Systems, vol. 25, no. 5, p. 1550023, 2015.

- [57].Yan J., Li J., Xu H., Yu Y., and Xu T., “Seizure prediction based on transformer using scalp electroencephalogram,” Applied Sciences, vol. 12, no. 9, p. 4158, 2022.

- [58].El Houssaini K., Bernard C., and Jirsa V. K., “The Epileptor model: a systematic mathematical analysis linked to the dynamics of seizures, refractory status epilepticus and depolarization block,” eNeuro, 2020.

- [59].Guirgis M., Chinvarun Y., Del Campo M., Carlen P. L., and Bardakjian B. L., “Defining regions of interest using cross-frequency coupling in extratemporal lobe epilepsy patients,” Journal of Neural Engineering, vol. 12, no. 2, p. 026011, 2015.

- [60].Proix T., Truccolo W., Leguia M. G., King-Stephens D., Rao V. R., and Baud M. O., “Forecasting seizure risk over days,” medRxiv, p. 19008086, 2019.

- [61].Karoly P. J., Goldenholz D. M., Freestone D. R., Moss R. E., Grayden D. B., Theodore W. H., and Cook M. J., “Circadian and circaseptan rhythms in human epilepsy: a retrospective cohort study,” The Lancet Neurology, vol. 17, no. 11, pp. 977–985, 2018.

- [62].Rocamora R., Andrzejak R. G., Jiménez-Conde J., and Elger C. E., “Sleep modulation of epileptic activity in mesial and neocortical temporal lobe epilepsy: a study with depth and subdural electrodes,” Epilepsy & Behavior, vol. 28, no. 2, pp. 185–190, 2013.

- [63].Cordeiro I. M., von Ellenrieder N., Zazubovits N., Dubeau F., Gotman J., and Frauscher B., “Sleep influences the intracerebral EEG pattern of focal cortical dysplasia,” Epilepsy Research, vol. 113, pp. 132–139, 2015.

- [64].Cook M. J., Karoly P. J., Freestone D. R., Himes D., Leyde K., Berkovic S., O’Brien T., Grayden D. B., and Boston R., “Human focal seizures are characterized by populations of fixed duration and interval,” Epilepsia, vol. 57, no. 3, pp. 359–368, 2016.

- [65].Ferastraoaru V., Schulze-Bonhage A., Lipton R. B., Dümpelmann M., Legatt A. D., Blumberg J., and Haut S. R., “Termination of seizure clusters is related to the duration of focal seizures,” Epilepsia, vol. 57, no. 6, pp. 889–895, 2016.

- [66].Nozari E., Stiso J., Caciagli L., Cornblath E. J., He X., Bertolero M. A., Mahadevan A. S., Pappas G. J., and Bassett D. S., “Is the brain macroscopically linear? A system identification of resting state dynamics,” bioRxiv, 2020. [Online]. Available: https://www.biorxiv.org/content/early/2020/12/22/2020.12.21.423856

- [67].Epilepsy Ecosystem, “Leaderboard,” https://www.epilepsyecosystem.org/leaderboard, accessed: 2025-07-18.

- [68].Litt B. and Echauz J., “Prediction of epileptic seizures,” The Lancet Neurology, vol. 1, no. 1, pp. 22–30, 2002.

- [69].Tveit J., Aurlien H., Plis S., Calhoun V. D., Tatum W. O., Schomer D. L., Arntsen V., Cox F., Fahoum F., Gallentine W. B. et al., “Automated interpretation of clinical electroencephalograms using artificial intelligence,” JAMA Neurology, vol. 80, no. 8, pp. 805–812, 2023.

- [70].Xu Z., Scheid B. H., Conrad E., Davis K. A., Ganguly T., Gelfand M. A., Gugger J. J., Jiang X., LaRocque J. J., Ojemann W. K. et al., “Annotating neurophysiologic data at scale with optimized human input,” Journal of Neural Engineering, 2025.

- [71].Cook M. J., O’Brien T. J., Berkovic S. F., Murphy M., Morokoff A., Fabinyi G., D’Souza W., Yerra R., Archer J., Litewka L. et al., “Prediction of seizure likelihood with a long-term, implanted seizure advisory system in patients with drug-resistant epilepsy: a first-in-man study,” The Lancet Neurology, vol. 12, no. 6, pp. 563–571, 2013.

- [72].Karoly P. J., Ung H., Grayden D. B., Kuhlmann L., Leyde K., Cook M. J., and Freestone D. R., “The circadian profile of epilepsy improves seizure forecasting,” Brain, vol. 140, no. 8, pp. 2169–2182, 2017.

- [73].Brinkmann B. H., Wagenaar J., Abbot D., Adkins P., Bosshard S. C., Chen M., Tieng Q. M., He J., Muñoz-Almaraz F., Botella-Rocamora P. et al., “Crowdsourcing reproducible seizure forecasting in human and canine epilepsy,” Brain, vol. 139, no. 6, pp. 1713–1722, 2016.

- [74].Takeishi N., Kawahara Y., and Yairi T., “Learning Koopman invariant subspaces for dynamic mode decomposition,” Advances in Neural Information Processing Systems, vol. 30, 2017.

- [75].Snyder D. E., Echauz J., Grimes D. B., and Litt B., “The statistics of a practical seizure warning system,” Journal of Neural Engineering, vol. 5, no. 4, p. 392, 2008.

Associated Data
