Abstract

Objective

Accelerate adoption of clinical research technology that obtains electronic health record (EHR) data through HL7 Fast Healthcare Interoperability Resources (FHIR).

Materials and Methods

Based on experience helping institutions implement REDCap-EHR integration and surveys of users and potential users, we discuss the technical and organizational barriers to adoption with strategies for remediation.

Results

With strong demand from researchers, the 21st Century Cures Act Final Rule in place, and REDCap software already in use at most research organizations, the environment seems ideal for REDCap-EHR integration for automated data exchange. However, concerns from information technology and regulatory leaders often slow progress and restrict how and when data from the EHR can be used.

Discussion and Conclusion

While technological controls can help alleviate concerns about FHIR applications used in research, we have found that messaging, education, and extramural funding remain the strongest drivers of adoption.

Keywords: Electronic Health Records (D057286), Research (D012106), interoperability, SMART-on-FHIR, implementation

Introduction

The ability to pull electronic health record (EHR) data into the REDCap electronic data capture system has been one of the most highly requested features since REDCap’s inception in 2004.1 Researchers hoped to solve the “swivel-chair problem” where study personnel were transcribing or copy-pasting data from the EHR into an electronic data capture (EDC) system like REDCap. This inefficient and error-prone workflow was rampant in clinical research.2–5 The REDCap Consortium hosts a convention for informatics administrators,6 and from 2015 to 2018, EHR data integration was one of the most discussed topics. Therefore, when we announced the Clinical Data Interoperability Services (CDIS) module for REDCap in 2018, which enabled REDCap users to extract data directly from the EHR, we expected rapid uptake from many of the 2679 institutions using REDCap at the time.7

Besides the demand from researchers and clear benefits for efficiency and accuracy, there were several factors that we believed would contribute to the rapid adoption of CDIS among REDCap Consortium members. Around the CDIS release, the US Food and Drug Administration was actively issuing guidance for real-world data for clinical trials.8 CDIS was included in the core code for REDCap, meaning that any institution using a version of REDCap released after 2019 had the EHR extraction feature installed and ready for connection to the EHR. Institutions would not need to have additional software reviewed by their information technology (IT) gatekeepers. Additionally, the enactment of the 21st Century Cures Act Final Rule by the Office of the National Coordinator in 2020 required EHR vendors and healthcare organizations to make data available for access to external systems through Health Level Seven Fast Healthcare Interoperability Resources (FHIR).9 Any certified EHR system would have an application programming interface (API) through which REDCap could connect to pull data with users’ EHR system credentials.
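To make the last point concrete, a read-only FHIR search is an HTTP GET against the EHR's FHIR base URL, authorized with an OAuth 2.0 bearer token obtained with the user's EHR credentials. The sketch below only constructs such a request and sends nothing. The base URL, patient identifier, and token are placeholders, and `build_fhir_search` is a hypothetical helper for illustration, not part of REDCap or CDIS.

```python
from urllib.parse import urlencode


def build_fhir_search(base_url, resource_type, params, access_token):
    """Build the URL and headers for a read-only FHIR search (GET) request."""
    query = urlencode(params)
    url = f"{base_url.rstrip('/')}/{resource_type}?{query}"
    headers = {
        # The token stands in for one issued through the EHR's authorization flow.
        "Authorization": f"Bearer {access_token}",
        # Standard FHIR JSON media type.
        "Accept": "application/fhir+json",
    }
    return url, headers


# Placeholder values only; no real endpoint, patient, or token is implied.
url, headers = build_fhir_search(
    "https://ehr.example.org/fhir/R4",
    "Observation",
    {"patient": "example-patient-id", "category": "vital-signs"},
    "example-token",
)
print(url)
```

Because the request is a search, nothing is written to the EHR, and the EHR decides what to return based on the rights attached to the token.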

The CDIS feature was extensively vetted by security, privacy, and research regulatory teams and the institutional review board at Vanderbilt University Medical Center (VUMC), where adoption was rapid. From January 2019 to July 2024, VUMC researchers used CDIS in 243 projects, pulling over 62 million data points for 168,051 records. VUMC is an active member of the Clinical and Translational Science Awardee (CTSA) community and a leader in the Trial Innovation Network as a Trial Innovation Center and Recruitment Innovation Center.10–12 As such, we believed that clinical research innovations and their approval processes at VUMC might generalize to other academic research organizations.

We had several federally funded projects and network studies that encouraged the use of CDIS. As the data coordinating center for some of these studies, Vanderbilt provided support and resources to aid in the adoption of CDIS for clinical trials. The setup and mapping of EHR data to REDCap fields in CDIS were performed by researchers. No support was needed from an IT professional, which should have made the module attractive for reducing the burden on IT departments. Finally, we had an engaged administrator community through the REDCap Consortium that provided support in the adoption and refinement of new features such as CDIS. REDCap Consortium members have shared strategies on how to support users, training materials, and configuration files through the REDCap Community website.

Despite numerous positive factors that would seemingly favor early and widespread CDIS adoption, relatively few institutions initially took advantage of the feature. While slow adoption of new clinical research technology is expected, the fact that CDIS was proven valuable and fully integrated within REDCap should have given it an advantage over other software. The purpose of this case study is to describe the barriers to implementation for a well-established, highly trusted FHIR application such as REDCap, and to offer strategies for overcoming some of them.

Methods

By supporting REDCap Consortium members in their implementation of CDIS, we learned many lessons about technical and organizational barriers to SMART-on-FHIR applications for research. Our engagement with researchers, technical staff, and leadership at many academic medical centers worldwide provided a diverse perspective and actionable, real-world insights into implementing FHIR technology effectively. Some of the programmatic venues where we support institutions implementing CDIS are twice-monthly office hours open to REDCap administrators and health IT representatives, a monthly EHR working group of REDCap administrators, and many individual consultations with study teams, research leadership, and technical teams. Building on these insights, we also conducted a survey of current and potential CDIS implementers. Through email, we invited anyone who requested the application from their EHR vendor or who attended an office hour session with us seeking help with setting up CDIS to complete the survey. We asked them to rate pre-defined barriers identified from our prior experience on a scale from 0 (not at all a barrier) to 100 (major barrier). The VUMC institutional review board approved this study (#250800).

Results

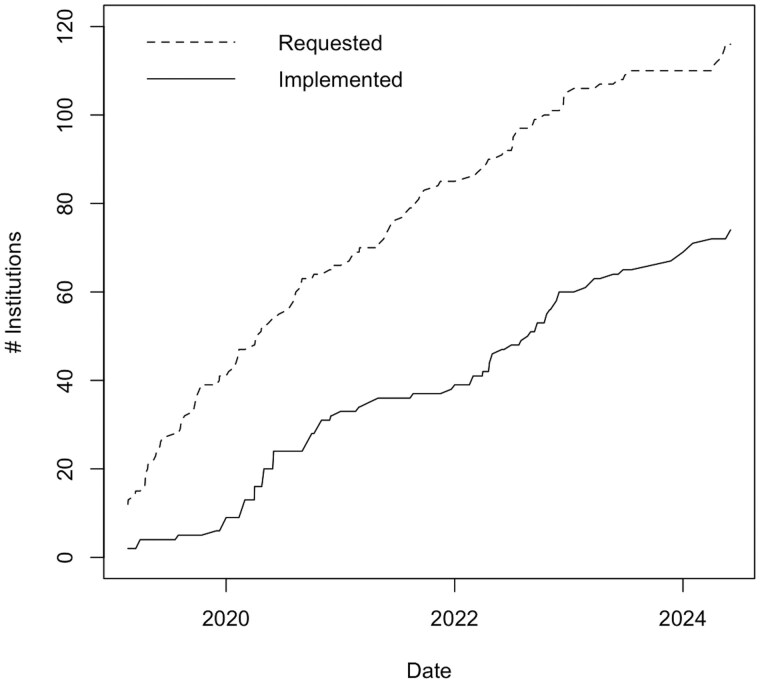

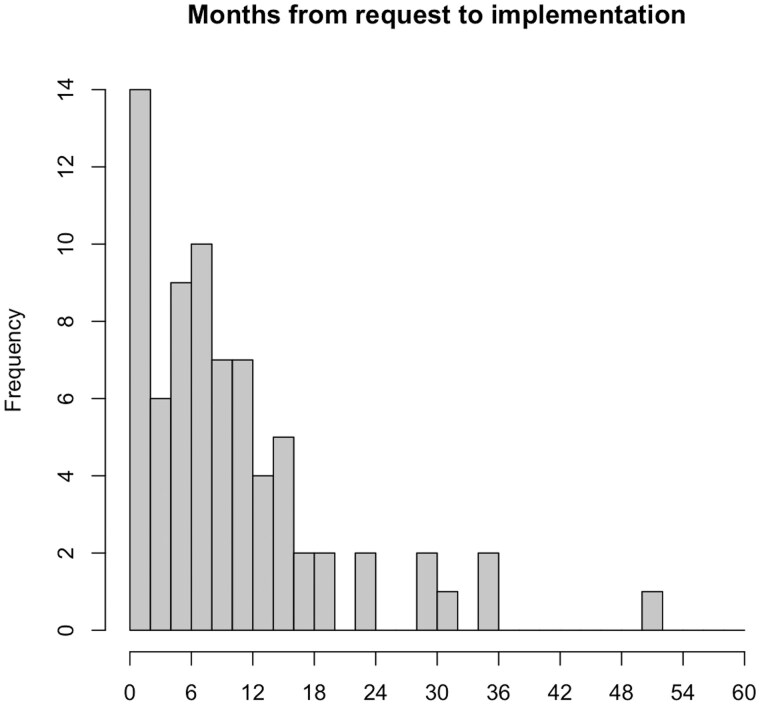

As of May 2024, 77 institutions were using CDIS out of 7202 institutions worldwide using REDCap. The number of institutions that have adopted CDIS with the Epic EHR system since launch in 2018 lags noticeably behind the number that requested the integration (Figure 1). Institutions that did implement CDIS took from under a month to over 4 years to do so, with a median of 8 months (Figure 2).

Figure 1.

Number of institutions requesting the REDCap-Epic integration compared to those who had implemented.

Figure 2.

Months from request to implementation of REDCap-Epic integration for 74 institutions who successfully deployed CDIS.

For the survey of implementers, we sent out 429 invitations and received 60 responses. Table 1 summarizes the barriers to implementation reported in surveys, the mean barrier score and standard deviation, and strategies that we have employed to address them. We saw heterogeneity in the reporting of specific variables across participating institutions.
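The response rate and the summary format used in Table 1 follow from simple arithmetic. The sketch below is illustrative only: the ratings list is made up, and it assumes the sample standard deviation was used, which the survey description does not specify.

```python
from statistics import mean, stdev


def response_rate(responses: int, invitations: int) -> float:
    """Fraction of invitations that produced a response."""
    return responses / invitations


def summarize_ratings(ratings: list[int]) -> tuple[int, int]:
    """Return (mean, sample SD) rounded to whole numbers, the format used in Table 1."""
    return round(mean(ratings)), round(stdev(ratings))


# Counts reported in the text: 60 responses to 429 invitations.
survey_rate = response_rate(60, 429)
print(f"Response rate: {survey_rate:.1%}")  # Response rate: 14.0%

# Hypothetical 0-100 ratings for a single barrier; not study data.
example_summary = summarize_ratings([0, 25, 50, 75, 100])
print(example_summary)  # (50, 40)
```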

Table 1.

Barriers to REDCap Clinical Data Interoperability Services (CDIS) adoption, remedies that have demonstrated success, and the average rating of barrier to implementation (0 = not a barrier, 100 = major barrier) in a study of current and potential CDIS implementers (n = 60).

| Barriers | Remedies | Mean barrier rating (standard deviation) |
|---|---|---|
| Competing with clinical priorities for Health Information Technology effort to implement CDIS | Extramural funding with local PI or informatician to serve as champion for CDIS implementation | 57 (32) |
| Lack of REDCap personnel with knowledge about EHR data and credentials/expertise to test CDIS | Cross-train REDCap administrator in EHR data and/or study coordinator or data manager with EHR expertise in REDCap | 54 (31) |
| Technical and networking setup | Engage IT leadership early in the process. Once approvals are obtained, get all technical stakeholders on regular calls until the connection is set up and tested | 50 (34) |
| Concerns that users do not understand the limitations of using EHR data for research | Training or paid consultations for users by informatics professionals | 48 (32) |
| Perception that all study data must be obtainable from the EHR for all participants for CDIS to be useful | Demonstration of accuracy and efficiency improvements for subsets of study data and participants through published comparison studies.13,14 | 39 (30) |
| Concerns that personal health information for other individuals might be included in EHR notes and inadvertently pulled to CDIS | Feature to disable FHIR resources across the REDCap instance to customize exclusion of sensitive resources like notes | 35 (31) |
| Perceived competition or redundancy with existing EHR data resources | Improved resources for the proper use cases for CDIS versus research databases and other EHR data sources | 33 (30) |
| Separation between research and clinical enterprises requires third-party agreements for data transfers | Setting up an instance of REDCap owned by the clinical enterprise for CDIS and other covered entity projects | 27 (30) |
| Institutional review board or regulatory teams requiring that access be blocked for data not specified in a research application | Emphasis for leadership that users only have access to data they already have in the EHR and that all access is logged and auditable. Training for users that they are accountable for the responsible use of EHR data and the conduct of research | 27 (16) |
| Misconception that CDIS could write data into the EHR | Clear documentation of controls through EHR application services that restrict any resources with write capabilities | 25 (29) |
| [Non-US institutions] Country-specific non-USCDI core terminologies not pre-programmed in REDCap | Custom mapping feature in CDIS with codelist curation by local team | 23 (28) |

Discussion

Major barriers to adoption include the number of approvals required from IT and regulatory leadership, and the fear that leaders often have in allowing new technologies that access patient data. Moreover, the individuals who make the final decision on whether to approve new SMART-on-FHIR applications are different from institution to institution, which makes providing guidance on local navigation challenging. Even though providers and researchers are only accessing data they already have access to through the EHR browser, alleviating data privacy and security concerns requires persistence from researchers and the REDCap administrators that support them. In addition to reiterating the benefits to clinical research operations, reminding leadership that patient data access through FHIR is a federal requirement, and that other applications with fewer safeguards than REDCap are using FHIR to collect patient data, can be an effective means of easing their concerns.

Institutional review board (IRB) and regulatory teams required technical safeguards to prevent users from accessing data not specified in their research application, even if they already had access to that data in the EHR. CDIS was built to inherit EHR data access rights, allowing users with EHR credentials to pull data they already have access to in the clinical system into REDCap in real time. Therefore, limiting the data pulled is not technically possible within CDIS. Some sites proposed creating a proxy server between the EHR and REDCap to block unauthorized data access at both the FHIR resource level and the patient level. This proved intractable because the effort to maintain such a server nullified any efficiency gains for research teams. Instead, we recommended training that emphasized that CDIS users were responsible for any patient records from which they collected data in REDCap, just as they were when they performed chart review through the EHR browser. Researchers were made aware that any data pulled from the EHR were logged both in the EHR and in REDCap, and that those logs were auditable by research compliance offices or IRBs.

Another barrier noted by potential CDIS institutions was the concern that researchers would not properly use data pulled from the EHR, nor know how to map data properly in REDCap. EHR data are not collected for research purposes, and the limitations of using EHR data for research are well documented.15 We remind sites regularly that only users who have EHR credentials can use CDIS, and we recommend that those individuals using CDIS have gone through EHR training, which covers extensively the setting and context for EHR data entry.

Several institutions noted that they did not have personnel with both REDCap and EHR data expertise to train or advise researchers in using CDIS. To address this concern, we have recommended that sites cross-train at least one REDCap administrator on the EHR or at least one coordinator with EHR data experience in REDCap. While the goal is still for researchers to build their own CDIS projects, the cross-trained individual would be able to train and advise on CDIS use. In addition, we have generated training documents that can be used to complement local training efforts.

Some sites noted that structured data from the EHR is insufficient, and that the useful information for clinical research is in unstructured data such as clinical notes. The granularity of EHR notes can provide additional insights that are often not captured in structured formats.16,17 The ability to obtain and analyze notes in real time with CDIS has enormous potential for complex phenotyping or screening in clinical research with natural language processing and large language models. A concern raised was that personal health information related to individuals other than the patient, such as family medical history, may be documented in clinical notes and inadvertently pulled to CDIS. While this is an important concern, CDIS is still an improvement for security and privacy compared to current workflows where coordinators frequently copy and paste entire notes into REDCap or middleware, or are accessing large corpora of notes. Nevertheless, we plan to implement a feature that allows institutions to disable specific FHIR resources, such as clinical notes, across the entire REDCap instance.
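An instance-wide switch for disabling resources could take many forms. As a minimal sketch, assuming clinical notes are exposed as FHIR `DocumentReference` resources (a common but not universal arrangement), a disabled-resource list might be checked before any request is made. The setting name and `filter_enabled` function are hypothetical and do not describe the CDIS implementation.

```python
# Hypothetical instance-wide setting: FHIR resource types an administrator has disabled.
DISABLED_RESOURCE_TYPES = frozenset({"DocumentReference"})


def filter_enabled(requested_types, disabled=DISABLED_RESOURCE_TYPES):
    """Split requested resource types into (enabled, blocked), preserving order."""
    enabled = [t for t in requested_types if t not in disabled]
    blocked = [t for t in requested_types if t in disabled]
    return enabled, blocked


enabled, blocked = filter_enabled(["Observation", "DocumentReference", "Condition"])
print(enabled)  # ['Observation', 'Condition']
print(blocked)  # ['DocumentReference']
```

Returning the blocked types separately would let a project show users which mapped resources were skipped rather than failing silently.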

Most of the barriers to implementation we have faced are with US partners. However, international partners in Canada, Europe, and Australia have expressed interest in CDIS. US institutions implement the United States Core Data for Interoperability (USCDI) FHIR profile, which specifies certain codelists. Other countries use different coding systems, such as the Canadian Classification of Health Interventions. While it was possible for REDCap to include international coding systems in codelists, having to search through long lists of international codes could decrease usability for US customers who make up the majority of CDIS users. We addressed this challenge by allowing sites to create institution-specific custom codelists, which can be shared between REDCap institutions in the same country.
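One way to picture institution-specific codelists is as a local lookup table layered on top of a built-in one, keyed by the `system` and `code` of a FHIR Coding. The sketch below is an assumption-laden illustration: the local code system URI, the local code, and the REDCap field names are invented, and the functions are not CDIS functions. Only the LOINC heart rate code (8867-4) is a real code.

```python
from typing import Optional

# Built-in mapping from (code system, code) to a REDCap field name.
BUILT_IN_CODELIST = {
    ("http://loinc.org", "8867-4"): "heart_rate",  # LOINC: Heart rate
}

# Hypothetical institution-specific additions curated by a local team.
CUSTOM_CODELIST = {
    ("urn:example:local-codes", "LOCAL-001"): "local_procedure",
}


def merge_codelists(built_in: dict, custom: dict) -> dict:
    """Combine codelists; custom entries win if the same key appears in both."""
    return {**built_in, **custom}


def field_for_coding(coding: dict, codelist: dict) -> Optional[str]:
    """Look up the REDCap field for a FHIR Coding, or None if it is unmapped."""
    return codelist.get((coding.get("system"), coding.get("code")))


codelist = merge_codelists(BUILT_IN_CODELIST, CUSTOM_CODELIST)
print(field_for_coding({"system": "http://loinc.org", "code": "8867-4"}, codelist))
print(field_for_coding({"system": "urn:example:local-codes", "code": "LOCAL-001"}, codelist))
```

Keeping the custom list separate from the built-in list is what would allow it to be shared between institutions in the same country without lengthening the default list for everyone else.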

Another challenge to implementing CDIS is perceived competition or redundancy with existing EHR data resources. Many academic organizations have teams that pull data from a copy of their EHR database for research in a common data model (CDM) such as the Observational Medical Outcomes Partnership CDM18 or the National Patient-Centered Clinical Research Network CDM.19 The ideal use cases for CDIS are fundamentally different from those relying on harmonized or transformed data, and those requiring relational databases (Table 2). REDCap Clinical Data Pull (the CDIS mode that sources data from the EHR directly into case report form fields) is most appropriate for prospective studies where near real-time data (updated in REDCap 5-10 minutes after entry in the EHR) may be required. The Clinical Data Mart (the CDIS mode that pulls all data points for specified patients and resources over a given period) updates less frequently (every 24 hours) but can pull longitudinal records for a pre-specified list of patients. An ideal use case for Clinical Data Mart is inpatient and intensive care studies, where there is a high density of laboratory and vital signs data, and all participants have records in the EHR because admission to that hospital is an enrollment criterion.

Table 2.

Contrasting scenarios for REDCap Clinical Data Pull, Clinical Data Mart, and Relational EHR Research Databases

| | REDCap Clinical Data Pull | REDCap Clinical Data Mart | Relational EHR or research database |
|---|---|---|---|
| Appropriate study types | Prospective case report form studies | Registry, limited longitudinal studies | Large retrospective cohort longitudinal studies |
| Timeliness of data updates from EHR | 5-10 minutes | 24 hours | 24 hours to weeks |
| Ideal number of patients per site over the entire study | 25-10 000 | 25-500 | 100-1 000 000+ |
| Researcher effort required for setup per site | 4-40 hours depending on study complexity | 1-10 hours | 1-4 hours to create data request |
| Health IT or data analyst effort required for setup | Not applicable | Not applicable | 4-40 hours depending on query complexity |
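The patient-count and timeliness rows of Table 2 can be read as rough screening criteria. The sketch below encodes only those two rows and ignores study type and setup effort, so it is a simplification rather than a selection rule. The class and function names are hypothetical, and the delay values are assumptions chosen from the stated ranges: the upper end of "5-10 minutes" for Clinical Data Pull and the lower end of "24 hours to weeks" for relational databases.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataSource:
    name: str
    min_patients: int
    max_patients: Optional[int]  # None means no stated upper bound
    delay_minutes: int           # assumed representative delay, see lead-in


SOURCES = (
    DataSource("REDCap Clinical Data Pull", 25, 10_000, 10),
    DataSource("REDCap Clinical Data Mart", 25, 500, 24 * 60),
    DataSource("Relational EHR or research database", 100, None, 24 * 60),
)


def candidate_sources(n_patients: int, max_delay_minutes: int) -> list[str]:
    """Names of sources whose patient range and delay fit the stated needs."""
    matches = []
    for source in SOURCES:
        in_range = source.min_patients <= n_patients and (
            source.max_patients is None or n_patients <= source.max_patients
        )
        if in_range and source.delay_minutes <= max_delay_minutes:
            matches.append(source.name)
    return matches


# A 50-patient study that needs data within 15 minutes of EHR entry.
print(candidate_sources(50, 15))  # ['REDCap Clinical Data Pull']
```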

The greatest driver for CDIS implementation has been funding of multisite studies that require its use. While the NIH has encouraged the use of FHIR in research,20,21 most funding announcements stop short of mandating FHIR.22 REDCap CDIS is available at no cost to academic, non-profit, and government organizations. Therefore, we believe that multisite and coordinating center applications for funding could specify CDIS use for participating sites when appropriate. When we repeated a REDCap case report form study using automated vital signs and laboratory results data transferred with CDIS, we estimated that coordinators could reduce their data entry time by 84%.13 Setting up the EHR and REDCap to use CDIS may only require a few hours of effort from EHR team members. However, implementation can drag on for months without strong informatics or clinical champion support (Figure 2), which is consistent with previous surveys about readiness for FHIR implementation.23 Funding for implementing standard infrastructure ensures that a research or clinical champion, typically the site principal investigator, is engaged in the implementation and pushing for health IT prioritization.

Conclusion

Despite advances in interoperability between EHRs and external systems brought about by the adoption of FHIR standards and the 21st Century Cures Act Final Rule, challenges remain for using them for clinical research. Multisite implementers of clinical research software using EHR FHIR APIs should heed the most important lesson learned from CDIS: Develop clear messaging about the value, proper use of the interface, and access controls for IT and regulatory leadership. In our future work, we will work with funders and coordinating centers to consider budgeting for application deployment, which has associated setup costs, but will pay dividends in time saved for research personnel and improved data quality.

Acknowledgments

The authors would like to acknowledge the members of the REDCap Consortium who have collaborated to improve CDIS for the benefit of the entire clinical research enterprise. We would also like to acknowledge Alejandro Araya from UTHealth Science Center-Houston; Andrew Poppe from Yale University; Ashleigh Lewis from Seattle Children’s Research Institute; Chris Battiston from Women’s College Hospital; Christopher Martin from Nicklaus Children’s Health System; Kathryn Cook and Joseph Wick from Mayo Clinic; Leila Deering from Marshfield Clinic Research Institute; Eliel Melon from University of Puerto Rico—Medical Sciences Campus; Giovanni Ometto from London North West Healthcare NHS Trust; Marcela Martínez von Scheidt from Hospital Italiano de Buenos Aires, Argentina; Kayode Alalade from Institute of Human Virology Nigeria; Olawale Akinbobola from Philip B Chase, University of Florida; Rachel Houk, Nancy Wittmer, and Kelley Mancine from Denver Health & Hospital Authority; Sara Hollis from Ascension Saint Thomas; Srividhya Asuri from University of Kentucky Center for Clinical and Translational Science; Scott M. Carey from Johns Hopkins University; Sashah Damier from Nova Southeastern University; Todd Miller from Children’s Hospital Colorado; Izabelle Humes from Oregon Health & Science University; Meredith Nahm Zozus from University of Texas Health Science Center at San Antonio; Theresa Ekblad, Laurie Freitag, and Anthony Castillo from Essentia Institute of Rural Health; and the 35 other respondents to the barriers study.

Contributor Information

Alex C Cheng, Department of Biomedical Informatics, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States; Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Cathy Shyr, Department of Biomedical Informatics, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States; Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Adam Lewis, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Francesco Delacqua, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Teresa Bosler, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Mary K Banasiewicz, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Robert Taylor, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Christopher J Lindsell, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Paul A Harris, Department of Biomedical Informatics, Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States; Vanderbilt Institute of Clinical and Translational Research, Vanderbilt University Medical Center, Nashville, TN 37203, United States.

Author contributions

Alex Cheng (Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Validation, Visualization, Writing—original draft, Writing—review & editing), Cathy Shyr (Investigation, Methodology, Writing—review & editing), Adam Lewis (Data curation, Project administration, Resources, Writing—review & editing), Francesco Delacqua (Data curation, Resources, Software, Writing—review & editing), Teresa Bosler (Project administration, Resources, Writing—review & editing), Mary Katie Banasiewicz (Data curation, Resources, Writing—review & editing), Robert Taylor (Conceptualization, Data curation, Methodology, Project administration, Software, Supervision, Writing—review & editing), Christopher J. Lindsell (Conceptualization, Funding acquisition, Investigation, Supervision, Writing—review & editing), and Paul Harris (Conceptualization, Funding acquisition, Investigation, Methodology, Supervision, Writing—review & editing)

Funding

This work was supported by the National Center for Advancing Translational Sciences through grants UL1TR002243, U24TR004432, and U24TR004437 and the National Library of Medicine through grant K99LM014429 and contract 75N97019P00279.

Conflicts of interest

Christopher Lindsell reports funding from NHLBI and NCATS relevant to the submitted work, funding from NIH, CDC, DoD, AstraZeneca, bioMerieux, Biomeme, Endpoint Health, Entegrion Inc, Novartis, Cytokinetics, Regeneron to institution outside of the submitted work; patents for risk stratification in sepsis and septic shock issued to CCHMC; stock options in bioscape digital unrelated to the current work; paid participation in data and safety monitoring committees; and editor in chief, Journal of Clinical and Translational Science. No other authors have relevant competing interests to disclose.

Data availability

The data underlying implementation statistics will be shared on reasonable request to the corresponding author.

References

- 1. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377-381. 10.1016/j.jbi.2008.08.010

- 2. Garza MY, Williams T, Ounpraseuth S, et al. Error rates of data processing methods in clinical research: a systematic review and meta-analysis of manuscripts identified through PubMed. Res Sq. 2023. 10.21203/rs.3.rs-2386986/v2

- 3. Brundin-Mather R, Soo A, Zuege DJ, et al. Secondary EMR data for quality improvement and research: a comparison of manual and electronic data collection from an integrated critical care electronic medical record system. J Crit Care. 2018;47:295-301. 10.1016/j.jcrc.2018.07.021

- 4. Feng JE, Anoushiravani AA, Tesoriero PJ, et al. Transcription error rates in retrospective chart reviews. Orthopedics. 2020;43:e404-e408. 10.3928/01477447-20200619-10

- 5. Garza MY, Williams T, Myneni S, et al. Measuring and controlling medical record abstraction (MRA) error rates in an observational study. BMC Med Res Methodol. 2022;22:227. 10.1186/s12874-022-01705-7

- 6. Harris PA, Taylor R, Minor BL, et al.; REDCap Consortium. The REDCap consortium: building an international community of software platform partners. J Biomed Inform. 2019;95:103208. 10.1016/j.jbi.2019.103208

- 7. Cheng AC, Duda SN, Taylor R, et al. REDCap on FHIR: clinical data interoperability services. J Biomed Inform. 2021;121:103871. 10.1016/j.jbi.2021.103871

- 8. FDA. Real-World Evidence. 2024. Accessed December 13, 2024. https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence

- 9. 21st Century Cures Act: Interoperability, Information Blocking, and the ONC Health IT Certification Program. Federal Register. 2020. Accessed June 26, 2023. https://www.federalregister.gov/documents/2020/05/01/2020-07419/21st-century-cures-act-interoperability-information-blocking-and-the-onc-health-it-certification

- 10. Bernard GR, Harris PA, Pulley JM, et al. A collaborative, academic approach to optimizing the national clinical research infrastructure: the first year of the trial innovation network. J Clin Transl Sci. 2018;2:187-192. 10.1017/cts.2018.319

- 11. Shah M, Culp M, Gersing K, et al. Early vision for the CTSA program trial innovation network: a perspective from the national center for advancing translational sciences. Clin Transl Sci. 2017;10:311-313. 10.1111/cts.12463

- 12. Wilkins CH, Edwards TL, Stroud M, et al. The recruitment innovation center: developing novel, person-centered strategies for clinical trial recruitment and retention. J Clin Transl Sci. 2021;5:e194. 10.1017/cts.2021.841

- 13. Cheng AC, Banasiewicz MK, Johnson JD, et al. Evaluating automated electronic case report form data entry from electronic health records. J Clin Transl Sci. 2023;7:e29. 10.1017/cts.2022.514

- 14. Garza MY, Spencer C, Hamidi M, et al. Comparing the accuracy of traditional vs FHIR-based extraction of electronic health record data for two clinical trials. In: Digital Health and Informatics Innovations for Sustainable Health Care Systems. IOS Press; 2024:1368-1372.

- 15. Weiskopf NG, Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20:144-151. 10.1136/amiajnl-2011-000681

- 16. Sheikhalishahi S, Miotto R, Dudley JT, et al. Natural language processing of clinical notes on chronic diseases: systematic review. JMIR Med Inform. 2019;7:e12239. 10.2196/12239

- 17. Yim W-W, Yetisgen M, Harris WP, et al. Natural language processing in oncology: a review. JAMA Oncol. 2016;2:797-804. 10.1001/jamaoncol.2016.0213

- 18. Reinecke I, Zoch M, Le, et al. The usage of OHDSI OMOP—a scoping review. In: German Medical Data Sciences 2021: Digital Medicine: Recognize—Understand—Heal. IOS Press; 2021:95-103.

- 19. Qualls LG, Phillips TA, Hammill BG, et al. Evaluating foundational data quality in the national Patient-Centered clinical research network (PCORnet®). EGEMS (Wash DC). 2018;6:3. 10.5334/egems.199

- 20. NOT-OD-19-122: Fast Healthcare Interoperability Resources (FHIR) Standard. Accessed December 4, 2024. https://grants.nih.gov/grants/guide/notice-files/NOT-OD-19-122.html

- 21. NOT-OD-20-146: Accelerating Clinical Care and Research through the Use of the United States Core Data for Interoperability (USCDI). Accessed December 4, 2024. https://grants.nih.gov/grants/guide/notice-files/NOT-OD-20-146.html

- 22. Duda SN, Kennedy N, Conway D, et al. HL7 FHIR-based tools and initiatives to support clinical research: a scoping review. J Am Med Inform Assoc. 2022;29:1642-1653. 10.1093/jamia/ocac105

- 23. Eisenstein EL, Zozus MN, Garza MY, et al.; Best Pharmaceuticals for Children Act - Pediatric Trials Network Steering Committee. Assessing clinical site readiness for electronic health record (EHR)-to-electronic data capture (EDC) automated data collection. Contemp Clin Trials. 2023;128:107144. 10.1016/j.cct.2023.107144
