Abstract

When multiple objects are simultaneously present in a scene, the visual system must properly integrate the features associated with each object. It has been proposed that this “binding problem” is solved by selective attention to the locations of the objects [Treisman, A. M. & Gelade, G. (1980) Cognit. Psychol. 12, 97–136]. If spatial attention plays a role in feature integration, it should do so primarily when object location can serve as a binding cue. Using functional MRI (fMRI), we show that regions of the parietal cortex involved in spatial attention are more engaged in feature conjunction tasks than in single feature tasks when multiple objects are shown simultaneously at different locations but not when they are shown sequentially at the same location. These findings suggest that the spatial attention network of the parietal cortex is involved in feature binding but only when spatial information is available to resolve ambiguities about the relationships between object features.

Objects are defined by a multitude of features, such as color, shape, and motion, with each of the features processed by a host of visual areas located in ventral and dorsal regions of the cerebral cortex (1). The mechanism by which the brain integrates such distributed neural information to form a cohesive perception of objects is still unknown. Treisman and Gelade (2), Treisman (3), and others (4, 5) have proposed that the different features of each object are neurally bound together by means of attention to the object's location. Spatial attention is thought to be particularly critical for proper binding whenever there are multiple objects simultaneously present in the scene. It is under such conditions that the relationships between object features are most ambiguous, and hence more prone to conjunction errors (3, 4).

Although spatial attention figures prominently in several models of feature binding, there is as yet little neurological evidence to support this location-based hypothesis. The parietal cortex is a likely candidate substrate given that it has been associated with the representation and manipulation of spatial information (6–10). However, the neural evidence to date is equivocal about the role of the parietal cortex in feature binding. Although some neuropsychological studies support the hypothesis that the parietal cortex is involved in feature integration (11–13), others do not (14). In addition, previous functional imaging studies have either shown no evidence for parietal cortex involvement in feature conjunction (15–17) or have produced inconclusive evidence (18–20) because of potential confounds such as task difficulty and/or eye movements (19, 21).

To demonstrate that the parietal cortex plays a role in visual feature binding, and to buttress the claim that this role is related to spatial attention, three criteria should be fulfilled. First, the region(s) of the parietal cortex involved in spatial attention must be localized, because the parietal lobe has also been associated with nonspatial functions (19, 22–24). Second, the same parietal region(s) should show conjunction-related activation (i.e., be more engaged in conditions requiring integration of features than in conditions requiring only single feature judgments, even after controlling for task difficulty and eye movements). Third, the conjunction-related activation should occur primarily when multiple objects are simultaneously present in the visual scene, because it is under such conditions that the relationship between object features is ambiguous (4, 12, 13). In other words, the activation should be specifically observed under conditions in which spatial attention can assist proper feature binding. Here we report evidence from a series of fMRI experiments that specific regions of the right parietal cortex satisfy all three criteria.

Methods

Subjects.

Subjects were healthy, right-handed individuals, free of any history of neurological or psychiatric problems. All subjects gave written informed consent before participating in the study. This study was approved by the Human Investigations Committee of the Yale University School of Medicine. Ten subjects (six males, four females, ages 21–36) participated in the first experiment, and two other groups of 15 subjects participated in the second experiment (eight males, ages 19–30) and the working memory load control experiment (six males, ages 20–37).

Behavioral Tasks.

In the first experiment (spatial attention localizer task), subjects performed feature-matching tasks based on the shape (S) or location (L) of objects. Stimuli were identical across conditions; only the feature judgment changed. The task was presented in a block design, with six trials/block (21.3 s) and nine blocks/fMRI run. At the start of each block, subjects were cued for 2 s to the feature judgment required for that block. The stimulus set consisted of five snowflake shapes (3.75° in diameter), five colors, and five positions. Two snowflakes of different shapes, colors, and positions were first shown for 1,080 ms, followed by a mask for 40 ms and then by a test snowflake presented for 1,080 ms (Fig. 1A). Subjects determined whether the test snowflake matched either of the two sample snowflakes in the attended feature. Two-thirds of the trials were matches. Location judgments were made relative to a white frame (0.25° thick, 12.6° wide, and 10.8° high). To increase the difficulty of the location judgment, the position of the white frame in the test display was jittered by ≈1.5° relative to its position in the sample display. Subjects practiced before the scanning session until they reached a predefined sensitivity criterion (A′ = 0.8) in all conditions.
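The A′ sensitivity criterion used here (and the A′ values reported in Results) can be illustrated with the widely used nonparametric formula attributed to Grier, computed from the hit rate (match trials answered “match”) and the false-alarm rate (nonmatch trials answered “match”). The text does not state which A′ formula was applied, so this Python sketch is an assumption, as is reading the criterion as A′ ≥ 0.8; the helper names and condition labels are hypothetical.

```python
def a_prime(hit_rate: float, fa_rate: float) -> float:
    """Nonparametric sensitivity A' from hit and false-alarm rates."""
    if not (0.0 <= hit_rate <= 1.0 and 0.0 <= fa_rate <= 1.0):
        raise ValueError("rates must lie in [0, 1]")
    h, f = hit_rate, fa_rate
    if h == f:
        return 0.5  # match and nonmatch trials are not discriminated
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    # Below-chance case: mirror-image form of the same formula
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))


def meets_criterion(rates_by_condition: dict, criterion: float = 0.8) -> bool:
    """True if every condition's A' reaches the practice criterion.

    rates_by_condition maps a condition label to a (hit_rate, fa_rate) pair;
    "reaching" the criterion is taken to mean A' >= criterion (assumed).
    """
    return all(a_prime(h, f) >= criterion for h, f in rates_by_condition.values())


print(round(a_prime(0.90, 0.20), 3))  # hits 90%, false alarms 20%; prints 0.913
```

A practice session would then continue until, for example, `meets_criterion({"shape": (0.90, 0.20), "location": (0.85, 0.30)})` returns `True`.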

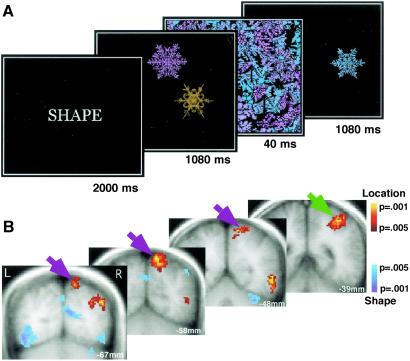

Fig. 1.

Definition of spatial attention ROIs. (A) Trial design. Each block of trials began with a screen instructing the subjects which feature to attend. In each trial, two snowflake objects were presented, followed by a mask and a test object. Subjects determined whether the test matched one of the two sample objects in the attended feature (shape or location). Object colors were uninformative for the shape and location judgments and were used for pilot experiments of feature conjunctions (data not shown). (B) Brain activation for location (red) compared to shape judgment (blue). Location judgment activated two regions of the parietal cortex: anterior intra-parietal (green arrow) and superior parietal (BA7) cortex (purple arrow).

In the second experiment (feature conjunction experiment), subjects determined whether a test object matched either of two previously presented sample objects in shape, color, or the combination of shape and color (Fig. 2A). The stimulus set consisted of five novel geometric shapes and five equiluminant colors. The sample objects were presented either simultaneously or sequentially. In the sequential presentation, the two sample objects (each ≈2.2° in diameter) were presented successively at fixation for 140 ms each, separated by a 300-ms blank interval to minimize interference effects (25). In the simultaneous presentation, the two samples were presented together for 180 ms above and below the fixation point, with each object centered 0.5° from fixation. Objects in the simultaneous presentation were smaller (≈0.75° in diameter) than in the sequential presentation to equate the spatial extent of attention. Although the two presentation modes differ in low-level physical stimulation, data analysis was carried out primarily within each presentation mode to avoid confounds associated with these stimulus differences (see below). For both presentation modes, the sample objects were followed by a mask (250 ms) and then a test object (1,100 ms). The test object was randomly jittered within a 0.5°-radius circle around the fixation point to prevent its position from matching the locations of the sample objects in either presentation mode. This level of jitter ensured highly comparable overlap between the test and sample objects in the sequential and simultaneous displays. All objects in all conditions were presented within the fovea (2° of visual angle), and all sample objects were presented for 180 ms or less to minimize eye movements. The trials were blocked (seven 3-s trials/block, 12 blocks/fMRI run). At the start of each block, an instruction screen with the words “shape only,” “color only,” or “shape and color” was presented for 2 s to cue the subjects to the feature judgment required for that block.
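As a check on the timing described above, the following Python sketch lays out the two trial types event by event and computes block and run length. It assumes that the listed events follow one another without gaps and that there were no fixation periods between blocks; neither point is stated in the text, and the names are hypothetical.

```python
TRIAL_SLOT_MS = 3000  # each trial occupied 3 s (from the text)

# (event, duration in ms) for each presentation mode, from the text
SEQUENTIAL = [("sample 1", 140), ("blank", 300), ("sample 2", 140),
              ("mask", 250), ("test", 1100)]
SIMULTANEOUS = [("samples", 180), ("mask", 250), ("test", 1100)]


def timeline(events):
    """Return (label, onset_ms, offset_ms) tuples for back-to-back events."""
    t, out = 0, []
    for label, duration in events:
        out.append((label, t, t + duration))
        t += duration
    return out


def stimulus_time(events):
    """Total stimulus time within one trial, in ms."""
    return sum(duration for _, duration in events)


def block_duration_s(n_trials=7, trial_s=3.0, cue_s=2.0):
    """2-s instruction screen followed by seven 3-s trials."""
    return cue_s + n_trials * trial_s


def run_duration_s(n_blocks=12):
    """Assumes blocks follow one another with no fixation periods in between."""
    return n_blocks * block_duration_s()


for name, events in [("sequential", SEQUENTIAL), ("simultaneous", SIMULTANEOUS)]:
    print(name, timeline(events))
```

Under these assumptions both trial types end well inside the 3-s slot (1,930 ms sequential, 1,530 ms simultaneous), leaving the remainder for responses, and a 12-block run lasts 276 s.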

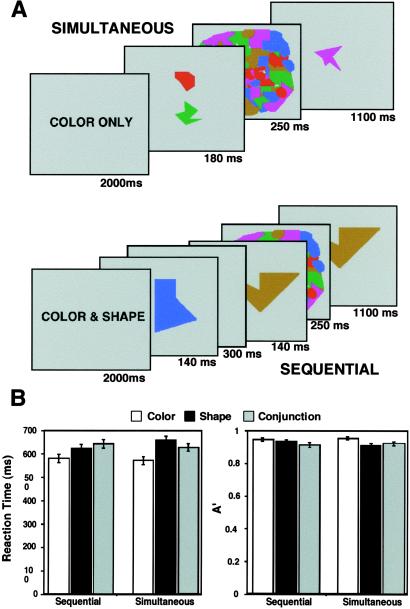

Fig. 2.

Experiment 2. (A) Trial design. Each block of trials began with a screen instructing the subjects which feature(s) to attend. In the simultaneous presentation trials, the two sample objects were spatially separated but shown simultaneously, whereas in the sequential presentation trials, the two objects were shown successively at the fixation point. The sample objects were followed by a mask and then a test object. (B) Behavioral performance during the fMRI sessions.

For each condition, two-thirds of the trials were match trials [the test object matched one of the two sample objects in the relevant feature(s)], and the remaining third were nonmatch trials. For the single feature conditions, the test object in nonmatch trials had one of the three remaining colors or shapes (of the set of five) for the feature of interest, and its other feature could come equally from either of the two sample objects or from the rest of the set. For the conjunction conditions, 80% of the nonmatch test objects combined the feature of one sample object with the complementary feature of the other sample object, whereas in the remaining 20% of nonmatch trials one of the two features was novel. All six block types (three feature judgments × two presentation modes) were presented within each fMRI run. Subjects practiced before the scanning session until they reached a predefined sensitivity criterion (A′ = 0.8) in all conditions.
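The nonmatch rules above can be sketched as a small generator of test objects, with each object coded as a (shape, color) pair of indices 0–4. This Python sketch is illustrative only: it assumes the two samples differ in both shape and color (stated for experiment 1 but not for experiment 2), draws the irrelevant feature of single-feature nonmatch tests uniformly from all five values (one reading of “equally from either sample or the rest of the set”), and uses hypothetical function names.

```python
import random

N_VALUES = 5  # five shapes and five colors, indexed 0..4


def make_samples(rng):
    """Two sample objects (shape, color) that differ in both features (assumed)."""
    s1, s2 = rng.sample(range(N_VALUES), 2)
    c1, c2 = rng.sample(range(N_VALUES), 2)
    return (s1, c1), (s2, c2)


def conjunction_nonmatch(samples, rng, p_recombine=0.8):
    """Nonmatch test object for the conjunction condition."""
    (s1, c1), (s2, c2) = samples
    if rng.random() < p_recombine:
        # Recombination lure: shape of one sample with the color of the other
        return rng.choice([(s1, c2), (s2, c1)])
    # Otherwise exactly one feature is novel; the other comes from a sample
    novel_shapes = [s for s in range(N_VALUES) if s not in (s1, s2)]
    novel_colors = [c for c in range(N_VALUES) if c not in (c1, c2)]
    base_shape, base_color = rng.choice(samples)
    if rng.random() < 0.5:
        return (rng.choice(novel_shapes), base_color)
    return (base_shape, rng.choice(novel_colors))


def single_feature_nonmatch(samples, feature, rng):
    """Nonmatch test object when only `feature` matters (0 = shape, 1 = color)."""
    used = {obj[feature] for obj in samples}
    relevant = rng.choice([v for v in range(N_VALUES) if v not in used])
    other = rng.randrange(N_VALUES)  # assumption: irrelevant feature drawn uniformly
    return (relevant, other) if feature == 0 else (other, relevant)


rng = random.Random(0)
samples = make_samples(rng)
print(samples, conjunction_nonmatch(samples, rng))
```

Because the recombination lures contain only features that were present in the samples, they can be rejected only by checking how the features were combined, which is the point of the 80% proportion.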

Working Memory Load Control Experiment.

Fifteen subjects were scanned while performing a visual working memory load task. In this event-related experiment, subjects encoded a varying number of sample objects (one to four) shown at any of the four corners of an imaginary square surrounding the fixation point (corner-to-fixation distance: 1.2°). The gray-scale objects (1° wide) were drawn from a set of six novel, geometrically shaped objects similar to those used in experiment 2. Each trial began with a small fixation cross for 2 s, followed by the sample display for 100 ms, the small fixation cross for another 1,200 ms, and the test display for 2 s, during which subjects responded. Finally, a large fixation cross was presented for 8.7 s before the next trial began (total trial duration: 14 s). In the test display, a single object was presented at one of the previously occupied positions, and subjects indicated whether it was identical to the sample object shown at that position. The order of set sizes was randomized. There were four trials of each set size/fMRI run, and each subject performed four runs/fMRI session.
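The trial structure of the load experiment can be summarized in a short Python sketch that checks the 14-s trial length and builds one randomized run. The phase durations and the four-trials-per-set-size scheme come from the text; how corners and test objects were actually chosen is not described, so the random corner selection and the helper names are hypothetical, and match/nonmatch assignment is not modeled.

```python
import random

# Trial phases of the load experiment, in ms (from the text)
PHASES_MS = [("small fixation", 2000), ("sample display", 100), ("delay", 1200),
             ("test display / response", 2000), ("large fixation", 8700)]
CORNERS = ["upper left", "upper right", "lower left", "lower right"]


def trial_duration_ms():
    return sum(duration for _, duration in PHASES_MS)


def run_duration_s(n_trials=16):
    """Four set sizes x four trials per run, back to back."""
    return n_trials * trial_duration_ms() / 1000.0


def make_run(rng, set_sizes=(1, 2, 3, 4), trials_per_size=4):
    """One run: four trials per set size, in random order."""
    order = [n for n in set_sizes for _ in range(trials_per_size)]
    rng.shuffle(order)
    run = []
    for n in order:
        occupied = rng.sample(CORNERS, n)  # distinct corners hold the samples
        probe = rng.choice(occupied)       # test appears at an occupied corner
        run.append({"set_size": n, "occupied": occupied, "probe": probe})
    return run


print(trial_duration_ms(), run_duration_s())
```

With 16 trials of 14 s each, a run lasts 224 s, not counting any lead-in volumes.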

Imaging Methods.

Subjects were scanned with a 1.5 T GE MRI system with resonant gradients for echo-planar imaging. T1-weighted structural images were first acquired by using conventional parameters. In experiment 1, image acquisition used a gradient echo single-shot sequence with echo time (TE): 45 ms, flip angle: 60°, and repetition time (TR): 1,500 ms. Each image was 128 × 64 pixels over a field of view of 40 × 20 cm (in-plane resolution: 3.12 mm). Eight 8-mm-thick slices spanning the parietal and occipital lobes were acquired perpendicular to the anterior–posterior commissure (AC-PC) line. Stimuli were presented by using RSVP software (Williams, P. and Tarr, M. J. RSVP: Experimental Control Software for MacOS, http://psych.umb.edu/rsvp/) with a Macintosh PowerPC 7100 and back-projected from an LCD panel onto a screen that the supine subject viewed in the scanner through a prism mirror. The echo-planar imaging parameters for experiment 2 were as follows: nineteen 8-mm-thick slices perpendicular to the AC-PC line, TR: 2,000 ms, TE: 60 ms, flip angle: 60°, 64 × 64 pixels over a field of view of 20 × 20 cm. Those for the load control experiment were the same as for experiment 2 except that fourteen 8-mm-thick slices were acquired parallel to the AC-PC line.
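The voxel dimensions implied by these parameters follow from dividing the field of view by the matrix size. The Python sketch below tabulates them for the three protocols; the slab coverage assumes contiguous slices with no inter-slice gap, which the text does not state, and the names are hypothetical.

```python
def in_plane_resolution_mm(fov_cm, matrix):
    """Pixel size along each in-plane axis = field of view / matrix size."""
    return tuple(10.0 * f / m for f, m in zip(fov_cm, matrix))


PROTOCOLS = {  # parameters from the text
    "experiment 1": {"fov_cm": (40, 20), "matrix": (128, 64), "slices": 8, "thickness_mm": 8},
    "experiment 2": {"fov_cm": (20, 20), "matrix": (64, 64), "slices": 19, "thickness_mm": 8},
    "load control": {"fov_cm": (20, 20), "matrix": (64, 64), "slices": 14, "thickness_mm": 8},
}


def summarize(p):
    dx, dy = in_plane_resolution_mm(p["fov_cm"], p["matrix"])
    return {
        "voxel_mm": (dx, dy, p["thickness_mm"]),
        "voxel_mm3": dx * dy * p["thickness_mm"],
        "coverage_mm": p["slices"] * p["thickness_mm"],  # assumes no slice gap
    }


for name, params in PROTOCOLS.items():
    print(name, summarize(params))
```

All three protocols give 3.125-mm in-plane pixels (reported above as 3.12 mm) and 78.125-mm³ voxels.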

Data Analysis.

The first two images of each block were discarded from further analysis to account for the delay in the hemodynamic response. Statistical parametric maps of blood oxygenation level-dependent (BOLD) activation for each subject were created by using a skew-corrected percentage signal difference (10). The anatomical and Gaussian-filtered (full width at half maximum = 4.0 mm) BOLD images for each subject were transformed into standardized Talairach space. The resulting maps from all subjects were superimposed to create cluster-filtered (six contiguous pixels) composite maps. For each composite pixel, a t test assessed whether the mean percentage signal change across subjects differed significantly from zero. The composite maps for experiment 1 show pixels with P values < 0.001 (uncorrected for multiple comparisons). For experiment 2, a region of interest (ROI) analysis was also performed based on the parietal regions activated in experiment 1. The mean percentage signal change for each ROI of each subject was first computed, and differences in the group mean percentage change between the conjunction condition and each of the two single feature conditions were tested with t tests (significance level P < 0.05), separately for the sequential and simultaneous presentations. To determine whether the ROI activations with the conjunction judgments could be an artifact of distinct sectors of the ROI responding to each of the two conjunction vs. single-feature comparisons, statistical parametric maps of the two comparisons (conjunction minus shape and conjunction minus color) were overlaid. Individual ROI voxels were considered significantly activated by both conjunction vs. single feature comparisons if they survived a P < 0.05 threshold in each of the two comparison maps.
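The group statistics and the voxelwise conjunction criterion described above can be sketched with NumPy and SciPy. This is not the authors' pipeline: it substitutes a plain percentage signal change for the skew-corrected measure of ref. 10, treats each slice as a 2D pixel map already in Talairach space, applies the six-pixel cluster filter to a thresholded map with 4-connected neighbors (one reading of the text), and uses hypothetical function names. The conjunction mask keeps a pixel only if both conjunction-minus-single-feature maps pass P < 0.05 with a positive effect.

```python
import numpy as np
from scipy import ndimage, stats


def percent_signal_change(task, baseline):
    """Plain percentage signal change (stand-in for the skew-corrected measure)."""
    return 100.0 * (task - baseline) / baseline


def group_map(psc, alpha):
    """Pixelwise one-sample t test of the mean % change against zero.

    psc: array (n_subjects, x, y) of per-subject maps in a common space.
    Returns the t map and a boolean map of pixels with two-tailed p < alpha.
    """
    t, p = stats.ttest_1samp(psc, 0.0, axis=0)
    return t, p < alpha


def cluster_filter(mask, min_size=6):
    """Keep only connected clusters (4-connected in 2D) of >= min_size pixels."""
    labels, _ = ndimage.label(mask)
    sizes = np.bincount(labels.ravel())
    keep = sizes >= min_size
    keep[0] = False  # label 0 is the background
    return keep[labels]


def conjunction_mask(conj_minus_shape, conj_minus_color, alpha=0.05):
    """Pixels where conjunction > shape AND conjunction > color, each at p < alpha."""
    t1, sig1 = group_map(conj_minus_shape, alpha)
    t2, sig2 = group_map(conj_minus_color, alpha)
    return sig1 & sig2 & (t1 > 0) & (t2 > 0)
```

Requiring both comparisons to pass at each pixel is what rules out the possibility that separate parts of an ROI drive the two conjunction vs. single-feature effects.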
For the working memory load control experiment, only images acquired 2 to 8 s after the sample display in correct trials were used for further analysis. The fMRI data were otherwise analyzed as in experiment 2, using the ROIs defined in experiment 1.
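The 2- to 8-s analysis window can be made concrete with a short Python sketch that maps each correct trial to the volumes falling inside it. It assumes that volume k is acquired at time k × TR (TR = 2 s), that the window is measured from sample display onset, and that the sample appears 2 s after trial onset (after the initial fixation cross); these timing conventions and the function names are hypothetical.

```python
import math


def volumes_for_trial(sample_onset_s, tr_s=2.0, window=(2.0, 8.0)):
    """Indices k of volumes acquired at t = k * TR within [onset + 2 s, onset + 8 s]."""
    start = sample_onset_s + window[0]
    stop = sample_onset_s + window[1]
    first = math.ceil(start / tr_s - 1e-9)   # small tolerance for float rounding
    last = math.floor(stop / tr_s + 1e-9)
    return list(range(first, last + 1))


def pooled_volumes(trials, tr_s=2.0):
    """trials: iterable of (sample_onset_s, correct); error trials are skipped."""
    volumes = []
    for onset, correct in trials:
        if correct:
            volumes.extend(volumes_for_trial(onset, tr_s))
    return volumes


# First trial starts at 0 s, so its sample appears at 2 s; trials are 14 s long
print(pooled_volumes([(2.0, True), (16.0, False), (30.0, True)]))
```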

Results

Experiment 1: Isolation of Parietal Cortical Regions Involved in Spatial Attention.

In keeping with previous studies (7, 26, 27), we defined regions preferentially involved in spatial attention as those that were more activated in the location matching task than in the shape matching task. The areas preferentially engaged by the spatial attention task were in the superior parietal cortex (Brodmann area 7) and intra-parietal sulcus (IPS) (Fig. 1B). The Talairach coordinates of the center of mass of activation were x = +40, y = −39, z = +47 for the intra-parietal cortex and x = +16, y = −58, z = +56 for the superior parietal cortex. These results are consistent with previous imaging studies of spatial attention (7, 26, 27). In addition, the right parietal cortex was more activated than the left, significantly so for the superior parietal cortex and marginally for the intra-parietal cortex (paired t tests; superior parietal cortex: t = 3.78, P = 0.004; intra-parietal cortex: t = 1.9, P = 0.088), replicating previous evidence of a right-hemisphere dominance for spatial attention (8, 26, 28). The greater parietal activation with the spatial attention task than with the object identity task is unlikely to be due to task difficulty, as indicated by sensitivity (A′) and reaction time (RT) data acquired outside the scanner. A′ did not differ between tasks (location: 0.94 ± 0.02; shape: 0.94 ± 0.01; paired t test, P = 0.70), and location judgments were, if anything, faster than shape judgments (RT for location: 639 ± 17 ms; shape: 718 ± 15 ms; paired t test, P < 0.001).
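The lateralization comparison is a paired t test across subjects (right vs. left ROI activation). The Python sketch below shows that test with SciPy and converts a t value to a two-tailed P, assuming df = n − 1 = 9 for the ten subjects of experiment 1; the subject-level values are not reported, so only the conversion of the reported t values is illustrated, and the function names are hypothetical.

```python
import numpy as np
from scipy import stats


def paired_t(right, left):
    """Paired t test of right- vs. left-hemisphere ROI activation (one value per subject)."""
    return stats.ttest_rel(np.asarray(right, float), np.asarray(left, float))


def two_tailed_p(t_value, n_subjects):
    """Two-tailed P for a paired (or one-sample) t statistic with df = n - 1."""
    return 2.0 * stats.t.sf(abs(t_value), df=n_subjects - 1)


# Reported lateralization statistics, experiment 1 (ten subjects)
for roi, t in [("superior parietal", 3.78), ("intra-parietal", 1.9)]:
    print(roi, round(two_tailed_p(t, 10), 3))
```

Under that assumption, the reported t values convert to P values close to those given above.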

Experiment 2: Testing the Role of the Parietal Cortex in Visual Feature Conjunction.

This experiment was designed to test two predictions: (i) the parietal cortex ROIs should be more activated during feature conjunction tasks than during single feature judgment tasks, and (ii) this should occur primarily when multiple objects are presented simultaneously at different locations in the visual scene. To test these predictions, we asked subjects to perform a feature judgment task that involved determining whether a test object matched either of two sample objects in shape, color, or the combination of shape and color. In the simultaneous condition, the two objects were presented simultaneously at different locations, whereas in the sequential condition they were shown sequentially at the same location (Fig. 2A). This experimental strategy was inspired by the study design of Friedman-Hill and coworkers (12), who compared feature binding in sequential vs. simultaneous displays in a patient with parietal lesions. Our experiment is also similar in design to the distractor interference study of Kastner and coworkers (29) in comparing sequential vs. simultaneous displays.

Behavioral Performance.

Performance (reaction time and A′) was monitored during the fMRI session to assess task difficulty (Fig. 2B). Both measures indicated that the conjunction task was not harder than the two single feature tasks, at least for the simultaneous presentations. For A′, a 2 × 3 ANOVA with feature judgment (shape, color, and conjunction) and presentation mode (simultaneous, sequential) as factors revealed a main effect of feature (F2,28 = 10.0, P = 0.0005) and a feature × presentation interaction (F2,28 = 3.9, P = 0.03) but no main effect of presentation (F1,14 = 0.4, P = 0.53). More importantly, the conjunction task was not harder than both single feature tasks: for both sequential and simultaneous presentations, the conjunction judgment was more difficult than color (post hoc Scheffé tests: P = 0.005 and P = 0.001, respectively) but not shape (P = 0.11 and P = 0.65, respectively). The same qualitative pattern, with shape and conjunction judgments more difficult than color judgments, holds when accuracy is used as the measure of performance (simultaneous presentation: 94.0% ± 1.6 for color, 86.3% ± 1.6 for shape, and 86.9% ± 2.2 for conjunction; sequential presentation: 92.7% ± 1.3 for color, 87.5% ± 1.6 for shape, and 89.6% ± 2.2 for conjunction).
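The degrees of freedom reported above (F2,28 and F1,14 for 15 subjects) correspond to a fully within-subject two-way ANOVA, in which each effect is tested against its own subject-by-effect interaction. The NumPy/SciPy sketch below implements that textbook decomposition; it applies no sphericity correction, the text does not say which software or corrections were used, and the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def rm_anova_2way(y):
    """Two-way fully within-subject ANOVA.

    y has shape (n_subjects, a, b): one score (e.g., A') per subject per cell,
    with factor A = feature judgment and factor B = presentation mode.
    Returns {effect: (F, df_effect, df_error, p)}; no sphericity correction.
    """
    n, a, b = y.shape
    m = y.mean()                    # grand mean
    m_s = y.mean(axis=(1, 2))       # subject means, shape (n,)
    m_a = y.mean(axis=(0, 2))       # factor A means, shape (a,)
    m_b = y.mean(axis=(0, 1))       # factor B means, shape (b,)
    m_ab = y.mean(axis=0)           # A x B cell means, shape (a, b)
    m_sa = y.mean(axis=2)           # subject x A means, shape (n, a)
    m_sb = y.mean(axis=1)           # subject x B means, shape (n, b)

    ss_a = n * b * np.sum((m_a - m) ** 2)
    ss_b = n * a * np.sum((m_b - m) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + m) ** 2)
    # Error terms: subject x A, subject x B, and subject x A x B interactions
    ss_sa = b * np.sum((m_sa - m_s[:, None] - m_a[None, :] + m) ** 2)
    ss_sb = a * np.sum((m_sb - m_s[:, None] - m_b[None, :] + m) ** 2)
    resid = (y - m_sa[:, :, None] - m_sb[:, None, :] - m_ab[None, :, :]
             + m_s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - m)
    ss_sab = np.sum(resid ** 2)

    def f_test(ss_eff, df_eff, ss_err, df_err):
        f = (ss_eff / df_eff) / (ss_err / df_err)
        return f, df_eff, df_err, stats.f.sf(f, df_eff, df_err)

    return {
        "feature": f_test(ss_a, a - 1, ss_sa, (a - 1) * (n - 1)),
        "presentation": f_test(ss_b, b - 1, ss_sb, (b - 1) * (n - 1)),
        "feature x presentation": f_test(ss_ab, (a - 1) * (b - 1),
                                         ss_sab, (a - 1) * (b - 1) * (n - 1)),
    }
```

With 15 subjects, three feature judgments, and two presentation modes, the degrees of freedom come out as (2, 28), (1, 14), and (2, 28).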

Could subjects have performed the conjunction conditions simply by making single feature matches instead of solving for the specific conjunction of features? Given the trial proportions (two-thirds match and one-third nonmatch trials, with 80% of the nonmatch test objects combining the feature of one sample object with the complementary feature of the other), subjects relying only on single feature strategies in the conjunction condition would be expected to achieve an A′ of 0.865. The observed A′ values for the conjunction condition in both the sequential (0.916) and simultaneous (0.919) presentations were significantly above 0.865 (t tests: t = 3.26, P = 0.003 for sequential; t = 5.5, P < 0.001 for simultaneous). Thus, the subjects' performance in the conjunction conditions cannot be accounted for simply by single feature judgments.
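The comparison against the single-feature benchmark is a one-sample t test of subjects' conjunction A′ values against the fixed value 0.865. The Python sketch below assumes a one-tailed test (the prediction is directional, but the text does not state the tail) with df = n − 1; the subject-level A′ values are not reported, and the function name is hypothetical.

```python
import numpy as np
from scipy import stats

SINGLE_FEATURE_A_PRIME = 0.865  # benchmark A' for a single-feature strategy (from the text)


def t_against_benchmark(a_primes, benchmark=SINGLE_FEATURE_A_PRIME):
    """One-sample t test of subjects' A' values against a fixed benchmark.

    Returns (t, one-tailed p) for H1: mean A' > benchmark (direction assumed).
    """
    x = np.asarray(a_primes, dtype=float)
    n = x.size
    t = (x.mean() - benchmark) / (x.std(ddof=1) / np.sqrt(n))
    return t, stats.t.sf(t, df=n - 1)
```

For the 15 subjects of experiment 2, the tail probability for a given t would be `stats.t.sf(t, 14)`.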

The analysis of reaction times showed a pattern similar to that of A′. In the sequential presentation, the conjunction judgment took longer than both color (post hoc Scheffé test, P < 0.0001) and shape (P = 0.02), whereas in the simultaneous presentation it took longer than color (P < 0.0001) but not shape (shape judgments were in fact slower than conjunction judgments, P < 0.001). Moreover, conjunction performance did not differ between the simultaneous and sequential presentations (P = 0.94 for A′ and P = 0.24 for RT). Despite being equated in difficulty, the simultaneous conjunction condition recruited the parietal cortex more than the sequential conjunction condition (Fig. 3A). Although this result is consistent with a role for spatial attention in feature conjunction when multiple objects are presented simultaneously, it is also conceivable that low-level physical differences between the simultaneous and sequential displays, such as stimulus size and duration, contributed to this activation.

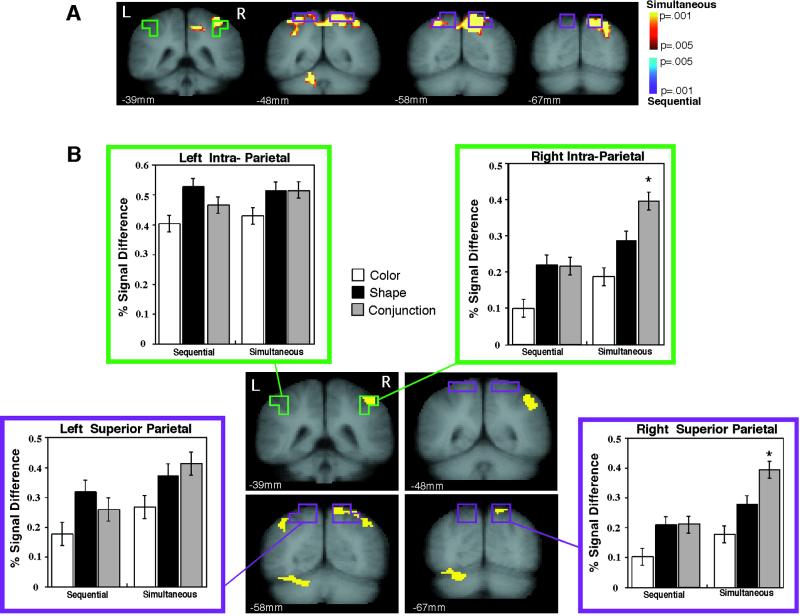

Fig. 3.

(A) Direct comparison of conjunction-related activation in the simultaneous (yellow) and sequential (blue) presentations. Green and purple boxes indicate the position of the intra- and superior parietal ROIs, respectively. The simultaneous-presentation activation largely overlaps with the parietal ROIs. (B) Activation of parietal ROIs with conjunction and single feature judgments. Both the right intra- and superior parietal ROIs were more activated during the conjunction judgments than during either of the single feature judgments in the simultaneous presentation mode only (asterisks). Yellow voxels in the statistical parametric map represent brain areas significantly more activated for the conjunction judgment than for both of the single feature judgments in the simultaneous presentation condition (voxels met the criterion of P < 0.05 for each of the two conjunction vs. single-feature comparisons). The activated voxels overlap with the ROIs.

Activation of Parietal Cortex with Feature Conjunction.

To avoid the pitfalls of directly contrasting simultaneous and sequential presentations, we compared each conjunction condition to its respective single feature conditions (shape, color), as these were physically matched to the conjunction conditions. In this analysis, the effects of feature judgment (color/shape/conjunction) and presentation mode (simultaneous/sequential) on the activation of the right and left parietal ROIs were assessed separately.

For the right hemisphere, the conjunction task engaged both parietal ROIs more than either of the two single feature judgments, but only during simultaneous object presentation (Fig. 3B). An ANOVA showed that the interaction between presentation mode and feature judgment was significant (F2,28 = 5.3, P = 0.01) in right superior parietal cortex (BA7) and marginally significant (F2,28 = 2.8, P = 0.077) in right IPS. There were no significant interactions in left BA7 (F2,28 = 1.7, P = 0.21) or left IPS (F2,28 = 1.4, P = 0.26). In the right BA7 ROI, conjunction activation was greater than activation for both color (post hoc Scheffé test, P < 0.0001) and shape (P = 0.001) during simultaneous presentations, but not during sequential presentations (conjunction > color, P = 0.003, but not shape, P = 0.99). The same pattern held for the right IPS: for simultaneous presentation, conjunction activation was greater than that for color (P < 0.0001) and shape (P = 0.01), whereas for sequential presentation it was only marginally greater than that for color (P = 0.06) and not greater than that for shape (P = 0.99). These ROI-based findings of conjunction-enhanced activation in simultaneous displays are corroborated by voxel-based activation maps (Fig. 3B). Thus, performing a conjunction judgment on simultaneously presented items recruited the right superior parietal and intra-parietal cortices more than single feature judgments did. This conjunction-enhanced activation was not observed when objects were presented sequentially at the same location.

Working Memory Load Control Experiment.

The observation of conjunction-related activation in the simultaneous condition, but not in the sequential condition, in regions of interest defined by a spatial attention task strongly suggests that the role of the parietal cortex in feature binding is related to spatial processing. However, one way in which the conjunction conditions differed from the single feature conditions is in the number of features that had to be encoded. It is therefore possible that the parietal activation was associated not with feature integration per se but with working memory load. Although the absence of parietal activation with the conjunction condition in sequential displays argues against this possibility, we examined the effect of a parametric variation of working memory load in another group of fifteen subjects.

On each trial of this event-related fMRI design, subjects viewed a variable number (1–4) of sample objects around the fixation point for 100 ms and, after a 1,200-ms delay, determined whether a test object shown at one of the sample positions matched the sample object previously shown there. Behavioral performance acquired inside the scanner showed a main effect of load (F3,14 = 34.01, P < 0.001; accuracy: 0.97, 0.94, 0.79, and 0.69 for set sizes 1, 2, 3, and 4, respectively). Using Cowan's (30) formula for estimating the number of objects encoded, these accuracy results translate into 0.93, 1.76, 1.77, and 1.8 encoded objects for set sizes 1–4. Thus, the manipulation successfully increased visual short-term memory load. Yet there was no effect of load on the percentage signal change in either the right anterior IPS (F3,14 = 1.34, P = 0.27) or the superior parietal cortex ROI (F3,14 = 1.65, P = 0.19). Even when only considering the clear differential effect of load between one- and two-object displays, there were still no significant differences (right anterior IPS: 0.06% ± 0.05 for one object and −0.009% ± 0.05 for two objects, t = 1.8, P = 0.1; right superior parietal: 0.07% ± 0.08 for one object and 0.03% ± 0.06 for two objects, t = 0.75, P = 0.46). The absence of a load effect in these parietal ROIs is unlikely to reflect a lack of sensitivity, because other regions within the cortical attention network (10) were modulated by the load manipulation (anterior cingulate: F3,14 = 4.2, P = 0.03; lateral frontal cortex, a trend: F3,14 = 2.8, P = 0.07). Therefore, the parietal activations observed with the simultaneous conjunction condition in experiment 2 cannot be explained by a load effect.
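Cowan's formula referred to above estimates capacity as K = N × (H − FA), where N is the set size, H the hit rate, and FA the false-alarm rate. Because the text reports only overall accuracies, the accuracy-based helper below assumes equal numbers of match and nonmatch test displays, which the Methods do not state; under that assumption it need not reproduce every reported estimate. The Python function names are hypothetical.

```python
def cowan_k(set_size: int, hit_rate: float, fa_rate: float) -> float:
    """Cowan's K = N * (H - FA): estimated number of objects held in memory."""
    return set_size * (hit_rate - fa_rate)


def cowan_k_from_accuracy(set_size: int, accuracy: float) -> float:
    """Approximation assuming equal numbers of match and nonmatch probes, so that
    accuracy = (H + (1 - FA)) / 2 and hence H - FA = 2 * accuracy - 1."""
    return set_size * (2.0 * accuracy - 1.0)


print(round(cowan_k_from_accuracy(2, 0.94), 2))  # set size 2; prints 1.76
```

Because K scales accuracy by set size, a plateau in K (here near 1.8 objects) indicates that additional objects are not being encoded even as the displayed load keeps rising.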

Discussion

The findings of experiment 1 established that specific regions of the parietal cortex were preferentially activated by a spatial attention task relative to an object identity task when the stimulus presentations were identical across the two tasks. In the second experiment, we additionally showed that the parietal cortex was more activated when the objects were presented simultaneously at different locations than when they were presented sequentially at the same location, even when subjects performed the same conjunction task (Fig. 3A). These two sets of results complement each other in demonstrating that the parietal cortex is particularly tuned to spatial information, regardless of whether this spatial information is made explicit under task-driven conditions (experiment 1) or is implicitly present in the visual scene (experiment 2). These findings reinforce the well-established notion of the privileged role of the parietal cortex in the representation and manipulation of spatial information (6–10).

The fact that the same parietal regions engaged in spatial information processing are also recruited during feature conjunction is suggestive of a role for spatial attention in visual feature integration. We found that two regions of the parietal cortex involved in spatial attention, the right superior parietal cortex (BA7) and anterior intra-parietal cortex, are more active during feature conjunction judgments than during single feature judgments, but only when the visual scene contains multiple objects presented simultaneously at different locations. The results cannot be accounted for by eye movements, given the brevity of the stimulus presentations. Although task difficulty or general attentional demands can modulate parietal activity (19), and might even underlie the activation difference between color and shape judgments (Fig. 3B), they cannot account for the conjunction-specific activation, nor can working memory load differences. We conclude instead that this activation may reflect the representation or manipulation of spatial information, or spatial attention, during the conjunction task. The lateralization of this effect to the right hemisphere further reinforces this point (8, 26, 28).

These fMRI findings were foreshadowed by several models of feature binding (2–5, 31) and are supported by brain lesion studies (12, 13). The study of Friedman-Hill et al. (12) is particularly relevant because it showed that a patient with parietal cortex lesions had greater difficulty performing conjunction tasks when objects were presented simultaneously at different locations than when they were presented sequentially at the fixation point. Thus, two widely diverging approaches, brain lesion studies and fMRI, using distinct study parameters (e.g., stimulus size and duration), nevertheless converge in implicating a spatial function of the parietal cortex in visual feature integration (12, 13).

As a cautionary note, the finding that a region of parietal cortex responds to both a spatial attention manipulation and a feature integration task does not necessarily establish that the neural substrates involved in spatial attention mediate feature binding. Because the parietal cortex has been implicated in several distinct perceptual, cognitive, and motor functions (32), it is conceivable that our parietal ROIs are involved in additional functions besides spatial attention and that these other functions mediate the binding process. Although the present results cannot rule out this possibility, they at the very least demonstrate a close relationship between the neural substrates of spatial attention and feature binding.

If the spatial attention functions of the parietal cortex do promote binding, how they may achieve this is open to discussion. Some theories of feature integration propose that the parietal cortex may bind the neural activity in feature-processing areas of the temporal cortex by means of a location map that specifies the spatial relationships among features, i.e., the binding of “what” with “where” (2, 3). Other models postulate that proper feature binding requires that each object be selectively and successively (spatially) attended (4), an effect that could be mediated by the parietal cortex given its involvement in spatial shifts of attention (21). The current findings are consistent with both possibilities. However, there are recent indications that the role of the parietal cortex in feature binding may be better cast in a broader perspective: the right parietal cortex is also activated in tasks that do not require feature conjunction but that do require dissociating a target from distractors in the visual field (10). Thus, regions of the parietal cortex may be preferentially involved in parsing ambiguous neural signals arising from simultaneously presented objects (4), as when object features must be properly associated with one another in feature integration or when targets and distractors must be dissociated from each other during target search.

The conditional recruitment of the parietal cortex helps resolve previous conflicts about the involvement of this brain region in feature conjunction. For example, it accounts for the lack of parietal activation when stimuli are presented serially at the same location (15). Yet subjects evidently can still integrate features under such circumstances (see Fig. 2B), suggesting that the parietal cortex may not be recruited in all binding situations. This conclusion is consistent with behavioral work indicating that some forms of binding may occur even with few spatial cues (33, 34) or little attention (35–37). Moreover, it suggests that the parietal cortex may not be the only stage (5, 17, 30, 38, 40) or process (41, 42) involved in visual feature binding. Indeed, it has been proposed that attention (5) and the parietal cortex (31) contribute to feature binding at a relatively late stage of visual information processing. The fact that the parietal cortex was differentially activated by the type of stimulus presentation (simultaneous vs. sequential) in the current experiment may suggest an early perceptual involvement in feature binding, although the data are also consistent with a role at later, working memory stages of the task. Although our results do not pinpoint the stage of visual information processing at which the parietal cortex contributes to feature binding, they clearly establish the conditions under which it is involved, namely when location cues can be used to resolve scene ambiguity.

Acknowledgments

We thank Hedy Sarofin, Terry Hickey, Cheryl Lacadie, and Pawel Skudlarski for expert technical assistance, and Isabel Gauthier, Marvin Chun, and Randolph Blake for helpful comments on earlier versions of the manuscript. This study was supported by National Institute of Neurological Disorders and Stroke Grant NS33332.

Abbreviations

fMRI, functional MRI

ROI, region of interest

IPS, intra-parietal sulcus

This paper was submitted directly (Track II) to the PNAS office.

References

1. DeYoe, E. A. & Van Essen, D. C. (1988) Trends Neurosci. 11, 219–226.

2. Treisman, A. M. & Gelade, G. (1980) Cognit. Psychol. 12, 97–136.

3. Treisman, A. (1998) Philos. Trans. R. Soc. London B 353, 1295–1306.

4. Luck, S. J. & Ford, M. A. (1998) Proc. Natl. Acad. Sci. USA 95, 825–830.

5. Wolfe, J. M. & Cave, K. (1999) Neuron 24, 11–17.

6. Ungerleider, L. G. & Mishkin, M. (1982) in Analysis of Visual Behaviour, eds. Ingle, D. J., Goodale, M. A. & Mansfield, R. J. W. (MIT Press, Cambridge, MA), pp. 549–586.

7. Haxby, J. V., Grady, C. L., Horwitz, B., Ungerleider, L. G., Mishkin, M., Carson, R. E., Herscovitch, P., Schapiro, M. B. & Rapoport, S. I. (1991) Proc. Natl. Acad. Sci. USA 88, 1621–1625.

8. Corbetta, M., Miezin, F. M., Shulman, G. L. & Petersen, S. E. (1993) J. Neurosci. 13, 1202–1226.

9. Colby, C. L. & Goldberg, M. E. (1999) Annu. Rev. Neurosci. 22, 319–349.

10. Marois, R., Chun, M. M. & Gore, J. C. (2000) Neuron 28, 299–308.

11. Cohen, A. & Rafal, D. (1991) Psychol. Sci. 2, 106–110.

12. Friedman-Hill, S. R., Robertson, L. C. & Treisman, A. (1995) Science 269, 853–855.

13. Robertson, L., Treisman, A., Friedman-Hill, S. & Grabowecky, M. (1997) J. Cognit. Neurosci. 9, 295–317.

14. Ashbridge, E., Cowey, A. & Wade, D. (1999) Neuropsychologia 37, 999–1004.

15. Rees, G., Frackowiak, R. & Frith, C. (1997) Science 275, 835–838.

16. Elliott, R. & Dolan, R. J. (1998) Neuroimage 7, 14–22.

17. Prabhakaran, V., Narayanan, K., Zhao, Z. & Gabrieli, J. D. E. (2000) Nat. Neurosci. 3, 85–90.

18. Corbetta, M., Shulman, G. L., Miezin, F. M. & Petersen, S. E. (1995) Science 270, 802–805.

19. Wojciulik, E. & Kanwisher, N. (1999) Neuron 23, 747–764.

20. Donner, T., Kettermann, A., Diesch, E., Ostendorf, F., Villringer, A. & Brandt, S. A. (2000) Eur. J. Neurosci. 12, 3407–3414.

21. Corbetta, M., Akbudak, E., Conturo, T. E., Snyder, A. Z., Ollinger, J. M., Drury, H. A., Linenweber, M. R., Petersen, S. E., Raichle, M. E., Van Essen, D. C. & Shulman, G. L. (1998) Neuron 21, 761–773.

22. Humphreys, G. W., Romani, C., Olson, A., Riddoch, M. J. & Duncan, J. (1994) Nature (London) 372, 357–359.

23. Coull, J. T. & Nobre, A. C. (1998) J. Neurosci. 18, 7426–7435.

24. Le, T. H., Pardo, J. V. & Hu, X. (1998) J. Neurophysiol. 79, 1535–1548.

25. Chun, M. M. (1997) Percept. Psychophys. 59, 1191–1199.

26. Kohler, S., Kapur, S., Moscovitch, M., Winocur, G. & Houle, S. (1995) NeuroReport 6, 1865–1868.

27. Marois, R., Leung, H. C. & Gore, J. C. (2000) Neuron 25, 717–728.

28. Nobre, A. C., Sebestyen, G. N., Gitelman, D. R., Mesulam, M. M., Frackowiak, R. S. & Frith, C. D. (1997) Brain 120, 515–533.

29. Kastner, S., De Weerd, P., Desimone, R. & Ungerleider, L. G. (1998) Science 282, 108–111.

30. Cowan, N. (2001) Behav. Brain Sci. 24, 87–185.

31. Shadlen, M. N. & Movshon, J. A. (1999) Neuron 24, 67–77.

32. Culham, J. C. & Kanwisher, N. G. (2001) Curr. Opin. Neurobiol. 11, 157–163.

33. Duncan, J. (1984) J. Exp. Psychol. Gen. 113, 501–517.

34. Goldsmith, M. (1998) J. Exp. Psychol. Gen. 127, 189–219.

35. Houck, M. R. & Hoffman, J. E. (1986) J. Exp. Psychol. Hum. Percept. Perform. 12, 186–199.

36. Ramachandran, V. S. & Hubbard, E. M. (2001) Proc. R. Soc. London B 268, 979–983.

37. Palmeri, T. J., Blake, R., Marois, R., Flanery, M. A. & Whetsell, W. (2002) Proc. Natl. Acad. Sci. USA 99, 4127–4131.

38. Rao, S. C., Rainer, G. & Miller, E. K. (1997) Science 276, 821–824.

39. Reynolds, J. H. & Desimone, R. (1999) Neuron 24, 19–29.

40. Lamme, V. A. F. & Spekreijse, H. (2000) in The New Cognitive Neurosciences, ed. Gazzaniga, M. S. (MIT Press, Cambridge, MA), pp. 279–290.

41. Gray, C. M. (1999) Neuron 24, 31–47.

42. Lee, S. H. & Blake, R. (1999) Science 284, 1165–1168.