Abstract

Women are disproportionately subjected to the widespread social problem of street harassment. This study focuses on catcalling, a specific form of harassment characterized by unsolicited verbal or gestural comments often centered on physical appearance. To investigate the emotional and cognitive impact of this experience, we employ an immersive virtual reality (VR) paradigm wherein male participants embody a female avatar to experience street harassment firsthand. Participants’ emotional responses were assessed through both explicit self-reports, guided by Ekman’s emotion model, and implicit measures derived from a semantic analysis of their verbal reactions. Our findings reveal two primary outcomes: first, the experience elicited heightened feelings of anger and disgust, emotions frequently associated with moral disapproval; second, a significant correlation emerged between the intensity of these emotions and the subjective sense of virtual embodiment. This research also introduces an AI-driven method for simulating cognitive and neural patterns associated with the experience. These results underscore the potential of VR as a tool for promoting social safety, with significant applications for clinical and educational interventions.

Keywords: Virtual reality, Catcalling, Emotions, Virtual embodiment, Semantic analysis

Subject terms: Human behaviour, Psychology

Introduction

Gender-based violence remains a critical global issue, with street harassment being one of its most pervasive manifestations. This form of harassment frequently involves catcalling, defined as unsolicited comments and gestures directed at an individual’s physical appearance, typically perpetrated by men towards women1,2. Such behavior is understood not as a trivial interaction but as a form of microaggression causally linked to sexual objectification3. The scale of this problem is substantial; a recent survey4 of 171 harassment situations found that verbal harassment was the most common form, accounting for 42% of incidents reported by women.

In response to complex social issues, cognitive science and psychology have increasingly turned to Virtual Reality (VR) as a powerful methodological tool. Beyond its clinical applications in psychotherapy5,6, VR has proven effective for enhancing empathy and fostering prosocial behaviors7. Immersive environments allow individuals to engage in perspective-taking, experiencing situations from another person’s viewpoint, which has been shown to cultivate empathic responses and reduce aggressive tendencies8,9.

VR’s potential to influence and reshape social cognition is established in empirical research. Studies on virtual embodiment, for instance, have shown that inhabiting an avatar with different characteristics can alter implicit attitudes10–12.

Peck et al.13 found that White participants, after embodying a Black avatar, showed a reduction in implicit racial bias. This principle has been extended to the context of gender-based violence. Seinfeld et al.14 had male offenders embody a female victim of domestic violence, finding that the VR experience significantly improved their ability to recognize fear in female facial expressions—a deficit common in violent offenders15. Similarly, other studies using 360° videos and immersive scenarios of sexual harassment have reported marked increases in empathy and changes in violent attitudes among participants16.

While first-person perspectives in VR consistently elicit stronger behavioral and emotional reactions compared to third-person observation17, the impact on implicit biases remains an open question. Nonetheless, the literature suggests that virtually experiencing harassment can have tangible behavioral consequences, such as reducing conformity in subsequent obedience-related tasks18. These findings collectively affirm the potential of VR as a rehabilitative tool for enhancing emotional understanding and mitigating harmful behaviors.

Building on this foundation, the present study utilizes immersive VR to provide male participants with a firsthand experience of catcalling. While previous research has often focused on overt violence, our goal is to investigate the affective response to a more commonplace form of street harassment. We hypothesize that this embodied experience will elicit morally salient emotions like disgust and anger19–21. By inducing this moral discomfort, the intervention aims to foster self-awareness and encourage a reconsideration of the behavior’s impact22, serving as a potential strategy to promote behavioral change.

Materials and methods

Participants

In our study, we tested a sample of 36 male participants with a mean age of 23.77 ± 5.00 years, divided into two groups of 18 subjects. The experimental group consisted of male students with a mean age of 23.61 ± 5.03 years, while the control group was composed of male students with a mean age of 23.94 ± 5.11 years. Informed consent was obtained from all participants prior to their inclusion in the study. The consent form explained that participants would take part in an immersive simulation in which they would assume the role of a woman and might encounter situations of verbal harassment. Participants were advised that they had the right to withdraw from the research at any time and for any reason, including if they experienced stress, discomfort, or distress during the study. All methods were performed in accordance with the relevant guidelines and regulations approved by the ethics committee of the University of Bologna (protocol number: 0351419).

Instruments and methods

We used the Oculus Quest 2 as the VR headset and Unity Engine 3D to create the three-dimensional environment. To build the two scenarios for our experimental situations, we used the “BasicBedroomPack” and “UrbanUnderground” packages from the Unity Asset Store. We changed some details in both scenarios (e.g., colors, furniture, and avatars).

The first scenario (Fig. 1A) features a basic bedroom in which the user can see himself in the mirror, embodied as a female avatar. The second scenario (Fig. 1B) takes place in an urban underground station where the user waits for a train alongside other male avatars.

Fig. 1.

In the top panel (A), the participant in the first scenario views his body, represented by a female avatar, in the mirror. In the bottom panel (B), the participant awaits a train in the underground scenario alongside three male avatars

To assess the personality traits of our participants in relation to emotions, gender identity, and personal experiences of violence, we used standardized questionnaires, including:

Toronto Alexithymia Scale (TAS-20)23: this test consists of 20 items rated on a 5-point Likert scale and is designed to assess the degree of alexithymia in individuals. It yields three subscale scores, difficulty identifying feelings (DIF), difficulty describing feelings (DDF), and externally oriented thinking (EOT), plus a total score.

Modern Sexism Scale (MDS)24: this test consists of 8 items rated on a 7-point Likert scale and aims to assess bias towards the female gender.

Bem Sex-Role Inventory (BSRI)25: this test consists of 60 items rated on a 7-point Likert scale and aims to assess the degree of androgyny, based on a person’s tendency towards feminine, masculine, or neutral behaviors.

Stalking Assessment Indices (SAI)26: this test is composed of 32 items related to previous experiences of violence. Sixteen items (SAI-V) concern situations experienced by participants as victims, and 16 items (SAI-P) concern situations experienced by participants as perpetrators.

Next, based on the universal emotions outlined by Ekman27 (happiness, sadness, anger, disgust, fear, and surprise), we asked participants to rate the intensity of their emotions on a 5-point Likert scale after the VR experience. Additionally, participants completed the Embodiment Questionnaire (EQ)28, which is composed of 25 items rated on a Likert scale from −3 to +3 and assesses the sense of embodiment perceived by participants through six subscales: Ownership, Agency, Location, Appearance, Response, and Tactile sensation. For our study, we excluded the Tactile sensation subscale because our virtual scenario provided no haptic feedback. Furthermore, for our analysis we organized the five retained EQ subscales into two higher-order factors: Identity Embodiment and Actionable Embodiment. This distinction allows us to analyze the participant’s core identification with the avatar separately from their capacity to operate it within the virtual environment.

Identity Embodiment is the identification with the virtual avatar as an extension of the self. It combines Ownership, as the feeling that the virtual body is “mine”, Appearance, as the cognitive link between the participant’s self-perception and the avatar’s visual form, which is crucial for identity acceptance, and Response (to external stimuli), as the reaction of participants as if their own body is in peril, indicating a deep and primal level of acceptance of the avatar as the self.

Actionable Embodiment is the participant’s perceived capacity to be present in, and exert control over, the avatar within the virtual space. It combines Agency, as the motor control and participant’s ability to willfully move and command the virtual body, and Location, as the feeling of being physically located within the virtual body.
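The grouping of subscales into the two higher-order factors can be sketched as follows. This is a minimal illustration rather than the scoring script used in the study: the function name `embodiment_factors`, the use of the mean as the aggregation rule, and the example subscale values are all assumptions. The text only specifies which subscales belong to which factor.

```python
from statistics import mean

# Subscale-to-factor mapping as described in the text.
IDENTITY = ("ownership", "appearance", "response")
ACTIONABLE = ("agency", "location")


def embodiment_factors(subscales: dict[str, float]) -> dict[str, float]:
    """Aggregate EQ subscale scores (each in [-3, 3]) into higher-order factors.

    Averaging is an assumption made for illustration; the source does not
    state how the subscales are combined.
    """
    identity = mean(subscales[name] for name in IDENTITY)
    actionable = mean(subscales[name] for name in ACTIONABLE)
    total = mean(subscales[name] for name in IDENTITY + ACTIONABLE)
    return {"identity": identity, "actionable": actionable, "total": total}


# Hypothetical participant (values invented for the example).
example = {"ownership": 1.0, "appearance": 0.0, "response": 2.0,
           "agency": 2.0, "location": 1.0}
scores = embodiment_factors(example)
```

Keeping the mapping in two named tuples makes it easy to check that the Tactile sensation subscale is excluded and that no subscale is counted in both factors.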

Last, we recorded participants’ verbal responses regarding their behavior and motivations during the VR experience and used those responses to train a generative Artificial Intelligence (AI) model to learn and run an Implicit Situation Intensity Test (ISIT). ISIT is designed as an AI-driven, highly formalized analogue to the principles of grounded theory29,30, letting experimental knowledge emerge inductively from the data. Where a researcher performing grounded theory would use manual open, axial, and selective coding to identify themes, our algorithm performs this function systematically through implicit knowledge extraction by a hybrid neuro-symbolic AI. This provides a rigorous and reproducible process for deriving the final thematic structure of grounded theory.

ISIT is a frame scoring method developed alongside an ontology of experimental conditions and results. In this case, the catcalling ontology is a schema that logically represents the frames assumed when talking about catcalling (a frame is a cluster of concepts and relations that characterize situation types, such as feeling enraged, engaging in discourse, or looking at a person)31. ISIT provides a structured approach to quantifying the presence and significance of cognitive, emotional, behavioral, and social frames in participants’ experiences, without requiring direct human assessment. The method combines multiple weighted components with normalization processes to ensure reliable and contextually appropriate measurements.

We deemed an inductive ISIT test necessary because standardized tests and Ekman’s model create a closed world32, with fixed categories that may not catch the rich, unpredictable situational diversity (i.e., an open world) implicitly expressed in verbal responses33. At least one previous meta-analysis about situated emotion intensity34, and a specific study about situated emotion classification33 have established the variability of situational correlations to emotions, but to our knowledge, no standardized analysis method or test is available. In the context of this experiment, the variability of situation reports given by the subjects requires an assessment of implicit situations, e.g., events as perceived/interpreted by the subjects.

To address those needs, frames are automatically extracted using Polanyi35, a hybrid neuro-symbolic AI tool that performs the extraction of both explicit and implicit knowledge from a catcalling experience as expressed in the verbal responses of participants, and generalizes it. Polanyi adopts the Logic Augmented Generation (LAG) method39 to exploit the generative inferential power of LLMs for knowledge extraction. For example, from the text Mary stepped on John’s foot, Polanyi extracts not only the knowledge graph36,37 of literal meaning – e.g., stepping – but also that of physical, social, or emotional impacts or expectations from the described situation, e.g. pain experience, apologetic response, future avoidance, embarrassment, etc.

Then, a generative AI model (we selected Claude Sonnet 3.5 after comparing the results of state-of-the-art Large Language Models, including GPT-4o and Gemini 2) runs the ISIT on top of Polanyi’s output, generating positive/negative intensity scores in the [−1, 1] range for each situation type (i.e., a “frame”). For example, from the sentence “A feeling of anger developed in me”, the AI extracts the frames Emotional Intensity, Emotional Reactivity, and Presence Engagement, and uses them to contextually assign intensity scores as follows:

The scoring system is built on three components that contribute to a balanced assessment, with slightly more weight given to direct frame manifestation:

Direct Frame Manifestation (40%) captures explicit frame evocations in verbal reports, with tiered scoring based on centrality and valence. The relevance of this component is justified by Fillmore’s frame semantics theory33, which suggests that direct verbal articulation correlates with cognitive salience.

Emotional Alignment (30%) maps emotional indicators to relevant frames based on established emotion-cognition relationships. Its validity stems from extensive cognitive psychology research, in particular Damasio’s somatic marker hypothesis demonstrating how emotional states systematically influence cognitive framing and behavioral responses38.

Embodiment Influence (30%) incorporates physical manifestations and embodied cognition aspects, drawing on embodiment theory39, where physical experiences shape conceptual processing patterns, particularly relevant in threat and safety domains.

The following pseudo-algorithm details the scoring system:

Barsalou’s situated conceptualization model40 provides a theoretical background for the three components and justifies the need for ISIT, given the absence of standard tests and the situated nature of verbal reports. ISIT validity is supported by: (a) ontological grounding, since scores are anchored in the formal axioms defined in the catcalling.owl ontology, ensuring conceptual coherence; (b) a multi-dimensional approach that incorporates direct linguistic evidence of frames as well as emotional indicators and embodiment factors; (c) its normalization process; (d) a clustering process. Normalization starts with the raw score calculation, followed by context adjustment and a formula that keeps scores comparable across different subjects and situations. The normalization formula transforms raw scores to a standardized range of [−1, 1], where −1 represents complete opposition to, or absence of, a frame, 0 represents neutrality or threshold presence, and +1 represents strong or definitive frame activation. This min-max normalization approach, adapted from ref. 41, ensures that frame scores maintain their relative relationships while being comparable across the entire dataset, controlling for different baseline activation levels across subjects. Finally, clustering frames into four categorical clusters (behavioral, cognitive, emotional/social, safety/control) accounts for the unique manifestation patterns of different frame categories.
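The weighting and normalization steps described above can be illustrated with a short sketch. It is not the ISIT implementation: the function names, the assumption that each component score is already a number on a common scale, and the example values are all hypothetical, and the context-adjustment step is omitted because the text does not specify it. Only the 40/30/30 weights and the min-max mapping to [−1, 1] come from the description.

```python
# Component weights as stated in the text (40% / 30% / 30%).
WEIGHTS = {"direct": 0.4, "emotional": 0.3, "embodiment": 0.3}


def raw_frame_score(direct: float, emotional: float, embodiment: float) -> float:
    """Weighted sum of the three component scores for one frame."""
    return (WEIGHTS["direct"] * direct
            + WEIGHTS["emotional"] * emotional
            + WEIGHTS["embodiment"] * embodiment)


def min_max_to_unit_range(raw_scores: list[float]) -> list[float]:
    """Rescale raw scores linearly so the minimum maps to -1 and the maximum to +1."""
    lo, hi = min(raw_scores), max(raw_scores)
    if hi == lo:
        # All scores identical: map everything to the neutral value 0
        # (a choice made for this sketch, not taken from the source).
        return [0.0 for _ in raw_scores]
    return [2.0 * (s - lo) / (hi - lo) - 1.0 for s in raw_scores]


# Hypothetical component scores for one frame across three subjects.
components = [(1.0, 0.5, 0.5), (0.0, 0.0, 0.0), (0.5, 0.5, 0.0)]
raw = [raw_frame_score(*c) for c in components]
normalized = min_max_to_unit_range(raw)
```

Because the rescaling is linear, the ordering and relative spacing of the raw scores are preserved, which is the property the text relies on when comparing frames across subjects.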

To investigate the catcalling experience more deeply, we extended the frame extraction of ISIT to simulate the neural pathways that, based on the background knowledge synthesized in the LLM training, can be associated with the extracted frame clusters. The simulated pathway activations were added to the frame clusters, yielding an additional neural layer for the clusters.

Then, using Neurosynth, a well-established platform that associates functional neuroimaging data with keywords, we created a brain activation map corresponding to the behavioral and emotional states reported by participants. The keywords submitted to Neurosynth are the neural pathway terms extracted by the LLM as plausible correspondences.

The neuro-simulation results were validated by two neuroscientist co-authors to check the scientific accuracy and reliability of the neural mapping.

Procedure

First, the SAI (P-V) test was administered as a screening measure to ensure that participants had no prior experience with various forms of interpersonal aggression, including catcalling, either as victims or perpetrators. Following this, participants were asked to complete tests to assess alexithymia, gender bias, gender tendency, and aggressiveness. Next, they experienced a VR scenario in which they embodied the avatar of a young woman preparing to go to a party. The initial virtual environment featured a furnished bedroom and a mirror, in which the participant could see himself in the body of the female avatar (Fig. 1A). Participants then transitioned to the next scene, set in an underground station, where they had to wait for a train to arrive. In this scenario, participants encountered male avatars who interacted with them (Fig. 1B). In the experimental condition, the avatars used typical Italian catcalling expressions (documented in newspaper articles and sociological research on verbal street harassment), while in the control condition, the avatars posed general questions to the participants. The expressions used in both conditions are summarized in Table 1. Participants were randomly assigned to one of these two groups. The control group allowed us to assess whether the stressful situation elicits negative emotions in general, or whether there is a significant increase specifically related to verbal street harassment.

Table 1.

The Italian verbal stimuli we used in the experimental and control conditions (extracted from the Web), and their English translation.

| Avatar | Experimental Condition | Control Condition |
|---|---|---|
| Avatar 1 | It: “Ehi, dove vai tutta sola?” Eng: “Hey, where are you going alone?” | It: “Scusa, che ore sono?” Eng: “Excuse me, what time is it?” |
| Avatar 2 | It: “Wow, ma sei vera?” Eng: “Wow, are you real?” | It: “Scusami, sai dove sono i servizi igienici?” Eng: “Please, do you know where the toilet is?” |
| Avatar 3 | It: “Ehi, perché non mi fai un bel sorriso?” Eng: “Hey, why don’t you give me a nice smile?” | It: “Sai a che ora arriva la metro?” Eng: “Do you know what time the subway arrives?” |

In our experimental design, we used avatars of the same nationality as the participants to avoid any possible influence of ethnic or cultural representation. The underground scenario included three male avatars in total, which participants encountered one at a time as they moved through the station. We used the same avatars in both conditions, and all stimuli were phrased as questions; the avatars were programmed to repeat their sentences to create a consistently stressful environment. Participants were not expected to respond to the avatars, as the avatars always pronounced the same phrase, calibrated based on physical distance. Participants could move freely within the virtual environment; however, we modified the scenario to ensure that they remained close to at least one of the three avatars throughout the experience.

After the VR experience, participants completed questionnaires related to virtual embodiment and the intensity of their emotions based on Ekman’s model of emotions. Then, participants provided a written explanation of their actions and the reasoning behind their behavior within the virtual environment, using Google Forms.


Data analysis and results

Statistical data analysis

Statistical comparisons of MDS, BSRI, and SAI scores between the experimental and control groups were performed using two-tailed t-tests. Statistical comparisons of alexithymia and emotional ratings were conducted via repeated-measures ANOVA. The ANOVA on alexithymia included the following factors: 2 (Groups: experimental, control) x 2 (Treatment: catcalling, no catcalling) x 4 (Alexithymia dimensions: DIF, DDF, EOT, Tot). The ANOVA on emotional ratings included the following factors: 2 (Groups: experimental, control) x 2 (Treatment: catcalling, no catcalling) x 6 (Emotions: happiness, sadness, anger, disgust, fear, and surprise). Partial eta squared (ηp²) was calculated as the effect size. When an ANOVA yielded significant results, post-hoc analyses were conducted using t-tests with Bonferroni correction for multiple comparisons. A critical alpha level of α = 0.05 served as the significance threshold for all tests.
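For a single effect, partial eta squared can be recovered from the F statistic and its degrees of freedom as ηp² = (F · df_effect) / (F · df_effect + df_error). The sketch below applies this identity; the function name is chosen for illustration, and the input is the group main effect on emotion ratings reported in the results, F(1, 34) = 8.152.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared computed from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Group main effect on emotion ratings: F(1, 34) = 8.152.
eta_group = partial_eta_squared(8.152, 1, 34)
```

This gives a quick consistency check between a reported F value, its degrees of freedom, and the accompanying effect size.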

For correlation analyses, we used Pearson’s correlation coefficient after verifying that the data were normally distributed (Shapiro-Wilk p > 0.05). Statistical analysis was performed using STATISTICA (StatSoft. Inc., Tulsa, OK, USA) version 12.0 and JASP 0.17.3.0.
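As an illustration of the correlation step, the following sketch computes Pearson’s r and the t statistic used to test it against zero (with n − 2 degrees of freedom). It uses only the Python standard library, so it stops short of a p-value and does not implement the Shapiro-Wilk check; the function names and the toy data are assumptions made for the example.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def t_statistic(r: float, n: int) -> float:
    """t statistic for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Toy data (invented for the example).
r_toy = pearson_r([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])

# A correlation of r = 0.402 in a sample of n = 36.
t_example = t_statistic(0.402, 36)
```

The resulting t value would then be compared against a t distribution with n − 2 degrees of freedom to obtain the p-value, which statistical packages such as JASP report directly.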

Pre-VR experience results

Regarding the questionnaires administered prior to the VR experience, our results show that:

Violence experienced as a victim (SAI-V): on a scale ranging from 0 to 320, the experimental group has a mean score of M = 17.11, while the control group has a mean score of M = 26.5.

Violence committed as a perpetrator (SAI-P): on a scale ranging from 0 to 320, the experimental group reports a mean score of M = 6.16, while the control group has a mean score of M = 5.33.

Bias towards women (MDS): on a scale ranging from 8 to 56, the experimental group has a mean score of M = 18, compared with M = 17.88 in the control group.

Androgyny (BSRI): the experimental group shows a higher level of androgyny, with a mean difference between male and female behavior of M = −2.38, compared with M = −5.83 in the control group.

Alexithymia (TAS-20): our sample consists of individuals with a low degree of alexithymia, with the experimental group scoring a mean of M = 43.38 and the control group a mean of M = 48.83. No significant difference between groups was found, either overall [F(1, 34) = 2.776, p = 0.104, η² = 0.075] or across dimensions [F(3, 102) = 2.142, p = 0.099, η² = 0.059]. On the other hand, a significant difference was found among the four dimensions [F(3, 102) = 438.7, p < 0.001, η² = 0.928].

Given the normal distribution of our data (Shapiro-Wilk p > 0.05), we computed Pearson correlations on the total sample. Our analysis shows that alexithymia is significantly correlated with aggressive and violent behaviors. Specifically (cf. Table 2):

Table 2.

Significant correlations between alexithymia and aggressive/violent behaviors.

| Alexithymia | Violent Behavior | r | p-value |
|---|---|---|---|
| Total Score | Hostility | 0.402 | 0.015 |
| DDF | Hostility | 0.521 | 0.001 |
| DIF | Anger | 0.412 | 0.012 |
| EOT | Perpetration | 0.337 | 0.044 |

The higher the alexithymia total score, the higher the score on the Hostility subscale of Aggressive Behavior (r = 0.402, p = 0.015).

The higher the Difficulty Describing Feelings score, the higher the score on the Hostility subscale of Aggressive Behavior (r = 0.521, p = 0.001).

The higher the Difficulty Identifying Feelings score, the higher the score on the Anger subscale of Aggressive Behavior (r = 0.412, p = 0.012).

The higher the Externally Oriented Thinking score, the higher the Perpetrated Violent Behavior score (r = 0.337, p = 0.044).

Post-VR experience results

Post-VR questionnaire results

Regarding the questionnaires administered after the VR experience, our results show that:

Embodiment (EQ): our results show a negative relationship between the level of Androgyny and the Embodiment perceived by participants (r = −0.336, p = 0.045). This means that the sense of Embodiment increases as Androgyny levels decrease: participants with low levels of androgyny are better able to perceive the female body as their own during the VR experience.

Emotions (Ekman model): our analysis documents a significant main effect of the group factor [F(1, 34) = 8.152, p = 0.007, η² = 0.193], with higher scores in the experimental group (M = 2.99) than in the control group (M = 2.37). We also found a significant main effect of the emotions factor [F(5, 170) = 10.07, p < 0.001, η² = 0.228]. Finally, we found a significant emotions x group interaction [F(5, 170) = 11.26, p < 0.001, η² = 0.248], cf. Table 3.

Table 3.

Main effects analysis: ANOVA results concerning Ekman’s emotions.

| Effect | F | df | p-value | η² |
|---|---|---|---|---|
| Group factor | 8.152 | 1, 34 | 0.007 | 0.193 |
| Emotions factor | 10.07 | 5, 170 | < 0.001 | 0.228 |
| Emotions x Group Interaction | 11.26 | 5, 170 | < 0.001 | 0.248 |

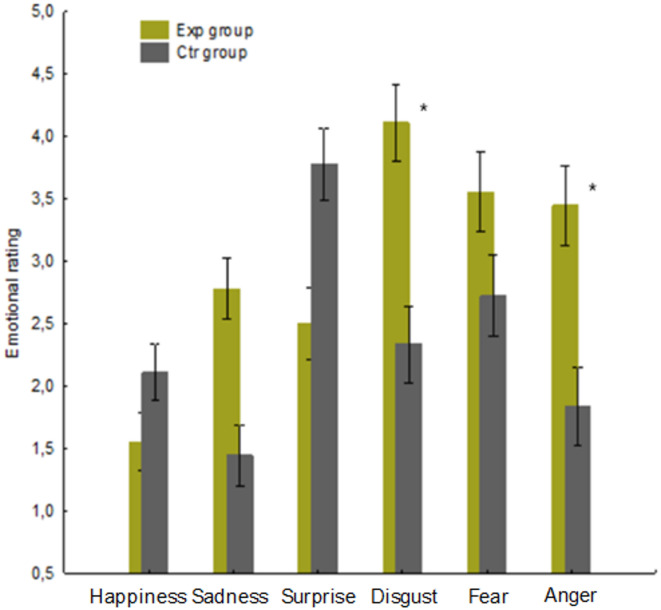

Bonferroni-corrected t-tests indicate a significant between-group difference for the emotions of Disgust (p = 0.001; experimental group M = 4.111 vs. control group M = 2.333) and Anger (p = 0.006; experimental group M = 3.444 vs. control group M = 1.833). No further significant differences were found (p > 0.05), cf. Figure 2.

Fig. 2.

Emotional intensity comparison between experimental and control groups. * indicates significant results p < 0.001 (t-test analysis)

Our findings also show that both the experimental and control conditions are able to elicit negative emotions in the participants. Specifically, the increase in Fear is significantly related to the increase in Ontological (r = 0.514, p = 0.001), Functional (r = 0.470, p = 0.004), and Total Embodiment (r = 0.544, p < 0.001). This suggests that a stronger sense of virtual embodiment lets participants perceive the situation as real, raising their fear, cf. Table 4.

Table 4.

Significant correlation between virtual embodiment dimensions and fear.

| Virtual Embodiment | Emotion | r | p-value |
|---|---|---|---|
| Functional | Fear | 0.514 | 0.001 |
| Ontological | Fear | 0.470 | 0.004 |
| Total | Fear | 0.544 | < 0.001 |

Interaction with avatars: in the experimental group, only 1 out of 18 participants interacted with the avatars, exhibiting aggressive verbal behavior within the virtual environment. In contrast, in the control group, 9 out of 18 participants interacted with the avatars, providing the requested information.

Post-VR ISIT results

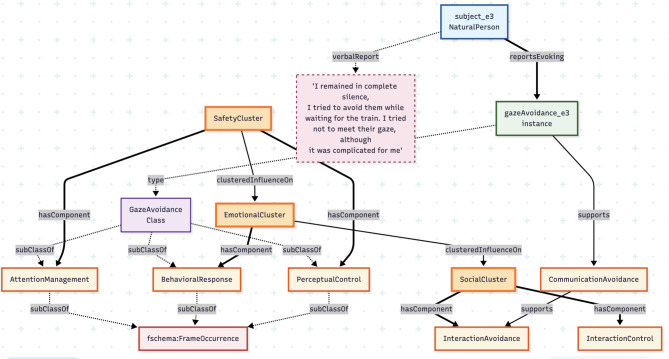

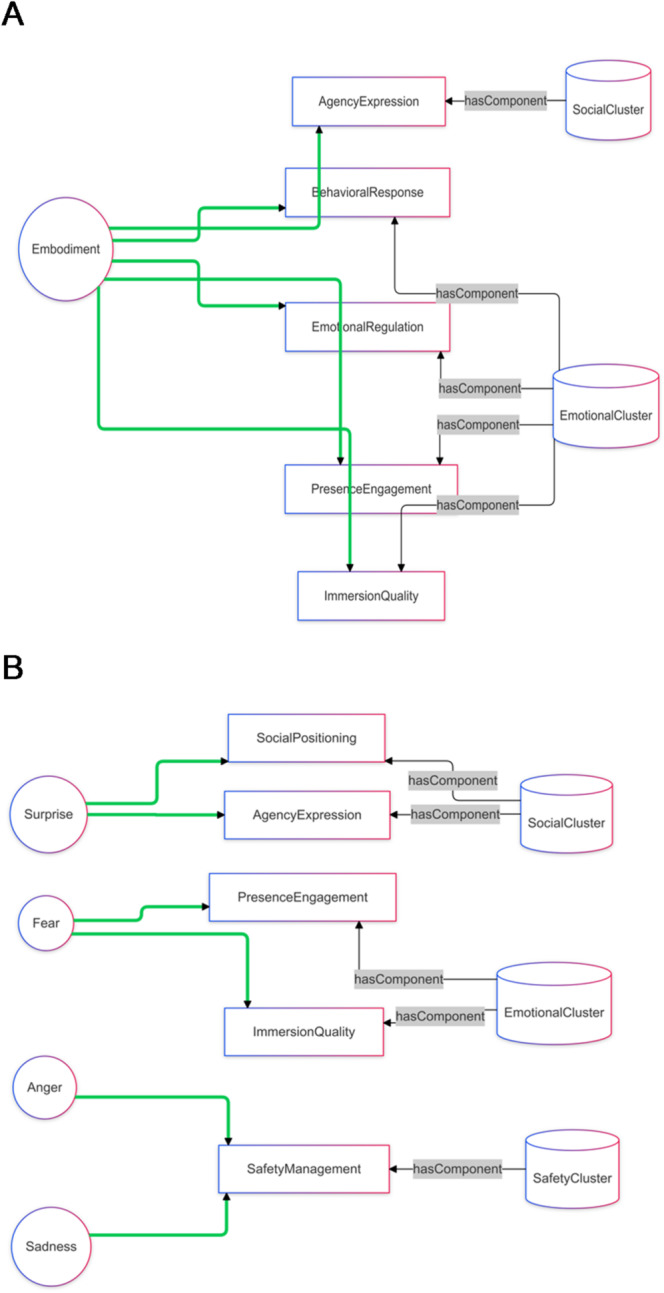

The catcalling experience frames extracted by the Polanyi knowledge graph extractor have been used to derive a logical schema, which is aligned to public semantic resources to achieve interoperable meaning with traditional cognitive categories (cf. Figures 3 and 4). The full Catcalling Ontology and knowledge graphs are available on the experiment’s GitHub.1

Fig. 3.

The exemplified core conceptual schema of the catcalling.owl ontology: EmotionalRegulation is a sample Frame generalized by the Polanyi frame extraction. EmotionalRegulation is also a subclass (a “situation type”) of emotion regulation situations, which are globally included in the FrameOccurrence class. In the schema derived from the knowledge graph, all frame occurrences (situations) may require the presence of, may be influenced by, triggered by, or preceded by other frames. These relations represent the temporal or causal dependencies that emerge as typical of catcalling frames from the experimental reports

Fig. 4.

A specific example of the knowledge graph from the catcalling.owl ontology: participant subject_e3’s verbal report (in the dashed box) evokes, among others, a GazeAvoidance frame. That frame is a situation type that is supposed (based on background knowledge available from the LLM) to support CommunicationAvoidance situations (and, transitively, InteractionAvoidance ones). The background knowledge emerging in the ontology provides further connections of GazeAvoidance situations as cases of AttentionManagement, BehavioralResponse, and PerceptualControl. Those situations are components of the clusters that emerged from the semantic analysis of the experimental reports, which eventually show the reported gaze avoidance as associated with both the SafetyCluster and the EmotionalCluster, which also influences the SocialCluster. In this way, a simple sentence from a participant report lets us automatically reconstruct a complex network of entities and concepts, a task that would be lengthy and subjective if done manually

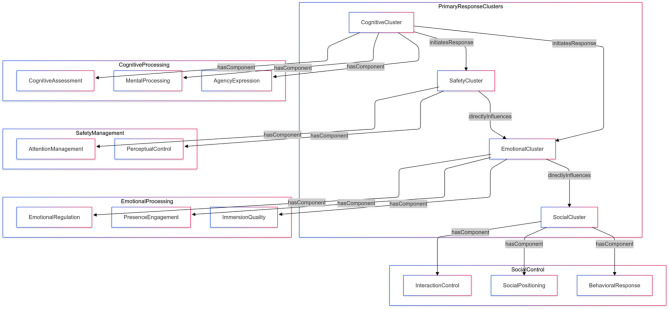

Starting from the knowledge graph, the generative AI (Claude Sonnet 3.5) reused 98 core frames extracted from the responses of all subjects, categorized them into a taxonomy under 15 top-level frames, which were in turn grouped into 4 main clusters (cognitive, social, safety, and emotional; Table 5), and induced causal relations among them (Fig. 5). This can be considered the underlying semantic schema of the catcalling experience as reported by the experimental subjects. An OWL2 ontology of the schema, aligned to public semantic frame resources, is available on GitHub.
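The transitive support relation described for Fig. 4 (GazeAvoidance supports CommunicationAvoidance and, transitively, InteractionAvoidance) can be illustrated with a small graph traversal. This is a toy sketch, not the catcalling.owl ontology or the Polanyi extractor: the dictionary representation and the function name `supported_frames` are assumptions, and only the two edges named in the caption are encoded.

```python
# "X supports Y" edges named in the Fig. 4 description.
SUPPORTS = {
    "GazeAvoidance": ["CommunicationAvoidance"],
    "CommunicationAvoidance": ["InteractionAvoidance"],
}


def supported_frames(frame: str, edges: dict[str, list[str]]) -> set[str]:
    """Return every frame reachable from `frame` by following support edges."""
    reached: set[str] = set()
    stack = [frame]
    while stack:
        current = stack.pop()
        for target in edges.get(current, []):
            if target not in reached:
                reached.add(target)
                stack.append(target)
    return reached


result = supported_frames("GazeAvoidance", SUPPORTS)
```

In an OWL setting, the same inference would typically be delegated to a reasoner over a transitive property rather than coded by hand; the traversal only makes the idea concrete.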

Table 5.

Examples of verbal responses collected after the VR experience (catcalling condition) associated with the detected semantic frames and their cluster.

| Cluster | Verbal response | Core Frames |
|---|---|---|
| Safety Cluster | I felt unsafe, I felt the need to move to an area where I could be alone, but then I realized this would only put me in more danger and in a condition of vulnerability in the eyes of those individuals, so I decided to move forward, but still the sensation was physically and perceptually unpleasant | threat assessment, safety seeking, spatial strategy, physical vulnerability awareness |
| Emotional Cluster | A feeling of anger developed in me | emotional intensity, emotional reactivity, presence engagement |
| Social Cluster | I moved away because I was a woman, if I had been a man, I would have responded | gender identity, social positioning, vulnerability |
| Cognitive Cluster | I had the sensation I needed to move away, although initially, intrigued by the VR experience, I approached the avatars. For the rest of the experiment, while waiting for the metro, I felt the need to isolate myself and wait for the train away from the other avatars | experiential learning, response evolution, cognitive assessment |

Fig. 5.

The semantic frame clusters and their relations emerging from the automated analysis of users’ verbal responses in the catcalling situation. The figure shows the four clusters (Cognitive, Safety, Emotional, Social) in the box on the right; and their components in the small boxes around it

The overall precision of the extracted frames for the verbal reports of all subjects, calculated using the explicit text from the reports as well as their inferable implicit or contextual knowledge, is 0.93; the precision for the experimental subjects’ reports only is 0.94, and the precision for the control subjects’ reports only is 0.91. The common pattern in cases of unjustified extraction is an over-attribution of complexity to minimal or basic expressions, even when implicit knowledge and context are considered.

To quantitatively combine knowledge graphs, emotion intensity, and embodiment, we trained the AI via in-context learning using Logic Augmented Generation37, obtaining a locally adapted ISIT that generated positive/negative intensity scores in the [−1, +1] range for each top-level frame in the knowledge graphs. Frame Intensity scores are based on the positive or negative manifestation and intensity of each frame, as they result from the users’ verbal responses. Table 5 contains examples of verbal responses for each cluster, associated with the detected frames. Frame Intensity scores, jointly with Emotion Intensity and Embodiment scores, were analyzed using Pearson correlations.

Post-VR correlational analysis

We have correlated the results from ISIT to the Ekman’s emotion intensity as reported by participants, and to the avatar embodiment, consisting of Total Embodiment score (the joint score based on the EQ test), Identity Embodiment score (the subset joint score only considering ownership, appearance and response aspects) and Actionable Embodiment score (the subset score only considering agency and location aspects).

Correlating the post-VR questionnaire results with the ISIT scores in the catcalling condition shows that:

Virtual Embodiment correlates positively with agency expression (total embodiment r = 0.819, p < 0.001; identity embodiment r = 0.735, p < 0.001; actionable embodiment r = 0.792, p < 0.001); behavioral response (total embodiment r = 0.514, p < 0.029; actionable embodiment r = 0.504, p = 0.033); emotional regulation (total embodiment r = 0.595, p < 0.009; identity embodiment r = 0.604, p < 0.004); presence engagement (total embodiment r = 0.964, p < 0.001; identity embodiment r = 0.905, p < 0.001; actionable embodiment r = 0.871, p < 0.001); and immersion quality (total embodiment r = 0.959, p < 0.001; identity embodiment r = 0.899, p < 0.001; actionable embodiment r = 0.868, p < 0.001), cf. Figure 6A.

Fig. 6.

The significant correlations (in the catcalling condition) between Virtual Embodiment (A), Emotions (B), and the top-level frames and their clusters. Green indicates positive correlations.

Emotions correlate with some core frames: Surprise correlates positively with social positioning (r = 0.619, p = 0.006) and agency expression (r = 0.479, p = 0.045); Fear correlates positively with immersion quality (r = 0.614, p = 0.007) and presence engagement (r = 0.613, p = 0.007); Anger and Sadness correlate positively with safety management (anger r = 0.526, p = 0.025; sadness r = 0.528, p = 0.024). No significant results were found between virtual embodiment and the top-level frames in the control condition. Concerning emotions, in the control condition there is a negative relationship between sadness and behavioral response (r = −0.512, p = 0.030) and a positive relationship between fear and social positioning (r = 0.518, p = 0.028), cf. Figure 6B.

Simulated neural pathways associable to semantic frame clusters

ISIT’s frame clusters have been associated with potential (LLM-simulated, cf. Section 2.2) pathway activations. These activations were evaluated by expert neuroscientists (Cronbach’s alpha agreement: 0.61), and the validated ones were added to the catcalling experiment knowledge graph.

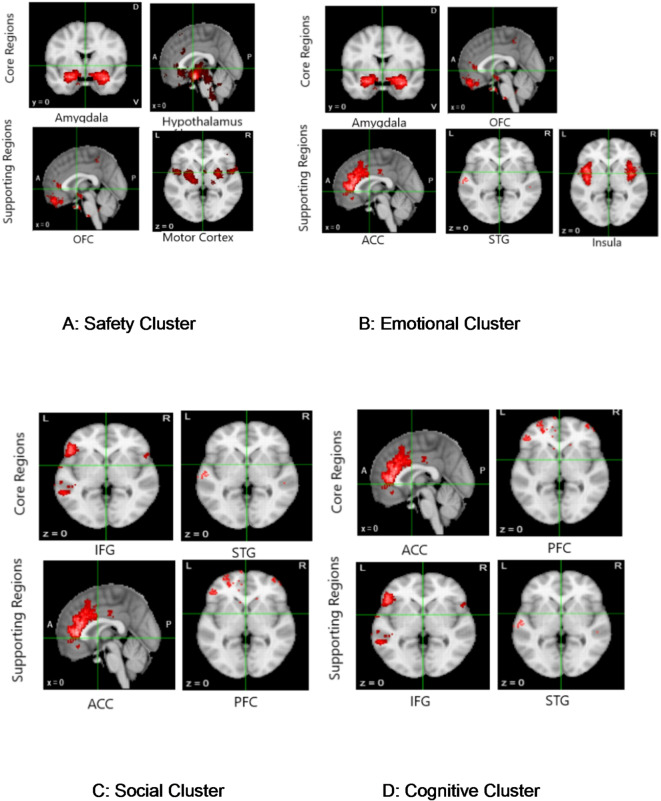
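
For readers unfamiliar with the agreement statistic mentioned above, the following minimal sketch computes Cronbach's alpha by treating each expert as an "item" and each simulated activation as a rated case. The rating matrix and the function name are hypothetical; the expert ratings themselves are not reproduced here.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of rows (one row per rated case,
    one column per rater), using sample variances."""
    n_raters = len(ratings[0])
    if n_raters < 2 or len(ratings) < 2:
        raise ValueError("need at least two raters and two rated cases")
    if any(len(row) != n_raters for row in ratings):
        raise ValueError("every row must contain one score per rater")
    # Variance of each rater's scores across the rated cases.
    rater_vars = [variance([row[j] for row in ratings]) for j in range(n_raters)]
    # Variance of the summed score per rated case.
    total_var = variance([sum(row) for row in ratings])
    if total_var == 0:
        raise ValueError("alpha is undefined when summed scores do not vary")
    return n_raters / (n_raters - 1) * (1 - sum(rater_vars) / total_var)


# Hypothetical plausibility ratings: 3 rated activations x 2 raters.
example = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(example), 3))
```

Values close to 1 indicate that raters order the cases consistently; lower values indicate more disagreement among raters.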

Our analysis (cf. Table 6; Fig. 7) shows that:

Table 6.

A synthesis of the neural pathways simulated activation with respect to the four frame clusters from ISIT.

| Cluster | Core Brain Regions | Supporting Brain Regions |
|---|---|---|
| Safety | Amygdala (91%), Hypothalamus (91%) | OFC (73%), Supplementary Motor Cortex (73%) |
| Emotional | Amygdala (82%), OFC (73%) | ACC (45%), STG (45%), Insula (45%) |
| Social | IFG (91%), STG (91%) | ACC (73%), PFC (73%) |
| Cognitive | ACC (67%), PFC (67%) | IFG (42%), STG (42%) |

Fig. 7.

The brain activation of the main areas involved in catcalling situations, considering the four frame clusters: Safety (A), Emotional (B), Social (C) and Cognitive (D). Core brain regions are shown at the top, supporting brain regions at the bottom.

In the Safety cluster, the Amygdala and Hypothalamus serve as the primary core regions. This configuration reflects fundamental processes such as threat anticipation, monitoring and detection, and physical avoidance42. The amygdala can be understood as an alarm system for detecting potential dangers, while the hypothalamus coordinates the body’s stress response. The supporting regions include the Orbitofrontal Cortex (OFC), involved in risk assessment and decision-making43, and the Supplementary Motor Cortex, involved in motor responses.

In the Emotional cluster, there is a more distributed processing pattern, in which the Amygdala maintains a central role and the Orbitofrontal Cortex (OFC) serves as a co-core region, reflecting the integration of emotional experience with higher-order cognitive regulation and value-based decision making. The supporting network includes the Anterior Cingulate Cortex (ACC), involved in emotional awareness and conflict monitoring44; the Superior Temporal Gyrus (STG), involved, together with the inferior frontal gyrus, in language comprehension45; and the Insula, involved in interoceptive awareness and emotional embodiment46.

In the Social cluster, there is higher involvement of the Language Circuit, with the Inferior Frontal Gyrus (IFG) and the Superior Temporal Gyrus (STG) providing the communicative foundation necessary for social interaction. Supporting roles are played by the Anterior Cingulate Cortex (ACC), related to cognitive control, conflict monitoring and emotional regulation, and by the Prefrontal Cortex (PFC), involved in executive functions47.

The Cognitive cluster shows the most balanced distribution of brain regions. The core regions include the Amygdala, Hypothalamus, Anterior Cingulate Cortex (ACC) and Prefrontal Cortex (PFC), suggesting the involvement of emotional, physiological, attentional, and executive control systems. The supporting regions include the Inferior Frontal Gyrus (IFG) and Superior Temporal Gyrus (STG), which provide additional contextual language comprehension and production capabilities.

Discussion

Considering the increasingly prevalent phenomenon of catcalling1, this study applied immersive virtual reality to let male participants directly experience catcalling within the context of daily mobility. Participants navigated an everyday life scenario involving public transport in a large city while embodying a female avatar. Our goal was to sensitize men to the violent behavior directed toward women by using virtual embodiment to elicit negative emotions. We recorded the emotions reported by participants following the VR experience.

Our results indicate that experiencing catcalling situations from a first-person, VR-mediated perspective significantly increases participants’ emotions of disgust and anger. These emotions are particularly relevant as triggers of self-awareness and moral discomfort, serving as potential motivators for self-reflection and corrective action. Rozin et al.48 highlighted that disgust is a key moral emotion that promotes the rejection of behaviors perceived as degrading or harmful, even when such behaviors are committed by oneself. Moreover, Schnall et al.49 found that inducing disgust can increase moral sensitivity, making individuals more likely to view harmful behaviors as wrong.

The emotion of anger can be interpreted as a catalyst for change50. For example, Tangney et al.51 discussed how emotions like anger lead to reparative actions, as they increase moral discomfort and self-awareness. Therefore, both disgust and anger can increase self-awareness and moral discomfort, acting as the first step toward behavior change. Importantly, previous studies found reduced feelings of disgust and anger in perpetrators. For instance, Bondü and Richter22 indicated that lower moral disgust sensitivity predicted higher levels of relational aggression in men. This suggests that individuals who are less sensitive to feelings of moral disgust may be more prone to engage in aggressive behaviors. Moreover, Molho et al.52 found that both anger and disgust could be associated with desires to punish moral offenders.

Regarding fear, our results show that this emotion is higher in the catcalling situation; however, the difference from the control condition is not significant. This suggests that experiencing an urban underground environment at night from a woman’s perspective is inherently fear-inducing, independent of explicit harassment.

Regarding verbal responses, we extracted a detailed Catcalling ontology and knowledge graph that capture the catcalling experience by making explicit the underlying knowledge of framed situations. The ontology was designed using both the extracted frames and the embodiment and emotion categories used in the tests. It was then used to train (via in-context conditioning) a Generative Artificial Intelligence model (the Claude LLM) to run an Implicit Situation Intensity Test (ISIT) that quantifies the intensity and polarity of catcalling frames as evoked in subjects’ individual verbal responses. The ISIT learned for this study enabled us to go beyond standardized tests and the Ekman model, which assume a closed world with fixed categories that may not capture the rich situational diversity of an ecologically sound open world, which is implicitly expressed in verbal responses. The instruments and methods for ISIT design are reusable beyond this study, leveraging the generative ability of Large Language Models (LLMs).

The correlations between embodiment, emotion, and the intensity of the frames experienced during the catcalling condition reveal the following insights: increased virtual embodiment and fear are associated with stronger emotional involvement within the virtual environment (e.g., participants feel anger developing in them); greater virtual embodiment and surprise correlate with stronger social positioning (e.g., participants report a need to move away due to gender identity); and heightened feelings of sadness and anger correlate with safety management (e.g., participants feel uncomfortable). These findings highlight the potential of virtual embodiment and emotional engagement as tools for reducing violent behavior, letting subjects connect to the emotional and psychological consequences of their actions.

Semantic frame clusters extracted from verbal responses have been associated with simulated activation of neural pathways, again using the LLM and expert validation. This confirmed a neural correspondence to the four frame clusters, each characterized by a unique pattern of core and supporting brain regions. The Safety cluster, anchored by the Amygdala and Hypothalamus, reflects a primary threat-detection and stress-response system. The Emotional cluster maintains the Amygdala as a central hub but integrates the Orbitofrontal Cortex (OFC) as a co-core region, highlighting the modulation of emotional experiences by higher-order cognitive processes. The Social cluster shows a clear shift towards language and communication, with the Inferior Frontal Gyrus (IFG) and Superior Temporal Gyrus (STG) forming its core. The Cognitive cluster displays the most distributed network, involving a balanced integration of the Amygdala, Hypothalamus, Anterior Cingulate Cortex (ACC), and Prefrontal Cortex (PFC).

Conclusion

Our study demonstrates that first-person virtual embodiment of a female target of catcalling is a useful method for eliciting morally salient negative emotions in male participants. Our findings indicate that this simulated experience goes beyond mere observation, inducing significant increases in disgust and anger – emotions intrinsically linked to moral evaluation and behavioral change. The study not only validates virtual reality as a tool for perspective-taking, but also introduces a novel computational approach to quantify the nuanced, implicit dimensions of this experience.

Our findings contribute to cognitive and methodological advancements as well as to the promotion of social safety. Employing virtual embodiment to enhance emotional sensitivity in men holds promise for both clinical and educational applications. In clinical settings, it may serve as an intervention to increase emotional awareness and empathy among individuals who have engaged in harassment, with the aim of modifying their behavior. In educational contexts, VR can be employed to simulate ecological environments that vividly illustrate the negative impact of street harassment, such as catcalling, by enabling participants to directly experience the emotional distress caused by such situations. Unlike real-world harassment, a Virtual Reality simulation can be terminated immediately if distress becomes excessive, and it can offer embodiment experiences that are impossible through traditional methods.

Author contributions

Conceptualization: C.L., C.M.V., A.G., C.S.; methodology: C.L., A.G., C.S., M.S.; formal analysis: C.M.V., A.G., C.L., F.T.; investigation: C.L., C.P.M.; writing, original draft preparation: C.L., C.M.V., A.G.; writing, review and editing: C.L., A.G., C.M.V., C.S., F.T.; supervision: A.G., C.M.V. All authors have read and agreed to the published version of the manuscript. Co-Authorship: Chiara Lucifora and Aldo Gangemi.

Data availability

The full Catcalling Ontology and knowledge graphs are available on the experiment’s GitHub: https://github.com/aldogangemi/catcalling.

Declarations

Conflict of interest

The authors declare no conflict of interest.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. WHO. https://cdn.who.int/media/docs/default-source/campaigns-and-initiatives/prseah/who-policy-on-preventing-and-addressing-sexual-misconduct.pdf?sfvrsn=7bb1dd5b_28&download=true (2024).

- 2. McDonald, L. Cat-Calls, compliments and coercion. Pac. Philos. Q. 103 (1), 208–230 (2022).

- 3. Sue, D. W. et al. Racial microaggressions in everyday life: implications for clinical practice. Am. Psychol. 62 (4), 271 (2007).

- 4. Stark, J. & Meschik, M. Women’s everyday mobility: frightening situations and their impacts on travel behaviour. Transp. Res. Part F: Traffic Psychol. Behav. 54, 311–323 (2018).

- 5. Vicario, C. M. & Martino, G. Psychology and technology: how virtual reality can boost psychotherapy and neurorehabilitation. AIMS Neurosci. 9 (4), 454–459 (2022).

- 6. Ferraioli, F. et al. Virtual reality exposure therapy for treating fear of contamination disorders: A systematic review of healthy and clinical populations. Brain Sci. 14 (5), 510 (2024).

- 7. Lucifora, C. et al. Enhanced fear acquisition in individuals with evening chronotype. A virtual reality fear conditioning/extinction study. J. Affect. Disord. 311, 344–352 (2022).

- 8. Lucifora, C., Schembri, M., Poggi, F., Grasso, G. M. & Gangemi, A. Virtual reality supports perspective taking in cultural heritage interpretation. Comput. Hum. Behav. 148, 107911 (2023).

- 9. Lucifora, C., Schembri, M., Asprino, L., Follo, A. & Gangemi, A. Virtual empathy: usability and immersivity of a VR system for enhancing social cohesion through cultural heritage. ACM J. Comput. Cult. Herit. 17 (4), 1–11 (2024).

- 10. Kishore, S. et al. A virtual reality embodiment technique to enhance helping behavior of Police toward a victim of Police Racial aggression. PRESENCE: Virtual Augmented Real. 28, 5–27 (2019).

- 11. Seinfeld, S. et al. Domestic violence from a child perspective: impact of an immersive virtual reality experience on men with a history of intimate partner violent behavior. J. Interpers. Violence 38 (3–4), 2654–2682 (2023).

- 12. Barnes, N., Torao-Angosto, M., Slater, M. & Sanchez-Vives, M. V. Virtual reality for mental health and in the rehabilitation of violent behaviours. Fonseca J. Communication 28, 10–46 (2024).

- 13. Peck, T. C., Seinfeld, S., Aglioti, S. M. & Slater, M. Putting yourself in the skin of a black avatar reduces implicit racial bias. Conscious. Cogn. 22 (3), 779–787 (2013).

- 14. Seinfeld, S. et al. Offenders become the victim in virtual reality: impact of changing perspective in domestic violence. Sci. Rep. 8 (1), 2692 (2018).

- 15. Gillespie, S. M., Rotshtein, P., Satherley, R. M., Beech, A. R. & Mitchell, I. J. Emotional expression recognition and attribution bias among sexual and violent offenders: a signal detection analysis. Front. Psychol. 6, 595 (2015).

- 16. Ventura, S., Cardenas, G., Miragall, M., Riva, G. & Baños, R. How does it feel to be a woman victim of sexual harassment? The effect of 360-video-based virtual reality on empathy and related variables. Cyberpsychology Behav. Social Netw. 24 (4), 258–266 (2021).

- 17. Gonzalez-Liencres, C. et al. Being the victim of intimate partner violence in virtual reality: first-versus third-person perspective. Front. Psychol. 11, 507601 (2020).

- 18. Neyret, S. et al. An embodied perspective as a victim of sexual harassment in virtual reality reduces action conformity in a later milgram obedience scenario. Sci. Rep. 10 (1), 6207 (2020).

- 19. Vicario, C. M. et al. Indignation for moral violations suppresses the tongue motor cortex: preliminary TMS evidence. Social Cogn. Affect. Neurosci. 17 (1), 151–159 (2022).

- 20. Vicario, C. M., Kuran, K. A., Rogers, R. & Rafal, R. D. The effect of hunger and satiety in the judgment of ethical violations. Brain Cognition 125, 32–36 (2018).

- 21. Lomas, T. Anger as a moral emotion: A bird’s eye systematic review. Counselling Psychol. Q. 32 (3–4), 341–395 (2019).

- 22. Bondü, R. & Richter, P. Interrelations of justice, rejection, provocation, and moral disgust sensitivity and their links with the hostile attribution bias, trait anger, and aggression. Front. Psychol. 7, 795 (2016).

- 23. Taylor, G. J., Bagby, M. & Parker, J. D. The revised Toronto alexithymia scale: some reliability, validity, and normative data. Psychother. Psychosom. 57 (1–2), 34–41 (1992).

- 24. Swim, J. K., Aikin, K. J., Hall, W. S. & Hunter, B. A. Sexism and racism: Old-fashioned and modern prejudices. J. Personal. Soc. Psychol. 68 (2), 199 (1995).

- 25. Bem, S. L. The measurement of psychological androgyny. J. Consult. Clin. Psychol. 42 (2), 155 (1974).

- 26. McEwan, T. E., Simmons, M., Clothier, T. & Senkans, S. Measuring stalking: the development and evaluation of the stalking assessment indices (SAI). Psychiatry Psychol. Law 28 (3), 435–461 (2021).

- 27. Ekman, P. An argument for basic emotions. Cognition Emot. 6 (3–4), 169–200 (1992).

- 28. Gonzalez-Franco, M. & Peck, T. C. Avatar embodiment. Towards a standardized questionnaire. Frontiers Robotics AI 5, 74 (2018).

- 29. Glaser, B. G. & Strauss, A. L. The Discovery of Grounded Theory: Strategies for Qualitative Research (Aldine Publishing Company, 1967).

- 30. Strauss, A. & Corbin, J. Basics of Qualitative Research: Grounded Theory Procedures and Techniques (Sage, 1990).

- 31. Fillmore, C. J. Frame semantics. Cognitive Linguistics: Basic Readings 34, 373–400 (2006).

- 32. Reiter, R. On closed world data bases. In Readings in artificial intelligence 119–140 (Morgan Kaufmann, 1981).

- 33. Coppini, S., Lucifora, C., Vicario, C. M. & Gangemi, A. Experiments on real-life emotions challenge ekman’s model. Sci. Rep. 13 (1), 9511 (2023).

- 34. De Leersnyder, J., Koval, P., Kuppens, P. & Mesquita, B. Emotions and concerns: situational evidence for their systematic co-occurrence. Emotion 18 (4), 597–614 (2018).

- 35. De Giorgis, S., Gangemi, A. & Russo, A. Neurosymbolic graph enrichment for grounded world models. Inf. Process. Manag. 62 (4) (2025).

- 36. Hogan, A. et al. Knowledge graphs. ACM Comput. Surv. (Csur) 54 (4), 1–37 (2021).

- 37. Gangemi, A. & Nuzzolese, A. G. Logic augmented generation. J. Web Semant. 85, 1570–8268 (2025).

- 38. Damasio, A. R. Descartes’ error: Emotion, reason, and the human brain (Putnam, 1994).

- 39. Lakoff, G. & Johnson, M. Philosophy in the Flesh: the Embodied Mind and its Challenge To Western Thought (Basic Books, 1999).

- 40. Barsalou, L. W. Simulation, situated conceptualization, and prediction. Philosophical Trans. Royal Soc. B: Biol. Sci. 364 (1521), 1281–1289 (2009).

- 41. Jain, A., Nandakumar, K. & Ross, A. Score normalization in multimodal biometric systems. Pattern Recogn. 38 (12), 2270–2285 (2005).

- 42. Bertram, T. et al. Human threat circuits: threats of pain, aggressive conspecific, and predator elicit distinct BOLD activations in the amygdala and hypothalamus. Front. Psychiatry 13, 1063238 (2023).

- 43. Wallis, J. D. Orbitofrontal cortex and its contribution to decision-making. Annu. Rev. Neurosci. 30 (1), 31–56 (2007).

- 44. Stevens, F. L., Hurley, R. A. & Taber, K. H. Anterior cingulate cortex: unique role in cognition and emotion. J. Neuropsychiatry Clin. Neurosci. 23 (2), 121–125 (2011).

- 45. Bigler, E. D. et al. Superior Temporal gyrus, Language function, and autism. Dev. Neuropsychol. 31 (2), 217–238 (2007).

- 46. Zaki, J., Davis, J. I. & Ochsner, K. N. Overlapping activity in anterior Insula during interoception and emotional experience. Neuroimage 62 (1), 493–499 (2012).

- 47. Menon, V. & D’Esposito, M. The role of PFC networks in cognitive control and executive function. Neuropsychopharmacology 47 (1), 90–103 (2022).

- 48. Rozin, P., Lowery, L., Imada, S. & Haidt, J. The CAD triad hypothesis: A mapping between three moral emotions (contempt, anger, disgust) and three moral codes (community, autonomy, divinity). J. Personal. Soc. Psychol. 76 (4), 574–586 (1999).

- 49. Schnall, S., Haidt, J., Clore, G. L. & Jordan, A. H. Disgust as embodied moral judgment. Pers. Soc. Psychol. Bull. 34 (8), 1096–1109 (2008).

- 50. Shuman, E., Halperin, E. & Reifen Tagar, M. Anger as a catalyst for change? Incremental beliefs and anger’s constructive effects in conflict. Group Processes Intergroup Relations 21 (7), 1092–1106 (2018).

- 51. Tangney, J. P., Stuewig, J. & Mashek, D. J. Moral emotions and moral behavior. Ann. Rev. Psychol. 58, 345–372 (2007).

- 52. Molho, C., Tybur, J. M., Güler, E., Balliet, D. & Hofmann, W. Disgust and anger relate to different aggressive responses to moral violations. Psychol. Sci. 28 (5), 609–619 (2017).
