Abstract

Background

The World Health Organization predicts that by 2030, chronic obstructive pulmonary disease (COPD) will be the third leading cause of death and the seventh leading cause of morbidity worldwide. Pulmonary function tests (PFT) are the gold standard for COPD diagnosis. Because COPD is incurable and its diagnosis is time-consuming even for an experienced specialist, a simple way to assess functional abnormalities is needed. Although many deep learning (DL) methods based on computed tomography (CT) have been developed to identify COPD, the pathological changes of COPD on CT are multidimensional and spatially highly heterogeneous, and the predictive performance of these methods still needs improvement.

Objective

The purpose of this study was to develop a DL-based multimodal feature fusion model to accurately estimate PFT parameters from chest CT images and verify its performance.

Materials and methods

In this retrospective study, participants underwent chest CT and PFT at the Fourth Clinical Medical College of Xinjiang Medical University between January 2018 and July 2024. Five PFT parameters served as prediction targets: 1-s forced expiratory volume (FEV1), forced vital capacity (FVC), the ratio of FEV1 to FVC (FEV1/FVC), FEV1 as a percentage of the predicted value (FEV1%), and FVC as a percentage of the predicted value (FVC%). These were paired with the corresponding chest CT images of 3108 participants. The data were randomly assigned to training and validation groups at a ratio of 9:1, and the model was evaluated with 10-fold cross-validation. Each parameter was trained and evaluated separately on the DL network. The mean absolute error (MAE), mean squared error (MSE), and Pearson correlation coefficient (r) were used as evaluation metrics, and agreement between predicted and measured values was analyzed with Bland-Altman plots. The model's prediction process was interpreted with the Grad-CAM visualization technique.

Results

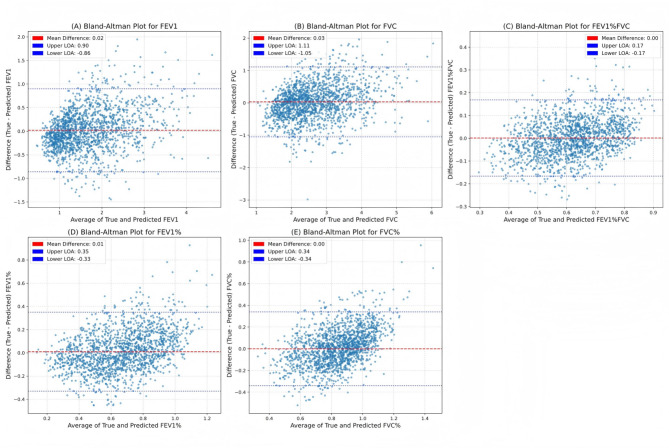

A total of 2408 subjects were included (mean age 66 ± 12 years; 1479 males). Of these, 822 cases were used to train the encoder that extracts image features, and 1,586 cases were used to develop and validate a multimodal feature fusion model based on a multilayer perceptron (MLP). The MAE, MSE, and r between measured and model-estimated values were 0.34, 0.20, and 0.84 for FEV1; 0.42, 0.31, and 0.81 for FVC; 6.64, 0.73, and 0.77 for FEV1/FVC; 13.42, 3.01, and 0.73 for FEV1%; and 13.33, 2.97, and 0.61 for FVC%. Measured and predicted values were strongly correlated for FEV1, FVC, FEV1/FVC, and FEV1%. Bland-Altman analysis showed good agreement between estimated and measured values for all PFT parameters.

Conclusions

These preliminary results indicate that the MLP-based multimodal feature fusion model has the potential to predict PFT parameters in COPD patients in real time. However, the study used pre-bronchodilator measurements, which may affect the interpretation of the results. Future studies should use post-bronchodilator measurements to better align with clinical standards.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12890-025-03957-7.

Keywords: Chronic obstructive pulmonary disease, Deep learning, Chest CT, Pulmonary function tests, Model, MLP

Introduction

Chronic obstructive pulmonary disease (COPD) is a heterogeneous respiratory disease characterized by irreversible airflow limitation [1]. Its global burden is increasing year by year, and epidemiological statistics rank it third among the leading causes of death [2]. China's COPD mortality rate is the highest in the world, and underdiagnosis is a serious problem [3, 4]. Although functional assessment by pulmonary function test (PFT) remains the gold standard for the clinical diagnosis of COPD, it requires high patient compliance [5] and cannot capture dynamic changes in the local anatomical structures of the lungs [6]. In this context, computed tomography (CT), with its sub-millimeter spatial resolution, has become a key tool for quantifying the distribution of emphysema, airway wall thickening, and other morphological abnormalities [7]. However, traditional CT image analysis is limited by the inefficiency of manual segmentation and by inter-observer variability, which restricts its potential in precision medicine [8].

Advances in artificial intelligence offer a way past these bottlenecks [9, 10]. In recent years, deep learning (DL)-based feature extraction has been shown to identify occult biomarkers on CT images that are closely related to the pathophysiology of COPD [11]. For instance, improved U-Net architectures can be trained end-to-end to automatically segment the lung parenchyma and quantify emphysema volume, with results significantly better than traditional indicators [12]. Notably, Park et al. [13] developed a three-dimensional convolutional neural network (CNN) that predicted the 1-s forced expiratory volume (FEV1) in COPD patients with a concordance correlation coefficient (CCC) of 0.91, indicating close agreement between model predictions and measured PFT parameters. However, DL-based prediction of PFT parameters from the CT images of COPD patients still faces two challenges. First, the pathology of COPD is highly complex, and the lack of accurate lesion labels in CT images makes models difficult to train and evaluate. Second, existing DL methods extract features poorly from small datasets with high noise and sparsity. Improving prediction performance under complex pathology and limited data is therefore an urgent problem in current research.

In this study, we use DL to analyze CT images of COPD patients and predict PFT parameters. By proposing effective feature extraction and model construction methods, we aim to overcome the shortcomings of existing studies and improve the accuracy and reliability of PFT prediction. Specifically, we describe how DL can fully mine the feature information in CT images and combine it with the clinical data of COPD patients to construct a multimodal PFT prediction model that supports the clinical diagnosis and treatment of COPD.

Materials and methods

Study sample

This study retrospectively selected individuals who underwent PFT and CT examinations at the Fourth Clinical Medical College of Xinjiang Medical University from January 2018 to July 2024. We collected CT images, PFT parameters, demographic information, and clinical details for each participant. To keep imaging and physiological data comparable for predictive analysis, PFT and CT were performed close together in time: the mean (± standard deviation) interval between them was 15 ± 7 days. The inclusion criterion was a diagnosis of COPD with complete clinical data, PFT results, and chest CT imaging data. The exclusion criteria were: (1) medical records could not be accessed; (2) the individual had a cardiac stent, pacemaker, or metallic foreign body; (3) concurrent diseases that could impair image quality were present (e.g., extensive bronchiectasis, pulmonary tuberculosis, lung tumors, prior lung surgery, massive pleural effusion, or pulmonary consolidation); (4) the case was identified as an outlier or had incomplete data; (5) the CT images had unaligned voxels. The study ultimately included 2,408 participants. Of these, 822 eligible cases were used to train the encoder that extracts CT image features, and 1586 cases were used to develop and validate the subsequent fusion model for predicting PFT parameters. The detailed process is shown in Fig. 1.

Fig. 1.

Inclusion and exclusion criteria for the data set

Pulmonary function test

The 1-s forced expiratory volume (FEV1), forced vital capacity (FVC), ratio of FEV1 to FVC (FEV1/FVC), FEV1 as a percentage of the predicted value (FEV1%), and FVC as a percentage of the predicted value (FVC%) were measured with a CHESTAC-8800 pulmonary function analyzer. Because too few participants had post-bronchodilator PFT, pre-bronchodilator PFT parameters were used in this study.

Chest CT examination

Scanning was performed on a Definition Flash 64-slice dual-source spiral CT scanner. Patients were scanned in the supine position, covering the entire lung from apex to base. The tube voltage was 120 kV, the tube current was set to automatic modulation, and the acquisition matrix was 512 × 512. The lung window was set to a window width of 1200 HU and a window level of -600 HU, which displays CT values in the range [-1200, 0] HU. To ensure image quality, only thin-slice CT images with a slice thickness of ≤ 1 mm, which have higher spatial resolution, were included. Images were stored in DICOM format. All CT data were anonymized during acquisition and processing to protect patient privacy.
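The window arithmetic above (level ± width/2) can be checked with a short sketch. It additionally assumes, beyond what the text states, that the same lung window is used to clip and linearly rescale intensities to [0, 1] before they reach the network; the function names are illustrative and are not part of MONAI's API.

```python
import numpy as np

def window_bounds(level, width):
    """Lower and upper HU limits of a display window."""
    return level - width / 2.0, level + width / 2.0

def apply_lung_window(hu, level=-600.0, width=1200.0):
    """Clip HU values to the window and rescale them linearly to [0, 1] (assumed normalization)."""
    lo, hi = window_bounds(level, width)
    clipped = np.clip(np.asarray(hu, dtype=np.float32), lo, hi)
    return (clipped - lo) / (hi - lo)

print(window_bounds(-600, 1200))  # (-1200.0, 0.0)
# Toy voxels: air, emphysema-range, mid-window, soft tissue, bone
print(apply_lung_window([-1000.0, -950.0, -600.0, 40.0, 700.0]))
```

Everything above 0 HU (soft tissue, bone) saturates at 1, which is the intended effect of a lung window: contrast is spent on the parenchymal range.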

Image preprocessing

In the medical field, the preprocessing of CT images is essential to highlight disease foci and optimize model performance [14]. Before building the model algorithm, we preprocessed the CT images. The primary goal of this preprocessing pipeline is to enhance the accuracy and reliability of model predictions by ensuring clean and consistent input data. In this experiment, the PyTorch-based MONAI framework was used for image preprocessing, and the process included five steps: image segmentation, image resampling, data enhancement, CT value normalization processing, and radiomics feature extraction. See supplementary materials for details.
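The resampling and augmentation steps are described only in the supplementary materials, so the numpy sketch below is a hypothetical stand-in rather than the MONAI transforms actually used (MONAI offers dictionary transforms such as `Spacingd` and `RandFlipd` for these steps, usually with interpolation rather than the nearest-neighbour lookup shown here). The 1 mm isotropic target spacing and the flip probability are assumptions.

```python
import numpy as np

def resample_nearest(volume, spacing, new_spacing):
    """Nearest-neighbour resampling of a 3-D volume from `spacing` to `new_spacing` (mm per voxel)."""
    index_arrays = []
    for n, s, ns in zip(volume.shape, spacing, new_spacing):
        new_n = max(1, int(round(n * s / ns)))
        # Map the centre of each output voxel back to an input index
        src = np.floor((np.arange(new_n) + 0.5) * ns / s).astype(int)
        index_arrays.append(np.clip(src, 0, n - 1))
    return volume[np.ix_(*index_arrays)]

def random_flip(volume, rng, p=0.5):
    """Training-time augmentation: flip along each axis independently with probability p."""
    for axis in range(volume.ndim):
        if rng.random() < p:
            volume = np.flip(volume, axis=axis)
    return volume

rng = np.random.default_rng(0)
ct = rng.normal(-800.0, 100.0, size=(40, 64, 64))  # synthetic: 1.0 mm slices, 0.75 mm pixels
iso = resample_nearest(ct, (1.0, 0.75, 0.75), (1.0, 1.0, 1.0))
print(iso.shape)  # (40, 48, 48)
augmented = random_flip(iso, rng)
```

Resampling to a common spacing matters because the encoder's 3-D kernels then cover the same physical distance in every scan, regardless of the original pixel size.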

Model construction and training

At the intersection of medical image analysis and DL, this study first used a CNN-based DenseNet model as an encoder to extract deep features from chest CT images; this encoder can effectively retain and propagate the spatial structure and pathological features in the images. Then, to fuse the CT image features extracted by the encoder with demographic data (e.g., age, sex, and smoking history) and radiomics features (e.g., intensity, morphological, and texture features), a multimodal feature fusion model based on a multilayer perceptron (MLP) was constructed to learn a joint representation of the multi-source information.
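As a rough illustration of the radiomics intensity features and of how the three sources might be concatenated before fusion, the sketch below computes a few first-order statistics inside a lung mask. These are simplified textbook definitions, not PyRadiomics' exact ones (which use fixed bin widths and other conventions), and the 128-dimensional embedding and three clinical variables are hypothetical sizes.

```python
import numpy as np

def first_order_features(image, mask, n_bins=32):
    """Mean, SD, skewness, kurtosis, energy and histogram entropy of voxels inside `mask`."""
    roi = np.asarray(image, dtype=np.float64)[np.asarray(mask) > 0]
    mean, std = roi.mean(), roi.std()
    centred = roi - mean
    skew = (centred ** 3).mean() / std ** 3 if std > 0 else 0.0
    kurt = (centred ** 4).mean() / std ** 4 if std > 0 else 0.0  # non-excess kurtosis
    energy = np.sum(roi ** 2)
    counts, _ = np.histogram(roi, bins=n_bins)
    p = counts[counts > 0] / counts.sum()
    entropy = -np.sum(p * np.log2(p))
    return np.array([mean, std, skew, kurt, energy, entropy])

rng = np.random.default_rng(0)
ct = rng.normal(-800.0, 100.0, size=(16, 32, 32))  # synthetic HU values
lung_mask = np.ones_like(ct, dtype=bool)            # hypothetical lung mask
radiomics = first_order_features(ct, lung_mask)

cnn_embedding = np.zeros(128)          # hypothetical DenseNet embedding size
clinical = np.array([66.0, 1.0, 1.0])  # hypothetical: age, sex (1 = male), smoker (1 = yes)
fused = np.concatenate([cnn_embedding, radiomics, clinical])
print(fused.shape)  # (137,)
```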

DL architecture

The core of our multimodal feature fusion model consists of three parts. The first is an independent feature transformation layer that processes image features and clinical data separately. The second is a gated network layer that dynamically generates feature weights for each target. The third is a target-specific prediction head layer that performs regression for each target. The detailed architecture is shown in the supplementary materials.
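A minimal numpy forward pass of the three parts described above might look as follows. Layer sizes, a sigmoid gate over the concatenated features, and a single residual block per head are assumptions made for illustration (the actual architecture is given only in the supplementary materials), and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)
TARGETS = ["FEV1", "FVC", "FEV1/FVC", "FEV1%", "FVC%"]

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def dense(in_dim, out_dim):
    """Hypothetical initialisation: scaled Gaussian weights, zero bias."""
    return rng.normal(0.0, 1.0 / np.sqrt(in_dim), (in_dim, out_dim)), np.zeros(out_dim)

class GatedFusionMLP:
    """Illustrative forward pass only; not the trained model."""

    def __init__(self, img_dim, clin_dim, hidden=64):
        # Part 1: independent transformation of image and clinical features
        self.img_proj = dense(img_dim, hidden)
        self.clin_proj = dense(clin_dim, hidden)
        fused = 2 * hidden
        # Part 2: one gate per target, producing per-feature weights
        self.gates = {t: dense(fused, fused) for t in TARGETS}
        # Part 3: one residual regression head per target
        self.heads = {t: (dense(fused, fused), dense(fused, 1)) for t in TARGETS}

    def forward(self, img_feat, clin_feat):
        w, b = self.img_proj
        h_img = relu(img_feat @ w + b)
        w, b = self.clin_proj
        h_clin = relu(clin_feat @ w + b)
        h = np.concatenate([h_img, h_clin], axis=-1)
        preds = {}
        for t in TARGETS:
            gw, gb = self.gates[t]
            z = sigmoid(h @ gw + gb) * h       # gate in (0, 1) rescales each feature for target t
            (w1, b1), (w2, b2) = self.heads[t]
            z = z + relu(z @ w1 + b1)          # residual block: z + f(z)
            preds[t] = (z @ w2 + b2)[..., 0]   # scalar regression output per case
        return preds

# Usage: 4 cases, 134 image+radiomics features and 3 clinical variables (hypothetical sizes)
model = GatedFusionMLP(img_dim=134, clin_dim=3)
preds = model.forward(rng.normal(size=(4, 134)), rng.normal(size=(4, 3)))
for name, value in preds.items():
    print(name, value.shape)
```

Giving each target its own gate lets, for example, the FEV1/FVC head emphasize different fused features from the FVC head while sharing the same transformed inputs.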

Experimental setup

The DL model was trained on the training set with the Adam optimizer, minimizing the Smooth L1 loss between predicted and measured PFT values. The learning rate was fixed at 0.001, the maximum number of epochs was 100, and the batch size was 64. During training, performance on the training and validation sets was recorded to check for overfitting. After training, the optimized model was evaluated with ten-fold cross-validation, and its performance metrics were calculated.
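The loss and optimizer settings can be made concrete with a small self-contained sketch: the Smooth L1 loss (with `beta = 1.0`, PyTorch's default, assumed here because the text does not state it), its gradient, and an Adam update with PyTorch's default moment coefficients. It applies the stated learning rate, batch size, and epoch count to a synthetic linear regression standing in for the real network.

```python
import numpy as np

def smooth_l1(pred, target, beta=1.0):
    """Mean Smooth L1: quadratic for |error| < beta, linear beyond."""
    diff = pred - target
    absd = np.abs(diff)
    return np.where(absd < beta, 0.5 * diff ** 2 / beta, absd - 0.5 * beta).mean()

def smooth_l1_grad(pred, target, beta=1.0):
    """Gradient of the mean Smooth L1 loss with respect to `pred`."""
    diff = pred - target
    g = np.where(np.abs(diff) < beta, diff / beta, np.sign(diff))
    return g / diff.size

class Adam:
    """Adam with bias correction; b1, b2, eps are assumed PyTorch defaults."""
    def __init__(self, shape, lr=1e-3, b1=0.9, b2=0.999, eps=1e-8):
        self.lr, self.b1, self.b2, self.eps = lr, b1, b2, eps
        self.m, self.v, self.t = np.zeros(shape), np.zeros(shape), 0

    def step(self, param, grad):
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * grad
        self.v = self.b2 * self.v + (1 - self.b2) * grad ** 2
        m_hat = self.m / (1 - self.b1 ** self.t)
        v_hat = self.v / (1 - self.b2 ** self.t)
        return param - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)

rng = np.random.default_rng(0)
X = rng.normal(size=(640, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + 0.3
Xb = np.hstack([X, np.ones((640, 1))])  # append a bias column
w = np.zeros(4)
opt = Adam(w.shape, lr=1e-3)
initial_loss = smooth_l1(Xb @ w, y)
for epoch in range(100):                # maximum epochs = 100
    order = rng.permutation(len(y))
    for start in range(0, len(y), 64):  # batch size = 64
        idx = order[start:start + 64]
        grad_w = Xb[idx].T @ smooth_l1_grad(Xb[idx] @ w, y[idx])  # chain rule through the linear model
        w = opt.step(w, grad_w)
final_loss = smooth_l1(Xb @ w, y)
print(f"Smooth L1: {initial_loss:.3f} -> {final_loss:.3f}")
```

Smooth L1 behaves like squared error for small residuals and like absolute error for large ones, which limits the influence of outlying PFT values on the gradient.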

Statistical analysis

Data analysis was conducted with SPSS 26.0. Continuous variables (age, height, weight) are expressed as mean ± standard deviation, and categorical variables (sex, smoking history, GOLD stage) as number of cases (percentage).
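For reference, both summary formats can be reproduced with the counts reported in Table 1. The sketch assumes the sample standard deviation (`ddof=1`), which is what SPSS reports.

```python
import numpy as np

def mean_sd(values, decimals=2):
    """Format continuous data as mean ± sample SD."""
    arr = np.asarray(values, dtype=float)
    return f"{arr.mean():.{decimals}f} ± {arr.std(ddof=1):.{decimals}f}"

def count_pct(n, total, decimals=2):
    """Format categorical data as n(percentage), as in Table 1."""
    return f"{n}({100 * n / total:.{decimals}f})"

total = 2408
gold_counts = {"GOLD 0": 740, "GOLD 1": 365, "GOLD 2": 792, "GOLD 3": 360, "GOLD 4": 151}
for stage, n in gold_counts.items():
    print(stage, count_pct(n, total))
print("Male", count_pct(1479, total))                               # Male 1479(61.42)
print("GOLD 1-4", count_pct(total - gold_counts["GOLD 0"], total))  # GOLD 1-4 1668(69.27)
```

The last line reproduces the 69.27% share of GOLD 1 to 4 cases quoted in the Discussion.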

Python 3.10 was used to calculate the average performance metrics of the model, namely the mean absolute error (MAE), the mean squared error (MSE), and the Pearson correlation coefficient (r), to evaluate its stability and generalization ability. To rigorously evaluate robustness, ten-fold cross-validation was applied to the entire fusion prediction system (focusing on the MLP fusion model). Bland-Altman plots were constructed to analyze the mean difference between predicted and measured values. Classification performance, obtained by applying clinically established cut-offs to the model-predicted values, was assessed by the area under the receiver operating characteristic curve (AUC), accuracy, sensitivity, and specificity. Gradient-weighted class activation mapping (Grad-CAM) [15] was used to generate heat maps that visualize informative regions of chest CT images and explain the model's predictions.
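A sketch of the evaluation loop, under stated assumptions: the features and targets are synthetic, the 1586 cases only mirror the size of the fusion cohort, and ordinary least squares (`fit_predict`, a hypothetical name) stands in for the MLP. MAE, MSE, and r are computed per fold and then averaged across the ten folds.

```python
import numpy as np

def kfold_indices(n, k=10, seed=0):
    """Yield (train, validation) index arrays for k shuffled, non-overlapping folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]

def regression_metrics(y_true, y_pred):
    """MAE, MSE and Pearson r. On the same scale, MSE >= MAE**2 always holds."""
    err = y_pred - y_true
    mae = np.mean(np.abs(err))
    mse = np.mean(err ** 2)
    r = np.corrcoef(y_true, y_pred)[0, 1]
    return mae, mse, r

def fit_predict(X_train, y_train, X_val):
    """Hypothetical stand-in for the MLP fusion model: least squares with intercept."""
    A = np.hstack([X_train, np.ones((len(X_train), 1))])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    return np.hstack([X_val, np.ones((len(X_val), 1))]) @ coef

# Synthetic data: 1586 cases, 5 features, one continuous target
rng = np.random.default_rng(1)
X = rng.normal(size=(1586, 5))
y = X @ rng.normal(size=5) + rng.normal(scale=0.5, size=1586)

scores = [regression_metrics(y[va], fit_predict(X[tr], y[tr], X[va]))
          for tr, va in kfold_indices(len(y), k=10)]
mae, mse, r = np.mean(scores, axis=0)  # average each metric over the ten folds
print(f"MAE={mae:.2f}  MSE={mse:.2f}  r={r:.2f}")
```

Because every case appears in exactly one validation fold, the averaged metrics use all 1586 cases for validation without any case being scored by a model that saw it during fitting.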

Results

Clinical characteristics of the study sample

Table 1 presents the demographic data and functional parameters of the dataset. Among the 2,408 patients included in the study, the average age was 66 ± 12 years. Of these, 1,479 (61.42%) were male and 929 (38.58%) were female. The average height was 164.47 ± 9.21 cm. The average weight was 69.17 ± 19.06 kg. Among the 2,408 participants, 981 (40.74%) were current or former smokers. The average FEV1 was 1.72 ± 0.81 L, the average FVC was 2.68 ± 0.93 L, the average FEV1/FVC was 62.38 ± 13.94%, the average FEV1% was 68.50 ± 25.82%, and the average FVC% was 85.41 ± 21.77%. Cases were classified according to the Global Initiative for Chronic Obstructive Lung Disease (GOLD) classification system, with 30.73% classified as GOLD stage 0, 15.16% as GOLD stage 1, 32.89% as GOLD stage 2, 14.95% as GOLD stage 3, and 6.27% as GOLD stage 4.
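The GOLD stages above follow the standard spirometric grading, sketched below. GOLD formally grades post-bronchodilator values, whereas this study used pre-bronchodilator measurements. The text does not define GOLD 0, so assigning it when FEV1/FVC ≥ 70% is an assumption.

```python
def gold_stage(fev1_fvc_pct, fev1_pct_pred):
    """Spirometric GOLD grade from FEV1/FVC (%) and FEV1 (% of predicted)."""
    if fev1_fvc_pct >= 70.0:
        return 0  # assumption: no airflow limitation is labelled "GOLD 0"
    if fev1_pct_pred >= 80.0:
        return 1  # mild
    if fev1_pct_pred >= 50.0:
        return 2  # moderate
    if fev1_pct_pred >= 30.0:
        return 3  # severe
    return 4      # very severe

print(gold_stage(55.96, 92.99))  # 1 (Patient A in Fig. 4)
print(gold_stage(56.39, 61.70))  # 2 (Patient B in Fig. 4)
```

Applied to the two patients shown in Fig. 4, the rule reproduces their reported stages.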

Table 1.

Baseline characteristics of the study sample

| Characteristic | Mean ± SD / n (%) |
|---|---|
| Age (years) | 66 ± 12 |
| Sex | |
| Male | 1479(61.42) |
| Female | 929(38.58) |
| Height (cm) | 164.47 ± 9.21 |
| Weight (kg) | 69.17 ± 19.06 |
| Smoking status | |
| Smoking | 981(40.74) |
| Non-smoking | 1427(59.26) |
| Lung function | |
| FEV1 (L) | 1.72 ± 0.81 |
| FVC (L) | 2.68 ± 0.93 |
| FEV1/FVC (%) | 62.38 ± 13.94 |
| FEV1% (%) | 68.50 ± 25.82 |
| FVC% (%) | 85.41 ± 21.77 |
| GOLD stage | |
| GOLD 0 | 740(30.73) |
| GOLD 1 | 365(15.16) |
| GOLD 2 | 792(32.89) |
| GOLD 3 | 360(14.95) |
| GOLD 4 | 151(6.27) |

FEV1 1-s forced expiratory volume, FVC forced vital capacity, FEV1/FVC ratio of FEV1 to FVC, FEV1% FEV1 as a percentage of the predicted value, FVC% FVC as a percentage of the predicted value, GOLD Global Initiative for Chronic Obstructive Lung Disease

Prediction performance of the multimodal feature fusion model

Table 2 and Fig. 2 summarize the prediction performance of the proposed model on the dataset. Predicted and measured values were strongly correlated for FEV1, FVC, FEV1/FVC, and FEV1%, and moderately correlated for FVC%. The MAE and MSE were 0.34 and 0.20 for FEV1, 0.42 and 0.31 for FVC, 6.64 and 0.73 for FEV1/FVC, 13.42 and 3.01 for FEV1%, and 13.33 and 2.97 for FVC%.

Table 2.

Prediction performance of the multimodal feature fusion model

| Pulmonary Function Test | MAE | MSE | r |
|---|---|---|---|
| FEV1 | 0.34 | 0.20 | 0.84 |
| FVC | 0.42 | 0.31 | 0.81 |
| FEV1/FVC | 6.64 | 0.73 | 0.77 |
| FEV1% | 13.42 | 3.01 | 0.73 |
| FVC% | 13.33 | 2.97 | 0.61 |

FEV1 1-s forced expiratory volume, FVC forced vital capacity, FEV1/FVC ratio of FEV1 to FVC, FEV1% FEV1 as a percentage of the predicted value, FVC% FVC as a percentage of the predicted value, MAE mean absolute error, MSE mean squared error, r Pearson correlation coefficient

Fig. 2.

Regression performance of the model for FEV1 (A), FVC (B), FEV1/FVC (C), FEV1% (D), and FVC% (E). The Bland-Altman plots show the systematic bias (red dashed line) and the 95% limits of agreement (blue dashed lines)
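The Bland-Altman quantities plotted in Fig. 2 can be computed as below. The limits of agreement are bias ± 1.96 standard deviations of the differences (an interval for individual differences, not a confidence interval for the bias). The sign convention (predicted minus measured) and the toy values are assumptions.

```python
import numpy as np

def bland_altman(measured, predicted):
    """Return means, differences, bias and 95% limits of agreement (bias ± 1.96 SD)."""
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    means = (measured + predicted) / 2.0  # x-axis of the plot
    diffs = predicted - measured          # y-axis (assumed sign: predicted minus measured)
    bias = diffs.mean()                   # systematic bias line
    sd = diffs.std(ddof=1)
    return means, diffs, bias, (bias - 1.96 * sd, bias + 1.96 * sd)

# Toy FEV1 values in litres (illustrative only)
_, _, bias, (lower, upper) = bland_altman([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 4.2])
print(f"bias={bias:.2f} L, limits of agreement=({lower:.2f}, {upper:.2f}) L")
```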

To explore the relationship between predicted FEV1% and emphysema severity, Pearson correlation analysis was performed between a quantitative emphysema index, the percentage of low-attenuation areas below -950 HU (LAA-950%), and predicted FEV1%. Preliminary results showed a statistically significant but weak negative correlation between the two variables (r = -0.29, p < 0.001), suggesting that predicted FEV1% tends to decrease as emphysema severity increases. However, severe pulmonary function impairment is not solely attributable to emphysema; small airway disease also plays a significant role. The weak correlation therefore suggests that the model's PFT predictions may draw on other imaging features associated with small airway pathology (such as luminal narrowing and airway wall thickening), rather than relying solely on the degree of emphysema.
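LAA-950% and its correlation with predicted FEV1% can be sketched as follows. Whether voxels exactly at -950 HU count as low-attenuation differs between implementations, and the strict `<` used here is an assumption; `scipy.stats.pearsonr` would additionally return the p-value reported above. The cohort values are synthetic.

```python
import numpy as np

def laa950_percent(hu, lung_mask, threshold=-950.0):
    """Percentage of lung voxels with attenuation below `threshold` HU."""
    lung = np.asarray(hu, dtype=float)[np.asarray(lung_mask) > 0]
    return 100.0 * np.count_nonzero(lung < threshold) / lung.size

def pearson_r(x, y):
    return float(np.corrcoef(x, y)[0, 1])

# Toy lung: 2 of 5 lung voxels fall below -950 HU; the last voxel is outside the mask
print(laa950_percent(np.array([-1000, -960, -900, -800, -500, 50]),
                     np.array([1, 1, 1, 1, 1, 0])))  # 40.0

# Synthetic cohort (illustrative only): predicted FEV1% falling weakly with LAA-950%
rng = np.random.default_rng(0)
laa = rng.uniform(0, 30, size=500)
pred_fev1_pct = 75 - 0.4 * laa + rng.normal(0, 20, size=500)
print(round(pearson_r(laa, pred_fev1_pct), 2))
```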

Risk prediction of multimodal feature fusion model

Classification performance for the high-risk group is summarized in Table 3, and the receiver operating characteristic curves are shown in Fig. 3. The high-risk group, indicating the presence of airflow limitation, was defined by the following cut-offs: FEV1/FVC < 70%, FEV1% < 80%, FVC% < 80%, FEV1 < 2.5 L, and FVC < 3 L.
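The cut-offs above turn each measured and predicted parameter into a binary high-risk label, from which the metrics in Table 3 follow. This sketch assumes the predicted value itself serves as the ROC score (a lower predicted value meaning higher risk) and computes AUC as the Mann-Whitney probability instead of calling a plotting library; the toy arrays are illustrative.

```python
import numpy as np

# Cut-offs from the text; a value below the cut-off marks the high-risk group
CUTOFFS = {"FEV1": 2.5, "FVC": 3.0, "FEV1/FVC": 70.0, "FEV1%": 80.0, "FVC%": 80.0}

def auc_rank(labels, scores):
    """P(score of a positive > score of a negative); ties count one half."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def risk_metrics(measured, predicted, cutoff):
    """Assumes both classes occur among the measured labels."""
    predicted = np.asarray(predicted, dtype=float)
    y_true = np.asarray(measured, dtype=float) < cutoff
    y_pred = predicted < cutoff
    tp = np.sum(y_true & y_pred)
    tn = np.sum(~y_true & ~y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    return {
        "AUC": auc_rank(y_true, -predicted),  # lower predicted value = higher risk
        "Accuracy": (tp + tn) / y_true.size,
        "Sensitivity": tp / (tp + fn),
        "Specificity": tn / (tn + fp),
    }

m = risk_metrics(measured=[55.0, 65.0, 72.0, 80.0], predicted=[60.0, 71.0, 68.0, 85.0],
                 cutoff=CUTOFFS["FEV1/FVC"])
print(m)
```

AUC is threshold-free, whereas accuracy, sensitivity, and specificity depend on the single clinical cut-off, which is why the two kinds of metric can rank parameters differently.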

Table 3.

Risk prediction of multimodal feature fusion model

| Pulmonary Function Test | AUC | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|
| FEV1 | 0.94 | 0.92 | 0.97 | 0.68 |
| FVC | 0.91 | 0.84 | 0.91 | 0.69 |
| FEV1/FVC | 0.89 | 0.84 | 0.91 | 0.66 |
| FEV1% | 0.86 | 0.81 | 0.90 | 0.61 |
| FVC% | 0.80 | 0.73 | 0.61 | 0.82 |

FEV1 1-s forced expiratory volume, FVC forced vital capacity, FEV1/FVC ratio of FEV1 to FVC, FEV1% FEV1 as a percentage of the predicted value, FVC% FVC as a percentage of the predicted value, AUC area under the receiver operating characteristic curve

Fig. 3.

The receiver operating characteristic curves of the model for the classification of FEV1 (A), FVC (B), FEV1/FVC (C), FEV1% (D), and FVC% (E) in the dataset

Classification of the high-risk respiratory population achieved AUCs of 0.80 to 0.94 across all PFT parameters: 0.94 for FEV1, 0.91 for FVC, 0.89 for FEV1/FVC, 0.86 for FEV1%, and 0.80 for FVC%. Accuracy was 0.92 for FEV1, 0.84 for FVC, 0.84 for FEV1/FVC, 0.81 for FEV1%, and 0.73 for FVC%.

Interpretation of the model using saliency mapping

To improve the clinical credibility and transparency of the model's decisions, gradient-weighted class activation mapping (Grad-CAM) was applied [16]. Highlighted regions in the heatmap mark the spatial locations of the radiological features the model relies on most when predicting PFT parameters in COPD patients. As shown in Fig. 4, in the axial view the highlighted regions are concentrated mainly in the lung tissue surrounding the bronchi, a region closely related to FEV1, which reflects the degree of airway obstruction, and to FEV1/FVC, which reflects its severity. In the coronal view, high-intensity areas are concentrated predominantly in the lower lung fields, a distribution that corresponds to the typical pattern of lower-lobe emphysema. Notably, recent studies indicate that lower-lobe-predominant emphysema is significantly associated with impaired lung function and an increased risk of acute exacerbations in COPD patients [17, 18].
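The Grad-CAM computation itself is short once the activations of the chosen convolutional layer and the gradients of a predicted PFT value with respect to them are available (in PyTorch these are usually captured with forward and backward hooks). The numpy sketch below, for a 3-D encoder, assumes both arrays are already given; upsampling the map to CT resolution for overlay is omitted.

```python
import numpy as np

def grad_cam_3d(activations, gradients):
    """Grad-CAM for a regression output.

    activations, gradients: arrays of shape (K, D, H, W) for the K feature maps
    of the chosen layer; gradients are d(predicted value)/d(activations).
    """
    weights = gradients.mean(axis=(1, 2, 3))               # alpha_k: global-average-pooled gradients
    cam = np.tensordot(weights, activations, axes=1)       # sum_k alpha_k * A_k -> (D, H, W)
    cam = np.maximum(cam, 0.0)                             # ReLU keeps positively contributing regions
    if cam.max() > 0:
        cam = cam / cam.max()                              # scale to [0, 1] for display
    return cam

rng = np.random.default_rng(0)
acts = rng.random((8, 4, 6, 6))          # hypothetical: 8 feature maps on a 4 x 6 x 6 grid
grads = rng.normal(size=(8, 4, 6, 6))    # hypothetical gradients of predicted FEV1
heatmap = grad_cam_3d(acts, grads)
print(heatmap.shape)
```

For regression, the gradient of the predicted value replaces the class score of the original classification formulation, so highlighted voxels are those whose features push the prediction upward.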

Fig. 4.

Gradient-weighted class activation mapping (Grad-CAM) shows the salient areas where the model predicts pulmonary function test (PFT) parameters. The selected axial and coronal views highlight the distinctive features of the Grad-CAM heatmap in COPD patients. Patient A is a 76-year-old female with GOLD stage 1, FEV1 of 1.52 L, FVC of 2.71 L, FEV1/FVC ratio of 55.96%, FEV1% of 92.99%, and FVC% of 115.49%. Patient B is an 81-year-old male with GOLD stage 2, FEV1 of 1.76 L, FVC of 3.13 L, FEV1/FVC ratio of 56.39%, FEV1% of 61.70%, and FVC% of 80.90%

Discussion

The high readmission rate and risk of disability and death in patients with COPD have become major challenges for global public health [19]. However, the disease progresses insidiously: early small airway lesions (bronchioles with an inner diameter < 2 mm) are characterized by wall thickening and mucus plugging, yet they are difficult to detect in time with conventional CT examination [20, 21]. As a result, many patients are not diagnosed at an early stage, miss the optimal window for intervention, and progress irreversibly. This diagnostic dilemma highlights the need for DL models, which may offer a new way past the bottleneck of early diagnosis.

The model proposed in this study predicts PFT parameters such as FEV1/FVC and FEV1% from chest CT images (the strongest correlation, r = 0.84, was obtained for FEV1), removing the dependence of traditional methods on disease staging. In practice, however, many factors can affect PFT readings, including patients' demographic characteristics and clinical manifestations [22]. This study therefore adopts a multimodal model that fuses CT image features, radiomics features, and clinical information: the radiomics features strengthen the characterization of lung texture and morphology, and the clinical information supplements the CT image analysis. The study exploits the automatic feature learning ability of DL to mine the rich information in CT images. In addition, appropriate preprocessing and a 3D CNN architecture were used to mitigate CT image noise and the difficulty of 3D segmentation, improving the stability and prediction accuracy of the model. The CNN-based DenseNet encoder uses its dense connections to integrate multi-level information about lung lesions in CT images, further improving the fusion model. Intermediate and late fusion strategies were compared, and the better-performing one was selected.

Although DL-based chest CT analysis has made significant progress in COPD, most existing studies have focused on binary disease-screening classification. For example, González et al. [23] diagnosed COPD with a CNN model (AUC = 0.856). Tang et al. [24] used residual networks to detect COPD among smokers in a lung cancer screening cohort (AUC = 0.889). Sun et al. [25] identified COPD with attention-based multiple-instance learning (AUC = 0.934). Zhang et al. [26] used DenseNet to detect COPD (AUC = 0.853) and to grade it by GOLD stage. Sugimori et al. [27] performed a four-class GOLD grading of COPD with a residual network. In contrast, this study achieved end-to-end prediction of continuous PFT parameters from CT images, which has clinical value for prognosis and treatment. Although the DL model developed by Park et al. [13] predicted PFT parameters from low-dose CT, their database consisted of health check-up participants with only 1% COPD cases, so the model's generalization to real patient populations still needs to be verified. In our study, GOLD stages 1–4 accounted for 69.27% of cases and GOLD stages 3 and 4 for 21.22%, which supports the predictive performance of the DL model in patients with COPD.

The multimodal feature fusion model developed in this study has three distinctive advantages. First, it integrates radiomics features with imaging features and clinical data, making full use of multi-source information. Second, the gated attention mechanism acts as a “task-aware feature selector”, improving multi-task performance while keeping computation efficient. Third, residual learning accommodates different scales: PFT metrics (FEV1, FVC, etc.) differ in units and ranges, and independent residual prediction heads adapt to each metric separately, allowing the model to learn inter-indicator differences and capture more complex mappings. The gated, multi-task residual MLP design retains the general function-approximation ability of MLPs while addressing the challenge of fusing multimodal medical data, advantages that are particularly important in medical image data fusion.

Our study has the following clinical significance. First, analyzing CT images with DL allows quantitative assessment of the spatial distribution of COPD-related changes: imaging features extracted from CT can capture abnormal lung regions associated with COPD that are closely related to PFT results, which helps map structural changes in specific regions onto overall PFT parameters. Second, although the proposed CT-based model has limitations in cost, time, and radiation exposure and is not suitable for repeated monitoring, its real value lies in enabling opportunistic screening. It is particularly suited to two groups: (1) patients who have already undergone chest CT for other reasons (such as lung cancer screening or preoperative examination), and (2) patients who cannot complete PFTs that meet quality-control requirements. For these individuals, the model can provide lung function assessments from existing CT data without additional scans, aiding the early detection of undiagnosed COPD. Third, the model was not developed to replace PFT, the gold standard, with CT. Rather, our core objective was to explore information in CT scans that differs from that provided by PFT, to support more refined phenotyping and risk assessment of COPD in the future. Finally, the model's predictions appear to reflect not only emphysema severity but also imaging features of small airway disease, consistent with the multifactorial pathophysiology of COPD.

This study has several limitations. First, it is a single-center retrospective study, which may introduce selection bias; the findings need to be verified in multicenter prospective studies. Second, the sample covered the full range of COPD stages (GOLD 0–4), so the PFT values spanned a wide range with large standard deviations; given the relatively small sample, model accuracy across this wide range needs further improvement. Third, this study predicted static PFT indicators and did not address changes in lung function or other clinically significant endpoints (such as acute exacerbations or mortality); future studies will build models that predict individual disease trajectories and prognosis. Finally, this study did not examine the effect of COPD subtypes on predictive performance; future studies will incorporate COPD subtypes to improve the accuracy of individualized diagnosis and treatment.

In conclusion, the MLP-based multimodal feature fusion model shows broad application prospects for PFT prediction in COPD patients. Although this study uses PFT indices as the reference standard for validating the model, PFT itself has limitations. The core value of this study lies in uncovering spatial localization information and microstructural features in CT images that go beyond PFT. Future work will examine the association between the deep features extracted by this model and clinical outcomes (such as acute exacerbations) to further enhance its clinical applicability.

Supplementary Information

Acknowledgements

Not applicable.

Abbreviations

- COPD: Chronic obstructive pulmonary disease
- PFT: Pulmonary function test
- DL: Deep learning
- CT: Computed tomography
- FEV1: 1-s forced expiratory volume
- FVC: Forced vital capacity
- FEV1/FVC: Ratio of FEV1 to FVC
- FEV1%: FEV1 as a percentage of the predicted value
- FVC%: FVC as a percentage of the predicted value
- Grad-CAM: Gradient-weighted class activation mapping
- GOLD: Global Initiative for Chronic Obstructive Lung Disease

Authors’ contributions

CRediT authorship contribution statement: Ruihan Li: Writing – review & editing, Writing – original draft, Software, Resources, Project administration, Methodology, Investigation, Formal analysis, Data curation, Conceptualization. Hui Guo*: Writing – review & editing, Supervision, Methodology, Conceptualization. Qian Wu: Resources, Investigation. Jinhuan Han: Resources, Investigation. Shuqin Kang: Resources, Investigation.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Data availability

The datasets used and analyzed in the current study are available from the corresponding author upon reasonable request.

Declarations

Ethics approval and consent to participate

This study was conducted in accordance with the Declaration of Helsinki and approved by the Medical Ethics Committee of the Fourth Clinical College of Xinjiang Medical University, with informed consent requirements waived (2025XE-GS115).

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Vestbo J, Hurd SS, Agustí AG, et al. Global strategy for the Diagnosis, Management, and prevention of chronic obstructive pulmonary disease GOLD executive summary. Am J Respir Crit Care Med. 2013;187(4):347–65. [DOI] [PubMed] [Google Scholar]

- 2.Agustí A, Celli BR, Criner GJ, et al. Global initiative for chronic obstructive lung disease 2023 report: GOLD executive summary. Eur Resp J. 2023;61(4):26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Yang X. Application and prospects of artificial intelligence technology in early screening of chronic obstructive pulmonary disease at primary healthcare institutions in China. Int J Chronic Obstr Pulm Dis. 2024;19:1061–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Yin P, Wu JY, Wang LJ, et al. The burden of COPD in China and its provinces: findings from the global burden of disease study 2019. Front Public Health. 2022;10:11. [DOI] [PMC free article] [PubMed] [Google Scholar]

5. Jia JN, Marges ER, De Vries-Bouwstra JK, et al. Automatic pulmonary function estimation from chest CT scans using deep regression neural networks: the relation between structure and function in systemic sclerosis. IEEE Access. 2023;11:135272–82.

6. Yang YJ, Li W, Kang Y, et al. A novel lung radiomics feature for characterizing resting heart rate and COPD stage evolution based on radiomics feature combination strategy. Math Biosci Eng. 2022;19(4):4145–65.

7. Lynch DA, Austin JHM, Hogg JC, et al. CT-definable subtypes of chronic obstructive pulmonary disease: a statement of the Fleischner Society. Radiology. 2015;277(1):192–205.

8. Chen WJ, Sin DD, FitzGerald JM, et al. An individualized prediction model for long-term lung function trajectory and risk of COPD in the general population. Chest. 2020;157(3):547–57.

9. Pu Y, Zhou XX, Zhang D, et al. Re-defining high risk COPD with parameter response mapping based on machine learning models. Int J Chronic Obstr Pulm Dis. 2022;17:2471–83.

10. Yoshida A, Kai C, Futamura H, et al. Spirometry test values can be estimated from a single chest radiograph. Front Med. 2024;11:1335958.

11. Wallace GMF, Winter JH, Winter JE, et al. Chest X-rays in COPD screening: are they worthwhile? Respir Med. 2009;103(12):1862–5.

12. Zunair H, Ben Hamza A. Sharp U-Net: depthwise convolutional network for biomedical image segmentation. Comput Biol Med. 2021;136:13.

13. Park H, Yun JHY, Lee SM, et al. Deep learning-based approach to predict pulmonary function at chest CT. Radiology. 2023;307(2):11.

14. Willemink MJ, Koszek WA, Hardell C, et al. Preparing medical imaging data for machine learning. Radiology. 2020;295(1):4–15.

15. Selvaraju RR, Cogswell M, Das A, et al. Grad-CAM: visual explanations from deep networks via gradient-based localization. Int J Comput Vis. 2020;128(2):336–59.

16. Bhati D, Neha F, Amiruzzaman M. A survey on explainable artificial intelligence (XAI) techniques for visualizing deep learning models in medical imaging. J Imaging. 2024;10(10):239.

17. Kurashima K, Takaku Y, Hoshi T, et al. Lobe-based computed tomography assessment of airway diameter, airway or vessel number, and emphysema extent in relation to the clinical outcomes of COPD. Int J Chronic Obstr Pulm Dis. 2015;10:1027–33.

18. Boueiz A, Chang YL, Cho MH, et al. Lobar emphysema distribution is associated with 5-year radiological disease progression. Chest. 2018;153(1):65–76.

19. Hurst JR, Vestbo J, Anzueto A, et al. Susceptibility to exacerbation in chronic obstructive pulmonary disease. N Engl J Med. 2010;363(12):1128–38.

20. Bhatt SP, Washko GR, Hoffman EA, et al. Imaging advances in chronic obstructive pulmonary disease: insights from the genetic epidemiology of chronic obstructive pulmonary disease (COPDGene) study. Am J Respir Crit Care Med. 2019;199(3):286–301.

21. Galbán CJ, Han MLK, Boes JL, et al. Computed tomography-based biomarker provides unique signature for diagnosis of COPD phenotypes and disease progression. Nat Med. 2012;18(11):1711–.

22. Ma YN, Wang J, Dong GH, et al. Predictive equations using regression analysis of pulmonary function for healthy children in Northeast China. PLoS ONE. 2013;8(5):6.

23. Gonzalez G, Ash SY, Vegas-Sanchez-Ferrero G, et al. Disease staging and prognosis in smokers using deep learning in chest computed tomography. Am J Respir Crit Care Med. 2018;197(2):193–203.

24. Tang LYW, Coxson HO, Lam S, et al. Towards large-scale case-finding: training and validation of residual networks for detection of chronic obstructive pulmonary disease using low-dose CT. Lancet Digit Health. 2020;2(5):e259–67.

25. Sun J, Liao X, Yan Y, et al. Detection and staging of chronic obstructive pulmonary disease using a computed tomography–based weakly supervised deep learning approach. Eur Radiol. 2022;32(8):5319–29.

26. Zhang L, Jiang BB, Wisselink HJ, et al. COPD identification and grading based on deep learning of lung parenchyma and bronchial wall in chest CT images. Br J Radiol. 2022;95(1133):9.

27. Sugimori H, Shimizu K, Makita H, et al. A comparative evaluation of computed tomography images for the classification of spirometric severity of the chronic obstructive pulmonary disease with deep learning. Diagnostics. 2021;11(6):929.
