Abstract

Motivation

Identifying promising therapeutic targets from thousands of genes in transcriptomic studies remains a major bottleneck in biomedical research. While large language models (LLMs) show potential for gene prioritization, they suffer from hallucination and lack systematic validation against expert knowledge.

Results

We developed a two-stage computational framework that combines LLM-based screening with literature validation for systematic gene prioritization. Starting with 10 824 genes from the BloodGen3 repertoire, we applied multi-criteria evaluation for sepsis relevance, followed by retrieval-augmented generation (RAG) using 6346 curated sepsis publications. A novel faithfulness evaluation system verified that LLM predictions aligned with the retrieved literature evidence. The framework identified 609 sepsis-relevant genes with >94% filtering efficiency, showing strong enrichment for inflammatory pathways including TNF-α signaling, complement activation, and interferon responses. Literature validation yielded 30 ultra-high-confidence therapeutic candidates, including both established sepsis genes (IL10, TREM1, S100A9, NLRP3) and novel targets warranting investigation. Benchmark validation against expert-curated databases achieved 71.2% recall, with a systematic correlation between computational confidence and evidence quality. The final candidate set balanced discovery (11 novel genes) with validation (19 known genes) while maintaining biological coherence throughout the filtering process. This framework demonstrates that rigorous methodology can transform unreliable LLM outputs into systematically validated biological insights. By combining computational efficiency with literature grounding, the approach provides a practical tool for prioritizing experimental validation efforts. Its modular design allows adaptation to other diseases by substituting the knowledge base, offering a systematic approach to literature-guided biomarker discovery.

Availability and implementation

Source code and implementation details are available at https://github.com/taushifkhan/llm-geneprioritization-framework, the vector database at https://doi.org/10.5281/zenodo.15802241, and an interactive demonstration at https://llm-geneprioritization.streamlit.app/.

1 Introduction

Candidate gene prioritization plays a crucial role in identifying potential biomarkers from large-scale molecular profiling data. Systems-scale profiling technologies, such as transcriptomics, have revolutionized biomedical research by simultaneously measuring tens of thousands of analytes, leading to significant advances in oncology (Golub et al. 1999, van’t Veer et al. 2002), autoimmunity (Bennett et al. 2003, Chaussabel et al. 2008), and infectious diseases (Rinchai et al. 2020a, 2022). However, translating these findings into actionable clinical insights requires identifying relevant analyte panels and designing targeted profiling assays (Geiss et al. 2008, Spurgeon et al. 2008). Targeted transcriptional profiling assays enable precise, quantitative assessments of tens to hundreds of transcripts (Rinchai et al. 2020b, Altman et al. 2021), offering cost-effectiveness, rapid turnaround times, and high-throughput capability (Li et al. 2014, Chaussabel and Pulendran 2015, Brummaier et al. 2020). The critical challenge lies in selecting relevant candidate genes from the large volumes of biomedical information generated by systems-scale profiling technologies.

Traditional knowledge-driven methods struggle to process the vast literature efficiently enough to identify promising candidates. Gene ontologies and curated pathways provide valuable information, but they rely on static knowledge bases that may not capture current research findings and may miss relevant associations buried within the literature (Rinchai and Chaussabel 2022, Abbas et al. 2024). Manual curation, though thorough, is time-intensive and may lack comprehensive coverage given the sheer volume of information. Computational approaches have emerged to address these limitations. Methods such as ProGENI integrate protein–protein and genetic interactions with expression data for drug response prediction (Emad et al. 2017), while the Monarch Initiative combines multi-species data for variant prioritization (Putman et al. 2024). However, recent applications of large language models (LLMs) to biomedical gene prioritization have shown mixed results. Kim et al. achieved only 16.0% accuracy with GPT-4 for phenotype-driven gene prioritization in rare genetic disorders, lagging behind traditional bioinformatics tools (Kim et al. 2024). These limitations highlight the need for more sophisticated approaches that address fundamental challenges in LLM-based biomedical inference. LLMs such as GPT-4, Claude, and PaLM have demonstrated remarkable natural language understanding (Singhal et al. 2023, Abbas et al. 2024, Deng et al. 2024); trained on vast amounts of text, they can synthesize diverse information sources, including the scientific literature. However, naive LLM approaches face significant limitations in biomedicine: potential hallucinations, reliance on training data snapshots that may miss current knowledge, and difficulty providing verifiable, literature-grounded justifications.
Recent advances in retrieval-augmented generation (RAG) and Chain-of-Thought reasoning offer promising solutions (Chu et al. 2024). RAG systems dynamically retrieve and incorporate relevant literature, providing current and verifiable evidence for gene prioritization. Combined with faithfulness evaluation techniques that verify model outputs against the retrieved evidence, these approaches can substantially improve reliability and interpretability. Chain-of-Thought reasoning enables multi-perspective evaluation and structured synthesis of competing information sources.

We previously demonstrated the utility of LLMs in manual candidate gene prioritization (Toufiq et al. 2023, Syed Ahamed Kabeer et al. 2025), focusing on circulating erythroid cell blood transcriptional signatures associated with respiratory syncytial virus disease severity (Rinchai et al. 2020a), vaccine response (Rinchai et al. 2022), and metastatic melanoma (Rinchai et al. 2020a). In a benchmark of four LLMs across multiple criteria, GPT-4 and Claude performed best, showing consistent scoring (correlation coefficients > 0.8), strong alignment with manual literature curation, and evidence-based justifications. This work laid the foundation for automated gene prioritization while highlighting the need for systematic validation and evidence verification (Toufiq et al. 2023, Subba et al. 2024). Building on it, we developed a comprehensive framework that integrates multiple advanced NLP techniques to address the limitations of naive LLM use. The framework combines RAG over curated biomedical literature, systematic faithfulness evaluation to reduce hallucinations, and Chain-of-Thought reasoning for structured evidence synthesis. This multi-layered approach enables literature-grounded gene prioritization with systematic validation against external reference standards and assessment of functional biological coherence, addressing three key challenges: verifiable evidence grounding, systematic uncertainty handling, and scalable processing that preserves interpretability.

Integrating these NLP techniques into a validated candidate gene prioritization pipeline goes beyond routine LLM applications. The framework enables systematic processing of extensive module repertoires such as BloodGen3 (Altman et al. 2021) while providing robust validation through external benchmarking, cross-model comparison, and biological coherence assessment. In doing so, it accelerates the translation of systems-scale profiling data into validated, literature-supported targeted assays for clinical and research applications.

2 Materials and methods

2.1 Automated gene prioritization framework

We developed a comprehensive workflow that queries LLMs for automated gene prioritization, using LlamaIndex (v0.12.37) for RAG development. Initial naive LLM runs were conducted with GPT-4T (April 2024) and GPT-4o (April 2025); GPT-4o subsequently served as the RAG synthesizer and final arbiter. The framework incorporates RAG, faithfulness evaluation, and Chain-of-Thought reasoning, with Ollama (v0.4.8) providing local access to open-source models (in particular Phi-4 for faithfulness evaluation). Biomedical named entity recognition used SpaCy (v3.7.5) (Montani et al. 2023). The pipeline integrates prompt generation, model communication, response processing, and data storage, and was implemented in Python 3.8 with the OpenAI API (v1.75). A flow chart of the pipeline is given in Fig. 1.

Figure 1.

Computational framework for sepsis gene prioritization: multi-stage LLM-based evaluation with literature validation and multi-dimensional optimization. The framework processes input gene sets through progressive filtering and validation stages to identify high-confidence sepsis therapeutic targets. The workflow was first run on the 52-gene sepsis benchmark set (results in Fig. 2) before being applied to the full BloodGen3 compendium. Stage 1: zero-shot evaluation with a naive LLM across eight sepsis-related criteria (including pathogenesis association, immune response, biomarker potential, and drug target feasibility), applied to the BloodGen3 gene set (>10K genes). Genes meeting scoring thresholds undergo clustering and multi-modal evaluation, generating Priority Set 1 (PS1, 609 genes). Stage 2: a RAG-enhanced evaluation system processes PS1 genes through a sepsis-specific knowledge base (∼6K literature sources, >10K abstracts) using SPECTER2 embeddings and the LlamaIndex framework. Retrieved literature undergoes faithfulness evaluation, and RAG-validated responses are combined with naive LLM outputs in the HybridLLM approach using Chain-of-Thought reasoning. Stage 3: literature-validated genes (Priority Set 2, PS2, 442 genes) undergo functional enrichment analysis and multi-dimensional clustering. PCA-based optimization identifies the top-performing gene cluster, generating the ultra-high-confidence Priority Set 3 (PS3, 82 genes) and the final candidate set (30 genes). Final validation: PS3 candidates undergo manual curation and deep-search verification to ensure robust evidence support. Color coding distinguishes processing stages: computational evaluation, literature validation, functional optimization, and manual verification. The progressive filtering approach reduces 10 824 genes to 30 high-confidence therapeutic targets through systematic computational assessment, literature grounding, and multi-dimensional optimization.

2.2 Gene scoring and prompt design

Prompts elicited gene information, including the official name, a function summary, and quantitative scores (0–10 scale) across biomedically relevant criteria. Scores followed a fixed rubric: 0 = no evidence; 1–3 = very limited evidence; 4–6 = some evidence requiring validation; 7–8 = good evidence; 9–10 = strong evidence. For sepsis prioritization, we assessed eight criteria: pathogenesis association, host immune response, organ dysfunction, circulating leukocyte biology, clinical biomarker use, blood transcriptional biomarker potential, drug target status, and therapeutic relevance. Complete templates are in Supplementary Methods, available as supplementary data at Bioinformatics online (Section 3).
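The rubric and criteria above can be expressed as a small helper, shown here as a minimal Python sketch. The criterion names and score bands come from this section; the prompt wording, the helper names (`rubric_label`, `build_scoring_prompt`), and the JSON answer format are illustrative assumptions, not the authors' templates (those are given in the Supplementary Methods).

```python
# Sketch of the 0-10 evidence rubric and a per-gene scoring prompt.
# Criterion names follow the text; prompt wording and output format are hypothetical.

CRITERIA = [
    "pathogenesis association",
    "host immune response",
    "organ dysfunction",
    "circulating leukocyte biology",
    "clinical biomarker use",
    "blood transcriptional biomarker potential",
    "drug target status",
    "therapeutic relevance",
]


def rubric_label(score: int) -> str:
    """Map an integer score (0-10) to the rubric's evidence description."""
    if not 0 <= score <= 10:
        raise ValueError(f"score out of range: {score}")
    if score == 0:
        return "no evidence"
    if score <= 3:
        return "very limited evidence"
    if score <= 6:
        return "some evidence requiring validation"
    if score <= 8:
        return "good evidence"
    return "strong evidence"


def build_scoring_prompt(gene: str) -> str:
    """Assemble a hypothetical zero-shot prompt asking for name, summary and scores."""
    criteria = "\n".join(f"- {c}" for c in CRITERIA)
    return (
        f"For the human gene {gene}, give its official name, a short function "
        "summary, and a score from 0 to 10 for each criterion below "
        "(0 = no evidence, 1-3 = very limited, 4-6 = some evidence requiring "
        "validation, 7-8 = good, 9-10 = strong), with a brief justification.\n"
        f"Criteria for sepsis relevance:\n{criteria}\n"
        "Answer as JSON with keys: name, summary, scores."
    )
```

Keeping the rubric bands in one function means the same boundaries can be reused when LLM answers are parsed back into scores.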

2.3 RAG pipeline implementation

2.3.1 Knowledge base construction

We curated 6346 sepsis-related documents (4441 articles and 1905 reviews, published 1990–2025) from NCBI PubMed Open Access, filtered with OpenAlex for a high citation percentile (>0.8) (Fig. 1, available as supplementary data at Bioinformatics online). To extend coverage to subscription-only literature, we supplemented this collection with 9557 abstracts from relevant paywalled sepsis publications. Documents underwent preprocessing (reference removal, chunk segmentation, biomedical NER), embedding with SPECTER2 (allenai/specter2_base), and storage in ChromaDB with metadata tagging. Details of knowledge base curation and the vector database are given in Supplementary Methods, available as supplementary data at Bioinformatics online (Section 1).
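As an illustration of the preprocessing step, the sketch below removes a trailing reference list and splits the remaining text into overlapping word windows before embedding. It is a minimal Python sketch under stated assumptions: the heading heuristic, the 200-word chunk size, and the 40-word overlap are hypothetical choices, and the NER, SPECTER2 embedding, and ChromaDB storage steps are not shown.

```python
import re
from typing import List


def strip_references(text: str) -> str:
    """Drop everything from a standalone 'References'/'Bibliography' heading onward.

    Heuristic assumption: the reference list is the last section of the document.
    """
    match = re.search(r"^\s*(references|bibliography)\s*$", text,
                      flags=re.IGNORECASE | re.MULTILINE)
    return text[:match.start()] if match else text


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into overlapping windows of `size` words (illustrative defaults)."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # last window already reaches the end
            break
    return chunks
```

Overlapping windows reduce the chance that a gene mention and its supporting sentence are split across two chunks that are retrieved independently.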

2.3.2 Retrieval and synthesis

For each gene-query instance, we implemented two-stage retrieval: vector similarity search (top_k = 25) followed by cross-encoder reranking (SentenceTransformerRerank, top_k = 10). Retrieved context was formatted into structured prompts for GPT-4o (temperature = 0.1) with explicit source attribution, and each query was processed in isolation to prevent cross-contamination between instances.
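The two-stage retrieval can be sketched in plain Python. In this sketch, embeddings are assumed to be precomputed vectors, and `rerank_score` is a hypothetical stand-in for the cross-encoder; in the described pipeline, that role was played by LlamaIndex's SentenceTransformerRerank. Only the top_k values of 25 and 10 come from the text.

```python
import math
from typing import Callable, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def two_stage_retrieve(
    query_vec: Sequence[float],
    query_text: str,
    chunks: List[Tuple[str, Sequence[float]]],  # (chunk text, precomputed embedding)
    rerank_score: Callable[[str, str], float],  # hypothetical cross-encoder stand-in
    first_k: int = 25,
    final_k: int = 10,
) -> List[str]:
    # Stage 1: dense similarity search keeps the first_k closest chunks.
    candidates = sorted(chunks, key=lambda c: cosine(query_vec, c[1]), reverse=True)[:first_k]
    # Stage 2: rescore each (query, chunk) pair jointly and keep the best final_k.
    reranked = sorted(candidates, key=lambda c: rerank_score(query_text, c[0]), reverse=True)
    return [text for text, _ in reranked[:final_k]]
```

The cheap vector search narrows the pool so that the more expensive pairwise reranker only has to score 25 candidates per query.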

2.3.3 Faithfulness evaluation

We conducted comparative faithfulness analysis using two independent evaluators (Phi-4 and GPT-o3-mini) on a representative set of 2928 gene-query instances from 399 genes (Fig. 2, available as supplementary data at Bioinformatics online). Each GPT-4o justification received a binary classification (“Pass”/“Fail”) from both evaluators. Dual-evaluator agreement reached 71.94% overall, with 90.6% agreement in high-confidence cases (Table 1 and Fig. 3, available as supplementary data at Bioinformatics online). Phi-4 was selected as the primary evaluator based on: (i) architectural independence from GPT-4o, reducing bias; (ii) consistent alignment with GPT-o3-mini in strong-evidence cases; (iii) lower variance in ambiguous cases; and (iv) local deployment via Ollama, enabling cost-effective, reproducible evaluation. Only faithfulness-passing instances proceeded to hybrid evaluation. Details of the evaluation are given in Supplementary Methods, available as supplementary data at Bioinformatics online (Section 2).
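Dual-evaluator agreement, as reported above, is the share of instances on which both evaluators return the same verdict. The sketch below is minimal and assumes that verdicts are stored as parallel lists of "Pass"/"Fail" strings. The optional `mask`, which restricts the comparison to high-confidence cases, is a hypothetical mechanism, because how those cases were selected is described only in the Supplementary Methods.

```python
from typing import Optional, Sequence


def percent_agreement(a: Sequence[str], b: Sequence[str],
                      mask: Optional[Sequence[bool]] = None) -> float:
    """Percentage of instances where two evaluators gave the same Pass/Fail label.

    `mask` (hypothetical) restricts the comparison to a subset of instances.
    """
    if len(a) != len(b):
        raise ValueError("label lists must have equal length")
    pairs = [(x, y) for i, (x, y) in enumerate(zip(a, b)) if mask is None or mask[i]]
    if not pairs:
        raise ValueError("no instances to compare")
    return 100.0 * sum(x == y for x, y in pairs) / len(pairs)
```

For example, `percent_agreement(phi4_labels, o3_labels)` gives the overall figure, and passing a boolean mask gives the agreement within the selected subset.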

2.3.4 Chain-of-Thought hybrid reasoning

We developed a hybrid evaluation strategy that synthesizes naive LLM knowledge (GPT-4o) with retrieved contextual evidence (RAG) to resolve divergent inferences and persistent ambiguities. The Chain-of-Thought framework employed three structured roles: (i) a Naive LLM Critic assessing initial predictions for assumptions and overconfidence, (ii) a Retrieved Evidence Analyst evaluating the quality and specificity of contextual support, and (iii) a Final Arbiter synthesizing both perspectives, preferring strong retrieved evidence and reasoning explicitly about discrepancies. This produced unified outputs: a decision classification (High/Medium/Low), a recalibrated score (0–10), and a detailed scientific explanation. All prompts used in this work are provided in the Supplementary Methods, available as supplementary data at Bioinformatics online (Section 3).
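The sketch below assembles a hybrid prompt and validates the arbiter's structured output, which makes the three-role structure concrete. The role descriptions follow this section. The prompt text, the JSON output schema, and the names `build_hybrid_prompt`, `parse_verdict`, and `HybridVerdict` are assumptions for illustration and are not the prompts used in this work.

```python
import json
from dataclasses import dataclass
from typing import Sequence

# Role instructions paraphrased from the text; exact wording is hypothetical.
ROLES = (
    "Naive LLM Critic: check the initial prediction for assumptions and overconfidence.",
    "Retrieved Evidence Analyst: judge the quality and specificity of the retrieved support.",
    "Final Arbiter: weigh both views, prefer strong retrieved evidence, explain discrepancies.",
)


@dataclass
class HybridVerdict:
    decision: str     # High / Medium / Low
    score: int        # recalibrated 0-10 score
    explanation: str


def build_hybrid_prompt(gene: str, criterion: str, naive_answer: str,
                        evidence: Sequence[str]) -> str:
    passages = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(evidence))
    steps = "\n".join(f"{i + 1}. {r}" for i, r in enumerate(ROLES))
    return (
        f"Gene: {gene}\nCriterion: {criterion}\n"
        f"Naive LLM answer:\n{naive_answer}\n"
        f"Retrieved evidence:\n{passages}\n"
        f"Reason step by step in three roles:\n{steps}\n"
        'Return JSON: {"decision": "High|Medium|Low", "score": 0-10, "explanation": "..."}'
    )


def parse_verdict(raw: str) -> HybridVerdict:
    """Parse and sanity-check the arbiter's JSON answer (assumed schema)."""
    data = json.loads(raw)
    decision, score = data["decision"], int(data["score"])
    if decision not in {"High", "Medium", "Low"} or not 0 <= score <= 10:
        raise ValueError(f"malformed verdict: {data}")
    return HybridVerdict(decision, score, data["explanation"])
```

Validating the output against a fixed schema lets malformed arbiter responses be caught and retried instead of silently entering the score tables.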

2.4 Framework validation through benchmark analysis

To establish framework validity before large-scale application, we systematically evaluated performance against curated sepsis gene sets from two complementary databases. DisGeNET (n = 32) (Piñero et al. 2020) was selected for mechanistic gene–disease associations derived from systematic literature curation with transparent evidence scoring (ScoreGDA), while CTD (n = 48) (Davis et al. 2023) provided therapeutic and chemical interaction relationships with an emphasis on experimental validation. These databases were chosen for their methodological transparency, evidence quality standards, and peer-reviewed curation processes (Supplementary Methods: “Benchmarking Strategy and Dataset Selection,” available as supplementary data at Bioinformatics online). The combined gene sets yielded 52 unique genes with sepsis associations established through expert curation (referred to as the “52-gene sepsis benchmark set”). We also compiled a reference gene set (n = 929 genes) from other known datasets [e.g. Entrez: DisGeNET (Piñero et al. 2020), CTD, Monarch (Putman et al. 2024), OpenTarget (Ochoa et al. 2021), Sepon, and IPA (Krämer et al. 2014)] (Fig. 4, available as supplementary data at Bioinformatics online). Details of gene set curation for evaluation are given in Supplementary Methods, available as supplementary data at Bioinformatics online (Section 4).
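The benchmark set is the union of the two curated lists, and recall is the fraction of benchmark genes that the framework recovers. By inclusion–exclusion, the reported sizes imply that 32 + 48 − 52 = 28 genes are shared by DisGeNET and CTD. The sketch below uses placeholder gene identifiers (G1–G6), not the actual benchmark membership, and `benchmark_recall` is a hypothetical helper name.

```python
from typing import Dict, Iterable


def benchmark_recall(disgenet: Iterable[str], ctd: Iterable[str],
                     identified: Iterable[str]) -> Dict[str, float]:
    """Recall on the combined benchmark and on each source database."""
    d, c, found = set(disgenet), set(ctd), set(identified)
    benchmark = d | c  # union of the two curated gene sets
    return {
        "combined": len(benchmark & found) / len(benchmark),
        "DisGeNET": len(d & found) / len(d),
        "CTD": len(c & found) / len(c),
    }
```

With the reported counts, the combined figure corresponds to 37 / 52 ≈ 0.712.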

2.5 Gene-specific weighted scoring calculation

Each gene received scores (0–10 scale) across the eight sepsis-related evaluation criteria described above (Fig. 5, available as supplementary data at Bioinformatics online). Individual criterion scores were categorized into three confidence bins: High (≥7), Medium (4–6), and Low (≤3). For each gene, the proportion of scores in each bin was calculated by dividing the bin count by the total number of criteria (n = 8). Weighted scores were computed by multiplying each proportion by a confidence-based weight (High × 1.0, Medium × 0.7, Low × 0.3) and summing, giving final scores between 0.0 and 1.0. This weighted aggregation addresses the inherent unreliability of individual LLM scores by rewarding genes that show consistent high-confidence evidence across multiple independent criteria, thereby favoring robust multi-dimensional relevance over potentially misleading individual assessments. The same methodology was applied to the naive LLM, RAG, and hybrid evaluation approaches to enable direct cross-method comparison.
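Written as a formula, the weighted score is S = 1.0·p_High + 0.7·p_Medium + 0.3·p_Low, where each p is the bin count divided by 8. A minimal Python sketch follows. It treats missing criteria as contributing zero when fewer than eight scores are available, for example after faithfulness filtering. This is an assumption, because this section does not specify how missing criteria are handled.

```python
from typing import Sequence

WEIGHTS = {"High": 1.0, "Medium": 0.7, "Low": 0.3}


def confidence_bin(score: float) -> str:
    """High (>=7), Medium (4-6), Low (<=3), as in the text."""
    if score >= 7:
        return "High"
    if score >= 4:
        return "Medium"
    return "Low"


def weighted_score(scores: Sequence[float], n_criteria: int = 8) -> float:
    """Sum of bin weights, each scaled by 1/n_criteria (i.e. weighted bin proportions).

    Assumption: when fewer than n_criteria scores are given, the missing
    criteria add nothing, so the score can fall below 0.3.
    """
    if len(scores) > n_criteria:
        raise ValueError("more scores than criteria")
    return sum(WEIGHTS[confidence_bin(s)] / n_criteria for s in scores)
```

For example, scores of 9, 8, 7, 7, 5, 6, 2, and 0 give four High, two Medium, and two Low bins, so S = 0.5 + 0.175 + 0.075 = 0.75. When all eight scores are present, the lowest attainable value is 0.3 (all Low).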

2.6 Statistical analysis and performance evaluation

Performance assessment used contingency tables for score transition analysis and classification reports computing precision, recall, and F1-scores. Spearman rank correlation coefficients were calculated for continuous comparisons, with statistical significance testing where appropriate.
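Spearman's ρ, which is used throughout the Results, is the Pearson correlation of ranks, with tied values assigned their average rank. The dependency-free sketch below illustrates the calculation. In practice, `scipy.stats.spearmanr` returns both ρ and a P-value, which this sketch does not compute.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks (handles ties)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with >= 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)  # undefined (division by zero) if either input is constant
```

Applied to the per-gene weighted scores and the PubMed publication counts, this yields the kind of ρ values reported in Section 3.1.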

3 Results

Our aim was to convert LLM outputs into a rigorously validated list of sepsis-relevant genes. To do so, we built a three-stage pipeline that (i) assigns composite scores to every gene using a naive LLM, (ii) filters those scores through a RAG step with dual-model faithfulness checks, and (iii) refines the surviving genes by multi-dimensional optimization (Fig. 1). Before deploying the framework at genome scale, we first asked whether it could recover genes that expert curators already accept as sepsis-associated.

3.1 Benchmark-based validation of the framework

To establish framework validity before large-scale application, we evaluated performance against a 52-gene sepsis benchmark set derived from DisGeNET (n = 32 genes) and CTD (n = 48 genes), representing mechanistic and therapeutic associations, respectively. Using our scoring threshold of ≥5 in any evaluation category, the framework achieved 71.2% recall (37/52 genes), with systematic performance variation between databases: DisGeNET 84.4% recall (27/32) and CTD 70.8% recall (34/48) (Table 2, available as supplementary data at Bioinformatics online). Recovery tracked expert evidence quality, with identified genes showing significantly higher curation scores than missed genes (Fig. 2A). The three evaluation approaches exhibited distinct scoring behaviors (Fig. 2B). All methods correlated with PubMed publication frequency, as the expert-curated databases do: the naive LLM showed a strong correlation (Spearman ρ = 0.795, P < .001), while the RAG and hybrid approaches showed moderate correlations (ρ = 0.528 and ρ = 0.524, respectively, both P < .001) (Table 3, available as supplementary data at Bioinformatics online). These correlations are in line with the benchmark database patterns (ScoreGDA ρ = 0.802, CTD ρ = 0.515), indicating that higher scores reflect genes with more robust evidence bases rather than random scoring behavior. Notably, RAG raised the scores of some less-studied genes (AQP1, MIR483), suggesting a capability to surface promising but understudied candidates (Table 2, available as supplementary data at Bioinformatics online).

Figure 2.

Validation of scoring methods on the 52-gene sepsis benchmark set. Panel A displays box plots comparing normalized specificity scores between successfully identified genes and missed genes from CTD and DisGeNET databases, with successfully identified genes showing higher median scores and tighter distributions. Panel B presents a line graph showing weighted scores for 52 benchmark genes across three evaluation methods (NaiveLLM, RAG, and HybridLLM) compared to a PubMed popularity baseline, with genes ordered by publication frequency along the x-axis. The NaiveLLM method tracks closely with publication frequency, while the RAG and HybridLLM methods show more conservative scoring patterns with selective score enhancements for specific genes. Panel C consists of two bar charts comparing top-K overlap performance, showing the percentage of correctly identified top-ranked genes for K values of 5, 10, 15, 20, 25, and 30. The left chart compares against CTD rankings and the right against DisGeNET rankings. NaiveLLM achieves the highest overlap percentages, especially for small K values, while RAG and HybridLLM show lower but gradually improving overlap as K increases, demonstrating different performance trade-offs between ranking accuracy and literature validation stringency.

Top-K overlap analysis revealed distinct method characteristics for practical applications (Fig. 2C). NaiveLLM demonstrated superior ranking performance with 80% overlap for top-5 DisGeNET genes and 50% for CTD genes, maintaining strong performance through top-30 predictions. In contrast, RAG and HybridLLM showed substantially lower top-K performance, with only ∼20% overlap for top-5 predictions in both databases, improving gradually to 45–70% only at top-30. This pattern reveals a fundamental trade-off: while RAG and hybrid approaches sacrifice immediate ranking performance, they provide literature verification and enhanced detection of understudied candidates.
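In this sketch, top-K overlap is the percentage of a database's K highest-ranked genes that also appear among the framework's K highest-ranked genes. That definition, and the choice of ranking inputs (for example, genes ordered by naive weighted score and by database evidence score), are assumptions for illustration. The function below is a minimal Python sketch.

```python
from typing import Sequence


def top_k_overlap(predicted: Sequence[str], reference: Sequence[str], k: int) -> float:
    """Percentage of the reference top-k genes also found in the predicted top-k."""
    if not 0 < k <= min(len(predicted), len(reference)):
        raise ValueError("k must be between 1 and the length of both rankings")
    shared = set(predicted[:k]) & set(reference[:k])
    return 100.0 * len(shared) / k
```

A curve like the one in Fig. 2C would then come from `[top_k_overlap(ranked, db_ranked, k) for k in (5, 10, 15, 20, 25, 30)]`.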

Systematic analysis identified 15 genes consistently missed across both databases, representing coherent biological categories including protein synthesis (RPL9, RPL4), metabolic regulation (OTC, CPS1, ATP5F1A, GC), cellular maintenance (MT1, MT2, MT1A, AQP1, ABCB1B, HSPA9, TGFBI), and regulatory mechanisms (MIR483, TOP2A). These categories primarily represent housekeeping functions that may be underrepresented in sepsis-specific literature despite biological relevance, highlighting the challenge of identifying functionally important but domain-understudied genes. This validation established framework reliability with characterized blind spots in metabolic pathways, providing performance boundaries essential for confident large-scale application.

3.2 Large-scale gene screening and functional validation

Building on the validation results, we conducted genome-wide screening of the BloodGen3 repertoire (10 824 genes) to comprehensively identify sepsis-relevant gene candidates across the human transcriptome.

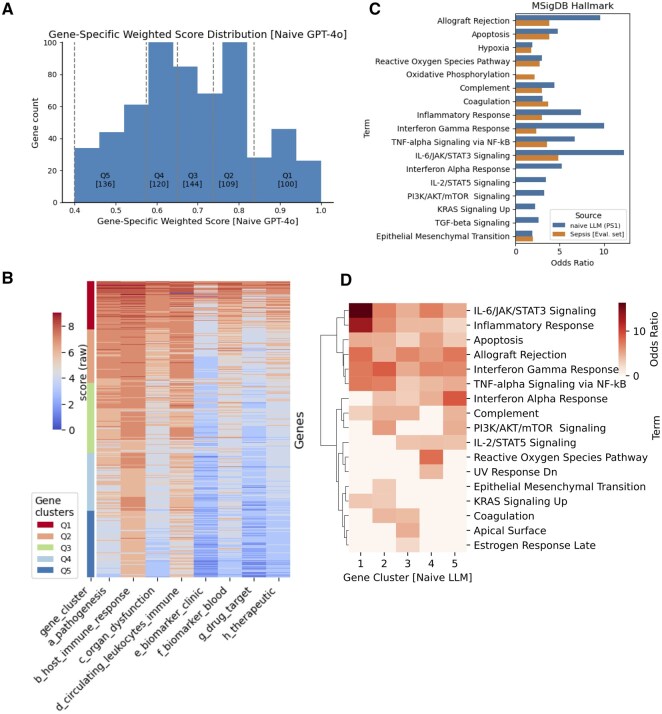

3.2.1 Genome-wide filtering and prioritization

Genome-wide screening with our eight sepsis-related evaluation criteria (pathogenesis association, immune response, clinical biomarker potential, therapeutic relevance, and others) yielded 609 genes, a filtering efficiency of >94% (only 5.6% of the 10 824 genes were retained; Fig. 3A). We designated this filtered set PS1 (Priority Set 1). To analyze the score distribution systematically, we stratified PS1 genes into five quantile-based groups by naive weighted score: Q1 (top 100 genes), Q2 (109 genes), Q3 (144 genes), Q4 (120 genes), and Q5 (bottom 136 genes). This stratification allows systematic examination of performance gradients across the priority gene set.
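The quantile-based grouping can be sketched as follows. This is an assumed implementation: cut points are taken from the sorted score distribution, so genes with tied scores always fall in the same group and group sizes need not be equal. The function name and the cut-point rule are hypothetical and are not the authors' code.

```python
from typing import Dict


def quantile_groups(scores: Dict[str, float], n_groups: int = 5) -> Dict[str, str]:
    """Label genes Q1 (highest scores) to Qn (lowest) using score quantile cut points.

    Hypothetical rule: the g-th cut point is the value at index floor(n*g/n_groups)
    of the ascending-sorted scores, so tied scores always share a group.
    """
    if not scores:
        raise ValueError("no scores given")
    values = sorted(scores.values())
    n = len(values)
    cuts = [values[(n * g) // n_groups] for g in range(1, n_groups)]
    labels = {}
    for gene, s in scores.items():
        level = sum(s >= c for c in cuts)  # 0 = lowest group ... n_groups - 1 = highest
        labels[gene] = f"Q{n_groups - level}"
    return labels
```

Because ties are kept together, groups built this way from weighted scores with many repeated values would not all have the same size.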

Figure 3.

Naïve-LLM scoring of the BloodGen3 gene set and definition of Priority Set 1 (PS1). (A) Gene-specific weighted score distribution from naive LLM evaluation across 10 824 BloodGen3 genes. Histogram shows filtering efficiency with 609 genes (5.6%) meeting threshold criteria for PS1 (Priority Set 1). Score distribution demonstrates clear separation between selected and filtered genes. (B) Scoring heatmap for PS1 genes across eight sepsis-related evaluation criteria. Genes are stratified into quantile-based groups (Q1–Q5) ranked by naive weighted scores. Color intensity represents score magnitude, with Q1 genes (top quantile) showing consistently high scores across multiple criteria and lower quantiles exhibiting more heterogeneous patterns. (C) Functional enrichment analysis comparing PS1 genes against MSigDB Hallmark pathways. Bar plot shows odds ratios (log scale) for significantly enriched pathways (FDR < 0.05), with colors indicating gene set source. PS1 genes demonstrate strong enrichment for sepsis-relevant inflammatory and immune response pathways, validating biological coherence of the computational selection. Comparison with pooled evaluation gene set (gray bars) confirms maintained enrichment specificity. (D) Quantile-specific pathway enrichment heatmap showing functional stratification within PS1. Color intensity represents enrichment significance, with higher-scoring quantiles (Q1–Q2) showing stronger enrichment for core inflammatory pathways and lower quantiles exhibiting more diverse enrichment patterns. This gradient supports score-based prioritization validity.

3.2.2 Cross-model validation

To assess framework robustness across LLM versions, we compared GPT-4o (April 2025) with GPT-4T (April 2024) on a subset of genes. The two models agreed on 74% of gene prioritization decisions (Fig. 6, available as supplementary data at Bioinformatics online), suggesting that the framework produces consistent results across model iterations and supporting the reliability of the large-scale screening approach.

3.2.3 Score distribution patterns across quantiles

The scoring heatmap revealed clear performance gradients across quantile groups (Fig. 3B), as expected. Q1 genes (highest-scoring quantile) demonstrated consistently elevated scores across all eight evaluation criteria, representing the most promising sepsis candidates with strong evidence across multiple domains. Q2 genes maintained moderately high scores with some criteria-specific variations. The middle and lower quantiles (Q3–Q5) exhibited progressively more heterogeneous scoring patterns, with Q5 genes showing the most variable performance across criteria, suggesting these represent candidates with more limited or specialized evidence profiles.

3.2.4 Biological coherence and functional stratification analysis

To demonstrate that PS1 represents a biologically meaningful gene set rather than random selection artifacts, we performed functional enrichment analysis against MSigDB Hallmark pathways (Fig. 3C; Table 4, available as supplementary data at Bioinformatics online). The PS1 genes showed significant enrichment for pathways directly relevant to sepsis pathobiology, including TNF-α signaling via NF-κB, interferon gamma response, complement activation, inflammatory response, and reactive oxygen species pathways (all FDR<0.05). This enrichment pattern confirms that our computational framework successfully captured genes with coherent biological relevance to the queried sepsis context. As a validation control, we compared PS1 enrichment patterns against the pooled evaluation gene set (929 genes) used in our initial framework development. The PS1 genes demonstrated comparable or enhanced enrichment magnitudes for sepsis-relevant pathways, confirming that the genome-wide screening successfully identified contextually appropriate genes rather than introducing systematic bias.
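Over-representation of a Hallmark pathway in a gene list is commonly tested with a one-sided hypergeometric (Fisher's exact) test. A Benjamini–Hochberg correction across pathways then gives FDR values. This section does not state which test or background set was used, so the sketch below is an assumed, dependency-free illustration of that standard approach. It also computes the sample odds ratio of the 2 × 2 table.

```python
from math import comb
from typing import List, Set, Tuple


def enrichment(query: Set[str], pathway: Set[str], universe: Set[str]) -> Tuple[float, float]:
    """Odds ratio and one-sided hypergeometric P-value for `pathway` genes in `query`."""
    query, pathway = query & universe, pathway & universe
    N, K, n = len(universe), len(pathway), len(query)
    k = len(query & pathway)
    # P(X >= k) for X ~ Hypergeometric(population N, successes K, draws n)
    p = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, min(K, n) + 1)) / comb(N, n)
    # 2 x 2 table: rows = in/out of query, columns = in/out of pathway
    a, b = k, n - k
    c, d = K - k, N - K - (n - k)
    odds = (a * d) / (b * c) if b * c else float("inf")
    return odds, p


def benjamini_hochberg(pvalues: List[float]) -> List[float]:
    """BH-adjusted P-values (FDR), returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest P-value down
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted
```

Under these assumptions, a pathway would be reported when its adjusted value falls below 0.05, matching the FDR threshold used in Fig. 3C.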

3.2.5 Score-based functional stratification

Quantile-wise enrichment analysis revealed a functional organization that tracks our scoring hierarchy (Fig. 3D). Higher-scoring quantiles (Q1–Q2) showed stronger enrichment for inflammatory response pathways, including IL-6/JAK/STAT3 signaling, complement activation, and TNF-α responses, consistent with their elevated scores across multiple sepsis-related criteria. Mid-tier quantiles (Q3–Q4) showed moderate enrichment for interferon responses and cellular stress pathways, while lower-scoring genes (Q5) exhibited more heterogeneous enrichment patterns, including epithelial–mesenchymal transition (EMT) and metabolic processes. This functional gradient supports the validity of our score-based prioritization, suggesting that higher computational scores correspond to stronger associations with core inflammatory processes relevant to sepsis, whereas lower-scored genes may represent more peripheral or context-specific mechanisms. Consistent with evidence-based prioritization, PS1 gene clusters showed the expected correlation with publication popularity (Fig. 7, available as supplementary data at Bioinformatics online): higher-scoring clusters (Q1–Q2) contained genes with greater research attention than lower-tier clusters (Q4–Q5). This indicates that the framework weights established evidence appropriately while retaining the capability to identify less-studied but functionally relevant candidates.

This genome-wide screening successfully identified a functionally coherent set of sepsis candidates while maintaining high specificity for disease-relevant pathways and demonstrating robust cross-model consistency, providing a comprehensive foundation for subsequent detailed analysis.

3.3 Literature-grounded evaluation through RAG

3.3.1 RAG evaluation framework and performance

We implemented a sepsis-specific RAG system incorporating open-access literature (reviews and research articles) and >10 000 PubMed abstracts, using SPECTER2 embeddings and LlamaIndex (see Section 2). Each of the 609 PS1 genes was evaluated against eight sepsis-related queries, generating 4872 evaluation instances in total. RAG responses underwent independent faithfulness evaluation using LlamaIndex’s Faithfulness evaluator with Phi-4 as an independent LLM, which gave a binary Pass/Fail verdict on whether each generated justification was supported by the retrieved literature chunks. Of the 4872 instances, 1484 passed the faithfulness test (30.5% pass rate), covering 455 unique genes designated as PS2 (Fig. 4A). The stringent pass rate reflects the conservative nature of literature-grounded evaluation and ensures that retained genes have high-confidence literature support. Across rubric score categories, high-scoring instances showed the strongest association with faithfulness pass rates, supporting our scoring criteria and indicating that computational confidence reflects the quality of literature evidence.
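The filtering step amounts to keeping the Pass instances and collecting the genes they cover. With the reported counts, the pass rate is 1484 / 4872 ≈ 0.305. The minimal sketch below assumes that each evaluation instance is stored as a dictionary with the hypothetical keys `gene`, `query`, and `verdict`.

```python
from typing import Dict, List


def faithfulness_summary(instances: List[Dict[str, str]]) -> Dict[str, object]:
    """Pass rate, genes with >= 1 passing instance, and the passing instances."""
    if not instances:
        raise ValueError("no instances given")
    passed = [r for r in instances if r["verdict"] == "Pass"]
    return {
        "pass_rate": len(passed) / len(instances),
        "genes": {r["gene"] for r in passed},  # candidate gene set after filtering
        "passed": passed,                      # kept for downstream hybrid scoring
    }
```

Keeping the passing instances themselves, and not only the gene set, allows the later weighted scores to be computed from faithfulness-passing evidence alone.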

Figure 4.

Retrieval-augmented evaluation and definition of Priority Set 2 (PS2). (A) RAG evaluation performance across 4872 gene-query instances. Bar plot shows pass/fail distribution across RAG score categories (Low/Medium/High), with 1484 instances passing faithfulness evaluation (30.5% overall pass rate). High-scoring instances demonstrate strongest correlation with literature validation success. (B) Gene recovery rates across PS1 quantile clusters following RAG evaluation. Bars show gene counts per cluster, demonstrating evidence-dependent recovery with the highest rates in top-tier clusters (Q1: 93%, Q2: 90%) and progressive decline in lower-tier clusters. (C) Method agreement analysis comparing evaluation approaches. Left panel shows overall agreement percentages between method pairs. Right panels show cluster-wise agreement patterns, with extreme-scoring clusters (Q1, Q5) showing the highest concordance and mid-tier clusters exhibiting more variable agreement reflecting literature context influence. (D) Sankey diagram visualizing cluster membership dynamics between NaiveLLM (PS1, left) and HybridLLM (PS2, right) approaches. Flow width represents gene count transitions, showing cluster consolidation from 5 to 4 clusters, with preserved high-confidence assignments and selective filtering of low-evidence genes. Colors correspond to cluster rankings. (E) MSigDB Hallmark pathway enrichment comparison across Evidence set (n = 1279), PS1 (n = 609), and PS2 (n = 442). Circle size represents adjusted P-values (<.05); color intensity shows odds ratios. PS2 demonstrates enhanced enrichment for core sepsis pathways with selective loss of broader regulatory processes (TGF-β, EMT). (F) BG3 module enrichment analysis for PS2 genes showing cell-type-specific signatures. Strong enrichment for inflammation, interferon response, monocyte activation, and neutrophil activation modules validates immune-focused biological coherence, with selective absence of non-specific gene modules confirming functional specificity.

3.3.2 Cluster-wise recovery and evidence stratification

RAG evaluation revealed the expected evidence-dependent recovery pattern across PS1 clusters (Fig. 4B). Top-tier clusters achieved the highest literature validation rates: Q1 (93% recovery, 93/100 genes) and Q2 (90% recovery, 98/109 genes), consistent with their elevated baseline scores and extensive research documentation. Q3 showed moderate recovery (76%, 109/144 genes), while Q4 and Q5 both achieved approximately 60% recovery. This gradient confirms that higher computational scores correlate with greater availability of literature evidence, supporting the validity of our initial scoring framework (Fig. 7, available as supplementary data at Bioinformatics online).
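
The recovery percentages can be recomputed from the reported counts. In the Python sketch below, the Q1–Q3 counts are taken from the text; the combined Q4+Q5 figures are not stated in the text and are derived here under the assumption that all 455 PS2 genes come from the 609 PS1 genes. The helper name `recovery_rate` is hypothetical.

```python
def recovery_rate(recovered, total):
    """Percentage of a PS1 cluster's genes retained in PS2."""
    return 100.0 * recovered / total


# (recovered, cluster size) as reported in the text for Q1-Q3.
reported = {"Q1": (93, 100), "Q2": (98, 109), "Q3": (109, 144)}

# Q4 and Q5 are not itemized; their combined counts are derived here,
# assuming the 455 PS2 genes are a subset of the 609 PS1 genes.
q45_total = 609 - sum(total for _, total in reported.values())
q45_recovered = 455 - sum(rec for rec, _ in reported.values())
reported["Q4+Q5"] = (q45_recovered, q45_total)

for cluster, (recovered, total) in reported.items():
    print(f"{cluster}: {recovered}/{total} = {recovery_rate(recovered, total):.1f}%")
```

Under that assumption the derived Q4+Q5 rate (155/256, about 60.5%) is consistent with the approximately 60% reported for the two lowest tiers.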

3.3.3 Method agreement and scoring dynamics

Comparative analysis between evaluation approaches revealed moderate but systematic agreement patterns (Fig. 4C). Naive vs RAG comparison showed approximately 50% agreement, reflecting the conservative nature of literature-based filtering. Naive vs Hybrid demonstrated improved concordance, particularly for extreme-scoring genes where computational and literature evidence align. Cluster-wise agreement patterns showed expected conservation: Q1 exhibited maximum overlap due to consistently high-confidence literature support, while Q5 showed substantial agreement due to consistently limited evidence. Mid-tier clusters (Q2–Q4) displayed more variable agreement, indicating that literature context significantly influences evaluation outcomes for moderately evidenced genes (Table 6, available as supplementary data at Bioinformatics online).
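
This section does not spell out how agreement between two methods is scored. The Python sketch below assumes each method assigns every gene a categorical tier and defines agreement as the percentage of shared genes receiving the same tier, overall and within each PS1 cluster. The function names, tier labels, and gene assignments are hypothetical.

```python
from collections import defaultdict


def percent_agreement(labels_a, labels_b):
    """Percentage of shared genes given the same label by two methods."""
    shared = labels_a.keys() & labels_b.keys()
    if not shared:
        return 0.0
    same = sum(labels_a[g] == labels_b[g] for g in shared)
    return 100.0 * same / len(shared)


def clusterwise_agreement(labels_a, labels_b, ps1_clusters):
    """Percent agreement computed separately within each PS1 cluster."""
    members = defaultdict(list)
    for gene, cluster in ps1_clusters.items():
        members[cluster].append(gene)
    return {
        cluster: percent_agreement(
            {g: labels_a[g] for g in genes if g in labels_a},
            {g: labels_b[g] for g in genes if g in labels_b},
        )
        for cluster, genes in members.items()
    }


# Hypothetical tiers from two methods (e.g. NaiveLLM vs RAG) for four genes.
naive = {"G1": "High", "G2": "High", "G3": "Low", "G4": "Medium"}
rag = {"G1": "High", "G2": "Low", "G3": "Low", "G4": "Low"}
ps1 = {"G1": "Q1", "G2": "Q1", "G3": "Q5", "G4": "Q3"}
print(percent_agreement(naive, rag))           # 50.0
print(clusterwise_agreement(naive, rag, ps1))  # {'Q1': 50.0, 'Q5': 100.0, 'Q3': 0.0}
```

Computing agreement per cluster, rather than only overall, is what exposes the pattern described above: clusters whose genes sit at either extreme of the evidence spectrum tend to agree, while mid-tier clusters diverge.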

3.3.4 Re-clustering and cluster membership dynamics

We re-clustered the 442 genes retained after RAG filtering using gene-wise weighted HybridLLM scores, computed from the 1484 RAG-pass instances only. Applying the same quantile-based clustering approach yielded at most four clusters for PS2, compared with five for PS1, reflecting the more concentrated score distribution of high-confidence, literature-validated genes.
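
The weighting and quantile procedures are defined in Section 2, which is not part of this excerpt, so the Python sketch below only illustrates the general idea under stated assumptions: a gene’s score is a weighted mean over its RAG-pass instances (failed instances are ignored), and genes are then binned at quantile cut points from `statistics.quantiles` (default "exclusive" method), with Q1 holding the highest scores. The per-query weights, function names, and toy data are hypothetical.

```python
import bisect
import statistics
from collections import defaultdict


def weighted_gene_scores(instances, weights):
    """Gene-wise weighted mean HybridLLM score over RAG 'pass' instances only.

    instances: iterable of (gene, query_id, score, passed).
    weights: query_id -> weight. Both the weights and the weighted-mean
    formula are hypothetical stand-ins for the scheme in Section 2.
    """
    numerator = defaultdict(float)
    denominator = defaultdict(float)
    for gene, query, score, passed in instances:
        if not passed:
            continue  # failed instances contribute nothing to the score
        w = weights.get(query, 1.0)
        numerator[gene] += w * score
        denominator[gene] += w
    return {g: numerator[g] / denominator[g] for g in numerator if denominator[g] > 0}


def quantile_clusters(scores, n_bins=5):
    """Assign genes to quantile tiers, where Q1 holds the highest scores.

    Needs at least two genes. With tied scores several cut points can
    coincide, leaving some tiers empty, so fewer than n_bins clusters
    may be populated.
    """
    cuts = statistics.quantiles(sorted(scores.values()), n=n_bins)
    tiers = {}
    for gene, s in scores.items():
        index = bisect.bisect_right(cuts, s)  # 0 = lowest bin
        tiers[gene] = f"Q{n_bins - index}"
    return tiers


toy_instances = [
    ("G1", "q1", 9, True),
    ("G1", "q2", 6, True),
    ("G1", "q3", 0, False),  # excluded: failed faithfulness
    ("G2", "q1", 4, False),  # G2 has no passing instance and drops out
]
print(weighted_gene_scores(toy_instances, {"q1": 2.0, "q2": 1.0}))
print(quantile_clusters({f"G{i}": i for i in range(1, 9)}, n_bins=4))
```

In this sketch, tied scores make cut points coincide and leave some tiers empty, which is one mechanism by which fewer populated clusters can emerge from a more concentrated score distribution; whether this is the exact cause in PS2 is not established here.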

The Sankey diagram reveals systematic shifts in cluster membership between the NaiveLLM (PS1) and HybridLLM (PS2) approaches (Fig. 4D). Three dynamics stand out. First, cluster consolidation: genes from PS1’s top clusters (Q1–Q2) predominantly flow into PS2’s highest-tier clusters, indicating that high-confidence assignments are preserved. Second, upward mobility: some genes from PS1’s mid-tier clusters (Q2–Q3) move to higher PS2 clusters, suggesting that literature context strengthened their evidence profiles. Third, conservative filtering: PS1’s lowest-confidence cluster (Q5) is eliminated, with its genes either filtered out entirely or redistributed into PS2’s lower-tier clusters. Flow widths indicate that cluster retention is strongest for originally high-scoring genes, whereas reassignment is most common for moderately scored genes, for which literature evidence substantially influenced the final classification (Table 9, available as supplementary data at Bioinformatics online).
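
Each link in a Sankey diagram such as Fig. 4D is a count of genes moving from one source cluster to one target cluster. The Python sketch below tallies those counts, routing genes absent from PS2 to a "filtered" node; the function name, node labels, and gene assignments are hypothetical.

```python
from collections import Counter


def membership_flows(ps1_clusters, ps2_clusters, dropped="filtered"):
    """Count gene transitions from PS1 to PS2 cluster assignments.

    Genes missing from ps2_clusters flow to the `dropped` node. Each
    (source, target) count is the width of one Sankey link.
    """
    flows = Counter()
    for gene, source in ps1_clusters.items():
        target = ps2_clusters.get(gene, dropped)
        flows[(f"PS1:{source}", f"PS2:{target}")] += 1
    return flows


# Hypothetical assignments for five genes.
ps1_assign = {"G1": "Q1", "G2": "Q1", "G3": "Q2", "G4": "Q5", "G5": "Q5"}
ps2_assign = {"G1": "Q1", "G2": "Q1", "G3": "Q1", "G4": "Q4"}
for (source, target), n in sorted(membership_flows(ps1_assign, ps2_assign).items()):
    print(f"{source} -> {target}: {n}")
```

The resulting counts can be supplied as link values to a Sankey plotting routine such as Plotly’s `go.Sankey`, after mapping node labels to integer indices.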

3.3.5 Progressive pathway enrichment through filtering

Comparative functional enrichment analysis across the three gene sets reveals progressive enhancement of sepsis-relevant pathway signatures (Fig. 4E). While the evidence set (n = 927) shows broad pathway coverage with moderate enrichment intensities, both PS1 (NaïveLLM, n = 609) and PS2 (HybridLLM, n = 442) demonstrate substantially higher odds ratios for core inflammatory pathways including complement activation, TNF-α signaling, inflammatory response, and interferon pathways. This progressive enrichment intensity suggests successful noise reduction and signal enhancement through computational filtering and literature validation.
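
The enrichment tool used for the Hallmark comparison is not named in this section. The Python sketch below assumes a common over-representation setup: a 2 × 2 table per pathway, the sample odds ratio, a one-sided Fisher’s exact (hypergeometric upper-tail) P-value, and Benjamini–Hochberg adjustment across pathways. Function names and inputs are hypothetical, and some tools compute the odds ratio differently.

```python
from math import comb


def enrichment(overlap, set_size, pathway_size, universe):
    """Odds ratio and one-sided hypergeometric P for a gene set vs a pathway.

    2x2 table: a = genes in both, b = in gene set only,
               c = in pathway only, d = in neither.
    """
    a = overlap
    b = set_size - overlap
    c = pathway_size - overlap
    d = universe - set_size - pathway_size + overlap
    odds_ratio = (a * d) / (b * c) if b * c > 0 else float("inf")
    # P(X >= a) with X ~ Hypergeometric(universe, pathway_size, set_size)
    upper = min(set_size, pathway_size)
    p = sum(
        comb(pathway_size, k) * comb(universe - pathway_size, set_size - k)
        for k in range(a, upper + 1)
    ) / comb(universe, set_size)
    return odds_ratio, p


def benjamini_hochberg(pvalues):
    """Return BH-adjusted P-values in the original order (capped at 1)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_min = 1.0
    for rank in range(m, 0, -1):  # walk from the largest P down
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Hypothetical toy numbers: 2 of 4 selected genes fall in a 4-gene pathway
# drawn from a 10-gene universe.
print(enrichment(2, 4, 4, 10))
print(benjamini_hochberg([0.01, 0.04, 0.03]))
```

Because the odds ratio compares the overlap against what the remaining genes would predict, a smaller, more focused set such as PS2 can show higher odds ratios than the broader evidence set even with fewer overlapping genes.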

BG3 module enrichment analysis further validates PS2’s biological coherence by demonstrating specific enrichment for immune cell-type modules directly relevant to sepsis pathophysiology (Fig. 4F). PS2 genes show pronounced enrichment for inflammation-associated modules, interferon response pathways, monocyte activation signatures, and neutrophil activation modules. This cell-type specificity aligns with established sepsis immunopathology, where monocyte/macrophage dysfunction and neutrophil activation are central disease mechanisms (Hotchkiss et al. 2013, van der Poll et al. 2017, Bruserud et al. 2023, Kwok et al. 2023). Importantly, PS2 demonstrates selective absence of enrichment for non-specific gene modules, indicating successful filtering of housekeeping and broadly expressed genes that lack sepsis-specific relevance.

PS2 retains enrichment for most core sepsis pathways present in PS1, while selectively losing broader regulatory pathways, including TGF-β signaling and EMT. This filtering pattern, also observed in SepsisDB-derived gene sets, likely reflects the literature-validation process’s preference for well-documented acute inflammatory processes over tissue remodeling and resolution mechanisms.

3.4 Multi-dimensional optimization identifies high confidence priority set 3 and candidate genes

3.4.1 Cluster-specific functional analysis and final prioritization

To identify the most promising therapeutic targets within PS2, we performed cluster-specific functional enrichment analysis followed by multi-dimensional scoring optimization. Enrichment analysis of PS2’s four clusters against the MSigDB Hallmark gene sets revealed distinct functional specialization (Fig. 5A). The top-performing cluster (Cluster 1) showed the strongest enrichment across core sepsis pathways, including allograft rejection, IL-6/JAK/STAT3 signaling, interferon gamma response, inflammatory response, and TNF-α signaling via NF-κB, establishing it as the highest-confidence functional cluster. We designated this gene set Priority Set 3 (PS3; n = 82 genes; Tables 9 and 10, available as supplementary data at Bioinformatics online).

Figure 5.

Principal-component optimization of PS2 and selection of Priority Set 3 (PS3). (A) Cluster-specific functional enrichment analysis for PS2’s four gene clusters using MSigDB Hallmark pathways. Heatmap shows odds ratios for significantly enriched pathways (FDR < 0.05), with Cluster 1 demonstrating strongest enrichment across core sepsis pathways including allograft rejection, IL-6/JAK/STAT3 signaling, inflammatory response, and TNF-α signaling via NF-κB. Color intensity represents enrichment magnitude. (B) Principal component analysis (PCA) of PS3 genes (n = 82) based on HybridLLM scores across eight evaluation criteria. PCA reveals four distinct sub-clusters with different scoring profiles. Points are colored by priority cluster assignment, with PC1 and PC2 explaining the major variance in multi-dimensional scoring space. (C) Polar plot comparison of mean HybridLLM scores across eight evaluation dimensions for the four PCA-derived clusters. Radar chart demonstrates that Priority Cluster 1 consistently achieves the highest scores across all criteria including pathogenesis association, immune response, biomarker potential, and therapeutic relevance, establishing it as the optimal candidate set. (D) Comprehensive scoring heatmap for candidate genes (n = 30) selected from PCA Cluster 1. Shows HybridLLM scores across all eight evaluation queries with color intensity representing score magnitude (0–10 scale). Genes are annotated by discovery status: “Known” (from original evidence set) and “New” (novel candidates identified through computational pipeline). Both known and newly identified genes demonstrate consistently high scores across evaluation criteria, validating the framework’s ability to identify clinically relevant candidates while discovering novel targets with robust literature support.

3.4.2 Multi-dimensional score analysis and sub-clustering

We then analyzed PS3 in detail using principal component analysis (PCA) of the HybridLLM scores for all eight evaluation categories (Fig. 5B). PCA revealed four distinct sub-clusters within this high-confidence gene set, indicating further stratification by function or evidence quality, and grouped genes with similar multi-dimensional scoring profiles so that the most consistently high-performing candidates could be identified. Polar plots of mean HybridLLM scores across the eight evaluation dimensions showed distinct profiles for the four PCA-derived clusters (Fig. 5C): PCA Cluster 1 genes consistently achieved the highest scores on every criterion, including pathogenesis association, immune response relevance, clinical biomarker potential, and therapeutic targeting feasibility. This consistently high performance across evaluation dimensions established PCA Cluster 1 as the optimal candidate set (Table 10, available as supplementary data at Bioinformatics online). The analysis thus defined a clear hierarchy: PS2 (455 genes) → PS3 (82 genes from the top cluster) → final candidate set (30 genes from the optimal PCA cluster).
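
The text does not state which algorithm partitioned the PCA space into four sub-clusters. The NumPy sketch below assumes PCA via singular value decomposition of the centered 82 × 8 score matrix, followed by plain k-means (k = 4) on the first two principal components. Random 0–10 scores stand in for the real HybridLLM scores, and the function names are hypothetical.

```python
import numpy as np


def pca_project(scores, n_components=2):
    """Project a genes x criteria matrix onto its leading principal components.

    Returns (projections, explained-variance ratio of the kept components).
    """
    X = np.asarray(scores, dtype=float)
    Xc = X - X.mean(axis=0)  # center each evaluation criterion
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    variance = S**2 / (X.shape[0] - 1)
    ratio = variance / variance.sum()
    return Xc @ Vt[:n_components].T, ratio[:n_components]


def kmeans(points, k=4, n_iter=100, seed=0):
    """Plain Lloyd's k-means; returns one integer label (0..k-1) per row."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance from every point to every center, then nearest center.
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


# Random 0-10 scores stand in for the 82 PS3 genes x 8 criteria.
toy_scores = np.random.default_rng(42).integers(0, 11, size=(82, 8))
proj, ratio = pca_project(toy_scores)
labels = kmeans(proj, k=4)
print(ratio, np.bincount(labels, minlength=4))
```

Clustering on the leading components rather than on all eight raw scores groups genes by their dominant scoring patterns; with real scores, the per-cluster criterion means would then feed a polar plot like Fig. 5C.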

Based on this multi-dimensional optimization of PS3 (n = 82), we designated the 30 genes in the top-performing PCA cluster as the candidate set of high-confidence sepsis genes. The scoring heatmap shows the candidate set’s composition and validation status (Fig. 5D; Table 10, available as supplementary data at Bioinformatics online): genes are color-coded to distinguish established candidates from our original evidence set (n = 19, “Known”) from novel candidates identified by our computational pipeline (n = 11, “New”). All candidate genes have consistently high HybridLLM scores across the eight evaluation queries, and known and newly identified genes show comparable scoring profiles. Each candidate gene is backed by literature-grounded evidence from our RAG system and was additionally verified using deep-search features (Supplementary Method 2, available as supplementary data at Bioinformatics online).
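
Selecting the top sub-cluster and labeling its genes as Known or New can be sketched as follows. As a simplifying assumption, the Python code ranks clusters by their overall mean score, whereas the text bases the choice on consistently high scores across every criterion; the function names, scores (three criteria instead of eight), and evidence set are hypothetical.

```python
from statistics import mean


def best_cluster(gene_scores, labels):
    """Cluster with the highest mean score across genes and criteria.

    gene_scores: gene -> list of per-criterion scores (0-10 scale).
    labels: gene -> PCA sub-cluster id.
    """
    per_cluster = {}
    for gene, cluster in labels.items():
        per_cluster.setdefault(cluster, []).append(mean(gene_scores[gene]))
    return max(per_cluster, key=lambda c: mean(per_cluster[c]))


def discovery_status(candidates, evidence_set):
    """Tag candidates as 'Known' (in the evidence set) or 'New'."""
    return {g: ("Known" if g in evidence_set else "New") for g in candidates}


# Hypothetical scores (three criteria instead of eight, for brevity).
toy_scores = {"G1": [9, 9, 8], "G2": [8, 9, 9], "G3": [5, 4, 6], "G4": [3, 2, 4]}
toy_labels = {"G1": 1, "G2": 1, "G3": 2, "G4": 3}
top = best_cluster(toy_scores, toy_labels)
candidates = sorted(g for g, c in toy_labels.items() if c == top)
print(top, discovery_status(candidates, evidence_set={"G1"}))
```

A stricter variant closer to the radar-chart criterion would require the chosen cluster to have the highest mean on each criterion individually, not just on average.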

3.4.3 Discovery and validation balance

The final candidate set balances validation and discovery: established sepsis genes serve as positive controls, while novel candidates represent potential targets warranting further investigation. The comparable scoring patterns of known and new genes support the framework’s ability to identify clinically relevant candidates, and the literature grounding and deep-search verification reduce the likelihood that the novel candidates are computational artifacts rather than genes with substantial evidence support.

This progressive filtering, from the 10 824 genes of the BloodGen3 repertoire to 30 ultra-high confidence candidates, integrates computational assessment, literature validation, and multi-dimensional optimization to prioritize the most promising sepsis therapeutic targets for experimental investigation.

4 Discussion

Benchmarking against expert-curated databases achieved 71.2% recall, and computational confidence correlated strongly with evidence quality, distinguishing our approach from routine LLM applications that lack rigorous validation against expert-curated standards. RAG’s conservative behavior (frequent zero-score assignments, with scores raised only when literature support exists) reflects appropriate uncertainty handling for biomedical inference, where false discoveries are costly. The systematic blind spots in metabolic pathways (protein synthesis, urea cycle, cellular maintenance) are coherent framework limitations that likely reflect the emphasis of sepsis research on inflammatory rather than metabolic mechanisms; characterizing them allows users to interpret large-scale results accordingly.
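
The exact benchmark construction is described elsewhere in the paper. The Python sketch below assumes recall is the fraction of benchmark genes recovered by the prioritized set, with the benchmark already restricted to genes that were evaluated. It also returns the missed genes, which is where systematic blind spots such as metabolic pathways would surface. The function name and gene sets are hypothetical.

```python
def benchmark_recall(predicted, benchmark):
    """Recall of a prioritized gene set against an expert-curated benchmark.

    recall = |predicted & benchmark| / |benchmark|. Also returns the missed
    benchmark genes, which is where systematic blind spots show up.
    """
    benchmark = set(benchmark)
    if not benchmark:
        raise ValueError("benchmark set is empty")
    hits = set(predicted) & benchmark
    return len(hits) / len(benchmark), sorted(benchmark - hits)


recall, missed = benchmark_recall({"G1", "G2", "G3", "G9"}, {"G1", "G2", "G3", "G4"})
print(f"recall = {recall:.1%}, missed = {missed}")  # recall = 75.0%, missed = ['G4']
```

Recall ignores predicted genes outside the benchmark (G9 here), so it rewards coverage of known biology without penalizing novel candidates.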

Recent biomedical LLM applications reveal fundamental limitations. Kim et al. (2024) reported only 16.0% accuracy for GPT-4 in phenotype-driven gene prioritization, well below traditional bioinformatics tools. Recent RAG implementations show incremental improvements but lack comprehensive validation. GeneRAG (Lin et al. 2024) reported a 39% improvement in gene question answering by integrating Maximal Marginal Relevance, while the MIRAGE benchmark showed that medical RAG systems improve LLM accuracy by up to 18% (Xiong et al. 2024). However, these approaches target general question answering rather than systematic gene prioritization workflows. Wu et al. (2025) developed RAG-driven Chain-of-Thought methods for rare disease diagnosis from clinical notes, achieving over 40% top-10 accuracy, but without systematic faithfulness evaluation or biological validation. AlzheimerRAG (Lahiri and Hu 2025) demonstrated multimodal integration for literature retrieval, and DRAGON-AI (Toro et al. 2024) applied RAG to ontology generation, but none of these addresses the core challenges of evidence verification and systematic validation in clinical contexts.

Early literature-based gene prioritization was established by Génie (Fontaine et al. 2011), which ranked genes using MEDLINE abstracts and ortholog information but relied on basic text mining without modern NLP techniques. Established methods such as ProGENI (Emad et al. 2017), which integrates protein–protein interaction networks, and the Monarch Initiative (Putman et al. 2024), which integrates curated databases, perform strongly by leveraging structured knowledge but lack the dynamic literature access and evidence verification that modern biomedical research demands.

Our framework addresses these limitations by integrating curated domain knowledge (6346 high-quality sepsis publications), dual-evaluator faithfulness assessment (71.94% inter-evaluator agreement), and Chain-of-Thought hybrid reasoning. The resulting 71.2% benchmark recall exceeds the performance reported for recent LLM applications, although the tasks and metrics are not directly comparable, while maintaining biological coherence as confirmed by pathway enrichment analysis.

The three-stage filtering approach reduced computational noise while preserving biological signal, as evidenced by progressive pathway enrichment from PS1 (n = 609) to PS2 (n = 442) to PS3 (n = 82). The 94% filtering efficiency reflects appropriate stringency, although the 30.5% RAG faithfulness pass rate may introduce bias toward well-studied genes. The final candidate composition (19 known and 11 novel genes), with comparable scoring profiles across both groups, supports a balance between discovery and validation and argues against the novel candidates being computational artifacts.
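
The filtering arithmetic can be checked from the stage sizes given in the text. The Python sketch below assumes "filtering efficiency" means the percentage of input genes removed; under that reading, the reported 94% corresponds to the first step (10 824 → 609). The helper name is hypothetical.

```python
def filtering_efficiency(n_in, n_out):
    """Percentage of genes removed between two stages."""
    return 100.0 * (1 - n_out / n_in)


# Stage sizes as reported in the text.
stages = [("BloodGen3", 10824), ("PS1", 609), ("PS2", 442), ("PS3", 82), ("Candidates", 30)]

for (name_in, n_in), (name_out, n_out) in zip(stages, stages[1:]):
    print(f"{name_in} -> {name_out}: kept {n_out}/{n_in}, "
          f"removed {filtering_efficiency(n_in, n_out):.1f}%")
print(f"overall: removed {filtering_efficiency(10824, 30):.2f}%")
```

The first step removes about 94.4% of genes, and the full pipeline retains roughly 0.28% of the input repertoire.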

Strong enrichment for sepsis-relevant pathways (TNF-α signaling, complement activation, interferon responses) confirms successful biological signal capture. The quantile-based functional stratification validates score-based prioritization, with higher-scoring clusters demonstrating stronger inflammatory pathway enrichment. However, selective loss of broader regulatory pathways (TGF-β signaling, EMT) in literature-validated sets suggests potential under-representation of resolution mechanisms.

The 74% agreement between GPT-4o and GPT-4T provides moderate confidence in the framework’s robustness across model iterations, but this level of consistency also highlights the sensitivity of LLM-based approaches to differences between model versions. The systematic correlation between computational scores and PubMed publication frequency is consistent with evidence-weighted behavior but points to potential circularity favoring well-published genes.
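
This section does not state how the score-publication correlation was computed. Because publication counts are heavily skewed, a rank-based measure is a natural choice. The Python sketch below implements Spearman’s rho as the Pearson correlation of average ranks, which handles ties; the function names and the toy scores and publication counts are hypothetical.

```python
def average_ranks(values):
    """1-based ranks, with ties assigned their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(x)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical gene scores and PubMed publication counts.
scores = [2, 5, 7, 9, 9]
publications = [3, 40, 120, 800, 650]
print(round(spearman(scores, publications), 3))
```

A strongly positive rho on real data would quantify the circularity concern: scores partly track how much has already been published about a gene.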

The framework’s computational requirements present both advantages and limitations. Multi-stage evaluation incurs substantial API costs and processing time, which may limit accessibility. Dependence on proprietary models raises sustainability concerns for long-term research applications and for reproducibility as models evolve.

Future iterations should incorporate experimental data beyond literature analysis, including functional genomics and clinical outcomes data. Integration of tissue-specific and temporal expression patterns could enhance context-relevant prioritization. Expanding beyond inflammatory mechanisms to include metabolic and repair pathways would provide more comprehensive target coverage.

The methodological framework provides a foundation for systematic AI-driven biomedical discovery with potential applications beyond sepsis research. However, continued validation against experimental outcomes and clinical data will be essential for establishing real-world utility and addressing the persistent challenge of translating computational predictions into therapeutic advances. The framework’s strength lies in systematic prioritization rather than definitive target identification, offering improved resource allocation for early-stage research while acknowledging the substantial experimental validation required for clinical translation.

5 Conclusion

We developed and validated a comprehensive computational framework for systematic gene prioritization in sepsis research, addressing critical limitations in current LLM-based biomedical applications. Through progressive filtering of 10 824 genes to 30 ultra-high confidence candidates, our approach demonstrates that rigorous methodology can transform unreliable LLM outputs into systematically validated biological insights. The framework’s key innovations include curated domain-specific knowledge base construction, dual-evaluator faithfulness assessment to reduce hallucination risks, and Chain-of-Thought hybrid reasoning that synthesizes computational predictions with literature evidence. Benchmark validation against expert-curated databases achieved 71.2% recall with strong evidence quality correlation, substantially exceeding recent LLM applications while maintaining biological coherence through systematic pathway enrichment. The identification of 11 novel high-confidence candidates alongside 19 established sepsis genes demonstrates balanced discovery-validation dynamics, though these candidates represent systematically prioritized hypotheses requiring extensive experimental validation rather than immediate therapeutic targets.

Our methodological approach, which combines computational assessment, literature validation, and multi-dimensional optimization, provides a template for evaluating AI-driven biomedical discovery tools and for applying AI responsibly in biomedical research. Its modular design allows the LLMs, gene lists, evaluation queries, and RAG databases to be replaced systematically to address any user-defined disease context or research question, extending applicability beyond sepsis to diverse biomedical domains. The systematic characterization of framework limitations, particularly blind spots in metabolic pathways and bias toward well-studied genes, enables informed interpretation of results and guides future improvements. Although computational cost and model dependency present adoption challenges, this work shows that computational frameworks can help bridge the gap between AI capabilities and clinically translatable applications, provided they incorporate rigorous validation, systematic bias characterization, and appropriate uncertainty handling. The framework’s strength lies in efficient resource allocation for early-stage research, offering a pathway for harnessing LLMs in biomedical research while maintaining the scientific rigor needed to advance from computational predictions to therapeutic breakthroughs.

Supplementary Material

Acknowledgements

The authors thank their colleagues at The Jackson Laboratory, Farmington, CT, USA for their support and intellectual discussions throughout this project. They would also like to thank Nico Marr for critical feedback. The authors are grateful for the computational resources and infrastructure support provided by The Jackson Laboratory’s Research IT team.

Contributor Information

Taushif Khan, The Jackson Laboratory for Genomic Medicine, 10 Discovery Drive, Farmington, Connecticut, 06032, United States.

Mohammed Toufiq, The Jackson Laboratory for Genomic Medicine, 10 Discovery Drive, Farmington, Connecticut, 06032, United States.

Marina Yurieva, The Jackson Laboratory for Genomic Medicine, 10 Discovery Drive, Farmington, Connecticut, 06032, United States.

Nitaya Indrawattana, Biomedical Research Incubator Unit, Research Department, Faculty of Medicine Siriraj Hospital, Mahidol University, Bangkok, Thailand; Siriraj Center of Research and Excellence in Allergy and Immunology, Faculty of Medicine Siriraj Hospital, Mahidol University, Bangkok, Thailand.

Akanitt Jittmittraphap, Department of Microbiology and Immunology, Faculty of Tropical Medicine, Mahidol University, Bangkok, 73170, Thailand.

Nathamon Kosoltanapiwat, Department of Microbiology and Immunology, Faculty of Tropical Medicine, Mahidol University, Bangkok, 73170, Thailand.

Pornpan Pumirat, Department of Microbiology and Immunology, Faculty of Tropical Medicine, Mahidol University, Bangkok, 73170, Thailand.

Passanesh Sukphopetch, Department of Microbiology and Immunology, Faculty of Tropical Medicine, Mahidol University, Bangkok, 73170, Thailand.

Muthita Vanaporn, Department of Microbiology and Immunology, Faculty of Tropical Medicine, Mahidol University, Bangkok, 73170, Thailand.

Karolina Palucka, The Jackson Laboratory for Genomic Medicine, 10 Discovery Drive, Farmington, Connecticut, 06032, United States.

Basirudeen Syed Ahamed Kabeer, Sidra Medicine, Doha, Qatar; Department of Pathology, Saveetha Medical College and Hospital, Saveetha Institute of Medical and Technical Sciences (SIMATS), Saveetha University, Chennai, India.

Darawan Rinchai, St. Jude Children’s Research Hospital, Memphis, TN, United States.

Damien Chaussabel, The Jackson Laboratory for Genomic Medicine, 10 Discovery Drive, Farmington, Connecticut, 06032, United States.

Author contributions

Taushif Khan (Conceptualization [equal], Data curation [lead], Formal Analysis [lead], Investigation [equal], Methodology [equal], Resources [lead], Software [lead], Supervision [equal], Validation [lead], Visualization [lead], Writing—original draft [equal], Writing—review & editing [equal]), Mohammed Toufiq (Data curation [supporting]), Marina Yurieva (Investigation [supporting], Methodology [supporting], Writing—review & editing [supporting]), Nitaya Indrawattana (Formal Analysis [equal], Investigation [supporting], Validation [equal], Writing—review & editing [supporting]), Akanitt Jittmittraphap (Data curation [equal], Investigation [equal], Writing—review & editing [supporting]), Nathamon Kosoltanapiwat (Data curation [supporting], Formal Analysis [supporting], Investigation [supporting], Writing—review & editing [supporting]), Pornpan Pumirat (Data curation [supporting], Formal Analysis [supporting], Investigation [supporting], Methodology [supporting], Writing—review & editing [supporting]), Passanesh Sukphopetch (Data curation [supporting], Methodology [supporting], Writing—review & editing [supporting]), Muthita Vanaporn (Data curation [supporting], Investigation [equal], Writing—review & editing [supporting]), Karolina Palucka (Conceptualization [supporting], Funding acquisition [equal], Supervision [supporting]), Basirudeen Kabeer (Data curation [equal], Investigation [equal], Methodology [equal], Writing—review & editing [supporting]), Darawan Rinchai (Data curation [supporting], Formal Analysis [supporting], Investigation [supporting], Methodology [equal], Writing—review & editing [supporting]), and Damien Chaussabel (Conceptualization [equal], Data curation [equal], Formal Analysis [equal], Funding acquisition [lead], Investigation [equal], Methodology [equal], Resources [equal], Supervision [equal], Validation [equal], Visualization [equal], Writing—original draft [lead], Writing—review & editing [equal])

Supplementary data

Supplementary data are available at Bioinformatics online.

Conflict of interest: None declared.

Funding

Not applicable.

Data availability

Source code and implementation details are available at https://github.com/taushifkhan/llm-geneprioritization-framework, vector database at https://doi.org/10.5281/zenodo.15802241 and Interactive demonstration at https://llm-geneprioritization.streamlit.app/.

References

- Abbas A, Rehman MS, Rehman SS. Comparing the performance of popular large language models on the national board of medical examiners sample questions. Cureus 2024;16:e55991. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Altman MC, Rinchai D, Baldwin N et al. Development of a fixed module repertoire for the analysis and interpretation of blood transcriptome data. Nat Commun 2021;12:4385. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bennett L, Palucka AK, Arce E et al. Interferon and granulopoiesis signatures in systemic lupus erythematosus blood. J Exp Med 2003;197:711–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brummaier T, Kabeer BSA, Wilaisrisak P et al. Cohort profile: molecular signature in pregnancy (MSP): longitudinal high-frequency sampling to characterise cross-omic trajectories in pregnancy in a resource-constrained setting. BMJ open 2020;10. 10.1136/bmjopen-2020-041631 [DOI] [PMC free article] [PubMed]

- Bruserud Ø, Mosevoll KA, Bruserud Ø et al. The regulation of neutrophil migration in patients with sepsis: the complexity of the molecular mechanisms and their modulation in sepsis and the heterogeneity of sepsis patients. Cells 2023;12:1003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chaussabel D, Pulendran B. A vision and a prescription for big data-enabled medicine. Nat Immunol 2015;16:435–9. [DOI] [PubMed] [Google Scholar]

- Chaussabel D, Quinn C, Shen J et al. A modular analysis framework for blood genomics studies: application to systemic lupus erythematosus. Immunity 2008;29:150–64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chu Z, Chen J, Chen Q et al. Navigate through enigmatic labyrinth a survey of chain of thought reasoning: advances, frontiers and future. In: Ku L-W, Martins A, Srikumar V (eds), Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Bangkok, Thailand: Association for Computational Linguistics, 2024, 1173–203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Davis AP, Wiegers TC, Johnson RJ et al. Comparative toxicogenomics database (CTD): update 2023. Nucleic Acids Res 2023;51:D1257–62. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deng L, Wang T, Zhai Z et al. Evaluation of large language models in breast cancer clinical scenarios: a comparative analysis based on ChatGPT-3.5, ChatGPT-4.0, and Claude2. Int J Surg 2024;110:1941–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emad A, Cairns J, Kalari KR et al. Knowledge-guided gene prioritization reveals new insights into the mechanisms of chemoresistance. Genome Biol 2017;18:153. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fontaine J-F, Priller F, Barbosa-Silva A et al. Génie: literature-based gene prioritization at multi genomic scale. Nucleic Acids Res 2011;39:W455–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Geiss GK, Bumgarner RE, Birditt B et al. Direct multiplexed measurement of gene expression with color-coded probe pairs. Nat Biotechnol 2008;26:317–25. [DOI] [PubMed] [Google Scholar]

- Golub TR, Slonim DK, Tamayo P et al. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science 1999;286:531–7. [DOI] [PubMed] [Google Scholar]

- Hotchkiss RS, Monneret G, Payen D. Sepsis-induced immunosuppression: from cellular dysfunctions to immunotherapy. Nat Rev Immunol 2013;13:862–74. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kim J, Wang K, Weng C et al. Assessing the utility of large language models for phenotype-driven gene prioritization in the diagnosis of rare genetic disease. Am J Hum Genet 2024;111:2190–202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Krämer A, Green J, Pollard J Jr et al. Causal analysis approaches in ingenuity pathway analysis. Bioinformatics 2014;30:523–30. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kwok AJ, Allcock A, Ferreira RC et al. ; Emergency Medicine Research Oxford (EMROx). Neutrophils and emergency granulopoiesis drive immune suppression and an extreme response endotype during sepsis. Nat Immunol 2023;24:767–79. [DOI] [PubMed] [Google Scholar]

- Lahiri AK, Hu QV. AlzheimerRAG: Multimodal retrieval-augmented generation for clinical use cases. MAKE 2025;7:89. 10.3390/make7030089 [DOI] [Google Scholar]

- Li S, Rouphael N, Duraisingham S et al. Molecular signatures of antibody responses derived from a systems biology study of five human vaccines. Nat Immunol 2014;15:195–204. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lin X, Deng G, Li Y et al. GeneRAG: enhancing large language models with gene-related task by retrieval-augmented generation. 2024. 2024.06.24.600176, preprint: not peer reviewed.

- Montani I, Honnibal M, Honnibal M et al. Explosion/spaCy: v3.7.2: fixes for APIs and requirements. Zenodo 2023. https://doi: 10.5281/zenodo.10009823 [DOI]

- Ochoa D, Hercules A, Carmona M et al. Open targets platform: supporting systematic drug–target identification and prioritisation. Nucleic Acids Res 2021;49:D1302–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Piñero J, Ramírez-Anguita JM, Saüch-Pitarch J et al. The DisGeNET knowledge platform for disease genomics: 2019 update. Nucleic Acids Res 2020;48:D845–55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Putman TE, Schaper K, Matentzoglu N et al. The monarch initiative in 2024: an analytic platform integrating phenotypes, genes and diseases across species. Nucleic Acids Res 2024;52:D938–49. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rinchai D, Altman MC, Konza O et al. Definition of erythroid cell-positive blood transcriptome phenotypes associated with severe respiratory syncytial virus infection. Clin Transl Med 2020. a;10:e244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rinchai D, Chaussabel D. A training curriculum for retrieving, structuring, and aggregating information derived from the biomedical literature and large-scale data repositories. F1000Res 2022;11:994. 10.12688/f1000research.122811.1 [DOI] [Google Scholar]

- Rinchai D, Deola S, Zoppoli G et al. ; PREDICT-19 Consortium. High-temporal resolution profiling reveals distinct immune trajectories following the first and second doses of COVID-19 mRNA vaccines. Sci Adv 2022;8:eabp9961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rinchai D, Syed Ahamed Kabeer B, Toufiq M et al. A modular framework for the development of targeted covid-19 blood transcript profiling panels. J Transl Med 2020. b;18:291. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Singhal K, Azizi S, Tu T et al. Large language models encode clinical knowledge. Nature 2023;620:172–80. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Spurgeon SL, Jones RC, Ramakrishnan R. High throughput gene expression measurement with real time PCR in a microfluidic dynamic array. PLoS One 2008;3:e1662. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Subba B, Toufiq M, Omi F et al. Human-augmented large language model-driven selection of glutathione peroxidase 4 as a candidate blood transcriptional biomarker for circulating erythroid cells. Sci Rep 2024;14:23225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Syed Ahamed Kabeer B, Subba B, Rinchai D et al. From gene modules to gene markers: an integrated AI-human approach selects CD38 to represent plasma cell-associated transcriptional signatures. Front Med (Lausanne) 2025;12:1510431.

- Toro S, Anagnostopoulos AV, Bello SM et al. Dynamic retrieval augmented generation of ontologies using artificial intelligence (DRAGON-AI). J Biomed Semantics 2024;15:19.

- Toufiq M, Rinchai D, Bettacchioli E et al. Harnessing large language models (LLMs) for candidate gene prioritization and selection. J Transl Med 2023;21:728.

- van der Poll T, van de Veerdonk FL, Scicluna BP et al. The immunopathology of sepsis and potential therapeutic targets. Nat Rev Immunol 2017;17:407–20.

- van’t Veer LJ, Dai H, van de Vijver MJ et al. Gene expression profiling predicts clinical outcome of breast cancer. Nature 2002;415:530–6.

- Wu D, Wang Z, Nguyen Q et al. Integrating chain-of-thought and retrieval augmented generation enhances rare disease diagnosis from clinical notes. 2025. 10.48550/arXiv.2503.12286, preprint: not peer reviewed.

- Xiong G, Jin Q, Lu Z et al. Benchmarking retrieval-augmented generation for medicine. In: Ku L-W, Martins A, Srikumar V (eds), Findings of the Association for Computational Linguistics: ACL 2024. Bangkok, Thailand: Association for Computational Linguistics, 2024, 6233–51.

Associated Data

Data Citations

- Montani I, Honnibal M, Honnibal M et al. Explosion/spaCy: v3.7.2: fixes for APIs and requirements. Zenodo 2023. https://doi.org/10.5281/zenodo.10009823

Supplementary Materials

Data Availability Statement

Source code and implementation details are available at https://github.com/taushifkhan/llm-geneprioritization-framework, the vector database at https://doi.org/10.5281/zenodo.15802241, and an interactive demonstration at https://llm-geneprioritization.streamlit.app/.