Abstract

Objective

To improve the initial risk assessment capability for emergency chest pain patients without relying on laboratory test results.

Methods

This study is a single-center, retrospective study. All medical records using the “chest pain”, “chest tightness”, and “palpitation” templates in the emergency department of Zhongnan Hospital of Wuhan University from January 1, 2015, to December 31, 2022, were included. The original dataset was split chronologically for temporal validation (2015–2018 for training, 2019–2022 for testing), and multiple imputation was conducted separately in each subset to avoid data leakage. Different variable selection methods, including traditional methods (such as t-tests for continuous variables and chi-square tests for categorical variables) and machine learning techniques (such as Lasso, random forest, stepwise, and best subset methods), were used to select predictive factors in the training set. To address the issue of imbalanced data, the Synthetic Minority Oversampling Technique (SMOTE) was applied to the training set to balance the dataset. Then, using the selected features, predictive models were built on the training set, and their performance was evaluated on the testing set. During the model building process, hyperparameter tuning and model training were performed using five-fold cross-validation. Afterward, a prospective, observational internal validation pre-experiment was conducted from January to March 2024, comparing the best model’s performance with nurse triage and the HEART score.

Results

Twenty-five variables were selected for building the predictive models. Six machine learning models using six algorithms (extreme gradient boosting [XGB], logistic regression [LR], decision tree, naive Bayes, random forest, and support vector machine) were developed and evaluated. The XGB model achieved the highest AUC in the training set (0.933 [0.931–0.934]). The LR model’s AUC was the highest in the testing set (0.804 [0.802–0.806]). Except for the LR and naive Bayes models, the AUC values of all models decreased on the testing set compared to the training set. In the prospective validation pre-experiment, the XGB model achieved an AUC of 0.820 [0.779–0.857] and its results were consistent with nurse triage results.

Conclusion

The model demonstrated comparable predictive performance to the HEART score and nurse triage, with higher discrimination in some metrics. It relies on easily obtainable, quantifiable, and measurable variables, making it practical for integration into our department’s system. It may also be adaptable for pre-hospital settings pending further validation and could complement rapid ED-based stratification workflows.

Clinical trial number

Not applicable.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12911-025-03226-x.

Keywords: Chest pain, Machine learning

Introduction

Acute chest pain, a leading complaint in emergency departments (ED), represents a group of diseases primarily presenting with chest discomfort [1]. The etiologies of acute chest pain range from rapidly progressing, life-threatening conditions such as acute coronary syndromes (ACS), acute aortic dissection (AAD), and acute pulmonary embolism (PE) to moderate- and low-risk conditions like gastroesophageal reflux disease (GERD) and intercostal neuralgia [2]. High-risk chest pain should be promptly diagnosed and managed through emergency protocols, while moderate-risk cases require dynamic assessment and monitoring, and low-risk patients should be safely discharged early [2].

However, to exclude serious and life-threatening conditions, physicians often conduct comprehensive evaluations, consuming significant medical resources and resulting in prolonged patient wait times and high diagnostic costs [2]. Over-testing not only wastes medical resources but also imposes unnecessary psychological stress on patients. Thus, balancing the reduction of missed diagnoses while avoiding over-testing of low-risk patients remains a crucial challenge.

The advent of the era of smart healthcare signifies the central role of data-driven decision support systems. As a branch of artificial intelligence (AI), machine learning (ML) has the capability to analyze vast amounts of data, identifying patterns and regularities that can subsequently be used to predict new data. With the proliferation of Electronic Medical Records (EMRs), it has become possible to systematically capture and analyze medical data, providing precise scientific evidence for disease prevention, diagnosis, treatment, and management. The integration of ML with smart healthcare is propelling the transformation of healthcare delivery, realizing personalized and precision medicine.

The application of ML technology in emergency medicine primarily focuses on enhancing the identification of acute critical conditions, optimizing patient care, and improving the allocation of emergency medical resources [3–5]. Current studies of ML for risk stratification and prediction of chest pain patients predominantly focus on single diseases such as ACS or acute myocardial infarction (AMI) [6], with relatively few targeting multiple high-risk chest pain conditions [7]. Moreover, many studies have not fully utilized historical data for in-depth analysis, often excluding symptoms as variables in their ML models [6, 8], due to the difficulty of analyzing free-text clinical records.

The ED of Zhongnan Hospital of Wuhan University has a structured EMR system, integrating data from multiple terminals and documenting conditions using fixed-sequence templates. This system optimizes medical record management and provides a rich data platform for advanced analytics.

This study aims to utilize big data and ML technologies to identify key factors that help clinicians distinguish between different risk levels in chest pain patients, excluding variables that require waiting time for laboratory tests. The goal is to develop a new chest pain screening tool to improve the initial risk assessment of patients presenting to the ED with chest pain as the chief complaint.

Method

Study population

This study is a single-center cohort study conducted at Zhongnan Hospital of Wuhan University. Emergency medical records from January 1, 2015, to December 31, 2022, of patients using “chest pain,” “chest tightness,” and “palpitations” templates were collected to establish training and testing datasets for model development. For prospective validation, records from January 1, 2024, to March 31, 2024, corresponding to the on-duty days of participating physicians, were included.

Zhongnan Hospital of Wuhan University is a provincial Class-A tertiary teaching hospital. As the core component of the hospital’s chest pain center, the ED plays a vital role in the assessment of daily ST-segment elevation myocardial infarction (STEMI) patients, assisting the cardiology department in performing over 200 emergency percutaneous coronary intervention (PCI) procedures annually.

The emergency department at Zhongnan Hospital of Wuhan University uses structured EMR templates to ensure a systematic and comprehensive documentation by healthcare providers. The attending physicians select the template based on the patient’s chief complaint at presentation. Given the lexical diversity of the Chinese language, this study includes all EMR templates related to chest discomfort, specifically “chest pain,” “chest tightness,” and “palpitations.”

Exclusion criteria are as follows: patients younger than 18 years old; medical records with chief complaints irrelevant to the study focus (e.g., medication refills, trauma); duplicate records of visits; and records missing the year of consultation.

The flowchart outlining the construction of the machine learning model in this study is shown in Fig. 1.

Fig. 1.

Study flow chart

Ethical review of clinical research

This study has been approved by the Ethics Committee of Zhongnan Hospital of Wuhan University (retrospective: Ethics Approval No. 2024028 K; prospective: Ethics Approval No. 2024094 K). Given that this study does not involve experimental research on human subjects and poses no significant risk to participants, the Ethics Committee has granted a waiver for informed consent. This segment of the study adheres to the TRIPOD guidelines (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis).

Annotation of chest pain risk stratification

According to the definition provided in the 2021 Guideline for the Evaluation and Diagnosis of Chest Pain jointly issued by seven major associations, including the American Heart Association (AHA) [2], the primary outcome in this study was high-risk chest pain diagnoses documented in emergency medical records. These included ACS, AAD, PE, esophageal rupture (ER), and tension pneumothorax. Patients categorized under this group were considered high-risk and potentially faced life-threatening conditions, while others were labeled as non-high-risk.

The emergency diagnoses were made by experienced physicians after thoroughly evaluating patients’ vital signs, symptoms, laboratory test results, electrocardiograms, imaging findings, and other relevant examinations conducted during their emergency department visits. As such, these diagnoses were regarded as endpoint indicators reflecting patients’ conditions during that specific period. Additionally, given the retrospective nature of the model development phase in this study, the evaluation of content and outcomes relied on archived data. The diagnoses recorded in emergency medical records represented real-time clinical judgments and diagnostic conclusions made by physicians, free from the potential bias introduced by subsequent prospective evaluations. Thus, blinding was not required during data processing. This approach ensured an objective assessment of the recorded data, unaffected by prognostic speculation, thereby maintaining the integrity of the evaluation process.

Selection of independent variables

After data preprocessing, the dataset was screened for the following variables (87 variables in total): symptoms, chest pain intensity, vital signs, demographic data (sex and age), self-reported past health status, surgical history, history of coronary artery disease, chronic disease history, medication history, allergy history, smoking history, alcohol consumption history, and the location of referred pain. Symptoms and physical examination findings were derived from EMRs documented by physicians during patient consultations. Vital signs were measured using bedside monitors or related medical devices and manually entered into the patient’s medical records. The patient’s medical history was obtained during nurse triage and emergency department visits. In the dataset, the recorded variable refers to sex (biological attribute) rather than gender.

Initially, all categorical variables in the dataset underwent a preselection process, retaining only those with a positive rate greater than 0.05% (n = 27). This step aimed to exclude categorical variables with very low frequencies in the sample, thereby reducing model complexity and avoiding overfitting. Then, we employed various feature selection methods including traditional methods (t-tests for continuous variables and chi-square tests for categorical variables), Least Absolute Shrinkage and Selection Operator (LASSO), optimal subset selection, stepwise selection, and random forest methodology.
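The preselection step described above can be sketched as a simple prevalence filter. This is a minimal illustration, not the authors' code: the function name `preselect_binary`, the record layout (one dictionary per visit with 0/1 values), and the toy data are assumptions; only the 0.05% cut-off comes from the text.

```python
def preselect_binary(records, columns, min_rate=0.0005):
    """Keep binary columns whose share of positive (1) values exceeds min_rate.

    min_rate=0.0005 corresponds to the 0.05% cut-off stated in the text.
    """
    n = len(records)
    kept = []
    for col in columns:
        positives = sum(1 for r in records if r.get(col) == 1)
        if n and positives / n > min_rate:
            kept.append(col)
    return kept


# Toy records (hypothetical): "sweating" is common, "rare_sign" never occurs.
toy = [{"sweating": 1 if i % 4 == 0 else 0, "rare_sign": 0} for i in range(1000)]
kept_columns = preselect_binary(toy, ["sweating", "rare_sign"])
```

In this toy example only `sweating` passes the filter, because `rare_sign` has a positive rate of zero.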

Construction of machine learning models

The original dataset was split chronologically into two subsets based on visit year for temporal validation: data from 2015 to 2018 were used for model development (training set), and data from 2019 to 2022 were used for model testing (testing set). To avoid data leakage, multiple imputation was performed independently within each subset. Predictor variable selection and model construction were carried out using the 2015–2018 training set, and model performance was evaluated on the 2019–2022 testing set. The ROC curves of the models were plotted using the entire testing set, while five-fold cross-validation was employed to validate other evaluation metrics of the model.
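A chronological split of this kind can be sketched as follows. The field name `visit_year` and the toy records are hypothetical; the 2018/2019 boundary is the one given in the text. Any imputation would then be fitted inside each returned subset separately, as the paragraph above describes.

```python
def temporal_split(records, last_training_year=2018):
    """Split visit records by year: up to last_training_year -> train, later -> test."""
    train = [r for r in records if r["visit_year"] <= last_training_year]
    test = [r for r in records if r["visit_year"] > last_training_year]
    return train, test


# Toy visits (hypothetical): two records for each year from 2015 to 2022.
toy_visits = [{"visit_id": i, "visit_year": 2015 + i % 8} for i in range(16)]
train_set, test_set = temporal_split(toy_visits)
```

Splitting by time rather than at random means the testing set only contains visits that occurred after every training visit, which is what makes the evaluation a temporal validation.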

The Synthetic Minority Oversampling Technique (SMOTE) was applied to address class imbalance in the training set. Specifically, a sampling rate of 3.4 was used to generate synthetic samples for the minority class, with 5 nearest neighbors considered for interpolation.
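The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified illustration rather than the implementation used in the study. It assumes that the "sampling rate" is a multiplier on the minority class size, which the text does not define; under that reading, 1,721 high-risk training cases × 3.4 ≈ 5,851, close to the 5,911 non-high-risk cases. The function name `smote` and the toy points are hypothetical.

```python
import math
import random


def smote(minority, rate=3.4, k=5, seed=0):
    """Return synthetic points so the minority class grows to about rate * len(minority).

    Assumption: rate is a multiplier on the minority class size.
    """
    rng = random.Random(seed)
    n_new = round((rate - 1) * len(minority))
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbours of the base point (excluding itself)
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to other
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


# Toy minority class (hypothetical): ten two-dimensional points.
minority_points = [(float(i), float(i % 3)) for i in range(10)]
new_points = smote(minority_points)
```

Each synthetic point lies on the line segment between a real minority case and one of its nearest minority neighbours, so it stays within the range of the observed minority data.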

In this study, six types of machine learning techniques were used for model development: Logistic Regression (LR), Decision Tree, Naive Bayes, Random Forest (RF), eXtreme Gradient Boosting (XGBoost), and Support Vector Machine (SVM). For prospective validation, the XGB model, which demonstrated the best overall performance, was compared with the HEART score and nurse triage results.

This study employed a nested cross-validation approach for the automated selection of hyperparameters. During model development, a random search strategy was used for hyperparameter tuning.

The model development and validation (testing) process employs a repeated five-fold cross-validation method, repeated ten times.
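The resampling scheme above (five folds, repeated ten times) yields 50 train/test partitions per dataset. A minimal sketch of how such partitions can be generated is shown below; the function name `repeated_kfold` is hypothetical, and this simple version is not stratified by outcome, which the original analysis may or may not have been. In a nested design, the random hyperparameter search would run only inside each training part.

```python
import random


def repeated_kfold(n_samples, n_folds=5, n_repeats=10, seed=0):
    """Yield (train_idx, test_idx) for every fold of every repeat."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        order = list(range(n_samples))
        rng.shuffle(order)  # a fresh random partition for each repeat
        folds = [order[i::n_folds] for i in range(n_folds)]
        for i, test_idx in enumerate(folds):
            train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
            yield train_idx, test_idx


splits = list(repeated_kfold(n_samples=23))
```

Averaging a metric over all 50 partitions reduces the dependence of the estimate on any single random split.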

Model performance evaluation metrics

The following metrics are used to evaluate the model’s performance: Mean Misclassification Error (MMCE) and Accuracy (ACC); the Receiver Operating Characteristic (ROC) curve; the calibration curve; Positive Predictive Value (PPV) and Negative Predictive Value (NPV); True Negative Rate (TNR, also known as specificity), False Negative Rate (FNR), False Positive Rate (FPR), True Positive Rate (TPR, also known as sensitivity); and the F1 score.
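All of the threshold-based metrics listed above follow from the four cells of a 2×2 confusion matrix. The sketch below spells out the standard definitions; the function name `binary_metrics` and the example counts are purely illustrative and are not data from this study.

```python
def binary_metrics(tp, fn, fp, tn):
    """Standard metrics from a 2x2 confusion matrix (positive = high risk)."""
    total = tp + fn + fp + tn
    return {
        "TPR": tp / (tp + fn),             # sensitivity
        "TNR": tn / (tn + fp),             # specificity
        "FPR": fp / (fp + tn),             # 1 - specificity
        "FNR": fn / (fn + tp),             # 1 - sensitivity
        "PPV": tp / (tp + fp),
        "NPV": tn / (tn + fn),
        "F1": 2 * tp / (2 * tp + fp + fn),
        "ACC": (tp + tn) / total,
        "MMCE": (fp + fn) / total,         # equals 1 - ACC
    }


# Illustrative counts only (hypothetical, not taken from the study).
example = binary_metrics(tp=40, fn=10, fp=20, tn=130)
```

Note that MMCE and ACC always sum to 1, as do TPR and FNR, and TNR and FPR, so the nine metrics carry less independent information than their number suggests.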

Prospective validation of the machine learning model

To conduct a prospective validation of the model’s performance, we used a non-interventional, prospective data collection method to gather a small-scale dataset from patients.

During the data collection process, attending physicians in the ED acted as data collectors. To ensure efficient and accurate data collection, an online patient information collection form was created based on the model’s variables. In this form, binary variables were set up for the physicians to select predefined labels “0” and “1” to accurately record patient information. For numerical variables, the physicians manually entered the data. A researcher conducted daily checks on the data collected for that day. This process included retrieving patient medical records and cross-referencing them with the results entered by physicians.

Additionally, this data collection also included the triage information recorded by nurses and data related to the patient’s HEART score for comparison and reference. Given the subjectivity in parts of the HEART score such as “History” and “Risk factors,” a detailed reference table from the HEART Pathway study [9] was established to guide annotators. The annotation of the HEART score was performed by two specially trained physicians.

The results of the prospective pre-experiment included an evaluation of the model’s predictive performance, as well as a comparison with nurse triage outcomes and HEART scores. Original nurse triage categorizes patients into four levels. In this study, nurse triage levels 1 and 2 were combined and labeled as “high risk”, while levels 3 and 4 were marked as “non high risk.”

Our department referenced the French triage guidelines and referred to the Guidelines for the Classification of Emergency Patients issued by the Ministry of Health of China in 2011. The quantified four-level emergency pre-triage standards assess “basic vital signs” collected based on emergency symptoms of systemic and specialty-related diseases, categorizing emergency patients as follows:

Level I: Critical patients – Conditions that may immediately threaten the patient’s life and require life-saving interventions.

Level II: Severe patients – Conditions that may progress to Level I in a short time or result in severe disability.

Level III: Urgent patients – Conditions with no immediate life-threatening or severely disabling signs, a low likelihood of progression to severe disease or complications, but require emergency care to alleviate symptoms.

Level IV: Non-urgent patients – Conditions without acute symptoms, minimal or no discomfort, and a clinical judgment of requiring limited emergency medical resources.

Level I and II patients are immediately transferred to the resuscitation room for treatment. Level III and IV patients wait in the designated waiting area.
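The dichotomization of the four triage levels used for the comparison (levels 1 and 2 as high risk, levels 3 and 4 as non-high risk) amounts to a simple mapping, sketched here with hypothetical names.

```python
HIGH_RISK_LEVELS = {1, 2}  # Levels I and II are treated as "high risk"


def triage_to_binary(level):
    """Map a four-level triage category to 1 (high risk) or 0 (non-high risk)."""
    if level not in (1, 2, 3, 4):
        raise ValueError(f"unexpected triage level: {level!r}")
    return 1 if level in HIGH_RISK_LEVELS else 0


binary_labels = [triage_to_binary(level) for level in [1, 2, 3, 4]]
```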

Analytical methods

All analyses were performed using R Studio software (version 4.2.3). Continuous data were presented as mean values with standard deviations. Categorical data were presented as frequencies and percentages. Continuous variables were normalized using the min-max standardization method to meet the requirements of algorithms that require standardized numerical predictors. The agreement between nurse triage results and model predictions was assessed using Cohen’s Kappa statistic. The level of statistical significance was set at p < 0.05.
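Cohen's Kappa corrects the raw agreement between two raters for the agreement expected by chance. The sketch below shows the standard calculation for two label vectors; the function name and the toy labels are hypothetical and do not represent study data.

```python
def cohen_kappa(a, b):
    """Cohen's kappa for two equally long sequences of categorical labels."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    labels = set(a) | set(b)
    # Chance agreement: product of the two raters' marginal label frequencies
    expected = sum((a.count(l) / n) * (b.count(l) / n) for l in labels)
    if expected == 1:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Toy labels (hypothetical): 1 = high risk, 0 = non-high risk.
rater_a = [1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
rater_b = [1, 0, 0, 0, 1, 0, 1, 0, 1, 0]
kappa = cohen_kappa(rater_a, rater_b)
```

Here the raters agree on 8 of 10 cases (0.80) while chance agreement is 0.52, giving a kappa of 0.28 / 0.48, roughly 0.58.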

Results

Baseline characteristics

After the exclusion of 1,414 cases with irrelevant chief complaints, 321 patients under 18 years of age, 23 duplicated admissions, and 1,749 patients without the year of ED visit, the final chest pain dataset included 22,460 cases. This dataset was then split into the 2015–2018 training set (7,632 cases) and the 2019–2022 testing set (14,828 cases). Baseline characteristics of the training set and the prospective set are presented in Table 1.

Table 1.

Baseline demographic characteristics of the retrospective training set and the prospective cohort

| Characteristic | Training set (n = 7632), non high risk | Training set (n = 7632), high risk | Prospective set (n = 605), non high risk | Prospective set (n = 605), high risk |
|---|---|---|---|---|
| n | 5911 | 1721 | 503 | 102 |
| Sex = Male (%) | 3153 (53.3) | 1106 (64.3) | 246 (48.9) | 72 (70.6) |
| Age (Mean (SD)) | 50.79 (18.70) | 61.47 (14.58) | 49.77 (18.71) | 63.25 (13.04) |
| SBP (Mean (SD)) | 132.19 (22.85) | 135.62 (26.38) | 134.01 (21.07) | 141.62 (29.61) |
| DBP (Mean (SD)) | 77.11 (13.74) | 78.18 (16.38) | 78.25 (14.64) | 82.51 (19.48) |
| P (Mean (SD)) | 84.45 (22.80) | 79.47 (19.55) | 91.01 (25.16) | 82.27 (18.69) |
| SPO2 (Mean (SD)) | 0.99 (0.02) | 0.98 (0.03) | 0.98 (0.03) | 0.97 (0.03) |
| Palpitations (%) | 2595 (43.9) | 690 (40.1) | 249 (49.5) | 44 (43.1) |
| Vomiting (%) | 251 (4.2) | 104 (6.0) | 34 (6.8) | 15 (14.7) |
| Altered Mental Status (%) | 83 (1.4) | 43 (2.5) | 1 (0.2) | 2 (2.0) |
| Sense Of Fear (%) | 26 (0.4) | 43 (2.5) | 4 (0.8) | 3 (2.9) |
| Dyspnea (%) | 636 (10.8) | 135 (7.8) | 50 (9.9) | 11 (10.8) |
| Fever (%) | 115 (1.9) | 12 (0.7) | 20 (4.0) | 2 (2.0) |
| Sweating (%) | 534 (9.0) | 624 (36.3) | 30 (6.0) | 40 (39.2) |
| Anxious (%) | 87 (1.5) | 100 (5.8) | 6 (1.2) | 11 (10.8) |
| Cough (%) | 474 (8.0) | 78 (4.5) | 73 (14.5) | 5 (4.9) |
| Radiating Pain (%) | 29 (0.5) | 166 (9.6) | 39 (7.8) | 31 (30.4) |
| Medication History (%) | 101 (1.7) | 52 (3.0) | 130 (25.8) | 32 (31.4) |
| Self Report of Past Health Condition - Healthy (%) | 2991 (50.6) | 610 (35.4) | 248 (49.3) | 34 (33.3) |
| Surgical History (%) | 473 (8.0) | 205 (11.9) | 74 (14.7) | 19 (18.6) |
| History of Coronary Heart Disease (%) | 424 (7.2) | 228 (13.2) | 65 (12.9) | 21 (20.6) |
| History of Chronic Disease (%) | 2012 (34.0) | 990 (57.5) | 220 (43.7) | 67 (65.7) |
| Shoulder (%) | 134 (2.3) | 131 (7.6) | 12 (2.4) | 9 (8.8) |
| Back (%) | 324 (5.5) | 229 (13.3) | 29 (5.8) | 26 (25.5) |
| Pain characteristic - dull (%) | 1966 (33.3) | 722 (42.0) | 324 (64.4) | 74 (72.5) |
| Pain characteristic - severe (%) | 594 (10.0) | 370 (21.5) | 36 (7.2) | 27 (26.5) |

Out of the 22,460 chest pain patients, 4,779 (21.3%) were labeled as high-risk. Among high-risk chest pain patients, there were 4,372 (91.5%) cases of ACS; 410 (8.6%) cases of AAD; 85 (1.8%) cases of PE; 2 (0.04%) cases of ER; and 6 (0.1%) cases of tension pneumothorax. Some patients presented with more than one diagnosis related to high-risk chest pain simultaneously. High-risk patients constituted 22.5% of the training set and 20.6% of the testing set. High-risk patients had a mean age (standard deviation) of 61.47 (14.58) years in the training set and 61.03 (14.08) years in the testing set, while non-high-risk patients had a mean age of 50.79 (18.70) years in the training set and 51.45 (19.08) years in the testing set.

Results of independent variable selection

The results of predictor variable selection using traditional statistical methods, LASSO regression, and the Random Forest algorithm are presented in the Supplementary Materials (sTables 1, 2, and 3, sFigures 1, and 2). We included 25 predictor variables for constructing the final model to identify high-risk chest pain patients. These include:

Demographic Characteristics: sex (biological attribute), age;

Symptoms: altered mental status, dyspnea, fever, sweating, anxious, cough, vomiting, palpitations, sense of fear, radiating pain;

Vital Signs: pulse (P), systolic blood pressure (SBP), diastolic blood pressure (DBP), blood oxygen saturation (SPO2);

Past Medical History: self-reported past health condition, surgical history, history of coronary artery disease (CAD), history of chronic diseases, medication history;

Location of Pain: shoulder, back;

Chest Pain Characteristics: severe pain, dull pain.

Comparison of different model performances

The performance metrics for the models on both the training set and the testing set are listed in supplementary materials (sTables 4 and 5).

During cross-validation on the training and testing sets, the XGB model achieved the highest AUC in the training set (0.933 [0.931–0.934]), but its performance decreased slightly in the testing set (0.802, [0.800-0.804]). The LR model’s AUC was the highest in the testing set (0.804, [0.802–0.806]). Except for the LR and Naive Bayes models, the AUC values of all models decreased on the testing set compared to the training set.

In the training set, the XGB model demonstrated the highest TNR at 91.8% [91.5%-92.0%], followed by the Decision Tree model at 89.4% [88.8%-90.0%] and the RF model at 86.4% [86.2%-86.7%]. In the testing set, the Decision Tree model achieved the highest TNR at 97.1% [96.9%-97.2%]. In the training set, the XGB model had the highest PPV at 90.7% [90.5%-91.0%]. Decision Tree and RF models followed with PPVs of 86.3% [85.6%-86.9%] and 84.5% [84.3%-84.7%], respectively. The highest PPV in the testing set was observed in the Decision Tree model at 71.8% [70.8%-72.8%]. In both the training and testing sets, the accuracy of the XGB model and RF model remained above 80%. Additionally, the XGB model achieved the highest F1 score (0.858, [0.856–0.860]) and NPV (83.2%, [83.0%-83.4%]) in the training set, as well as the second-highest F1 score (0.475, [0.471–0.480]) and NPV (85.4%, [85.2%-85.5%]) in the testing set.

For the validation results on the entire testing set, the LR model achieved the highest AUC at 0.794 [0.785–0.803] (Fig. 2), followed closely by the XGB model with an AUC of 0.774 [0.765–0.783].

Fig. 2.

ROC curves of the 6 machine learning models
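The AUC summarized by these ROC curves has a direct interpretation: it is the probability that a randomly chosen high-risk patient receives a higher predicted score than a randomly chosen non-high-risk patient. The sketch below computes it from that definition; the function name `auc` and the toy scores are hypothetical, and the pairwise loop is quadratic in sample size, so it is meant for illustration rather than for datasets of this study's size.

```python
def auc(scores, labels):
    """AUC as the share of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > q) + 0.5 * (p == q) for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Toy predictions (hypothetical): one positive case scores below one negative case.
toy_scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
toy_labels = [1, 1, 1, 0, 0, 0]
toy_auc = auc(toy_scores, toy_labels)
```

In the toy data, 8 of the 9 positive/negative pairs are ordered correctly, so the AUC is 8/9.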

The calibration curves for the six models are presented in Fig. 3. The XGBoost model demonstrated closer alignment with the ideal calibration line compared to other models. However, as the curve tends to fall below the ideal line, the model slightly overestimates the predicted probabilities overall. The calibration slope for the XGBoost model was 0.719, and the intercept was 0.028.

Fig. 3.

Calibration curves for the six models

In conclusion, based on the overall performance across various metrics, the XGB model, which demonstrated the most stable and comprehensive performance, was selected for further validation in the prospective dataset.

Prospective validation results

The prospective preliminary dataset included 605 medical records. Compared to the 7,632 cases in the training set, statistically significant differences were observed in several baseline characteristics, including sex, age, systolic and diastolic blood pressure, pulse, vomiting, sweating, anxiety, cough, radiating pain, self-reported health status, history of chronic disease, radiating pain location (back, shoulder), and the nature of pain (severe pain). The remaining baseline characteristics were consistent. In the prospective dataset, 16.9% of patients were diagnosed with high-risk chest pain.

In this study, the predictive performance of the XGB model, nurse triage, and HEART score in the prospective dataset was compared in terms of TPR, TNR, FPR, FNR, PPV, NPV, MMCE, ACC, and F1 score (Table 2). Additionally, the consistency of results between the XGB model, nurse triage, and HEART score was assessed.

Table 2.

Comparison of XGB model’s performance with nurse triage and HEART score in the prospective pre-experiment cohort

| Method | TPR | TNR | FPR | FNR | PPV | NPV | F1 | MMCE | ACC |
|---|---|---|---|---|---|---|---|---|---|
| XGB model | 0.510 (0.409–0.610) | 0.885 (0.853–0.911) | 0.115 (0.089–0.147) | 0.490 (0.390–0.591) | 0.473 (0.377–0.570) | 0.899 (0.869–0.924) | 0.491 (0.406–0.571) | 0.179 (0.147–0.210) | 0.821 (0.789–0.851) |
| Nurse triage | 0.696 (0.597–0.783) | 0.917 (0.889–0.939) | 0.083 (0.061–0.111) | 0.304 (0.217–0.403) | 0.628 (0.532–0.717) | 0.937 (0.912–0.957) | 0.660 (0.582–0.728) | 0.121 (0.094–0.147) | 0.879 (0.851–0.904) |
| HEART | 0.294 (0.208–0.393) | 0.964 (0.944–0.979) | 0.036 (0.021–0.056) | 0.706 (0.607–0.792) | 0.625 (0.474–0.760) | 0.871 (0.840–0.897) | 0.400 (0.303–0.493) | 0.149 (0.121–0.177) | 0.851 (0.820–0.879) |

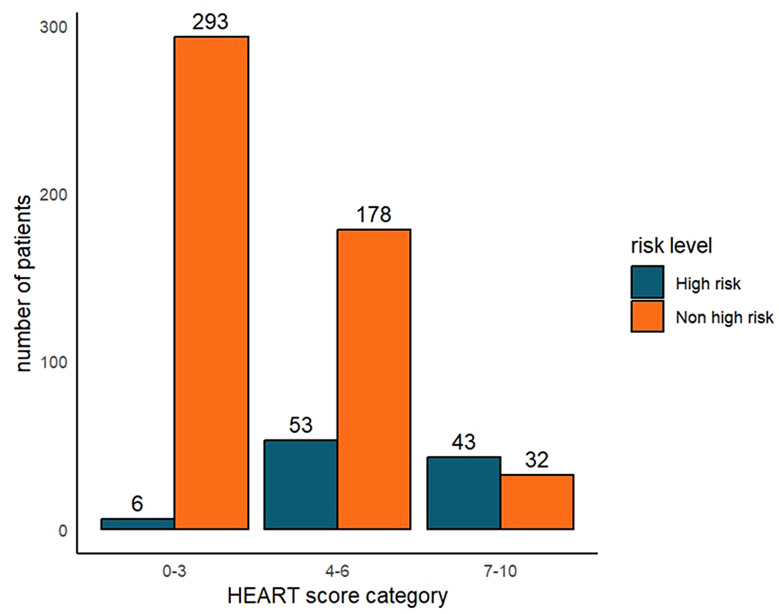

The distribution of high-risk and non-high-risk chest pain diagnoses across different risk categories according to HEART scores is illustrated in Fig. 4. Most chest pain patients were classified into the low-risk category (n = 299, 49.4%), followed by medium-risk (n = 231, 38.2%), and high-risk (n = 75, 12.4%). Among those in the low-risk category (0–3), 98.0% were non-high-risk diagnoses, whereas 57.3% in the high-risk category (7–10) were high-risk diagnoses.

Fig. 4.

Distribution of outpatient diagnoses among high-risk and non-high-risk patients in the HEART risk assessment categories

The model’s AUC was 0.820 [0.779–0.857] (sFigure 3), demonstrating better classification performance compared to the result in the testing set. In terms of predicting high-risk versus low-risk chest pain patients, at the threshold of 0.5, the XGB model’s TPR was 51.0% [40.9%-61.0%], which was higher than the result in the testing set but lower than the sensitivity of nurse triage (69.6%, [59.7%-78.3%]). The FPR of the XGB model was 11.5% [8.9%-14.7%], while that of nurse triage was 8.3% [6.1%-11.1%], indicating a similarly low misdiagnosis rate for both. The XGB model’s ACC (0.821, [0.789–0.851]) and F1 score (0.491, [0.406–0.571]) were relatively stable compared to the result in the retrospective datasets. McNemar’s test yielded a P-value of 0.501, indicating no statistically significant difference between the model’s predictions and the high-risk chest pain diagnoses made in the ED.
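McNemar's test compares the two kinds of disagreement between paired classifications, here the model's false positives and false negatives relative to the ED diagnosis. The sketch below uses the chi-square form with continuity correction, which is an assumption because the text does not state which variant was used. The discordant counts are also not reported; they are back-calculated from the prospective cohort sizes in Table 1 (102 high-risk, 503 non-high-risk) and the TPR and TNR in Table 2, giving roughly 50 false negatives and 58 false positives. The function name `mcnemar_p` is hypothetical.

```python
import math


def mcnemar_p(b, c):
    """Two-sided p-value of McNemar's chi-square test with continuity correction.

    b and c are the two discordant counts of a paired 2x2 table.
    """
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of a chi-square variable with 1 degree of freedom
    return math.erfc(math.sqrt(chi2 / 2))


# Inferred counts (assumption): 58 false positives, 50 false negatives.
p_value = mcnemar_p(b=58, c=50)
```

Under these assumptions the p-value comes out at about 0.50, in line with the value reported above. A non-significant result here indicates only that false positives and false negatives are roughly balanced, not that individual predictions match the diagnoses.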

For consistency in identifying high-risk and non-high-risk diagnoses, the kappa test results showed fair agreement between the model and nurse triage (kappa = 0.346), the model and HEART score (kappa = 0.383), and between nurse triage and HEART score (kappa = 0.364). McNemar’s test between the model’s prediction and the nurse’s triage results yielded a P-value of 0.8545, indicating no statistically significant difference between the model’s predictions and the nurse triage classifications.

Discussion

Advances in AI and big data offer promising improvements for the management of patients with chest pain [10]. This study used ML methods to construct a risk prediction model for ED patients presenting with chest pain that does not rely on ECG or laboratory test results. The chosen XGB model is interpretable, easy to construct and optimize, and can potentially be integrated into critical care management systems or smart wearable devices. The main application of the model is to provide early-stage risk stratification, potentially even before hospital arrival. Early identification of low-risk patients could support more efficient allocation of medical resources, especially in resource-limited settings, reducing unnecessary referrals and improving ED efficiency [11, 12]. This model offers a preliminary approach that warrants further evaluation in real-world workflows.

The symptom variables included in the final model were: “altered mental status,” “dyspnea,” “fever,” “sweating,” “anxious,” “vomiting,” “palpitations,” “cough,” “sense of fear,” and “radiating pain.” The presence of these symptoms predicted high-risk status, aligning with previous research. For example, the HEART score includes typical symptoms such as “severe pain,” “sweating,” “radiating pain,” and “vomiting” [9], and the EDACS score includes “sweating” and “radiating pain” [13].

Regarding predictive ability, the six models showed relatively low sensitivity (<40%) but high specificity (>90%) in identifying high-risk chest pain patients within the testing set. This sensitivity is lower than that of previous predictive models for AMI and ACS, which typically report sensitivities in the range of 65% to 95% [7]. However, this study focuses on predicting a broader range of acute high-risk chest pain diagnoses, not limited to AMI or ACS. Our aim was to evaluate whether machine learning could approximate physician-level high-risk designation based on readily available variables. This is not a diagnostic test for a single disease but a stratification tool to support early triage. Most existing predictive tools rely on ECG results, troponin levels, and other lab test results, which can extend patient wait times in the ED [14–16]. By comparison, our XGB model’s AUC reached 0.820 [0.779–0.857] in the prospective preliminary trial dataset, indicating good discrimination between high-risk and non-high-risk patients. Moreover, the sensitivity was approximately 51%, higher than that of the HEART score.

The HEART score is a common tool for risk assessment of ED chest pain patients [2, 17] and holds strong screening value for ACS [18]. However, ACS is only one aspect of acute high-risk chest pain diagnoses. Additionally, the HEART score components “History” and “Risk Factors” vary across studies and are subjective, potentially leading to inconsistent results based on different clinicians’ judgments. In this study, the prospective validation showed that the HEART score had moderate predictive effectiveness for overall high-risk chest pain, with a sensitivity of 29.4%.

Previous studies have used ML to predict diseases such as ACS with chest pain as the primary complaint. Khera et al. [8] utilized data from the American College of Cardiology-MI (Myocardial Infarction) registry to develop and validate XGBoost, Neural Network, and meta-classifier machine learning models for predicting in-hospital mortality in AMI patients, comparing them with LR models. The results showed that the new ML models did not outperform LR in differentiating post-AMI death risk [8]. Similarly, LR exhibited relatively stable AUC performance in this study. Martin et al. [6] developed the MI3 (Myocardial-ischemic-injury-index) algorithm incorporating age, sex, and high-sensitivity troponin I levels to predict MI risk. However, these studies still rely on complex or time-consuming test indicators and do not fully utilize patient medical record-based data, such as comprehensive symptom indicators, likely due to the less structured nature of medical records in other countries [19].

The structured EMR system at our department standardizes medical record formats, facilitating data extraction and analysis. It enabled systematic data collection in this study and may offer a practical platform for future prospective validation and integration of decision-support tools. This setup could overcome the above-mentioned limitations of ML in clinical applications. Additionally, the symptom-based intelligent pre-triage system developed by our team has matured and yielded satisfactory results. Building on this, we developed pre-triage software using smart wearable devices. Future research could explore integrating the model into wearable devices or mobile platforms to facilitate pre-triage risk assessment, subject to usability and performance testing. By advancing pre-triage, patients can receive preliminary risk assessment results without visiting the hospital, helping them determine whether they need to seek medical attention promptly.

In the prospective part of this study, the nurse triage system demonstrated slightly better results than the XGB model. This advantage can be attributed to the incorporation of visual inspection and subjective clinical judgments, which are not fully captured in the structured data used by the model. Nurses assess a wide range of factors through observation, including skin conditions, respiratory patterns, pupil responses, body odor, speech patterns, patient posture, and general physical and emotional state. These nuanced observations provide vital clinical information that enhances the accuracy of triage decisions, especially in situations where data may be incomplete or ambiguous. While ML models excel at processing large volumes of structured data, they currently lack the ability to interpret unstructured, observational inputs inherent to human expertise. However, the statistical analysis revealed no significant difference between the model’s predictions and nurse triage classifications, suggesting that the model may approximate clinical assessments under data-rich conditions. Further refinement of the model could help bridge the gap between data-driven predictions and clinical intuition.
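
The paragraph above reports no significant difference between model predictions and nurse triage classifications without restating the test used. As an illustration only, one common choice for paired binary classifications on the same patients is the exact McNemar test, which looks only at the discordant pairs. The sketch below assumes each patient has a boolean "high-risk" label from both the model and the nurse; the function names and the toy labels are hypothetical and are not study data.

```python
from math import comb
from typing import Sequence, Tuple


def discordant_counts(model_high: Sequence[bool],
                      nurse_high: Sequence[bool]) -> Tuple[int, int]:
    """Count pairs where exactly one of the two raters flags the patient as high-risk."""
    if len(model_high) != len(nurse_high):
        raise ValueError("both label sequences must cover the same patients")
    b = sum(1 for m, n in zip(model_high, nurse_high) if m and not n)  # model only
    c = sum(1 for m, n in zip(model_high, nurse_high) if n and not m)  # nurse only
    return b, c


def mcnemar_exact_p(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value: binomial test of b vs. c with p = 0.5."""
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Toy labels for four hypothetical patients (illustrative only).
model_flags = [True, True, False, False]
nurse_flags = [True, False, True, False]
b, c = discordant_counts(model_flags, nurse_flags)
p_value = mcnemar_exact_p(b, c)
```

A large p-value in such a test indicates only that a difference was not detected in the sample; it does not by itself establish that the two triage approaches are equivalent.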

As noted in previous related research, most current studies focus solely on the performance of ML models, with little consideration for integrating these models into clinical systems for real-world application [7]. This study aims to bridge that gap by combining the model with clinical practice, enabling a more comprehensive evaluation of its practical application value and providing a reference for future studies.

However, this study also has certain limitations. First, it is a single-center, retrospective study. The data were collected from Zhongnan Hospital of Wuhan University, a large tertiary hospital with a high annual outpatient volume and good representativeness. We conducted temporal validation to partially address this issue; while this internal validation supports the model’s reliability, external validation remains essential to establish the generalizability of the findings to other institutions and populations. Second, during model development, the retrospective design and missing information, such as ECG results and 30-day MACE occurrence, limited direct comparisons with existing risk stratification tools (like the HEART score) that rely on ECG components. As a result, we conducted a separate comparison between our model and the HEART score in a smaller, prospective observational cohort (n = 605), in which paper-based ECG reports were manually collected and interpreted to ensure accuracy. Third, some patients discharged from the ED may not have had a definitive diagnosis at the time of discharge, which could lead to misclassification of cases. We acknowledge this as a potential source of bias. However, based on our department’s quality control system and clinical experience, emergency physicians demonstrate a relatively high level of accuracy in identifying high-risk conditions. The purpose of this study was not to predict a specific diagnosis but rather to facilitate early identification of patients with overall high-risk profiles, enabling timely intervention and optimized resource allocation in the emergency setting.
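
The temporal validation mentioned above follows the chronological split described in the Methods (2015–2018 for training, 2019–2022 for testing). A minimal sketch of such a split is shown below, assuming each record carries a visit date; the field name `visit_date`, the function name, and the toy records are hypothetical and do not reflect the study's actual data schema.

```python
from datetime import date
from typing import Dict, List, Tuple

Record = Dict[str, object]


def temporal_split(records: List[Record],
                   cutoff: date) -> Tuple[List[Record], List[Record]]:
    """Split records chronologically: visits before `cutoff` train, the rest test."""
    train = [r for r in records if r["visit_date"] < cutoff]
    test = [r for r in records if r["visit_date"] >= cutoff]
    return train, test


# Toy records (illustrative only, not study data).
records = [
    {"patient_id": 1, "visit_date": date(2015, 3, 14)},
    {"patient_id": 2, "visit_date": date(2018, 12, 31)},
    {"patient_id": 3, "visit_date": date(2019, 1, 1)},
    {"patient_id": 4, "visit_date": date(2022, 7, 9)},
]

# 2015-2018 goes to training, 2019-2022 to testing.
train_set, test_set = temporal_split(records, cutoff=date(2019, 1, 1))
```

Under this design, any step that learns from the data (imputation, feature selection, oversampling such as SMOTE) would be fitted on `train_set` only, so that no information from the later period leaks into model development.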

The model is currently integrated into the clinical information management system for preliminary internal evaluation, and further implementation studies will follow. Future plans involve single-center prospective validation followed by a transition to multi-center prospective validation. Related prospective research plans have been developed to refine experiment design, enhance model performance, and enable comparisons with other scoring systems or clinical decision pathways, evaluating the model and its applications from multiple dimensions such as patient treatment and clinical decision optimization.

Conclusion

This study constructed six ML-based risk prediction models for emergency chest pain, with the XGB model demonstrating the best classification performance. The model was developed using only non-laboratory, non-imaging variables, offering a potentially faster, resource-sparing approach to risk assessment that complements existing tools.

External validation will be necessary in future studies to confirm the generalizability of the model. Furthermore, our research team plans to integrate the predictive model into clinical information management systems or smart wearable devices to enable early warning for chest pain patients. We aim to advance the warning process to the pre-triage stage, optimizing emergency resource allocation and enhancing clinical work efficiency and patient safety. This approach could enable faster and more effective risk assessment while supporting current initiatives to improve data-driven decision-making in emergency medicine.

Supplementary Information

Below is the link to the electronic supplementary material.

Acknowledgements

Not applicable.

Abbreviations

- AAD: Acute Aortic Dissection

- ACC: Accuracy

- ACS: Acute Coronary Syndrome

- AI: Artificial Intelligence

- AMI: Acute Myocardial Infarction

- AUC: Area Under the Curve

- CAD: Coronary Artery Disease

- ECG: Electrocardiogram

- ED: Emergency Department

- EMR: Electronic Medical Records

- EMS: Emergency Medical Service

- ER: Esophageal Rupture

- FNR: False Negative Rate

- FPR: False Positive Rate

- GERD: Gastroesophageal Reflux Disease

- LR: Logistic Regression

- MACE: Major Adverse Cardiovascular Events

- ML: Machine Learning

- MMCE: Mean Misclassification Error

- NPV: Negative Predictive Value

- P: Pulse

- PCI: Percutaneous Coronary Intervention

- PE: Pulmonary Embolism

- PPV: Positive Predictive Value

- RF: Random Forest

- ROC: Receiver Operating Characteristic

- STEMI: ST-segment Elevation Myocardial Infarction

- SVM: Support Vector Machine

- TNR: True Negative Rate

- TPR: True Positive Rate

- XGBoost: eXtreme Gradient Boosting

Author contributions

Y.Z designed the study and obtained the funding; S.J, S.D, C.J, F.Y, X.T and C.L contributed to the acquisition and collection of the original data; C.L helped with the installation and management of software; Y.L analyzed and interpreted the data; Y.L drafted the article; S.J, S.D, M.W and C.J substantively revised it.

Funding

This research received funding from the Transformation Project of Scientific and Technological Achievements of Zhongnan Hospital of Wuhan University for the year 2023 (Grant No. 2022CGZH-ZD011) and the 2020 annual funding for discipline construction of Zhongnan Hospital of Wuhan University (Grant No. XKJS202013).

Data availability

Original data are not publicly available to preserve individuals’ privacy. The model is available upon reasonable request.

Declarations

Ethics approval and consent to participate

The study protocol was established according to the ethical guidelines of the Helsinki Declaration and was approved by the Human Ethics Committee of Zhongnan Hospital of Wuhan University (retrospective: Ethics Approval No. 2024028 K; prospective: Ethics Approval No. 2024094 K). Given that this study does not involve experimental research on human subjects and poses no significant risk to participants, the Ethics Committee has granted a waiver for informed consent.

Consent for publication

Not applicable.

Author information (optional)

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Chengwei Li, Email: ZN001311@whu.edu.cn.

Yan Zhao, Email: doctoryanzhao@whu.edu.cn.

References

- 1. Goodacre S, Cross E, Arnold J, Angelini K, Capewell S, Nicholl J. The health care burden of acute chest pain. Heart. 2005;91(2):229–30.

- 2. Gulati M, Levy PD, Mukherjee D, Amsterdam E, Bhatt DL, Birtcher KK, et al. AHA/ACC/ASE/CHEST/SAEM/SCCT/SCMR guideline for the evaluation and diagnosis of chest pain: a report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. Circulation. 2021;144(22):e368-e454.

- 3. Wartelle A, Mourad-Chehade F, Yalaoui F, Laplanche D, Sanchez S. Analysis of saturation in the emergency department: A Data-Driven queuing model using machine learning. Stud Health Technol Inf. 2022;294:88–92.

- 4. Feretzakis G, Karlis G, Loupelis E, Kalles D, Chatzikyriakou R, Trakas N, et al. Using machine learning techniques to predict hospital admission at the emergency department. J Crit Care Med (Targu Mures). 2022;8(2):107–16.

- 5. Goto T, Camargo CA Jr., Faridi MK, Freishtat RJ, Hasegawa K. Machine Learning-Based prediction of clinical outcomes for children during emergency department triage. JAMA Netw Open. 2019;2(1):e186937.

- 6. Than MP, Pickering JW, Sandoval Y, Shah ASV, Tsanas A, Apple FS, et al. Machine learning to predict the likelihood of acute myocardial infarction. Circulation. 2019;140(11):899–909.

- 7. Stewart J, Lu J, Goudie A, Bennamoun M, Sprivulis P, Sanfillipo F, et al. Applications of machine learning to undifferentiated chest pain in the emergency department: A systematic review. PLoS ONE. 2021;16(8):e0252612.

- 8. Khera R, Haimovich J, Hurley NC, McNamara R, Spertus JA, Desai N, et al. Use of machine learning models to predict death after acute myocardial infarction. JAMA Cardiol. 2021;6(6):633–41.

- 9. Mahler SA, Riley RF, Hiestand BC, Russell GB, Hoekstra JW, Lefebvre CW, et al. The HEART pathway randomized trial: identifying emergency department patients with acute chest pain for early discharge. Circ Cardiovasc Qual Outcomes. 2015;8(2):195–203.

- 10. Dawson LP, Smith K, Cullen L, Nehme Z, Lefkovits J, Taylor AJ, et al. Care models for acute chest pain that improve outcomes and efficiency: JACC State-of-the-Art review. J Am Coll Cardiol. 2022;79(23):2333–48.

- 11. Mahler SA, Lenoir KM, Wells BJ, Burke GL, Duncan PW, Case LD, et al. Safely identifying emergency department patients with acute chest pain for early discharge. Circulation. 2018;138(22):2456–68.

- 12. Poldervaart JM, Reitsma JB, Backus BE, Koffijberg H, Veldkamp RF, Ten Haaf ME, et al. Effect of using the HEART score in patients with chest pain in the emergency department: A Stepped-Wedge, cluster randomized trial. Ann Intern Med. 2017;166(10):689–97.

- 13. Scheuermeyer FX, Wong H, Yu E, Boychuk B, Innes G, Grafstein E, et al. Development and validation of a prediction rule for early discharge of low-risk emergency department patients with potential ischemic chest pain. CJEM. 2014;16(2):106–19.

- 14. Al-Maskari M, Al-Makhdami M, Al-Lawati H, Al-Hadi H, Nadar SK. Troponin testing in the emergency department: real world experience. Sultan Qaboos Univ Med J. 2017;17(4):e398–403.

- 15. Mohammad OH, Naushad VA, Purayil NK, Sinan L, Ambra N, Chandra P, et al. Diagnostic performance of Point-of-Care troponin I and laboratory troponin T in patients presenting to the ED with chest pain: A comparative study. Open Access Emerg Med. 2020;12:247–54.

- 16. Stoyanov KM, Biener M, Hund H, Mueller-Hennessen M, Vafaie M, Katus HA, et al. Effects of crowding in the emergency department on the diagnosis and management of suspected acute coronary syndrome using rapid algorithms: an observational study. BMJ Open. 2020;10(10):e041757.

- 17. Six AJ, Cullen L, Backus BE, Greenslade J, Parsonage W, Aldous S, et al. The HEART score for the assessment of patients with chest pain in the emergency department: a multinational validation study. Crit Pathw Cardiol. 2013;12(3):121–6.

- 18. Fanaroff AC, Rymer JA, Goldstein SA, Simel DL, Newby LK. Does this patient with chest pain have acute coronary syndrome? The rational clinical examination systematic review. JAMA. 2015;314(18):1955–65.

- 19. Murdoch TB, Detsky AS. The inevitable application of big data to health care. JAMA. 2013;309(13):1351–2.
