Abstract

Exam protocoling is a significant non-interpretive task burden for radiologists. The purpose of this work was to develop a natural language processing (NLP) artificial intelligence (AI) solution for automated protocoling of standard abdomen and pelvic magnetic resonance imaging (MRI) exams from basic associated order information and patient metadata. This Institutional Review Board exempt retrospective study used de-identified metadata from consecutive adult abdominal and pelvic MRI scans performed at our institution spanning 2.5 years from 2019 to 2021 to fine-tune an AI model to predict the exam protocol. The NLP algorithm Bidirectional Encoder Representations from Transformers (BERT) was employed in sequence classification mode. Twelve months of data from the COVID pandemic were excluded to avoid bias from known practice and referral pattern disruptions, with approximately 46,000 MRI exams in the resulting cohort. The final trained model had an accuracy of 88.5% with a Matthews correlation coefficient of 0.874, a true positive rate of 0.872, and a true negative rate of 0.995. Subsequent expert review of the errors performed to satisfy departmental leadership showed 81.9% were in fact correct or reasonable alternative protocols, yielding real-world performance accuracy of 97.9%. We conclude that NLP algorithms, including “smaller” large language models like the BERT family often overlooked today, can predict MRI imaging protocols for the abdomen and pelvis with high real-world performance, offering to decrease radiologists’ non-interpretive task load and increasing departmental efficiency.

Keywords: AI (artificial/augmented intelligence), NLP (natural language processing), BERT (Bidirectional Encoder Representations from Transformers (an NLP model)), MRI (magnetic resonance imaging), Protocoling

Introduction

MRI exams are ideally tailored to best serve the patient and answer clinical questions. At our tertiary referral institution, every exam is protocolled by a radiologist, and there are many protocol options. Presently, protocoling occurs after scheduling, usually within days prior to a scheduled exam. Radiologists review details from the order, referring clinician, and clinical documentation to determine the right resource, appointment type, and duration for the exam. When protocoling occurs after scheduling, any violations of assumptions made in scheduling may disrupt the schedule for all patients as radiologists and staff do their best to balance scanner time with scheduled commitments. Accurate and efficient protocol assignment is essential to adapt and provide the best patient care. Factors in play include increasing patient load, increasing complexity of MRI studies performed, reducing patient callback due to scans which did not ideally address the clinical question, optimizing scanner utilization, providing an ideal patient experience, ensuring patient safety, and improving staff satisfaction. However, if the protocol assumed by the scheduler substantially differs from the final protocol, e.g., requiring additional table positions, a specific contrast agent, sequences that may require a different magnet, or several additional lengthy sequences, the schedule and patient flow are disrupted. While this is an obvious target for algorithmic support, any algorithm must meet stringent performance requirements. This is an identified area for artificial intelligence (AI) to enhance and support radiology workflows [1–6].

Prior work incorporating artificial intelligence (AI) to improve radiology workflows and the protocoling process reveals wide variability in prior approaches [7–17]. Several reports have only predicted limited categories such as the presence or absence of IV contrast [9, 14], others built systems to protocol a specific modality such as computed tomography (CT) [11], and others have attempted to protocol by subspecialty, e.g., neuroradiology protocols alone [7, 9, 16, 17, 17]. To date, only one neuroradiology-specific publication has attempted de novo construction of MRI exam protocols at a sequence level [8]; all others have predicted at most specific protocols or groups. The heterogeneity in these prior approaches illustrates differences in ordering patterns, practice workflows, IT systems, and protocols between institutions which strongly limit generalizability. No commercial options for exam protocoling exist, likely because practice variance across institutions requires unique solutions.

Given these points, the decision was made to train an in-house natural language processing (NLP) solution tailored to our practice on our own data. We chose to employ the large language model (LLM) architecture Bidirectional Encoder Representations from Transformers (BERT). While much attention has recently been given to so-called “generative” LLMs such as GPT and its specific variants like ChatGPT, the classification task at hand is not well suited to generative architectures. BERT and its variants perform at the state of the art across multiple NLP tasks, in particular sentence classification, and showed strong promise in recent prior work [11]. The bidirectional connectedness inherent to the BERT model allows BERT to understand the entire context of a given input rather than only looking in one direction. BERT is thus preferred for this task over the unidirectional architecture of purely generative alternative language models, e.g., GPT and its variants [18–21]. As described below, BERT in sentence classification mode was applied to input metadata, provided as a standard sentence, to predict the correct protocol. Decision tree rules then pre-populate the correct MRI scanner, appointment type, and duration for the schedulers.

Benefits of this workflow include suggesting protocols and time slots immediately after order, rather than shortly before they are performed. Exam schedulers then can use these suggestions ahead of radiologist protocol, optimizing departmental workflow and the patient experience. Practice standardization and efficiency also benefit from standardized suggestions provided to protocoling radiologists and trainees. However, we also require the ability to adapt this tool with the evolving practice, rather than accept lagging predictions with practice updates while new data is gathered.

The primary literature contributions this work brings are insights to the preprocessing and quality control process, best practices for iterative re-valuation of trained model performance, and a framework to prospectively adapt and re-train such predictive algorithms alongside an evolving practice.

Materials and Methods

This study was exempt from Institutional Review Board approval as it was conducted for internal practice improvement use and exclusively used de-identified exam metadata. De-identified metadata from adult MRI exams were used in aggregate to train a predictive model. Prior to training, a retrospective review was conducted of metadata associated with consecutive adult abdominal and pelvic MRI scans performed at our institution spanning 2.5 years from 1/1/2019 to 7/31/2021 on patients 18 years of age and older. Twelve months of data from the COVID-19 pandemic were excluded due to known practice and referral pattern disruptions, to avoid potential bias due to deviations from typical referral and disease patterns. The final cohort included over 46,000 abdominal or pelvic MRI exams, which were further divided into training, validation, and test sets. The test set was the most recent 3 months of data, amounting to 18.3% of the cohort. The remaining data was split randomly into training (90%) and validation (10%) sets.

For each exam, de-identified metadata was obtained including the patient’s age, sex, free-text exam indication entered by the referring clinician, associated diagnosis, the original exam ordered, ordering department, ordering physician, and the free-text order comment (if any, this field is optional), as well as the final protocol performed. All abdominal and/or pelvic exams were included in one unified predictive model. Importantly, inclusion criteria were based on the ordered exam and were deliberately wide, including MRI exam orders which would typically route to other sections (e.g., MR Bony Pelvis or MR Angiogram of the Abdomen) because there is a crossover in the real world and the algorithm must train and recognize these. The available exam orders have a small amount of detail beyond the generic, e.g., with or without contrast, and there is an order for enterography, but the amount of detail is insufficient to protocol exams and may be inaccurate.

The protocol options and infrastructure at our tertiary referral institution are complex. A brief background with some key concepts is thereby necessary to guide the reader. Exam protocols are defined through our radiology information system (RIS), Radiant (Epic Systems Corporation, Verona, WI). MRI protocols can be divided into three main categories:

“Single-button” protocols presented in a protocol dashboard.

Named protocols entered by name as free text; these do not have dedicated buttons but are fully defined and known to technologists.

Completely bespoke protocols with each sequence and orientation fully defined by the radiologist.

Most exam protocols employed are in category #1, with the overwhelming remainder in category #2; category #3 is rarely employed. Necessary orders that must be included to complete an exam are automatically included for “single-button” protocols, e.g., IV or oral contrast for protocols that require them. However, for categories 2 and 3, these must be defined by the radiologist.

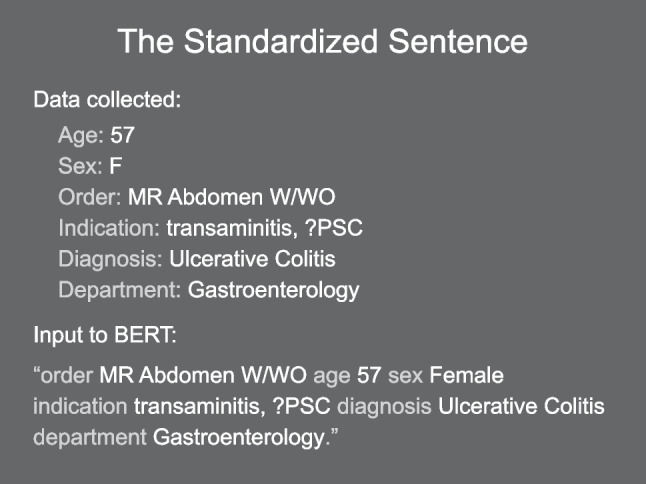

This free-text data was used to train an NLP algorithm derived from BERT [18]. BERT is trained and operates on one to two sentences, so a standardized sentence encapsulating the context and metadata of the ordered exam was constructed from the free-text metadata (see Fig. 1). The model was blind to the protocol names. Many variants of BERT are publicly available; the original model was trained on general text from the internet, but variants of the BERT base architecture fine-tuned for a specific subdomain often perform better. We evaluated several variants fine-tuned on medical notes or literature, with minor performance improvements. Our final model was based on BlueBERT, a public domain model based on BERT, and fine-tuned on PubMed and the MIMIC-III clinical notes database released by the National Center for Biotechnology Information [22]. We used this model with its default vocabulary and tokenizer, publicly available from HuggingFace. The BlueBERT model family is derived from BERT Uncased, so the tokenizer ignores the case in input text during tokenization.

Fig. 1.

Example construction of the standardized sentence input to BERT. This example is not from clinical data but rather a plausible example of a routine exam order. All text inputs to BERT were constructed from exam metadata in this manner. The six data elements shown are the final set of data elements used. Additional data elements explored but discarded were a free-text order comment and the specific MRI scanner used

The BlueBERT-Base model with MIMIC-III clinical note fine-tuning was our base model, obtained from Hugging Face. The tokenizer was the default tokenizer. All models in the BlueBERT family are uncased, so case information was automatically removed during tokenization. The software stack used was Python 3.10 with the GPU interface provided through the PyTorch package and BlueBERT model and tokenizer from the HuggingFace package, and required supporting libraries. All software in the toolchain was open source. Model fine-tuning was performed on a Nvidia RTX 3090 Founders Edition GPU with 24 GB of VRAM. A single fine-tuning run required 90–120 min on this hardware. Batch inference using a trained model is not an implementation bottleneck with the RTX 3090 capable of over 1000 predictions per second.

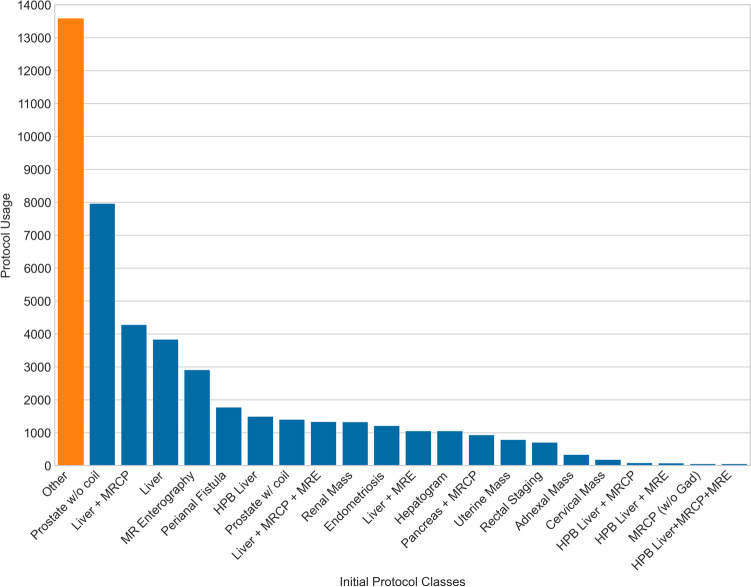

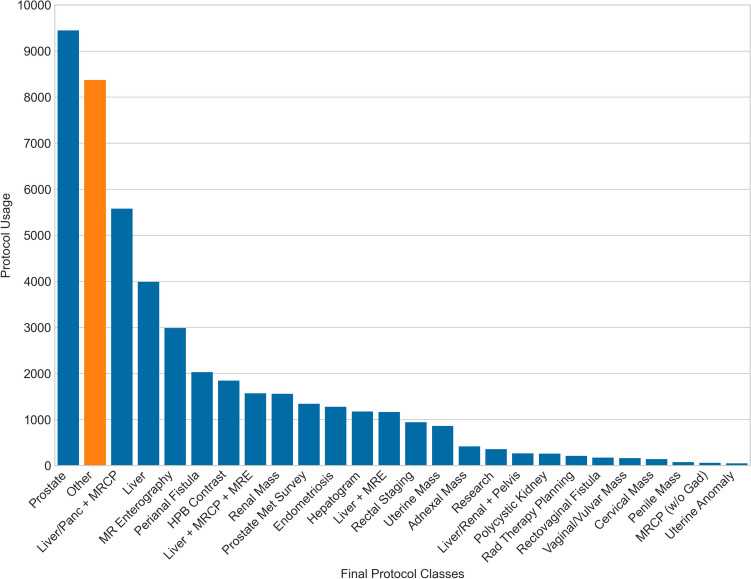

The exam protocoling task was cast as a sequence classification problem to BERT, with classes being annotated protocols or protocol groups. Training was an iterative process. The primary hyperparameter optimizations explored were training rate, Adam optimizer parameters such as weight decay and learning rate, and termination criteria. Initially, only protocols in category #1 were defined as predictive classes as well as the “Other” category (Fig. 2). Analyzing these early model predictions revealed systematic errors, which were found related to variations in practice or changes in practice during the period from which training data was obtained. When practice changes were discovered and verified, the training data were corrected in retrospect to reflect the current practice. This process is expanded upon below in the “Discussion” section. The final set of protocols included 26 discrete classes, with improved performance despite broadening from the original group of 22 classes (Fig. 3).

Fig. 2.

Initial protocol category frequency analysis sorted by class size in descending order. The largest overall category was “Other,” including all exams not destined for the abdominal division as well as protocol categories #2 and #3. Several exam protocols were so rarely used and they could not be well predicted. Abbreviations and shorthand used for readability: magnetic resonance elastography (MRE), hepatobiliary contrast (HPB), magnetic resonance cholangiopancreatography (MRCP), gadolinium (Gad), and endorectal coil for prostate referred to as “coil”

Fig. 3.

Final protocol category frequency analysis sorted by class size in descending order. The largest overall category is now prostate, a combined group including exams with and without endorectal coil. This grouping reflects practice evolution and was decided by section leadership. The “Other” category is now much smaller due to manual QC and annotation of several protocols from category #2 into discrete protocol classes, including prostate metastatic survey, research/IRB, and polycystic kidney. Several exam protocols that were rarely used and could not be effectively predicted have been grouped, including hepatobiliary contrast into a single combined category

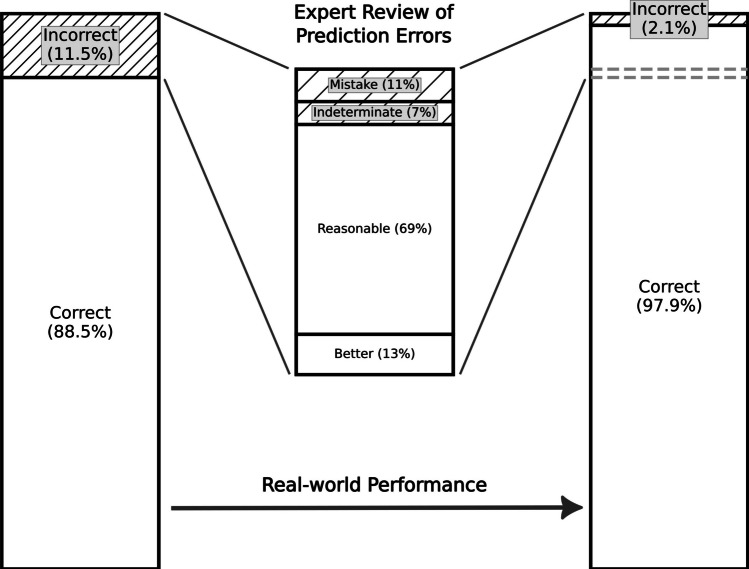

Finally, we re-evaluated a sample of prediction errors from the final model applied to the test dataset after observing in our QC process, similar to Cronister et al. [10] and Yao et al. [15], that the majority of errors were reasonable alternatives. To satisfy departmental leadership prior to implementation, each marked erroneous protocol classification was assigned one of four categories by a board-certified, abdominal fellowship-trained radiologist. The four categories of error were as follows: “More correct” for a prediction judged superior to the protocol chosen in practice on basis of established internal practice guidelines, “Reasonable” for reasonable alternative predicted protocols that expected to also answer the question or produce a correct diagnosis, “Indeterminate” for when there was insufficient information available for the reviewer to confidently distinguish if the model prediction or the human selected protocol was the better choice, or “Mistake” for when the predicted protocol was unsuitable. These results are shown in Fig. 4.

Fig. 4.

Evaluation of prediction errors made by the final model. This figure shows the model performance before (left bar chart) and after (right bar chart) an expert radiologist reviewed a representative proportion (25%) of the model predictions marked as errors. Each error was classified into one of four categories: more correct than the employed protocol (abbreviated better above); a reasonable alternative; indeterminate where insufficient information was available; or mistake where the algorithm was truly incorrect. Within the representative sample, 81.9% of the errors were either more correct than the protocol chosen in practice, or reasonable alternatives, yielding projected real-world performance of 97.9% correct protocol predictions

Results

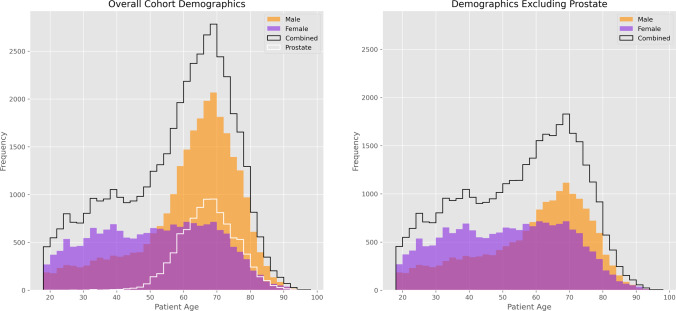

The cohort was overall 40.8% female and 59.2% male, with the imbalance due to a high volume of prostate MRI. Excluding prostate MRI, the cohort sex balance was similar to the general population (51.2% female and 48.8% male). Histogram comparisons of cohort demographics are shown in Fig. 5.

Fig. 5.

Histograms of cohort demographics. On the left, the overall cohort is a black line broken down by male (orange) and female (purple). The large proportion of prostate MRI exams is shown as a white line. On the right, these histograms are plotted with prostate MRI removed holding the Y-axis scale constant for comparison. Note there is a sex-based difference in exam ordering frequency pattern by age, with a relatively constant rate of female MRI exams from age 30–70 whereas male MRI exams peak strongly in the late 60 s, with or without prostate exams included. This effect is consistent and was not corrected as it was assumed a feature of the practice/ordering pattern

Table 1 displays the model performance during development. We report accuracy, Matthews correlation coefficient (MCC), and both the macroscopic and microscopic variants of the F1 score, true negative rate (TNR) and true positive rate (TPR). Regarding macro/micro scores, microscopic scores weigh by class size and are considered more correct for the skewed proportionality between classes as seen here whereas the macroscopic scores treat all classes equally. However, both are typically reported as divergence between them is often illustrative. The original model included all available clinical data elements and demonstrated relatively poor performance with an accuracy of 67.1%, a Matthews correlation coefficient (MCC) of 0.64, and a true positive rate (TPR) of both macroscopic and microscopic of 0.42. The original model had a poor F1 score macroscopic aggregate of 0.37, but the microscopic aggregate was much higher at 0.67. Performance increased by narrowing the data elements included initially to 5 and then adding one more for an ultimate total of 6 data elements which were reliably present and unlikely to impair generalization, as well as through hyperparameter optimization and quality control (QC) checks.

Table 1.

Evolution of model performance during development, from earliest to final trained model. The initial run included all available clinical data elements. The five data elements chosen limited the standardized sentence to only order, age, sex, diagnosis, and free-text indication. Later, the ordering department was added for a total of six elements. Gaps in the column notes represent hyperparameter experimentation or ongoing QC. The largest gains occurred during the appropriate grouping of similar classes and performing QC, including manual annotations of commonly used classes from the Other group (see Figs. 2 and 3). The final model maximized TNR (better performance shunting exams to human review) with a slight loss in TPR.

| Run | Notes | Accuracy | MCC | Kappa | F1 macro | F1 micro | TNR macro | TNR micro | TPR macro | TPR micro |

|---|---|---|---|---|---|---|---|---|---|---|

| 0 | BERT base, all data elements | 0.670 | 0.641 | 0.629 | 0.371 | 0.670 | 0.983 | 0.984 | 0.424 | 0.424 |

| 1 | Limit to five data elements | 0.813 | 0.791 | 0.789 | 0.621 | 0.813 | 0.991 | 0.991 | 0.626 | 0.626 |

| 2 | 0.810 | 0.789 | 0.788 | 0.633 | 0.810 | 0.991 | 0.991 | 0.644 | 0.644 | |

| 3 | 0.717 | 0.700 | 0.693 | 0.629 | 0.717 | 0.987 | 0.987 | 0.700 | 0.700 | |

| 4 | 0.808 | 0.787 | 0.786 | 0.639 | 0.808 | 0.991 | 0.991 | 0.648 | 0.648 | |

| 5 | 0.810 | 0.789 | 0.788 | 0.633 | 0.810 | 0.991 | 0.991 | 0.644 | 0.644 | |

| 6 | 0.811 | 0.790 | 0.789 | 0.628 | 0.811 | 0.991 | 0.991 | 0.639 | 0.639 | |

| 7 | Experiments with groups | 0.842 | 0.823 | 0.823 | 0.806 | 0.842 | 0.990 | 0.991 | 0.817 | 0.817 |

| 8 | 0.830 | 0.810 | 0.809 | 0.771 | 0.830 | 0.990 | 0.990 | 0.783 | 0.783 | |

| 9 | 0.843 | 0.825 | 0.824 | 0.815 | 0.843 | 0.990 | 0.991 | 0.818 | 0.818 | |

| 10 | 0.845 | 0.827 | 0.826 | 0.776 | 0.845 | 0.991 | 0.991 | 0.785 | 0.785 | |

| 11 | 0.799 | 0.778 | 0.776 | 0.570 | 0.799 | 0.990 | 0.991 | 0.590 | 0.590 | |

| 12 | 0.842 | 0.823 | 0.823 | 0.777 | 0.842 | 0.990 | 0.991 | 0.787 | 0.787 | |

| 13 | Add ordering department | 0.843 | 0.824 | 0.824 | 0.779 | 0.843 | 0.990 | 0.991 | 0.791 | 0.791 |

| 14 | 0.723 | 0.705 | 0.698 | 0.620 | 0.723 | 0.987 | 0.987 | 0.709 | 0.709 | |

| 15 | Move to BlueBERT | 0.794 | 0.777 | 0.773 | 0.786 | 0.794 | 0.988 | 0.988 | 0.858 | 0.858 |

| 16 | 0.794 | 0.777 | 0.773 | 0.786 | 0.794 | 0.988 | 0.988 | 0.858 | 0.858 | |

| 17 | QC begins | 0.749 | 0.734 | 0.728 | 0.681 | 0.749 | 0.991 | 0.991 | 0.844 | 0.844 |

| 18 | QC ongoing, grouping experiments | 0.789 | 0.773 | 0.770 | 0.739 | 0.789 | 0.991 | 0.991 | 0.834 | 0.834 |

| 19 | 0.780 | 0.764 | 0.760 | 0.732 | 0.780 | 0.991 | 0.991 | 0.832 | 0.832 | |

| 20 | 0.775 | 0.759 | 0.755 | 0.729 | 0.775 | 0.991 | 0.991 | 0.829 | 0.829 | |

| 21 | 0.765 | 0.750 | 0.745 | 0.728 | 0.765 | 0.991 | 0.991 | 0.848 | 0.848 | |

| 22 | 0.822 | 0.806 | 0.802 | 0.774 | 0.822 | 0.992 | 0.992 | 0.878 | 0.878 | |

| 23 | 0.881 | 0.869 | 0.868 | 0.773 | 0.881 | 0.995 | 0.995 | 0.838 | 0.838 | |

| 24 | QC complete | 0.885 | 0.874 | 0.873 | 0.813 | 0.885 | 0.995 | 0.995 | 0.872 | 0.872 |

Bold values denote the highest value attained in each column; in the case of a tie both are bolded

Our final trained algorithm based on BlueBERT had an accuracy of 88.5% with an MCC of 0.87, a true positive rate of 0.87, and a true negative rate of 0.99. In other words, for every 25 exams, 22 would be exactly correctly protocolled. When reviewing errors in prediction from the final model, as noted in recent prior studies [10, 15], most errors were reasonable alternatives where employing the chosen protocol would have resulted in correct diagnosis. To quantify this, a sample of 25% of the errors made was reviewed by an expert radiologist and classified into one of four categories. The algorithm's predictions were found to be more correct than the protocol used in practice (based on current practice standards and guidelines) 13.2% of the time, reasonable alternatives likely to yield a correct diagnosis 68.7% of the time, indeterminate with insufficient information provided 7.5% of the time, and only 10.6% represented true errors. Given that 81.9% of the errors were in fact correct or reasonable alternatives, the real-world practical accuracy of this model is extrapolated to be 97.9% (Fig. 4).

Discussion

Our final model was based on BlueBERT, a public domain model fine-tuned on PubMed and the MIMIC-III clinical notes database released by the National Center for Biotechnology Information [22]. While BERT variants fine-tuned on radiology reports exist (e.g., RadBERT) and have been applied to protocoling in prior work [11], the input exam metadata is free text generated by referring clinicians, so BlueBERT was more appropriate.

The inclusion criteria were deliberately broad to encompass any exam ordered which might result in an abdominal or pelvic MRI protocol. Put differently, the inclusion criteria were predicated on the expected a priori information a protocoling algorithm would have in live production, blind to the final selected protocol. This means the cohort deliberately included, for example, general MRI pelvis orders which may be protocoled as a perianal fistula evaluation, or a musculoskeletal pelvis exam routed to a different subspecialty.

Before training, a crucial first step was data exploration to define all relevant classes. Initially, all single-button protocols (category #1) were discrete classes while all exams from protocol categories #2 or #3 as well as a widely inclusive set of criteria including exams which were typically redirected to other subspecialties (e.g., MSK pelvis, MR angiography) were combined into a bucket category named “Other.” This category is critically important and large, initially consisting of 29.3% (13,576/46,293) of the cohort, due to the inclusion of MSK pelvic exams, vascular exams, and research exams (Fig. 2). The “Other” category allows the algorithm to learn to handle real-world mistakes in ordering patterns, admit it is uncertain when faced with unknown situations or outliers, and prompt human involvement when predicting “Other” rather than confidently selecting an incorrect protocol. Iterative analysis of mistakes and engagement of usage in practice allowed narrowing the “Other” category by adding classes from protocol categories #2 via manual annotation and quality control. For example, some radiologists preferred to manually type protocol names, even for protocols in category #1. This was also a fallback mechanism during occasional downtime. Once realized, these were searched for and merged with the appropriate category #1 protocol classes. Several protocols from categories #2 or #3, initially in the “Other” category, appeared to confound the performance of similar category #1 protocols. Of interest, in multiple cases, overall algorithm performance increased when certain protocols from category #2 were manually labeled and added as discrete classes; e.g., when “total kidney volume” (initially a category #2 protocol) was added as a discrete class alongside “renal mass,” both classes and the overall model performance improved. These were separated from the “Other” category into discrete, labeled classes via manual annotation. Ultimately, the Other category was reduced in size by 38% to approximately 8300 exams (see Fig. 3 and compare to Fig. 2).

Through this process, we also learned some used rarely classes are too small to train effectively. Such protocols were grouped with similar categories, e.g., rarely used subcategories employing hepatobiliary contrast media. During development, the criteria for endorectal coil use in prostate MRI were further narrowed, prompting the grouping of the prostate MRI protocols both with and without an endorectal coil. This yielded the final, best-performing model (Table 1). The overall performance was highest, and the true negative ratio was maximized.

Because our model is based on a “smaller” large language model (BERT), which precedes ChatGPT and the rapid development of extremely large models, it can be efficiently run in real time across a large enterprise on a single consumer GPU. Our trained model can perform inference for our entire data cohort spanning multiple years in less than 1 min on the RTX 3090 GPU it was trained on. State-of-the-art large language models, at the time of interpretation ChatGPT-4o, require many datacenter-level GPUs to simply run the model with high costs per token. With real-world performance approaching 98%, incremental gains (if any) would be minimal and cost–benefit at best difficult to justify.

Our trained algorithm provides excellent performance today and appears to outperform similar algorithms reported in the literature, though for the aforementioned reasons, such comparisons are limited due to uniqueness in approach and practice. Narrowing the comparison to recent algorithms with similar NLP approaches, our algorithm selects between a cohort of 26 closely related abdominal and pelvic MRI protocols, using BlueBERT fine-tuned on 44 k exams with real-world accuracy of almost 98% after prediction error analysis. The closest competitors also used BERT or BERT derivatives [15–17]. Yao et al. [15] used a very broad model with BERT Base trained on 222 k exams across 300 protocol categories including all subspecialties. The F1 score was about 0.9; accuracy predictions for exam priority triage and grouping into the top 5 protocols were up to 99% but limiting such an algorithm to 5 of 300 protocols strongly hampers clinical utility in real practice. Interestingly, Yao also notes that many “errors” were in fact reasonable alternatives but did not quantify this as we did. Eghbali et al. [16] fine-tuned a BERT derivative named DistilBERT with 32 k musculoskeletal and spine subspecialty MRI exams to select between 17 protocols with an ultimate accuracy of 83%. It is surprising this performance was not higher, as their protocol space had at most two protocols to select from once the correct joint is identified. Perhaps, a subset of cases did not include sufficient information in the indication to uniquely specify the specific joint. Finally, Talebi et al. [17] fine-tuned four different BERT derivatives to select between 10 neuroradiology MRI protocols using 88 k exams, with F1 scores up to 0.92. Unique to their approach was the digital up-sampling of rarer exam indications to account for differences in ordering frequency, which is worth consideration, but we decided against it as we felt a production model should be trained on data with protocol usage frequencies matching the practice.

Medicine is always changing. Inevitably, the practice will drift away from any static predictive model. Thus, our predictive tools must evolve alongside the practice. The retrospective data for our model extended to January 2019, and our practice changed in multiple ways during the retrospective period. Examples include improved body coils enabling routine PI-RADS 2.1 compliant prostate MRI without an endorectal coil, decreasing use of the endorectal coil protocol. We also expanded the use of hepatobiliary contrast agents and the use of MR elastography evolved. The goal of this work is to create an algorithm that matches current practice on an ongoing basis, but to do so requires one of two approaches:

Limit training data only to exams since the last known practice change.

Impose drift on the retrospective training data by correcting it to match current practice.

Limiting the data is problematic as this would reduce performance and force the algorithm to always lag changes in the practice. We chose the latter approach.

Collaborating closely with the practice, the retrospective cohort was analyzed and, where appropriate, corrected to reflect the current state of the practice. Over 14,000 exams in the training set were QC checked and, if necessary, corrected during this process. We evaluated known changes in the practice as well as iteratively analyzed model predictions searching for systematic errors in the test set. The latter approach also revealed subtle variations between radiologists’ protocol preferences indicating opportunities for practice standardization. Correction of training data and iterative re-training to adapt to known changes in practice is a powerful technique but should be wielded cautiously and only where necessary.

Future work includes a sister project completed with a similar approach for neuroradiology MRI protocol prediction. Once these pilots are in production, we expect the general approach to rapidly expand to other divisions and modalities. The described approach to adapt these algorithms to practice changes will be critical to enable robustness and continued accuracy alongside the evolving practice. Per-protocol prediction strength can be provided by the final layer of BlueBERT, and we are exploring options to express this as an analogue for prediction confidence in the protocoling user interface via text or a color overlay.

Our approach should be generalizable, but unique factors in practice, language employed by ordering clinicians, and differences in patient population limit the generalizability of any model beyond a particular institution.

Conclusions

A highly performant natural language processing algorithm (BERT) was trained to predict abdominopelvic MRI protocols at a tertiary referral institution, with nearly 98% real-world accuracy. Methods to optimize or adapt the algorithm’s performance in the context of practice changes are discussed, including careful correction of the training data such that the training data match current (or desired) practice. Best practices for data curation and quality control are crucial to realizing ideal performance, including an “Other” category and broad inclusion criteria that encompass incorrect orders that will be encountered in production.

MRI protocol prediction is a time-consuming non-interpretive task. Prediction of the correct protocol with high accuracy increases efficiency, improves practice standardization, reduces staff workload, and enables this information to be incorporated into the scheduling pipeline for schedulers, opening the door for patient exam self-scheduling. The clinical impacts of highly performant protocol prediction include optimizing MRI schedule efficiency and thus access to imaging, practice standardization, and protocoling efficiency. Secondary benefits include decreasing radiologist interruptions related to protocoling/scheduling and improved radiologist work-life balance by reducing non-interpretive task burden.

Declarations

Conflict of Interest

The authors declare no competing interests.

Footnotes

All figures and text are original and never previously published.

No AI tools were used in the composition of this manuscript.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Eberhard M, Alkadhi H. Machine learning and deep neural networks: applications in patient and scan preparation, contrast medium, and radiation dose optimization. Journal of Thoracic Imaging 2020;35:S17-S20. [DOI] [PubMed] [Google Scholar]

- 2.Kalra A, Chakraborty A, Fine B, Reicher J. Machine learning for automation of radiology protocols for quality and efficiency improvement. Journal of the American College of Radiology 2020;17(9):1149-1158. [DOI] [PubMed] [Google Scholar]

- 3.Lakhani P, Prater AB, Hutson RK, Andriole KP, Dreyer KJ, Morey J, Prevedello LM, Clark TJ, Geis JR, Itri JN. Machine learning in radiology: applications beyond image interpretation. Journal of the American College of Radiology 2018;15(2):350-359. [DOI] [PubMed] [Google Scholar]

- 4.Pierre K, Haneberg AG, Kwak S, Peters KR, Hochhegger B, Sananmuang T, Tunlayadechanont P, Tighe PJ, Mancuso A, Forghani R. Applications of Artificial Intelligence in the Radiology Roundtrip: Process Streamlining, Workflow Optimization, and Beyond. Seminars in Roentgenology 2023;58(2):158–169. [DOI] [PubMed]

- 5.Raju N, Woodburn M, Kachel S, O'Shaughnessy J, Sorace L, Yang N, Lim RP. A Review of Published Machine Learning Natural Language Processing Applications for Protocolling Radiology Imaging. arXiv preprint arXiv:220611502 2022.

- 6.Sandino CM, Cole EK, Alkan C, Chaudhari AS, Loening AM, Hyun D, Dahl J, Wang AS, Vasanawala SS. Upstream machine learning in radiology. Radiologic Clinics 2021;59(6):967-985. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Brown AD, Marotta TR. A natural language processing-based model to automate MRI brain protocol selection and prioritization. Academic Radiology 2017;24(2):160-166. [DOI] [PubMed] [Google Scholar]

- 8.Brown AD, Marotta TR. Using machine learning for sequence-level automated MRI protocol selection in neuroradiology. Journal of the American Medical Informatics Association 2018;25(5):568-571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chillakuru YR, Munjal S, Laguna B, Chen TL, Chaudhari GR, Vu T, Seo Y, Narvid J, Sohn JH. Development and web deployment of an automated neuroradiology MRI protocoling tool with natural language processing. BMC medical informatics and decision making 2021;21(1):1-10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Cronister C, Issa TB, Uminsky D, Filice RW. Protocol Selection of Advanced Imaging Exams using Multi-steps Deep Learning Models. Open Science Index 2022;16(2);346–353.

- 11.Lau W, Aaltonen L, Gunn M, Yetisgen M. Automatic Assignment of Radiology Examination Protocols Using Pre-trained Language Models with Knowledge Distillation. AMIA Annu Symp Proc 2021;2021:668–676. [PMC free article] [PubMed] [Google Scholar]

- 12.Lee YH. Efficiency improvement in a busy radiology practice: determination of musculoskeletal magnetic resonance imaging protocol using deep-learning convolutional neural networks. Journal of digital imaging 2018;31:604-610. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.López-Úbeda P, Díaz-Galiano MC, Martín-Noguerol T, Luna A, Ureña-López LA, Martín-Valdivia MT. Automatic medical protocol classification using machine learning approaches. Computer Methods and Programs in Biomedicine 2021;200:105939. [DOI] [PubMed] [Google Scholar]

- 14.Trivedi H, Mesterhazy J, Laguna B, Vu T, Sohn JH. Automatic determination of the need for intravenous contrast in musculoskeletal MRI examinations using IBM Watson’s natural language processing algorithm. Journal of Digital Imaging 2018;31:245–251. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Yao J, Alabousi A, Mironov O. Evaluation of a BERT Natural Language Processing Model for Automating CT and MRI Triage and Protocol Selection. Canadian Association of Radiologists Journal 2024;0(0):1–8. 10.1177/08465371241255895 [DOI] [PubMed]

- 16.Eghbali N, Siegal D, Klochko C, Ghassemi MM. Automation of Protocoling Advanced MSK Examinations Using Natural Language Processing Techniques. AMIA Summits on Translational Science Proceedings 2023;2023:118–127. [PMC free article] [PubMed]

- 17.Talebi S, Tong E, Li A, Yamin G, Zaharchuk G, Mofrad MRK. Exploring the performance and explainability of fine-tuned BERT models for neuroradiology protocol assignment. BMC Medical Informatics Decision Making 2024;24(40):1-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Devlin J, Chang M-W, Lee K, Toutanova K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:181004805 2018. 10.1007/s10278-024-01128-4

- 19.Brown T, Mann B, Ryder N, Subbiah M, Kaplan JD, Dhariwal P, Neelakantan A, Shyam P, Sastry G, Askell A. Language models are few-shot learners. Advances in neural information processing systems 2020;33:1877-1901. [Google Scholar]

- 20.Radford A, Narasimhan K, Salimans T, Sutskever I. Improving language understanding by generative pre-training. OpenAI 2018.

- 21.Radford A, Wu J, Child R, Luan D, Amodei D, Sutskever I. Language models are unsupervised multitask learners. OpenAI blog 2019;1(8):9. [Google Scholar]

- 22.Peng Y, Yan S, Lu Z. Transfer Learning in Biomedical Natural Language Processing: An Evaluation of BERT and ELMo on Ten Benchmarking Datasets. Proceedings of the Conference of Association for Computational Linguistics 2019;58–65.