Abstract

Stomata control gas exchange and water vapor release, and thereby strongly affect photosynthesis and transpiration. Characterizing stomatal traits such as size, density, and distribution is essential for understanding how plants adapt to their environment. While microscopy is widely used for this purpose, manual analysis is labor-intensive and time-consuming, which limits large-scale studies. To overcome this, we introduce an automated, high-throughput method that leverages YOLOv8, an advanced deep learning model, for more accurate and efficient stomatal trait measurement. Our approach provides a comprehensive analysis of stomatal morphology by examining both stomatal pores and guard cells. A key contribution is the introduction of stomatal angles as a novel phenotyping trait, which can offer deeper insights into stomatal function. We developed a model using a carefully annotated dataset that accurately segments and analyzes stomatal guard cells from high-resolution images. Additionally, our study introduces a new opening ratio metric, calculated from the areas of the guard cells and the stomatal pore, providing a valuable morphological descriptor for future physiological research. This scalable system enhances the precision and efficiency of large-scale plant phenotyping, offering a new tool to advance research in plant physiology.

Keywords: Stomatal traits, Deep learning, Segmentation, Morphology, Density, Photosynthesis, Transpiration

Subject terms: Plant sciences, Light responses, Photosynthesis, Stomata

Introduction

Stomata are tiny openings found on the surfaces of plant leaves that are essential for controlling the exchange of gases and the release of water vapor. These small pores have a significant impact on the processes of photosynthesis and transpiration, making them vital for a plant’s overall health and functioning 1,2. The analysis of stomatal characteristics, including size, density, and distribution, is essential for understanding plant physiology, adaptability to environmental conditions, and responses to climate change 3–5.

In dicotyledonous plants, each stomatal pore is flanked by a pair of kidney-shaped guard cells (Fig. 1). Grass species such as barley and maize, by contrast, have dumbbell-shaped guard cells accompanied by a pair of subsidiary cells 6,7. Stomatal pores are key anatomical structures because they control the diffusion of carbon dioxide (CO₂) into the leaf and therefore the rate of photosynthesis, the process that uses water and light to produce the oxygen and carbohydrates vital for plant life. The opening and closing of stomata over the day-night cycle and in response to environmental demands is therefore vital for plant growth and survival 2,8,9. Stomatal size and number are commonly assessed with a microscope 10–12. However, structural differences among the stomata of different species (Fig. 1) and variation in image quality make close examination of stomatal number and size an arduous task that requires extensive training.

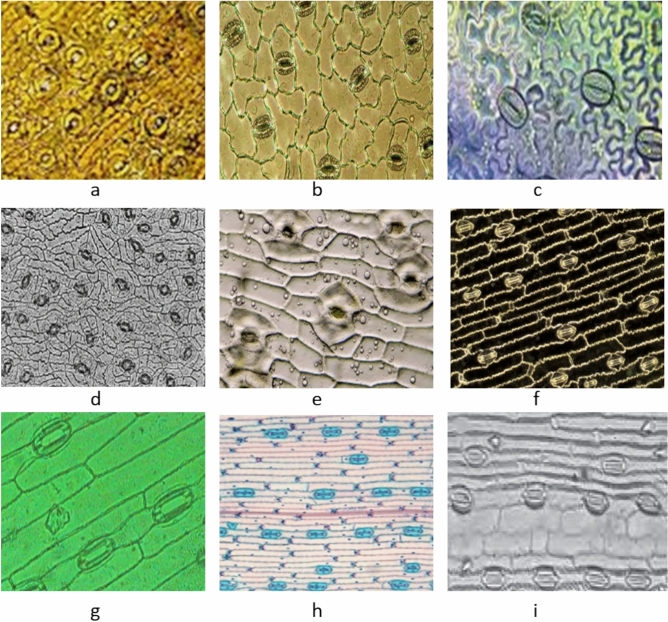

Fig. 1.

Representative examples of stomatal orientation across different plant species. Panels (a–i) show stomata in (a) Lilium (Lily), (b) Taxus, (c) cuticle peel of an unspecified species, (d) Ginkgo biloba, (e) Yucca, (f) maize (Zea mays), (g) barley (Hordeum vulgare), (h) wheat (Triticum aestivum), and (i) rice (Oryza sativa). In the grass species maize, barley, wheat, and rice (f–i), stomata exhibit a parallel orientation aligned with the leaf venation. In contrast, the non-grass species in panels (a–e), including Lily, Taxus, Ginkgo, and Yucca, display more variable or random orientations. This figure demonstrates the diversity of stomatal orientation patterns across plant taxa and underscores the need for robust methods capable of quantifying orientation in diverse morphological contexts.

Microscopy-assisted imaging provides a controlled setting for applying advanced computer vision techniques. By using calibrated optics, high-resolution images can be captured with minimal systematic noise. Moreover, the consistent patterns, appearances, and orientations of plant anatomy—especially in monocot grasses—help alleviate some typical challenges encountered by applied vision systems. Past studies aimed at quantifying stomatal density, pore width, and area have relied on traditional computer vision methods 13–18. Although these methods have proven effective for their intended purposes, they often involve complex, handcrafted, or multi-step processes. Recently, Convolutional Neural Networks (CNNs) have become popular for detecting stomatal characteristics 19–24. CNNs enable the learning of relevant operations from examples, effectively providing a data-driven approach to a series of computer vision tasks. A recent study demonstrated the effective use of YOLO-based instance segmentation to identify stomatal pores, showing improved speed and precision over Mask R-CNN in detecting small and variably shaped stomatal features 25. The present work builds on this foundation by extending the segmentation task to include both guard cells and stomatal pores, and by introducing new functional phenotyping metrics such as stomatal orientation and the opening ratio.

There are relatively few studies dedicated to measuring stomatal pore dimensions, and many of them employ semi-automated methods 15,19,20,22,26. Conventional methods are labor-intensive, time-consuming, and susceptible to subjective biases 22, as they typically require manual measurement and counting. This inefficiency hampers large-scale studies and high-throughput phenotyping, showing the need for automation that improves accuracy and broadens the range of measurable phenotypic traits. While previous research has predominantly concentrated on pore size and density 27, these metrics fail to capture the full morphological characteristics of stomata. Additionally, traditional techniques depend on manual intervention, which limits scalability and reproducibility. Moreover, earlier methods have often neglected the significance of guard cells and their orientations, which can offer critical insights into plant physiology and behavior 4,22,27.

Recent advances in deep learning have enabled accurate instance segmentation and object detection in complex biological images. Among these, YOLO (You Only Look Once) has emerged as a fast and efficient object detection framework capable of real-time inference and segmentation 28. YOLOv8 extends previous versions by incorporating instance segmentation masks, allowing not only detection of object presence but also precise pixel-level localization. However, while YOLO provides axis-aligned bounding boxes and segmentation masks, it does not directly infer object orientation. In contrast, methods that use Oriented Object Bounding Boxes (OOBBs) 29 are designed to predict the angle of rotation of each object, often by regressing the object’s orientation as an additional parameter. OOBBs are particularly useful in applications like aerial imagery or microscopy where object alignment varies significantly. Orientation-aware object detection via OOBBs has been applied to stomatal analysis and other biological tasks, but most of these approaches rely on geometric approximations (e.g., ellipse fitting) or customized keypoint regression rather than leveraging modern instance segmentation models. Our study bridges this gap by demonstrating how instance segmentation masks from YOLOv8 can be post-processed to extract object orientation, thus combining the accuracy of pixel-level segmentation with the practicality of angle estimation.

Several recent works have employed deep learning to detect stomata in varying orientations. For instance, Fan Zhang et al. introduced DeepRSD 30, a model designed to detect rotated stomata with high accuracy by explicitly considering their rotation during detection. However, while DeepRSD improves recognition performance, it does not directly extract or analyze the stomatal orientation as a phenotypic trait. Similarly, RotatedStomataNet 31 focuses on detecting stomata with non-horizontal alignment by using rotated bounding boxes, but it does not provide direct quantification or analysis of stomatal orientation. More recently, StoManager1 32 has been introduced as a tool for extracting multiple stomatal parameters, including orientation. This software estimates orientation by iteratively rotating a rectangle to find the best fit for each stoma, thereby approximating its direction. While these methods address orientation indirectly or as a secondary outcome, our work treats stomatal orientation as a central phenotypic feature and measures it systematically using ellipse fitting on instance-segmented stomatal pores and guard cells.

This study introduces stomatal angles as a new phenotyping feature and highlights the potential of these measurements to add a new dimension to plant phenotyping. By considering both stomatal pores and guard cells, the study aims to provide a more detailed understanding of stomatal morphology and function. It leverages YOLOv8, a state-of-the-art deep learning model, to automate the segmentation and analysis of stomatal guard cells from high-resolution plant images.

Materials and methods

Experimental design

Jocher (2023) describes YOLOv8 as an algorithm that combines acceptable prediction accuracy with real-time inference. To use these capabilities effectively, close attention must be paid to the architectural design and the parameter settings, because the model has to go through a training process. In this research, training was run on a powerful computer. This hardware configuration supports fast computation, enabling the model to tackle the intricate challenge of detecting stomata in diverse plant images.

We used the YOLOv8 model, a deep learning framework, to simplify the complex process of stomata segmentation. This single-pass image model offers rapid processing speed and high accuracy. YOLOv8 features a sophisticated artificial neural network architecture that includes elements such as feature pyramid networks and grid-based object detection. Configuring the model involves selecting suitable learning rates and batch sizes, which enable swift learning and stable output. The training process is iterative and aimed at enhancing predictive performance, and the model is exposed to a comprehensive dataset containing detailed information about stomata.

The diversity within this dataset enables YOLOv8 to recognize the subtle distinctions among different stomatal instances, allowing it to handle the complexities present in natural plant imagery. This strategy integrates suitable hardware, a sophisticated architecture, and refined parameters, creating a YOLOv8 model tailored for the challenges of stomatal segmentation. With its real-time prediction capabilities and high accuracy, this model becomes a valuable tool in automated plant phenotyping, diversifying phenotyping methods in plant biology and image analysis.

Image acquisition and preprocessing steps

In this study, accurate image capture and preprocessing are essential initial steps for effective stomatal segmentation. The images are sourced from the leaves of Hedyotis corymbosa and are taken in a controlled environment to maintain consistency and minimize outside influences. The formal identification of the plant material, Hedyotis corymbosa, was done by Anh Tuan Le (Faculty of Biology and Biotechnology, University of Science, Ho Chi Minh City). The plants are cultivated in a greenhouse in a mixture of clean soil and cow manure, under sunlight of 450 ± 100 μmol m⁻² s⁻¹, at a temperature of 32 ± 2 °C and a relative humidity of 70 ± 5%.

Following standard procedures, the fifth leaf from the top of each plant is affixed to a microscope slide with cyanoacrylate glue. High-resolution images of the leaf surfaces are obtained using a CKX41 inverted microscope in combination with a DFC450 camera, producing JPEG images at a resolution of 2592 × 1458 pixels. To address blurriness, especially in the stomatal structures, the Lucy-Richardson algorithm 33 is employed. This algorithm is known for its effectiveness in image deblurring and is applied iteratively to enhance each image’s clarity and improve the visibility of stomatal outlines. To facilitate efficient image processing, the original filenames of the images are changed to a standardized format: {W}{Leaf Number}.jpg, where "W" denotes "White." This consistent naming helps organize and manage the dataset effectively.

Data annotation and dataset preparation

The process of data annotation and dataset preparation is critical for training accurate deep learning models. Initially, each original image was enhanced using the Lucy-Richardson algorithm 33 to reduce blurriness and improve the definition of stomatal boundaries. Following this enhancement, the images were labeled using the Labelme 34 annotation tool. The annotated images were then converted from Labelme format to the COCO instance segmentation format using a custom script that leveraged the imgviz and Labelme libraries. In this COCO format, the label file named labels.txt included four categories: “ignore”, “background”, “stomata”, and “pore”.

Subsequently, the dataset was further processed using the SAHI 35 library to slice each image into smaller segments of 512 × 512 pixels, with an overlap ratio of 0.2. This slicing approach ensured that each image was divided into multiple sub-images, each with a 20% overlap with its neighbors. This method facilitated the generation of a larger number of training samples from the original images, enhancing the robustness of the model. To convert the annotated dataset from the COCO format to the YOLO segmentation format, the JSON2YOLO 36 library was utilized. This conversion ensured compatibility with the YOLOv8 training pipeline.
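The slicing step can be illustrated with a short sketch. This is not SAHI's own code: it is a minimal approximation that assumes a fixed stride of window minus overlap and a final window shifted back to the image border, and the function names `slice_starts` and `tile_boxes` are hypothetical.

```python
def slice_starts(length, window=512, overlap_ratio=0.2):
    """Start offsets of windows covering [0, length) along one axis.

    Assumption: stride = window - int(window * overlap_ratio), and the
    last window is shifted back so that it ends at the image border.
    """
    if length <= window:
        return [0]
    step = window - int(window * overlap_ratio)
    starts = []
    pos = 0
    while pos + window < length:
        starts.append(pos)
        pos += step
    starts.append(length - window)  # border-aligned final window
    return starts


def tile_boxes(width, height, window=512, overlap_ratio=0.2):
    """Return (x0, y0, x1, y1) boxes for every tile, row by row."""
    return [
        (x, y, x + window, y + window)
        for y in slice_starts(height, window, overlap_ratio)
        for x in slice_starts(width, window, overlap_ratio)
    ]


# Image size taken from the acquisition section (2592 x 1458 pixels).
boxes = tile_boxes(2592, 1458)
print(len(boxes), boxes[0], boxes[-1])
```

Under these assumptions, the last tile in each row and column overlaps its neighbor by more than 20%, because it is aligned to the border rather than to the regular stride.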

The dataset, now in YOLO segmentation format, was then split into training and validation sets with a ratio of 85% for training and 15% for validation. The final dataset comprised a total of 1260 images, with 1071 images designated for training and 189 images for validation. The training set included 10,937 annotations, with 5658 stomata cells and 5279 stomatal pores, where the area of stomatal cells ranged from 179 to 12,033 pixels, and the area of stomatal pores ranged from 0 to 4820 pixels. The validation set contained 1977 annotations, with 1020 stomatal cells and 957 stomatal pores, and similar ranges in area. Figure 2 presents statistical information on all instances of stomata guard cells and pores in the dataset. The top left section displays the number of instances for both stomata and pores, while the top right section illustrates the size distribution of the bounding boxes for these instances. The bottom left section shows the spatial distribution of pore and stomata instances across the images, indicating their random placement, and the bottom right section provides detailed size information for stomata, including width and height distributions. From the figure, it is evident that the instances are randomly distributed throughout the images without any specific pattern. The size analysis reveals that the width of stomata and pore instances ranges between 0.05 and 0.15 of the image width, while the height ranges from 0.15 to 0.22 of the image height. This detailed statistical information helps in understanding the distribution and size variability of the stomatal features within the dataset.
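The 85/15 split can be reproduced arithmetically with a few lines of code. The sketch below is an illustration only: the shuffling strategy, the seed, and the file names are assumptions, since the text does not state how images were assigned to each set.

```python
import random


def split_dataset(items, train_fraction=0.85, seed=0):
    """Shuffle items reproducibly and split them into train and validation."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train_fraction)
    return items[:n_train], items[n_train:]


# Hypothetical tile names standing in for the 1260 sliced images.
tiles = [f"tile_{i:04d}.jpg" for i in range(1260)]
train, val = split_dataset(tiles)
print(len(train), len(val))  # 1071 189
```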

Fig. 2.

Overview of the annotated stomatal dataset. This figure summarizes key characteristics of the dataset, including the distribution of stomatal and pore sizes, as well as the spatial locations of annotated instances within the image frames. The size distribution plots provide insights into the variability of stomatal and pore areas across samples, while the instance location map highlights the spatial diversity and density of stomata within the images. This information supports the robustness of the dataset for training and evaluating instance segmentation and phenotyping models.

Unlike previous work, where the label data contained only the pore, our new dataset also includes the stomatal guard cells. This dataset preparation and annotation process ensured that the deep learning model was trained on a diverse and well-represented set of images, facilitating the development of a robust and accurate stomatal segmentation model.

Model training

Model training is a critical phase in developing a robust deep learning system for stomatal segmentation. For this study, we employed the YOLOv8s 37 segmentation model, a state-of-the-art architecture known for its efficiency and accuracy in object detection and segmentation tasks. Training was carried out on a high-performance Windows workstation, which supplied the computational resources required for the intensive training process.

The training dataset, prepared in the YOLO segmentation format, consisted of 1071 images with detailed annotations for stomata and stomatal pores. This dataset was used to train the model, starting from the pretrained YOLOv8s weights downloaded from the official repository. The use of pretrained weights enabled a transfer learning approach, in which the model builds upon previously learned features and patterns, improving performance and reducing the required training time.

A critical aspect of the training process involved fine-tuning the hyperparameters to achieve optimal performance. The learning rate, a pivotal parameter influencing the model’s convergence speed and stability, was initially set to 0.01. This rate was selected based on preliminary experiments and established best practices for training YOLO models, and it was adjusted dynamically during training through learning rate scheduling to prevent overfitting and ensure smooth convergence.

The YOLOv8 segmentation model was trained with the following configuration. The model weights were loaded from “weights\last.pt”, and the dataset was specified in the data.yaml file. Training ran for 100 epochs, with early stopping after 50 epochs without improvement. The batch size was 16, and images were resized to 512 × 512 pixels. Checkpoints were saved during training, but not at periodic intervals. Caching was disabled, and zero workers were specified for data loading. Default project and run names were used, and the configuration’s pretrained-weights option was disabled. Stochastic Gradient Descent (SGD) was the optimizer, with verbose output enabled, a seed of 0 for reproducibility, and deterministic behavior enabled. Training was not restricted to a single class. The optimizer used a momentum of 0.937 and a weight decay of 0.0005, together with specific warmup settings. Loss weights were set for box loss, classification loss, Distribution Focal Loss (DFL), and the pose and keypoint objectness losses. Label smoothing was not applied. Data augmentation included HSV adjustments, translation, scaling, and horizontal flipping. Mosaic augmentation was enabled, while Mixup was disabled. This configuration aims to optimize the YOLOv8 segmentation model’s performance, with both stomatal pores and guard cells included in the annotations to enhance accuracy and reliability.
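For readers who want to reproduce a comparable run, the settings listed above can be collected in a dictionary and passed to the Ultralytics training call. The following is a sketch under stated assumptions: the key names follow the Ultralytics training arguments, the mosaic value of 1.0 is assumed (the text only says mosaic is enabled), the checkpoint path is written with a forward slash, and warmup, loss-weight, and HSV values are omitted because the text does not give them.

```python
# Settings restated from the configuration paragraph above.
TRAIN_ARGS = {
    "data": "data.yaml",
    "epochs": 100,
    "patience": 50,        # early stopping after 50 epochs without improvement
    "batch": 16,
    "imgsz": 512,
    "save": True,
    "save_period": -1,     # no periodic checkpoints
    "cache": False,
    "workers": 0,
    "pretrained": False,
    "optimizer": "SGD",
    "verbose": True,
    "seed": 0,
    "deterministic": True,
    "single_cls": False,
    "lr0": 0.01,           # initial learning rate
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "label_smoothing": 0.0,
    "mosaic": 1.0,         # assumed value; the text only says "enabled"
    "mixup": 0.0,          # disabled
}


def train_segmentation(weights="weights/last.pt", args=None):
    """Start training; requires the third-party `ultralytics` package."""
    from ultralytics import YOLO  # imported lazily so the config can be inspected without it

    model = YOLO(weights)
    return model.train(**(TRAIN_ARGS if args is None else args))
```

Keeping the settings in one dictionary makes it straightforward to log the exact configuration next to the resulting weights.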

The training process involved iteratively exposing the model to the training dataset, where the model predictions were compared against the ground truth annotations. The loss, representing the difference between the predicted and actual values, was calculated and used to adjust the model’s parameters through backpropagation. This iterative process continued until the model achieved satisfactory performance metrics, as evaluated on the validation dataset.

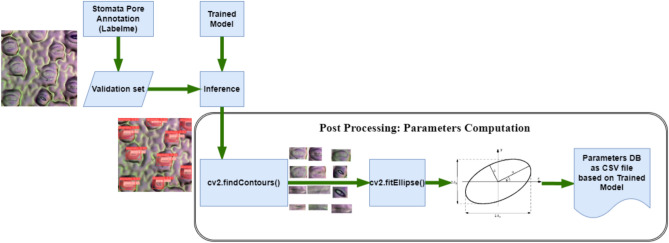

Post-processing steps for stomata segmentation

Post-processing is a crucial phase in the stomatal segmentation workflow, transforming raw model predictions into meaningful and actionable data. Following the initial segmentation by YOLOv8, which provides masks delineating the stomatal regions, post-processing steps are employed to refine these results and extract detailed morphological parameters (Fig. 3). The primary task in post-processing is extracting the contours of the segmented stomatal masks. This is accomplished using the OpenCV library, specifically its findContours function. The function operates on the binary masks generated by the YOLOv8 model, where pixels are classified as either stomatal or non-stomatal regions. It identifies the connected components in these binary masks and traces their perimeters, producing a set of contour points that define the boundaries of each stomatal region.

Fig. 3.

Post-processing pipeline for extracting stomatal phenotyping parameters. This figure outlines the sequential steps applied after instance segmentation to compute key stomatal features. Starting from the predicted masks, the process includes contour extraction, shape fitting, and morphological analysis to derive parameters such as stomatal orientation, length, width, pore area, guard cell area, and opening ratio. These quantitative traits are essential for downstream phenotypic analyses and biological interpretation.

The extracted contours provide a detailed geometric representation of the stomatal regions. These contours are essential for subsequent analyses, as they form the basis for calculating various stomatal parameters such as area, perimeter, and orientation. To ensure accuracy, the contour extraction process must handle noise and minor segmentation errors effectively. The use of robust contour tracing algorithms in OpenCV ensures that the extracted contours closely match the true boundaries of the stomatal regions. Following contour extraction, the next step involves fitting ellipses to these contours to derive additional geometric features. The cv2.fitEllipse function in OpenCV 38 is used to fit an ellipse to each set of contour points. This function approximates the contour shape with an ellipse, providing parameters such as the center coordinates, major and minor axis lengths, and the orientation angle of the ellipse. These parameters offer a comprehensive characterization of the stomatal shape and orientation.
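To make the ellipse parameters concrete, the sketch below derives a center, axis lengths, and an orientation from a binary mask using second-order image moments. This is a dependency-free stand-in chosen for illustration, not the `cv2.fitEllipse` call used in the pipeline, which fits an ellipse to contour points and follows its own angle convention. The function name and the returned keys are hypothetical.

```python
import math


def ellipse_from_mask(mask):
    """Moment-based ellipse for a binary mask given as rows of 0/1 values.

    Returns the centroid, full major and minor axis lengths, pixel area,
    and the angle (degrees, in [0, 180)) between the major axis and the
    positive x-axis in image coordinates (y pointing down).
    """
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if len(points) < 2:
        raise ValueError("mask needs at least two foreground pixels")
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # Central second-order moments, normalized by the pixel count.
    mu20 = sum((x - cx) ** 2 for x, _ in points) / n
    mu02 = sum((y - cy) ** 2 for _, y in points) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in points) / n
    # Eigenvalues of the covariance matrix give the variance along each axis.
    half_sum = (mu20 + mu02) / 2
    half_diff = math.hypot((mu20 - mu02) / 2, mu11)
    # For a filled ellipse the variance along an axis is (semi-axis)^2 / 4.
    major = 4 * math.sqrt(half_sum + half_diff)
    minor = 4 * math.sqrt(max(half_sum - half_diff, 0.0))
    angle = math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02)) % 180.0
    return {"center": (cx, cy), "major": major, "minor": minor,
            "area": n, "angle_deg": angle}


# A 9 x 3 horizontal bar and its transpose as toy masks.
horizontal = ellipse_from_mask([[1] * 9 for _ in range(3)])
vertical = ellipse_from_mask([[1] * 3 for _ in range(9)])
print(horizontal["angle_deg"], vertical["angle_deg"])
```

Because the moment-based estimate uses every mask pixel rather than only the boundary, it may differ slightly from a contour-based fit on irregular shapes.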

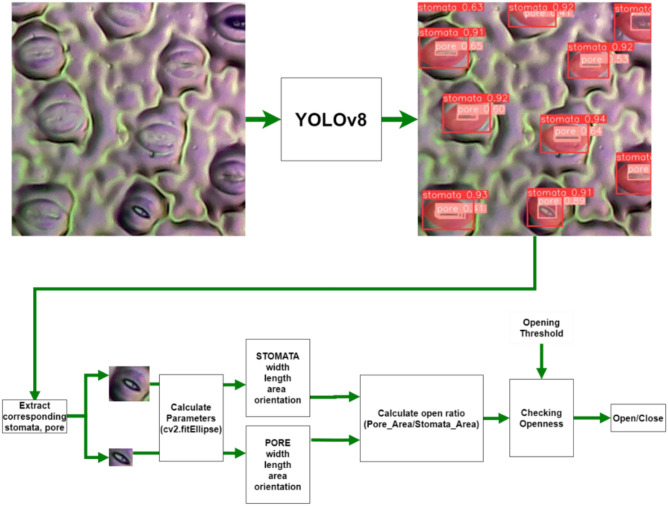

The method for calculating stomatal aperture is presented in Fig. 4. The input image is processed using YOLOv8, which predicts the segmentation of the stomatal guard cells and their corresponding pores. These segmentations are then used to calculate parameters including the width, length, area, and orientation of the stomata and pores.

Fig. 4.

Computational methodology for calculating stomatal opening. This figure illustrates the process used to quantify stomatal opening from segmented images. The approach involves identifying the stomatal pore and surrounding guard cells, calculating their respective areas, and computing the opening ratio as the pore area divided by the guard cell area. This ratio serves as a proxy for the degree of stomatal opening, enabling image-based assessment of stomatal function across large datasets.

Subsequently, the ratio between the pore area and the stomatal area is computed following Eq. (1). This ratio indicates the degree of stomatal openness.

$$\text{Opening ratio} = \frac{A_{\text{pore}}}{A_{\text{stomata}}} \tag{1}$$
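As a worked example of the opening ratio, the sketch below divides the pore area by the stomatal area, using the pixel areas of the sample instance reported in Table 1. The function name is hypothetical.

```python
def opening_ratio(pore_area, stomatal_area):
    """Pore area divided by stomatal (guard cell) area."""
    if stomatal_area <= 0:
        raise ValueError("stomatal area must be positive")
    return pore_area / stomatal_area


# Pixel areas of the sample instance in Table 1.
ground_truth = opening_ratio(644, 3771)
predicted = opening_ratio(690, 3843)
print(f"{ground_truth:.4f} {predicted:.4f} {abs(predicted - ground_truth):.4f}")
```

The two ratios evaluate to roughly 0.171 and 0.180, which Table 1 lists as 0.170 and 0.179, with an absolute error of about 0.009.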

The orientation angle is of particular interest because it describes the alignment of stomatal pores. The angle is measured between an axis of the fitted ellipse and a reference axis. In image coordinates, where the positive x-axis points to the right and the positive y-axis points downward, the rotation angle is interpreted as the angle between the positive x-axis and the minor axis of the ellipse. Equivalently, with the axes swapped, it is the angle between the positive y-axis and the major axis. This interpretation aids in understanding the directional orientation of stomatal pores, which can vary significantly among plant species or environmental conditions. The geometric parameters derived from the fitted ellipses, including area, width, length, and orientation, are then calculated and aggregated for statistical analysis. These parameters provide quantitative descriptions of stomatal morphology, which are essential for comparative studies and phenotypic classification.
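One practical consequence of measuring the orientation of an ellipse axis is that angles are axial: an axis at 0° is the same as one at 180°. When comparing a predicted orientation with a ground-truth one, the difference is therefore best taken modulo 180°. The helper below is a small sketch of that idea; its name is hypothetical, and it is an assumption that errors are compared this way rather than a description of the authors' code.

```python
def axial_difference(angle_a, angle_b):
    """Smallest difference in degrees between two axis orientations.

    Orientations are treated as equivalent modulo 180 degrees, so the
    result always lies in the range [0, 90].
    """
    d = abs(angle_a - angle_b) % 180.0
    return min(d, 180.0 - d)


print(axial_difference(120, 122))  # orientations that are close together
print(axial_difference(179, 1))    # orientations on either side of the wrap
```

Without the wrap-around handling, the second pair would be reported as 178° apart even though the two axes are nearly parallel.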

The precision and reliability of these post-processing steps are critical. Accurate contour extraction and ellipse fitting ensure that the derived morphological parameters reflect the true characteristics of stomatal regions. Any errors in these steps could lead to distorted measurements and misinterpretation of the data. Therefore, rigorous validation and verification of the post-processing pipeline are necessary to ensure high-quality results. Unlike previous work where the label data only contained the pore, our new dataset includes the stomatal guard cell. This inclusion improves the accuracy of our method by providing a more comprehensive representation of the stomatal structure.

Statistical analysis

The study evaluates the performance of the YOLOv8 model for stomatal segmentation through various statistical analyses. Key steps include calculating performance metrics such as precision, recall, F1-score, and intersection over union (IoU). Precision measures the accuracy of identified stomatal regions against the model’s total predictions, while recall assesses the model’s ability to detect actual stomatal regions. The F1-score serves as a combined measure of precision and recall. IoU quantifies the overlap between predicted stomatal areas and ground truth annotations, making it a vital metric for segmentation tasks 39.

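$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

$$\text{IoU} = \frac{|P \cap G|}{|P \cup G|} \tag{5}$$

Here TP, FP, and FN denote the numbers of true positives, false positives, and false negatives, and P and G denote the predicted and ground-truth regions.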
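The four metrics described above can be computed with a few small functions. The sketch below uses the standard definitions; the function names are hypothetical, and masks are represented as sets of pixel coordinates purely for brevity.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if p + r else 0.0


def mask_iou(predicted, truth):
    """Intersection over union of two masks given as sets of (x, y) pixels."""
    union = predicted | truth
    return len(predicted & truth) / len(union) if union else 0.0


# Harmonic mean of a precision of 92.3% and a recall of 89.7%.
print(round(f1_score(0.923, 0.897), 3))
```

For a precision of 92.3% and a recall of 89.7%, the harmonic mean is about 91.0%.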

Once the segmentation performance is validated, we proceed to analyze the morphological parameters extracted from the segmented stomatal contours. These parameters include the area, perimeter, major and minor axis lengths, and orientation angle of each stomatal region. Statistical summaries of these parameters, such as mean, median, standard deviation, and range, are calculated to describe the overall distribution of stomatal features within the dataset.
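The summary statistics named above can be obtained with Python's standard `statistics` module. The sketch below is illustrative only: the function name is hypothetical, and the input values are made up for the example rather than taken from the dataset.

```python
import statistics


def summarize(values):
    """Mean, median, sample standard deviation, and range of a parameter."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "std": statistics.stdev(values),  # sample (n - 1) standard deviation
        "range": (min(values), max(values)),
    }


# Hypothetical pore widths in pixels, for illustration only.
summary = summarize([18, 19, 20])
print(summary)
```

Orientation angles are axial quantities, so an ordinary mean can be misleading for samples that straddle the 0°/180° boundary; the other parameters can be summarized directly.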

Results

The results of our study demonstrate the effectiveness of the YOLOv8 model in accurately segmenting stomatal regions from high-resolution leaf images. This section presents a detailed analysis of the experimental findings, including quantitative performance metrics, visualizations of segmented stomata, and an interpretation of the extracted morphological parameters. The YOLOv8 model for stomatal segmentation demonstrated strong performance, achieving a precision of 92.3%, recall of 89.7%, and an F1-score of 91.0%. These metrics indicate that the model effectively identifies stomatal regions, with a high accuracy in distinguishing correct identifications (low false positives) and a strong ability to detect actual stomatal areas (though some actual stomata may be missed, leading to higher false negatives). Additionally, the intersection over union (IoU) score of 86.5% reflects substantial overlap between the predicted and actual stomatal regions, underscoring the model’s robustness in segmentation tasks. Collectively, these results demonstrate the model’s efficacy in accurately delineating stomatal regions with minimal error.

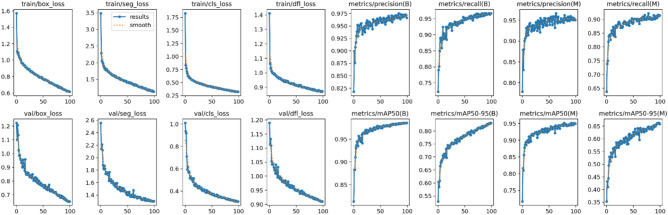

The training log of YOLOv8 for the final epoch (epoch 100) presents various performance metrics and losses, indicating the model’s efficacy in detecting and classifying stomatal features (Fig. 5). The training losses include box loss (0.61578), segmentation loss (1.1369), classification loss (0.32442), and distribution focal loss (DFL) (0.87207), which measure the model’s error in predicting bounding boxes, segmenting regions, classifying objects, and refining the regression of bounding-box boundaries, respectively.

Fig. 5.

Model training performance over 100 epochs. This figure shows the training log of the deep learning model across 100 epochs, including metrics such as training and validation loss, segmentation accuracy, and mean average precision (mAP). These plots provide insights into the model’s convergence behavior, performance consistency, and generalization ability throughout the training process.

The metrics for stomata bounding boxes show high precision (0.96648), recall (0.9669), mAP@50 (0.98531), and mAP@50-95 (0.83081), indicating accurate and consistent detections. Similarly, for stomata masks, the precision (0.95042), recall (0.91463), mAP@50 (0.94994), and mAP@50-95 (0.65831) reflect strong performance in segmentation tasks. The validation losses, namely box loss (0.65259), segmentation loss (1.3038), classification loss (0.30714), and DFL (0.91032), are close to the corresponding training losses, suggesting that the model generalizes beyond the training set. At the final epoch, all three parameter groups (pg0, pg1, pg2) shared a learning rate of 0.000298, consistent with the learning rate schedule having decayed from the initial value of 0.01. Overall, these metrics underscore the model’s precision and reliability in stomatal analysis.

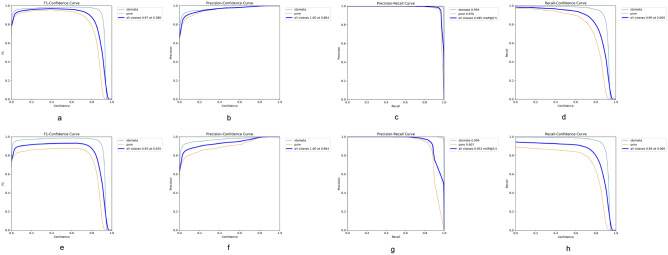

Figure 6 presents the performance of the YOLOv8 model as eight curves: the precision, recall, and F1-score as functions of the confidence threshold, together with the precision-recall curve, for both bounding box and mask predictions. The precision curve shows how the accuracy of the model’s positive predictions varies with the confidence threshold, while the recall curve indicates the model’s ability to identify all relevant instances.

Fig. 6.

Performance evaluation curves of YOLOv8 model for bounding box and mask predictions. This figure presents the detailed performance metrics of the trained YOLOv8 model. Panels (A–D) show the results for bounding box predictions: (A) F1 score curve, (B) precision curve, (C) precision-recall curve, and (D) recall curve. Panels (E–H) depict the corresponding metrics for instance mask predictions: (E) F1 score curve, (F) precision curve, (G) precision-recall curve, and (H) recall curve. These curves illustrate the model’s detection and segmentation performance across varying confidence thresholds, helping to assess its overall effectiveness and reliability in identifying and segmenting stomata.

The F1-score combines precision and recall into a single value, giving an overall indication of the YOLOv8 model’s performance in stomatal segmentation. As the harmonic mean of these two metrics, the F1-score emphasizes balance: a high F1-score indicates that the model produces both few false positives (high precision) and few false negatives (high recall). Precision-recall curves further illustrate this relationship by showing how precision and recall change as the confidence threshold for predictions is varied. These curves depict the trade-off between the two: as the threshold for classifying an output as positive increases, precision may improve (fewer false positives), but recall may decrease (more false negatives), and vice versa. Analyzing these curves reveals how adjustments in the confidence threshold affect the model’s ability to predict bounding boxes and masks for stomatal regions, and therefore how effective and reliable the YOLOv8 model is under different operating conditions. The result of segmentation compared to the ground truth data from the validation set is shown in Fig. 7.
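The precision-recall trade-off across confidence thresholds can be illustrated with a toy example. In the sketch below, each detection is a pair of a confidence value and a flag saying whether it matches a ground-truth instance; the detections, the function name, and the convention that precision equals 1.0 when nothing is predicted are all assumptions made for illustration.

```python
def precision_recall_at(detections, n_truth, threshold):
    """Precision and recall after discarding detections below a threshold.

    detections: list of (confidence, is_true_positive) pairs.
    n_truth: number of ground-truth instances.
    """
    kept = [hit for confidence, hit in detections if confidence >= threshold]
    tp = sum(kept)
    p = tp / len(kept) if kept else 1.0  # convention when nothing is predicted
    r = tp / n_truth if n_truth else 0.0
    return p, r


# Made-up detections for four ground-truth stomata.
detections = [(0.95, True), (0.90, True), (0.80, False), (0.60, True), (0.30, False)]
for threshold in (0.5, 0.85):
    print(threshold, precision_recall_at(detections, 4, threshold))
```

Raising the threshold from 0.5 to 0.85 removes the false positive at 0.80 but also discards the true positive at 0.60, so precision rises while recall falls.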

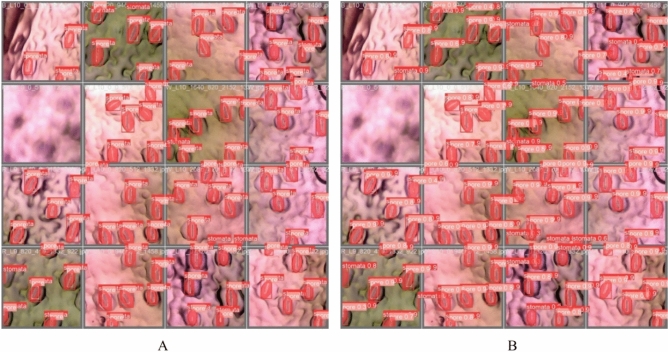

Fig. 7.

Comparison of predicted stomatal segmentation with ground truth annotations. This figure illustrates the qualitative performance of the proposed segmentation model. Panel (A) shows the manually annotated ground truth masks of stomatal pores, while Panel (B) displays the corresponding model-generated predictions. The visual comparison highlights the model’s ability to accurately capture the shape, size, and location of individual stomata, demonstrating high overlap with the annotated references.

The model accurately predicts all stomata and pore instances with high confidence values (Fig. 7B), in close alignment with the ground truth data (Fig. 7A). Parameters obtained from one sample stoma and its pore are presented in Table 1. Using the segmentation results predicted by YOLOv8 and the ground truth, we calculate key parameters such as width, length, area, location, and orientation for both the stomata and the pore. The absolute error between the predicted and ground truth values is then computed. Additionally, the stomatal opening ratio is determined from these measurements.

Table 1.

Sample comparative analysis of stomatal parameters from YOLOv8 predictions and ground truth.

| Structure | Parameter | Ground truth | Predicted using YOLOv8 | Absolute error |
|---|---|---|---|---|
| Input image | | | | |
| Pore | Width (pixel) | 18 | 19 | 1 |
| Pore | Length (pixel) | 44 | 46 | 2 |
| Pore | Area (pixel²) | 644 | 690 | 46 |
| Pore | Center (pixel) | (283, 458) | (282, 457) | (1, 1) |
| Pore | Orientation (degree) | 120 | 122 | 2 |
| Stomata | Width (pixel) | 59 | 61 | 2 |
| Stomata | Length (pixel) | 81 | 83 | 2 |
| Stomata | Area (pixel²) | 3771 | 3843 | 72 |
| Stomata | Center (pixel) | (281, 456) | (281, 456) | (0, 0) |
| Stomata | Orientation (degree) | 122 | 121 | 1 |
| Opening ratio | | 0.170 | 0.179 | 0.009 |
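The absolute errors in Table 1 can be reproduced with a short sketch. The pore values below are copied from the table; the helper name `abs_error` and the dictionary layout are illustrative assumptions rather than the authors' code.

```python
def abs_error(ground_truth, predicted):
    """Absolute error for a scalar, or per coordinate for an (x, y) tuple."""
    if isinstance(ground_truth, tuple):
        return tuple(abs(p - g) for g, p in zip(ground_truth, predicted))
    return abs(predicted - ground_truth)


# Pore parameters from Table 1 as (ground truth, YOLOv8 prediction) pairs.
pore = {
    "width": (18, 19),
    "length": (44, 46),
    "area": (644, 690),
    "center": ((283, 458), (282, 457)),
    # Plain difference; assumes the two angles do not straddle the 0/180 boundary.
    "orientation": (120, 122),
}

errors = {name: abs_error(gt, pred) for name, (gt, pred) in pore.items()}

if __name__ == "__main__":
    for name, err in errors.items():
        print(f"{name}: {err}")
```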

Discussion

Stomata play a crucial role in regulating the exchange of gases and water vapor between plants and their surroundings. Each stomatal opening is bordered by a pair of specialized guard cells that control the pore’s opening and closing 4,5. These guard cells can change shape in response to environmental factors such as light, carbon dioxide levels, and humidity, enabling the plant to enhance its water use efficiency and maintain internal balance 3.

Studying stomata and guard cells is vital in plant biology and agriculture due to their essential roles in processes such as photosynthesis, transpiration, and plant responses to environmental stress 40. A deeper understanding of stomatal dynamics—including the size, orientation, and functionality of both pores and guard cells—offers valuable insights into plant physiology and adaptive mechanisms 41. This knowledge can be harnessed to improve crop performance, especially in the context of climate change, and enhance our understanding of plant evolution and taxonomy, particularly in the analysis of fossilized specimens. Despite the importance of guard cells in regulating stomatal function, to the best of our knowledge, no published research has specifically focused on estimating parameters related to the guard cells themselves. Most studies in this area have primarily concentrated on analyzing stomatal pores 3,4,14,22,27,42,43. These studies typically focus on detecting and quantifying stomatal pores rather than providing detailed information about guard cells. Therefore, our research aims to address this gap by offering a comprehensive analysis of both stomatal guard cells and their associated pores, which could lead to improved crop management strategies and a better understanding of plant-environment interactions.

The opening ratio, defined as the ratio of the stomatal pore area to the area enclosed by the guard cells, offers a valuable proxy for assessing stomatal aperture in image-based analyses. This metric captures the relative degree of pore opening, which reflects the physiological state of the stomata in response to environmental conditions such as light, humidity, and CO₂ levels 44. Unlike absolute pore width or length measurements, the opening ratio normalizes pore size to the surrounding guard cell structure, reducing variability caused by differences in stomatal size across species or developmental stages. As such, it enables more consistent comparisons of stomatal behavior across samples and time points. In high-throughput phenotyping or microscopic imaging studies, this ratio can be automatically extracted from segmented images of stomata, allowing for large-scale monitoring of stomatal dynamics with minimal manual intervention. Incorporating the opening ratio into quantitative phenotyping pipelines thus enhances the ability to infer plant physiological responses and supports more detailed functional analysis in both basic research and crop improvement efforts.
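Written out, the definition takes the form below. The symbols are introduced here only for illustration and do not appear in the original text: $A_{\text{pore}}$ is the pore area, $A_{\text{stomata}}$ is the area enclosed by the guard cells, and $R_{\text{open}}$ is the opening ratio. The worked values use the ground-truth and predicted areas from Table 1, assuming that the "Stomata" area in that table is the guard-cell-enclosed area.

$$
R_{\text{open}} = \frac{A_{\text{pore}}}{A_{\text{stomata}}}, \qquad
R_{\text{open}}^{\text{GT}} = \frac{644}{3771} \approx 0.1708, \qquad
R_{\text{open}}^{\text{pred}} = \frac{690}{3843} \approx 0.1795
$$

These values agree with the 0.170 and 0.179 listed in Table 1 up to the last reported digit, and their difference of about 0.009 matches the reported absolute error.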

In this study, we go beyond extracting information solely from the stomatal pore by deriving comprehensive parameters for the entire stomatal structure, including the guard cells. Previous research has introduced methods for estimating parameters such as the area, width, and length of stomatal pores, often utilizing ellipse-fitting techniques 4,42,43. Building upon this foundation, our approach incorporates ground truth labels for both the stomatal pore and guard cells, enabling precise estimation of the area, width, and length not only for the pore but also for the entire stomatal complex. By simultaneously predicting both the pore and the guard cells, we enhance the accuracy of parameter estimation, with each label contributing to a more comprehensive and reliable analysis.
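One way to derive length, width, center, and orientation from a binary segmentation mask is to compute the ellipse that has the same second-order image moments as the mask. The sketch below uses that moment-based technique in pure Python; it is an illustrative substitute and not necessarily the ellipse-fitting routine used in this study. The function names, the mask format (a list of rows of 0/1 values), and the angle convention are assumptions.

```python
import math


def ellipse_from_mask(mask):
    """Equivalent-ellipse parameters of a binary mask (list of rows of 0/1)."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    if n == 0:
        return None  # nothing segmented
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments, normalized by the pixel count.
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    # Eigenvalues of the 2x2 covariance matrix.
    spread = math.sqrt((mu20 - mu02) ** 2 + 4 * mu11 ** 2)
    lam_major = (mu20 + mu02 + spread) / 2
    lam_minor = max((mu20 + mu02 - spread) / 2, 0.0)
    # Major-axis angle from the x-axis (columns) toward the y-axis (rows).
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return {
        "area": n,                              # pixel count
        "center": (cx, cy),
        "length": 4 * math.sqrt(lam_major),     # full major axis of a filled ellipse
        "width": 4 * math.sqrt(lam_minor),      # full minor axis
        "orientation": math.degrees(theta) % 180.0,
    }


def synthetic_ellipse(size, center, semi_major, semi_minor, angle_deg):
    """Hypothetical test mask: a filled, rotated ellipse on a size x size grid."""
    t = math.radians(angle_deg)
    cx, cy = center
    mask = []
    for y in range(size):
        row = []
        for x in range(size):
            dx, dy = x - cx, y - cy
            u = dx * math.cos(t) + dy * math.sin(t)    # coordinate along major axis
            v = -dx * math.sin(t) + dy * math.cos(t)   # coordinate along minor axis
            inside = (u / semi_major) ** 2 + (v / semi_minor) ** 2 <= 1
            row.append(1 if inside else 0)
        mask.append(row)
    return mask


if __name__ == "__main__":
    demo = ellipse_from_mask(synthetic_ellipse(100, (50, 50), 20, 8, 30))
    print(demo)
```

Applying the same function to the pore mask and to the guard-cell mask yields one parameter set per label, which is the kind of paired output summarized in Table 1.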

Determining whether stomata are open or closed is essential for studying their response to environmental factors. Previous methods have approached this challenge differently. For example, Takagi et al. 43 used a separate model to estimate stomatal openness, a complex process requiring substantial training data. Li et al. 26 used the ratio of stomatal pore width to length to determine openness, but this method is unreliable if the pore is not accurately detected, particularly when the pore is closed.

In our study, we introduce an alternative approach that utilizes additional stomatal parameters, such as the ratio between the area or width of the stomatal pore and the guard cells. This method offers a more robust and comprehensive way to assess stomatal openness, especially in situations where pore detection is challenging. For example, when stomata are closed or the image quality is poor, traditional models may struggle to detect the pore, making stomatal identification difficult. However, our model can still identify the guard cells in such cases and estimate the pore area as zero when undetectable. This approach improves the accuracy and reliability of stomatal openness assessments, addressing the limitations of previous methods and ensuring valuable phenotypic information is not lost due to detection issues.
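The fallback described above, in which an undetected pore contributes an area of zero, can be sketched as follows. The function name and the use of `None` to signal a missing pore detection are illustrative assumptions.

```python
def opening_ratio(pore_area, stomata_area):
    """Pore area divided by the guard-cell-enclosed (stomata) area.

    pore_area may be None when no pore was detected; it is then treated
    as zero so that a closed stoma still yields a ratio of 0.0.
    """
    if stomata_area is None or stomata_area <= 0:
        raise ValueError("a detected stoma with positive area is required")
    if pore_area is None:
        pore_area = 0
    return pore_area / stomata_area


if __name__ == "__main__":
    print(opening_ratio(644, 3771))   # open stoma, ground-truth areas from Table 1
    print(opening_ratio(None, 3771))  # pore not detected, treated as closed
```

Because the ratio depends only on the guard-cell detection being present, a stoma with no detected pore is still recorded rather than being dropped from the analysis.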

In this study, we present a novel trait known as "stomatal orientation," which is determined through ellipse fitting on segmented stomata. Like other phenotyping parameters, stomatal orientation can be valuable for plant classification, especially in the analysis of fossilized leaf samples with limited data. As shown in Fig. 1, certain plants, including rice and wheat, display stomata aligned in a consistent direction, whereas others exhibit random orientations or potentially undiscovered patterns. This variability in stomatal orientation highlights its significance as a distinguishing feature in plant taxonomy and evolutionary research. While Li et al. 26 demonstrated the feasibility of obtaining ellipse orientation, they did not confirm its correlation with the actual orientation of the stomata. Additionally, Sai et al. 42 implemented key point detection of pore endpoints in their methodology, enabling the derivation of stomatal orientation.

A key limitation of this study is that the dataset is derived from a single plant species, which may restrict the model’s generalizability to other species with diverse stomatal morphologies. Stomatal shape, size, and orientation vary considerably across plant taxa, and a model trained on one species may not perform optimally on others without further adaptation. To improve the robustness and applicability of our approach, future work will focus on expanding the dataset to include a broader range of species with varying stomatal characteristics.

In addition, our current work does not include formal statistical evaluations (such as hypothesis testing, confidence intervals, or p-values) for stomatal orientation predictions. This is primarily due to the absence of explicit ground-truth angle measurements in the dataset. While orientation can be inferred from manually annotated segmentation masks, we did not conduct separate validation to quantify the uncertainty of these estimates. As our orientation predictions are derived from the shape of accurately segmented stomatal pores, we assume high reliability in such cases; however, future research should incorporate ground-truth orientation labels and apply formal statistical methods to evaluate the predictive accuracy and confidence of the estimated angles.

Finally, our study does not provide a direct quantitative comparison with existing deep learning-based stomatal analysis methods. This is largely due to the lack of comparable public datasets with angle annotations and consistent evaluation protocols. To address this limitation, future work should aim to benchmark the proposed method against existing architectures using standardized datasets and quantitative metrics to more rigorously validate its performance and advantages.
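If ground-truth orientation labels become available, any error measure for the angles would need to account for the fact that an ellipse axis has no direction: orientations of 1° and 179° differ by 2°, not 178°. The sketch below shows one possible error measure under that assumption; the function names are hypothetical and this evaluation was not performed in the present study.

```python
def axial_abs_error(pred_deg, true_deg):
    """Smallest absolute difference between two axis orientations (period 180 degrees)."""
    d = abs(pred_deg - true_deg) % 180.0
    return min(d, 180.0 - d)


def mean_axial_error(pred_angles, true_angles):
    """Mean axial error over paired predictions and labels."""
    if len(pred_angles) != len(true_angles) or not pred_angles:
        raise ValueError("need two non-empty sequences of equal length")
    errs = [axial_abs_error(p, t) for p, t in zip(pred_angles, true_angles)]
    return sum(errs) / len(errs)


if __name__ == "__main__":
    # Pore and stomata orientations from Table 1 (prediction, ground truth).
    print(axial_abs_error(122, 120))
    print(axial_abs_error(121, 122))
    # Wrap-around case that a plain difference would overstate.
    print(axial_abs_error(179, 1))
```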

Our findings should be considered preliminary and primarily centered on the development and validation of a computational methodology for stomatal feature extraction. While the extracted features, such as pore orientation, area, and opening ratio, hold potential biological significance, we emphasize that their interpretation in a physiological or ecological context requires further experimental validation. Specifically, controlled studies under varying environmental or hormonal conditions are necessary to establish meaningful correlations between these image-derived features and actual stomatal function or plant responses. Therefore, our current work lays the groundwork for high-throughput image-based analysis, but future research must integrate experimental data to fully elucidate the biological relevance of the computational outputs.

Conclusions

This study advances plant phenotyping by introducing stomatal angle analysis for automated characterization, leveraging the YOLOv8 deep learning model. Our method accurately segments stomatal images, capturing detailed morphological features, including area, width, length, and orientation of stomata and their pores. We developed a robust model using a well-annotated dataset. A key finding is the introduction of the opening ratio, a novel metric derived from the areas of the stomatal pore and guard cells, providing different insights into stomatal openness. This study has broad implications for plant classification, ecological studies, and agriculture, particularly in developing resilient crops and improving environmental monitoring.

Author contributions

Conceptualization, Y.S.C. and J.K.; methodology and software, T.T.T.; data curation, A.T.L.; writing—original draft preparation, T.T.T., A.T.L., Y.S.C., T.H.V., V.G.V., S.M., E.M.B.M., J.K.; writing—review and editing, T.T.T., S.M., Y.S.C., F.S.B. and J.K.; funding acquisition, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Brain Pool program funded by the Ministry of Science and ICT through the National Research Foundation of Korea (RS-2024-00403759). This work was also supported by the National Research Foundation of Korea (NRF) grant (RS-2024-00453107) funded by the Korean government (MSIT).

Data availability

Additional data are available from the corresponding author on reasonable request.

Declarations

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Yong Suk Chung, Email: yschung@jejunu.ac.kr.

Jisoo Kim, Email: clionelove@jejunu.ac.kr.

References

- 1. Berry, J. A., Beerling, D. J. & Franks, P. J. Stomata: Key players in the earth system, past and present. Curr. Opin. Plant Biol. 13(3), 232–239. 10.1016/j.pbi.2010.04.013 (2010).

- 2. Hetherington, A. M. & Woodward, F. I. The role of stomata in sensing and driving environmental change. Nature 424(6951), 901–908. 10.1038/nature01843 (2003).

- 3. Bertolino, L. T., Caine, R. S. & Gray, J. E. Impact of stomatal density and morphology on water-use efficiency in a changing world. Front. Plant Sci. 10(March), 225. 10.3389/fpls.2019.00225 (2019).

- 4. Jayakody, H., Petrie, P., Boer, H. J. D. & Whitty, M. A generalised approach for high-throughput instance segmentation of stomata in microscope images. Plant Methods 17(1), 27. 10.1186/s13007-021-00727-4 (2021).

- 5. Yan, W., Zhong, Y. & Shangguan, Z. Contrasting responses of leaf stomatal characteristics to climate change: A considerable challenge to predict carbon and water cycles. Glob. Change Biol. 23(9), 3781–3793. 10.1111/gcb.13654 (2017).

- 6. Cai, S., Papanatsiou, M., Blatt, M. R. & Chen, Z.-H. Speedy grass stomata: Emerging molecular and evolutionary features. Mol. Plant 10, 912–914 (2017).

- 7. Gray, A., Liu, L. & Facette, M. Flanking support: How subsidiary cells contribute to stomatal form and function. Front. Plant Sci. 11, 881 (2020).

- 8. McLachlan, D. H., Kopischke, M. & Robatzek, S. Gate control: Guard cell regulation by microbial stress. New Phytol. 203, 1049–1063 (2014).

- 9. Shimazaki, K. I., Doi, M., Assmann, S. M. & Kinoshita, T. Light regulation of stomatal movement. Annu. Rev. Plant Biol. 58, 219–247 (2007).

- 10. Chater, C. et al. Elevated CO2-induced responses in stomata require ABA and ABA signaling. Curr. Biol. 25, 2709–2716 (2015).

- 11. Eisele, J. F., Fäßler, F., Bürgel, P. F. & Chaban, C. A rapid and simple method for microscopy-based stomata analyses. PLoS ONE 11, e0164576 (2016).

- 12. Xu, B. et al. GABA signalling modulates stomatal opening to enhance plant water use efficiency and drought resilience. Nat. Commun. 12, 1952 (2021).

- 13. Bourdais, G. et al. The use of quantitative imaging to investigate regulators of membrane trafficking in Arabidopsis stomatal closure. Traffic 20, 168–180 (2019).

- 14. Duarte, K. T., De Carvalho, M. A. & Martins, P. S. Segmenting high-quality digital images of stomata using the wavelet spot detection and the watershed transform. In Proceedings of the 12th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, 540–47. Porto, Portugal: SCITEPRESS Science and Technology Publications (2017). 10.5220/0006168105400547.

- 15. Laga, H., Shahinnia, F. & Fleury, D. Image-based plant stomata phenotyping. In: 13th International Conference on Control Automation Robotics & Vision (ICARCV). IEEE, 217–222 (2014).

- 16. Omasa, K. & Onoe, M. Measurement of stomatal aperture by digital image processing. Plant Cell Physiol. 25, 1379–1388 (1984).

- 17. Sakoda, K. et al. Genetic diversity in stomatal density among soybeans elucidated using high-throughput technique based on an algorithm for object detection. Sci. Rep. 9, 7610 (2019).

- 18. Vialet-Chabrand, S. & Brendel, O. Automatic measurement of stomatal density from microphotographs. Trees 28, 1859–1865 (2014).

- 19. Bhugra, S., Mishra, D., Anupama, A., Chaudhury, S., Lall, B., Chugh, A. & Chinnusamy, V. Deep convolutional neural networks-based framework for estimation of stomata density and structure from microscopic images. In: Leal-Taixé L (2019).

- 20. Bhugra, S. et al. Automatic quantification of stomata for high-throughput plant phenotyping. In 24th International Conference on Pattern Recognition (ICPR) 3904–3910 (IEEE Computer Society, 2018).

- 21. Gibbs, J., Mcausland, L., Robles-Zazueta, C. A., Murchie, E. & Burgess, A. A deep learning method for fully automatic stomatal morphometry and maximal conductance estimation. Front. Plant Sci. 12, 2703 (2021).

- 22. Jayakody, H., Liu, S., Whitty, M. & Petrie, P. Microscope image based fully automated stomata detection and pore measurement method for grapevines. Plant Methods 13(1), 94. 10.1186/s13007-017-0244-9 (2017).

- 23. Saponaro, P. et al. DeepXScope: Segmenting microscopy images with a deep neural network. In The IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) 843–850 (IEEE Computer Society, 2017).

- 24. Toda, Y., Toh, S., Bourdais, G., Robatzek, S., Maclean, D. & Kinoshita, T. DeepStomata: Facial recognition technology for automated stomatal aperture measurement (2018). bioRxiv. 10.1101/365098.

- 25. Thai, T. T. et al. Comparative analysis of stomatal pore instance segmentation: Mask R-CNN vs YOLOv8 on phenomics stomatal dataset. Front. Plant Sci. 10.3389/fpls.2024.1414849 (2024).

- 26. Li, K. et al. Automatic segmentation and measurement methods of living stomata of plants based on the CV model. Plant Methods 15(1), 67. 10.1186/s13007-019-0453-5 (2019).

- 27. Bheemanahalli, R. et al. Classical Phenotyping And Deep Learning Concur On Genetic Control Of Stomatal Density And Area in Sorghum. Plant Physiol. 186(3), 1562–1579. 10.1093/plphys/kiab174 (2021).

- 28. Arya, S., Sandhu, K. S., Singh, J. & Kumar, S. Deep learning: As the new frontier in high-throughput plant phenotyping. Euphytica 218(4), 47. 10.1007/s10681-022-02992-3 (2022).

- 29. Zand, M., Etemad, A. & Greenspan, M. Oriented bounding boxes for small and freely rotated objects. IEEE Trans. Geosci. Remote Sens. 60, 1–15. 10.1109/tgrs.2021.3076050 (2022).

- 30. Zhang, F., Wang, B., Lu, F. & Zhang, X. Rotating stomata measurement based on anchor-free object detection and stomata conductance calculation. Plant Phenom. 5, 0106. 10.34133/plantphenomics.0106 (2023).

- 31. Yang, X. et al. RotatedStomataNet: A deep rotated object detection network for directional stomata phenotype analysis. Plant Cell Rep. 10.1007/s00299-024-03149-3 (2024).

- 32. Wang, J., Renninger, H. J., Ma, Q. & Jin, S. Measuring stomatal and guard cell metrics for plant physiology and growth using StoManager1. Plant Physiol. 195(1), 378–394. 10.1093/plphys/kiae049 (2024).

- 33. Fish, D. A., Walker, J. G., Brinicombe, A. M. & Pike, E. R. Blind deconvolution by means of the Richardson-Lucy algorithm. J. Opt. Soc. Am. A 12(1), 58. 10.1364/JOSAA.12.000058 (1995).

- 34. Labelme. https://github.com/wkentaro/labelme (2023).

- 35. Akyon, F. C., Altinuc, S. O. & Temizel, A. Slicing aided hyper inference and fine-tuning for small object detection. In: 2022 IEEE International Conference on Image Processing (ICIP), 966–70. Bordeaux, France: IEEE (2022). 10.1109/ICIP46576.2022.9897990.

- 36. Jocher, G. Ultralytics/COCO2YOLO: Improvements. Zenodo 10.5281/ZENODO.2738323 (2019).

- 37. Glenn, J. YOLOv8. Ultralytics. https://github.com/ultralytics (2023).

- 38. OpenCV. https://opencv.org/ (n.d.).

- 39. He, K., Gkioxari, G., Dollar, P. & Girshick, R. Mask R-CNN. In 2017 IEEE International Conference on Computer Vision (ICCV), 2980–88. Venice: IEEE (2017). 10.1109/ICCV.2017.322.

- 40. Negi, J., Hashimoto-Sugimoto, M., Kusumi, K. & Iba, K. New approaches to the biology of stomatal guard cells. Plant Cell Physiol. 55(2), 241–250 (2014).

- 41. Blatt, M. R., Jezek, M., Lew, V. L. & Hills, A. What can mechanistic models tell us about guard cells, photosynthesis, and water use efficiency? Trends Plant Sci. 27(2), 166–179 (2022).

- 42. Sai, N. et al. STOMAAI: An efficient and user-friendly tool for measurement of stomatal pores and density using deep computer vision. New Phytol. 238(2), 904–915. 10.1111/nph.18765 (2023).

- 43. Takagi, M. et al. Image-based quantification of Arabidopsis thaliana stomatal aperture from leaf images. Plant Cell Physiol. 64(11), 1301–1310. 10.1093/pcp/pcad018 (2023).

- 44. Driesen, E., Van Den Ende, W., De Proft, M. & Saeys, W. Influence of environmental factors light, CO2, temperature, and relative humidity on stomatal opening and development: A review. Agronomy 10(12), 1975. 10.3390/agronomy10121975 (2020).
