Abstract

Background

This study introduces a novel deep learning methodology for the automated detection of a wide range of dental prostheses, including crowns, bridges, and implants, as well as various dental treatments such as fillings, root canal therapies, and endodontic posts.

Methods

The models were trained and validated on a diverse, multi-regional dataset of 2,235 panoramic radiographs from three dental colleges. This diversity supports the generalizability and robustness of the approach. The workflow comprised dataset preparation, deep learning model selection, training and evaluation, and analysis and interpretation of the results. We evaluated three state-of-the-art deep learning architectures: You Only Look Once version 11 (YOLOv11), Faster Region-based Convolutional Neural Network (Faster R-CNN), and Vision Transformer (ViT). Each model was tested with a standardized protocol in which the dataset was divided into training, validation, and test sets.

Results

The ViT model performed best on classification metrics, with an accuracy of 94.15%, a precision of 94.64%, and an F1-score of 93.52%. Among the detection models, Faster R-CNN yielded the best mean average precision (mAP), 82.0% at an Intersection over Union (IoU) threshold of 0.50. This comparative analysis provides insights into the strengths and weaknesses of different deep learning architectures for this task.

Conclusion

This research provides evidence supporting the practicality and effectiveness of AI-driven automated identification of dental restorations from panoramic radiographs. With an accuracy of up to 94.15% on a diverse set of panoramic radiographs, the approach offers a promising and efficient tool for improving diagnostic accuracy, streamlining clinical workflows, and supporting patient record management in dental practice.

Keywords: Artificial intelligence, Deep learning models, Dentistry, Dental prosthesis, Dental restorations, Dental implants, Health care access, Panoramic radiography

Background

The integration of artificial intelligence (AI) into dentistry has substantially improved diagnostic capabilities, predictions, and clinical decision-making, particularly in radiographic image analysis [1, 2]. Currently, most commercial software for dental radiography, intraoral scanning, and dental caries detection is based on AI algorithms [3], and the future impact of AI on healthcare is no longer difficult to envision. Developing such algorithms requires large and diverse datasets, including radiographic images (such as bitewing, periapical, panoramic, lateral cephalometric, and cone-beam computed tomography images), clinical images, and patient data [4]. Recently, Uribe et al. reviewed publicly available dental image datasets for AI research [5]; such datasets are valuable for increasing data diversity and minimizing algorithmic bias.

Conventional dental radiographs, such as panoramic radiographs (OP), play a crucial role in assessing dental diseases, teeth, jaws, temporomandibular joints (TMJ), the relationship of the inferior alveolar nerve to impacted third molars, dental restorations, dental prostheses, and endodontic treatments. However, manual interpretation is challenging because of complex anatomical structures and pathologies; it is time-intensive and prone to inter-observer variability and human error. These challenges, together with limited manpower, highlight the need for automated and standardized computer-aided detection (CAD) systems to improve diagnostic accuracy and efficiency. Previous studies have shown that artificial intelligence is effective in detecting and predicting dental caries, alveolar bone loss [6], endodontic lesions [7], dental trauma [8], dental cysts, missing teeth [9], orthodontic treatment needs [10], dental prostheses [11], dental restorations, and dental implants [12].

This study aimed to develop a deep learning model for the automated identification of various dental restorations, including fillings, root canal materials, endodontic posts, dental crowns, bridges, and implants, in panoramic radiographs. The proposed framework incorporated three deep learning models (two object detectors and one image classifier), trained on datasets from Pakistan, Thailand, and the United States. This diverse, multicentre dataset strengthens the models' ability to adapt to different patient populations and clinical techniques, thereby enhancing generalizability across imaging conditions. However, differences in contrast quality, anatomical visibility, and artifact presence pose specific challenges, underscoring the need for an AI model that remains robust and reliable in real-world clinical scenarios.

Methods

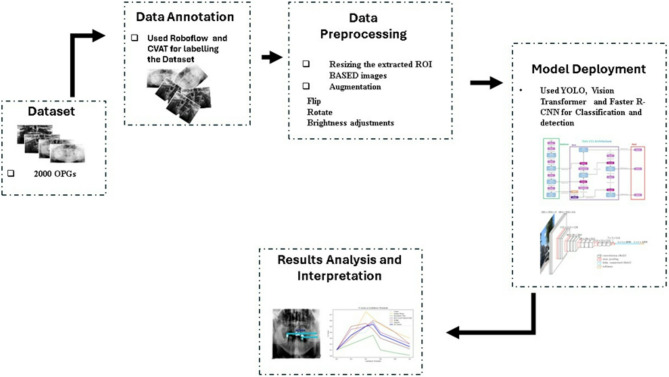

This section describes the complete pipeline for training YOLOv11, Faster region-based convolutional neural network (Faster R-CNN), and Vision Transformer (ViT) models to detect dental fillings, root canal material, endodontic posts, dental prostheses (crowns and bridges), and dental implants in panoramic radiographs. The workflow (Fig. 1) comprises dataset preparation, model selection, training, evaluation, and deployment. This study is reported according to the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) 2024.

Fig. 1.

Workflow diagram of the methodology, showing data annotation, preprocessing, and model deployment

Dataset

The dataset comprises 2,235 panoramic radiographs from Pakistan [13], Thailand, and the USA [14]. Table 1 summarizes the dental panoramic X-ray dataset, including image counts, source distribution, and the training, validation, and test splits. Because prosthetic treatment types and oral health patterns differ among populations, the collection covers a wide range of dental conditions. Table 2 presents the distribution of annotated instances across the six classes, divided into training and validation sets. Dental fillings are the most frequent class (6,206 instances), followed by crowns (1,618) and root canal-treated teeth (1,491); the dataset contains 11,462 instances in total.

The radiographs come from patients with treatment histories ranging from untreated decay to past procedures and complex prosthetic work, and are labelled for six dental treatments: crowns, bridges, dental fillings, root canal-treated teeth, endodontic posts, and dental implants. The provided code uses this dataset to train several models for detecting these treatments, experimenting with different methods and settings; further details are given in the READ ME.txt file [15]. Using radiographs from several international regions allows the model to adapt to different patient populations and clinical situations. The dataset spans a wide range of imaging techniques, exposure conditions, and radiographic qualities, which is intended to improve the reliability of the detection model. Because the dataset reflects actual clinical conditions, this variability also introduces specific challenges related to contrast quality, anatomical visibility, and artifacts.
The study excludes implant-supported bridges, long-span dental bridges, orthodontic mini-implants, cases of dental trauma, deciduous and mixed dentition, and panoramic radiographs with excessive noise or artifacts. Dental prostheses, dental restorations, endodontic posts, root canal-treated teeth, and dental implants were included. This study was approved by the Human Research Ethics Committee of the Faculty of Dentistry, Chulalongkorn University (HREC-DCU 2024-093).

Table 1.

Summary of the dental panoramic X-ray dataset, including image count, source distribution, and dataset splits for training, validation, and testing

| Category | Details |
|---|---|
| Total Images | 2,235 dental X-rays (orthopantomograms) |
| Source Distribution | Tufts University (USA): 300 images |
| | Thailand: 800 images |
| | Pakistan: 1,135 images |
| Train Set | 1,835 images (~82%) |
| Validation Set | 200 images (~9%) |
| Test Set | 200 images (~9%) |

Table 2.

Instance-level class distribution of the dataset, stratified into training and validation sets

| Class | Training Instances | Validation Instances | Total Instances |
|---|---|---|---|
| Crown | 1,541 | 77 | 1,618 |
| Dental Fillings | 5,881 | 325 | 6,206 |
| Endodontic Post | 632 | 38 | 670 |
| Root Canal Treated Tooth | 1,400 | 91 | 1,491 |
| Bridge | 604 | 22 | 626 |
| Implant | 838 | 13 | 851 |
| Total | 10,896 | 566 | 11,462 |

Data labelling

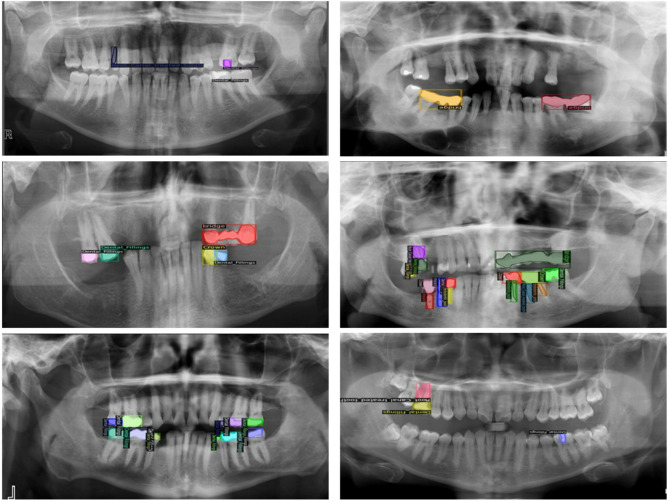

Accurate annotation of the dataset was essential for training YOLOv11, ViT, and Faster R-CNN to interpret and classify dental radiographic images. Two platforms, Roboflow and CVAT, were used for annotation. Target objects were enclosed with bounding boxes or polygons, and the datasets were prepared in the formats required by YOLOv11, Faster R-CNN, and ViT. Figure 2 shows the six annotated classes: crown, dental filling, endodontic post, root canal-treated tooth, bridge, and implant. Detailed labelling was required because dental structures vary in complexity and radiographic image quality varies between images.

Fig. 2.

Data annotation of different classes by using Roboflow

For YOLOv11 and ViT, annotations were stored in .txt files containing the object class ID and the normalized bounding box values (x_center, y_center, width, and height). This compact format supports efficient processing during real-time operation. For Faster R-CNN, annotations were saved in Pascal VOC (.xml) and COCO (.json) formats, with bounding box coordinates given in absolute pixel values. These formats encode the precise spatial extent of each object, helping the model learn the spatial features that distinguish different dental prostheses and fillings.
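The two annotation conventions differ only in how a box is encoded. A minimal sketch of the conversion follows, assuming one YOLO label line per object in the form `class x_center y_center width height` (normalized to [0, 1]) and known image dimensions; the function names are hypothetical and not taken from the study's code. Pascal VOC stores the absolute corners (xmin, ymin, xmax, ymax), which is what `yolo_to_voc` returns; COCO instead stores (xmin, ymin, width, height) in pixels.

```python
from __future__ import annotations


def yolo_to_voc(line: str, img_w: int, img_h: int) -> tuple[int, float, float, float, float]:
    """Convert a YOLO label line 'class xc yc w h' (normalized to [0, 1])
    into (class, xmin, ymin, xmax, ymax) in absolute pixels (Pascal VOC style)."""
    cls_str, xc_n, yc_n, w_n, h_n = line.split()
    xc, w = float(xc_n) * img_w, float(w_n) * img_w   # horizontal values scale with width
    yc, h = float(yc_n) * img_h, float(h_n) * img_h   # vertical values scale with height
    return int(cls_str), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


def voc_to_yolo(cls_id: int, xmin: float, ymin: float, xmax: float, ymax: float,
                img_w: int, img_h: int) -> str:
    """Inverse conversion: absolute corner coordinates back to a normalized YOLO line."""
    xc = (xmin + xmax) / 2 / img_w
    yc = (ymin + ymax) / 2 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return f"{cls_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# Hypothetical example: class 0 on a 640 x 640 image
print(yolo_to_voc("0 0.5 0.5 0.25 0.5", 640, 640))  # (0, 240.0, 160.0, 400.0, 480.0)
```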

Many OPGs contained several of the treatment types defined above, so the annotation process had to handle multiple labels per image. Structures with overlapping boundaries were labelled individually to keep them distinct. Particular attention was paid to dental implants adjacent to crowns, so that the model could correctly recognize each structure. Annotation problems caused by differences in radiographic exposure and image noise between images from Pakistan, Thailand, and Tufts University [13–15] were resolved by manual review. To ensure high-quality ground truth, annotations were carried out by three dental specialists (a prosthodontist, an endodontist, and a restorative dentistry consultant), each with more than 10 years of clinical experience. Categories were defined according to established restorative dentistry guidelines. Ambiguous cases, such as differentiating resin-based from metallic posts or identifying post–crown combinations following root canal therapy, were resolved through consensus discussion. The annotation protocol required clear delineation of restoration boundaries using bounding boxes or polygons, depending on structural complexity. To confirm reliability, 15% of the dataset was randomly selected for cross-review, yielding high inter-rater agreement (Cohen's κ = 0.89) and indicating a consistent labelling process. After labelling, a data scientist assisted with model selection and automated the conversion of the dataset between annotation formats.
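Cohen's κ corrects the observed agreement p_o for the agreement p_e expected by chance from each rater's label frequencies, κ = (p_o − p_e) / (1 − p_e). The sketch below assumes that each cross-reviewed object has already been paired between two raters and reduced to one class label per rater; how the study paired box-level annotations is not stated. For the same inputs, scikit-learn's `cohen_kappa_score` computes the same unweighted statistic.

```python
from __future__ import annotations

from collections import Counter


def cohen_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items with identical labels
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a.keys() | count_b.keys()) / n**2
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


a = ["crown", "crown", "post", "post"]
b = ["crown", "post", "post", "post"]
print(cohen_kappa(a, b))  # 0.5
```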

Data preprocessing

To ensure optimal training performance for YOLOv11, Faster R-CNN, and ViT, several preparatory steps were applied to standardize the dataset, improve image quality, and improve model generalization. Because the data varied considerably across sources and demographics, preprocessing was needed to handle differences in resolution, contrast, and image quality.

a) image resizing

Because the radiographs originated from multiple sources in Pakistan, Thailand, and Tufts University, image quality varied, with inconsistencies in resolution, pixel density, and aspect ratio. Inconsistent input sizes are problematic for deep learning models because they degrade feature extraction and increase computational cost. All images were therefore resized to the dimensions required by YOLOv11 and Faster R-CNN, without distorting their anatomical integrity.

Input images for YOLOv11 and the Vision Transformer were resized to 640 × 640 pixels, a common standard for real-time object detection. This size balanced processing efficiency against the preservation of image features. A fixed input size is essential for YOLO, because its grid-based detection requires consistent dimensions to avoid mapping inconsistencies.

Faster R-CNN was trained on 1024 × 1024 pixel images because it depends on fine anatomical detail for effective object detection. Because Faster R-CNN operates on region proposals rather than a fixed grid, it benefits from higher-resolution inputs, which improve the localization of small dental elements such as fillings and endodontic posts.

Preserving aspect ratios was a major challenge when resizing the radiographs without distorting the dental prostheses. Direct resizing deformed anatomical structures and impaired the model's learning. This was addressed with letterboxing (zero-padding): each image is scaled so that its longer side matches the target size, and the remaining area along the shorter side is filled with black padding to produce a square input without distortion. Because different imaging machines and exposure protocols produce radiographs with different pixel intensity levels, resizing was combined with contrast normalization to improve radiograph visibility. Together, these steps preserved the structural information needed for reliable detection of dental prostheses across patient groups.
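The letterboxing step can be sketched in NumPy as follows. This is an illustration under assumptions, not the study's implementation: it uses nearest-neighbour sampling to avoid extra dependencies (a library resize such as `cv2.resize` would normally be used), centres the image on the canvas, and returns the scale and padding offsets, because the same transform must also be applied to the annotation boxes. The function names are hypothetical.

```python
from __future__ import annotations

import numpy as np


def letterbox(image: np.ndarray, target: int = 640) -> tuple[np.ndarray, float, tuple[int, int]]:
    """Scale an image so its longer side equals `target`, then zero-pad to target x target.

    Returns the padded image, the scale factor, and the (left, top) padding offsets.
    """
    h, w = image.shape[:2]
    scale = min(target / w, target / h)
    new_w, new_h = round(w * scale), round(h * scale)
    # Nearest-neighbour resize via index sampling (keeps the sketch NumPy-only)
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    resized = image[rows[:, None], cols[None, :]]
    canvas = np.zeros((target, target) + image.shape[2:], dtype=image.dtype)  # black padding
    left, top = (target - new_w) // 2, (target - new_h) // 2                 # centre the image
    canvas[top:top + new_h, left:left + new_w] = resized
    return canvas, scale, (left, top)


def map_box(box: tuple[float, float, float, float], scale: float, pad: tuple[int, int]):
    """Apply the same scale and padding offset to a box given as (x1, y1, x2, y2) pixels."""
    left, top = pad
    x1, y1, x2, y2 = box
    return x1 * scale + left, y1 * scale + top, x2 * scale + left, y2 * scale + top


panoramic = np.full((1000, 2000), 128, dtype=np.uint8)  # hypothetical 2:1 radiograph
padded, s, pad = letterbox(panoramic)
print(padded.shape, s, pad)  # (640, 640) 0.32 (0, 160)
```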

b) data augmentation

The radiographic dataset varied in imaging methodology, dental anatomy, and prosthetic type. Some classes appeared infrequently, notably bridges (626 instances) and endodontic posts (670 instances) (Table 2), which could bias the model. Data augmentation was used to correct these imbalances and improve model generalization, artificially increasing image diversity through transformations that do not alter anatomical structures.

HSV (hue, saturation, value) augmentation was used to simulate imaging variation across X-ray machine types and exposure settings. Adjusting the value channel simulated both over- and underexposure, increasing the model's tolerance to lighting variation. Saturation was randomly increased and decreased for grayscale tones to keep image sharpness stable, and small hue changes introduced slight pixel brightness fluctuations, generating realistic deviations from the original black-and-white images. Together, these modifications helped the model learn generalizable patterns and perform reliably across diverse radiographic imaging scenarios.
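For a grayscale radiograph, or an RGB image whose three channels are equal, saturation is zero and hue is undefined, so in HSV space only the value channel changes pixel intensities; scaling the value channel then reduces to scaling the grey levels. The sketch below illustrates that exposure simulation. The ±40% gain range and the function name are assumptions for illustration.

```python
from __future__ import annotations

import numpy as np


def value_jitter(image: np.ndarray, max_gain: float = 0.4,
                 rng: np.random.Generator | None = None) -> np.ndarray:
    """Randomly scale intensities by a gain in [1 - max_gain, 1 + max_gain].

    For a grayscale uint8 radiograph (or an RGB image with R = G = B) this is
    equivalent to scaling the HSV value channel: saturation is zero, so hue and
    saturation shifts leave the pixels unchanged.
    """
    if rng is None:
        rng = np.random.default_rng()
    gain = rng.uniform(1.0 - max_gain, 1.0 + max_gain)
    out = image.astype(np.float32) * gain           # simulate under/over-exposure
    return np.clip(out, 0, 255).astype(np.uint8)    # keep a valid 8-bit range


xray = np.full((4, 4), 100, dtype=np.uint8)
darker_or_brighter = value_jitter(xray, rng=np.random.default_rng(0))
```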

Scaling augmentation applied random width and height changes of up to ±10% to vary prosthesis dimensions. Rotations of up to 15 degrees accounted for variation in X-ray acquisition angle. Shearing and translation introduced small image shifts that replicated different imaging perspectives. Horizontal flipping was applied only to prostheses with symmetrical features, to improve recognition of mirrored patterns. These augmentations improved model robustness, enabling accurate detection of dental prostheses under varied imaging conditions.

$$I_{\text{flip}}(x, y) = I(w - 1 - x,\; y), \qquad 0 \le x < w,\quad 0 \le y < h$$

where I represents the image, w and h are its width and height, and x and y are the horizontal and vertical pixel indices, respectively.
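The geometric augmentations described above can be expressed as a single affine transform that is applied both to the image and to the annotation boxes. The sketch below follows the stated ranges for rotation (±15°) and scale (±10%, applied here isotropically); the shear and translation ranges, the choice to rotate about the image centre, and all function names are assumptions. The image itself would be warped with the same matrix, for example with `cv2.warpAffine(image, m[:2], (w, h))`; each warped box is replaced by the axis-aligned bounds of its four corners, which is slightly looser than the original box after rotation.

```python
from __future__ import annotations

import numpy as np


def affine_matrix(angle_deg: float, scale: float, shear_deg: float,
                  shift: tuple[float, float], center: tuple[float, float]) -> np.ndarray:
    """3x3 homogeneous matrix: shear, then rotate and scale about `center`, then translate."""
    cx, cy = center
    to_origin = np.array([[1.0, 0.0, -cx], [0.0, 1.0, -cy], [0.0, 0.0, 1.0]])
    a = np.deg2rad(angle_deg)
    rot_scale = np.array([[scale * np.cos(a), -scale * np.sin(a), 0.0],
                          [scale * np.sin(a), scale * np.cos(a), 0.0],
                          [0.0, 0.0, 1.0]])
    shear = np.array([[1.0, np.tan(np.deg2rad(shear_deg)), 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
    back = np.array([[1.0, 0.0, cx + shift[0]], [0.0, 1.0, cy + shift[1]], [0.0, 0.0, 1.0]])
    return back @ rot_scale @ shear @ to_origin


def random_affine(rng: np.random.Generator, size: tuple[int, int] = (640, 640)) -> np.ndarray:
    """Rotation within +/-15 deg and scale within +/-10% as described in the text;
    the shear (+/-2 deg) and shift (+/-5% of image size) ranges are assumptions."""
    w, h = size
    return affine_matrix(angle_deg=rng.uniform(-15, 15), scale=rng.uniform(0.9, 1.1),
                         shear_deg=rng.uniform(-2, 2),
                         shift=(rng.uniform(-0.05, 0.05) * w, rng.uniform(-0.05, 0.05) * h),
                         center=(w / 2, h / 2))


def transform_box(box: tuple[float, float, float, float], m: np.ndarray) -> tuple[float, ...]:
    """Warp the four corners of (x1, y1, x2, y2) and return their axis-aligned bounds."""
    x1, y1, x2, y2 = box
    corners = np.array([[x1, x2, x2, x1], [y1, y1, y2, y2], [1, 1, 1, 1]], dtype=float)
    xs, ys, _ = m @ corners
    return float(xs.min()), float(ys.min()), float(xs.max()), float(ys.max())


m = random_affine(np.random.default_rng(42))
print(transform_box((100, 200, 160, 260), m))
```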

Controlled Gaussian noise was added during training to make the model more resilient to variations in X-ray quality. By training on images with different levels of simulated graininess, the model learned to detect prostheses in both high- and low-quality radiographs. Some classes, such as endodontic posts, were underrepresented in the data, so augmentation generated proportionally more examples for underrepresented classes than for common ones. Rare classes were augmented more intensively while their natural anatomical forms were preserved. Together, contrast modulation, geometric distortion, noise layering, and selective augmentation of minority classes produced a dataset that better represents clinical practice, and the detection system performed effectively across the imaging conditions and patient demographics represented in the dataset.
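Noise injection and class-aware augmentation can be sketched as below. The noise standard deviation range (0 to 15 grey levels) and the rule for how many extra augmented copies each class receives are hypothetical choices for illustration; the source does not specify either. Because a panoramic radiograph usually contains several classes, duplicating an image to boost a rare class also duplicates its common-class instances, so image-level oversampling only approximately rebalances instance counts.

```python
from __future__ import annotations

import math

import numpy as np


def add_gaussian_noise(image: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise with standard deviation `sigma` (in grey levels)."""
    noisy = image.astype(np.float32) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0, 255).astype(np.uint8)


def extra_copies(class_counts: dict[str, int], max_copies: int = 5) -> dict[str, int]:
    """Hypothetical rule: extra augmented copies per class, inversely proportional to frequency."""
    most = max(class_counts.values())
    return {c: min(max_copies, math.ceil(most / n) - 1) for c, n in class_counts.items()}


rng = np.random.default_rng(0)
xray = np.full((8, 8), 120, dtype=np.uint8)
grainy = add_gaussian_noise(xray, sigma=rng.uniform(0, 15), rng=rng)  # sigma range assumed

# Training-instance counts taken from Table 2
print(extra_copies({"Dental Fillings": 5881, "Crown": 1541, "Bridge": 604}))
# {'Dental Fillings': 0, 'Crown': 3, 'Bridge': 5}
```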

Model deployment

After training and evaluation, the optimized models were deployed for real-time inference and clinical integration. YOLOv11, the Vision Transformer, and Faster R-CNN were each deployed using strategies tailored to their respective architectures, to detect dental prostheses, dental fillings, root canal treatments, and endodontic posts in radiographic images accurately and efficiently.

YOLOv11

YOLOv11 is a recent addition to the YOLO family, designed to further improve accuracy, efficiency, and real-time performance. Building on its predecessors, YOLOv11 introduces enhancements in feature extraction, multi-scale processing, and prediction refinement, making it effective for object detection in complex settings such as dental prosthesis detection in radiographic images.

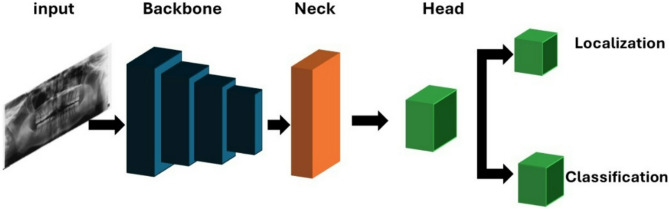

Backbone network

The backbone network extracts spatial and semantic information from input images. YOLOv11 uses an advanced convolutional architecture, as shown in Fig. 3. The key features of the backbone include:

Fig. 3.

YOLO Framework with backbone, neck, and dual-output head for localization and classification tasks

Lightweight Convolutional Layers: Uses depth-wise separable convolutions to reduce computational load while preserving high-quality feature extraction.

Efficient downsampling: Implements strided convolutions and pooling layers to progressively reduce spatial dimensions while retaining significant object information.

Residual Connections: Inspired by ResNet, these connections improve gradient flow, reducing the likelihood of vanishing gradients and enhancing model stability.

Feature pyramid network (FPN)

To facilitate accurate detection across different object sizes, YOLOv11 integrates a Feature Pyramid Network (FPN). This component enhances the model’s ability to detect small and overlapping objects by combining features at multiple resolutions. The key improvements in FPN include:

Multi-Scale Feature Fusion: Merges fine-grained small-scale details with global semantic information, improving detection accuracy.

Bidirectional Feature Flow: Allows two-way information exchange between different layers, refining feature representation across multiple resolutions.

Detection head

The detection head in YOLOv11 has been redesigned to improve both localization and classification accuracy. The primary enhancements include:

Anchor-Free Object Detection: Unlike earlier anchor-based YOLO versions, YOLOv11 does not use anchor boxes, reducing computational load and improving adaptability to various object sizes.

Adaptive Receptive Fields: Dynamically adjusts receptive field size based on object position, improving the detection of overlapping dental prostheses.

Optimized Prediction Heads: Uses multiple output layers to predict class labels, confidence scores, and precise bounding box coordinates in a single forward pass.

Efficiency and computational optimization

To achieve real-time performance without compromising accuracy, YOLOv11 integrates multiple efficiency optimizations:

Model Quantization and Pruning: Reduces model size and computational requirements, enabling deployment on low-power edge devices.

Optimized Activation Layers: Uses SiLU (Sigmoid Linear Unit) activation to accelerate convergence and stabilize gradient updates.

Parallelized Computation: Implements multi-threaded GPU acceleration, reducing inference latency and improving detection speed.

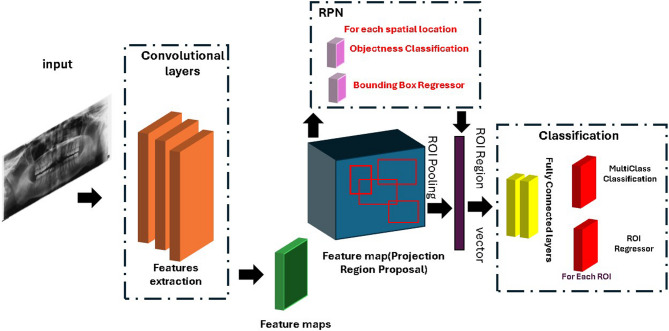

Faster R-CNN

Faster R-CNN is a two-stage object detection model known for high accuracy, which incorporates a Region Proposal Network (RPN) for efficient region selection. Unlike its predecessors, R-CNN and Fast R-CNN, which rely on computationally expensive external algorithms such as Selective Search, Faster R-CNN generates region proposals within the network itself, significantly improving speed and accuracy. The framework (Fig. 4) consists of several key components: the backbone network for feature extraction, the RPN for proposal estimation, RoI pooling for fixed-size feature generation, and the detection head for classification and localization.

Fig. 4.

Faster R-CNN architecture, showing the complete pipeline from the input image through convolutional layers, feature map extraction, and the region proposal network (RPN) to object classification

Backbone network

The backbone network is responsible for extracting meaningful feature representations from the input image. Faster R-CNN typically utilizes deep CNN architectures such as ResNet or VGG to enhance feature learning. The backbone consists of:

Hierarchical Convolutions: Captures both low-level edges and high-level object features.

Feature Maps: Provides representations for downstream processing in the RPN.

Pretrained Weights: Leverages ImageNet-trained models to improve detection accuracy.

Region proposal network (RPN)

The RPN generates object proposals directly from feature maps, eliminating the need for external methods. It consists of:

Sliding Window Convolution: Runs a lightweight network over feature maps.

Anchor Boxes: Uses predefined anchor boxes at multiple scales to detect objects of varying sizes.

Proposal Refinement: Classifies each region as foreground (object) or background, refining bounding boxes for better accuracy.
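Anchor generation can be sketched as follows: every feature-map cell receives one anchor per combination of scale and aspect ratio, centred on the cell. The stride of 16 and the three pixel scales are assumptions chosen for small dental objects at the 1024 × 1024 input size described earlier; the three-scale, three-ratio layout mirrors the common default of nine anchors per location.

```python
import numpy as np


def generate_anchors(feat_h: int, feat_w: int, stride: int,
                     scales=(32, 64, 128), ratios=(0.5, 1.0, 2.0)) -> np.ndarray:
    """Anchors as (x1, y1, x2, y2) image-pixel boxes, len(scales) * len(ratios) per location."""
    base = []
    for s in scales:
        for r in ratios:                      # r = height / width; area stays s * s
            w, h = s / np.sqrt(r), s * np.sqrt(r)
            base.append([-w / 2, -h / 2, w / 2, h / 2])
    base = np.array(base)                     # (A, 4), centred on the origin
    cx = (np.arange(feat_w) + 0.5) * stride   # one anchor centre per feature-map cell
    cy = (np.arange(feat_h) + 0.5) * stride
    xx, yy = np.meshgrid(cx, cy)              # both shaped (feat_h, feat_w)
    shifts = np.stack([xx, yy, xx, yy], axis=-1).reshape(-1, 1, 4)
    return (shifts + base[None, :, :]).reshape(-1, 4)


# A 1024 x 1024 input with a stride-16 backbone gives a 64 x 64 feature map
anchors = generate_anchors(64, 64, stride=16)
print(anchors.shape)  # (36864, 4)
```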

Region of interest (RoI) pooling

RoI pooling extracts a fixed-size feature representation from region proposals. This step ensures that all proposed regions have a uniform shape for further processing. It includes:

Spatial Warping: Normalizes region proposals to a fixed dimension.

Max Pooling: Aggregates the most relevant features within each RoI.
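The two steps above, projecting a proposal onto the feature map and max-pooling it into a fixed grid, can be sketched in NumPy as follows. The 7 × 7 output size, the stride of 16, and the function name are assumptions. This follows the original quantized RoI pooling; many current implementations use RoIAlign instead (for example `torchvision.ops.roi_align`), which samples with bilinear interpolation rather than rounding coordinates.

```python
import numpy as np


def roi_max_pool(feature: np.ndarray, roi: tuple[float, float, float, float],
                 out_size: int = 7, stride: int = 16) -> np.ndarray:
    """Max-pool one RoI (x1, y1, x2, y2 in image pixels) from a (C, H, W) feature map
    into a fixed (C, out_size, out_size) grid."""
    _, h, w = feature.shape
    # Project the RoI onto the feature map and quantize to integer cells
    x1, y1, x2, y2 = (int(round(v / stride)) for v in roi)
    x1, y1 = min(max(x1, 0), w - 1), min(max(y1, 0), h - 1)
    x2, y2 = min(max(x2, x1 + 1), w), min(max(y2, y1 + 1), h)   # at least one cell
    xs = np.linspace(x1, x2, out_size + 1)   # bin edges along x
    ys = np.linspace(y1, y2, out_size + 1)   # bin edges along y
    out = np.empty((feature.shape[0], out_size, out_size), dtype=feature.dtype)
    for i in range(out_size):
        r0, r1 = int(np.floor(ys[i])), int(np.ceil(ys[i + 1]))
        for j in range(out_size):
            c0, c1 = int(np.floor(xs[j])), int(np.ceil(xs[j + 1]))
            # Keep the strongest activation in each bin
            out[:, i, j] = feature[:, r0:max(r1, r0 + 1), c0:max(c1, c0 + 1)].max(axis=(1, 2))
    return out


fmap = np.arange(16, dtype=float).reshape(1, 4, 4)
print(roi_max_pool(fmap, (0, 0, 64, 64), out_size=2)[0])
```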

Detection head

The detection head classifies objects and refines bounding box coordinates for accurate localization. It consists of:

Softmax Classifier: Assigns a class label to each detected object.

Bounding Box Regression: Adjusts bounding box coordinates to improve localization precision.

Non-Maximum Suppression (NMS): Removes redundant or low-confidence predictions, ensuring that only the most reliable detections remain.
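Greedy NMS can be sketched as below: keep the highest-scoring box, discard remaining boxes whose IoU with it exceeds a threshold, and repeat. The threshold of 0.5 is an assumption. In multi-class detection, NMS is usually applied separately per class, which `torchvision.ops.batched_nms` provides.

```python
from __future__ import annotations

import numpy as np


def nms(boxes: np.ndarray, scores: np.ndarray, iou_threshold: float = 0.5) -> list[int]:
    """Greedy non-maximum suppression; boxes are (N, 4) arrays of (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(scores)[::-1]          # highest score first
    keep = []
    while order.size > 0:
        i, rest = order[0], order[1:]
        keep.append(int(i))
        # IoU of the kept box with every remaining candidate
        iw = np.clip(np.minimum(x2[i], x2[rest]) - np.maximum(x1[i], x1[rest]), 0, None)
        ih = np.clip(np.minimum(y2[i], y2[rest]) - np.maximum(y1[i], y1[rest]), 0, None)
        inter = iw * ih
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= iou_threshold]    # drop candidates that overlap too much
    return keep


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # [0, 2]
```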

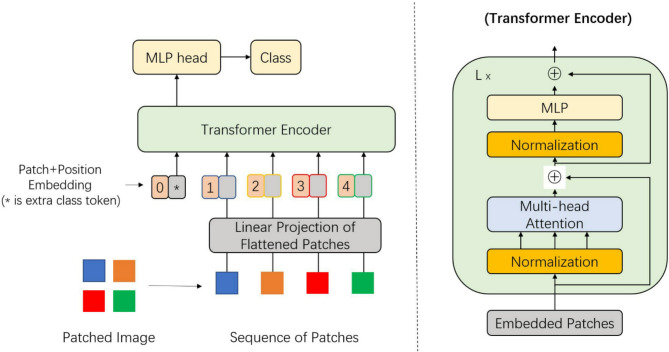

Vision transformer

Vision Transformer (ViT) is a deep learning model that applies the transformer architecture, originally designed for natural language processing (NLP), to computer vision tasks. Unlike convolutional neural networks (CNNs), which rely on local convolutional filters to capture spatial features, ViT uses a self-attention mechanism to model global relationships across an image. This global attention is particularly powerful for complex image classification tasks such as dental diagnosis, where spatially distant features can be important. In this study, a ViT-based model was used to classify dental treatments into six categories: crown, dental filling, bridge, endodontic post, root canal-treated tooth, and dental implant. Fig. 5 illustrates the ViT image classification process: patch division, linear projection into a sequence with position embeddings, a transformer encoder, and an MLP head [16].

Fig. 5.

Vision Transformer (ViT) image classification process: patch division, linear projection into a sequence with position embeddings, a transformer encoder, and an MLP head

Image processing

ViT first divides each image into fixed-size, non-overlapping patches, typically 16 × 16 pixels. Each patch is flattened and passed through a linear layer to produce a high-dimensional embedding. Because transformers have no built-in notion of spatial structure, position embeddings are added to the patch embeddings to preserve spatial relationships.
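The patch-embedding step can be sketched in NumPy. Assuming the 640 × 640 input size used in this study and 16 × 16 patches, each image yields a 40 × 40 grid, i.e. 1,600 tokens. The projection and position embeddings here are random placeholders for learned parameters, and the 768-dimensional width (that of ViT-Base) is an assumption. Standard pretrained ViT checkpoints use 224 × 224 inputs (196 patches), so their position embeddings are typically interpolated when fine-tuning at a different resolution.

```python
from __future__ import annotations

import numpy as np


def patchify(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an (H, W, C) image into non-overlapping patches, each flattened to patch*patch*C."""
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image height and width must be divisible by the patch size")
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)          # (rows, cols, patch, patch, C), row-major
    return grid.reshape(-1, patch * patch * c)


rng = np.random.default_rng(0)
image = rng.random((640, 640, 3), dtype=np.float32)    # stand-in for a resized radiograph
patches = patchify(image)                               # 40 x 40 = 1,600 patches
d_model = 768                                           # assumed embedding width (ViT-Base)
w_proj = rng.normal(0.0, 0.02, (patches.shape[1], d_model)).astype(np.float32)
pos_embed = rng.normal(0.0, 0.02, (patches.shape[0], d_model)).astype(np.float32)
tokens = patches @ w_proj + pos_embed                   # patch embeddings + position embeddings
print(patches.shape, tokens.shape)  # (1600, 768) (1600, 768)
```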

Transformer encoder and classification

These embeddings are then passed through a series of transformer encoder layers, each consisting of multi-head self-attention and a feed-forward network (FFN). A learnable classification token ([CLS]) is prepended to the patch sequence and learns to aggregate information from all patches. After the final encoder block, the output corresponding to the [CLS] token is extracted and passed through a multilayer perceptron (MLP) head, which outputs class scores for the six dental categories.
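A single-head, NumPy-only sketch of this flow is shown below: the [CLS] token is prepended, pre-norm encoder blocks apply self-attention and a GELU feed-forward network with residual connections, and a linear head on the final [CLS] output produces probabilities for six classes. Multi-head attention, learnable layer-norm parameters, and trained weights are omitted, and all dimensions are illustrative assumptions, so the printed probabilities only demonstrate the shapes and data flow.

```python
from __future__ import annotations

import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)              # learnable scale/shift omitted


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max(axis=-1, keepdims=True))      # subtract max for numerical stability
    return e / e.sum(axis=-1, keepdims=True)


def gelu(x: np.ndarray) -> np.ndarray:
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def encoder_block(x: np.ndarray, p: dict[str, np.ndarray]) -> np.ndarray:
    """One pre-norm encoder block with single-head self-attention and an FFN."""
    z = layer_norm(x)
    q, k, v = z @ p["wq"], z @ p["wk"], z @ p["wv"]
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))      # (tokens, tokens), rows sum to 1
    x = x + (attn @ v) @ p["wo"]                         # residual connection
    return x + gelu(layer_norm(x) @ p["w1"]) @ p["w2"]  # feed-forward + residual


def classify(tokens: np.ndarray, cls: np.ndarray, blocks: list[dict], w_head: np.ndarray) -> np.ndarray:
    x = np.vstack([cls, tokens])                         # prepend the [CLS] token
    for p in blocks:
        x = encoder_block(x, p)
    return softmax(layer_norm(x[0]) @ w_head)           # linear head on the [CLS] output


rng = np.random.default_rng(0)
d, n_tokens, n_classes = 32, 16, 6


def init(*shape: int) -> np.ndarray:
    return rng.normal(0.0, 0.1, shape)                  # random, untrained weights


blocks = [{"wq": init(d, d), "wk": init(d, d), "wv": init(d, d), "wo": init(d, d),
           "w1": init(d, 4 * d), "w2": init(4 * d, d)} for _ in range(2)]
probs = classify(init(n_tokens, d), init(1, d), blocks, init(d, n_classes))
print(probs.round(3))
```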

Advantages and suitability

This architecture allows the model to capture both local and global patterns, which makes it well suited to dental image analysis. Although ViT requires sufficient data and computational resources, its ability to model long-range dependencies makes it a powerful alternative to traditional CNNs. Fine-tuning pretrained ViTs on domain-specific dental datasets can substantially improve performance.

Evaluation metrics

YOLOv11, Faster R-CNN, and ViT were used to detect and classify dental restorations and prostheses in panoramic radiographs. These models differ substantially in architecture and evaluation metrics: YOLOv11 and Faster R-CNN are object detection models, whereas ViT, a transformer-based model, is an image classification model. Consequently, different evaluation metrics were used for each. Object detection models are usually evaluated on the quality of their predicted bounding boxes, for example with mean Average Precision (mAP) and Average Precision (AP) at different Intersection over Union (IoU) thresholds. IoU measures the overlap between a predicted bounding box and the ground-truth box as the ratio of their intersection to their union. Common IoU thresholds for judging whether a detection matches the ground truth include 0.50 and 0.75.
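The IoU definition translates directly into code. The sketch below assumes boxes given as (x1, y1, x2, y2) pixel corners; it shows that a prediction shifted by half a box width overlaps the ground truth with IoU = 1/3, which would not count as a correct detection at the 0.50 threshold.

```python
from __future__ import annotations


def iou(box_a: tuple[float, float, float, float], box_b: tuple[float, float, float, float]) -> float:
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))  # overlap width
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))  # overlap height
    inter = iw * ih
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


gt = (0, 0, 10, 10)
pred = (5, 0, 15, 10)                    # shifted half a box to the right
score = iou(gt, pred)
print(round(score, 3), score >= 0.50)   # 0.333 False
```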

Classification models such as ViT, in contrast, are evaluated with accuracy, precision, recall, F1-score, and loss. Because of these differences in evaluation criteria, and because metrics were not reported uniformly for all models, precision was chosen as the common criterion for comparative analysis. Precision is the proportion of positive predictions that are correct, TP / (TP + FP); this is particularly important in medical imaging, where false positives may have clinical implications.
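The classification metrics can be computed as in the sketch below, which reports per-class values and support-weighted averages. Which averaging scheme the study used is not stated. One property is worth noting: with support weighting, recall equals overall accuracy by construction, because Σ_c (n_c / N)(TP_c / n_c) = Σ_c TP_c / N; this is consistent with the identical accuracy and recall (94.15%) reported for ViT in the Results. scikit-learn's `precision_recall_fscore_support(y_true, y_pred, average="weighted")` computes the same averages. The function name below is hypothetical.

```python
from __future__ import annotations

from collections import Counter


def per_class_metrics(y_true: list[str], y_pred: list[str]):
    """Per-class precision/recall/F1, support-weighted averages, and overall accuracy."""
    labels = sorted(set(y_true) | set(y_pred))
    support = Counter(y_true)
    per_class = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0   # TP / (TP + FP)
        recall = tp / (tp + fn) if tp + fn else 0.0      # TP / (TP + FN)
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class[c] = (precision, recall, f1)
    n = len(y_true)
    # Weight each class by its number of true instances (support)
    weighted = tuple(sum(per_class[c][k] * support[c] for c in labels) / n for k in range(3))
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / n
    return per_class, weighted, accuracy


y_true = ["crown", "crown", "post", "implant"]
y_pred = ["crown", "post", "post", "implant"]
_, (w_prec, w_rec, w_f1), acc = per_class_metrics(y_true, y_pred)
print(w_prec, w_rec, acc)  # 0.875 0.75 0.75
```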

Results

A dataset of 2,235 high-resolution dental panoramic radiographs was used to train and evaluate YOLOv11, ViT, and Faster R-CNN for detecting dental treatments. A summary is given in Table 2. All images were annotated using Roboflow to obtain high-quality ground truth. To prepare the dataset for training, images were resized (to 640 × 640 pixels for YOLOv11 and ViT), and extensive data augmentation was applied to improve model generalization and class balance.

The models were trained for different numbers of epochs: YOLOv11 for 400, Faster R-CNN for 500, and ViT for 200. These differences reflect the distinct convergence behaviour, learning rates, and architectural characteristics of each model. In this study, the transformer-based ViT reached its best results in fewer epochs, which we attribute to efficient global feature extraction through self-attention. Region-based detectors such as Faster R-CNN, by contrast, need more epochs to fine-tune both the region proposal and classification stages. Epoch counts were chosen on the basis of early validation performance and loss stabilization, to achieve effective learning without overfitting.
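The source states that epoch counts were chosen from early validation performance and loss stabilization, but does not describe a specific rule. One common way to make "loss stabilization" operational is patience-based early stopping, sketched below; the `patience` and `min_delta` values, the function name, and the loss history are hypothetical.

```python
from __future__ import annotations


def stop_epoch(val_losses: list[float], patience: int = 20, min_delta: float = 1e-4) -> int | None:
    """Return the 1-based epoch at which training would stop because the validation loss
    has not improved by at least `min_delta` for `patience` consecutive epochs."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best - min_delta:
            best, epochs_without_improvement = loss, 0     # new best: reset the counter
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None                                            # loss was still improving


history = [1.00, 0.80, 0.70, 0.70, 0.70, 0.70]  # hypothetical validation losses
print(stop_epoch(history, patience=3))  # 6
```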

YOLOv11

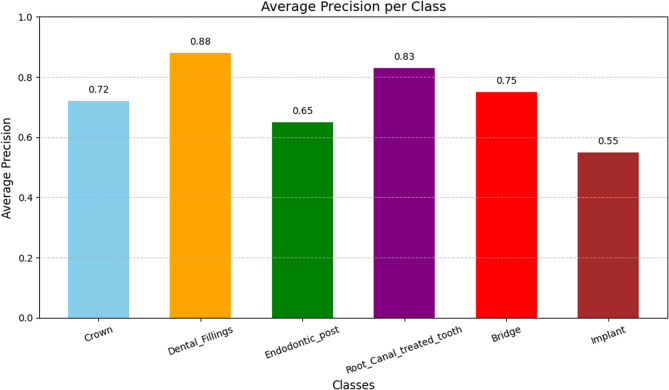

The YOLOv11 model was trained for 400 epochs with an adaptive learning rate, the Adam optimizer, and multiple data augmentation methods to strengthen feature extraction and classification accuracy. YOLOv11 achieved a mean average precision (mAP) of 70.7% at an IoU threshold of 0.50, indicating good detection capability. The model also obtained a precision of 73.6%, showing that most of its predictions were correct, an accuracy of 69%, and a recall of 60.6%, reflecting its ability to identify most true positives. These results (Fig. 6) indicate that YOLOv11 detects dental restorations and prostheses effectively, making it suitable for real-time applications. Fig. 7 presents the class-wise Average Precision (AP) of the YOLOv11 model. Crowns have the highest AP (0.88), followed by bridges and implants; performance is lower for dental fillings, endodontic posts, and root canal-treated teeth.

Fig. 6.

F1 score versus confidence threshold for each dental class. F1 scores peak at confidence thresholds between 0.4 and 0.6; dental fillings achieve the highest scores and endodontic posts the lowest

Fig. 7.

Class-wise Average Precision (AP) of the YOLO model for different dental conditions

Faster R-CNN

The Faster R-CNN model was trained for 500 epochs and performed well across several evaluation metrics (Fig. 8). It reached an average precision (AP) of 0.82 at IoU = 0.50 and an AP of 0.516 averaged over IoU = 0.50:0.95. It also achieved an AP of 0.577 at IoU = 0.75, indicating accurate object localization. For recall, the model achieved an average recall (AR) of 0.642 over IoU = 0.50:0.95 with up to 100 detections per image. By object size, recall was highest for medium-sized objects (66.7%), followed by small (59.6%) and large (50.9%) objects. Fig. 9 shows the precision of the Faster R-CNN model for the six classes. Precision was highest for implants (0.9459), followed by dental fillings (0.9358) and crowns (0.9333). Overall performance was strong, although precision was somewhat lower for bridges (0.8913), endodontic posts (0.8800), and root canal-treated teeth (0.8500).

Fig. 8.

Faster R-CNN detection results showing correctly predicted classes

Fig. 9.

Class-wise Average Precision of the Faster R-CNN model for different dental conditions

Vision transformer (ViT)

The Vision Transformer (ViT) model was trained for 200 epochs to classify six types of dental conditions: dental crown, dental filling, bridge, endodontic post, root canal-treated tooth, and dental implant. In evaluation, the model performed strongly on several metrics, with a loss of 0.0012 and an accuracy of 94.15%. Precision and recall were 94.64% and 94.15%, respectively, with an F1 score of 93.52%. These results indicate that the model distinguishes between the six dental categories with high accuracy and reliability. The close values of precision and recall suggest that the model maintains a good balance between false positives and false negatives and performs consistently across classes. The confusion matrix in Fig. 10 further illustrates the classification ability of the model: most predictions were correct, with few errors. For example, 74 samples were classified correctly in one category, and 41 and 18 were correctly identified in others, reflecting strong class-level recognition. Overall, the model shows strong generalization and accurate prediction on unseen dental image data, highlighting the suitability of the Vision Transformer architecture for automated dental image classification.
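The identical accuracy and recall reported for ViT (both 94.15%) are what one would expect if the per-class metrics were averaged with support weights, because weighted recall then reduces to overall accuracy: sum over classes c of (n_c / N) * (TP_c / n_c) = (sum of TP_c) / N. The paper does not state which averaging it used, so treat this as an assumption. The Python sketch below computes accuracy and support-weighted precision, recall, and F1 from a confusion matrix; the matrix is hypothetical and does not reproduce Fig. 10.

```python
from typing import List, Tuple


def weighted_metrics(cm: List[List[int]]) -> Tuple[float, float, float, float]:
    """Accuracy plus support-weighted precision, recall, and F1.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(map(sum, cm))
    accuracy = sum(cm[i][i] for i in range(k)) / total
    w_precision = w_recall = w_f1 = 0.0
    for c in range(k):
        support = sum(cm[c])                         # true samples of class c
        predicted = sum(cm[r][c] for r in range(k))  # samples predicted as c
        p = cm[c][c] / predicted if predicted else 0.0
        r = cm[c][c] / support if support else 0.0
        f1 = 2 * p * r / (p + r) if p + r else 0.0
        weight = support / total                     # class prevalence
        w_precision += weight * p
        w_recall += weight * r
        w_f1 += weight * f1
    return accuracy, w_precision, w_recall, w_f1


# Hypothetical 3-class confusion matrix (rows: true class, columns: predicted).
cm = [[50, 2, 0],
      [3, 30, 1],
      [0, 2, 12]]
accuracy, precision, recall, f1 = weighted_metrics(cm)
print(round(accuracy, 4), round(recall, 4))  # 0.92 0.92
```

Weighted F1 averages per-class F1 values, so it can fall below both weighted precision and weighted recall, consistent with the reported F1 (93.52%) being lower than either.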

Fig. 10.

Confusion matrix of the ViT model, showing that most classes are predicted accurately

Figure 11 presents the class-wise Average Precision (AP) of the Vision Transformer model. Crowns have the highest AP (0.95), followed by Implants (0.92) and Bridges (0.89), whereas the AP for Dental Fillings, Endodontic Posts, and Root Canal-treated teeth is lower.

Fig. 11.

Average Precision by Class for Vision Transformer

Ablation study

In an ablation study, YOLOv11 was chosen over YOLOv7 and YOLOv8 due to its recent improvements in anchor-free detection, bidirectional feature flow, and real-time efficiency, making it particularly suitable for rapid screening tasks in clinical workflows. Faster R-CNN was included as a representative two-stage detector, known for its high precision in object localization, thus serving as a strong benchmark for detecting complex prosthetic structures. In contrast, the Vision Transformer (ViT) was selected to explore the potential of transformer-based global feature learning for the classification of dental prostheses, recognizing that it lacks explicit localization but offers robust performance in holistic image classification.

To improve transparency and reproducibility, Table 3 summarizes the training configuration of each model, including optimizer, batch size, learning rate schedule, and number of epochs. Hyperparameters were selected based on established benchmarks and adjusted empirically for convergence stability. For example, YOLOv11 and ViT used the AdamW optimizer with linear-decay and constant learning rate schedules, respectively, while Faster R-CNN used SGD with a MultiStep scheduler. Cross-entropy and IoU-based loss functions were applied to the detection models, while ViT training used categorical cross-entropy. These design choices reflect a balance between computational feasibility, convergence behavior, and alignment with previous literature (Table 4).
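The paper names the loss families but not the exact variants used. The Python sketch below shows the simplest member of each family: a plain IoU loss (1 - IoU) for box regression and a numerically stable softmax cross-entropy for six-class classification. Practical detectors often use refined IoU variants such as GIoU or CIoU, so treat these functions, their names, and the example logits as illustrative assumptions rather than the study's implementation.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou_loss(pred: Box, target: Box) -> float:
    """Plain IoU loss, 1 - IoU: 0 for a perfect box, 1 for no overlap."""
    ix1, iy1 = max(pred[0], target[0]), max(pred[1], target[1])
    ix2, iy2 = min(pred[2], target[2]), min(pred[3], target[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_p = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_t = (target[2] - target[0]) * (target[3] - target[1])
    union = area_p + area_t - inter
    return 1.0 - (inter / union if union > 0 else 0.0)


def categorical_cross_entropy(logits: Sequence[float], target: int) -> float:
    """Softmax cross-entropy for one sample, using log-sum-exp for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


# Six dental classes, as in the ViT setup; the logits are hypothetical.
uniform = categorical_cross_entropy([0.0] * 6, target=2)  # equals ln 6
confident = categorical_cross_entropy([0.0, 0.0, 8.0, 0.0, 0.0, 0.0], target=2)
print(round(uniform, 3), confident < 0.01, iou_loss((0, 0, 10, 10), (0, 0, 10, 10)))
```

A model that spreads probability evenly over six classes incurs a loss of ln 6 (about 1.792), whereas a confident correct prediction drives the loss toward zero; the very small final loss reported for ViT corresponds to the latter regime on the training data.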

Table 3.

Comparison of YOLOv11, Faster R-CNN, and Vision Transformer (ViT) in terms of training hyperparameters and performance

| Aspect | YOLOv11 (Detection) | Faster R-CNN (Detection) | Vision Transformer (ViT) (Classification) |
|---|---|---|---|
| Key Hyperparameters | | | |
| Batch Size | 16 | 8 | 24 |
| Optimizer | AdamW | SGD | AdamW |
| Initial Learning Rate | 3e-4 | 1e-2 | 2e-5 |
| Weight Decay | 5e-4 | 1e-4 | 0.01 |
| Scheduler | Linear Decay | MultiStep (milestones) | Constant |
| Training Details | | | |
| Epochs | 400 | 500 | 200 |
| Performance Metrics | | | |
| Accuracy | 69.0% | 75.1% | 94.15% |
| Precision | 73.6% | 82.0% | 94.64% |
| Recall | 60.6% | 64.2% (AR@100) | 94.15% |
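Table 3 specifies each scheduler type but not all of its parameters. The Python sketch below transcribes the reported settings into a configuration dictionary and shows how each schedule sets the learning rate per epoch. The linear-decay end fraction and the MultiStep milestones are hypothetical placeholders, not values from the paper; the decay factor of 0.1 matches the default gamma of PyTorch's MultiStepLR but is likewise an assumption here.

```python
# Training settings transcribed from Table 3. Entries marked "hypothetical"
# are not reported in the paper and are placeholders for illustration.
CONFIG = {
    "YOLOv11": {"batch_size": 16, "optimizer": "AdamW", "lr0": 3e-4,
                "weight_decay": 5e-4, "scheduler": "linear", "epochs": 400,
                "final_lr_fraction": 0.01},                    # hypothetical
    "Faster R-CNN": {"batch_size": 8, "optimizer": "SGD", "lr0": 1e-2,
                     "weight_decay": 1e-4, "scheduler": "multistep", "epochs": 500,
                     "milestones": [350, 450], "gamma": 0.1},  # hypothetical
    "ViT": {"batch_size": 24, "optimizer": "AdamW", "lr0": 2e-5,
            "weight_decay": 0.01, "scheduler": "constant", "epochs": 200},
}


def learning_rate(model: str, epoch: int) -> float:
    """Learning rate at a given (0-indexed) epoch under the model's schedule."""
    cfg = CONFIG[model]
    lr0 = cfg["lr0"]
    if cfg["scheduler"] == "linear":
        # Linear decay from lr0 at epoch 0 to lr0 * final_lr_fraction at the last epoch.
        frac = epoch / max(1, cfg["epochs"] - 1)
        return lr0 * (1.0 - frac * (1.0 - cfg["final_lr_fraction"]))
    if cfg["scheduler"] == "multistep":
        # Multiply by gamma once for every milestone already reached.
        drops = sum(1 for m in cfg["milestones"] if epoch >= m)
        return lr0 * cfg["gamma"] ** drops
    return lr0  # constant schedule


for name in CONFIG:
    last = CONFIG[name]["epochs"] - 1
    print(name, learning_rate(name, 0), learning_rate(name, last))
```

The much smaller initial learning rate for ViT (2e-5) is typical when fine-tuning a pretrained transformer, where a constant low rate helps avoid disrupting pretrained weights; whether the study's ViT was pretrained is not stated here.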

Table 4.

An analytical overview of the advantages, weaknesses, and clinical utility of the YOLOv11, Faster R-CNN, and Vision Transformer (ViT) models, supported by evidence from recent literature

| References | YOLOv11 | Faster R-CNN | Vision Transformer (ViT) |
|---|---|---|---|
| Chen et al. (2022) [18] | Matches reported challenges in detecting subtle features (e.g., treated teeth). | N/A | N/A |
| Ayhan et al. (2025) [19] | Suggests attention mechanisms (e.g., CBAM) could enhance small-object detection. | N/A | N/A |
| Bacanlı et al. (2022) [21] | N/A | Corroborates high precision (0.92 mAP) but notes slow processing speeds. | N/A |
| Park et al. (2023) [12] | N/A | N/A | Our ViT outperforms their model (94.15% vs. 88.53% accuracy). |
| Rashidi Ranjbar & Zamanifar (2023) [21] | Highlights dataset heterogeneity challenges. | Highlights dataset heterogeneity challenges. | Highlights dataset heterogeneity challenges. |
| Santos et al. (2023) [21] | Emphasizes the need for balanced datasets to address class imbalance. | Emphasizes the need for balanced datasets to address class imbalance. | Emphasizes the need for balanced datasets to address class imbalance. |
| Khurshid et al. (2025) [9] | Supports the use of streamlined annotations for complex detection tasks. | Supports the use of streamlined annotations for complex detection tasks. | Supports the use of streamlined annotations for complex detection tasks. |
| Our Work | High speed but struggles with small objects; requires architectural improvements. | High precision but slow; optimal for offline use. | Highest accuracy (94.15%) but struggles with overlaps; needs hybrid designs. |

Analysis of the models

Although the proposed study demonstrates strong internal performance across multiple deep learning architectures, we acknowledge the absence of external validation and direct clinician feedback. Real-world deployment of AI systems in dentistry faces challenges such as variations in radiographic quality, heterogeneity in patient populations, and the risk of misclassifying subtle or rare restorations. Misclassification, even at low rates, may have consequences for treatment planning and patient safety, necessitating careful clinical oversight. To address these limitations, future work will focus on validating our models on independent multi-center datasets, incorporating expert clinician feedback through structured user studies, and designing clinician-in-the-loop diagnostic pipelines. In addition, we plan to evaluate real-time system integration within dental workflows, ensuring that automated detection complements rather than replaces professional judgment.

Discussion

This research delivered a comprehensive assessment of three advanced deep learning models, YOLOv11, Faster R-CNN, and Vision Transformer (ViT), for the detection and classification of dental prostheses and restorations in panoramic radiographs. Each model demonstrated distinct strengths and limitations in this challenging image analysis task, particularly in detecting prosthodontic components such as crowns, bridges, implants, and root canal-treated teeth. Similarly, Ali et al. detected teeth and prostheses in dental panoramic radiographs using a CNN-based object detector [11]. YOLOv11 achieved a precision of 73.6% and a recall of 60.6% with an architecture optimized for speed, which suits real-time clinical situations that require quick responses [17]. Because its architecture balances speed and accuracy, YOLOv11 performed well at identifying larger restorations and prosthodontic components, including crowns, bridges, and dental implants. These results suggest that it could serve as a practical diagnostic aid in dental clinics handling large screening sessions. However, YOLOv11 struggled to identify small endodontic materials and root canal-treated teeth because of their indistinct appearance on radiographs. Standard object detection frameworks have difficulty analysing such structures because of low radiographic contrast, overlapping anatomical elements, and ill-defined edges. This difficulty in detecting subtle dental features matches the findings reported by Chen et al. (2022) for treated teeth and retained root structures in their CNN-based panoramic image detection framework [18]. Despite these difficulties with small objects, YOLOv11 remained generally stable in detecting dental treatments (root canal treatment, endodontic posts, and dental fillings). 
These findings call for further development of high-resolution training methods, modified anchor-box strategies, and customized loss functions to improve the detection of small dental objects. Ayhan et al. (2025) applied the Convolutional Block Attention Module (CBAM) to YOLOv7 to enhance the detection of dental caries under fixed dental prostheses (FDPs) [19]. Detection improved notably with their method, especially for carious lesions, with mAP increasing from 0.800 to 0.846. This suggests that attention mechanisms and related architectural enhancements could improve YOLOv11's detection of fine dental features.

Faster R-CNN performed best on medium-sized dental objects (AR of 66.7%), owing to its two-stage detection procedure [20]. Its Region Proposal Network (RPN) produced more precise bounding boxes than YOLOv11, which improved the detection of intricate prosthodontic objects. However, this gain in accuracy over YOLOv11 came at the cost of processing speed, which limits its effectiveness in real-time clinical applications. Bacanlı et al. (2022) compared multiple object detection models for dental filling recognition in periapical radiographs [21] and found that Faster R-CNN with a ResNet101 backbone achieved 0.92 mAP with high precision but required more processing time because of its complexity. Thus, Faster R-CNN provided the best detection mAP in our study, but its slower processing makes it better suited to offline or research tasks that do not require rapid results [22].

The Vision Transformer (ViT) achieved the highest accuracy (94.15%) and F1-score (93.52%) among the evaluated models, which we attribute to its global self-attention. ViT showed particular strength in classifying dental implants and fillings, although its performance dropped in cases involving poorly defined or overlapping structures. This limitation likely stems from ViT's weaker sensitivity to localized image features and its lack of the hierarchical feature extraction that CNN-based models provide. Park et al. (2023) reported an accuracy of 88.53% and an F1 score of 84.00% on their multi-center implant dataset [12], whereas our ViT model attained 94.15% accuracy and an F1 score of 93.52%, indicating better overall classification performance. These results suggest that the ViT model distinguishes dental implants well, with high precision and reliability in detecting implant-related conditions.

A significant challenge in this study was the heterogeneity of the dataset, which included panoramic radiographs acquired in different countries under different imaging protocols. Because the images came from institutions in different geographic regions, they varied in contrast, brightness, resolution, and exposure settings. These differences among data sources hindered generalization and led to inconsistent detection of unusual dental items. Rashidi Ranjbar & Zamanifar (2023) reported similar image quality inconsistencies that restricted the effectiveness of object detection models in their work on autonomous dental treatment planning [23]. In our experiments, standard data augmentation yielded only limited improvement for underrepresented classes, so future work will use generative adversarial networks (GANs) to synthesize additional data. The class distribution was also imbalanced: endodontic posts and dental fillings appeared less often than crowns and implants, which reduced the models' ability to learn these rarer categories. Santos et al. (2023) achieved better accuracy and model stability in AI-based dental implant identification by using datasets balanced specifically for implant images [23]. Together, these findings highlight the need for careful data selection and preparation so that every clinically important category is adequately represented.

Overlapping anatomical regions made the detection and classification of dental radiographs more challenging. YOLOv11 detected large, separate objects quickly but struggled with crowded or overlapping features [9, 17]. Khurshid et al. (2025) combined piecewise annotations with object detection to infer the Kennedy classification of partially edentulous dental arches. Their method detected dental arch characteristics together with their orientation using a compact dataset, suggesting that streamlined annotation schemes can improve model performance in complex dental identification tasks [9]. Faster R-CNN identified these overlapping entities better through its proposal-based strategy, although it was slower than the other methods. ViT recognized individual features well but struggled with overlapping areas in complex images, indicating a need for better techniques to separate the features of adjacent or overlapping objects. Our findings show that each architecture offers different advantages depending on the clinical requirement: rapid detection, detailed classification, or research-oriented localization. YOLOv11 is preferable for rapid screening, Faster R-CNN is strongest at delineating the boundaries of complex objects, and ViT shows promise owing to its global spatial modeling, despite difficulties when structures strongly overlap. Because no single model excels at all tasks, ensemble methods appear to be a promising direction for this field, and customized architectures will require high-quality, balanced datasets. Although the restorations examined in this study (e.g., crowns, metallic posts, and implants) generally have high radiographic density and are readily visible to experienced clinicians, automated detection still offers several practical benefits. 
First, in large dental practices or public health programs, automated systems can accelerate population-level screening and reduce the burden on clinicians. Second, AI-driven annotation can enhance consistency in dental record-keeping and facilitate longitudinal monitoring of restorative treatments. Finally, such systems are particularly valuable in low-resource settings or remote clinics, where access to prosthodontic specialists is limited and automated decision support can provide substantial diagnostic assistance.

The cited research emphasizes that both model design improvement and dataset refinement will drive the creation of automatic diagnostic instruments for dentistry, which can integrate naturally into clinical environments.

Future directions

Future work will focus on three-dimensional imaging approaches to overcome the limitations of 2D X-ray images for prosthesis assessment. To address class imbalance, we plan to design Generative Adversarial Networks (GANs) that generate realistic training samples of rare dental restorations, creating a more balanced and robust dataset without compromising anatomical integrity. Simultaneously, we will expand our dataset by increasing population diversity and including a broader spectrum of dental complications and pathologies. Architecturally, we aim to construct a hybrid model combining the detection speed of YOLOv11, the localization accuracy of Faster R-CNN, and the contextual modeling ability of ViT to improve accuracy and generalization. The ultimate goal is the seamless integration of these refined models into real clinical settings through real-time diagnostic software systems, providing dental experts with immediate, AI-driven information to enhance diagnostic efficiency, accuracy, and patient outcomes.

Conclusion

This study evaluated the capabilities of YOLOv11, Faster R-CNN, and ViT for dental restoration detection, directly addressing the aim of assessing their accuracy and diagnostic potential. ViT demonstrated the highest performance with 94.15% accuracy, 94.64% precision, and 94.15% recall, while Faster R-CNN achieved an 82.0% mAP for object detection at IoU = 0.50. These findings suggest that deep-learning-based automated detection can substantially improve the speed and diagnostic accuracy of dental practice. We recommend integrating such AI-assisted tools to support clinicians in real-time decision-making, streamline workflows, and improve diagnostic reliability across dental specialities, including restorative, prosthodontic, endodontic, and implant dentistry. This advancement holds substantial promise for dental education, simulation practice, and ultimately patient outcomes by bridging the gap between manual assessment and automated diagnostics.

Acknowledgements

The authors would like to thank the OralAI group (https://oralai.org/) for their valuable mentorship and continuous support throughout the preparation of this manuscript.

Declaration of generative AI and AI-assisted technologies in the writing process

During the revision of this work, the author(s) used ChatGPT-4 and Grammarly to improve the English language in a few paragraphs, not the whole manuscript. After using this tool/service, the author(s) reviewed and edited the content as needed and take(s) full responsibility for the content of the publication.

Abbreviations

- AI: Artificial Intelligence
- AP: Average Precision
- AR: Average Recall
- CAD: Computer-aided Detection
- CLAIM: Checklist for Artificial Intelligence in Medical Imaging
- CLS: Learnable Classification Token
- CNNs: Convolutional Neural Networks
- FDPs: Fixed Dental Prostheses
- FFN: Feed-Forward Network
- FPN: Feature Pyramid Network
- GANs: Generative Adversarial Networks
- HSV: Hue Saturation Value
- IoU: Intersection over Union
- mAP: Mean Average Precision
- MLP: Multilayer Perceptron
- NLP: Natural Language Processing
- NMS: Non-Maximum Suppression
- OP: Panoramic
- OPG: Orthopantomogram
- R-CNN: Region-based Convolutional Neural Network
- RoI: Region of Interest
- RPN: Region Proposal Network
- SiLU: Sigmoid Linear Unit
- TMJ: Temporomandibular Joints
- ViT: Vision Transformer
- YOLO: You Only Look Once

Authors’ contributions

Zohaib Khurshid & Fatima Faridoon contributed to the conceptualization, methodology, validation, formal analysis, investigation, project administration, and writing of the original draft. Thantrira Porntaveetus, Onanong Chai-U-Dom Silkosessak, and Vorapat Trachoo contributed to the investigation, project administration, and writing (review and editing). Maria Waqas contributed to the conceptualization, methodology, validation, formal analysis, investigation, project administration, and writing of the original draft. Shehzad Hasan contributed to the conceptualization, methodology, visualization, and critical revision of the manuscript.

Funding

This research was supported by the Health Systems Research Institute (68-032, 68-059), the Faculty of Dentistry (DRF69_015), and the Thailand Science Research and Innovation Fund, Chulalongkorn University (HEA_FF_68_008_3200_001).

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Declarations

Ethics approval and consent to participate

Ethical approval for the study was obtained from the Human Research Ethics Committee, Faculty of Dentistry, Chulalongkorn University (HREC-DCU 2024-093). The Institutional Review Board (IRB) waived the need for individual informed consent, as the data used were fully anonymized and did not contain any patient-identifiable information. The data from Pakistan [13] and the USA [14] are publicly available. This study was conducted in accordance with the principles of the Declaration of Helsinki.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

1. Samaranayake L, et al. The transformative role of artificial intelligence in dentistry: a comprehensive overview. Part 1: fundamentals of AI, and its contemporary applications in dentistry. Int Dent J. 2025;75(2):383–96.
2. Rewthamrongsris P, et al. Accuracy of large language models for infective endocarditis prophylaxis in dental procedures. Int Dent J. 2025;75(1):206–12.
3. Michou S, et al. Automated caries detection in vivo using a 3D intraoral scanner. Sci Rep. 2021;11(1):21276.
4. Kahurke S. Artificial intelligence algorithms and techniques for dentistry. In: 2023 1st International Conference on Cognitive Computing and Engineering Education (ICCCEE); 2023.
5. Uribe SE, et al. Publicly available dental image datasets for artificial intelligence. J Dent Res. 2024;103(13):1365–74.
6. Lee SW, et al. Evaluation by dental professionals of an artificial intelligence-based application to measure alveolar bone loss. BMC Oral Health. 2025;25(1):329.
7. Karkehabadi H, et al. Deep learning for determining the difficulty of endodontic treatment: a pilot study. BMC Oral Health. 2024;24(1):574.
8. Hua Y, Chen R, Qin H. YOLO-DentSeg: a lightweight real-time model for accurate detection and segmentation of oral diseases in panoramic radiographs. Electronics. 2025;14(4):805.
9. Khurshid Z, et al. Deep learning architecture to infer Kennedy classification of partially edentulous arches using object detection techniques and piecewise annotations. Int Dent J. 2025;75(1):223–35.
10. Marya A, et al. The role of extended reality in orthodontic treatment planning and simulation: a scoping review. Int Dent J. 2025;75(6):103855.
11. Ali MA, Fujita D, Kobashi S. Teeth and prostheses detection in dental panoramic X-rays using CNN-based object detector and a priori knowledge-based algorithm. Sci Rep. 2023;13(1):16542.
12. Park W, Huh JK, Lee JH. Automated deep learning for classification of dental implant radiographs using a large multi-center dataset. Sci Rep. 2023;13(1):4862.
13. Waqas MH, Khurshid S, Kazmi Z, Shakeel. OPG dataset for Kennedy classification of partially edentulous arches. Mendeley Data; 2024.
14. Panetta K, et al. Tufts dental database: a multimodal panoramic X-ray dataset for benchmarking diagnostic systems. IEEE J Biomed Health Inform. 2022;26(4):1650–9.
15. Khurshid Z, Waqas M, Faridoon F, Porntaveetus T, Trachoo V. Annotated OPG dataset for dental fillings, prostheses (crowns & bridges), endodontic treatments, endodontic posts, and dental implants. Mendeley Data; 2025.
16. Wang Y, et al. Vision transformers for image classification: a comparative survey. Technologies. 2025;13(1):32.
17. Jegham N, Koh CY, Abdelatti M, Hendawi A. Evaluating the evolution of YOLO (You Only Look Once) models: a comprehensive benchmark study of YOLO11 and its predecessors. arXiv; 2024.
18. Chen SLC, Mao TY, Lin YC, Huang SY, Chen YY, Lin CA, Hsu YJ, Li YM, Chiang CA, Wong WY, Abu KY, Angela P. Automated detection system based on convolution neural networks for retained root, endodontic treated teeth, and implant recognition on dental panoramic images. IEEE Sens J. 2022;22(23):23293–306.
19. Ayhan B, Ayan E, Atsü S. Detection of dental caries under fixed dental prostheses by analyzing digital panoramic radiographs with artificial intelligence algorithms based on deep learning methods. BMC Oral Health. 2025;25(1):216.
20. Girshick R. Fast R-CNN. In: 2015 IEEE International Conference on Computer Vision (ICCV); 2015.
21. Bacanlı G, et al. Dental filling detection using deep learning in periapical radiography. In: 6th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT 2022); 2022; Ankara, Turkey. p. 721–4.
22. Tian J, Lee S, Kang K. Faster R-CNN in healthcare and disease detection: a comprehensive review. In: 2025 International Conference on Electronics, Information, and Communication (ICEIC); 2025.
23. van Nistelrooij N, et al. Combining public datasets for automated tooth assessment in panoramic radiographs. BMC Oral Health. 2024;24(1):387.
