Abstract

Humanoid robots are the robotics community’s latest bet on expanding the range of tasks that robots can carry out to generate profit or improve people’s quality of life. While their capabilities might extend to assistive care tasks, such as feeding, dressing, or household tasks, it is unclear whether people are comfortable with having humanoid robots in their homes assisting with those tasks. In this paper we explore people’s attitudes towards assistive humanoid robots in the home. We present two questionnaire studies, with 76 total participants, in which people are shown imaginary images of humanoid robots performing assistance tasks in the home, along with special purpose robot alternatives. Participants are asked to rate and compare robots in the context of eight different tasks and share their reasoning. The second study also shows participants pictures of real humanoid robots, both without any context and in the context of in-home assistance tasks, and asks their opinions about these robots. Our findings indicate that people prefer special purpose robots over humanoids in most cases and that their preferences vary by task. Although people think that humanoids are acceptable for assistance with some tasks, they express concerns about having them in their homes.

I. Introduction

There has been a recent surge of interest and investment in humanoids: robots with human-sized proportions and human-like morphology, including two legs, two arms with five-fingered hands, and a head. While a number of startup companies are going all in on making humanoids practical, big tech companies have started significant internal efforts or invested in smaller companies. Humanoid startups have been exceedingly successful in raising funding. For instance, only a year ago, Figure AI raised $675M in Series B funding ($2.6B valuation) and has now reached $1.5B in investments ($39.5B valuation) [1], including from Microsoft, OpenAI, and NVIDIA. Tesla is spending significant capital towards the development of its humanoid robot, Optimus [2]. Outside the USA, China is rushing to catch up and lower the costs of humanoids, with over $10B government investments [3]. Goldman Sachs estimates that the global market for humanoids could reach $38B by 2035 [4].

One of the central arguments for making robots that match human form and structure is that they can replace human labor in the way tasks and work environments are currently structured. As such, many companies making the robots target applications in sectors with high labor shortages where they could safely be deployed in the short term, such as manufacturing, logistics, shipping, distribution, and retail. A subset of the companies are, however, envisioning humanoids assisting humans in homes (Fig. 1). For example, Figure states that humanoids will revolutionize “assisting individuals in the home (2+ billion)” and “caring for the elderly (1 billion).” Marketing materials for Figure humanoids show the robots handing an apple to a human, making coffee with a coffee machine, putting away groceries and dishes, pouring drinks, and watering a plant. The Neo humanoid by 1X Technologies seems to be solely targeted for in-home applications, with the company stating that “1X bets on the home” and that they are “building a world where we do more of what we love, while our humanoid companions handle the rest.” Neo is shown doing tasks like vacuuming, preparing and serving tea, wiping windows and tables, carrying laundry and grocery bags, and handing people things like their keys or a T-shirt. Similarly, Tesla’s humanoid Optimus is marketed as “an autonomous assistant” and is shown folding clothes, cracking eggs, taking out groceries, receiving package deliveries, and playing games with a family.

Fig. 1.

Screenshots from marketing videos of three humanoid robots specifically advertised for in-home use, shown performing a range of tasks in homes. (Top) Neo by 1X Technologies, (Middle) Figure 02 by Figure, and (Bottom) Optimus by Tesla.

Although many people could benefit from assistance with such tasks, whether due to busy lifestyles or disabilities that prevent them from doing certain tasks independently, it is unclear if people are comfortable with having humanoid robots in their homes. Given the scale of investment towards developing such robots, we believe it is critical and timely to investigate the merits of in-home humanoid robots from a user-centered perspective. Hence, in this paper we set out to investigate people’s attitudes towards humanoid robots assisting with a variety of tasks in the home, focusing specifically on assistance of older adults. Through a series of questionnaires showing pictures of imaginary and real-world robots, we find that most people would prefer to have special purpose robots rather than a generalist humanoid. People nonetheless think that humanoids are acceptable for assistance with some tasks, but express important and valid concerns, such as space, safety, and privacy.

II. Related Works

A. In-Home Assistive Robots

People’s attitudes towards physically assistive robots (PARs) have been investigated in a number of formative studies, focusing on older adults [5]–[7] and people with motor limitations [8], [9]. A recent survey covers advances made in developing PARs for people with disabilities [10], showing increasing efforts to include target users in the design and evaluation of PARs and deploying more robots in homes. Some studies involving target users of PARs reveal preferences of users about such robots. For example, Bhattacharjee et al. demonstrated that users with motor limitations do not necessarily want fully-autonomous robots and would prefer to have control over the robot for some parts of the feeding process [11].

A recent scoping survey identified nine “humanoid” robots (e.g., Care-o-bot, TIAgo, PR2) for assisting people with physical disabilities in activities of daily living [12]; however, none of those robots use legged locomotion and they are all far from the human form. Similarly, recent research on PARs involves using general-purpose non-humanoid robots, like the Stretch mobile manipulator [13] that looks more like a coat-hanger than a human. We are unaware of any prior work investigating the use of humanoid robots similar to the ones highlighted in Sec. I for physical assistance in which potential users are included in the research to assess their attitudes and acceptance. The humanoid form is common in socially assistive robots [14], [15], but humanoids used for social assistance are significantly different from the newer, function-oriented humanoids studied in this paper. Those humanoids are often much smaller and simpler, and they have more friendly, cartoon-like features (e.g., Nao).

B. Attitudes Towards Humanoid Robots

Prior work in the field of human-robot interaction has investigated people’s attitudes towards humanoid robots, especially in the context of the uncanny valley effect [16], [17], which indicates that highly human-like robots might create a negative emotional response in people. A recent study investigated people’s perception of 251 real-world robots from the ABOT database, demonstrating there might be an uncanny valley effect even for robots that have lower human-likeness [18]. Some studies compare people’s perception of specific robots, e.g., Honda’s ASIMO versus Robovie [19], or SoftBank’s Nao versus Pepper [20]. Haring et al. demonstrated cultural differences between Australia and Japan in attitudes towards humanoids [21]. A recent study focused specifically on people’s perception of humanoid robot faces [22], something many recent humanoids lack. Overall, the literature suggests that some decisions made in the design of the new wave of humanoid robots (e.g., colors, lack of facial features), as well as advances in making their motion smooth and natural, might help with their acceptance, but people might still have reservations about having such human-like robots in their homes. However, there is a lack of concrete evidence.

III. Methods

We investigate people’s attitudes towards in-home humanoid robots by tackling the questions of (1) whether people want to have humanoid robots in their homes, (2) whether they prefer humanoid robots over special purpose robots, and (3) whether their preferences change based on the assistive task. To answer these questions we devise a series of questionnaires asking people to rate, compare, and comment on imaginary and real humanoid and special purpose robots across a variety of in-home assistance tasks.

A. Materials

In order to allow a direct and consistent comparison of humanoid and special purpose robots for in-home care, we use generative AI tools to create speculative images of assistive robots. We cover eight different tasks inspired by prior work on robotics assistance for older adults [5], including assistance for eating, dressing, managing medications, navigation, going up/down the stairs, cooking, vacuuming, and laundry (Fig. 2). The tasks have varying levels of proximity to the user, physical interaction, and risks for physical harm. The humanoid robot appears to be the same in all images. Special purpose robots are different in all images. Some of them correspond to existing products (e.g., Roomba for vacuuming, Obi for feeding assistance, medication management device, stairlift, and motorized wheelchair). Others are speculative, corresponding to ongoing research efforts in developing robot arms that can assist with dressing, cooking, and laundry. The humanoid robot assistance scenarios also vary in how realistic they are. While vacuuming, doing laundry, and assisting with meals correspond to existing humanoid robot functionalities (Fig. 1), others like feeding a person or carrying a person are more speculative. All images depict older adults as the main users of the robots.

Fig. 2.

AI-generated images of humanoid and special purpose robots across eight tasks used in our questionnaires.

We used both OpenAI’s ChatGPT with GPT-4o and Gemini with Imagen 3 with iteratively refined prompts to generate a number of candidate images and selected a coherent set from those. To ensure coherence across images, prompts included details about the robots, the environment, and the user. To explore people’s attitudes towards real humanoid robots we use two sets of pictures. First, we present participants with photos of nine current humanoid robots, shown in Fig. 3. The photos show each robot standing up with a white background, lacking any task context. They are shown in a 3-by-3 grid, allowing participants to see each of them in close detail. Second, we present participants with images of the three robots specifically marketed for in-home use (Neo, Figure, Optimus), shown in Fig. 1. The photos are taken from the company webpages or are screenshots from company videos, edited slightly for uniform brightness, and cropped to center the robot in the task context.

Fig. 3.

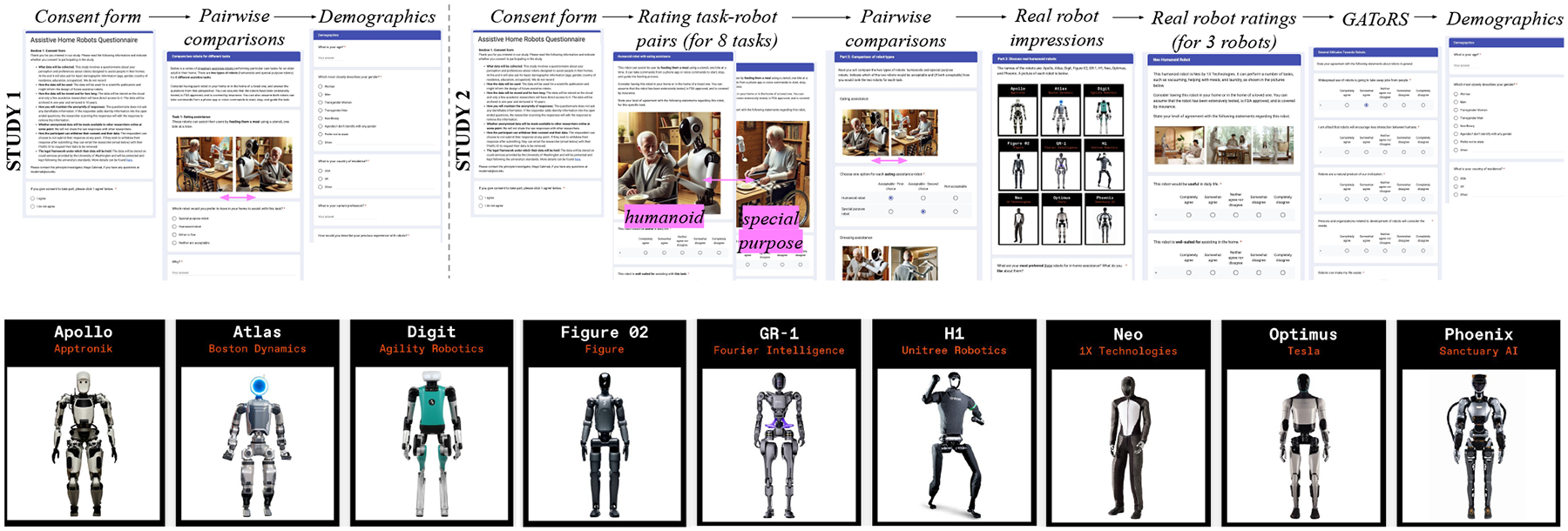

(Top) Flow of questionnaires in the two studies. (Bottom) Robot cards for nine humanoid robots featured in a recent IEEE Spectrum article [23].

B. Study design

We conducted two studies with a different set of questionnaires, detailed in Sec. III-C. Study 1 focuses on the direct comparison of humanoid and special purpose robots and emphasizes open-ended questions to get insights about people’s reasoning. Study 2 includes a longer series of questionnaires, first asking people to rate each robot-task combination across 8 tasks, and then directly comparing robots. Afterwards, it asks people to share their opinions about real humanoid robots, first without any context using open-ended questions, and later with the marketing materials from home settings via rating scales. In both studies, the order in which the speculative humanoid and special purpose robot pictures (Fig. 2) are presented to the participant is counterbalanced. The procedure for the two studies is summarized in Fig. 3.
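The paper does not say how the counterbalancing was implemented. As a minimal sketch, assuming a simple alternation between two presentation orders, the assignment could look like the following; the condition labels and participant IDs are hypothetical.

```python
from itertools import cycle

# Hypothetical labels for which speculative image type is shown first.
ORDERS = ("humanoid_first", "special_purpose_first")


def assign_orders(participant_ids):
    """Alternate the two presentation orders across participants.

    With an even number of participants, each order is used equally often.
    """
    return dict(zip(participant_ids, cycle(ORDERS)))


# Hypothetical participant IDs for a 40-person study.
assignment = assign_orders([f"P{i:02d}" for i in range(1, 41)])
counts = {order: list(assignment.values()).count(order) for order in ORDERS}
print(counts)
```

Randomizing within blocks of two would achieve the same balance while keeping the order unpredictable for any individual participant.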

C. Questionnaires

1). Comparison questions:

Study 1 involves showing participants pairs of images for each task, a humanoid robot and a special purpose robot, side by side. Participants are asked the forced-choice question “Which robot would you prefer to have in your home to assist with this task?” with the answer options (a) humanoid robot, (b) special-purpose robot, (c) either are fine, and (d) neither is acceptable.

Study 2 involves a preference question asked slightly differently. Participants are shown the same pair of robot images and are asked to pick one of the following options for both the humanoid and the special-purpose robot: (a) Acceptable - First choice, (b) Acceptable - Second choice, and (c) Not acceptable. Although this question gives participants the same options, it provides additional information, i.e., whether a robot is acceptable even if it is not their first choice. Further, the redundancy in the question serves as an attention check; i.e., if a participant selects first choice or second choice for both robots, it means they are not paying attention.
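The consistency rule described above can be sketched as a small check. The function names are hypothetical, and whether a single inconsistent comparison was enough to exclude a participant is an assumption not stated in the text.

```python
FIRST = "Acceptable - First choice"
SECOND = "Acceptable - Second choice"
NOT_ACCEPTABLE = "Not acceptable"


def fails_attention_check(humanoid_answer, special_answer):
    """True if both robots received the same rank (first/first or second/second).

    Rating both robots as "Not acceptable" is a consistent answer and passes.
    """
    return humanoid_answer == special_answer and humanoid_answer in (FIRST, SECOND)


def should_exclude(responses):
    """responses: list of (humanoid_answer, special_answer), one per comparison.

    Assumption: any single inconsistent comparison flags the participant.
    """
    return any(fails_attention_check(h, s) for h, s in responses)


# Hypothetical responses for one participant.
example = [(FIRST, SECOND), (NOT_ACCEPTABLE, FIRST), (SECOND, SECOND)]
print(should_exclude(example))
```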

2). Rating robot-task pairs:

Although we are primarily interested in people’s bottom-line preference for whether they would want to have the different assistive robots in their homes, we also hope to identify the different factors that influence their preferences and how the type of assistive task impacts their preference. To that end, we created a series of questionnaire statements with 5-point Likert scale agree-disagree answers that participants respond to in the context of a single robot-task pair. The statements, listed in Table I, were adapted from questionnaires used in prior work [5], [24] to be robot and task specific (e.g., mention “this robot” and “this task”). They address comfort, perceived reliability, trust, comfort around others, sense of agency, and privacy concerns.

TABLE I.

List of statements used in the questionnaire.

| ID | Statement |
| --- | --- |
| S1 | This robot would be useful in daily life. |
| S2 | This robot is well-suited for assisting with this task. |
| S3 | I would feel comfortable having this robot in my or my loved one’s home. |
| S4 | I would feel safe around this robot while performing this task. |
| S5 | Having this robot assist with this task would reduce my sense of agency*. |
| S6 | I would trust this robot to perform this task reliably. |
| S7 | I would be concerned about my privacy while performing this task with this robot’s assistance*. |
| S8 | I would feel comfortable having this robot around others. |
| S9 | I would prefer this task to be assisted by a human, rather than this robot*. |
| S10 | Overall, I would want to have this robot to assist me or a loved one with this task. |

Note that higher agreement with the statements marked with * means lower preference for the robot being rated. Later in Study 2, the same statements modified to be non-task-specific are used to assess real humanoid robots shown doing multiple tasks in the home.
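The paper reports raw agreement for the statements marked with *. A reader who wants every statement to point in the same direction could recode them as in the sketch below; this recoding is an illustration, not a step the authors describe, and the function name is hypothetical.

```python
# Statements marked with * in Table I: higher agreement means lower preference.
REVERSED = {"S5", "S7", "S9"}


def preference_score(statement, rating, scale_max=5):
    """Map a 1..scale_max agreement rating so that higher always means more favorable."""
    if not 1 <= rating <= scale_max:
        raise ValueError(f"rating must be in 1..{scale_max}, got {rating}")
    if statement in REVERSED:
        return scale_max + 1 - rating  # 5 -> 1, 4 -> 2, 3 -> 3, ...
    return rating


print(preference_score("S7", 4))  # "somewhat agree" with a privacy concern
print(preference_score("S1", 4))  # "somewhat agree" that the robot is useful
```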

3). Open-ended questions:

In Study 1, comparison questions (Sec. III-C.1) are followed by a “Why?” asking participants to explain their choice for each task. In Study 2, participants are given the open-ended response prompt “Please explain factors that influenced your responses above and list any concerns you would have about having this robot” after the ten rating scale questions asked for each of the 16 robot-task pairs. The same prompt is also given after rating questions for the three real in-home humanoid robots. The comparison questions in Study 2 are followed by two open-ended questions after all eight comparisons: “What factors influenced your preferences above?” and “Did your preference change depending on the task? Why or why not?” Lastly, people’s reactions to real humanoids without context were elicited with the two open-ended questions: “What are your most preferred three robots for in-home assistance? What do you like about them?” and “What are your least preferred three robots for in-home assistance? What do you dislike about them?”

4). Participant profile:

To assess the broader attitudes of participants towards robots in Study 2, we use the General Attitudes towards Robots Scale (GAToRS) questionnaire [25] with 20 statements covering personal and societal, positive and negative attitudes. To be consistent with the rest of the questionnaire, we used a 5-point agreement scale rather than the 7-point scale of the original questionnaire. At the end of both studies, participants completed demographic questions.

D. Data Analysis

We treat the rating scales in the questionnaires as ordinal data, and hence provide median and standard error for descriptive statistics. We conduct Wilcoxon signed-rank tests to compare the paired ratings between humanoid and special purpose robots. Qualitative data from the open-ended questions (described in Sec. III-C.3) is analyzed using an iterative inductive methodology for thematic coding [26].
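For readers who want to reproduce this kind of analysis, the following is a minimal standard-library sketch of the descriptive statistics and a two-sided signed-rank test. It rests on several assumptions not confirmed by the paper: the standard error is taken to be the standard error of the mean, the p-value uses the normal approximation with a tie correction, and zero differences are dropped. The ratings in the example are made up for illustration.

```python
import math
from statistics import median, stdev


def standard_error(values):
    """Standard error of the mean: sample standard deviation over sqrt(n)."""
    return stdev(values) / math.sqrt(len(values))


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Zero differences are dropped; tied absolute differences share their
    average rank. Returns (W, p), where W is the smaller signed-rank sum.
    """
    diffs = sorted((a - b for a, b in zip(x, y) if a != b), key=abs)
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and abs(diffs[j]) == abs(diffs[i]):
            j += 1
        avg_rank = (i + 1 + j) / 2  # mean of the 1-based ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = avg_rank
        t = j - i
        tie_term += t**3 - t
        i = j
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w - mean_w) / math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, min(p, 1.0)


# Made-up paired ratings (1-5) for one statement and one task.
special_purpose = [5, 4, 4, 5, 3, 4, 5, 4]
humanoid = [3, 3, 4, 2, 3, 2, 4, 3]
w, p = wilcoxon_signed_rank(special_purpose, humanoid)
print(median(special_purpose), median(humanoid), standard_error(humanoid), w, p)
```

With samples as small as the per-task groups here, an exact p-value would usually be preferable to the normal approximation; statistical packages such as SciPy provide one.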

IV. Results/Findings

A. Participants

We conducted both studies on Prolific, an online research platform [27]. Study 1 included 40 participants (24 women, 15 men, 1 non-binary) between the ages 20–74 (M=40.1, SD=14.1), from USA (32) and UK (8). Eleven participants had a moderate level of experience with robots; the rest had limited or no experience. Participants took 13m24s to complete the study on average and they were paid $4. Study 2 included 36 participants (12 women, 24 men) between the ages 18–66 (M=37.7, SD=13.5), from USA (25) and UK (11). Although 40 participants were recruited, data for 4 participants was removed due to failing an attention check (described in Sec. III-C.1). Four participants had a moderate level of experience with robots; the rest had limited or no experience. Participants took 49m10s to complete the study on average and they were paid $10. On GAToRS, participants had high agreement with personal and societal level positive attitude statements, with an overall median of 4 (“somewhat agree”) for both (SE=0.09 for P+, SE=0.07 for S+). They also had low agreement with personal level negative statements, with a median of 2 (SE=0.09 for P-), but surprisingly, had a high agreement with societal level negative attitudes with a median of 4 (SE=0.1 for S-).

B. Preferences for robot types

Both studies demonstrate that people’s preferences between humanoid and special purpose robots are highly task dependent, with an overall preference leaning towards special purpose robots. In Study 1 (Fig. 4) more than half of the participants choose the special purpose robot for medication management, navigation, stairs, and vacuuming. The same was true for humanoid robots only for dressing. Very few participants think neither robot would be acceptable, e.g., for dressing (3), cooking (3), and laundry (4). When asked about overall preferences between having one humanoid robot versus a number of special purpose robots for different tasks, more people choose special purpose robots (45%) versus humanoids (37.5%); 17.5% are okay with either.
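As a worked check on the overall Study 1 figures, the reported percentages correspond to whole participant counts out of 40. The sketch below tallies forced-choice answers into shares; the counts of 18, 15, and 7 are derived here from the reported percentages rather than stated in the text, and the option labels are hypothetical.

```python
from collections import Counter


def preference_shares(choices):
    """Return the percentage of participants selecting each option."""
    total = len(choices)
    return {option: 100 * n / total for option, n in Counter(choices).items()}


# Counts implied by the reported shares: 45% of 40 = 18, 37.5% = 15, 17.5% = 7.
overall = ["special_purpose"] * 18 + ["humanoid"] * 15 + ["either"] * 7
shares = preference_shares(overall)
print(shares)
```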

Fig. 4.

Participant preferences from Study 1 (choosing between humanoid robots, special purpose robots, either, or neither) across 8 tasks and overall (asked separately for the categories of robots).

In Study 2, more people have the special purpose robot as their first choice for eating, medications, navigation, stairs, and vacuuming. In contrast, more people place the humanoid robot as their first choice for dressing, cooking, and laundry. When asked about overall preferences, 36.5% of participants have the humanoid robot as their first choice, whereas 58.3% of participants have the special purpose robots as their first choice. Compared to Study 1, a larger portion of the participants think that the humanoid robots are not acceptable (16.7% to 55.6% across tasks, 19.5% overall). In contrast, fewer than 20% of participants think that the special purpose robots are unacceptable across tasks, except for cooking (33.3%). When asked about special purpose robots overall, 11.1% say they are not acceptable.

Agreement ratings in Study 2 show that participants are overall positive about having robots in their home with a rating of 3 (“Neither agree nor disagree”) or higher for all robots across all tasks, except for the humanoid robot for stair assistance (Fig. 6(a)). Their ratings are significantly higher for special purpose robots for medication management, navigation, stair assistance, and vacuuming. There are no significant differences between robots for the other tasks.

Fig. 6.

Median Likert scale agreement ratings for humanoid and special purpose robots across eight tasks in Study 2. Statistically significant differences in the Wilcoxon signed-rank test are indicated with a *. Note that lower agreement for the last three statements corresponds to higher preference.

Participant responses to open-ended questions also support an overall preference towards special purpose robots over humanoid robots. Participants believe that special-purpose robots are more practical and cost-efficient. These robots are perceived as being designed to perform particular tasks effectively, making them more reliable and more efficient. Participants also note that special-purpose robots are smaller, “less intrusive” and “more discreet” than humanoid robots, which are seen as “bulky” and “unnecessary” for simple tasks. One participant notes “seems like a waste to have a humanoid robots for most singular basic tasks.” Another says that humanoids “were just too big for their given tasks, .. seemed like it caused more hassle than needed.”

C. Usefulness, appropriateness

Overall, people agreed that both humanoid and special purpose robots would be useful in daily life, with a median agreement of 4 (somewhat agree) or 5 (completely agree). Their agreement was significantly higher for the special purpose robots assisting with navigation, stairs, and vacuuming (Fig. 6(b)). They similarly had overall high agreement that the robots are well-suited for the task, except for the humanoid stair assistance for which they were neutral. People thought the special purpose robots are significantly more well-suited for medication management, navigation, stairs, and vacuuming, the four tasks where the special purpose robots are less speculative.

D. Comfort, safety, reliability

Median participant agreement levels with statements about feeling safe around the robot, trusting that the robot would function reliably, and being comfortable having the robot in their homes all had the same distribution across tasks, with one example shown in Fig. 6(d). People feel significantly more safe with the special purpose robots for medication management, navigation, stairs, vacuuming, and laundry. There were no tasks for which the humanoid robot was thought to be more safe, reliable, or comfortable to be around. Yet, the ratings had a median of 3.5 or above except for the humanoid robot stair assistance, overall agreeing that they would feel safe and comfortable and would trust the reliability of humanoid robots. The ratings were very similar when people were asked about their comfort with having the robot around others (Fig. 6(e)).

The high ratings for safety, comfort, and reliability could be partly because our instructions stated that participants could assume that the robots (both humanoid and special purpose) were “extensively tested” and “approved by the FDA.” Indeed, participants explicitly refer to these instructions in justifying their positive ratings, e.g., “I trust that it would perform the task reliably since it has been extensively tested and FDA-approved. I would feel safe and comfortable having it in my or a loved one’s home, as long as it functions smoothly” (Humanoid, T2: Dressing). Similar mentions are made to humanoid robots in the context of eating, medication, navigation, stairs, and cooking assistance. Others express doubt despite the reassurances in our instructions, e.g., “While the robot is FDA approved, I’m not sure if it would be able to accurately tell if it is performing the task correctly without injury” (Humanoid, T2: Dressing).

Participants express significant concerns about the safety of the humanoid robot carrying people up and down the stairs and strongly object to it: “I would absolutely not in a million years want this.” Stair assistance was indeed the most speculative use of a humanoid robot and not a functionality that humanoid companies have explored. Participants fear that the robot could “trip, lean too far back, or stumble”, “glitch”, “run out of battery” or “malfunction” while carrying a person, even if “the technology might be advanced.” They worry that a failure in its ability to hold someone securely could lead to severe injury. Concerns also include the potential for the robot to be remotely controlled and possibly take the user somewhere unintended. This security and safety concern is expressed by another participant more generally about humanoid robots as “..any device that is able to connect to the internet may be hacked into.. it is possible that those with knowledge of advanced technology could manipulate such robots remotely for nefarious purposes.”

Participants also express concerns about the safety of cooking assistance by both types of robots. Potential risks mentioned by participants include fires, spills, food contamination, and overheating, referencing the robots’ ability to interact with “hot surfaces” and “sharp objects.” The generated image for the special purpose robot including a sharp utensil might have contributed to the humanoid robot being more preferred for this task; e.g., one participant refers to the robot as “a little scary with silver ware.”

E. Privacy

People disagree that they would be concerned about their privacy around special purpose robots. In contrast, they are mostly neutral about privacy concerns related to humanoid robots. Nevertheless, for specific tasks such as dressing, medication management, and stair assistance, they express significantly more privacy concerns regarding humanoid robots compared to special purpose robots.

Participants point to the humanoid robot design as a factor that increases their privacy concerns; e.g., “it’s just the design of its eerie black blank face that unsettles me, and would raise just the slightest concern over privacy during use” and “..what bothers me most about the humanoid robots, it’s the back monitor like face, it’s a bit uncertain and uncanny, like a void, and it reminds me heavily of a security camera. I would feel my family data is being collected just by looking at it.” One participant fears that AI-controlled robots could also be “remotely controlled by an outside source” and that is “not acceptable to me at this time.. too many privacy issues.” Participants are generally privacy aware, referencing robot cameras and questioning whether the robot “collects or processes sensitive information.”

F. Sense of agency

Overall, participants are neutral about how their sense of agency would be impacted by having the robots assist with the different tasks, with most median ratings being around 3. They have a median agreement rating of 4 (“Somewhat agree”) that their sense of agency would be reduced only for the humanoid robot assisting with eating (Fig. 6(g)). This could be because most older adults do not need feeding assistance unless they have severe motor limitations and the idea of being fed by a robot can feel infantilizing. Although safety is a bigger concern for stair assistance, a similar agency-related issue is mentioned in that context for humanoid robots: “it would feel humiliating to be carried around .. by a robot.” One participant expresses an opposing view, highlighting increased autonomy for users brought by robot feeding assistance: “I completely agree with having a humanoid eating assistance robot because it would provide significant support for individuals who have difficulty feeding themselves, improving their independence and quality of life… it would reduce the need for constant human assistance, offering dignity and autonomy to users.”

G. Comparison to human assistance

Participant responses vary the most across tasks when asked whether they agree that they would rather have a human perform the task, instead of the humanoid or special purpose robots. For eating, dressing, and cooking assistance, people agree they would rather have a human instead of either robot. There is no significant difference between the two robot types. In contrast, for medications, navigation, stairs, and vacuuming, people agreed they would prefer a human over the humanoid robot, but not over the special purpose robots. The difference was statistically significant for all four tasks (Fig. 6(h)). The median ratings were 3 or higher for all task-robot combinations, except for the special purpose vacuuming robot for which people “somewhat” disagree that they would rather have a human do the vacuuming instead of a Roomba-like robot.

H. Real humanoid preferences

Participant reactions to pictures of nine real world humanoid robots without any context (Fig. 3) are mixed. As shown in Fig. 7, Optimus is mentioned the most times in top three most preferred humanoids, and mentioned the fewest times in the bottom three. Phoenix and GR-1 appear the fewest times as most preferred robots, and are ranked as second and third least preferred robots. H1 is mentioned most times as least preferred, but is also mentioned by 10 participants as most preferred. Similarly, participants are split on the rest of the robots, with relatively high number of mentions among both most preferred and least preferred lists.

Fig. 7.

Number of mentions of each humanoid robot (from Fig. 3) when asked to list the three most preferred and three least preferred robots. Note that some participants did not list specific robots and hence did not contribute to these counts.

In articulating why they like certain robots, people mention attributes like “compact” (Digit), “sleek” (Neo), “professional” (Neo), “colourful” (Digit) and “modern” (Optimus, Figure 02, Neo). Participants express differing opinions about anthropomorphism. On one side, people express reasons for preferring some robots as “they are the most human like and could easily be acclimated in a home” (Neo, Figure 02, H1), “the form is closer to human” (Optimus, Figure 02 and Neo), or “less mechanical looking than the others” (Figure 02, H1, Optimus). Similarly, reasons for least preferred robots include “lack of human like features” (H1) and “industrial look” (GR-1). On the other hand, one participant says they prefer Digit, GR-1, and Atlas because of “the fact that they look more like a robot than a human.” One participant explicitly cited the uncanny valley effect as the reason for listing Neo among their least preferred robots. Others alluded to a similar effect with varying levels of clarity; e.g., “these appear too uncanny, and frightening” (Atlas, Phoenix, Neo), “awful, looks like a human dressed as a robot” (Neo), “the eyes makes it look a bit creepy” (Apollo), and “Neo’s shape makes me uncomfortable.” One participant says they prefer Atlas, Digit, and H1 because “without a ‘face’ mask, it takes away the eerie sensation and idea that there may be something watching you.” Another participant does not list any robot in their most preferred saying “None really, all look creepy.”

In expressing reasons for listing robots as least preferred, participants also touch on safety concerns; e.g., “they look threatening” (Atlas, H1, Phoenix), “scary to be around” (Apollo, Phoenix, H1), “seems to be taking an aggressive stance” (H1), “these look scary and unfriendly” (Neo, H1, Figure 02), and “bulky, intimidating” (Atlas). Participants explicitly point out the “tubes” or “wires” around Apollo’s arms, saying they “could get in the way,” “seem like a potential hazard,” and “is a safety issue.”

Participant familiarity with some of the robots on the list can also impact their preferences. In most cases, familiarity leads to higher preference, e.g., “Boston Dynamics .. known to be a high-performing robotics company” (Atlas), “Tesla’s cutting-edge AI” (Optimus), or “I trust the companies that manufacture the robots” (Optimus, Atlas, Apollo). But, the impact of familiarity with a company may extend to factors beyond the users’ prior experiences with their products, as in one participant’s preferences being “negatively influenced by Elon Musk’s political stance” (Optimus).

I. Attitudes towards real humanoids in the home

When shown images of Neo, Figure 02, and Optimus doing a few home tasks (Fig. 1), people are overall positive towards the robots. They agree that the robot would be useful, is well-suited for the home, reliable, and safe, and that they would feel comfortable having it in their home or the home of a loved one and around other people. They are neutral about how having these robots would impact their sense of agency and about privacy concerns. There are no significant differences between the three robots, except in two cases: people agree significantly more with S1 (“This robot would be useful in daily life”) and significantly less with S9 (“I would prefer this task to be assisted by a human, rather than this robot”) for Optimus versus Neo.
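As an illustration of how a pairwise comparison of agreement ratings like the one above could be checked, the following is a minimal sketch, not the analysis used in this paper. It assumes two independent groups of 5-point ratings and swaps in a simple two-sided permutation test on the difference in means. The function name `permutation_test`, the group names, and all rating values are hypothetical and invented purely for illustration.

```python
import random


def permutation_test(ratings_a, ratings_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean ratings.

    Assumes the two groups are independent samples (an assumption made
    for this sketch, not a statement about the study design).
    """
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n_a, n_b = len(ratings_a), len(ratings_b)
    observed = sum(ratings_a) / n_a - sum(ratings_b) / n_b

    pooled = list(ratings_a) + list(ratings_b)
    extreme = 0
    for _ in range(n_permutations):
        # Randomly reassign ratings to the two groups.
        rng.shuffle(pooled)
        diff = sum(pooled[:n_a]) / n_a - sum(pooled[n_a:]) / n_b
        # Count shuffles at least as extreme as the observed difference.
        if abs(diff) >= abs(observed) - 1e-12:
            extreme += 1

    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical 5-point agreement ratings, invented for illustration only.
robot_a = [5, 4, 5, 4, 5, 4, 5, 5, 4, 5]
robot_b = [3, 4, 2, 3, 4, 3, 2, 3, 4, 3]

observed_diff, p = permutation_test(robot_a, robot_b)
print(f"mean difference = {observed_diff:.2f}, p = {p:.4f}")
```

A permutation test is used here only because it makes no distributional assumptions about ordinal ratings and needs nothing beyond the standard library. If the same participants rated all robots, a paired test would be the more appropriate choice.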

V. Discussion

Our studies demonstrate that people generally prefer special purpose robots over humanoids. Despite articulating a number of concerns and negative feelings about humanoid robots, quantitative findings indicate that humanoid robots are nevertheless acceptable. While we think humanoid companies like 1X and Figure must have similar positive indications of a market for humanoids in the home based on extensive user research, we maintain skepticism about widespread adoption of such robots. At the Physical Caregiving Robots workshop at HRI 2025, we asked a panel of six people with disabilities whether they would like to have a humanoid robot assist them with everyday tasks. All of them said no. One panelist remarked “trying to make assistive robots with humanoids would be like trying to make autonomous cars by making humanoids sit on the driver seat and drive like a human.” Other panelists expressed serious concerns about the safety of such robots. One panelist said “that would be creepy.” Many of our participants share these views but, unlike the panelists, most of them still think that humanoids in homes are acceptable. Indeed, for low-stakes tasks like folding laundry, humanoids might be acceptable if they work reliably and do not cost more than the alternatives, both of which are still “big if”s.

However, the discrepancy between people who are already using robots in their homes for assistance (the panelists) and our study participants points to some of the limitations of our work. Although our participants are told to imagine having the robot in their home or in the home of a loved one, doing that based on a picture cannot approximate how they would feel with a 6-foot moving metal object standing in a room with them. Similarly, imagining growing old and needing assistance with eating, dressing, or moving around is not the same as already needing assistance with those tasks on a daily basis. In future work, we hope to address these limitations both by conducting our study with participants who have a greater stake in making assistive robots a reality (older adults and people with physical limitations) and by doing in-lab or in-context studies where participants get to experience interactions with different robots.

Fig. 5. Participant preferences from Study 2 (choosing between first choice, second choice, or unacceptable for both humanoid and special purpose robots) across 8 tasks.

Acknowledgments

This project was supported by the National Institute of Biomedical Imaging and Bioengineering (NIBIB) grant #1R01EB034580-01 “NRI: Adaptive Teleoperation Interfaces for In-Home Assistive Robots.”

Footnotes

An example chat transcript for generating the images is available at: https://tinyurl.com/chatgpt-robot-image

References

- [1] Prabhu A, “Figure ai to grab $1.5b funding at $39.5b valuation; eyes to produce 100,000 robots: What about competition?” Tech Funding News, 2025. [Online]. Available: https://techfundingnews.com/

- [2] McKenna G, “There are plausible explanations for the $1.4 billion gap on tesla’s financial statements,” Fortune Finance, March 2025. [Online]. Available: https://fortune.com/2025/03/23/tesla-billion-gone-astray-questions-controls/

- [3] Liu J, “Elon musk thinks robots are a $10 trillion business. he’s got some competition from china,” CNN Business, March 2025. [Online]. Available: https://www.cnn.com/2025/03/25/tech/china-robots-market-competitiveness-intl-hnk/index.html

- [4] “The global market for humanoid robots could reach $38 billion by 2035,” Goldman Sachs - Artificial Intelligence, 2024. [Online]. Available: https://www.goldmansachs.com/insights/articles/the-global-market-for-robots-could-reach-38-billion-by-2035

- [5] Smarr C-A, Mitzner TL, Beer JM, Prakash A, Chen TL, Kemp CC, and Rogers WA, “Domestic robots for older adults: attitudes, preferences, and potential,” International journal of social robotics, vol. 6, pp. 229–247, 2014.

- [6] Bugmann G and Copleston SN, “What can a personal robot do for you?” in Conference Towards Autonomous Robotic Systems. Springer, 2011, pp. 360–371.

- [7] Broadbent E, Stafford R, and MacDonald B, “Acceptance of healthcare robots for the older population: Review and future directions,” International journal of social robotics, vol. 1, no. 4, p. 319, 2009.

- [8] Arevalo Arboleda S, Pascher M, Baumeister A, Klein B, and Gerken J, “Reflecting upon participatory design in human-robot collaboration for people with motor disabilities: challenges and lessons learned from three multiyear projects,” in Pervasive technologies related to assistive environments conference, 2021, pp. 147–155.

- [9] Brose SW, Weber DJ, Salatin BA, Grindle GG, Wang H, Vazquez JJ, and Cooper RA, “The role of assistive robotics in the lives of persons with disability,” American Journal of Physical Medicine & Rehabilitation, vol. 89, no. 6, pp. 509–521, 2010.

- [10] Nanavati A, Ranganeni V, and Cakmak M, “Physically assistive robots: A systematic review of mobile and manipulator robots that physically assist people with disabilities,” Annual Review of Control, Robotics, and Autonomous Systems, vol. 7, 2023.

- [11] Bhattacharjee T, Gordon EK, Scalise R, Cabrera ME, Caspi A, Cakmak M, and Srinivasa SS, “Is more autonomy always better? exploring preferences of users with mobility impairments in robot-assisted feeding,” in ACM/IEEE international conference on human-robot interaction, 2020, pp. 181–190.

- [12] Sørensen L, Johannesen DT, and Johnsen HM, “Humanoid robots for assisting people with physical disabilities in activities of daily living: a scoping review,” Assistive Technology, pp. 1–17, 2024.

- [13] Ranganeni V, Dhat V, Ponto N, and Cakmak M, “Accessteleopkit: A toolkit for creating accessible web-based interfaces for tele-operating an assistive robot,” in ACM Symposium on User Interface Software and Technology, 2024, pp. 1–12.

- [14] Abdi J, Al-Hindawi A, Ng T, and Vizcaychipi MP, “Scoping review on the use of socially assistive robot technology in elderly care,” BMJ open, vol. 8, no. 2, p. e018815, 2018.

- [15] Matarić MJ and Scassellati B, “Socially assistive robotics,” Springer handbook of robotics, pp. 1973–1994, 2016.

- [16] Mathur MB and Reichling DB, “Navigating a social world with robot partners: A quantitative cartography of the uncanny valley,” Cognition, vol. 146, pp. 22–32, 2016.

- [17] Zhang J, Li S, Zhang J-Y, Du F, Qi Y, and Liu X, “A literature review of the research on the uncanny valley,” in Cross-Cultural Design. User Experience of Products, Services, and Intelligent Environments. Springer, 2020, pp. 255–268.

- [18] Kim B, de Visser E, and Phillips E, “Two uncanny valleys: Reevaluating the uncanny valley across the full spectrum of real-world human-like robots,” Computers in Human Behavior, vol. 135, p. 107340, 2022.

- [19] Kanda T, Miyashita T, Osada T, Haikawa Y, and Ishiguro H, “Analysis of humanoid appearances in human–robot interaction,” IEEE transactions on robotics, vol. 24, no. 3, pp. 725–735, 2008.

- [20] Manzi F, Massaro D, Di Lernia D, Maggioni MA, Riva G, and Marchetti A, “Robots are not all the same: young adults’ expectations, attitudes, and mental attribution to two humanoid social robots,” Cyberpsychology, Behavior, and Social Networking, vol. 24, no. 5, pp. 307–314, 2021.

- [21] Haring KS, Silvera-Tawil D, Takahashi T, Velonaki M, and Watanabe K, “Perception of a humanoid robot: a cross-cultural comparison,” in IEEE International symposium on robot and human interactive communication (RO-MAN). IEEE, 2015, pp. 821–826.

- [22] Andrighetto L, Sacino A, Cocchella F, Rea F, and Sciutti A, “Cognitive processing of humanoid robot faces: Empirical evidence and factors influencing anthropomorphism,” International Journal of Social Robotics, pp. 1–15, 2025.

- [23] Guizzo E, Klett R, Vrielink E, Ackerman E, Strickland E, Kumagai J, Montgomery M, and Goldstein H, “Where is my robot?” February 2025. [Online]. Available: https://spectrum.ieee.org/ai-robots

- [24] Aymerich-Franch L and Gómez E, “Public perception of socially assistive robots for healthcare in the eu: A large-scale survey,” Computers in Human Behavior Reports, vol. 15, p. 100465, 2024.

- [25] Koverola M, Kunnari A, Sundvall J, and Laakasuo M, “General attitudes towards robots scale (gators): A new instrument for social surveys,” International Journal of Social Robotics, vol. 14, no. 7, pp. 1559–1581, 2022.

- [26] Maguire M, “Doing a thematic analysis: A practical, step-by-step guide for learning and teaching scholars,” 2017.

- [27] “Prolific - online participant recruitment for academic research,” Prolific, 2025. [Online]. Available: https://www.prolific.com