Overview

In monkeys, foraging strategies depend not only on a context established by spatial or symbolic cues, but also on the relations among cues. Genovesio et al. recorded the activity of prefrontal cortex neurons while monkeys chose a strategy based on the relation between consecutive symbolic cues. For the same cues and actions, the monkeys also learned fixed responses to the same symbols. Many neurons had activity selective for a given strategy, others for whether the monkeys’ response choice depended on a symbol or the relation between symbols. These findings indicate that the primate prefrontal cortex contributes to implementing abstract strategies.

Keywords: frontal lobe, behavioral neurophysiology, cognition, problem solving, rules

Summary

Many monkeys adopt abstract response strategies as they learn to map visual symbols to responses by trial-and-error. According to the repeat-stay strategy, if a symbol repeats from a previous, successful trial, the monkeys should stay with their most recent response choice. According to the change-shift strategy, if the symbol changes, the monkeys should shift to a different choice. We recorded the activity of prefrontal cortex neurons while monkeys chose responses according to these two strategies. Many neurons had activity selective for the strategy used. In a subsequent block of trials, the monkeys learned fixed stimulus–response mappings with the same stimuli. Some neurons had activity selective for choosing responses based on fixed mappings, others for choosing based on abstract strategies. These findings indicate that the prefrontal cortex contributes to the implementation of the abstract response strategies that monkeys use during trial-and-error learning.

Introduction

In Words and Rules, Pinker (1999) explores the dichotomy between rote memorization and knowledge that depends on rules. Memorization plays a central role in associationist thought, including animal learning theory, computations involving neural networks and, most generally, philosophical empiricism. Rules, strategies and other abstractions figure prominently in cognitive neuroscience, computations involving symbols and philosophical rationalism. The interaction of—and the tension between—exemplar-based, empirical knowledge and abstract, theoretical knowledge plays a central role in cognitive creativity.

In this neurophysiological study, monkeys made response choices based on both exemplars and abstractions. At the exemplar level, a symbolic visual stimulus appeared on each trial and the monkeys chose a response based on that symbol. Each of three symbols instructed a different response, and the monkeys learned each of these arbitrary, stimulus–response mappings by trial-and-error. They could not, however, apply this knowledge to novel stimuli. At the abstract level, the monkeys learned two response strategies—called repeat-stay and change-shift—and they could apply these strategies to novel stimuli. In previous studies (Bussey et al., 2001; Wise and Murray, 1999), some monkeys have spontaneously adopted these strategies as they learned new stimulus–response mappings, nearly doubling their reward rate in the earliest phases of learning. Other monkeys have adopted one or neither strategy.

Parts of the prefrontal cortex (PF) play a necessary role in the implementation of these and other strategies (Bussey et al., 2001), yet little is known about their neural basis. PF has been viewed as a neural substrate for rules and strategies in humans (Brass et al., 2003; Bunge, 2005; Bunge et al., 2003; Owen et al., 1990; 1996; Robbins, 2000; Rushworth et al., 2002) and monkeys (Collins et al., 1998; Gaffan et al., 2002), and previous neurophysiological studies of PF have found rule- and strategy-related activity (Asaad et al., 2000; Barraclough et al., 2004; Fuster et al., 2000; Hoshi et al., 2000; Wallis and Miller, 2003b; White and Wise, 1999). However, there have been no neurophysiological studies of the strategies that monkeys spontaneously adopt as they solve a cognitive problem such as learning arbitrary pairings between symbols and responses. The present study did so in order to test the hypothesis that neurons in PF play an important role in implementing abstract response strategies as well as exemplar-based responses.

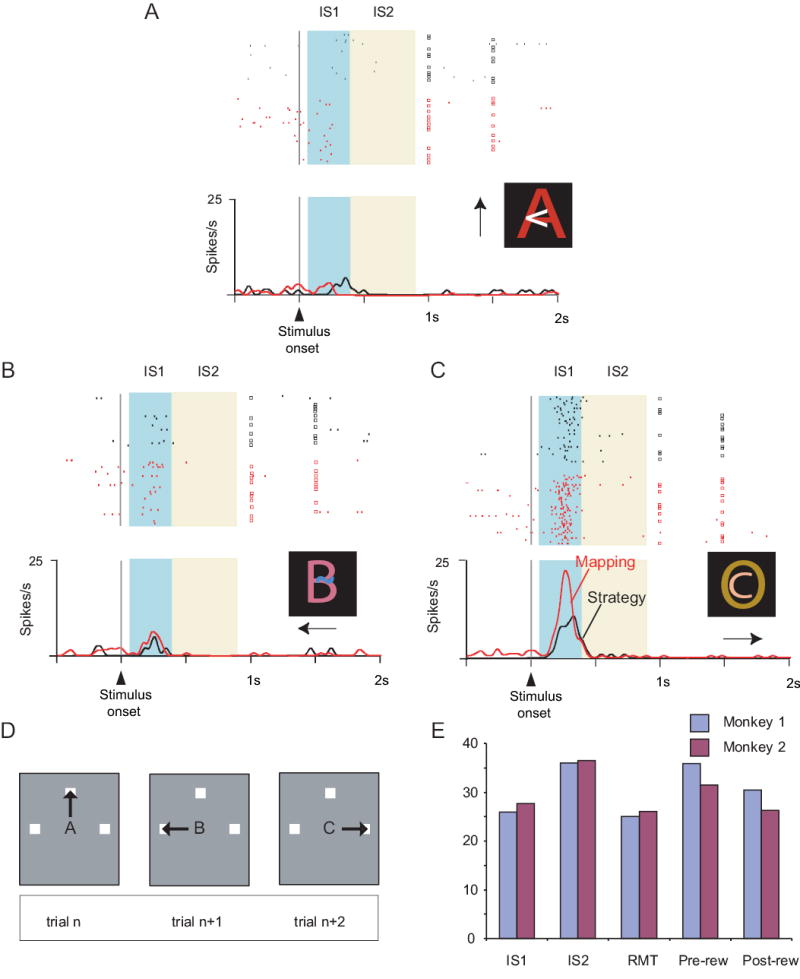

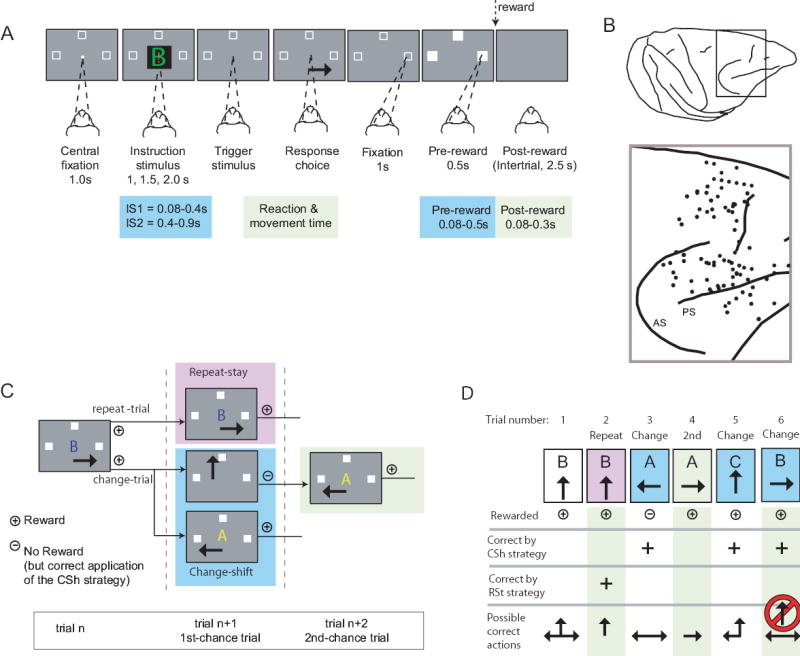

Fig. 1A shows the sequence and timing of events for each trial. As the monkeys fixated a central light spot, a visual instruction stimulus (IS) appeared at that location and three potential response targets appeared elsewhere: left, up, and right from center. One IS was selected on each trial from a set of three, and those ISs differed from each other in colors and shapes. The disappearance of the IS served as the signal for the monkeys to choose a response target by making a saccade to it.

Fig. 1.

A. Sequence of task events. Gray rectangles represent the video screen, white squares show the three potential response targets (not to scale), the white dot illustrates the fixation point, and the converging dashed lines indicate gaze angle. Disappearance of the instruction stimulus was the trigger stimulus, after which the monkeys made a saccade (solid arrow) and maintained fixation at the chosen target. The target squares then filled with white, and reinforcement (dotted arrow) followed, when appropriate. B. Penetration sites. Composite from both monkeys, relative to sulcal landmarks. C. Strategy task. Responses shown by the thick arrows in the middle column represent correct applications of the repeat-stay (pink background) or change-shift (blue background) strategies. + indicates a rewarded response; − an unrewarded response. If unrewarded, the monkeys then got a second chance to respond, and received reinforcement for choosing the saccade made least recently (right). D. Example sequence for the strategy task. The red circle and slash indicate a disallowed response.

The monkeys performed four tasks having this sequence of events, in separate blocks of ~100 trials each. While recording from a given group of neurons, we usually began with the standard version of the strategy task (Fig. 1C), using novel ISs, followed by three control tasks: a mapping task that used the same ISs, a high-reward version of the strategy task, also using the same ISs, and a mapping task that used highly familiar ISs, called the familiar mapping task.

As two rhesus monkeys performed these tasks, we sampled the activity of single neurons in both the dorsolateral part of PF (PFdl) and a dorsomedial part (PFdm) (Fig. 1B). The PFdl population was largely confined to area 46, in the caudal half of the principal sulcus. PFdm spanned three cytoarchitectonic regions: homotypical area 9, dysgranular area 8, and a rostral part of agranular area 6, which is sometimes called pre-PMd and is thought to be more closely allied with PF than with motor cortex (Picard and Strick, 2001).

Results

Strategy task: Standard version

In the strategy task (Fig. 1C), the monkeys responded to a set of three novel ISs according to the repeat-stay and change-shift strategies. These strategies depended on the fact that a second-chance procedure, explained below, ensured that the monkeys ended a series of trials with a rewarded response, in effect “setting up” the next trial. On that next trial, if the IS was the same as that on the previous trial (called a repeat trial), then the monkeys should stay with their previous response to receive a reward. If the IS differed from that on the previous trial (called a change trial), then the monkeys should shift from their previous response. Choice of one of the two potential shift responses, randomly selected, would be rewarded. If a monkey correctly shifted its response, but failed to receive a reward, the same IS was presented on a series of second-chance trials until it chose the remaining shift response, which was rewarded (Fig. 1C).

By design, the monkeys could not learn any fixed stimulus–response mappings in the strategy task, and Fig. 1D shows why. In that example, after stimulus B on trial 1, the top choice was rewarded. When stimulus B appeared on trial 2, it was a repeat trial and the top choice was again rewarded. When stimulus B appeared on trial 6, however, it was a change trial, and the top choice was precluded because it had been chosen on the previous trial.
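The contingencies described above can be summarized in a short sketch. It is only an illustration of the rule as stated in the text, not the task-control software; the function names and the stimulus label "A" are hypothetical.

```python
# Illustrative sketch of the repeat-stay / change-shift contingencies.
# Function names and stimulus labels are hypothetical.
TARGETS = ("left", "top", "right")


def correct_choices(prev_is, prev_response, current_is):
    """Return the responses that correctly apply the two strategies."""
    if current_is == prev_is:
        # Repeat trial: stay with the previous, rewarded response.
        return {prev_response}
    # Change trial: shift to either of the other two targets.
    return set(TARGETS) - {prev_response}


def second_chance_target(shift_tried, prev_response):
    """After a correct but unrewarded shift, only the remaining target is rewarded."""
    remaining = set(TARGETS) - {shift_tried, prev_response}
    return remaining.pop()


# Stimulus B after a rewarded "top" response to B: repeat trial.
print(correct_choices("B", "top", "B"))
# Stimulus B after a rewarded "top" response to another stimulus: change trial.
print(correct_choices("A", "top", "B"))
```

Because the same stimulus calls for "top" on a repeat trial and excludes "top" on a change trial, no fixed stimulus–response mapping can solve the task.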

Our analysis focused on task-related neurons, defined as those showing a significant difference in discharge rate, compared to a reference period, in any of five task periods: the IS1, IS2, reaction and movement time (RMT), pre-reward, and post-reward periods (see Experimental Procedures and Fig. 1A). Suppl. Table 1 presents the numbers of task-related cells by task, monkey, and task period.

Supplementary Table 1.

The number of task-related neurons by task period for each monkey and task (Mann-Whitney U Test), with the percentage of the cells tested in parentheses. The sample size (N) was the same for all task periods in a row, except for the post-reward period because some cells lacked a sufficient number of rewarded trials for analysis. Abbreviation: Mk, monkey. Each cell counts no more than once per task period.

| Task | Mk | IS1 | IS2 | RMT | Pre-reward | Post-reward | N |
|---|---|---|---|---|---|---|---|
| Task related in strategy task* | 1 | 428 (61%) | 353 (50%) | 315 (45%) | 391 (56%) | 412 (59%) | 700 |
| Task related in strategy task* | 2 | 479 (66%) | 413 (56%) | 450 (62%) | 434 (59%) | 455 (62%) | 731 |
| Task related in strategy task** | 1 | 88 (23%) | 92 (24%) | 99 (26%) | 100 (26%) | 85/325 (26%) | 387 |
| Task related in strategy task** | 2 | 121 (25%) | 96 (20%) | 103 (21%) | 106 (22%) | 84/452 (18%) | 487 |
| Task related in mapping task | 1 | 95 (25%) | 84 (22%) | 98 (25%) | 93 (24%) | 86/325 (26%) | 387 |
| Task related in mapping task | 2 | 143 (29%) | 128 (26%) | 124 (25%) | 132 (27%) | 105/452 (23%) | 487 |

\* Recorded in strategy task only

\*\* Recorded in both the strategy and mapping tasks

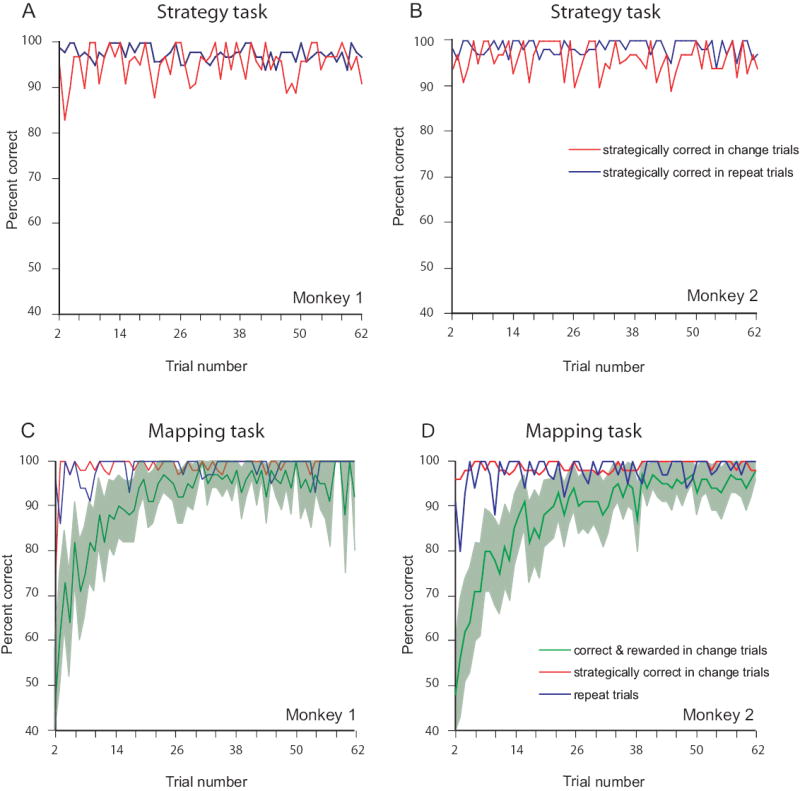

Overall, both monkeys performed the strategy task at 96% correct (Fig. 2A and B). Suppl. Table 2 shows an analysis of error types, and Suppl. Table 3 gives the reaction times, which did not differ significantly between repeat and change trials for either monkey. Suppl. Table 4 shows that the saccades were highly accurate in both trial types.

Fig. 2.

Performance curves. A, B. Strategy task. C, D. Mapping task. A, C. Monkey 1. B, D. Monkey 2. The percentage of correct responses, averaged over ~130 problem sets, as a function of trial number. Blue curves show performance on repeat trials. Green curves show percentage of rewarded saccades, change trials only. Background shading indicates 95% confidence limits. Red curves show percentage of saccades that were chosen according to the change-shift strategy, change trials only.

Supplementary Table 2.

Types of errors by task and by monkey. Correct: overall percentage of correct application of the repeat-stay and change-shift strategies. Rewarded: percent of all trials resulting in reinforcement. CSh: failure to shift to a different action after the IS changed from that on the previous trial. RSt: failure to stay with a previous, rewarded action, even though the IS repeated from the previous trial. RE: repetition of one of the two most recently executed actions on second-chance trials, after a correct, but unrewarded application of the change-shift strategy.

In the strategy task, CSh errors differed significantly from both of the other types (χ2=600, d.f.=2, p<0.001; post hoc multiple χ2 tests with Bonferroni correction). In the mapping task, all error types differed significantly from each other (χ2=645, d.f.=2, p<0.001). Note that, because there were two correct actions according to the change-shift strategy but only one according to repeat-stay, a random selection of response would lead to a 33% error rate on change trials versus 67% on repeat trials. This fact could, in part, account for the smaller number of errors on change trials (CSh errors) than on repeat trials (RSt errors). In both tasks, the primary cause of RE errors was choosing the action made two trials back, rather than excluding it as required. This finding shows that the monkeys did not confuse the second-chance trials with repeat trials, although the IS repeated from the previous trial in both. A lapse in the short-term memory of which action occurred two trials back probably accounts for most RE errors in the strategy task.

| Task | Monkey | Correct by strategy | Rewarded | CSh errors | RSt errors | RE errors |
|---|---|---|---|---|---|---|
| Strategy task | 1 | 96.3% | 48.2% | 1.1% | 3.2% | 6.5% |
| Strategy task | 2 | 96.2% | 48.1% | 1.7% | 4.0% | 7.7% |
| Mapping task | 1 | 99.0% | 94.0% | 0.6% | 1.2% | 6.3% |
| Mapping task | 2 | 98.7% | 94.4% | 0.4% | 1.5% | 12.7% |
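The chance error rates quoted in the legend of Suppl. Table 2 follow from counting the acceptable targets on each trial type. The sketch below assumes a uniformly random choice among the three targets; the function name is hypothetical.

```python
from fractions import Fraction

TARGETS = ("left", "top", "right")


def chance_error_rate(n_correct, n_targets=len(TARGETS)):
    """Probability that a uniformly random choice misses every correct target."""
    return 1 - Fraction(n_correct, n_targets)


repeat_error = chance_error_rate(1)  # one correct target under repeat-stay
change_error = chance_error_rate(2)  # two acceptable targets under change-shift
print(repeat_error, change_error)  # prints: 2/3 1/3
```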

Supplementary Table 3.

Reaction time by trial type. Abbreviations: Ch, change trials; Rpt, repeat trials; 2nd, second-chance trials.

| Task | Monkey | Change trials | Repeat trials | Second-chance trials | Statistically significant difference* |
|---|---|---|---|---|---|
| Strategy task | 1 | 285 ± 47 | 288 ± 57 | 295 ± 54 | 2nd ≠ (Ch & Rpt) |
| Strategy task | 2 | 279 ± 93 | 281 ± 96 | 265 ± 86 | 2nd ≠ (Ch & Rpt) |
| Mapping task | 1 | 295 ± 74 | 290 ± 46 | 277 ± 48 | 2nd ≠ (Ch & Rpt) |
| Mapping task | 2 | 278 ± 76 | 281 ± 71 | 267 ± 78 | 2nd ≠ (Ch & Rpt) |
| Familiar mapping task | 1 | 280 ± 83 | 280 ± 43 | rare | None |
| Familiar mapping task | 2 | 275 ± 75 | 278 ± 70 | rare | None |

\* Mann-Whitney U-test (p < 0.05)

Supplementary Table 4.

Saccade error, by task and strategy for top, right, and left targets during the strategy task, in degrees of visual angle, ± S.D. Abbreviations: Mk, Monkey; x, horizontal eye position; y, vertical eye position.

| Mk | trial type | top (x) | top (y) | right (x) | right (y) | left (x) | left (y) |
|---|---|---|---|---|---|---|---|
| 1 | repeat | −0.24 ± 2.01 | 0.18 ± 1.59 | −0.31 ± 1.74 | −0.13 ± 1.52 | −0.13 ± 2.21 | 0.21 ± 1.70 |
| 1 | change | −1.26 ± 2.29 | 0.33 ± 1.59 | −0.31 ± 1.85 | 0.22 ± 1.39 | −0.26 ± 2.16 | −0.05 ± 1.59 |
| 2 | repeat | −0.16 ± 4.09 | 0.03 ± 3.59 | −0.26 ± 3.82 | 0.28 ± 3.70 | −0.10 ± 3.86 | −0.02 ± 3.71 |
| 2 | change | −0.10 ± 4.08 | 0.00 ± 3.08 | −0.01 ± 3.63 | 0.37 ± 3.67 | −0.50 ± 3.94 | −0.02 ± 3.81 |

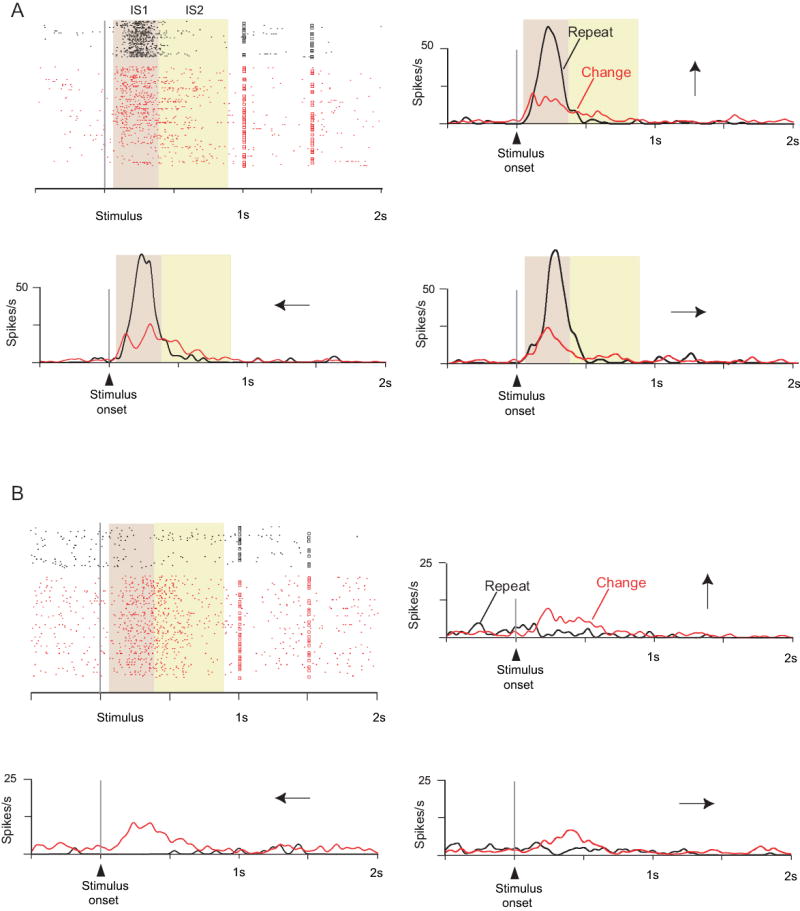

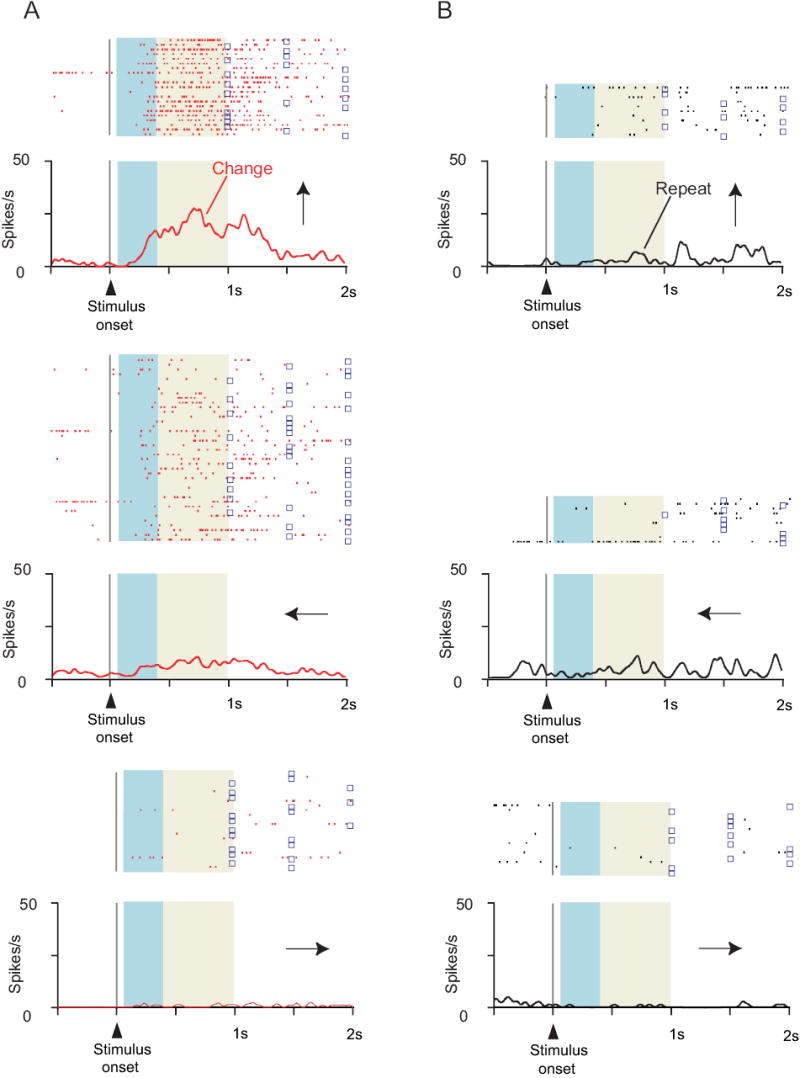

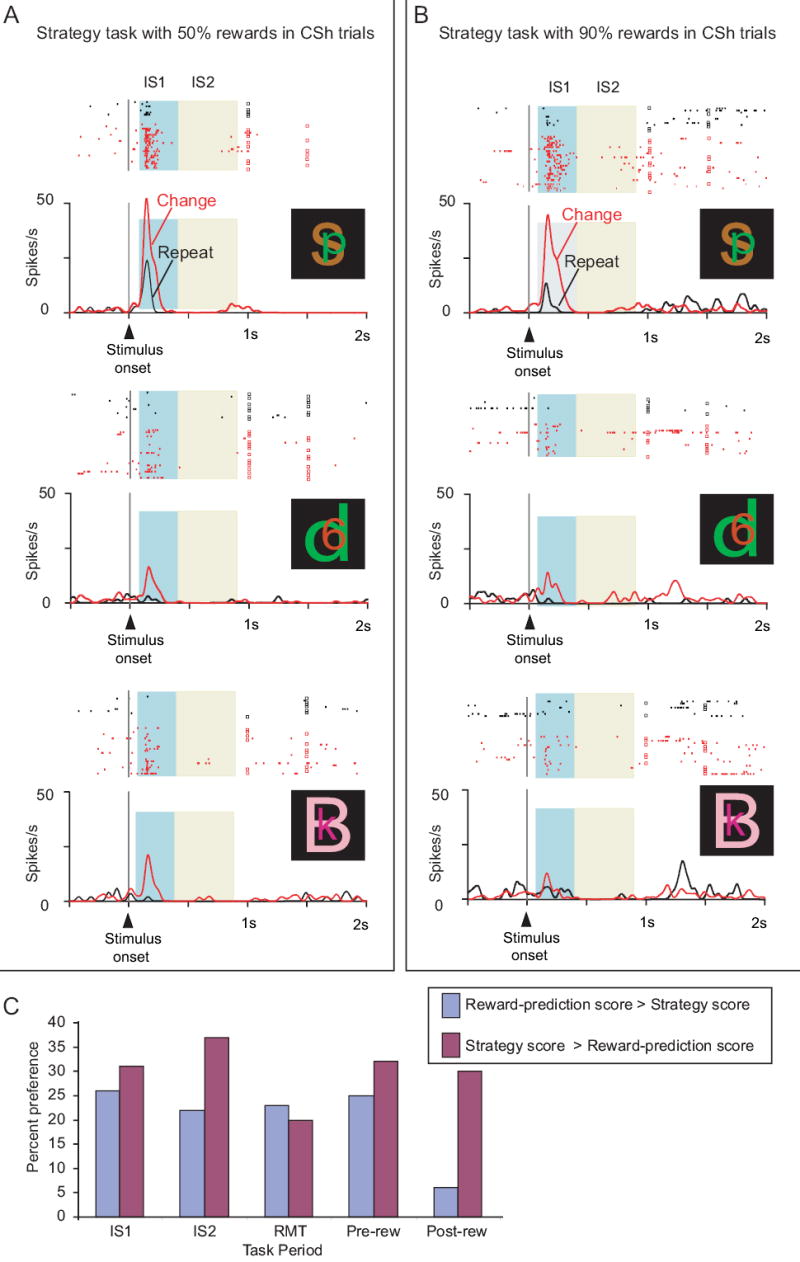

We examined whether activity differed significantly according to the strategy used to choose a response: repeat-stay vs. change-shift. Fig. 3 shows the activity of two PF cells during the strategy task. After IS presentation, the cell illustrated in Fig. 3A increased its activity for both strategies at first, but after ~125 ms the activity during repeat trials strongly exceeded that during change trials. This activity decayed rapidly, and in the IS2 period the cell’s preference switched from a strong preference for the repeat-stay strategy to a weak one for the change-shift strategy. The neuron illustrated in Fig. 3B showed a preference for the change-shift strategy in both the IS1 and IS2 periods, which began ~120 ms after the appearance of the IS, regardless of which IS appeared on that trial.

Fig. 3.

Two cells with strategy effects: A from the rostral part of PFdm, B from PFdl. The saccade directions are shown by the arrows. The squares on each line of the raster show the time that the trigger stimulus occurred; each dot corresponds to the time of a neuronal action potential. The background shading identifies the task periods. The cell in A had much greater activity for repeat trials (black) than for change trials (red) in the IS1 period, regardless of stimulus or saccade direction. The cell in B had the opposite preference, and also showed some preference for responses to the left.

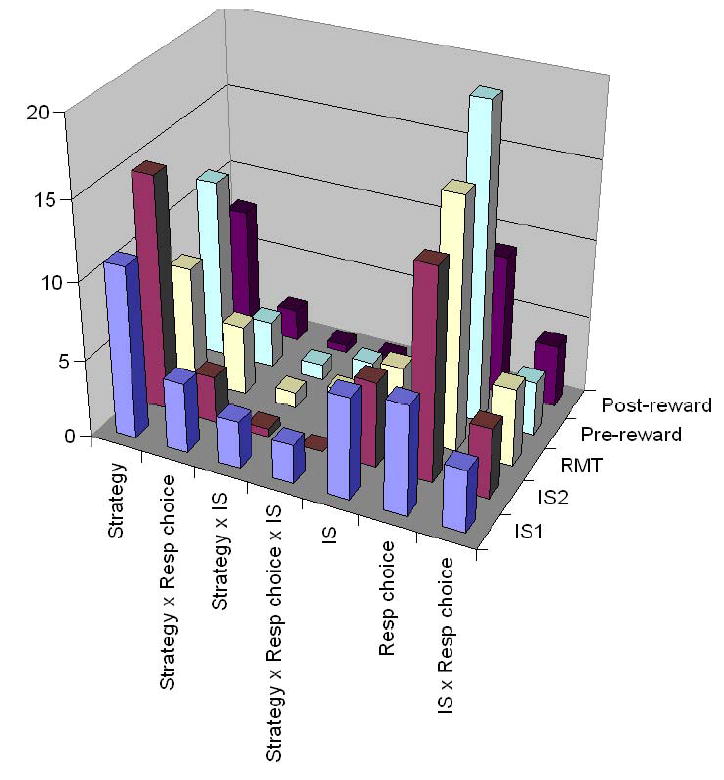

When statistically significant, we called such activity contrasts strategy effects. Table 1 gives the numbers of cells with significant strategy effects, based on analysis of variance (ANOVA, α=0.05). The ANOVA was performed on neurons showing statistically significant task-related activity, and used three factors: Strategy, IS, and Response choice. In the IS1 period, for example, 428 of 700 neurons monitored during the strategy task were task related in Monkey 1, as were 479 of 731 neurons in Monkey 2. Of these 907 task-related neurons, there was a main strategy effect in 145 cells (16%) and an interactive effect involving strategy in another 227 cells (25%), for a total of 41%. Table 1 presents analogous data for the other periods. Suppl. Table 5 and Suppl. Fig. 1 show a breakdown by monkey with the specific interactive effects enumerated.

Table 1.

Strategy effects. Cells showing a significant effect of strategy (three-way ANOVA, p<0.05), IS, or choice (i.e., saccade direction). Both monkeys, combined. Task periods are defined in Experimental Procedures and illustrated in Fig. 1A. Top three rows: Numbers (and percentages of N, bottom row) of neurons with a significant main effect of each row’s factor. Fourth row: numbers (and percentages) of neurons with either main or interactive effects involving strategy. Suppl. Table 5 shows these data by monkey and the specific interactive terms, and Suppl. Fig. 1 illustrates these data.

| Factors | IS1 | IS2 | RMT | Pre-reward | Post-reward |
|---|---|---|---|---|---|
| Strategy | 145 (16%) | 152 (20%) | 89 (12%) | 136 (16%) | 113 (13%) |
| IS | 105 (12%) | 82 (11%) | 72 (9%) | 53 (6%) | 56 (6%) |
| Choice | 156 (17%) | 141 (18%) | 129 (17%) | 209 (25%) | 122 (14%) |
| Strategy, incl. interactive | 372 (41%) | 288 (38%) | 263 (34%) | 309 (37%) | 264 (30%) |
| N | 907 | 766 | 765 | 825 | 867 |

Supplementary Table 5.

Strategy effects, by monkey and task period (ANOVA and Mann-Whitney U Test). Cells monitored in the strategy task. Abbreviation: Resp, Response.

| Monkey | Factor | IS1 | IS2 | RMT | Pre-reward | Post-reward | Total cells* |
|---|---|---|---|---|---|---|---|
| 1 | Strategy | 68 (16%) | 59 (17%) | 56 (18%) | 69 (18%) | 46 (11%) | 205 (32%) |
| 1 | Strategy × Resp choice | 49 (11%) | 27 (8%) | 27 (9%) | 27 (7%) | 19 (5%) | 130 (20%) |
| 1 | Strategy × IS | 38 (9%) | 15 (4%) | 19 (6%) | 27 (7%) | 23 (6%) | 110 (17%) |
| 1 | Strategy × Resp choice × IS | 28 (7%) | 11 (3%) | 20 (6%) | 29 (7%) | 20 (5%) | 102 (16%) |
| 1 | IS | 42 (10%) | 36 (10%) | 27 (9%) | 33 (8%) | 29 (7%) | 142 (22%) |
| 1 | Resp choice | 48 (11%) | 60 (17%) | 86 (27%) | 96 (25%) | 59 (14%) | 243 (38%) |
| 1 | IS × Resp choice | 37 (9%) | 39 (11%) | 37 (12%) | 36 (9%) | 39 (9%) | 157 (25%) |
| 1 | N | 428 | 353 | 315 | 391 | 412 | 639 |
| 2 | Strategy | 77 (16%) | 93 (23%) | 33 (7%) | 67 (15%) | 67 (15%) | 245 (36%) |
| 2 | Strategy × Resp choice | 41 (8%) | 31 (8%) | 43 (10%) | 39 (9%) | 40 (9%) | 173 (25%) |
| 2 | Strategy × IS | 35 (7%) | 29 (7%) | 28 (6%) | 20 (5%) | 22 (5%) | 112 (16%) |
| 2 | Strategy × Resp choice × IS | 36 (8%) | 23 (6%) | 37 (8%) | 31 (7%) | 27 (6%) | 138 (20%) |
| 2 | IS | 63 (13%) | 46 (11%) | 45 (10%) | 20 (5%) | 27 (6%) | 158 (23%) |
| 2 | Resp choice | 60 (13%) | 81 (20%) | 67 (15%) | 113 (26%) | 63 (14%) | 267 (39%) |
| 2 | IS × Resp choice | 41 (9%) | 31 (8%) | 34 (8%) | 33 (8%) | 40 (9%) | 157 (23%) |
| 2 | N | 479 | 413 | 450 | 434 | 455 | 680 |

\* Each cell counts no more than once in this column, as in the others.

Supplemental Figure 1.

The results from Suppl. Table 5 as a bar graph, both monkeys combined. Abbreviations: IS, instruction stimulus; Resp, response; RMT, reaction- and movement-time period.

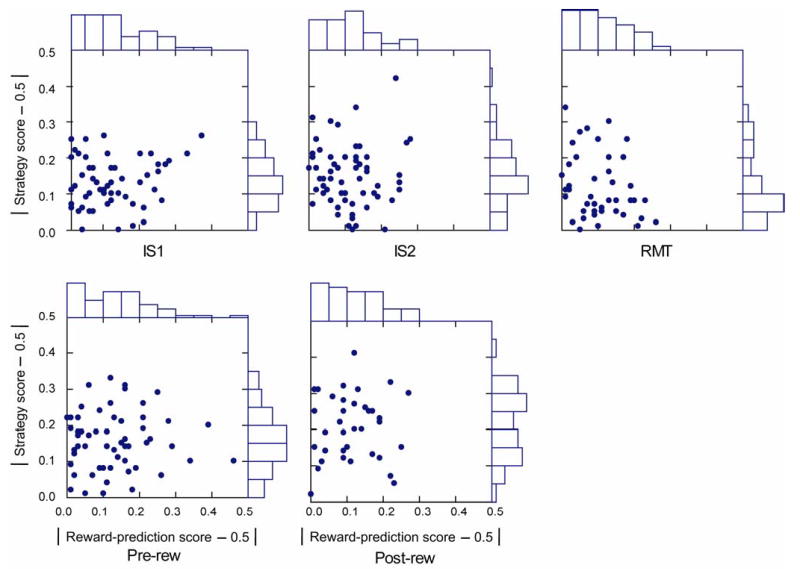

Reward-prediction score versus strategy score

To further evaluate the extent to which the cell’s activity reflected reward prediction or anticipation, as opposed to the repeat-stay and change-shift strategies, we calculated two scores, which compared each cell’s activity across several tasks: a reward-prediction score and a strategy score. In each calculation, all activity was normalized by the maximal activity for that cell and task period. Thus, normalized activity ranged between 0 and 1, and the activity for several trial types (repeat trials, change trials, mapping trials, and second-chance trials) contributed a value in that range.

For the reward-prediction score, a model assumed either a strong correlation of activity with approximate reward rate or a strong anti-correlation. Low reward rates were those of approximately 50%; high ones were those of approximately 90%. High-reward situations were second-chance trials in the standard version of the strategy task, trials late in the mapping task, and either repeat or change trials in the high-reward version of the strategy task. Low-reward situations included those early in the mapping task for change trials and all change trials in the standard version of the strategy task. A score near 0.5 indicated that the cell’s activity did not correspond to the prospect for reward, and the extent of deviation of that score towards 0 or 1 indicated a progressively larger degree of correspondence to the reward-prediction model (either in correlation or in anti-correlation with the probability of reward).

Analogously, for the strategy score, a different model assumed that the change-shift strategy would be associated with high activity levels on change trials and low activity on repeat trials. For this model, too, a score of 0.5 indicated that the cell’s activity did not accord with either the repeat-stay strategy or the change-shift strategy, and the extent of deviation of that score towards 0 or 1 indicated a progressively larger degree of correspondence to the strategy model (either in correlation or in anti-correlation with the expectation for change-shift preferences, the anti-correlation reflecting repeat-stay preferences).

To identify a preference for the strategy score versus the reward-prediction score, we compared the absolute deviations of the two scores from 0.5. Fig. 6C and Suppl. Fig. 2 show that the strategy score predominated over the reward-prediction score. This finding was consistent in both monkeys and across task periods, with the exception of the post-reward period, in which the number of cells with preferences for reward prediction decreased dramatically (Fig. 6C). ANOVA (α=0.05) revealed no significant effects of Monkey for either the strategy score or the reward-prediction score (F1,1=2.2, p=0.14 for the strategy score; F1,1=1.2, p=0.28 for the reward-prediction score). Accordingly, data from both monkeys were combined in Suppl. Fig. 2.
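The comparison of the two scores can be sketched as follows. The sketch covers only the final step, comparing each score's deviation from the neutral value of 0.5; how the scores themselves were computed is not reproduced here, and the function name and the handling of ties are assumptions made for illustration.

```python
def dominant_score(strategy_score, reward_score, neutral=0.5):
    """Label a cell by whichever score deviates more from the neutral value.

    Both scores are assumed to lie between 0 and 1. Tie handling is an
    assumption; the text does not say how ties were treated.
    """
    strategy_dev = abs(strategy_score - neutral)
    reward_dev = abs(reward_score - neutral)
    if strategy_dev > reward_dev:
        return "strategy"
    if reward_dev > strategy_dev:
        return "reward"
    return "tie"


# A score near 0 counts as strongly as one near 1 (anti-correlation with the model).
print(dominant_score(0.9, 0.6))
print(dominant_score(0.45, 0.1))
```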

Almost half (46%) of the cells with a strategy effect also showed either a main effect of Response choice, a Strategy by Response choice interactive effect, or both. This finding varied little by task period or by monkey: 43% (N=145) in IS1, 45% (N=152) in IS2, 52% (N=89) in RMT, 51% (N=136) in pre-reward and 42% (N=113) in post-reward. This finding shows that the strategy effects do not result solely from high-level visual processing, such as the detection of stimulus change or repetition, and Fig. 4 illustrates an example. This PF cell showed a strong selectivity for change trials, but preferentially for choice of the top target. Note also that it had response selectivity only for change trials. Similarly, nearly one-third of the neurons with strategy effects showed response selectivity only for repeat trials or only for change trials (one-way ANOVA, Bonferroni-corrected α=0.025; see Suppl. Table 6 for each task period).

Fig. 4.

Cell showing that the strategy effect does not simply reflect detection of whether the IS repeats from trial-to-trial. Format as in Fig. 3. A. Change trials. B. Repeat trials. Note selectivity for change trials, but only for upward (top row) and (to a lesser extent) leftward responses (middle row).

Supplementary Table 6.

Numbers of cells with and without response selectivity, among cells with strategy effects (with percentages of the tested sample, N, in parentheses). One-way ANOVA with factor Response choice, separately for repeat- and change-trials. Only cases with at least 4 trials in each direction were included (Bonferroni corrected α = 0.025). Abbreviations: IS1, early instruction-stimulus period; IS2, late instruction-stimulus period; RMT, reaction- and movement-time period.

| Selectivity | IS1 | IS2 | RMT | Pre-reward | Post-reward |

|---|---|---|---|---|---|

| Response-choice selective in only one strategy | 38 (32%) | 37 (29%) | 21 (26%) | 30 (28%) | 14 (20%) |

| Response-choice selective in both strategies | 19 (16%) | 21 (16%) | 8 (10%) | 10 (9%) | 2 (3%) |

| Not response-choice selective | 62 (52%) | 71 (55%) | 52 (64%) | 66 (62%) | 54 (77%) |

| N | 119 | 129 | 81 | 106 | 70 |

Of the remaining cells (54%), which showed a strategy effect but were not selective for the response, only a few showed selectivity for the IS type: 20% (N=91) in IS1, 7% (N=74) in IS2, 15% (N=45) in RMT, 3% (N=62) in pre-reward and 7% (N=67) in post-reward. Thus, stimulus coding was not a prominent feature of the present results.

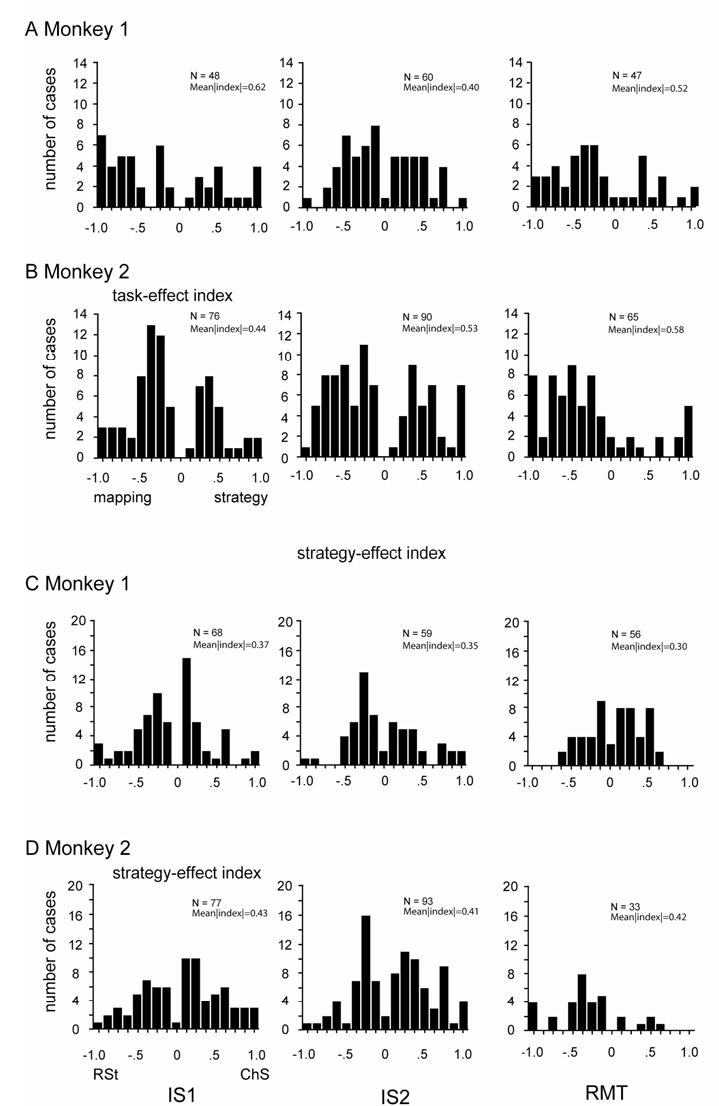

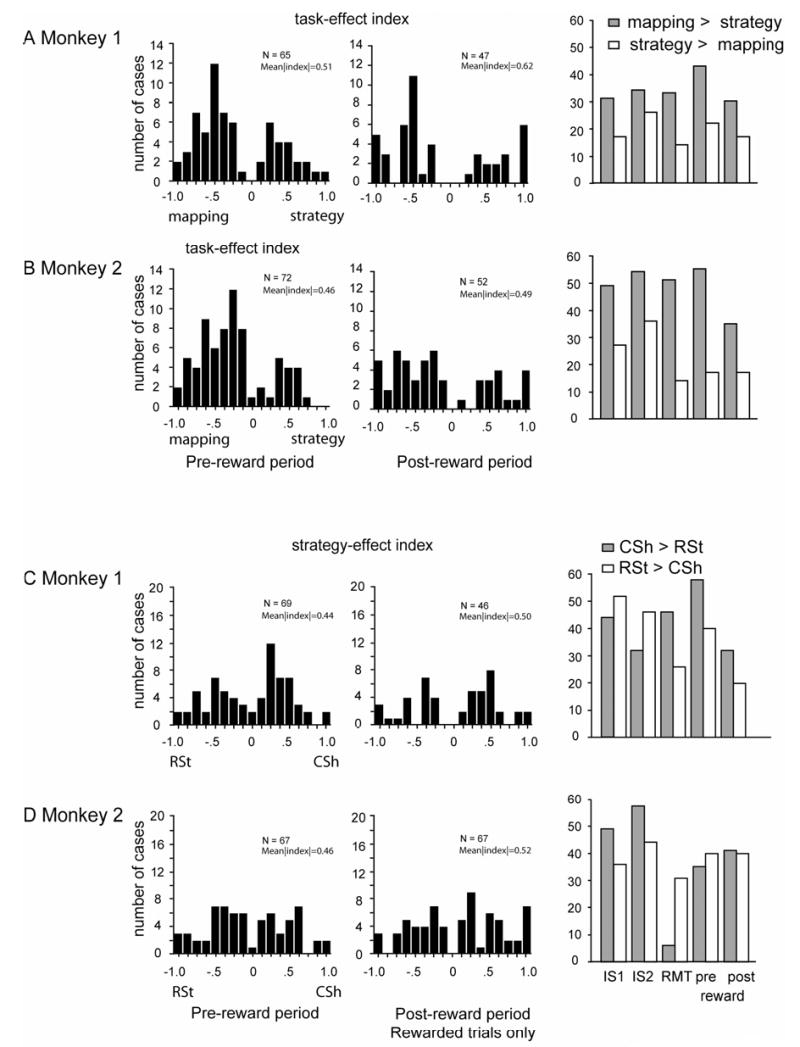

After establishing the significance of the strategy effect by ANOVA, we assessed its magnitude with a post-hoc analysis called the strategy-effect index (see Experimental Procedures). An Istrat of 0 indicated no activity difference between the strategies. In the IS period (IS1 and IS2, combined), the mean |Istrat| was 0.31 ± 0.22 (SD) for Monkey 1 and 0.38 ± 0.25 for Monkey 2. These values corresponded to an approximately two-fold difference in activity, on average, for the preferred strategy over the nonpreferred one. Suppl. Figs. 3C, D and 4C, D show the distributions of Istrat in order to indicate the overall magnitude of the strategy effect. Approximately one half of the cells (50% and 57% in Monkeys 1 and 2, respectively) exceeded a two-fold activity difference between strategies (|Istrat|=0.33) and approximately one quarter (19% and 28% in Monkeys 1 and 2, respectively) exceeded a four-fold difference (|Istrat|=0.6). When the designation of change- or repeat trial was randomly shuffled 100 times, a mean |Istrat| of 0.11 was obtained for both monkeys, corresponding to a ratio of only 1.25:1.
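The correspondence between |Istrat| and fold differences in activity can be checked with a short calculation. The index is defined in Experimental Procedures, which is not reproduced here; the sketch assumes a contrast of the form (a − b)/(a + b), an assumption that is consistent with the values quoted above (0.33 for two-fold, 0.6 for four-fold, and 0.11 for 1.25:1). The function names are hypothetical.

```python
def strategy_index(rate_a, rate_b):
    """Assumed contrast index between mean rates for the two strategies."""
    return (rate_a - rate_b) / (rate_a + rate_b)


def ratio_from_index(index):
    """Fold difference (preferred : nonpreferred) implied by a contrast index."""
    magnitude = abs(index)
    return (1 + magnitude) / (1 - magnitude)


print(round(ratio_from_index(1 / 3), 2))  # two-fold difference
print(round(ratio_from_index(0.6), 2))    # four-fold difference
print(round(ratio_from_index(0.11), 2))   # shuffled control
```

Under the same assumption, the mean |Istrat| values of 0.31 and 0.38 correspond to ratios of about 1.9:1 and 2.2:1.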

Supplemental Figure 3.

Distribution of Itask across the population of cells for each period. A, B. Frequency distributions for the task-effect index (Itask), for each of three task periods, labeled at bottom. C, D. Frequency distributions for strategy-effect index (Istrat). Abbreviations: IS1, early instruction-stimulus period; IS2, late instruction-stimulus period; RMT, reaction- and movement-time period. Note that the neuronal subpopulation can differ across periods.

Supplemental Figure 4.

Continuation of Suppl. Fig. 3 for pre-reward and post-reward periods. The right plot shows the relative proportion of cases with a preference for the change-shift strategy (CSh, gray bars) and the repeat-stay strategy (RSt, white bars).

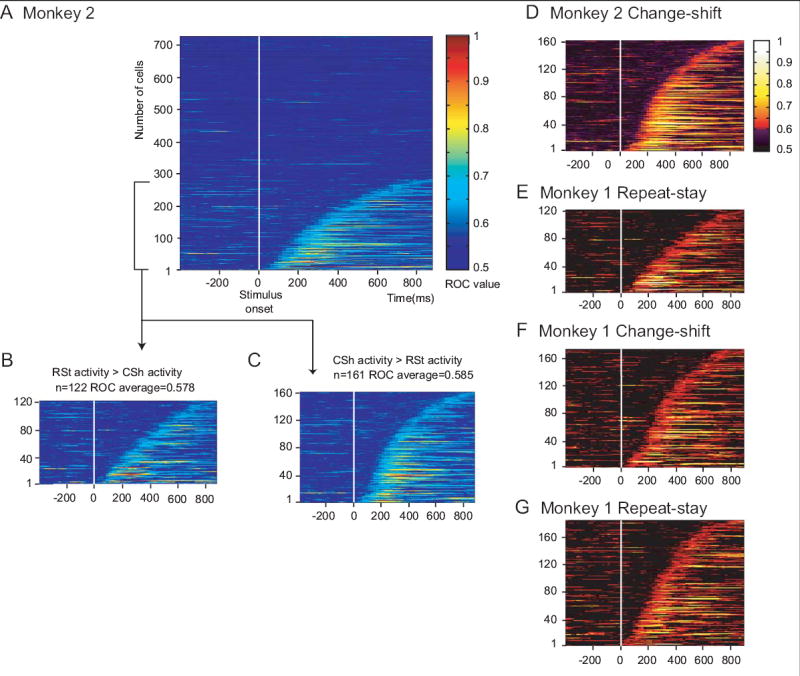

We also quantified the strength of the strategy effect by computing receiver operating characteristic (ROC) values for each PF neuron, using the mean firing rates across the IS period. The ROC values depended on the degree of overlap between two distributions of activity levels, but not a cell’s overall activity, its level of reference-period activity, or its dynamic range. The ROC curves were based on each observed discharge rate for each neuron. We plotted the proportion of change trials with activity that exceeded that rate against the proportion of repeat trials that did so. The area under this ROC curve thus served as a measure of strategy selectivity: a value of 0.5 corresponded to no selectivity, a value of 1.0 (never observed) to a complete lack of overlap of the distributions. The ROC analysis confirmed that the cells were selective for which strategy the monkeys used on a trial-by-trial basis (Fig. 5). Using a bootstrapping method (see Wallis and Miller, 2003b), random shuffling of the strategy designations 1,000 times for each neuron yielded ROC values that were significantly less than the observed values for both Monkey 1 (Wilcoxon signed-ranks test, Z=−5.87, p<0.0001, N=700) and Monkey 2 (Z=−7.44, p<0.0001, N=750). The median ROC value was 0.63 in Monkey 1 and 0.60 in Monkey 2, values that, along with their distribution, can be compared with those obtained in other studies (see Discussion).
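A minimal sketch of this kind of ROC measure is given below. It relies on the fact that the area under an ROC curve equals the probability that a randomly drawn change-trial rate exceeds a randomly drawn repeat-trial rate, with ties counted as one half. Folding the area about 0.5 and the details of the shuffle are assumptions for illustration, not a description of the analysis code used in the study; all function names are hypothetical.

```python
import random


def roc_area(change_rates, repeat_rates):
    """Area under the ROC curve: P(change > repeat) + 0.5 * P(tie)."""
    wins = 0.0
    for c in change_rates:
        for r in repeat_rates:
            if c > r:
                wins += 1.0
            elif c == r:
                wins += 0.5
    return wins / (len(change_rates) * len(repeat_rates))


def selectivity(change_rates, repeat_rates):
    """Fold the area about 0.5 so that either preference scores above 0.5 (assumption)."""
    area = roc_area(change_rates, repeat_rates)
    return max(area, 1.0 - area)


def shuffled_selectivity(change_rates, repeat_rates, n_shuffles=1000, seed=0):
    """Mean selectivity after randomly reassigning the trial-type labels."""
    rng = random.Random(seed)
    pooled = list(change_rates) + list(repeat_rates)
    n_change = len(change_rates)
    total = 0.0
    for _ in range(n_shuffles):
        rng.shuffle(pooled)
        total += selectivity(pooled[:n_change], pooled[n_change:])
    return total / n_shuffles
```

Because the measure depends only on the rank order of the firing rates, it is unaffected by a cell's overall activity level or dynamic range, as noted above.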

Fig. 5.

ROC plots. Colors show the area under the ROC curve for each individual cell, ranked according to the time at which this signal develops after stimulus onset. A. All neurons in Monkey 2. B. Neurons with an ROC value >0.6 for 4 consecutive bins and a preference for the repeat-stay strategy. C. As in B, but with a preference for the change-shift strategy. D and E. Data from C and B, respectively, with a color scale approximating that used by Wallis and Miller (2003b). F and G. ROC plots from Monkey 1 in the format of D and E.

No dramatic differences were observed among task periods in the frequency of strategy effects. The strategy preference (for the change-shift or the repeat-stay strategy) and the magnitude of the strategy effects also differed little by task period (Suppl. Figs. 3C, D and 4C, D). Taken together, the existence of highly significant strategy effects, some of which depended on the response chosen on a particular trial, and the high magnitude of the effects support the hypothesis that PF neurons contribute importantly to the implementation of these abstract response strategies. This point is taken up in the Discussion.

Familiar mapping control task

To rule out the possibility that trial-to-trial changes in the IS or in the response choice accounted for the strategy effects, we tested for similar activity contrasts in the familiar mapping task. In this task, the monkeys responded to highly familiar ISs according to three well-learned stimulus–response mappings. The familiar mapping task involved the same sequence of events as did the strategy task (Fig. 1A), and both monkeys performed at >99% correct.

Cells showing statistically significant differences between repeat-trial and change-trial activity in this task were considered to show false-positive strategy effects, because the monkeys were unlikely to be using either the repeat-stay or change-shift strategy while responding according to overlearned stimulus–response mappings. For three task periods (RMT, pre-reward, and post-reward), these false-positive results occurred at the level expected by chance (4–6%, with α=0.05, ANOVA). For example, of the 23 cells showing a significant strategy effect during the RMT period of the strategy task, only 4% (1 cell) also showed a statistically significant “strategy effect” in the familiar mapping task. In the IS1 period, this proportion was somewhat higher: of the 47 PF cells with strategy effects in the standard version of the task, 15% (7 cells) appeared to retain this property in the familiar mapping task; in the IS2 period, 26% did so. The ISs differed between the strategy and familiar mapping tasks, but virtually identical results were found when excluding cells with stimulus selectivity. These data show that an account of strategy effects in terms of a change or repetition of either the stimulus or the response choice can be rejected for the vast majority of neurons with strategy effects (74%–96%, depending on the task period). Nevertheless, the higher values (15% and 26%) indicate that some of the strategy effects observed during the IS period could reflect changes in either the IS or the response choice from trial to trial. Perhaps this finding reflects the monkeys’ long experience with detecting IS repeats and changes while performing the strategy task. At times more remote from the IS and closer to the response, this property seems to be completely absent.

Reward-expectation control: high-reward version of the strategy task

As an important feature of the experimental design, the monkeys’ reward rates differed between change trials and repeat trials. In the standard version of the strategy task, each correct application of the change-shift strategy resulted in a reward rate of 50% on first-chance trials. In the high-reward version of the task, this reward rate was 90%, which approximated that for repeat trials in both versions of the strategy task.

In Monkeys 1 and 2, respectively, we recorded from 247 and 290 PF cells in both versions of the strategy task. Fig. 6A and B shows an example of such a comparison in one PF neuron. For that cell, the difference in reward prediction between repeat- and change trials could not account for the strategy effect: reward rate did not affect the cell’s preference for the change-shift task. The present analysis includes all task-related cells with more than 15 correctly executed repeat trials and the same minimum number of change trials.

Fig. 6.

Cell preferring the change-shift strategy and lacking a major influence of reward prediction. From PFdl. A. Standard version of the strategy task, with correct change-shift choices rewarded at a 50% rate. B. High-reward version of the task, using the same stimulus set, with correct change-shift choices rewarded at a 90% rate to more closely match the reward rate for repeat trials. C. Comparison of the strategy score and the reward-prediction score. Percent of cells with activity better matching reward probability (blue) or strategy (magenta). Abbreviations: CSh, change-shift; IS1, early instruction-stimulus period; IS2, late instruction-stimulus period; rew, reward; RMT, reaction- and movement-time period.

Notwithstanding the example in Fig. 6A and B, reward-prediction effects were observed, as in previous studies of PF (Leon and Shadlen, 1999; Roesch and Olson, 2004; Tremblay and Schultz, 2000; Wallis and Miller, 2003a; Watanabe et al., 2002). We intend to report these data elsewhere in more detail, but for the present report we performed several analyses to rule out the possibility that strategy effects depended entirely on reward prediction or related factors. We selected cells that showed a significant strategy effect in the standard version of the task and calculated the correlation of Istrat in the high-reward and standard versions. For the IS1 period, this correlation was ρ=0.73 (n=46 cells), which was statistically significant (Spearman correlation, p<0.001). This strong correlation indicated that the strategy effect was similar in both preference and magnitude for the two levels of reward tested. The analogous correlation was ρ=0.73 (n=47 cells, p<0.001) for the IS2 period, ρ=0.62 (n=27 cells, p<0.001) for the RMT period, and ρ=0.58 (n=45 cells, p<0.001) for the pre-reward period. We also found that 43 of 46 cells (93%) with a significant strategy effect in the standard version of the task maintained their strategy preference in the high-reward version (binomial test, p<0.001), despite the fact that the difference in reward rate between repeat- and change trials was very small in the high-reward version. The other task periods had similar results: 43/47 (96%) in IS2, 25/27 (93%) in RMT, and 35/45 (78%) in the pre-reward period. Furthermore, bootstrapping methods showed that most cells with strategy effects lacked any significant activity difference for their preferred strategy in the high-reward vs. standard version of the task: 26 of 46 (57%) in the IS1 period, 28 of 47 (60%) in IS2, 18 of 27 (67%) in RMT, and 29 of 45 (64%) in the pre-reward period. 
In addition to those tests, we calculated a reward-prediction score, which was based on whether the reward rate was near 90% or near 50% on a series of trial types across the various strategy and mapping tasks, and whether a cell’s activity corresponded to those levels. We compared that score to a strategy-score, which was based on the strategy used in the relevant trial types (see text above Suppl. Fig. 2 for these methods in more detail). Many PF neurons reflected the strategy used to a greater extent than the probability of reward (Fig. 6C; Suppl. Fig. 2). These analyses did not rule out an effect of reward prediction in some PF neurons, but did exclude an account of PF’s strategy effects solely in those terms.
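The binomial test reported above for the IS1 period (43 of 46 cells keeping their strategy preference) can be illustrated with a short calculation. This is only a sketch: the null probability of 0.5 and the one-sided tail are assumptions made for illustration, since the text does not state them, and the function name `binomial_tail` is hypothetical.

```python
from math import comb


def binomial_tail(successes: int, n: int, p_null: float = 0.5) -> float:
    """One-sided probability of observing at least `successes` out of `n`.

    Assumption: under the null hypothesis each cell keeps its preference
    with probability `p_null` (0.5 here), independently of other cells.
    """
    return sum(
        comb(n, k) * p_null**k * (1.0 - p_null) ** (n - k)
        for k in range(successes, n + 1)
    )


# IS1 period: 43 of 46 cells maintained their strategy preference.
p_is1 = binomial_tail(43, 46)
print(f"IS1: P(X >= 43 | n = 46, p = 0.5) = {p_is1:.2e}")
```

Under these assumptions the tail probability falls far below 0.001, consistent with the reported significance level.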

Supplemental Figure 2.

Scatter plots of strategy score and reward-prediction score. Deviation from 0.5, which represents the worst fit to each model. Abbreviations: IS1, early instruction-stimulus period; IS2, late instruction-stimulus period; RMT, reaction- and movement-time period; Pre-rew, pre-reward period; Post-rew, post-reward period.

Mapping task

We also compared PF’s neuronal activity in the mapping task vs. the strategy task. In the mapping task (Fig. 7D), the monkeys learned three novel mappings of the same type as in the familiar mapping task. At the beginning of a block of trials, all three mappings were unknown, although the ISs were the same as in the just-completed block of trials on the standard version of the strategy task. Then the monkeys learned, by trial-and-error, which one of the three targets to choose in response to each IS. Reward followed each correct choice, and the absence of reward provided error feedback. After each incorrect choice, the same IS appeared on consecutive second-chance trials until the monkey chose correctly.

Fig. 7.

Cell preferring the mapping task. This neuron was located in PFdm (see Fig. 1B). A, B and C each show neuronal activity relative to the onset of the instruction stimulus. Neuronal activity averages: red for the mapping task, black for the strategy task. Change trials differ (p<0.05, Mann-Whitney U Test) but repeat trials do not (p=0.49). D. Three ISs, with arrows indicating the correct action for each. E. Percent of cells by task period showing a task effect, for each monkey. Abbreviation: rew, reward.

The monkeys learned novel mappings very quickly (green curves in Fig. 2C and D), but during the early trials in a block, prior to learning the fixed stimulus–response mappings, the monkeys used the repeat-stay and change-shift strategies. This finding is demonstrated by the fact that after a correct response, the monkeys managed scores of >80% correct for repeat trials (blue curves in Fig. 2C and D), from the beginning of the mapping block. With the exception of trial 2 in Monkey 1, they also correctly shifted their responses on >90% of change trials (red curves). Because the monkeys used these strategies, data from these early trials were discarded for the analysis described below, which contrasts activity in the strategy and mapping tasks. Suppl. Table 2 shows each monkey’s percentage of correct responses. Reaction times for the strategy vs. mapping task (Suppl. Table 3) did not differ significantly for either monkey, with one exception. For change trials, Monkey 1 had significantly faster reaction times in the strategy task (t=6.4, p<0.001), but the neurophysiological results were unlikely to be affected by this 10-ms difference in reaction time.

Except for the existence of the fixed mappings of IS to response choice, the mapping task matched the strategy task in event sequence and timing (Fig. 1A). This comparison served to control for low-order sensory and motor factors. Thus, we took advantage of the fact that the mapping task provided us with an opportunity to compare PF activity when the monkey made precisely the same saccade to precisely the same spatial target in response to precisely the same, foveally presented and attended stimulus. In the mapping task, each IS was associated with one and only one response choice. In the example illustrated in Fig. 7C, stimulus C instructed a saccade to the right target (C→right). In the strategy task, that IS–response pair was selected from among the two other pairs that occurred for saccades to the right target (A→right and B→right). There were three such pairs common to the mapping and strategy tasks, A→top, B→left, and C→right, selected from 9 pairs in the strategy task. Such a comparison eliminated any simple sensory or motor factors that might have affected neuronal activity.

For 383 and 499 PF neurons in Monkeys 1 and 2, respectively, we collected sufficient data to compare the discharge rates in the strategy and mapping tasks. These comparisons required at least five correctly executed trials in both tasks for all three IS→response pairs. Of these 882 neurons, 532 showed significant task-related activity. After eliminating the 15% of the population that showed a significant between-task difference in reference-period activity, there remained ~240 task-related neurons in each task period: 253 in IS1, 226 in IS2, 243 in RMT, 246 in pre-reward and 224 in post-reward. Task effects, defined as statistically significant activity differences between the mapping task and the standard version of the strategy task, were then identified by ANOVA (α=0.05). Fig. 7A–C illustrates the activity of a PF neuron showing a task effect. This neuron had two kinds of tuning. First, and most conventionally, the cell showed a preference for the trials involving rightward responses (Fig. 7C). Second, however, this neuron had much greater activity for the mapping task (red), when a given IS instructed that response due to a fixed mapping, compared to when that same IS guided the same response based on an abstract strategy (black).

Of the task-related neurons tested, ~30% showed a significant task effect, varying from 36% in the IS2 period (82/226) to 26% in the RMT period (62/243). Fig. 7E and Suppl. Table 7 give the breakdown by monkey and task period. In the IS1 period, 57% of these cells had greater modulation in the mapping task; in the IS2 period, 46% did so, and in the later task periods (RMT, pre-reward, and post-reward), 66% preferred the mapping task (both monkeys, combined). We measured the magnitude of the task effect using a task-effect index Itask. Suppl. Figs. 3A, B and 4A, B show the distribution of Itask across the population of cells for each period. There were no dramatic differences in task preference (strategy vs. mapping task) or the magnitude of the task effects.

Supplementary Table 7.

Task effects, by monkey and task period. Abbreviations: Mk, Monkey; IS1, early instruction-stimulus period; IS2, late instruction-stimulus period; RMT, reaction- and movement-time period.

| Mk | IS1 | IS2 | RMT | Pre-reward | Post-reward | Total* |
|---|---|---|---|---|---|---|
| 1 | 27/104 (26%) | 36/100 (36%) | 29/116 (25%) | 38/106 (36%) | 31/102 (30%) | 114/211 (54%) |
| 2 | 41/149 (28%) | 46/126 (37%) | 33/127 (26%) | 44/140 (31%) | 32/122 (26%) | 136/263 (52%) |
| sum | 71/253 (28%) | 82/226 (36%) | 62/243 (26%) | 82/246 (33%) | 63/224 (28%) | 250/474 (53%) |

\* Each cell counts once.

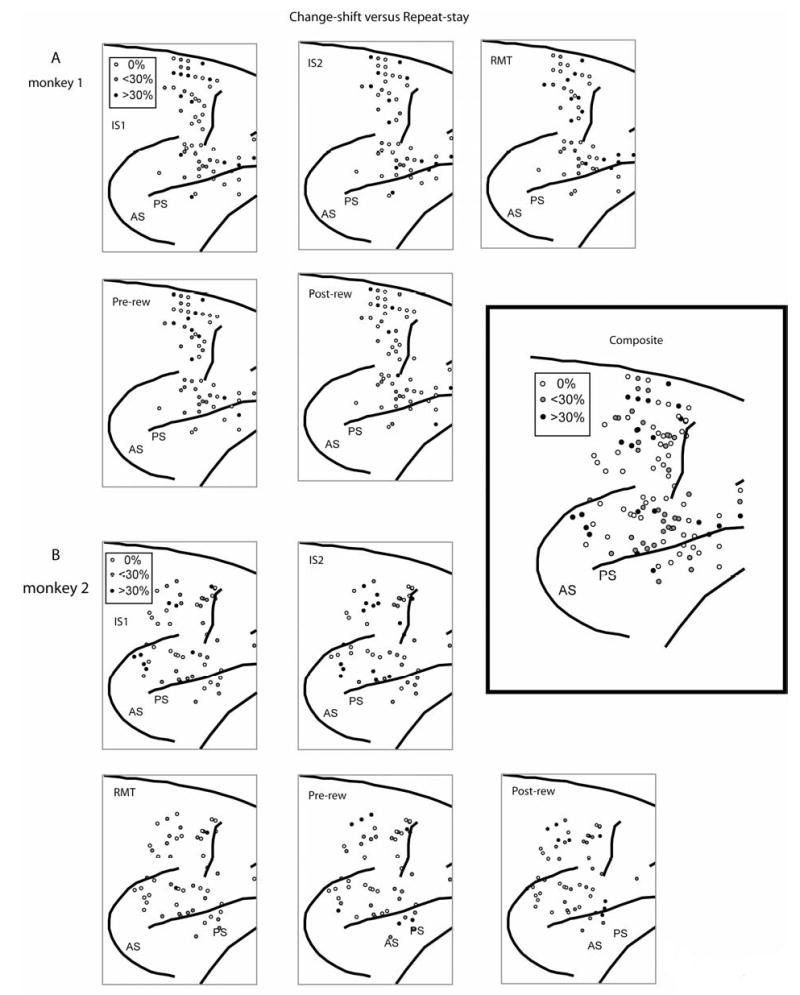

We did not observe any noteworthy anatomical distributions of task- or strategy effects or preferences (Suppl. Figs. 5 and 6).

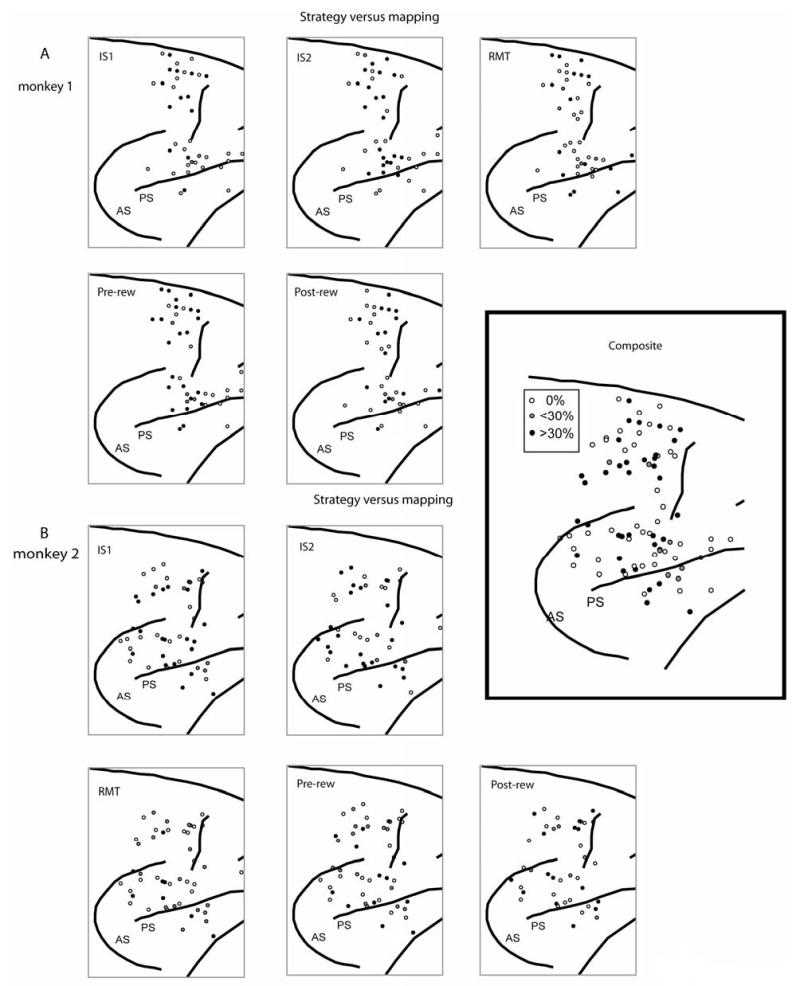

Supplemental Figure 5.

Surface plots of the locations of cells with strategy effects, by task period. A. Monkey 1. B. Monkey 2. Inset: composite of both monkeys, all task periods. Shading shows penetrations with either more than 30% of cells showing a significant preference for the strategy task (black circles), with some of that type, but less than 30% (gray circles), or no such cells (unfilled circles). Rostral to the right; dorsal up.

Supplemental Figure 6.

Surface plots of the locations of cells with task effects, by task period. A. Monkey 1. B. Monkey 2. Inset: composite of both monkeys, all task periods. Format as in Suppl. Fig. 5.

Discussion

We studied PF activity as monkeys responded to symbolic visual cues according to two abstract strategies, called repeat-stay and change-shift. In separate blocks of trials, they learned and responded according to memorized stimulus–response mappings. We chose to study the repeat-stay and change-shift strategies because other monkeys have spontaneously adopted them while learning stimulus–response mappings (Wise and Murray, 1999). The present monkeys did so, as well. Previous work has shown that parts of PF play a necessary role in the implementation of these and other strategies (Bussey et al., 2001), and the present results reveal important insights into their neural basis.

Successful implementation of these strategies required several cognitive processes and their coordination. To perform the task successfully, the monkeys had to: (1) remember the spatial target (or saccade) most recently chosen (a spatial memory); (2) remember the stimulus (or stimulus–response pair) preceding that choice (a nonspatial memory); (3) use the nonspatial memory to evaluate whether the stimulus on the current trial had changed from the previous trial; and (4) use the spatial memory to choose the same target when the stimulus repeated and to reject that target when the stimulus changed.

We hypothesized that neurons in PF played an important role in implementing abstract response strategies, as well as exemplar-based responses. Their properties were consistent with that hypothesis and with each of the four cognitive processes enumerated above. We do not report here the well known spatial and nonspatial memory signals observed in PF neurons (Rainer et al., 1998), which correspond to processes 1 and 2, listed above. We observed those signals in the present data and will describe them in a subsequent report. Unique to the present task, we found that many PF neurons were selective for either the repeat-stay or change-shift strategy, but showed no selectivity for the particular response chosen. These characteristics coincide with one or more aspects of process 3, above: the evaluation of stimulus repetition or change, recall of the correct strategy, and implementation of that strategy. In many PF cells, however, the strategy-related activity was specific for a particular response choice. For example, some cells had selectivity for trials that involved responses to the top target after the stimulus had changed (Fig. 4). Such findings rule out an account of the strategy effects solely in terms of high-level vision (cognitive process 3), and point to a role in process 4: the selection of a response based on stimulus repetition or change. Neurons with strategy effects selective for a particular target could perform a computation that bridges the gap from the repeat–change evaluation to the selection of the upcoming response target.

Previous neurophysiological studies

The evaluation of whether the stimulus had repeated or changed from the previous trial resembles a matching rule. Match (or nonmatch) signals have been reported in PF previously, but relatively rarely. For example, Miller et al. (1996) found that only a minority of PF cells conveyed pure “match” information. Instead, the majority (65%) of cells with activity indicative of matches were also stimulus selective. In contrast, our cells with strategy effects showed a much smaller proportion of stimulus selectivity (20% in the IS1 period, less in other task periods). Along with the response selectivity of many cells with strategy effects (Fig. 4), this finding points to a role beyond stimulus identification or the detection of stimulus repetition to a more-general role in the implementation of the change-shift or repeat-stay strategy.

In addition, the strategy task differed importantly from such matching tasks. The strategy task combined a match/nonmatch rule with the need to maintain a short-term memory of the previous response, as well as with the use of the match/nonmatch decision to choose a response based on that memory. This requirement contrasts with a traditional matching task, in which a response reports the match/nonmatch decision based on long-term memory. For example, monkeys often release a bar if they detect a match (Wallis et al., 2001; Wallis and Miller, 2003b). The greater load on short-term memory in the present task may account, in part, for the strong strategy selectivity that we observed, compared to the degree of rule selectivity observed by Wallis and Miller. Such comparisons have problems, of course, but comparing our data with theirs has some strengths, as well. We used the same cell selection strategy; neither they nor we searched for and isolated task-related neurons. Although their analysis involved a different number of trials per cell (300–400 per rule) than ours (30–100 per strategy), because we moved our electrodes more often, we studied the same general regions within PF, and both analyses used a 200-ms window advanced in steps of 10 ms. According to the present ROC analysis (Fig. 5), PF neurons reflected the repeat-stay and change-shift strategies more strongly than the PF neurons studied by Wallis and Miller reflected matching and nonmatching rules (compare Fig. 5D–G with Fig. 7 of Wallis and Miller, 2003b). For Monkey 1, the median ROC value was higher in the current data than in theirs (0.63 vs. 0.57), as was the upper interquartile range (IQR) (0.58 vs. 0.62). For Monkey 2, a similar difference was observed for the median (0.60 vs. 0.57) and upper IQR (0.66 vs. 0.62).
The difference between the two data sets was highly significant (Kolmogorov-Smirnov two-sample test, two-sided, D=0.25, n=567, m=700, p<0.0001 for Monkey 1; D=0.20, n=567, m=751, p<0.0001 for Monkey 2). Furthermore, only 5% of the sample collected by Wallis and Miller showed ROC values in excess of 0.7 for their rules, whereas 21% of the sample in Monkey 1 and 17% in Monkey 2 did so for our strategies. Although the number of trials differed, this should have increased the noise in our sample, and thus decreased our ROC values, yet the observed ROC values in our task were significantly higher. The strategy selectivity during the IS period was also higher than their median ROC value (0.52) for rule selectivity in the test phase, which did not differ significantly from chance level.
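The D values quoted above are two-sample Kolmogorov-Smirnov statistics, that is, the largest vertical gap between the empirical cumulative distributions of the two samples of ROC values. The sketch below shows how such a statistic can be computed; the function name `ks_statistic` and the toy samples are illustrative assumptions, not the recorded data, and the p-value calculation is omitted.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(x: Sequence[float], y: Sequence[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic D.

    D is the maximum absolute difference between the two empirical
    cumulative distribution functions, evaluated at every observed value.
    """
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    d = 0.0
    for v in sorted(set(xs) | set(ys)):
        cdf_x = bisect_right(xs, v) / n  # fraction of x values <= v
        cdf_y = bisect_right(ys, v) / m  # fraction of y values <= v
        d = max(d, abs(cdf_x - cdf_y))
    return d


# Toy ROC values (hypothetical), only to show the call.
ours = [0.55, 0.60, 0.63, 0.70, 0.74]
theirs = [0.51, 0.54, 0.57, 0.60, 0.62]
print(f"D = {ks_statistic(ours, theirs):.2f}")
```

A library routine such as SciPy's `ks_2samp` would also return a p-value for the comparison.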

Other studies have also reported PF activity related to rules and strategies. A representation of rules was found for location-matching and shape-matching rules (Hoshi et al., 2000). One previous study compared neuronal activity for spatial, object, and associative rules and found many cells with activity that reflected each rule, even though the stimuli and response did not differ (Asaad et al., 2000). Other studies have compared arbitrary response rules with spatial ones (Fuster et al., 2000; White and Wise, 1999), and some have concentrated on visuospatial “rules” (Zhang et al., 1997), although the relationship of such spatial remapping rules to the abstract and symbolic rules studied here remains unclear. Barraclough et al. (2004) studied win-stay and lose-shift strategies. (Note that the current monkeys’ change-shift strategy, which followed reward, differed importantly from their monkeys’ lose-shift strategy, which followed non-reward.) Barraclough et al. found that signals related to the animal’s past choices and their outcomes were combined in PF neurons, suggesting a role of PF in optimizing decision-making strategies.

We do not mean to imply that PF is the only part of the brain that contributes to rules and strategies. For example, the posterior parietal cortex has been implicated in related functions (Stoet and Snyder, 2003), and studies on patients with Parkinson’s disease implicate the basal ganglia in rule- and strategy-based behavior (Cools et al., 2004; Shohamy et al., 2004; Swainson and Robbins, 2001). Furthermore, interactions between inferotemporal cortex and PF play a crucial role in rule-guided behavior, when these rules depend on nonspatial visual inputs (Gaffan et al., 2002; Parker and Gaffan, 1997).

The present study differed from previous ones in that the specific response strategies studied here were the ones that some monkeys adopt spontaneously as they learn symbolic stimulus–response mappings (Wise and Murray, 1999). Of course, when the monkeys performed the strategy task here, they did not use those strategies spontaneously, a topic taken up below in Interpretational problems and limitations.

Studies in humans

The involvement of PF in the use of different strategies has been supported by neuroimaging studies, as summarized by Bunge (2005), and in studies of event-related potentials (Folstein and Van Petten, 2004). Huettel and McCarthy (2004), for example, used a variant of the oddball task and found an increase of activity in PF associated with dynamic changes in response strategy from a default “positional strategy”, in which the position of the target guides the movement, to a “shape strategy,” in which the shape of the stimulus guides the movement. Huettel and Misiurek (2004) found that activity in PF reflected the number of response rules excluded by a stimulus. The present findings have provided support for those results and others from both humans (Brass et al., 2003; Bunge, 2005; Bunge et al., 2003; Owen et al., 1990; 1996; Rogers et al., 1998; Rushworth et al., 2002) and monkeys (Asaad et al., 2000; Barraclough et al., 2004; Collins et al., 1998; Gaffan et al., 2002; Hoshi et al., 2000; Wallis and Miller, 2003b; White and Wise, 1999; Wise and Murray, 1999) that point to a role for PF in the selection and implementation of rules and strategies.

Interpretational problems and limitations

Above, we ruled out interpretations of strategy effects solely in terms of evaluating whether the stimulus had changed or repeated. The fact that many PF neurons have activity that is selective for either repeat- or change trials, but preferentially when the monkeys choose (or reject) a particular response, shows that such an account is inadequate. A different subpopulation of PF cells, however, may play a role in detecting stimulus change and repetition, as discussed above. Here we consider other alternative interpretations.

Response change.

Some frontal activity reflects response changes from trial-to-trial (Matsuzaka and Tanji, 1996). But a comparison of activity in the familiar mapping task versus the strategy task shows that, for the majority of the cells, changing the forthcoming response from trial-to-trial could not have accounted for the strategy effects.

Attention.

Many studies of PF have pointed to a role in top-down control of attention, including those based on neuropsychological (Koski and Petrides, 2002; Rueckert and Grafman, 1996; Stuss et al., 1999), neuroimaging (e.g., Cabeza and Nyberg, 2000; Corbetta et al., 1993; Pessoa et al., 2003; Thiel et al., 2004; Woldorff et al., 2004), and neurophysiological (Lebedev et al., 2004) methods. However, because both strategies (change-shift and repeat-stay) and tasks (strategy and mapping) required that the monkeys attend to the nonspatial features of the IS, selective attention per se could not have accounted for strategy or task effects. But this argument does not exclude the possibility that strategy effects mainly occurred when the monkey attended to whether the stimulus had changed or repeated from trial to trial. This idea limits the interpretability of task effects (contrasts between the mapping and strategy tasks), but not that of strategy effects (contrasts between the repeat-stay and change-shift strategies). Abstract attentional factors associated with the repeat–change evaluation were the same for all trials in the strategy task and thus could not have accounted for strategy effects or preferences.

Low-order sensory and motor factors.

When we compared activity in the strategy and mapping tasks, we restricted the analysis to a comparison of identical responses (the three saccades) and identical stimuli, all of which occurred within spatial coordinate frames that were identical in eye-centered, head-centered, body-centered, and extrinsic coordinates. Accordingly, our experimental design and analysis ruled out simple motor, sensory, or spatial factors as accounts for the task effects. ANOVA revealed that such low-order factors could not account for strategy effects, either.

Task difficulty.

The proportion of correct responses was nearly the same across strategies and tasks, which indicated that the monkeys found them of approximately the same difficulty (95–99% correct). Reaction-time measures should also be sensitive to task difficulty, and we found nothing in those data that could account for the neuronal activity contrasts observed in this experiment.

Reward expectation.

Previous studies have reported that reward expectation affects PFdl activity (Leon and Shadlen, 1999; Roesch and Olson, 2004; Wallis and Miller, 2003a; Watanabe et al., 2002). We confirmed the existence of these signals in PFdl and PFdm, but they could not have accounted for either the strategy or task effects reported here. The differential activity between repeat and change trials was maintained in the high-reward version of the task, although the difference in reward expectation between these types of trials was minimal. With few exceptions, changing the reward expectation did not change the preference for repeat-stay and change-shift strategies. Furthermore, in contrast to previous studies in which the expectation of a larger reward correlated with greater activity (Leon and Shadlen, 1999; Roesch and Olson, 2004), the present task revealed a different relationship. In the standard version of the strategy task, the change-shift strategy was associated with a lower rate of reward than the repeat-stay strategy, but as many cells preferred the former as the latter.

Short-term memory.

The repeat-stay or change-shift strategies required an assessment of whether the stimulus had repeated from the previous trial. Both strategies therefore necessitated the maintenance of short-term, working memories, which had to persist over the intertrial interval and beyond. Once thought to represent PF’s exclusive or main function (e.g., Goldman-Rakic, 1987), the working-memory theory has failed to account for several key observations made recently (Lebedev et al., 2004; Petrides, 2000; Rowe and Passingham, 2001; Rushworth et al., 1997). Nevertheless, PF does contribute to short-term, working memory, as one among its many functions. Although memory-related signals were observed (and will be described in a subsequent report), the strategy effects reported here could not have depended on short-term memory because the repeat- and change-trials had no differences in memory load or content. Task effects, however, could have been influenced by the requirement for remembering the most recent IS and response in the strategy task, but not in the mapping task.

Training a strategy.

The monkeys were operantly conditioned to apply the strategies that monkeys we have studied in the past have adopted spontaneously. Perhaps monkeys that have received such conditioning adopt “abnormal” variants of these strategies. Against this possibility, the data shown in Fig. 2 closely resembled those observed previously (Bussey et al., 2001; Murray et al., 2000; Wise and Murray, 1999). The present monkeys also applied the repeat-stay and change-shift strategies very effectively at the beginning of learning new mappings, and their performance on those trials resembled that in the strategy task. It remains possible, however, that they used different neural mechanisms in different blocks of trials.

Conclusions

We emphasized above that we do not mean to imply that PF is the only part of the brain with neurons that contribute to the implementation of abstract response rules and strategies. Nor do we mean to imply that its neurons are limited to such functions. What, then, do PF neurons do? Our answer is that they participate in most, if not all, of the cognitive functions important to the life of primates, including categorization of events and stimuli (Freedman et al., 2001; 2002; 2003), prediction of forthcoming events (Rainer et al., 1999), task selection (Asaad et al., 2000; Hoshi et al., 1998), top–down attention (Lebedev et al., 2004; Miller et al., 1996), and sequencing events and actions (Averbeck et al., 2002; Hoshi and Tanji, 2004; Ninokura et al., 2003; 2004; Quintana and Fuster, 1999), among other cognitive functions. Some experts maintain that PF functions in general intelligence, implying that it contributes to problem solving whenever those problems exceed routine levels of difficulty (Duncan et al., 1996; Duncan and Owen, 2000; Gaffan, 2002). Others maintain that PF or parts of it function to monitor information in short-term memory, including plans and intentions (Lau et al., 2004; Owen et al., 1996; Petrides et al., 2002; Rowe et al., 2002; Rowe and Passingham, 2001), as well as actively maintaining that information (Goldman-Rakic, 1987).

These ideas are not incompatible. The idea that PF neurons function in all of the behaviors important to the cognitive life of primates lacks the appeal of some simpler notions, but we think that the evidence indicates that this is “what PF neurons do.” Following Passingham and his colleagues, it seems likely that the functions of PF neurons can be expressed most succinctly in terms of “attentional selection” of responses (Rowe and Passingham, 2001), which involves, at a minimum, top–down biasing of inputs to PF, integration of information about context, mapping context to a potential action or goal, competition among potential actions or goals based on predicted outcomes, choosing an action or goal appropriate to the current context, actively maintaining that context and those choices in memory (perhaps along with some alternatives), and updating all of the foregoing based on changing contexts. Response choices based on abstract strategies require each of these processes, and PF probably contributes to them all.

Experimental Procedures

Animals

We studied two male rhesus monkeys (Macaca mulatta), 8.8 kg and 7.7 kg. They sat in a primate chair, with their heads fixed, and faced a video monitor 32 cm away. All procedures conformed with the Guide for the Care and Use of Laboratory Animals (1996, ISBN 0-309-05377-3) and were approved by the NIMH IACUC.

Recording methods and apparatus

We monitored eye position with an infrared oculometer (Bouis Instruments, Karlsruhe, Germany). Single-unit potentials were isolated with quartz-insulated platinum-iridium electrodes (80 μm outer diameter, impedance, 0.5–1.5 MΩ at 1 kHz) advanced by a 16-electrode microdrive (Thomas Recording, Giessen, Germany) through a custom, concentric recording head with 518 μm electrode spacing. These highly selective electrodes recorded spike potentials over a range of only a few hundred μm, which precludes recording a neuron’s activity on two or more electrodes. The signal from each electrode was discriminated either on-line using a Multi Spike Detector (Alpha-Omega Engineering, Nazareth, Israel) or a Multichannel Acquisition Processor (Plexon, Dallas, Texas) or off-line. Every unit’s isolation was scrutinized off-line using Off Line Sorter (Plexon), and we accepted only individual spike waveforms that clustered clearly in 3-D principal-component space, lacked interspike intervals <1 ms, had waveforms grouped tightly with other spikes in the time domain, and had stable and clearly differentiated waveforms over the course of the recordings. We recorded an average of 6.8 and 4.9 cells per electrode penetration in Monkeys 1 and 2, respectively (1–1.5 cells simultaneously), for electrodes that isolated at least one cell’s activity. CORTEX (www.cortex.salk.edu/) controlled behavior and collected data; MatOFF (dally.nimh.nih.gov/matoff/matoff.html), SPSS (www.spss.com), and custom programs were used for analysis.

Behavioral methods

A trial began when a 0.7° white circle—the fixation spot—appeared at the center of the video screen (Fig. 1A), along with the presentation of three potential saccade targets (2.2° unfilled white squares), 14° left, right and up from center. The monkeys maintained fixation on the fixation spot (± 7.5°) for 1.0 s. Then, the fixation spot disappeared and a visual instruction stimulus (IS) appeared at the same location for a variable delay period of 1.0, 1.5, or 2.0 s (pseudorandomly selected). Each IS comprised two ASCII characters, superimposed, as illustrated in Fig. 3. We made no attempt to determine which features of the stimuli were responsible for a cell’s activity because previous work has shown that such complex stimuli elicit robust activity from PF neurons (Asaad et al., 2000; Miller et al., 1996), which sufficed for the present purpose. The disappearance of the IS served as the trigger stimulus (TS). Next, the monkeys had to make a saccade to one of the three targets within 2.0 s and fixate it (± 6.7°) for 1.0 s. Then all three targets filled with white, and, if appropriate, a 0.1 ml drop of fluid reward was delivered 0.5 s later. The targets disappeared from the screen at that time, on both rewarded and unrewarded trials, and a 2.5 s intertrial interval began. After an erroneous response, the monkeys had an unlimited number of second-chance trials, which the monkeys performed until they made a correct response.

Surgery

Using aseptic techniques and isoflurane anesthesia (1–3%, to effect), a 27 by 36 mm recording chamber was implanted over the exposed dura mater of the right frontal lobe, along with head restraint devices.

Analytical methods

We quantified activity in specific task periods: a reference period of 1000 ms during fixation and prior to the IS, an IS1 period from 80–400 ms after IS onset, an IS2 period from 400–1000 ms after IS onset (i.e., until TS onset), a reaction and movement time (RMT) period from TS onset until saccade termination, a pre-reward period of 420 ms before the reward, and a post-reward period of 220 ms after it (Fig. 1A).
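As an illustration only, the task periods listed above can be expressed as time windows relative to trial events, and mean firing rates computed within each window. The following Python sketch is not the analysis code used in this study; the `Trial` structure, its field names, and the function names are hypothetical, and all times are assumed to be in milliseconds on a common clock.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Trial:
    """Hypothetical container: spike and event times (ms) for one trial."""
    spikes: List[float]
    is_onset: float      # instruction stimulus (IS) onset
    ts_onset: float      # trigger stimulus (TS) onset, i.e. IS offset
    saccade_end: float   # saccade termination
    reward: float        # time of reward delivery


def task_windows(trial: Trial) -> Dict[str, Tuple[float, float]]:
    """Return (start, end) in ms for each task period named in the text."""
    return {
        "reference": (trial.is_onset - 1000, trial.is_onset),
        "IS1": (trial.is_onset + 80, trial.is_onset + 400),
        "IS2": (trial.is_onset + 400, trial.is_onset + 1000),
        "RMT": (trial.ts_onset, trial.saccade_end),
        "pre_reward": (trial.reward - 420, trial.reward),
        "post_reward": (trial.reward, trial.reward + 220),
    }


def period_rates(trial: Trial) -> Dict[str, float]:
    """Mean firing rate (spikes/s) in each task period of one trial."""
    rates = {}
    for name, (start, end) in task_windows(trial).items():
        # Count spikes in the half-open window [start, end).
        n_spikes = sum(start <= t < end for t in trial.spikes)
        rates[name] = 1000.0 * n_spikes / (end - start)
    return rates
```

Treating each window as half-open avoids counting a spike twice when it falls exactly on the boundary between adjacent periods such as IS1 and IS2.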

To examine strategy effects, we used correctly executed second-chance trials. For all task-related neurons, we performed a three-way ANOVA (p<0.05) with factors Strategy (repeat-stay, change-shift), Response choice (left, right, up), and Stimulus (three levels). To measure the size of the strategy effect, we calculated a strategy-effect index, I_strat = (A_C − A_R)/(A_C + A_R), where A_C was activity during change trials and A_R was activity during repeat trials. The size and reliability of the strategy effect were also measured with a receiver operating characteristic (ROC) analysis (Green, 1966). We calculated ROC values in 200 ms bins from 500 ms before the IS presentation until the TS, advancing in 10- or 20-ms steps.
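For readers who wish to reproduce these two measures, a minimal Python sketch follows. It is not the code used in this study. The function names and data layout (one list of IS-aligned spike times, in ms, per trial) are hypothetical, and the ROC value is assumed to be the area under the ROC curve, computed here as the probability that a randomly chosen change trial has a higher spike count than a randomly chosen repeat trial, with ties counted as one half. Returning NaN for a cell with no activity in either condition is likewise an assumption.

```python
import math
from typing import List, Sequence, Tuple


def strategy_index(a_change: float, a_repeat: float) -> float:
    """I_strat = (A_C - A_R) / (A_C + A_R); NaN if both activities are 0."""
    total = a_change + a_repeat
    return (a_change - a_repeat) / total if total else math.nan


def roc_area(change: Sequence[float], repeat: Sequence[float]) -> float:
    """Area under the ROC curve: P(change > repeat) + 0.5 * P(tie)."""
    wins = sum((c > r) + 0.5 * (c == r) for c in change for r in repeat)
    return wins / (len(change) * len(repeat))


def count_spikes(spikes: Sequence[float], start: float, end: float) -> int:
    """Number of spikes in the half-open window [start, end)."""
    return sum(start <= t < end for t in spikes)


def sliding_roc(
    change_trials: List[Sequence[float]],
    repeat_trials: List[Sequence[float]],
    start: float = -500.0,   # ms relative to IS onset
    stop: float = 1000.0,    # TS onset relative to IS onset (assumed value)
    width: float = 200.0,    # bin width in ms
    step: float = 20.0,      # bin advance in ms (the text uses 10 or 20)
) -> List[Tuple[float, float]]:
    """Return (bin center, ROC area) for each 200-ms bin in [start, stop]."""
    out = []
    t = start
    while t + width <= stop:
        c = [count_spikes(s, t, t + width) for s in change_trials]
        r = [count_spikes(s, t, t + width) for s in repeat_trials]
        out.append((t + width / 2, roc_area(c, r)))
        t += step
    return out
```

Under this convention, an ROC value of 0.5 indicates no difference between change and repeat trials, values near 1.0 indicate greater activity on change trials, and values near 0.0 indicate greater activity on repeat trials.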

To test for task selectivity, we selected the stimuli and responses in the strategy task that matched those used in the corresponding mapping task. This analysis involved only correctly executed trials and excluded second-chance trials. We also eliminated the first 10 trials for each stimulus–response pair in each block of the mapping task. Thus, the activity changes accompanying the steepest phase of the learning curve could not contribute to any task effect (Fig. 2). (For the post-reward period, we also excluded unrewarded change trials.) For each neuron sampled, we identified task-related activity by contrasting the reference period with that in each of the other task periods (Mann-Whitney U test; p<0.05), and, when the activity passed that test in a given task period, we tested for differences between strategy trials and mapping trials (one-way ANOVA, p<0.05). Occasionally, a Mann-Whitney U test (p<0.05) substituted for ANOVA, when the latter was inappropriate due to complete inactivity in one of the tasks. The task-effect index was I_task = (A_S − A_M)/(A_S + A_M), where A_M was activity during the mapping task and A_S was activity during the strategy task.
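The trial-selection rules and the task-effect index described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the code used in this study. The dictionary keys (`block`, `stimulus`, `response`, `correct`, `second_chance`) and the function names are hypothetical, trials are assumed to be listed in chronological order, and the first 10 trials of each stimulus–response pair are assumed to be counted among correct, first-chance trials only; the text does not specify whether error trials count toward the 10.

```python
from collections import defaultdict
from typing import Dict, List


def select_mapping_trials(trials: List[Dict], n_skip: int = 10) -> List[Dict]:
    """Keep correct, first-chance mapping trials, dropping the first
    n_skip such trials for each stimulus-response pair in each block."""
    seen = defaultdict(int)  # (block, stimulus, response) -> trials so far
    kept = []
    for tr in trials:
        # Exclude errors and second-chance trials from this analysis.
        if not tr["correct"] or tr["second_chance"]:
            continue
        key = (tr["block"], tr["stimulus"], tr["response"])
        seen[key] += 1
        # Assumption: only correct, first-chance trials count toward n_skip.
        if seen[key] > n_skip:
            kept.append(tr)
    return kept


def task_index(a_strategy: float, a_mapping: float) -> float:
    """I_task = (A_S - A_M) / (A_S + A_M); NaN if both activities are 0."""
    total = a_strategy + a_mapping
    return (a_strategy - a_mapping) / total if total else float("nan")
```

With this sign convention, a positive index indicates greater activity in the strategy task and a negative index indicates greater activity in the mapping task.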

To test for false-positive strategy effects due to trial-to-trial changes in stimuli or response, we examined data from the familiar mapping task, compared to the standard version of the strategy task. The monkeys performed the familiar mapping task so well that they were unlikely to use either strategy. We used a two-factor ANOVA, with factors Mapping (three levels) and Strategy (repeat-stay, change-shift).

Histological Analysis

Near the end of physiological data collection, we made electrolytic lesions (15 μA for 10 s, anodal current) at two depths in selected locations. After ~10 days, the animal was deeply anesthetized with barbiturates, then perfused with buffered formaldehyde (3% by weight) after steel pins were inserted at known chamber coordinates. The brain was later removed, photographed, sectioned on a freezing microtome at 40 μm thickness, mounted on glass slides, and stained for Nissl substance with thionin. We plotted the surface projections of the recording sites by reference to the recovered electrolytic lesions and the pin holes.

Acknowledgments

We thank Drs. Jonathan D. Wallis and Sylvia A. Bunge for their comments on a previous version of this paper. The two anonymous referees were unusually helpful. We also thank Mr. Alex Cummings, who prepared the histological material, and Mr. James Fellows, who helped train the animals.

References

- Asaad WF, Rainer G, Miller EK. Task-specific neural activity in the primate prefrontal cortex. J Neurophysiol. 2000;84:451–459. doi: 10.1152/jn.2000.84.1.451.

- Averbeck BB, Chafee MV, Crowe DA, Georgopoulos AP. Parallel processing of serial movements in prefrontal cortex. Proc Natl Acad Sci USA. 2002;99:13172–13177. doi: 10.1073/pnas.162485599.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Brass M, Ruge H, Meiran N, Rubin O, Koch I, Zysset S, Prinz W, von Cramon DY. When the same response has different meanings: recoding the response meaning in the lateral prefrontal cortex. Neuroimage. 2003;20:1026–1031. doi: 10.1016/S1053-8119(03)00357-4.

- Bunge, S. A. (2005). How we use rules to select actions: A review of evidence from cognitive neuroscience. Cogn. Affect. Behav. Neurosci., in press.

- Bunge SA, Kahn I, Wallis JD, Miller EK, Wagner AD. Neural circuits subserving the retrieval and maintenance of abstract rules. J Neurophysiol. 2003;90:3419–3428. doi: 10.1152/jn.00910.2002.

- Bussey TJ, Wise SP, Murray EA. The role of ventral and orbital prefrontal cortex in conditional visuomotor learning and strategy use in rhesus monkeys. Behav Neurosci. 2001;115:971–982. doi: 10.1037//0735-7044.115.5.971.

- Cabeza R, Nyberg L. Imaging cognition II: An empirical review of 275 PET and fMRI studies. J Cogn Neurosci. 2000;12:1–47. doi: 10.1162/08989290051137585.

- Collins P, Roberts AC, Dias R, Everitt BJ, Robbins TW. Perseveration and strategy in a novel spatial self-ordered sequencing task for nonhuman primates: Effects of excitotoxic lesions and dopamine depletions of the prefrontal cortex. J Cogn Neurosci. 1998;10:332–354. doi: 10.1162/089892998562771.

- Cools R, Clark L, Robbins TW. Differential responses in human striatum and prefrontal cortex to changes in object and rule relevance. J Neurosci. 2004;24:1129–1135. doi: 10.1523/JNEUROSCI.4312-03.2004.

- Corbetta M, Miezin FM, Shulman GL, Petersen SE. A PET study of visuospatial attention. J Neurosci. 1993;13:1202–1226. doi: 10.1523/JNEUROSCI.13-03-01202.1993.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Duncan J, Emslie H, Williams P, Johnson R, Freer C. Intelligence and the frontal lobe: the organization of goal-directed behavior. Cogn Psychol. 1996;30:257–303. doi: 10.1006/cogp.1996.0008.

- Folstein JR, Van Petten C. Multidimensional rule, unidimensional rule, and similarity strategies in categorization: event-related brain potential correlates. J Exp Psychol Learn Mem Cogn. 2004;30:1026–1044. doi: 10.1037/0278-7393.30.5.1026.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Categorical representation of visual stimuli in the primate prefrontal cortex. Science. 2001;291:312–316. doi: 10.1126/science.291.5502.312.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. Visual categorization and the primate prefrontal cortex: Neurophysiology and behavior. J Neurophysiol. 2002;88:929–941. doi: 10.1152/jn.2002.88.2.929.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. A comparison of primate prefrontal and inferior temporal cortices during visual categorization. J Neurosci. 2003;23:5235–5246. doi: 10.1523/JNEUROSCI.23-12-05235.2003.

- Fuster JM, Bodner M, Kroger JK. Cross-modal and cross-temporal association in neurons of frontal cortex. Nature. 2000;405:347–351. doi: 10.1038/35012613.

- Gaffan D. Against memory systems. Phil Trans Roy Soc Lond B Biol Sci. 2002;357:1111–1121. doi: 10.1098/rstb.2002.1110.

- Gaffan D, Easton A, Parker A. Interaction of inferior temporal cortex with frontal cortex and basal forebrain: Double dissociation in strategy implementation and associative learning. J Neurosci. 2002;22:7288–7296. doi: 10.1523/JNEUROSCI.22-16-07288.2002.

- Goldman-Rakic PS. 1987. Circuitry of primate prefrontal cortex and regulation of behavior by representational memory. In Handbook of Physiology: The Nervous System, Vol 5, F Plum and V B Mountcastle, eds (Bethesda: American Physiological Society), pp. 373–417

- Green, C. G. (1966). Signal Detection Theory and Psychophysics. (New York: Wiley).

- Hoshi E, Tanji J. Area-selective neuronal activity in the dorsolateral prefrontal cortex for information retrieval and action planning. J Neurophysiol. 2004;91:2707–2722. doi: 10.1152/jn.00904.2003.

- Hoshi E, Shima K, Tanji J. Task-dependent selectivity of movement-related neuronal activity in the primate prefrontal cortex. J Neurophysiol. 1998;80:3392–3397. doi: 10.1152/jn.1998.80.6.3392.

- Hoshi E, Shima K, Tanji J. Neuronal activity in the primate prefrontal cortex in the process of motor selection based on two behavioral rules. J Neurophysiol. 2000;83:2355–2373. doi: 10.1152/jn.2000.83.4.2355.

- Huettel SA, McCarthy G. What is odd in the oddball task? Prefrontal cortex is activated by dynamic changes in response strategy. Neuropsychologia. 2004;42:379–386. doi: 10.1016/j.neuropsychologia.2003.07.009.

- Huettel SA, Misiurek J. Modulation of prefrontal cortex activity by information toward a decision rule. Neuroreport. 2004;15:1883–1886. doi: 10.1097/00001756-200408260-00009.

- Koski L, Petrides M. Distractibility after unilateral resections from the frontal and anterior cingulate cortex in humans. Neuropsychologia. 2002;40:1059–1072. doi: 10.1016/s0028-3932(01)00140-3.

- Lau HC, Rogers RD, Ramnani N, Passingham RE. Willed action and attention to the selection of action. Neuroimage. 2004;21:1407–1415. doi: 10.1016/j.neuroimage.2003.10.034.

- Lebedev MA, Messinger A, Kralik JD, Wise SP. Representation of attended versus remembered locations in prefrontal cortex. PLoS Biology. 2004;2:1919–1935. doi: 10.1371/journal.pbio.0020365.

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- Matsuzaka Y, Tanji J. Changing directions of forthcoming arm movements: Neuronal activity in the presupplementary and supplementary motor area of monkey cerebral cortex. J Neurophysiol. 1996;76:2327–2342. doi: 10.1152/jn.1996.76.4.2327.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Murray EA, Bussey TJ, Wise SP. Role of prefrontal cortex in a network for arbitrary visuomotor mapping. Exp Brain Res. 2000;133:114–129. doi: 10.1007/s002210000406.

- Ninokura Y, Mushiake H, Tanji J. Representation of the temporal order of visual objects in the primate lateral prefrontal cortex. J Neurophysiol. 2003;89:2868–2873. doi: 10.1152/jn.00647.2002.

- Ninokura Y, Mushiake H, Tanji J. Integration of temporal order and object information in the monkey lateral prefrontal cortex. J Neurophysiol. 2004;91:555–560. doi: 10.1152/jn.00694.2003.

- Owen AM, Downes JJ, Sahakian BJ, Polkey CE, Robbins TW. Planning and spatial working memory following frontal lobe lesions in man. Neuropsychologia. 1990;28:1021–1034. doi: 10.1016/0028-3932(90)90137-d.

- Owen AM, Evans AC, Petrides M. Evidence for a two-stage model of spatial working memory processing within the lateral frontal cortex: A positron emission tomography study. Cerebral Cortex. 1996;6:31–38. doi: 10.1093/cercor/6.1.31.

- Parker A, Gaffan D. Memory after frontal/temporal disconnection in monkeys: conditional and non-conditional tasks, unilateral and bilateral frontal lesions. Neuropsychologia. 1998;36:259–271. doi: 10.1016/s0028-3932(97)00112-7.

- Pessoa L, Kastner S, Ungerleider LG. Neuroimaging studies of attention: from modulation of sensory processing to top-down control. J Neurosci. 2003;23:3990–3998. doi: 10.1523/JNEUROSCI.23-10-03990.2003.

- Petrides M. The role of the mid-dorsolateral prefrontal cortex in working memory. Exp Brain Res. 2000;133:44–54. doi: 10.1007/s002210000399.

- Petrides M, Alivisatos B, Frey S. Differential activation of the human orbital, midventrolateral, and mid-dorsolateral prefrontal cortex during the processing of visual stimuli. Proc Natl Acad Sci USA. 2002;99:5649–5654. doi: 10.1073/pnas.072092299.

- Picard N, Strick PL. Imaging the premotor areas. Curr Opin Neurobiol. 2001;11:663–672. doi: 10.1016/s0959-4388(01)00266-5.

- Pinker, S. (1999). Words and Rules. (New York: Basic Books).

- Quintana J, Fuster JM. From perception to action: Temporal integrative functions of prefrontal and parietal neurons. Cerebral Cortex. 1999;9:213–221. doi: 10.1093/cercor/9.3.213.