Abstract

Since the death of positivism in the 1970s, philosophers have turned their attention to scientific realism, evolutionary epistemology, and the Semantic Conception of Theories. Building on these trends, Campbellian Realism allows social scientists to accept real-world phenomena as criterion variables against which theories may be tested without denying the reality of individual interpretation and social construction. The Semantic Conception reduces the importance of axioms, but reaffirms the role of models and experiments. Philosophers now see models as “autonomous agents” that exert independent influence on the development of a science, in addition to theory and data. The inappropriate molding effects of math models on social behavior modeling are noted. Complexity science offers a “new” normal science epistemology focusing on order creation by self-organizing heterogeneous agents and agent-based models. The more responsible core of postmodernism builds on the idea that agents operate in a constantly changing web of interconnections among other agents. The connectionist agent-based models of complexity science draw on the same conception of social ontology as do postmodernists. These recent developments combine to provide foundations for a “new” social science centered on formal modeling not requiring the mathematical assumptions of agent homogeneity and equilibrium conditions. They give this “new” social science legitimacy in scientific circles that current social science approaches lack.

The last time we looked, a theoretical model could predict the charge on an electron out to the 12th decimal place and an experimenter could produce a real-world number out to the 12th decimal place—and they agreed at the 7th decimal place. Recent theories of genetic structure coupled with lab experiments now single out genes that cause things like sickle-cell anemia. These outcomes are treated like science in action. There is rock-hard institutional legitimacy within universities and among user communities around the world given to the physics of atomic particles, genetic bases of health and illness, theories expressed as axiom-based mathematical equations, and supportive experiments. This legitimacy has been standard for a century.

A recent citation search compares 5,400 social science journals against the 100 natural science disciplines covered by INSPEC (>4,000 journals) and Web of Science (>5,700 journals) indexes (1). It shows that citations for the keywords comput* and simulat* peak at around 18,500 in natural science, whereas they peak at 250 in economics and around 125 in sociology. For the keyword nonlinear, citations peak at 18,000 in natural science, at roughly 180 in economics, and near 40 in sociology. How can it be that sciences founded on the mathematical linear determinism of classical physics have moved more quickly toward the use of nonlinear computer models than economics and sociology, where those doing the science are no different from social actors, who are Brownian Motion?

Writ large, social sciences appear to seek improved scientific legitimacy by copying the century-old linear deterministic modeling of classical physics—with economics in the lead (2)—at the same time natural sciences strongly rooted in linear determinism are trending toward nonlinear computational formalisms (1, 3). The postmodernist perspective takes note of the heterogeneous-agent ontology of social phenomena, calling for abandoning classical normal science epistemology and its assumptions of homogeneous agent behavior, linear determinism, and equilibrium. But postmodernists seem unaware of the “new” normal science alternative being unraveled by complexity scientists. These scientists assume, and then model, autonomous heterogeneous agent behavior, and from this model study how supra-agent structures are created (4–6). Social scientists need to thank postmodernists for their constant reminder about the reality of heterogeneous social agent behavior, but they need to stop listening to postmodernists at this point and instead study the epistemology of “new” normal science. Finally, social scientists need to take note of the other nonpostmodernist postpositivisms that give legitimacy: scientific realism, evolutionary epistemology, and the model-centered science of the Semantic Conception (7–9).

We begin by describing recent trends in philosophy of science, starting with Suppe's (10) epitaph for positivism. Next we present the most recent view of the role of models in science espoused by Morgan and Morrison (11). Then we briefly mention complexity science as a means of framing the elements of “new” normal science concerns and epistemology—a theme starting with Mainzer (12) and resting on foundational theories by other leading scientists. Our connection of postmodernist ontology with “new” normal science ontology comes next (13). Finally, we use this logic chain to establish the institutional legitimacy of agent-based social science modeling and its rightful claim at the center of “new” Social Science.

Post-Positivist Philosophy of Science

Although philosophy originally came before science, since Newton, philosophers have been trailing with their reconstructed logic (9) of how well science works. But there is normal science and then there is social science. Like philosophy of science, social science always seems to follow older normal science epistemology—but searching for institutional legitimacy rather than reconstructed logic. At the end of the 20th century, however, (i) normal science is leading efforts to base science and epistemology directly on the study of heterogeneous agents, thanks to complexity science; and (ii) philosophy of science is also taking great strides to get out from under the classical physicists' view of science. Social science lags behind—especially the modeling side—mostly still taking its epistemological lessons from classical physics. At this time, useful lessons for enhancing social science legitimacy are emerging from both normal science and philosophy of science. We begin with the latter.

Suppe (10) wrote the epitaph for positivism and relativism. Although the epitaph has been written, a positivist legacy remains, details of which are discussed by Suppe and McKelvey (9). The idea that theories can be unequivocally verified in search for a universal unequivocal “Truth” is gone; the idea that “correspondence rules” can unequivocally connect theory terms to observation terms is gone. The role of axioms as a basis of universal Truth, absent empirical tests, is negated. However, the importance of models and experiments is reaffirmed.

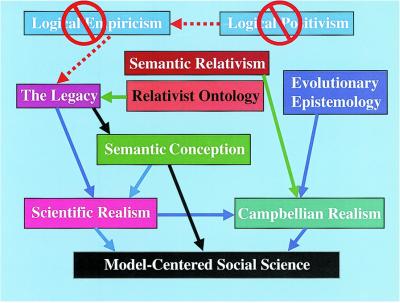

Although the term “postpositivism” often refers to movements such as relativism, poststructuralism, critical theory, and postmodernism, there is another trail of postpositivisms shown in Fig. 1. These lead to a reaffirmation of a realist, model-centered epistemology; this is the new message from philosophy for social science.

Fig. 1.

Recent trends in the philosophy of science.

Campbellian Realism.

A model-centered evolutionary realist epistemology has emerged from the positivist legacy. Elsewhere (9), it is argued that both model-centered realism—accountable to the legacy of positivism—and evolutionary realism—accountable to the dynamics of science after relativism fell—continue under the label Campbellian Realism. Campbell's view may be summarized into a tripartite framework that replaces historical relativism (9) for the purpose of framing a dynamic realist epistemology. First, much of the literature from Lorenz forward has focused on the selectionist evolution of the human brain, our cognitive capabilities, and our visual senses (9, 14), concluding that these capabilities do indeed give us accurate information about the world in which we live (8).

Second, Campbell (15) draws on the hermeneuticists' coherence theory to attach meaning to terms. He argues that, over time, members of a scientific community attach increased scientific validity to an entity as that entity's meanings increasingly cohere across members. The coherentist approach selectively winnows out the worst of theories and approaches a more probable truth.

Third, Campbell (16) combines scientific realism with semantic relativism. Nola (9) separates relativism into three kinds:

Ontologically nihilistic. “Ontological relativism is the view that what exists, whether it be ordinary objects, facts, the entities postulated in science, etc., exists only relative to some relativizer, whether that be a person, a theory or whatever” (9).

Epistemologically nihilistic. Epistemological relativisms may allege that what is known or believed is relativized to individuals, cultures, or frameworks; what is perceived is relative to some incommensurable paradigm; and that there is no general theory of scientific method, form of inquiry, rules of reasoning or evidence that has privileged status. Instead they are variable with respect to times, persons, cultures, and frameworks.

Semantically weak. Semantic relativism holds that truth and falsity are “… relativizable to a host of items from individuals to cultures and frameworks. What is relativized is variously sentences, statements, judgments or beliefs” (9).

Nola observes that relativists (Kuhn, among others) espouse both semantic and epistemological relativism (9). Relativisms familiar to social scientists range across all three kinds. Campbell considers himself a semantic relativist and an ontological realist (16). This produces an ontologically strong relativist dynamic epistemology. On this account, the coherence process within a scientific community continually develops in the context of selectionist testing for ontological validity. Thus, the socially constructed coherence enhanced theories of a scientific community are tested against real-world phenomena. The real-world phenomena, i.e., ontological entities, provide the criterion variables against which semantic variances are eventually narrowed and resolved. Less ontologically correct theoretical entities are winnowed out. This process does not guarantee error free “Truth,” but it does move science in the direction of increased verisimilitude.

Campbellian Realism is crucial because elements of positivism and relativism remain in social science. Campbell folds into one epistemology: (i) dealing with metaphysical terms; (ii) objectivist empirical investigation; (iii) recognition of socially constructed meanings of terms; and (iv) a dynamic process by which a multiparadigm discipline might reduce to fewer, more significant theories. He defines a critical, hypothetical, corrigible, scientific realist, selectionist evolutionary epistemology as follows (9):

A scientific realist postpositivist epistemology that maintains the goal of objectivity in science without excluding metaphysical terms.

A selectionist evolutionary epistemology governing the winnowing out of less probable theories, terms, and beliefs in the search for increased verisimilitude may do so without the danger of systematically replacing metaphysical terms with operational terms.

A postrelativist epistemology that incorporates the dynamics of science without abandoning the goal of objectivity.

An objectivist selectionist evolutionary epistemology that includes as part of its path toward increased verisimilitude the inclusion of, but also the winnowing out of the more fallible, individual interpretations and social constructions of the meanings of theory terms comprising theories purporting to explain an objective external reality.

The epistemological directions of Campbellian realism have strong foundations in the scientific realist and evolutionary epistemology communities (8). The singular advantage of realist method is its empirically based, self-correcting approach to the discovery of truth. Broad consensus does exist that these statements reflect what is best about current philosophy of science.

To date, evolutionary realism has amassed a considerable body of literature, as reviewed by Azevedo (8). Along with Campbell, Azevedo stands as a principal proponent of realist social science. Nothing is more central in Azevedo's analysis than her “mapping model of knowledge”—making her epistemology as model-centered as is presented here. Furthermore, she and we emphasize isolated idealized structures. Her analysis elaborates the initial social constructionist applications of realism to social science by Bhaskar (17) and Campbell (16), and accounts for heterogeneous agent behavior.

The New “Model-Centered” Epistemology.

In the development of Campbellian Realism (9) model-centeredness is a key element of scientific realism. In this section, the development of a model-centered social science is fleshed out by defining the Semantic Conception and its rejection of the axiomatic definition of science. It closes with a scale of scientific excellence based on model-centering.

Models may be iconic or formal. Most social science lives in the shadow of economics departments dominated by economists trained in the context of theoretical (mathematical) economics. Axioms are defined as self-evident truths composed of primitive syntactical terms. In the axiomatic conception of science, one assumes that formalized mathematical statements of fundamental laws reduce back to a basic set of axioms and that the correspondence rule procedure is what attaches discipline-specific semantic interpretations to the common underlying axiomatic syntax. In logical positivism, formal syntax is “interpreted” or given semantic meaning by means of correspondence rules (C-rules). For positivists, theoretical language, VT, expressed in the syntax of axiomatized formal models becomes isomorphic to observation language, VO, as follows (10):

The terms in VT are given an explicit definition in terms of VO by correspondence rules C—that is, for every term “F ” in VT, there must be given a definition for it of the following form: for any x, Fx ≡ Ox.

Thus, given appropriate C-rules, scientists are to assume VT in an “identity” relation with VO.

The advantage of this view is that there seems to be a common platform to science and a rigor of analytical results. This conception eventually died for three reasons: (i) axiomatic formalization and correspondence rules proved untenable and were abandoned; (ii) newer 20th century sciences did not appear to have any common axiomatic roots and were not easily amenable to the closed-system approach of Newtonian mechanics; and (iii) parallel to the demise of the Received View, the Semantic Conception of theories developed as an alternative approach for attaching meaning to syntax.

Essential Elements of the Semantic Conception.

Parallel to the fall of The Received View [Putnam's (9) term combining logical positivism and logical empiricism] and its axiomatic conception, and starting with Beth's (3, 9) seminal work dating back to the Second World War, we see the emergence of the Semantic Conception of theories in the work of Suppes (9), Suppe (7, 10), van Fraassen (9), and Giere (9). Cartwright's (9, 11) “simulacrum account” follows, as does the work of Thompson (18) in biology and Read (19) in anthropology, among others. Suppe (7) says, “the Semantic Conception of Theories today probably is the philosophical analysis of the nature of theories most widely held among philosophers of science.” There are four key aspects:

From axioms to phase-spaces.

Following Suppe, we will use phase-space. A phase-space is defined as a space enveloping the full range of each dimension used to describe an entity. Thus, one might have a regression model for school systems in which variables, e.g., district performance as measured by student achievement, spending per student, and measures of administrative intensity, might range from near zero to whatever number defines the upper limit on each dimension. These dimensions form the axes of an n-dimensional Cartesian phase-space. Phase-spaces are defined by their dimensions and by all possible configurations across time as well. In the Semantic Conception, the quality of a science is measured by how well it explains the dynamics of phase-spaces—not by reduction back to axioms. Suppe (10) recognizes that in social science a theory may be “qualitative” with non-measurable parameters, whereas Giere (9) says theory is the model (which for him is stated in set-theoretic terms—a logical formalism). Nothing precludes “improvements” such as symbolic/syntactic representation, set-theoretic logic, symbolic logic, mathematical proofs, or foundational axioms.
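The phase-space idea can be made concrete with a small sketch. The Python below is illustrative only: the class names, the three school-system dimensions, their bounds, and the sample values are all hypothetical and are not drawn from any study cited here. It treats a phase-space as a set of bounded dimensions and a trajectory as a sequence of configurations over time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """One axis of the phase-space, with its full admissible range."""
    name: str
    lower: float
    upper: float

    def contains(self, value):
        return self.lower <= value <= self.upper


class PhaseSpace:
    """An n-dimensional Cartesian space defined by its dimensions."""

    def __init__(self, dimensions):
        self.dimensions = tuple(dimensions)

    def contains(self, state):
        # A state is one configuration: one value per dimension.
        return len(state) == len(self.dimensions) and all(
            dim.contains(value) for dim, value in zip(self.dimensions, state)
        )


# Hypothetical dimensions and bounds, chosen only for illustration.
school_space = PhaseSpace([
    Dimension("student_achievement", 0.0, 100.0),
    Dimension("spending_per_student", 0.0, 30000.0),
    Dimension("administrative_intensity", 0.0, 1.0),
])

# A trajectory is a sequence of configurations across time (made-up values).
trajectory = [
    (62.0, 9500.0, 0.12),
    (64.5, 9800.0, 0.11),
    (66.0, 10200.0, 0.11),
]

trajectory_inside = all(school_space.contains(state) for state in trajectory)
```

On this reading, a theory earns its keep by explaining how such trajectories move through the space, not by deriving the dimensions from axioms.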

Isolated idealized structures.

Semantic Conception epistemologists observe that scientific theories never represent or explain the full complexity of some phenomenon. A theory (i) “does not attempt to describe all aspects of the phenomena in its intended scope; rather it abstracts certain parameters from the phenomena and attempts to describe the phenomena in terms of just these abstracted parameters” (10); (ii) assumes that the phenomena behave according to the selected parameters included in the theory; and (iii) is typically specified in terms of its several parameters with the full knowledge that no empirical study or experiment could successfully and completely control all of the complexities that might affect the designated parameters. Azevedo (8) uses her mapping metaphor to explain that no map ever attempts to depict the full complexity of the target area, seeking instead to satisfy the specific interests of the mapmaker and its potential users. Similarly, a theory usually predicts the progression of the idealized phase-space over time, predicting shifts from one abstraction to another under the assumed idealized conditions. Classic examples given are the use of point masses, ideal gasses, frictionless slopes, and assumed uniform behavior of atoms, or rational actors. Laboratory experiments are always carried out in the context of closed systems whereby many of the complexities of real-world phenomena are ignored—manipulating one variable, controlling some variables, assuming others are randomized, and ignoring the rest. They are isolated from the complexity of the real world, and the systems represented are idealized.

Model-centered science and bifurcated adequacy tests.

Models compose the core of the Semantic Conception. Its view of the theory–model–phenomena relationship is: (i) theory, model, and phenomena are viewed as independent entities; and (ii) science is bifurcated into two related activities, analytical and ontological, where theory is indirectly linked to phenomena mediated by models. The view presented here, with models centered between theory and phenomena and thereby set up as autonomous agents, is consistent with others in Morgan and Morrison (11), although for us model autonomy comes more directly from the Semantic Conception than for Morrison or Cartwright.

Analytical Adequacy focuses on the theory–model link. It is important to emphasize that in the Semantic Conception “theory” is always expressed by means of a model. “Theory” does not attempt to use its “If A, then B ” epistemology to explain “real-world” behavior. It only explains “model” behavior. It does its testing in the isolated idealized world of the model. A mathematical or computational model is used to structure up aspects of interest within the full complexity of the real-world phenomena and defined as “within the scope” of the theory. Then the model is used to elaborate the “If A, then B ” propositions of the theory to consider how a social system—as modeled—might behave under various conditions.

Ontological Adequacy focuses on the model–phenomena link. Developing a model's ontological adequacy runs parallel with improving the theory–model relationship. How well does the model represent real-world phenomena? How well does an idealized wind-tunnel model of an airplane wing represent the behavior of a full-sized wing in a storm? How well might a computational model from biology, such as Kauffman's (4) NK model that has been applied to firms, actually represent coevolutionary competition in, say, the laptop computer industry? In some cases it involves identifying various coevolutionary structures, that is, behaviors that exist in some domain, and building these effects into the model as dimensions of the phase-space. If each dimension in the model adequately represents an equivalent behavioral effect in the real world, the model is deemed ontologically adequate (9).
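For readers unfamiliar with the NK model, the following Python sketch shows a common textbook formulation, not the specific version in ref. 4 or in any application to firms. It rests on several assumptions: each of N binary traits interacts with its K neighbors to the right on a ring, contributions are drawn uniformly at random, and adaptation is a greedy one-bit-flip walk. The function names are hypothetical.

```python
import itertools
import random


def make_nk_landscape(n, k, seed=0):
    """Build a fitness function over binary strings of length n.

    Assumption: trait i interacts with the k traits to its right (circular).
    """
    rng = random.Random(seed)
    # One lookup table per trait: a random contribution for every
    # combination of the trait's own state and its k neighbors' states.
    tables = [
        {bits: rng.random() for bits in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def fitness(genome):
        total = 0.0
        for i in range(n):
            key = tuple(genome[(i + j) % n] for j in range(k + 1))
            total += tables[i][key]
        return total / n  # mean contribution, so fitness lies in [0, 1]

    return fitness


def adaptive_walk(fitness, genome, max_steps=1000):
    """Flip one trait at a time, keeping any flip that raises fitness."""
    genome = list(genome)
    current = fitness(genome)
    for _ in range(max_steps):
        improved = False
        for i in range(len(genome)):
            genome[i] ^= 1  # try flipping trait i
            candidate = fitness(genome)
            if candidate > current:
                current = candidate
                improved = True
                break
            genome[i] ^= 1  # undo the flip
        if not improved:
            break  # local peak: no single flip helps
    return genome, current


fitness = make_nk_landscape(n=8, k=2, seed=1)
start = [0] * 8
peak, peak_fitness = adaptive_walk(fitness, start)
```

Testing ontological adequacy would then mean asking whether N, K, and the interaction pattern each correspond to something observable in the industry being modeled. The code itself cannot answer that question.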

Theories as families of models.

A difficulty encountered with the axiomatic conception is the belief that only one theory–model conception should build from the underlying axioms. In evolutionary theory there is no single “theory” of evolution. Instead, evolutionary theory is a family of theories that includes theories explaining the processes of variation, natural selection, and heredity, and a taxonomic theory of species (9). Because the Semantic Conception does not require axiomatic reduction, it tolerates multiple theories and models. Thus, “truth” is not defined in terms of reduction to a single model. Set-theoretical, mathematical, and computational models are considered equal contenders to more formally represent real-world phenomena. Under the Semantic Conception, social sciences may progress toward improved analytical and ontological adequacy with families of models and without an axiomatic base.

A Guttman Scale of Effective Science.

So far, four nonrelativist postpositivisms have been identified that remain credible within the present-day philosophy of science community: the Legacy of positivism, Scientific Realism, Selectionist Evolutionary Epistemology, and the Semantic Conception. As a simple means of summarizing the most important elements of these four literatures and showing how well social science measures up in terms of the institutional legitimacy standards inherent in these postpositivisms, seven criteria are distilled that are essential to the pursuit of effective science. The seven criteria appear as a Guttman scale from easiest to most difficult. To be institutionally legitimate, current epistemology holds that theories in social science must be accountable to these criteria. Strong sciences, i.e., physics, chemistry, and biology, meet all criteria. Many, if not most, social science theory applications to social phenomena do not meet any but the first and second criteria.

Avoidance of metaphysical terms.

If we were to hold to the “avoid metaphysical entities at all costs” standard of the positivists, social science would fail this minimal standard because it is difficult to gain direct knowledge of even the basic entity, the social system. Scientific realists Aronson, Harré, and Way (9) remove this problem by virtue of their “principle of epistemic invariance.” They argue that the “metaphysicalness” of terms is independent of scientific progress toward truth. The search and truth-testing process of science is defined as fallibilist with “probabilistic” results. It is less important to know for sure whether the fallibility lies with fully metaphysical terms (e.g., “school governance policy”), eventually detectable terms (e.g., “learning ability”), or as measurement error with regard to observation terms (e.g., “number of school buses”), or with the probability that the explanation or model differs from real-world phenomena (9). Whatever the reason, empirical findings are only true with some probability, and selective elimination of error improves the probability.

Nomic necessity.

Nomic necessity holds that one kind of protection against attempting to explain a possible accidental regularity occurs when rational logic can point to a strong relation between a transcendental generative mechanism and a result: if A were to occur, then regularity B would occur. Consider the “discovery” that “… legitimization affects rates of [organizational] founding and mortality… ” (9). The posited causal proposition is “if legitimacy, then growth.” But there is no widely agreed on underlying causal structure, mechanism, or process that explains the observed regularity. Because there are many firms with no legitimacy that have grown rapidly because of a good product, the proposition seems false. But one aspect of the theory of population dynamics is clearly not an accidental regularity. In a niche with defined resources, a population will grow almost exponentially when it is small relative to the resources available, and growth will approach zero as it reaches the carrying capacity of the niche. This proposition explains changes in population growth by identifying an underlying causal mechanism (the difference between resources used and resources available) formalized as the Lotka–Volterra logistic growth model: dN/dt = rN(K − N)/K, where N is population size, r is the intrinsic growth rate, and K is the carrying capacity. The model expresses the underlying causal mechanism, and it is presumed that if the variables are measured and their relationship over time is as the model predicts, then the underlying mechanism is most likely present, truth always being probable and fallible.
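The two behaviors claimed for the population model, near-exponential growth when the population is small and growth approaching zero at carrying capacity, can be checked numerically. The Python sketch below uses the standard logistic form dN/dt = rN(K − N)/K with a simple forward-Euler step. The parameter values, step size, and function names are assumptions chosen for illustration, not estimates from any population study.

```python
def logistic_growth_rate(n, r, k):
    """Instantaneous growth dN/dt = r * N * (K - N) / K."""
    return r * n * (k - n) / k


def simulate(n0, r, k, dt, steps):
    """Forward-Euler integration of the logistic model (a rough numerical sketch)."""
    sizes = [n0]
    for _ in range(steps):
        n = sizes[-1]
        sizes.append(n + dt * logistic_growth_rate(n, r, k))
    return sizes


# Illustrative, made-up parameters: small founding population, K = 1000.
R, K = 0.5, 1000.0
sizes = simulate(n0=10.0, r=R, k=K, dt=0.1, steps=400)

# Per-capita growth rate when the population is small relative to K:
# close to r, which is what "almost exponential" growth means.
early_per_capita = logistic_growth_rate(10.0, R, K) / 10.0

# Growth rate exactly at carrying capacity: zero.
rate_at_capacity = logistic_growth_rate(K, R, K)
```

With these settings the simulated population rises monotonically and levels off just below K, which is the regularity the causal mechanism is meant to explain.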

Bifurcated model-centered science.

“Model-centeredness” raises two questions: (i) are theories mathematically or computationally formalized; and (ii) are models the center of bifurcated scientific activities, namely the theory–model link and the model–phenomena link? A casual review of most social science journals indicates that social science is a long way from routinely formalizing the meaning of a theoretical explanation, as is common in physics and economics. Few data-based empirical studies in social science have the mission of empirically testing the real-world fit of a formalized model; they mostly try to test unformalized hypotheses directly on the full complexity of the real world.

Experiments.

Meeting nomic necessity by specifying underlying causal mechanisms is only half the problem. Using experiments to test propositions reflecting law-like relations is critically important. Cartwright (11) goes so far as to say that even in physics all theories are attached to causal findings—like stamps on an envelope. Lalonde (20) shows that the belief of many econometricians—that econometrics substitutes for experiments (including even the 2-stage model leading to Heckman's Nobel Prize)—is false. The recourse is to set up an experiment, take away cause A, and see whether regularity B disappears—add A back in and see whether B reappears.

Separation of analytical and ontological tests.

This standard augments the nomic necessity, model-centeredness, and analytical results criteria by separating theory-testing from model-testing. In mature sciences, theorizing and experimenting are usually done by different scientists. This practice presumes that most people are unlikely to be state-of-the-art in both. Thus, if we are to have an effective science applied to social systems, we should eventually see two separate activities: (i) theoreticians working on the theory–model link, using mathematical or computational model development, with analytical tests carried out by means of the theory–model link; and (ii) empiricists linking model-substructures to real-world structures. The prevailing social science tendency toward attempting only direct theory–phenomena adequacy tests follows a mistaken view of how effective sciences progress.

Verisimilitude by means of selection.

For selection to produce movement toward less fallible truth, the previous standards have to have been met across an extensive mosaic of trial-and-error learning adhering to separate analytical and ontological adequacy tests. Population ecology meets this standard. As the Baum (21) review indicates, there is a 20-year history of theory–model and model–phenomena studies with a steady inclination over the years to refine the adequacy of both links by the systematic removal of the more fallible theories and/or model ideas and the introduction and further testing of new ideas.

Instrumental reliability.

Classical physics achieves success because its theories have high instrumental reliability, meaning that they have high analytical adequacy—every time a proposition is tested in a well-constructed test situation the theories predict correctly and reliably. It has high ontological adequacy because its formal models contain structures or phase-space dimensions that accurately represent real-world phenomena “within the scope” of various theories used by engineers and scientists for their studies. Idealizations of models in classical physics have high isomorphism with the physical systems about which scientists and engineers are able to collect data.

It seems unlikely that social science will be able to make individual event predictions. Even if social science moves out from under its archaic view of research—that theories are tested by looking directly to real-world phenomena—it still will suffer in instrumental reliability compared with the natural sciences. Natural scientists' lab experiments more reliably test nomic-based propositions, and their lab experiments have much higher ontological representative accuracy. Consequently, natural science theories will usually produce higher instrumental reliability.

The good news is that the Semantic Conception makes this standard easier to achieve. Our chances improve if we split analytical adequacy from ontological adequacy. By having some research focus only on the predictive aspects of a theory–model link, the chances improve of finding models that test propositions with higher analytical instrumental reliability—the complexities of uncontrolled real-world phenomena are absent. By having other research activities focus only on comparing model-structures and processes across the model–phenomena link, ontological instrumental reliability will also improve. In these activities, reliability hinges on the isomorphism of the generative effects causing both model and real-world behavior, not on whether predictions occur with high probability. Thus, in the Semantic Conception, instrumental reliability now rests on the joint probability of two elements: predictive analytic reliability and model-structure reliability, each of which is higher by itself.

Instrumental reliability is no guarantee of improved verisimilitude in transcendental realism. The Semantic Conception protects against this with the bifurcation discussed above. Instrumental reliability does not guarantee “predictive analytical reliability” tests of theoretical relationships about transcendental causes. If this part fails, the truth-test fails. However, this does not negate the “success” and legitimacy of a science resulting from reliable instrumental operational-level event predictions even though the theory may be false. Ideally, analytic adequacy and verisimilitude eventually catch up and replace false theories.

If science is not based on nomic necessity and centered on formalized computational or mathematical models, it has little chance of moving up the Guttman scale. Such is the message of late 20th century postpositivist philosophy of normal science. This message tells us very clearly that in order for social science to improve its institutional legitimacy it must become model-centered.

Molding Effects of Models on Social Science

Models as Autonomous Agents.

There can be little doubt that mathematical models have dominated science since Newton. Further, mathematically constrained language (logical discourse), since the Vienna Circle (circa 1907), has come to define good science in the image of classical physics. Indeed, mathematics is good for a variety of things in science. More broadly, math plays two roles in science. In logical positivism (which morphed into logical empiricism; see ref. 10), math supplied the logical rigor aimed at assuring the truth integrity of analytical (theoretical) statements. As Read (19) observes, the use of math for finding “numbers” actually is less important in science than its use in testing for rigorous thinking. But, as is wonderfully evident in the various chapters in the Morgan and Morrison (11) anthology, math is also used as an efficient substitute for iconic models in building up a “working” model valuable for understanding not only how an aspect of the phenomena under study behaves (the empirical roots of a model), but for better understanding the interrelation of the various elements comprising a transcendental realist explanatory theory (the theoretical roots).

Traditionally, a model has been treated as a more or less accurate “mirroring” of theory or phenomena, as a billiard ball model might mirror atoms. In this role it is a sort of “catalyst” that speeds up the course of science but without altering the chemistry of the ingredients. Morgan and Morrison and their contributors take dead aim at this view, however, showing that models are autonomous agents that can affect the chemistry. This is perhaps best illustrated in a figure supplied by Boumans (11). He observes that Cartwright, in her classic 1983 book, “… conceive[s] models as instruments to bridge the gap between theory and data.” Boumans gives ample evidence that many ingredients influence the final nature of a model: metaphors, analogies, policy views, empirical data, math techniques, math concepts, and theoretical notions. Boumans' analyses are based on business cycle models by Kalecki, Frisch, and Tinbergen in the 1930s and by Lucas (11); they clearly illustrate the warping that results from “mathematical molding,” mostly for tractability reasons, and the influence of the various nontheory and nondata ingredients.

Models thus become autonomous agents both through math molding and through the influence of all of the other ingredients. Because the other ingredients could reasonably influence agent-based models as well as math models (both are formal, symbol-based models), and because math models dominate formal modeling in social science (mostly in economics), we now focus only on the molding effects of math models rooted in classical physics. As is evident from the four previously mentioned business cycle models, Mirowski's (2) broad discussion (not included here), and Read's (19) analysis (see below), the math molding effect is pervasive.

Much of the molding effect of math as an autonomous model/agent, as developed in classical physics and economics, stems from two heroic assumptions. First, mathematicians in classical physics made the “instrumentally convenient” homogeneity assumption, which made the math more tractable. Second, physicists principally studied phenomena under the governance of the First Law of Thermodynamics (2) and, within this Law, made the equilibrium assumption. Here the math model accounted for the translation of order from one form to another and presumed that all phenomena varied around equilibrium points.¶
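What the homogeneity assumption discards can be made concrete with a minimal Python sketch. It is an illustration only and is not taken from any of the works cited. The convex response function `response`, the two small populations, and every name in the code are hypothetical. The sketch compares an aggregate computed from a single “average” agent with the same aggregate computed agent by agent: the two agree when agents are identical and diverge once agents differ and their responses are nonlinear.

```python
from statistics import mean

def response(endowment: float) -> float:
    """Hypothetical nonlinear (convex) individual response to an endowment."""
    return endowment ** 2

def aggregate_homogeneous(endowments: list[float]) -> float:
    """Treat every agent as the average agent (the homogeneity assumption)."""
    representative = mean(endowments)
    return len(endowments) * response(representative)

def aggregate_heterogeneous(endowments: list[float]) -> float:
    """Let each agent respond to its own endowment, then sum the responses."""
    return sum(response(e) for e in endowments)

# Two hypothetical populations with the same mean endowment (2.0).
identical = [2.0, 2.0, 2.0, 2.0]
diverse = [0.0, 1.0, 3.0, 4.0]

# Error introduced by the homogeneity assumption in each population.
gap_identical = aggregate_heterogeneous(identical) - aggregate_homogeneous(identical)
gap_diverse = aggregate_heterogeneous(diverse) - aggregate_homogeneous(diverse)
```

With these made-up numbers the gap is zero for the identical population and positive for the diverse one, so the convenience of the assumption is paid for exactly where agents differ.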

Math's Molding Effects on Sociocultural Analysis.

Read's (19) analysis of the applications of math modeling in archaeology illustrates how the classical physics roots of math modeling and the needs of tractability give rise to assumptions that are demonstrably antithetical to a correct understanding, modeling, and theorizing of human social behavior. Although his analysis is ostensibly about archaeology, it applies generally to sociocultural systems. Most telling are assumptions he identifies that combine to show just how much social phenomena have to be warped to fit the tractability constraints of the rate studies framed within the math molding process of calculus. They focus on universality, stability, equilibrium, external forces, determinism, global dynamics at the expense of individual dynamics, etc.

Given the molding effect of all these assumptions, it is especially instructive to quote Read, the mathematician, worrying about equilibrium-based mathematical applications to archaeology and sociocultural systems.

In linking “empirically defined relationships with mathematically defined relationships… [and] the symbolic with the empirical domain… a number of deep issues… arise… . These issues relate, in particular, to the ability of human systems to change and modify themselves according to goals that change through time, on the one hand, and the common assumption of relative stability of the structure of … [theoretical] models used to express formal properties of systems, on the other hand… . A major challenge facing effective—mathematical—modeling of the human systems considered by archaeologists is to develop models that can take into account this capacity for self-modification according to internally constructed and defined goals.”

“In part, the difficulty is conceptual and stems from reifying the society as an entity that responds to forces acting on it, much as a physical object responds in its movements to forces acting on it. For the physical object, the effects of forces on motion are well known and a particular situation can, in principle, be examined through the appropriate application of mathematical representation of these effects along with suitable information on boundary and initial conditions. It is far from evident that a similar framework applies to whole societies.”

“Perhaps because culture, except in its material products, is not directly observable in archaeological data, and perhaps because the things observable are directly the result of individual behavior, there has been much emphasis on purported ‘laws’ of behavior as the foundation for the explanatory arguments that archaeologists are trying to develop. This is not likely to succeed. To the extent that there are ‘laws’ affecting human behavior, they must be due to properties of the mind that are consequences of selection acting on genetic information… ‘laws’ of behavior are inevitably of a different character than laws of physics such as F = ma. The latter, apparently, is fundamental to the universe itself; behavioral ‘laws’ such as ‘rational decision making’ are true only to the extent to which there has been selection for a mind that processes and acts on information in this manner… . Without virtually isomorphic mapping from genetic information to properties of the mind, searching for universal laws of behavior … is a chimera.”

Common throughout these and similar statements are Read's observations about “the ability of [reified] human systems to change and modify themselves,” be “self-reflective,” respond passively to “forces acting” from outside, “manipulation by subgroups,” “self-evaluation,” “self-reflection,” “affecting and defining how they are going to change,” and the “chimera” of searching for “behavioral laws” reflecting the effects of external forces.

Molding Effects on Economic Analysis.

The “attack” on the homogeneity and equilibrium assumptions in Orthodox Economics occurred when Nelson and Winter (22) tried to shift the exemplar science from physics to biology. They argue that Orthodoxy takes a static view of order-creation in economies, preferring instead to develop the mathematics of thermodynamics in studying the resolution of supply/demand imbalances within a broader equilibrium context. Also, Orthodoxy takes a static or instantaneous conception of maximization and equilibrium. Nelson and Winter introduce Darwinian selection as a dynamic process over time, substituting routines for genes, search for mutation, and selection via economic competition.
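The three substitutions named above (routines for genes, search for mutation, competition for selection) can be sketched as a short simulation loop. This is a deliberately simplified illustration and not Nelson and Winter's actual model: the single “productivity” number standing in for a routine, the Gaussian search step, the replicator-style update of market shares, and all parameter values are assumptions made for the example.

```python
import random

def share_weighted_mean(productivity, shares):
    """Industry-level productivity, weighting each firm by its market share."""
    return sum(p * s for p, s in zip(productivity, shares))

def evolve(productivity, shares, rounds, rng):
    """Iterate search and selection; returns new productivity and share lists."""
    productivity = list(productivity)
    shares = list(shares)
    for _ in range(rounds):
        # Search (the analogue of mutation): each firm tries a random variation
        # of its routine and keeps it only if it performs better.
        for i, current in enumerate(productivity):
            trial = current + rng.gauss(0.0, 0.05)
            if trial > current:
                productivity[i] = trial
        # Selection via competition: firms with above-average productivity
        # gain market share, the rest lose it (a replicator-style rule).
        average = share_weighted_mean(productivity, shares)
        shares = [s * p / average for p, s in zip(productivity, shares)]
    return productivity, shares

rng = random.Random(1)  # fixed seed so the run is repeatable
initial_productivity = [rng.uniform(0.5, 1.5) for _ in range(10)]
initial_shares = [0.1] * 10

final_productivity, final_shares = evolve(initial_productivity, initial_shares, 30, rng)
before = share_weighted_mean(initial_productivity, initial_shares)
after = share_weighted_mean(final_productivity, final_shares)
```

Nothing in the loop computes or assumes an equilibrium; order in the form of a shifting distribution of market shares emerges over time from firm-level search and competition.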

Rosenberg¶ observes that Nelson and Winter's book fails because Orthodoxy still holds to energy conservation mathematics (under the First Law of Thermodynamics) to gain the prediction advantages of thermodynamic equilibrium and the latter framework's roots in the axioms of Newton's orbital mechanics. Also, whatever weakness in predictive power Orthodoxy has, Nelson and Winter's approach failed to improve it. Therefore, economists had no reason to abandon Orthodoxy since, following physicists, they emphasize predictive science. Rosenberg goes on to note that biologists have discovered that the mathematics of economic theory actually fits biology better than economics, especially because gene frequency analysis meets the equilibrium stability requirement for mathematical prediction. He notes, in addition, that two other critical assumptions of mathematical economics, infinite population size and omniscient agents, hold better in biology than in economics.

In parallel, complexity scientists Hinterberger¶ and Arthur et al. (23) critique economic orthodoxy and its reliance on the equilibrium assumption. The latter describe economies as follows:

Dispersed Interaction—dispersed, possibly heterogeneous, agents active in parallel;

No Global Controller or Cause—coevolution of agent interactions;

Many Levels of Organization—agents at lower levels create contexts at higher levels;

Continual Adaptation—agents revise their adaptive behavior continually;

Perpetual Novelty—by changing in ways that allow them to depend on new resources, agents coevolve with resource changes to occupy new habitats;

Out-of-Equilibrium Dynamics—economies operate “far from equilibrium,” meaning that economies are induced by the pressure of trade imbalances, individual to individual, firm to firm, country to country, etc.
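Several of the features listed above can be illustrated with a small agent-based sketch in Python. It is an assumption-laden toy and not a model from Arthur et al.: the ring of neighbors, the `generosity` parameter, the transfer rule, and the adaptation rule are all hypothetical. It shows only dispersed interaction among heterogeneous agents, the absence of a global controller, and continual adaptation; it makes no claim about the other features.

```python
import random
from dataclasses import dataclass

@dataclass
class Agent:
    wealth: float      # current holdings
    generosity: float  # heterogeneous share of wealth offered in a trade

def step(agents: list[Agent], rng: random.Random) -> None:
    """One round of pairwise, local interaction with no central coordinator."""
    order = list(range(len(agents)))
    rng.shuffle(order)  # agents act in arbitrary order, not on a central schedule
    for i in order:
        j = (i + rng.choice([-1, 1])) % len(agents)  # interact with a ring neighbor only
        giver, taker = agents[i], agents[j]
        transfer = giver.generosity * giver.wealth
        giver.wealth -= transfer
        taker.wealth += transfer
        # Continual adaptation: a giver left poorer than its neighbor offers
        # less next time; otherwise it offers more, up to a cap of one half.
        if giver.wealth < taker.wealth:
            giver.generosity *= 0.9
        else:
            giver.generosity = min(0.5, giver.generosity * 1.1)

def simulate(n_agents: int = 20, rounds: int = 50, seed: int = 0) -> list[Agent]:
    """Build a heterogeneous population and let it interact for some rounds."""
    rng = random.Random(seed)
    agents = [Agent(wealth=10.0, generosity=rng.uniform(0.05, 0.3))
              for _ in range(n_agents)]
    for _ in range(rounds):
        step(agents, rng)
    return agents

agents = simulate()
total_wealth = sum(a.wealth for a in agents)
spread = max(a.wealth for a in agents) - min(a.wealth for a in agents)
```

Total wealth is conserved by construction, yet its distribution across agents is produced entirely by local exchanges and individual adaptation rather than by any aggregate equation.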

Despite their anthology's focus on economies as complex systems, after reviewing all of the chapters, most of which rely on mathematical modeling, the editors ask, “… in what way do equilibrium calculations provide insight into emergence?” (23). Durlauf (24) says, “a key import of the rise of new classical economics has been to change the primitive constituents of aggregate economic models: although Keynesian models used aggregate structural relationships as primitives, in new classical models individual agents are the primitives so that all aggregate relationships are emergent.” Scrapping the equilibrium and homogeneity assumptions and emphasizing instead the role of heterogeneous agents in social order-creation processes is what brings the ontological view of complexity scientists in line with the ontological views of postmodernists.¶

Parallels Between Connectionist Modeling and Postmodernism

Postmodernism rails against positivism and normal science in general (25) and has a “lunatic fringe.” But, at its core is a process of sociolinguistic order-creation isomorphic to processes in agent-based modeling. The order-creation core, when connected with agent-based modeling, provides an additional platform of institutional legitimacy for social science. In short, its ontology is on target but its trashing of normal science epistemology is based on defunct logical positivist dictums.

The term postmodernism originated with the artists and art critics of New York in the 1960s (26). Then French theorists such as Saussure, Derrida, and Lyotard took it up. Subsequently, it was picked up by those in the “Science, Technology, and Society Studies… feminists and Marxists of every stripe, ethnomethodologists, deconstructionists, sociologists of knowledge, and critical theorists” (25). From Koertge's perspective, some key elements of postmodernism and its critique of science are:

“… content and results [of science]… shaped by… local historical and cultural context;”

“… products of scientific inquiry, the so-called laws of nature, must always be viewed as social constructions. Their validity depends on the consensus of ‘experts’ in just the same way as the legitimacy of a pope depends on a council of cardinals;”

“… the results of scientific inquiry are profoundly and importantly shaped by the ideological agendas of powerful elites.”

“… scientific knowledge is just ‘one story among many’… Euroscience is not objectively superior to the various ethnosciences and shamanisms described by anthropologists or invented by Afrocentrists.”

A comprehensive view of postmodernism is elusive because its literature is massive and exceedingly diverse (27). But if a “grand narrative” were framed, it would be self-refuting, because postmodernism emphasizes localized language games searching for instabilities. Further, it interweaves effects of politics, technology, language, culture, capitalism, science, and positivist/relativist epistemology as society has moved from the Industrial Revolution through the 20th century (6). Even so, Alvesson and Deetz capture some elements of postmodernism in the following points (27):

Reality, or “‘natural’ objects,” can never have meaning that is less transient than the meaning of texts that are locally and “discursively produced,” often from the perspective of creating instability and novelty rather than permanency.

“Fragmented identities” dominate, resulting in subjective and localized production of text. Meanings created by autonomous individuals dominate over objective “essential” truths proposed by collectives (of people).

The “indecidabilities of language take precedence over language as a mirror of reality.”

The impossibility of separating political power from processes of knowledge production undermines the presumed objectivity and truth of knowledge so produced—it loses its “sense of innocence and neutrality.”

The “real world” increasingly appears as “simulacra”—models, simulations, computer images, etc.—that “take precedence in contemporary social order.”

The key insight underlying the claim that postmodernism offers institutional legitimacy to social science comes when the latter is viewed as creating order through heterogeneous agent behavior. This insight is from a wonderful book by Paul Cilliers (13). He draws principally from Saussure, Derrida, and Lyotard. He interprets postmodernism from the perspective of a neural net modeler, emphasizing connections among agents rather than attributes of the agents themselves, based on how brains and distributed intelligence function. In the connectionist perspective, brain functioning is not in the neurons, or “in the network” but rather “is the network” (6). Distributed intelligence also characterizes most social systems (6). Cilliers sets out ten attributes of complex adaptive systems (shown in quotation marks) and connects these attributes to key elements of postmodern society:

“Complex systems consist of a large number of elements.” Postmodernists focus on individuality, fragmented identities, and localized discourse.

“The elements in a complex system interact dynamically.” Postmodernists emphasize that no agent is isolated; their subjectivity is an intertwined “weave” of texture in which they are decentered by a constant influx of meanings from their network of connections.

“… Interaction is fairly rich.” Postmodernists view agents as subject to a constant flow and alteration of meanings applied to texts they are using at any given time.

“Interactions are nonlinear.” Postmodernists hold that interactions of multiple voices and local interactions lead to change in meanings of texts, that is, emergent meanings that do not flow evenly. Thus, social interaction is not predictably systematic, power and influence are not evenly distributed, and few things are stable over time. Emergent interpretations and consequent social interactions are nonlinear and could show large change outcomes from small beginnings.

“The interactions are fairly short range.” Postmodernists emphasize “local determination” and “multiplicity of local ‘discourses’.” Locally determined, socially constructed group level meanings, however, inevitably seep out to influence other groups and agents within them.

“There are loops in the interconnections.” Postmodernists emphasize reflexivity. Local agent interactions may form group level coherence and common meanings. These then, reflexively, supervene back down to influence the lower-level agents. This fuels their view that meanings—interpretations of terms—are constantly in flux—“they are contingent and provisional, pertaining to a certain context and a certain time frame.”

“Complex systems are open systems.” An implicit, pervasive subtext in postmodernism is that agents, groups of agents, and groups of groups, etc., are all subject to outside influences on their interpretations of meanings. Postmodernists see modern societies as subject to globalization and to the complication of influence networks.

“Complex systems operate under conditions far from equilibrium.” McKelvey translates the concept of “far from equilibrium” into adaptive tension.¶ In postmodern society the mass media provide local agents and groups with constant information about disparities in the human condition in general. The disparities set up adaptive tensions generating energy and information flows that create conditions (i) fostering social self-organization and increasing complexity and (ii) disrupting equilibria, which (iii) lead to rapid technological change, scientific advancement, and new knowledge, which in turn reflex back to create more disparity and nonlinearity.

“Complex systems have histories.” Postmodernists see history as individually and locally interpreted. Therefore, histories do not appear as grand narratives uniformly interpreted across agents.

“Individual elements are ignorant of the behavior of the whole system in which they are embedded.” Agents are not equally well connected with all other parts of a larger system. Any agent's view of a larger system is at least in part colored by the localized interpretations of other interconnected agents.

Postmodernism is notorious for its anti-science views (25). Many anti-science interpretations may be dismissed as totally off the mark. In the evolutionary epistemological terms of Campbellian realism, they will be quickly winnowed out of epistemological discourse. It is also true that much of postmodernist rhetoric is based on the positivists' reconstructions of epistemology based on classical physicists' linear deterministic equilibrium analyses of phenomena. As such, its rhetoric is archaic—it is based on a reconstruction of science practice that has since been discredited (10). The core of postmodernism described here does, however, support a strong interconnection between “new” normal science—as reflected in complexity science—and postmodernism; both rest on parallel views of socially connected, autonomous, heterogeneous, human agents.

Conclusion: Agent-Based Models as “Philosophically Correct”

The title of this paper, “Foundations of ‘New’ Social Science,” may sound pretentious. But consider the following.

Model-Centered Science.

Toward the end of the 20th century, philosophers moved away from positivism to adopt a more probabilistic view of truth statements. Campbell's contribution is to recognize that real-world phenomena may act as external criterion variables against which theories may be tested without social scientists having to reject individual interpretationist tendencies and social construction. Models are the central feature of the Semantic Conception as in the bifurcation of scientific activity into tests of the theory–model relationship and the model–phenomena link. In this view, theory papers should end with a (preferably) formalized model and empirical papers should start by aiming to test the ontological adequacy of one. Most social science papers are not so oriented.

Math Molding Effects.

The message from the Morgan and Morrison chapters speaks to the autonomous influence of math models on science. Read points to the fundamental molding effect of math models on social science. He points to their fundamental limitation, “… A major challenge facing effective—mathematical—modeling… is to develop models that can take into account… [agents'] capacity for self-modification according to internally constructed and defined goals.” Basically, the assumptions required for tractable mathematics steer models away from the most important aspects of human behavior. To the extent that there are formal models in social science they tend to be math models—a clear implication to be drawn from Henrickson's citation survey. Few social scientists use models immune to the molding effects of the math model.

Postmodernism's Connectionist Core.

We do need to give relativists and postmodernists credit for reminding us that “We ARE the Brownian Motion!” Most natural scientists are separated from their “agents” by vast size or distance barriers. Social scientists are agents doing their science right at the agent level. Most sciences do not have this luxury. But it also means a fundamental difference. We are face to face with stochastic heterogeneous agents and their interconnections. Social scientists should want a scientific modeling epistemology designed for studying bottom-up order-creation by agents. Unfortunately, many postmodernists base their anti-science rhetoric on an abandoned epistemology and ignore a “new” normal science ontological view very much parallel to its own. As Cilliers argues, postmodernism zeros in on the web of interconnections among agents that give rise to localized scientific textual meanings. In fact, its ontology parallels that of complexity scientists. The lesson from complexity science is that natural scientists have begun finding ways to practice normal science without assuming away the activities of heterogeneous autonomous agents. There is no reason, now, why social scientists cannot combine “new” normal science epistemology with postmodernist ontology. Yet very few have done so.

Legitimacy.

Given the connectionist parallels between complexity science and postmodernist views of human agents, we conclude that their ontological views are isomorphic. Complexity science ontology has emerged from the foundational classical and quantum physics and biology. Postmodernist ontology has emerged from an analysis of the human condition. Thus, an epistemology based on complexity science and its agent-based modeling approaches may be applied to social science ontology as reflected in the agent-based ontology of postmodernism. “New” social science also draws legitimacy from other sources:

Campbellian realism, coupled with the model-centered science of the Semantic Conception, bases scientific legitimacy on theories aimed at explaining transcendental causal mechanisms or processes, the insertion of models as an essential element of epistemology, and the use of real-world phenomena as the criterion variable leading to a winnowing out of less plausible social constructions.

The core of postmodernism sets forth an ontology that emphasizes meanings based on the changing interconnections among autonomous, heterogeneous social agents. This connectionist, social agent-based ontology from postmodernism offers social science a second basis of improved legitimacy.

The “new” normal science emerging from complexity science has developed an agent- and model-centered epistemology that couples with the ontological legitimacy from postmodernism. This offers a third basis of improved legitimacy.

Model-centered science is a double-edged sword. On the one hand, formalized models are reaffirmed as a critical element in the already legitimate sciences and receive added legitimacy from the Semantic Conception in philosophy of science. On the other, the more we learn about models as autonomous agents—that offer a third influence on the course of science, in addition to theory and data—the more we see the problematic molding effects math models have on social science. In short, math models are inconsistent with the new agent- and model-centered epistemology, as they require assuming away both the core postmodernist ontology and “new” normal science ontology. Thus, alternative formal modeling approaches—such as agent-based modeling—gain credibility. This offers a fourth basis of improved legitimacy.

In a classic paper, Cronbach (28) divided research into two essential technologies: experiments and correlations. Since then we have added math modeling. As Henrickson's journal survey shows, nonlinear computational models are rapidly on the increase in the natural sciences. Rounding out the social scientist's research tool bag and finding a technology that fits well with social phenomena surely adds a fifth basis of improved legitimacy.

Agent-based models offer a platform for model-centered science that rests on the five legitimacy bases described in the previous points: agent-based models support a model-centered social science that rests on strongly legitimated connectionist, autonomous, and heterogeneous agent-based ontology and epistemology. Yet very few social scientists have connected the use of agent-based models with the five bases of legitimacy.

Agent-based modeling should emerge as the preferred modeling approach. Significant future contributions to social science will emerge more quickly if science-based beliefs are based on the joint results of both agent-based modeling and subsequent empirical corroboration. Each of the five bases of legitimacy that support our use of the word “should” rests on solid ontological and epistemological arguments, where “analytical adequacy” builds from agent-based models and “ontological adequacy” builds from the presumption that social behavior results from the interactions of heterogeneous agents.

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “Adaptive Agents, Intelligence, and Emergent Human Organization: Capturing Complexity through Agent-Based Modeling,” held October 4–6, 2001, at the Arnold and Mabel Beckman Center of the National Academies of Science and Engineering in Irvine, CA.

A recent view is that the most significant dynamics in bio- and econospheres are not variances around equilibria, but are caused by the interactions of autonomous, heterogeneous agents energized by contextually imposed tensions. A review of these causes of emergent order in physics, biology, and the econosphere can be found in McKelvey's paper presented at the Workshop on Thermodynamics and Complexity Applied to Organizations, European Institute for Advanced Studies in Management, Brussels, September 28, 2001.

References

- 1. Henrickson, L. (2002) Nonlinear Dynamics, Psychology and the Life Sciences, in press.

- 2. Mirowski, P. (1989) More Heat than Light (Cambridge Univ. Press, Cambridge, U.K.).

- 3. McKelvey, B. (1997) Org. Sci. 8, 351–380.

- 4. Kauffman, S. A. (1993) The Origins of Order (Oxford Univ. Press, New York).

- 5. Kauffman, S. (2000) Investigations (Oxford Univ. Press, New York).

- 6. McKelvey, B. (2001) Int. J. Innovation Manage. 5, 181–212.

- 7. Suppe, F. (1989) The Semantic Conception of Theories and Scientific Realism (Univ. of Illinois Press, Urbana-Champaign).

- 8. Azevedo, J. (1997) Mapping Reality (State Univ. of New York Press, New York).

- 9. McKelvey, B. (2001) in Companion to Organizations, ed. Baum, J. A. C. (Blackwell, Oxford), pp. 752–780.

- 10. Suppe, F. (1977) The Structure of Scientific Theories (Univ. of Chicago Press, Chicago).

- 11. Morgan, M. S. & Morrison, M. (2000) Models as Mediators (Cambridge Univ. Press, Cambridge, U.K.).

- 12. Mainzer, K. (1997) Thinking in Complexity (Springer, New York).

- 13. Cilliers, P. (1998) Complexity and Postmodernism (Routledge, London).

- 14. Campbell, D. T. (1974) in The Philosophy of Karl Popper, The Library of Living Philosophers, ed. Schilpp, P. A. (Open Court, La Salle, IL), Vol. 14, parts I and II, pp. 47–89.

- 15. Campbell, D. T. (1991) Int. J. Educ. Res. 15, 587–597.

- 16. Campbell, D. T. & Paller, B. T. (1989) in Issues in Evolutionary Epistemology, eds. Hahlweg, K. & Hooker, C. A. (Univ. of New York Press, New York), pp. 231–257.

- 17. Bhaskar, R. (1975) A Realist Theory of Science (Leeds Books, London).

- 18. Thompson, P. (1989) The Structure of Biological Theories (State Univ. of New York Press, Albany).

- 19. Read, D. W. (1990) in Mathematics and Information Science in Archaeology, ed. Voorrips, A. (Holos, Bonn), pp. 29–60.

- 20. Lalonde, R. J. (1986) Am. Econ. Rev. 76, 604–620.

- 21. Baum, J. A. C. (1996) in Handbook of Organization Studies, eds. Clegg, S. R., Hardy, C. & Nord, W. R. (Sage, Thousand Oaks, CA), pp. 77–114.

- 22. Nelson, R. & Winter, S. (1982) An Evolutionary Theory of Economic Change (Belknap, Cambridge, MA).

- 23. Arthur, W. B., Durlauf, S. N. & Lane, D. A. (1997) The Economy as an Evolving Complex System. Proceedings of the Santa Fe Institute (Addison–Wesley, Reading, MA), Vol. XXVII, pp. 1–14.

- 24. Durlauf, S. N. (1997) Complexity 2, 31–37.

- 25. Koertge, N. (1998) A House Built on Sand (Oxford Univ. Press, New York).

- 26. Sarup, M. (1993) An Introductory Guide to Post-Structuralism and Postmodernism (Univ. of Georgia Press, Athens, GA).

- 27. Alvesson, M. & Deetz, S. (1996) in Handbook of Organization Studies, eds. Clegg, S. R., Hardy, C. & Nord, W. R. (Sage, Thousand Oaks, CA), pp. 191–217.

- 28. Cronbach, L. J. (1957) Am. Psychol. 12, 671–684.