Summary

To overcome the resistance to applications of biotechnology, research on risk perception must take a closer look at the public's reasons for rejecting this technology

As the intense debate about genetically modified (GM) crops and food in Europe and elsewhere shows, the public has become increasingly wary of new technologies that harbour possible risks. Probably even more important, people are not willing to accept some applications of these technologies without a prior and thorough debate on their implications and potential hazards (Frewer, 2003). Although some of the earlier resistance has disappeared, for instance against the use of GM bacteria to produce drugs, current developments and applications of gene technology still give rise to controversy. Debates of this kind are not new: molecular biology shares this fate with other technological advances, most notably nuclear power production.

The controversies over modern technologies usually centre on their risks: the known, potential and as yet unknown hazards that they may pose to human health, the environment or society. A major problem in these discussions is that experts (scientists and engineers) and the general public often have very different notions of risk, which has hampered communication between these groups. This is due not only to communication strategies that played down the role of dialogue in the public communication of risk, but also to a flawed view of the public's attitude to, and experience of, risk. This article therefore focuses on risk perception or, more specifically, on how the public perceives and understands risk in contrast to experts' calculations. A better understanding of people's reactions to new developments helps not only to devise better communication strategies but also to identify new and potential problems. In fact, the level of perceived risk of a new technology or product is an important early indicator of the public's alertness to its potential hazards.

Owing to its important role in communication, research on risk perception has become increasingly important for the management of technology and hazards, because risk perception is believed to be a driving force behind policy decisions, such as the demand for risk reduction. Social scientists, many of them psychologists, have conducted studies of risk perception and attitudes for almost 25 years (Slovic, 2000), but there is no consensus on what drives public attitudes. The field was stimulated by a seminal paper by Fischhoff and colleagues (Fischhoff et al, 1978), which established what was to become the paradigmatic approach to risk perception, the 'psychometric model'. The underlying theory of the model is that the public's perception of risk is driven by emotional reactions, often referred to as dread or 'gut feelings', and by ignorance. A further thesis of this model is that risk perception directly drives risk policy; however, the model rarely distinguishes between risk perception and policy. It is also commonly argued that the gap between experts and the public needs to be eliminated and that, to do so, we must establish trust in the judgements made by experts and risk managers (Slovic, 1993; Slovic et al, 1991). Nevertheless, people seem to be less affected by their trust in experts or industry than by the extent to which they believe that science does not have all the answers, that is, by the limits of scientific knowledge (Sjöberg, 2001a).

These views dominate the field, but they tend to be misleading, as we have found in our own research over the past 15 years. Indeed, current models of risk perception account for only a relatively modest share of the variance in how risky or hazardous people judge something to be. Dread, for instance, is not the most important factor in perceived risk; more important is whether a technology is seen as interfering with natural processes (Sjöberg, 1996b, 2000b; Frewer et al, 1996a).

In this article, we present data from several studies on the perceived risk of genetic engineering. In general, we found that this risk is perceived as relatively small compared with a large number of other risks, and that the risk to others is seen as clearly larger than the risk to oneself. We also found that trust has only a moderate role in risk perception, and that New Age beliefs have some role in accounting for risk perception. Another important factor is 'interference with nature', which is clearly relevant in the case of GM organisms. This factor is closely related to moral beliefs, which have been somewhat neglected in risk perception research (Sjöberg & Winroth, 1986).

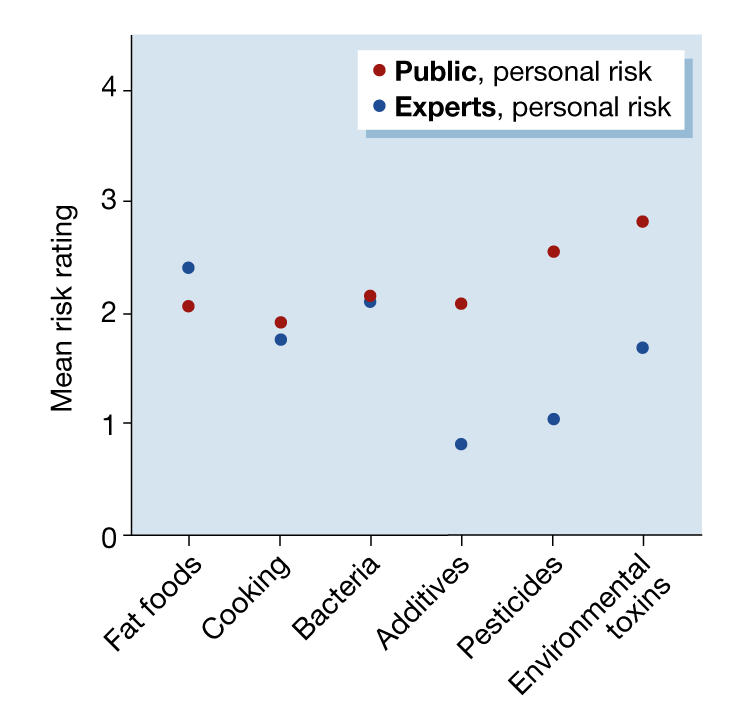

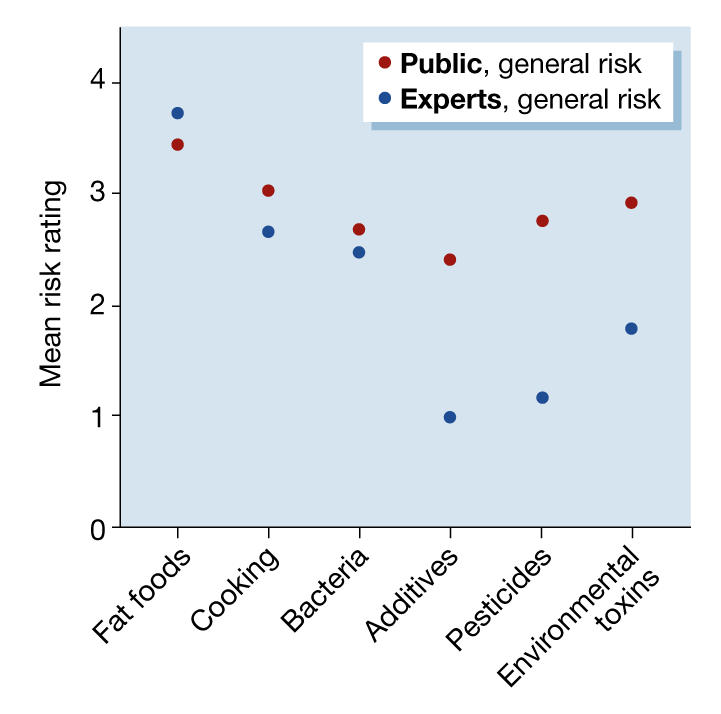

Another important finding is that experts' perceptions of risk are actually not that different from public perceptions. Experts make risk judgements on the basis of factors and thought structures that are similar to those of the public, but their level of perceived risk is drastically lower (Sjöberg, 2002a). There may be interesting exceptions to this thesis, because experts may assume the different roles of 'protectors' and 'promoters' (Fromm, 2004; Sjöberg, 1991), but this issue needs more investigation. It is often stated that experts' risk perception is affected by the probability and extent of harm, but not by 'qualitative' factors such as the voluntariness or newness of a risk (Slovic et al, 1979). This assertion, mainly based on data from the 1970s, has not been supported by later work (Sjöberg, 2002a). The dramatic gap between the risk perceptions of experts and managers on the one hand, and those of the public and many politicians on the other, is clearly a conflict that needs to be resolved. Figures 1 and 2 give risk perception data for experts and the public with regard to food-related risks (Sjöberg, 1996a; Sjöberg et al, 1997). The figures show that experts judged some of the risks to be much smaller than did the public. This was true for both general and personal risks. The differences in the level of perceived risk were dramatic, and even the rank order of risks was reversed. It is interesting to note that the difference was most pronounced for risks associated with food production rather than with the behaviour of consumers, which is not as clearly within the experts' domain of responsibility.

Figure 1.

Mean ratings of perceived food risk, personal risk (for six types of risk)

Figure 2.

Mean ratings of perceived food risk, general risk (for six types of risk)

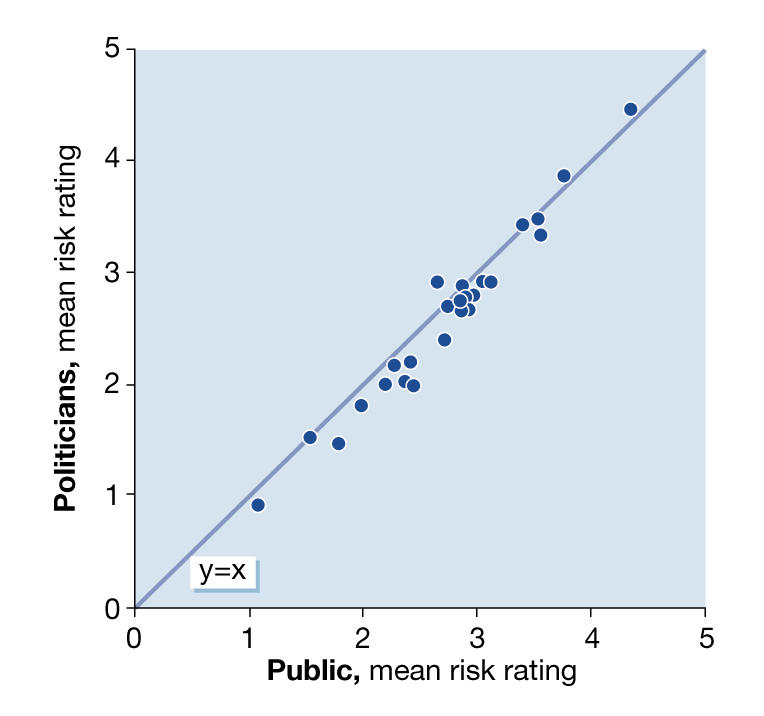

There have been only a few studies on the risk perception of other special groups, such as politicians. In one major study of Swedish municipalities at the local level, we found that politicians saw risks in a similar way to the public (Fig 3; Sjöberg, 1996b). This type of finding adds an important dimension to the expert–public gap in risk perception: politicians tend to side with the public. In a way, this is as it should be in a democracy (Sjöberg, 2001b); however, the unwillingness of people, whether citizens or politicians, to accept scientific risk assessments remains a societal problem.

Figure 3.

Mean risk ratings by politicians plotted against those of the public

Risk is mainly related to the probability of negative events. A risk communicator often wants to provide information about probabilities, but probabilities are hard to understand, and are based on elaborate and debatable models and assumptions. People want to avoid disastrous consequences no matter how small the experts assert their probability to be. Experts who vouch for the probabilities as being negligible are taking a big risk. Unexpected events occur all the time, and today's complex technologies tend to behave, at times, in unexpected ways. Demand for risk mitigation is not strongly related to perceived risk, but to the expected consequences of accidents or other unwanted events (Sjöberg, 2000a). Risk communication strategies are frequently proposed to solve this conflict (Frewer et al, 1998), but communication may have the paradoxical effect of increasing risk awareness, no matter what its contents (Scholderer & Frewer, 2003). For instance, one study found that more knowledgeable people were more ambivalent about genetic testing (Jallinoja & Aro, 2000). Consequently, risk communication remains a difficult task and has achieved only limited progress thus far (Fischhoff, 1995).

Given the public's strong resistance to some applications of genetic engineering, it is not surprising that this technology has become a focus of research on risk perception. Siegrist & Bühlmann (1999) performed a multidimensional scaling of various gene technology applications and found two factors that have a major role in how the risks of these technologies are construed: the type of application, such as food versus medicine, and the organism modified, such as plants versus animals. The first factor seems to be the more important for technology acceptance, which was low for food and high for medicine. Other researchers found low acceptance of genetically engineered food, with perceived benefits counteracting this tendency (Frewer et al, 1996b, 1997). Frewer and colleagues (1996b, 1998) emphasized the variation in responses to different technologies. In addition, there is a geographical factor: sceptical attitudes to some applications of gene technology seem to be more common in Europe (Siegrist, 2003) than in the USA (Madsen et al, 2003). Possibly, media reports about new hazards have had an important role in Europe (Frewer et al, 2002). At the same time, increased negative media coverage, starting at the end of the 1990s, seemed to elicit worry and concern among sectors of the American public too (Shanahan et al, 2001).

Other variables that have been correlated with perceived risk and acceptance of gene technology are world views (Siegrist, 1999), gender and environmental attitudes (Siegrist, 1998). Trust has also been mentioned as an important variable (Siegrist, 2000). Siegrist's work on trust is interesting, but his conclusions can be disputed. Some of his work shows fairly modest relationships between trust and risk perception, such as 15–20% of the variance in risk perception explained by trust, which is in line with most other work on this topic (Sjöberg, 1999). In a few of his data sets, however, the explained variance is much higher. In those cases, perceived risk and trust were both measured by attitude scales that were formally similar and had the same agree–disagree response format (a so-called Likert scale). We recently replicated this pattern in a dedicated methodological study (Sjöberg, 2002b). When perceived risk and trust were both measured with the usual rating scales, the relationship was modest, with some 15% explained variance. However, when both dimensions were measured by means of attitude scale items (Likert scales) using the same response format, the explained variance increased to 30%. The role of trust in risk perception has therefore been questioned in recent work. Eiser, Miles and Frewer (2002) found evidence against the notion that trust and/or risk are causally related to technology acceptance; they suggested instead that trust, risk and acceptance all reflect similar notions.
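Explained variance is the square of a correlation coefficient, so the percentages above can be translated back into correlations. The Python sketch below does this under one assumption that the text does not confirm: that each percentage is the squared correlation between a single trust score and a single risk score. The names `REPORTED` and `r_from_explained_variance` are illustrative only.

```python
import math

# Explained-variance figures quoted in the text for trust versus perceived risk.
# Assumption: each is a single squared Pearson correlation.
REPORTED = {
    "rating scales": 0.15,
    "Likert scales, same response format": 0.30,
}


def r_from_explained_variance(r2: float) -> float:
    """Magnitude of the single correlation whose square equals r2.

    The sign cannot be recovered from explained variance, so only |r| is returned.
    """
    if not 0.0 <= r2 <= 1.0:
        raise ValueError("explained variance must lie between 0 and 1")
    return math.sqrt(r2)


if __name__ == "__main__":
    for label, r2 in REPORTED.items():
        print(f"{label}: {r2:.0%} explained -> |r| = {r_from_explained_variance(r2):.2f}")
```

On that assumption, 15% corresponds to a correlation of roughly 0.39 and 30% to roughly 0.55, and the 15–20% range reported for Siegrist's more modest results to roughly 0.39–0.45.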

Many of the surveys conducted on gene technology, such as the European Union's Eurobarometer, are opinion polls rather than attitude research. For example, Eurobarometer 46.1 reported that people had little knowledge about biotechnology and were negative towards it (Biotechnology and the European Public Concerted Action Group, 1997), and subsequent work (Eurobarometer 52.1) has confirmed these findings (Gaskell et al, 2003). Moral issues and concerns about 'unnatural' technologies emerged as important influences on this attitude (Gaskell et al, 2000). Pardo, Midden and Miller (2002), who carried out a more thorough analysis of attitudes towards gene technology, achieved better results in the sense that they were able to explain more of the variance in perceived risk.

The surveys conducted in the Eurobarometer programme may be difficult for the lay public to understand, as they include scientific terms such as 'stem cell' or 'cloning'. In addition, relatively few questions are asked, so an in-depth understanding of attitudes is hard to achieve. To address these problems, we collected risk perception data from a total of 2,338 respondents in three waves in 1996, for an EU project that has recently ended. We studied both risks to oneself (personal risk) and risks to others (general risk), a distinction that sets this work apart from most other research on risk perception (Sjöberg, 2003).

Data on the perceived risk of genetic engineering are shown in Table 1. The hazards of genetic engineering were judged to be very small (personal risk) or small (general risk), and ranked very low in a list of 34 hazards. Only about 4% (personal risk) to 5% (general risk) of the respondents rated the risk as large or very large, and about 15% did not know how to rate it. So, if only about 5% are seriously concerned about the risks of genetic engineering, why is the population at large still against many applications of this technology? There have been several attempts to explain this, the best known being Fischhoff's psychometric model. The two major dimensions of this theory, novelty and dread, are claimed to account for the social 'ripple effects' that occur when a risk becomes known to the public. Thus, strong negative reactions to nuclear technology were explained (Fischhoff et al, 1978) by referring to data showing that nuclear power was rated as new and dreaded, suggesting that the reactions were due to the public's ignorance and emotional response. However, the psychometric model usually accounts for a mere 20% of the variation in perceived risk among individuals, so there are obviously several other contributing factors. It does not, for instance, include background and demographic data, although women usually rate risks as both more dangerous and more likely than do men. Similarly, older people rate risks as greater than do younger people. There is also a moderate relationship between perceived risk and socio-economic status, with poorer people rating risks as greater than do richer people. Demographic data usually account for about 5% of the variation in risk perception.

Table 1.

Judgements of the risk of genetic engineering (n = 2338)

| | Personal risk | General risk |
|---|---|---|
| Mean on 0–7 scale | 1.69 | 2.59 |
| Rank among 34 hazards | 31.0 | 28.0 |
| Respondents rating risk as 'large' or 'very large' | 3.7% | 5.3% |
| Respondents answering 'don't know' | 15.4% | 15.4% |

In our work, we have therefore looked for other factors that determine people's perception of a given technology's risk, in our case genetic engineering. We found clear evidence for a third dimension of hazard quality, which we termed 'interference with nature'. Technologies that are seen as disturbing and as interfering with nature and natural processes tend to be perceived as risky, and sometimes also as immoral. The 'interference with nature' factor has been confirmed several times and is about as important to risk perception as the 'severity of consequences'. In one study, participants rated the GM food hazard on 25 characteristics. Three main factors emerged: severity of consequences, new risk and interference with nature. Scores were computed for each factor and correlated with the rated risk of genetic engineering (Table 2). These data show the importance of the 'interference with nature' factor in assessing risk, and also the weak relationship between perceived risk and novelty. Several other studies support this finding: novelty does not seem to be an important determinant of perceived risk.

Table 2.

Correlations* between the perceived risk of genetic engineering and risk dimensions (n = 152)

| Risk dimension | Personal risk | General risk |
|---|---|---|
| Severity of consequences | 0.65 | 0.69 |
| Novelty | 0.24 | 0.17 |
| Interfering with nature | 0.50 | 0.56 |

*The correlations in this and later tables are all significant at the 0.05 level or better, unless otherwise stated.
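Squaring a correlation gives the proportion of variance the two measures share, which makes the coefficients in Table 2 easier to compare. The Python sketch below assumes only that the tabled values are ordinary Pearson correlations; `TABLE_2`, `shared_variance` and `summarize` are illustrative names.

```python
# Zero-order correlations from Table 2 (n = 152): (personal risk, general risk).
TABLE_2 = {
    "Severity of consequences": (0.65, 0.69),
    "Novelty": (0.24, 0.17),
    "Interfering with nature": (0.50, 0.56),
}


def shared_variance(r: float) -> float:
    """Proportion of variance two measures share, given their Pearson correlation r."""
    return r * r


def summarize(table):
    """Map each dimension to its (personal, general) shared variance with perceived risk."""
    return {name: tuple(shared_variance(r) for r in pair) for name, pair in table.items()}


if __name__ == "__main__":
    for name, (personal, general) in summarize(TABLE_2).items():
        print(f"{name:<26} personal {personal:.0%}   general {general:.0%}")
```

Printed as percentages, severity of consequences shares about 42% (personal) and 48% (general) of the variance with perceived risk, interference with nature about 25% and 31%, and novelty only about 6% and 3%.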

Trust has also been suggested as an important determinant of perceived risk (Slovic et al, 1991). Trust can be specific, referring explicitly to a given hazard, or it can be general. We constructed four scales measuring trust in corporations, in the general honesty of people, in politicians and in social harmony. These scales were correlated with the personal and general risk of genetic engineering (Table 3). The table shows consistently negative correlations between the four types of trust on the one hand, and the personal and general risk of genetic engineering on the other. It is noteworthy that trust in corporations is particularly important for perceived risk, whereas most previous research has focused on trust in government or experts.

Table 3.

Correlations between the perceived risk of genetic engineering and trust (n = 152)

| Trust in: | Personal risk | General risk |
|---|---|---|
| Corporations | −0.40 | −0.44 |
| General honesty | −0.29 | −0.37 |
| Politicians | −0.21 | −0.27 |
| Social harmony | −0.23 | −0.30 |

These correlations are sizable but may not always replicate. In our major EU study, we were able to detect and define a fifth factor, called 'cynical suspiciousness', an aspect of trust that had not been studied before. Correlations between the five trust factors and the perceived risk of genetic engineering in that study are given in Table 4. These data suggest that the trust dimension explains some 15% of the variance. Other studies of trust and risk perception have given similar results, although an interesting exception has also been noted (Viklund, 2003).

Table 4.

Correlations between the perceived risk of genetic engineering and trust (n = 720)

| Trust in: | Personal risk | General risk |
|---|---|---|
| Corporations | −0.10 | −0.26 |
| General honesty | −0.04 | −0.18 |
| Politicians | −0.05 | −0.15 |
| Social harmony | −0.03 | −0.09 |
| Cynical suspiciousness | 0.10 | 0.21 |
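The drop from Table 3 to Table 4 is easier to see in terms of shared variance (r²). The Python sketch below squares every coefficient in both tables and reports the strongest cell in each; the names are illustrative. It cannot reproduce the figure of some 15% quoted above: a combined (multiple-correlation) figure would also require the correlations among the trust scales themselves, which are not reported here.

```python
# Correlations from Table 3 (n = 152) and Table 4 (n = 720): (personal risk, general risk).
TABLE_3 = {
    "Corporations": (-0.40, -0.44),
    "General honesty": (-0.29, -0.37),
    "Politicians": (-0.21, -0.27),
    "Social harmony": (-0.23, -0.30),
}
TABLE_4 = {
    "Corporations": (-0.10, -0.26),
    "General honesty": (-0.04, -0.18),
    "Politicians": (-0.05, -0.15),
    "Social harmony": (-0.03, -0.09),
    "Cynical suspiciousness": (0.10, 0.21),
}
COLUMNS = ("personal", "general")


def shared_variances(table):
    """Yield (trust scale, risk column, r, r^2) for every cell of a correlation table."""
    for scale, pair in table.items():
        for column, r in zip(COLUMNS, pair):
            yield scale, column, r, r * r


def strongest(table):
    """Return the cell with the largest shared variance."""
    return max(shared_variances(table), key=lambda cell: cell[3])


if __name__ == "__main__":
    for label, table in (("Table 3 (n = 152)", TABLE_3), ("Table 4 (n = 720)", TABLE_4)):
        scale, column, r, r2 = strongest(table)
        print(f"{label}: strongest is {scale}/{column}, r = {r:+.2f}, r^2 = {r2:.1%}")
```

In both tables the strongest single relationship is trust in corporations against general risk, but its shared variance falls from about 19% in Table 3 to under 7% in Table 4.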

We also studied the influence of New Age beliefs on risk perception and found that they, too, were related to the perceived risk of genetic engineering (Table 5; Sjöberg & af Wåhlberg, 2002). The four dimensions we identified and measured were: New Age beliefs about a spiritual dimension of existence; belief in the physical reality of the soul; denial of the relevance of analytical thinking and scientific knowledge; and belief in various paranormal phenomena. Combined, these four dimensions accounted for some 10% of the variance.

Table 5.

Correlations between the perceived risk of genetic engineering and New Age-type beliefs (n = 152)

| Belief dimension | Personal risk | General risk |
|---|---|---|
| New Age beliefs | 0.33 | 0.30 |
| Beliefs in the physical reality of the soul | 0.22 | 0.24 |
| Denial of analytical knowledge | 0.19 | 0.20 |
| Belief in paranormal phenomena | 0.20 | 0.21 |

A final and well-known approach to modelling risk perception is the so-called 'cultural theory' proposed by Douglas & Wildavsky (1982). This theory, as operationalized by Wildavsky & Dake (1990), posits four 'world views' (hierarchy, egalitarianism, individualism and fatalism) that lead people to perceive risks in different ways. Wildavsky & Dake (1990) correlated separate measurements of these views with perceived risk and found that the correlations were fairly large and in accordance with the theory. We constructed scales to measure these views and related them to the perceived risk of genetic engineering (Table 6). Although the largest of these correlations is statistically significant, they are all very small. Cultural theory alone, therefore, cannot explain risk perception. These results, as well as similar results by others, have led to the conclusion that cultural theory is only very weakly related to perceived risk.

Table 6.

Correlations between the perceived risk of genetic engineering and world views according to Cultural theory (n = 797)

| World view | Personal risk | General risk |
|---|---|---|
| Hierarchy | −0.08 | −0.07 |
| Egalitarianism | 0.09 | 0.08 |
| Individualism | −0.05 | −0.09 |
| Fatalism | 0.06 | 0.01 |
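The combination of 'statistically significant' and 'very small' follows from the large sample. The Python sketch below computes the smallest correlation that reaches two-tailed significance at the 0.05 level for n = 797, solving t = r·√(n − 2)/√(1 − r²) for r and approximating the critical t by the normal value 1.96, which is close at 795 degrees of freedom. The tabled coefficients are rounded to two decimals, so coefficients near the threshold should not be over-interpreted; the names are illustrative.

```python
import math

# Correlations from Table 6 (n = 797): (personal risk, general risk).
TABLE_6 = {
    "Hierarchy": (-0.08, -0.07),
    "Egalitarianism": (0.09, 0.08),
    "Individualism": (-0.05, -0.09),
    "Fatalism": (0.06, 0.01),
}
N = 797
Z_CRIT = 1.96  # two-tailed 5% point of the normal distribution, approximating t at df = 795


def critical_r(n: int, t_crit: float = Z_CRIT) -> float:
    """Smallest |r| with two-tailed p < .05, from t = r*sqrt(n-2)/sqrt(1-r^2) solved for r."""
    df = n - 2
    return t_crit / math.sqrt(t_crit * t_crit + df)


if __name__ == "__main__":
    threshold = critical_r(N)
    print(f"|r| needed for p < .05 with n = {N}: {threshold:.3f}")
    for view, pair in TABLE_6.items():
        for column, r in zip(("personal", "general"), pair):
            verdict = "significant" if abs(r) >= threshold else "not significant"
            print(f"{view:<15} {column:<8} r = {r:+.2f}  r^2 = {r * r:.2%}  ({verdict})")
```

The threshold comes out at about 0.07, so a coefficient of 0.09 can be significant while sharing less than 1% of the variance (0.09² ≈ 0.008) with perceived risk.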

Overall, the level of public alertness concerning the risks of genetic engineering is rather low in the Swedish population. However, a minority is quite concerned and a considerable number of respondents are uncertain. Although novelty and dread have traditionally been held to be the most important factors in accounting for perceived risk, they clearly do not provide the whole picture. These studies show that novelty is, in fact, not that important, whereas other factors, such as 'interference with nature', have a major role.

Other important variables also exist. Social trust accounts for about 10% of the variance, as do New Age and anti-science beliefs and 'trendy' superstitions. It is interesting to note that since the 1970s, opposition to technology has evolved in parallel with the New Age movement. Traditional folk superstitions, however, were not correlated with risk perception. Risk research and, accordingly, risk communication have so far been hampered by the seldom-tested and erroneous assumption that public opposition and demands for risk reduction are driven by the perceived risk of a potentially hazardous activity. This is simply not true. Our research has shown that clearly more factors drive risk perception than novelty and dread, which are related to the severity and consequences of possible accidents. Risk research and communication should therefore also consider more general attitudes among the public, such as 'interference with nature' and New Age beliefs. Research on risk perception is clearly important if risk managers and policy makers want to overcome public resistance to genetic engineering and other new technologies.

Acknowledgments

This paper was supported by a grant from the Knut and Alice Wallenberg Foundation.

References

- Biotechnology and the European Public Concerted Action Group (1997) Europe ambivalent on biotechnology. Nature 387: 845–847

- Douglas M, Wildavsky A (1982) Risk and Culture. Berkeley, CA, USA: University of California Press

- Eiser JR, Miles S, Frewer L (2002) Trust, perceived risk, and attitudes toward food technologies. J Appl Soc Psychol 32: 1–12

- Fischhoff B (1995) Risk perception and communication unplugged: twenty years of process. Risk Anal 15: 137–145

- Fischhoff B, Slovic P, Lichtenstein S, Read S, Combs B (1978) How safe is safe enough? A psychometric study of attitudes towards technological risks and benefits. Policy Sci 9: 127–152

- Frewer L (2003) Societal issues and public attitudes towards genetically modified foods. Trends Food Sci Technol 14: 319–332

- Frewer L, Howard C, Shepherd R (1997) Public concerns in the United Kingdom about general and specific applications of genetic engineering: risk, benefit and ethics. Sci Technol Human Values 22: 98–124

- Frewer L, Howard C, Aaron JI (1998) Consumer acceptance of transgenic crops. Pestic Sci 52: 388–393

- Frewer L, Miles S, Marsh R (2002) The media and genetically modified foods: evidence in support of social amplification of risk. Risk Anal 22: 701–711

- Frewer LJ, Howard C, Hedderley D, Shepherd R (1996a) What determines trust in information about food-related risk? Risk Anal 16: 473–486

- Frewer LJ, Howard C, Shepherd R (1996b) The influence of realistic product exposure on attitudes towards genetic engineering of food. Food Quality Pref 7: 61–67

- Fromm J (2004) Overemphasized or underestimated risks: a study of professionals in different fields of expertise. J Risk Res (in press)

- Gaskell G et al. (2000) Biotechnology and the European public. Nat Biotechnol 18: 935–938

- Gaskell G, Allum N, Stares S (2003) Europeans and Biotechnology in 2002. Brussels, Belgium: DG Research

- Jallinoja P, Aro AR (2000) Does knowledge make a difference? The association between knowledge about genes and attitude toward gene tests. J Hlth Commun 5: 29–39

- Madsen KH, Lassen J, Sandoe P (2003) Genetically modified crops: a US farmer's versus an EU citizen's point of view. Acta Agric Scand B – Soil Plant Sci 53: 60–67

- Pardo R, Midden C, Miller JD (2002) Attitudes toward biotechnology in the European Union. J Biotechnol 98: 9–24

- Scholderer J, Frewer L (2003) The biotechnology communication paradox: experimental evidence and the need for a new strategy. J Consumer Policy 26: 125–157

- Shanahan J, Scheufele D, Lee E (2001) Attitudes about agricultural biotechnology and genetically modified organisms. Public Opin Quart 65: 267–281

- Siegrist M (1998) Belief in gene technology: the influence of environmental attitudes and gender. Personality Indiv Diff 24: 861–866

- Siegrist M (1999) A causal model explaining the perception and acceptance of gene technology. J Appl Soc Psychol 29: 2093–2106

- Siegrist M (2000) The influence of trust and perceptions of risks and benefits on the acceptance of gene technology. Risk Anal 20: 195–204

- Siegrist M (2003) Perception of gene technology, and food risks: results of a survey in Switzerland. J Risk Res 6: 45–60

- Siegrist M, Bühlmann R (1999) Die Wahrnehmung verschiedener gentechnischer Anwendungen: Ergebnisse einer MDS-Analyse [The perception of different gene technology applications: results of an MDS analysis]. Zeitschrift für Sozialpsychologie 30: 32–39

- Sjöberg L (1991) Risk Perception by Experts and the Public. Rhizikon: Risk Research Report No. 4. Stockholm, Sweden: Center for Risk Research

- Sjöberg L (1996a) Kost och hälsa—riskuppfattningar och attityder. Resultat av en enkätundersökning [Diet and health: risk perceptions and attitudes. Results of a questionnaire survey]. Uppsala, Sweden: Livsmedelsverket

- Sjöberg L (1996b) Risk Perceptions by Politicians and the Public. Rhizikon: Risk Research Reports No. 23. Stockholm, Sweden: Center for Risk Research

- Sjöberg L (1999) in Social Trust and the Management of Risk (eds Cvetkovich G, Löfstedt RE) pp 89–99. London, UK: Earthscan

- Sjöberg L (2000a) Consequences matter, 'risk' is marginal. J Risk Res 3: 287–295

- Sjöberg L (2000b) Perceived risk and tampering with nature. J Risk Res 3: 353–367

- Sjöberg L (2001a) Limits of knowledge and the limited importance of trust. Risk Anal 21: 189–198

- Sjöberg L (2001b) Political decisions and public risk perception. Reliability Engn Syst Safety 72: 115–124

- Sjöberg L (2002a) The allegedly simple structure of experts' risk perception: an urban legend in risk research. Sci Technol Human Values 27: 443–459

- Sjöberg L (2002b) Attitudes to technology and risk: going beyond what is immediately given. Policy Sci 35: 379–400

- Sjöberg L (2003) The different dynamics of personal and general risk. Risk Mgmt: Int J 5: 19–34

- Sjöberg L, af Wåhlberg A (2002) New Age and risk perception. Risk Anal 22: 751–764

- Sjöberg L, Winroth E (1986) Risk, moral value of actions, and mood. Scandinavian J Psych 27: 191–208

- Sjöberg L, Oskarsson A, Bruce Å, Darnerud PO (1997) Riskuppfattning hos experter inom området kost och hälsa [Risk perception among experts in the field of diet and health]. Uppsala, Sweden: Statens Livsmedelsverk

- Slovic P (1993) Perceived risk, trust, and democracy. Risk Anal 13: 675–682

- Slovic P (ed) (2000) The Perception of Risk. London, UK: Earthscan

- Slovic P, Fischhoff B, Lichtenstein S (1979) Rating the risks. Environment 21: 14–20, 36–39

- Slovic P, Flynn JH, Layman M (1991) Perceived risk, trust, and the politics of nuclear waste. Science 254: 1603–1607

- Viklund M (2003) Trust and risk perception: a West European cross-national study. Risk Anal 23: 727–738

- Wildavsky A, Dake K (1990) Theories of risk perception: who fears what and why? Daedalus 119: 41–60