Abstract

Background: The use of clinical decision support systems to facilitate the practice of evidence-based medicine promises to substantially improve health care quality.

Objective: To describe, on the basis of the proceedings of the Evidence and Decision Support track at the 2000 AMIA Spring Symposium, the research and policy challenges for capturing research and practice-based evidence in machine-interpretable repositories, and to present recommendations for accelerating the development and adoption of clinical decision support systems for evidence-based medicine.

Results: The recommendations fall into five broad areas—capture literature-based and practice-based evidence in machine-interpretable knowledge bases; develop maintainable technical and methodological foundations for computer-based decision support; evaluate the clinical effects and costs of clinical decision support systems and the ways clinical decision support systems affect and are affected by professional and organizational practices; identify and disseminate best practices for work flow–sensitive implementations of clinical decision support systems; and establish public policies that provide incentives for implementing clinical decision support systems to improve health care quality.

Conclusions: Although the promise of clinical decision support system–facilitated evidence-based medicine is strong, substantial work remains to be done to realize the potential benefits.

Clinical decision support systems (CDSSs) have been hailed for their potential to reduce medical errors1 and increase health care quality and efficiency.2 At the same time, evidence-based medicine has been widely promoted as a means of improving clinical outcomes, where evidence-based medicine refers to the practice of medicine based on the best available scientific evidence. The use of CDSSs to facilitate evidence-based medicine therefore promises to substantially improve health care quality.

The Evidence and Decision Support track of the 2000 AMIA Spring Symposium examined the challenges in realizing the promise of CDSS-facilitated evidence-based medicine. This paper describes the activities of this track and summarizes its discussions as specific research and policy recommendations for accelerating the development and adoption of CDSSs for evidence-based medicine.

Definitions

We introduce a new term, “evidence-adaptive CDSSs,” to distinguish a type of CDSS that has technical and methodological requirements that are not shared by CDSSs in general. To clarify this distinction between evidence-adaptive and other CDSSs, we define the following terms as they are used in this paper:

Evidence-based medicine. Evidence-based medicine is the management of individual patients through individual clinical expertise integrated with the conscientious and judicious use of current best evidence from clinical care research.3 This approach makes allowances for missing, incomplete, or low-quality evidence and requires the application of clinical judgment.

The scientific literature is the major source of evidence for evidence-based medicine, although literature-based evidence should often be complemented by local, practice-based evidence for individual and site-specific clinical decision making. Evidence-based medicine is conducted by the health care provider and may or may not be computer-assisted.

Clinical decision support system (CDSS). In this paper, we define clinical decision support systems as software that is designed to be a direct aid to clinical decision making, in which the characteristics of an individual patient are matched to a computerized clinical knowledge base and patient-specific assessments or recommendations are then presented to the clinician or the patient for a decision.
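To make the definition concrete, here is a minimal Python sketch of the matching step: a patient's characteristics are tested against each rule in a small knowledge base, and the matching rules yield patient-specific recommendations for a person to act on. The `Rule` structure, the record fields, and both rules (including the HbA1c cutoff) are hypothetical placeholders chosen for illustration, not clinical guidance or the design of any particular system.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical patient record: a flat mapping from finding name to value.
Patient = Dict[str, object]


@dataclass
class Rule:
    """One knowledge-base entry: a condition on patient data plus advice text."""
    name: str
    condition: Callable[[Patient], bool]
    recommendation: str


def assess(patient: Patient, knowledge_base: List[Rule]) -> List[str]:
    """Match one patient against every rule and return patient-specific advice.

    The output is advisory only; the clinician or patient makes the decision.
    """
    return [rule.recommendation for rule in knowledge_base if rule.condition(patient)]


# Illustrative rules only; the cutoff is a placeholder, not clinical guidance.
KB = [
    Rule("interaction-alert",
         lambda p: {"warfarin", "aspirin"} <= set(p.get("medications", [])),
         "Warfarin with aspirin: review for bleeding risk."),
    Rule("a1c-review",
         lambda p: p.get("hba1c", 0.0) > 9.0,
         "HbA1c above placeholder cutoff: flag for clinician review."),
]

patient = {"medications": ["warfarin", "aspirin"], "hba1c": 7.2}
advice = assess(patient, KB)
for line in advice:
    print(line)
```

Keeping conditions and advice together in one record is a design choice for brevity; it makes each rule easy to read but says nothing about how such rules would be authored, shared, or updated.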

Evidence-adaptive CDSS. This paper focuses on a subclass of CDSSs that are evidence-adaptive, in which the clinical knowledge base of the CDSS is derived from and continually reflects the most up-to-date evidence from the research literature and practice-based sources. For example, a CDSS for cancer treatment is evidence-adaptive if its knowledge base is based on current evidence and if its recommendations are routinely updated to incorporate new research findings. Conversely, a CDSS that alerts clinicians to a known drug–drug interaction is evidence-based but not evidence-adaptive if its clinical knowledge base is derived from scientific evidence but no mechanisms are in place to incorporate new research findings.
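The distinction can be sketched in code under simple assumptions: each knowledge-base entry records the date of the newest evidence it reflects, and only an evidence-adaptive knowledge base accepts newer findings after it is built. The class names, the `evidence_as_of` field, and the replace-if-newer policy are hypothetical; a real system would also need review and approval steps before changing a recommendation.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict


@dataclass
class KnowledgeEntry:
    topic: str
    recommendation: str
    evidence_as_of: date  # date of the newest evidence this entry reflects


@dataclass
class KnowledgeBase:
    entries: Dict[str, KnowledgeEntry] = field(default_factory=dict)
    adaptive: bool = False  # False: evidence-based, but frozen at build time

    def add(self, entry: KnowledgeEntry) -> None:
        self.entries[entry.topic] = entry

    def receive_new_evidence(self, entry: KnowledgeEntry) -> bool:
        """Apply a newer finding only if this knowledge base is evidence-adaptive."""
        if not self.adaptive:
            return False
        current = self.entries.get(entry.topic)
        if current is not None and entry.evidence_as_of <= current.evidence_as_of:
            return False  # not newer than what the entry already reflects
        self.entries[entry.topic] = entry
        return True


old = KnowledgeEntry("topic-x", "Recommendation A", date(1998, 1, 1))
new = KnowledgeEntry("topic-x", "Recommendation B", date(2000, 6, 1))
static_kb, adaptive_kb = KnowledgeBase(), KnowledgeBase(adaptive=True)
for kb in (static_kb, adaptive_kb):
    kb.add(old)
    kb.receive_new_evidence(new)

print(static_kb.entries["topic-x"].recommendation)    # Recommendation A
print(adaptive_kb.entries["topic-x"].recommendation)  # Recommendation B
```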

Process

The speakers for the Evidence and Decision Support track are listed at the end of this paper. The track consisted of three panels and two break-out discussion sessions.

The first panel addressed the role of information technology in the dissemination and critical appraisal of research evidence, the technical challenges and opportunities of evidence-adaptive computerized decision support, and the organizational and workflow issues that arise when effecting practice change through information technology (Haynes, Tang, and Kaplan, respectively).

The second panel presented two case studies of evidence-based quality improvement projects (Packer, Stone) and summarized the status of the GuideLine Interchange Format (GLIF), a developing foundational technology for distributed evidence-adaptive CDSSs (Greenes). Finally, a commentator panel expanded on some of the pitfalls to changing practice through technology (Gorman) and on the information-technology funding agenda of the Agency for Healthcare Research and Quality (Burstin).

Interspersed with these panel presentations were two moderated break-out sessions, in which participants worked to identify the research and policy needs and priorities for effective computer-supported practice change.

All conference sessions were audiotaped. Using these audiotapes, we distilled five central areas of activity that are essential to the goal of increased adoption of CDSSs for evidence-based medicine.

Capture of both literature-based and practice-based research evidence into machine-interpretable formats suitable for CDSS use

Establishment of a technical and methodological foundation for applying research evidence to individual patients at the point of care

Evaluation of the clinical effects and costs of CDSSs, as well as how CDSSs affect and are affected by professional and organizational practices

Promotion of the effective implementation and use of CDSSs that have been shown to improve clinical performance or outcomes

Establishment of public policies that provide incentives for implementing CDSSs to improve health care quality

The Role of Evidence in Evidence-adaptive CDSSs

Clinical decision support systems can be only as effective as the strength of the underlying evidence base. That is, the effectiveness of CDSSs will be limited by any deficiencies in the quality or relevance of the research evidence. Therefore, one key step in developing more effective CDSSs is to generate not simply more clinical research evidence, but more high-quality, useful, and actionable evidence that is up-to-date, easily accessible, and machine interpretable.

Literature-based Evidence

Only about half the therapeutic interventions used in inpatient4,5 and outpatient6 care in internal and family medicine are supported in the research literature with evidence of efficacy. The other half of the interventions either have not been studied or have only equivocal supportive evidence. Several problems exist with using the research literature for evidence-based medicine. First, the efficacy studies of clinical practice that form the basis for evidence-based medicine constitute only a small fraction of the total research literature.7 Furthermore, this clinical research literature has been beset for decades with study design and reporting problems8,9—problems that still exist in the recent randomized trial,10 systematic review,11,12 and guidelines13 literature. As the volume of research publication explodes while quality problems persist, it is not surprising that most clinicians consider the research literature to be unmanageable14 and of limited applicability to their own clinical practices.15,16

The full promise of CDSSs for facilitating evidence-based medicine will occur only when CDSSs can “keep up” with the literature—that is, when evidence-adaptive CDSSs can monitor the literature for new relevant studies, identify those that are of high quality, and then incorporate the best evidence into patient-specific assessments or practice recommendations. Automation of these tasks remains an open area of research. In the meantime, the best electronic resources for evidence-based medicine include the Cochrane Library, Best Evidence, and Clinical Evidence, resources that cull the best of the literature to provide an up-to-date solid foundation for evidence-based practice. The drawback to these resources is that their contents are textual and thus not machine-interpretable by present-day CDSSs.
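The first two of those tasks (monitoring for relevant studies and identifying the high-quality ones) can be illustrated with a toy screening step that produces a review queue for incorporation. The sketch assumes each new study arrives already tagged with topics and an appraisal score on a hypothetical 0 to 5 scale; producing those tags and scores automatically is exactly the open research problem described above, so they are simply taken as given here.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Study:
    title: str
    topics: FrozenSet[str]
    appraisal_score: int  # hypothetical 0-5 methodological quality score


def screen_new_studies(new_studies: Iterable[Study], kb_topics: FrozenSet[str],
                       min_score: int = 3) -> List[Study]:
    """Keep studies relevant to the knowledge base and of adequate quality,
    ordered best first for review and incorporation."""
    relevant = [s for s in new_studies if s.topics & kb_topics]
    adequate = [s for s in relevant if s.appraisal_score >= min_score]
    return sorted(adequate, key=lambda s: s.appraisal_score, reverse=True)


kb_topics = frozenset({"carotid stenosis", "atrial fibrillation"})
incoming = [
    Study("Trial A", frozenset({"carotid stenosis"}), 4),
    Study("Case series B", frozenset({"carotid stenosis"}), 1),
    Study("Trial C", frozenset({"asthma"}), 5),
    Study("Trial D", frozenset({"atrial fibrillation"}), 5),
]
queue = screen_new_studies(incoming, kb_topics)
print([s.title for s in queue])  # ['Trial D', 'Trial A']
```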

In contrast, if the research literature were available as shared, machine-interpretable knowledge bases, then CDSSs would have direct access to the newest research for automated updating of their knowledge bases. The Trial Bank project is a collaboration with the Annals of Internal Medicine and JAMA to capture the design and results of randomized trials directly into structured knowledge bases17 and is a first step toward the transformation of text-based literature into a shared, machine-interpretable resource for evidence-adaptive CDSSs.
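To show what machine-interpretable capture makes possible, the sketch below stores one two-arm trial's results as structured data and derives standard effect measures (absolute risk reduction, relative risk reduction, and number needed to treat) directly from that record. The record layout is a hypothetical simplification, not the Trial Bank schema, and the event counts are made up. Exact fractions are used so that rounding the NNT up is not disturbed by floating-point error.

```python
import math
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class ArmResult:
    label: str
    events: int
    randomized: int

    @property
    def risk(self) -> Fraction:
        return Fraction(self.events, self.randomized)


@dataclass(frozen=True)
class TrialRecord:
    """Hypothetical structured trial record (not the actual Trial Bank schema)."""
    trial_id: str
    outcome: str
    treatment: ArmResult
    control: ArmResult

    def absolute_risk_reduction(self) -> Fraction:
        return self.control.risk - self.treatment.risk

    def relative_risk_reduction(self) -> Fraction:
        return self.absolute_risk_reduction() / self.control.risk

    def number_needed_to_treat(self) -> int:
        arr = self.absolute_risk_reduction()
        if arr <= 0:
            raise ValueError("no risk reduction; NNT is undefined")
        return math.ceil(1 / arr)  # conventionally rounded up


trial = TrialRecord("example-001", "hypothetical outcome",
                    treatment=ArmResult("treatment", 18, 200),
                    control=ArmResult("control", 30, 200))
print(trial.absolute_risk_reduction())  # 3/50
print(trial.relative_risk_reduction())  # 2/5
print(trial.number_needed_to_treat())   # 17
```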

Practice-based Evidence

Although the research literature serves as the foundation for evidence-based practice, local, practice-based evidence is often required for optimizing health outcomes. For example, randomized trials have shown that patients with symptomatic carotid artery stenosis have fewer strokes if they receive a surgery called carotid endarterectomy (CEA).18 If complication rates from the surgery are greater than about 6 percent, however, the benefits are nullified.19 Despite this, only 19 percent of physicians know the CEA complication rates of the hospitals in which they operate or to which they refer patients.20 For clinical problems with locally variable parameters, therefore, developers of CDSSs should place a high priority on obtaining local practice-based evidence to complement the literature-based evidence.
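A CDSS could apply the carotid endarterectomy threshold to local data roughly as follows. This sketch treats the approximate 6 percent figure from the text as a fixed cutoff and, as our own addition, uses a Wilson score interval so that a hospital with few procedures is reported as "uncertain" instead of being classified on a noisy point estimate. The function names and the three-way classification are hypothetical.

```python
import math

CEA_RISK_THRESHOLD = 0.06  # "about 6 percent", per the text; approximate


def wilson_interval(events: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (about 95% when z = 1.96)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = events / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


def classify_local_rate(complications: int, procedures: int,
                        threshold: float = CEA_RISK_THRESHOLD) -> str:
    """Compare a local complication rate with the benefit threshold."""
    low, high = wilson_interval(complications, procedures)
    if high < threshold:
        return "below threshold"
    if low > threshold:
        return "above threshold"
    return "uncertain"  # interval straddles the threshold; more local data needed


print(classify_local_rate(3, 200))   # below threshold
print(classify_local_rate(20, 150))  # above threshold
print(classify_local_rate(2, 30))    # uncertain
```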

Practice-based evidence may also be useful for the development of practice guidelines. Although the evidentiary support for individual decision steps in a guideline comes primarily from literature-based evidence, as discussed above, a guideline's process flow is usually constructed on the basis of expert opinion only. With more practice-based information on clinical processes and events, however, guideline developers may be able to improve the way they design process flows.

As useful as practice-based evidence may be, it is often not easy to come by. The informatics community can foster this much-needed research by developing information technologies for practice-based research networks to automatically capture clinical processes and events in diverse outpatient settings. Many research and policy issues concerning these research networks—from the standardization of data items to data ownership and patient privacy—are active areas of inquiry.21–24

Patient-directed Evidence

The Internet and other sources of research evidence have provided patients with many more options for obtaining health information but have also increased the potential for patients to misinterpret or become misinformed about research results.24,25 As a result, patients are now less dependent on clinicians for information, but still trust clinicians the most for help with selecting, appraising, and applying a profusion of information to health decisions.26 Clinical decision support systems can support this growing involvement of patients in clinical decision making through interactive tools that allow patients to explore relevant information that can foster shared decision making.27,28 Systems that provide both patients and clinicians with valid, applicable, and useful information may result in care decisions that are more concordant with current recommendations, are better tailored to individual patients, and ultimately are associated with improved clinical outcomes. The actual effects of these CDSSs on care decisions and outcomes should be evaluated.

Recommendations

The gap between the current state of CDSSs and the full promise of CDSSs for evidence-based medicine suggests a research and development agenda. On the basis of the expert panels and discussion sessions at the symposium, we recommend the following steps for researchers, developers, and implementers to take in the five areas of activity essential to increasing adoption of evidence-adaptive CDSSs.

Capture of Literature-based and Practice-based Evidence

If clinical research is to improve clinical care, it must be relevant, of high quality, and accessible. The research should provide evidence of efficacy, effectiveness, and cost-effectiveness for typical inpatient and outpatient practice settings.29 If CDSSs are to help translate this research into practice, CDSSs must have direct machine-interpretable access to the research literature, so that automated methods can be brought to bear on the myriad tasks involved in “keeping up with the literature.” Thus, the establishment of shared, machine-interpretable knowledge bases of research and practice-based evidence is a critical priority. On the basis of discussions at the conference, we identify six specific recommendations for action:

Recommendations for Clinical and Informatics Researchers

Conduct better quality clinical research on the efficacy, effectiveness, and efficiency of clinical interventions, particularly in primary care settings.

Continue to develop better methods for synthesizing results from a wide variety of study designs, from randomized trials to observational studies.

Develop shareable, machine-interpretable repositories of up-to-date evidence of multiple types (e.g., from clinical trials, systematic reviews, decision models).

Develop shareable, machine-readable repositories of executable guidelines that are linked to up-to-date evidence repositories.

Define and build standard interfaces among these repositories, to allow evidence to be linked automatically among systems for systematic reviewing, decision modeling, and guideline creation and maintenance.

Develop an informatics infrastructure for practice-based research networks to collect practice-based evidence.
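As one long-established building block for the synthesis recommendation above, the sketch below implements inverse-variance fixed-effect pooling of study estimates (for example, log risk ratios). It is shown only as a baseline: it assumes a single common effect and ignores between-study heterogeneity and design differences, which is precisely why better methods for combining diverse study designs are still needed. The inputs in the usage lines are made-up numbers.

```python
import math
from typing import List, Tuple


def fixed_effect_pool(estimates: List[float],
                      std_errors: List[float]) -> Tuple[float, float]:
    """Inverse-variance fixed-effect pooling.

    Returns (pooled estimate, standard error of the pooled estimate).
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need matching, non-empty lists")
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    return pooled, math.sqrt(1.0 / total)


# Two hypothetical studies reporting log risk ratios with equal precision.
pooled, se = fixed_effect_pool([0.0, 1.0], [1.0, 1.0])
print(pooled, se)  # 0.5 and about 0.707
```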

Establishment of a Technical and Methodological Foundation

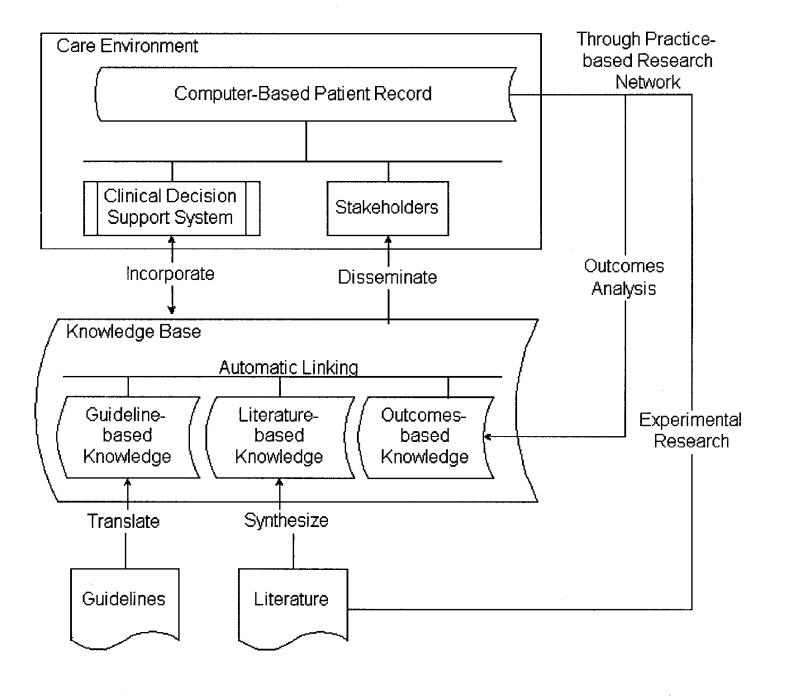

Figure 1 depicts the informatics architecture that we suggest is needed for CDSSs to facilitate evidence-based practice. In this architecture, CDSSs are situated in a distributed environment that comprises multiple knowledge repositories as well as the electronic medical record. Vocabulary and interface standards will be crucial for interoperation among these systems. To provide patient-specific decision support at the point of care, CDSSs need to interface with the electronic medical record to retrieve patient-specific data and, increasingly, also to effect recommended actions through computerized order entry. Evidence-adaptive CDSSs also need to interface with up-to-date repositories of clinical research knowledge. No longer should CDSSs be thought of as stand-alone expert systems.

Figure 1.

Architecture for the capture and use of literature-based and practice-based evidence, showing the distributed nature of the knowledge and functionality involved in the use of CDSSs to support evidence-based medicine. Vocabulary and interface standards are needed for interoperation of the various systems.
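The distributed architecture of Figure 1 can be sketched as a set of interfaces. In this minimal Python sketch, the CDSS holds no knowledge of its own: it pulls patient data from the medical record, asks an external evidence repository for recommendations, and sends a clinician-accepted recommendation back to order entry. The `MedicalRecord` and `EvidenceRepository` protocols, their method names, and the in-memory stand-ins are hypothetical; in practice these boundaries would be defined by vocabulary and interface standards rather than by Python classes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


@dataclass
class Recommendation:
    text: str
    order: str  # coded action that could be sent to computerized order entry


class MedicalRecord(Protocol):
    """Hypothetical interface to the electronic medical record."""
    def patient_data(self, patient_id: str) -> Dict[str, float]: ...
    def place_order(self, patient_id: str, order: str) -> None: ...


class EvidenceRepository(Protocol):
    """Hypothetical interface to an external, up-to-date knowledge repository."""
    def recommend(self, data: Dict[str, float]) -> List[Recommendation]: ...


class CDSS:
    """Brokers between the record and the repository; stores no knowledge itself."""

    def __init__(self, record: MedicalRecord, repository: EvidenceRepository):
        self.record = record
        self.repository = repository

    def advise(self, patient_id: str) -> List[Recommendation]:
        return self.repository.recommend(self.record.patient_data(patient_id))

    def accept(self, patient_id: str, rec: Recommendation) -> None:
        # Only after the clinician accepts does the action flow to order entry.
        self.record.place_order(patient_id, rec.order)


# In-memory stand-ins, useful only for exercising the wiring.
@dataclass
class FakeRecord:
    data: Dict[str, Dict[str, float]]
    orders: List[str] = field(default_factory=list)

    def patient_data(self, patient_id: str) -> Dict[str, float]:
        return self.data[patient_id]

    def place_order(self, patient_id: str, order: str) -> None:
        self.orders.append(f"{patient_id}:{order}")


class FakeRepository:
    def recommend(self, data: Dict[str, float]) -> List[Recommendation]:
        if data.get("ldl", 0.0) > 160:  # placeholder cutoff, not guidance
            return [Recommendation("LDL above placeholder cutoff", "lipid-panel-recheck")]
        return []


cdss = CDSS(FakeRecord({"p1": {"ldl": 190.0}, "p2": {"ldl": 100.0}}), FakeRepository())
recs = cdss.advise("p1")
cdss.accept("p1", recs[0])
```

Because the repository sits behind an interface, an evidence-adaptive deployment could swap in an updated repository without changing the CDSS or the record integration.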

In addition to establishing standardized communication among CDSSs, electronic medical records, and knowledge repositories, we also need better models of individualized patient decision making in real-world settings. Formal models of decision making such as decision analysis are not commonly used; much methodological work remains to be done on mapping real-world decision-making challenges to tractable computational approaches.
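For readers unfamiliar with decision analysis, its core computation is small: each option leads to chance outcomes with probabilities and utilities, and the preferred option maximizes expected utility. The sketch below shows only that step, with made-up probabilities and utilities; the hard methodological work referred to above lies in building a model that fits the real clinical situation and in eliciting the patient's own utilities.

```python
from typing import Dict, List, Tuple

# Each option maps to its chance outcomes as (probability, utility) pairs.
Tree = Dict[str, List[Tuple[float, float]]]


def expected_utility(outcomes: List[Tuple[float, float]]) -> float:
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(p * u for p, u in outcomes)


def best_option(tree: Tree) -> Tuple[str, float]:
    """Return the option with the highest expected utility and that utility."""
    scored = {option: expected_utility(o) for option, o in tree.items()}
    choice = max(scored, key=scored.get)
    return choice, scored[choice]


# Made-up numbers: "treat" carries a small chance of a side effect (utility 0.8).
tree = {
    "treat":   [(0.85, 1.0), (0.05, 0.8), (0.10, 0.0)],
    "observe": [(0.80, 1.0), (0.20, 0.0)],
}
choice, value = best_option(tree)
print(choice, round(value, 2))  # treat 0.89
```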

We identify several additional priorities for evidence-adaptive CDSSs in particular. These priorities include methods for adjusting for the quality of the evidence base and efficient, sustainable methods for ensuring that CDSS recommendations reflect up-to-date evidence.

Recommendations for Researchers and Developers

Continue development of a comprehensive, expressive clinical vocabulary that can scale from administrative to clinical decision support needs.

Continue to develop shareable computer-based representations of clinical logic and practice guidelines.

Develop tools for knowledge editors to incorporate new literature-based evidence into CDSS knowledge bases; specify the clinical context in which that knowledge is applicable (e.g., that a rule is for the treatment of stable outpatient diabetic patients only); and customize the literature-based evidence for local conditions (e.g., factoring in local surgical complication rates).

Explore and develop automatic methods for updating CDSS knowledge bases to reflect the current state and quality of the literature-based evidence.

Develop more flexible models of decision making that can accommodate clinical evidence of varying methodological strength and relevance, so that evidence from randomized trials (Level I evidence by U.S. Preventive Services Task Force criteria30) is accorded more weight than evidence from case reports or expert opinion (Level III evidence).

Develop models of decision making that can simultaneously accommodate the beliefs, perspectives, and values of multiple decision makers, including those of physicians and patients.

Develop methods for constructing and selecting among decision models of scalable granularity and specificity that are neither too general nor too specific for the case at hand.
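One simple way to let stronger evidence count for more, as the recommendation on evidence of varying methodological strength asks, is a weighted vote over findings graded by U.S. Preventive Services Task Force level. The level labels follow the Task Force scheme (I, II-1, II-2, II-3, III), but the numeric weights and the net-support score are our own illustrative assumptions, not a validated method.

```python
from typing import List, Tuple

# Hypothetical weights per USPSTF evidence level; not an official mapping.
LEVEL_WEIGHTS = {"I": 1.0, "II-1": 0.7, "II-2": 0.5, "II-3": 0.3, "III": 0.1}


def net_support(findings: List[Tuple[str, bool]]) -> float:
    """Weighted net support for a recommendation, from -1 (all against) to +1 (all for).

    Each finding is (evidence level, supports_recommendation).
    """
    total = sum(LEVEL_WEIGHTS[level] for level, _ in findings)
    if total == 0:
        raise ValueError("no findings")
    signed = sum(LEVEL_WEIGHTS[level] * (1 if supports else -1)
                 for level, supports in findings)
    return signed / total


# One randomized trial in favor outweighs two case reports against.
score = net_support([("I", True), ("III", False), ("III", False)])
print(round(score, 3))  # 0.667
```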

Recommendations for Current CDSS Developers

Adopt and use standard vocabularies and standards for knowledge representation (e.g., GLIF) as they become available.

We consider it axiomatic that CDSSs must be based on the best available evidence. Incorporate into the CDSS knowledge base the current best literature-based and practice-based evidence, and either provide mechanisms for keeping the knowledge base up-to-date or explain why keeping up with the evidence is not applicable.

Explicitly describe the care delivery setting and clinical scenarios for which the CDSS is applicable (e.g., that a CDSS for diabetes treatment is intended for the management of stable outpatient diabetics only).

Integrate CDSSs with electronic medical records and other relevant systems using appropriate interoperability standards (e.g., HL-7).

Develop more CDSSs for outpatient settings. Approximately 60 percent of U.S. physicians practice in outpatient settings, where an aging population is requiring increasingly complex diagnostic, treatment, and supportive services.
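The applicability recommendation above can be made machine-checkable rather than left only in documentation. In this sketch a CDSS declares its intended scope, using the stable-outpatient-diabetes example from the text, and reports why a given encounter falls outside it before offering any advice. The `Applicability` fields and the encounter dictionary keys are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Applicability:
    """Declared scope of a CDSS (fields are hypothetical examples)."""
    settings: frozenset
    conditions: frozenset
    requires_stable: bool

    def check(self, encounter: Dict[str, object]) -> Tuple[bool, List[str]]:
        """Return (in_scope, reasons the encounter is out of scope)."""
        reasons = []
        if encounter.get("setting") not in self.settings:
            reasons.append(f"setting {encounter.get('setting')!r} not supported")
        if not self.conditions & set(encounter.get("conditions", [])):
            reasons.append("no supported condition present")
        if self.requires_stable and not encounter.get("stable", False):
            reasons.append("patient not documented as stable")
        return (not reasons, reasons)


DIABETES_SCOPE = Applicability(frozenset({"outpatient"}), frozenset({"diabetes"}), True)

ok, why = DIABETES_SCOPE.check({"setting": "inpatient", "conditions": ["diabetes"],
                                "stable": False})
print(ok, why)
```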

Recommendation for Policy Makers, Organizations, and Manufacturers

Fund development and demonstration of inter-organizational sharing of evidence-based knowledge and its application in diverse CDSSs.

Evaluation of Clinical Decision Support Systems

Despite the promise of CDSSs for improving care, formal evaluations have shown that CDSSs have only a modest ability to improve intermediate measures such as guideline adherence and drug dosing accuracy.31–34 The effect of CDSSs on clinical outcomes remains uncertain.32 Thus, more evaluations of CDSSs are needed to produce valid and generalizable findings on the clinical and organizational aspects of CDSS use. A wide variety of evaluation methods are available,35–37 and both quantitative and qualitative methods should be used to provide complementary insight into the use and effects of CDSSs. All types of evaluation studies, not just randomized trials, deserve increased attention and funding.38,39

In light of the current focus on errors in medicine, a special class of evaluation study deserves particular mention. These studies are ongoing, iterative reevaluations and redesigns of CDSSs that identify and amplify system benefits while identifying and mitigating unanticipated system errors or dangers. The rationale for these types of studies is that automation in other industries has not always been beneficial, and indeed, automation can interfere with and degrade overall organizational performance.40 Woods and Patterson41 offer a cautionary note from the transportation industry:

Despite the fact that these systems are often justified on the grounds that they would help offload work from harried practitioners, we find that they in fact create new additional tasks, force the user to adopt new cognitive strategies, require more knowledge and more communication, at the very times when the practitioners are most in need of true assistance.

Clinicians and health care managers must be continuously vigilant against unforeseen adverse effects of CDSS use.

Recommendations for Evaluators

Evaluate CDSSs using an iterative approach that identifies both benefits and unanticipated problems related to CDSS implementation and use: all CDSSs can benefit from multiple stages and types of testing, at all points of the CDSS life cycle.

Conduct more CDSS evaluations in actual practice settings, including ambulatory settings.

Use both quantitative and qualitative evaluation methodologies to assess multiple dimensions of CDSS use and design (e.g., the correctness, reliability, and validity of the CDSS knowledge base; the congruence of system-driven processes with clinical roles and work routines in actual practice; and the return-on-investment of system implementation). Qualitative studies should incorporate the expertise of ethnographers, sociologists, organizational behaviorists, or other field researchers from within and outside the medical informatics community, as applicable.

If preliminary testing suggests that a CDSS could improve health outcomes, the CDSS should be evaluated to establish the presence or absence of clinical benefits. Any randomized clinical trials that are conducted should have sufficient sample sizes to detect clinically meaningful differences in outcomes, should randomize physicians or clinical units rather than patients, and should be analyzed using methods appropriate for cluster randomization studies.

Establish partnerships between academic groups and community practices to conduct evaluations.
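The cluster-randomization recommendation has a direct consequence for sample size. Under the standard design-effect approximation, randomizing clusters of m patients each, with intracluster correlation coefficient ICC, inflates the number of patients required by a factor of 1 + (m - 1) * ICC. The sketch assumes equal cluster sizes and that the sample size for an individually randomized trial has already been computed; the example numbers (400 patients, 20 patients per physician, ICC of 0.05) are illustrative.

```python
import math


def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation for cluster randomization: 1 + (m - 1) * ICC."""
    return 1.0 + (cluster_size - 1.0) * icc


def _ceil(x: float) -> int:
    # Round up while ignoring floating-point noise such as 780.0000000000001.
    return math.ceil(x - 1e-9)


def cluster_trial_size(n_individual: int, cluster_size: int, icc: float) -> tuple:
    """Patients and clusters (e.g., physicians) needed when randomizing clusters."""
    n_total = _ceil(n_individual * design_effect(cluster_size, icc))
    n_clusters = _ceil(n_total / cluster_size)
    return n_total, n_clusters


print(cluster_trial_size(400, 20, 0.05))  # (780, 39)
```

Even a modest ICC nearly doubles the required enrollment in this example, which is one reason underpowered CDSS trials are a concern.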

Promotion of the Implementation of CDSSs

Relatively few examples of CDSSs can be found in practice. In part, this limited adoption may be because CDSSs are as much an organizational as a technical intervention, and organizational, professional, and other challenges to implementing CDSSs may be as daunting as the technical challenges.

Recommendations for CDSS Implementers

Establish a CDSS implementation team composed of clinicians, information technologists, managers, and evaluators to work together to customize and implement the CDSS.

Develop a process for securing clinician agreement regarding the science underlying the recommendations of a CDSS. For evidence-adaptive CDSSs, a process is also needed for maintaining clinician awareness of and agreement with any changes in CDSS recommendations that may result from new evidence.

Plan explicitly for work flow re-engineering and other people, organizational, and social issues and incorporate change management techniques into system development and implementation. For example, a CDSS that recommends immediate angioplasty instead of thrombolysis as a new treatment option for acute coronary syndromes will necessitate a major restructuring of the hospital's resource use and work practices.
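For evidence-adaptive CDSSs, the process for keeping clinicians aware of changed recommendations could start from an automatic comparison of two knowledge-base versions. This sketch models each version as a hypothetical mapping from topic to recommendation text and produces a change notice for clinician review; the angioplasty entry mirrors the scenario above and is illustrative only.

```python
from typing import Dict, List


def recommendation_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Summarize added, removed, and revised recommendations between two versions."""
    notices = []
    for topic in sorted(old.keys() | new.keys()):
        before, after = old.get(topic), new.get(topic)
        if before == after:
            continue  # unchanged; nothing to tell clinicians
        if before is None:
            notices.append(f"ADDED {topic}: {after}")
        elif after is None:
            notices.append(f"REMOVED {topic}: {before}")
        else:
            notices.append(f"REVISED {topic}: {before} -> {after}")
    return notices


v1 = {"acs-reperfusion": "thrombolysis", "topic-x": "a"}
v2 = {"acs-reperfusion": "immediate angioplasty", "topic-y": "b"}
for notice in recommendation_changes(v1, v2):
    print(notice)
```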

Establishment of Public Policies That Provide Incentives for Implementing CDSSs

Significant financial and organizational resources are often needed to implement CDSSs, especially if the CDSS requires integration with the electronic medical record or other practice systems. In a competitive health care marketplace, financial and reimbursement policies can therefore be important drivers both for and against the adoption of effective CDSSs. As more evaluation studies become available, policy makers will be better able to tailor these policies to promote only those CDSSs that are likely to improve health care quality.

Recommendations for Policy Makers

Develop financial and reimbursement policies that provide incentives for health care providers to implement and use CDSSs of proven worth.

Develop and implement financial and reimbursement policies that reward the attainment of measurable quality goals, as might be achieved by CDSSs.

Promote coordination and leadership across the health care and clinical research sectors to leverage informatics promotion and development efforts by government, industry, AMIA, and others.

Conclusions

The coupling of CDSS technology with evidence-based medicine brings together two potentially powerful methods for improving health care quality. To realize the potential of this synergy, literature-based and practice-based evidence must be captured into computable knowledge bases, technical and methodological foundations for evidence-adaptive CDSSs must be developed and maintained, and public policies must be established to finance the implementation of electronic medical records and CDSSs and to reward health care quality improvement.

Acknowledgments

The authors thank the many discussion participants whose anonymous comments were included in this paper. They also thank Patricia Flatley Brennan for her helpful comments on an earlier draft of this manuscript, and Amy Berlin for her assistance in preparing the manuscript.

Appendix

Panelists and Group Leaders

Keynote Panelists:

R. Brian Haynes, MD, PhD, Chief, Health Information Research Unit, McMaster University

Paul Tang, MD, Medical Director of Clinical Informatics, Palo Alto Medical Foundation

Bonnie Kaplan, PhD, Yale Center for Medical Informatics and President, Kaplan Associates

Case Studies and Guidelines Panelists:

Marvin Packer, MD, Harvard Pilgrim Health Care

Tamara Stone, MBS, PhD, Assistant Professor of Health Management, University of Missouri

Robert Greenes, MD, PhD, Director, Decision Sciences Group, Brigham and Women's Hospital, Boston, Massachusetts

Expert Commentator Panelists:

Paul Gorman, MD, Assistant Professor, Oregon Health and Science University

Helen Burstin, MD, MPH, Director, Center for Primary Care Research, Agency for Healthcare Research and Quality

Discussion Group Leaders:

Gordon D. Brown, PhD, Health Management and Informatics, University of Missouri

Richard Bankowitz, MD, MBA, University Health System Consortium

Harold Lehmann, MD, PhD, Director of Medical Informatics Education, Johns Hopkins University School of Medicine

This work was supported in part by a United States Presidential Early Career Award for Scientists and Engineers awarded to Dr. Sim and administered through grant LM-06780 of the National Library of Medicine.

References

1. Bates DW, Cohen M, Leape LL, et al. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001;8:299–308.

2. Teich JM, Wrinn MM. Clinical decision support systems come of age. MD Comput. 2000;17(1):43–6.

3. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence-based medicine: what it is and what it isn't [editorial]. BMJ. 1996;312(7023):71–2.

4. Nordin-Johansson A, Asplund K. Randomized controlled trials and consensus as a basis for interventions in internal medicine. J Intern Med. 2000;247(1):94–104.

5. Suarez-Varela MM, Llopis-Gonzalez A, Bell J, Tallon-Guerola M, Perez-Benajas A, Carrion-Carrion C. Evidence-based general practice. Eur J Epidemiol. 1999;15(9):815–9.

6. Ellis J, Mulligan I, Rowe J, Sackett DL. Inpatient general medicine is evidence based. A-Team, Nuffield Department of Clinical Medicine. Lancet. 1995;346(8972):407–10.

7. Haynes RB. Where's the meat in clinical journals [editorial]? ACP J Club. 1993;Nov–Dec:A16.

8. Schor S, Karten I. Statistical evaluation of medical journal manuscripts. JAMA. 1966;195(13):1123–8.

9. Fletcher RH, Fletcher SW. Clinical research in general medical journals: a 30-year perspective. N Engl J Med. 1979;301(4):180–3.

10. Moher D, Jadad AR, Nichol G, Penman M, Tugwell P, Walsh S. Assessing the quality of randomized controlled trials: an annotated bibliography of scales and checklists. Control Clin Trials. 1995;16(1):62–73.

11. Sacks HS, Reitman D, Pagano D, Kupelnick B. Meta-analysis: an update. Mt Sinai J Med. 1996;63(3–4):216–24.

12. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of Reporting of Meta-analyses. Lancet. 1999;354(9193):1896–900.

13. Shaneyfelt TM, Mayo-Smith MF, Rothwangl J. Are guidelines following guidelines? The methodological quality of clinical practice guidelines in the peer-reviewed medical literature. JAMA. 1999;281(20):1900–5.

14. Williamson JW, German PS, Weiss R, Skinner EA, Bowes FD. Health science information management and continuing education of physicians: a survey of U.S. primary care practitioners and their opinion leaders. Ann Intern Med. 1989;110(2):151–60.

15. McAlister FA, Graham I, Karr GW, Laupacis A. Evidence-based medicine and the practicing clinician. J Gen Intern Med. 1999;14(4):236–42.

16. Greer AL. The state of the art vs. the state of the science: the diffusion of new medical technologies into practice. Int J Technol Assess Health Care. 1988;4(1):5–26.

17. Sim I, Owens DK, Lavori PW, Rennels GD. Electronic trial banks: a complementary method for reporting randomized trials. Med Decis Making. 2000;20(4):440–50.

18. Cina CS, Clase CM, Haynes BR. Refining the indications for carotid endarterectomy in patients with symptomatic carotid stenosis: a systematic review. J Vasc Surg. 1999;30(4):606–17.

19. Chassin MR. Appropriate use of carotid endarterectomy [editorial]. N Engl J Med. 1998;339(20):1468–71.

20. Goldstein LB, Bonito AJ, Matchar DB, et al. U.S. national survey of physician practices for the secondary and tertiary prevention of ischemic stroke: design, service availability, and common practices. Stroke. 1995;26(9):1607–15.

21. Nutting PA. Practice-based research networks: building the infrastructure of primary care research. J Fam Pract. 1996;42(2):199–203.

22. Nutting PA, Baier M, Werner JJ, Cutter G, Reed FM, Orzano AJ. Practice patterns of family physicians in practice-based research networks: a report from ASPN. Ambulatory Sentinel Practice Network. J Am Board Fam Pract. 1999;12(4):278–84.

23. van Weel C, Smith H, Beasley JW. Family practice research networks: experiences from 3 countries. J Fam Pract. 2000;49(10):938–43.

24. Kaplan B, Brennan PF. Consumer informatics supporting patients as co-producers of quality. J Am Med Inform Assoc. 2001;8:309–16.

25. Jadad AR, Haynes RB, Hunt D, Browman GP. The Internet and evidence-based decision-making: a needed synergy for efficient knowledge management in health care. CMAJ. 2000;162(3):362–5.

26. Harris Interactive. Ethics and the Internet: Consumers vs. Webmasters, Internet Healthcare Coalition, and National Mental Health Association. Oct 5, 2000.

27. Morgan MW, Deber RB, Llewellyn-Thomas HA, Gladstone P, O'Rourke K, et al. Randomized, controlled trial of an interactive videodisc decision aid for patients with ischemic heart disease. J Gen Intern Med. 2000;15(10):685–93.

28. Health Dialog Videos. Available at: http://www.healthdialog.com/video.htm. Accessed Feb 20, 2001.

29. Haynes B. Can it work? Does it work? Is it worth it? The testing of healthcare interventions is evolving [editorial]. BMJ. 1999;319(7211):652–3.

30. United States Preventive Services Task Force. Guide to Clinical Preventive Services. 2nd ed. Appendix A: Task Force Ratings. 1996. Available at: http://text.nlm.nih.gov/ftrs/directBrowse.pl?dbName=cps&href=APPA. Accessed Jun 18, 2001.

31. Kaplan B. Evaluating informatics applications: review of the clinical decision support systems evaluation literature. Int J Med Inf. 2001;64:15–37.

32. Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998;280(15):1339–46.

33. Balas E, Austin S, Mitchell J, Ewigman B, Bopp K, Brown G. The clinical value of computerized information services. Arch Fam Med. 1996;5:271–8.

34. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc. 1996;3:399–409.

35. Friedman CP, Wyatt JC. Evaluation methods in medical informatics. New York: Springer-Verlag, 1997.

36. Anderson JG, Aydin CE. Evaluating the impact of health care information systems. Int J Technol Assess Health Care. 1997;13(2):380–93.

37. Kaplan B. Evaluating informatics applications: social interactionism and call for methodological pluralism. Int J Med Inf. 2001;64:39–56.

38. Tierney WM, Overhage JM, McDonald CJ. A plea for controlled trials in medical informatics [editorial]. J Am Med Inform Assoc. 1994;1(4):353–5.

39. Stead WW, Haynes RB, Fuller S, Friedman CP, Travis LE, Beck JR, et al. Designing medical informatics research and library: resource projects to increase what is learned. J Am Med Inform Assoc. 1994;1(1):28–33.

40. Woods D. Testimony of David Woods, Past President, Human Factors and Ergonomics Society. In: National Summit on Medical Errors and Patient Safety Research. Washington, DC: Quality Interagency Coordination Task Force, Sep 11, 2000.

41. Woods DD, Patterson ES. How unexpected events produce an escalation of cognitive and coordinative demands. In: Hancock PA, Desmond PA (eds). Stress Workload and Fatigue. Hillsdale, NJ: Erlbaum, 2001.