Abstract

Background: Increasing data suggest that error in medicine is frequent and results in substantial harm. The recent Institute of Medicine report (LT Kohn, JM Corrigan, MS Donaldson, eds: To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press, 1999) described the magnitude of the problem, and the public interest in this issue, which was already large, has grown.

Goal: The goal of this white paper is to describe how the frequency and consequences of errors in medical care can be reduced (although in some instances they are potentiated) by the use of information technology in the provision of care, and to make general and specific recommendations regarding error reduction through the use of information technology.

Results: General recommendations are to implement clinical decision support judiciously; to consider consequent actions when designing systems; to test existing systems to ensure they actually catch errors that injure patients; to promote adoption of standards for data and systems; to develop systems that communicate with each other; to use systems in new ways; to measure and prevent adverse consequences; to make existing quality structures meaningful; and to improve regulation and remove disincentives for vendors to provide clinical decision support. Specific recommendations are to implement provider order entry systems, especially computerized prescribing; to implement bar-coding for medications, blood, devices, and patients; and to utilize modern electronic systems to communicate key pieces of asynchronous data such as markedly abnormal laboratory values.

Conclusions: Appropriate increases in the use of information technology in health care (especially the introduction of clinical decision support and better linkages in and among systems, resulting in process simplification) could result in substantial improvement in patient safety.

Our goal in this manuscript is to describe how information technology can be used to reduce the frequency and consequences of errors in health care. We begin by discussing the Institute of Medicine report and the evidence that errors and iatrogenic injury are a problem in medicine, and also briefly mention the issue of inefficiency. We then define our scope of discussion (in particular, what we are considering an error) and then discuss the theory of error as it applies to information technology, and the importance of systems improvement. We then discuss the effects of clinical decision support, and errors generated by information technology. That is followed by management issues, the value proposition, barriers, and recent developments on the national front. We conclude by making a number of evidence-based general and specific recommendations regarding the use of information technology for error prevention in health care.

The Institute of Medicine Report and Iatrogenesis

Errors in medicine are frequent, as they are in all domains in life. While most errors have little potential for harm, some do result in injury, and the cumulative consequences of error in medicine are huge.

When the Institute of Medicine (IOM) released its report To Err Is Human: Building a Safer Health System in November 1999,1 the public response surprised most people in the health care community. Although the report's estimates of more than a million injuries and nearly 100,000 deaths attributable to medical errors annually were based on figures from a study published in 1991, they were news to many. The mortality figures in particular have been a matter of some public debate,2,3 although most agree that whatever the number of deaths is, it is too high.

The report galvanized an enormous reaction from both government and health care representatives. Within two weeks, Congress began hearings and the President ordered a government-wide feasibility study, followed in February by a directive to governmental agencies to implement the IOM recommendations. During this time, professional societies and health care organizations have begun to re-assess their efforts in patient safety.

The IOM report made four major points—the extent of harm that results from medical errors is great; errors result from system failures, not people failures; achieving acceptable levels of patient safety will require major systems changes; and a concerted national effort is needed to improve patient safety. The national effort recommended by the IOM involves all stakeholders—professionals, health care organizations, regulators, professional societies, and purchasers. Health care organizations are called on to work with their professionals to implement known safe practices and set up meaningful safety programs in their institutions, including blame-free reporting and analysis of serious errors. External organizations—regulators, professional societies, and purchasers—are called on to help establish standards and best practice for safety and to hold health care organizations accountable for implementing them.

Some of the best available data on the epidemiology of medical injury come from the Harvard Medical Practice Study.4 In that study, drug complications were the most common adverse event (19 percent), followed by wound infections (14 percent) and technical complications (13 percent). Nearly half the events were associated with an operation. Most work on prevention to date has focused on adverse drug events and wound infections. Compared with the data on inpatients, relatively few data on errors and injuries outside the hospital are available, although errors in follow-up5 and diagnosis are probably especially important in non-hospital settings.

While the IOM report and Harvard Medical Practice Study deal primarily with injuries associated with errors in health care, the costs of inefficiencies related to errors that do not result in injury are also great. One example is the effort associated with “missed dose” medication errors, when a medication dose is not available for a nurse to administer and a delay of at least two hours occurs or the dose is not given at all.6 Nurses spend a great deal of time tracking down such medications. Although such costs are harder to assess than the costs of injuries, they may be even greater.

Scope of Discussion

In this paper, we are discussing only clear-cut errors in medical care and not suboptimal practice (such as failure to follow a guideline). Clearly, this is not a dichotomous distinction, and some examples may be helpful. We would consider a sponge left in the patient after surgery an error, whereas an inappropriate indication for surgery would be suboptimal practice. We would consider it an error if no postoperative anticoagulation were used in patients in whom its benefit has clearly been demonstrated (for example, patients who have just had hip surgery). However, we would not consider it an error if a physician failed to follow a pneumonia guideline and prescribed a commonly used but suboptimal antibiotic, even though adherence to such guidelines will almost certainly improve outcomes. Although we believe that information technology can play a major role in both domains, we are not addressing suboptimal practice in this discussion.

Theory of Error

Although human error in health care systems has only recently received great attention, human factors engineering has been concerned with error for several decades. Following the accident at Three Mile Island in the late 1970s, the nuclear power industry was particularly interested in human error as part of human factors concerns, and has produced a number of reports on the subject.7 The U.S. commercial aviation sector is also very interested in human error at present, because of the massive overhaul of the air traffic control network. A few excellent books on human error generally are available.8–10

While it is easy and common to blame operators for accidents, investigation often indicates that an operator “erred” because the system was poorly designed. Testimony of an operator of the Three Mile Island nuclear power plant in a 1979 Congressional hearing11 makes the point: “If you go beyond what the designers think might happen, then the indications are insufficient, and they may lead you to make the wrong inferences.…[H]ardly any of the measurements that we have are direct indications of what is going on in the system.”

The consensus among man–machine system engineers is that we should be designing our control rooms, cockpits, intensive care units, and operating rooms so that they are more “transparent”—that is, so that the operator can more easily “see through” the displays to the actual working system, or “what is going on.” Situational awareness is the term used in the aviation sector. Often the operator is locked into the dilemma of selecting and slavishly following one or another written procedure, each based on an anticipated causality. The operator may not be sure what procedure, if any, fits the current not-yet-understood situation.

Machines can also produce errors. It is commonly appreciated that humans and machines are rather different and that the combination of both thus has greater potential reliability than either alone. However, it is not commonly understood how best to make this synthesis. Humans are erratic, and err in surprising and unexpected ways. Yet they are also resourceful and inventive, and they can recover from both their own and the equipment's errors in creative ways. In comparison, machines are more dependable, which means they are dependably stupid when a minor behavior change would prevent a failure in a neighboring component from propagating. The intelligent machine can be made to adjust to an identified variable whose importance and relation to other variables are sufficiently well understood. The intelligent human operator still has usefulness, however, for he or she can respond to what at the design stage may be termed an “unknown unknown” (a variable which was never anticipated, so that there was never any basis for equations to predict it or computers and software to control it).

Finally, we seek to reduce the undesirable consequences of error, not error itself. Senders and Moray10 provide some relevant comments that relate to information technology: “The less often errors occur, the less likely we are to expect them, and the more we come to believe that they cannot happen…. It is something of a paradox that the more errors we make, the better we will be able to deal with them.” They comment further that, “eliminating errors locally may not improve a system and might cause worse errors elsewhere.”

A medical example relating to these issues comes from the work of Macklis et al. in radiation therapy12; this group has used and evaluated the safety record of a record-and-verify linear accelerator system that double-checks radiation treatments. This system has an error rate of only 0.18 percent, with all detected errors being of low severity. However, 15 percent of the errors that did occur related to use of the system, primarily because when an error in the checking system occurred, the human operators assumed the machine “had to be right,” even in the face of important conflicting data. Thus, the Macklis group expressed concern that over-reliance on the system could result in an accident. This example illustrates why it will be vital to measure how systems changes affect the overall rate of not only errors but also accidents.

Systems Improvement and Error Prevention

Although the traditional approach in medicine has been to identify the persons making the errors and punish them in some way, it has become increasingly clear that it is more productive to focus on the systems by which care is provided.13 If these systems could be set up in ways that would both make errors less likely and catch those that do occur, safety might be substantially improved.

A system analysis of a large series of serious medication errors (those that either might have or did cause harm)13 identified 16 major types of system failures associated with these errors. Of these system failures, all of the top eight could have been addressed by better medical information.

Currently, the clinical systems in routine use in health care in the United States leave a great deal to be desired. The health care industry spends less on information technology than do most other information-intensive industries; in part as a result, the dream of system integration has been realized in few organizations. For example, laboratory systems do not communicate directly with pharmacy systems. Even within medication systems, electronic links between parts of the system (prescribing, dispensing, and administering) typically do not exist today. Nonetheless, real and difficult issues are present in the implementation of information technology in health care, and simply writing a large check does not mean that an organization will necessarily get an outstanding information system, as many organizations have learned to their chagrin.

Evaluation is also an important issue. Data on the effects of information technology on error and adverse event rates are remarkably sparse, and many more such studies are needed. Although such evaluations are challenging, tools to assess the frequency of errors and adverse events in a number of domains are now available.14–19 Errors are much more frequent than actual adverse events (for medication errors, for example, the ratio in one study6 was 100:1). As a result, it is attractive from the sample size perspective to track error rates, although it is important to recognize that errors vary substantially in their likelihood of causing injury.20
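The sample size argument can be made concrete with the standard normal-approximation formula for comparing two proportions. The sketch below is illustrative only: the baseline error rate, the 50 percent relative reduction, and the function name `n_per_group` are assumptions chosen for the example, and only the 100:1 ratio of errors to adverse events comes from the text.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(p_control: float, relative_reduction: float,
                alpha: float = 0.05, power: float = 0.80) -> int:
    """Observations needed per study arm to detect a relative reduction in a
    proportion, using a two-sided test and the normal approximation."""
    p_treated = p_control * (1.0 - relative_reduction)
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1.0 - p_control) + p_treated * (1.0 - p_treated)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_control - p_treated) ** 2)


# Hypothetical baseline: 5 errors per 100 observations (an assumption).
error_rate = 0.05
# Adverse events taken to be 100 times rarer, following the 100:1 ratio above.
adverse_event_rate = error_rate / 100

n_errors = n_per_group(error_rate, relative_reduction=0.5)
n_events = n_per_group(adverse_event_rate, relative_reduction=0.5)

print(f"per-arm sample size when tracking errors:         {n_errors}")
print(f"per-arm sample size when tracking adverse events: {n_events}")
```

Under these assumptions the study that counts adverse events needs roughly two orders of magnitude more observations than the study that counts errors, which is the practical appeal of tracking error rates.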

Clinical Decision Support

While many errors can be detected and corrected by use of human knowledge and inspection, these represent weak error reduction strategies. In 1995, Leape et al.13 demonstrated that almost half of all medication errors were intimately linked with insufficient information about the patient and drug. Similarly, when people are asked to detect errors by inspection, they routinely miss many.21

It has recently been demonstrated that computerized physician order entry systems that incorporate clinical decision support can substantially reduce medication error rates as well as improve the quality and efficiency of medication use. In 1998, Bates et al.20 found in a controlled trial that computerized physician order entry systems resulted in a 55 percent reduction in serious medication errors. In another time series study,22 this group found an 83 percent reduction in the overall medication error rate, and a 64 percent reduction even with a simple system. Evans et al.23 have also demonstrated that clinical decision support can result in major improvements in rates of antibiotic-associated adverse drug events and can decrease costs. Classen et al.24 have also demonstrated in a series of studies that nosocomial infection rates can be reduced using decision support.

Another class of clinical decision support is computerized alerting systems, which can notify physicians about problems that occur asynchronously. A growing body of evidence suggests that such systems may decrease error rates and improve therapy, thereby improving outcomes, including survival, the length of time patients spend in dangerous conditions, hospital length of stay, and costs.25–27 While an increasing number of clinical information systems contain data worthy of generating an alert message, delivering the message to caregivers in a timely way has been problematic. For example, Kuperman et al.28 documented significant delays in treatment even when critical laboratory results were phoned to caregivers. Computer-generated terminal messages, e-mail, and even flashing lights on hospital wards have been tried.29–32 A new system, which transmits real-time alert messages to clinicians carrying alphanumeric pagers or cell phones, promises to eliminate the delivery problem.33,34 It is now possible to integrate laboratory, medication, and physiologic data alerts into a comprehensive real-time wireless alerting system.

Shabot et al.33,34 have developed such a comprehensive system for patients in intensive care units. A software system detects alerts and then sends them to caregivers. The alert detection system monitors data flowing into a clinical information system. The detector contains a rules engine to determine when alerts have occurred.

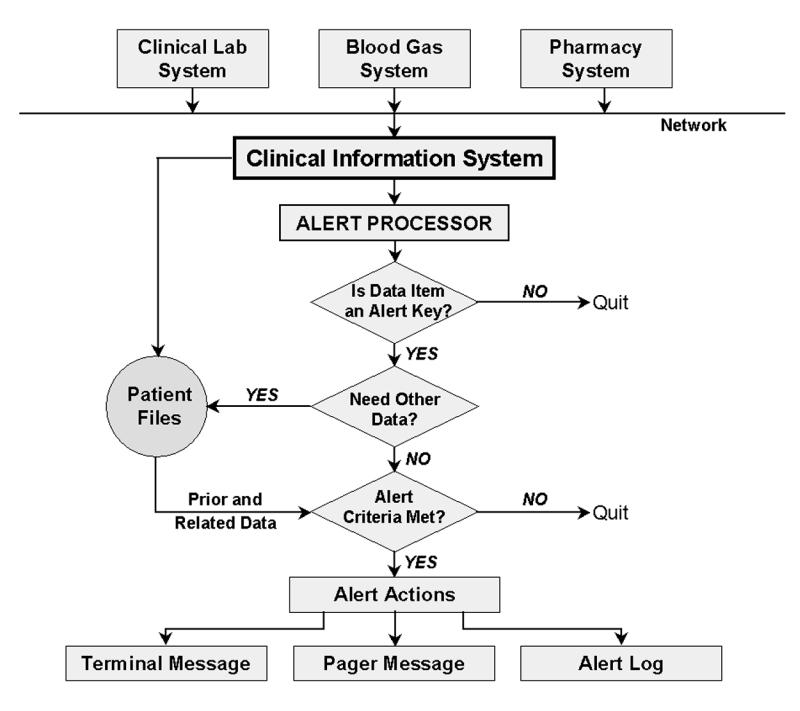

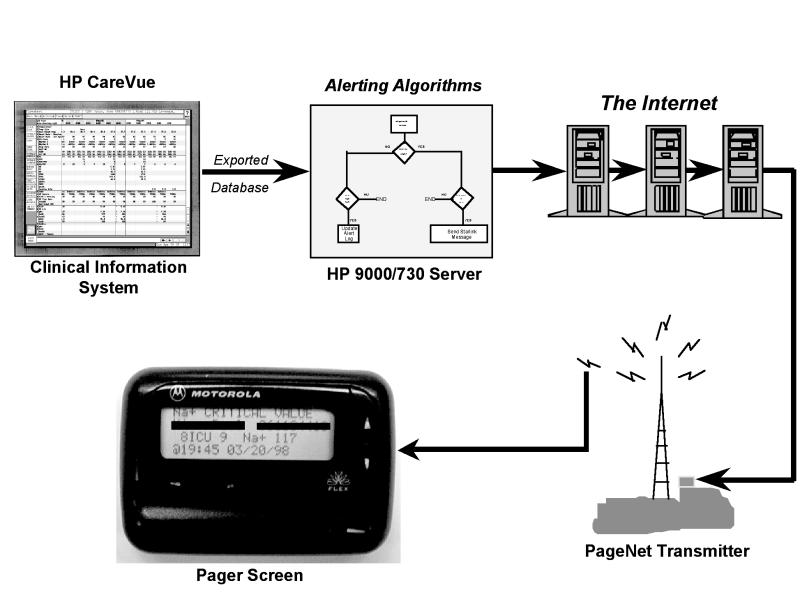

For some kinds of alert detection, prior or related data are needed. When the necessary data have been collected, alerting algorithms are executed and a decision is made as to whether an alert has occurred (Figure 1). The three major forms of critical event detection are critical laboratory alerts, physiologic “exception condition” alerts, and medication alerts. When an alert condition is detected, an application formats a message and transmits it to the alphanumeric pagers of various recipients, on the basis of a table of recipients by message type, patient service type, and call schedule. The message is sent as an e-mail to the coded PIN (personal identification number) of individual caregivers' pagers or cell phones. The message then appears on the device's screen and includes appropriate patient identification information (Figure 2).

Figure 1.

Alert detection system. Three major forms of critical event detection occur—critical laboratory alerts, physiologic “exception condition” alerts, and medication alerts.

Figure 2.

Wireless alerting system. In the Cedars-Sinai system, alerts are initially detected by the clinical system, then sent to a server, then via the Internet, then sent over a PageNet transmitter to a two-way wireless device.
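The detection and routing steps described above can be sketched in a few lines. This is a minimal illustration, not the Cedars-Sinai implementation: the class names, the pager identifiers, the service name, and the routing table are all hypothetical, and the call schedule is collapsed into a static table. The three thresholds are taken from Table 1; laboratory thresholds are not given in the text and are therefore omitted.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Observation:
    patient_id: str
    service: str   # patient service type, e.g. "surgical ICU" (hypothetical)
    kind: str      # "laboratory", "physiologic", or "medication"
    name: str
    value: float


@dataclass(frozen=True)
class Rule:
    kind: str
    name: str
    is_alert: Callable[[float], bool]
    text: str


# Thresholds below are those listed in Table 1.
RULES: List[Rule] = [
    Rule("physiologic", "PEEP", lambda v: v > 15, "PEEP > 15 cm H2O"),
    Rule("physiologic", "pulmonary wedge pressure", lambda v: v > 22,
         "Pulmonary wedge pressure > 22 mm Hg"),
    Rule("medication", "digoxin dose", lambda v: v >= 0.5, "Digoxin dose >= 0.5 mg"),
]

# Hypothetical recipient table keyed by (message type, patient service type).
# A real table would also be filtered by the current call schedule.
RECIPIENTS: Dict[Tuple[str, str], List[str]] = {
    ("physiologic", "surgical ICU"): ["pager-1001"],
    ("medication", "surgical ICU"): ["pager-1001", "pager-2002"],
}


def detect(obs: Observation) -> List[Rule]:
    """Return every rule whose alert condition the observation satisfies."""
    return [r for r in RULES
            if r.kind == obs.kind and r.name == obs.name and r.is_alert(obs.value)]


def route(obs: Observation) -> List[Tuple[str, str]]:
    """Return (recipient, message) pairs for each alert the observation triggers."""
    messages = []
    for rule in detect(obs):
        text = f"ALERT patient {obs.patient_id}: {rule.text} (observed {obs.value})"
        for recipient in RECIPIENTS.get((obs.kind, obs.service), []):
            messages.append((recipient, text))
    return messages


for recipient, text in route(Observation("MRN-0001", "surgical ICU", "physiologic", "PEEP", 18.0)):
    print(recipient, text)
```

Keeping the rules and the recipient table as data, separate from the detection and routing functions, means that clinicians can review or change thresholds and recipients without touching the code that applies them.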

Alerts are a crucial part of a clinical decision support system,35 and their value has been demonstrated in controlled trials.27,35 In one study, Rind et al.27 alerted physicians via e-mail to increases in serum creatinine in patients receiving nephrotoxic medications or renally excreted drugs. Rind et al. reported that when e-mail alerts were delivered, medications were adjusted or discontinued an average of 21.6 hours earlier than when no e-mail alerts were delivered. In another study, Kuperman et al.35 found that when clinicians were paged about “panic” laboratory values, time to therapy decreased 11 percent and mean time to resolution of an abnormality was 29 percent shorter.

As more and different kinds of clinical data become available electronically, the ability to perform more sophisticated alerts and other types of decision support will grow. For example, medication-related, laboratory, and physiologic data can be combined to create a variety of automated alerts. (Table 1 shows a sample of those currently included in the system used at Cedars-Sinai Medical Center, Los Angeles, California.) Furthermore, computerization offers many tools for decision support, but because of space limitations we have discussed only some of these; among the others are algorithms, guidelines, order sets, trend monitors, and co-sign forcers. Most sophisticated systems include an array of these tools.

Table 1.

Sample of Wireless Alerts Currently in Use at Cedars-Sinai Medical Center, Los Angeles, California

| Alert category | Alerts |
|---|---|
| Laboratory alerts: chemistries | Sodium; potassium; chloride; calcium |
| Laboratory alerts: hematology | Hemoglobin; hematocrit; white blood cell count; prothrombin time; partial thromboplastin time |
| Laboratory alerts: arterial blood gas | pH; Po2; Pco2 |
| Laboratory trend alerts | Hematocrit; sodium |
| Drug levels | Phenytoin; theophylline; phenobarbital; quinidine; lidocaine; procainamide; NAPA (N-acetyl-procainamide); digoxin; thiocyanate; gentamicin; tobramycin |
| Cardiac enzymes | Troponin I |
| Exception alerts | Fio2 > 60% for > 4 hours; PEEP (positive end-expiratory pressure) > 15 cm H2O; systolic BP < 80 mm Hg and no pulmonary artery catheter; systolic BP < 80 mm Hg and pulmonary wedge pressure < 10 mm Hg; pulmonary wedge pressure > 22 mm Hg; urine output < 0.3 cc/kg/hour and patient not admitted in chronic renal failure; ventricular tachycardia; ventricular fibrillation; Code Blue; re-admission to intensive care unit < 48 hours after discharge |
| Medication dose alerts | Gentamicin ≥ 200 mg; tobramycin ≥ 200 mg; vancomycin ≥ 1,500 mg; phenytoin ≥ 1,000 mg; digoxin ≥ 0.5 mg; heparin flush ≥ 500 units; heparin injection ≥ 5,000 units; enoxaparin ≥ 30 mg; Epogen (epoetin alfa) ≥ 20,000 units |
| Medication–physiology alerts | Alert if urine output is low (< 0.3 cc/kg/hour for 3 hours) and the patient is receiving gentamicin, tobramycin, vancomycin, penicillin, ampicillin, Augmentin (amoxicillin/clavulanic acid), piperacillin, Zosyn (piperacillin/tazobactam), oxacillin, Primaxin (imipenem/cilastatin), or Unasyn (ampicillin/sulbactam). |
| Medication–laboratory data trend alerts | Alert if serum creatinine level increases by > 0.5 mg/dL in 24 hours and the patient is receiving any of the following drugs: gentamicin, tobramycin, amikacin, vancomycin, amphotericin, digoxin, procainamide, Prograf (tacrolimus), cyclosporin, or ganciclovir. |
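The medication–laboratory trend alert in Table 1 combines two data sources, and a short sketch shows what such a rule has to check. The threshold, time window, and drug list come from the table; the function name, the data layout, and the choice to compare every pair of results within the window are assumptions made for illustration.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

# Drugs named in the medication-laboratory trend alert in Table 1
# (generic names only; brand names would need mapping in a real system).
WATCHED_DRUGS = {
    "gentamicin", "tobramycin", "amikacin", "vancomycin", "amphotericin",
    "digoxin", "procainamide", "tacrolimus", "cyclosporin", "ganciclovir",
}


def creatinine_trend_alert(results: List[Tuple[datetime, float]],
                           active_drugs: Iterable[str],
                           rise_mg_dl: float = 0.5,
                           window: timedelta = timedelta(hours=24)) -> bool:
    """Return True if serum creatinine rose by more than `rise_mg_dl` between
    any two results at most `window` apart while a watched drug is active."""
    if not {drug.lower() for drug in active_drugs} & WATCHED_DRUGS:
        return False
    ordered = sorted(results)  # chronological order
    for i, (t_early, c_early) in enumerate(ordered):
        for t_late, c_late in ordered[i + 1:]:
            if t_late - t_early <= window and c_late - c_early > rise_mg_dl:
                return True
    return False


# Hypothetical patient data for illustration.
start = datetime(2000, 1, 1, 8, 0)
rising = [(start, 1.0), (start + timedelta(hours=12), 1.7)]
print(creatinine_trend_alert(rising, ["Gentamicin"]))    # watched drug, rapid rise
print(creatinine_trend_alert(rising, ["acetaminophen"]))  # no watched drug
```

The rule needs prior laboratory values and the active medication list at the moment a new result arrives, which is why this kind of alert depends on laboratory and pharmacy data being available in one place.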

Errors Generated By Information Technology

Although information technology can help reduce error and accident rates, it can also cause errors. For example, if two medications that are spelled similarly are displayed next to each other, substitution errors can occur. Also, clinicians may write an order in the wrong patient's record.

In particular, early adopters of vendor-developed order entry have reported significant barriers to successful implementation, new sources of error, and major infrastructure changes that have been necessary to accommodate the technology. The order entry process with many computerized physician order entry systems currently on the market is error-prone and time-consuming. As a result, prescribers may bypass the order entry process totally and encourage nurses, pharmacists, or unit secretaries to enter written or verbal drug orders. Also, most computerized physician order entry systems are separate from the pharmacy system, which requires double entry of all orders. This may result in electronic/computer-generated medication administration records (MARs) that are derived from the order entry system database, not the pharmacy database, which can result in discrepancies and extra work for nurses and pharmacists.

Furthermore, many computerized physician order entry systems lack even basic screening capabilities to alert practitioners to unsafe orders relating to overly high doses, allergies, and drug–drug interactions. While visiting hospitals in 1998, representatives of the Institute for Safe Medication Practices (ISMP) tested pharmacy computers and were alarmed to discover that many failed to detect unsafe drug orders. Subsequently, ISMP asked directors of pharmacy in U.S. hospitals to perform a nationwide field test to assess the capability of their systems to intercept common or serious prescribing errors.36 To participate, pharmacists set up a test patient in their computer system, then entered physician prescribing errors that had led to a patient's death or serious injury during 1998 (Table 2). Many of even the fatal errors went undetected by the systems tested.

Table 2.

Percentage of Pharmacy Computer Systems That Failed to Provide Unsafe Order Alerts*

| Order | Unsafe Order Not Detected (%) | Can Override Without Note (%) |
|---|---|---|
| Cephradine oral suspension IV | 61 | 36 |
| Ketorolac 60 mg IV (patient allergic to aspirin) | 12 | 64 |
| Vincristine 3 mg IV x 1 dose (2-year-old) | 62 | 56 |
| Colchicine 10 mg IV for 1 dose (adult) | 66 | 55 |
| Cisplatin 204 mg IV x 1 dose (26-kg child) | 63 | 62 |

* All these orders are unsafe and have resulted in at least one fatality in the United States. However, most pharmacy systems did not detect them, and even among those that did, a large percentage allowed an override without a note. Data reprinted, with permission, from ISMP Medication Safety Alert! Feb 10, 1999.36 Copyright © Institute for Safe Medication Practices.
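A field test of this kind amounts to running a fixed set of known-unsafe orders through a screening system and counting the misses. The sketch below shows the shape of such a harness under stated assumptions: the two screening functions stand in for hypothetical pharmacy systems, the cross-sensitivity map is illustrative rather than clinical reference data, and only two of the Table 2 orders are modeled because the others would require dose limits that the text does not supply.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class UnsafeOrder:
    drug: str
    formulation: str
    route: str
    patient_allergies: Set[str] = field(default_factory=set)


# Two of the unsafe orders listed in Table 2.
FIELD_TEST: List[UnsafeOrder] = [
    UnsafeOrder("cephradine", "oral suspension", "IV"),
    UnsafeOrder("ketorolac", "injection", "IV", {"aspirin"}),
]

# Illustrative cross-sensitivity map (assumption, not a clinical reference).
CROSS_SENSITIVITY: Dict[str, Set[str]] = {"ketorolac": {"aspirin"}}


def screens_allergy_only(order: UnsafeOrder) -> bool:
    """Hypothetical system A: flags only allergy conflicts."""
    return bool(CROSS_SENSITIVITY.get(order.drug, set()) & order.patient_allergies)


def screens_allergy_and_route(order: UnsafeOrder) -> bool:
    """Hypothetical system B: also flags oral formulations ordered IV."""
    route_mismatch = order.formulation.startswith("oral") and order.route == "IV"
    return route_mismatch or screens_allergy_only(order)


def percent_not_detected(system: Callable[[UnsafeOrder], bool],
                         orders: List[UnsafeOrder]) -> float:
    """Percentage of known-unsafe test orders the system fails to flag."""
    missed = sum(1 for order in orders if not system(order))
    return 100.0 * missed / len(orders)


print(percent_not_detected(screens_allergy_only, FIELD_TEST))
print(percent_not_detected(screens_allergy_and_route, FIELD_TEST))
```

Because every test order is unsafe by construction, any order that passes unflagged is a miss, so the harness needs no reference answer beyond the order list itself.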

These anecdotal data suggest that current systems may be inadequate and that simply implementing the current off-the-shelf vendor products may not have the same effect on medication errors that has been reported in research studies. Improvement of vendor-based systems and evaluation of their effects is crucial, since these are the systems that will be implemented industry-wide.

Management Issues

A major problem in creating the will to reduce errors has been that administrators have not been aware of the magnitude of the problem. For example, one survey showed that, while 92 percent of hospital CEOs reported that they were knowledgeable about the frequency of medication errors in their facility, only 8 percent said they had more than 20 per month, when in fact all probably had more than this.37 Probably in part as a result, the Advisory Board Company found that reducing clinical error and adverse events ranked 133rd when CEOs were asked to rank items on a priority list.38 A number of efforts are currently under way to increase the visibility of the issue. For example, a video about this issue, which was developed by the American Hospital Association and the Institute for Healthcare Improvement, has been sent to all hospital CEOs in the United States, and a number of indicators suggest that such efforts may be working.

The Value Proposition

For information technology to be implemented, it must be clear that the return on investment is sufficient, and far too few data are available regarding this in health care. Furthermore, there are many horror stories of huge investments in information technology that have come to naught.

Positive examples relate to computer order entry. At one large academic hospital, the savings were estimated to be $5 million to $10 million annually on a $500 million budget.39 Another community hospital predicts even larger savings, with expected annual savings of $21 million to $26 million, representing about a tenth of its budget.40 In addition, in a randomized controlled trial, order entry was found to result in a 12.7 percent decrease in total charges and a 0.9 day decrease in length of stay.41 Even without full computerization of ordering, substantial savings can be realized: data from LDS Hospital23 demonstrated that a program that assisted with antibiotic management resulted in a fivefold decrease in the frequency of excess drug dosages and a tenfold decrease in antibiotic-susceptibility mismatches, with substantially lower total costs and lengths of stay.

Barriers

Despite these demonstrated benefits, only a handful of organizations have successfully implemented clinical decision support systems. A number of barriers have prevented implementation. Among these are the tendency of health care organizations to invest in administrative rather than clinical systems; the issue of “silo accounting,” so that benefits that accrue across a system do not show up in one budget and thus do not get credit; the current financial crisis in health care, which has been exacerbated by the Balanced Budget Act and has made it very hard for hospitals to invest; the lack, at many sites, of leaders in information technology; and the lack of expertise in implementing systems.

One of the greatest barriers to providing outstanding decision support, however, has been the need for an extensive electronic medical record system infrastructure. Although much of the data required to implement significant clinical decision support is already available in electronic form at many institutions, the data are either not accessible or cannot be brought together to be used in clinical decision support because of format and interface issues. Existing and evolving standards for exchange of information (HL7) and coding of this data are simplifying this task. Correct and consistent identification of patients, doctors, and locations is another area in which standards are needed. Approaches to choosing which information should be coded and how to record a mixture of structured coded information and unstructured text are still immature.
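To illustrate why a shared exchange format simplifies decision support, the sketch below pulls a laboratory result out of a message, assuming the pipe-delimited HL7 version 2 encoding (the text names HL7 but not a version). The sample message, patient identifier, result value, and reference range are fabricated for illustration, and the parser handles only the OBX fields it needs; a production system would use a complete HL7 library and handle escape sequences and repetitions.

```python
from typing import Dict, List

# Fabricated HL7 v2 result message for illustration (segments end with \r).
SAMPLE_MESSAGE = "\r".join([
    "MSH|^~\\&|LAB|HOSP|ALERTS|HOSP|200001011200||ORU^R01|MSG0001|P|2.3",
    "PID|1||MRN-0001",
    "OBX|1|NM|2823-3^Potassium^LN||6.8|mmol/L|3.5-5.1|HH|||F",
])


def parse_obx_segments(message: str) -> List[Dict[str, str]]:
    """Extract code, name, value, units, range, and flag from each OBX segment."""
    observations = []
    for segment in message.split("\r"):
        fields = segment.split("|")
        if fields[0] != "OBX":
            continue
        fields += [""] * (9 - len(fields))        # pad short segments
        components = fields[3].split("^")         # OBX-3: code^text^coding system
        observations.append({
            "code": components[0],
            "name": components[1] if len(components) > 1 else "",
            "value": fields[5],                   # OBX-5: observation value
            "units": fields[6],                   # OBX-6: units
            "reference_range": fields[7],         # OBX-7: reference range
            "abnormal_flag": fields[8],           # OBX-8: abnormal flags
        })
    return observations


for obs in parse_obx_segments(SAMPLE_MESSAGE):
    print(obs["name"], obs["value"], obs["units"], obs["abnormal_flag"])
```

Once results arrive with a coded identifier and an abnormal flag in predictable positions, an alerting rule can be written once and applied to data from any laboratory system that emits the same format.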

Some organizations have moved ahead with adopting such standards on their own, and this can have great benefits. For example, a technology architecture guide was developed at Cedars-Sinai Medical Center to help ensure that its internal systems and databases operate in a coherent manner. This has allowed them to develop what they call their “Web viewing system,” which allows clinicians to see nearly all results on an Internet platform. Many health care organizations are hamstrung, because they have implemented so many different technologies and databases that information stays in silos.

A second major hurdle is choosing the appropriate rules or guidelines to implement. Many organizations have not developed processes for developing and implementing consensus choices in their physician groups. Once the focus has been determined, the organization must determine exactly what should be done about the selected problem. Regulatory and legal issues have also prevented vendors from providing this type of content. Finally, despite good precedents for delivering feedback to clinicians for simple decision support, changing provider behavior for more complex aspects of care remains challenging.

The National Picture

A national commitment to safer health care is developing. Although it is too soon to determine how it will “play out” (the initial fixation on mandatory reporting has been an unwelcome diversion, for example), it seems clear that many stakeholders have a real interest in improving safety. Doctors and other professionals are in the interesting position of being expected to be both leaders in this movement and the recipients of its attention. Already a national coalition involving many of the leading purchasers, the Leapfrog Group, which includes such companies as General Motors and General Electric, has announced its intention to provide incentives to hospitals and other health care organizations to implement safe practices.42 One of the first of these practices will be the implementation of computerized physician order entry systems. Similarly, a recent Medicare Payment Advisory Commission report suggested that the Health Care Financing Administration consider providing financial incentives to hospitals that adopt physician order entry systems.43 The Agency for Healthcare Research and Quality has received $50 million in funding to support error reduction research, including information technology–related strategies. California recently passed a law mandating that non-rural hospitals implement computerized physician order entry or another application like it by 2005.44 Clearly, many look to automation to play a major role in the redesign of our systems.

Recommendations

Recommendations for using information technology to reduce errors fall into two categories—general suggestions that are relevant across domains, and very specific recommendations. It is important to recognize that these lists are not exhaustive, but they do contain many of the most important and best-documented precepts. Although many of these relate to the medication domain, this is because the best current evidence is available for this area; we anticipate that information technology will eventually be shown to be important for error reduction across a wide variety of domains, and some evidence is already available for blood products, for example.45,46 The strength of these recommendations is based on a standard set of criteria for levels of evidence.47 For therapy and prevention, evidence level 1a represents multiple randomized trials, level 1b is an individual randomized trial, level 4 is case series, and level 5 represents expert opinion.

General Recommendations

Implement clinical decision support judiciously (evidence level 1a). Clinical decision support can clearly improve care,48 but it must be used in ways that help users, and the false-positive rate of active suggestions should not be overly high. Such decision support should be usable by physicians.

Consider consequent actions when designing systems (evidence level 1b). Many times, one action implies another, and systems that prompt regarding this can dramatically decrease the likelihood of errors of omission.49
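As a rough illustration of this recommendation, the following minimal sketch shows one way a system might prompt for consequent ("corollary") orders. The rule table, the order names, and the function `suggest_corollary_orders` are hypothetical placeholders introduced here for illustration; they are not taken from any cited system and are not clinical guidance.

```python
# Hypothetical rule table: trigger order -> follow-up orders it implies.
# The names are placeholders for illustration only, not clinical guidance.
COROLLARY_RULES = {
    "order_A": ["monitoring_test_for_A"],
    "order_B": ["monitoring_test_for_B", "protective_order_for_B"],
}


def suggest_corollary_orders(new_order, active_orders, rules=COROLLARY_RULES):
    """Return follow-up orders implied by new_order that are not yet active.

    new_order     -- identifier of the order just entered
    active_orders -- collection of identifiers already ordered for the patient
    rules         -- mapping from trigger order to implied follow-up orders
    """
    implied = rules.get(new_order, [])
    # Only prompt for what is missing, so the clinician is not asked twice.
    return [order for order in implied if order not in active_orders]


# Example: the protective order already exists, so only monitoring is suggested.
suggestions = suggest_corollary_orders("order_B", {"protective_order_for_B"})
print(suggestions)
```

Filtering out orders that are already active is one simple way to keep such prompts from adding to the false-positive burden discussed under the first recommendation.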

Test existing systems to ensure that they actually catch errors that injure patients (evidence level 5). The match between the errors that systems detect and the actual frequency of important errors is often suboptimal.

Promote adoption of standards for data and systems (evidence level 5). Adoption of standards is critical if we are to realize the potential of information technology for error prevention. Standards for constructs such as drugs and allergies are especially important.

Develop systems that communicate with each other (evidence level 5). One of the greatest barriers to providing clinicians with meaningful information has been the inability of systems, such as medication and laboratory systems, to readily exchange data. Such communication should be seamless. Adopting enterprise database standards can vastly simplify this issue.

Use systems in new ways (evidence level 5). Electronic records will soon facilitate new, sophisticated prevention approaches, such as risk factor profiling and pharmacogenomics, in which a patient's medications are profiled against their genetic makeup.

Measure and prevent adverse consequences (evidence level 5). Information technology in general and clinical decision support in particular can certainly have perverse and opposite consequences; continuous monitoring is essential.50 However, such monitoring has often not been carried out. It should also be routine to measure how often recommendations are presented and how often suggestions are accepted and to have some measures of downstream outcomes.
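The routine measurement described above could be sketched as follows, assuming the system logs one record per alert shown. The event format (a pair of alert type and an accepted flag) and the function name `alert_acceptance_summary` are assumptions made for this example; downstream outcome measures would need additional data not modeled here.

```python
from collections import Counter


def alert_acceptance_summary(events):
    """Summarize how often each alert type was presented and accepted.

    events -- iterable of (alert_type, was_accepted) pairs, one per alert shown
    Returns {alert_type: {"presented": n, "accepted": k, "acceptance_rate": k / n}}.
    """
    presented = Counter()
    accepted = Counter()
    for alert_type, was_accepted in events:
        presented[alert_type] += 1
        if was_accepted:
            accepted[alert_type] += 1
    return {
        alert_type: {
            "presented": count,
            "accepted": accepted[alert_type],
            "acceptance_rate": accepted[alert_type] / count,
        }
        for alert_type, count in presented.items()
    }


# Example log with hypothetical alert types.
log = [("allergy", True), ("allergy", False), ("dose", False)]
for alert_type, stats in alert_acceptance_summary(log).items():
    print(alert_type, stats)
```

A persistently low acceptance rate for an alert type is one signal that the alert may be firing too often to be useful, although it does not by itself show whether patients were harmed or helped.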

Make existing quality structures meaningful (evidence level 5). Quality measurement and improvement groups are often suboptimally effective. Increasing the use of computerization should make it dramatically easier to measure quality continually. Such information must then be used to make ongoing changes.

Improve regulation and remove disincentives for vendors to provide clinical decision support (evidence level 5). The regulation relating to information technology is hopelessly outdated and is currently being revised to address such issues as privacy in the electronic world.51 One issue that relates to error in particular is that vendors, with some cause, fear being sued if they provide action-oriented clinical decision support. Thus, the support either is not provided or is watered down. This problem must be addressed.

Specific Recommendations

Implement provider order entry systems, especially computerized prescribing (evidence level 1b). Provider order entry has been shown to reduce the serious medication error rate by 55 percent.20

Implement bar-coding for medications, blood, devices, and patients (evidence level 4). In other industries, bar-coding has dramatically reduced error rates. Although fewer data are available for this recommendation in medicine, it is likely that bar-coding will have a major impact.52
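To make the idea concrete, the sketch below shows the kind of check a bar-code system might run at the bedside: the scanned patient identifier and the scanned drug code are compared against active orders. The `MedicationOrder` type, its fields, and the function `check_administration` are hypothetical and introduced only for illustration; a real system would also need to check dose, route, and timing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MedicationOrder:
    """Hypothetical active order linking a patient to a drug code."""
    patient_id: str
    drug_code: str


def check_administration(scanned_patient_id, scanned_drug_code, active_orders):
    """Compare two bar-code scans against active orders.

    Returns (ok, reason), where ok is True only when the scanned drug
    is on an active order for the scanned patient.
    """
    patient_orders = [o for o in active_orders if o.patient_id == scanned_patient_id]
    if not patient_orders:
        return False, "no active orders for scanned patient"
    if any(o.drug_code == scanned_drug_code for o in patient_orders):
        return True, "match"
    return False, "scanned drug not ordered for this patient"


# Example with placeholder identifiers.
orders = [MedicationOrder("patient-1", "drug-X")]
print(check_administration("patient-1", "drug-X", orders))
print(check_administration("patient-1", "drug-Y", orders))
```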

Use modern electronic systems to communicate key pieces of asynchronous data (evidence level 1b). Timely communication of markedly abnormal laboratory tests can decrease time to therapy and the time patients spend in life-threatening conditions.
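A minimal sketch of such asynchronous communication follows, assuming laboratory results arrive as simple records and that the institution supplies its own critical ranges. The record fields, the `critical_ranges` mapping, the `notify` callback, and the function `route_critical_results` are all assumptions made for this example; the range used in the usage lines is a placeholder, not a clinical threshold.

```python
def route_critical_results(results, critical_ranges, notify):
    """Send a notification for every result outside its critical range.

    results         -- list of dicts with keys "patient_id", "test", "value"
    critical_ranges -- {test_name: (low, high)}, supplied by the institution
    notify          -- callable invoked once per flagged result (e.g. a pager hook)
    Returns the list of flagged results, in input order.
    """
    flagged = []
    for result in results:
        limits = critical_ranges.get(result["test"])
        if limits is None:
            continue  # no critical range defined for this test
        low, high = limits
        if result["value"] < low or result["value"] > high:
            flagged.append(result)
            notify(result)
    return flagged


# Example: collect notifications in a list instead of paging anyone.
sent = []
incoming = [
    {"patient_id": "p1", "test": "test_x", "value": 9.0},
    {"patient_id": "p2", "test": "test_x", "value": 3.0},
]
route_critical_results(incoming, {"test_x": (1.0, 5.0)}, sent.append)
print(len(sent))
```

Passing the notification step in as a callback keeps the detection logic separate from the delivery channel, which may be a pager, a message to the responsible clinician, or another mechanism.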

Our hope is that these recommendations will be useful for a variety of audiences. Error in health care is a pressing problem, which is best addressed by changing our systems of care—most of which involve information technology. Although information technology is not a panacea for this problem, which is highly complex and will demand the attention of many, it can play a key role. The informatics community should make it a high priority to assess the effects of information technology on patient safety.

This work is based on discussions at the AMIA 2000 Spring Congress; May 23–25, 2000; Boston, Massachusetts.

References

- 1. Kohn LT, Corrigan JM, Donaldson MS (eds). To Err Is Human: Building a Safer Health System. Washington, DC: National Academy Press, 1999.

- 2. McDonald CJ, Weiner M, Hui SL. Deaths due to medical errors are exaggerated in Institute of Medicine report. JAMA. 2000;284:93–5.

- 3. Leape LL. Institute of Medicine medical error figures are not exaggerated [comment]. JAMA. 2000;284:95–7.

- 4. Leape LL, Brennan TA, Laird NM, et al. The nature of adverse events in hospitalized patients: results from the Harvard Medical Practice Study II. N Engl J Med. 1991;324:377–84.

- 5. Gurwitz JH, Field T, Avorn J, et al. Incidence and preventability of adverse drug events in nursing homes. Am J Med. 2000;109:87–94.

- 6. Bates DW, Boyle DL, Vander Vliet MB, Schneider J, Leape LL. Relationship between medication errors and adverse drug events. J Gen Intern Med. 1995;10:199–205.

- 7. Rasmussen J. Human errors: a taxonomy for describing human malfunction in industrial installations. J Occup Accid. 1982;4:311–35.

- 8. Reason J. Human Error. Cambridge, UK: Cambridge University Press, 1990.

- 9. Norman DA. The Design of Everyday Things. New York: Basic Books, 1988.

- 10. Senders J, Moray N. Human Error: Cause, Prediction and Reduction. Mahwah, NJ: Lawrence Erlbaum, 1991.

- 11. Testimony of the Three Mile Island Operators. United States President's Commission on the Accident at Three Mile Island, vol 1. Washington, DC: U.S. Government Printing Office, 1979:138.

- 12. Macklis RM, Meier T, Weinhous MS. Error rates in clinical radiotherapy. J Clin Oncol. 1998;16:551–6.

- 13. Leape LL, Bates DW, Cullen DJ, et al. Systems analysis of adverse drug events. ADE Prevention Study Group. JAMA. 1995;274(1):35–43.

- 14. Brennan TA, Leape LL, Laird N, et al. Incidence of adverse events and negligence in hospitalized patients: results from the Harvard Medical Practice Study I. N Engl J Med. 1991;324:370–6.

- 15. Barker KN, Allan EL. Research on drug-use-system errors. Am J Health Syst Pharm. 1995;52:400–3.

- 16. Classen DC, Pestotnik SL, Evans RS, Burke JP. Computerized surveillance of adverse drug events in hospital patients. JAMA. 1991;266:2847–51.

- 17. Jha AK, Kuperman GJ, Teich JM, et al. Identifying adverse drug events: development of a computer-based monitor and comparison to chart review and stimulated voluntary report. J Am Med Inform Assoc. 1998;5(3):305–14.

- 18. Gandhi TK, Seger DL, Bates DW. Identifying drug safety issues: from research to practice. Int J Qual Health Care. 2000;12:69–76.

- 19. Karson AS, Bates DW. Screening for adverse events. J Eval Clin Pract. 1999;5:23–32.

- 20. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280(15):1311–6.

- 21. Bates DW, Cullen D, Laird N, et al. Incidence of adverse drug events and potential adverse drug events: implications for prevention. JAMA. 1995;274:29–34.

- 22. Bates DW, Miller EB, Cullen DJ, et al. Patient risk factors for adverse drug events in hospitalized patients. Arch Intern Med. 1999;159:2553–60.

- 23. Evans RS, Pestotnik SL, Classen DC, et al. A computer-assisted management program for antibiotics and other anti-infective agents. N Engl J Med. 1998;338:232–8.

- 24. Classen DC, Evans RS, Pestotnik SL, et al. The timing of prophylactic administration of antibiotics and the risk of surgical-wound infection. N Engl J Med. 1992;326:281–6.

- 25. Tate K, Gardner RM, Scherting K. Nurses, pagers and patient specific criteria: three keys to improved critical value reporting. Proc Annu Symp Comput Appl Med Care. 1995;19:164–8.

- 26. Tate K, Gardner RM, Weaver LK. A computerized laboratory alerting system. MD Comput. 1990;7:296–301.

- 27. Rind D, Safran C, Phillips RS, et al. Effect of computer-based alerts on the treatment and outcomes of hospitalized patients. Arch Intern Med. 1994;154:1511–7.

- 28. Kuperman G, Boyle D, Jha AK, et al. How promptly are inpatients treated for critical laboratory results? J Am Med Inform Assoc. 1998;5:112–9.

- 29. Bradshaw K. Computerized alerting system warns of life-threatening events. Proc Annu Symp Comput Appl Med Care. 1986;10:403.

- 30. Bradshaw K. Development of a computerized laboratory alerting system. Comput Biomed Res. 1989;22:575–87.

- 31. Shabot M, LoBue M, Leyerle B. Inferencing strategies for automated alerts on critically abnormal laboratory and blood gas data. Proc Annu Symp Comput Appl Med Care. 1989;13:54–7.

- 32. Shabot M, LoBue M, Leyerle B. Decision support alerts for clinical laboratory and blood gas data. Int J Clin Monit Comput. 1990;7:27–31.

- 33. Shabot M, LoBue M. Real-time wireless decision support alerts on a palmtop PDA. Proc Annu Symp Comput Appl Med Care. 1995;19:174–7.

- 34. Shabot M, LoBue M, Chen J. Wireless clinical alerts for critical medication, laboratory and physiologic data. Proceedings of the 33rd Hawaii International Conference on System Sciences (HICSS); Jan 4–7, 2000; Maui, Hawaii [CD-ROM]. Washington, DC: IEEE Computer Society, 2000.

- 35. Kuperman G, Sittig DF, Shabot M, Teich J. Clinical decision support for hospital and critical care. J HIMSS. 1999;13:81–96.

- 36. Institute for Safe Medication Practices. Over-reliance on computer systems may place patients at great risk. ISMP Medication Safety Alert, Feb 10, 1999. Huntingdon Valley, Pa.: ISMP, 1999.

- 37. Bruskin Goldring Research. A Study of Medication Errors and Specimen Collection Errors. Commissioned by BD (Becton-Dickinson) and College of American Pathologists. Feb 1999. Available at: http://www.bd.com/bdid/whats_new/survey.html. Accessed Mar 12, 2001.

- 38. The Advisory Board Company. Prescription for change: toward a higher standard in medication management. Washington, DC: ABC, 1999.

- 39. Glaser J, Teich JM, Kuperman G. Impact of information events on medical care. Proceedings of the 1996 HIMSS Annual Conference. Chicago, Ill.: Healthcare Information and Management Systems Society, 1996:1–9.

- 40. Sarasota Memorial Hospital documents millions in expected cost savings, reduced LOS through use of Eclipsys' Sunrise Clinical Manager [press release]. Delray Beach, Fla.: Eclipsys Corporation; Oct 11, 1999. Available at: http://www.eclipsys.com. Accessed Nov 8, 2000.

- 41. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations: effects on resource utilization. JAMA. 1993;269:379–83.

- 42. Fischman J. Industry Preaches Safety in Pittsburgh. U.S. News and World Report. Jul 17, 2000.

- 43. Medicare Payment Advisory Commission. Report to the Congress: Selected Medicare Issues, Jun 1999. Available at: http://www.medpac.gov/html/body_june_report.html. Accessed Mar 12, 2001.

- 44. California Senate Bill No. 1875. Chapter 816, Statutes of 2000.

- 45. Lau FY, Wong R, Chui CH, Ng E, Cheng G. Improvement in transfusion safety using a specially designed transfusion wristband. Transfus Med. 2000;10(2):121–4.

- 46. Blood-error reporting system tracks medical mistakes [press release]. Dallas, Tex: The University of Texas Southwestern Medical Center at Dallas; Nov 22, 1999. Available at: http://irweb.swmed.edu/newspub. Accessed Mar 12, 2001.

- 47. Centre for Evidence-based Medicine. Levels of evidence and grades of recommendation; Sep 8, 2000. Available at: http://cebm.jr2.ox.ac.uk/docs/levels.html. Accessed Mar 12, 2001.

- 48. Haynes RB, Hayward RS, Lomas J. Bridges between health care research evidence and clinical practice. J Am Med Inform Assoc. 1995;2:342–50.

- 49. Overhage JM, Tierney WM, Zhou X, McDonald CJ. A randomized trial of “corollary orders” to prevent errors of omission. J Am Med Inform Assoc. 1997;4:364–75.

- 50. Miller R, Gardner RM. Summary recommendations for responsible monitoring and regulation of clinical software systems. Ann Intern Med. 1997;127:842–5.

- 51. Gostin LO, Lazzarini Z, Neslund VS, Osterholm MT. The public health information infrastructure: a national review of the law on health information privacy. JAMA. 1996;275:1921–7.

- 52. Bates DW. Using information technology to reduce rates of medication errors in hospitals. BMJ. 2000;320:788–91.