Abstract

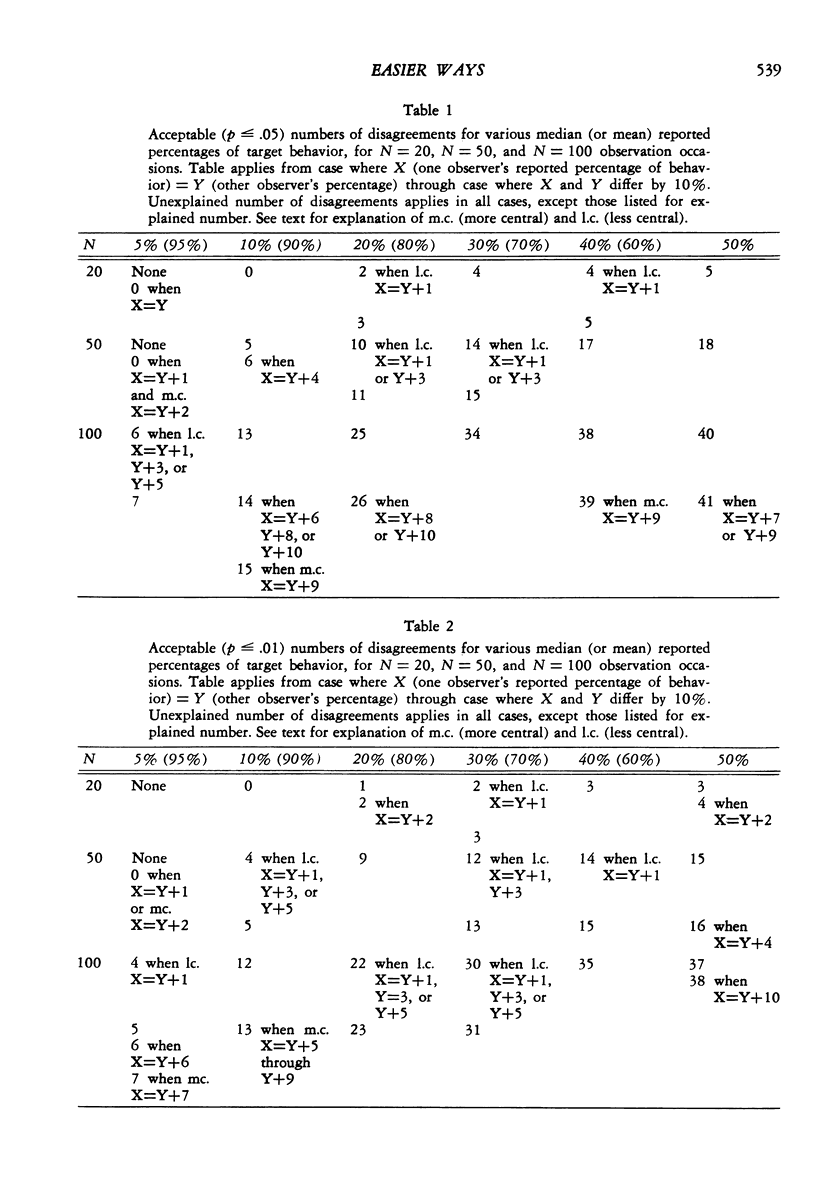

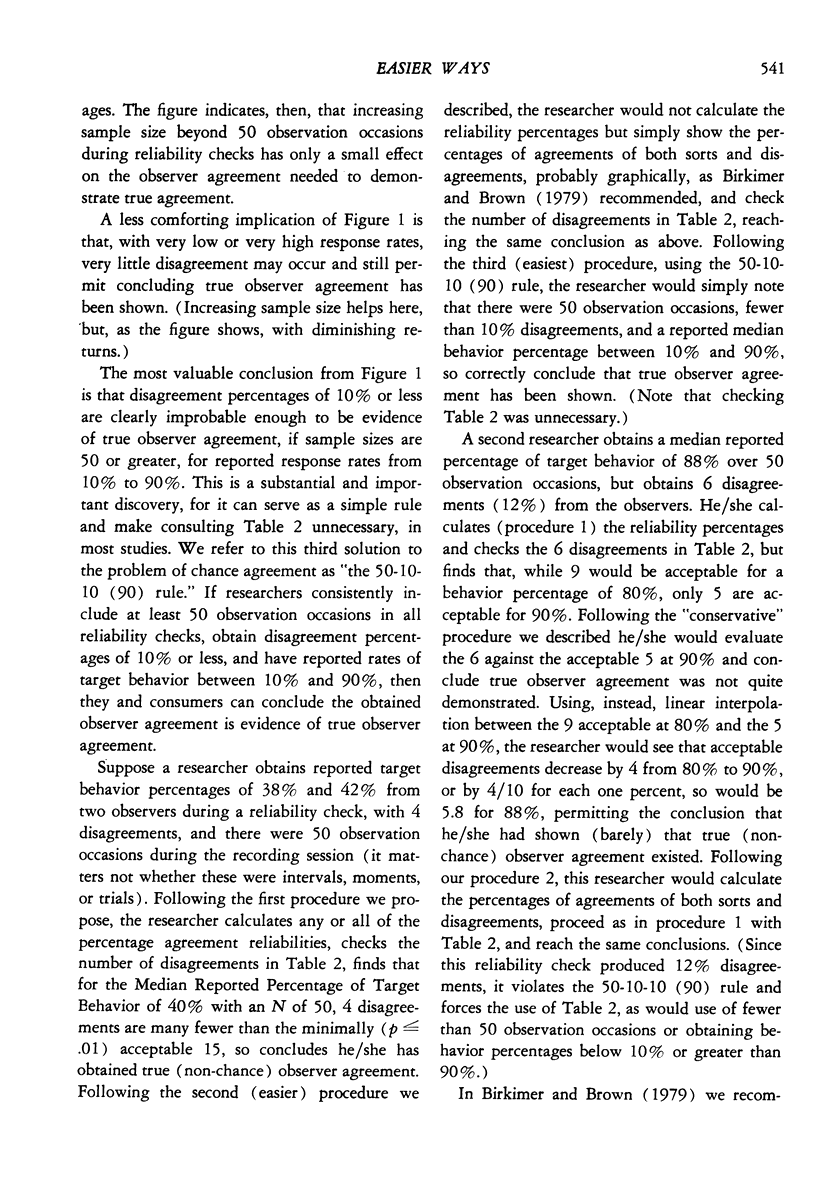

Percentage agreement measures of interobserver agreement or “reliability” have traditionally been used to summarize observer agreement in studies using interval recording, time-sampling, and trial-scoring data collection procedures. Recent articles disagree on whether to continue using these measures, which ones to use, and what to do about chance agreements if their use continues. Much of the disagreement derives from the need to be reasonably certain that we do not accept as evidence of true interobserver agreement those agreement levels that are substantially probable as a result of chance observer agreement. The various percentage agreement measures are shown to be adequate to this task, but easier ways are discussed. Tables are given for checking whether obtained disagreements are unlikely to be due to chance. Particularly important is the discovery of a simple rule that, when met, makes the tables unnecessary: if reliability checks using 50 or more observation occasions produce 10% or fewer disagreements, for behavior rates from 10% through 90%, the agreement achieved is quite unlikely to be the result of chance agreement.
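The chance-agreement question in the abstract can be made concrete with a small calculation. The sketch below is illustrative only and is not the procedure used to build the article's tables: it assumes two observers who score each occasion independently of one another, each recording the behavior with the same probability (the behavior rate). Under that assumption the number of chance disagreements is binomial, and the function returns the probability of seeing a given number of disagreements or fewer by chance alone. The function names are hypothetical.

```python
from math import comb


def chance_disagreement_rate(rate):
    """Per-occasion probability that two independent observers disagree.

    Assumption (not from the article): each observer scores an occurrence
    with probability `rate`, independently of the other observer.
    """
    return 2.0 * rate * (1.0 - rate)


def prob_at_most_disagreements(occasions, max_disagreements, rate):
    """Probability of `max_disagreements` or fewer disagreements by chance.

    Uses the binomial distribution with the per-occasion disagreement
    probability from chance_disagreement_rate().
    """
    q = chance_disagreement_rate(rate)
    return sum(
        comb(occasions, i) * q**i * (1.0 - q) ** (occasions - i)
        for i in range(max_disagreements + 1)
    )


if __name__ == "__main__":
    occasions = 50          # observation occasions in the reliability check
    max_disagreements = 5   # 10% of 50 occasions
    for rate in (0.1, 0.3, 0.5, 0.7, 0.9):
        p = prob_at_most_disagreements(occasions, max_disagreements, rate)
        print(f"behavior rate {rate:.0%}: P(<= {max_disagreements} disagreements by chance) = {p:.3g}")
```

Because the result depends on the chance model chosen, values from this sketch need not match the article's tables; it is meant only to show the kind of check the abstract describes.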

Keywords: chance agreement, chance reliability, interobserver agreement, observational data, observational technology, percentage agreement, reliability
