Abstract

Nonconscious recognition of facial expressions opens an intriguing possibility that two emotions can be present together in one brain with unconsciously and consciously perceived inputs interacting. We investigated this interaction in three experiments by using a hemianope patient with residual nonconscious vision. During simultaneous presentation of facial expressions to the intact and the blind field, we measured interactions between consciously and nonconsciously recognized images. Fear-specific congruence effects were expressed as enhanced neuronal activity in fusiform gyrus, amygdala, and pulvinar. Nonconscious facial expressions also influenced processing of consciously recognized emotional voices. Emotional congruency between a visual and an auditory input enhanced activity in amygdala and superior colliculus for blind, relative to intact, field presentation of faces. Our findings indicate that recognition of fear is mandatory and independent of awareness. Most importantly, unconscious fear recognition remains robust even in the light of a concurrent incongruent happy facial expression or an emotional voice of which the observer is aware.

Keywords: amygdala, blindsight, affective blindsight, consciousness, nonconscious processes

Facial expressions, most notably expressions of fear, can be processed in the absence of awareness (1-3). Studies of experimentally induced nonconscious vision for facial expressions in neurologically intact viewers provide evidence for differences in brain activity for facial expressions of fear perceived with or without awareness (4). Differences between aware and unaware stimulus processing are also reflected in lateralization of amygdala activation (left amygdala for seen, right for masked presentation) (1). The existence of two processing pathways for fear stimuli is suggested most dramatically by the brain's response to facial expression of fear that a hemianope patient is unable to see consequent upon striate cortex damage (5-7). The fact that conscious and nonconscious emotional cognition involves partially different brain networks raises the possibility that the same brain at the same time may be engaged in two modes of emotional processing, conscious and nonconscious.

To understand how processing with and without awareness is expressed at the level of brain function, we studied a patient with complete loss of visual awareness in half the visual field due to a unilateral striate cortex lesion, which produces blindness in the contralateral visual field (8). It is now established that under appropriate testing conditions some of these patients can accurately guess the location or the identity of a stimulus (9) and discriminate among different facial expressions (5, 7, 10). This residual visual function is referred to as blindsight (11) or also affective blindsight (5) when it concerns emotional signals like facial expressions.

Animal studies indicate that a phylogenetically ancient fear system, at least in rodents, functions independently of a more recently evolved geniculo-striate-based visual system (12). Neuroimaging evidence from humans has produced intriguing data suggesting that a subcortical visual pathway comprising the superior colliculus, posterior thalamus, and amygdala (7) may sustain affective blindsight. This route has been implicated in nonconscious perception of facial expressions in neurologically intact observers when a masking technique was used (13). Furthermore, recent explorations of this phenomenon have suggested that the representation of fear in faces is carried in the low spatial frequency component of face stimuli, a component likely to be mediated via a subcortical pathway (14, 15). It is perhaps no coincidence that the residual visual abilities of cortically blind subjects are confined to that range (16).

Affective blindsight creates unique conditions for investigating on-line interactions between consciously and unconsciously perceived emotional stimuli because two stimuli can be presented simultaneously with the patient seeing only one of them and thus remaining unaware of the conflict. Although all experiments reported here addressed the role of nonconscious processing of facial expressions upon conscious recognition, it should be noted that each of them is concerned with a different aspect of this question. Experiment 1 asks whether unseen facial expressions influence recognition of seen facial expressions. Its design is similar to studies of the classical redundant target effect (17). Recent studies have provided evidence that the effect of a redundant stimulus on a target cannot be explained by probability summation and instead suggest interhemispheric cooperation and summation across hemispheres (6, 18, 19).

In Experiment 1, we tested for this effect under circumstances where the subject was not aware of the presence of a second stimulus and was requested to categorize the emotion expressed by the seen face presented in the intact visual field (either a full face or a hemiface). In Experiment 3, we used a crossmodal variant of the redundant target effect by presenting the redundant emotional information in the auditory modality simultaneously with the visual stimulus. The situation is similar to that of Experiment 1 in the sense that the goal is also to measure the influence of an unseen facial expression on the target stimulus. Although in Experiment 1 the target stimulus is visual (a facial expression), in Experiment 3 the target stimulus is an emotional voice and, consequently, the influence of the unseen face is examined in the context of crossmodal influences. Nevertheless, Experiments 1 and 3 are similar insofar as in both cases the target stimulus (a face in Experiment 1 and a voice in Experiment 3) is presented simultaneously with a redundant unseen visual stimulus and is consciously perceived. Previous results in neurologically intact viewers provide evidence that simultaneously presented emotional voices influence how facial expressions are processed and, furthermore, indicate that the amygdala plays a critical role in binding visual and auditory-presented affective information. This binding is evident in increased activation to fearful faces accompanied by voices that express fear (20-22). A further question addressed in this study is whether affective blindsight is restricted to facial expressions or extends to affective pictures, and if so, whether unseen affective pictures influence conscious processing of emotional voices. Thus, Experiment 2 paves the way for Experiment 3, where we test for face specificity of this nonconscious effect by presenting affective pictures (and faces) as the redundant stimulus in combination with emotional voices (23).

Methods

Subject. Patient GY is a 45-year-old male who sustained damage to the posterior left hemisphere of his brain caused by head injury (a road accident) when he was 7 years old. The lesion (see ref. 24 for an extensive structural and functional description of the lesion) invades the left striate cortex (i.e., medial aspect of the left occipital lobe, slightly anterior to the spared occipital pole, extending dorsally to the cuneus and ventrally to the lingual but not the fusiform gyrus) and surrounding extra-striate cortex (inferior parietal lobule). The location of the lesion is functionally confirmed by perimetry field tests (see ref. 24 for a description of GY's perimetric field). He has macular sparing extending 3° into his right (blind) hemifield. Preliminary testing ensured that throughout all experiments the materials and presentation conditions did not give rise to awareness of the presence of a stimulus presented in the blind field. GY gave informed consent to the present study, which was approved by the local hospital ethics committee.

Data Acquisition. Neuroimaging data were acquired with a 2 T Magnetom VISION whole-body MRI system (Siemens Medical Systems, Erlangen, Germany) equipped with a head volume coil. Contiguous multislice T2* weighted echoplanar images (EPIs) were obtained by using a sequence that enhanced blood oxygenation level-dependent contrast. Volumes covering the whole brain (48 slices; slice thickness 2 mm) were obtained every 4.3 s. A T1 weighted anatomical MRI (1 × 1 × 1.5 mm) was also acquired. In each experiment, a total of 320 whole-brain EPIs were acquired during a single session, of which the first eight volumes were discarded to allow for T1 equilibration effects.

Data Analysis. The functional MRI (fMRI) data were analyzed by using statistical parametric mapping (25). After realignment of all of the functional (T2* weighted) volumes to the first volume in each session, the structural (T1 weighted) MRI was coregistered into the same space. The functional data were then smoothed by using a 6-mm (full width at half maximum) isotropic Gaussian kernel to allow for corrected statistical inference. The evoked responses for the different stimulus events were modeled by convolving a series of delta (or stick) functions with a hemodynamic response function. These functions were used as covariates in a general linear model, together with a constant term and a basis set of cosine functions with a cutoff period of 512 s to remove low-frequency drifts in the blood oxygenation level-dependent signal. Linear contrasts were applied to the parameter estimates for each event type to test for specific effects (e.g., congruent fear versus congruent happy). The resulting t statistic at every voxel constitutes a statistical parametric map (SPM). Reported p values are corrected for the search volume of regions of interest: e.g., 8 mm radius sphere for amygdala, 10 mm radius sphere for posterior thalamus, and 6 mm radius sphere for superior colliculus. The significance of activations outside regions of interest was corrected for multiple comparisons across the entire brain volume.
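As a rough illustration of the event-related model described above, the following Python sketch builds a single regressor by summing a hemodynamic response function shifted to each event onset, which is what convolving a train of delta functions with that response amounts to. The double-gamma shape, its parameters, and the onset times are assumptions made for illustration only; the text does not specify them, and this is not the statistical parametric mapping implementation itself.

```python
import math

TR = 4.3  # seconds per whole-brain volume, as stated in Data Acquisition


def hrf(t):
    """Double-gamma response at time t (seconds).

    The shape parameters (peak near 5 s, later undershoot) are illustrative
    assumptions, not values taken from the paper.
    """
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def build_regressor(onsets_s, n_scans, tr=TR):
    """Sample, at each scan time, the sum of responses evoked by all events.

    Convolving delta functions placed at the onsets with the response
    function equals summing copies of the response shifted to each onset.
    """
    regressor = []
    for scan in range(n_scans):
        t_scan = scan * tr
        regressor.append(sum(hrf(t_scan - onset) for onset in onsets_s))
    return regressor


# Hypothetical onsets for one event type, spaced 7 s apart.
onsets = [i * 7.0 for i in range(5)]
regressor = build_regressor(onsets, n_scans=12)
```

One such regressor per event type would then enter the general linear model as a covariate, alongside the constant term and the low-frequency cosine set.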

Experiment 1: The Influence of an Unseen Facial Expression on Conscious Recognition. Here, the goal was to measure the interaction between seen and consciously recognized stimuli and unseen stimuli as a function of congruence and condition with a design adapted from ref. 6.

Experimental Design. The material was based on static grayscale images of six male and six female actors expressing fear (F) and happiness (H), taken from a standard set of pictures of facial affect (26). For one session (Session 1) a bilateral face presentation condition was used: on each trial, two pictures of the same individual were shown simultaneously left and right of fixation. In the incongruent pairs, the two faces expressed two different emotions [fear left, happiness right (FH) or happiness left, fear right (HF)]. In the congruent pairs [happiness left, happiness right (HH) or fear left, fear right (FF)], they expressed the same emotion. Each face was 6.6° high and 4.4° wide, and its inner edge was 1.8° away from the fixation point. In Session 2, a chimeric face presentation condition was used. Two half faces, separated by a vertical slit of 2 cm (corresponding to a visual angle of 1.9°), were presented in a central location. Incongruent chimeric faces presented different expressions (FH or HF again) left and right of a vertical meridian centered on the fixation point. Congruent ones had the same expression on both sides (they were actually normal pictures of a face expressing fear or happiness). Exposure duration was 1 s with an interstimulus interval of 6 s. A two-alternative forced choice task was used requesting GY to indicate by right hand button press which expression he had perceived. Given that GY has no conscious representation of data presented in his right hemifield, these instructions amounted for him to a demand to report the expression in the left face (in the bilateral condition) or the left half face (in the chimeric condition). Within each experimental session (two full faces or one chimeric face), the four types of faces (FF, FH, HH, and HF) were presented in randomized order.
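The relation between physical size and visual angle used in the stimulus description can be checked with a short Python sketch. The viewing distance is not reported in the text; the value computed here is only the distance implied by a 2 cm slit subtending 1.9°, under the assumption of a flat display viewed head-on.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def implied_distance_cm(size_cm, angle_deg):
    """Viewing distance at which size_cm subtends angle_deg."""
    return size_cm / (2 * math.tan(math.radians(angle_deg) / 2))


# Distance implied by the 2 cm slit and its stated 1.9 degree angle.
distance = implied_distance_cm(2.0, 1.9)
```

The same two functions can be used to convert the other stimulus dimensions between centimeters and degrees once a viewing distance is fixed.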

Results

Behavioral Results. For bilateral face presentations, identification of fear presented in the critical left face was better when accompanied by a congruent fearful face in the (unseen) right hemifield (pairs FF: 18 correct responses of 22 recorded trials, i.e., 81.8%) than by an incongruent happy face (pairs FH: 12 of 29 or 41.4% correct responses). The difference is significant (χ2 = 6.57, P < 0.025). Identification of happiness was practically at chance level for both congruent pairs (HH: 12 of 27 or 44.4% correct) and incongruent ones (HF: 13 of 25 or 52.0% correct), with no significant difference (χ2 < 1). The same pattern of results was obtained with chimeric presentations. There was a clear congruency effect for fear identification (FF: 17 of 22 or 77.3% correct; FH: 12 of 29 or 41.4% correct; χ2 = 4.96, P < 0.05), but none whatsoever for happiness identification (HH: 12 of 27 or 44.4% correct; HF: 11 of 25 or 44.0% correct; χ2 < 1).
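The congruency comparisons above are tests on 2 × 2 tables of correct and incorrect responses. The following Python sketch shows how such a statistic can be computed from the reported counts. The text does not state whether a continuity correction was applied, so the function offers both variants as an assumption, and the values it produces need not equal the reported ones.

```python
import math


def pearson_chi_square(table, yates=False):
    """Chi-square statistic for a contingency table given as rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            diff = abs(observed - expected)
            if yates:
                # Optional continuity correction (an assumption, see lead-in).
                diff = max(diff - 0.5, 0.0)
            stat += diff ** 2 / expected
    return stat


def p_value_df1(stat):
    """Upper-tail probability of a chi-square variate with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))


# Rows: congruent, incongruent. Columns: correct, incorrect (bilateral session).
fear = [[18, 22 - 18], [12, 29 - 12]]    # FF vs. FH
happy = [[12, 27 - 12], [13, 25 - 13]]   # HH vs. HF

fear_stat = pearson_chi_square(fear)
happy_stat = pearson_chi_square(happy)
```

With either variant, the fear comparison exceeds the 0.05 critical value for one degree of freedom and the happiness comparison stays below 1, in line with the pattern reported above.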

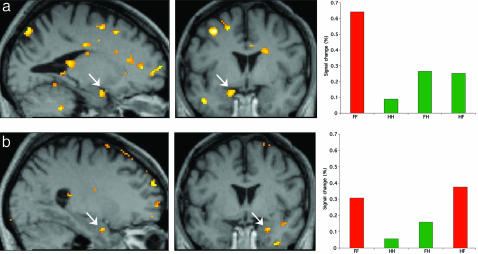

Brain Imaging Results. Conditions were entered into the analysis by using the following abbreviations: F, fear; H, happiness. Left position of the labels F or H indicates left field presentation; right position indicates right field presentation. For example, FH corresponds to the condition where a fear face is presented to the left and a happy face to the right visual field. In Session 1, a condition-specific effect for fear congruence (FF-FH)-(HH-HF) was expressed in left amygdala, pulvinar, and fusiform cortex. In Session 2 (hemifaces), a condition-specific fear congruency effect was evident in right amygdala, superior colliculus, and in left posterior fusiform gyrus (Fig. 1 and Table 1).
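The fear congruence effect (FF-FH)-(HH-HF) is an interaction contrast over the four condition-specific parameter estimates. A minimal Python sketch of the corresponding contrast weights follows; the parameter estimates passed in at the end are made-up numbers used only to show the arithmetic, not data from this study.

```python
CONDITIONS = ["FF", "FH", "HH", "HF"]

# (FF - FH) - (HH - HF) expands to FF - FH - HH + HF.
WEIGHTS = {"FF": 1, "FH": -1, "HH": -1, "HF": 1}


def contrast_value(betas):
    """Weighted sum of parameter estimates for the fear congruence contrast."""
    return sum(WEIGHTS[c] * betas[c] for c in CONDITIONS)


# Hypothetical parameter estimates at a single voxel (illustration only).
example_betas = {"FF": 2.0, "FH": 1.0, "HH": 1.0, "HF": 1.0}
effect = contrast_value(example_betas)
```

The weights sum to zero, so the contrast is insensitive to a response common to all four conditions and is positive only when the congruence advantage is larger for fear than for happiness.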

Fig. 1.

Experiment 1. SPMs for blindfield presentation of fear. (a) Fear and happy full faces: Fear congruency effect in left amygdala (arrows) is shown as SPM superimposed on GY's brain (Left and Middle) and condition-specific parameter estimates from a peak voxel in left amygdala for each of the conditions (Right). (b) Fear and happy half face: Fear congruence effect shown as SPM projected on GY's brain showing right amygdala (Left and Middle) and parameter estimates from a peak voxel in right amygdala for each of the conditions (Right). Coordinates are given in Table 1.

Table 1. Overview of xyz coordinates of activations obtained in the three experiments.

| Locus | Exp. 1: Full face | Exp. 1: Half face | Exp. 2, intact field: Face | Exp. 2, intact field: Scene | Exp. 2, blind field: Face | Exp. 2, blind field: Fearful/happy: face > scene† | Exp. 3, voice and fearful face (congruent–incongruent): Intact field | Exp. 3, voice and fearful face (congruent–incongruent): Blind field | Exp. 3, face/voice congruent–incongruent > scene/voice congruent–incongruent: Intact field | Exp. 3, face/voice congruent–incongruent > scene/voice congruent–incongruent: Blind field |
|---|---|---|---|---|---|---|---|---|---|---|
| Amyg L | –18, –6, –24* | –20, –2, –20* | –18, –2, –20* | –20, 4, –20* | –20, –2, –30* | | | | | |
| Amyg R | 26, –4, –12* | 18, –2, –20* | 26, –4, –12* | 28, 0, –12* | 28, –2, –20* | 22, –8, –20* | | | | |
| Sup Coll | –2, –38, –12* * | 0, –30, –8* | | | | | | | | |
| Pulvinar | 2, –24, 10* * u | | | | | | | | | |
| Fusif g. L | –28, –54, –12* * * u | | | | | | | | | |

F, fear; H, happiness; Amyg L, left amygdala; Amyg R, right amygdala; Sup Coll, superior colliculus; Fusif g. L, left fusiform gyrus. Abbreviations used for presentation conditions: left position of F or H indicates left field presentation; right position indicates right field presentation. For example, FH corresponds to the condition where a fear face is presented to the left and a happy face to the right visual field. *, P < 0.05; * *, P < 0.01; * * *, P < 0.001. u, uncorrected; otherwise all tests are corrected.

†Interaction effects of emotion (fearful/happy) and stimulus category (face/scene): fearful/happy face blind field > fearful/happy scene blind field.

Discussion

The behavioral results indicate that recognition is above chance for congruent conditions but drops significantly for presentation of an incongruent face or half face in the blind field. GY's performance for conscious identification is marginally below that obtained previously in behavioral testing (6), which we suggest partly reflects the fact that behavioral testing took place inside the scanner. The combined negative effects on performance of lateral, nonfoveal stimulus presentation, more demanding testing conditions, and bilateral presentation probably account for this lower accuracy.

The fMRI data show that interhemispheric congruence effects between seen and unseen facial expressions modulate brain activity in superior colliculus, amygdala, and fusiform cortex. For Session 1, our results show an increase in left amygdala activity when there is fear congruence between hemifields. Thus, the presence of a fearful face in the blind field enhances activation of left amygdala to the seen fearful face, indicating an influence of the nonconscious on the conscious recognition. This asymmetry we suggest is in tune with psychological reality: Our unconscious desires and anxieties, for example, influence our conscious thoughts and actions, but we cannot simply consciously “think away” or remove our unconscious fears. At the functional level, the asymmetry suggests that integration between perception and behavior may vary as a function of whether the organism is engaged in (automatic) reflexive or (controlled) reflective fear behavior.

We also observed fear congruence effects in fusiform cortex, an area closely associated with overt face processing. Previously we observed right fusiform gyrus activity associated with presentation in the intact left visual field but no fusiform activity for blindfield presentation (7). The present left anterior fusiform activity associated with bilateral fear congruence of full faces is consistent with the role of anterior fusiform gyrus in face memory (27). Unlike right fusiform activity, left fusiform activity in this patient cannot have its origin in striate cortex. Instead it may reflect a fear-related reentrant modulation from left amygdala mediated by ipsilaterality of amygdala-fusiform connections (28).

In Session 2 with hemifaces, the results show right amygdala and the superior colliculus activation specific to fear congruence. A previously undescribed contribution from the present study is that these two structures are sensitive to the congruence between a seen and an unseen fear expression. Superior colliculus-pulvinar-based residual abilities of hemianopic patients are within a limited range of spatial frequencies (16), which is also sufficient for recognition of facial expressions in normal viewers (14, 29).

Experiment 2: Conscious and Nonconscious Processing of Facial Expressions and Affective Pictures. The purpose here was to provide a direct comparison of nonconscious processing of facial expressions and affective pictures to investigate whether an apparent privileged processing seen for fear faces is also expressed for nonface stimuli such as affective pictures. Our previous investigation of this issue had yielded moderate positive results for recognition of unseen affective pictures but a negative result as far as the crossmodal influence of the visual images on processing emotion in the voice was concerned (23). Because of the difficulty in extrapolating conclusions based on one method (behavioral and electrophysiological) to another (fMRI), we wanted to assess independently whether affective pictures were processed by using fMRI. For the purpose of comparison, we used the same design as our previous fMRI study of affective blindsight for faces (7) and added a set of affective pictures.

Experimental Design. Visual materials consisted of a set of black and white photographs of facial expressions and a set of black and white affective pictures. The face set contained 12 images (six individuals, each of them once with a happy facial expression and once with a fearful one). Affective pictures were 12 black and white images selected from the International Affective Picture System (30). There were six negative pictures (snake, pit bull, two spiders, roaches, and shark; mean valence: 4.04) and six positive ones (porpoises, bunnies, lion, puppies, kitten, and baby seal; mean valence: 7.72). Image size was 6.6 × 4.4 inches, and images were presented singly in either the left (intact) or right (blind) visual hemifield by following a previously used procedure in separate blocks corresponding to face and scene pictures (23). Horizontal separation between the central fixation point and the inner edge of the images was 4.0°. Stimulus duration was 1 s, and interstimulus interval was 6 s. Stimulus onset was indicated by a circle appearing around the central fixation cross. GY was instructed to indicate by button press whether he found the image presented to his intact left visual field fearful or happy. For presentation to his blind field, a similar response was requested but GY was encouraged to answer by making a guess.

Results and Discussion

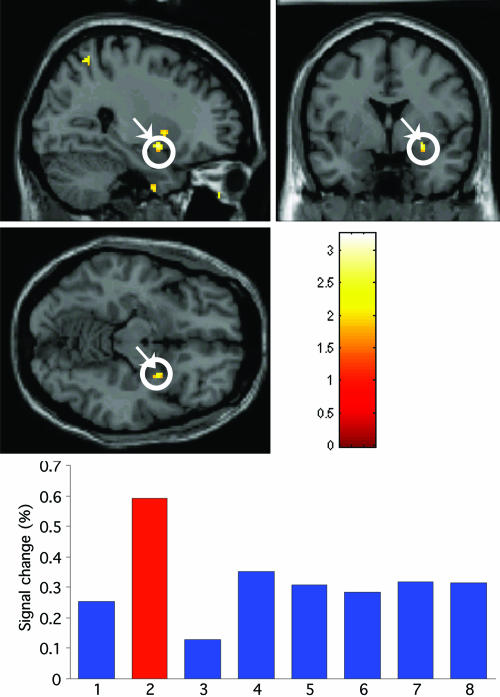

Presentation in the intact field resulted in bilateral amygdala activity for fearful faces, similar to a pattern observed in ref. 7. In contrast, the corresponding contrast for seen pictures shows activation restricted to the left amygdala (see Fig. 2). When faces and pictures were presented in the blind field, a fearful face evoked right amygdala activity (Fig. 2) as shown previously and as also observed in session 2 of Experiment 1 here. An ANOVA on the interaction of emotion (fearful/happy) and stimulus category (face/scene) showed a significant effect in right amygdala for fear faces in the blind field condition (Fig. 2). The selectivity of these effects for fearful, as opposed to happy faces, is consistent with ref. 7. Note that in our previous study, bilateral activity was observed for blindfield fear faces, but the laterality pattern for seen vs. unseen observed here is consistent with results obtained by using a masking technique in normal viewers (13).

Fig. 2.

Experiment 2. (Top and Middle) An SPM for the interaction of emotion (fearful/happy) and stimulus category (face/scene). The SPM is based upon the contrast of fearful/happy face blind field > fearful/happy scene blind field and shows a significant effect in the right amygdala. (Bottom) The parameter estimates for the response in the peak voxel in right amygdala for each of the conditions, namely, for lanes: 1, fearful face intact field; 2, fearful face blind field; 3, happy face intact field; 4, happy face blind field; 5, fearful scene intact field; 6, fearful scene blind field; 7, happy scene intact field; 8, happy scene blind field. Coordinates are given in Table 1.

Our data suggest that a privileged processing for fear, perhaps by a nonclassical cortical route, is restricted to faces. However, we would caution that this conclusion might be premature. Research on affective pictures has indicated amygdala response is weaker for nonface stimuli (31). Autonomic reactivity, measured by skin conductance responses, is also greater to facial expressions (32). Faces are more primitive biological stimuli, and as such, they may require very little cognitive mediation, whereas appraisal of the emotional content of pictures requires deeper cognitive and semantic processing (33) and may depend on interaction with intact V1 in the later stages of scene processing (23).

There is, as yet, no clear consensus concerning laterality effects in amygdala activation as a function of stimulus type. Activity related to pictures and objects has been observed in the left amygdala (34), bilaterally (32), and in right amygdala (35, 36). One possibility is that left amygdala is more specialized for processing arousal associated with affective pictures (37).

Experiment 3: The Influence of Unseen Facial Expressions and Affective Pictures on Conscious Recognition of Emotion in the Voice. The perception and integration of emotional cues from both the face and the voice is a powerful adaptive mechanism. A previous report (20) showed that emotional voices influence how facial expressions are perceived and that fear-related emotional congruence between face and voice is reflected in increased amygdala activity. We have reason to believe that facial and vocal cues converge rapidly based on studies where we recorded electrical brain responses (event-related potentials) to presentation of face/voice pairs to the intact and the blind visual field while subjects attended to the auditory part of the audiovisual stimulus pair (23). Presentation of an audiovisual stimulus with emotional incongruence between the visual and the auditory component generated an increase in amplitude compared to the congruent components. This influence of an unseen stimulus was obtained only for faces and not for affective pictures, indicating that conscious recognition of the affective pictures may be critical for this effect. In line with this approach, we predicted that faces would influence processing of the emotional voices but that affective pictures would not.

Experimental Design. In contrast with Experiment 2, in this experiment all trials consisted of combinations of a visual stimulus (either a face or an affective picture) paired with an emotional voice fragment. Materials and stimuli were the same as described in ref. 23. Briefly, the visual materials consisted of 12 facial expressions and 12 affective pictures. Auditory materials consisted of a total of 12 bisyllabic neutral words spoken in either a happy or a fearful tone of voice lasting for ≈400 ms (three male and three female actors each with emotional tones of voice expressing either fear or happiness). Audiovisual pairs were constructed by combining the stimuli of the two visual conditions (12 faces or 12 affective pictures) with the two types of auditory stimuli (happy or fearful words), amounting to 48 trials. Thus, a trial always consisted of a visual and an auditory component presented simultaneously. In session 1, all images (faces or scenes) were fearful and they were presented simultaneously with a voice fragment, which had either a fearful (congruent condition) or happy tone of voice (incongruent condition). In session 2, all images (faces or scenes) were happy while the voice again had either a fearful (incongruent) or happy (congruent) tone. A trial lasted for 1 s and the interstimulus interval was 6 s. Auditory stimuli were delivered over MRI-compatible headphones. GY's explicit task was to indicate whether the voice belonged to a male or to a female speaker, making responses by button presses with his right hand.
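The pairing scheme described above can be sketched in Python as a crossing of the 24 visual items with the two voice emotions. The item labels and index numbers are hypothetical placeholders, and the even split of each visual set into six fearful and six happy items is an assumption carried over from the description of the materials in Experiment 2.

```python
from itertools import product

EMOTIONS = ("fear", "happy")

# 12 faces and 12 affective pictures, assumed to be six per emotion.
faces = [("face", emotion, i) for emotion in EMOTIONS for i in range(6)]
pictures = [("picture", emotion, i) for emotion in EMOTIONS for i in range(6)]

# Cross every visual item with both tones of voice.
trials = [
    {
        "category": category,
        "visual_emotion": visual_emotion,
        "item": item,
        "voice_emotion": voice_emotion,
        "congruent": visual_emotion == voice_emotion,
    }
    for (category, visual_emotion, item), voice_emotion
    in product(faces + pictures, EMOTIONS)
]

# Session 1 used only fearful images; session 2 used only happy images.
session_1 = [t for t in trials if t["visual_emotion"] == "fear"]
session_2 = [t for t in trials if t["visual_emotion"] == "happy"]
```

Under these assumptions the crossing yields 48 audiovisual pairs, half of them congruent, split evenly across the two sessions.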

Results and Discussion

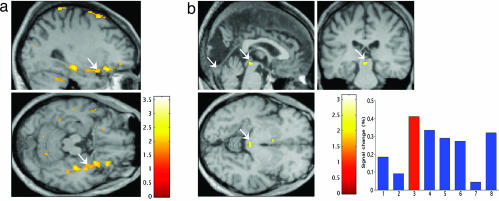

As in the previous study of normal viewers, we chose a behavioral task requiring gender decisions, which turned attention away from the emotional task variable, and GY was near ceiling for this task. As expected, the fMRI analysis shows that fear face/voice congruence in the intact field (see Fig. 3a) is reflected in left amygdala activation, consistent with our previous results reported for neurologically intact observers (20). The critical finding is that the fear face/voice congruence effects in the blind field are reflected in enhanced activity in right amygdala. This previously uncharacterized result extends the significance of right amygdala activation for blind fear to include the influence of auditory information and, thus, audiovisual multisensory fear perception. Finally, the interaction between emotion and stimulus category in the blind field shows that fear congruency (Fig. 3b) is also reflected in enhanced superior collicular activity, pointing to a role for superior colliculus in the combined voice-unseen face processing of fear stimuli.

Fig. 3.

Experiment 3. (a) Fear congruence effects in the blind field showing right amygdala activation. (b) Interaction effects: (Voice-congruent fear face -voice-incongruent fear face blind field) > (Voice-congruent fear scene -voice-incongruent fear scene blind field) showing superior colliculus for lanes: 1, fearful face intact field with voice congruent; 2, fearful face intact with voice incongruent; 3, fearful face blind with voice congruent; 4, fearful face blind field with voice incongruent; 5, fearful scene intact field with voice congruent; 6, fearful scene intact field with voice incongruent; 7, happy scene blind field with voice congruent; 8, happy scene blind field with voice incongruent. (b Lower Right) Condition-specific parameter estimates from peak voxel in superior colliculus. Coordinates are given in Table 1.

The present data also throw light on some previous findings. For example, in a previous study of audiovisual emotion, we observed left lateralized amygdala activity and increased right fusiform cortex activity (20). One possible explanation was that left amygdala may be related to the use of linguistic stimuli. Interestingly, the present finding of right amygdala activation indicates that this relation with language need not be the case and that typical left lateralized language areas need not necessarily intervene to facilitate perceiving emotion in the voice. Next, the absence of audiovisual emotion integration with affective scenes is consistent with the notion that pairings of this type may require higher cognitive processing, such that a more semantically demanding integration process depends on feedback from higher cognitive areas to earlier visual areas involving intact striate cortex (23). Finally, our results show that superior colliculus plays a role in integrating unseen fear faces with emotional prosody. This finding, together with a similar role of amygdala for audiovisual fear stimuli, indicates a subcortical contribution in which amygdala and superior colliculus both play a role. One may argue that perception of fear expressions in face and voice are naturally linked in the production of fear responses and both share the same abstract underlying representation of fear-specific affect programs. Thus, viewing a facial expression may automatically activate a representation of its auditory correlate and vice versa (38). Electrophysiological recordings of the time course of the cross-modal bias from the face to the voice indicate that integration takes place during auditory perception of emotion (39, 40). The present finding also adds to previous findings that face expressions can bias perception of an affective tone of voice even when the face is not attended to (41) or not perceived consciously (23).

General Discussion

Taken together, the three experiments show that amygdala activation, after presentation of facial expressions of fear presented to the cortically blind field, remains robust irrespective of whether conflicting emotional cues are provided by a consciously perceived facial expression or an emotional voice.

Automatic Fear Face Processing in the Amygdala. Our three experiments each provide converging evidence of robust nonconscious recognition of fearful faces and associated automatic activation of amygdala. Our testing procedure provides a measure of automatic bias from the unattended stimulus on task performance and task-related brain activation. The biasing stimulus was not only unattended as in previous studies (4, 42) but was not consciously perceived. In this sense, our paradigm combines attention-independent processing and processing without visual consciousness.

In normal subjects, increased condition-specific covariation of collicular-amygdala responses to masked CS+ (as opposed to unmasked) fear conditioned faces was observed without a significant change in mean collicular and amygdala responses (1). In contrast, in patient GY, we previously observed a higher superior colliculus and amygdala activity associated with unseen fear expressions (masked CS+) (7). There are methodological differences between the two studies, and an important one concerns central presentation in normals versus lateral presentation in GY. This difference by itself may account for the higher collicular activity in GY given the role of superior colliculus in spatial orientation.

A Special Status for Faces? Our results do not provide evidence for nonconscious processing of stimuli other than faces and, thus, suggest a special status for faces. But further research on this issue is needed before we can conclude that faces are indeed unique in conveying fear via a subcortical pathway. The special status of faces may relate to properties such as overall face configuration but also to the individual components. Fearful eyes appear to represent a powerful automatic threat signal that has been highly conserved throughout evolution, for example, in the owl butterfly's use of eye wing markings to ward off bird predators. Birds do not have a visual cortex (as is the case for GY in his blind field), but, again like GY in the blind field, they do have a homologue of the superior colliculus, the optic tectum. Fearful eyes, therefore, may be a simple cue to which the low-resolution visual abilities of superior colliculus are tuned (43, 44). Consistent with this view, the impaired facial fear recognition of a patient with amygdala damage has been linked to a compromised ability to make normal use of information from the eye region of faces when judging emotions, a defect that may be traced to a lack of spontaneous fixations on the eyes during free viewing of faces (45). Furthermore, recent findings suggest that the sclera in fearful faces is especially effective for recruiting amygdala activation (46) and may be the specific signal, rather than the face configuration, that makes some emotional faces very effective. Finally, facial expressions are often imitated spontaneously (47), and this automatic reaction may facilitate residual vision abilities, for example, by compensating for a lower recognition threshold. This mechanism may incorporate viscero-motor signals into decision-making processes.

Neurophysiology of Affective Blindsight. A different kind of challenge comes from understanding the neurophysiologic basis of affective blindsight. The possibility that the remarkable visual abilities of cortically blind patients reflect residual processing in islands of intact striate cortex now seems extremely unlikely based on findings from brain imaging (24, 48).

Facial expressions have been suggested to be too subtle and complex to survive processing in case of striate cortex damage (48). Many categories of information provided by the face are indeed subtle, such as age, gender, trustworthiness, attractiveness, and personal identity. But facial expressions can still be recognized when stimuli are much degraded. For example, the facial expression in images with spatial frequencies <8 cycles can still be recognized almost faultlessly (14, 15), and it is interesting to note that this filtering is within the range of spatial frequencies available to the majority of cortically blind patients (16).

The second claim about the subcortical route, based on work in rodents, concerns its comparative speed in contrast with the slower processes in the occipitotemporal cortex. The earliest evidence for discrimination between facial expressions was at 80-110 ms after stimulus onset and was located in midline occipital cortex (49-51). Recordings from amygdala in animals (50) and in humans suggest that the earliest activity is ≈220 ms (47). But interestingly, a patient study with single-unit recording reports discrimination between faces and pictures expressing fear or happiness in orbitofrontal cortex observed after only 120 ms (52).

One possibility is that in GY, after his occipital lesion at age 7, experience-dependent changes have taken place in superior colliculus (SC). This possibility would be consistent with reports from animal studies. The number of SC cells with enhanced responses to visual targets in monkeys increases after striate cortex lesions (53). This postlesion modification of superior colliculus connectivity may explain higher sensitivity and higher functionality of the subcortical pathway as suggested in refs. 54 and 55. Yet in the present experiments, higher sensitivity is condition-specific and does not obtain for happy facial expressions. Moreover, the laterality of SC activity observed in GY (coactivation of right SC and right amygdala) is similar to what is reported in normal subjects viewing masked fear expressions. This lateralization speaks to a functional specificity of the amygdala route. Thus, the argument from postlesion plasticity in SC needs to account for this selectivity. Interestingly, in research with diffusion tensor imaging, we found no volumetric difference between left and right superior colliculus in GY (B.d.G., D. Tuch, L. Weiskrantz, and N. Hadjikhani, unpublished data).

Acknowledgments

We thank GY for his collaboration and patience, W. A. C. van de Riet for assistance with the manuscript, and anonymous reviewers for constructive comments on a previous version. R.J.D. is supported by a Wellcome Trust Program Grant.

Author contributions: B.d.G. and R.J.D. designed research; B.d.G., J.S.M., and R.J.D. performed research; B.d.G., J.S.M., and R.J.D. analyzed data; and B.d.G. and R.J.D. wrote the paper.

Conflict of interest statement: No conflicts declared.

Abbreviations: fMRI, functional MRI; F, fear; H, happiness; SC, superior colliculus; SPM, statistical parametric map.

References

- 1. Morris, J. S., Ohman, A. & Dolan, R. J. (1998) Nature 393, 467-470.

- 2. Whalen, P. J., Rauch, S. L., Etcoff, N. L., McInerney, S. C., Lee, M. B. & Jenike, M. A. (1998) J. Neurosci. 18, 411-418.

- 3. Morris, J. S., Friston, K. J., Buchel, C., Frith, C. D., Young, A. W., Calder, A. J. & Dolan, R. J. (1998) Brain 121, 47-57.

- 4. Vuilleumier, P., Armony, J. L., Clarke, K., Husain, M., Driver, J. & Dolan, R. J. (2002) Neuropsychologia 40, 2156-2166.

- 5. de Gelder, B., Vroomen, J., Pourtois, G. & Weiskrantz, L. (1999) NeuroReport 10, 3759-3763.

- 6. de Gelder, B., Pourtois, G., van Raamsdonk, M., Vroomen, J. & Weiskrantz, L. (2001) NeuroReport 12, 385-391.

- 7. Morris, J. S., DeGelder, B., Weiskrantz, L. & Dolan, R. J. (2001) Brain 124, 1241-1252.

- 8. Holmes, G. (1918) Br. J. Ophthalmol. 2, 449-468.

- 9. Weiskrantz, L., Cowey, A. & Le Mare, C. (1998) Brain 121, 1065-1072.

- 10. Hamm, A. O., Weike, A. I., Schupp, H. T., Treig, T., Dressel, A. & Kessler, C. (2003) Brain 126, 267-275.

- 11. Weiskrantz, L. (1986) Blindsight: A Case Study and Implications (Clarendon, Oxford).

- 12. LeDoux, J. E. (1992) Curr. Opin. Neurobiol. 2, 191-197.

- 13. Morris, J. S., Ohman, A. & Dolan, R. J. (1999) Proc. Natl. Acad. Sci. USA 96, 1680-1685.

- 14. Vuilleumier, P., Armony, J. L., Driver, J. & Dolan, R. J. (2003) Nat. Neurosci. 6, 624-631.

- 15. Schyns, P. G. & Oliva, A. (1999) Cognition 69, 243-265.

- 16. Sahraie, A., Trevethan, C. T., Weiskrantz, L., Olson, J., MacLeod, M. J., Murray, A. D., Dijkhuizen, R. S., Counsell, C. & Coleman, R. (2003) Eur. J. Neurosci. 18, 1189-1196.

- 17. Miller, J. (1982) Cognit. Psychol. 14, 247-279.

- 18. Mohr, B., Landgrebe, A. & Schweinberger, S. R. (2002) Neuropsychologia 40, 1841-1848.

- 19. Marzi, C. A., Smania, N., Martini, M. C., Gambina, G., Tomelleri, G., Palamara, A., Alessandrini, F. & Prior, M. (1996) Neuropsychologia 34, 9-22.

- 20. Dolan, R. J., Morris, J. S. & de Gelder, B. (2001) Proc. Natl. Acad. Sci. USA 98, 10006-10010.

- 21. de Gelder, B. (2005) in Emotion and Consciousness, eds. Feldman Barrett, L., Niedenthal, P. M. & Winkielman, P. (Guilford, New York) pp. 123-149.

- 22. de Gelder, B. & Vroomen, J. (2000) Cognition and Emotion 14, 321-324.

- 23. de Gelder, B., Pourtois, G. & Weiskrantz, L. (2002) Proc. Natl. Acad. Sci. USA 99, 4121-4126.

- 24. Barbur, J. L., Watson, J. D., Frackowiak, R. S. & Zeki, S. (1993) Brain 116, 1293-1302.

- 25. Friston, K., Holmes, A., Worsley, K., Poline, J., Frith, C. & Frackowiak, R. (1995) Hum. Brain Mapp. 2, 189-210.

- 26. Ekman, P. & Friesen, W. V. (1976) Pictures of Facial Affect (Consulting Psychologists, Palo Alto, CA).

- 27. Winston, J. S., Vuilleumier, P. & Dolan, R. J. (2003) Curr. Biol. 13, 1824-1829.

- 28. Rotshtein, P., Malach, R., Hadar, U., Graif, M. & Hendler, T. (2001) Neuron 32, 747-757.

- 29. Bechara, A., Damasio, A. R., Damasio, H. & Anderson, S. W. (1994) Cognition 50, 7-15.

- 30. Vuilleumier, P., Richardson, M. P., Armony, J. L., Driver, J. & Dolan, R. J. (2004) Nat. Neurosci. 7, 1271-1278.

- 31. Lang, P. J., Bradley, M. M. & Cuthbert, B. N. (1999) International Affective Picture System (IAPS): Technical Manual and Affective Ratings (Natl. Inst. of Mental Health Center for the Study of Emotion and Attention, Univ. of Florida, Gainesville, FL).

- 32. Zald, D. H. (2003) Brain Res. Rev. 41, 88-123.

- 33. Hariri, A. R., Tessitore, A., Mattay, V. S., Fera, F. & Weinberger, D. R. (2002) NeuroImage 17, 317-323.

- 34. Phelps, E. A., O'Connor, K. J., Gatenby, J. C., Gore, J. C., Grillon, C. & Davis, M. (2001) Nat. Neurosci. 4, 437-441.

- 35. Dolan, R. J., Lane, R., Chua, P. & Fletcher, P. (2000) NeuroImage 11, 203-209.

- 36. LaBar, K. S., Gitelman, D. R., Mesulam, M. M. & Parrish, T. B. (2001) NeuroReport 12, 3461-3464.

- 37. Ochsner, K. N., Bunge, S. A., Gross, J. J. & Gabrieli, J. D. (2002) J. Cogn. Neurosci. 14, 1215-1229.

- 38. Glascher, J. & Adolphs, R. (2003) J. Neurosci. 23, 10274-10282.

- 39. de Gelder, B. & Bertelson, P. (2003) Trends Cogn. Sci. 7, 460-467.

- 40. de Gelder, B., Bocker, K. B., Tuomainen, J., Hensen, M. & Vroomen, J. (1999) Neurosci. Lett. 260, 133-136.

- 41. Pourtois, G., Debatisse, D., Despland, P. A. & de Gelder, B. (2002) Brain Res. Cogn. Brain Res. 14, 99-105.

- 42. Vroomen, J., Driver, J. & de Gelder, B. (2001) Cogn. Affect Behav. Neurosci. 1, 382-387.

- 43. Anderson, A. K., Christoff, K., Panitz, D., De Rosa, E. & Gabrieli, J. D. (2003) J. Neurosci. 23, 5627-5633.

- 44. Morris, J. S., deBonis, M. & Dolan, R. J. (2002) NeuroImage 17, 214-222.

- 45. Adolphs, R., Gosselin, F., Buchanan, T. W., Tranel, D., Schyns, P. & Damasio, A. R. (2005) Nature 433, 68-72.

- 46. Whalen, P. J., Kagan, J., Cook, R. G., Davis, F. C., Kim, H., Polis, S., McLaren, D. G., Somerville, L. H., McLean, A. A., Maxwell, J. S. & Johnstone, T. (2004) Science 306, 2061.

- 47. Dimberg, U., Thunberg, M. & Elmehed, K. (2000) Psychol. Sci. 11, 86-89.

- 48. Cowey, A. (2004) Q. J. Exp. Psychol. A 57, 577-609.

- 49. Streit, M., Ioannides, A. A., Liu, L., Wölwer, W., Dammers, J., Gross, J., Gaebel, W. & Müller-Gärtner, H. W. (1999) Brain Res. Cogn. Brain Res. 7, 481-491.

- 50. Pizzigalli, D., Regard, M. & Lehmann, D. (1999) NeuroReport 10, 2691-2698.

- 51. Halgren, E., Raij, T., Marinkovic, K., Jousmaki, V. & Hari, R. (2000) Cereb. Cortex 10, 69-81.

- 52. Kawasaki, H., Kaufman, O., Damasio, H., Damasio, A. R., Granner, M., Bakken, H., Hori, T., Howard, M. A., 3rd, & Adolphs, R. (2001) Nat. Neurosci. 4, 15-16.

- 53. Mohler, C. W. & Wurtz, R. H. (1977) J. Neurophysiol. 40, 74-94.

- 54. Pessoa, L. & Ungerleider, L. G. (2004) Prog. Brain Res. 144, 171-182.

- 55. de Gelder, B., de Haan, E. & Heywood, C., eds. (2001) Out of Mind: Varieties of Unconscious Processes (Oxford Univ. Press, Oxford), pp. 205-221.