Abstract

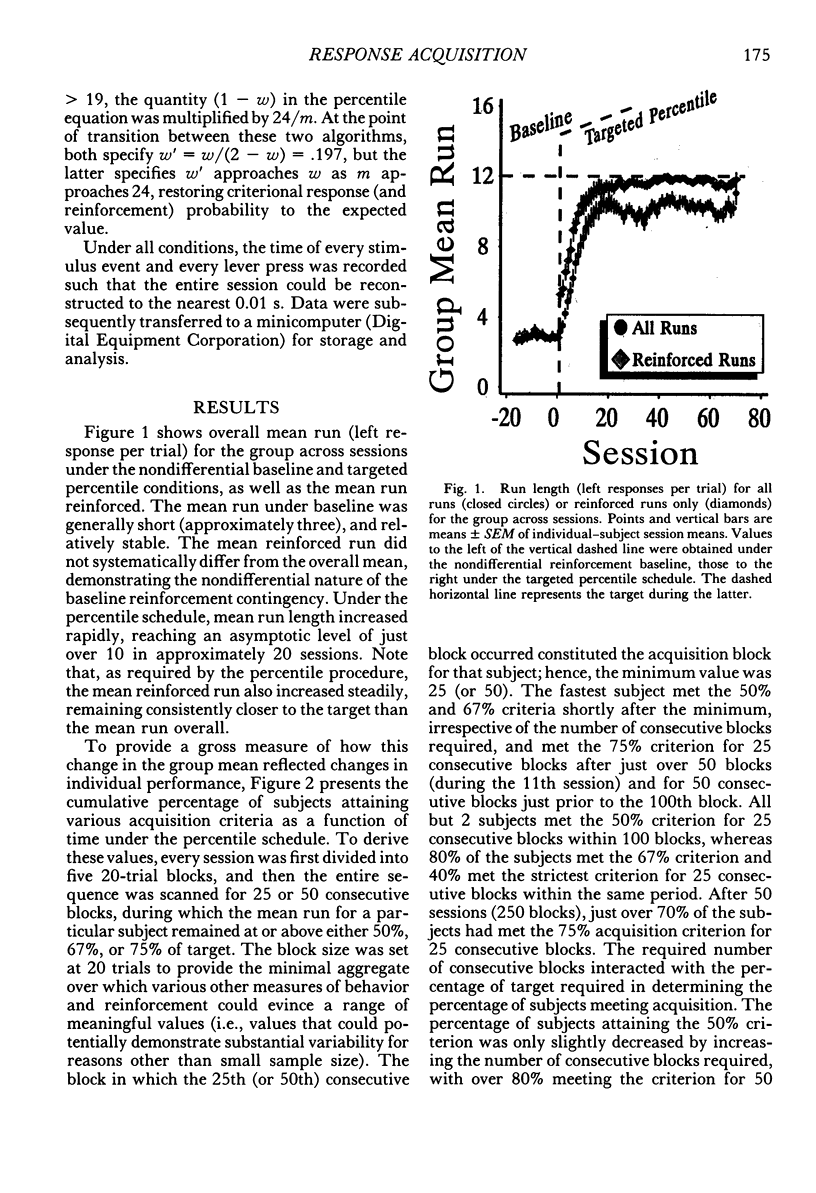

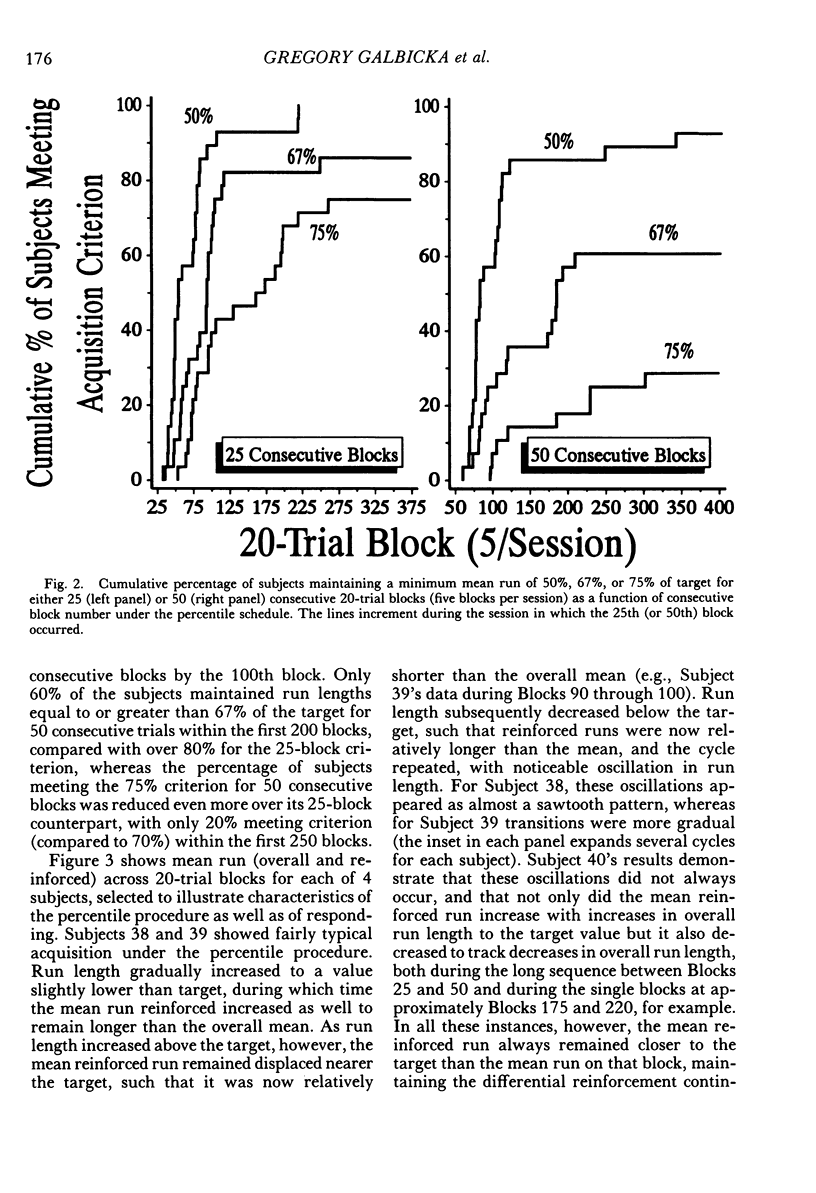

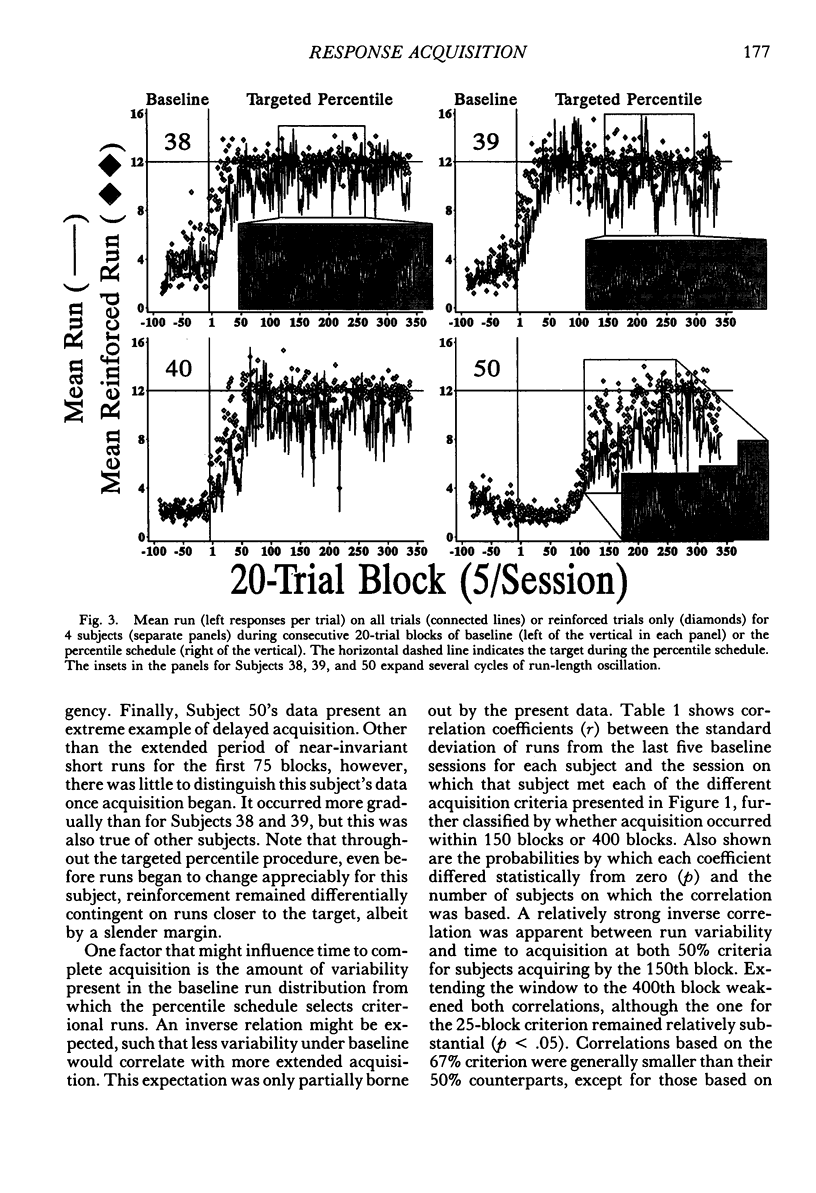

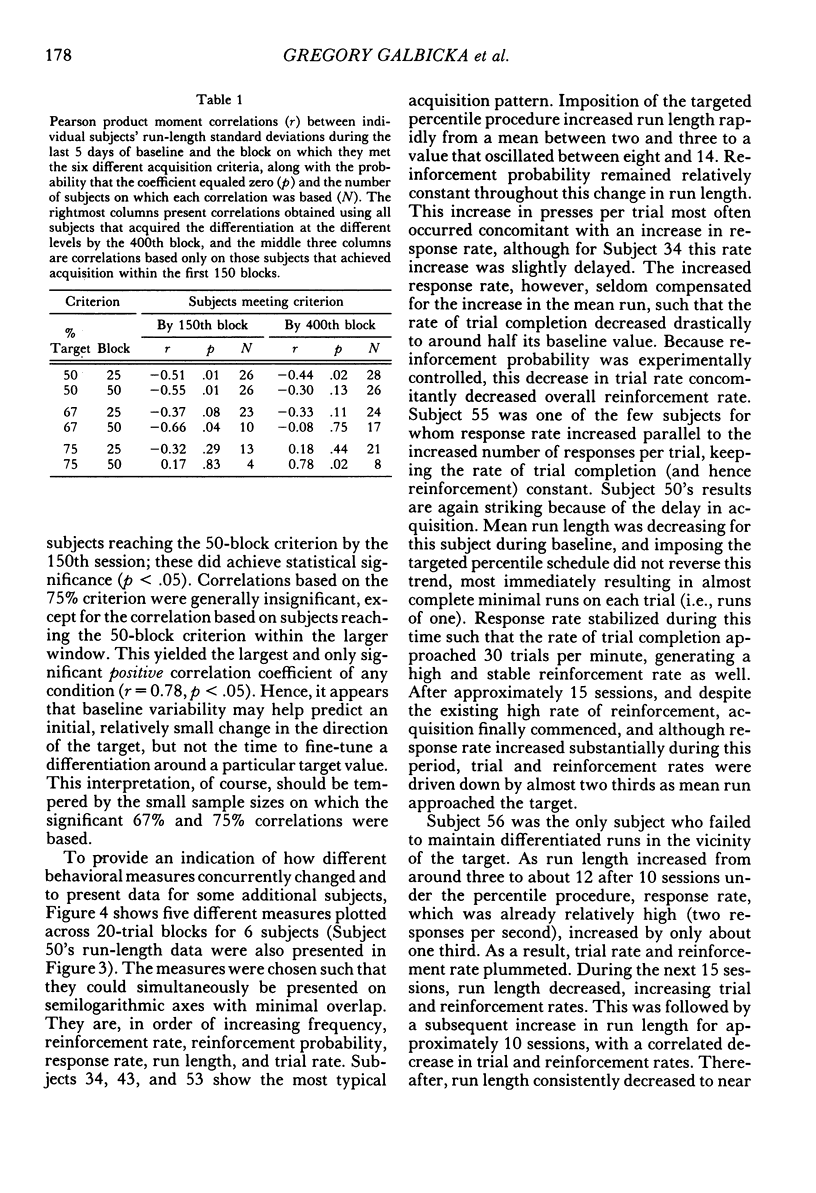

The number of responses rats made in a "run" of consecutive left-lever presses, prior to a trial-ending right-lever press, was differentiated using a targeted percentile procedure. Under the nondifferential baseline, reinforcement was provided with a probability of .33 at the end of a trial, irrespective of the run on that trial. Most of the 30 subjects made short runs under these conditions, with the mean for the group around three. A targeted percentile schedule was next used to differentiate run length around the target value of 12. The current run was reinforced if it was nearer the target than 67% of those runs in the last 24 trials that were on the same side of the target as the current run. Programming reinforcement in this way held overall reinforcement probability per trial constant at .33 while providing reinforcement differentially with respect to runs more closely approximating the target of 12. The mean run for the group under this procedure increased to approximately 10. Runs approaching the target length were acquired even though differentiated responding produced the same probability of reinforcement per trial, decreased the probability of reinforcement per response, did not increase overall reinforcement rate, and generally substantially reduced it (i.e., in only a few instances did response rate increase sufficiently to compensate for the increase in the number of responses per trial). Models of behavior predicated solely on molar reinforcement contingencies all predict that runs should remain short throughout this experiment, because such runs promote both the most frequent reinforcement and the greatest reinforcement per press. To the contrary, 29 of 30 subjects emitted runs in the vicinity of the target, driving down reinforcement rate while greatly increasing the number of presses per pellet. 
These results illustrate the powerful effects of local reinforcement contingencies in changing behavior, and in doing so underscore a need for more dynamic quantitative formulations of operant behavior to supplement or supplant the currently prevalent static ones.
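
The reinforcement rule described above can be illustrated with a minimal Python sketch. The target of 12, the 24-trial window, and the 67% criterion come from the abstract. The function names, the handling of ties, the treatment of a run that lands exactly on the target, and the behavior when no same-side runs are available are all assumptions made for illustration, not details of the authors' procedure. The second helper is rough arithmetic for presses per pellet, assuming one trial-ending right-lever press per trial.

```python
from collections import deque

TARGET = 12      # target run length, from the abstract
WINDOW = 24      # number of recent trials consulted, from the abstract
FRACTION = 0.67  # share of same-side runs the current run must beat

def meets_criterion(run, recent_runs, target=TARGET, fraction=FRACTION):
    """Return True if `run` is nearer the target than `fraction` of the
    recent runs lying on the same side of the target (hypothetical helper)."""
    if run == target:
        return True  # assumption: a run exactly on target always qualifies
    # Keep only recent runs on the same side of the target as the current run.
    same_side = [r for r in recent_runs
                 if r != target and (r < target) == (run < target)]
    if not same_side:
        return False  # assumption: no comparison set means no reinforcement
    # Count recent same-side runs that are strictly farther from the target.
    farther = sum(1 for r in same_side
                  if abs(r - target) > abs(run - target))
    return farther >= fraction * len(same_side)

def presses_per_pellet(mean_run, p_reinforce=0.33):
    """Rough expected presses per pellet: left-lever run plus one
    trial-ending right-lever press, divided by reinforcement probability."""
    return (mean_run + 1) / p_reinforce

# A bounded deque drops the oldest run once 24 trials are stored.
recent = deque([2, 3, 3, 4, 5, 6], maxlen=WINDOW)
print(meets_criterion(6, recent))  # longest recent run below the target
print(meets_criterion(3, recent))  # a typical short baseline run
print(presses_per_pellet(3), presses_per_pellet(10))
```

Because the criterion is relative to the subject's own recent runs, roughly a third of trials qualify no matter where the distribution sits, which is how per-trial reinforcement probability stays near .33. Under these assumptions, moving the mean run from 3 to 10 raises the cost from about 12 to about 33 presses per pellet, consistent with the abstract's point that longer runs lowered reinforcement per response.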
