Abstract

Background

Failure to recruit a sufficient number of eligible subjects in a timely manner represents a major impediment to the success of clinical trials. Physician participation is vital to trial recruitment but is often limited.

Methods

Following 12 months of traditional recruitment to a clinical trial, we activated our Electronic Health Record (EHR)-based Clinical Trial Alert (CTA) system in selected outpatient clinics of a large, US academic health system. When a patient’s EHR data met selected trial criteria during the subsequent 4-month intervention period, the CTA prompted physician consideration of the patient’s eligibility and facilitated secure messaging to the trial’s coordinator. Subjects were the 114 physicians practicing at the selected clinics throughout our study. We compared differences in the number of physicians participating in recruitment and their recruitment rates before and after CTA activation.

Results

The CTA intervention was associated with significant increases in the number of physicians generating referrals (5 before, 42 after, P<0.001) and enrollments (5 before, 11 after, P=0.03), as well as with a 10-fold increase in those physicians’ referral rate (5.7/month before, 59.5/month after; rate ratio = 10.44, 95% CI 7.98–13.68; P<0.001) and a doubling of their enrollment rate (2.9/month before, 6.0/month after; rate ratio = 2.06, 95% CI 1.22–3.46; P=0.007).

Conclusions

EHR-based CTA use was associated with significant increases in physicians’ participation in, and their recruitment rates to, an ongoing clinical trial. Given the trend toward EHR implementation in health centers engaged in clinical research, this approach may represent a much-needed solution to the common problem of inadequate trial recruitment.

INTRODUCTION

Clinical trials are essential to the advancement of medical science and are a priority for academic health centers and research funding agencies 1, 2. Their success depends on the recruitment of a sufficient number of eligible subjects in a timely manner. Unfortunately, difficulties achieving recruitment goals are common, and failure to meet such goals can impede the development and evaluation of new therapies and can increase costs to the healthcare system 3–5.

Physician participation is critical to successful trial recruitment 3, 6. In addition to helping identify potentially eligible subjects, recruitment by a treating physician increases the likelihood that a given patient will participate in a trial 7, 8. However, due in part to the demands of clinical practice, a limited number of physicians recruit patients for clinical trials and the vast majority of patients are never offered the opportunity to participate 7, 8. Even in fields like oncology where clinical trial enrollment for all eligible patients is considered the goal, as few as 2% of patients enroll in trials 9. In addition to impeding trial completion, limited recruitment can introduce bias to a trial and prevent some patients from receiving potentially beneficial therapy. Physicians cite limited awareness of the trial, time constraints, and difficulty following enrollment procedures among their reasons for not recruiting patients 10–12.

Numerous technological approaches have been developed in attempts to enhance clinical trial recruitment 13–20. Some have shown promise by using computerized clinical databases to automate the identification of potentially eligible patients 21, 22. Electronic Health Record (EHR)-based approaches have also been described, though mostly in specialized settings, and few have been subjected to controlled study or have demonstrated significant benefit 23–26. It remains to be determined whether the resources of a comprehensive EHR can be leveraged for the benefit of clinical trial recruitment as effectively as they have been for patient safety and healthcare quality 27.

We developed an EHR-based Clinical Trial Alert (CTA) system to overcome many of the known obstacles to trial recruitment by physicians while complying with current privacy regulations 28. The aim of this study was to determine if CTA use could enhance physicians' participation in the recruitment of subjects as well as increase physician-generated recruitment rates to an ongoing clinical trial.

METHODS

Setting

We evaluated our CTA intervention at The Cleveland Clinic, a large, US academic health system with a fully implemented, commercial ambulatory EHR (EpicCare, Epic Systems, Madison WI). This EHR served as the sole point of documentation and computerized provider order entry at nearly all of the institution’s ambulatory clinical practices, and its use at our selected clinical sites preceded this study by at least six months.

Design

We compared physicians’ trial recruitment activities during a 12-month phase of baseline recruitment with those during a 4-month intervention phase in which CTA use was added to baseline recruitment efforts. The pre-intervention phase began in February 2003, coincident with the onset of recruitment to the associated clinical trial. As part of the clinical trial’s recruitment strategy, physicians across the institution, including our study participants, were encouraged via traditional means (e.g., posted flyers, memo distribution, and discussion at departmental meetings) to recruit subjects for the clinical trial. Beginning in February 2004, the CTA was activated for 4 months.

To test our intervention, we sought a clinical trial with a planned recruitment period that spanned our study and for which potential subjects would likely be encountered at main-campus and community health center clinical sites. We identified an institutional review board (IRB)-approved, multi-center trial of patients with Type 2 Diabetes Mellitus that fit our criteria. We received approval from the IRB for our study and obtained the cooperation of the clinical trial’s coordinator and site Principal Investigator (PI).

Participants

We targeted for this intervention the 10 endocrinologists and 104 general internists who were on staff at the selected clinical sites during both phases of our study and who therefore had the opportunity to participate in recruitment activities throughout. Selected clinical sites included the study institution’s main-campus endocrinology and general internal medicine clinics as well as the general internal medicine clinics at the institution’s twelve EHR-equipped community health centers located throughout the city and its suburbs.

Intervention

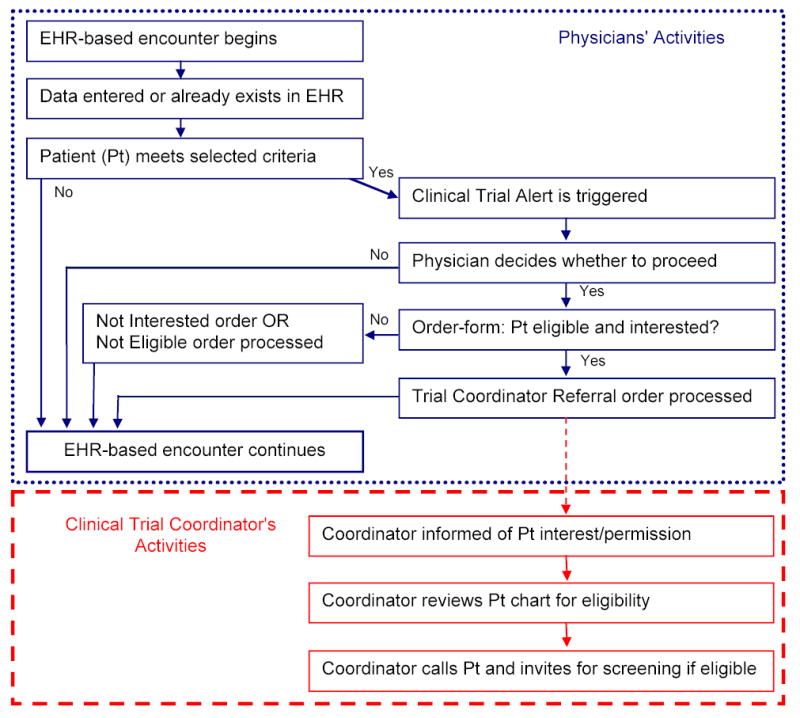

To develop the CTA, we combined features of the EHR’s clinical decision support system (CDSS) and its communications capabilities. A flow diagram of the CTA process is presented in Figure 1.

Figure 1.

Clinical Trial Alert Process: Flow Diagram of Physician and Coordinator Activities

Using the CDSS programming interface, the CTA was set to trigger during physician-patient encounters at the selected clinical sites when data in a patient’s EHR met selected trial criteria (i.e. age > 40, any ICD-9 diagnosis of Type 2 diabetes mellitus, Hemoglobin A1c > 7.4%). We chose these criteria following consultation with the trial’s PI and an analysis of which candidate criteria were present in the EHR. When triggered, the initial CTA screen alerted the physician about the ongoing trial and the current patient’s potential eligibility. If the physician opted to continue rather than dismiss the alert, a customized CTA order form appeared onscreen. This form prompted consideration of additional key eligibility criteria not consistently retrievable from the EHR (e.g. history of cardiovascular disease) and discussion of eligibility and level of interest with the current patient. The physician could then generate an order in the EHR by choosing one of three options on the CTA order form: 1) Patient meets criteria and is interested (referral order), 2) Patient does not meet study criteria at this time (not eligible order), or 3) Patient meets criteria but is not interested at this time (not interested order).
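The triggering rule described above can be sketched as a simple predicate. This is a minimal illustration in Python, not the EHR vendor's CDSS configuration used in the study. The `PatientRecord` fields and both function names are hypothetical, and the ICD-9 check (codes of the form 250.x0 or 250.x2) is an assumption, since the text does not list the exact diagnosis codes that were matched.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PatientRecord:
    """Hypothetical subset of EHR data consulted by the alert rule."""
    age_years: int
    icd9_codes: List[str] = field(default_factory=list)
    latest_hba1c_percent: Optional[float] = None  # None if never measured


def is_type2_diabetes_code(code: str) -> bool:
    # ICD-9-CM 250.x0 and 250.x2 denote type II (or unspecified type) diabetes.
    # Assumption: the paper does not state which codes the CTA actually used.
    return (
        len(code) == 6
        and code.startswith("250.")
        and code[4].isdigit()
        and code[5] in ("0", "2")
    )


def cta_should_trigger(patient: PatientRecord) -> bool:
    """Return True only when all three screening criteria from the text hold."""
    return (
        patient.age_years > 40
        and any(is_type2_diabetes_code(c) for c in patient.icd9_codes)
        and patient.latest_hba1c_percent is not None
        and patient.latest_hba1c_percent > 7.4
    )
```

Criteria that were not consistently retrievable from the EHR, such as a history of cardiovascular disease, are deliberately left out of the predicate; in the process described above they are considered by the physician on the CTA order form.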

Selection of a referral order automatically transmitted a secure message within the EHR to the trial coordinator. This message included a link to the patient’s electronic chart and confirmed the patient’s permission allowing chart review for eligibility-determination purposes. After chart review, the trial coordinator telephoned all referred patients and invited those potentially eligible for further screening. A referral order also appended general trial-related information to the patient instructions printed at the close of the clinic visit. These after-visit instructions informed patients that they should expect to be contacted by the trial’s coordinator within 2 weeks regarding their eligibility, and that they were not obligated to proceed should they change their minds. Presence of a referral order prevented a CTA from triggering at a future visit for that patient. Prior to activation in the operational EHR system, proper functioning of the CTA was confirmed in a test environment.
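The handling of the three order options, and the suppression of future alerts after a referral, might be modeled as follows. This is a sketch under stated assumptions: `CtaOrder`, `process_cta_order`, `alert_suppressed`, and the `notify_coordinator` callback are hypothetical names standing in for EHR functions the text does not describe in detail.

```python
from enum import Enum
from typing import Callable, Set


class CtaOrder(Enum):
    REFERRAL = "patient meets criteria and is interested"
    NOT_ELIGIBLE = "patient does not meet study criteria at this time"
    NOT_INTERESTED = "patient meets criteria but is not interested at this time"


def process_cta_order(
    patient_id: str,
    order: CtaOrder,
    referred_patients: Set[str],
    notify_coordinator: Callable[[str], None],
) -> None:
    """Apply one physician response from the CTA order form."""
    if order is CtaOrder.REFERRAL:
        # Stand-in for the secure in-EHR message to the trial coordinator.
        notify_coordinator(patient_id)
        # A referral order on file prevents the CTA from firing again.
        referred_patients.add(patient_id)
    # The text states only that referral orders suppress future alerts,
    # so the other two orders change nothing in this sketch.


def alert_suppressed(patient_id: str, referred_patients: Set[str]) -> bool:
    """True if a prior referral order exists for this patient."""
    return patient_id in referred_patients
```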

Data Collection

We monitored the number of physicians contributing to trial recruitment as well as physician-generated referral and enrollment rates during both phases of our study. The clinical trial’s coordinator documented the date and source of any referrals received and, if physician-generated, from which clinics the referrals originated. Enrollments were similarly tracked, but because of the lag between a referral and its resultant enrollment, we attributed an enrollment to the study phase when its associated referral occurred. We assessed the enrollment status of all referred patients two months after the conclusion of the 4-month intervention phase. CTA events and physicians’ responses to CTAs were gathered by querying the EHR’s clinical data warehouse using a previously validated method 29.
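The attribution rule for enrollments can be illustrated with a short sketch. The phase boundaries below assume that each phase began on the first day of the month, which is an assumption: the text gives months only. The function names are hypothetical.

```python
from datetime import date
from typing import Optional

# Assumed boundaries (day of month is not given in the text).
PRE_CTA_START = date(2003, 2, 1)
CTA_START = date(2004, 2, 1)
CTA_END = date(2004, 6, 1)  # exclusive; four months after activation


def phase_of_referral(referral_date: date) -> Optional[str]:
    """Classify a referral date as pre-CTA, post-CTA, or outside the study."""
    if PRE_CTA_START <= referral_date < CTA_START:
        return "pre-CTA"
    if CTA_START <= referral_date < CTA_END:
        return "post-CTA"
    return None


def phase_of_enrollment(referral_date: date, enrollment_date: date) -> Optional[str]:
    """Attribute an enrollment to the phase in which its referral occurred.

    The enrollment date is intentionally ignored, mirroring the rule in the text.
    """
    return phase_of_referral(referral_date)
```

Under this rule, a patient referred in January 2004 who enrolled in March 2004 would count toward the pre-CTA phase.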

Individual physician response data were kept confidential and were aggregated by clinical site for further analysis. Patients’ protected health information (PHI) was neither accessed nor removed from the EHR solely for the purposes of our study. Physicians and the clinical trial’s coordinator reviewed the PHI of patients only in the context of performing their patient-care or eligibility determination duties, respectively. Given practical considerations and the limited potential for harm, we were exempted from obtaining signed informed consent from our physician subjects. Instead, we sent a letter informing them of the CTA study and the plan to keep individual data confidential. Physicians could refuse to participate whenever presented with a CTA by opting not to proceed.

Statistical Analyses

Descriptive analyses were generated by practice type for the numbers of physicians participating in recruitment and for their monthly recruitment rates. To test the significance of differences between the numbers of physicians who participated in recruitment efforts during each study phase, we applied McNemar’s exact test for matched data. For referral and enrollment rates, we assumed a Poisson distribution. Differences between referral and enrollment rates before and after the intervention were tested using a likelihood ratio test based on the Poisson distribution with an offset for the unequal time periods. Confidence intervals were calculated from the resultant likelihood ratios. All statistical calculations were performed using SAS (version 8, SAS Institute, Inc., Cary, NC).
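One common way to write the rate comparison described above is as a Poisson regression with a log-time offset. The notation here ($Y_j$, $t_j$, $x_j$, $\beta_0$, $\beta_1$) is introduced for illustration and sketches the general approach; the exact SAS model specification is not given in the text.

$$
\log \mathrm{E}[Y_j] = \log t_j + \beta_0 + \beta_1 x_j
$$

Here $Y_j$ is the number of referrals (or enrollments) in phase $j$, $t_j$ is the phase length in months (12 before and 4 after CTA activation), and $x_j$ is 0 for the pre-CTA phase and 1 for the post-CTA phase. Under this model the rate ratio is

$$
\mathrm{RR} = e^{\beta_1} = \frac{Y_{\text{post}} / t_{\text{post}}}{Y_{\text{pre}} / t_{\text{pre}}}
$$

and the likelihood ratio test compares the fitted model with the reduced model in which $\beta_1 = 0$.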

RESULTS

Physician Participation

During the 4-month intervention phase, all 114 physicians received at least one CTA each, and 48 (42%) participated by processing at least one CTA order form. Of those 48 who participated, 42 (88%) referred at least one patient to the trial coordinator, and 11 (23%) generated at least one enrollment. The number of physicians referring patients after CTA activation increased more than eight-fold, from 5 before to 42 after (P < 0.001), and the number generating enrollments more than doubled, from 5 before to 11 after (P = 0.03) (Table 1). These increases included participation by 36 internists and one endocrinologist who had not contributed to recruitment before the intervention.

Table 1.

Number of physicians who referred and generated enrollments

| Physician Subjects | Physicians who referred patients, Pre CTA | Physicians who referred patients, Post CTA activation | Physicians who generated enrollments, Pre CTA | Physicians who generated enrollments, Post CTA activation |
|---|---|---|---|---|
| All (n=114) | 5 | 42 * | 5 | 11 † |
| Endocrinologists, Main Campus (n=10) | 5 | 6 | 5 | 4 |
| General Internists, Main Campus (n=43) | 0 | 15 | 0 | 3 |
| General Internists, Community Health Centers (n=61) | 0 | 21 | 0 | 4 |

\* Significant increase, p < 0.001

† Significant increase, p = 0.03
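The exact McNemar test depends only on the discordant pairs, that is, physicians whose participation status differed between phases. The sketch below is a generic implementation, not the authors' SAS code. The discordant counts used for referrals (0 physicians who referred only before, 37 who referred only after) are an inference from the reported figures: 42 physicians referred after activation, of whom 37 had not referred before, so all 5 earlier referrers must have continued. The discordant counts for enrollments are not reported, so that comparison is not reproduced here.

```python
from math import comb


def mcnemar_exact_p(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from discordant pair counts.

    b: subjects positive only in the first phase
    c: subjects positive only in the second phase
    Under the null hypothesis, min(b, c) follows Binomial(b + c, 0.5).
    """
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    lower_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)


# Inferred discordant counts for referring physicians (see lead-in).
p_referral = mcnemar_exact_p(b=0, c=37)
```

With these inferred counts the p-value is far below 0.001, which is consistent with the significance level reported in Table 1.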

Physician-generated Referral and Enrollment Rates

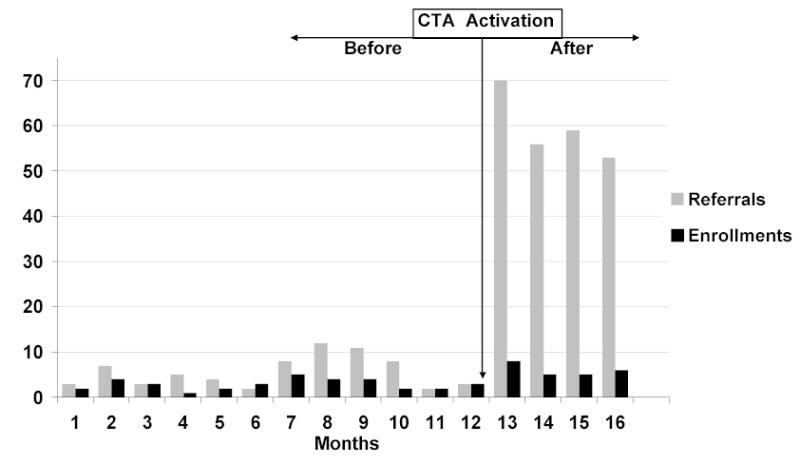

After CTA activation, the physician-generated referral rate increased more than ten-fold, from 5.7/month before to 59.5/month after (P < 0.001), and the enrollment rate more than doubled, from 2.9/month before to 6.0/month after (P = 0.007) (Table 2). Referral and enrollment rates fluctuated slightly from month-to-month before the intervention, but increased markedly after CTA activation (Figure 2). While general internists had not contributed to recruitment before, they generated 170 (71%) of the referrals and 7 (29%) of the enrollments after CTA activation. CTA use was also associated with a significant referral rate increase among endocrinologists, but their 47% enrollment rate increase did not reach statistical significance (Table 2).

Table 2.

Physician-generated referral and enrollment rates, overall and by practice setting

| Physician Groups | Before CTA, No. per Month (Total No.) | After CTA Activation, No. per Month (Total No.) | Rate Ratio (95% CI) | P value |
|---|---|---|---|---|
| Referrals | | | | |
| All physicians | 5.7 (68) | 59.5 (238) | 10.44 (7.97–13.68) | < 0.001 |
| Endocrinologists, main campus | 5.7 (68) | 17.0 (68) | 3.00 (2.14–4.18) | < 0.001 |
| General internists, main campus | 0 | 13.0 (52) | * | * |
| General internists, community health centers | 0 | 29.5 (118) | * | * |
| Enrollments | | | | |
| All physicians | 2.9 (35) | 6.0 (24) | 2.06 (1.22–3.46) | 0.007 |
| Endocrinologists, main campus | 2.9 (35) | 4.2 (17) | 1.47 (0.82–2.60) | 0.20 |
| General internists, main campus | 0 | 0.8 (3) | * | * |
| General internists, community health centers | 0 | 1.0 (4) | * | * |

Abbreviations: CTA, Clinical Trial Alert; CI, Confidence Interval

\* Not calculated owing to zero value in pre-CTA phase

Figure 2.

Monthly physician-generated referrals and enrollments before and after CTA activation
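The rate ratios in Table 2 can be approximated from the reported totals and phase lengths. The sketch below uses a Wald interval on the log scale, which is a substitution: the authors report likelihood-based intervals computed in SAS, so values from this approximation can differ slightly from those in the table. The function name is hypothetical.

```python
from math import exp, log, sqrt
from typing import Tuple


def rate_ratio_wald(
    events_post: int,
    months_post: float,
    events_pre: int,
    months_pre: float,
    z: float = 1.96,
) -> Tuple[float, float, float]:
    """Rate ratio (post vs pre) with an approximate 95% Wald interval.

    Assumes Poisson counts; the standard error of log(RR) is
    sqrt(1/events_post + 1/events_pre). Not valid when either count is zero.
    """
    rr = (events_post / months_post) / (events_pre / months_pre)
    se_log = sqrt(1 / events_post + 1 / events_pre)
    return rr, exp(log(rr) - z * se_log), exp(log(rr) + z * se_log)


# Enrollments, all physicians: 35 in 12 months before, 24 in 4 months after.
rr, lower, upper = rate_ratio_wald(24, 4, 35, 12)
print(f"rate ratio {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

For the overall enrollment totals this approximation gives a rate ratio of about 2.06 with an interval of roughly 1.22 to 3.46. The zero pre-CTA counts for general internists make the ratio undefined, which matches the table's footnote.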

CTA Events and Outcomes

During the 4-month intervention phase, there were 4780 CTAs triggered during clinical encounters with 3158 individual patients. Of all CTAs triggered, physicians dismissed 4295 (90%) of them without further action. Of the 485 CTAs acted upon, physicians referred 238 (49%) to the trial coordinator and indicated that the rest were either not eligible, 153 (32%), or eligible but not interested, 94 (19%). These proportions did not change significantly throughout the intervention phase.

Of the 238 referrals, the trial coordinator found that 121 (51%) remained potentially eligible after a thorough EHR chart review. Those patients were invited for further evaluation, and 60 (50%) presented for a screening visit. At final analysis, 24 (40%) of those screened had enrolled, while the rest were either ineligible or uninterested (30%) or were “on hold” awaiting further data to determine if they might eventually become eligible (30%).
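The counts reported in this subsection form a recruitment funnel, and the stage-to-stage percentages can be recomputed directly. The sketch below is plain arithmetic on the reported numbers; the stage labels and the `stage_conversion` helper are ours.

```python
from typing import List, Tuple

# Counts as reported in the text for the 4-month intervention phase.
funnel: List[Tuple[str, int]] = [
    ("CTAs triggered", 4780),
    ("CTAs acted upon", 485),
    ("Referrals", 238),
    ("Potentially eligible after chart review", 121),
    ("Screening visits", 60),
    ("Enrollments", 24),
]


def stage_conversion(stages: List[Tuple[str, int]]) -> List[Tuple[str, int, float]]:
    """Return (label, count, fraction of the previous stage) for each later stage."""
    return [
        (label, count, count / previous_count)
        for (_, previous_count), (label, count) in zip(stages, stages[1:])
    ]


for label, count, fraction in stage_conversion(funnel):
    print(f"{label}: {count} ({fraction:.0%} of previous stage)")
```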

COMMENT

We found that the use of an EHR-based Clinical Trial Alert (CTA) system was associated with significant increases in the number of physicians participating in trial recruitment as well as in physician-generated referral and enrollment rates to the associated clinical trial. Despite traditional efforts to promote recruitment institution-wide, a few endocrinologists practicing at the primary trial site did all of the recruiting before the intervention, a common occurrence 30, 31. While physicians at this site continued to generate most of the enrollments after CTA activation, the intervention increased their referral rate three-fold and their enrollment rate by nearly 50%, suggesting that it helped recruit subjects who were being missed even at that site. In addition, general internists’ participation increased dramatically after CTA activation. Their contributions during our intervention phase accounted for over two-thirds of referrals and nearly one-third of enrollments, and were essential to the statistically significant increase in the enrollment rate observed.

This enhanced recruitment among general internists following CTA activation is particularly notable given both the recognized value of recruiting patients from primary-care and community-based sites and the historic difficulty of doing so 32. Beyond enhancing recruitment rates, CTA use in such practice settings could help to limit referral bias, bring the benefits of clinical trials to a wider patient population, help community physicians enjoy the benefits of contributing to trial recruitment, and potentially help overcome some of the disparities currently present in clinical trials 5, 31, 33.

While this study was not designed to assess reasons for the CTA’s impact, some of our design choices were based on factors previously recognized to influence physician participation and may have contributed to its success 10, 11, 34, 35. Traditional recruitment requires that physicians remember a clinical trial is active, recall the trial's details in order to determine patient eligibility, and take time to perform other recruitment activities. The CTA likely helped our physicians overcome some of those obstacles to participation by alerting them about their patients’ potential eligibility for the trial at the point-of-care, by facilitating communication and documentation tasks required for patient referral, and by shifting much of the work of eligibility determination away from the physician.

In addition to physician factors, patient factors play an important role in successful trial recruitment 36. Previous studies have demonstrated that being recruited by one’s treating physician, feeling actively involved in the decision-making process, and taking time to consider whether to participate in a trial all significantly increase the likelihood that a patient will enroll 7, 37. In designing our CTA, we also attempted to capitalize on these recognized success factors by prompting treating physicians to recruit their patients, providing basic trial information to the patient in the after-visit instructions, and giving patients time to consider their decision to participate before being contacted by the trial coordinator.

Driven by calls from governmental and advisory organizations 38–40, there is a trend in academic health centers (AHCs) toward implementing comprehensive EHRs with functionality similar to that of the one studied 41. It may therefore be possible to replicate the CTA approach in such settings. Because research funding agencies have sometimes treated past clinical trial success or failure as a predictor of future performance when making funding decisions 3, the ability to enhance trial completion with CTA technology may have implications for AHCs. Should the CTA approach prove effective at augmenting current recruitment strategies in other such settings, AHCs may view its potential benefit to their research missions as yet another reason to implement EHRs.

While our use of the EHR’s clinical decision support system (CDSS) to build the CTA may contribute to the generalizability of these findings, CDSS technology was not designed for this purpose and presented some limitations. For instance, our EHR’s CDSS did not allow activation of the CTA at the individual physician level, thereby preventing exclusion of uninterested physicians from the intervention. It also limited both the kinds of EHR data we were able to use and the ways in which we could combine EHR data to trigger the CTA, thereby allowing the CTA to trigger for more ineligible patients than we would have liked. These technological limitations likely contributed to the high proportion of physician non-responders (58%) and to the high rate of dismissed CTAs (90%). Further, by allowing dismissal of CTAs without a response, the technology did not permit us to determine if such dismissals were due to physician lack of interest, knowledge of the patient’s ineligibility, or some other reason.

After CTA activation, we observed a substantially higher ratio of physicians’ referrals to enrollments than previously. While some of this likely related to the technical limitations noted above, this was somewhat expected given our design choices. By choosing rather sensitive CTA triggering criteria, we decided to tolerate a potentially high “false-positive” referral rate in order to minimize “false-negatives” or missed eligible patients. While this choice almost certainly contributed to some inefficiency, the observed statistically significant increase in the enrollment rate suggests that it was an effective approach. Indeed, prior research has demonstrated that when practice sites refer patients for trial consideration based on broad entry criteria and leave eligibility determination to the researcher, they achieve a higher recruitment success rate compared with practices that try to precisely determine eligibility prior to referral 42. Moreover, we observed that the clinical trial coordinator’s ability to review CTA-referred patients’ data via the EHR allowed for pre-screening and resulted in fewer in-person screenings than might have otherwise been necessary. Whether a more specifically targeted CTA would have been as effective while also being more efficient will be examined in future studies.
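Using the totals in Table 2, the change in the referral-to-enrollment ratio can be made explicit. This is simple arithmetic on the reported counts, added for illustration:

$$
\frac{\text{referrals}}{\text{enrollments}}:\qquad \frac{68}{35} \approx 1.9 \ \text{(before CTA)}, \qquad \frac{238}{24} \approx 9.9 \ \text{(after CTA activation)}
$$

In other words, roughly two referrals were needed per enrollment before the intervention and roughly ten afterward, about a five-fold increase.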

This study has several limitations, some of which are noted above. We know of no other changes to ongoing trial recruitment efforts that might have affected physician participation during the intervention phase, and recruitment trends were stable or even declining prior to our intervention (Figure 2). Nevertheless, because of our before-after study design, unrecognized differences between the study phases may have accounted for some of the improvements observed. Furthermore, because the CTA was tested on a single clinical trial, at a single institution, using a single EHR, the benefits observed may relate to features specific to the trial, the EHR, or the study setting. A Hawthorne effect is possible given that physicians were aware their CTA responses were being monitored. It is also possible that the increased recruitment rates observed during the 4-month intervention phase might wane with prolonged use.

Future plans include refinement of the CTA system based on the lessons learned from this study and ongoing evaluations of subjects’ perceptions. We intend to test the CTA’s impact on multiple and varied clinical trials and assess the generalizability of our findings through testing in other EHRs at different health centers.

In conclusion, CTA use during EHR-based outpatient practice was associated with significant increases in physician participation and in physician-generated recruitment rates to an ongoing clinical trial. Given the trend toward adoption of similarly capable EHRs in health centers engaged in clinical research, the CTA approach may be applicable to such settings and could represent a solution to the common problem of slow and insufficient trial recruitment by a limited number of physicians. Further studies are planned to optimize the CTA approach and assess its utility in other settings.

Acknowledgments

We thank the physicians who participated as subjects in this study. We also thank Byron Hoogwerf, MD; Gary S. Hoffman, MD, MS; Ronnie D. Horner, Ph.D.; Michael G. Ison, MD, MS; James E. Lockshaw, MBA; and Mark H. Eckman, MD, MS, for their contributions to the project and this report.

Footnotes

Previous Presentation: This study was presented at the 28th annual meeting of the Society of General Internal Medicine, New Orleans, LA, May 14, 2005.

Author Contributions: Dr Embi had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

Study concept and design: Embi, Jain, Harris.

Acquisition of data: Embi, Jain, Clark, Bizjack.

Analysis and interpretation of data: Embi, Jain, Harris, Hornung.

Drafting of the manuscript: Embi, Jain.

Critical revision of the manuscript for important intellectual content: Embi, Jain, Harris, Hornung, Clark, Bizjack.

Statistical expertise: Hornung.

Financial Disclosures: None.

Funding/Support: Dr. Embi’s participation in this study was supported in part by career development award K22-LM008534 from the National Library of Medicine, National Institutes of Health.

Role of Funding Agency: The funding agency had no role in any aspect of the design, execution, or publication of this study.

References

- 1. Nathan DG, Wilson JD. Clinical Research and the NIH -- A Report Card. N Engl J Med. Nov 6 2003;349(19):1860–1865. doi: 10.1056/NEJMsb035066.

- 2. Campbell EG, Weissman JS, Moy E, Blumenthal D. Status of clinical research in academic health centers: views from the research leadership. JAMA. Aug 15 2001;286(7):800–806. doi: 10.1001/jama.286.7.800.

- 3. Hunninghake DB, Darby CA, Probstfield JL. Recruitment experience in clinical trials: literature summary and annotated bibliography. Control Clin Trials. Dec 1987;8(4 Suppl):6S–30S. doi: 10.1016/0197-2456(87)90004-3.

- 4. Marks L, Power E. Using technology to address recruitment issues in the clinical trial process. Trends Biotechnol. Mar 2002;20(3):105–109. doi: 10.1016/s0167-7799(02)01881-4.

- 5. Sung NS, Crowley WF, Jr, Genel M, et al. Central challenges facing the national clinical research enterprise. JAMA. Mar 12 2003;289(10):1278–1287. doi: 10.1001/jama.289.10.1278.

- 6. Wright JR, Crooks D, Ellis PM, Mings D, Whelan TJ. Factors that influence the recruitment of patients to Phase III studies in oncology: the perspective of the clinical research associate. Cancer. Oct 1 2002;95(7):1584–1591. doi: 10.1002/cncr.10864.

- 7. Siminoff LA, Zhang A, Colabianchi N, Sturm CM, Shen Q. Factors that predict the referral of breast cancer patients onto clinical trials by their surgeons and medical oncologists. J Clin Oncol. Mar 2000;18(6):1203–1211. doi: 10.1200/JCO.2000.18.6.1203.

- 8. The many reasons why people do (and would) participate in clinical trials. Available at: http://www.harrisinteractive.com Accessed 31 January 2005.

- 9. Lara PN, Jr, Higdon R, Lim N, et al. Prospective evaluation of cancer clinical trial accrual patterns: identifying potential barriers to enrollment. J Clin Oncol. Mar 15 2001;19(6):1728–1733. doi: 10.1200/JCO.2001.19.6.1728.

- 10. Taylor KM, Margolese RG, Soskolne CL. Physicians' reasons for not entering eligible patients in a randomized clinical trial of surgery for breast cancer. N Engl J Med. May 24 1984;310(21):1363–1367. doi: 10.1056/NEJM198405243102106.

- 11. Mansour EG. Barriers to clinical trials. Part III: Knowledge and attitudes of health care providers. Cancer. Nov 1 1994;74(9 Suppl):2672–2675. doi: 10.1002/1097-0142(19941101)74:9+<2672::aid-cncr2820741815>3.0.co;2-x.

- 12. Fisher WB, Cohen SJ, Hammond MK, Turner S, Loehrer PJ. Clinical trials in cancer therapy: efforts to improve patient enrollment by community oncologists. Med Pediatr Oncol. 1991;19(3):165–168. doi: 10.1002/mpo.2950190304.

- 13. Breitfeld PP, Weisburd M, Overhage JM, Sledge G, Jr, Tierney WM. Pilot study of a point-of-use decision support tool for cancer clinical trials eligibility. J Am Med Inform Assoc. Nov–Dec 1999;6(6):466–477. doi: 10.1136/jamia.1999.0060466.

- 14. Seroussi B, Bouaud J. Using OncoDoc as a computer-based eligibility screening system to improve accrual onto breast cancer clinical trials. Artif Intell Med. Sep–Oct 2003;29(1–2):153–167. doi: 10.1016/s0933-3657(03)00040-x.

- 15. Ash N, Ogunyemi O, Zeng Q, Ohno-Machado L. Finding appropriate clinical trials: evaluating encoded eligibility criteria with incomplete data. Proc AMIA Symp. 2001:27–31.

- 16. Papaconstantinou C, Theocharous G, Mahadevan S. An expert system for assigning patients into clinical trials based on Bayesian networks. J Med Syst. Jun 1998;22(3):189–202. doi: 10.1023/a:1022667800953.

- 17. Thompson DS, Oberteuffer R, Dorman T. Sepsis alert and diagnostic system: integrating clinical systems to enhance study coordinator efficiency. Comput Inform Nurs. Jan–Feb 2003;21(1):22–26. doi: 10.1097/00024665-200301000-00009. quiz 27–28.

- 18. Ohno-Machado L, Wang SJ, Mar P, Boxwala AA. Decision support for clinical trial eligibility determination in breast cancer. Proc AMIA Symp. 1999:340–344.

- 19. Fink E, Kokku PK, Nikiforou S, Hall LO, Goldgof DB, Krischer JP. Selection of patients for clinical trials: an interactive web-based system. Artif Intell Med. Jul 2004;31(3):241–254. doi: 10.1016/j.artmed.2004.01.017.

- 20. Gennari JH, Sklar D, Silva J. Cross-tool communication: from protocol authoring to eligibility determination. Proc AMIA Symp. 2001:199–203.

- 21. Butte AJ, Weinstein DA, Kohane IS. Enrolling patients into clinical trials faster using RealTime Recuiting. Proc AMIA Symp. 2000:111–115.

- 22. Weiner DL, Butte AJ, Hibberd PL, Fleisher GR. Computerized recruiting for clinical trials in real time. Ann Emerg Med. Feb 2003;41(2):242–246. doi: 10.1067/mem.2003.52.

- 23. Afrin LB, Oates JC, Boyd CK, Daniels MS. Leveraging of open EMR architecture for clinical trial accrual. AMIA Annu Symp Proc. 2003:16–20.

- 24. Carlson RW, Tu SW, Lane NM, et al. Computer-based screening of patients with HIV/AIDS for clinical-trial eligibility. Online J Curr Clin Trials. Mar 28 1995;Doc No 179:[3347 words; 3332 paragraphs].

- 25. Moore TD, Hotz K, Christensen R, et al. Integration of clinical trial decision rules in an electronic medical record (EMR) enhances patient accrual and facilitates data management, quality control and analysis. Paper presented at: Proc Am Soc Clin Onc. 2003;22

- 26. Musen MA, Carlson RW, Fagan LM, Deresinski SC, Shortliffe EH. T-HELPER: automated support for community-based clinical research. Proc Annu Symp Comput Appl Med Care. 1992:719–723.

- 27. Hersh W. Health care information technology: progress and barriers. JAMA. Nov 10 2004;292(18):2273–2274. doi: 10.1001/jama.292.18.2273.

- 28. U.S. Health Insurance Portability and Accountability Act of 1996. Available at: http://www.cms.hhs.gov/hipaa/ Accessed 31 January 2005.

- 29. Jain A, Atreja A, Harris CM, Lehmann M, Burns J, Young J. Responding to the rofecoxib withdrawal crisis: a new model for notifying patients at risk and their health care providers. Ann Intern Med. Feb 1 2005;142(3):182–186. doi: 10.7326/0003-4819-142-3-200502010-00008.

- 30. Mannel RS, Walker JL, Gould N, et al. Impact of individual physicians on enrollment of patients into clinical trials. Am J Clin Oncol. Apr 2003;26(2):171–173. doi: 10.1097/00000421-200304000-00014.

- 31. Cohen GI. Clinical research by community oncologists. CA Cancer J Clin. Mar–Apr 2003;53(2):73–81. doi: 10.3322/canjclin.53.2.73.

- 32. Crosson K, Eisner E, Brown C, Ter Maat J. Primary care physicians' attitudes, knowledge, and practices related to cancer clinical trials. J Cancer Educ. Winter 2001;16(4):188–192. doi: 10.1080/08858190109528771.

- 33. King TE Jr. Racial disparities in clinical trials. N Engl J Med. May 2 2002;346(18):1400–1402. doi: 10.1056/NEJM200205023461812.

- 34. Winn RJ. Obstacles to the accrual of patients to clinical trials in the community setting. Semin Oncol. Aug 1994;21(4 Suppl 7):112–117.

- 35. Fallowfield L, Ratcliffe D, Souhami R. Clinicians' attitudes to clinical trials of cancer therapy. Eur J Cancer. Nov 1997;33(13):2221–2229. doi: 10.1016/s0959-8049(97)00253-0.

- 36. Cox K, McGarry J. Why patients don't take part in cancer clinical trials: an overview of the literature. Eur J Cancer Care (Engl). Jun 2003;12(2):114–122. doi: 10.1046/j.1365-2354.2003.00396.x.

- 37. Kinney AY, Richards C, Vernon SW, Vogel VG. The effect of physician recommendation on enrollment in the Breast Cancer Chemoprevention Trial. Prev Med. Sep–Oct 1998;27(5 Pt 1):713–719. doi: 10.1006/pmed.1998.0349.

- 38. Kohn LT, ed. Academic Health Centers: Leading Change in the 21st Century. Washington, D.C.: Institute of Medicine. The National Academies Press; 2003.

- 39. Kohn L, Corrigan JM, Donaldson M, eds. To Err is Human: Building a Safer Health System. Washington, D.C.: Institute of Medicine; 1999.

- 40. Brailer DJ. The Decade of Health Information Technology: Delivering Consumer-centric and Information-rich Health Care. Framework for Strategic Action. Available at: http://www.hhs.gov/healthit/frameworkchapters.html Accessed 31 January 2005.

- 41. Ash JS, Bates DW. Factors and forces affecting EHR system adoption: report of a 2004 ACMI discussion. J Am Med Inform Assoc. Jan–Feb 2005;12(1):8–12. doi: 10.1197/jamia.M1684.

- 42. Bell-Syer SE, Moffett JA. Recruiting patients to randomized trials in primary care: principles and case study. Fam Pract. Apr 2000;17(2):187–191. doi: 10.1093/fampra/17.2.187.