Abstract

In order to guide the movement of the body through space, the brain must constantly monitor the position and movement of the body in relation to nearby objects. The effective ‘piloting’ of the body to avoid or manipulate objects in pursuit of behavioural goals (Popper & Eccles, 1977, p. 129) requires an integrated neural representation of the body (the ‘body schema’) and of the space around the body (‘peripersonal space’). In the review that follows, we describe and evaluate recent results from neurophysiology, neuropsychology, and psychophysics in both human and non-human primates that support the existence of an integrated representation of visual, somatosensory, and auditory peripersonal space. Such a representation involves primarily visual, somatosensory, and proprioceptive modalities, operates in body part-centred reference frames, and demonstrates significant plasticity. Recent research shows that the use of tools, and the viewing of one’s body or body parts in mirrors and in video-monitors, may also modulate the visuotactile representation of peripersonal space.

Keywords: PERIPERSONAL SPACE, FRONTO-PARIETAL, VISUOTACTILE, TOOL-USE

Introduction

When we walk hurriedly, with our attention on other things, we might fail to notice certain objects such as a low, overhanging branch crossing our path. When these potentially harmful objects approach our body at speed, however, we find ourselves automatically and sometimes convulsively avoiding them. How are these complex and incredibly rapid sensory-motor sequences represented and actuated in our brains? Which brain areas contain neurons sensitive to the information available about such threatening (as well as other, non-threatening) situations, and what kind of representations of the physical world are made explicit in these areas? These questions can be restated as: What is the neural representation of peripersonal space?

Peripersonal space is defined as the space immediately surrounding our bodies (Rizzolatti, Fadiga, Fogassi, & Gallese, 1997). Objects within peripersonal space can be grasped and manipulated; objects located beyond this space (in what is often termed ‘extrapersonal space’) cannot normally be reached unless we move toward them or they move toward us (see Previc, 1998, for a review of different concepts of external space; and see below). It makes sense, then, that the brain should represent objects situated in peripersonal space differently from those in extrapersonal space. If an engineer were to design a robot that could move through the world, selecting and grasping objects as it went, the best use of its limited computational resources might well be to plan only grasping movements to those objects of interest within direct reach, and to plan only locomotive movements to those objects situated at a distance. Similarly, the avoidance of objects that are of potential harm must be a primary goal for all organisms fighting for their survival. Those objects that are of most immediate threat are those that are closest to, and moving most rapidly toward, the body (see Graziano, Taylor, & Moore, 2002, for intriguing electrical stimulation studies on defensive and avoidance movements).

How does the brain represent objects situated in different positions in the space surrounding the body? Objects at a distance can typically be perceived through a limited number of senses, namely vision, audition, and olfaction (although note that thermoreceptors in the skin are also sensitive to radiant heat emanating from hot objects situated at a distance). By contrast, objects nearer to, or in contact with, the body, can impact upon all our sensory systems (i.e., including gustation and all of the submodalities of touch – see Craig & Rollman, 1999, for a review). We might therefore assume that the brain’s processing of objects in peripersonal space is more thorough, more complex, and involves more modalities of sensory information than for objects located in extrapersonal space. Indeed, such a distinction may be justified given that, following right hemisphere brain damage, certain patients show behavioural deficits when perceiving objects or performing actions in peripersonal space, for example in bisecting a line on a piece of paper, but do not show such impairments when this bisection is carried out with a laser pointer in extrapersonal space (Halligan & Marshall, 1991).

This paper will describe and evaluate the results of recent research on the representation of peripersonal space in both animals and humans. First, the neural circuits underlying the multisensory representation of peripersonal space will be outlined, focusing on the growing body of single-neuron studies of visuotactile integration in macaque monkeys. Next, the plasticity of multisensory representations of peripersonal space will be examined; when humans and animals look at themselves in mirrors or in video-monitors, or perhaps more surprisingly still, while viewing artificial body parts, their brain’s representation of peripersonal space may be altered to encompass the new visually-perceived configuration of their bodies with respect to the external world. These situations induce conflicts between the different senses. In a mirror, in video monitors, and when viewing artificial body parts, we may see what appears to be our body in one position, while feeling it to be in another position (via proprioception, our bodies’ position-sense). What effect does this have on our brain’s representation of peripersonal space? In the final section of this article, some recent findings regarding the modulation of peripersonal space following active tool-use will be reviewed. The use of inanimate objects to extend the physical reach and capability of our bodies has important implications not only for craftsmen and athletes, but also for our understanding of the representation of peripersonal space in the brain. One central question that arises is, to what extent can inanimate objects, such as tennis racquets, cutlery, or even bicycles, be incorporated into what Head and Holmes (1911–1912) first termed the ‘Body Schema?’1 (see Bonnier, 1905, for an early history of this concept from 1893 onward).

Understanding the brain’s representation of the body and of peripersonal space also has important implications for neurological patients suffering from disturbances of the body schema, or from putatively spatial disorders of attention such as neglect or extinction. Such knowledge may also enable the design of virtual haptic reality technology (Held & Durlach, 1993), prostheses for amputees (Ramachandran & Rogers-Ramachandran, 1996; Ramachandran, Rogers-Ramachandran, & Cobb, 1995; Sathian, Greenspan, & Wolf, 2000), and navigational aids for the visually impaired (see Hoover, 1950). Finally, the convergence of findings from different areas of neuroscience, neurology, and psychology offers exciting opportunities for a more broadly based experimental, conceptual, and philosophical understanding of these fascinating problems and ideas concerning the brain’s representation of the body and of peripersonal space.

The neural circuitry of peripersonal space

The neural representation of peripersonal space is built up through a network of interacting cortical and subcortical brain areas. To represent the space around the body and individual body parts that can be reached with the hands, the brain must compute the position of the arms in space. Such a representation might be instantiated in a variety of different reference frames, for example, body-centred or eye-centred frames of reference2. A body-centred reference frame that represents the body surface topographically might exist in the primary somatosensory cortex and several other brain areas (e.g., secondary somatosensory cortex, the putamen, premotor cortex, and primary motor cortex). Incoming visual signals concerning the location of the body parts could be addressed to the relevant portion of such a somatotopic representation in order to convey the visual space around individual body parts. Alternatively, an eye-centred frame of reference could compute the location of body parts with respect to a retinotopic or eye-centred map in the visual cortices. The brain seems to use the reference frame most appropriate to the information being encoded: visual signals in retinotopic frames; eye-movements in eye-centred frames; auditory signals and head-movements in head-centred frames (Cohen & Andersen, 2002). For visuotactile peripersonal space and the representation and control of bodily position and movements, a reference frame centred on individual body parts might be the most useful. In fact, several brain areas have been found to encode a multisensory map of space in a body part-centred frame of reference including the putamen, area 7b, and the ventral intraparietal cortex (VIP; Graziano & Gross, 1995). In this review, we shall focus on two further somatotopic maps in the premotor cortex, and in area 5 and the intraparietal sulcus of the posterior parietal cortex.

Premotor cortex

Premotor cortex has become a brain region of great interest in the last decade, being the site in monkey brains (area F5) where ‘mirror neurons’ were first discovered (Rizzolatti, Fadiga, Gallese, & Fogassi, 1996). Mirror neurons are cells that respond to particular goal-directed actions both when the monkey executes those actions and when the same actions are performed by another individual, for instance an experimenter, and are only passively observed by the monkey. Just posterior to area F5 lies area F4, also part of the ventral premotor cortex (PMv – see Figure 1). This area borders the primary motor cortex, and contains somatotopic maps of the arms, hands and face. Many neurons in PMv respond both to somatosensory stimulation (e.g., stroking the skin or manipulating the joints of the hand or arm) and to visual stimulation. Significantly, the region of space within which visual stimulation is effective in exciting these neurons is modulated by the position of the arm in space (e.g., Fogassi, Gallese, Fadiga, Luppino, Matelli, & Rizzolatti, 1996; Graziano, Yap, & Gross, 1994). For example, some PMv cells with tactile receptive fields (RFs) on the right arm respond most vigorously to visual stimuli on the right side of space when the arm is held stretched out behind the monkey’s back. When the arm is moved into the centre of the visual field, with the hand just in front of the face, the same neurons now respond preferentially to visual stimuli in the centre of the visual field, on both left and right sides of the midline. When certain neurons with tactile RFs on the face were tested, visual RFs were found to move when the head was turned, but not when visual fixation changed (Graziano, Hu, & Gross, 1997a,b). These results provide strong evidence for a body part-centred reference frame, since the neuronal responses were tested both when the monkeys were fixating a particular position, and when fixation was not controlled.
Regardless of the position of the eyes (and hence of the direction of gaze), visual stimuli presented near a specific body part activated neurons with somatosensory RFs on that body part. For some neurons, the spontaneous or stimulus-evoked firing rate was modulated by eye position, but the portion of space eliciting the maximal visual response did not change when the eyes moved.
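The difference between a body part-centred and an eye-centred receptive field can be made concrete with a toy model. The sketch below is purely illustrative and is not taken from any of the studies cited: the Gaussian tuning curve, the 10 cm width, the flat two-dimensional workspace, and all function names are assumptions. It shows that a hypothetical hand-centred unit responds according to the distance between the stimulus and the hand, whatever the direction of gaze, whereas a hypothetical eye-centred unit responds differently to the same stimulus as fixation changes.

```python
import math

def gaussian(distance_cm, width_cm=10.0):
    """Assumed tuning curve: response falls off with distance from the RF centre."""
    return math.exp(-(distance_cm ** 2) / (2.0 * width_cm ** 2))

def hand_centred_response(stimulus, hand):
    """Hypothetical unit whose visual RF is anchored to the hand (gaze plays no role)."""
    return gaussian(math.dist(stimulus, hand))

def eye_centred_response(stimulus, gaze):
    """Hypothetical unit whose visual RF is anchored to the point of fixation."""
    return gaussian(math.dist(stimulus, gaze))

# Positions in cm in an arbitrary workspace frame (assumed values).
stimulus = (20.0, 5.0)
hand = (20.0, 0.0)

for gaze in [(0.0, 0.0), (20.0, 0.0), (-20.0, 0.0)]:
    print(gaze,
          round(hand_centred_response(stimulus, hand), 3),
          round(eye_centred_response(stimulus, gaze), 3))

# Moving the hand away from the stimulus reduces the hand-centred response.
print(round(hand_centred_response(stimulus, (-20.0, 0.0)), 3))
```

In this sketch the hand-centred column stays constant across the three gaze directions, while the eye-centred column varies; only a change in hand position alters the hand-centred response. Real PMv neurons are of course more complex, for example in showing gain modulation of firing rate by eye position, as noted above.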

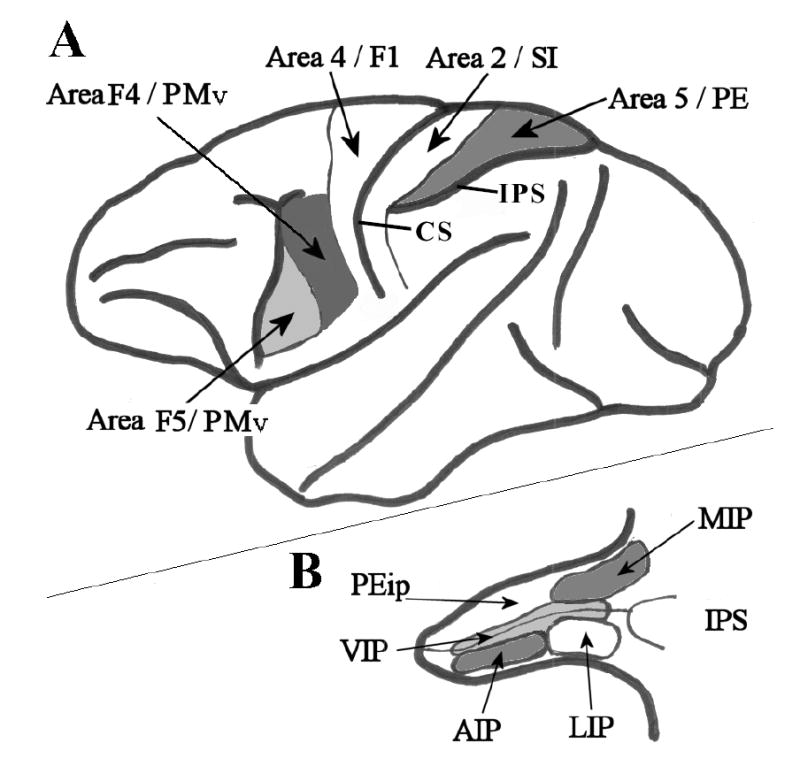

Figure 1.

Selected cortical areas of the macaque monkey brain. A. Lateral view of the whole brain. Thick black lines represent major cortical boundaries and sulci. Thin black lines represent cortical area boundaries. Area 4/F1 – primary motor cortex; Area 2/SI – primary somatosensory cortex; Area 5/PE – posterior parietal association cortex/superior parietal lobule; Area F5/PMv – where ‘mirror neurons’ were first recorded; Area F4/PMv – ventral premotor cortex; IPS – intraparietal sulcus; CS – central sulcus. B. The intraparietal sulcus has been opened up to reveal multiple and heterogeneous visual and somatosensory posterior parietal areas. Thick lines represent the superficial border of the sulcus; thin black lines mark the fundus of the sulcus. Other lines indicate the boundaries of cortical areas as follows: MIP – medial intraparietal sulcus; LIP – lateral intraparietal sulcus; AIP – anterior intraparietal sulcus; VIP – ventral intraparietal sulcus; PEip – intraparietal portion of area PE. (Redrawn from Rizzolatti et al., 1998).

Three other findings are particularly relevant here. First, neurons in PMv, which responded to visual stimuli presented in peripersonal space, continued responding when the object could no longer be seen (i.e., when the lights were turned off). If the object was then silently and invisibly removed while still in darkness, the neuron continued to respond. The firing rate only decreased when the lights were turned back on again, and the object was seen to have moved out of peripersonal space (Graziano et al., 1997a). Such neurons seem to be encoding the presence of objects in peripersonal space independently of the sensory modality in which they are initially perceived. Indeed, certain areas of premotor cortex may be specialized both for the detection of threatening or approaching visual objects, and for the planning and execution of defensive or object-avoidance movements (Graziano et al., 2002; see also Moll & Kuypers, 1977; Farnè, Demattè, & Làdavas, 2003). Second, not only bimodal visuotactile, but also trimodal cells were discovered in PMv (Graziano, Reiss, & Gross, 1999). These cells had somatosensory, visual, and auditory RFs centred on the side and back of the head. The auditory responses of most of these cells were modulated either by the distance of a stimulus from the head, by stimulus amplitude, or independently by both of these parameters. Finally, neurons responding to visual stimuli approaching the monkey’s arm were modulated by the presence of a hairy, taxidermied monkey arm placed in a realistic posture (Graziano, 1999). When the monkey’s real arm was moved, the visual RF moved with it – that is, the optimal responses were obtained when visual stimuli approached the position of the arm. When the real arm was hidden below a screen, and only proprioceptive information was available concerning arm position, this shift in the centre of the visual RF when the real arm moved was reduced.
Furthermore, when the artificial arm was placed on the screen above the monkey’s own arm (which was again hidden from direct view), the movement of the artificial arm also caused a shift in the visual RF, even though the real arm remained stationary. This visually-induced shift in the RF was not as large as that observed when the monkey’s own arm was moved, but was larger than when the monkey’s arm was moved out of view under the screen (i.e., when only proprioceptive signals were available).

Collectively, these results demonstrate that the ventral premotor cortex instantiates a multisensory representation of peripersonal space. This representation is body part-centred, representing the space around the arms, hands, and face, and it integrates visual, somatosensory, and auditory information regarding stimulus location relative to the body.

Posterior parietal cortex

Neuronal response properties somewhat similar to those reported in ventral premotor cortex have also been recorded from single units in the posterior parietal cortex, particularly in areas VIP and 7b (Graziano & Gross, 1995). Since neurons in these areas share quite similar response properties to those of neurons in premotor cortex, we shall not discuss these parietal areas further here, but will concentrate on a different area of the posterior parietal lobe, namely area 5. Somatosensory impulses project from the thalamus to the primary somatosensory cortices (Brodmann’s areas 1, 2, & 3) in the central sulcus and post-central gyrus. Brodmann’s area 5 of the posterior parietal cortex lies just posterior to the post-central somatosensory cortex (Brodmann’s area 2), and receives input from primary somatosensory cortex (see Figure 1). Area 5 is thought to encode the posture and movement of the body, responding to somatosensory impulses pertaining, for example, to the position of the limbs (Graziano, Cooke, & Taylor, 2000). Area 5 also has a role in the planning and execution of movements (Chapman, Spidalieri, & Lamarre, 1984; Kalaska, Cohen, Prud’homme, & Hyde, 1990). Finally, neurons in this cortical region also respond to visual information concerning arm position. When a taxidermied monkey arm was placed in a realistic posture on one side of the body, neurons that were sensitive to the felt position of the real arm (situated underneath an opaque cover), would respond more vigorously when the artificial arm was seen to be in a similar position. The firing rate of these neurons was modulated most by the felt position of the arm, but was also significantly modulated by visual information. 
The sensitivity of these neurons to the appearance of the artificial arm was striking – their firing rate was unaffected by the sight of a piece of paper equal in size to the arm and placed in the same orientation, or by an artificial arm placed in an unrealistic posture, such as backward, or with a left arm seeming to protrude from the right shoulder. Single neurons were even sensitive to the visual appearance and orientation of the hand – neurons selective for the right arm when it was hidden from view in a palm-down posture, were not ‘fooled’ by a left arm placed palm-up on the right side.

Although area 5 neurons integrate visual and proprioceptive signals in coding the location of parts of the body, these neurons do not appear to be representing body part-centred multisensory peripersonal space as such. When tested for visual responses to a hand-held probe stimulus, their visual response properties were unrelated to the position of the monkey’s arm (Graziano et al., 2000). This finding is important to the present discussion, because another group of researchers have come to quite different conclusions regarding the same area’s involvement in the encoding of multisensory peripersonal space. In order to highlight some important methodological issues in ‘plotting’ multisensory peripersonal space in macaques, we shall provide a critical discussion of the work of Iriki and coworkers.

In their seminal study, Iriki, Tanaka, and Iwamura (1996a) recorded from somatosensory neurons in the anterior bank of the intraparietal sulcus (aIPS, also termed the medial bank, or area PEip, perhaps homologous to area 5 in humans; Rizzolatti, Luppino, & Matelli, 1998). The neurons in Iriki and colleagues’ studies also responded to moving stimuli held in the experimenter’s hand. It was suggested that these cells represented the visual space around the arm in a body-centred reference frame. However, there are several issues that need to be addressed before this conclusion can be unequivocally accepted.

First, cells in the medial or anterior bank of the IPS are most commonly thought to be somatosensory, with perhaps some residual visual responses, particularly toward the fundus of the IPS and the border with VIP. Indeed, naïve monkeys in Iriki et al.’s (1996a) study did not show reliable, explicit visual responses to the presentation of a variety of visual stimuli. Second, visual fixation was not controlled for in Iriki et al.’s experiments. It is also of particular note that the most effective stimulus to evoke a response in these cells was a piece of food held in the experimenter’s hand (e.g., a 1 cm³ piece of apple or raisin, the same stimuli that were used to reward the animals during 2–4 weeks of tool-use training). In their study, Graziano et al. (2000) used a piece of apple to distract the attention of the monkeys from the visual test stimulus, and noted (as one might expect) that the monkeys tended to fixate the apple slice. Typically, neurons representing peripersonal space in the premotor cortex show visual responses only to three-dimensional objects (such as ping-pong balls, or squares of cardboard) moving within peripersonal space, primarily toward the tactile RF on the animal’s body (rather than when moving away from it). Interestingly, neurons representing peripersonal space do not respond to conventional visual stimuli such as bars or gratings projected onto a tangent screen, even when the screen is very close to the animal (under 10 cm). Importantly, such neurons respond whether tested in anaesthetised or awake animals, and even when the stimulus is unrelated to any potential response or reward and the monkey has learnt to ignore it (Graziano et al., 1997b).

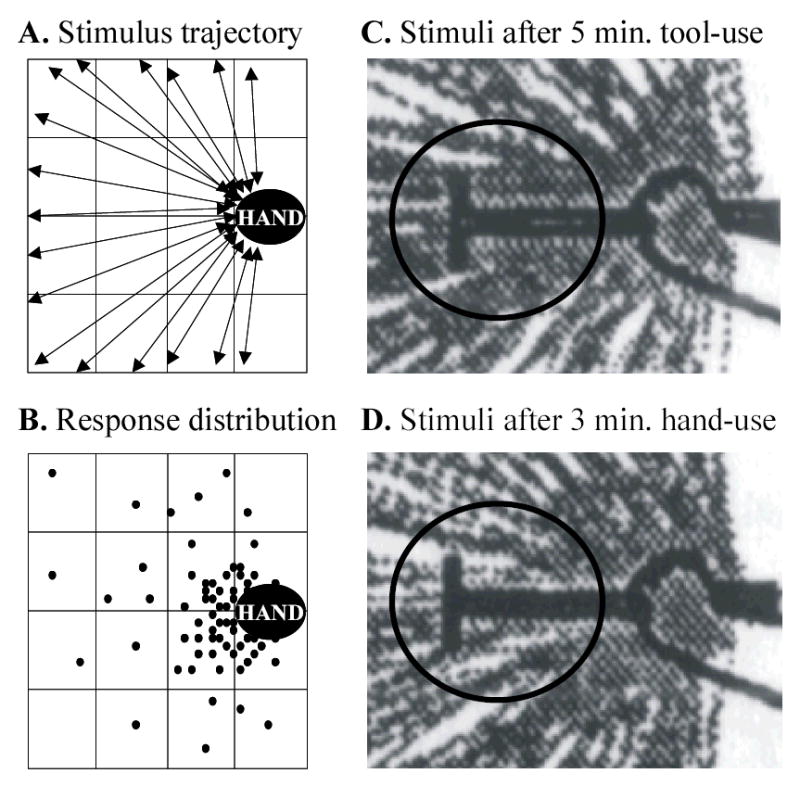

Third, and most importantly, the stimuli used to test the putative visual RFs in Iriki and colleagues’ studies were always presented on a centripetal trajectory approaching and receding from the hand of the monkey (Iriki et al., 1996a; Iriki, Tanaka, Obayashi, & Iwamura, 2001; Obayashi et al., 2000; see Figure 2). In the studies of Fogassi et al. (1996) and Graziano et al. (1997b), formal testing of visual RFs was carried out with robotically-controlled stimuli presented along parallel trajectories and controlled independently from hand, eye, and head position. With a concentration of experimental stimuli presented near the hand (Iriki et al., 1996a, see Figure 2C–D), it is difficult to conclude that the concentration of neuronal responses when the stimulus was near the hand actually reflects a neuronal response selective for stimuli presented within body part-centred peripersonal space.
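The sampling concern raised here can be illustrated with a small simulation. The following sketch is a hypothetical example, not a reanalysis of any published data: the 80/20 split of stimulus presentations, the firing probability of 0.3, and the function name `simulate` are all assumptions. It models a unit with no spatial selectivity at all, and shows that when most stimuli are presented near the hand, most recorded responses also fall near the hand, even though the response rate per presentation is about the same in both regions.

```python
import random

def simulate(n_near=8000, n_far=2000, p_fire=0.3, seed=0):
    """Toy unit that fires with the same probability wherever the stimulus is shown."""
    rng = random.Random(seed)
    # Assumed sampling scheme: far more presentations near the hand than elsewhere.
    presentations = {"near_hand": n_near, "elsewhere": n_far}
    responses = {}
    for region, n_trials in presentations.items():
        # The firing decision ignores stimulus position entirely.
        responses[region] = sum(rng.random() < p_fire for _ in range(n_trials))
    return presentations, responses

presentations, responses = simulate()
for region in presentations:
    rate = responses[region] / presentations[region]
    print(region, "responses:", responses[region], "rate per presentation:", round(rate, 3))
```

A plot of raw response locations from such a unit would show a cluster around the hand, which is why response counts need to be normalised by the number of presentations at each location, or the stimuli presented on trajectories that sample space evenly, before a hand-centred receptive field is inferred.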

Figure 2.

The stimuli delivered and responses obtained in Iriki et al.’s (1996a) study of tool-use, the body-schema, and peripersonal space. A. Visual stimuli were moved either toward or away from the hand in a centripetal or centrifugal fashion respectively (as shown by the double-headed arrows). Note that the hand area was stimulated much more frequently than any other part of the visual field. B. Filled circles represent the position in the visual field where the visual stimulus was when the neuron increased its firing rate to above 3 action potentials/second. C. The trajectories of stimuli (grey lines) presented after 5 minutes of tool-use activity (experimental condition). The monkey’s hand is on the right, and the tool (dark ‘T’-shape) extends toward the left. D. The trajectories of stimuli presented after 3 minutes of retrieving food using only the unaided hand, but studied with the tool held in the monkey’s hand (control condition). Note that the frequency and density of stimulation in the critical part of the putative visual receptive field (i.e., around the tip of the tool, shown by the black ellipse) does not seem to be comparable across the two conditions. The proposed ‘extension of visual receptive fields’ may in fact therefore be an artifact of the method of stimulus presentation. (Panels C and D were digitized and adapted from Iriki et al., 1996a).

The intraparietal sulcus is a large and heterogeneous area in the monkey, and at least five functionally- and neuroanatomically-distinct subregions are found here (Colby & Duhamel, 1991; Rizzolatti et al., 1998, see Figure 1), with a variety of neuronal response properties ranging from purely somatosensory, to purely visual. It is therefore important to plot visual and somatosensory RFs while controlling for eye, head, and body movements. Even then, the influence of attention and response preparation may be serious confounding factors. Neurons in the area studied by Iriki et al. (1996a) have been studied elsewhere (Iriki, Tanaka, & Iwamura, 1996b; Kalaska, Cohen, Prud’homme, & Hyde, 1990; MacKay & Crammond, 1987; Mountcastle, Lynch, Georgopoulos, Sakata, & Acuna, 1975). Two thirds of the cells in this area have been reported to be purely somatosensory, responding mainly to joint manipulation, and responding more vigorously for active than for passive movements (Mountcastle et al., 1975).

Several other interesting properties of these neurons have been documented. For example, Mountcastle et al. (1975) observed a sub-class of area 5 neurons that responded only when the monkey reached out to grasp or manipulate an item of interest, such as a food reward, but not when the monkeys made simple movements of the same joints and muscles, or reached toward other uninteresting objects. These neurons were active before the actual movement of the hand, and had a very low baseline firing rate of perhaps a few spikes a second. Furthermore, such neurons maintained their response rate after a target object was identified, even if vision of the object was subsequently occluded. MacKay and Crammond (1987) observed neurons in area 5 that seemed to ‘predict’ somatosensory events. Such cells would fire when an object was seen to approach the neuron’s somatosensory RF. While this may suggest a visual RF centred on the somatosensory RF, the authors noted that these cells would also respond just before the monkey placed its arm onto its chair (which was out-of-sight), and argued against these cells having visual RFs per se. Rather, such cells were seen as encoding somatosensory preparatory activity and reward-expectation unrelated to the sensory modality of stimulus presentation. Finally, Iriki et al. (1996b) found that neurons throughout the postcentral sulcus and anterior/medial bank of the intraparietal sulcus were strongly modulated by attentional and motivational factors – during a rewarded button-pushing task, cells in the anterior bank of the IPS began firing strongly when the cue stimulus was lit, maintained their firing throughout a trial, and decreased firing once the reward had been received.

The above discussion highlights some important issues concerning neurophysiological evidence for the multisensory representation of peripersonal space. Before neuronal responses are taken as such evidence, several criteria should be met: a) the neurons should respond to visually presented three-dimensional objects even when the visual stimulus is irrelevant and meaningless to the animal, being unrelated to any response or reward; b) the position, velocity, and direction of the stimulus should be manipulated and controlled independently of the position of the animal’s head, eyes, and other body parts, and of the animal’s orientation in the testing room; c) the neurons should respond similarly when tested in anaesthetised and awake subjects (though there may be certain differences, for example in background or stimulus-induced firing rate, between these two situations); d) visual responses should be organised in a body part-centred reference frame, representing the space surrounding the neuron’s tactile RF; e) electromyographic (EMG) activity from the relevant body parts should be recorded during visual stimulus presentation to rule out certain ‘motor’ interpretations of neuronal firing; and finally, f) visual and tactile stimuli should also be applied when the subject’s eyes are closed, to rule out any extraneous tactile (e.g., air movement, static electricity, thermal stimuli) or visual responses (in the case of testing tactile RFs within view of the subject; for further discussion of these points, see Graziano et al., 1997b).

Multisensory peripersonal space in humans

Given that single-unit studies in humans are not possible outside of a clinical setting, direct evidence for neuronal integration of signals from multiple sensory modalities in the human brain has not yet become available. Instead, brain-damaged patients as well as normal human participants have been tested in a variety of paradigms in order to elucidate the nature of peripersonal space. A particularly important question has been: do the same principles of visuotactile integration in peripersonal space apply in humans as have been documented in animals?

The most convincing neuropsychological evidence to date for the existence of an integrated visuotactile representation of peripersonal space in humans comes from the work of Làdavas and her colleagues (see Làdavas, 2002, for a review). They have studied neuropsychological patients in a variety of testing situations to determine how brain damage affects the nature of visuotactile representations of peripersonal space. Many of the patients they have described suffered from left tactile extinction following right hemisphere brain damage. In such cases, patients can typically detect a single touch on the left or right hand in isolation, but if two tactile stimuli are presented simultaneously, one to the right and the other to the left hand, only the right touch can reliably be detected (e.g., di Pellegrino, Làdavas, & Farnè, 1997; Mattingley, Driver, Beschin, & Robertson, 1997).

These deficits can be explained in terms of the competition between neural representations of the hands in the left and right cerebral hemispheres. A tactile stimulus delivered to the right hand activates a somatosensory representation of the touched body part in the left hemisphere, to which sensory information from the right side of the body is projected initially and primarily. By contrast, a left tactile stimulus activates a right-hemisphere representation. In the case of these right brain-damaged patients, the representation in the left hemisphere is ‘stronger’ than that in the right. It has been argued that, under dual-stimulation conditions, the weaker right hemisphere representation of the left tactile stimulus competes less effectively for limited processing resources than the contralateral representation (e.g., Mattingley et al., 1997). Consequently, a failure to detect the left tactile stimulus occurs more frequently for bilateral double simultaneous stimulation than for unilateral single stimulation. Now, if peripersonal space is coded in terms of a bimodal, visuotactile representation, and if a right hemisphere lesion affects this representation, then peripersonal visual stimuli on the right should interfere with the detection of tactile stimuli on the left. This is just what di Pellegrino et al. (1997) found (see also Mattingley et al., 1997). Visual stimulation of the peripersonal space (i.e., the wiggling of the experimenter’s finger just above the patient’s right hand) resulted in a complete extinction of all simultaneous left tactile stimuli (0 out of 30 detected).
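The logic of this competition account can be sketched as a toy calculation. The code below is an illustrative assumption only and is not a model proposed by di Pellegrino et al. (1997) or Mattingley et al. (1997): the lesion gain, the weight given to visual input, the detection threshold, and the function names are all hypothetical. The left touch is treated as detected when the damaged right hemisphere's representation holds a large enough share of the total activity across the two hemispheres.

```python
def left_touch_share(left_touch, right_touch, right_visual,
                     lesion_gain=0.5, visual_weight=0.8):
    """Share of total activity held by the right-hemisphere representation of the left hand.

    lesion_gain < 1 stands in for the weakened right hemisphere (assumed value).
    right_visual is the assumed drive that a visual stimulus gives the bimodal
    representation of the right hand: high near the hand, low far from it.
    """
    damaged_right_hemisphere = lesion_gain * left_touch
    intact_left_hemisphere = right_touch + visual_weight * right_visual
    total = damaged_right_hemisphere + intact_left_hemisphere
    return damaged_right_hemisphere / total if total > 0 else 0.0

def detected(share, threshold=0.4):
    """Hypothetical decision rule: the left touch is reported if its share is large enough."""
    return share >= threshold

conditions = {
    "left touch alone": (1.0, 0.0, 0.0),
    "bilateral touch": (1.0, 1.0, 0.0),
    "left touch + visual stimulus near right hand": (1.0, 0.0, 1.0),
    "left touch + visual stimulus far from right hand": (1.0, 0.0, 0.25),
}
for name, inputs in conditions.items():
    share = left_touch_share(*inputs)
    print(f"{name}: share={share:.2f}, detected={detected(share)}")
```

With these assumed parameters the left touch is detected when presented alone or with a distant visual stimulus, but not when it competes with a right touch or with a visual stimulus near the right hand. The qualitative pattern, rather than any particular number, is the point of the sketch.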

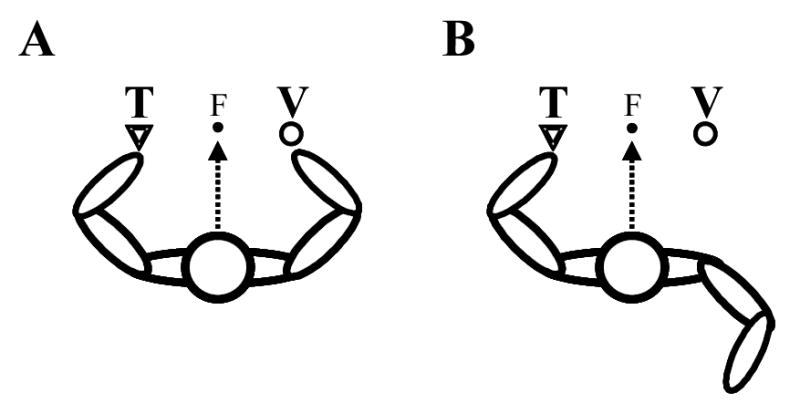

When the right visual stimulus was presented far from the hand, or when the patient held their own hand behind their back (see Figure 3), detection of left tactile stimuli improved significantly. Furthermore, when the arms were held in a crossed position (such that the left hand lay in the right hemispace and vice versa for the right hand), visual stimulation near the right hand still induced extinction of left hand tactile stimuli. This demonstrates that visuotactile spatial interactions centred on the hand change according to the position of the hand in space, and supports the view that visuotactile peripersonal space is represented in body part-centred coordinates, as shown previously by single-unit recording studies of monkey premotor and posterior parietal cortices (e.g., Graziano & Gross, 1995; Graziano et al., 1997b).

Figure 3.

Crossmodal extinction in peripersonal space. The patient sits at a table, and visually fixates a point at the same level as, and equidistant between, their two hands (‘F’, filled circle). A. Visual stimuli (‘V’, open circles) presented near the right hand interfere with the detection of simultaneously presented tactile stimuli (‘T’, open triangles) on the left hand. B. This interference is markedly reduced if the right hand is held away from the visual stimulus, behind the patient’s back. See main text and di Pellegrino et al. (1997) for details.

Research on patients displaying crossmodal extinction has also highlighted several additional important findings. First, the right-sided visual stimulus must be presented near the visible right hand in order for the most profound crossmodal extinction effects to occur (e.g., Làdavas, Farnè, Zeloni, & di Pellegrino, 2000). Stimulation of the same point in space near the hand, when the hand is occluded from view, produces extinction only to the same degree as for visual stimuli presented far from the right hand (e.g., Mattingley et al., 1997).3 Thus, it would appear that proprioceptive signals are insufficient to allow a visual peripersonal stimulus to activate strongly the visuotactile representation of the hand.

Second, not only has crossmodal extinction of simultaneously presented stimuli been documented, but also the crossmodal facilitation of deficient tactile detection (Làdavas, di Pellegrino, Farnè, & Zeloni, 1998; Làdavas et al., 2000). In accordance with the assumption that the deficit is caused by the unequal competition between the integrated visuotactile representations in the left and right hemispheres, combining tactile stimulation of the left hand with visual stimulation near that hand, while the right hand was also touched, increased the proportion of left tactile stimuli detected, compared to simultaneous bilateral tactile stimulation alone. This result is consistent with the findings of Halligan, Hunt, Marshall, and Wade (1996), who studied a patient who could not reliably detect touch on his left arm. Vision of his left hand being touched by the experimenter, however, induced a tactile sensation (see also Haggard, Taylor-Clarke, & Kennett, 2003; Rorden, Heutink, Greenfield, & Robertson, 1999). Similarly, di Pellegrino and Frassinetti (2000) observed a patient with right parieto-temporal damage, in whom the perception of left visual stimuli was enhanced when the patient’s hand was placed next to the stimulus screen. In summary, combined tactile stimulation of a body part and visual stimulation near that same body part can ameliorate both tactile and visual perceptual deficits.

Third, not only arm-centred, but also face-centred, deficits in peripersonal space have been documented. Làdavas, Zeloni, and Farnè (1998) reported a study in which visual stimuli near the right side of the face were shown to be as effective as tactile stimulation of the right cheek in inducing extinction of simultaneous tactile stimuli presented to the left cheek. The extinction induced by right visual stimulation was less pronounced for stimuli presented far from the face (45 cm), and was ameliorated when visual stimulation was presented immediately adjacent to the left side of the face.

Finally, whilst the majority of studies of crossmodal extinction have presented stimuli in vision and touch, the existence of auditory-tactile extinction, and by implication, of an auditory representation of peripersonal space, has also been suggested (Làdavas, Pavani, & Farnè, 2001). In their patient C.A., right auditory stimulation interfered with the detection of a simultaneous left tactile stimulus (the experimenter tapped the patient’s skin behind her left ear) much more when auditory stimuli were presented close to the patient’s head (20 cm) than when presented far away from it (70 cm). The intensity of the sound measured at the head was always 70 dB, and the sounds were presented from the same direction in both cases. Both pure tones and white noise auditory stimuli were used, and while both induced a certain level of crossmodal extinction, the white noise stimulus was more effective when presented closer to the head (probably because of the greater localizability of sounds with a broad frequency spectrum as compared to pure tones; see King, 1999). This initial finding has more recently been replicated and extended in a group study of 18 patients (Farnè & Làdavas, 2002). It would clearly be both interesting and important to examine these auditory-tactile crossmodal extinction effects with computer-controlled tactile stimulation, in order to assess their generalizability. This additional control would allow issues of timing, intensity, and type of tactile stimulation to be studied for their interactions with auditory stimuli presented within peripersonal space.

Similarly, over 50 % of single units recorded from ventral premotor cortex that have tactile RFs on the back of the monkey’s head also respond to auditory stimulation (Graziano et al., 1999). However, rather than representing auditory space restricted to the space around the tactile RF alone, they seem to represent the space around the head as a whole. Over a third of this subpopulation of neurons were driven more effectively by auditory stimuli 10 cm from the monkey’s head, and less effectively by stimuli at distances of 20 or 50 cm. The influence of the many different cues to auditory stimulus distance, such as the intensity of familiar sounds, reverberation in the testing room, and the amplitude and spectral characteristics of sounds (and the differences in these parameters between the two ears), needs to be investigated further for neurons representing peripersonal space (Graziano et al., 1999). These multisensory neurons, and the damaged right hemisphere representations in patients with auditory crossmodal extinction, seem to be encoding the auditory space immediately surrounding the head. Such an auditory peripersonal representation might function as part of a system for alerting the animal or human to auditory events very close to the head to which a rapid orienting response may well be required. The sudden appearance of a buzzing mosquito near the ear could, for instance, serve to initiate orienting, avoidance, or even mosquito-swatting movements (see Graziano et al., 2002).

In summary, the deficits found in human neuropsychological patients would appear to reflect the same principles of multisensory integration as the findings from studies of single units in monkey premotor cortex. Representations of peripersonal space are body-centred or body part-centred, restricted to the space immediately surrounding the body (extending to about 20–40 cm from the skin surface in monkeys, and up to perhaps 70 cm in humans), and involve the integration of information from multiple sensory modalities (somatosensory, proprioceptive, visual, and auditory).

Plasticity of visuotactile peripersonal space in humans

In the course of bodily development, the limbs grow longer, and so become increasingly capable of reaching and grasping nearby objects. The representation of visual peripersonal space must therefore adapt over long periods of time with the changing dynamics of the body. However, recent research on both normal human participants and brain-damaged patients also suggests that representations of the body, and of the space around the body, may be altered after minutes or even seconds of sensory manipulation. The use of mirrors, the ‘rubber arm illusion’ (sometimes known as the ‘virtual body’ effect; Austen, Soto-Faraco, Pinel, & Kingstone, 2001; Pavani, Spence, & Driver, 2000), and the use of tools to extend the body’s reaching space, have all been studied with simultaneous visual and tactile stimulation. The results of these studies suggest that the brain’s representation of visuotactile peripersonal space can be modulated to ‘incorporate’ mirror images, inanimate objects, and tools held in the hand.

Peripersonal space in the mirror, and near artificial hands

When we look at our bodies in a mirror, we see ourselves as we usually see other people – looking toward us, their right hand on the left, and left hand on the right. When we look directly at our own hands, the left is on the left and the right on the right. Yet we recognise ourselves in the mirror and can direct movements effectively to locations on our own body under visual guidance in the mirror’s reflection. The ability to recognise ourselves and to guide movements in the mirror may depend, at least in part, on the plasticity of the representation of our own body and of peripersonal space.

This suggestion was tested by Maravita, Spence, Clarke, Husain, and Driver (2000) when they studied a neuropsychological patient (B.V.) exhibiting crossmodal extinction. The patient either viewed his hands in a mirror positioned in extrapersonal space (60 cm from his hands, with direct vision of his hands occluded), or viewed an artificial rubber hand seen at the same position and apparent distance as his reflected real hands (i.e., 120 cm away). The patient’s task was to detect tactile stimuli applied to his left hand. At the same time, visual stimuli (flashes of light) were presented either next to his real hands (and therefore viewed only in the mirror), or next to the rubber hand illuminated behind the half-silvered mirror. When the visual stimulus was presented next to his real hands and viewed in the mirror, it was effective in extinguishing 33 % of left tactile stimuli. By contrast, when the same light flash was presented next to the rubber hand, only 19 % of tactile stimuli were extinguished. When B.V. was further tested with triple stimulation (both left and right tactile stimulation plus right visual stimulation), the percentage of left tactile stimuli extinguished increased to 72 % in the mirror condition, but to only 33 % in the rubber hand condition.

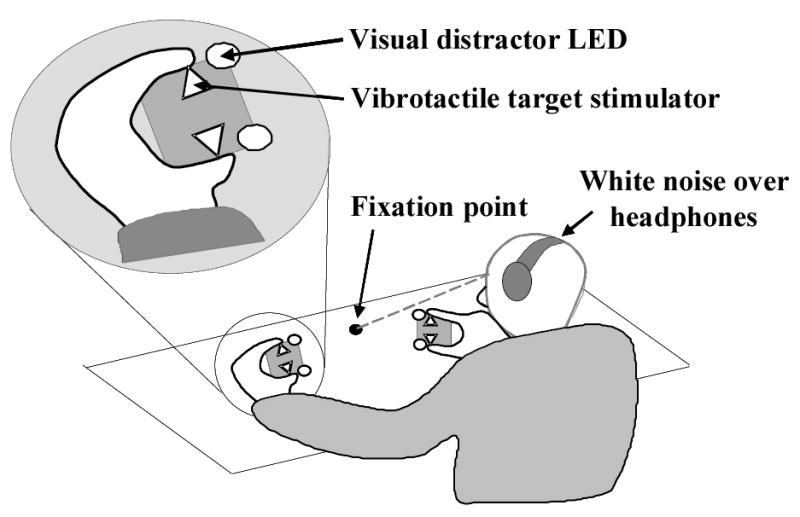

This finding of increased visuotactile extinction when the real hands are viewed in a mirror was examined more thoroughly in a crossmodal congruency task performed by normal human participants (Maravita, Spence, Sergent, & Driver, 2002b). Participants in this study were required to make speeded discrimination responses regarding the elevation of single vibrotactile stimuli delivered either to the thumb (lower) or the forefinger (upper) of either the left or right hand. At the same time as each vibrotactile stimulus was presented, a bright visual distractor was presented from one of the four locations (by the thumb or forefinger of either hand). Participants were told to ignore the visual distractor stimuli as much as possible, while maintaining central fixation (see Figure 4). This crossmodal congruency task has been shown in many previous studies to produce a reliable crossmodal congruency effect (Spence, Pavani, & Driver, 2003). When the visual distractor appears at the same (congruent) elevation as the vibrotactile target (regardless of whether it is presented on the same or on a different side), reaction times are faster (often by more than 100 ms), and fewer tactile discrimination errors are made, as compared to when the visual and vibrotactile stimuli are presented at different (incongruent) elevations (see Spence, Pavani, Maravita, & Holmes, 2003, for a recent review).

Figure 4.

The visuotactile crossmodal congruency task. Human participants hold a ‘stimulus cube’ containing vibrotactile stimulators (open triangles) and visual distractor LEDs (open circles). Participants look at a central fixation point (filled circle) situated midway between the two hands. White noise presented over headphones masks the sound of the vibrotactile stimulators (see text for further details). The inset shows a magnified view of the participant’s left hand holding one of the stimulus cubes.
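
As a rough illustration of how the crossmodal congruency effect described above is typically quantified, the following sketch computes the difference in mean reaction time and in error rate between incongruent and congruent trials. The trial format, the field names (`congruent`, `rt_ms`, `correct`), the restriction of reaction times to correct trials, and the data values are all assumptions made for this example; they are not taken from any of the studies reviewed here.

```python
from statistics import mean


def congruency_effect(trials):
    """Return (RT effect in ms, error-rate effect), incongruent minus congruent.

    Each trial is a dict with hypothetical keys:
      'congruent' (bool): distractor at the same elevation as the target
      'rt_ms' (float):    reaction time in milliseconds
      'correct' (bool):   whether the elevation judgement was correct
    Mean RT is taken over correct trials only (an assumption of this sketch).
    """
    def summarise(subset):
        rts = [t["rt_ms"] for t in subset if t["correct"]]
        error_rate = sum(not t["correct"] for t in subset) / len(subset)
        return mean(rts), error_rate

    congruent = [t for t in trials if t["congruent"]]
    incongruent = [t for t in trials if not t["congruent"]]
    rt_con, err_con = summarise(congruent)
    rt_inc, err_inc = summarise(incongruent)
    return rt_inc - rt_con, err_inc - err_con


# Made-up trials, for illustration only
trials = [
    {"congruent": True, "rt_ms": 500.0, "correct": True},
    {"congruent": True, "rt_ms": 520.0, "correct": True},
    {"congruent": False, "rt_ms": 600.0, "correct": True},
    {"congruent": False, "rt_ms": 640.0, "correct": True},
    {"congruent": False, "rt_ms": 700.0, "correct": False},
]
rt_effect, error_effect = congruency_effect(trials)
print(f"RT effect: {rt_effect:.0f} ms, error effect: {error_effect:.2f}")
```

A positive value on either measure indicates that incongruent visual distractors interfered with the tactile elevation judgement. Comparing this effect across viewing conditions (for example, mirror versus rubber hands) is the kind of contrast that the studies discussed in the text report.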

The novel finding to emerge from the mirror version of the crossmodal congruency task (Maravita et al., 2002b) was that crossmodal congruency effects were consistently higher when participants viewed their own hands in a mirror than when they viewed either visual distractors alone, a pair of rubber hands ‘holding’ the visual stimuli, or an experimental accomplice holding the distractors at an equivalent location. These results suggest that a rapid, plastic re-mapping of the visuotactile peripersonal space around the hands occurs when the hands are viewed in a mirror. Identification with a body part seen in extrapersonal space appears to result in visual stimuli applied to that body part, which are seen to be in extrapersonal space (i.e., as if coming from behind the mirror), being remapped as peripersonal stimuli through the mirror reflection (see also Nielsen, 1963).

Rubber hands have also been used to examine visuotactile effects under direct vision, rather than in a mirror reflection. If normal human participants view an artificial rubber hand being stroked while simultaneously feeling an identical stroking motion on their own, unseen hand, the seen touches on the rubber hand come to be felt as touches on the participant’s own hand (Botvinick & Cohen, 1998), at least when the artificial hand is in a realistic posture with respect to the participant’s own body. Vision of an artificial hand being touched also improves the detection of simultaneous tactile stimulation to the real unseen hand following brain damage (Rorden et al., 1999). Likewise, in the case of crossmodal extinction of left tactile stimuli, if an artificial rubber right hand is placed in front of patients, visual stimuli applied to the artificial right hand extinguish simultaneous tactile stimuli on the left hand (Farnè, Pavani, Meneghello, & Làdavas, 2000). Importantly, the location and orientation of the artificial rubber hand must be sufficiently similar to the real hand to induce this effect. When the rubber arm is placed at 90° with respect to the orientation of the real arm, crossmodal extinction is no worse than for far, extrapersonal visual stimulation. This is similar to the finding of Graziano et al. (2000) mentioned earlier: namely, that area 5 neurons coding arm position were more active when the artificial arm was seen to be in the same posture as the monkey’s real arm, that is, when somatosensory and visual information were not conflicting.

Pavani et al. (2000) used the crossmodal congruency task to test the effect of the orientation of rubber arms on visuotactile interference in normal human participants. In accordance with the findings of studies of both crossmodal extinction and of single neurons, when the rubber hands were placed in an incongruent orientation with respect to real, unseen arms, there was very little effect of the presence of the rubber hands in modulating the magnitude of the crossmodal congruency effect. In contrast, when the rubber hands were present and aligned with the real arms, the crossmodal congruency effect was 50 ms greater than when the artificial arms were absent. This increase in ‘visual capture’ induced interference on vibrotactile discrimination performance suggests that artificial body parts, positioned in realistic orientations with respect to real body parts, can modulate the brain’s representation of visuotactile peripersonal space. Visual stimuli occurring near artificial hands have a much greater interference effect on tactile discriminations when the hands are in a realistic posture.

Tool-use modifies visuotactile peripersonal space

We have seen that peripersonal space can be ‘shifted’ to incorporate the seen positions of artificial but realistic body parts. What about modifying peripersonal space to include objects that do not look like body parts, but serve a similar function (i.e., of perceiving and acting upon objects in peripersonal and extrapersonal space)? Can the use of tools also modulate peripersonal space? Again, this question has been addressed in several ways; first, in neuropsychological patients with dissociations in neglect for near versus far space, and in crossmodal extinction; and second, in normal human participants using the crossmodal congruency task. Several papers by Iriki and colleagues (Iriki et al., 1996a, 2001; Obayashi et al., 2000) also address the issue of tool-use. Although the original paper published in 1996 has been very influential in stimulating cognitive neuroscientific research on the understanding of tool-use, for the reasons discussed earlier the results from this research can only be taken as preliminary, and further control studies need to be conducted (for example, one critical factor concerns the spatial control of the visual stimuli – see Figure 2).

Iriki et al.’s (1996a) suggestion that tool-use might extend the visuotactile representation of peripersonal space in monkeys has prompted several research groups working with neuropsychological patients to include tool-use as an experimental variable in their studies. These studies are based on demonstrations of dissociations between neglect for near and far space in man (e.g., Halligan & Marshall, 1991) that implicated the existence of separate neural systems for encoding peripersonal and extrapersonal space. Halligan and Marshall asked patients to bisect lines in near and far space. In near space, the patients pointed to the perceived midpoint of the lines with their finger. In far space, they indicated the midpoint with a laser pointer. While rightward errors were observed for bisections carried out in near space, no such errors were found for bisections in far space.

Berti and Frassinetti (2000) extended this line of research by asking a patient (P.P.) with severe left visuospatial neglect to bisect lines in near (50 cm from her body) and in far (100 cm) space. The patient bisected the lines by reaching with her hand, indicating with a laser pointer, or reaching with a stick to mark the lines in far space, all the time keeping her right, pointing hand close to the body midline. When using the laser pointer to bisect lines in near space, she made an average rightward error of 24 %. In far space, still bisecting with the laser pointer, this rightward error was diminished, at only 9 %. When the patient reached with her finger in near space, the rightward error was comparable to the pointing condition at 29 %. But when the patient reached with a stick to bisect the far lines, the average rightward error increased from 9 % to 27 %. The use of a tool to act upon objects in far space resulted in the patient evidencing a left neglect for far space equal to that for near space. Without the tool, the patient’s deficit was severe only in near space. There remains the possibility that the deficit, in this patient at least, depends upon the complexity of the motor task being performed. If the neglect disturbance is more severe for difficult movements than for simple ones, then a difficult movement (pointing with a stick) in extrapersonal space might lead to greater deficits than a simpler movement (pointing with a finger or laser pointer, for example). This issue of visuospatial versus motor components of neglect needs to be addressed specifically with respect to the use of tools.

Two other studies of crossmodal extinction in brain-damaged patients also support the view that tool-use can modulate peripersonal space. First, Farnè and Làdavas (2000) asked seven patients with unimodal tactile extinction to retrieve objects from far space (small, red, plastic fish at various locations, 85 cm from the patients) using a rake held in their right hand. After five minutes of this active tool-use practice, crossmodal extinction was tested with visual stimuli presented in extrapersonal space at the end of the tool. Left tactile stimuli presented simultaneously with the right visual stimuli were detected on only 53 % of trials immediately following tool-use. In three control conditions – before tool-use, following a short delay after tool-use and after a control, pointing task – left tactile stimuli were detected on 69–75 % of trials. Thus, for a short period following active tool-use to manipulate objects in extrapersonal space, the visual space around the end of the tool was incorporated into peripersonal space, and stimuli presented near the end of the tool thus interfered more with the detection of simultaneous left tactile stimuli. In short, tool-use seems to ‘capture’ extrapersonal space and results in it being incorporated into peripersonal space.

Second, and more recently, Maravita, Husain, Clarke, and Driver (2001) introduced several further control conditions to examine the factors contributing to the putative modulation of peripersonal space. They tested for crossmodal extinction in patient B.V. in four different conditions: with visual stimuli presented next to the hand; with two long sticks held firmly in the hands; with the sticks placed in front of the patient to act as a visual control; and with the visual stimulus presented in far extrapersonal space in the absence of the sticks. Peripersonal right-hand visual stimuli extinguished 94 % of simultaneous left tactile stimuli, while extrapersonal right visual stimulation extinguished only 34 % of simultaneous left touches. When the far visual stimulus was now ‘connected’ to the right hand by means of the stick, 69 % of left tactile stimuli were extinguished. Only when the sticks were firmly and actively held in position, connecting the far visual stimulus to the body, was crossmodal extinction of left tactile stimuli significantly increased.

Finally, the crossmodal congruency task has been used successfully to examine the modulation of visuotactile peripersonal space by tool-use in normal participants (Maravita, Spence, Kennett, & Driver, 2002a). In the crossmodal congruency task, visual distractor stimuli are usually presented next to the hands, where two vibrotactile target stimuli are also located. However, in their study of tool-use, Maravita and colleagues placed the visual distractors in upper and lower locations at the tip of a golf club ‘tool’ held in each hand. As described above, the participants were required to discriminate the elevation of the vibrotactile targets while trying to ignore the elevation of the visual distractors. During the experiment, the golf clubs were crossed and uncrossed after every four trials, so that the visual distractors at the tip of the left club would be situated in the right visual field for half the trials and in the left field for the other half. Usually, visual distractors on the same side of space have a greater interference effect on the tactile discrimination task than visual distractors on the other side of the midline. However, with the tools in a crossed position, the opposite spatial contingency was found. With the tools crossed, left visual space was ‘connected’ to the right hand, and right visual space to the left hand. The congruency effects for visual stimuli on the opposite side to the tactile stimuli were larger than for same side visual distractors when the tools were crossed. Interestingly, this modulation of visuotactile space depended on sustained, active usage of the tools, since it was not observed when participants simply held the tools passively, and was only seen in the second half of the experiment (i.e., in the last six of ten blocks of 48 trials).

The question of how tool-use results in the modulation of normal human peripersonal space is being addressed further in our laboratory (e.g., Holmes, Calvert, & Spence, 2003). One especially important line of research here concerns the manipulation of spatial attention and/or response preparation during complex tool-use tasks. Furthermore, it is of particular interest to us whether tool-use results in an extension of the brain’s representation of the body per se, and/or of the space surrounding the body. Is it appropriate to say, for example, that the tool is literally incorporated into the brain’s ‘body schema’ (Head & Holmes, 1911–1912), which is used for maintaining and updating a postural model of the body in space? Or is it rather a remapping of extrapersonal visual space as peripersonal space? It may be very difficult to separate the neural systems involved in representing the body itself from those that represent the space around the body. When single neurons can respond both to tactile stimulation of an arm and to visual stimulation of the space immediately surrounding that arm, it may not even make neural sense to try to discriminate between the body and peripersonal space, at least at the level at which these particular neuronal populations are operating.

Conclusions

If you walk home tonight and branches overhang your path, your brain’s representation of peripersonal space should protect you! Your posterior parietal area 5 will maintain an updated ‘model’ of the position of your body in space, while your visual cortices will be processing objects and events in the visual world in front of you. These two streams of inter-related information are integrated and further processed in the posterior parietal cortex and ventral premotor cortex, which constantly monitor the position of objects in space in relation to your body, and prepare avoidance movements as and when they are required. It is not the case that any one single brain area is responsible either for maintaining a representation of the body, or of space in general, as was once thought (see Rizzolatti et al., 1998, for a review of the new concepts in this area). Rather, the ‘body schema’ and ‘peripersonal space’ are emergent properties of a network of interacting cortical and subcortical centres. Each centre processes multisensory information in a reference frame appropriate to the body part about which it receives information, and with which responses are to be made.

Acknowledgments

N. P. Holmes is supported by a Wellcome Prize Studentship (number 065696/Z/01/A) from The Wellcome Trust. Correspondence regarding this article should be addressed to Nicholas Holmes, Room B121, Department of Experimental Psychology, University of Oxford, Oxford OX1 3UD, UK. E-mail: nicholas.holmes@psy.ox.ac.uk

Footnotes

Head and Holmes (1911–1912, pp. 186–189) distinguished two principal aspects of the ‘body schema’ based on a neurological understanding of the dissociations in bodily sensibility that follow selective lesions of the spinal cord, brainstem, thalamus, and cerebral cortex. The ‘postural schema’ represents the position and movement of the body, and is derived primarily from proprioceptive and kinaesthetic afferent impulses. Their second schema is derived from cutaneous afferent impulses signalling the location of tactile stimuli on the surface of the body. These schemata are independent from, though related to, conscious ‘images’ of the body. Many separate classifications of ‘body schema’ and ‘body image’ have followed this original definition. For clarity, however, we adhere to the neurological taxonomy of Head and Holmes, while acknowledging that the body schema should not necessarily be restricted to proprioceptive and somatosensory modalities alone, but should also incorporate visual and perhaps auditory information (for a range of perspectives on the body schema see, for example, Berlucchi & Aglioti, 1997; Schilder, 1935; the chapters in Bermúdez, Marcel, & Eilan, 2003; and the proceedings of a recent conference at http://allserv.rug.ac.be/~gvdvyver/body-image/hoofdblad.htm).

The term ‘reference frame’ is used to refer to the centre of a coordinate system for representing objects, including the body itself, and relations between objects (e.g., Cohen & Andersen, 2002). For example, a retinotopic reference frame would take as its centre the fovea of each retina, and represent visual objects in relation to this origin. In a head-centred reference frame, objects are represented independently of eye movements, and in a body-centred frame, independently of both head and eye movements. Both body-centred and body part-centred frames may be important in the representation of peripersonal space.

The requirement for the hands to be visible in order that ‘visual’ peripersonal space can be stimulated more effectively raises an interesting question. Does the reduction in visuotactile extinction when the right hand is not visible depend on there being a physical barrier between the visual stimulus and the hand, or simply on the occlusion of vision of the hand itself? Farnè et al. (2003) have recently addressed this question in a patient suffering from crossmodal extinction. They showed that the insertion of a transparent barrier between the visual stimulus applied near the right hand and the hand itself had no effect on the proportion of left tactile stimuli extinguished in comparison to peripersonal right visual stimulation in the absence of any barrier. By contrast, an opaque barrier that occluded vision of the hand resulted in a significant decrease in the number of left tactile stimuli extinguished by simultaneous right visual stimuli just above the barrier. This result suggests that it is not simply the presence of a barrier itself, but rather the occlusion of vision of the body part that is important in modulating visuotactile crossmodal extinction.

References

- Austen EL, Soto-Faraco S, Pinel JPJ, Kingstone AF. Virtual body effect: Factors influencing visual-tactile integration. Abstracts of the Psychonomic Society. 2001;6:2. [Google Scholar]

- Berlucchi G, Aglioti SA. The body in the brain: Neural bases of corporeal awareness. Trends in Neurosciences. 1997;20:560–564. doi: 10.1016/s0166-2236(97)01136-3. [DOI] [PubMed] [Google Scholar]

- Berti A, Frassinetti F. When far becomes near: Re-mapping of space by tool-use. Journal of Cognitive Neuroscience. 2000;12:415–420. doi: 10.1162/089892900562237. [DOI] [PubMed] [Google Scholar]

- Bonnier P. L’Aschématie. [Aschematia] Revue Neurologique. 1905;13:606–609. [Google Scholar]

- Botvinick M, Cohen J. Rubber hands 'feel' touch that eyes see. Nature. 1998;391:756. doi: 10.1038/35784. [DOI] [PubMed] [Google Scholar]

- Burmúdez, J. L., Marcel, A. J., & Eilan, N. (Eds.) (2003). The body and the self Cambridge, MA: MIT Press.

- Chapman CE, Spidalieri G, Lamarre Y. Discharge properties of area 5 neurones during arm movements triggered by sensory stimuli in the monkey. Brain Research. 1984;309:63–77. doi: 10.1016/0006-8993(84)91011-4. [DOI] [PubMed] [Google Scholar]

- Cohen YE, Andersen RA. A common reference frame for movement plans in the posterior parietal cortex. Nature Reviews Neuroscience. 2002;3:553–562. doi: 10.1038/nrn873. [DOI] [PubMed] [Google Scholar]

- Colby CL, Duhamel J. Heterogeneity of extrastriate visual areas and multiple parietal areas in the macaque monkey. Neuropsychologia. 1991;29:517–537. doi: 10.1016/0028-3932(91)90008-v. [DOI] [PubMed] [Google Scholar]

- Craig JC, Rollman GB. Somesthesis. Annual Review of Psychology. 1999;50:305–331. doi: 10.1146/annurev.psych.50.1.305. [DOI] [PubMed] [Google Scholar]

- di Pellegrino G, Frassinetti F. Direct evidence from parietal extinction of enhancement of visual attention near a visible hand. Current Biology. 2000;10:1475–1477. doi: 10.1016/s0960-9822(00)00809-5. [DOI] [PubMed] [Google Scholar]

- di Pellegrino G, Làdavas E, Farnè A. Seeing where your hands are. Nature. 1997;388:730. doi: 10.1038/41921. [DOI] [PubMed] [Google Scholar]

- Farnè A, Demattè ML, Làdavas E. Beyond the window: Multisensory representation of peripersonal space across a transparent barrier. International Journal of Psychophysiology. 2003;50:51–61. doi: 10.1016/s0167-8760(03)00124-7. [DOI] [PubMed] [Google Scholar]

- Farnè A, Làdavas E. Dynamic size-change of hand peripersonal space following tool use. NeuroReport. 2000;11:1645–1649. doi: 10.1097/00001756-200006050-00010. [DOI] [PubMed] [Google Scholar]

- Farnè A, Làdavas E. Auditory peripersonal space in humans. Journal of Cognitive Neuroscience. 2002;14:1030–1043. doi: 10.1162/089892902320474481. [DOI] [PubMed] [Google Scholar]

- Farnè A, Pavani F, Meneghello F, Làdavas E. Left tactile extinction following visual stimulation of a rubber hand. Brain. 2000;123:2350–2360. doi: 10.1093/brain/123.11.2350. [DOI] [PubMed] [Google Scholar]

- Fogassi L, Gallese V, Fadiga L, Luppino G, Matelli M, Rizzolatti G. Coding of peripersonal space in inferior premotor cortex (area F4) Journal of Neurophysiology. 1996;76:141–157. doi: 10.1152/jn.1996.76.1.141. [DOI] [PubMed] [Google Scholar]

- Graziano MSA. Where is my arm? The relative role of vision and proprioception in the neuronal representation of limb position. Proceedings of the National Academy of Sciences, USA. 1999;96:10418–10421. doi: 10.1073/pnas.96.18.10418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Graziano MSA, Cooke DF, Taylor CSR. Coding the location of the arm by sight. Science. 2000;290:1782–1786. doi: 10.1126/science.290.5497.1782. [DOI] [PubMed] [Google Scholar]

- Graziano MSA, Gross CG. A bimodal map of space: Somatosensory receptive fields in the macaque putamen with corresponding visual receptive fields. Experimental Brain Research. 1993;97:96–109. doi: 10.1007/BF00228820. [DOI] [PubMed] [Google Scholar]

- Graziano, M. S. A., & Gross, C. G. (1995). The representation of extrapersonal space: A possible role for bimodal, visual-tactile neurons. In Gazzaniga, M. S. (Ed.), The cognitive neurosciences (pp. 1021–1034). Cambridge, MA: MIT Press.

- Graziano MSA, Hu XT, Gross CG. Coding the locations of objects in the dark. Science. 1997a;277:239–241. doi: 10.1126/science.277.5323.239.

- Graziano MSA, Hu XT, Gross CG. Visuospatial properties of ventral premotor cortex. Journal of Neurophysiology. 1997b;77:2268–2292. doi: 10.1152/jn.1997.77.5.2268.

- Graziano MSA, Reiss LA, Gross CG. A neuronal representation of the location of nearby sounds. Nature. 1999;397:428–430. doi: 10.1038/17115.

- Graziano MSA, Taylor CSR, Moore T. Complex movements evoked by microstimulation of precentral cortex. Neuron. 2002;34:841–851. doi: 10.1016/s0896-6273(02)00698-0.

- Graziano MSA, Yap GS, Gross CG. Coding of visual space by premotor neurons. Science. 1994;266:1054–1057. doi: 10.1126/science.7973661.

- Haggard P, Taylor-Clarke M, Kennett S. Tactile perception, cortical representation and the bodily self. Current Biology. 2003;13:170–173. doi: 10.1016/s0960-9822(03)00115-5.

- Halligan PW, Hunt M, Marshall JC, Wade DT. When seeing is feeling: Acquired synaesthesia or phantom touch? Neurocase. 1996;2:21–29.

- Halligan PW, Marshall JC. Left neglect for near but not far space in man. Nature. 1991;350:498–500. doi: 10.1038/350498a0.

- Head H, Holmes G. Sensory disturbances from cerebral lesions. Brain. 1911–1912;34:102–254.

- Held, R., & Durlach, N. (1993). Telepresence, time delay and adaptation. In S. R. Ellis, M. K. Kaiser, & A. C. Grunwald (Eds.), Pictorial communication in virtual and real environments (pp. 232–246). London: Taylor & Francis.

- Holmes, N. P., Calvert, G. A., & Spence, C. (2003). Does tool-use extend peripersonal space? Evidence from the crossmodal congruency task. Perception & Psychophysics, submitted.

- Hoover, R. E. (1950). The cane as a travel aid. In P. A. Zahl (Ed.), Blindness (pp. 353–365). Princeton: Princeton University Press.

- Iriki A, Tanaka M, Iwamura Y. Coding of modified body schema during tool use by macaque postcentral neurones. NeuroReport. 1996a;7:2325–2330. doi: 10.1097/00001756-199610020-00010.

- Iriki A, Tanaka M, Iwamura Y. Attention-induced neuronal activity in the monkey somatosensory cortex revealed by pupillometrics. Neuroscience Research. 1996b;25:173–181. doi: 10.1016/0168-0102(96)01043-7.

- Iriki A, Tanaka M, Obayashi S, Iwamura Y. Self-images in the video monitor coded by monkey intraparietal neurons. Neuroscience Research. 2001;40:163–175. doi: 10.1016/s0168-0102(01)00225-5.

- Kalaska JF, Cohen DAD, Prud'homme M, Hyde ML. Parietal area 5 neuronal activity encodes movement kinematics, not movement dynamics. Experimental Brain Research. 1990;80:351–364. doi: 10.1007/BF00228162.

- King AJ. Sensory experience and the formation of a computational map of auditory space in the brain. BioEssays. 1999;21:900–911. doi: 10.1002/(SICI)1521-1878(199911)21:11<900::AID-BIES2>3.0.CO;2-6.

- Làdavas E. Functional and dynamic properties of visual peripersonal space. Trends in Cognitive Sciences. 2002;6:17–22. doi: 10.1016/s1364-6613(00)01814-3.

- Làdavas E, di Pellegrino G, Farnè A, Zeloni G. Neuropsychological evidence of an integrated visuotactile representation of peripersonal space in humans. Journal of Cognitive Neuroscience. 1998;10:581–589. doi: 10.1162/089892998562988.

- Làdavas E, Farnè A, Zeloni G, di Pellegrino G. Seeing or not seeing where your hands are. Experimental Brain Research. 2000;131:458–467. doi: 10.1007/s002219900264.

- Làdavas E, Pavani F, Farnè A. Auditory peripersonal space in humans: A case of auditory-tactile extinction. Neurocase. 2001;7:97–103. doi: 10.1093/neucas/7.2.97.

- Làdavas E, Zeloni G, Farnè A. Visual peripersonal space centred on the face in humans. Brain. 1998;121:2317–2326. doi: 10.1093/brain/121.12.2317.

- MacKay WA, Crammond DJ. Neuronal correlates in posterior parietal lobe of the expectation of events. Behavioural Brain Research. 1987;24:167–179. doi: 10.1016/0166-4328(87)90055-6.

- Maravita A, Husain M, Clarke K, Driver J. Reaching with a tool extends visual-tactile interactions into far space: Evidence from cross-modal extinction. Neuropsychologia. 2001;39:580–585. doi: 10.1016/s0028-3932(00)00150-0.

- Maravita A, Spence C, Clarke K, Husain M, Driver J. Vision and touch through the looking glass in a case of crossmodal extinction. NeuroReport. 2000;11:3521–3526. doi: 10.1097/00001756-200011090-00024.

- Maravita A, Spence C, Kennett S, Driver J. Tool-use changes multimodal spatial interactions between vision and touch in normal humans. Cognition. 2002a;83:25–34. doi: 10.1016/s0010-0277(02)00003-3.

- Maravita A, Spence C, Sergent C, Driver J. Seeing your own touched hands in a mirror modulates cross-modal interactions. Psychological Science. 2002b;13:350–355. doi: 10.1111/j.0956-7976.2002.00463.x.

- Mattingley JB, Driver J, Beschin N, Robertson IH. Attentional competition between modalities: Extinction between touch and vision after right hemisphere damage. Neuropsychologia. 1997;35:867–880. doi: 10.1016/s0028-3932(97)00008-0.

- Moll L, Kuypers HGJM. Premotor cortex ablations in monkeys: Contralateral changes in visually guided reaching behavior. Science. 1977;198:317–319. doi: 10.1126/science.410103.

- Mountcastle VB, Lynch JC, Georgopoulos AP, Sakata H, Acuna C. Posterior parietal association cortex of the monkey: Command functions for operation within extrapersonal space. Journal of Neurophysiology. 1975;38:871–908. doi: 10.1152/jn.1975.38.4.871.

- Nielsen TI. Volition: A new experimental approach. Scandinavian Journal of Psychology. 1963;4:225–230.

- Obayashi S, Tanaka M, Iriki A. Subjective image of invisible hand coded by monkey intraparietal neurons. NeuroReport. 2000;11:3499–3505. doi: 10.1097/00001756-200011090-00020.

- Pavani F, Spence C, Driver J. Visual capture of touch: Out-of-the-body experiences with rubber gloves. Psychological Science. 2000;11:353–359. doi: 10.1111/1467-9280.00270.

- Popper, K. R., & Eccles, J. C. (1977). The self and its brain. London: Springer-Verlag.

- Previc FH. The neuropsychology of 3-D space. Psychological Bulletin. 1998;124:123–164. doi: 10.1037/0033-2909.124.2.123.

- Ramachandran VS, Rogers-Ramachandran D. Synaesthesia in phantom limbs induced with mirrors. Proceedings of the Royal Society of London B. 1996;263:377–386. doi: 10.1098/rspb.1996.0058.

- Ramachandran VS, Rogers-Ramachandran D, Cobb S. Touching the phantom limb. Nature. 1995;377:489–490. doi: 10.1038/377489a0.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Cognitive Brain Research. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

- Rizzolatti G, Fadiga L, Fogassi L, Gallese V. The space around us. Science. 1997;277:190–191. doi: 10.1126/science.277.5323.190.

- Rizzolatti G, Luppino G, Matelli M. The organization of the cortical motor system: New concepts. Electroencephalography and Clinical Neurophysiology. 1998;106:283–296. doi: 10.1016/s0013-4694(98)00022-4.

- Rorden C, Heutink J, Greenfield E, Robertson IH. When a rubber hand 'feels' what the real hand cannot. NeuroReport. 1999;10:135–138. doi: 10.1097/00001756-199901180-00025.

- Sathian K, Greenspan AI, Wolf SL. Doing it with mirrors: A case study of a novel approach to neurorehabilitation. Neurorehabilitation and Neural Repair. 2000;14:73–76. doi: 10.1177/154596830001400109.

- Schilder, P. F. (1935). The image and appearance of the human body: Studies in the constructive energies of the psyche. Oxford: Kegan Paul.

- Spence, C., Pavani, F., & Driver, J. (2003). Spatial constraints on cross-modal selective attention between vision and touch. Cognitive, Affective, & Behavioral Neuroscience, submitted.

- Spence, C., Pavani, F., Maravita, A., & Holmes, N. P. (2003; in press). Multisensory contributions to the 3-D representation of visuotactile peripersonal space in humans: Evidence from the crossmodal congruency task. Journal of Physiology (Paris).