Abstract

Objective:

To test whether basic skills acquired on a virtual endoscopic surgery simulator are transferable from virtual reality to physical reality in a comparable training setting.

Summary Background Data:

For surgical training in laparoscopic surgery, new training methods have to be developed that allow surgeons to first practice in a simulated setting before operating on real patients. A virtual endoscopic surgery trainer (VEST) has been developed within the framework of a joint project. Because simulation techniques have inherent limitations, it is essential to know whether training with this simulator is comparable to conventional training.

Methods:

Devices used were the VEST system and a conventional video trainer (CVT). Two basic training tasks were constructed identically (a) as virtual tasks and (b) as mechanical models for the CVT. Test persons were divided into 2 groups each consisting of 12 novices and 4 experts. Each group carried out a defined training program over the course of 4 consecutive days on the VEST or the CVT, respectively. To test the transfer of skills, the groups switched devices on the 5th day. The main parameter was task completion time.

Results:

The novices in both groups showed similar learning curves. The mean task completion times decreased significantly over the 4 training days of the study. The task completion times for the control task on Day 5 were significantly lower than on Days 1 and 2. The experts’ task completion times were much lower than those of the novices.

Conclusions:

This study showed that training with a computer simulator, just as with the CVT, resulted in a reproducible training effect. The control task showed that skills learned in virtual reality are transferable to the physical reality of a CVT. The fact that the experts showed little improvement demonstrates that the simulation trains surgeons in basic laparoscopic skills learned in years of practice.

Virtual reality simulators offer new possibilities for training in minimally invasive surgery. It is important to know whether the training mechanisms in conventional and virtual reality training are comparable in principle. This study demonstrates a method to compare conventional and simulator training and finds that basic skills acquired on a simulator can be transferred to physical reality.

Indications for laparoscopic interventions are constantly expanding, and currently the standard for an increasing number of operations is minimally invasive surgery (MIS). This means that surgeons in training have to learn laparoscopic techniques, having never performed the procedure in the conventional way. MIS demands psychomotor skills that are not required in conventional surgery, such as hand–eye coordination within a 3-dimensional scene seen on a 2-dimensional monitor. Moreover, traditional surgical training in which a learning surgeon performs parts of an operation guided by an experienced surgeon is hardly applicable in MIS.

The introduction of MIS was initially associated with a high complication rate.1,2 The term “learning curve” was introduced to surgery to refer to the number of operations a surgeon has to perform to reach an experience level with a low complication rate.3 Depending on the type of operation, 15 to 100 procedures are required to reach the plateau of this learning curve.4–6 Further studies showed that even experienced laparoscopic surgeons had to go through a learning curve again when they learned new laparoscopic techniques or used new instruments.7 This led to the development of special training programs, which are associated with certain problems. More realistic training usually involves training on animals, which is elaborate, expensive, and not available to many surgeons. Moreover, this training does not include pathologic situations and anatomic variations, and thus, does not allow specific training for difficult situations.

Basic training is carried out on conventional video training devices (CVT) using mechanical models. This training is inexpensive and readily available but not realistic. To overcome these problems, virtual reality (VR) trainers were developed that offer several advantages: permanent availability, training of specific skills, more or less realistic surgical scenarios, assessment of trainees, etc. Recent improvements in computer technology made the construction of advanced simulators possible, and a number of companies are now offering VR-trainers.

The increasing economic orientation of medicine in conjunction with shorter training schedules underscores the importance of specific training programs. This is especially true for MIS because of its special requirements. Despite the importance of training for MIS, there is little scientific knowledge about the learning mechanisms involved in acquiring laparoscopic skills and the methods suitable for training.8 Conventional laparoscopic training aims to overcome these problems by providing realistic training conditions using laparoscopic instruments in mechanical models. Basic and advanced tasks are chosen based on empirical considerations. As soon as the trainee has reached a certain level of expertise, the training is continued in animal models, which are assumed to provide the most realistic training conditions.

VR-trainers are likely to be an integral part of MIS training in the near future. It is therefore essential to know whether a simulator can provide a training environment that is suitable to improve surgical skills. Several studies have recently been conducted that document learning curves and training improvement with simulators.9–14 However, the important question remains whether skills acquired during simulator training can be transferred to a real situation. There are few studies on this topic, and they do not provide uniform results.15–18

Virtual laparoscopy simulators aim to resemble real instrument handling and object interactions. The degree of physical realism of the simulation varies depending on the hardware and software capabilities of a simulator. Because of limited computing power, a simulator can only represent a part of physical reality. This means that certain limitations have to be accepted for any simulation, eg, simplified surfaces of objects or organs, simplified or no haptic feedback, limited visual details, etc. The decision as to which part of reality should be simulated is based on assumptions about how laparoscopic skills are acquired and which parts of physical reality are important for successful training. Currently, there is little scientific evidence to prove that these assumptions are correct. The study of Seymour et al impressively showed that the MIST VR simulator could improve operating room performance.19 Although this study examined the training success of a VR-simulator in general, it has not yet been thoroughly validated whether a specific simulator design and specific tasks represent the reality that is needed to train laparoscopic skills. The rapid technical development and increasing availability of simulators necessitates methods to validate whether a VR-trainer provides a suitable environment for MIS training and whether the acquired skills can be transferred to real situations. The aim of this study was to compare a VR-trainer with a physically realistic environment. For the purpose of this study, a CVT served as the counterpart. The study design included specific training tasks that were identically constructed for the VR-trainer and the CVT. In doing so, we were able to directly compare the computer trainer, which is based on virtual reality, with the conventional trainer, which is based on physical reality. The chosen tasks were part of the basic skills training program and included instrument and camera manipulation.

We postulated 3 hypotheses for the current study: 1) Training results in conventional and VR-training are comparable. 2) Skills acquired on the VR-simulator are transferable to the physically realistic environment of a conventional video trainer. 3) Laparoscopically experienced surgeons perform better than novices in conventional and VR-simulator training.

METHODS

Apparatus

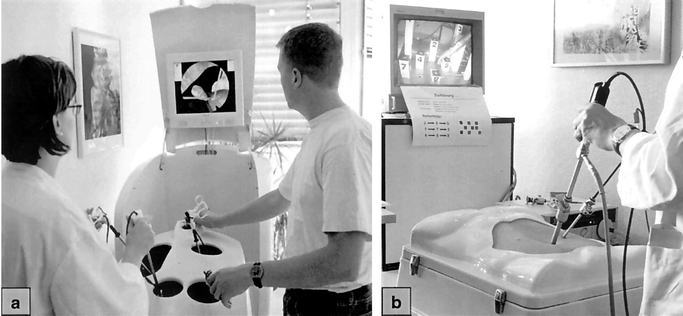

The VR-trainer we used in this study was the Virtual Endoscopic Surgery Trainer (VEST). This simulator was developed within the framework of a joint project between several departments at different German universities.20,21 The VEST hardware is equipped with 3 adjustable laparoscopic instruments, a camera, and a monitor. The software is based on the KISMET kernel and provides real-time elastodynamic simulation.22 Deformable structures such as organs and connective tissue are simulated realistically and allow real-time manipulation. The VEST software is constructed modularly so that additional scenarios can be designed with an editor and can be integrated into the system. The main purpose of the VEST system is to simulate whole laparoscopic operations, including manipulations on organs such as grasping, cutting, coagulating, etc. For the aim of this study, the advanced features of the simulation software were not used. Instead, basic laparoscopic tasks were chosen that could be simulated on the VEST system and could also be constructed as mechanical models for the CVT to ensure identical training conditions. VEST is based on a graphic workstation, and the hardware is integrated in a mobile housing. A flatscreen monitor and the Trainer Input Box are integrated in the housing and can be adjusted to variable heights (Fig. 1a). The Trainer Input Box represents the operation field and contains 3 laparoscopic instruments and a laparoscopic camera. All instruments can be moved to reflect the desired operation technique. The instruments are equipped with 5 degrees of freedom and an advanced force feedback system to provide haptic realism. The camera is rotatable, has a zoom mechanism, and can be used with different viewing angles (eg, 0°/30°). Instrument grips and camera housing are constructed using common commercial laparoscopic components. 
The conventional training was conducted using a CVT equipped with 2 laparoscopic graspers, a 30° angle laparoscopic video camera, and a monitor (Storz, Tuttlingen, Germany, Fig. 1b). The positioning of test persons, instruments, and monitor was identical in both devices, imitating the situation in the operating room.

FIGURE 1. (a) The Virtual Endoscopic Surgery Trainer is equipped with 3 force-feedback laparoscopic instruments, camera, and monitor. (b) Conventional Video Trainer with 30° angle camera, instruments, and monitor.

Two basic training tasks were chosen. For camera training, the participant had to guide an endoscopic camera within a 3-dimensional scene. Handling an endoscopic camera is essential for assistance in MIS, but is difficult to master without a training program. For instrument training, the participant had to interact with the scene, thus training the calculation of 3-dimensional movements shown in an enlarged and angled 2-dimensional endoscopic picture. The models used in the 2 training tasks were constructed in an identical manner: as mechanical models for the CVT and as simulated computer models for the VEST system. The computer models were constructed based on the exact dimensions, arrangement, and function of the physical models, thus building a virtual copy of the physical model.

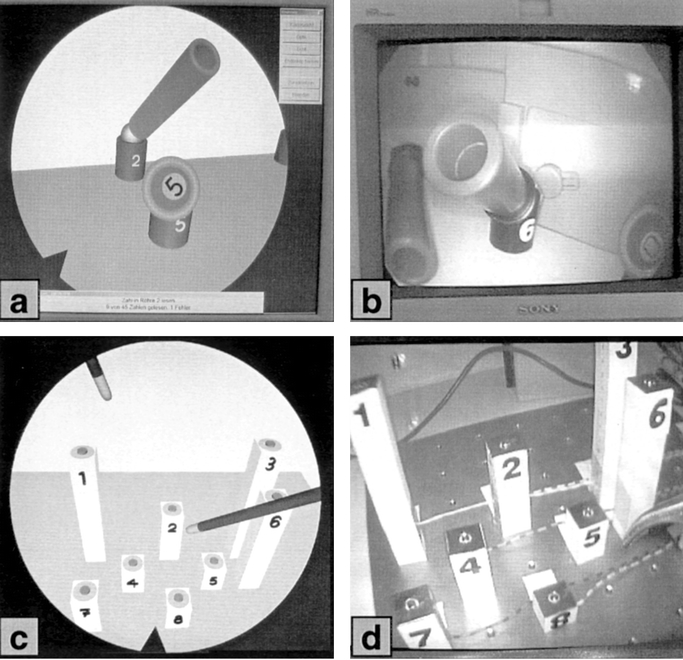

The model used for camera training consisted of 9 pipes of different lengths mounted on a base plate (Fig. 2 a, b). Each pipe was aimed at a different angle and was marked with a number at the bottom of the inside. In this study, a 30° angle camera was used. Rotating the angled camera points the view in different directions. The task was to move the camera and rotate the angled view so that the number at the bottom of the pipe could be seen on the monitor. Only one combination of camera position and angled view allowed the participant to view the number.

FIGURE 2. Camera tasks (a) Virtual Endoscopic Surgery Trainer (VEST) and (b) Conventional Video Trainer (CVT) and instrument training tasks (c) VEST and (d) CVT are identically constructed.

The instrument training model consisted of 9 cuboids of various lengths mounted on a base plate (Fig. 2 c, d). At the top of each cuboid was a designated point (3-mm diameter) for detecting successful instrument contact. A ring surrounding the point served as an indicator for failed contact, thus forcing the test person to be precise. In the instrument task, the test person had to direct the tip of a laparoscopic grasper to a designated point on a cuboid; successful contact was signaled by an audible tone. Touching the ring surrounding the designated point triggered an error sound, and the test person had to repeat the previous step. Participants were prompted to use their dominant hand for this task.

Both tasks were designed to train and assess a test person's visuospatial, perceptual, and psychomotor skills.23 The purpose of the camera task was to test a person's ability to navigate in 3-dimensional space (visuospatial skills) and to visualize the proper field of view and angle of view (perceptual skills) to accomplish the required camera movements (psychomotor skills). The emphasis of the instrument task was to assess a subject's ability in 3-dimensional navigation (visuospatial skills) and targeting (psychomotor skills).

Test Persons

Thirty-two test persons participated in the study. Of these participants, 24 were medical students without prior experience in laparoscopic surgery (hereafter referred to as “novices”). The remaining 8 participants were experienced laparoscopic surgeons employed at a university hospital (hereafter referred to as “experts”). Each test person had to fill out a questionnaire. In addition to demographic data, the questionnaire covered handedness and experience with laparoscopic surgery, computers, video games, and simulators.

Study Design

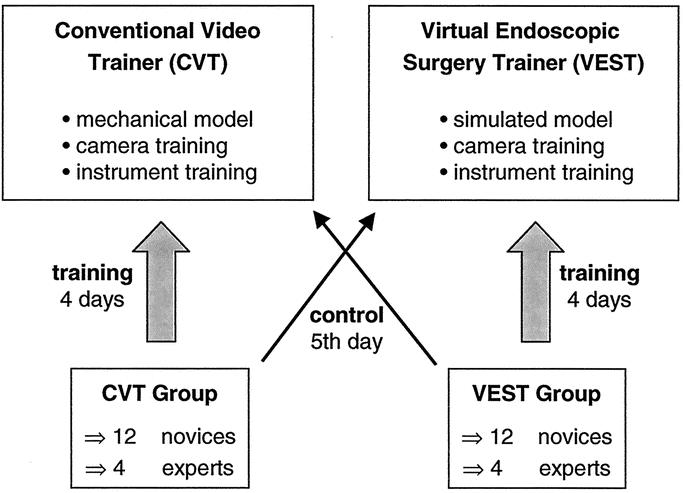

The novices and experts were randomly assigned to perform the study either on the VEST (VEST group) or on the CVT (CVT group), forming 2 groups of equal size. Each group consisted of 12 novices and 4 experts. The groups performed a defined training program for 4 days on the VEST (VEST group) or the CVT (CVT group), respectively. The training program consisted of 2 basic laparoscopic tasks (camera task and instrument task) that were identically constructed for the VEST and the CVT.
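The allocation described above can be illustrated with a short sketch. It assumes a stratified random split (novices and experts shuffled separately, then halved); the text states only that assignment was random and that each group contained 12 novices and 4 experts, so the exact procedure, the function name `assign_groups`, and the participant labels are hypothetical.

```python
import random


def assign_groups(novices, experts, seed=None):
    """Split each stratum (novices, experts) evenly and at random
    between the VEST group and the CVT group.

    Assumption: stratified randomization; the source does not
    describe the exact allocation procedure.
    """
    rng = random.Random(seed)
    groups = {"VEST": [], "CVT": []}
    for stratum in (novices, experts):
        shuffled = list(stratum)
        rng.shuffle(shuffled)
        half = len(shuffled) // 2
        groups["VEST"].extend(shuffled[:half])
        groups["CVT"].extend(shuffled[half:])
    return groups


# Hypothetical participant labels: 24 novices and 8 experts, as in the study.
novices = [f"novice_{i:02d}" for i in range(1, 25)]
experts = [f"expert_{i:02d}" for i in range(1, 9)]
groups = assign_groups(novices, experts, seed=0)

for name, members in groups.items():
    n_novices = sum(m.startswith("novice") for m in members)
    n_experts = sum(m.startswith("expert") for m in members)
    print(name, n_novices, "novices,", n_experts, "experts")
```

Shuffling each stratum separately guarantees the 12 + 4 composition per group regardless of the random seed.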

The data from this part of the study were used to examine learning curves and to obtain basic data on learning speed on the respective devices. This part of the study was also used to get the participants through the steep phase of the learning curve and build the basis for the second part of the study (see below), because a pilot study showed that about 3 to 4 days were needed to get through the steep phase of the learning curve. Further studies showed that inexperienced trainees need 7 to 8 days on the VEST system to reach the plateau phase of the learning curve (unpublished data). However, for the purpose of the current study, it was important that the inexperienced participants went through the initial phase of fast learning.

The measurements taken in the second part of the study were used as reference data to examine the transfer of skills from 1 device to the other. The groups switched devices on the 5th day of training without having trained on the other device before. In doing so, we could observe the performance of the computer-trained group (VEST) on the conventional trainer (CVT) and vice versa (Fig. 3).

FIGURE 3. Each group (12 novices and 4 experts) trained for 4 days on the Conventional Video Trainer or the Virtual Endoscopic Surgery Trainer. The groups switched devices on Day 5 to test the transfer of skills.

Each task was carried out in the following manner: the test person had to complete 9 task steps (ie, viewing a pipe or touching a cuboid) in a fixed order. This cycle was repeated 5 times daily (45 task steps/d). The cycle times were recorded in seconds. For the instrument task, the number of errors was also recorded. Prior to the first day of the study, each test person obtained a schematic overview of the given tasks and oral instructions by the supervisor. The supervisor gave oral instructions for the task step order to be followed (ie, “pipe 3, pipe 6” or “cuboid 8, cuboid 2”). In addition, the order for the task steps was mounted next to the training device. There was 1 supervisor for each group, attending the test persons throughout the 5 days.
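As an illustration of this protocol, the following sketch shows one possible way to log a participant's daily session. The class `DailySession`, its field names, and the example values are hypothetical and are not taken from the study software; only the counts (9 task steps per cycle, 5 cycles per day) and the recorded quantities (cycle time in seconds, errors for the instrument task) come from the description above.

```python
from dataclasses import dataclass, field

STEPS_PER_CYCLE = 9   # pipes or cuboids visited in a fixed order
CYCLES_PER_DAY = 5    # cycles repeated on each training day


@dataclass
class DailySession:
    """Hypothetical record of one participant, one task, one day."""
    participant: str
    device: str   # "VEST" or "CVT"
    task: str     # "camera" or "instrument"
    day: int
    cycle_times_s: list = field(default_factory=list)
    cycle_errors: list = field(default_factory=list)

    def record_cycle(self, time_s, errors=0):
        """Store the time (seconds) and error count of one full cycle."""
        if len(self.cycle_times_s) >= CYCLES_PER_DAY:
            raise ValueError("all cycles for this day are already recorded")
        self.cycle_times_s.append(float(time_s))
        self.cycle_errors.append(int(errors))

    @property
    def steps_completed(self):
        return len(self.cycle_times_s) * STEPS_PER_CYCLE

    def mean_cycle_time(self):
        return sum(self.cycle_times_s) / len(self.cycle_times_s)


# Made-up example values for illustration only.
session = DailySession("novice_01", "VEST", "instrument", day=1)
for time_s, errors in [(130, 4), (124, 3), (119, 3), (115, 2), (112, 2)]:
    session.record_cycle(time_s, errors)

print(session.steps_completed)      # 45 task steps per day
print(session.mean_cycle_time())
```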

Statistics

Data are expressed as mean ± standard error of mean. Statistical comparisons were performed using the independent samples t test. Statistical significance was taken at the P < 0.05 level.
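For readers who want to reproduce this kind of analysis, a minimal sketch follows. It assumes the classic Student form of the independent samples t test with pooled variance; the text does not state whether equal variances were assumed, and the sample values below are invented for illustration. The critical value 2.074 is the two-sided 5% point of the t distribution with 22 degrees of freedom, which corresponds to two groups of 12 novices.

```python
import math
from statistics import mean, stdev


def sem(values):
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return stdev(values) / math.sqrt(len(values))


def independent_t(a, b):
    """Student's independent samples t statistic with pooled variance.

    Returns (t, degrees_of_freedom). Assumption: equal variances.
    """
    na, nb = len(a), len(b)
    var_a, var_b = stdev(a) ** 2, stdev(b) ** 2
    pooled = ((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2)
    se_diff = math.sqrt(pooled * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / se_diff, na + nb - 2


# Two-sided critical value for P < 0.05 at df = 22 (12 + 12 - 2).
T_CRIT_DF22 = 2.074

# Invented task completion times in seconds, for illustration only.
group_a = [118, 125, 110, 131, 122, 115, 128, 119, 124, 112, 127, 120]
group_b = [70, 76, 65, 81, 73, 68, 79, 71, 75, 66, 78, 72]

t_stat, df = independent_t(group_a, group_b)
print(f"group A: {mean(group_a):.1f} ± {sem(group_a):.1f} s (mean ± SEM)")
print(f"group B: {mean(group_b):.1f} ± {sem(group_b):.1f} s (mean ± SEM)")
print(f"t = {t_stat:.2f}, df = {df}, significant: {abs(t_stat) > T_CRIT_DF22}")
```

An exact P value would require the cumulative t distribution, which is available in common statistics packages; the sketch only compares the statistic with the tabulated critical value.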

RESULTS

Questionnaire

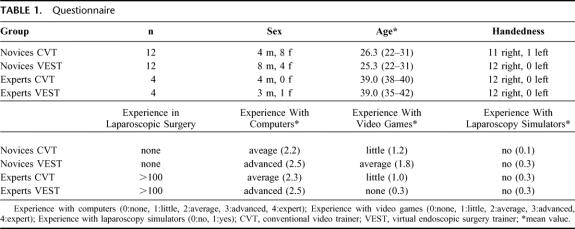

Demographic data are summarized in Table 1. There was no significant difference in age within the novice and expert groups, whereas sexes were distributed unequally in the novice groups. Subgroup analysis of the training performance showed no difference between sexes (data not shown). Only 1 test person was left-handed. No novice had prior laparoscopic experience; each expert had performed more than 100 laparoscopic operations. The mean experience in using computers did not differ among the groups nor did the experience in playing video games.

TABLE 1. Questionnaire

Training

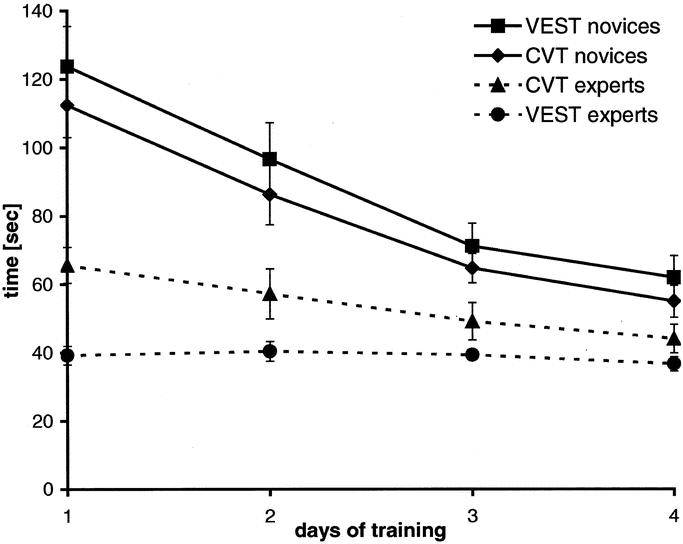

The novices in the VEST group and CVT group showed similar learning curves for the instrument task (Fig. 4). Starting near 120 seconds for completing the instrument task on the first day, times decreased to about 60 seconds on Day 4 showing an improvement of 51% for CVT novices and 50% for VEST novices. The novices’ learning curves ran parallel, and the CVT group was slightly faster than the VEST group. Experts were notably faster than novices were on the first day. Their training times improved only slightly (33% for CVT experts, 7% for VEST experts), and the experts were still faster than the novices on the last day of training.
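The improvement percentages quoted here are consistent with a relative reduction in completion time between the first and last training day. The sketch below assumes that definition (the text does not give the formula explicitly) and uses the rounded times of about 120 and 60 seconds mentioned above; the function name is hypothetical.

```python
def percent_improvement(first_day_s, last_day_s):
    """Relative reduction in task completion time, in percent.

    Assumption: improvement = (first - last) / first * 100.
    """
    if first_day_s <= 0:
        raise ValueError("first-day time must be positive")
    return (first_day_s - last_day_s) / first_day_s * 100.0


# Rounded values from the text: about 120 s on Day 1, about 60 s on Day 4.
print(percent_improvement(120.0, 60.0))  # 50.0
```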

FIGURE 4. Learning curves of novices and experts for the instrument training over 4 consecutive days (mean ± SEM).

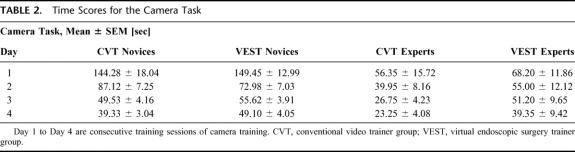

Camera training data are summarized in Table 2. The learning curves for this task were steeper, showing a strong improvement for novices (73% for CVT novices, 67% for VEST novices). Expert times improved more than in the instrument task (59% for CVT experts, 42% for VEST experts).

TABLE 2. Time Scores for the Camera Task

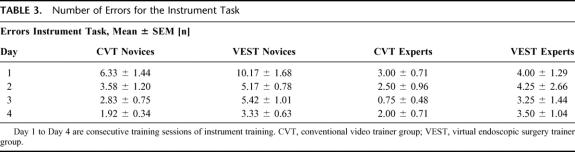

Both novice groups showed a decrease in errors for the instrument task (Table 3). Error rates of the novice and expert groups were higher on the VEST system than on the CVT. Similar to the trend in training times, experts made fewer errors and showed less improvement than novices.

TABLE 3. Number of Errors for the Instrument Task

Transfer of Skills

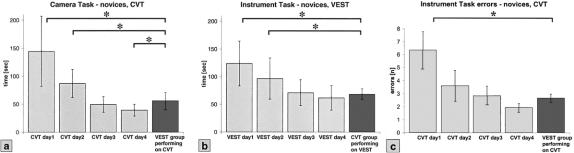

After 4 days of training, the groups switched devices for the first time. VEST novices performed the training tasks on the CVT and CVT novices performed the tasks on the VEST system. Figure 5a shows the data for the camera task: CVT novices during training Day 1 to 4 compared with VEST novices performing on the CVT the first time. The VEST novices were significantly faster on the CVT than the CVT novices on Day 1 and Day 2 (P < 0.05), achieving a training time like that of the CVT group on Day 3.

FIGURE 5. Transfer of skills for novices. (a) Training times of the CVT group on 4 consecutive days compared with the VEST group that is training for the first time on the CVT. (b) Training times of the VEST group compared with the CVT group that is training on the VEST (mean ± SD). (c) Errors of the CVT group compared with the VEST group that is training on the CVT (mean ± SD). CVT, Conventional Video Trainer; VEST, Virtual Endoscopic Surgery Trainer. *P < 0.05.

Similar data could be observed for the CVT group performing on the VEST. Figure 5b shows the instrument training times for the CVT novices performing on the VEST the first time.

Both groups (VEST, CVT) made significantly fewer errors in the instrument task when switching devices than the initial group using that trainer on Day 1 (P < 0.05). Figure 5c shows the errors for CVT novices during training days 1 to 4 compared with VEST novices performing on the CVT the first day. The expert groups showed no significant differences in performance for both training tasks when switching devices (data not shown).

DISCUSSION

In this study, we focused on the question of whether basic surgical skills training in virtual reality provides a realistic environment for MIS training. Virtual laparoscopy simulators aim to resemble instrument and object interactions in a more or less visually realistic scenario. It is not known whether the virtual reality that a simulator offers effectively represents a realistic training environment. The design of the current study included a direct comparison of the training results of a VR-trainer and a CVT for basic training tasks, such as camera and instrument navigation. A CVT offers a physically realistic training environment that is based on real instruments interacting with real objects. In comparing the 2 devices, we were able to examine the training results of a virtual simulation with its counterpart in reality.

This study focused on basic skills that build the foundation for advanced simulations. We used training tasks that were identically constructed for a VR-trainer and a conventional laparoscopy trainer to directly compare the different training methods. The study demonstrates that training results are comparable for basic laparoscopic tasks in the VEST and the CVT. Skills acquired on the VR-trainer could be transferred to the CVT and vice versa. The learning curves on the VR-trainer were nearly identical to the ones observed on the standard training device.

The simulation of surgical operations is very complex. Workstations with enough computing power to perform real-time simulations have only been available for the past few years. This has led to the development of different kinds of surgical VR-simulators. The spectrum ranges from low-cost systems with abstract exercises to high-end systems with force-feedback simulation performing whole operation scenarios. Several studies have been carried out on different surgical simulators to examine training behavior.12,13,15–17,24–27 The main problem remains whether the skills acquired in VR-training can be transferred to reality. In a recent study, this problem was approached in an elegant way.19 It was shown that training with the MIST VR-system improved operating room performance. However, the principal problem still remains whether a given virtual task matches its counterpart in reality.

The transferability of skills from a VR-simulator to physical reality is essential for its usability. VR-trainers try to resemble reality by simulating a MIS setting. Instruments, training objects, and interactions are based on mathematical models. An ideal simulator has to be realistic in multiple dimensions such as simulation physics, optical properties, and haptic feedback. Because of technical limitations, none of the simulators available currently can offer full realism. Objects consist of simplified surfaces, instruments can interact with objects only in certain predefined ways, calculated forces are dependent on simplified mathematical algorithms. In conclusion, VR-trainers offer only a part of reality. The designer of each device ultimately decides which part of reality will be simulated. Moreover, the method of training is arbitrarily selected. Currently, there is little scientific evidence about how a surgeon learns laparoscopic skills. Far less is known about the effects of VR-trainers, which, because of technical limitations, do not fully correspond with reality. Modern simulators train more than just basic tasks, and some allow the training of entire operations. Those who have tested such devices can verify that certain parts of the simulation are very realistic, whereas other parts allow operation techniques that are impossible or even dangerous in real surgery. An experienced surgeon notices these differences. A surgeon in training may have no basis of comparison to a real situation and therefore, might possibly learn incorrect techniques and try to apply them later on. Because of the special requirements and the increasing use of MIS, dedicated training programs will be essential in the future. The VR-trainer may possibly play a significant role in this context. The validation of VR-simulators is of great importance because of the aforementioned problems. 
The suitability of simulators for surgery must be tested to ensure the quality of surgical training and the safety of the patient.

The main focus of the current study was to examine whether fundamental skill acquisition on a VR-simulator is comparable in principle to that on the CVT. The CVT is the standard device for basic laparoscopic training. Comparing the 2 devices allowed examination of basic training principles. To achieve homogeneous training groups, we used medical students with no prior surgical experience and a reference group of experienced surgeons. We used basic tasks that could be constructed identically as computer models and mechanical models. This made the direct comparison of training results between the 2 devices possible. In a pilot study conducted during a surgery training course, we established optimal training settings, eg, number of task steps, alignment of pipes, cuboids, and instruments. The cuboids we initially used had a point for successful contact, but no indicator for failed contact. During the pilot study, we could observe that medical students soon became faster than experts in the instrument training. The reason for this was that students learned to direct the instrument tip in very fast but imprecise movements around the top of the cuboid until successful contact. Experienced surgeons directed the instrument tip in an exact but slower movement. Adding an indicator ring for failed contact around the designated contact point forced the students to be precise. After this modification, the experts’ training times were significantly faster. This scenario was closer to a real situation, eg, precise coagulation of a bleeding vessel. This emphasizes the importance of careful simulation design. The registration of errors allowed us to obtain additional information about a participant's precision. As mentioned in the previous example, using training time as a sole measurement might not always be the best solution. Simulators can record the economy of motion, defined as the trainees’ path to perform a task compared with the calculated optimal path. 
We did not use this feature in this study, because a measurement of economy is only possible in a CVT with advanced technical equipment.28 We compensated for this problem by modifying tasks as mentioned above. With tasks optimized for precision, the training times did not only reflect the manual speed but also the accuracy of the test person.
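Although economy of motion was not measured in this study, the definition given above can be made concrete with a small sketch. It assumes that the instrument tip is sampled as a sequence of 3-dimensional points and that the metric is the ratio of the optimal path length to the actual path length; the ratio form, the function names, and the sample coordinates are illustrative assumptions and are not taken from the VEST software.

```python
import math


def path_length(points):
    """Total length of a polyline given as a sequence of (x, y, z) points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def economy_of_motion(actual_path, optimal_path):
    """Ratio of optimal to actual path length.

    1.0 means the trainee followed the optimal path exactly;
    smaller values indicate more superfluous movement.
    Assumption: this ratio form is one possible definition.
    """
    actual = path_length(actual_path)
    if actual == 0:
        raise ValueError("actual path has zero length")
    return path_length(optimal_path) / actual


# Hypothetical tip positions in millimetres.
optimal = [(0, 0, 0), (3, 4, 0)]             # straight line, length 5
actual = [(0, 0, 0), (3, 0, 0), (3, 4, 0)]   # detour, length 7

print(round(economy_of_motion(actual, optimal), 3))
```

Recording such a path on a CVT would require instrument tracking hardware, which is the limitation noted above.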

The training times we measured were very similar for novices in the CVT and the VEST. We could observe typical learning curves for both tasks. The learning curves of both groups in each task ran nearly parallel. This indicates that the identically constructed virtual and conventional tasks had very similar levels of difficulty. Although we only measured training times and errors, this might indicate that learning behavior is similar for the VR-trainer and the CVT. Experts were notably faster than novices at the beginning of the study and improved only slightly during the 4 training days, indicating that they are already optimally trained for the given tasks. It can be assumed that the experts could apply the technical skills they gained through years of surgical practice. The skills that were tested in this study were very basic, but they build the basis for advanced manual techniques in laparoscopy. In this sense, the virtual training scheme seems to be suitable for acquiring laparoscopic skills.

Experts were still faster than novices were on the last day of training. This indicates that the novices did not fully reach the plateau phase of the learning curve. Further studies showed that novices need 7 to 8 days for the chosen training tasks on the VEST system to reach a plateau phase and to achieve training times that equal those of the expert group (unpublished data). However, the novice groups got through the steep part of the learning curve during the 4 training days, which allowed us to compare the transfer of skills on the 5th day with little interference due to an ongoing learning effect.

VEST novices were slightly slower and made more errors than novices on the CVT. This might be related to a somewhat higher difficulty level of the virtual training system. Even with identically designed training tasks, the 3-dimensional orientation is more difficult in the virtual simulator. Shadows and irregularities such as uneven coloring of surfaces or objects are important for spatial orientation in a 2-dimensional monitor picture. For performance reasons, the VEST system does not provide these details. The higher difficulty in spatial orientation on the VEST might explain why novices and experts made more errors but were only slightly slower.

An important question of this study was whether the trainees could transfer their acquired knowledge from the VR-trainer to a reality-based training setting. The results showed that the VR-trained group was as fast and precise on the CVT as the CVT group on the third day of training. The VR-group had not trained on the CVT before but achieved a score similar to that of the CVT group. The same also held true for the CVT group performing on the VEST. This demonstrates that skills can in principle be transferred from one device to the other. It could also be observed that after switching devices, the test persons were slower than the group that originally trained on that device had been on its last training day. This is likely to be due to adaptation to the new device, because an improvement during this training cycle could be seen.

In the current study, we were able to show that training in basic laparoscopic tasks with the VEST system is comparable to training with a CVT. Devices with similar mathematical simulation models probably provide similar results. However, this must be verified for each simulator. The setting used in this study can easily be used to test different tasks on different simulators. In the future, the validation of complex scenarios will be essential to avoid teaching incorrect surgical techniques.

CONCLUSIONS

The following can be concluded in reference to the hypotheses of our study: 1) The training results in conventional and VR-training were comparable. The study showed nearly identical learning curves and similar training times for the novice groups. 2) The skills acquired on the VEST system could be transferred to the conventional video trainer and vice versa. This shows that the basic skills that were gained in virtual reality could be transferred to physical reality. 3) Experienced surgeons performed better than novices in both the conventional and the virtual training. This indicates that the basic skills that were trained in this study are important for performing laparoscopic surgery.

Footnotes

Supported by a grant from the German Federal Ministry of Education and Research (BMBF), ref.-no.: HapticIO FKZ 01IRA03C.

Reprints: Dr. Kai S. Lehmann, Department of General Surgery–Chirurgische Klinik I, Charité-Campus Benjamin Franklin, Freie- und Humboldt-Universität zu Berlin, Hindenburgdamm 30, 12200 Berlin, Germany. E-mail: kai.lehmann@charite.de.

REFERENCES

1. Rogers DA, Elstein AS, Bordage G. Improving continuing medical education for surgical techniques: applying the lessons learned in the first decade of minimal access surgery. Ann Surg. 2001;233:159–166.

2. Richardson MC, Bell G, Fullarton GM. Incidence and nature of bile duct injuries following laparoscopic cholecystectomy: an audit of 5913 cases. West of Scotland Laparoscopic Cholecystectomy Audit Group. Br J Surg. 1996;83:1356–1360.

3. Dent TL. The impact of laparoscopic surgery on health care delivery. The learning curve: skills and privileges. J Laparoendosc Surg. 1993;3:247–249.

4. Hawasli A, Lloyd LR. Laparoscopic cholecystectomy. The learning curve: report of 50 patients. Am Surg. 1991;57:542–544.

5. Moore MJ, Bennett CL. The learning curve for laparoscopic cholecystectomy. The Southern Surgeons Club. Am J Surg. 1995;170:55–59.

6. Schauer P, Ikramuddin S, Hamad G, et al. The learning curve for laparoscopic Roux-en-Y gastric bypass is 100 cases. Surg Endosc. 2003;17:212–215.

7. Soot SJ, Eshraghi N, Farahmand M, et al. Transition from open to laparoscopic fundoplication: the learning curve. Arch Surg. 1999;134:278–281.

8. Park A, Witzke DB. Training and educational approaches to minimally invasive surgery: state of the art. Semin Laparosc Surg. 2002;9:198–205.

9. Ali MR, Mowery Y, Kaplan B, et al. Training the novice in laparoscopy. More challenge is better. Surg Endosc. 2002;16:1732–1736.

10. Darzi A, Smith S, Taffinder N. Assessing operative skill. Needs to become more objective. BMJ. 1999;318:887–888.

11. Darzi A, Mackay S. Assessment of surgical competence. Qual Health Care. 2001;10(suppl 2):ii64–ii69.

12. Gallagher AG, Satava RM. Virtual reality as a metric for the assessment of laparoscopic psychomotor skills. Learning curves and reliability measures. Surg Endosc. 2002;16:1746–1752.

13. Grantcharov TP, Bardram L, Funch-Jensen P, et al. Learning curves and impact of previous operative experience on performance on a virtual reality simulator to test laparoscopic surgical skills. Am J Surg. 2003;185:146–149.

14. Grantcharov TP, Bardram L, Funch-Jensen P, et al. Impact of hand dominance, gender, and experience with computer games on performance in virtual reality laparoscopy. Surg Endosc. 2003.

15. Torkington J, Smith SG, Rees BI, et al. Skill transfer from virtual reality to a real laparoscopic task. Surg Endosc. 2001;15:1076–1079.

16. Hamilton EC, Scott DJ, Fleming JB, et al. Comparison of video trainer and virtual reality training systems on acquisition of laparoscopic skills. Surg Endosc. 2002;16:406–411.

17. Hyltander A, Liljegren E, Rhodin PH, et al. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16:1324–1328.

18. Ahlberg G, Heikkinen T, Iselius L, et al. Does training in a virtual reality simulator improve surgical performance? Surg Endosc. 2002;16:126–129.

19. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458–463.

20. Kuehnapfel UG, Kuhn C, Huebner M, et al. The Karlsruhe Endoscopic Surgery Trainer as an example for virtual reality in medical education. Min Invasive Ther Allied Technol. 1997;6:122–125.

21. Kuehnapfel U, Cakmak H, Maass H. Endoscopic surgery training using virtual reality and deformable tissue simulation. Computers & Graphics. 2000;24:671–682.

22. Kuehnapfel UG, Neisius B. CAD-based graphical computer simulation in endoscopic surgery. Endosc Surg Allied Technol. 1993;1:181–184.

23. Satava RM, Cuschieri A, Hamdorf J. Metrics for objective assessment. Surg Endosc. 2003;17:220–226.

24. Grantcharov TP, Bardram L, Funch-Jensen P, et al. Assessment of technical surgical skills. Eur J Surg. 2002;168:139–144.

25. Paisley AM, Baldwin PJ, Paterson-Brown S. Validity of surgical simulation for the assessment of operative skill. Br J Surg. 2001;88:1525–1532.

26. Smith CD, Farrell TM, McNatt SS, et al. Assessing laparoscopic manipulative skills. Am J Surg. 2001;181:547–550.

27. Torkington J, Smith SG, Rees B, et al. The role of the basic surgical skills course in the acquisition and retention of laparoscopic skill. Surg Endosc. 2001;15:1071–1075.

28. Smith SG, Torkington J, Brown TJ, et al. Motion analysis. Surg Endosc. 2002;16:640–645.