Abstract

Objective

To develop a statistic measuring the impact of algorithm-driven disease management programs on outcomes for patients with chronic mental illness that allowed for treatment-as-usual controls to “catch up” to early gains of treated patients.

Data Sources/Study Setting

Statistical power was estimated from simulated samples representing effect sizes that grew, remained constant, or declined following an initial improvement. Empirical estimates were based on Texas Medication Algorithm Project data for adult patients (age≥18) with bipolar disorder (n=267) who received care between 1998 and 2000 at 1 of 11 clinics across Texas.

Study Design

Study patients were assessed at baseline and every three months thereafter for a minimum of one year. Program tracks were assigned by clinic.

Data Collection/Extraction Methods

Hierarchical linear modeling was modified to account for declining effects. Outcomes were based on the 30-item Inventory for Depression Symptomatology—Clinician Version.

Principal Findings

Declining-effect analyses had significantly greater power to detect program differences than traditional growth models in the constant- and declining-effects cases. Bipolar patients with very severe depressive symptoms in an algorithm-driven disease management program reported fewer symptoms after three months, with treatment-as-usual controls “catching up” within one year.

Conclusions

In addition to psychometric properties, data collection design, and power, investigators should consider how outcomes unfold over time when selecting an appropriate statistic to evaluate service interventions. Declining-effect analyses may be applicable to a wide range of treatment and intervention trials.

Keywords: Program evaluation, treatment algorithm, disease management systems, severe mental illness

In this paper, we developed a new approach, called the Declining-Effects Model, to analyze longitudinal data evaluating a disease management program (DMP) for patients with chronic illness, including mental illness. This approach takes into account how health outcomes may unfold over time by comparing the course of illness between patients assigned to new treatment programs with controls who receive treatment as usual (TAU). The model was tested using data from the Texas Medication Algorithm Project (TMAP), a DMP for severe mental illness that included consensus-based medication-algorithms, as well as patient education, uniform clinical reports, expert consultation, and clinical coordinators overseeing algorithm adherence (Rush et al. 1999).

Investigators often evaluate DMPs by assigning patients to treatment tracks and repeatedly assessing their outcomes over time, beginning at baseline when treatment begins. Disease management programs are considered effective if outcomes among treated patients are better than outcomes among controls. Statisticians often summarize these differences by calculating an effect-statistic. While conceptual factors underlying the program rationale often influence the choice of a primary outcome measure (McDowell and Newell 1996), the choice of an appropriate effect-statistic typically depends on: (1) the psychometric properties of the selected outcome measures, (2) the research design, and (3) properties of the statistic itself. These properties include the statistic's ability to avoid both falsely detecting effects that do not exist (false positives, or type I error) and failing to find effects that do exist (false negatives, or type II error) (Siegel 1956). Investigators often report the latter in terms of statistical power, the probability that a statistic will significantly detect an actual effect (one minus the type II error rate). All things being equal, the most desirable statistic is the one with the greatest power for a given type I error.

Not all effect-statistics require working assumptions about how outcomes unfold over time (Lavori 1990). However, to summarize program effectiveness in a single estimate, researchers have often borrowed from the efficacy trials literature, selecting statistics powered to detect effects that grow with time. Under a growth hypothesis, the outcomes of patients receiving efficacious therapies are expected to improve with time, while those of their untreated counterparts remain the same or get worse. Thus, when differences in outcomes between program tracks grow with time, we say outcomes exhibit an increasing-effects pattern.

In this paper, we assert that outcomes of algorithm-driven DMPs for chronic mental illness may be more complex. Specifically, we postulate that the size of an effect may increase by a lump-sum amount that accrues during an initial period following baseline. After such an initial advantage, differences may either remain constant, or decline as DMP versus TAU differences become negligible with time. We call this initial rise, then fall, of a DMP advantage a declining-effects pattern.

We thus (1) formulated an effect-statistic that could detect declining-effects patterns; (2) compared the power of both declining-effects and traditional growth statistics to detect an initial effect that either grows, remains constant, or declines with time; and (3) applied the statistic to evaluate an algorithm-driven disease-management program for outpatients with bipolar disorder.

Rationale

For Algorithm-Driven Disease Management Programs

The need for algorithm-driven DMPs for chronic mental illness is underscored by the proliferation of medical knowledge and by cost-conscious practitioners who often lack the time to explore the scientific literature and apply its latest discoveries. Also known as preferred practices, evidence-based care, clinical pathways, or best practices, clinical algorithms are often presented as flowcharts designed to help practitioners improve outcomes (Suppes et al. 2001; Field and Lohr 1990; American Psychiatric Association 1995; Jobson and Potter 1995) and contain costs (Lubarsky et al. 1997; La Ruche, Lorougnon, and Digbeu 1995; McFadden et al. 1995) by organizing strategic (what treatments) and tactical (how to treat) decisions into sequential stages (Rush and Prien 1995). Having been applied elsewhere (e.g., Department of Veterans Affairs VHA Directive 96-053 1996), algorithm-driven DMPs may address a concern first expressed by the late Avedis Donabedian: that structured process interventions may be required before results from outcome studies can begin to influence clinical practice (Donabedian 1976).

For Declining-Effects Patterns

There are several reasons why DMP outcomes may follow a declining-effects pattern.

Treatment-as-Usual (TAU)

Treatment-as-usual comparison groups, rather than no-treatment groups, are sometimes ethically required, and investigators often use them to answer the policy questions raised when new modalities are being considered to replace current practices. If algorithm-driven DMPs help practitioners find the service mix that optimizes outcomes, one may hypothesize that TAU physicians will eventually find the optimal mix as well, allowing TAU patient outcomes to catch up to those of their DMP counterparts. That is, the DMP enhances the speed of an ultimate recovery.

Chronic Illness

Patients with chronic illness might relapse after therapies improve their health, either because treatments merely delayed an inevitable deterioration in health or because treatment effects wore off with time.

Derived Outcome

DMPs are intended to improve patient health outcomes by influencing provider behaviors. However, impact on practitioner behaviors may be short lived, or TAU practitioners may adopt the targeted behaviors, causing patient outcomes from the two program tracks to blend. The concept of such a derived outcome is similar to Grossman's concept of derived demand in which use of care springs from consumer wants for health (Grossman 1972).

Texas Medication Algorithm Project (TMAP)

Study data came from the Texas Medication Algorithm Project (TMAP) evaluation of the costs and outcomes of a DMP consisting of consensus-based medication algorithms (Crismon et al. 1999), physician training and continued consultation, standardized chart forms, on-site clinical coordinators who gave physicians feedback on algorithm adherence and patient progress, and patient education about mental illness and its treatment. The study enrolled 1,421 evaluable patients who received medical treatment in 19 clinics of the Texas Department of Mental Health and Mental Retardation between March 1998 and April 1999 (Suppes et al. 2001; Gilbert et al. 1998; Rush et al. 1999; Rush et al. 1998; Crismon et al. 1999; Chiles et al. 1999; Kashner, Rush, and Altshuler 1999). Patients were long-term sufferers of severe mental illness (major depressive, schizophrenic, or bipolar disorder) who needed a medication change or required medical attention for severe psychiatric symptoms or side effects. Patients were assessed every three months for at least one year for psychiatric symptoms, health functioning, side-effect burden, patient satisfaction, quality of life, and health care costs. Clinics, rather than individual patients, were assigned to DMP or TAU to avoid treatment blending (the same doctor treating both intervention and control patients) and water-cooler effects (doctors from the same clinic conferring about treating patients).

Program Effects

Growth-Effect Statistics

When outcomes exhibit an increasing-effects pattern, the health of both DMP and TAU patients is assumed to change with time, and outcomes are described in terms of a growth-rate. If the rate of change is constant for each patient, the difference in growth-rates between DMP and TAU patients is called the DMP's growth-effect. A DMP is considered effective if it produces a significant and favorable growth-effect.

Growth-effect statistics can be calculated from simple growth models, including Pearson's r, Spearman's ρ, linear slope, and change scores; among these, Kendall's τ displayed the greatest power without type I error inflation (Arndt et al. 2000). Kendall's τ is the difference between the numbers of improved and worsened outcomes, divided by either the number of possible comparisons or the number of comparisons reporting change. Kendall's τ is a nonparametric statistic applicable to ordinal data; larger τ values indicate growth-effects.
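A minimal Python sketch of the pairwise Kendall's τ computation described above, applied to one patient's symptom scores listed in assessment order. It assumes a symptom scale on which a decrease counts as an improvement (as with the IDSC). The function name `kendall_tau_change` and the `among_changed` flag are illustrative, not taken from Arndt et al. (2000), and how τ is aggregated across patients and program tracks is not shown here.

```python
from itertools import combinations


def kendall_tau_change(scores, among_changed=False):
    """Kendall's tau for one patient's repeated symptom scores.

    `scores` must be in chronological order. Every earlier/later pair is
    compared: a lower later score counts as improved, a higher later score
    as worsened (lower = fewer symptoms). The net count (improved minus
    worsened) is divided by all possible pairs or, if `among_changed` is
    True, only by the pairs that showed any change.
    """
    improved = worsened = n_pairs = 0
    # combinations() preserves input order, so `earlier` precedes `later`.
    for earlier, later in combinations(scores, 2):
        n_pairs += 1
        if later < earlier:
            improved += 1
        elif later > earlier:
            worsened += 1
    denominator = (improved + worsened) if among_changed else n_pairs
    if denominator == 0:
        return 0.0  # fewer than two assessments, or no change at all
    return (improved - worsened) / denominator


# Baseline plus four quarterly IDSC scores for one hypothetical patient.
print(kendall_tau_change([30, 28, 25, 26, 20]))              # 0.8
print(kendall_tau_change([30, 30, 25], among_changed=True))  # 1.0
```

The two denominators differ only when some pairs are tied: in the second example one of three pairs is unchanged, so τ is 2/3 over all pairs but 1.0 over changed pairs.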

Growth-effects can also be estimated from random regression models that include hierarchical designs such as growth curves (Stanek and Diehl 1988; Willett, Ayoub, and Robinson 1991), random-effects (Laird and Ware 1982; Ware 1985), random regression (Gibbons et al. 1993; Jennrich and Schluchter 1986), empirical Bayesian (Maritz 1970), general mixed linear (Goldstein 1986), and hierarchical linear (Bryk and Raudenbush 1992) models. These methods are desirable because they do not depend on fixed intervals between observations, allow for missing observations, account for repeated observations nested within patient, and permit more flexible covariance structures for a better model fit (Bryk and Raudenbush 1992), such as regression to the mean (Berndt et al. 1998) and heteroscedastic and autocorrelated level-1 covariances (Louis and Spiro 1984; Hedeker 1989; Chi and Reinsel 1989). They have also been modified to handle ordinal data (Hedeker and Gibbons 1994).

As described in the Appendix, First-Order Growth Models estimate a constant growth-effect: differences between program tracks are assumed to grow at a constant rate during follow-up. In Second-Order Growth Models, patient outcomes are expected to grow, but at a diminishing rate over time. Second-Order Growth Models can thus be used to estimate both a growth-effect and the rate at which the growth-effect diminishes with time.

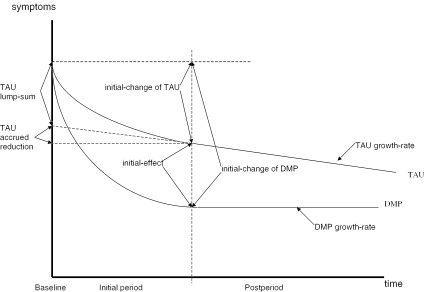

Declining-Effects Statistics

The declining-effects statistics are derived mathematically in the Appendix and displayed in Figure 1, where the Y-axis represents symptoms (smaller values indicate better health) and the X-axis represents time, beginning at baseline and partitioned into initial (first three months) and post (remaining nine months) periods. Under a declining-effects pattern, the health of both DMP and TAU patients is assumed to improve with time at a constant growth-rate, with an additional one-time, lump-sum improvement accrued during the initial period. For each patient, we combined the lump-sum improvement and the growth accumulated during the initial period into an initial-change in health, calculated as the difference between health at the end of the initial period and health at baseline. Outcomes are thus described in terms of initial-changes and post-period growth-rates.
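For the mean patient in a single program track, the pattern in Figure 1 can be sketched as follows. The notation is an illustrative simplification borrowed loosely from the Appendix: $\beta_0$ is baseline health, $\beta_1$ the growth-rate per quarter, $\beta_2$ the lump-sum, $B_t$ a step function equal to one once the lump-sum has accrued at $t_0$, and $t_1 = 1$ quarter marks the end of the initial period.

$$
E[y_t] = \beta_0 + \beta_1 t + \beta_2 B_t, \qquad B_t = \begin{cases} 1, & t \ge t_0 \\ 0, & t < t_0 \end{cases}, \qquad 0 < t_0 \le t_1
$$

$$
\text{initial-change} = E[y_{t_1}] - E[y_0] = \beta_2 + \beta_1 t_1 = \beta_2 + \beta_1 \qquad (t_1 = 1)
$$

For example, a lump-sum of −5 IDSC plus one quarter of growth at −1 IDSC/qtr gives an initial-change of −6 IDSC, the DMP value for Pattern 3 in Table 1.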

Figure 1.

Declining-Effects Condition

Note: “Y” axis reflects symptom scale with lower values reflecting better health.

The impact of a DMP on patient outcomes is assessed by two parameters. The initial-effect is the mean difference in initial-changes between DMP and TAU patients. A favorable initial-effect means that DMP patients experienced a more favorable improvement in health between baseline and the end of the initial period than their TAU counterparts. The growth-effect is the mean difference in growth-rates between DMP and TAU patients during the postperiod. An unfavorable growth-effect means that the outcomes of TAU patients improve at a faster rate than their DMP counterparts during the postperiod. A favorable initial-effect followed by an unfavorable growth-effect indicates a declining-effects pattern of outcomes. Thus, if DMP physicians, aided by algorithms, identify the optimal service mix before their TAU counterparts can determine an effective mix by trial and error, then DMP patient outcomes should be initially better, with TAU patient outcomes catching up with time.
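The two parameters and the declining-effects condition can be written compactly. In this sketch, $\Delta$ denotes a patient's initial-change, $\beta_1$ a track's post-period growth-rate, and superscripts mark the program track; the symbols $\delta_I$ and $\delta_G$ are illustrative labels rather than the Appendix's notation. On a symptom scale such as the IDSC (lower is better):

$$
\delta_I = E[\Delta \mid \text{DMP}] - E[\Delta \mid \text{TAU}], \qquad \delta_G = \beta_1^{\text{DMP}} - \beta_1^{\text{TAU}}
$$

$$
\text{declining-effects pattern:} \quad \delta_I < 0 \ (\text{favorable}) \quad \text{and} \quad \delta_G > 0 \ (\text{unfavorable})
$$

Each inequality is judged by a significance test on the estimate, not by its sign alone. For instance, DMP and TAU initial-changes of −6 and −2 IDSC with post-period growth-rates of 0 and −1 IDSC/qtr give $\delta_I = -4$ and $\delta_G = +1$, a declining-effects pattern.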

One drawback is that the size of the initial-effect depends on the length of the initial period, so investigators must consider how outcomes unfold over time when setting assessment schedules for longitudinal studies. When TAU outcomes are “catching up,” longer initial periods will lead to smaller estimates of initial-effects.
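A worked example shows the dependence. Assume, for simplicity, that the lump-sum has fully accrued by the end of the first quarter and that post-period growth-rates are constant. Writing $\beta_1$ and $\beta_2$ for a track's growth-rate and lump-sum, the initial-effect measured over an initial period of $k$ quarters, $\delta_I(k)$, is the lump-sum difference plus $k$ quarters of growth-rate difference. With the Pattern 4 values of Table 1 (lump-sums of −6 and −1 IDSC and growth-rates of 0 and −1 IDSC/qtr for DMP and TAU, respectively):

$$
\delta_I(k) = \left(\beta_2^{\text{DMP}} - \beta_2^{\text{TAU}}\right) + \left(\beta_1^{\text{DMP}} - \beta_1^{\text{TAU}}\right) k = -5 + k
$$

$$
\delta_I(1) = -4, \qquad \delta_I(2) = -3, \qquad \delta_I(4) = -1 \ \text{IDSC}
$$

Stretching the initial period from one quarter to a full year would shrink the estimated initial-effect from −4 to −1 IDSC as TAU catches up.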

Statistical Power

We constructed simulated databases to compare the power of effect-statistics based on Kendall's τ, First- and Second-Order Growth Models, and Declining-Effects Models under increasing-, constant-, and declining-effects conditions. Health was assessed using the 30-item Inventory for Depression Symptomatology—Clinician Version (IDSC) (Rush et al. 1986; Rush et al. 1996), an interval scale of depressive symptom severity with well-known psychometric properties, on which larger scores indicate more depressive symptoms and poorer health. The desired statistic has the greatest power (the likelihood of detecting differences when they exist) without inflating type I error (the likelihood of detecting differences when none exist). Power was calculated as the proportion of simulated samples in which a significant effect was detected. To compare models on power alone, test statistics were recalibrated to achieve a strict 2.5 percent type I error.
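One way to carry out such a recalibration is sketched below in Python with NumPy, under stated assumptions; the text does not spell out its procedure. Test statistics computed on samples simulated with no true effect supply an empirical critical value at their 97.5th percentile, so that 2.5 percent of null samples reject (one-tailed), and power is the share of samples simulated under a test pattern whose statistic exceeds that value. The function name `calibrated_power` and the convention that larger statistics favor the DMP are illustrative assumptions.

```python
import numpy as np


def calibrated_power(null_stats, alt_stats, alpha=0.025):
    """Power at an empirically calibrated one-tailed type I error.

    null_stats: test statistics from samples simulated with no true effect.
    alt_stats:  test statistics from samples simulated under a test pattern.
    Assumes larger statistics are evidence in favor of the DMP.
    """
    null_stats = np.asarray(null_stats, dtype=float)
    alt_stats = np.asarray(alt_stats, dtype=float)
    # Critical value chosen so that a fraction `alpha` of null samples reject.
    critical = np.quantile(null_stats, 1.0 - alpha)
    type_i_error = np.mean(null_stats > critical)
    power = np.mean(alt_stats > critical)
    return critical, type_i_error, power


rng = np.random.default_rng(0)
null = rng.standard_normal(5000)       # e.g., t-statistics under "no effect"
alt = rng.standard_normal(5000) + 2.5  # statistics under a shifted alternative
crit, err, power = calibrated_power(null, alt)
print(round(crit, 2), err, round(power, 3))
```

Calibrating on the simulated null, rather than trusting nominal t-distribution cut-offs, puts every statistic at the same realized type I error, so the remaining differences among models are differences in power.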

Databases representing each of the five test patterns were simulated to include a baseline and four quarterly values for five thousand samples of three hundred subjects each, with 7 percent random dropout per quarter beginning with the second assessment. Data values for each pattern deviated from the pattern by independent, normally distributed random variates with zero mean and a constant variance of 9.5 IDSC units. Test statistics were based on one-tailed tests (α=.025), with standard errors estimated from each drawn sample. Type I errors were determined from simulated “no effects” and constant-effects cases. See Appendix for equations.
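A minimal Python sketch of how one simulated sample under this design might be generated. Several details are assumptions not stated in the text: subjects are split evenly between DMP and TAU; “variance of 9.5” is read literally (standard deviation √9.5); dropout is monotone, with 7 percent of the subjects still in the study lost at each of the four follow-up assessments; both tracks start from a baseline mean of 31 IDSC (close to the TMAP sample mean, and irrelevant to power); and each track's mean path follows the growth-rate, diminishing-rate, and lump-sum parameters of Table 1, with the lump-sum in place from the first follow-up onward. The names `mean_path` and `simulate_sample` are hypothetical.

```python
import numpy as np

QUARTERS = np.arange(5)  # baseline (t=0) plus four quarterly follow-ups


def mean_path(growth, diminish, lump, baseline=31.0):
    """Mean IDSC at t = 0..4 quarters; the lump-sum applies from t = 1 on."""
    t = QUARTERS
    return baseline + growth * t + diminish * t**2 + lump * (t >= 1)


def simulate_sample(dmp, tau, n=300, dropout=0.07, variance=9.5, rng=None):
    """One simulated sample: returns (scores, dmp_flag).

    dmp, tau: (growth, diminish, lump) tuples for each track.
    scores:   n x 5 array of IDSC values, NaN after a subject drops out.
    """
    rng = np.random.default_rng() if rng is None else rng
    dmp_flag = np.arange(n) < n // 2  # first half DMP, the rest TAU
    means = np.where(dmp_flag[:, None], mean_path(*dmp), mean_path(*tau))
    scores = means + rng.normal(0.0, np.sqrt(variance), size=means.shape)
    # Monotone dropout: at each follow-up, 7% of remaining subjects leave.
    active = np.ones(n, dtype=bool)
    for t in range(1, len(QUARTERS)):
        active &= rng.random(n) >= dropout
        scores[~active, t] = np.nan
    return scores, dmp_flag


# Pattern 4 (small declining effect) from Table 1.
rng = np.random.default_rng(1)
scores, dmp_flag = simulate_sample(dmp=(0.0, 0.0, -6.0), tau=(-1.0, 0.0, -1.0), rng=rng)
print(scores.shape, np.isnan(scores[:, 0]).sum())  # (300, 5) 0
```

Repeating `simulate_sample` five thousand times per pattern and applying each model's test to every draw would yield the rejection rates summarized as power.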

A DMP is considered effective if investigators find a significant growth-effect (Kendall's τ, First- and Second-Order Growth Models) or a significant initial-effect (Declining-Effects Model). Outcomes follow a declining-effects pattern if the sample reveals a significant initial-effect and a significant but unfavorable growth-effect (indicating that TAU patient outcomes catch up to those of DMP patients). The Second-Order Growth Model can also be adapted to test for declining-effects patterns (see Appendix).

Table 1 describes the five test patterns and reports power estimates by model, effect, and test pattern. All five test patterns had the same initial-change in outcomes for DMP (−6 IDSC) and for TAU (−2 IDSC), and thus the same initial-effect (−4 IDSC). Pattern 1 represents an increasing effect size (−2 IDSC/qtr), resulting from a DMP growth-rate (−3 IDSC/qtr) that was more favorable than TAU's (−1 IDSC/qtr). Pattern 2 represents an increasing effect size (−2 IDSC/qtr) that diminishes each quarter (+.50 IDSC/qtr). Pattern 3 represents a constant effect size (0 IDSC/qtr). Patterns 4 and 5 represent declining effects of 1 IDSC/qtr and 1.33 IDSC/qtr, respectively.
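The pattern characteristics in Table 1 are linked by simple arithmetic, which the short Python check below reproduces. It assumes, consistent with the table, that a track's initial-change is its lump-sum plus one quarter of growth plus one quarter of the diminishing (quadratic) term, that the initial-effect is the DMP minus TAU initial-change, and that the growth-effect is the DMP minus TAU growth-rate. The dictionary layout and function names are only for illustration.

```python
# (growth-rate, diminishing growth-rate, lump-sum) per track, from Table 1.
PATTERNS = {
    1: {"DMP": (-3.00, 0.00, -3.00), "TAU": (-1.00, 0.00, -1.00)},
    2: {"DMP": (-6.75, 0.75, 0.00), "TAU": (-2.25, 0.25, 0.00)},
    3: {"DMP": (-1.00, 0.00, -5.00), "TAU": (-1.00, 0.00, -1.00)},
    4: {"DMP": (0.00, 0.00, -6.00), "TAU": (-1.00, 0.00, -1.00)},
    5: {"DMP": (0.33, 0.00, -6.33), "TAU": (-1.00, 0.00, -1.00)},
}


def initial_change(growth, diminish, lump, t1=1.0):
    """Change from baseline to the end of the initial period (t1 quarters)."""
    return lump + growth * t1 + diminish * t1**2


def effects(pattern):
    """Return (initial-effect, growth-effect), DMP minus TAU, in IDSC units."""
    dmp, tau = pattern["DMP"], pattern["TAU"]
    initial_effect = initial_change(*dmp) - initial_change(*tau)
    growth_effect = dmp[0] - tau[0]
    return round(initial_effect, 2), round(growth_effect, 2)


for number, pattern in PATTERNS.items():
    print(number, effects(pattern))
```

Every pattern returns an initial-effect of −4.0, while the growth-effects (−2.0, −4.5, 0.0, 1.0, 1.33) distinguish increasing, constant, and declining patterns.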

Table 1.

Power for Selected Statistics for Selected Conditions Based on One-Tailed t-tests of 300 Subjects

| | Pattern 1: Increasing Effects plus Lump-sum | Pattern 2: Increasing Effects that Diminish | Pattern 3: Constant Effects | Pattern 4: Declining Effects (Small) | Pattern 5: Declining Effects (Large) |
|---|---|---|---|---|---|
| Pattern characteristics (DMP / TAU) | | | | | |
| Growth-rate (IDSC/qtr) | −3.00 / −1.00 | −6.75 / −2.25 | −1.00 / −1.00 | 0.00 / −1.00 | 0.33 / −1.00 |
| Diminishing growth-rate (IDSC/qtr²) | 0.00 / 0.00 | 0.75 / 0.25 | 0.00 / 0.00 | 0.00 / 0.00 | 0.00 / 0.00 |
| Lump-sum (IDSC) | −3.00 / −1.00 | 0.00 / 0.00 | −5.00 / −1.00 | −6.00 / −1.00 | −6.33 / −1.00 |
| Initial-change (IDSC) | −6.00 / −2.00 | −6.00 / −2.00 | −6.00 / −2.00 | −6.00 / −2.00 | −6.00 / −2.00 |
| Growth-effect (IDSC/qtr) | −2.00 | −4.50 | 0.00 | 1.00 | 1.33 |
| Initial-effect (IDSC) | −4.00 | −4.00 | −4.00 | −4.00 | −4.00 |
| Effect statistic (power) | | | | | |
| Kendall's τ | | | | | |
| Growth-effect | 81.92% | 84.56% | 2.18% | 0.00% | 0.00% |
| First-Order Growth Model | | | | | |
| Growth-effect | 100.00% | 100.00% | 86.56% | 33.92% | 18.40% |
| Second-Order Growth Model | | | | | |
| Growth-effect | 77.76% | 92.50% | 67.80% | 60.46% | 59.26% |
| Diminishing growth-effect | 16.62% | 36.10% | 50.10% | 64.98% | 71.50% |
| Declining-effect | n/a | n/a | (2.50%) | 15.72% | 25.86% |
| Declining-Effects Model | | | | | |
| Initial-effect | 76.98% | 83.56% | 77.12% | 75.84% | 76.48% |
| Negative growth-effect | 0.00% | 0.00% | 2.64% | 48.06% | 72.86% |
| Declining-effect | n/a | n/a | (2.50%) | 42.62% | 62.26% |

Technical details are provided in the Appendix.

Results are reported in Table 1. For detecting whether the DMP had any impact on outcomes of care, effect-statistics derived from First-Order Growth Models (growth-effects) were superior under increasing-effects patterns (Patterns 1–2), while Declining-Effects Models (initial-effects) exhibited the greatest power under declining-effects patterns (Patterns 4–5). When outcomes exhibited a constant-effects pattern (Pattern 3), the Declining-Effects Model had better power than the Second-Order Growth Model to detect whether the DMP had any favorable impact on health outcomes (77 percent versus 68 percent). Although the single-parameter growth-effect estimate from the First-Order Growth Model displayed better power (87 percent) than the initial-effect estimate from the Declining-Effects Model (77 percent), the former model is misspecified: it leads to the incorrect interpretation that effect sizes grow throughout follow-up, when in fact the effect size remains constant after a one-time, lump-sum increase accruing during the initial period. Thus, under both constant- and declining-effects conditions, the Declining-Effects Model is the best choice for deriving an effect-statistic. Note that under constant- and declining-effects conditions, Kendall's τ was virtually powerless to detect any program differences.
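A small noise-free illustration of this misspecification, in Python with NumPy: a First-Order Growth Model is fitted by ordinary least squares to the Pattern 3 mean paths from Table 1 (baseline set to zero), in which DMP and TAU differ by a constant 4 IDSC from the first quarter onward. Plain least squares without random effects, and fitting mean paths rather than patient-level data, are simplifying assumptions made only for illustration.

```python
import numpy as np

t = np.arange(5, dtype=float)            # baseline + four quarters
dmp_mean = -1.0 * t - 5.0 * (t >= 1)     # Pattern 3, DMP track
tau_mean = -1.0 * t - 1.0 * (t >= 1)     # Pattern 3, TAU track

# Stack both tracks and fit y = a0 + a01*I + a10*t + a11*I*t by least squares.
time = np.concatenate([t, t])
dmp = np.concatenate([np.ones(5), np.zeros(5)])
y = np.concatenate([dmp_mean, tau_mean])
X = np.column_stack([np.ones_like(time), dmp, time, dmp * time])
(a0, a01, a10, a11), *_ = np.linalg.lstsq(X, y, rcond=None)

print(dmp_mean - tau_mean)   # constant gap of -4 after baseline
print(round(a11, 2))         # -0.8: a spurious "growth-effect"
```

Although the DMP advantage stops growing after the first quarter, the first-order fit summarizes it as an advantage that widens by 0.8 IDSC every quarter, which is the misleading interpretation described above.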

The Declining-Effects Model was also superior to Second-Order Growth Models in detecting declining-effect patterns, a power gap that tended to widen as effect sizes declined at a faster rate. That is, the Declining-Effects Model provides the more appropriate test to determine if TAU caught up with their DMP counterparts after DMP gained an advantage during the initial period.

These simulation results suggest that, in the absence of theory-driven hypotheses, exploratory analyses should begin with a test for declining-effects patterns using Declining-Effects Models. In the absence of declining- or constant-effects patterns, investigators should proceed with Growth Models. By relying on Growth Models when declining or constant effects exist, investigators risk either having insufficient power to detect any program advantage or incorrectly interpreting the data as showing that group differences continue to grow throughout follow-up.

Detecting Declining-Effect Conditions: TMAP

Declining-Effects Models are relevant only if there is empirical support for declining-effects patterns in nature. To test for such patterns, we used TMAP data for 267 adult patients with a Diagnostic and Statistical Manual of Mental Disorders, Fourth Edition (DSM-IV) diagnosis of bipolar disorder (American Psychiatric Association 1994). All subjects had signed informed consent, provided baseline and at least one follow-up IDSC assessment, and had been assigned to one of 4 ALGO (DMP) clinics (n=141) or 7 TAU clinics (n=126). The sample was ethnically diverse (27 percent Hispanic, 12 percent African American, 60 percent Caucasian, and 1 percent other), with a mean age of 39.0 years (SD=10.3); 68 percent were female, 24 percent were married, and 26 percent lived alone, with household sizes averaging 1.7 persons besides the patient (SD=1.8). Although 78 percent were high school graduates, most (73.6 percent) were unemployed, with a mean monthly household disposable income (after housing costs) of $450 (SD=$599) (1999 US$); 49 percent received income assistance or food stamps, and 53 percent were on Medicaid during the six months prior to baseline. Clinically, patients at baseline reported a mean length of illness of 14.7 years (SD=12.5), an IDSC score of 30.9 (SD=14.5), a 24-item Brief Psychiatric Rating Scale (BPRS24) (Overall and Gorham 1988; Ventura et al. 1993) score of 52.8 (SD=13.5), and Medical Outcome Study 12-item Short Form (SF-12) (Ware, Kosinski, and Keller 1996) mental and physical functioning scores of 35.0 (SD=11.3) and 42.6 (SD=11.6), respectively; 20 percent reported substance abuse during the prior six months. A 10-item Patient Perception of Benefits scale was constructed for TMAP to measure patient attitudes about whether health care can improve patient functioning.

Patients were asked: “If I can get the help I need from a doctor, I believe that I will be much better able to … manage problems at home, earn a living or go to school, enjoy things that interest me, feel good about myself, handle emergencies and crises, get along with friends, get along with my family, control my life, do things on my own, and make important decisions that affect my life and my family.” Patients rated each item from strongly agree (=1) to strongly disagree (=5). For this sample, baseline scores ranged from 10 to 50, with a mean of 19.1 (SD=7.6); higher scores indicate greater pessimism about care.

Declining-Effects Models (equations 8b and 9b in the Appendix) were estimated using HLM/3L software (Bryk, Raudenbush, and Congdon 1996), with subjects grouped by baseline symptoms into very severe (IDSC≥46, n=48), severe (IDSC 17–45, n=170), and mild (IDSC≤16, n=46) subgroups. Estimates were adjusted for patient differences in baseline BPRS24 score, age (years), family size, disposable income, years of education, patient perception of benefits, gender, African American status, and Hispanic status.
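According to the footnote to Table 2, the subgroups were created at the baseline IDSC mean ± 1 standard deviation. A minimal Python sketch of that grouping, assuming the baseline mean of 30.9 and SD of 14.5 reported above and integer-valued IDSC totals; the function name `severity_group` is illustrative.

```python
BASELINE_MEAN, BASELINE_SD = 30.9, 14.5
LOW_CUT = BASELINE_MEAN - BASELINE_SD   # 16.4
HIGH_CUT = BASELINE_MEAN + BASELINE_SD  # 45.4


def severity_group(idsc_total):
    """Assign a baseline IDSC total to a depressive-symptom subgroup."""
    if idsc_total > HIGH_CUT:
        return "very severe"  # IDSC >= 46 for integer scores
    if idsc_total < LOW_CUT:
        return "mild"         # IDSC <= 16 for integer scores
    return "severe"           # IDSC 17-45


print([severity_group(score) for score in (16, 17, 45, 46)])
# ['mild', 'severe', 'severe', 'very severe']
```

Because IDSC totals are whole numbers, the fractional cut-points 16.4 and 45.4 translate into the integer boundaries ≤16, 17–45, and ≥46 used in the text.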

Results are reported in Table 2. Among subjects who began the study with the most severe depressive symptoms, patients in both the TAU and DMP tracks experienced reductions in symptoms during the initial three months of the program, of 6.0 and 16.5 IDSC points, respectively. The greater reduction in symptoms during the initial period among DMP patients (the DMP initial-effect, −10.5 IDSC) was significant, indicating a more favorable DMP outcome than TAU at the end of the initial period. During the postperiod, however, TAU patients continued to improve at a growth-rate of −3.2 IDSC/qtr, while symptoms for DMP patients did not change significantly. The difference in growth-rates (the DMP growth-effect) was significant, suggesting that TAU patients caught up with their DMP counterparts during the postperiod as IDSC differences between tracks diminished by 3.75 IDSC/qtr.
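The arithmetic behind this paragraph can be checked from the very severe subgroup's point estimates in Table 2 with a few lines of Python. Each t-ratio is the estimate divided by its standard error. The catch-up time is a rough projection that assumes the post-period growth-effect stays linear over the remaining three quarters and ignores sampling error, so it is illustrative only.

```python
# Very severe subgroup, DMP minus TAU, from Table 2 (IDSC units).
initial_effect, initial_se = -10.54, 4.05  # initial-change difference
growth_effect, growth_se = 3.75, 1.10      # growth-rate difference per quarter

t_initial = abs(initial_effect) / initial_se
t_growth = abs(growth_effect) / growth_se

# Quarters after the initial period until the projected gap closes.
quarters_to_close = -initial_effect / growth_effect
post_period_quarters = 3                   # months 3-12 of a one-year follow-up
projected_gap_at_one_year = initial_effect + growth_effect * post_period_quarters

print(round(t_initial, 2), round(t_growth, 2))                          # 2.6 3.41
print(round(quarters_to_close, 2), round(projected_gap_at_one_year, 2))  # 2.81 0.71
```

The recomputed growth-effect t-ratio (3.41) differs slightly from the tabled 3.43, presumably because the standard error in the table is rounded. On these point estimates the DMP advantage closes after about 2.8 quarters of the postperiod, within the one-year follow-up.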

Table 2.

IDSC Adjusted Estimates of Initial-Change, Growth-Rate, and Differences between DMP and TAU Patients with Bipolar Disorder, by Baseline IDSC Scores

| | TAU (n=123) γ | df | t | P | DMP (n=141) γ | df | t | P | Difference γ | df | t | P |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Initial-Change | | | | | | | | | | | | |
| Very severe | −6.00±2.79 | 33 | 2.15 | .039 | −16.54±2.46 | 33 | 6.73 | <.001 | −10.54±4.05 | 165 | 2.60 | .010 |
| Severe | −2.59±1.42 | 137 | 1.82 | .069 | −3.72±1.32 | 137 | 2.82 | .005 | −1.13±1.99 | 603 | 0.57 | .57 |
| Mild | 4.73±1.63 | 32 | 2.91 | .007 | 5.81±1.86 | 32 | 3.13 | .004 | 1.08±2.74 | 170 | 0.39 | .69 |
| Growth-Rate | | | | | | | | | | | | |
| Very severe | −3.18±0.92 | 165 | 3.45 | .001 | 0.58±0.59 | 165 | 0.98 | .33 | 3.75±1.10 | 165 | 3.43 | .001 |
| Severe | 0.45±0.46 | 603 | 0.97 | .33 | −0.19±0.34 | 603 | 0.56 | .58 | −0.64±0.57 | 603 | 1.12 | .27 |
| Mild | −0.34±0.42 | 170 | 0.81 | .42 | −0.95±0.33 | 170 | 2.88 | .004 | −0.61±0.53 | 170 | 1.15 | .25 |

Coefficients represented as mean value±standard error.

Adjusted for mean values by group (all, high, medium, and low clinical depressive symptoms) with respect to baseline BPRS24 score, age (years), family size, disposable income, years of education, patient perception of benefits from treatment, gender, African American status, and Hispanic status.

Depression symptom subsamples created by mean±1 standard deviation IDSC scores. Sample sizes are for very severe (IDSC≥46) DMP n=24, TAU n=24; severe (IDSC 17-45) DMP n=89, TAU n=81; and mild (IDSC≤16) DMP n=28, TAU n=18.

In contrast, patients who entered the study with severe baseline depressive symptoms showed only modest reductions in symptoms after the initial period (−3.7 IDSC for DMP and −2.6 IDSC for TAU), and DMP–TAU differences were not significant. Patients with mild symptoms actually showed a worsening of symptoms at the end of the initial period, as both DMP and TAU patients regressed into depressive symptom episodes. The apparent inverse relationship between initial reductions and baseline symptoms suggests a possible regression-to-the-mean confound. Its impact on the DMP initial- and growth-effects is small, however, because baseline IDSC values were similar for DMP (30.3±14.5) and TAU (31.5±14.5) patients, a mean difference of −1.2±1.8 (t=0.67, df=261, p=.51).
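The baseline comparison can be approximately reproduced from the summary statistics with a pooled two-sample t computation, sketched in Python below. It assumes the ±14.5 values are standard deviations and uses the DMP and TAU sample sizes from Table 2 (141 and 123); with those sizes the pooled degrees of freedom come to 262, one more than reported, so the group sizes behind the published test may differ slightly.

```python
import math

mean_dmp, sd_dmp, n_dmp = 30.3, 14.5, 141
mean_tau, sd_tau, n_tau = 31.5, 14.5, 123

difference = mean_dmp - mean_tau
# Pooled-variance standard error of a difference in two means.
df = n_dmp + n_tau - 2
pooled_var = ((n_dmp - 1) * sd_dmp**2 + (n_tau - 1) * sd_tau**2) / df
se = math.sqrt(pooled_var * (1 / n_dmp + 1 / n_tau))
t_stat = difference / se

print(round(difference, 1), round(se, 1), round(t_stat, 2), df)  # -1.2 1.8 -0.67 262
```

A t-statistic of this size is far from significant, which supports treating baseline imbalance between tracks as a minor concern.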

In summary, DMP versus TAU exhibited a declining-effects pattern for only the very severely depressed patients.

Conclusion

Investigators often borrow from the clinical trials literature, applying test statistics (Kendall's τ, growth models) that determine program effectiveness by detecting whether effect sizes grow with time. This is appropriate when the treatments under study “cure” debilitating illnesses that, if left untreated, would grow worse.

However, a growth hypothesis may not be reasonable in intervention trials focusing on provider behaviors, chronic conditions, and comparisons with TAU controls. Examples include the TMAP algorithm-driven DMP for patients with mental disorders designed to help clinicians find the right mix of available treatments and assist compliance through patient education.

Patterns of outcomes may deviate from simple growth. Disease management program practitioners may revert to former methods as TAU practitioners adopt new ones, causing practice patterns to blend and outcomes to become similar. TAU practitioners may eventually arrive at an effective mix of services, helping TAU outcomes catch up to those of their DMP counterparts, although they need more time to reach the desired levels. On the other hand, treatments for intractable chronic conditions may wear off, patients may stop care because of intolerable side-effects, or poor prognoses may mean that a DMP merely delays an inevitable decline in health.

In such cases, an intervention could exhibit significant improvement during an initial period, with the advantage declining in subsequent periods.

Our analyses of TMAP data suggested that declining-effects patterns do exist when algorithm-driven DMPs are evaluated against TAU controls. Declining-effects also appear in work therapy programs for homeless, substance dependent veterans (Kashner, Rosenheck et al. 2002), and in acute randomized controlled trials whenever placebos impact outcomes (Hrobjartsson and Gotzsche 2001; Andrews 2001; Kirsch 2000; Lavori 2000).

Our power calculations suggested that under declining-effects patterns, growth models are inappropriate: they either lack power to detect program effects or misspecify how program advantages unfold over time. We recommend that investigators begin with a test for declining-effects patterns before proceeding with growth models when evaluating DMPs for patients with chronic conditions.

In summary, in addition to the psychometric properties of outcome measures, the research design, and the power and type I error of the statistic itself, services researchers should carefully consider how outcomes are expected to unfold over time before selecting an appropriate program effect-statistic.

Appendix

Appendix: Increasing-Effects Model

A First-Order Growth Model is:

$$
y_{it} = \alpha_0 + \alpha_{01} I_i + (\alpha_{10} + \alpha_{11} I_i)\,t + u_i + v_{it} \tag{1a}
$$

where $y_{it}$ is health status assessed at time $t$ from baseline ($t=0$) when patient $i$ was assigned to either the treated group ($I_i=1$) or controls ($I_i=0$). Patient- and time-level random effects are $u_i$ and $v_{it}$. The growth-effect is $\alpha_{11}$, the difference in growth-rates in outcome between treated ($\alpha_{10}+\alpha_{11}$) and control ($\alpha_{10}$) patients. For symptom outcomes (fewer symptoms reflect better health), a favorable growth-effect implies $\alpha_{11}<0$. $\alpha_{01}$ is the average baseline difference between treated ($\alpha_0+\alpha_{01}$) and control ($\alpha_0$) patients.

A Second-Order Growth Model allowing growth-rates to change with time and adjusting for baseline covariates is:

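$$
y_{it} = \alpha_0 + \alpha_{01} I_i + (\alpha_{10} + \alpha_{11} I_i)\,t + (\alpha_{20} + \alpha_{21} I_i)\,t^2 + \alpha_3'(X_{it} - \bar{X}) + u_i + v_{it} \tag{1b}
$$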

where $\alpha_{21}$ is the rate of change in growth-rates over time between treated ($\alpha_{20}+\alpha_{21}$) and control ($\alpha_{20}$) patients. How health varies as patient characteristics ($X_{it}$) differ from those of a reference, or average, patient ($\bar{X}$) is described by $\alpha_3$.

Derivation of Declining-Effects Model

Declining-Effects Models compare health outcomes of patients assigned to a disease management program (DMP) with those assigned to treatment-as-usual (TAU). Let $y_{it}$ be a ratio scale measuring health status for patient $i$ assessed at time $t$ following baseline ($t \ge 0$). Health status is assumed to be a linear function of: (1) patient characteristics ($\beta_{0i}$), (2) a growth-rate ($\beta_{1i}$), (3) a one-time, lump-sum change in outcome ($\beta_{2i}$) occurring at $t_0$ following baseline, and (4) patient ($u_i$) and time ($v_{it}$) level random variates, or:

$$
y_{it} = \beta_{0i} + \beta_{1i}\,t + \beta_{2i}\,B_t + u_i + v_{it} \tag{2}
$$

where $B_t$ is a step function that equals one whenever $t \ge t_0$ and zero otherwise, with random variates assumed to be identically distributed with zero mean and constant variance, $\mathrm{COV}(u_i, u_j)=0$ for $i \ne j$, and $\mathrm{COV}(v_{is}, v_{it})=0$ for $s \ne t$.

Describing the patient effect, let:

$$
\beta_{0i} = \beta_{00} + \beta_{01}'(X_{it} - \bar{X}) \tag{2a}
$$

where $\beta_{00}$ equals baseline health for the average patient with characteristics $\bar{X}$, and the vector $\beta_{01}$ is the rate at which health varies for patients at time $t$ whose characteristics, $X_{it}$, differ from those of the average patient.

Describing the growth-effect, let:

$$
\beta_{1i} = \beta_{10} + \beta_{11}'(X_{it} - \bar{X}) \tag{2b}
$$

where $\beta_{10}$ is the change in health per unit time for the average patient and the parameter vector $\beta_{11}$ describes how the growth-rate varies for patients at time $t$ whose characteristics differ from those of the average patient. Equation 2b can be expanded to include nonlinear time trends.

Describing the lump-sum effect, let:

$$\beta_{2}=\beta_{20}+\beta_{21}(X_{it}-\bar{X})\tag{2c}$$

where $\beta_{20}$ is the lump-sum change for the average patient, and the parameter vector $\beta_{21}$ describes how the lump-sum change varies for patients whose characteristics differ from those of the average patient.

Finally, all parameters may depend on the service-system protocol to which patients were assigned:

$$\beta_{jk}=\beta_{jk0}+\beta_{jk1}I_i,\qquad j\in\{0,1,2\},\;k\in\{0,1\}\tag{2d}$$

where $I_i$ is a dichotomous variable equal to 1 if the patient was assigned to the DMP service protocol and 0 for TAU, so that $\beta_{jk1}$ is the difference between DMP and TAU. Alternatively, $\beta_{jk1}$ can be interpreted as a rate when $I_i$ is a continuous variable describing provider adherence and/or patient compliance with the service protocol.
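To make the layering of equations 2a through 2d concrete, the sketch below builds one patient's coefficients from the protocol indicator $I_i$ and a single scalar covariate deviation $X_{it}-\bar{X}$. The nested-tuple layout of `b` and the name `patient_params` are hypothetical conveniences, not the authors' notation. As the text allows, `treated` may also be a continuous adherence score.

```python
def patient_params(x_dev, treated, b):
    """Return (beta_0, beta_1, beta_2) for one patient.

    b[j][k] = (b_jk0, b_jk1): equation 2d gives b_jk = b_jk0 + b_jk1 * I_i,
    then equations 2a-2c give beta_j = b_j0 + b_j1 * (X_it - X_bar).
    x_dev is a scalar here; with several covariates b_j1 would be a vector.
    """
    betas = []
    for j in range(3):  # j = 0 patient effect, 1 growth-rate, 2 lump-sum
        b_j0 = b[j][0][0] + b[j][0][1] * treated
        b_j1 = b[j][1][0] + b[j][1][1] * treated
        betas.append(b_j0 + b_j1 * x_dev)
    return tuple(betas)


coef = [
    [(50.0, -2.0), (1.0, 0.0)],   # baseline: TAU 50, DMP 2 lower; +1 per unit of X
    [(-1.0, 0.0), (0.0, 0.0)],    # growth-rate: -1 per period in both groups
    [(-1.0, -4.0), (0.5, 0.0)],   # lump-sum: -1 for TAU, 4 more for DMP
]
print(patient_params(x_dev=2.0, treated=1, b=coef))  # (50.0, -1.0, -4.0)
```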

Balancing model parsimony against misspecification error, we (1) assumed growth-rates were unchanged over relatively short observation periods; (2) focused on how lump-sum changes and growth-rates vary by treatment group; and (3) allowed lump-sum changes to vary with patient characteristics. Thus:

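$$y_{it}=\beta_{000}+\beta_{001}I_i+(\beta_{100}+\beta_{101}I_i)\,t+\left[\beta_{200}+\beta_{201}I_i+\beta_{21}(X_{it}-\bar{X})\right]B_t+u_i+v_{it}\tag{3}$$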

where $\beta_{000}$ is the average baseline value for TAU patients; $\beta_{001}$ is the average adjusted difference in baseline values between DMP and TAU patients; $\beta_{100}$ is the growth-rate for TAU patients; $\beta_{101}$ is the adjusted difference in growth-rates between DMP and TAU; $\beta_{200}$ is the one-time lump-sum change in outcomes for the average TAU patient; and $\beta_{201}$ is the adjusted difference in lump-sum changes in outcomes between DMP and TAU.

Equation 3 was simplified by substituting change scores for raw health values. Change scores are common in the clinical trials literature and are calculated by subtracting the baseline value from each follow-up assessment. Setting $t=0$ and $B_0=0$, equation 3 becomes:

$$y_{i0}=\beta_{000}+\beta_{001}I_i+u_i+v_{i0}\tag{4}$$

Subtracting equation 4 from equation 3 gives equation 5. This assumes that the first follow-up assessment was administered after the lump-sum change, so that $B_t=1$ at every follow-up. It also recalibrates time so that the first follow-up assessment occurs at $t=1$:

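$$\Delta y_{it}=(\beta_{100}+\beta_{101}I_i)\,t+\beta_{200}+\beta_{201}I_i+\beta_{21}(X_{it}-\bar{X})+v_{it}-v_{i0}\tag{5}$$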

with change scores $\Delta y_{it}=y_{it}-y_{i0}$. Rearranging terms and substituting $u_i^{*}=-v_{i0}$, equation 5 can be rewritten as:

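$$\Delta y_{it}=(\beta_{200}+\beta_{201}I_i)+(\beta_{100}+\beta_{101}I_i)\,t+\beta_{21}(X_{it}-\bar{X})+u_i^{*}+v_{it}\tag{6}$$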
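The change-score construction in equations 4 through 6 can be illustrated with a short sketch. Subtracting each patient's baseline removes the patient-level variate $u_i$. The baseline time-level variate, however, enters every change score as $u_i^{*}=-v_{i0}$. The helper name `change_scores` is illustrative.

```python
def change_scores(y):
    """Delta y_it = y_it - y_i0 for each follow-up t >= 1 (y[0] is baseline)."""
    baseline = y[0]
    return [y_t - baseline for y_t in y[1:]]


# Two patients with the same course but different patient-level offsets u_i.
course = [50.0, 45.0, 44.0, 43.0]       # systematic part of y at t = 0..3
patient_a = [y + 6.0 for y in course]    # u_i = +6
patient_b = [y - 3.0 for y in course]    # u_i = -3
print(change_scores(patient_a))          # [-5.0, -6.0, -7.0]
print(change_scores(patient_b))          # [-5.0, -6.0, -7.0]

noisy = list(course)
noisy[0] += 1.5                          # baseline time-level variate v_i0 = +1.5
print(change_scores(noisy))              # every score shifted by u*_i = -1.5
```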

Equation 6 is a more familiar random regression equation, with change scores replacing raw values as the dependent variable. Setting $t=1$ in equation 6, the average initial-change in outcomes between baseline ($t=0$) and the first assessment ($t=1$) is:

$$\overline{\Delta y}_{1}=(\beta_{200}+\beta_{201}I_i)+(\beta_{100}+\beta_{101}I_i)\tag{7}$$

for DMP ($I_i=1$) and TAU ($I_i=0$) patients. The initial-change between baseline and the first assessment equals a lump-sum change ($\beta_{200}+\beta_{201}I_i$) plus the growth accrued during the first period ($\beta_{100}+\beta_{101}I_i$). Adding equation 7 to, and subtracting it from, the right-hand side of equation 6 yields:

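$$\Delta y_{it}=\left[(\beta_{200}+\beta_{100})+(\beta_{201}+\beta_{101})I_i\right]+(\beta_{100}+\beta_{101}I_i)(t-1)+\beta_{21}(X_{it}-\bar{X})+u_i^{*}+v_{it}\tag{8a}$$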

or

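$$\Delta y_{it}=\gamma_{0}+\gamma_{1}I_i+\gamma_{2}(t-1)+\gamma_{3}I_i(t-1)+\gamma_{4}(X_{it}-\bar{X})+u_i^{*}+v_{it}\tag{8b}$$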

with TAU initial-change ($\gamma_0$), TAU growth-rate ($\gamma_2$), initial-effect ($\gamma_1$), growth-effect ($\gamma_3$), and the impact on change scores when patient characteristics differ from those of an average patient ($\gamma_4$). Here $\gamma_0=\beta_{200}+\beta_{100}$, $\gamma_1=\beta_{201}+\beta_{101}$, $\gamma_2=\beta_{100}$, $\gamma_3=\beta_{101}$, and $\gamma_4=\beta_{21}$, and $u_i^{*}$ and $v_{it}$ are patient- and time-level random variates. The model can also be modified to accommodate binary and ordinal outcome measures. The DMP initial-change and growth-rate can be calculated directly by substituting:

$$I_i=1-I_i^{*},\qquad I_i^{*}=\begin{cases}1 & \text{TAU}\\ 0 & \text{DMP}\end{cases}\tag{9a}$$

into Equation 8b to obtain:

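$$\Delta y_{it}=\gamma_{0}^{*}+\gamma_{1}^{*}I_i^{*}+\gamma_{2}^{*}(t-1)+\gamma_{3}^{*}I_i^{*}(t-1)+\gamma_{4}(X_{it}-\bar{X})+u_i^{*}+v_{it},\qquad \gamma_{0}^{*}=\gamma_{0}+\gamma_{1},\;\;\gamma_{2}^{*}=\gamma_{2}+\gamma_{3}\tag{9b}$$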

with DMP initial-change ($\gamma_0^{*}$) and growth-rate ($\gamma_2^{*}$). The effect-size estimates are equal in magnitude but opposite in sign ($\gamma_1=-\gamma_1^{*}$, $\gamma_3=-\gamma_3^{*}$).
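The parameter mappings in equations 8 and 9 are simple enough to check numerically. The sketch below converts the structural coefficients of equation 3 into the $\gamma$ parameters of equation 8b. It then converts those into the DMP-referenced $\gamma^{*}$ parameters of equation 9b. The function names and dictionary keys are hypothetical.

```python
def gammas_from_betas(b100, b101, b200, b201):
    """Equation 8b: TAU-referenced parameters gamma_0 .. gamma_3."""
    return {
        "g0": b200 + b100,  # TAU initial-change
        "g1": b201 + b101,  # initial-effect (DMP minus TAU at t = 1)
        "g2": b100,         # TAU growth-rate
        "g3": b101,         # growth-effect
    }


def dmp_referenced(g):
    """Equation 9b: recode with I*_i = 1 - I_i so DMP becomes the reference."""
    return {
        "g0*": g["g0"] + g["g1"],  # DMP initial-change
        "g1*": -g["g1"],           # same initial-effect, opposite sign
        "g2*": g["g2"] + g["g3"],  # DMP growth-rate
        "g3*": -g["g3"],           # same growth-effect, opposite sign
    }


g = gammas_from_betas(b100=-1.0, b101=1.0, b200=-1.0, b201=-5.0)
print(g)                  # {'g0': -2.0, 'g1': -4.0, 'g2': -1.0, 'g3': 1.0}
print(dmp_referenced(g))  # {'g0*': -6.0, 'g1*': 4.0, 'g2*': 0.0, 'g3*': -1.0}
```

These illustrative values describe a catch-up pattern. DMP patients hold steady ($\gamma_2^{*}=0$) after a 4-point initial advantage, while TAU patients improve by 1 point per period.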

Effects are said to be declining if, following an initial-effect ($\gamma_1<0$), TAU has a more favorable growth-rate ($\gamma_2<\gamma_2^{*}$, or equivalently $\gamma_3=\gamma_2^{*}-\gamma_2>0$). TAU is catching up if the declining-effects result from TAU patients improving ($\gamma_2<0$) while DMP patients get no worse during the postperiod ($\gamma_2<\gamma_2^{*}\le 0$). By contrast, both DMP and TAU patients are said to face an inevitable decline if symptoms return to patients in both groups during the postperiod ($0<\gamma_2<\gamma_2^{*}$). Effects are constant if, following an initial-effect ($\gamma_1<0$), DMP and TAU growth-rates are equal ($\gamma_2^{*}=\gamma_2$, or $\gamma_3=0$). Effects are increasing if DMP patients continue to improve at a faster rate than TAU ($\gamma_3<0$).
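The definitions above can be written as a small decision rule. This sketch assumes a symptom scale, where lower is better. It gates every label on an initial-effect ($\gamma_1<0$), which simplifies the text's wording for increasing effects. The `tol` argument is a hypothetical stand-in for the significance test an analyst would use to decide whether $\gamma_3$ differs from zero.

```python
def classify_effects(g1, g2, g3, tol=0.0):
    """Label the post-period pattern implied by equation 8b estimates.

    Symptom scale assumed (lower is better). `tol` is a hypothetical cutoff
    for treating g3 as zero; in practice that call would rest on a
    significance test rather than a fixed threshold.
    """
    if g1 >= 0:
        return "no initial-effect"
    if abs(g3) <= tol:
        return "constant"
    if g3 < 0:
        return "increasing"
    g2_star = g2 + g3  # DMP growth-rate; g3 > 0 here, so effects are declining
    if g2 < 0 and g2_star <= 0:
        return "declining: TAU catching up"
    if g2 > 0:
        return "declining: inevitable decline"
    return "declining"


for est in [(-4.0, -1.0, 1.0), (-4.0, 0.5, 1.0), (-4.0, -1.0, 0.0), (-4.0, -1.0, -0.5)]:
    print(est, classify_effects(*est))
```

The final fall-through label covers declining cases the text does not name. Examples are TAU improving while DMP worsens ($\gamma_2<0<\gamma_2^{*}$), or TAU flat while DMP worsens ($\gamma_2=0$).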

Details of Simulation Models

Let $B_{it}=0$ for all patients $i$ at baseline ($t<1$) and $B_{it}=1$ at follow-up assessments ($t\ge 1$), so that the lump-sum change occurs at the first follow-up. Data were generated to represent the following test patterns:

- [#0] No-effects: $y_{it}=50+u_i+v_{it}$
- [#1] Increasing-effects with lump-sum: $y_{it}=50-t-2I_i t-B_{it}-2I_iB_{it}+u_i+v_{it}$
- [#2] Increasing-effects that diminish: $y_{it}=50-2.25t-4.5I_i t+0.25t^2+0.5I_i t^2+u_i+v_{it}$
- [#3] Constant-effects: $y_{it}=50-t-B_{it}-4I_iB_{it}+u_i+v_{it}$
- [#4] Small declining-effects: $y_{it}=50-t+I_i t-B_{it}-5I_iB_{it}+u_i+v_{it}$
- [#5] Declining-effects: $y_{it}=50-t+1.33I_i t-B_{it}-5.33I_iB_{it}+u_i+v_{it}$
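The six test patterns can be tabulated without noise to confirm the treated-minus-control gap each one builds in. This sketch makes three assumptions: quarterly assessments indexed $t=0,\dots,4$, $B_{it}=1$ from the first follow-up ($t\ge 1$) as the patterns imply, and random variates set to zero. The function names are illustrative.

```python
def pattern_mean(pattern, t, treated):
    """Noise-free y_it for simulation patterns #0-#5 (u_i = v_it = 0)."""
    i = 1 if treated else 0
    b = 1 if t >= 1 else 0  # B_it switches on at the first follow-up
    if pattern == 0:
        return 50.0
    if pattern == 1:
        return 50 - t - 2 * i * t - b - 2 * i * b
    if pattern == 2:
        return 50 - 2.25 * t - 4.5 * i * t + 0.25 * t**2 + 0.5 * i * t**2
    if pattern == 3:
        return 50 - t - b - 4 * i * b
    if pattern == 4:
        return 50 - t + 1 * t * i - b - 5 * i * b
    if pattern == 5:
        return 50 - t + 1.33 * t * i - b - 5.33 * i * b
    raise ValueError(f"unknown pattern {pattern}")


def gap(pattern, t):
    """DMP-minus-TAU difference in y at time t."""
    return pattern_mean(pattern, t, True) - pattern_mean(pattern, t, False)


for p in range(6):
    print(p, [round(gap(p, t), 2) for t in range(5)])
```

Every pattern except #0 starts from a 4-point DMP advantage at the first follow-up. Pattern #3 holds that gap, while #1 and #2 widen it. Pattern #4 narrows it by 1 point per period. Pattern #5 closes it to about zero by $t=4$.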

Models testing for initial-, declining-, and growth-effects included:

- (A) First-Order Growth: $\Delta y_{it}=at+btI_i$ (growth-effect: $b<0$);
- (B) Second-Order Growth: $\Delta y_{it}=at+btI_i+ct^2+dt^2I_i$ (growth-effect: $b<0$; diminishing growth-effect: $d>0$; declining-effects: $b<0$ and $b+8d>0$, that is, the slope of the treatment difference, $b+2dt$, is positive at $t=4$); and
- (C) Declining-Effect: $\Delta y_{it}=e+fI_i+g(t-1)+hI_i(t-1)$ (initial-effect: $f<0$; growth-effect: $h<0$; declining-effects: $f<0$ and $h>0$).
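The sketch below fits models (A) and (C) to noise-free change scores from pattern #5 using ordinary least squares in NumPy. It assumes $B_{it}=1$ for $t\ge 1$ and follow-ups at $t=1,\dots,4$. It is a simplification: OLS ignores the patient-level random effect $u_i^{*}$ that the hierarchical models in the text estimate. Only the point estimates are meaningful here, not standard errors or power. The helper names are hypothetical.

```python
import numpy as np


def pattern5_mean(t, i):
    """Noise-free y_it for declining-effects pattern #5 (B_it = 1 for t >= 1)."""
    b = 1 if t >= 1 else 0
    return 50 - t + 1.33 * t * i - b - 5.33 * i * b


def design(model, t, treated):
    """Regressor matrix for model (A) first-order growth or (C) declining-effect."""
    t = np.asarray(t, dtype=float)
    i = np.asarray(treated, dtype=float)
    if model == "A":   # dy = a*t + b*t*I
        return np.column_stack([t, t * i])
    if model == "C":   # dy = e + f*I + g*(t-1) + h*I*(t-1)
        return np.column_stack([np.ones_like(t), i, t - 1, i * (t - 1)])
    raise ValueError(f"unknown model {model!r}")


def fit_ols(model, t, treated, dy):
    """Point estimates only; the random-effects structure is ignored."""
    X = design(model, t, treated)
    coef, *_ = np.linalg.lstsq(X, np.asarray(dy, dtype=float), rcond=None)
    return coef


# Change scores for follow-ups t = 1..4 in each group.
rows = [(t, i) for i in (0, 1) for t in range(1, 5)]
t_obs = [t for t, _ in rows]
i_obs = [i for _, i in rows]
dy = [pattern5_mean(t, i) - pattern5_mean(0, i) for t, i in rows]

print("model C (e, f, g, h):", np.round(fit_ols("C", t_obs, i_obs, dy), 3))
print("model A (a, b):      ", np.round(fit_ols("A", t_obs, i_obs, dy), 3))
```

On this pattern, model (C) recovers $e=-2$, $f=-4$, $g=-1$, and $h=1.33$ exactly. Model (A) forces the change-score gap through zero at baseline and lets it grow linearly. It therefore returns a negative growth-effect ($b\approx-0.45$), even though the true gap is shrinking.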

Footnotes

This project was supported, in part, by the Department of Veterans Affairs Health Services Research and Development Research Career Scientist Award (RCS# 92-403) and the Texas Medication Algorithm Project (TMAP) through grants from the Robert Wood Johnson Foundation (#031023), Meadows Foundation (#97040055), the National Institute of Mental Health (#5-R24-MH53799), Mental Health Connections and the Texas Department of Mental Health and Mental Retardation, Nanny Hogan Boyd Charitable Trust, Betty Jo Hay Distinguished Chair in Mental Health, the Rosewood Corporation Chair in Biomedical Science, and the Department of Psychiatry of the University of Texas Southwestern Medical Center at Dallas, and Astra-Zeneca Pharmaceuticals, Abbott Laboratories, Bristol-Myers Squibb Company, Eli Lilly and Company, Forest Laboratories, Glaxo Wellcome, Inc., Janssen Pharmaceutica, Novartis Pharmaceuticals Corporation, Organon Inc., Pfizer, Inc., SmithKline Beecham, U.S. Pharmacopoeia, and Wyeth-Ayerst Laboratories. Opinions expressed herein, however, are those of the authors and do not necessarily reflect the views of supporting agencies and sponsors.

REFERENCES

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. Fourth Edition (DSM-IV). Washington, DC: American Psychiatric Association; 1994.

- American Psychiatric Association. American Psychiatric Association Practice Guideline for Psychiatric Evaluation of Adults. Washington, DC: American Psychiatric Association; 1995.

- Andrews G. “Placebo Response in Depression: Bane of Research, Boon to Therapy”. British Journal of Psychiatry. 2001;178:192–194. doi: 10.1192/bjp.178.3.192.

- Arndt S, Turvey C, Coryell WH, Dawson JD, Leon AC, Akiskal HS. “Charting Patients’ Course: a Comparison of Statistics Used to Summarize Patient Course in Longitudinal and Repeated Measures Studies”. Journal of Psychiatric Research. 2000;34:105–13. doi: 10.1016/s0022-3956(99)00044-8.

- Berndt ER, Finkelstein SN, Greenberg PE, Howland RH, Keith A, Rush AJ, Russell J, Keller MB. “Workplace Performance Effects from Chronic Depression and its Treatment”. Journal of Health Economics. 1998;17:511–35. doi: 10.1016/s0167-6296(97)00043-x.

- Bryk AS, Raudenbush SW. Hierarchical Linear Models. Newbury Park, CA: Sage; 1992.

- Bryk AS, Raudenbush SW, Congdon RT Jr. Hierarchical Linear and Nonlinear Modeling, with HLM/2L and HLM/3L Programs. Chicago: Scientific Software International; 1996. (release 4)

- Chi EM, Reinsel GC. “Models of Longitudinal Data with Random Effects and AR(1) Errors”. Journal of the American Statistical Association. 1989;84:452–9.

- Chiles JA, Miller AL, Crismon ML, Rush AJ, Krasnoff AS, Shon SS. “The Texas Medication Algorithm Project: Development and Implementation of the Schizophrenia Algorithm”. Psychiatric Services. 1999;50:69–74. doi: 10.1176/ps.50.1.69.

- Crismon ML, Trivedi M, Pigott TA, Rush AJ, Hirschfeld MA, Kahn DA, DeBattista C, Nelson JC, Nierenberg AA, Sackeim HA, Thase ME, the Texas Consensus Conference Panel on Medication Treatment of Major Depressive Disorder. “The Texas Medication Algorithm Project: Report of the Texas Consensus Conference Panel on Medication Treatment of Major Depressive Disorder”. Journal of Clinical Psychiatry. 1999;60:142–56.

- Donabedian A. Benefits in Medical Care Programs. Cambridge, MA: Harvard University Press; 1976.

- Field MJ, Lohr KN. Clinical Practice Guidelines: Directions for a New Program. U.S. Institute of Medicine Committee to Advise the Public Health Service on Clinical Practice Guidelines. Washington, DC: U.S. Department of Health and Human Services, National Academy Press; 1990.

- Gibbons RD, Hedeker D, Elkin I, Waternaux C, Kraemer HC, Greenhouse JB, Shea T, Imber SD, Sotsky SM, Watkins JT. “Some Conceptual and Statistical Issues in Analysis of Longitudinal Psychiatric Data: Application to the NIMH Treatment of Depression Collaborative Research Program Dataset”. Archives of General Psychiatry. 1993;50:739–50.

- Gilbert DA, Altshuler KZ, Rago WV, Shon SP, Crismon ML, Toprac MG, Rush AJ. “Texas Medication Algorithm Project: Definitions, Rationale and Methods to Develop Medication Algorithms”. Journal of Clinical Psychiatry. 1998;59:345–51.

- Goldstein HI. “Multilevel Mixed Linear Model Analysis Using Iterative Generalized Least Squares”. Biometrika. 1986;73:43–56.

- Grossman M. “On the Concept of Health Capital and the Demand for Health”. Journal of Political Economy. 1972;80:223–55.

- Hedeker D. “Random Regression Models with Autocorrelated Errors”. Doctoral dissertation, University of Chicago; 1989.

- Hedeker D, Gibbons RD. “A Random-Effects Ordinal Regression Model for Multilevel Analysis”. Biometrics. 1994;50:933–44.

- Hrobjartsson A, Gotzsche PC. “Is the Placebo Powerless? An Analysis of Clinical Trials Comparing Placebo with No Treatment”. New England Journal of Medicine. 2001;344:1594–1601. doi: 10.1056/NEJM200105243442106.

- Jennrich RI, Schluchter MD. “Unbalanced Repeated Measures Models with Structured Covariance Matrices”. Biometrics. 1986;42:805–20.

- Jobson KO, Potter WZ. “International Psychopharmacology Algorithm Project Report: Introduction”. Psychopharmacology Bulletin. 1995;31:457–9.

- Kashner TM, Rosenheck R, Campinell AB, Suris A, Crandall R, Garfield NJ, Lapuc P, Pyrcz K, Soyka T, Wicker A. “Impact of Work Therapy on Health Status among Homeless, Substance Dependent Veterans: A Randomized Controlled Trial”. Archives of General Psychiatry. 2002;59(10):938–44. doi: 10.1001/archpsyc.59.10.938.

- Kashner TM, Rush AJ, Altshuler KZ. “Measuring Costs of Guideline-Driven Mental Health Care: The Texas Medication Algorithm Project”. Journal of Mental Health Policy and Economics. 1999;2:111–21. doi: 10.1002/(sici)1099-176x(199909)2:3<111::aid-mhp52>3.0.co;2-m.

- Kirsch I. “Are Drug and Placebo Effects in Depression Additive?”. Biological Psychiatry. 2000;47:733–5. doi: 10.1016/s0006-3223(00)00832-5.

- Laird NM, Ware JH. “Random-Effects Models for Longitudinal Data”. Biometrics. 1982;38:963–74.

- La Ruche G, Lorougnon F, Digbeu N. “Therapeutic Algorithms for the Management of Sexually Transmitted Diseases at the Peripheral Level in Côte d’Ivoire: Assessment of Efficacy and Cost”. Bulletin of the World Health Organization. 1995;73:305–13.

- Lavori PW. “ANOVA, MANOVA, and My Black Hen”. Archives of General Psychiatry. 1990;47:775–8. doi: 10.1001/archpsyc.1990.01810200083012.

- Lavori PW. “Placebo Control Groups in Randomized Treatment Trials: A Statistician's Perspective”. Biological Psychiatry. 2000;47:717–23. doi: 10.1016/s0006-3223(00)00838-6.

- Louis TA, Spiro A III. “Fitting First Order Auto-regressive Models with Covariates (Technical Report)”. Cambridge, MA: Harvard University School of Public Health, Department of Biostatistics; 1984.

- Lubarsky DA, Glass PSA, Ginsberg B, Dear GdeL, Dentz ME, Gan TJ, Sanderson IC, Mythen MG, Dufore S, Pressley C, Gilbert WC, White WD, Alexander ML, Coleman RL, Rogers M, Reves JG. “The Successful Implementation of Pharmaceutical Practice Guidelines: Analysis of Associated Outcomes and Cost Savings”. Anesthesiology. 1997;86:1145–60. doi: 10.1097/00000542-199705000-00019.

- McDowell I, Newell C. Measuring Health: A Guide to Rating Scales and Questionnaires. New York: Oxford University Press; 1996.

- McFadden ER Jr, Elsanadi N, Dixon L, Takacs M, Deal EC, Boyd KK, Idemoto BK, Broseman LA, Panuska J, Hammons T, Smith B, Caruso F, McFadden CB, Shoemaker L, Warren EL, Hejal R, Strauss L, Gilbert IA. “Protocol Therapy for Acute Asthma: Therapeutic Benefits and Cost Savings”. American Journal of Medicine. 1995;99:651–61. doi: 10.1016/s0002-9343(99)80253-8.

- Maritz JS. Empirical Bayes Methods. London: Methuen; 1970.

- Overall JE, Gorham DR. “Introduction—The Brief Psychiatric Rating Scale (BPRS): Recent Developments in Ascertainment and Scaling”. Psychopharmacology Bulletin. 1988;24:97–9.

- Rush AJ, Crismon ML, Toprac M, Trivedi MH, Rago WV, Shon S, Altshuler KZ. “Consensus Guidelines in the Treatment of Major Depressive Disorder”. Journal of Clinical Psychiatry. 1998;59(20):73–84.

- Rush AJ, Giles DE, Schlesser MA, Fulton CL, Weissenburger JE, Burns CT. “The Inventory of Depressive Symptomatology (IDS): Preliminary Findings”. Psychiatry Research. 1986;18:65–87. doi: 10.1016/0165-1781(86)90060-0.

- Rush AJ, Gullion CM, Basco MR, Jarrett RB, Trivedi MH. “The Inventory of Depressive Symptomatology (IDS): Psychometric Properties”. Psychological Medicine. 1996;26:477–86. doi: 10.1017/s0033291700035558.

- Rush AJ, Prien RF. “From Scientific Knowledge to the Clinical Practice of Psychopharmacology: Can the Gap Be Bridged?”. Psychopharmacology Bulletin. 1995;31(1):7–20.

- Rush AJ, Rago WV, Crismon ML, Toprac MG, Shon SP, Suppes T, Miller AL, Trivedi MH, Swann AIC, Biggs MM, Shores-Wilson K, Kashner TM, Pigott T, Chiles JA, Gilbert DA, Altshuler KZ. “Medication Treatment for the Severely and Persistently Mentally Ill: The Texas Medication Algorithm Project”. Journal of Clinical Psychiatry. 1999;60:284–91. doi: 10.4088/jcp.v60n0503.

- Siegel S. Nonparametric Statistics for the Behavioral Sciences. New York: McGraw-Hill; 1956.

- Stanek EJ III, Diehl SR. “Growth Curve Models of Repeated Binary Response”. Biometrics. 1988;44:973–83.

- Suppes T, Swann A, Dennehy EB, Habermacher E, Mason M, Crismon L, Toprac M, Rush AJ, Shon S, Altshuler KZ. “Texas Medication Algorithm Project: Development and Feasibility Testing of a Treatment Algorithm for Patients with Bipolar Disorder”. Journal of Clinical Psychiatry. 2001;62:439–47.

- Ventura J, Green MF, Shaner A, Liberman RP. “Training and Quality Assurance with the Brief Psychiatric Rating Scale: ‘The Drift Busters’”. International Journal of Methods for Psychiatric Research. 1993;3:221–44.

- VHA Directive 96-053. Washington, DC: Department of Veterans Affairs, Veterans Health Administration; 1996.

- Ware JE, Kosinski M, Keller SD. “A 12-item Short-form Health Survey (SF-12): Construction of Scales and Preliminary Tests of Reliability and Validity”. Medical Care. 1996;34:220–33. doi: 10.1097/00005650-199603000-00003.

- Ware JH. “Linear Models for the Analysis of Longitudinal Studies”. American Statistician. 1985;39:95–101.

- Willett JB, Ayoub CC, Robinson D. “Using Growth Modeling to Examine Systematic Differences in Growth: An Example of Change in the Functioning of Families at Risk of Maladaptive Parenting, Child Abuse, or Neglect”. Journal of Consulting and Clinical Psychology. 1991;59:38–47. doi: 10.1037//0022-006x.59.1.38.