Abstract

Objective

To investigate whether a performance-based contracting (PBC) system provides incentives for nonprofit providers of substance abuse treatment to select less severe clients into treatment.

Data Sources

Standardized admission and discharge data from the Maine Addiction Treatment System (MATS), provided by the Maine Office of Substance Abuse (OSA) for fiscal years 1991–1995. The data contain demographic, substance abuse, and social functioning information on clients of programs receiving public funding.

Study Design

We focused on OSA clients (i.e., those patients whose treatment cost was covered by the funding from OSA) and Medicaid clients in outpatient programs. Clients were identified as being “most severe” or not. We compared the likelihood for OSA clients to be “most severe” before PBC and after PBC using Medicaid clients as the control. Multivariate regression analysis was employed to predict the marginal effect of PBC on the probability of OSA clients' being most severe after controlling for other factors.

Principal Findings

The percentage of OSA outpatient clients classified as most severe users dropped by 7 percentage points (p<=0.001) after the introduction of performance-based contracting, compared with an increase of 2 percentage points for Medicaid clients. The regression results also showed that PBC had a significantly negative marginal effect on the probability of OSA clients being most severe.

Conclusions

Performance-based contracting gave providers of substance abuse treatment financial incentives to treat less severe OSA clients in order to improve their performance outcomes. Fewer OSA clients with the greatest severity were treated in outpatient programs with the implementation of PBC. These results suggest that regulators, or payers, should evaluate programs comprehensively taking this type of selection behavior into consideration.

Keywords: Performance-based contracting system, selection, substance abuse treatment

State and local governments are major sources of financing for substance abuse services. In 1997, 27 percent of total mental health and substance abuse spending was funded by state or local governments (Mark et al. 2000). Like other payers, governments need efficient forms of payment to offer providers financial incentives to achieve cost-efficiency and to allow payers to monitor performance. Performance-based contracting (PBC) has been promoted by the Institute of Medicine (IOM) as a “promising mechanism to manage and ensure the effectiveness of substance abuse services” (Institute of Medicine 1990). Generally, performance-based contracting ties continuation of funding or the level of funding to certain treatment outcomes. Maine implemented a PBC system in fiscal year 1993. In this system, nonprofit providers (programs) of substance abuse treatment received budgets each year from the state government to finance the cost of treating clients who could not pay. Maine monitored and evaluated programs’ performance to “redirect funds, away from less efficient programs and toward programs which have proven themselves able to ‘produce’ good treatment outcomes” (Commons, McGuire, and Riordan 1997).

Performance-based contracting is designed to encourage providers to provide care to high-priority state clients in a cost-effective manner, but it may have unintended consequences: selection incentives may lead to behavior against the payer's interests. The basic issue is that since providers’ performance is rewarded and usually measured by the average performance of clients at discharge, providers have incentives to select the less severely ill clients who are more likely to have better performance levels at discharge in the first place. Thus, providers may avoid treating more severely ill clients.

Problems related to selection have attracted attention in the literature on optimal payment systems (for an excellent discussion, see Newhouse 1996). When providers are paid on the basis of cost, there is no direct financial incentive to select a low-cost patient over a high-cost one. In a prospective payment system, however, providers receive a fixed fee for each patient. Treatment cost for severely ill patients can be above the fixed fee, motivating providers with financial incentives to “dump” high-cost patients to improve profitability. Researchers have looked for empirical evidence of a selection problem in various contexts, but data limitations have precluded directly testing whether more severely ill patients are denied treatment. Instead, most studies compare lengths of stay or other related factors across different reimbursement systems (Newhouse 1989; Hill and Brown 1990; Weissert and Musliner 1992; Ellis and McGuire 1996).

The PBC system is not a prospective payment system. It allocates public funds among providers, who are then expected to deliver the contracted units of services. Therefore, funding is not tied to specific individuals. Because of this difference, the incentives for selecting favorable patients also vary. Under prospective payment, more severely ill patients might be rejected for treatment because their treatment costs are higher than the fixed payment and providers will lose money by treating them. Under a PBC system, however, more severely ill patients might be rejected for treatment because they will lower the providers’ overall performance. Providers with bad performance can be punished with less funding in the next period. Both systems provide incentives for health care providers to select people.

This study provides important empirical evidence of selection problems in the health care sector for the first time by examining whether nonprofit providers have selected less severely ill clients into their treatment programs in one specific performance-based contracting system, the PBC system in Maine. While several studies have examined other aspects of the PBC system in Maine, this study's primary contribution is directly testing for selection of patients by their severity levels.

Commons, McGuire, and Riordan (1997) studied the direct effect of this system on providers’ performance. They found that providers’ performance effectiveness was positively related to the innovation of PBC. In post-PBC periods, 60 percent of the programs achieved the effectiveness indicators defined by the state regulator while only 50 percent did so prior to PBC implementation. At the same time, the PBC system might cause some unintended provider behavior such as misreporting, which could make performance look better without actually improving the treatment quality (Commons and McGuire 1997). Performance improvement cannot be completely interpreted as the result of an increase in treatment quality without controlling for the unintended effect.

Given that performance evaluation is based on the information supplied by providers, Lu (1999) argued that providers had an incentive to misreport treatment outcomes. By separating the impact of the PBC on treatment effectiveness into a “true effect” that captured providers’ improved effect induced by PBC and a “reporting effect” that was the result of providers’ misreporting, she found that misreporting existed after the implementation of PBC. The present paper tests for the existence of selection behavior induced by the PBC system.

Background of Maine Performance-Based Contracting, and Hypotheses

The Office of Substance Abuse in Maine (OSA) was created to plan, implement, and coordinate all substance abuse treatment activities and services. The OSA allocated the funding it received from the legislature across nonprofit providers of substance abuse treatments through provider contracts. Providers used this funding to finance the cost of uninsured indigent clients, who are called “OSA clients” in this study.

In addition to OSA clients, nonprofit providers (of care in outpatient, inpatient, and other modalities) also treated clients covered by Medicaid, other insurance policies, and a few self-pay clients. The contracts required providers to submit service and financial reports, as well as Maine Addiction Treatment System (MATS) admission and discharge forms for every client treated in their programs.

Through fiscal year (FY) 1992, providers were required to provide contracted units of services; therefore, the outcomes of treatments did not affect funding. OSA's allocations to providers were based on the amount of funding they received in the prior year. A performance-based contracting system (PBC) designed to give nonprofit providers more incentives to care for high-priority clients in a cost-effective manner was implemented in FY 1993. The new contract specified that the performance outcomes of these programs would influence the allocation of funding for the next year.

The performance indicators included three categories: “efficiency,” “effectiveness,” and “special populations.” “Efficiency” dealt with the units of treatment that providers had to deliver in the contract year. “Effectiveness” specified the minimum percentage of discharged clients who had achieved certain outcomes, such as abstinence for 30 days before discharge or reduction in the frequency of drug use before discharge. Finally, “special populations” required that a target percentage of clients be drawn from specific populations that were considered difficult to treat, such as homeless people, youths, females, and intravenous (IV) drug users. The effectiveness and special population standards were measured for all discharged clients, regardless of payment source.

The contract specified separate performance standards for different modalities (i.e., outpatient, inpatient, detoxification). This study examines the incentive problem in outpatient programs, the modality used by the majority of clients as a result of the shift of care from inpatient to outpatient settings. Table 1 lists all the performance indicators for outpatient programs. The efficiency standards required outpatient programs to deliver at least 90 percent of contracted units of treatment. They also required that at least 70 percent of these units be delivered to primary clients. There are a total of 12 effectiveness indicators, including substance abuse measures, social role functioning outcomes, and relationship measures. Programs were evaluated as “good” as long as they met any 8 of these indicators. Finally, programs were required to meet any 5 of the 8 special population standards.

Table 1.

Performance Measures and Standards: 1993

| Performance measure | Standard |
|---|---|
| Efficiency Standards | |
| Minimum service delivery (% of contracted amount) | 90% |
| Minimum service delivery to primary clients (% of units delivered) | 70% |
| Number of standards to be met* | 2 of 2 |
| Effectiveness Standards | |
| Abstinence/drug free 30 days prior to termination | 70%** |
| Reduction of use of primary SA problem | 60% |
| Maintaining employment | 90% |
| Employment improvement | 30% |
| Employability | 3% |
| Reduction in number of problems with employer | 70% |
| Reduction in absenteeism | 50% |
| Not arrested for OUI offense during treatment | 70% |
| Not arrested for any offense | 95% |
| Participation in self help during treatment | 40% |
| Reduction of problems with spouse/significant other | 65% |
| Reduction of problems with other family members | 65% |
| Number of standards to be met | 8 of 12 |
| Special Population Standards | |
| Female | 30% |
| Age: 0–19 | 10% |
| Age: 50+ | 6% |
| Corrections | 25% |
| Homeless | 1% |
| Concurrent psychological problems | 8% |
| History of IV drug use | 12% |
| Poly-drug use | 35% |
| Number of standards to be met | 5 of 8 |

*Number of standards to be met is the number of indicators the program must meet to be deemed to have performed in that category.

**The minimum percent of total clients who must meet the indicator for the program to be deemed to have met that indicator.

Source: Commons, McGuire, and Riordan (1997)
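The "k of n" logic in Table 1 can be illustrated with a short sketch. This is only an illustration under stated assumptions, not OSA's actual scoring procedure: the function names, the dictionary keys, and the data layout are hypothetical, and only the threshold values are taken from Table 1.

```python
# Illustrative sketch only: names and data layout are hypothetical.
# Threshold values are the outpatient effectiveness standards from Table 1.

EFFECTIVENESS_STANDARDS = {
    "abstinent_30_days": 0.70,
    "reduced_primary_use": 0.60,
    "maintained_employment": 0.90,
    "employment_improvement": 0.30,
    "employability": 0.03,
    "fewer_employer_problems": 0.70,
    "reduced_absenteeism": 0.50,
    "no_oui_arrest": 0.70,
    "no_arrest": 0.95,
    "self_help_participation": 0.40,
    "fewer_spouse_problems": 0.65,
    "fewer_family_problems": 0.65,
}
EFFECTIVENESS_REQUIRED = 8  # "8 of 12" in Table 1


def indicators_met(observed, standards):
    """Return the indicators whose observed client share reaches the minimum.

    `observed` maps an indicator name to the share of discharged clients
    achieving that outcome; a missing indicator counts as not met.
    """
    return [name for name, minimum in standards.items()
            if observed.get(name, 0.0) >= minimum]


def category_met(observed, standards, required):
    """A category is satisfied when at least `required` indicators are met."""
    return len(indicators_met(observed, standards)) >= required
```

Because each indicator is a share of all discharged clients, admitting clients who are less likely to reach an outcome lowers the observed share and can push a program below the required count, which is the selection incentive discussed in the text.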

With the implementation of PBC, providers who achieved good outcomes could be rewarded with more funding in the fiscal year.1 Provider performance outcomes were measured by the average performance of all clients in the program at the time of discharge. This system created incentives for providers to select clients who were easier to treat in order to improve performance outcomes. The OSA tried to mitigate the selection problem by including special populations as one of the performance indicators.2 The policy may nonetheless create leeway for providers to select patients by unregulated but predictive factors such as severity level.

To identify a selection effect, this study focuses on OSA and Medicaid clients, who accounted for 46.8 percent of total outpatient visits. There are two key reasons for restricting the sample to these groups. First, OSA and Medicaid clients were alike in income and substance abuse habits; second, the selection problem triggered by PBC is relevant only for OSA clients. Therefore, the Medicaid group is a control group to study the selection problem in the OSA clients.

This study's basic assumption is that nonprofit providers were seeking to maximize total revenue from all sources. Revenue maximization is one of the standard assumptions in the literature of nonprofit firms (Pauly and Redisch 1973).3 We adopted this assumption based on the fact that the nonprofit providers in our study were heavily regulated and no profit was allowed at the end of each fiscal year. Given this objective, there was a clear incentive for these providers to reject the most severely ill OSA clients who could cause their performance outcomes to deteriorate. Since a provider's fixed budget from OSA is tied with contracted units of service from nonpaying clients, not with specific clients, the provider could avoid the most severely ill OSA clients without suffering a loss of current revenue. Rejecting the unfavorable OSA clients could improve the possibility of receiving more revenue in the next funding period.

This selection issue, however, was more complicated in the case of Medicaid clients. On the one hand, since Medicaid reimbursed providers per unit of treatment, each Medicaid client was a source of revenue; therefore there was an incentive for providers to treat all Medicaid clients. On the other hand, because the effectiveness indicators (used by OSA for future funding decisions) included all discharged clients (i.e., OSA, Medicaid, and other), providers had an incentive to reject the most severely ill Medicaid clients to improve the outcome. Therefore, there was a tradeoff between today's revenue (from Medicaid) and tomorrow's funding (from OSA) as far as undesirable Medicaid clients were concerned. On average, Medicaid reimbursed providers $62 per unit of treatment in post-PBC periods, which was high enough to give providers an incentive to take any available Medicaid patient, even though the most severe ones might adversely affect future funding from OSA. Thus, selection was not a major issue in the Medicaid sample. Even if there was selection in the Medicaid sample, it should be more pronounced in the OSA sample.

Methods

Data

Client-level data came from OSA's standardized admission and discharge assessment tool, the Maine Addiction Treatment System. Providers were required by OSA to collect episode-based information about the client upon admission, and to file the discharge forms once the client left the program for each episode. The MATS information includes demographic variables (age, race, sex, education, income), substance abuse variables (type of drug, severity, frequency of use, age at first use), the service variable (units of treatment), and social functioning variables (including problems with family, employers, or school, as well as absenteeism caused by substance use).

This study focuses on one major substance abuse modality, outpatient programs, using data from fiscal years 1991–1995; FY 1991 and FY 1992 are pre-PBC, while the last three years are post-PBC. It excludes nonprimary clients (i.e., those considered to be affected by others or codependents), since many characteristics are not applicable to this group. Only OSA and Medicaid clients are retained.

Dependent Variable: Severity Level of Substance Abuse

In the MATS form, two variables related primarily to the severity level of substance abuse. One was the client self-reported frequency of drug use (no drug listed, no use in past month, once per month, two to three days per month, once per week, two to three days per week, four to six days per week, once daily, two to three times daily, or more than three times daily). The other was counselor-assessed severity: a client was identified as a “casual/experimental user,” “lifestyle-involved user,” “lifestyle-dependent user,” or “dysfunctional” user. The rate of reduction, one of the performance indicators, was measured by the percentage of clients who experienced less frequency of drug use at discharge compared to that at admission. This gives providers an incentive to overreport clients’ frequency of drug use at the time of admission. There was no incentive, however, for providers to misreport the variable that reflects the type of user. Therefore, the counselor-assessed severity variable was used to measure severity. Clients were grouped into the most severe users (dysfunctional users) and less severe users (i.e., those who were casual/experimental, lifestyle-involved, and lifestyle-dependent users).4

Preliminary regression analysis (results not presented) showed that the most severe users were less likely to be abstinent at discharge after controlling for other factors. Hence, providers had an incentive to avoid the most severe OSA clients to improve the overall rate of abstinence.

Independent Variable

Table 2 lists the independent variables. A dummy variable, Medicaid, tested whether Medicaid clients differed from OSA clients. The dummy variable PBC measured the effect of PBC on both Medicaid and OSA clients, and the interaction term OSA*PBC separated the effect of PBC on OSA clients from that on Medicaid clients. We also controlled for other covariates that may affect clients’ severity, including clients’ demographic variables (sex, education, income, age, and marital status) and other personal characteristics related to the client's substance abuse (age at first use, the number of prior treatment episodes in any drug use program, the legal status at admission, status of concurrent psychiatric problems, IV drug use, poly-drug usage, and homeless status). The residence location was included to control for any potential geographic influences. Table 3 provides summary statistics for these variables.

Table 2.

Definitions of Variables

| Variables | Definitions |
|---|---|
| Medicaid | = 1 if the client was a Medicaid client, 0 if an OSA client |
| PBC | = 0 for FY 1991 and 1992; = 1 for FY 1993–1995 |
| OSA*PBC | = 1 if an OSA client was treated in FY 1993–1995 |
| Demographic Characteristics | |
| Income | Monthly household income |
| Age | Age of the client |
| Female | = 1 if the client is female |
| Homeless | = 1 if the client is homeless |
| Urban | = 1 if the client's residence is urban |
| Edu | Highest grade completed |
| Married | = 1 if the client is married |
| Legal | = 1 if the client had a legal problem at the time of admission |
| Usage Characteristics | |
| Psypbm | = 1 if the client had a concurrent psychiatric problem at the time of admission |
| IVuser | = 1 if the client was an IV drug user at the time of admission |
| Polyuser | = 1 if the client was a poly-drug user at the time of admission |
| Use-age | Age at first drug use |
| Pretx | Number of prior treatment episodes in drug/alcohol treatment program |
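As a rough illustration of how the key indicators in Table 2 and the binary severity outcome could be coded from a client record, consider the sketch below. It assumes a simple record layout that is not taken from the MATS forms: the function name, the payer strings, and the severity labels are hypothetical stand-ins.

```python
# Illustrative sketch only: the record layout and string labels are assumed,
# not taken from the actual MATS admission form.

MOST_SEVERE = "dysfunctional"
LESS_SEVERE = {"casual/experimental", "lifestyle-involved", "lifestyle-dependent"}


def build_indicators(payer, fiscal_year, severity):
    """Code the outcome and the three key dummies for one client episode."""
    if payer not in ("OSA", "Medicaid"):
        raise ValueError("sample is restricted to OSA and Medicaid clients")
    if not 1991 <= fiscal_year <= 1995:
        raise ValueError("study period is FY 1991-1995")
    if severity != MOST_SEVERE and severity not in LESS_SEVERE:
        raise ValueError("unknown counselor-assessed severity category")

    medicaid = 1 if payer == "Medicaid" else 0
    pbc = 1 if fiscal_year >= 1993 else 0      # FY 1993-1995 are post-PBC
    return {
        "most_severe": 1 if severity == MOST_SEVERE else 0,
        "Medicaid": medicaid,
        "PBC": pbc,
        "OSA*PBC": (1 - medicaid) * pbc,       # 1 only for OSA clients post-PBC
    }
```

For example, an OSA client admitted in FY 1994 and assessed as dysfunctional would be coded with `most_severe`, `PBC`, and `OSA*PBC` all equal to 1 and `Medicaid` equal to 0.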

Table 3.

Means and Standard Deviations of Independent Variables

| Variable | OSA (N=2,367): Pre-PBC (N=983) | OSA (N=2,367): Post-PBC (N=1,384) | Medicaid (N=3,185): Pre-PBC (N=1,191) | Medicaid (N=3,185): Post-PBC (N=1,994) |
|---|---|---|---|---|
| Demographic Characteristics | | | | |
| Income | 396.6 | 436.3 | 400.4 | 440.7 |
| | (539.3) | (643.3) | (446.4) | (453.4) |
| Age | 31.3 | 31.9 | 31.7 | 32.2 |
| | (10.4) | (9.5) | (10.4) | (10.5) |
| Female | .21 | .19 | .53 | .52 |
| | (.41) | (.39) | (.49) | (.49) |
| Homeless | .01 | .03 | .02 | .01 |
| | (.10) | (.17) | (.12) | (.11) |
| Urban | .64 | .64 | .67 | .65 |
| | (.48) | (.48) | (.47) | (.48) |
| Edu | 11.4 | 11.6 | 10.8 | 10.8 |
| | (2.1) | (1.9) | (2.2) | (2.2) |
| Married | .16 | .14 | .17 | .18 |
| | (.37) | (.35) | (.38) | (.38) |
| Legal | .57 | .57 | .34 | .37 |
| | (.49) | (.49) | (.48) | (.48) |
| Usage Characteristics | | | | |
| Psypbm | .09 | .13 | .24 | .29 |
| | (.29) | (.34) | (.43) | (.45) |
| IVuser | .07 | .06 | .07 | .08 |
| | (.26) | (.24) | (.26) | (.27) |
| Polyuser | .53 | .58 | .53 | .58 |
| | (.49) | (.49) | (.49) | (.49) |
| Use-age | 14.9 | 14.9 | 14.8 | 14.5 |
| | (4.7) | (5.1) | (5.4) | (5.5) |
| Pretx | 1.2 | 1.5 | 1.4 | 1.7 |
| | (1.4) | (1.5) | (1.5) | (1.6) |

Notes: Standard deviations are shown in parentheses.

Data Analysis

To test the hypothesis that PBC triggered selection of OSA clients, this study examined the probability of being a most severe user using a probit specification for pooled OSA and Medicaid clients only. The marginal effect of the interaction term OSA*PBC was expected to be negative (i.e., probability of being a most severe user for OSA clients decreased post-PBC after controlling for other factors). Because of the missing values of relevant variables, our estimation was based on 5,552 observations, of which 2,367 were OSA clients and 3,185 were Medicaid clients.
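The probit specification described above can be written out explicitly. The following is a sketch under stated assumptions rather than the authors' own notation: the symbols $\beta_0,\dots,\beta_3$, $\gamma$, and $X_i$ are introduced here for illustration, with $X_i$ standing for the demographic and usage covariates listed in Table 2 and $\Phi$ denoting the standard normal distribution function.

$$
\Pr(\text{MostSevere}_i = 1) = \Phi\left(\beta_0 + \beta_1\,\text{Medicaid}_i + \beta_2\,\text{PBC}_i + \beta_3\,(\text{OSA}_i \times \text{PBC}_i) + X_i'\gamma\right), \qquad \text{OSA}_i = 1 - \text{Medicaid}_i
$$

In this parameterization, $\beta_2$ captures the post-PBC shift common to both groups, and $\beta_3$ captures the additional shift specific to OSA clients, so the selection hypothesis corresponds to $\beta_3 < 0$.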

Results

Descriptive Findings

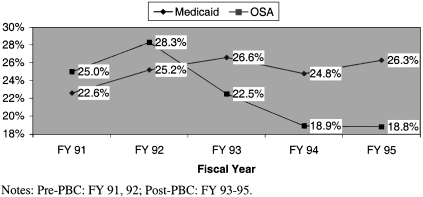

Some simple comparisons support the expectation of selection effects. If providers tended to reject the most severe OSA clients, but not the most severe Medicaid clients, two results are to be expected: 1) the percentage of OSA clients categorized as most severe users should fall in the post-PBC period; and 2) there should be no decline in the percentage of most severe users in the Medicaid sample. Figure 1 and Table 4 confirm these expectations. The percentage of Medicaid clients classified as most severe users increased by 2 percentage points, but the OSA percentage dropped by 7 percentage points (p<=0.001) in the post-PBC years.

Figure 1.

Percentage of Clients Classified as Most Severe Users (Outpatient).

Table 4.

Comparisons between Pre-PBC and Post-PBC Periods

| | Pre-PBC | Post-PBC | Difference |
|---|---|---|---|
| % as most severe users | | | |
| Outpatient | | | |
| OSA | 27% | 20% | −7% |
| Medicaid | 24% | 26% | 2% |
| Inpatient | | | |
| OSA | 44% | 64% | 20% |
| Medicaid | 47% | 58% | 11% |
| Units of treatment per year | | | |
| Outpatient* | | | |
| OSA | 735 | 656 | −79 |
| Medicaid | 900 | 902 | 2 |
| Inpatient | | | |
| OSA | 808 | 694 | −114 |
| Medicaid | 42 | 237 | 195 |

Possibly due to the selection in the sample of OSA clients, the average annual units of outpatient care delivered to OSA clients decreased from 735 to 656 after the implementation of PBC. Medicaid clients received almost the same amount of outpatient care annually in both pre-PBC and post-PBC (Table 4).
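The outpatient severity comparison in Table 4 can be summarized as a simple difference-in-differences, with Medicaid clients as the control group. The sketch below is illustrative only: the function names are hypothetical, and the significance check is a standard pooled two-proportion z-test chosen here for illustration, since the text does not state which test produced the reported p-value. The group sizes are borrowed from Table 3 and may not be the exact denominators behind the Table 4 percentages.

```python
import math

# Illustrative sketch only: function names are hypothetical, and the pooled
# two-proportion z-test is an assumed choice, not the authors' stated method.


def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the control group."""
    return (treated_post - treated_pre) - (control_post - control_pre)


def two_proportion_z(p1, n1, p2, n2):
    """Pooled z statistic and two-sided p-value for H0: p1 == p2."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Outpatient "% most severe" from Table 4, in percentage points:
# OSA 27 -> 20, Medicaid 24 -> 26.
did = diff_in_diff(27, 20, 24, 26)   # -> -9 percentage points

# OSA pre vs. post, using the Table 3 group sizes as assumed denominators.
z, p = two_proportion_z(0.27, 983, 0.20, 1384)
```

The unadjusted difference-in-differences of −9 percentage points is in the same direction as the regression-adjusted marginal effect of OSA*PBC reported in Table 5.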

Because OSA clients are low-income people who cannot afford to pay for substance abuse treatment and have neither Medicaid nor private insurance, nonprofit providers are their last resort for treatment. Therefore, an outpatient provider cannot simply stop treating or turn away the most severe OSA clients. Table 4 shows that the average units of inpatient care per year delivered to Medicaid clients increased from 42 pre-PBC to 237 post-PBC. Over the same period, the inpatient care delivered to OSA clients dropped by 114 units per year. This trend might reflect one scenario of selection: the nonprofit providers helped the most severe OSA clients (in both inpatient and outpatient programs) obtain Medicaid coverage, and these clients were then treated in inpatient programs reimbursed by Medicaid. Consistent with this scenario, the percentage of Medicaid clients who were most severe in inpatient programs increased from 47 percent pre-PBC to 58 percent post-PBC.

Because the nonprofit providers had to deliver contracted units of care to OSA, they could not fully use the Medicaid option. For those whose treatment was still covered by OSA, the most severe clients might have been referred from less intensive outpatient programs to more intensive inpatient programs. Lu, Ma, and Yuan (2000) found that selection led to a better match between illness severity and treatment intensity. This scenario of selection is supported by the change in the percentage of most severe OSA clients: pre-PBC, the most severe users were 44 percent of all OSA clients in inpatient programs, but they constituted 64 percent post-PBC (Table 4).

Regression Results

Many factors had statistically significant effects on the likelihood of being a most severe user in the outpatient programs (Table 5). Homeless people, those with psychiatric or legal problems, IV drug users, and urban residents were more likely to be most severe users. For example, being an IV user increased the likelihood of being a most severe user by 11 percentage points. The younger clients were when they started using drugs, the more likely they were to be most severe users.

Table 5.

Testing Existence of Selection. Dependent Variable: Probability of Being a Most Severe User (N=5,552)

| Variables | Model 1: Estimate dF/dx | Model 1: T-Value | Model 1: 95% C.I. (lower) | Model 1: 95% C.I. (upper) | Model 2: Estimate dF/dx | Model 2: T-Value | Model 2: 95% C.I. (lower) | Model 2: 95% C.I. (upper) |
|---|---|---|---|---|---|---|---|---|
| Medicaid | −.029 | −1.55 | −0.0671 | 0.0080 | −.060 | −2.31 | −0.1115 | −0.0086 |
| PBC | −.001 | −0.08 | −0.0320 | 0.0295 | −.002 | −0.13 | −0.0331 | 0.0285 |
| OSA*PBC | −.074** | −3.26 | −0.1156 | −0.0317 | −.071** | −3.12 | −0.1130 | −0.0285 |
| Demographic Characteristics | | | | | | | | |
| Income | −.000 | −1.16 | 0.0000 | 0.0000 | −.000 | −1.25 | 0.0000 | 0.0000 |
| Age | .004** | 5.69 | 0.0024 | 0.0049 | .004** | 5.62 | 0.0023 | 0.0049 |
| Female | −.029** | −2.25 | −0.0552 | −0.0040 | −.026 | −1.66 | −0.0571 | 0.0045 |
| Homeless | .093** | 2.01 | −0.0048 | 0.1907 | .093** | 2.00 | −0.0049 | 0.1905 |
| Urban | .053** | 4.34 | 0.0297 | 0.0766 | .053** | 4.36 | 0.0299 | 0.0768 |
| Edu | −.001 | −0.46 | −0.0066 | 0.0041 | −.001 | −0.52 | −0.0068 | 0.0039 |
| Married | −.011 | −0.66 | −0.0421 | 0.0207 | −.010 | −0.60 | −0.0412 | 0.0218 |
| Legal | .022 | 1.78 | −0.0023 | 0.0471 | .039 | 2.35 | 0.0063 | 0.0711 |
| Usage Characteristics | | | | | | | | |
| Psypbm | .060** | 4.04 | 0.0299 | 0.0905 | .080** | 4.53 | 0.0438 | 0.1164 |
| IVuser | .110** | 4.68 | 0.0602 | 0.1590 | .109** | 4.67 | 0.0599 | 0.1588 |
| Polyuser | .004 | 0.36 | −0.0200 | 0.0290 | .004 | 0.30 | −0.0208 | 0.0282 |
| Use-age | −.006** | −4.97 | −0.0083 | −0.0036 | −.006** | −5.01 | −0.0083 | −0.0036 |
| Pretx | .033** | 8.63 | 0.0257 | 0.0408 | .033** | 8.63 | 0.0258 | 0.0409 |
| OSA*Female | — | — | — | — | −.005 | −0.19 | −0.0600 | 0.0492 |
| OSA*Legal | — | — | — | — | −.037 | −1.55 | −0.0834 | 0.0086 |
| OSA*Psypbm | — | — | — | — | −.059** | −2.01 | −0.1133 | −0.0060 |
| Log Likelihood | −2916.695 | | | | −2913.737 | | | |

Notes: *Significance≤0.05; **Significance≤0.01.

In Model 1, the marginal effect of being a Medicaid client was negative but not significant, which implied that the likelihood of being a most severe user was not significantly different between OSA and Medicaid clients. The PBC effect was captured by PBC and OSA*PBC. The marginal effect of the dummy variable PBC was negative but not significant. However, the marginal effect of the interaction term OSA*PBC was −.074 and significant. This difference implied that the implementation of PBC affected the likelihood of being most severe for OSA clients but not for Medicaid clients, which suggests that providers may have avoided the most severe OSA clients after PBC was implemented.

Even though Medicaid clients constitute the best available control group, Medicaid and OSA clients were still different. For example, Medicaid clients were more likely to be female and have psychiatric problems, and less likely to have legal problems (see Table 3). To control for these potentially confounding trends that may have a different impact within the OSA and Medicaid groups, we also estimated the likelihood of being most severe patients, including interaction terms: OSA*Female, OSA*Psypbm, and OSA*Legal (Model 2 in Table 5). Only the interaction term of OSA and Psypbm was significantly negative. Estimation results for other variables showed little difference compared to Model 1 without the three interaction terms. The marginal effect of OSA*PBC remained significantly negative. Gender is the only exception: the significant negative impact of females disappeared after controlling for the interaction terms.

Further, we repeated Model 2 (without OSA*Female) for the male population only. The results are similar compared to those for the total sample. For example, the marginal effect of the interaction term OSA*PBC was negative and significant in the male-only sample. We also tested whether the change of severity is due to the time trend by having each of the five years as a dummy variable. Again, the impact of OSA*PBC was persistent.

Conclusions

Performance-based contracting systems aim to improve treatment effectiveness. However, unintended incentives can also arise as a result of implementation. This study identified one such consequence: the selection effect. It showed that, in response to incentives introduced in Maine's performance-based contracting system, nonprofit providers may have favored less severe clients, who were easier to treat, in order to improve their measured performance. This result suggests that regulators or payers should evaluate programs comprehensively, taking selection into consideration and adjusting performance measures for client severity level. Policymakers should also be aware of the adverse effect of PBC in other areas that are heavily funded by state or local governments. For example, given that injection drug users at the highest HIV risk are also the most likely to be uninsured and to perform poorly in outpatient treatment (Pollack 1999), PBC may have adverse effects in the area of HIV prevention.

A major limitation of this study is that only outpatient programs were examined. It showed that fewer OSA clients considered most severe were treated in the outpatient programs after the implementation of PBC. Did these patients receive treatment somewhere else? Even though we had several hypotheses regarding these patients, such as that they obtained Medicaid coverage or were referred from outpatient to inpatient care, these were all based on simple data trends. Future research should examine treatment effectiveness in all modality settings (outpatient or inpatient), controlling for selection and other incentives, to obtain a more complete picture of the outcomes of performance-based contracting systems.

Our data showed that the proportion of Medicaid clients considered as most severe increased and the proportion of the most severe OSA clients decreased after the implementation of PBC. Other potentially confounding trends may account for this observed pattern. In particular, it may be due to a background change in OSA or Medicaid populations. Given that Medicaid clients are the best control group available in this study, we cannot completely tease out a relative population mix change from a selection effect. Nevertheless, to further address concerns regarding the population mix effect on the change in severity level, we investigated the trend of drug use, using the National Household Survey of Drug Abuse 1991–1995.

Based on self-reported frequencies of using cocaine, hallucinogens, and sniffing or inhaling in the past 12 months, we grouped survey respondents into heavy, moderate, rare, and nonusers for each of the three drugs. The household survey showed that about 98 percent of people never used drugs in the past 12 months, which was consistent over the time period. Among those who used drugs, the percentage of heavy cocaine users (almost daily users) increased from 8 percent in 1991–1992 to 10.9 percent in 1993–1995; the percentage of heavy hallucinogen users increased from 3.3 percent to 4.3 percent; and the percentage of people who sniffed or inhaled almost daily increased from 4.7 percent to 5.3 percent. The patterns of drug use by income category (low income [<=20,000] and high income [>20,000]) were similar to the overall trend. For example, the percentage of heavy cocaine users increased from 10.6 percent to 14.5 percent among low-income people and from 5.8 percent to 7.4 percent among high-income people.

We also tried to examine trends within the New England region (the survey cannot identify people at the state level) over the same time period. The very small sample of drug users in the region limited our ability to estimate the trend in heavy use. However, the share of nonusers within the New England region was almost the same as in the national population: the vast majority (98 percent) were nonusers, and this share was consistent over the years. We therefore saw no compelling reason to think that the composition of drug users within the New England region differed from that of the national population.

The models we constructed estimated the probability of high severity, controlling for household income and various patient characteristics that might be related over time to the population mix of the sample. While it is impossible to account for all unobservable confounders, the list of independent variables seems rich enough to account for the major sources of variation in the severity level. The models show a consistently and significantly negative effect of the OSA*PBC interaction. Additionally, we found no evidence in the National Household Survey on Drug Abuse that the severity of drug use decreased in the population. Consequently, we interpreted the decrease in the most severe OSA patients post-PBC as a consequence of selection rather than as a relative population mix change. Future studies could collect information at the state level to further address the possibility of a population mix change.
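The identification argument in this paragraph can be written compactly. The expression below is a sketch in assumed notation, not the estimated equation: the link function $F$, the coefficient names, and the covariate vector $X_{it}$ (household income and patient characteristics) are labels introduced here for illustration.

```latex
\[
\Pr(\text{MostSevere}_{it} = 1)
  = F\bigl(\beta_0 + \beta_1\,\text{OSA}_i + \beta_2\,\text{PBC}_t
      + \beta_3\,(\text{OSA}_i \times \text{PBC}_t) + X_{it}'\gamma\bigr)
\]
```

In this notation, a change in severity common to OSA and Medicaid clients after PBC loads on $\beta_2$, while a change specific to OSA clients loads on $\beta_3$, the OSA*PBC interaction. If $F$ is linear, $\beta_3$ is the difference-in-differences in the probability of being most severe; with a nonlinear $F$, the marginal effect of the interaction has to be computed from the fitted probabilities rather than read off $\beta_3$ directly.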

Wheeler and Nahra (2000) found that provider characteristics such as private or public ownership affected substance abuse treatment. Provider characteristics may also matter in responses to the dual-diagnosis population. For example, hospitals owned by or affiliated with academic medical centers would be more likely to keep dual-diagnosis patients. In our setting, one might expect public, freestanding nonprofit, and medical center–affiliated groups to differ in their response to the implementation of a PBC system. Unfortunately, because only a small number of providers were involved and confidentiality was an issue, we could not examine the effect of provider characteristics. As Pauly (1985) states, “Interest in a policy question such as biased selection usually has some foundation in welfare economics. We want to know whether there is either inefficiency or a transfer of welfare from one set of consumers to another.” Studying welfare transfer in this setting is a challenging task.

Acknowledgments

I would like to thank Tom McGuire, Ann Hendricks, Randy Ellis, Dan Ackerberg, Albert Ma, Catherine McLaughlin, Stephen Shortell, and two anonymous referees for their helpful comments. The Maine Office of Substance Abuse cooperated in the conduct of the study. All errors and omissions remain my responsibility.

Footnotes

There were, however, no explicit financial reward or penalty schemes. Some providers with good performance were allowed to keep any surplus over a budget or received additional federal block grant funds. For providers who did not meet the targets, special conditions were added to their contracts and OSA worked with them to improve their performance. For a few cases with very low overall performance, OSA renewed the program contracts only for a period of six months (Commons, McGuire, and Riordan 1997).

Initially, special population standards were designed to target the populations that OSA deemed more difficult to treat. Commons, McGuire, and Riordan (1997) found that providers did not improve significantly in this category after PBC was implemented. They showed that only 55 percent of programs satisfied the special population standards post-PBC compared to 53 percent pre-PBC.

A growing literature questions whether private nonprofit providers are much different, on average, from for-profit providers in matters such as adverse selection and patient dumping. Duggan (2000) provides an excellent discussion of these issues. His empirical work found that private nonprofit hospitals respond to profitable opportunities created by changes in government policy much as for-profit hospitals do.

Overall, 18 percent of clients in outpatient programs had “undetermined” counselor-assessed severity. By cross-examining the relationship between counselor-assessed severity and self-reported frequency of drug use, we found that the frequency of drug use for those with “undetermined” severity was similar to that of clients identified as “lifestyle-involved” or “lifestyle-dependent,” and was significantly different from that of clients identified as “dysfunctional” users. This suggests that if the “undetermined” group had been included in our analysis, its members would more likely have qualified as less severe than as most severe clients. Our sensitivity analysis showed that the trend of having fewer most severe OSA clients post-PBC was robust whether we included the “undetermined” clients in the less severe group or in the most severe group. We treated “undetermined” severity as a missing value and excluded these clients from our final analysis.

Research support was provided by grant nos. 1 R01 DA08715-01 and P50 DA10233-03 from the National Institute on Drug Abuse.

References

- Commons M, McGuire TG. “Some Economics of Performance-Based Contracting for Substance-Abuse Services.” In: Egertson JA, Fox DM, Leshner AI, editors. Treating Drug Abusers Effectively. Malden, MA: Blackwell; 1997. pp. 223–49.

- Commons M, McGuire TG, Riordan MH. “Performance Contracting for Substance Abuse Treatment.” Health Services Research. 1997;32(5):631–50.

- Duggan MG. “Hospital Ownership and Public Medical Spending.” Quarterly Journal of Economics. 2000;115(4):1343–74.

- Ellis RP, McGuire TG. “Hospital Response to Prospective Payment: Moral Hazard, Selection, and Practice-Style Effects.” Journal of Health Economics. 1996;15(3):257–77. doi: 10.1016/0167-6296(96)00002-1.

- Hill JW, Brown RS. Biased Selection in the TEFRA HMO/CMP Program. Final report prepared under contract for HCFA. Washington, DC: Mathematica Policy Research; 1990.

- Lu M. “Separating the ‘True Effect’ from ‘Gaming’ in Incentive-Based Contracts in Health Care.” Journal of Economics and Management Strategy. 1999;8(3):383–432.

- Lu M, Ma CA, Yuan L. “Risk Selection and Matching under Performance-Based Contracting.” Health Economics. Forthcoming. doi: 10.1002/hec.734.

- Mark TL, Coffey R, King E, Harwood H, McKusick D, Genvardi J, Dilonardo J, Buck J. “Spending on Mental Health and Substance Abuse Treatment, 1987–1997.” Health Affairs. 2000;19(4):108–20. doi: 10.1377/hlthaff.19.4.108.

- Institute of Medicine. Treating Drug Problems. Washington, DC: National Academy Press; 1990.

- Newhouse JP. “Do Unprofitable Patients Face Access Problems?” Health Care Financing Review. 1989;11(2):33–42.

- Newhouse JP. “Reimbursing Health Plans and Health Providers: Efficiency in Production versus Selection.” Journal of Economic Literature. 1996;34(3):1236–63.

- Pauly MV. “What is Adverse about Adverse Selection?” In: Scheffler R, Rossiter L, editors. Advances in Health Economics and Health Services Research. Vol. 6. Greenwich, CT: JAI Press; 1985. pp. 281–6.

- Pauly MV, Redisch M. “The Not-for-Profit Hospital as a Physicians’ Cooperative.” American Economic Review. 1973;63(1):87–99.

- Pollack H. “Cost-Effectiveness of Methadone Treatment as HIV Prevention.” Presented at the National HIV Prevention Conference sponsored by the Centers for Disease Control and Prevention. Atlanta, GA; 1999.

- Weissert W, Musliner MC. “Case Mix Adjusted Nursing Home Reimbursement: A Critical Review of the Evidence.” Milbank Quarterly. 1992;70(3):455–90.

- Wheeler J, Nahra T. “Private and Public Ownership in Outpatient Substance Abuse Treatment: Do We Have a Two-Tiered System?” Administration and Policy in Mental Health. 2000;27(4):197–209. doi: 10.1023/a:1021357318246.