Abstract

Background

Multiple factors limit identification of patients with depression from administrative data. However, administrative data drives many quality measurement systems, including the Health Plan Employer Data and Information Set (HEDIS®).

Methods

We investigated two algorithms for identification of physician-recognized depression. The study sample was drawn from primary care physician member panels of a large managed care organization. All members were continuously enrolled between January 1 and December 31, 1997. Algorithm 1 required at least two criteria in any combination: (1) an outpatient diagnosis of depression or (2) a pharmacy claim for an antidepressant. Algorithm 2 included the same criteria as algorithm 1, but required a diagnosis of depression for all patients. With algorithm 1, we identified the medical records of a stratified, random subset of patients with and without depression (n=465). We also identified patients of primary care physicians with a minimum of 10 depressed members by algorithm 1 (n=32,819) and algorithm 2 (n=6,837).

Results

The sensitivity, specificity, and positive predictive values were: Algorithm 1: 95 percent, 65 percent, 49 percent; Algorithm 2: 52 percent, 88 percent, 60 percent. Compared to algorithm 1, profiles from algorithm 2 revealed higher rates of follow-up visits (43 percent, 55 percent) and appropriate antidepressant dosage acutely (82 percent, 90 percent) and chronically (83 percent, 91 percent) (p<0.05 for all).

Conclusions

Both algorithms had high false positive rates. Denominator construction (algorithm 1 versus 2) contributed significantly to variability in measured quality. Our findings raise concern about interpreting depression quality reports based upon administrative data.

Keywords: Depression, HEDIS, quality of care, predictive value

Administrative medical, pharmacy, and membership files of managed care organizations offer relatively low-cost, convenient data sources for examining patterns of care at the population level. Cohorts defined from administrative data often drive quality measurement and reporting systems, such as the Health Plan Employer Data and Information Set (HEDIS®). HEDIS is a set of standardized performance measures for comparisons among managed care organizations (Hanchak et al. 1996). Administratively defined cohorts are also used for quality improvement (Weiner et al. 1990; Weiner et al. 1995; Romano, Roos, and Jollis 1993; Garnick, Hendricks, and Comstock 1994; Leatherman et al. 1991) and disease management programs. For example, at-risk individuals may be identified from administrative files to receive reminders for annual mammography or influenza vaccination. The Centers for Medicare and Medicaid Services often use administrative data to identify patients for national Medicare quality improvement projects (Jencks and Wilensky 1992).

Regardless of the condition, several factors limit the accuracy of administrative algorithms for disease identification. These factors include incompleteness of data submitted by providers for capitated visits and procedures (encounters) and fee-for-service procedures (claims) to payors; limited clinical detail in the International Classification of Diseases (ICD), Current Procedural Terminology (CPT), and Diagnosis-Related Group (DRG) systems; and inaccuracy of demographic information in administrative files. For example, administrative pharmacy databases will not contain evidence of treatment if the physician only gives the patient samples from the office and does not write a formal prescription. The patient could also have the prescription filled at a nonparticipating pharmacy without using a pharmacy ID card. Therefore, to use administrative databases effectively for quality improvement and profiling, careful attention must be given to disease identification algorithms (Benesch et al. 1997; Weintraub et al. 1999).

Depression, in particular, presents many additional challenges in the use of health plan administrative data. These problems include failure of the physician to recognize depression (Wells et al. 1989; Kessler, Cleary, and Burke 1985; Borus et al. 1988), failure of the physician and patient to report depression because of the stigma of mental illness (Hoyt et al. 1997; Hirschfeld et al. 1997; Rost et al. 1994), and confounding of diagnosis by medical comorbidity (Tylee, Freeling, and Kerry 1993; Epstein et al. 1996; Koenig et al. 1993; Cohen-Cole and Stoudemire 1987; Coulehan et al. 1990). Because of these difficulties, one cannot assume that successful approaches to identifying patients with other diagnoses from claims data may be necessarily extrapolated to depression (Hanchak et al. 1996; Kiefe et al. 2001; Ellerbeck et al. 1995; Marciniak et al. 1998). However, the high prevalence of depression (Simon, Von Korff, and Barlow 1995; Simon and Von Korff 1995; Henderson and Pollard 1992; Hall and Wise 1995) and the well-documented deficiencies in the diagnosis and treatment of depression (Rost et al. 1994; Wells 1994; Rogers et al. 1993; Norquist et al. 1995; Katon et al. 1992; Lemelin et al. 1994; Bouhassira et al. 1998) make this an important area of quality assessment and improvement.

Existing research has not yet successfully addressed the impact of these difficulties. The purpose of this paper is to describe the process we used to evaluate and refine an algorithm for identifying physician-recognized depression using multistate data from a large managed care organization. We also explored the effect of changes in the algorithm on apparent changes in quality of care.

Methods

Overview

We examined two algorithms that used administrative data to identify patients diagnosed with depression by their primary care physicians. The study sample was drawn from primary care physician member panels of a large managed care organization (MCO). For all comparisons, we used a physician diagnosis of depression in the medical record as the standard. Next, we used administrative data to explore the variability in physician performance on identical quality measures for two contemporaneous patient samples, each constructed from different algorithms. Algorithm 1 was designed to maximize sensitivity by allowing patients to be identified from either administrative diagnostic codes or pharmacy data. Algorithm 2 was designed to decrease the false positive identifications that result from the use of pharmacy data alone.

Patient Data and Eligibility Criteria

Administrative data from primary care encounters, specialist encounters, claims, and pharmacy databases were linked with the MCO's membership and provider files. Although each patient was assigned to a primary care physician, we included treatment and follow-up events from any physician in the plan. We obtained our study samples from the pool of all members aged 12 years and older with medical and pharmacy benefits who were continuously enrolled as members in the MCO's health plans in the Mid-Atlantic or Northeast regions of the United States between January 1, 1997, and December 31, 1997 (n=892,786).
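The eligibility criteria above can be illustrated with a minimal Python sketch. The `Member` record and its field names are hypothetical, since the layout of the MCO's membership file is not described here, and the sketch assumes each member has a single enrollment span and a single age value.

```python
from dataclasses import dataclass
from datetime import date

# Study window and minimum age as stated in the text.
STUDY_START = date(1997, 1, 1)
STUDY_END = date(1997, 12, 31)
MIN_AGE = 12


@dataclass
class Member:
    # All field names are hypothetical; they are not taken from the MCO's files.
    age: int
    enrolled_from: date
    enrolled_to: date
    has_medical_benefit: bool
    has_pharmacy_benefit: bool


def is_eligible(m: Member) -> bool:
    """True if the member satisfies the stated age, benefit, and enrollment criteria."""
    # Assumption: one enrollment span that must cover the whole study window.
    continuously_enrolled = (
        m.enrolled_from <= STUDY_START and m.enrolled_to >= STUDY_END
    )
    return (
        m.age >= MIN_AGE
        and m.has_medical_benefit
        and m.has_pharmacy_benefit
        and continuously_enrolled
    )
```

Members with gaps in enrollment would need a richer representation (for example, a list of spans) than this sketch provides.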

Disease-Identification Algorithms

Algorithm 1 relied on a combination of diagnostic and pharmacy codes from administrative databases. The ICD-9 codes identified depressive disorders (296.2–296.36; 300.4; 311). Bipolar affective disorder (i.e., manic disorders) and depression with psychosis were specifically excluded. Pharmacy codes included: (1) monoamine oxidase inhibitors (MAOIs), (2) tricyclic antidepressants, (3) tetracyclic antidepressants, (4) selective serotonin reuptake inhibitors (SSRIs), (5) serotonin 2-receptor antagonists, (6) alpha-2-receptor antagonists, and (7) other miscellaneous antidepressants (e.g., modified cyclics). Patients less than 19 years of age with prescriptions for imipramine were excluded because imipramine may be used to treat enuresis or attention deficit hyperactivity disorder. Patients with prescriptions for lithium were also excluded.
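As an illustration only, the diagnostic code list above might be checked as follows in Python. The sketch assumes that "296.2–296.36" denotes an inclusive numeric range and that codes arrive as decimal strings; the function name is hypothetical, and the exclusions described above (bipolar disorder, depression with psychosis, imipramine in patients under 19, lithium) are not implemented.

```python
from decimal import Decimal, InvalidOperation

# Code list from the text; treating the first entry as an inclusive
# numeric range is an assumption of this sketch.
DEPRESSION_RANGE = (Decimal("296.2"), Decimal("296.36"))
DEPRESSION_SINGLE_CODES = {Decimal("300.4"), Decimal("311")}


def is_depression_code(icd9: str) -> bool:
    """Return True if an ICD-9 code string falls in the listed depression codes."""
    try:
        code = Decimal(icd9.strip())
    except InvalidOperation:
        return False  # non-numeric codes (e.g., V or E codes) never match
    if not code.is_finite():
        return False
    low, high = DEPRESSION_RANGE
    return low <= code <= high or code in DEPRESSION_SINGLE_CODES
```

Using `Decimal` rather than floating point keeps boundary comparisons such as 296.36 exact.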

Algorithm 1 required that members have at least two events in the administrative data with each event satisfying one of the following criteria: (1) an outpatient encounter with a primary diagnosis of depression or (2) a pharmacy claim for an antidepressant medication. Algorithm 1 could be satisfied by two events from the same category. Because a diagnostic code was not required, some members may have been identified because they filled two or more prescriptions for antidepressants. One event (either encounter diagnosis or pharmacy claim) for depression was not considered sufficient for identification because of the potential for miscoding or the use of another family member's pharmacy card when filling prescriptions.

Algorithm 2 required that one of the two events from Algorithm 1 actually be an encounter with a diagnosis of depression. The other event could either be another encounter with a diagnosis of depression or a pharmacy claim for an antidepressant medication.
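The difference between the two algorithms can be made concrete with a short Python sketch. The event labels `DX` and `RX` and the function names are hypothetical; each member is assumed to be represented by a list of qualifying event types taken from the administrative data during the study window.

```python
from typing import Iterable

DX = "dx"  # outpatient encounter with a primary diagnosis of depression
RX = "rx"  # pharmacy claim for an antidepressant medication


def meets_algorithm_1(events: Iterable[str]) -> bool:
    """At least two qualifying events, in any combination of DX and RX."""
    qualifying = [e for e in events if e in (DX, RX)]
    return len(qualifying) >= 2


def meets_algorithm_2(events: Iterable[str]) -> bool:
    """At least two qualifying events, at least one of which is a diagnosis."""
    qualifying = [e for e in events if e in (DX, RX)]
    return len(qualifying) >= 2 and DX in qualifying
```

A member with two antidepressant fills and no coded diagnosis, `[RX, RX]`, satisfies Algorithm 1 but not Algorithm 2, which is the group Algorithm 2 was designed to exclude.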

Protocol for Medical Record Identification and Review

Our protocol for medical record abstraction, which has been published elsewhere, included a computerized abstraction module with extensive instructions and synonym documentation, detailed abstractor training with an instruction manual, careful attention to data security and tracking of the records, and quality assurance (Allison, Wall et al. 2000). The study protocol was approved separately by the Institutional Review Boards of the University of Alabama at Birmingham and the MCO. Abstractors were trained to protect patient confidentiality. Medical record review was performed by the MCO, and no member-identifying information was released to the collaborating academic institution.

The original algorithm (Algorithm 1) was used to identify patients with and without depression for inclusion in the medical record sample. In defining the sample for medical record review, we focused on patients with a new diagnosis of depression. Therefore, we only included members who met the criteria of Algorithm 1 during our 12-month study window and for whom the MCO had no record of depression-related treatment in the 12 months preceding January 1, 1997. We used a stratified random sampling methodology that matched depressed and nondepressed patients within brackets of age, gender, and number of comorbid medical conditions.

From administrative data, 892,786 members were eligible for the study in the Mid-Atlantic and Northeast regions. Of these, 53,170 patients met criteria for depression by Algorithm 1 using administrative data, and the remaining 839,616 patients did not meet criteria. Based upon the stratified randomized methodology described above, we abstracted the charts of 234 patients with depression and 231 patients without depression. The time frame of abstraction was from July 1, 1996, to December 31, 1997. Approximately 10 percent of all medical records were dually abstracted with an overall interrater agreement of at least 95 percent for all main variables. In particular, interrater agreement for physician-recorded diagnosis of depression was 98 percent.

Diagnosis of Depression

The clinical standard for the diagnosis of depression is based on the Diagnostic and Statistical Manual of Mental Disorders, Version IV (American Psychiatric Association 1994). The DSM-IV criteria are traditionally ascertained through a structured medical interview (Spitzer et al. 1992; Robins et al. 1981). A diagnosis of major depression requires the presence of one of the primary symptoms of depression (depressed mood or anhedonia) plus four additional symptoms for more than two weeks (American Psychiatric Association 1994). Additional symptoms include impaired concentration or cognitive dulling, thoughts of suicide, loss of energy/fatigue, altered appetite or weight change, feelings of worthlessness and guilt, disturbances of sleep, and psychomotor retardation or agitation.

Because quality measurements are not currently based on DSM-diagnosed depression, we used the standard of physician-recognized depression determined by documentation in the medical record.

Quality Measures

One purpose of quality measures is to accurately capture the essence of evidence-based clinical guidelines in a quantitative fashion, allowing large amounts of data to be processed for improving delivery of medical care (Weissman et al. 1999; Hofer et al. 1997; Turpin et al. 1996; Harr, Balas, and Mitchell 1996). Important foundations for quality measures include: (1) strength of supportive evidence (evidence obtained from multiple randomized controlled trials given greatest emphasis); (2) consensus of professional societies about targeted intervention; (3) existence of a performance gap with documented need to improve care; (4) ability to improve care based upon quality measure, after consideration of practical resource constraints; (5) availability of adequate and economically feasible data sources; and (6) the severity and consequences of the underlying condition.

We applied the above principles to the 1993 guidelines on treatment of depression issued by the Agency for Health Care Policy and Research (AHCPR), currently the Agency for Healthcare Research and Quality (AHRQ) (1993). The guidelines divide treatment of depression into the acute, continuation, and maintenance phases. The goal of the acute treatment phase is to achieve remission, that is, to remove all signs and symptoms of the current episode of depression and to restore psychosocial and occupational functioning. Continuation treatment is intended to prevent relapse. Recovery is achieved when the patient has been asymptomatic for at least four to nine months, at which time the clinician may consider tapering or stopping antidepressant medication under certain circumstances. Maintenance treatment prevents subsequent episodes in those at risk for recurrence. We developed three measures specifically for the acute phase (adequate follow-up, medication adherence, minimum medication dosage), two measures specifically for the continuation phase (medication adherence and minimum medication dosage), and one measure for both the acute and continuation phase (adequate trial before switching medications). Table 1 provides a more detailed definition and rationale for each measure.

Table 1.

Quality Measures Based on the 1993 Guidelines for Treatment of Major Depression by the Agency for Health Care Policy and Research

| Measure | Data Source | Criteria | Guideline-Based Rationale |
|---|---|---|---|
| 1. Follow-up (acute phase) | Administrative (claims/encounter) | Proportion of patients with at least 1 visit within 6 weeks of initial antidepressant medication | After initial diagnosis, patients should be seen frequently until symptoms resolve, then every 4 to 12 weeks. |
| 2. Medication adherence (acute phase) | Pharmacy | Proportion of patients filling at least 90 days of therapy during the 118 days from the first antidepressant fill | Lack of adherence to medication is associated with worse outcomes. Physicians should promote patient adherence. |
| 3. Medication adherence (chronic phase) | Pharmacy | Proportion of patients filling at least 120 days of therapy during the 155 days from the first antidepressant fill | Medication should be continued for 4 to 9 months after onset of remission. |
| 4. Minimum medication dose (acute phase) | Pharmacy | Proportion of patients started on at least the minimum therapeutic dose approved by the Food and Drug Administration | When prescribed, medication should be administered in dosages shown to alleviate symptoms. |
| 5. Minimum medication dose (chronic phase) | Pharmacy | Proportion of patients continued on at least the minimum therapeutic dose approved by the Food and Drug Administration | For continuation treatment, medication should be prescribed at the same dosage necessary to control symptoms in the acute phase. |
| 6. Adequate trial before switching (either phase) | Pharmacy | Proportion of patients receiving a minimum 25-day trial of initial antidepressant before receiving a new antidepressant, exclusive of low-dose tricyclic or trazodone added for insomnia | When prescribed, medication should be continued for a sufficient length of time to permit a reasonable assessment of response, generally 4 to 6 weeks. |
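The two adherence measures in Table 1 might be computed from pharmacy fills roughly as follows. This is a sketch under stated assumptions, not the study's specification: each fill is a hypothetical `(fill_date, days_supply)` pair, days of therapy are taken as the sum of days supplied within the window, supply extending past the window is truncated, and overlapping fills are simply added.

```python
from datetime import date, timedelta
from typing import List, Tuple

Fill = Tuple[date, int]  # (fill_date, days_supply); hypothetical representation


def days_supplied_in_window(fills: List[Fill], window_days: int) -> int:
    """Days of therapy dispensed within window_days of the first fill."""
    if not fills:
        return 0
    ordered = sorted(fills)
    window_end = ordered[0][0] + timedelta(days=window_days)  # exclusive end
    total = 0
    for fill_date, days_supply in ordered:
        if fill_date >= window_end:
            continue
        # Assumption: count only the supply that falls inside the window.
        total += min(days_supply, (window_end - fill_date).days)
    return min(total, window_days)


def meets_acute_adherence(fills: List[Fill]) -> bool:
    """Measure 2: at least 90 days of therapy in the 118 days from first fill."""
    return days_supplied_in_window(fills, 118) >= 90


def meets_chronic_adherence(fills: List[Fill]) -> bool:
    """Measure 3: at least 120 days of therapy in the 155 days from first fill."""
    return days_supplied_in_window(fills, 155) >= 120
```

Because the windows (118 and 155 days) are longer than the required supply (90 and 120 days), both measures tolerate gaps of roughly four to five weeks between fills.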

Analyses

Bivariate comparisons were made with the chi-square statistic for dichotomous variables and the t-test for continuous variables (Rosner 1995).

We first compared demographic characteristics and comorbidities for abstracted cases by the presence of algorithm-defined depression. Next, we examined the DSM depression symptoms for patients with and without physician-recognized depression. From the sample of 465 abstracted medical records, we compared the operating characteristics (true/false positives, true/false negatives, sensitivity, specificity, and predictive values) of each algorithm, taking physician-diagnosed depression as the standard. We first derived predictive values based on the prevalence of depression in our sample. We then examined change in predictive values over a broad prevalence range of physician-recognized depression.

Finally, we identified patients of all physicians who had a minimum of 10 members with depression by Algorithm 1 (n=32,819 patients) and Algorithm 2 (n=6,837 patients). Using administrative data, we then compared aggregate physician performance on each of the six quality measures for both patient samples.
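The panel-size restriction and the aggregation of physician performance could be sketched in Python as below. The input format (pairs of physician identifier and a pass/fail flag for one quality measure) and the function names are hypothetical, and averaging the physician-level rates is an assumption about how aggregate performance was summarized.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

MIN_PANEL_SIZE = 10  # minimum number of algorithm-identified depressed members


def physician_rates(
    patients: Iterable[Tuple[str, bool]], min_panel: int = MIN_PANEL_SIZE
) -> Dict[str, float]:
    """Per-physician rate on one measure, for panels of at least min_panel."""
    panels = defaultdict(list)
    for physician_id, met_measure in patients:
        panels[physician_id].append(met_measure)
    return {
        pid: sum(flags) / len(flags)
        for pid, flags in panels.items()
        if len(flags) >= min_panel
    }


def mean_rate(rates: Dict[str, float]) -> Optional[float]:
    """Unweighted mean of physician-level rates; None if no physician qualifies."""
    return sum(rates.values()) / len(rates) if rates else None
```

Running the same function on the Algorithm 1 and Algorithm 2 samples would yield different sets of qualifying physicians as well as different rates, because the stricter algorithm leaves fewer panels above the threshold.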

Results

Although the ages of the patients with and without depression were similar, a higher proportion of the patients with depression were female. In addition, patients with depression tended to have more comorbidities (Table 2).

Table 2.

Patient Characteristics by Presence of Algorithm-Defined Depression (a)

| | Algorithm Positive for Depression | Algorithm Negative for Depression |
|---|---|---|
| N | 234 | 231 |
| Female | 70% | 49% |
| Median age (years) | 44 | 41 |
| Number of comorbid conditions | 1.3 | 0.79 |

(a) Members were identified from 1997 Managed Care Organization administrative data by Algorithm 1; reported data (n=465) were obtained from chart review of stratified random subset.

DSM-IV Diagnosis of Depression

The diagnosis of depression was recorded in 121 of the 465 abstracted medical records. Among those 121 medical records, only 9 percent contained documentation that met the American Psychiatric Association's rigorous DSM-IV criteria for diagnosis of depression (Table 3).

Table 3.

Physician Documentation of Depressive Symptoms (a)

| Physician-Recognized Depression from Medical Record | Yes | No |
|---|---|---|
| N | 121 | 344 |
| Depressed mood | 52.9% | 3.5% |
| Anhedonia | 12.4% | 0.3% |
| Worthlessness/guilt | 7.4% | 0% |
| Loss of energy/fatigue | 27.3% | 9.9% |
| Psychomotor retardation/agitation | 5.8% | 1.7% |
| Cognitive dulling | 11.6% | 2.0% |
| Appetite/weight change | 28.1% | 11.3% |
| Suicidal thoughts | 4.1% | 0.3% |
| Insomnia/hypersomnia | 36.4% | 5.5% |
| Formal diagnosis by symptom criteria (b) | 9.1% | 0% |

(a) Members were identified from 1997 Managed Care Organization administrative data by Algorithm 1; reported data (n=465) were obtained from chart review of stratified random subset.

(b) Diagnosis of depression from Diagnostic and Statistical Manual of Mental Disorders, Version IV (DSM-IV).

Comparison of Disease-Identification Algorithms

Table 4 gives the operating characteristics of both algorithms. Algorithm 1, which required any two depression-related events (diagnosis or medication claim) in the administrative data, had a sensitivity of 95 percent and a specificity of 65 percent. The sensitivity was quite high because there were few cases where the algorithm identified a member as not depressed but the medical record indicated a diagnosis of depression (false negative cases). Of the 234 cases identified with depression by Algorithm 1, 54 percent had no diagnosis of depression in administrative data and were identified from antidepressant use only. As expected, there was a high rate of antidepressant use among the false positive cases (84 percent). Compared to Algorithm 1, Algorithm 2, which required a diagnosis of depression, yielded a higher specificity (88 percent) and lower sensitivity (52 percent). The rate of antidepressant use among false positive cases was less at 53 percent.
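For readers who wish to check the arithmetic, sensitivity and specificity follow directly from the chart-review counts reported in Table 4. The Python sketch below uses those counts; the function and variable names are illustrative. The predictive values it returns are the crude sample values and apply only at the sample prevalence.

```python
def operating_characteristics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Operating characteristics from a 2x2 table (algorithm vs. chart review)."""
    return {
        "sensitivity": tp / (tp + fn),  # recognized cases the algorithm flags
        "specificity": tn / (tn + fp),  # non-cases the algorithm leaves unflagged
        "ppv": tp / (tp + fp),          # flagged members with recognized depression
        "npv": tn / (tn + fn),          # unflagged members without it
    }


# Counts from Table 4 (n=465 abstracted records, 121 with a recorded diagnosis).
algorithm_1 = operating_characteristics(tp=115, fp=119, tn=225, fn=6)
algorithm_2 = operating_characteristics(tp=63, fp=40, tn=304, fn=58)

for name, result in (("Algorithm 1", algorithm_1), ("Algorithm 2", algorithm_2)):
    print(name, {key: round(100 * value, 1) for key, value in result.items()})
```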

Table 4.

Depression Identification Algorithm Operating Characteristics (a)

| Patient Subset/Characteristic | Algorithm 1 | Algorithm 2 |
|---|---|---|
| Algorithm “depressed,” n (%) | | |
| • True positive | 115 (25) | 63 (14) |
| • False positive | 119 (26) | 40 (9) |
| Algorithm “non-depressed,” n (%) | | |
| • True negative | 225 (48) | 304 (65) |
| • False negative | 6 (1) | 58 (12) |
| All patients, % | | |
| • Sensitivity | 95.0 | 52.1 |
| • Specificity | 65.4 | 88.4 |
| • Positive predictive value (b) | 49.1 | 60.6 |
| • Negative predictive value (b) | 97.4 | 84.0 |

(a) Members were identified from 1997 Managed Care Organization administrative data by Algorithm 1; reported data (n=465) were obtained from chart review of stratified random subset; comparison standard: physician diagnosis of depression in medical record.

(b) Based upon 26% prevalence of physician-recognized depression as determined by sampling plan; because the high prevalence of physician-recognized depression is related to the sampling strategy of this study, predictive values over a range of lower prevalence rates are presented in Figures 1A and 1B.

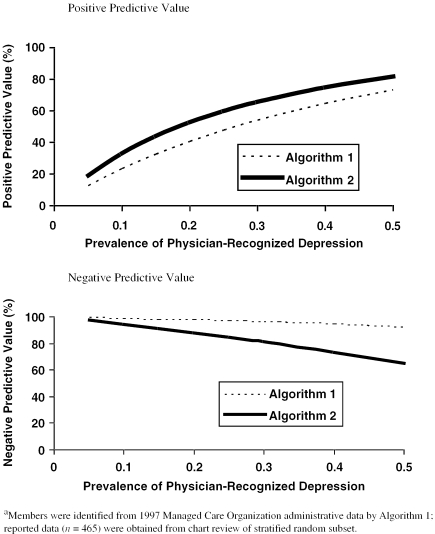

Unlike sensitivity and specificity, predictive values depend upon the prevalence of the index condition in the population. The method we used to construct our sample yielded a prevalence of 26 percent (121/465) for physician-recognized depression. Based upon this prevalence, the positive predictive values for Algorithms 1 and 2 were 49 percent and 61 percent, respectively, and the negative predictive values were 97 percent and 84 percent, respectively (Table 4). Figures 1A and 1B depict how the predictive values of both algorithms vary as the prevalence of physician-recognized depression varies. In the prevalence range of 5–10 percent, the positive predictive values of both algorithms remain below 33 percent, and negative predictive values remain above 94 percent. With a prevalence of 20 percent, the positive predictive values remain below 53 percent and the negative predictive values above 88 percent.
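The dependence of predictive values on prevalence follows from Bayes' theorem. The Python sketch below is one way such curves could be generated from the reported sensitivity and specificity; it is not necessarily the method used to draw Figure 1, and the function names and the prevalence grid are illustrative.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value at a given prevalence (Bayes' theorem)."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Negative predictive value at a given prevalence (Bayes' theorem)."""
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    return true_neg / (true_neg + false_neg)


# Sensitivity and specificity as reported in Table 4.
ALGORITHMS = {"Algorithm 1": (0.950, 0.654), "Algorithm 2": (0.521, 0.884)}

for prevalence in (0.05, 0.10, 0.20, 0.26):
    for name, (sens, spec) in ALGORITHMS.items():
        print(
            f"prevalence={prevalence:.2f} {name}: "
            f"PPV={ppv(sens, spec, prevalence):.2f} "
            f"NPV={npv(sens, spec, prevalence):.2f}"
        )
```

As prevalence falls, false positives from the much larger non-depressed group come to dominate the flagged members, which is why positive predictive value drops even though sensitivity and specificity are unchanged.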

Figure 1.

Predictive Values of Two Algorithms for Identifying Physician-Recognized Depression by Prevalence

Variation in Physician Performance Profiles by Disease-Identification Algorithm

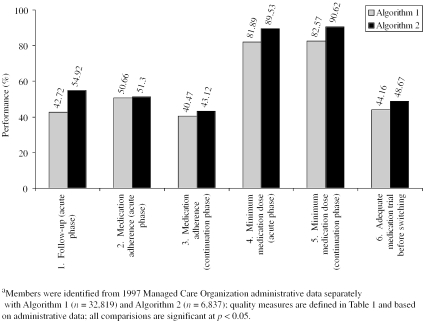

Using administrative medical and pharmacy data, we examined variation in office-level performance on six quality measures for Algorithms 1 and 2. Algorithm 1 identified 32,819 members as depressed, compared to 6,837 members identified by Algorithm 2. The number of primary care provider offices with a minimum of 10 depressed patients decreased from 1,756 with Algorithm 1 to 414 with Algorithm 2.

There were significant differences in quality performance reflected by the two algorithms (Figure 2). More specifically, for Algorithm 2, there were significantly higher rates of follow-up visits after initiation of antidepressant medication, appropriate dosage and medication adherence, and appropriate medication trial before switching to another antidepressant.

Figure 2.

Mean Primary Care Physician Quality Performance by Disease-Identification Algorithm

Discussion

Our work demonstrates the difficulty in identifying patients with depression from administrative data. Recognizing that depression is underreported in administrative data, we specifically designed Algorithm 1 to explore the effects of using pharmacy codes as primary identifiers of depression. The pharmacy inclusion criteria for Algorithm 1 were broad, thus capturing more members (increased sensitivity) at the expense of generating more false positive diagnoses (decreased specificity and positive predictive value). The more stringent Algorithm 2, which required a diagnosis of depression, had a better specificity, but much lower sensitivity.

Both algorithms suffered from low positive predictive value and thus frequently misclassified patients as having depression. Predictive value depends upon the prevalence of the underlying condition. Only in highly selected primary care patient populations does the prevalence of depression approach 20 percent (Pearson et al. 1999), corresponding to a positive predictive value of less than 53 percent for both algorithms. One study found the prevalence to range between 5 and 10 percent for unselected elderly patients (Barry et al. 1998), corresponding to a positive predictive value of less than 33 percent for both algorithms. This means that if administrative data were used to derive quality measures for depression in such a population, fewer than 33 percent of the patients in the denominator would actually have a physician diagnosis of depression by chart review.

These findings are especially important given the close relationship of our quality measures to the HEDIS measure for Antidepressant Medication Management. Linking Algorithm 2 with quality measures 1, 2, and 3 approximates the current HEDIS technical specifications (Allison, Wall et al. 2000). Algorithm 2 uses the same pharmacy and ICD-9 codes as HEDIS for denominator construction. Both approaches require each member to have at least 12 months of continuous enrollment in a managed care plan with pharmacy benefits.

However, there are some differences between our quality measures and the HEDIS measure. Our quality measures were intended to reflect the 1993 AHCPR guidelines and not to duplicate the HEDIS Antidepressant Medication Management measure, which was in draft format at the time of data collection for this study. Similar to the HEDIS measure, we required a new diagnosis of depression because many of our quality measures apply to the acute phase of depression. In contrast to the HEDIS measure, which allows either a primary or secondary diagnosis of depression, we required a primary diagnosis.

Quality measure 1 differs from the corresponding HEDIS measure by requiring one visit to a primary care provider within 6 weeks of diagnosis, whereas the HEDIS measure requires three visits within 12 weeks. We made our criteria for follow-up more lenient because, in certain cases, telephone contact without an office visit is appropriate and would not be reflected in the administrative data. We included two measures of antidepressant medication adherence. Our measure 2, Adherence during the Acute Phase of Treatment, is similar to the HEDIS measure, as both reflect adherence during the first three months of treatment, allowing for gaps in medication supply. Our measure 3, Adherence during the Maintenance Phase of Treatment, examined adherence within the first four months of treatment, while the HEDIS measure examines adherence during the first six months. We constructed measure 3 to reflect adherence to the minimum recommended by the 1993 AHCPR guideline (i.e., four months).

Our work also reveals important differences in quality measurement according to which algorithm was used to define the denominator. Algorithm 1, associated with a lower positive predictive value and correspondingly higher false positive rate, led to lower rates for each quality measure. In fact, three of the measures differed by 7–8 percent. Such underreporting of quality performance is important and may lead to loss of credibility in provider feedback with crippling of improvement efforts (Allison, Calhoun et al. 2000). Therefore, when planning a quality improvement project, positive predictive value is probably the most important operating characteristic of a disease-identification algorithm.

The number of identified members, which increases with the sensitivity of the algorithm, has implications when generating performance profiles. Creating valid physician profiles requires sufficient patient numbers. In this study, requiring a diagnostic code to identify members with depression reduced the eligible member population by two-thirds. Consequently, fewer practices met the minimum volume criteria for individualized performance profiles.

Even beyond the difficulties imposed by administrative data, several factors contribute to the diagnostic challenges of depression. Although depression affects up to 10 percent of the U.S. population at an estimated annual cost of $44 billion (Hall and Wise 1995) and produces impairment in quality of life similar to that of other serious chronic diseases (Wells, Stewart et al. 1989), the diagnosis is often missed by physicians. Primary care physicians recognize only about one-half of all depressed patients in the outpatient setting (Wells, Hays et al. 1989; Kessler, Cleary, and Burke 1985; Borus et al. 1988). The detection rate by primary care physicians falls to 30 percent for patients with significant medical comorbidity (Tylee, Freeling, and Kerry 1993). This may result from physicians attributing signs and symptoms of depression to other medical illness. Somatic symptoms used in making the diagnosis of depression (e.g., fatigue, sleep disturbance, weight loss) are also presenting features of many other medical illnesses. Subjective symptoms such as depressed mood and anhedonia may also be inappropriately regarded as an understandable reaction to illness.

Concern about patient confidentiality and the potential for jeopardizing reimbursement and other benefits may also lead physicians to deliberately substitute alternative diagnoses on claims and encounters. In a survey of 440 primary care physicians randomly selected from the membership lists of professional organizations, 50 percent of respondents reported substituting another diagnostic code in the prior two weeks for one or more patients who met the criteria for major depression (Rost et al. 1994). Physicians may underreport depression to protect patients from social stigma and possible occupational and legal consequences (Hoyt et al. 1997; Hirschfeld et al. 1997). For example, medical records are often subpoenaed during custody hearings. In addition, many physicians may be uncertain about making such diagnoses because of limited training with behavioral health disorders. As a result, valid cases of depression are not identified by physicians, let alone by algorithms based on administrative data. Furthermore, patients identified from administrative data may represent more severe cases (Valenstein et al. 2000).

Some patients reluctantly express psychological symptoms and may deny mood changes. These patients may present instead with a variety of nonspecific somatic complaints such as headache, abdominal pain, insomnia, weight loss, or low energy. This symptom overlap leads to a complex interaction between depression and medical comorbidity. Therefore, medical illness frequently presents as depression and depression as other medical illness. This problem is especially troubling in the elderly, who suffer from a higher burden of comorbidity (Coulehan et al. 1990).

The overlap in treatment of depression and other medical conditions makes identification of depressed patients from pharmacy data difficult. Antidepressants are now used for a wide variety of diseases other than depression such as chronic pain, neuropathic pain, fibromyalgia, chronic fatigue syndrome, migraine and tension headaches, irritable bowel disease, premenstrual dysphoric disorder, insomnia, eating disorders, premature ejaculation, panic disorder, post-traumatic stress disorder, social phobia, and anger attacks (Barkin et al. 1996a, 1996b; Compas et al. 1998; Davies et al. 1996; Fishbain et al. 1998; Keck and McElroy 1997; McQuay et al. 1996; Merskey 1997; Metz and Pryor 2000; Moreland and St. Clair 1999; Pappagallo 1999; Simon and Von Korff 1997). These syndromes have variable overlap with clinical depression, and it is often difficult to discern which problem is primary (Keck and McElroy 1997; Clarke 1998). Often a corresponding ICD-9 diagnosis of depression cannot be found when an antidepressant prescription is written at an office visit. Given the tolerability and safety of the newer antidepressants, clinicians may tend to prescribe these agents for nonspecific psychiatric symptoms or behavioral problems (e.g., stress) without making a clear diagnosis. As a result, there is concern about inappropriate use of antidepressants (Bouhassira et al. 1998).

The use of antidepressants for disorders other than depression and the treatment of depression without a diagnosis are both reflected in the rate of antidepressant use among members with a false positive diagnosis. Algorithm 2 produced fewer cases of false positive identification and, even among the false positive cases, the rate of antidepressant use was lower for Algorithm 2 (53 percent versus 84 percent).
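The difference between the two cohort definitions can be made concrete with a short sketch. It assumes that each member's claims for the measurement year have already been reduced to two counts; the `MemberClaims` type and its field names are hypothetical and do not come from the study's data files. The rules follow the study's description: algorithm 1 requires at least two criteria in any combination of outpatient depression diagnoses and antidepressant pharmacy claims, and algorithm 2 additionally requires a diagnosis of depression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemberClaims:
    """Hypothetical per-member summary for one measurement year."""
    depression_dx_visits: int   # outpatient visits coded with a depression diagnosis
    antidepressant_fills: int   # pharmacy claims for an antidepressant


def meets_algorithm_1(m: MemberClaims) -> bool:
    # At least two qualifying events in any combination of diagnoses and fills.
    return m.depression_dx_visits + m.antidepressant_fills >= 2


def meets_algorithm_2(m: MemberClaims) -> bool:
    # Same criteria as algorithm 1, plus at least one depression diagnosis.
    return meets_algorithm_1(m) and m.depression_dx_visits >= 1


# A member with two antidepressant fills but no coded diagnosis.
pharmacy_only = MemberClaims(depression_dx_visits=0, antidepressant_fills=2)
# A member with one coded diagnosis and one antidepressant fill.
dx_and_fill = MemberClaims(depression_dx_visits=1, antidepressant_fills=1)
```

Under these assumptions, `pharmacy_only` enters the algorithm 1 denominator but not the algorithm 2 denominator. Such a member may have received an antidepressant for a condition other than depression, or may have been treated for depression without a coded diagnosis.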

The limited observation period available through administrative databases of health plans is both a strength and a limitation. Administrative data permits longitudinal observation at the member level, unlike certain other data types. However, annual member disenrollment averaged 29 percent in 1999 for HMOs reporting to NCQA's Quality Compass 2000 (2000). This turnover limits the identification of new cases. Although recently enrolled members may appear to be newly identified with a disease, it is possible that the disease is long-standing. Variation in quality performance for new cases of major depression may partially result from undetected variation in disease chronicity. Katon and colleagues did not find important differences in antidepressant treatment patterns in a staff-model HMO after adjusting for multiple factors, including prior history of depressive episodes (Katon et al. 2000).

Our paper raises the need for caution in interpreting quality measures based on administrative data. For example, we found that rates of appropriate antidepressant treatment (e.g., follow-up visits, appropriate dosage) were substantially higher when the specifications for the member population required a diagnosis of depression. Likewise, Kerr and colleagues found that variations in the specifications of quality-of-care measures for depression treatment influenced conclusions about the adequacy of antidepressant prescribing patterns in two managed care practices (Kerr et al. 2000). They varied the definition of a new episode of depression (four-month versus nine-month clean period) and the minimum number of visits with a diagnosis of depression. Patients with two or more visits coded for depression were more likely to receive antidepressants at the appropriate dosage than those with only one visit coded for depression. This may result, in part, because the increased algorithm specificity that comes from requiring a diagnosis code may lead to the identification of patients with more severe depression rather than those with a temporary crisis or generalized stress.
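One way to see how denominator construction alone can move a measured rate is to treat the identified cohort as a mixture of true cases and false positives. The sketch below is a simplified illustration, not the study's analysis. The function `observed_rate` and the indicator rates `RATE_TRUE` and `RATE_FALSE` are hypothetical; only the two positive predictive values are taken from the reported results.

```python
def observed_rate(ppv: float, rate_true: float, rate_false: float) -> float:
    """Measured indicator rate in a cohort mixing true cases and false positives.

    ppv        -- share of the identified cohort that truly has the condition
    rate_true  -- indicator rate among true cases (hypothetical input)
    rate_false -- indicator rate among falsely identified members (hypothetical input)
    """
    for name, value in (("ppv", ppv), ("rate_true", rate_true), ("rate_false", rate_false)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    # Weighted average of the two subgroups that make up the denominator.
    return ppv * rate_true + (1.0 - ppv) * rate_false


# Positive predictive values reported for the two algorithms.
PPV_ALGORITHM_1 = 0.49
PPV_ALGORITHM_2 = 0.60

# Hypothetical indicator rates, chosen only for illustration.
RATE_TRUE, RATE_FALSE = 0.90, 0.50

rate_1 = observed_rate(PPV_ALGORITHM_1, RATE_TRUE, RATE_FALSE)
rate_2 = observed_rate(PPV_ALGORITHM_2, RATE_TRUE, RATE_FALSE)
```

With identical care delivered to true cases, the cohort with the higher positive predictive value shows the higher measured rate whenever falsely identified members meet the indicator less often than true cases. This is only one possible mechanism; differences in severity between the cohorts, as described above, are another.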

Our study also has specific implications for the training of primary care physicians. We found that primary care physicians who documented a diagnosis of depression in the medical record rarely documented the symptoms required to make that diagnosis using the DSM-IV criteria for major depression. This finding may reflect poor documentation rather than poor interviewing skills. Other studies suggest that the interviewing style of the primary care physician is related to the recognition of depression (Badger et al. 1994; Robbins et al. 1994). Training and feedback based upon medical record review have been shown to increase both recognition of depression and documentation of symptoms (Linn and Yager 1980). In addition, automated office screening tools have been shown to increase recognition of depression without placing excessive demands on physicians (Zung et al. 1983; Moore, Silimperi, and Bobula 1978; Hoeper et al. 1984; Magruder-Habib, Zung, and Feussner 1990).

Conclusions

We found that accurate identification of patients with physician-recognized depression from administrative data poses significant difficulty. In addition, observed quality varied significantly with algorithm operating characteristics: lower observed quality was associated with lower algorithm specificity and a greater number of members falsely identified as having depression. This suggests that apparently low quality performance may, in part, be attributable to the specific algorithms used to identify the study population. Low specificity, and the associated false classification of patients as having depression, may inappropriately lower quality performance scores and decrease confidence in performance feedback.

Footnotes

Supported in part by the Academic Medicine and Managed Care Forum, grant nos. HS09446 and HS1112403

References

- Agency for Health Care Policy and Research. Depression in Primary Care: Treatment of Major Depression. Rockville, MD: Agency for Health Care Policy and Research; 1993. [Google Scholar]

- Allison JJ, Calhoun JW, Wall TC, Spettell CM, Fargason CA, Weissman NW, Kiefe CI. “Optimal Reporting of Health Care Process Measures: Inferential Statistics as Help or Hindrance?”. Managed Care Quarterly. 2000;8(4):1–10. [PubMed] [Google Scholar]

- Allison JJ, Wall TC, Spettell CM, Calhoun J, Fargason CA, Kobylinski R, Farmer R, Kiefe CI. “The Art and Science of Chart Review.”. Joint Commission Journal on Quality Improvement. 2000;26(3):115–36. doi: 10.1016/s1070-3241(00)26009-4. [DOI] [PubMed] [Google Scholar]

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 4th Edition. Washington, DC: American Psychiatric Association; 1994. [Google Scholar]

- Badger LW, deGruy FV, Hartman J, Plant MA, Leeper J, Ficken R, Maxwell A, Rand E, Anderson R, Templeton B. “Psychosocial Interest, Medical Interviews, and the Recognition of Depression.”. Archives of Family Medicine. 1994;3(10):899–907. doi: 10.1001/archfami.3.10.899. [DOI] [PubMed] [Google Scholar]

- Barkin RL, Lubenow TR, Bruehl S, Husfeldt B, Ivankovich O, Barkin SJ. “Management of Chronic Pain: Part I.”. Disease-A-Month. 1996a;42(7):389–454. doi: 10.1016/s0011-5029(96)90017-6. [DOI] [PubMed] [Google Scholar]

- Barkin RL, Lubenow TR, Bruehl S, Husfeldt B, Ivankovich O, Barkin SJ. “Management of Chronic Pain: Part II.”. Disease-A-Month. 1996b;42(8):457–507. doi: 10.1016/s0011-5029(96)90013-9. [DOI] [PubMed] [Google Scholar]

- Barry KL, Fleming MF, Manwell LB, Copeland LA, Appel S. “Prevalence of and Factors Associated with Current and Lifetime Depression in Older Adult Primary Care Patients.”. Family Medicine. 1998;30(5):366–71. [PubMed] [Google Scholar]

- Benesch C, Witter DM, Jr, Wilder AL, Duncan PW, Samsa GP, Matchar DB. “Inaccuracy of the International Classification of Diseases (ICD-9-CM) in Identifying the Diagnosis of Ischemic Cerebrovascular Disease.”. Neurology. 1997;49(3):660–4. doi: 10.1212/wnl.49.3.660. [DOI] [PubMed] [Google Scholar]

- Borus JF, Howes MJ, Devins NP, Rosenberg R, Livingston WW. “Primary Health Care Providers' Recognition and Diagnosis of Mental Disorders in Their Patients.”. General Hospital Psychiatry. 1988;10(5):317–21. doi: 10.1016/0163-8343(88)90002-3. [DOI] [PubMed] [Google Scholar]

- Bouhassira M, Allicar MP, Blachier C, Nouveau A, Rouillon F. “Which Patients Receive Antidepressants? A ‘Real World’ Telephone Study.”. Journal of Affective Disorders. 1998;49(1):19–26. doi: 10.1016/s0165-0327(97)00193-6. [DOI] [PubMed] [Google Scholar]

- Clarke DM. “Psychological Factors in Illness and Recovery.”. New Zealand Medical Journal. 1998;111(1076):410–2. [PubMed] [Google Scholar]

- Cohen-Cole SA, Stoudemire A. “Major Depression and Physical Illness: Special Considerations in Diagnosis and Biologic Treatment.”. Psychiatric Clinics of North America. 1987;10(1):1–17. [PubMed] [Google Scholar]

- Compas BE, Haaga DA, Keefe FJ, Leitenberg H, Williams DA. “Sampling of Empirically Supported Psychological Treatments from Health Psychology: Smoking, Chronic Pain, Cancer, and Bulimia Nervosa.”. Journal of Consulting and Clinical Psychology. 1998;66(1):89–112. doi: 10.1037//0022-006x.66.1.89. [DOI] [PubMed] [Google Scholar]

- Coulehan JL, Schulberg HC, Block MR, Janosky JE, Arena VC. “Medical Comorbidity of Major Depressive Disorder in a Primary Medical Practice.”. Archives of Internal Medicine. 1990;150(11):2363–7. [PubMed] [Google Scholar]

- Davies HT, Crombie IK, Macrae WA, Rogers KM, Charlton JE. “Audit in Pain Clinics: Changing the Management of Low-Back and Nerve-Damage Pain.”. Anaesthesia. 1996;51(7):641–6. doi: 10.1111/j.1365-2044.1996.tb07845.x. [DOI] [PubMed] [Google Scholar]

- Ellerbeck EF, Jencks SF, Radford MJ, Kresowik TF, Craig AS, Gold JA, Krumholz HM, Vogel RA. “Quality of Care for Medicare Patients with Acute Myocardial Infarction: A Four-State Pilot Study from the Cooperative Cardiovascular Project.”. Journal of the American Medical Association. 1995;273(19):1509–14. [PubMed] [Google Scholar]

- Epstein SA, Gonzales JJ, Onge JS, Carter-Campbell J, Weinfurt K, Leibole M, Goldberg RL. “Practice Patterns in the Diagnosis and Treatment of Anxiety and Depression in the Medically Ill: A Survey of Psychiatrists.”. Psychosomatics. 1996;37(4):356–67. doi: 10.1016/S0033-3182(96)71549-9. [DOI] [PubMed] [Google Scholar]

- Fishbain DA, Cutler RB, Rosomoff HL, Rosomoff RS. “Do Antidepressants Have an Analgesic Effect in Psychogenic Pain and Somatoform Pain Disorder? A Metaanalysis.”. Psychosomatic Medicine. 1998;60(4):503–9. doi: 10.1097/00006842-199807000-00019. [DOI] [PubMed] [Google Scholar]

- Garnick DW, Hendricks AM, Comstock CB. “Measuring Quality of Care: Fundamental Information from Administrative Datasets.”. International Journal for Quality in Health Care. 1994;6(2):163–77. doi: 10.1093/intqhc/6.2.163. [DOI] [PubMed] [Google Scholar]

- Hall RC, Wise MG. “The Clinical and Financial Burden of Mood Disorders: Cost and Outcome.”. Psychosomatics. 1995;36(2):S11–8. doi: 10.1016/S0033-3182(95)71699-1. [DOI] [PubMed] [Google Scholar]

- Hanchak NA, Harmon-Weiss SR, McDermott PD, Hirsch A, Schlackman N. “Medicare Managed Care and the Need for Quality Measurement.”. Managed Care Quarterly. 1996;4(1):1–12. [PubMed] [Google Scholar]

- Harr DS, Balas EA, Mitchell J. “Developing Quality Indicators as Educational Tools to Measure the Implementation of Clinical Practice Guidelines.”. American Journal of Medical Quality. 1996;11(4):179–85. doi: 10.1177/0885713X9601100405. [DOI] [PubMed] [Google Scholar]

- Henderson JG, Jr., Pollard CA. “Prevalence of Various Depressive Symptoms in a Sample of the General Population.”. Psychological Reports. 1992;71(1):208–10. doi: 10.2466/pr0.1992.71.1.208. [DOI] [PubMed] [Google Scholar]

- Hirschfeld RM, Keller MB, Panico S, Arons BS, Barlow D, Davidoff F, Endicott J, Froom J, Goldstein M, Gorman JM, Marek RG, Maurer TA, Meyer R, Phillips K, Ross J, Schwenk TL, Sharfstein SS, Thase ME, Wyatt RJ. “The National Depressive and Manic-Depressive Association Consensus Statement on the Undertreatment of Depression.”. Journal of the American Medical Association. 1997;277(4):333–40. [PubMed] [Google Scholar]

- Hoeper EW, Nycz GR, Kessler LG, Burke JD, Jr., Pierce WE. “The Usefulness of Screening for Mental Illness.”. Lancet. 1984;1(8367):33–5. doi: 10.1016/s0140-6736(84)90192-2. [DOI] [PubMed] [Google Scholar]

- Hofer TP, Bernstein SJ, Hayward PA, DeMonner S. “Validating Quality Indicators for Hospital Care.”. Joint Commission Journal on Quality Improvement. 1997;23(9):455–67. doi: 10.1016/s1070-3241(16)30332-7. [DOI] [PubMed] [Google Scholar]

- Hoyt DR, Conger RD, Valde JG, Weihs K. “Psychological Distress and Help Seeking in Rural America.”. American Journal of Community Psychology. 1997;25(4):449–70. doi: 10.1023/a:1024655521619. [DOI] [PubMed] [Google Scholar]

- Jencks SF, Wilensky GR. “The Health Care Quality Improvement Initiative: A New Approach to Quality Assurance in Medicare.”. Journal of the American Medical Association. 1992;268(7):900–3. [PubMed] [Google Scholar]

- Katon W, Von Korff M, Lin E, Bush T, Ormel J. “Adequacy and Duration of Antidepressant Treatment in Primary Care.”. Medical Care. 1992;30(1):67–76. doi: 10.1097/00005650-199201000-00007. [DOI] [PubMed] [Google Scholar]

- Katon W, Rutter CM, Lin E, Simon G, Von Korff M, Bush T, Walker E, Ludman E. “Are There Detectable Differences in Quality of Care or Outcome of Depression across Primary Care Providers?”. Medical Care. 2000;38(6):552–61. doi: 10.1097/00005650-200006000-00002. [DOI] [PubMed] [Google Scholar]

- Keck PE, Jr., McElroy SL. “New Uses for Antidepressants: Social Phobia.”. Journal of Clinical Psychiatry. 1997;58(14, supplement):32–6; discussion 37–8. [PubMed] [Google Scholar]

- Kerr EA, McGlynn EA, Van Vorst KA, Wickstrom SL. “Measuring Antidepressant Prescribing Practice in a Health Care System Using Administrative Data: Implications for Quality Measurement and Improvement.”. Joint Commission Journal on Quality Improvement. 2000;26(4):203–16. doi: 10.1016/s1070-3241(00)26015-x. [DOI] [PubMed] [Google Scholar]

- Kessler LG, Cleary PD, Burke JD, Jr. “Psychiatric Disorders in Primary Care: Results of a Follow-up Study.”. Archives of General Psychiatry. 1985;42(6):583–7. doi: 10.1001/archpsyc.1985.01790290065007. [DOI] [PubMed] [Google Scholar]

- Kiefe CI, Allison JJ, Williams OD, Person SD, Weaver MT, Weissman NW. “Improving Quality Improvement Using Achievable Benchmarks for Physician Feedback.”. Journal of the American Medical Association. 2001;285(22):2871–9. doi: 10.1001/jama.285.22.2871. [DOI] [PubMed] [Google Scholar]

- Koenig HG, Cohen HJ, Blazer DG, Krishnan KR, Sibert TE. “Profile of Depressive Symptoms in Younger and Older Medical Inpatients with Major Depression.”. Journal of the American Geriatrics Society. 1993;41(11):1169–76. doi: 10.1111/j.1532-5415.1993.tb07298.x. [DOI] [PubMed] [Google Scholar]

- Leatherman S, Peterson E, Heinen L, Quam L. “Quality Screening and Management Using Claims Data in a Managed Care Setting.”. Quality Review Bulletin. 1991;17(11):349–59. doi: 10.1016/s0097-5990(16)30485-7. [DOI] [PubMed] [Google Scholar]

- Lemelin J, Hotz S, Swensen R, Elmslie T. “Depression in Primary Care: Why Do We Miss the Diagnosis?”. Canadian Family Physician. 1994;40:104–8. [PMC free article] [PubMed] [Google Scholar]

- Linn LS, Yager J. “The Effect of Screening, Sensitization, and Feedback on Notation of Depression.”. Journal of Medical Education. 1980;55(11):942–9. doi: 10.1097/00001888-198011000-00007. [DOI] [PubMed] [Google Scholar]

- McQuay HJ, Tramer M, Nye BA, Carroll D, Wiffen PJ, Moore RA. “A Systematic Review of Antidepressants in Neuropathic Pain.”. Pain. 1996;68(2–3):217–27. doi: 10.1016/s0304-3959(96)03140-5. [DOI] [PubMed] [Google Scholar]

- Magruder-Habib K, Zung WW, Feussner JR. “Improving Physicians' Recognition and Treatment of Depression in General Medical Care: Results from a Randomized Clinical Trial.”. Medical Care. 1990;28(3):239–50. doi: 10.1097/00005650-199003000-00004. [DOI] [PubMed] [Google Scholar]

- Marciniak TA, Ellerbeck EF, Radford MJ, Kresowik TF, Gold JA, Krumholz HM, Kiefe CI, Allman RM, Vogel RA, Jencks S. “Improving the Quality of Care for Medicare Patients with Acute Myocardial Infarction: Results from the Cooperative Cardiovascular Project.”. Journal of the American Medical Association. 1998;279(17):1351–7. doi: 10.1001/jama.279.17.1351. [DOI] [PubMed] [Google Scholar]

- Merskey H. “Pharmacological Approaches Other Than Opioids in Chronic Non-Cancer Pain Management.”. Acta Anaesthesiologica Scandinavica. 1997;41(1, part 2):187–90. doi: 10.1111/j.1399-6576.1997.tb04636.x. [DOI] [PubMed] [Google Scholar]

- Metz ME, Pryor JL. “Premature Ejaculation: A Psychophysiological Approach for Assessment and Management.”. Journal of Sex and Marital Therapy. 2000;26(4):293–320. doi: 10.1080/009262300438715. [DOI] [PubMed] [Google Scholar]

- Moore JT, Silimperi DR, Bobula JA. “Recognition of Depression by Family Medicine Residents: The Impact of Screening.”. Journal of Family Practice. 1978;7(3):509–13. [PubMed] [Google Scholar]

- Moreland LW, St. Clair EW. “The Use of Analgesics in the Management of Pain in Rheumatic Diseases.”. Rheumatic Diseases Clinics of North America. 1999;25(1):153–91, vii. doi: 10.1016/s0889-857x(05)70059-4. [DOI] [PubMed] [Google Scholar]

- Norquist G, Wells KB, Rogers WH, Davis LM, Kahn K, Brook R. “Quality of Care for Depressed Elderly Patients Hospitalized in the Specialty Psychiatric Units or General Medical Wards.”. Archives of General Psychiatry. 1995;52(8):695–701. doi: 10.1001/archpsyc.1995.03950200085018. [DOI] [PubMed] [Google Scholar]

- Pappagallo M. “Aggressive Pharmacologic Treatment of Pain.”. Rheumatic Diseases Clinics of North America. 1999;25(1):193–213, vii. doi: 10.1016/s0889-857x(05)70060-0. [DOI] [PubMed] [Google Scholar]

- Pearson SD, Katzelnick DJ, Simon GE, Manning WG, Helstad CP, Henk HJ. “Depression among High Utilizers of Medical Care.”. Journal of General Internal Medicine. 1999;14(8):461–8. doi: 10.1046/j.1525-1497.1999.06278.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- “Quality Compass 2000 Shows Steady Improvement in HEDIS Performance Data.”. Capitation Rates and Data. 2000;5(10):112–5. [Google Scholar]

- Robbins JM, Kirmayer LJ, Cathebras P, Yaffe MJ, Dworkind M. “Physician Characteristics and the Recognition of Depression and Anxiety in Primary Care.”. Medical Care. 1994;32(8):795–812. doi: 10.1097/00005650-199408000-00004. [DOI] [PubMed] [Google Scholar]

- Robins LN, Helzer JE, Croughan J, Ratcliff KS. “National Institute of Mental Health Diagnostic Interview Schedule: Its History, Characteristics, and Validity.”. Archives of General Psychiatry. 1981;38(4):381–9. doi: 10.1001/archpsyc.1981.01780290015001. [DOI] [PubMed] [Google Scholar]

- Rogers WH, Wells KB, Meredith LS, Sturm R, Burnam MA. “Outcomes for Adult Outpatients with Depression under Prepaid or Fee-for-Service Financing.”. Archives of General Psychiatry. 1993;50(7):517–25. doi: 10.1001/archpsyc.1993.01820190019003. [DOI] [PubMed] [Google Scholar]

- Romano PS, Roos LL, Jollis JG. “Adapting a Clinical Comorbidity Index for Use with ICD-9-CM Administrative Data: Differing Perspectives.”. Journal of Clinical Epidemiology. 1993;46(10):1075–9; discussion 1081–90. doi: 10.1016/0895-4356(93)90103-8. [DOI] [PubMed] [Google Scholar]

- Rosner B. Fundamentals of Biostatistics. 4th ed. Belmont, CA: Wadsworth Publishing; 1995. [Google Scholar]

- Rost K, Smith R, Matthews DB, Guise B. “The Deliberate Misdiagnosis of Major Depression in Primary Care.”. Archives of Family Medicine. 1994;3(4):333–7. doi: 10.1001/archfami.3.4.333. [DOI] [PubMed] [Google Scholar]

- Simon GE, Von Korff M. “Prevalence, Burden, and Treatment of Insomnia in Primary Care.”. American Journal of Psychiatry. 1997;154(10):1417–23. doi: 10.1176/ajp.154.10.1417. [DOI] [PubMed] [Google Scholar]

- Simon GE, Von Korff M. “Recognition, Management, and Outcomes of Depression in Primary Care.”. Archives of Family Medicine. 1995;4(2):99–105. doi: 10.1001/archfami.4.2.99. [DOI] [PubMed] [Google Scholar]

- Simon GE, Von Korff M, Barlow W. “Health Care Costs of Primary Care Patients with Recognized Depression.”. Archives of General Psychiatry. 1995;52(10):850–6. doi: 10.1001/archpsyc.1995.03950220060012. [DOI] [PubMed] [Google Scholar]

- Spitzer RL, Williams JB, Gibbon M, First MB. “The Structured Clinical Interview for DSM-III-R (SCID) I: History, Rationale, and Description.”. Archives of General Psychiatry. 1992;49(8):624–9. doi: 10.1001/archpsyc.1992.01820080032005. [DOI] [PubMed] [Google Scholar]

- Turpin RS, Darcy LA, Koss R, McMahill C, Meyne K, Morton D, Rodriguez J, Schmaltz S, Schyve P, Smith P. “A Model to Assess the Usefulness of Performance Indicators.”. International Journal for Quality in Health Care. 1996;8(4):321–9. doi: 10.1093/intqhc/8.4.321. [DOI] [PubMed] [Google Scholar]

- Tylee AT, Freeling P, Kerry S. “Why Do General Practitioners Recognize Major Depression in One Woman Patient Yet Miss It in Another?”. British Journal of General Practice. 1993;43(373):327–30. [PMC free article] [PubMed] [Google Scholar]

- Valenstein M, Ritsema T, Green L, Blow FC, Mitchinson A, McCarthy JF, Barry KL, Hill E. “Targeting Quality Improvement Activities for Depression: Implications of Using Administrative Data.”. Journal of Family Practice. 2000;49(8):721–8. [PubMed] [Google Scholar]

- Weiner JP, Parente ST, Garnick DW, Fowles J, Lawthers AG, Palmer RH. “Variation in Office-Based Quality: A Claims-Based Profile of Care Provided to Medicare Patients with Diabetes.”. Journal of the American Medical Association. 1995;273(19):1503–8. doi: 10.1001/jama.273.19.1503. [DOI] [PubMed] [Google Scholar]

- Weiner JP, Powe NR, Steinwachs DM, Dent G. “Applying Insurance Claims Data to Assess Quality of Care: A Compilation of Potential Indicators.”. Quality Review Bulletin. 1990;16(12):424–38. doi: 10.1016/s0097-5990(16)30404-3. [DOI] [PubMed] [Google Scholar]

- Weintraub WS, Deaton C, Shaw L, Mahoney E, Morris DC. “Can Cardiovascular Clinical Characteristics Be Identified and Outcome Models Be Developed from an In-patient Claims Database?”. American Journal of Cardiology. 1999;84(2):166–9. doi: 10.1016/s0002-9149(99)00228-3. [DOI] [PubMed] [Google Scholar]

- Weissman NW, Allison JJ, Kiefe CI, Farmer RM, Weaver MT, Williams OD, Child IG, Pemberton JH, Brown KC, Baker C. “Achievable Benchmarks of Care: The ABCs of Benchmarking.”. Journal of Evaluation in Clinical Practice. 1999;5(3):269–81. doi: 10.1046/j.1365-2753.1999.00203.x. [DOI] [PubMed] [Google Scholar]

- Wells KB. “Depression in General Medical Settings: Implications of Three Health Policy Studies for Consultation-Liaison Psychiatry.”. Psychosomatics. 1994;35(3):279–96. doi: 10.1016/S0033-3182(94)71776-X. [DOI] [PubMed] [Google Scholar]

- Wells KB, Hays RD, Burnam MA, Rogers W, Greenfield S, Ware JE, Jr. “Detection of Depressive Disorder for Patients Receiving Prepaid or Fee-for-Service Care: Results from the Medical Outcomes Study.”. Journal of the American Medical Association. 1989;262(23):3298–302. [PubMed] [Google Scholar]

- Wells KB, Stewart A, Hays RD, Burnam MA, Rogers W, Daniels M, Berry S, Greenfield S, Ware J. “The Functioning and Well-being of Depressed Patients: Results from the Medical Outcomes Study.”. Journal of the American Medical Association. 1989;262(7):914–9. [PubMed] [Google Scholar]

- Zung WW, Magill M, Moore JT, George DT. “Recognition and Treatment of Depression in a Family Medicine Practice.”. Journal of Clinical Psychiatry. 1983;44(1):3–6. [PubMed] [Google Scholar]