Abstract

Objective

To describe the performance interests of multiple stakeholders associated with the management and delivery of emergency department (ED) care, and to develop a performance framework and set of indicators that reflect these interests.

Study Setting

Stakeholders (1,100 physicians, nurses, managers, home care providers, and prehospital care personnel) with responsibility for ED patients in hospitals in the Canadian province of Ontario.

Study Design

Sixty-two percent of stakeholders responded to a mail survey regarding the importance of 104 potential ED performance indicators. Descriptive and inferential statistics are used to explore the interests of each stakeholder group and to compare interests across the five groups.

Principal Findings

Emergency department stakeholders are primarily interested in indicators that focus on their role and capacity to provide care. Key differences exist between hospital and nonhospital stakeholders. Physicians' mean ratings of the importance of ED performance measures were lower than the mean ratings in the other stakeholder groups.

Conclusions

Emergency department performance interests are not homogeneous across stakeholder groups, and evaluating performance from the perspective of any one stakeholder group will result in unbalanced assessments. Community-based stakeholders, a group frequently excluded from commenting on ED performance, provide important insights into ED performance related to the external environment and the broader continuum of care.

Keywords: Emergency department performance, multiple stakeholders, quality improvement

The ability of emergency departments (EDs) to respond to patient care needs is an important public policy issue. In the current environment of health system restructuring and renewal, EDs are challenged to be flexible and to adapt to changing models of care delivery, while at the same time remaining stable and maintaining controlled processes that contribute to the achievement of defined goals and efficiencies. Despite wide recognition of the importance of health care performance measurement, and the understanding that measurement is crucial for quality improvement (Nash 1998), ED performance has not been systematically evaluated since Georgopoulos (1986) studied the relationship between organizational structure and performance in EDs.

One of the most striking features of the ED literature is the range of factors used to understand and measure ED performance. For example, one of the dominant themes in the ED literature is the issue of overcrowding and access to appropriate care. Much of this literature examines the question of “appropriate” ED utilization (e.g., Chan, Schull, and Schultz 2001; Lucas and Sanford 1998) and demonstrates the use of process improvement strategies to increase organizational capacity (e.g., Drake 1998; Espinosa, Treiber, and Kosnik 1997). Increased access to primary care is also advocated as a mechanism to reduce overcrowding (e.g., Grumbach, Keane, and Bindman 1993). Alternatively, Babcock Irvin, Wyer, and Gerson (2000) argue that EDs should expand their role to provide health promotion and prevention activities (e.g., alcohol screening and intervention, HIV screening and referral) for high-risk high-prevalence patient populations who do not have access to an ongoing source of primary care.

Although past research on selected aspects of ED performance such as that illustrated above provides substantial insights into the processes and outcomes associated with ED care, and the challenges associated with assessing ED performance, several gaps remain. First, limited attempts have been made to place this work within a broader theoretical framework. In the absence of such a framework, much of the ED literature focuses on the use of isolated measures to evaluate the responses of individual EDs to environmental demands. Second, while it is widely recognized that improvements in the organization and management of ED care need to be considered in relationship to the broader health system, much of the work that speaks to health system integration is virtually silent on the changing role of EDs (e.g., Shortell et al. 1996). Finally, with the exception of performance related to patient satisfaction, the ED literature is primarily concerned with performance from the perspective of staff dedicated to the ED. Performance interests and expectations of adjunct providers are not considered.

Organizational scholars remind us that full and balanced evaluations of performance must consider a variety of indicators and dimensions that reflect the organization's functional and environmental uniqueness (Cameron and Whetten 1983; Sicotte et al. 1998). Moreover, the multiple constituent model of organizational performance reminds us that conceptions of performance are inherently subjective and are based on an individual's values and preferences (Zammuto 1984).

Notwithstanding wide recognition of the importance of identifying and responding to a variety of stakeholder information needs when assessing performance (e.g., Alserver, Richey, and Lima 1995; Slovensky, Fottler, and Houser 1998), few studies have systematically looked at stakeholder interests in the selection of evaluative criteria. Jun, Peterson, and Zsidisin (1998) conducted three homogeneous focus groups with patients, administrators, and physicians in a single midsize U.S. hospital to define the important dimensions of quality. The three stakeholder groups expressed divergent perspectives on the importance of various dimensions of quality: (1) patients emphasized courtesy, communication, and responsiveness; (2) administrators focused on staff competence, understanding customers, and collaboration; and (3) physicians emphasized technical attributes of quality including staff competence and patient outcomes. Zinn, Zalokowski, and Hunter (2001) used the Delphi technique with four homogeneous external stakeholder groups (hospital executives, managed care executives, referring physicians, laboratory regulators) and one internal stakeholder group (laboratory managers) to identify indicators of laboratory performance. While stakeholder priorities varied across the five groups, stakeholders subjected to common environmental pressures had overlapping performance priorities.

Despite evidence that the performance interests of stakeholder groups vary, in reality, the selection of performance measures has been dominated by data feasibility (Oakley Davies and Marshall 1996). Furthermore, most performance reports are created for multiple users (providers, payers, consumers, administrators) who are viewed as one big cluster, rather than different stakeholder groups with unique performance interests and expectations (e.g., Marshall et al. 2000).

In response to calls to pay more attention to the ways in which performance measurement activities can meet a diversity of ED stakeholder interests (e.g., Scanlon et al. 2001), this study contributes to the health care performance measurement literature by (1) providing insight into the performance interests of multiple stakeholders associated with the management and delivery of ED care, and (2) developing an ED performance framework and set of indicators that reflect these interests. The study draws on the Competing Values Framework of organizational effectiveness (Quinn and Rohrbaugh 1981, 1983) to describe and analyze ED stakeholders' perspectives (physicians, nurses, managers, home care providers, and prehospital care personnel) on the indicators necessary to evaluate ED performance.

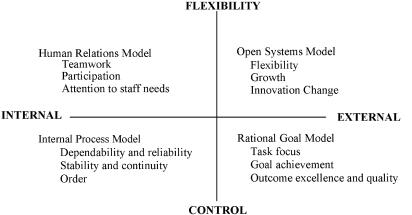

As illustrated in Figure 1, the Competing Values Framework (CVF) consists of four quadrants created by the intersection of two axes. The horizontal axis is related to the focus of the organization, and ranges from an internal micro-emphasis on people in the organization, to an external macro-emphasis on organizational survival. The vertical axis is related to the structure of the organization, and reflects preferences for flexibility versus control in organizational structuring. Each of the four quadrants represents a dominant conceptual model of organizational performance: rational goal, human relations, open systems, and internal process. The rational goal model reflects the view that organizational performance is related to goal achievement—clear direction will lead to desired outcomes. The human relations model reflects the view that organizational performance is related to the participation and involvement of staff. The open systems model emphasizes adaptation to economic, social, and political environments. The internal process model reflects the view that organizational performance is related to stability and control. In this study, the CVF is used as a structure to articulate the explicit performance perspectives held by various ED stakeholders.

Figure 1.

The Competing Values Framework of Organizational Effectiveness (adapted from Quinn and Rohrbaugh 1983).

Methods

Sample and Questionnaire Administration

Cross-sectional survey methods were used to describe and analyze stakeholders' perspectives on the indicators necessary to evaluate ED performance. Two groups of stakeholders were surveyed: hospital stakeholders (physicians, nurses, and managers) and community stakeholders (home care and paramedics). To identify the internal stakeholder sampling units, EDs were stratified into three groups (teaching, community, and small) based on the Joint Policy and Planning Commission (1997) definition of hospital type.1 Estimates of the sample size and design effect were used to determine a realistic number of sampling units (EDs) and the corresponding stakeholder group sample size. The sampling units (15 teaching, 20 community, and 20 small) were randomly selected from the three strata. In the winter of 2001, a contact person at each hospital received a letter describing the project and asking for the names and role titles of ED nurses, physicians, and frontline and program managers. The physician and nurse sample (teaching n =80, community n =60, small n =60) was randomly selected from the list of potential respondents for each hospital type. Because the population of frontline and senior managers was only nine more than the required sample size, the entire population was surveyed.

The community sample was identified from two sources. In the winter of 2001, Community Care Access Centre2 (CCAC) administrators were sent a letter outlining the purpose of the study and asking for the names and workplace addresses of frontline ED home care case managers. The home care sample included the entire population of CCAC senior managers (n =40) and home care case managers (n =209) within the 40 (93 percent) CCACs that responded to the request to participate in the research. To compile the prehospital sample, the Ontario Paramedic Association provided a random sample of paramedics from their list of active members (n =200). The sample also included full-time clinical program directors (n =7) and administrative staff (n =45) from Ontario's 26 base hospital programs. Survey data were collected between March and May 2001. Questionnaires were mailed along with a cover letter. A follow-up reminder was sent two weeks later and a second mailing was sent to all nonrespondents four weeks after that. Six hundred and eighty-five stakeholders returned a study questionnaire for an overall response rate of 62 percent. The physician, nurse, and manager response rates were 47 percent, 61 percent, and 66 percent, respectively. The home care response rate was 76 percent and the prehospital care response rate was 59 percent. The difference in response rate by hospital type was not significant (χ² =5.93; df =2; p =.051); however, the physician stakeholder group had a significantly lower response rate than the other four stakeholder groups (χ² =36.404; df =4; p <.001).

Measures

A three-step modified nominal group process was used with a multidisciplinary expert panel to develop the study questionnaire. In step one, a pre-meeting questionnaire was used to obtain panelist feedback on 187 potential ED performance indicators derived from the ED and health services literature. Step two involved a one-day meeting in which panelists provided assessments of the usefulness (Turpin et al. 1996) and feasibility (McGlynn 1998) of the indicators. In step three, panelists selected a subset of 104 indicators for inclusion in the study questionnaire.3 The questionnaire was pilot-tested and revised before distribution.

Respondents were asked to rate the importance of each indicator (i.e., the extent to which the indicator raises important questions related to an ED's ongoing ability to respond to patient needs) using a seven-point Likert-type response scale (Lipsey 1990). Response options ranged from not very important (1) to extremely important (7).

Analysis

The data are collected and analyzed at the level of the stakeholder group. The Intraclass Correlation Coefficient (two-way random effects model) for each of the five stakeholder groups ranged from 0.92 (nurses) to 0.99 (home care) supporting analysis at the stakeholder level.

Because the primary goal of the study was to provide information at the most relevant unit possible to guide improvement activities (Donaldson and Nolan 1997), the analysis focused on individual indicators.4 Descriptive statistics were used to analyze the sample characteristics, to compare the relative importance of the indicators across the five stakeholder groups, and to identify a subset of the most important indicators for detailed analysis. The 16 indicators receiving the highest mean importance rating within each of the five stakeholder groups are defined as the key ED performance indicators (39 unique indicators in total, or 38 percent of the indicators included in the survey). The ranked lists were closely examined to consider the effect of selecting a different number of indicators and whether there was a logical break in the data that would appear less arbitrary. In the absence of such a break point, selection of the top 16 indicators is consistent with previous reports of stakeholder interests (e.g., Cleary 2000; Cullen and Calvert 1995). Moreover, in the absence of evidence about the optimum number of indicators to be included in performance reports, selection of the top 16 indicators balanced the tension between comprehensiveness and parsimony.
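The selection rule described above (take the top-ranked indicators within each stakeholder group, then pool them) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `top_k` and `key_indicators`, the tie-breaking rule, and the toy ratings are assumptions made for the example and are not taken from the study, which used k = 16 across 104 indicators.

```python
from typing import Dict, List, Set


def top_k(means: Dict[str, float], k: int) -> List[str]:
    """Return the k indicators with the highest mean rating.

    Ties are broken alphabetically by indicator name (an assumption;
    the study does not state how ties were handled).
    """
    ranked = sorted(means.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:k]]


def key_indicators(group_means: Dict[str, Dict[str, float]], k: int) -> Set[str]:
    """Pool the top-k indicators of every stakeholder group into one set."""
    selected: Set[str] = set()
    for means in group_means.values():
        selected.update(top_k(means, k))
    return selected


# Hypothetical toy ratings for two groups and four indicators.
toy_ratings = {
    "physicians": {"A": 6.4, "B": 5.7, "C": 4.8, "D": 5.9},
    "home_care": {"A": 6.4, "B": 6.0, "C": 6.5, "D": 5.8},
}

pooled = key_indicators(toy_ratings, k=2)
print(sorted(pooled))
```

Because groups share some priorities and differ on others, the pooled set is larger than k but smaller than k times the number of groups, which is how five lists of 16 can yield 39 unique indicators.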

Analysis of variance was used to compare the mean for each of the 39 indicators across the stakeholder groups. Prior to conducting the ANOVAs, the data were examined to assess the assumptions of normality and equality of variance.5 Dunnett's C procedure was used for post hoc multiple comparisons. Confidence intervals were set at the 95 percent level, and p-values of less than .05 were considered significant.
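For readers who want to see the arithmetic behind a one-way ANOVA, the sketch below computes the F statistic from group summary statistics (n, mean, SD) rather than from raw responses. It rests on several assumptions: the function name `anova_f_from_summary` is hypothetical, the group sizes are borrowed from Table 1 (the per-indicator n may differ because of missing responses), and the means and SDs are those reported for indicator 38 in Table 2. It does not reproduce the study's post hoc comparisons, which used Dunnett's C.

```python
from typing import Sequence, Tuple


def anova_f_from_summary(
    ns: Sequence[int], means: Sequence[float], sds: Sequence[float]
) -> Tuple[float, int, int]:
    """One-way ANOVA F statistic from per-group n, mean, and SD.

    Returns (F, df_between, df_within).
    """
    if not (len(ns) == len(means) == len(sds)) or len(ns) < 2:
        raise ValueError("need matching summaries for at least two groups")
    total_n = sum(ns)
    grand_mean = sum(n * m for n, m in zip(ns, means)) / total_n
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m in zip(ns, means))
    # Within-group sum of squares, recovered from each group's variance.
    ss_within = sum((n - 1) * s ** 2 for n, s in zip(ns, sds))
    df_between = len(ns) - 1
    df_within = total_n - len(ns)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Assumed inputs: group sizes from Table 1; means and SDs for indicator 38
# (physicians, nurses, managers, home care, prehospital) from Table 2.
group_ns = [91, 120, 133, 187, 138]
group_means = [4.93, 5.43, 5.26, 6.46, 4.74]
group_sds = [1.30, 1.23, 1.26, 0.67, 1.50]

f_stat, df_b, df_w = anova_f_from_summary(group_ns, group_means, group_sds)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

The pooled within-group variance used here assumes roughly equal variances across groups; where that assumption fails, a procedure that does not pool variances, such as the Dunnett's C comparisons the study reports, is the more defensible choice.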

Results

For the 685 respondents, the average tenure in their current role ranges from a minimum of about six years for home care respondents (range from 1 to 19 years) to a maximum of almost 10 years for physician respondents (range from 1 to 34 years). Prehospital care respondents have the shortest average tenure in health care (14 years with a range from 1 to 28 years) while managers have the longest average tenure in health care (23 years with a range from 1 to 31 years). Just over one-quarter of physicians (28 percent) are certified in emergency medicine. Fewer than one-third of the managers (32 percent) and 12 percent of the community respondents are prepared at the master's level or higher; 37 percent of nurses and 34 percent of prehospital care respondents are prepared at the baccalaureate level or higher.

Table 1 provides mean importance ratings and zero-order correlation coefficients across all 104 indicators for the five stakeholder groups. Physician stakeholders have the lowest mean importance ratings and the greatest variation for the 104 indicators. While physician ratings were the lowest for approximately 70 percent of the indicators, their ratings were not lower on all indicators. Nurse and home care stakeholder ratings were the highest for approximately 76 percent of the indicators. The highest correlation is between the nurse and manager stakeholders, and the lowest correlations are between home care stakeholders and the other four stakeholder groups.

Table 1.

Mean, Standard Deviation, Range, and Pearson Correlations for Stakeholder Groups

| Stakeholder Group | N | Mean | SD | Range | Physicians | Nurses | Managers | Home Care |
|---|---|---|---|---|---|---|---|---|
| Physicians | 91 | 4.97 | .63 | 3.20 | | | | |
| Nurses | 120 | 5.58 | .54 | 2.46 | .801* | | | |
| Managers | 133 | 5.35 | .56 | 2.50 | .809* | .815* | | |
| Home Care | 187 | 5.55 | .50 | 2.20 | .621* | .638* | .588* | |
| Prehospital | 138 | 5.25 | .56 | 2.60 | .814* | .769* | .668* | .626* |

*Correlation is significant at the 0.005 level (two-tailed).
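The correlations in Table 1 are computed between pairs of stakeholder groups across the indicator means. A minimal sketch of that calculation follows. The `pearson` helper is written for illustration only, and the example uses just the first five indicator means for physicians and nurses from Table 2, so it is an assumption-laden toy and will not reproduce the coefficients in Table 1, which are based on all 104 indicators.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length series."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Mean importance ratings for indicators 1 to 5 in Table 2 (illustrative subset).
physician_means = [6.42, 5.69, 4.78, 5.65, 5.55]
nurse_means = [6.63, 6.18, 6.45, 6.24, 6.14]

r = pearson(physician_means, nurse_means)
print(f"r = {r:.3f}")
```

Each indicator contributes one pair of points, so the coefficient summarizes how similarly two groups order the indicators by importance, not how close their absolute ratings are.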

Stakeholder Performance Interests

Table 2 shows the mean importance rating, standard deviation, and rank for the 39 indicators for each stakeholder group. In addition, shading in the corresponding column indicates the top 16 indicators for each stakeholder group. Analysis of each stakeholder group's list of key indicators suggests that stakeholders are primarily interested in indicators that focus on their role and capacity to provide ED care. Nurse and manager stakeholders place high priority on indicators that focus on critical processes of care as well as on indicators that focus on workplace capacity and patient satisfaction. Managers are also interested in ED utilization and the cost of ED care. While interested in critical processes of care and patient satisfaction outcomes, home care stakeholders place highest priority on indicators that focus on ED linkage with community-based primary care and home care providers. The prehospital stakeholders place a strong focus on indicators associated with monitoring and controlling critical processes of care, including several ambulance-related indicators. Physician stakeholders are primarily focused on indicators that reflect critical processes of care and workplace activities and costs. Like prehospital stakeholders, physicians did not include patient satisfaction indicators in their top 16 indicators.

Table 2.

Mean, Standard Deviation, Rank, and ANOVA Results of Top 39 Indicators by Stakeholder Group

| Model of Performance/Indicator | Physician (P) Mean | P SD | P Rank | Nurse (N) Mean | N SD | N Rank | Manager (M) Mean | M SD | M Rank | Home Care (H) Mean | H SD | H Rank | Prehospital (Ph) Mean | Ph SD | Ph Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. Time to thrombolysis for AMI*** | 6.42 | 0.72 | 1 | 6.63 | 0.97 | 1 | 6.55 | 0.75 | 1 | 6.4 | 1.18 | 3 | 6.71>P, C | 0.75 | 1 |
| 2. Time to MD assessment** | 5.69 | 1.13 | 10 | 6.18>P | 0.89 | 11 | 6.15>P | 0.99 | 5 | 6.03 | 1.31 | 14 | 6.17>P | 0.96 | 4 |
| 3. Time to thrombolysis for stroke*** | 4.78 | 2.11 | 69 | 6.45>P, M | 1.16 | 3 | 5.84>P | 1.77 | 23 | 6.45>P, M | 1.14 | 2 | 6.34>P | 1.39 | 2 |
| 4. Availability of diagnostic testing** | 5.65 | 1.27 | 15 | 6.24>P, M | 0.81 | 8 | 5.8 | 1.1 | 27 | 5.93 | 1.24 | 25 | 6.04 | 0.95 | 9 |
| 5. Time to triage*** | 5.55 | 1.24 | 20 | 6.14>P | 1.02 | 16 | 6.11>P | 1.05 | 8 | 5.98 | 1.35 | 18 | 6.2>P | 1.05 | 3 |
| 6. Time to nursing assessment** | 5.57 | 1.12 | 18 | 6.19>P | 1 | 10 | 6.11>P | 1.01 | 7 | 5.98 | 1.33 | 17 | 6.04>P | 1.05 | 8 |
| 7. Cycle time for diagnostic tests | 5.85 | 1.02 | 5 | 6.07 | 0.88 | 20 | 5.92 | 1.03 | 14 | 5.75 | 1.29 | 40 | 5.82 | 1.1 | 16 |
| 8. Availability of MD consultants*** | 5.59 | 1.25 | 17 | 6.16>P, M, Ph | 0.9 | 14 | 5.71 | 1.33 | 31 | 6.01 | 1.04 | 16 | 5.75 | 1.04 | 19 |
| 9. Time to pain management*** | 5.38 | 1.04 | 29 | 6.29>P, M, Ph | 0.85 | 6 | 5.86>P | 1.15 | 20 | 6.22>P | 1.17 | 7 | 5.86>P | 1.22 | 13 |
| 10. Time from admission to transfer to inpatient unit*** | 5.66 | 1.21 | 13 | 6.23>all | 0.92 | 9 | 5.83 | 1.25 | 24 | 5.53 | 1.5 | 61 | 5.7 | 1.22 | 23 |
| 11. Inpatient days in ED | 5.72 | 1.44 | 9 | 6.03 | 1.44 | 23 | 5.57 | 1.78 | 38 | 5.63 | 1.49 | 54 | 5.6 | 1.4 | 27 |
| 12. Ambulance arrivals from other EDs*** | 5.09 | 1.67 | 48 | 5.23 | 1.92 | 78 | 4.58 | 2.18 | 93 | 5.38>M | 1.85 | 73 | 5.83>P, M | 1.55 | 15 |
| 13. Critical patients transferred out due to no in-house critical care beds/services | 5.42 | 1.82 | 25 | 5.6 | 1.81 | 53 | 5.56 | 1.61 | 40 | 5.69 | 1.76 | 50 | 6.04 | 1.33 | 10 |
| 14. Ambulance redirects*** | 5.37>M | 1.59 | 31 | 5.07 | 1.9 | 87 | 4.56 | 2.16 | 95 | 5.43>M | 1.85 | 68 | 6.12>all | 1.37 | 5 |
| 15. Time on ambulance redirects*** | 5.06 | 1.52 | 51 | 5.5>M | 1.67 | 63 | 4.64 | 2.05 | 91 | 5.58>M | 1.61 | 59 | 6.01>P, M | 1.27 | 11 |
| 16. Adverse events* | 5.65 | 1.19 | 14 | 6.09>P | 1.2 | 19 | 5.93 | 1.05 | 13 | 6.02 | 1.32 | 15 | 6.1>P | 1.1 | 6 |
| 17. Client satisfaction–pain management*** | 5.41 | 1.2 | 26 | 6.17>P, Ph | 0.84 | 12 | 6.06>P, Ph | 0.87 | 10 | 6.14>P, Ph | 1.1 | 10 | 5.6 | 1.17 | 26 |
| 18. Client satisfaction–outcomes of care*** | 5.56 | 1.25 | 19 | 6.3>P, Ph | 0.77 | 5 | 6.18>P, Ph | 0.87 | 4 | 6.1>P | 1.1 | 12 | 5.76 | 1.19 | 18 |
| 19. Client satisfaction–quality of care*** | 5.54 | 1.26 | 21 | 6.25>P, H, Ph | 0.81 | 7 | 6.27>P, H, Ph | 0.8 | 3 | 5.93 | 1.16 | 24 | 5.8 | 1.09 | 17 |
| 20. Client satisfaction–nursing care*** | 5.16 | 1.28 | 42 | 6.1>P, H, Ph | 0.97 | 18 | 6.06>P, Ph | 0.89 | 11 | 5.74>P | 1.24 | 41 | 5.43 | 1.16 | 41 |
| 21. Client satisfaction–physician care*** | 5.27 | 1.31 | 36 | 5.98>P, Ph | 1.01 | 27 | 6.07>P, Ph | 0.85 | 9 | 5.81>P | 1.2 | 34 | 5.57 | 1.19 | 32 |
| 22. Visits by triage category*** | 5.39 | 1.67 | 27 | 6.04>P, Ph | 1.07 | 22 | 6.41>all | 0.92 | 2 | 5.69 | 1.44 | 46 | 5.53 | 1.33 | 36 |
| 23. Total cost of ED care*** | 5.1 | 1.41 | 47 | 5.48>Ph | 1.15 | 64 | 5.92>P, N, H | 1.21 | 15 | 5.4 | 1.64 | 69 | 5.06 | 1.39 | 70 |
| 24. Staff satisfaction*** | 5.99 | 0.9 | 3 | 6.55>all | 0.76 | 2 | 6.14 | 0.84 | 6 | 5.96 | 1.3 | 22 | 6.08 | 1 | 7 |
| 25. Total worked nursing hours*** | 5.29 | 1.42 | 35 | 6.17>P, M, H, Ph | 1.01 | 13 | 5.91>P, H | 1.15 | 16 | 5.44 | 1.66 | 65 | 5.58 | 1.29 | 30 |
| 26. Nursing turnover* | 5.66 | 1.18 | 12 | 5.98>P, Ph | 1.26 | 25 | 5.97>Ph | 1.05 | 12 | 5.86 | 1.37 | 29 | 5.65 | 1.28 | 24 |
| 27. Recruitment/retention activities** | 5.76 | 0.96 | 6 | 6.15>P, H, Ph | 1 | 15 | 5.81 | 1.23 | 26 | 5.71 | 1.45 | 44 | 5.58 | 1.33 | 29 |
| 28. Physician workload | 5.87 | 0.9 | 4 | 5.91 | 1.14 | 35 | 5.91 | 0.99 | 17 | 5.69 | 1.47 | 47 | 5.88 | 1.1 | 12 |
| 29. Follow-up for x-ray discrepancies | 5.67 | 0.12 | 11 | 5.56 | 1.18 | 59 | 5.66 | 1.33 | 34 | 5.84 | 1.2 | 32 | 5.63 | 0.1 | 25 |
| 30. Physician turnover | 5.74 | 1.11 | 7 | 5.68 | 1.29 | 48 | 5.87 | 1.11 | 19 | 5.8 | 1.34 | 35 | 5.73 | 1.29 | 21 |
| 31. Opportunities for staff training/certification*** | 5.73 | 1.02 | 8 | 6.43>all | 0.89 | 4 | 5.85 | 1.18 | 22 | 5.96 | 1.33 | 23 | 5.84 | 1.26 | 14 |
| 32. Treatment information is provided to family MD*** | 5.6 | 1.26 | 16 | 5.63 | 1.2 | 50 | 5.58 | 1.33 | 37 | 6.11>all | 1.12 | 11 | 5.3 | 1.42 | 51 |
| 33. Access to primary care in the community*** | 6.11>Ph | 1.03 | 2 | 5.87 | 1 | 38 | 5.69 | 1.28 | 33 | 6.35>N, M, Ph | 0.87 | 5 | 5.57 | 1.38 | 31 |
| 34. ED and community staff examine scope of care*** | 4.7 | 1.54 | 75 | 5.4>P | 1.34 | 71 | 5.05 | 1.38 | 74 | 6.39>all | 0.87 | 4 | 5.17 | 1.38 | 60 |
| 35. Community participates in ED planning/evaluation*** | 4.37 | 0.14 | 84 | 5.15 | 1.35 | 81 | 4.82 | 0.11 | 83 | 6.15>all | 0.07 | 9 | 4.6 | 0.1 | 89 |
| 36. Return visits linked to breakdown of in-home care*** | 4.73 | 1.77 | 71 | 5.14 | 1.4 | 82 | 5.05 | 1.51 | 75 | 6.19>all | 1.06 | 8 | 4.74 | 1.65 | 83 |
| 37. Availability/use of community staff*** | 4.96 | 1.3 | 60 | 5.61>P, Ph | 1.16 | 51 | 5.3>Ph | 1.28 | 62 | 6.29>all | 0.88 | 6 | 4.57 | 1.47 | 90 |
| 38. Appropriate patients are referred to community staff*** | 4.93 | 1.3 | 61 | 5.43>P, Ph | 1.23 | 70 | 5.26>Ph | 1.26 | 63 | 6.46>all | 0.67 | 1 | 4.74 | 1.5 | 82 |
| 39. Return visits that result in hospitalization*** | 5.14 | 1.21 | 43 | 5.58>P | 1.16 | 56 | 5.51 | 1.27 | 47 | 6.07>all | 1.04 | 13 | 5.4 | 1.36 | 44 |

*p < .05; **p < .01; ***p < .001.

Post hoc analyses are summarized using a greater-than (>) sign to denote significant group differences.

Table 2 indicates that significant stakeholder effects were detected for 33 of the 39 indicators. Three key patterns emerge when examining the ANOVA differences between the stakeholder groups reported in Table 2. First, the importance ratings are not consistently higher for any one of the five groups, and a systematic pattern of stakeholder subgroups does not emerge from the data. Second, physician stakeholder ratings of importance were significantly lower than those of at least one other stakeholder group on 32 of the 39 indicators. Third, 8 of the 17 indicators that were identified by only one stakeholder group were significantly more important for that one stakeholder group. This finding suggests that the 8 indicators represent unique stakeholder priorities.

Emergency Department Performance Framework

The second objective of this research was to propose an ED performance framework and set of indicators that effectively integrate stakeholder perspectives. The proposed ED performance framework and set of indicators is presented in Figure 2. Shading in the corresponding column indicates the top 16 indicators for each stakeholder group.

Figure 2.

ED Performance Framework

The ED performance framework maintains continuity with the Competing Values Framework (CVF) by organizing the top 39 indicators into the four effectiveness models depicted in the CVF in Figure 1. Consistent with the CVF, the proposed ED performance framework has four quadrants that reflect the four theoretical models of organizational performance: (1) Critical Processes of Care; (2) Workplace Activities and Outcomes; (3) Outcomes of Care; and (4) Linkages with Community Providers. The Critical Processes of Care quadrant, which represents the internal process model of effectiveness, places a great deal of emphasis on monitoring and controlling processes of ED care. This quadrant also includes indicators that reflect ED access to hospital resources that influence ED capacity. The Workplace Activities and Outcomes quadrant, which represents the human relations model, emphasizes workload, costs, and outcomes. This quadrant also includes indicators that focus on staff development, recruitment, and retention. The Outcomes of Care quadrant, which represents the rational goal model, focuses on two approaches to assess outcome quality: patient perspectives and adverse events. Finally, the Linkages with Community Providers quadrant, which represents the open systems model, places emphasis on the links between the ED and the postacute environment.

Discussion

The goal of this study was to gain an understanding of the performance interests of multiple stakeholders associated with the management and delivery of ED care, and to develop a performance framework and set of indicators that reflect these interests. Overall, the results indicate that evaluation of performance from the perspective of any one stakeholder group will result in an unbalanced assessment of ED performance. Moreover, the results suggest that the key differences in stakeholders' perspectives of important dimensions of ED performance are between hospital and nonhospital stakeholders. Although previous work has acknowledged the need to balance the dimensions of performance (e.g., Baker and Pink 1995), none has actually explored opportunities to balance multiple dimensions and multiple stakeholder interests in an integrated framework. Organization of the 39 most important ED performance indicators within the CVF provides a useful and coherent model that demonstrates the complex nature of ED performance, and a structure to articulate the explicit performance perspectives held by multiple stakeholders. The integrated framework illustrates the potentially paradoxical nature of ED performance by showing how emphasis on improving performance from any one perspective may have an impact on ED performance from another perspective.

As illustrated in Figure 2, a major focus of the key indicators is internal ED operations and outcomes of care. This finding is highly consistent with the primary role of emergency medicine that is widely described in the literature: the initial reception and early management of patients in emergent or urgent conditions (Dailey 1998). The study questionnaire also included four indicators that focused specifically on an expanded ED role (health promotion, community-based advocacy, regional advanced directives, collaboration with long-term care facilities), which were a low priority for all five stakeholder groups. These results suggest that, relative to the other indicators, the stakeholders in this study did not place a priority on expanding the role of EDs as suggested by Babcock Irvin, Wyer, and Gerson (2000).

More generally, the findings have important implications for the development and use of performance reports for quality improvement activities. Marshall et al. (2000) recently asserted that provider organizations might be the most responsive to the use of performance data for quality improvement. However, provider organizations are often viewed as one big cluster, rather than as shifting coalitions of interest groups who are engaged in constant negotiation and renegotiation of the conditions of their participation (Cyert and March 1966). This research provides empirical evidence to support the suggestion to break apart the “provider” stakeholder group when considering the development and use of performance measures (Soberman Ginsburg 2003).

Clinical and managerial leaders can use the ED performance framework advanced in this study to respond to the challenges inherent in multidisciplinary quality improvement activities in three ways: (1) as a structure to explore convergent stakeholder interests; (2) as a structure to explore divergent stakeholder interests; and (3) as a structure to reconcile stakeholder differences. Each of these is briefly addressed.

Exploring Convergent Stakeholder Interests

The proposed framework illustrates considerable overlap in stakeholder interests, especially in relation to internal process indicators, which are often the focus of process improvement activities. Teams of organizational employees are the primary medium through which process improvement takes place (Berwick, Godfrey, and Roessner 1991) and the performance interests of the team members can influence the degree to which quality improvement teams are successful (LaVallee and McLaughlin 1994). However, successful quality improvement activities require a strong commitment of upper management through direct involvement in quality management activities. Despite the need for senior management support, the involvement of senior managers in defining improvement objectives can be problematic when workers and managers view quality differently. This reality is reflected in the comments of one nurse respondent who stated:

Generally speaking, frontline workers are expected to follow direction only, not have input into the direction the hospital is going. While I may consider quality of care to be important, my supervisor has a different slant on the type of quality care that is expected. Both are important, but it appears the manager's slant to quality is what is chosen, not the frontline worker.

Thus, the integrated framework provides a means to move beyond the managerial view of ED performance by reflecting interests of multiple care providers. By identifying common stakeholder interests and performance values, the framework provides a starting point for the development of improvement teams and a unifying focus for improvement activities.

Exploring Divergent Stakeholder Interests

The framework illustrates how evaluation of performance from the perspective of only one stakeholder group would result in an unbalanced evaluation of ED performance, which may limit the ED's ability to adapt to changing environmental demands. Because stakeholders may be constrained by their mental models, and display a tendency to exploit well-established routines rather than explore new schemes (Miller 1993), stakeholders may develop myopia in relation to their perceptions of important aspects of ED performance (e.g., Levinthal and March 1993). The identification of unique and divergent stakeholder interests in an integrated framework may enhance organizational learning and change by enabling stakeholders to see different conceptions of performance, thereby helping them to discover new adaptive opportunities. Moreover, the integrated framework provides legitimacy for the assessment of ED performance from the perspective of other stakeholders, and provides an opportunity for stakeholders to consider the important measures together and to see how performance in one area may affect, or be achieved at the expense of, performance in another. For example, assessment of ED performance from an isolated perspective of home care stakeholders would focus on linkages with external providers, but exclude indicators that focus on internal workplace activities that are an integral part of an ED's capacity to respond to patient needs. By including performance measures that are valued primarily by home care stakeholders in their comprehensive assessment of ED performance, hospital stakeholders would gain a greater understanding of how the roles and functions of the ED are influenced by events that happen in the community prior to the patient's arrival, and could thus target activities to improve the ability to effectively discharge ED patients into the community.

Reconciling Stakeholder Differences

The finding that physician stakeholders had lower ratings on many of the indicators highlights the need for a structure within which stakeholder differences can be reconciled. There are two plausible explanations for the relationship between physician stakeholder ratings and those of other stakeholder groups. First, the low physician ratings may reflect physicians' skeptical views about the collection and use of performance data (Marshall et al. 2000).

Second, physicians may think that there is insufficient evidence to link many of the indicators included in the study to high quality ED care. When the indicators in the study are assessed against the criteria proposed by McGlynn (1998; see Notes), it could be argued that only two study indicators meet these criteria: time to thrombolysis for acute myocardial infarction (AMI) and time to thrombolysis for stroke. Time to thrombolysis for AMI was rated as the most important indicator for physicians, nurses, managers, and prehospital stakeholders, and third for home care stakeholders. Time to thrombolysis for stroke was ranked as the second most important indicator for prehospital and home care stakeholders, third for nurses, twenty-third for managers, and sixty-ninth for physician stakeholders. How might these perceptions be reconciled? Treatment of AMI with intravenous thrombolytic agents is a widely recognized standard of emergency care, based on the strong body of evidence linking timely treatment to enhanced patient outcomes. In contrast, there is an ongoing controversy about the treatment of acute strokes with thrombolytic agents, which is reflected in the low rating of this indicator by physician stakeholders (69th out of 104). The results suggest that low physician ratings might reflect specialized technical knowledge, which is not shared by the other stakeholder groups, and a greater interest in evidence-based clinical decision making. The higher ratings of importance by the other stakeholder groups may reflect an emphasis on the timeliness of care rather than the clinical efficacy of care. The dramatic difference in stakeholder ratings of the importance of thrombolytic therapy in the care of stroke patients illustrates the value of displaying divergent stakeholder viewpoints. Ultimately, exploring and reconciling differences will require closer examination of the influence of clinical knowledge and evidence on stakeholder assessments of the performance indicators.

Certain limitations of the study should be kept in mind when interpreting the results. First, although an overall response rate of 62 percent was achieved across the five stakeholder groups, physician stakeholders are underrepresented in the full respondent group. Moreover, physicians reported the lowest mean scores and the greatest variation in ratings for the 104 indicators. One possible explanation for this variation is that physicians understood or interpreted the response scale differently. Another is that the lower physician mean scores reflect the true importance that physicians place on the study indicators. As discussed above, the health care performance measurement literature has focused on the importance of identifying causal antecedents of quality and the importance of establishing empirical links between performance measures and outcomes of care. Perhaps physicians believe that there is insufficient evidence to support linking many of the survey indicators to high-quality ED care. The absence of a strict clinical outcome focus may also have contributed to a lower response rate by physicians, and to lower physician ratings of importance for many of the indicators. Moreover, this study relied on self-report questionnaire data, which are subject to common method and social desirability biases. As we try to learn more about stakeholder interests in performance measurement, and physician interests in particular, future studies will need to examine factors that influence physician attitudes to performance measurement, including the influence of evidence-based practice.

Selecting the 16 highest-rated indicators (highest mean scores) for each stakeholder group as the subset of indicators for detailed analysis represents both a strength and a weakness of the study. Focusing on the top 16 indicators allows us to examine the most important indicators for each stakeholder group, compare the rankings of importance among the groups, and explore stakeholder priorities independent of the concerns raised about respondents' use of the response scale. However, because this approach to indicator selection involves the use of an arbitrary cut-off point, the difference in the means between the 16th and 17th item in each group's list may be very small, and conclusions cannot be made about differences between these two performance indicators. Thus, the results of the analysis must be interpreted to reflect this methodological limitation.
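The cut-off concern can be made concrete with a minimal sketch, which is an illustration and not the study's analysis code. It ranks indicators by mean rating for one stakeholder group, keeps the top k, and reports the gap between the k-th and (k+1)-th means. The function name, the indicator labels other than time to thrombolysis for AMI, and the ratings are all hypothetical, as is the 7-point scale implied by the example values.

```python
from statistics import mean


def top_k_with_cutoff_gap(ratings, k):
    """Rank indicators by mean rating and report the gap at the cut-off.

    ratings: dict mapping indicator name -> list of individual ratings
             from one stakeholder group (hypothetical data layout).
    Returns (top_k_names, gap), where gap is the difference between the
    k-th and (k+1)-th mean, or None if there is no (k+1)-th indicator.
    """
    means = {name: mean(scores) for name, scores in ratings.items()}
    # Sort by descending mean; break exact ties alphabetically for stability.
    ranked = sorted(means, key=lambda name: (-means[name], name))
    top = ranked[:k]
    gap = means[ranked[k - 1]] - means[ranked[k]] if len(ranked) > k else None
    return top, gap


# Hypothetical ratings for one stakeholder group (illustrative values only).
example = {
    "time_to_thrombolysis_ami": [7, 7, 6],
    "wait_time": [6, 6, 6],
    "staff_turnover": [6, 6, 5],
    "cost_per_visit": [4, 5, 3],
}
top, gap = top_k_with_cutoff_gap(example, k=2)
print(top, round(gap, 3))
```

A small gap signals that the indicator just below the cut-off is practically indistinguishable from the one just above it, which is the limitation described in the preceding paragraph.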

Another threat to generalizability of the findings is related to the organization and management of home care services for ED patients. In Ontario, access to in-home care is managed through a designated organization, which may not be the case in other jurisdictions. While there may be jurisdictional differences in access to in-home care, the implications of the findings for incorporating the perspectives of home care and other community stakeholders to obtain balanced assessments of performance are generalizable to other jurisdictions.

Finally, as noted in the performance measurement literature, issues of feasibility are important aspects of indicator development. The degree to which stakeholder ratings were influenced by perceptions of feasibility is unclear.

In summary, the unique contribution of the proposed ED framework is that it balances stakeholder interests as well as multiple dimensions and indicators of performance. The results demonstrate that ED performance interests are not homogeneous across stakeholder groups, and evaluating performance from the perspective of any one stakeholder group will result in unbalanced assessments. Moreover, external stakeholders, a group whose interests are frequently excluded from efforts to measure performance, provide important insights into ED performance related to the external environment and the integration of EDs with other components of the health care system. By integrating multiple stakeholder interests, the performance framework recognizes the interrelationships between the ED and its internal and external environments, and contributes knowledge of how ED performance is related to performance at other levels in the system.

The literature establishes that performance data have to be rigorously developed, effectively disseminated, and widely used in practice to have a positive impact on quality of care. But less emphasis has been placed on the role of managers in fostering reflective conversation as to how people at all levels interpret “ED quality care” and what influences those interpretations. When stakeholders understand not only what matters to other stakeholders, but why it matters, the stage is set for fruitful and constructive interdisciplinary learning and cooperation. Clinical and administrative leaders can create work environments in which respect, courtesy, and listening flourish as stakeholders engage in these vital conversations. More immediately, managers and clinicians can use the proposed framework to examine the data they currently use to assess ED performance, and the extent to which these data balance both stakeholder interests and multiple dimensions of performance.

Finally, while the proposed framework incorporates different points of view, ultimately it does not provide a mechanism for resolving persistent differences in stakeholder perspectives. Moreover, the proposed framework is provider-centered, and does not incorporate the interests of patients and consumers, the ultimate arbiters of performance. An important topic for further investigation is the examination of patient and consumer ED performance interests and the extent to which they dovetail with various stakeholder interests. In the end, integrating consumer interests into performance frameworks may provide a mechanism for arbitrating competing values.

Notes

Teaching hospitals include acute and pediatric hospitals that belong to the Ontario Council of Teaching Hospitals. Small hospitals include hospitals that generally admit fewer than 3,500 weighted cases, have a referral population of fewer than 20,000 people, and are the only hospital in their community. Community hospitals include any acute care hospitals that do not fit the definition of a small or teaching hospital.

Home care in Ontario falls under the jurisdiction of the Ministry of Health and Long-Term Care (MOHLTC). In 1996 the Ministry established 43 Community Care Access Centers (CCACs) across the province to provide a single point of access for home care and long-term placement services.

A copy of the questionnaire is available from the author.

Exploratory factor analysis (EFA) was conducted to determine the factor structure that underlies stakeholder perceptions of the importance of indicators of ED performance. The EFA supported a 10-factor structure of ED performance (i.e., Workplace Skills and Support, Community Linkages, Ambulance and ED Response, Activity and Costs, Patient Outcomes, Patient Disposition, Staff Costs and Outcomes, Services and Resources, Patient Directed Discharge, and Process Time). The Competing Values Framework was used as a structure to interpret the meaning of the 10 factors, and to group the indicators into one of the four models of performance. Detailed results are available from the author.

Although histograms of each of the 39 variables for each stakeholder group suggested that several of the distributions were left-skewed, given the robustness of the F-test it is assumed that the departures from normality are not large enough to warrant transformation. To address concerns about the distribution of the data, Kruskal-Wallis tests (the nonparametric equivalent of one-way ANOVA) were conducted and the results were compared to the ANOVA results. The findings of the two tests were consistent for all but three indicators (for which the ANOVA results were significant).
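The comparison described in this note can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis: it computes only the one-way ANOVA F statistic and the tie-corrected Kruskal-Wallis H statistic for one indicator across groups, using the Python standard library. The function names and the data are hypothetical, and p-values are omitted because they require F and chi-square distribution functions (available, for example, through `scipy.stats.f_oneway` and `scipy.stats.kruskal`).

```python
from statistics import mean


def anova_f(groups):
    """One-way ANOVA F statistic for a list of groups of ratings.

    Assumes at least two groups and some within-group variation.
    """
    all_values = [x for g in groups for x in g]
    grand = mean(all_values)
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


def average_ranks(values):
    """Map each distinct value to its midrank (tied values share a rank)."""
    ordered = sorted(values)
    ranks = {}
    i = 0
    while i < len(ordered):
        j = i
        while j < len(ordered) and ordered[j] == ordered[i]:
            j += 1
        ranks[ordered[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic with the standard correction for ties.

    Assumes the pooled ratings are not all identical.
    """
    all_values = [x for g in groups for x in g]
    n = len(all_values)
    ranks = average_ranks(all_values)
    rank_term = sum(sum(ranks[x] for x in g) ** 2 / len(g) for g in groups)
    h = 12 / (n * (n + 1)) * rank_term - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over groups of tied values.
    counts = {}
    for x in all_values:
        counts[x] = counts.get(x, 0) + 1
    correction = 1 - sum(t ** 3 - t for t in counts.values()) / (n ** 3 - n)
    return h / correction


# Hypothetical ratings of one indicator from three stakeholder groups.
groups = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(anova_f(groups), kruskal_wallis_h(groups))
```

Because rating scales produce many tied values, the tie correction matters in practice; ratings on a short ordinal scale are also one reason a rank-based check alongside ANOVA is a reasonable precaution.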

McGlynn (1998) proposes that: (1) performance measures should have a significant impact on morbidity and/or mortality; (2) the link between measured processes and outcomes of care should be established empirically; (3) quality in the area measured should be variable or substandard; (4) providers should be able to enhance performance on the measure.

References

- Alserver RN, Ritchey T, Lima NP. Developing a Hospital Report Card to Demonstrate Value in Health Care. Journal for Healthcare Quality. 1995;17(1). doi: 10.1111/j.1945-1474.1995.tb00754.x.

- Babcock Irvin C, Wyer P, Gerson L. Preventive Care in the Emergency Department, Part II: Clinical Preventive Services—An Emergency Medicine Evidence-Based Review. Academic Emergency Medicine. 2000;7(9):1042–54. doi: 10.1111/j.1553-2712.2000.tb02098.x.

- Baker RP, Pink G. A Balanced Scorecard for Canadian Hospitals. Healthcare Management Forum. 1995;8(4):7–13. doi: 10.1016/S0840-4704(10)60926-X.

- Berwick D, Godfrey A, Roessner J. Curing Health Care: New Strategies for Quality Improvement. San Francisco: Jossey-Bass; 1991.

- Cameron KS, Whetten DA. Organizational Effectiveness: A Comparison of Models. New York: Academic Press; 1983.

- Chan B, Schull M, Schultz S. Emergency Department Services in Ontario, 1993–2000. Toronto: Institute for Clinical Evaluative Sciences; 2001.

- Cleary TS. 2000.

- Cullen C, Calvert J. 1995.

- Cyert RM, March JG. A Behavioral Theory of the Firm. Englewood Cliffs, NJ: Prentice Hall; 1966.

- Dailey R. Approach to the Patient in the Emergency Department. In: Rosen P, Barkin R, editors. Emergency Medicine: Concepts and Clinical Practice. St. Louis, MO: Mosby Year Book, Inc; 1998.

- Donaldson MS, Nolan K. Measuring the Quality of Health Care: State of the Art. Joint Commission Journal on Quality Improvement. 1997;23(5):283–92. doi: 10.1016/s1070-3241(16)30319-4.

- Drake D. Cardiac Team: An ED Quality Improvement Success Story. Journal of Emergency Nursing. 1998;24(4):324–8. doi: 10.1016/s0099-1767(98)90105-2.

- Espinosa JA, Treiber PM, Kosnik L. A Reengineering Success Story: Process Improvement in Emergency Department X-Ray Cycle Time, Leading to Breakthrough Performance in the ED Ambulatory Care (Fast Track) Process. Ambulatory Outreach. 1997;(Winter):24–7.

- Georgopoulos B. Organizational Structure, Problem Solving, and Effectiveness: A Comparative Study of Hospital Emergency Services. San Francisco: Jossey-Bass; 1986.

- Grumbach K, Keane D, Bindman A. Primary Care and Public Emergency Department Overcrowding. American Journal of Public Health. 1993;83(3):372–8. doi: 10.2105/ajph.83.3.372.

- Joint Policy and Planning Committee. An Approach for Funding Small Hospitals. 1997.

- Jun M, Peterson R, Zsidisin G. The Identification and Measurement of Quality Dimensions in Health Care: Focus Group Interview Results. Health Care Management Review. 1998;23(4):81–96. doi: 10.1097/00004010-199810000-00007.

- La Vallee R, McLaughlin C. Teams at the Core. In: McLaughlin C, Kaluzny A, editors. Continuous Quality Improvement in Health Care: Theory, Implementation, and Applications. Gaithersburg, MD: Aspen; 1994.

- Levinthal DA, March JG. The Myopia of Learning. Strategic Management Journal. 1993;14(Special Issue):95–112.

- Lipsey MW. Design Sensitivity: Statistical Power for Experimental Research. Newbury Park, CA: Sage; 1990.

- Lucas RHS, Sanford M. An Analysis of Frequent Users of Emergency Care at an Urban University Hospital. Annals of Emergency Medicine. 1998;32(5):563–8. doi: 10.1016/s0196-0644(98)70033-2.

- Marshall NM, Shekelle PG, Leatherman S, Brook RH. The Public Release of Performance Data: What Do We Expect to Gain? A Review of the Evidence. Journal of the American Medical Association. 2000;283(14):1866–74. doi: 10.1001/jama.283.14.1866.

- McGlynn EA. Choosing and Evaluating Clinical Performance Measures. Journal on Quality Improvement. 1998;24(9):470–9. doi: 10.1016/s1070-3241(16)30396-0.

- Miller D. The Architecture of Simplicity. Academy of Management Review. 1993;18(1):116–38.

- Nash DB. Report on Report Cards. Health Policy Newsletter. 1998;11(2):1–3.

- Oakley Davies HT, Marshall MN. Public Disclosure of Performance Data: Does the Public Get What the Public Wants? The Lancet. 1999;353(9165):1639–40. doi: 10.1016/s0140-6736(99)90047-8.

- Quinn RE, Rohrbaugh J. A Competing Values Approach to Organizational Effectiveness. Public Productivity Review. 1981.

- Quinn RE, Rohrbaugh J. A Spatial Model of Effectiveness Criteria: Towards a Competing Values Approach to Organizational Analysis. Management Science. 1983;29(3):363–77.

- Scanlon D, Darby C, Rolph E, Doty H. Use of Performance Information for Quality Improvement. Health Services Research. 2001;36(3):619–41.

- Slovensky D, Fottler M, Houser H. Developing an Outcomes Report Card for Hospitals: A Case Study and Implementation Guidelines. Journal of Healthcare Management. 1998;43(1):15–34.

- Shortell S, Gillies R, Anderson D, Morgan Erickson K, Mitchell J. Remaking Health Care in America. Building Organized Delivery Systems. San Francisco: Jossey-Bass; 1996.

- Sicotte C, Champagne F, Contandriopoulos AP, Barnsley J, Beland F, Leggat SG, Denis L, Bilodeau H, Langley A, Bremond M, Baker GR. A Conceptual Framework for the Analysis of Health Care Organizations' Performance. Health Services Management Research. 1998;11(1):24–48. doi: 10.1177/095148489801100106.

- Soberman Ginsburg L. Factors That Influence Line Managers' Perceptions of Hospital Performance Data. Health Services Research. 2003;38(1):261–86. doi: 10.1111/1475-6773.00115.

- Turpin RS, Darcy LA, Koss R, McMahill C, Meyne K, Morton D, Rodriguez J, Schmaltz S, Schyve P, Smith P. A Model to Assess the Usefulness of Performance Indicators. International Journal for Quality in Health Care. 1996;8(4):321–9. doi: 10.1093/intqhc/8.4.321.

- Whetten D, Cameron SK. Organizational Effectiveness: Old Models and New Constructs. In: Greenberg J, editor. Organizational Behavior: The State of the Science. Hillsdale, NJ: Erlbaum; 1994.

- Zammuto R. A Comparison of Multiple Constituency Models of Organizational Effectiveness. Academy of Management Review. 1984;9(4):606–16.

- Zinn J, Zalokowski A, Hunter L. Identifying Indicators of Laboratory Management Performance: A Multiple Constituency Approach. Health Care Management Review. 2001;26(10):40–53. doi: 10.1097/00004010-200101000-00004.