Abstract

Objective

To review the existing literature (1980–2003) on survey instruments used to collect data on patients' perceptions of hospital care.

Study Design

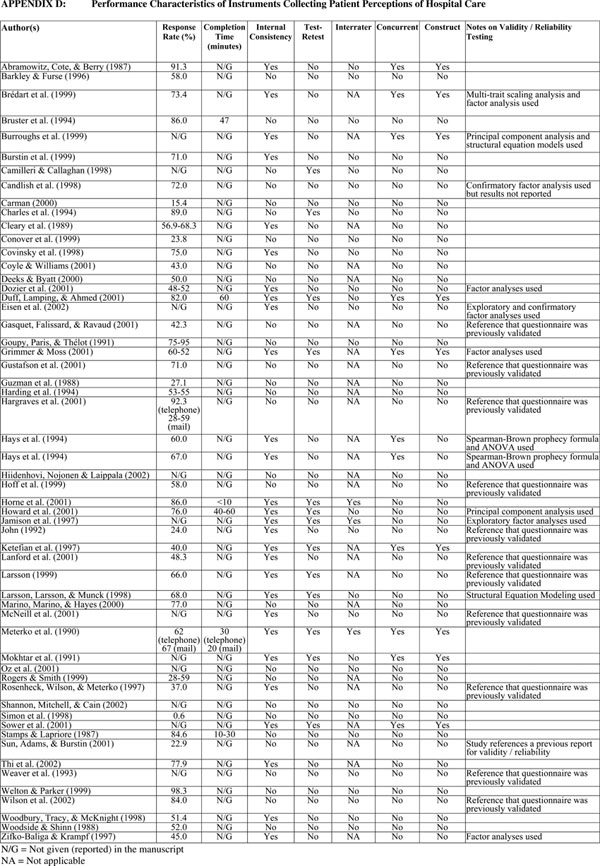

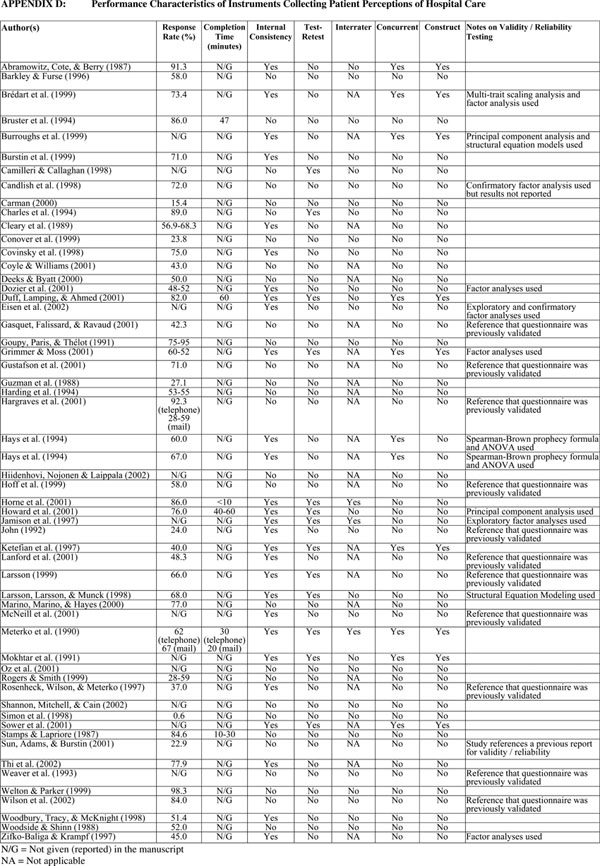

Eight literature databases were searched (PubMED, MEDLINE Pro, MEDSCAPE, MEDLINEplus, MDX Health, CINAHL, ERIC, and JSTOR). We undertook 51 searches with each of the eight databases, for a total of 408 searches. The abstracts for each of the identified publications were examined to determine their applicability for review.

Methods of Analysis

For each instrument used to collect information on patient perceptions of hospital care, we provide descriptive information, instrument content, implementation characteristics, and psychometric performance characteristics.

Principal Findings

The number of institutional settings and patients used in evaluating patient perceptions of hospital care varied greatly. The majority of survey instruments were administered by mail. Response rates varied widely from very low to relatively high. Most studies provided limited information on the psychometric properties of the instruments.

Conclusions

Our review reveals a diversity of survey instruments used in assessing patient perceptions of hospital care. We conclude that it would be beneficial to use a standardized survey instrument, along with standardization of the sampling, administration protocol, and mode of administration.

Keywords: Patient reports of hospital care, patient satisfaction instruments, hospital quality, patient care

Patient evaluations of hospital care can be useful to payers, regulatory bodies, accrediting agencies, hospitals, and consumers. All of these parties can use this information to gauge the quality of hospital care from the patients' perspective (Marino, Marino, and Hayes 2000). Hospitals can use it to identify specific areas for improvement, to inform strategic decision making (Sower et al. 2001), to manage patients' expectations (Hickey et al. 1996), and to benchmark their performance (Dull, Lansky, and Davis 1994). Ultimately, the reporting of patient evaluations can influence the delivery of care (Howard et al. 2001).

Many of the benefits of measuring and reporting patient evaluations of hospital care result from using standardized performance information. Clearly, adequate comparisons across hospitals require each facility to measure and report the same information. As described elsewhere in this issue (Goldstein et al. 2005), systematic efforts are underway by the Centers for Medicare and Medicaid Services (CMS) to make standardized performance information on hospitals publicly available. As part of the background for this effort, we reviewed the existing literature on survey instruments used to collect data on patients' perceptions of hospital care. We describe and compare the format, content, and administration issues associated with these previously used survey instruments.

METHODS

Literature Search

We searched the PubMED, MEDLINE Pro, MEDSCAPE, MEDLINEplus, MDX Health, CINAHL (Cumulative Index to Nursing and Allied Health Literature), ERIC, and JSTOR databases using combinations of key words. We limited the searches to articles in English that had abstracts. Searches returning more than 250 articles were further filtered using terms such as “questionnaire” and “hospital.” We undertook 51 searches in each of the eight databases, for a total of 408 searches.

After the searches were conducted, the abstracts of the returned articles were examined to determine their applicability for review. Relevant studies were defined liberally as those that included any discussion of perceptions of hospital care, and articles that included a survey instrument were included in the analyses. When more than one article reported using the same survey instrument, all of these articles were included; we did not restrict the review to one article per survey instrument, because multiple articles provided more information on the instruments, such as response rates and psychometric properties.

Analyses

We identified articles that included a patient survey of hospital care for further examination. We also consulted several survey development texts (Krowinski and Steiber 1996; Cohen-Mansfield, Ejaz, and Werner 2000) to construct our approach for characterizing the hospital survey instruments.

These texts describe how to develop the content of a survey instrument, how to address the implementation issues involved in producing a usable survey, and how to assess the instrument's performance. To characterize hospital survey instruments, we followed these same general steps. First, we provide some basic information, including the name of the instrument. Second, the contents of the instruments are presented, including the number of domains used. Third, implementation characteristics associated with conducting the surveys are presented, including the sample size per facility. Fourth, performance characteristics of the instruments are presented, including the response rates and psychometric properties.

Descriptive Information

We first identified the study author(s) and the name of the survey instrument developed (if any). Some instruments were modified from preexisting instruments, or were amalgams of preexisting instruments. Details on the origins/modifications of the survey instrument are given. The setting includes the number and type of hospitals in which the study was conducted. We also identified the type of respondent from whom the instrument was designed to collect data: patients, family, or staff. The number of respondents in the study is also provided.

Instrument Content

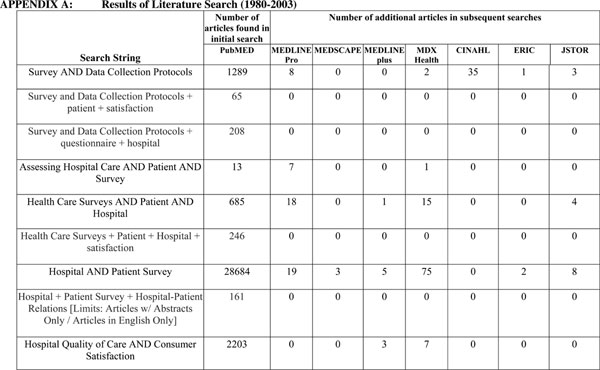

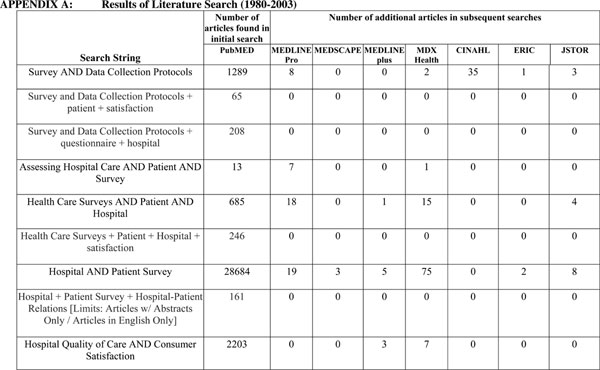

Second, the contents of the survey instruments are further described. We note the number of items in the instrument, excluding demographic and other background questions. Patient survey instruments often classify “like” questions together; for example, capabilities of staff, staff politeness, and the caring nature of staff might be sorted into a staff “bucket” or category. These similar questions are generally referred to as “domains.” We present the number of domains included in each instrument.
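As an illustration of how items grouped into a domain can be summarized, the Python sketch below averages one respondent's answers within each domain. The item names, the two domains, the 1–5 evaluation scale, and the choice of a simple mean of answered items are all assumptions for illustration; the instruments reviewed here do not necessarily score their domains this way.

```python
from statistics import mean
from typing import Dict, List, Optional

# Hypothetical item-to-domain mapping; item names are illustrative only and
# are not taken from any instrument in this review.
DOMAINS: Dict[str, List[str]] = {
    "staff": ["staff_capability", "staff_politeness", "staff_caring"],
    "food": ["food_quality", "food_temperature"],
}


def domain_scores(responses: Dict[str, Optional[int]],
                  domains: Dict[str, List[str]] = DOMAINS) -> Dict[str, Optional[float]]:
    """Average a respondent's answered items within each domain.

    Skipped items (None or absent) are ignored; a domain with no answered
    items receives a score of None.
    """
    scores: Dict[str, Optional[float]] = {}
    for domain, items in domains.items():
        answered = [responses[i] for i in items if responses.get(i) is not None]
        scores[domain] = mean(answered) if answered else None
    return scores


# One respondent on an assumed 1-5 evaluation scale (poor ... excellent).
print(domain_scores({"staff_capability": 5, "staff_politeness": 4,
                     "staff_caring": None, "food_quality": 2}))
```

Averaging only answered items keeps a single skipped question from discarding the whole domain, at the cost of scores that rest on different numbers of items for different respondents.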

In addition, we present the types of domains included in each survey instrument. We also present the type of rating scale used in the instruments (Krowinski and Steiber 1996), categorizing each response scale by whether it is open-ended or close-ended, by the number of close-ended response options (dichotomous or multiple categories), and by the nature of the scale. Scales were classified as evaluation (e.g., poor, fair, good, very good, excellent), frequency (e.g., none of the time to all of the time), satisfaction (e.g., very satisfied to very dissatisfied), visual analog, or Chernoff face formats. A visual analog format (also called graphic scaling) is a pictorial scale that usually has some implied interval value (e.g., a scale from 0 to 10). Chernoff faces are a pictorial representation using faces that range from smiling to frowning.

Implementation Characteristics

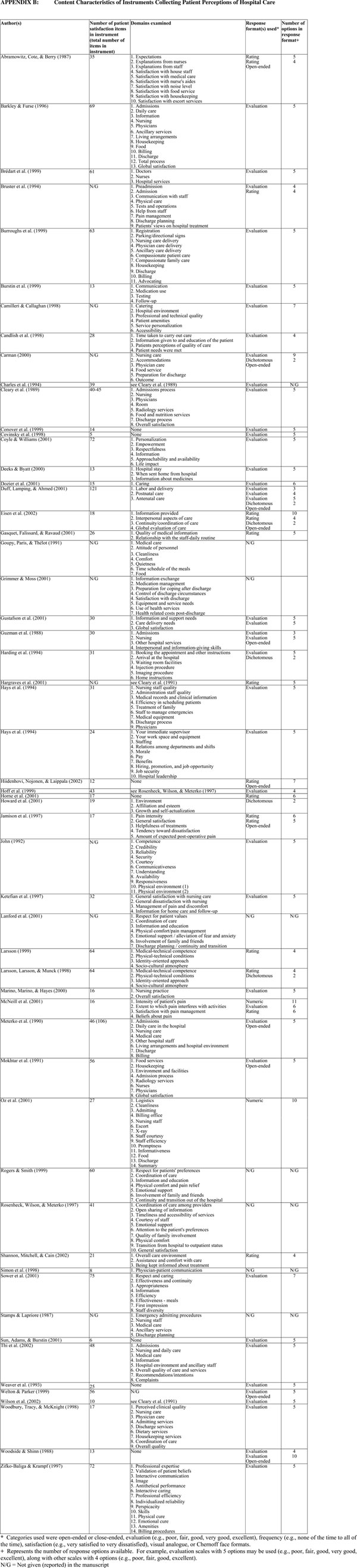

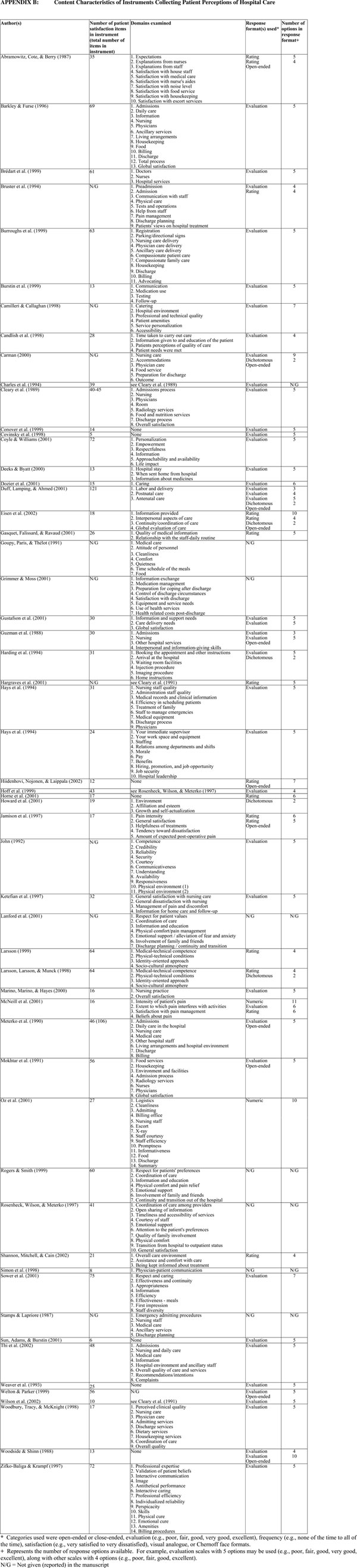

Third, we present characteristics of how the survey instrument was used, that is, its implementation characteristics. We note whether the study reports when the instrument was given (or mailed) to respondents (e.g., 2 days after discharge). Survey initiatives can also differ in the target sample size of respondents per facility (or unit), and we record these target sample sizes. We also report whether the survey was administered by in-person interview, telephone, mail, or drop-box.

In some cases, specific sample inclusions are given—for example, including only persons 18 years and older. These sample inclusions are also noted. In addition, in some cases sample restrictions are made—for example, excluding patients receiving hospice services. We record whether any such restrictions are made.

Performance Characteristics

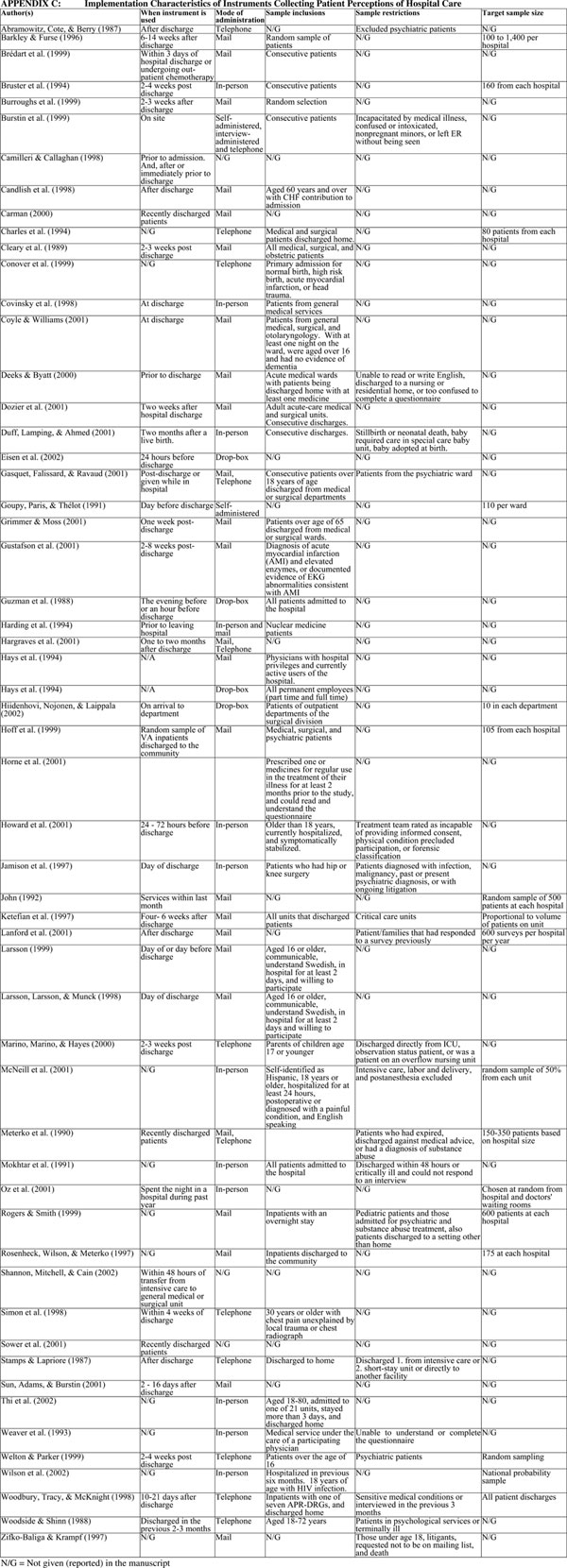

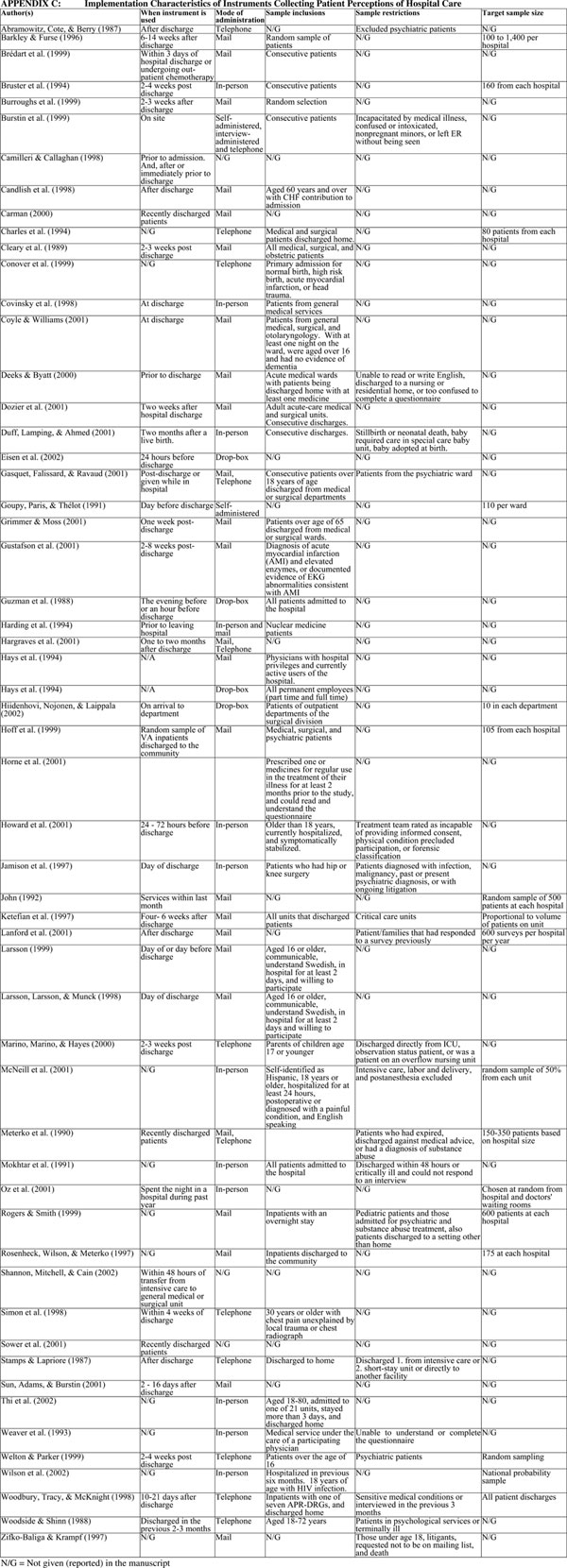

Fourth, we document the performance characteristics of the survey instruments, including response rates and whether the studies report information on reliability (internal consistency, test–retest, and interrater) and validity (concurrent and construct).

We also provide information on the time needed to complete the instrument and on further psychometric properties of the instruments. In the interest of space, we do not report the actual levels of reliability and validity achieved for each instrument, instrument domain, or individual question. Rather, we report whether the reliability or validity of the instrument was evaluated (yes or no). We do, however, note any unusual results (e.g., poor performance), the analyses used (e.g., factor analysis), and any other instrument assessment that was undertaken.
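Internal consistency is commonly summarized with Cronbach's alpha, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. The review does not state which statistic each study used, so the Python sketch below is only an assumed illustration of that one common measure. It expects complete data (no missing responses) laid out as one list of scores per item.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(item_scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a k-item scale.

    item_scores[i][r] is respondent r's answer to item i. Every item must
    cover the same respondents, with no missing values.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(item_scores[0])
    if n < 2 or any(len(item) != n for item in item_scores):
        raise ValueError("items need equal lengths and at least two respondents")
    # Each respondent's total score across all k items.
    totals = [sum(item[r] for item in item_scores) for r in range(n)]
    # Sample variances throughout; the n - 1 factors cancel in the ratio.
    sum_item_var = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - sum_item_var / variance(totals))


print(cronbach_alpha([[1, 2, 3, 4], [1, 2, 3, 4]]))  # two identical items
```

Two perfectly parallel items give α = 1 and two uncorrelated items give α = 0; values in between reflect how strongly the items covary relative to their individual variability.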

RESULTS

The key words and results for the first nine key word searches are summarized in the on-line Appendix Table A. The first numeric column of this table shows the number of articles identified in the PubMED database; for example, 1,289 articles were identified in PubMED using the search term “survey and data collection protocols.” Subsequent columns show the number of additional articles identified in each of the other databases; for example, the same search term identified eight additional articles in MEDLINE Pro. This literature search identified 246 articles, all of whose abstracts were reviewed. From these 246 abstracts, 84 full-length articles were examined, of which 59 presented sufficient information to be included in this review.

Descriptive Information

The descriptive characteristics of the survey instruments are shown in Table 1. The study settings are diverse, ranging from single hospitals to a system of 135 medical centers. The studies are also geographically diverse, coming from many regions of the U.S., Canada, Europe, Australia, and the Middle East. Likewise, the number of respondents included in these studies varied widely, from 70 to approximately 25,000. Most studies used patients as respondents, although a few assessed family members or caregivers. Twenty-six studies used mail surveys, 13 used telephone interviews, four used drop-boxes, and 12 used in-person interviews.

Table 1.

Descriptive Characteristics of Instruments Collecting Patient Perceptions of Hospital Care

| Author(s) | Name of Instrument | Origins or Modification of Instrument | Setting | Respondent | Number of Respondents in Study |
|---|---|---|---|---|---|
| Abramowitz, Cote, and Berry (1987) | None | | Teaching hospital with 900 beds | Patient | 841 |
| Barkley and Furse (1996) | NCG patient viewpoint survey | | 76 medium to large, nonprofit, community and teaching hospitals | Patient | 19,556 |
| Brédart et al. (1999) | Comprehensive assessment of satisfaction with care | | Oncology institute in Italy | Patient | 290 |
| Bruster et al. (1994) | Patient's charter | | 36 hospitals in England | Patients | 5,150 |
| Burroughs et al. (1999) | None | | One health system | Patient | 7,083 |
| Burstin et al. (1999) | None | | Five urban teaching hospital emergency departments | Patient | 3,719 |
| Camilleri and Callaghan (1998) | None | Questionnaire design was based on SERVQUAL | Private and public hospitals in Malta | Patient | N/G |
| Candlish et al. (1998) | Patient needs questionnaire | | Two hospitals in Australia | Patient | 148 |
| Carman (2000) | None | Dimensions reliable in previous studies | One hospital | Patient | 298 |
| Charles et al. (1994) | None | Adapted from Cleary et al. (1991) | 57 public acute care hospitals (Canada) | Patient | 4,599 |
| Cleary et al. (1989) | None | | Brigham and Women's Hospital | Patient | 598 |
| Conover et al. (1999) | None | Medical Outcomes Study questions | 13 hospitals in Tennessee and 10 hospitals in North Carolina | Patient | 1,691 |
| Covinsky et al. (1998) | None | Adapted from Ware and Hays (1988) | University Hospitals of Cleveland | Patient | 445 |
| Coyle and Williams (2001) | None | | Major teaching hospital in Scotland | Patient | 97 |
| Deeks and Byatt (2000) | None | | Teaching hospital (U.K.) | Patient | 152 |
| Dozier et al. (2001) | Patient perception of hospital experience with nursing | | Ten hospitals | Patient | 1,148 |
| Duff, Lamping, and Ahmed (2001) | Bangladeshi women's experience of maternity services | | Four hospitals in London (U.K.) | Patient | 136 |
| Eisen et al. (2002) | Perceptions of care (PoC) survey | | 14 inpatient behavioral health and substance abuse programs | Patient | 6,972 |
| Gasquet, Falissard, and Ravaud (2001) | None | | Public teaching, short-stay hospital for adults (Paris, France) | Patient | 482 |
| Goupy et al. (1991) | None | | Eight hospitals in France | Patient | 7,066 |
| Grimmer and Moss (2001) | Prescriptions, ready to re-enter community, education, placement, assurance of safety, realistic expectations, empowerment, directed to appropriate services (PREPARED) | | One large tertiary public hospital in Adelaide (Australia) | Patient, caregiver | 500 (patient), 431 (caregiver) |
| Gustafson et al. (2001) | None | | Three community hospitals | Patient | 91 |
| Guzman et al. (1988) | The patient satisfaction questionnaire | | One 150-bed, not-for-profit, community and teaching hospital | Patient (or representative) | 2,156 |
| Harding et al. (1994) | None | | One hospital | Patient | 200 |
| Hargraves et al. (2001) | None | Adapted from Cleary et al. (1991) | 22 regional hospitals and 51 in a health system in one state | Patients | 12,726 (regional), 12,680 (state) |
| Hays et al. (1994) | Short-form physician judgment system questionnaire | | 44 hospitals owned by the Hospital Corporation of America | Physician | 3,435 |
| Hays et al. (1994) | Short-form employee system questionnaire | | 44 hospitals owned by the Hospital Corporation of America | Employees | 17,315 |
| Hiidenhovi, Nojonen, and Laippala (2002) | None | | One hospital in Finland | Patients | 7,679 |
| Hoff et al. (1999) | None | Picker Institute questions | VA medical centers | Patients | 38,789 |
| Horne et al. (2001) | Satisfaction with information about medicines scale | | Hospitals in London and Brighton (U.K.) | Patient | |
| Howard et al. (2001) | Kentucky consumer satisfaction instrument | | Public psychiatric hospital | Patient | 189 |
| Jamison et al. (1997) | Patient discharge questionnaire | | University-based tertiary hospital | Patient | 119 |
| John (1992) | None | | Three hospitals | Patient | 353 |
| Ketefian et al. (1997) | None | | One medical center | Patient | 619 |
| Lanford et al. (2001) | Picker Institute Pediatric Inpatient survey | | 20 hospitals | Family | 4,872 (year 1), 4,518 (year 2) |
| Larsson (1999) | Quality of care from the patient's perspective | | Three county Swedish hospitals | Patient | 1,056 |
| Larsson, Larsson, and Munck (1998) | Quality of care from the patient's perspective | | Swedish hospital | Patient | 611 |
| Marino, Marino, and Hayes (2000) | None | | One hospital | Family | 3,676 |
| McNeill et al. (2001) | American Pain Society patient outcome questionnaire | | One 400-bed regional hospital | Patient | 104 |
| Meterko, Nelson, and Rubin (1990) | Patient judgments of hospital quality (PJHQ) questionnaire | | Ten hospitals | Patient | 1,367 |
| Mokhtar et al. (1991) | None | | One general hospital in Kuwait | Patient | 493 |
| Oz et al. (2001) | None | | 11 hospitals within 60 miles of NYC | Patient | 261 |
| Rogers and Smith (1999) | Picker-Commonwealth survey of patient-centered care | | 50 hospitals in Massachusetts | Patient | 12,680 |
| Rosenheck, Wilson, and Meterko (1997) | None | Derived from Picker Institute | 135 Veterans Administration medical centers | Patient | 4,968 |
| Shannon, Mitchell, and Cain (2002) | Medicus viewpoint | | 25 critical care units in 14 hospitals | Patients, nurses, and physicians | 489 (patients), 518 (nurses), 515 (physicians) |
| Simon et al. (1998) | Picker-Commonwealth survey of patient-centered care | Physician–patient communication questions | Brigham and Women's Hospital | Patient | 637 |
| Sower et al. (2001) | Key quality characteristics assessment for hospitals scale | | 3 hospitals | Patient | 663 |
| Stamps and Lapriore (1987) | None | | Small community hospital (72 beds) | Patient | 130 |
| Thi et al. (2002) | Patient judgments of hospital quality questionnaire | | One hospital in France | Patient | 533 |
| Weaver et al. (1993) | Physicians' humanistic behaviors questionnaire | | One hospital | Patient | 119 |
| Welton and Parker (1999) | None | | One hospital | Patient | 1,008 |
| Wilson et al. (2002) | None | Adapted questions from Picker survey | N/G | Patient | 1,074 |
| Woodbury, Tracy, and McKnight (1998) | Inpatient perceptions of quality questionnaire | Abridged version of long form used | 23 hospitals | Patient | 3,720 |
| Woodside and Shinn (1988) | None | | One hospital | Patient | 70 |
| Zifko-Baliga and Krampf (1997) | None | | Large Midwestern hospital | Patient | 529 |

Instrument Content

Summary characteristics of the content, implementation, and performance of the survey instruments are shown in Table 2, broken down by the major modes of survey administration (mail, telephone, drop-box, and in-person interview). The number of items included in the instruments ranged from eight to 121. On average, mail surveys asked the most questions (45) and drop-box surveys the fewest (16). Likewise, the number of domains ranged from one to 14, although the average number of domains was fairly consistent across modes of administration, ranging from five to eight.

Table 2.

Summary Statistics for Implementation, Content, and Performance Characteristics of Instruments Used to Collect Patient Perceptions of Hospital Care

| Survey Characteristic | Mail Surveys (N = 26 studies)* | Telephone Surveys (N = 13 studies)* | Drop Box (N = 4 studies)* | In-Person Interviews (N = 12 studies)* |
|---|---|---|---|---|
| Content characteristics | | | | |
| Average number of items (range) | 45 (15–72) | 23 (8–39) | 16 (12–30) | 33 (10–121) |
| Average number of domains (range) | 8 (1–14) | 5 (2–10) | 6 (4–10) | 7 (3–14) |
| Implementation characteristics | | | | |
| When administered, % of studies (N): less than 2 weeks postdischarge | 12% (3) | 0% (0) | On-site | On-site |
| When administered, % of studies (N): 2–4 weeks postdischarge | 12% (3) | 31% (4) | | |
| When administered, % of studies (N): more than 4 weeks postdischarge | 19% (5) | 15% (2) | | |
| Average target sample size (range) | 510 (100–1400) | 115 (80–150) | 10 (NA)† | 160 (NA) |
| Performance characteristics | | | | |
| Average response rate (range) | 47% (15–77) | 70% (24–91) | 63% (27–95) | 75% (53–84) |
| Psychometrics reported, % of studies (N): internal consistency | 54% (14) | 15% (2) | 75% (3) | 58% (7) |
| Psychometrics reported, % of studies (N): test–retest | 19% (5) | 8% (1) | 25% (1) | 33% (4) |
| Psychometrics reported, % of studies (N): interrater | NA | 0% (0) | 0% (0) | 8% (1) |
| Psychometrics reported, % of studies (N): concurrent validity | 19% (5) | 8% (1) | 50% (2) | 17% (2) |
| Psychometrics reported, % of studies (N): construct validity | 15% (4) | 8% (1) | 25% (1) | 17% (2) |

NA, not applicable.

*Eighty-four articles were reviewed and 59 were included in this review; we were unable to determine the mode of administration in three articles, and a further five articles used more than one mode of administration. Therefore, the numbers of studies cited in this table do not total 59.

†This information was given in only one study.

We identified various response formats, the most common being an evaluation-type format. The names of the domains and response formats are shown in the on-line Appendix Table B. Looking across studies, we found that the five most common domains were nursing, physicians, food, services, and care (not shown in the table).

Implementation Characteristics

The lag between discharge and mailing of the survey instrument varied from 1 week to 6 months; in 19 percent of the studies using mail surveys, the instrument was sent more than 4 weeks postdischarge. Telephone surveys had shorter lag times; among the studies for which data were available, most were conducted 2 to 4 weeks postdischarge. Most studies using drop-box surveys or in-person interviews were conducted on-site before patient discharge. Few studies provided a target sample size. Those that did gave target sample sizes ranging from 10 per department to 1,400 per hospital; the target sample size averaged 510 per hospital for mail surveys and 10 per hospital for drop-box surveys. Sample inclusions and exclusions are shown in the on-line Appendix Table C.

Performance Characteristics

Response rates varied widely; for example, one study had a 17 percent response rate and another a 92 percent response rate. The average response rate was 47 percent for mail surveys, 70 percent for telephone interviews, 63 percent for drop-box surveys, and 75 percent for in-person interviews. Most studies provided little information on instrument reliability or validity. For example, 54 percent of studies using mail surveys reported measures of internal consistency, but only 15 percent reported measures of construct validity.

More detailed information on the performance characteristics of the survey instruments, including completion time, reliability, and validity, is provided in the on-line Appendix Table D. Few studies reported the time needed to complete the instrument; in the six studies that did, completion time ranged from 10 to 60 minutes.

DISCUSSION

Prior reviews of the literature on patient perceptions of hospital care have cited the existence of relatively few survey instruments (e.g., Rubin 1990). In this review we examined 59 studies providing information on 54 different survey instruments, which provides some evidence that patient surveys addressing hospital care have become increasingly salient in recent years.

In examining these survey instruments, we provided details on descriptive information, instrument content, implementation characteristics, and performance characteristics. Below, we follow these same categories to critique the existing instruments and offer suggestions for future research.

Descriptive Information

The survey instruments varied greatly with respect to both the number of institutional settings in which they had been used and the number of patients to whom they had been administered (see Table 1). On the one hand, many survey instruments have been administered in only a few institutional settings and to a limited number of patients; on the other hand, we identified instruments that have been administered at hundreds of hospitals with thousands of patients. SERVQUAL, the Press Ganey Associates instrument, and the Picker questionnaires are notable examples of survey instruments in the latter category.

Instrument Content

A variety of domains of patient perceptions are represented (see Table 2 and on-line Appendix Table B). In some cases this is because survey instruments were developed for very specific purposes (e.g., for use in the ER). The more general instruments measuring patient perceptions of hospital care did share common domains: nursing, physicians, food, services, and care. However, these domains differ in the level of detail of their questions and in their number of items. This divergence in emphasis may arise because many instruments were developed using expert opinion rather than patient input. Expert opinion is often confounded with clinical measures of care quality (Oermann and Templin 2000) and does not necessarily correspond with patients' evaluations of care quality. Indeed, of the 54 different survey instruments we examined, 13 (24 percent) were developed using expert opinion, six (11 percent) used patient input, seven (13 percent) used both expert opinion and patient input, and for 28 (52 percent) we could not determine how they were developed.

In future questionnaire development, consulting studies that have examined patients' evaluations of care may be useful. The Institute of Medicine's (IOM 1999) nine domains of care were developed from patient input and can provide useful guidelines for survey-item development. These nine domains are: respect for patients' values; attention to patients' preferences and expressed needs; coordination and integration of care; information, communication, and education; physical comfort; emotional support; involvement of family and friends; transition and continuity; and access to care. The CAHPS Hospital Survey domains (nurse communication, nursing services, doctor communication, physical environment, pain control, communication about medicines, and discharge information) were derived from the IOM domains (Goldstein et al. 2005). Because they are derived from patient input, these domains may be influenced by cultural factors and may not apply to settings outside the U.S. For example, a recent adaptation of the CAHPS Hospital Survey for use in Dutch hospitals modified some items (e.g., the race/ethnicity questions) and added others (Arah et al. 2005).

It was not surprising that we identified survey instruments developed for very specific purposes (e.g., for use in the ER [Burstin et al. 1999], nuclear medicine [Harding et al. 1994], psychiatric care [Eisen et al. 2002], oncology [Brédart et al. 1999], and critical care [Conover et al. 1999]). General instruments may not be specific enough to identify areas for quality improvement in every hospital department. Longer instruments can be advantageous because they provide more detailed information to departments, but there are limits on how many questions can be included in a survey instrument before response rates are adversely affected. An alternative to lengthening instruments is to use a brief core set of questions followed by a series of specific questions relevant to individual departments. States and accreditation bodies could use the core instrument to assess perceptions of care in the aggregate, and facilities could use the more specific items for quality improvement. However, this would require a more sophisticated targeting approach to ensure that each patient receives the correct department-specific instrument.
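The core-plus-module design described above can be sketched as follows. Everything in this Python example is hypothetical: the item identifiers, the department names, and the rule that patients from a department without a module receive only the core items. Only the core items feed aggregate, cross-hospital reporting, while the module items remain available to the facility.

```python
from typing import Dict, List

# Hypothetical item identifiers; not taken from any instrument in this review.
CORE_ITEMS: List[str] = ["core_overall_rating", "core_nurse_communication",
                         "core_doctor_communication"]
DEPARTMENT_MODULES: Dict[str, List[str]] = {
    "emergency": ["er_wait_time", "er_triage_explained"],
    "oncology": ["onc_side_effect_info", "onc_emotional_support"],
}


def build_instrument(department: str) -> List[str]:
    """Core items first, then the module for the patient's department.

    Patients from a department without a module receive the core items only.
    Concatenation builds a new list, so CORE_ITEMS is never modified.
    """
    return CORE_ITEMS + DEPARTMENT_MODULES.get(department, [])


def core_answers(answers: Dict[str, int]) -> Dict[str, int]:
    """Keep only core items, e.g., for aggregate reporting across hospitals."""
    core = set(CORE_ITEMS)
    return {item: value for item, value in answers.items() if item in core}


print(build_instrument("oncology"))
print(core_answers({"core_overall_rating": 9, "onc_side_effect_info": 3}))
```

The targeting burden the text mentions sits in the `department` argument: the sketch assumes each patient's department is known reliably at the time the survey is assembled.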

Implementation Characteristics

Instruments measuring patient perceptions of hospital care were administered by mail, telephone, or in-person interview, or were collected by drop-box (see Table 2 and on-line Appendix Table C). The majority of survey instruments were administered by mail. No web-based patient surveys were identified.

No agreement on when the instruments should be administered was evident. Many instruments were mailed months after patient discharge, which may reflect limitations of the hospital administrative databases used to construct the mailing lists. Still, a long lag raises the potential for recall bias: over time, patients' ability to reliably remember their hospital care may decline (Krowinski and Steiber 1996). For example, Ley et al. (1976) found ratings of care to be less positive at 8 weeks than at 2 weeks. However, we cannot simply conclude that a shorter lag time is better. If patients' perceptions become more or less negative as time passes, this does not necessarily mean that they are based on less reliable recollections. Recollections may be just as accurate, but the features of care that patients regard as important may change over time. Additional time postdischarge may also give patients more information to consider (e.g., regarding coordination of care and/or success of treatment) by the time they are asked to evaluate their care. In these cases, it would be reasonable for patients' evaluations to reflect this new information, and differences in evaluations associated with the passage of time may not reflect memory reliability at all.

Several studies found telephone interviews to be advantageous in terms of more rapid contact with patients and higher response rates (e.g., Woodside and Shinn 1988; Hargraves et al. 2001). However, surveys are also subject to social desirability bias, which leads to more positive assessments of care (Hays and Ware 1986). Social desirability bias may be more of a problem with telephone administration because it involves more direct contact, making it harder for respondents to feel anonymous. In addition, telephone interviews may cost more than mail surveys.

The length of the survey instruments varied widely. As discussed above, short, very general instruments may be less useful than longer, detailed instruments, but longer instruments carry more response burden and may lower response rates. Indeed, across the instruments in this review, we found a correlation of −.65 between response rate and number of questions.
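The text does not say which correlation coefficient was used. Assuming a Pearson product-moment correlation computed across studies (one pair of values per instrument: number of questions and response rate), a minimal Python sketch is shown below. A negative value such as −.65 means that instruments with more questions tended to have lower response rates. Because the mail surveys in Table 2 had both the most items on average (45) and the lowest average response rate (47 percent), part of this association may reflect mode of administration rather than length alone.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences.

    Raises ZeroDivisionError if either sequence has zero variance.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences of length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)
```

Usage would pair each study's item count with its response rate, e.g. `pearson_r(items_per_study, response_rates)`; on Python 3.10 or later, `statistics.correlation` computes the same quantity.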

Performance Characteristics

One limitation of surveys of patient perceptions of hospital care can be low response rates, which can yield results different from those obtained with high response rates (Barkley and Furse 1996). Our review of the literature identified both relatively high and relatively low response rates (see Table 2 and on-line Appendix Table D). Nonrespondents may have less favorable perceptions of care than respondents (Barkley and Furse 1996; Mazor et al. 2002; Elliott et al. 2005). However, studies often provided very little information on how response rates were calculated.
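Because the choice of denominator is rarely reported, the same fieldwork can yield quite different response rates. The Python sketch below uses invented counts for a hypothetical mail survey and a simplified definition (completed surveys divided by sampled patients minus those judged ineligible); formal schemes such as the AAPOR Standard Definitions distinguish several more refined rates.

```python
def response_rate(completed: int, sampled: int, ineligible: int = 0) -> float:
    """Completed surveys divided by the eligible sample (simplified definition).

    `ineligible` counts sampled patients later judged not to qualify
    (e.g., deceased, or outside the inclusion criteria). Whether and how a
    study removes them from the denominator is often not reported.
    """
    eligible = sampled - ineligible
    if min(completed, ineligible) < 0 or eligible <= 0 or completed > eligible:
        raise ValueError("inconsistent counts")
    return completed / eligible


# Hypothetical mail survey: 1,000 patients sampled, 400 questionnaires returned.
print(f"{response_rate(400, 1000):.0%}")       # prints 40% (no one removed)
print(f"{response_rate(400, 1000, 200):.0%}")  # prints 50% (200 judged ineligible)
```

The ten-point gap comes entirely from the denominator, which is why reporting how a response rate was calculated matters as much as the rate itself.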

A related issue is the representativeness of the patients selected to receive a survey instrument. In some of the studies reviewed, the sampling criteria appear to have been biased (e.g., including only patients hospitalized for 3 days or more). In others, the sampling criteria may have been appropriate, but the precision of estimates and the power to detect differences were limited by small sample sizes. Few studies reported whether the sample was large enough to yield reasonably accurate point estimates or to detect meaningful differences between units of interest at a given point in time. In addition, as Ehnfors and Smedby (1993) report, such sampling problems can greatly influence survey results.

We identified few articles reporting extensive psychometric properties (see Table 2 and on-line Appendix Table D), and many studies did not report even basic psychometric properties. This matters because poor survey instruments “… act as a form of censorship imposed on patients. They give misleading results, limit the opportunity of patients to express their concerns about different aspects of care, and can encourage professionals to believe that patients are satisfied when they are highly discontented” (Whitfield and Baker 1992, p. 152).
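To make the precision concern concrete, the Python sketch below uses the usual normal-approximation formulas for a proportion estimated from a simple random sample: n = z²p(1 − p)/e² completed responses for a margin of error e, and e = z√(p(1 − p)/n) for a given n. The assumptions (simple random sampling, 95 percent confidence with z = 1.96, the conservative p = 0.5, and no finite population correction) are ours, not those of the reviewed studies.

```python
from math import ceil, sqrt

Z_95 = 1.96  # assumed: two-sided 95 percent confidence


def required_sample_size(margin: float, p: float = 0.5, z: float = Z_95) -> int:
    """Completed responses needed so a proportion's margin of error is <= margin.

    Assumes simple random sampling; ignores the finite population correction.
    """
    if not 0 < margin < 1 or not 0 < p < 1:
        raise ValueError("margin and p must lie strictly between 0 and 1")
    return ceil(z ** 2 * p * (1 - p) / margin ** 2)


def margin_of_error(n: int, p: float = 0.5, z: float = Z_95) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    return z * sqrt(p * (1 - p) / n)


print(required_sample_size(0.05))      # ±5 points
print(round(margin_of_error(115), 3))  # average telephone target in Table 2
```

Under these assumptions, a margin of ±5 percentage points requires 385 completed responses. If the average telephone target of 115 in Table 2 refers to completed responses, it gives a margin of roughly ±9 percentage points; if it refers to patients sampled, precision would be lower still once nonresponse is taken into account. Detecting a difference between two hospitals or units requires larger samples again.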

CONCLUSION

The plethora of survey instruments measuring patient perceptions of hospital care is heartening, but the advantages of a standardized core instrument cannot be realized when many different instruments are used; for example, benchmarking and report cards that facilitate consumer choice may be impeded. Our review clearly shows a variety of approaches to the instrument domains, how they are measured, and when perceptions of care are elicited. We conclude that a standardized instrument would be beneficial. Our results also suggest that it may be beneficial to standardize the sampling, administration protocol, and mode of administration of survey instruments.

SUPPLEMENTARY MATERIAL

The following supplementary material for this article is available online:

- Results of Literature Search (1980–2003).
- Content Characteristics of Instruments Collecting Patient Perceptions of Hospital Care.
- Implementation Characteristics of Instruments Collecting Patient Perceptions of Hospital Care.
- Performance Characteristics of Instruments Collecting Patient Perceptions of Hospital Care.

Acknowledgments

This work was supported by grant number 5 U18 HS00924 from the Agency for Healthcare Research and Quality.

REFERENCES

- Abramowitz S, Cote AA, Berry E. Analyzing Patient Satisfaction: A Multianalytic Approach. Quality Review Bulletin. 1987;13:122–30. doi: 10.1016/s0097-5990(16)30118-x.

- Applebaum RA, Straker JK, Geron SM. Assessing Satisfaction in Health and Long-Term Care: Practical Approaches to Hearing the Voices of Consumers. New York: Springer Publishing Company; 2000.

- Arnetz JE, Arnetz BB. The Development and Application of a Patient Satisfaction Measurement System for Hospital-Wide Quality Improvement. International Journal for Quality in Health Care. 1996;8:555–66. doi: 10.1093/intqhc/8.6.555.

- Arah OA, Asbroek GH, Delnoij DM, de Koning JS, Stam P, Poll A, Vriens B, Schmidt P, Klazinga NS. Psychometric Properties of the Dutch Version of the Hospital-Level Consumer Assessment of Health Plans Study (CAHPS) Instrument. Health Services Research. 2005. doi: 10.1111/j.1475-6773.2005.00462.x.

- Barkley WM, Furse DH. Changing Priorities for Improvement: The Impact of Low Response Rates in Patient Satisfaction. Joint Commission Journal on Quality Improvement. 1996;22:427–33. doi: 10.1016/s1070-3241(16)30245-0.

- Bell R, Krivich MJ, Boyd MS. Charting Patient Satisfaction. Marketing Health Services. 1997;17:22–9.

- Brédart A, Razavi D, Robertson C, Brignone S, Fonzo D, Petit JY, de Haes JM. Timing of Patient Satisfaction Assessment: Effect on Questionnaire Acceptability, Completeness of Data, Reliability and Variability of Scores. Patient Education and Counseling. 2002;46:131–6. doi: 10.1016/s0738-3991(01)00152-5.

- Brédart A, Razavi D, Robertson C, Didier F, Scaffidi E, de Haes JM. A Comprehensive Assessment of Satisfaction with Care: Preliminary Psychometric Analysis in an Oncology Institute in Italy. Annals of Oncology. 1999;10:839–46. doi: 10.1023/a:1008393226195.

- Bruster S, Jarman B, Bosanquet N, Weston D, Erens R, Delbanco TL. National Survey of Hospital Patients. British Medical Journal. 1994;309:1542–6. doi: 10.1136/bmj.309.6968.1542.

- Burroughs TE, Davies AR, Cira JC, Dunagan WC. Understanding Patient Willingness to Recommend and Return: A Strategy for Prioritizing Improvement Opportunities. Joint Commission Journal on Quality Improvement. 1999;25:271–87. doi: 10.1016/s1070-3241(16)30444-8.

- Burstin HR, Conn A, Setnik G, Rucker DW, Cleary PD, O'Neil AC, Orav EJ, Sox CM, Brennan TA, and the Harvard Emergency Department Quality Study Investigators. Benchmarking and Quality Improvement: The Harvard Emergency Department Quality Study. American Journal of Medicine. 1999;107:437–49. doi: 10.1016/s0002-9343(99)00269-7.

- Camilleri D, O'Callaghan M. Comparing Public and Private Hospital Care Service Quality. International Journal of Health Care Quality Assurance. 1998;11:127–33. doi: 10.1108/09526869810216052.

- Candlish P, Watts P, Redman S, Whyte P, Lowe J. Elderly Patients with Heart Failure: A Study of Satisfaction with Care and Quality of Life. International Journal for Quality in Health Care. 1998;10:141–6. doi: 10.1093/intqhc/10.2.141.

- Carman JM. Patient Perceptions of Service Quality: Combining the Dimensions. Journal of Management in Medicine. 2000;14:339–56. doi: 10.1108/02689230010363061.

- Charles C, Gauld M, Chambers L, O'Brien B, Haynes RB, Labelle R. How Was Your Hospital Stay? Patients' Reports about Their Care in Canadian Hospitals. Canadian Medical Association Journal. 1994;150:1813–22.

- Chou S, Boldy D. Patient Perceived Quality of Care in Hospital in the Context of Clinical Pathways: Development of Approach. Journal of Quality in Clinical Practice. 1999;19:89–93. doi: 10.1046/j.1440-1762.1999.00307.x.

- Cleary PD, Edgman-Levitan S, Roberts M, Moloney TW, McMullen W, Walker JD, Delbanco TL. Patients Evaluate Their Hospital Care: A National Survey. Health Affairs. 1991;10(4):254–67. doi: 10.1377/hlthaff.10.4.254.

- Cleary PD, Keroy L, Karapanos G, McMullen W. Patient Assessments of Hospital Care. Quality Review Bulletin. 1989;15:172–9. doi: 10.1016/s0097-5990(16)30288-3.

- Cohen G, Forbes J, Garraway M. Can Different Patient Satisfaction Survey Methods Yield Consistent Results? Comparison of Three Surveys. British Medical Journal. 1996;313:841–4. doi: 10.1136/bmj.313.7061.841.

- Cohen-Mansfield J, Ejaz F, Werner P. Satisfaction Surveys in Long-Term Care. New York: Springer Publishing Company, Inc; 2000.

- Conover CJ, Mah ML, Rankin PJ, Sloan FA. The Impact of TennCare on Patient Satisfaction with Care. American Journal of Managed Care. 1999;5:765–75.

- Coulter A, Cleary PD. Patients' Experiences with Hospital Care in Five Countries. Health Affairs. 2001;20:244–52. doi: 10.1377/hlthaff.20.3.244.

- Covinsky KE, Rosenthal GE, Chren M, Justice AC, Fortinsky RH, Palmer RM, Landefeld CS. The Relation between Health Status Changes and Patient Satisfaction in Older Hospitalized Medical Patients. Journal of General Internal Medicine. 1998;13:223–9. doi: 10.1046/j.1525-1497.1998.00071.x.

- Coyle J, Williams B. Valuing People as Individuals: Development of an Instrument through a Survey of Person-Centeredness in Secondary Care. Journal of Advanced Nursing. 2001;36:450–9. doi: 10.1046/j.1365-2648.2001.01993.x.

- Deeks PA, Byatt K. Are Patients Who Self-Administer Their Medicines in Hospital More Satisfied with Their Care? Journal of Advanced Nursing. 2000;31:395–400. doi: 10.1046/j.1365-2648.2000.01286.x.

- Dozier AM, Kitzman H, Ingersoll GL, Holmberg S, Schultz AW. Development of an Instrument to Measure Patient Perception of the Quality of Nursing Care. Research in Nursing and Health. 2001;24:506–17. doi: 10.1002/nur.10007.

- Draper M, Cohen P, Buchan H. Seeking Consumer Views: What Use Are Results of Hospital Patient Satisfaction Surveys? International Journal for Quality in Health Care. 2001;13:463–8. doi: 10.1093/intqhc/13.6.463.

- Duff L, Lamping D, Ahmed L. Evaluating Satisfaction with Maternity Care in Women from Minority Ethnic Communities: Development and Validation of a Sylheti Questionnaire. International Journal for Quality in Health Care. 2001;13:215–30. doi: 10.1093/intqhc/13.3.215.

- Dull VT, Lansky D, Davis N. Evaluating a Patient Satisfaction Survey for Maximum Benefit. Joint Commission Journal on Quality Improvement. 1994;20:444–53. doi: 10.1016/s1070-3241(16)30089-x.

- Ehnfors M, Smedby B. Patient Satisfaction Surveys Subsequent to Hospital Care: Problems of Sampling, Non-Response and Other Losses. Quality Assurance in Health Care. 1993;5:19–32. doi: 10.1093/intqhc/5.1.19.

- Eisen SV, Wilcox M, Idiculla T, Speredelozzi A, Dickey B. Assessing Consumer Perceptions of Inpatient Psychiatric Treatment: The Perceptions of Care Survey. Joint Commission Journal on Quality Improvement. 2002;28:510–26. doi: 10.1016/s1070-3241(02)28056-6.

- Elliott MN, Edwards C, Angeles J, Hambarsoomians K, Hays RD. Patterns of Unit and Item Non-Response in the CAHPS® Hospital Survey. Health Services Research. 2005. doi: 10.1111/j.1475-6773.2005.00476.x.

- Gasquet I, Falissard B, Ravaud P. Impact of Reminders and Method of Questionnaire Distribution on Patient Response to Mail-Back Satisfaction Survey. Journal of Clinical Epidemiology. 2001;54:1174–80. doi: 10.1016/s0895-4356(01)00387-0.

- Goldstein MS, Elliott SD, Guccione AA. The Development of an Instrument to Measure Satisfaction with Physical Therapy. Physical Therapy. 2000;80:853–63.

- Goldstein L, Farquhar MB, Crofton C, Garfinkel S, Darby C. Why Another Patient Survey of Hospital Care. Health Services Research. 2005. doi: 10.1111/j.1475-6773.2005.00477.x.

- Goupy F, Ruhlmann O, Paris O, Thélot B. Results of a Comparative Study of In-Patient Satisfaction in Eight Hospitals in the Paris Region. Quality Assurance in Health Care. 1991;3:309–15. doi: 10.1093/intqhc/3.4.309.

- Grimmer K, Moss J. The Development, Validity and Application of a New Instrument to Assess the Quality of Discharge Planning Activities from the Community Perspective. International Journal for Quality in Health Care. 2001;13:109–16. doi: 10.1093/intqhc/13.2.109.

- Gustafson DH, Arora NK, Nelson EC, Boberg EW. Increasing Understanding of Patient Needs during and after Hospitalization. Joint Commission Journal on Quality Improvement. 2001;27:81–92. doi: 10.1016/s1070-3241(01)27008-4.

- Guzman PM, Sliepcevich EM, Lacey EP, Vitello EM, Matten MR, Woehlke PL, Wright WR. Tapping Patient Satisfaction: A Strategy for Quality Assessment. Patient Education and Counseling. 1988;12:225–33. doi: 10.1016/0738-3991(88)90006-7.

- Hall MF. Patient Satisfaction or Acquiescence? Comparing Mail and Telephone Survey Results. Journal of Health Care Marketing. 1995;15:54–61.

- Harding LK, Griffith J, Harding VM, Tulley NJ, Notghi A, Thomson WH. Closing the Audit Loop: A Patient Satisfaction Survey. Nuclear Medicine Communications. 1994;15:275–8. doi: 10.1097/00006231-199404000-00158.

- Hargraves JL, Wilson IB, Zaslavsky A, James C, Walker JD, Rogers G, Cleary PD. Adjusting for Patient Characteristics When Analyzing Reports from Patients about Hospital Care. Medical Care. 2001;39:635–41. doi: 10.1097/00005650-200106000-00011.

- Hays RD, Larson C, Nelson EC, Batalden PB. Hospital Quality Trends: A Short-Form Patient-Based Measure. Medical Care. 1991;29(7):661–8.

- Hays RD, Nelson EC, Larson CO, Batalden PB. Short-Form Measures of Physician and Employee Judgments about Hospital Quality. Joint Commission Journal on Quality Improvement. 1994;20(2):66–77. doi: 10.1016/s1070-3241(16)30045-1.

- Hays R, Ware JE. Social Desirability and Patient Satisfaction Ratings. Medical Care. 1986;24:519–25. doi: 10.1097/00005650-198606000-00006.

- Hickey ML, Kleefield SF, Pearson SD, Hassan SM, Harding M, Haughie P, Lee TH, Brennan TA. Payer-Hospital Collaboration to Improve Patient Satisfaction with Hospital Discharge. Joint Commission Journal on Quality Improvement. 1996;22:336–44. doi: 10.1016/s1070-3241(16)30237-1.

- Hiidenhovi H, Laippala P, Nojonen K. Development of a Patient-Oriented Instrument to Measure Service Quality in Outpatient Departments. Journal of Advanced Nursing. 2001;34:696–705. doi: 10.1046/j.1365-2648.2001.01799.x.

- Hiidenhovi H, Nojonen K, Laippala P. Measurement of Outpatients' Views of Service Quality in a Finnish University Hospital. Journal of Advanced Nursing. 2002;38:59–67. doi: 10.1046/j.1365-2648.2002.02146.x.

- Hoff RA, Rosenheck RA, Meterko M, Wilson NJ. Mental Illness as a Predictor of Satisfaction with Inpatient Care at Veterans Affairs Hospitals. Psychiatric Services. 1999;50:680–5. doi: 10.1176/ps.50.5.680.

- Horne R, Hankins M, Jenkins R. The Satisfaction with Information about Medicines Scale SIMS: A New Measurement Tool for Audit and Research. Quality in Health Care. 2001;10:135–40. doi: 10.1136/qhc.0100135..

- Hoskins EJ, Noor FAA, Ghasib SAF. Implementing TQM in a Military Hospital in Saudi Arabia. Joint Commission Journal on Quality Improvement. 1994;20:454–64. doi: 10.1016/s1070-3241(16)30090-6.

- Howard PB, Clark JJ, Rayens MK, Hines-Martin V, Weaver P, Littrell R. Consumer Satisfaction with Services in a Regional Psychiatric Hospital: A Collaborative Research Project in Kentucky. Archives of Psychiatric Nursing. 2001;15:10–23. doi: 10.1053/apnu.2001.20577.

- Jamison RN, Ross MJ, Hoopman P, Griffin F, Levy J, Daly M, Schaffer JL. Assessment of Postoperative Pain Management: Patient Satisfaction and Perceived Helpfulness. Clinical Journal of Pain. 1997;13:229–36. doi: 10.1097/00002508-199709000-00008.

- Jenkinson C, Coulter A, Bruster S. The Picker Patient Experience Questionnaire: Development and Validation Using Data from In-Patient Surveys in Five Countries. International Journal for Quality in Health Care. 2002;14:353–8. doi: 10.1093/intqhc/14.5.353.

- Jenkinson C, Coulter A, Bruster S, Richards N, Chandola T. Patients' Experiences and Satisfaction with Health Care: Results of a Questionnaire Study of Specific Aspects of Care. Quality and Safety in Health Care. 2002;11:335–9. doi: 10.1136/qhc.11.4.335.

- John J. Getting Patients to Answer: What Affects Response Rates? Journal of Health Care Marketing. 1992;12:46–51.

- John J. Patient Satisfaction: The Impact of Past Experience. Journal of Health Care Marketing. 1992;12:56–64.

- Johnson TR. Family Matters: A Quality Initiative through the Patient's Eyes. Journal of Nursing Care Quality. 2000;14:64–71. doi: 10.1097/00001786-200004000-00008.

- Ketefian S, Redman R, Nash MG, Bogue EL. Inpatient and Ambulatory Patient Satisfaction with Nursing Care. Quality Management in Health Care. 1997;5:66–75.

- Krowinski WJ, Steiber SR. Measuring and Managing Patient Satisfaction. American Hospital Publishing Inc; 1996.

- Lanford A, Clausen R, Mulligan J, Hollenback C, Nelson S, Smith V. Measuring and Improving Patients' and Families' Perceptions of Care in a System of Pediatric Hospitals. Joint Commission Journal on Quality Improvement. 2001;27:415–29. doi: 10.1016/s1070-3241(01)27036-9.

- Larrabee JH, Bolden LV. Defining Patient-Perceived Quality of Nursing Care. Journal of Nursing Care Quality. 2001;16:34–60. doi: 10.1097/00001786-200110000-00005.

- Larsson BW. Patients' Views on Quality of Care: Age Effects and Identification of Patient Profiles. Journal of Clinical Nursing. 1999;8:693–700. doi: 10.1046/j.1365-2702.1999.00311.x.

- Larsson G, Larsson BW, Munck IME. Refinement of the Questionnaire “Quality of Care from the Patient's Perspective” Using Structural Equation Modelling. Scandinavian Journal of Caring Sciences. 1998;12:111–8.

- Ley P, Bradshaw PW, Kincey JA, Atherton ST. Increasing Patients' Satisfaction with Communications. British Journal of Social and Clinical Psychology. 1976;15:403–13. doi: 10.1111/j.2044-8260.1976.tb00052.x.

- Lehmann LS, Brancati FL, Chen MC, Roter D, Dobs AS. The Effect of Bedside Case Presentations on Patients' Perceptions of Their Medical Care. New England Journal of Medicine. 1997;336:1150–5. doi: 10.1056/NEJM199704173361606.

- Lohr KN, Donaldson MS, Walker AJ. Medicare: A Strategy for Quality Assurance, III: Beneficiary and Physician Focus Groups. Quality Review Bulletin. 1991;17:242–53. doi: 10.1016/s0097-5990(16)30464-x.

- Longo DR, Land G, Schramm W, Fraas J, Hoskins B, Howell V. Consumer Reports in Health Care: Do They Make a Difference in Patient Care? Journal of the American Medical Association. 1997;278:1579–84.

- Marino BL, Marino EK, Hayes JS. Parents' Report of Children's Hospital Care: What It Means for Your Practice. Pediatric Nursing. 2000;26:195–8.

- Mazor KM, Clauser BE, Field T, Yood RA, Gurwitz JH. A Demonstration of the Impact of Response Bias on the Results of Patient Satisfaction Surveys. Health Services Research. 2002;37:1403–17. doi: 10.1111/1475-6773.11194.

- McDaniel C, Nash JG. Compendium of Instruments Measuring Patient Satisfaction with Nursing Care. Quality Review Bulletin. 1990;16:182–8. doi: 10.1016/s0097-5990(16)30361-x.

- McNeill JA, Sherwood GD, Stark PL, Nieto B. Pain Management Outcomes for Hospitalized Hispanic Patients. Pain Management Nursing. 2001;2:25–36. doi: 10.1053/jpmn.2001.22039.

- Merakou K, Dalla-Vorgia P, Garania-Papadatos T, Kourea-Kremastinou J. Satisfying Patient's Rights. Nursing Ethics. 2001;8:499–509. doi: 10.1177/096973300100800604.

- Meterko M, Nelson E, Rubin H. Patient Judgments of Hospital Quality: Report of a Pilot Study. Medical Care. 1990;28:S1–56.

- Mishra DP, Singh J, Wood V. An Empirical Investigation of Two Competing Models of Patient Satisfaction. Journal of Ambulatory Care Marketing. 1991;4:17–36. doi: 10.1300/J273v04n02_02.

- Mokhtar SA, Guirguis W, Al-Torkey M, Khalaf A. Patient Satisfaction with Hospital Services: Development and Testing of a Measuring Instrument. Journal of the Egyptian Public Health Association. 1991;66:693–720.

- Oermann MH, Templin T. Important Attributes of Quality of Health Care: Consumer Perspectives. Journal of Nursing Scholarship. 2000;32:167–72. doi: 10.1111/j.1547-5069.2000.00167.x.

- Oz MC, Zikria J, Mutrie C, Slater JP, Scott C, Lehman S, Connolly MW, Asher DT, Ting W, Namerow PG. Patient Evaluation of the Hotel Function of Hospitals. The Heart Surgery Forum. 2001;4:166–71.

- Roberts JG, Tugwell P. Comparison of Questionnaires Determining Patient Satisfaction with Medical Care. Health Services Research. 1987;22:637–54.

- Rogers G, Smith DP. Reporting Comparative Results from Hospital Patient Surveys. International Journal for Quality in Health Care. 1999;11:251–9. doi: 10.1093/intqhc/11.3.251.

- Rosenheck R, Wilson N, Meterko M. Influence of Patient and Hospital Factors on Consumer Satisfaction with Inpatient Mental Health Treatment. Psychiatric Services. 1997;48:1553–61. doi: 10.1176/ps.48.12.1553.

- Rosenthal GE, Harper DL. Cleveland Health Quality Choice: A Model for Collaborative Community-Based Outcomes Assessment. Joint Commission Journal on Quality Improvement. 1994;20:425–42. doi: 10.1016/s1070-3241(16)30088-8.

- Rosenthal GE, Hammar PJ, Way LE, Shipley SA, Doner D, Wojtala B, Miller J, Harper DL. Using Hospital Performance Data in Quality Improvement: The Cleveland Health Quality Choice Experience. Joint Commission Journal on Quality Improvement. 1998;24:347–60. doi: 10.1016/s1070-3241(16)30386-8.

- Rubin HR. Patient Evaluations of Hospital Care: A Review of the Literature. Medical Care. 1990;28:S3–9. doi: 10.1097/00005650-199009001-00002.

- Shannon SE, Mitchell PH, Cain KC. Patients, Nurses, and Physicians Have Differing Views of Quality of Critical Care. Journal of Nursing Scholarship. 2002;34:173–9. doi: 10.1111/j.1547-5069.2002.00173.x.

- Simon SE, Patrick A. Understanding and Assessing Consumer Satisfaction in Rehabilitation. Journal of Rehabilitation Outcomes. 1997;1:1–14.

- Simon SR, Lee TH, Goldman L, McDonough AL, Pearson SD. Communication Problems for Patients Hospitalized with Chest Pain. Journal of General Internal Medicine. 1998;13:836–8. doi: 10.1046/j.1525-1497.1998.00247.x.

- Sower V, Duffy J, Kilbourne W, Kohers G, Jones P. The Dimensions of Service Quality for Hospitals: Development and Use of the KQCAH Scale. Health Care Management Review. 2001;26(2):47–59. doi: 10.1097/00004010-200104000-00005.

- Stamps PL, Lapriore EH. Measuring Patient Satisfaction in a Community Hospital. Hospital Topics. 1987;65:22–6. doi: 10.1080/00185868.1987.10543808.

- Stratmann WC, Zastowny TR, Bayer LR, Adams EH, Black GS, Fry PA. Patient Satisfaction Surveys and Multicollinearity. Quality Management in Health Care. 1994;2:1–12.

- Sun B, Adams J, Burstin H. Validating a Model of Patient Satisfaction with Emergency Care. Annals of Emergency Medicine. 2001;38:527–32. doi: 10.1067/mem.2001.119250.

- Thi PL, Briancon S, Empereur F, Guillemin F. Factors Determining Inpatient Satisfaction with Care. Social Science and Medicine. 2002;54:493–504. doi: 10.1016/s0277-9536(01)00045-4.

- Thomas LH, Bond S. Measuring Patient Satisfaction with Nursing. Journal of Advanced Nursing. 1996;23:747–56. doi: 10.1111/j.1365-2648.1996.tb00047.x.

- Ware JE, Berwick DM. Conclusions and Recommendations. Medical Care. 1990;28:S39–44.

- Ware JE, Hays RD. Methods for Measuring Patient Satisfaction with Specific Medical Encounters. Medical Care. 1988;26:393–402. doi: 10.1097/00005650-198804000-00008.

- Weaver MJ, Ow CL, Walker DJ, Degenhardt EF. A Questionnaire for Patients' Evaluations of Their Physicians' Humanistic Behaviors. Journal of General Internal Medicine. 1993;8:135–9. doi: 10.1007/BF02599758.

- Welton R, Parker R. Study of the Relationships of Physical and Mental Health to Patient Satisfaction. Journal for Healthcare Quality. 1999;21:39–46. doi: 10.1111/j.1945-1474.1999.tb01003.x.

- Whitfield M, Baker R. Measuring Patient Satisfaction for Audit in General Practice. Quality in Health Care. 1992;1:151–3. doi: 10.1136/qshc.1.3.151.

- Wilson IB, Ding L, Hays RD, Shapiro MF, Bozzette SA, Cleary PD. HIV Patients' Experiences with Inpatient and Outpatient Care: Results of a National Survey. Medical Care. 2002;40:1149–60. doi: 10.1097/00005650-200212000-00003.

- Woodbury D, Tracy D, McKnight E. Does Considering Severity of Illness Improve Interpretation of Patient Satisfaction Data? Journal for Healthcare Quality. 1998;20:33–40. doi: 10.1111/j.1945-1474.1998.tb00270.x.

- Woodside A, Shinn R. Customer Awareness and Preferences toward Competing Hospital Services. Journal of Health Care Marketing. 1988;8:39–47.

- Zifko-Baliga GM, Krampf RF. Managing Perceptions of Hospital Quality. Marketing Health Services. 1997;17:28–35.
