Abstract

Objective

To develop and validate an instrument for measuring knowledge and skills in evidence based medicine and to investigate whether short courses in evidence based medicine lead to a meaningful increase in knowledge and skills.

Design

Development and validation of an assessment instrument and before and after study.

Setting

Various postgraduate short courses in evidence based medicine in Germany.

Participants

The instrument was validated with experts in evidence based medicine, postgraduate doctors, and medical students. The effect of courses was assessed in postgraduate doctors from medical and surgical backgrounds.

Intervention

Intensive 3 day courses in evidence based medicine delivered through tutor facilitated small groups.

Main outcome measure

Increase in knowledge and skills.

Results

The questionnaire distinguished reliably between groups with different expertise in evidence based medicine. Experts attained a threefold higher average score than students. Postgraduates who had not attended a course performed better than students but significantly worse than experts. Knowledge and skills in evidence based medicine increased after the course by 57% (mean score before course 6.3 (SD 2.9) v 9.9 (SD 2.8), P<0.001). No difference was found among experts or students in absence of an intervention.

Conclusions

The instrument reliably assessed knowledge and skills in evidence based medicine. An intensive 3 day course in evidence based medicine led to a significant increase in knowledge and skills.

What is already known on this topic

Numerous observational studies have investigated the impact of teaching evidence based medicine to healthcare professionals, with conflicting results

Most of the studies were of poor methodological quality

What this study adds

An instrument assessing basic knowledge and skills required for practising evidence based medicine was developed and validated

An intensive 3 day course on evidence based medicine for doctors from various backgrounds and training levels led to a clinically meaningful improvement in knowledge and skills

Introduction

It is often assumed that training health professionals in evidence based medicine reduces unacceptable variation in clinical practice and leads to improved patient outcomes. This will only be true if the training improves knowledge and skills and if these in turn are translated into improved clinical decision making.

Recent reviews focusing mainly on teaching critical appraisal have cast doubt on the effectiveness of training in evidence based medicine.1–5 Despite the general impression that some benefit might result from such training, most studies were poorly designed and the conclusions tentative. A recently published, well designed trial showed effectiveness and durability of teaching evidence based medicine to residents, but the conclusions were weakened as the instruments used to measure knowledge and skills had not been validated.6

We aimed to develop and validate an instrument to assess changes in knowledge and skills of participants on a course in evidence based medicine and to investigate whether short courses in evidence based medicine lead to a significant increase in knowledge and skills.

Methods

Our study comprised three stages: development of the instrument, validation of the instrument, and before and after assessment of the effect of a short course in evidence based medicine. The instrument was developed by five experienced teachers in evidence based medicine (N Donner-Banzhoff, LF, H-W Hense, RK, and K Wegscheider).

Participants

The instrument was validated by administering it to a group of experts in evidence based medicine (tutors with formal methodological training or graduates from a training workshop for tutors in evidence based medicine) and controls (third year medical students with no previous exposure to evidence based medicine). We then administered the instrument to participants on the evidence based medicine course in Berlin (course participants) with little exposure to evidence based medicine. We included three cohorts: 82 participants attending the course in 1999 (course A), 50 participants attending the course in 2000 (course B), and 71 participants attending the course in 2001 (course C).

Development and validation of instrument

We aimed to develop an instrument that measures doctors' basic knowledge about interpreting evidence from healthcare research, skills to relate a clinical problem to a clinical question and the best design to answer it, and the ability to use quantitative information from published research to solve specific patient problems. The questions were built around typical clinical scenarios and linked to published research studies. The instrument was designed to measure deep learning (ability to apply concepts in new situations) rather than superficial learning (ability to reproduce facts). The final instrument consisted of two sets of 15 test questions with similar content (see bmj.com).

We assessed equivalence of the two sets and their reliability.7,8 We considered a Cronbach's α greater than 0.7 as satisfactory.9,10 We assessed the instrument's ability to discriminate between groups with different levels of knowledge by comparing the three groups with varying expertise: experts versus course participants (before test) versus controls (analysis of variance with Scheffé's method for post hoc comparisons).
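As an illustration of the reliability criterion above, the following is a minimal sketch of how Cronbach's α can be computed from item level scores (1 point for a correct answer, 0 for a wrong one). The function name `cronbach_alpha` and the example data are hypothetical; the sketch uses the standard formula and is not the authors' analysis code.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix of item scores.

    `scores` is a list of rows, one row per respondent, each row holding
    that respondent's score on every item (here 0 or 1).
    """
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents
    item_variances = [
        pvariance([row[i] for row in scores]) for i in range(n_items)
    ]
    # Variance of the respondents' total scores
    total_variance = pvariance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: 4 respondents, 3 items (not taken from the study)
example = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
alpha = cronbach_alpha(example)
print(f"alpha = {alpha:.2f}, satisfactory: {alpha > 0.7}")
```

Population variances are used for both the items and the totals; using sample variances throughout would give the same ratio and therefore the same α.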

Educational effect

Educational intervention

The 3 day course is based on the model developed at McMaster University, Canada11; a curriculum has been published separately.12 The course introduces motivated doctors with little prior knowledge of evidence based medicine to its principles (for example, identification of problems, formulation of questions, critical appraisal, consideration of clinical decision options) and promotes the appropriate use of appraised evidence, especially quantitative estimates of risk, benefit, and harm.

Administration of Berlin questionnaire—Participants received one questionnaire set in the 4 weeks before the course (before test) and the other set on the last day of the course (after test). The sequence of the test sets was reversed year on year. The participants were explicitly informed about the experimental character of the test, that participation was voluntary, and that results would not be disclosed to them. Tutors were asked not to modify their sessions with a view to coaching for the test.

Analysis of effect—The main outcome measure was change in mean score after the intervention (absolute score difference). We also measured relative change in score (unadjusted) and relative change in score (adjusted for differences in score before the course), calculated as gain achieved divided by maximal achievable gain.13 Correct answers scored 1 point, wrong answers 0 points. We compared before and after scores with a paired t test. We also conducted a sensitivity analysis with an unpaired t test comparing the scores of participants who completed only one set with those of participants who completed both sets. We considered a P value ⩽0.05 as significant.
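The outcome measures described above can be sketched as follows. This is an illustrative reading of the text, assuming a maximum of 15 points per set and an adjusted gain defined as gain achieved divided by maximal achievable gain; the function names (`score_answers`, `gain_scores`, `paired_t`) and the example scores are hypothetical, and only the t statistic is computed, not its P value.

```python
from math import sqrt
from statistics import mean, stdev

MAX_SCORE = 15  # assumption: 15 questions per set, 1 point each


def score_answers(answers, key):
    """Total score: 1 point per correct answer, 0 per wrong answer."""
    return sum(1 for given, correct in zip(answers, key) if given == correct)


def gain_scores(before, after, max_score=MAX_SCORE):
    """Return (absolute, relative, adjusted) gain for one participant.

    relative = gain / score before the course (unadjusted)
    adjusted = gain / maximal achievable gain (max_score - before)
    A ratio is None when its denominator would be zero.
    """
    absolute = after - before
    relative = absolute / before if before > 0 else None
    adjusted = absolute / (max_score - before) if before < max_score else None
    return absolute, relative, adjusted


def paired_t(before_scores, after_scores):
    """t statistic of a paired t test (n - 1 degrees of freedom).

    Assumes at least two pairs whose differences are not all identical.
    """
    diffs = [a - b for b, a in zip(before_scores, after_scores)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical before and after scores for four participants
before = [5, 6, 7, 8]
after = [8, 10, 9, 12]
print(gain_scores(before[0], after[0]))
print(f"t = {paired_t(before, after):.2f}")
```

Dividing by the maximal achievable gain gives participants who started with a high score, and therefore had little room to improve, a fairer comparison with those who started low.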

Results

In total, 266 people took part in our study: 43 experts in evidence based medicine, 20 controls, and 203 participants of one of three courses in evidence based medicine. Twelve per cent (n=25) of the last group had some exposure to evidence based medicine before the course. Overall, 161 participants (61%) returned both sets of the questionnaire (see bmj.com). The main reasons for partial completion were failure to submit the questionnaire before the course (n=55), failure to participate in the course (n=8), and failure of identification (n=38).

Validation and discrimination

Course participants scored moderately poorly on the questionnaire administered before the course (mean score per question 0.42 (0.19)), whereas experts scored well (0.81 (0.29)) and controls poorly (0.29 (0.43)). The two sets of questionnaires were psychometrically equivalent (intraclass correlation coefficient for students and experts 0.96 (95% confidence interval 0.92 to 0.98, P<0.001)). Cronbach's α was 0.75 for set 1 and 0.82 for set 2.

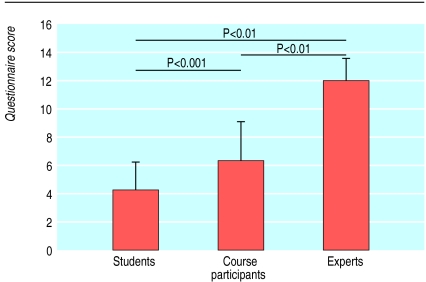

The mean scores of controls (4.2 (2.2)), course participants (6.3 (2.9)), and experts (11.9 (1.6)) were significantly different (analysis of variance, P<0.001; all comparisons between groups, P<0.01), whereas the scores of course participants in all three courses before the course were comparable (course A, 5.8 (2.8); B, 6.9 (2.8); and C, 6.6 (3.01); analysis of variance, P>0.5). The instrument distinguished reliably between groups with different expertise in evidence based medicine; groups with comparable knowledge performed consistently (fig 1).

Figure 1.

Assessment of discriminative ability using Berlin questionnaire

Gain in knowledge and skills

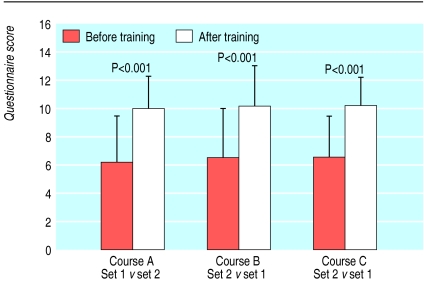

Participation in the course was associated with a mean improvement of 3.6 out of 15 questions answered correctly (P<0.001), a significant increase in knowledge and skills (fig 2). This result was similar across all three courses, but the scores of the course participants after the course (9.9 (2.8)) remained significantly below those achieved by the experts (11.9 (1.6); P<0.001). The crude relative increase in scores across all three courses was 57%. When adjusted for the individual potential for improvement, the improvement rate was 36% (46%). Sensitivity analysis did not detect a significant difference between partial responders (one set returned) and complete responders (both sets returned).
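The crude relative increase can be checked from the reported group means. The short sketch below uses only the mean scores given in the text (6.3 before and 9.9 after the course); the variable names are illustrative. The adjusted rate is not reproduced here, because it is described as adjusted for each individual's potential for improvement and so cannot be recovered from group means alone.

```python
# Reported group means (out of 15 questions)
mean_before = 6.3
mean_after = 9.9

# Absolute gain in mean score
absolute_gain = mean_after - mean_before

# Crude (unadjusted) relative increase: gain as a fraction of the before score
crude_relative = absolute_gain / mean_before

print(f"absolute gain: {absolute_gain:.1f} questions")
print(f"crude relative increase: {crude_relative:.0%}")
```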

Figure 2.

Comparison of before and after test scores of participants of consecutive short courses in evidence based medicine (paired analysis)

Discussion

Objective evaluation of training in evidence based medicine is difficult but essential, because self perception of ability in evidence based medicine correlates poorly with objective assessment of knowledge and skills.14 Most studies have lacked appropriate instruments.15–17 Recent reviews of critical appraisal programmes showed only non-significant effects.1–3,5 By using a validated questionnaire that reliably distinguishes between different competence levels, we found a 57% crude increase in knowledge and skills after short courses in evidence based medicine, a gain that is likely to be educationally significant.

Not all skills in evidence based medicine taught in the course (for example, formulation of questions, competencies in searching) were captured by the instrument. Instead, the instrument concentrated on the handling of research information. Some claim that critical appraisal is the least important step for practising evidence based medicine, by referring to increasingly available resources that have already been appraised.18–21 But even this “preprocessed” information is hard to apply unless the practitioner is competent in interpreting commonly used quantitative measures of risk and benefit.22

Evaluation of educational interventions concerns at least four dimensions: satisfaction of participants, learning (knowledge and skills), behavioural change (transfer of knowledge and skills to workplace), and outcomes (impact on patients).23 Our instrument assessed short term learning, but our study was not designed to measure the long term effect on knowledge or even short term behavioural change. Although improved skills are surely a precondition for change in behaviour, more research is needed on the impact of courses in evidence based medicine on clinical behaviour.1,3,4

The intervention (a short training course in evidence based medicine) encompasses numerous components. Self selection of motivated doctors, active learning techniques, relevance to clinical practice, and intensity of the programme (participant to tutor ratio of 4:1) are likely to be important factors contributing to the learning effect. We aimed to investigate whether an effect of teaching evidence based medicine can be shown. We found a substantial effect, but our results cannot distinguish the separate contribution of each component. Furthermore, factors other than the course could be partially responsible for the observed effect4: for example, the inability to blind for intervention and assessment could have led to improvement due to awareness of being evaluated (Hawthorne effect), studying at home in advance of the course, or an impact of the study on tutors' behaviour. To reduce such an impact we made enrolment to the test voluntary, explained its experimental nature, and withheld feedback (even the correct answers). Only two of the tutors were involved in the development of the test and the conduct of the study. The questionnaires were administered only to participants and retrieved after completion.

Our results remain valid even if learning is enhanced by the inclusion of a formal assessment of knowledge and skills before and after the course. Indeed, now that a valid instrument is available, assessment of participants may become routine in courses in evidence based medicine as part of quality assurance.

Further research

Our study contributes to the validation of intensive, problem based curriculums in training in evidence based medicine. Further research should distinguish the individual components of the courses that determine their effectiveness and assess the impact on patient outcomes. Expansion of the question sets and validation of the Berlin questionnaire in different languages, professional groups, and cultural settings will enable the generalisability of our findings to be tested in other settings, as well as allowing comparisons between countries and the evaluation of different teaching methods.

Acknowledgments

We thank N Donner-Banzhoff (Marburg), H-W Hense (Munster), and K Wegscheider (Berlin) for their support for development and critical discussion of the questions, the Kaiserin-Friedrich Foundation (J Hammerstein, Ch Schröter), the Berlin Chamber of Physicians (G Jonitz), and the University Hospital Charité (in particular the students from the computer pool) for organising the workshops, R Kersten, J Meyerrose, and B Meyerrose for their assistance in collecting and analysing the data, and the participants and tutors. Course organisers who are interested in participating in the ongoing international study on teaching evidence based medicine should contact RK.

Footnotes

Funding: RK is supported by an academic career award for women from the senate of Berlin.

Competing interests: None declared.

Full details of the instrument and a table showing completion of questionnaire appear on bmj.com

References

- 1. Norman GR, Shannon SI. Effectiveness of instruction in critical appraisal (evidence-based medicine) skills: a critical appraisal. CMAJ. 1998;158:177–181.

- 2. Green ML. Graduate medical education training in clinical epidemiology, critical appraisal, and evidence-based medicine: a critical review of curricula. Acad Med. 1999;74:686–694. doi: 10.1097/00001888-199906000-00017.

- 3. Parkes J, Hyde C, Deeks J, Milne R. Teaching critical appraisal skills in health care settings. Cochrane Library. Issue 3. Oxford: Update Software; 2001.

- 4. Taylor R, Reeves B, Ewings P, Binns S, Keast J, Mears R. A systematic review of the effectiveness of critical appraisal skills training for clinicians. Med Educ. 2000;34:120–125. doi: 10.1046/j.1365-2923.2000.00574.x.

- 5. Alguire PC. A review of journal clubs in postgraduate medical education. J Gen Intern Med. 1998;13:347–353. doi: 10.1046/j.1525-1497.1998.00102.x.

- 6. Smith CA, Ganschow PS, Reilly BM, Evans AT, McNutt RA, Osei A, et al. Teaching residents evidence-based medicine skills: a controlled trial of effectiveness and assessment of durability. J Gen Intern Med. 2000;15:710–715. doi: 10.1046/j.1525-1497.2000.91026.x.

- 7. Streiner DL, Norman GR. Health measurement scales. Oxford: Oxford University Press; 1995.

- 8. Lienert GA, Raatz U. Testaufbau und Testanalyse. Weinheim: Psychologie Verlagsunion; 1995.

- 9. Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16:297–334.

- 10. Nunnally JC. Psychometric theory. New York: McGraw-Hill; 1978.

- 11. Guyatt G, Rennie D. Users' guides to the medical literature: a manual for evidence-based clinical practice. Chicago: JAMA and Archives Journals; 2002.

- 12. Kunz R, Fritsche L, Neumayer HH. Development of quality assurance criteria for continuing education in evidence-based medicine. Z Arztl Fortbild Qualitatssich. 2001;95:371–375.

- 13. Linzer M, Brown JT, Frazier LM, DeLong ER, Siegel WC. Impact of a medical journal club on house-staff reading habits, knowledge, and critical appraisal skills. A randomized control trial. JAMA. 1988;260:2537–2541.

- 14. Khan KS, Awonuga AO, Dwarakanath LS, Taylor R. Assessments in evidence-based medicine workshops: loose connection between perception of knowledge and its objective assessment. Med Teach. 2001;23:92–94. doi: 10.1080/01421590150214654.

- 15. Kitchens JM, Pfeifer MP. Teaching residents to read the medical literature: a controlled trial of a curriculum in critical appraisal/clinical epidemiology. J Gen Intern Med. 1989;4:384–387. doi: 10.1007/BF02599686.

- 16. Gehlbach SH, Bobula JA, Dickinson JC. Teaching residents to read the medical literature. J Med Educ. 1980;55:362–365. doi: 10.1097/00001888-198004000-00007.

- 17. Smith CA, Ganschow PS, Reilly BM, Evans AT, McNutt RA, Osei A, et al. Teaching residents evidence-based medicine skills: a controlled trial of effectiveness and assessment of durability. J Gen Intern Med. 2000;15:710–715. doi: 10.1046/j.1525-1497.2000.91026.x.

- 18. Green ML. Evidence-based medicine training in graduate medical education: past, present and future. J Eval Clin Pract. 2000;6:121–138. doi: 10.1046/j.1365-2753.2000.00239.x.

- 19. Cochrane Library. Issue 3. Oxford: Update Software; 2002.

- 20. American College of Physicians. Best evidence, vol 5. Philadelphia: ACP; 2001.

- 21. Clinical evidence. London: BMJ Publishing; 2001. (Issue 6.)

- 22. Guyatt GH, Meade MO, Jaeschke RZ, Cook DJ, Haynes RB. Practitioners of evidence based care. Not all clinicians need to appraise evidence from scratch but all need some skills. BMJ. 2000;320:954–955. doi: 10.1136/bmj.320.7240.954.

- 23. Kirkpatrick DL. Evaluation of training. In: Craig R, Bittel L, editors. Training and development handbook. New York: McGraw-Hill; 1967.
