Abstract

Statistically based iterative image reconstruction methods have been developed for emission tomography. One important component in iterative image reconstruction is the system matrix, which defines the mapping from the image space to the data space. Several groups have demonstrated that an accurate system matrix can improve image quality in both SPECT and PET. While iterative methods are amenable to arbitrary and complicated system models, the true system response is never known exactly. In practice, one also has to sacrifice the accuracy of the system model because of limited computing and imaging resources. This paper analyzes the effect of errors in the system matrix on iterative image reconstruction methods that are based on the maximum a posteriori principle. We derived an analytical expression for calculating artifacts in a reconstructed image that are caused by errors in the system matrix using the first-order Taylor series approximation. The theoretical expression is used to determine the required minimum accuracy of the system matrix in emission tomography. Computer simulations show that the theoretical results work reasonably well in low-noise situations.

1. Introduction

Statistically based iterative image reconstruction methods have been developed for emission tomography to improve image quality [Fessler, 1994, Mumcuoglu et al, 1994, Fessler and Hero, 1995, Bouman and Sauer, 1996]. Compared to analytic approaches, which generally require an idealized system model and do not account for nonuniform noise in the data, iterative methods are amenable to an arbitrary, complicated, and realistic system model that defines the mapping from source to detectors. Furthermore, iterative methods allow for proper modeling of the statistical properties of the acquired data and result in better tradeoffs of resolution versus noise. In the past decade, tremendous effort has been devoted to developing accurate system models for image reconstruction, e.g., [Bai et al, 2002, Bai et al, 2000, Beekman et al, 1997, Formiconi et al, 1989, Frey and Tsui, 1990, Gilland et al, 1994, Jaszczak et al, 1984, King et al, 1995, King et al, 1996a, Laurette et al, 2000, Meikle et al, 1994, Metz et al, 1980, Qi et al, 1998, Tsui et al, 1994a, Tsui et al, 1998, Welch and Gullberg, 1998, Wells et al, 1997]. In positron emission tomography (PET) the line integral model has been considered to be reasonably accurate. Even so, resolution is affected by the combined effects of positron range, noncolinearity of the photon pair, variations in detector-pair sensitivity along the line of response (LOR), and the spatially variant response of the detector caused by intercrystal penetration and scatter. The resolution in filtered backprojection degrades significantly as the target is moved from the center of the scanner to radially off-center locations. By modeling these effects in an iterative reconstruction framework, one can obtain high-resolution images [Veklerov et al, 1988, Mumcuoglu et al, 1996]. In single photon emission computed tomography (SPECT), line integrals are a far less accurate model. The collimators produce pronounced depth dependence in resolution that is not included in the line integral model. The single photon detection process results in attenuation that can differ between each voxel and each detector element. Iterative methods can explicitly model these effects in the system matrix. It has been shown, for example, that correct modeling of collimator response can reduce partial volume effect and reduce bias in kinetic parameter estimation [Kadrmas et al, 1999], and that accurate attenuation and scatter correction improves the quantitation in cardiac scans [King et al, 1996b, Tsui et al, 1994b] and the signal-to-noise ratio of cold lesions [Beekman et al, 1997, Hutton et al, 1996, Hutton, 1997]. Clinical studies also show improved sensitivity and specificity in the diagnosis and evaluation of coronary artery disease [Ficaro et al, 1996, Ficaro and Corbett, 2004].

One approach in implementing iterative algorithms is to precompute and store the system matrix. If the system matrix needs to be computed only once as is often the case in PET, computation time is not an issue. The system matrix can be computed using analytic calculations [Huesman et al, 2000] or Monte Carlo simulations [Floyd and Jaszczak, 1985, Veklerov et al, 1988], or it can be measured directly [Formiconi et al, 1989]. However, one challenge is the huge size of system matrices for fully 3D imaging systems. Even storing only the non-zero elements can be a daunting task. Researchers have exploited sparse matrix structures and used various approximations to make this approach practical [Johnson et al, 1995, Floyd and Jaszczak, 1985, Qi et al, 1998].

To avoid the precomputation and storage of a huge projection matrix, on-the-fly calculations are used. The simplest one uses a ray-tracing technique that is essentially the same as the line integral model. A more sophisticated approach is to trace multiple rays for each LOR in order to model the geometric response [Huesman et al, 2000, Laurette et al, 2000]. To include other physical effects in the forward model, Monte Carlo simulations can be used to perform forward and back projection [Kudrolli et al, 2002, Beekman et al, 2002]. Monte Carlo software packages with very accurate physical models of PET and SPECT systems are available. However, computation time is still high if every detail in the photon detection process is modeled, and one must trade off between computation time and model accuracy.

Regardless of the approach, errors are inevitable in the system matrix. When data noise and modeling error both follow a white Gaussian distribution, a well-known solution to this problem is the total least squares (TLS) method [Golub and Van Loan, 1996], which minimizes the combined error in both the system matrix and data measurements. It has been studied for image restoration [Mesarovic et al, 1995a, Mesarovic et al, 1995b, Mesarovic et al, 2000] and image reconstruction [Zhu et al, 1997, Brankov et al, 1999, Zhu et al, 1998, Zhu et al, 1999]. However, due to the high computational cost of TLS methods, most of the work has focused on spatially invariant linear inverse problems, although methods have also been proposed for nonconvolutional linear inverse problems [Zhu et al, 1997, Zhu et al, 1999]. When noise is not white Gaussian, such as in emission tomography, TLS methods are not directly applicable. Because of these limitations of TLS methods, the majority of the image reconstruction methods still assume that the system matrix is exact. Thus, it is important to understand the effect of modeling errors on these reconstruction methods.

In this paper, we study the error propagation from the system matrix into reconstructed images in MAP reconstruction. Unlike the existing work that often focuses on a specific type of modeling error, such as geometric response, attenuation, or scatter, we derive a general theoretical formula that is not limited to a single type of modeling error. We use the result to determine the minimum required accuracy for the system matrix, such that the effect of the modeling error is small compared to the intrinsic noise in the data. An earlier work on quantitative analysis of effects of the modeling errors can be found in [Tekalp and Sezan, 1990], but it is limited to linear space-invariant image restoration with stationary noise. Here we focus on nonconvolutional imaging systems and nonstationary noise models. We analyze iterative reconstruction at convergence using the fixed-point condition [Fessler, 1996]. Thus, the result is independent of the particular optimization algorithm, as long as the algorithm is iterated to effective convergence.

2. Theory

2.1. Approximations

For a given imaging system with measured data y ∈ ℝ^{M×1}, the maximum a posteriori (MAP) reconstruction is found by maximizing the log-posterior density function:

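$$\hat{x} = \arg\max_{x \ge 0} \left[ L(y; x) - \beta U(x) \right] \qquad (1)$$

where L(y; x) is the log-likelihood function and U(x) is the energy function of a Gibbs prior, which can be written as

$$p(x) = \frac{1}{Z} e^{-\beta U(x)} \qquad (2)$$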

where β is the hyperparameter that controls the resolution of the reconstructed image, and Z is a normalization constant.

We focus our attention on the reconstruction problems in which the expectation of the data, ȳ, can be related to the unknown image x by the following equation

$$\bar{y}_i = f([Px]_i) + r_i \qquad (3)$$

where f(·) is a one-to-one function, P ∈ ℝ^{M×N} is the system matrix that defines the mapping from the image space to the data space, [Px]i denotes the ith element of Px, and ri accounts for the mean of background events in the ith measurement.

For example, in emission tomography the (i, j)th element of P contains the probability of detecting an event emitted from the jth image element at the ith sinogram bin, f(z) = z, and the log-likelihood function is

$$L(y; x) = \sum_{i=1}^{M} \left( y_i \log \bar{y}_i - \bar{y}_i - \log y_i! \right) \qquad (4)$$
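As a small numerical illustration, the Poisson log-likelihood for the emission model with f(z) = z can be evaluated directly. The sketch below assumes the standard Poisson form, sum over i of y_i log ȳ_i − ȳ_i − log y_i!, with ȳ = Px + r. The function name `poisson_log_likelihood` and the tiny 3×2 system are hypothetical and chosen only for illustration.

```python
import numpy as np
from math import lgamma

def poisson_log_likelihood(y, P, x, r):
    """Poisson log-likelihood L(y; x) for the affine model ybar = P @ x + r."""
    ybar = P @ x + r                                    # expected counts per sinogram bin
    log_fact = np.array([lgamma(v + 1.0) for v in y])   # log(y_i!)
    return float(np.sum(y * np.log(ybar) - ybar - log_fact))

# Hypothetical toy system: 3 sinogram bins, 2 image elements.
P = np.array([[0.5, 0.1],
              [0.2, 0.4],
              [0.1, 0.3]])
x_true = np.array([10.0, 20.0])
r = np.full(3, 1.0)                 # mean background events
y = np.array([8.0, 11.0, 7.0])      # measured counts

ll_true = poisson_log_likelihood(y, P, x_true, r)
ll_off = poisson_log_likelihood(y, P, 3.0 * x_true, r)
print(ll_true, ll_off)
```

An image whose forward projection is close to the measured counts should score a higher log-likelihood than a badly scaled one, which is what the two evaluations compare.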

Equation (3) assumes a discrete-to-discrete (DD) imaging model [Barrett and Myers, 2003], where the unknown image is represented by a linear combination of a set of basis functions (image elements) with x containing the corresponding coefficients. The most commonly used basis functions in image reconstruction are pixels in two dimensions and voxels in three dimensions, although other basis functions, such as blobs [Matej and Lewitt, 1996], and natural pixels [Buonocore et al, 1981], have also been proposed. The analysis here is not restricted to any particular form of basis function. The DD model in (3) is not even required to have zero image error (see Ch. 7.4.3 in [Barrett and Myers, 2003] for a discussion on image errors), because we focus on analyzing how changes in the system matrix P affect the estimate of the finite dimension coefficient x. The representation error of a continuous object by the basis functions is beyond the scope of this paper.

To simplify the notation in the following derivation, we first assume that the log-likelihood function L(y; x) can be decomposed as follows

| (5) |

Such decomposition is always possible when measured data contain independent noise, but independence is not a necessary requirement. For the Poisson log-likelihood function in (4), we have

We shall show later that the above requirement in (5) can be relaxed.

The necessary condition for x̂ to be the solution of (1) is the Kuhn-Tucker condition [Luenberger, 1984]:

| (6) |

where .

For a given data vector y, equation (6) implicitly defines the relationship between the system matrix P and the corresponding MAP reconstruction . Let us denote the “true” system matrix‡ by . The reconstructed image with the “true” system matrix, , satisfies

| (7) |

Considering as a function of P, when ΔP is small, we can use the first-order Taylor series approximation to calculate the error propagation, i.e.,

| (8) |

where denotes the partial derivative of with respect to Pmn evaluated at P. Here the Taylor series is expanded at the noisy system matrix P. However, the expansion can also be taken at the “true” system matrix , because the first-order Taylor series approximation essentially assumes that the first derivatives are constant.

To proceed, we assume that the zero regions in and are the same, so . Thus, we can exclude zero regions from the following analysis by removing the columns in P that correspond to the zero image elements and reducing the number of unknowns in x. Without loss of generality, we shall assume that for all j = 1, …, N in the rest of the derivation.

To find the expression of for the nonzero image elements, we differentiate equation in (6) with respect to Pmn by applying the chain rule [Fessler, 1996] and get

| (9) |

where δmn is the Kronecker delta function defined as

$$\delta_{mn} = \begin{cases} 1, & m = n \\ 0, & m \ne n \end{cases}$$

and .

Solving the linear equations in (9) yields

| (10) |

where the (j, k) element of H is

and [H−1]jk denotes the (j, k)th element of H−1. Here we assume that the symmetric matrix H is positive definite.

Substituting (10) into (8), we get

| (11) |

The above equation can be written in a matrix form

| (12) |

where diag[yi] denotes a diagonal matrix with (i, i)th element being yi, [ci] denotes a column vector with the ith element being ci, and ‘′’ denotes the matrix transpose.

As we have mentioned above, the decomposition in (5) is not essential to the derivation. The fundamental requirement is that the log-likelihood function can be written as

| (13) |

where q = Px. Following the same procedure, we can show that the error propagation formula for the likelihood function in (13) is

| (14) |

where the (j, k)th element of ∇02Φ(y, q) is , the jth element of ∇01Φ(y, q) is , and is a symmetric matrix with the (j, m)th element being .

Equations (12) and (14) are the main results of this paper. They can be applied to a range of likelihood functions and image priors so long as the first-order Taylor series approximation (8) and equation (13) hold. In the following we shall show the applications of these results to emission tomography and transmission tomography problems.

2.2. Emission Tomography

Emission data are often modeled as independent Poisson random variables with the expectation related to the unknown image x through an affine transform

$$\bar{y} = Px + r$$

where r accounts for the mean of the background events, such as randoms and scatters. For the Poisson likelihood function, we have

| (15) |

| (16) |

| (17) |

Substituting (16) and (17) into (12), we get the following expression for error propagation in emission tomography

| (18) |

where

and . In high count situations we can approximate , so (18) reduces to

| (19) |

The result shows that the error propagation from the system matrix depends on the prior function through . In general, a prior that encourages smoothness in the reconstructed image can reduce the error propagation. This is similar to the error propagation from the voxel sensitivity image [Qi and Huesman, 2004].
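The first-order propagation idea can be checked numerically on a toy problem. The sketch below does not reproduce equation (18) verbatim. It differentiates the stationarity condition of a Poisson MAP objective with a quadratic penalty βx′Rx/2 directly, which gives [P′ diag(y_i/ȳ_i²) P + βR] Δx ≈ ΔP′(y/ȳ − 1) − P′ diag(y_i/ȳ_i²) ΔP x̂, where Δx is the change in the estimate when P is replaced by P + ΔP. This sign convention, the function names `map_newton` and `predict_dx`, the problem sizes, and the assumption of a strictly positive solution are all assumptions of this sketch and may differ from the notation used in the paper.

```python
import numpy as np

def objective(x, y, P, r, R, beta):
    """Poisson log-likelihood (constant term dropped) minus a quadratic penalty."""
    ybar = P @ x + r
    return np.sum(y * np.log(ybar) - ybar) - 0.5 * beta * (x @ R @ x)

def map_newton(y, P, r, R, beta, x0, n_iter=50):
    """Damped Newton ascent; assumes the maximizer is strictly positive."""
    x = x0.copy()
    for _ in range(n_iter):
        ybar = P @ x + r
        w = y / ybar**2
        grad = P.T @ (y / ybar - 1.0) - beta * (R @ x)
        F = P.T @ (w[:, None] * P) + beta * R          # negative Hessian
        step = np.linalg.solve(F, grad)
        t, f_old = 1.0, objective(x, y, P, r, R, beta)
        # Halve the step until the mean stays positive and the objective does not drop.
        while t > 1e-8 and (np.any(P @ (x + t * step) + r <= 0)
                            or objective(x + t * step, y, P, r, R, beta) < f_old - 1e-9):
            t *= 0.5
        x = x + t * step
    return x

def predict_dx(y, P, r, R, beta, x_hat, dP):
    """First-order change in the MAP estimate when P is replaced by P + dP."""
    ybar = P @ x_hat + r
    w = y / ybar**2
    F = P.T @ (w[:, None] * P) + beta * R
    rhs = dP.T @ (y / ybar - 1.0) - P.T @ (w * (dP @ x_hat))
    return np.linalg.solve(F, rhs)

rng = np.random.default_rng(0)
M, N, beta = 40, 6, 0.01
P = rng.uniform(0.1, 1.0, size=(M, N))               # hypothetical system matrix
x_true = rng.uniform(50.0, 100.0, size=N)
r = np.full(M, 0.1 * np.mean(P @ x_true))            # uniform background
y = rng.poisson(P @ x_true + r).astype(float)
D = np.diff(np.eye(N), axis=0)                       # first-difference operator
R = D.T @ D

dP = 0.01 * P * rng.standard_normal((M, N))          # 1% relative perturbation
x_hat = map_newton(y, P, r, R, beta, x_true)
x_pert = map_newton(y, P + dP, r, R, beta, x_true)
dx_measured = x_pert - x_hat
dx_predicted = predict_dx(y, P, r, R, beta, x_hat, dP)
rel_err = np.linalg.norm(dx_predicted - dx_measured) / np.linalg.norm(dx_measured)
print("relative prediction error:", rel_err)
```

The matrix βR enters the linear system that is inverted, so a stronger smoothing prior damps the propagated error in the directions it penalizes, consistent with the remark above.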

One application of (19) is to determine the minimum accuracy required for the system matrix. While the exact answer depends on the specific task, a reasonable requirement would be that the artifacts caused by the errors in the system matrix are small compared to the uncertainty caused by the Poisson noise in the data, i.e.,

| (20) |

where E denotes ensemble expectation, ∑ is the image covariance caused by the Poisson noise given the true system matrix , and a is a user-defined tolerance factor, e.g., 0.01.

From the results in [Fessler, 1996], we know that ∑ can be approximated by

| (21) |

where denotes the MAP reconstruction of the noise-free data with ,

and .

To proceed, we assume that the artifacts caused by the modeling error are independent of the Poisson noise in the measurements. Thus, we can analyze using noise-free data, in which case we have . If we consider P as a random quantity, the expansion point of the first-order Taylor series approximation in (8) should be changed to , which essentially changes all to and all P to in equation (19). Substituting the modified equation (19) and equation (21) into (20), we get

| (22) |

A sufficient condition for (22) to hold is

| (23) |

Equation (23) is useful in practice to determine whether the system matrix is accurate enough for reconstruction. For example, when using a system matrix that is calculated using Monte Carlo simulations or is measured directly using a scanning point source, can be estimated from the covariance of the forward projected sinogram. To satisfy the requirement in (23), the variance in the forward projection need be less than a times the statistical variance in the data. If no acceleration scheme is used in the Monte Carlo simulation, this essentially means that the total number of detected events in the forward projection should be more than 1/a times the total number of detections in the data set. A similar criterion may also be used in the situation where Monte Carlo simulation is used to calculate the forward and back projection at each iteration, e.g., [Kudrolli et al, 2002]. However, because the system matrix changes from iteration to iteration in this case, exact analysis is more difficult.
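The counting argument above amounts to simple arithmetic. The sketch below assumes a Monte Carlo simulation without variance reduction, so that the forward-projection variance scales inversely with the number of detected events. The function name `min_forward_projection_events` is hypothetical.

```python
def min_forward_projection_events(data_events, a):
    """Detected Monte Carlo events needed so that the forward-projection variance
    stays below `a` times the Poisson variance of the data (no acceleration)."""
    if a <= 0:
        raise ValueError("tolerance factor a must be positive")
    return data_events / a

# Count levels used in the simulations, with a tolerance factor a = 0.01.
for n in (1e5, 1e6, 1e7):
    print(f"data events = {n:.0e} -> Monte Carlo events > {min_forward_projection_events(n, 0.01):.0e}")
```

With a = 0.01, a 1M-event data set would call for more than 100M detected events in the simulated forward projection.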

Another direct application of (23) is to determine the required accuracy for normalization and attenuation correction factors in PET. These factors are often measured using blank and transmission scans, in which noise is inevitable. Both factors can be written as a diagonal matrix, so we have

| (24) |

where and Δni denote the true correction (normalization or attenuation) factors and the errors in the measured correction factors, respectively.

Substituting (24) into (23) and using the approximation results in

| (25) |

Assuming Δni and Δnj are independent for i ≠ j, we get

| (26) |

A simpler but sufficient condition is

| (27) |

which essentially requires the relative noise level in the correction factors to be less than a times that in the original data.

2.3. Transmission Tomography

The theoretical result is also applicable to transmission tomography. Here we consider the transmission reconstruction from measurements that have independent Poisson distributions. We use xj to denote the attenuation coefficient in the jth pixel. The mean of the data, ȳ, is related to x by

$$\bar{y}_i = b_i \exp(-[Px]_i) + r_i$$

where Pij denotes the intersection length of the ith ray passing through the jth pixel, bi denotes the number of emissions from the transmission source along the ith measurement, and ri denotes the additive background events in the ith measurement. For simplicity we focus on the case where ri = 0. Thus, we have

| (28) |

| (29) |

| (30) |
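The transmission measurement model described above can be written out as a short numerical sketch. It assumes the usual form in which the mean of the ith measurement is b_i exp(−[Px]_i) + r_i and the data are independent Poisson variables. The function names and the two-ray example are hypothetical.

```python
import numpy as np
from math import lgamma

def transmission_mean(P, x, b, r):
    """Mean transmission counts: b_i * exp(-[Px]_i) + r_i."""
    return b * np.exp(-(P @ x)) + r

def transmission_log_likelihood(y, P, x, b, r):
    """Poisson log-likelihood of the transmission data y given attenuation image x."""
    ybar = transmission_mean(P, x, b, r)
    log_fact = np.array([lgamma(v + 1.0) for v in y])   # generalized log(y_i!)
    return float(np.sum(y * np.log(ybar) - ybar - log_fact))

# Hypothetical example: 2 rays through 2 pixels (intersection lengths in cm).
P = np.array([[1.0, 0.0],
              [1.0, 1.0]])
x_true = np.array([0.1, 0.2])       # attenuation coefficients (1/cm)
b = np.full(2, 1000.0)              # blank-scan counts per ray
r = np.zeros(2)                     # background set to zero, as in the text

ybar = transmission_mean(P, x_true, b, r)
ll_true = transmission_log_likelihood(ybar, P, x_true, b, r)
ll_off = transmission_log_likelihood(ybar, P, 2.0 * x_true, b, r)
print(ybar, ll_true, ll_off)
```

With noise-free data equal to the model mean, the log-likelihood is larger at the true attenuation image than at a scaled one.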

Substituting equations (29) and (30) into (12) yields the following error propagation expression for transmission tomography

| (31) |

where

and . If we use the approximation , equation (31) reduces to

| (32) |

We can also use the condition (20) to determine the required accuracy for transmission reconstruction. From [Fessler, 1996] we know that the covariance caused by Poisson noise in transmission reconstruction can be approximated by

| (33) |

where is the noisefree reconstruction, , and . Substituting equations (32) and (33) into (20) and simplifying yields the following condition

| (34) |

3. Validation using Computer Simulations

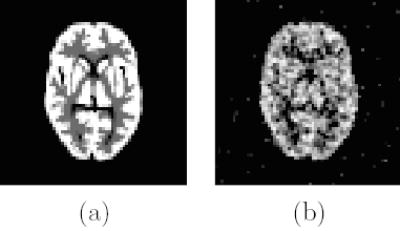

Computer simulations have been conducted to validate the theoretical results for emission tomography. We simulate a single-ring small-animal PET scanner that consists of 240 scintillation detectors. The size of each scintillation crystal is 2 mm (transaxial) × 10 mm (radial). The field-of-view (FOV) is a circular region of 64 mm in diameter represented by 64×64 1-mm² pixels. A scaled Hoffman brain phantom is used (Fig. 1(a)). The activity concentrations in the gray matter, white matter, and CSF are 5, 1, and 0, respectively. The “true” system matrix is calculated using a ray-tracing technique with modeling of crystal penetration effect by subdividing each crystal into 36 small elements as described in [Huesman et al, 2000]. We add a uniform background (10% of true events) to model random and scattered events. The total number of detected events is about 0.1M except where noted otherwise. A preconditioned conjugate gradient MAP algorithm with a log-quadratic prior is used to reconstruct the image. The prior energy function is U(x) = ∑j ∑k∈Nj (xj − xk)², where Nj includes the four nearest neighbors of pixel j. Note that the results require only that the algorithm iterates to an effective convergence and are independent of the particular optimization algorithm. The exact expectation of the additive background r is used. A noisy reconstruction is shown in Fig. 1(b).

Figure 1.

(a) The scaled Hoffman brain phantom and (b) a noisy reconstruction with β = 100.
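The quadratic prior energy used in these simulations, the sum over pixels j and their four nearest neighbors k of (xj − xk)², can be evaluated with array differences. The sketch below assumes that border pixels simply have fewer neighbors (no wrap-around), which the text does not specify. The function name is hypothetical.

```python
import numpy as np

def quadratic_prior_energy(img):
    """U(x) = sum_j sum_{k in N_j} (x_j - x_k)^2 with four nearest neighbors.

    Each neighboring pair is visited once from each side, so every squared
    difference is counted twice.
    """
    dv = np.diff(img, axis=0)    # differences between vertical neighbors
    dh = np.diff(img, axis=1)    # differences between horizontal neighbors
    return 2.0 * (np.sum(dv**2) + np.sum(dh**2))

flat = np.full((4, 4), 5.0)
edge = np.array([[0.0, 1.0],
                 [0.0, 1.0]])
print(quadratic_prior_energy(flat), quadratic_prior_energy(edge))
```

A uniform image has zero energy, while the 2×2 image with one vertical edge has energy 4: each of its four pixels has exactly one neighbor that differs by 1.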

3.1. Validation of Equation (18)

Figs. 2 and 3 show the changes in the reconstructed images when we decrease one row (i.e., a fixed LOR) of the system matrix by 50%. The theoretical predictions are computed using (18) with the reconstruction shown in Fig. 1b. The measured results are calculated from two independent reconstructions, i.e., reconstructions of the same noisy data using the “true” system matrix and the one with errors. Both results show a small increase in activity at the voxels close to the perturbed LOR. A slight decrease in activity at the voxels that are further away from the LOR is also noticed. Most importantly, the theoretical predictions match the measured results very well.

Figure 2.

Comparison of the measured Δx and theoretically predicted Δx caused by a 50% perturbation along a center LOR. Top left: theoretically predicted Δx; top right: measured Δx; bottom: vertical profiles through the center of the theoretical (solid line) and the measured (‘×’) image.

Figure 3.

Comparison of the measured Δx and theoretically predicted Δx caused by a 50% perturbation along an off-center LOR. Top left: theoretically predicted Δx; top right: measured Δx; bottom: vertical profiles through the center of the theoretical (solid line) and the measured (‘×’) image.

Figs. 4 and 5 show the changes in the reconstructed images when we decrease one column (i.e., a fixed voxel) of the system matrix by 50%. Without a prior, such perturbation would only increase the activity at this voxel. In our case, the effect is spread to neighboring voxels by the prior as shown by both theoretical calculation and Monte Carlo reconstructions.

Figure 4.

Comparison of the measured Δx and theoretically predicted Δx caused by a 50% perturbation along a column of the system matrix (a center voxel). Top left: theoretically predicted Δx; top right: measured Δx; bottom: vertical profiles through the center of the theoretical (solid line) and the measured (‘×’) image.

Figure 5.

Comparison of the measured Δx and theoretically predicted Δx caused by a 50% perturbation along a column of the system matrix (an offset voxel). Top left: theoretically predicted Δx; top right: measured Δx; bottom: vertical profiles through the center of the theoretical (solid line) and the measured (‘×’) image.

Fig. 6 shows the changes in the reconstructed images when we add 20% zero-mean Gaussian noise to each nonzero element of the system matrix. Again, good agreement between the theoretical calculation and measured results is found.

Figure 6.

Comparison of the measured Δx and theoretically predicted Δx resulting from 20% white Gaussian noise in the system matrix. Top left: theoretically predicted Δx; top right: measured Δx; bottom: vertical profiles through the center of the theoretical (solid line) and the measured (‘×’) image.

3.2. Validation of Condition (23)

One of the contributions of this paper is the derivation of (23), which provides a guideline for determining the required accuracy of a system matrix. We test this condition at three different count levels, 0.1M, 1M, and 10M events. At each count level, we generate 50 independent data sets by adding pseudo-random Poisson noise. Each data set is reconstructed using both the “true” system matrix and a noisy system matrix with three different β values (β = 100, 10, 1). The resolution is measured by the full-width-at-half-maximum (FWHM) of the local impulse response function at the center of the FOV. The noisy system matrices are generated by adding 15% zero-mean Gaussian noise to each nonzero element of the “true” system matrix.
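One way to generate such a noisy system matrix is sketched below. It assumes that "15% noise" means zero-mean Gaussian noise whose standard deviation is 15% of each element's own value, applied multiplicatively so that zero elements stay zero. This interpretation, the function name `add_relative_noise`, and the random toy matrix are assumptions of this sketch.

```python
import numpy as np

def add_relative_noise(P, rel_std, rng):
    """Perturb each nonzero element of P by zero-mean Gaussian noise with
    standard deviation rel_std times that element; zeros remain zero."""
    noise = rng.standard_normal(P.shape)
    return P * (1.0 + rel_std * noise)

rng = np.random.default_rng(0)
# Hypothetical sparse-like system matrix: about half of the entries are zero.
P_true = rng.uniform(0.1, 1.0, size=(200, 200)) * (rng.random((200, 200)) < 0.5)
P_noisy = add_relative_noise(P_true, 0.15, rng)

nz = P_true != 0
rel_dev = P_noisy[nz] / P_true[nz] - 1.0
print("empirical relative std:", rel_dev.std())
```

Multiplicative noise of this kind can in principle produce negative elements for large relative standard deviations. The sketch does not clip them.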

Table 1 shows the comparison of variations caused by Poisson noise and artifacts caused by noise in the system matrix. The variation caused by Poisson noise (denoted as “Poisson noise”) is measured by trace{∑}, where ∑ is the covariance matrix calculated from the 50 reconstructed images using the “true” system matrix. The level of the artifacts caused by noise in the system matrix is measured by the average of the mean squared error (MSE) between the reconstructed images using the “true” system matrix and noisy system matrices, which is equal to trace . The predicted MSE is calculated using from equation (18).
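The two figures of merit described above can be computed from stacks of reconstructed images as sketched below. The sketch assumes each stack is an array of shape (realizations, pixels) and that the MSE is summed over pixels and averaged over realizations, so that it is directly comparable to the trace of the covariance. The function names and synthetic inputs are hypothetical.

```python
import numpy as np

def poisson_noise_level(recons):
    """Trace of the sample covariance of the reconstructions (rows = realizations)."""
    return float(np.sum(np.var(recons, axis=0, ddof=1)))

def modeling_error_level(recons_true, recons_noisy):
    """Squared difference summed over pixels, averaged over realizations."""
    diff = recons_noisy - recons_true
    return float(np.mean(np.sum(diff**2, axis=1)))

rng = np.random.default_rng(1)
# Synthetic stand-ins for 50 reconstructions of a 16-pixel image.
recons_true = 5.0 + 0.1 * rng.standard_normal((50, 16))
recons_noisy = recons_true + 0.2     # constant offset as a stand-in for artifacts

noise = poisson_noise_level(recons_true)
mse = modeling_error_level(recons_true, recons_noisy)
print(noise, mse)
```

Summing per-pixel variances avoids forming the full pixel-by-pixel covariance matrix, which would be very large for realistic image sizes.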

Table 1.

Comparison of the variations caused by Poisson noise and system modeling errors. (See text for details.)

| Total Counts | FWHM (β) | Poisson Noise | Measured MSE | Predicted MSE |
|---|---|---|---|---|
| 0.1M | 2.5 mm (100) | 0.2068 | 0.1378 | 0.1254 |
| 0.1M | 1.7 mm (10) | 1.7722 | 1.1930 | 0.8883 |
| 0.1M | 1.4 mm (1) | 8.2431 | 6.7784 | 3.5624 |
| 1M | 2.5 mm (100) | 0.0211 | 0.0064 | 0.0063 |
| 1M | 1.7 mm (10) | 0.1988 | 0.0320 | 0.0320 |
| 1M | 1.4 mm (1) | 1.1999 | 0.4436 | 0.3393 |
| 10M | 2.5 mm (100) | 0.0021 | 0.0004 | 0.0004 |
| 10M | 1.7 mm (10) | 0.0201 | 0.0014 | 0.0014 |
| 10M | 1.4 mm (1) | 0.1289 | 0.0134 | 0.0140 |

The results show that when the count level is high, the artifacts caused by system modeling error are small compared to Poisson noise. However, as the count level decreases, the artifacts caused by the modeling error increase at a faster rate than the Poisson noise. In low-noise reconstructions (high count or strong regularization), the theoretically predicted MSEs match the measured results very well. As the noise level increases, the theoretical prediction breaks down. This is a limitation of the first-order Taylor series approximation that is used in the theoretical derivation.

To validate equation (23), we estimate the value of a by calculating the ratio of the second moments of and . The maximum ratio is 1.05 and the average ratio is 0.22. All the results in Table 1 satisfy the worst-case scenario a = 1.05. For high count data it is safe to use the average ratio instead of the maximum ratio.

3.3. Validation of Condition (27)

We generate noisy normalization factors using white Gaussian noise with unit mean and variance of 0.0025 (equivalent to the counting statistics of 400 events). We reconstruct 50 independent data sets with the ideal normalization factor and 50 noisy normalization factors (no other error is introduced to the system matrix). From these reconstructions we calculate the variations caused by Poisson noise and the variations caused by the noisy normalization factors.

Table 2 shows that the effect of noisy normalization factors (or attenuation correction factors) is independent of count level of the original data. When count level is at 0.1M (the maximum value in the sinogram is about 20), the effect of the noisy normalization factors is insignificant. However, when count level is at 10M (the maximum value in the sinogram is about 2000), the effect of the noisy normalization factors becomes greater than that of Poisson noise. This follows exactly the theoretical prediction in (27).
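The count levels quoted above can be related to the normalization noise with a short calculation. The sketch reads the criterion as a comparison of relative variances: a normalization factor measured with 400 events has relative variance 1/400 = 0.0025, while a Poisson sinogram bin with mean count n has relative variance 1/n. This reading and the function name are assumptions of this sketch.

```python
def normalization_noise_ratio(norm_rel_var, sinogram_counts):
    """Relative variance of a correction factor divided by the relative
    Poisson variance (1 / mean counts) of the data bin it applies to."""
    return norm_rel_var / (1.0 / sinogram_counts)

norm_rel_var = 1.0 / 400                                     # 400-event counting statistics
ratio_low = normalization_noise_ratio(norm_rel_var, 20)      # 0.1M events, bin maximum about 20
ratio_high = normalization_noise_ratio(norm_rel_var, 2000)   # 10M events, bin maximum about 2000
print(ratio_low, ratio_high)
```

For the brightest bins, the ratio is about 0.05 at 0.1M events and about 5 at 10M events. This agrees with the observation that normalization noise is negligible at low counts but exceeds the Poisson noise at high counts.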

Table 2.

Comparison of the variations caused by Poisson noise and noise in normalization factors. (See text for details.)

| Total Counts | FWHM (β) | Poisson Noise | Measured MSE | Predicted MSE |
|---|---|---|---|---|
| 0.1M | 2.5 mm (100) | 0.2130 | 0.0056 | 0.0056 |
| 0.1M | 1.7 mm (10) | 1.8158 | 0.0505 | 0.0519 |
| 0.1M | 1.4 mm (1) | 8.3067 | 0.2806 | 0.3199 |
| 1M | 2.5 mm (100) | 0.0214 | 0.0055 | 0.0055 |
| 1M | 1.7 mm (10) | 0.2015 | 0.0541 | 0.0545 |
| 1M | 1.4 mm (1) | 1.2078 | 0.3331 | 0.3511 |
| 10M | 2.5 mm (100) | 0.0021 | 0.0055 | 0.0055 |
| 10M | 1.7 mm (10) | 0.0201 | 0.0541 | 0.0542 |
| 10M | 1.4 mm (1) | 0.1296 | 0.3426 | 0.3546 |

4. Conclusions and Discussion

We have derived an analytical formula for calculating the error propagation from the system matrix to the final reconstructed image in iterative reconstruction. The theoretical expression has been used to determine the required accuracy of the system matrix in emission reconstruction. Computer simulation results show that the theoretical predictions match the measured results reasonably well in low-noise situations. The residual errors in the theoretical prediction are caused mainly by the nonlinearity of the reconstruction algorithm, because we use the first-order Taylor series approximation. Using higher-order approximations would improve the theoretical predictions, but the expressions quickly become more complicated.

Acknowledgments

The authors would like to thank Dr. H. H. Barrett and the anonymous reviewers for their insightful comments.

This work is supported in part by the National Institutes of Health under grant no. R01 EB00194 and by the Director, Office of Science, Office of Biological and Environmental Research, Medical Sciences Division, of the U.S. Department of Energy under contract no. DE-AC03-76SF00098.

Footnotes

‡ The word “true” here means that we use as a reference. may contain modeling error as well, since a real imaging system is a continuous-to-discrete transform and its response can never be known exactly.

References

- Bai C, Zeng GL, Gullberg GT. A slice-by-slice blurring model and kernel evaluation using Klein-Nishina formula for 3D scatter compensation in parallel and converging beam SPECT. Physics in Medicine and Biology. 2000;45:1275–1307. doi: 10.1088/0031-9155/45/5/314. [DOI] [PubMed] [Google Scholar]

- Bai B, Li Q, Holdsworth CH, Asma E, Tai YC, Chatziioannou A, Leahy RM. Model-based normalization for iterative 3D PET image reconstruction. Physics in Medicine and Biology. 2002;47(15):2773–2784. doi: 10.1088/0031-9155/47/15/316. [DOI] [PubMed] [Google Scholar]

- Barrett HH and Myers KJ, (2003). Foundations of Image Science. John Wiley & Sons, Inc.

- Beekman FJ, den Harder JM, Viergever MA, van Rijk PP. SPECT scatter modelling in non-uniform attenuating objects. Physics in Medicine and Biology. 1997;42:1133–1142. doi: 10.1088/0031-9155/42/6/010. [DOI] [PubMed] [Google Scholar]

- Beekman FJ, de Jong HWAM, van Geloven S. Efficient fully 3-D iterative SPECT reconstruction with Monte Carlo-based scatter compensation. IEEE Transactions on Medical Imaging. 2002;21(8):867–877. doi: 10.1109/TMI.2002.803130. [DOI] [PubMed] [Google Scholar]

- Bouman C, Sauer K. A unified approach to statistical tomography using coordinate descent optimization. IEEE Transactions on Image Processing. 1996;5(3):480–492. doi: 10.1109/83.491321. [DOI] [PubMed] [Google Scholar]

- Brankov J, Djordjevic J, Galatsanos NP, and Wernick MN. PET image reconstruction with allowance for errors in the system model. In Proceedings of IEEE Nuclear Science Symposium and Medical Imaging Conference, volume 3, pages 1163 – 1167, 1999.

- Buonocore MH, Brody WR, Macovski A. A natural pixel decomposition for two-dimensional image reconstruction. IEEE Transactions on Biomedical Engineering. 1981;28:69–78. doi: 10.1109/TBME.1981.324781. [DOI] [PubMed] [Google Scholar]

- Fessler JA. Penalized weighted least-squares image reconstruction for PET. IEEE Transactions on Medical Imaging. 1994;13:290–300. doi: 10.1109/42.293921. [DOI] [PubMed] [Google Scholar]

- Fessler JA. Mean and variance of implicitly defined biased estimators (such as penalized maximum likelihood): Applications to tomography. IEEE Transactions on Image Processing. 1996;5(3):493–506. doi: 10.1109/83.491322. [DOI] [PubMed] [Google Scholar]

- Fessler JA, Hero AO. Penalized maximum-likelihood image reconstruction using space-alternating generalized EM algorithms. IEEE Transactions on Image Processing. 1995;4:1417–1429. doi: 10.1109/83.465106. [DOI] [PubMed] [Google Scholar]

- Ficaro EP, Corbett JR. Advances in quantitative perfusion spect imaging. J Nucl Cardiol. 2004;11:62–70. doi: 10.1016/j.nuclcard.2003.10.007. [DOI] [PubMed] [Google Scholar]

- Ficaro EP, Fessler JA, Shreve PD, Kritzman JN, Rose PA, Corbett JR. Simultaneous transmission/emission myocardial perfusion tomography : Diagnostic accuracy of attenuation-corrected 99mTc-Sestamibi single-photon emission computed tomography. Circulation. 1996;93(3):463–473. doi: 10.1161/01.cir.93.3.463. [DOI] [PubMed] [Google Scholar]

- Floyd CS, Jaszczak RJ. Inverse Monte Carlo: A unified reconstruction algorithm for SPECT. IEEE Transactions on Nuclear Science. 1985;NS-32:779–785. [Google Scholar]

- Formiconi AR, Pupi A, Passeri A. Compensation of spatial system response in SPECT with conjugate gradient reconstruction technique. Physics in Medicine and Biology. 1989;34(1):69–84. doi: 10.1088/0031-9155/34/1/007. [DOI] [PubMed] [Google Scholar]

- Frey EC, Tsui BMW. Parametrization of the scatter response function in SPECT imaging using Monte Carlo simulation. IEEE Transactions on Nuclear Science. 1990;37:1308–1315.

- Gilland DR, Jaszczak RJ, Wang H, Turkington TG, Greer KL, Coleman RE. A 3D model of non-uniform attenuation and detector response for efficient iterative reconstruction in SPECT. Physics in Medicine and Biology. 1994;39:547–561. doi: 10.1088/0031-9155/39/3/017.

- Golub GH, Van Loan CF. Matrix Computations. 3rd ed. Johns Hopkins University Press; 1996.

- Huesman RH, Klein GJ, Moses WW, Qi J, Reutter BW, Virador PRG. List mode maximum likelihood reconstruction applied to positron emission mammography with irregular sampling. IEEE Transactions on Medical Imaging. 2000;19:532–537. doi: 10.1109/42.870263.

- Hutton BF. Cardiac single-photon emission tomography: is attenuation correction enough? Eur J Nucl Med. 1997;24:713–715. doi: 10.1007/BF00879656.

- Hutton BF, Osiecki A, Meikle SR. Transmission-based scatter correction of 180 degree myocardial single-photon emission tomographic studies. Eur J Nucl Med. 1996;23:1300–1308. doi: 10.1007/BF01367584.

- Jaszczak RJ, Greer KL, Floyd CE, Harris CC, Coleman RE. Improved SPECT quantification using compensation for scattered photons. Journal of Nuclear Medicine. 1984;25:893–899.

- Johnson C, Yan Y, Carson R, Martino R, Daube-Witherspoon M. A system for the 3D reconstruction of retracted-septa PET data using the EM algorithm. IEEE Transactions on Nuclear Science. 1995;42(4):1223–1227.

- Kadrmas DJ, DiBella EVR, Huesman RH, Gullberg GT. Analytical propagation of errors in dynamic SPECT: estimators, degrading factors, bias and noise. Physics in Medicine and Biology. 1999;44(8):1997–2014. doi: 10.1088/0031-9155/44/8/311.

- King MA, Tsui BMW, Pan T. Attenuation compensation for cardiac single-photon emission computed tomographic imaging: Part I. impact of attenuation and methods of estimating attenuation maps. J Nucl Cardiol. 1995;2:513–524. doi: 10.1016/s1071-3581(05)80044-3.

- King MA, Tsui BMW, Pan T, Glick SJ, Soares E. Attenuation compensation for cardiac single-photon emission computed tomographic imaging: Part II. attenuation compensation algorithms. J Nucl Cardiol. 1996;3:55–63. doi: 10.1016/s1071-3581(96)90024-0.

- King MA, Xia W, de Vries DJ, Pan TS, Villegas BJ, Dahlberg S, Tsui BMW, Ljungberg MH, Morgan HT. A Monte Carlo investigation of artifacts caused by liver uptake in SPECT perfusion imaging with Tc-99m-labelled agents. J Nucl Cardiol. 1996;3:18–29. doi: 10.1016/s1071-3581(96)90020-3.

- Kudrolli H, Worstell W, Zavarzin V. SS3D-fast fully 3D PET iterative reconstruction using stochastic sampling. IEEE Transactions on Nuclear Science. 2002;49(1):124–130.

- Laurette I, Zeng GL, Welch A, Christian PE, Gullberg GT. A three-dimensional ray-driven attenuation, scatter and geometric response correction technique for SPECT in inhomogeneous media. Physics in Medicine and Biology. 2000;45:3459–3480. doi: 10.1088/0031-9155/45/11/325.

- Luenberger D. Linear and Nonlinear Programming. 2nd ed. Addison-Wesley Publishing Company; 1984.

- Matej S, Lewitt R. Practical considerations for 3-D image reconstruction using spherically symmetric volume elements. IEEE Transactions on Medical Imaging. 1996;15(1):68–78. doi: 10.1109/42.481442.

- Meikle SR, Hutton BF, Bailey DL. A transmission-dependent method for scatter correction in SPECT. Journal of Nuclear Medicine. 1994;35:360–367.

- Mesarovic VZ, Galatsanos NP, Katsaggelos AK. Regularized constrained total least squares image restoration. IEEE Transactions on Image Processing. 1995;4(8):1096–1108. doi: 10.1109/83.403444.

- Mesarovic VZ, Galatsanos NP, Wernick MN. Iterative maximum a posteriori (MAP) restoration from partially-known blur for tomographic reconstruction. In: Proceedings of IEEE International Conference on Image Processing, pages 512–515, 1995.

- Mesarovic VZ, Galatsanos NP, Wernick MN. Iterative LMMSE restoration of partially-known blurs. Journal of the Optical Society of America A. 2000;17:711–723. doi: 10.1364/josaa.17.000711.

- Metz CE, Atkins FB, Beck RN. The geometric transfer function component for scintillation camera collimators with straight parallel holes. Physics in Medicine and Biology. 1980;25(6):1059–1070. doi: 10.1088/0031-9155/25/6/003.

- Mumcuoglu E, Leahy R, Cherry S, Zhou Z. Fast gradient-based methods for Bayesian reconstruction of transmission and emission PET images. IEEE Transactions on Medical Imaging. 1994;13(4):687–701. doi: 10.1109/42.363099.

- Mumcuoglu E, Leahy R, Cherry S, Hoffman E. Accurate geometric and physical response modeling for statistical image reconstruction in high resolution PET. In: Proceedings of IEEE Nuclear Science Symposium and Medical Imaging Conference, pages 1569–1573, Anaheim, CA, 1996.

- Qi J, Huesman RH. Propagation of errors from the sensitivity image in list mode reconstruction. IEEE Transactions on Medical Imaging. 2004;23(9):1094–1099. doi: 10.1109/TMI.2004.829333.

- Qi J, Leahy RM, Cherry SR, Chatziioannou A, Farquhar TH. High resolution 3D Bayesian image reconstruction using the microPET small animal scanner. Physics in Medicine and Biology. 1998;43(4):1001–1013. doi: 10.1088/0031-9155/43/4/027.

- Tekalp AM, Sezan MI. Quantitative analysis of artifacts in linear space invariant image restoration. Multidimensional Systems and Signal Processing. 1990;1:143–177.

- Tsui BMW, Frey EC, Zhao X, Lalush DS, Johnston RE, McCartney WH. The importance and implementation of accurate 3D compensation methods for quantitative SPECT. Physics in Medicine and Biology. 1994;39(3):509–530. doi: 10.1088/0031-9155/39/3/015.

- Tsui BMW, Zhao XD, Gregoriou GK, Lalush DS, Frey EC, Johnston RE, McCartney WH. Quantitative cardiac SPECT reconstruction with reduced image degradation due to patient anatomy. IEEE Transactions on Nuclear Science. 1994;41:2838–2844.

- Tsui BMW, Frey EC, LaCroix KJ, Lalush DS, McCartney WH, King MA, Gullberg GT. Quantitative myocardial SPECT. J Nucl Cardiol. 1998;5:507–522. doi: 10.1016/s1071-3581(98)90182-9.

- Veklerov E, Llacer J, Hoffman EJ. MLE reconstruction of a brain phantom using a Monte Carlo transition matrix and a statistical stopping rule. IEEE Transactions on Nuclear Science. 1988;35:603–607.

- Welch A, Gullberg GT. Implementation of a model-based nonuniform scatter correction scheme for SPECT. IEEE Transactions on Medical Imaging. 1998;16:717–726. doi: 10.1109/42.650869.

- Wells RG, Celler A, Harrop R. Experimental validation of an analytical method of calculating SPECT projection data. IEEE Transactions on Nuclear Science. 1997;44:1283–1290.

- Zhu W, Wang Y, Yao Y, Chang J, Graber HL, Barbour RL. Iterative total least-squares image reconstruction algorithm for optical tomography by the conjugate gradient method. Journal of the Optical Society of America A. 1997;14:799–807. doi: 10.1364/josaa.14.000799.

- Zhu W, Wang Y, Zhang J. Total least-squares reconstruction with wavelets for optical tomography. Journal of the Optical Society of America A. 1998;15:2639–2650. doi: 10.1364/josaa.15.002639.

- Zhu W, Wang Y, Galatsanos N, Zhang J. An efficient solution to the regularized total least squares approach for non-convolutional linear inverse problems. IEEE Transactions on Image Processing. 1999;8(11):1657–1661. doi: 10.1109/83.799895.