Abstract

When choosing between delayed or uncertain outcomes, individuals discount the value of such outcomes on the basis of the expected time to or the likelihood of their occurrence. In an integrative review of the expanding experimental literature on discounting, the authors show that although the same form of hyperbola-like function describes discounting of both delayed and probabilistic outcomes, a variety of recent findings are inconsistent with a single-process account. The authors also review studies that compare discounting in different populations and discuss the theoretical and practical implications of the findings. The present effort illustrates the value of studying choice involving both delayed and probabilistic outcomes within a general discounting framework that uses similar experimental procedures and a common analytical approach.

Choice is relatively predictable when the alternatives differ on only one dimension. For example, if individuals are offered a choice between two rewards that differ only in amount, they generally choose the larger rather than the smaller reward. Similarly, if offered a choice between two rewards that differ only in delay, individuals tend to choose the reward available sooner rather than the one available later, and if offered a choice between two alternatives that differ only in probability, they tend to choose the more certain reward. These same general principles, and a complementary set of principles for negative outcomes (e.g., smaller punishments will be chosen over larger ones), apply both to humans and other animals. These behavioral tendencies obviously make both economic and evolutionary sense.

Problems arise, however, when choice options differ on more than one dimension—for example, when an individual must choose between a smaller reward available sooner and a larger reward available later. It is also unclear, in general, which alternative an individual would (or should) select when choosing between a smaller, more certain reward and a larger, less certain one or between a less certain reward available sooner and a more certain, but more delayed, reward. These problems are only compounded when rewards differ on all three dimensions (i.e., amount, delay, and probability). The central issue is how individuals make trade-offs among their preferences on these dimensions (Keeney & Raiffa, 1993).

This issue is not only of theoretical interest; it also has implications for everyday decision making, which often involves outcomes that differ on multiple dimensions. Examples of such decisions include deciding whether to purchase a less expensive item that can be enjoyed now or to save for a more expensive one; whether to choose a risky investment that potentially could pay off at a high rate or one that pays a low but guaranteed rate of return; and whether to buy a cheaper, less durable item or spend more on something longer lasting. Although more research has been conducted on choices involving positive outcomes, many important examples also involve negative outcomes (e.g., behaviors with possible delayed health risks).

Choices involving delayed and probabilistic outcomes may be viewed from the perspective of discounting. This perspective assumes that the subjective value of a reward is increasingly discounted from its nominal amount as the delay until or the odds against receiving the reward increase and that individuals choose the reward with the higher (discounted) subjective value (e.g., Ainslie, 1992; Green & Myerson, 1993; Kagel, Battalio, & Green, 1995; Loewenstein & Elster, 1992; Rachlin, 1989). The number of experimental studies that approach choice from the discounting perspective has increased rapidly in recent years. This growth appears to reflect the fact that the concept of discounting provides a potentially unifying theoretical approach that may be applied to diverse issues in psychology. This approach may help in addressing issues that are of fundamental theoretical interest, such as the extent to which decision making with delayed and probabilistic outcomes involves the same underlying processes (e.g., Green, Myerson, & Ostaszewski, 1999a; Myerson, Green, Hanson, Holt, & Estle, 2003; Prelec & Loewenstein, 1991; Rachlin, Raineri, & Cross, 1991). In addition, building on the seminal contributions of Ainslie (1975, 1992, 2001), the discounting approach may be applied to topics of general psychological, even clinical, concern, such as self-control, impulsivity, and risk taking (e.g., Bickel & Marsch, 2001; Green & Myerson, 1993; Heyman, 1996; Logue, 1988; Rachlin, 1995) as well as to more applied topics, such as administrative and career-related decision making (Logue & Anderson, 2001; Schoenfelder & Hantula, 2003).

The present effort provides an integrative review of the rapidly expanding psychological literature on both temporal and probability discounting (for a review of the temporal discounting literature from an economic perspective, see Frederick, Loewenstein, & O’Donoghue, 2002; for discussion of the application of discounting to consumption, savings, asset allocation, and other economic issues concerning aggregate behavior, see Angeletos, Laibson, Repetto, Tobacman, & Weinberg, 2001; Laibson, 1997; for a review of the literature on choice under risk, see Starmer, 2000). We begin by considering choice between delayed rewards and the role of discounting in preference reversals. We then consider alternative mathematical descriptions of the relation between subjective value and delay. We go on to extend this work to choice involving probabilistic rewards and show that the same form of mathematical discounting functions can be used to describe behavior in such situations.

The fact that discounting functions of the same form describe choice involving delayed and probabilistic rewards raises the question of whether temporal and probability discounting both reflect the same underlying process (Green & Myerson, 1996; Prelec & Loewenstein, 1991; Rachlin, Logue, Gibbon, & Frankel, 1986; Rachlin, Siegel, & Cross, 1994; Stevenson, 1986), and we consider evidence bearing on this issue. We do so from the perspective of a general discounting framework that emphasizes the value of using similar experimental procedures and a common analytical approach involving the same form of mathematical discounting function. As a consequence of this similarity in experimental and analytical approaches, we are better able to evaluate claims regarding the adequacy of a single-process account of temporal and probability discounting.

In subsequent sections, we discuss studies that have compared discounting in different populations (e.g., individuals from different cultures; substance abusers vs. controls) as well as data bearing on the relationship between behavioral measures of discounting and psychometric measures of impulsivity. Finally, we discuss areas in which further research is called for, such as the need to study behavior in more complex choice situations in which the outcomes have probabilistic and delayed as well as positive and negative aspects.

Discounting and Choice Between Delayed Rewards

The classic example of discounting involves choice between a larger and a smaller reward, where the smaller reward is available sooner than the larger one. Although an individual may choose the larger, later reward when both alternatives are well in the future, with the passage of time, preference may reverse so that the individual now chooses the smaller, sooner reward. For example, one might prefer to receive $100 right now rather than $120 one month from now. Nevertheless, if the choice were between $100 in 1 year and $120 in 13 months, then one might choose the $120. Notice that preference reverses as an equal amount of time is added to the delay until both outcomes. Similarly, a student might well watch a favorite movie on television Friday evening rather than work on an extra-credit assignment due the following week that will raise the student’s course grade. This might happen even though several days earlier the student had indicated a preference for working on the assignment Friday night rather than watching the movie.

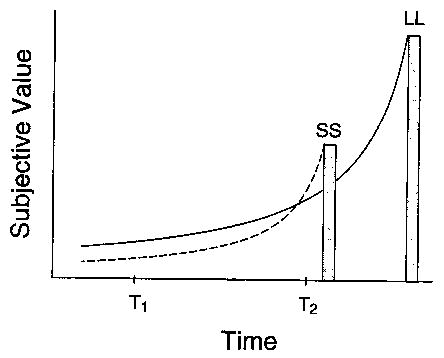

Such preference reversals have been diagrammed as shown in Figure 1. The vertical axis represents the subjective, or discounted, value of a future reward, and the horizontal axis represents time. The heights of the bars represent the actual reward amounts. The curves show how their subjective values might change as a function of the time at which the rewards were evaluated. Such curves are termed discounting functions because they indicate how the value of a future reward is discounted when it is delayed. According to the representation in Figure 1, if one were offered a choice between the smaller, sooner and the larger, later rewards at Time 1, one would choose the larger, later reward, whereas if one were offered a choice between the same rewards at Time 2, one would choose the smaller, sooner reward.

Figure 1.

Choice between a smaller reward, available sooner (SS), and a larger reward, available later (LL). The curved lines represent change in subjective value as a function of time. The heights of the bars represent the actual reward amounts. T1 = Time 1; T2 = Time 2.

According to the discounting account, preference reversals occur because the subjective value of smaller, sooner rewards increases more than that of larger, later rewards when there is an equivalent decrease in the delays to the two rewards (as shown in Figure 1). The preference reversals described previously seem intuitively correct, but do they actually occur? If so, they violate the stationarity assumption that underlies the discounted utility model of classical economic theory (i.e., the assumption that if A is preferred to B at one point in time, it will be preferred at all other points in time; Koopmans, Diamond, & Williamson, 1964; see Frederick et al., 2002, for a history of the discounted utility model and a review of the many violations of the model’s assumptions). The results of studies with both humans and nonhuman animals clearly violate the stationarity assumption and are consistent with the discounting account.

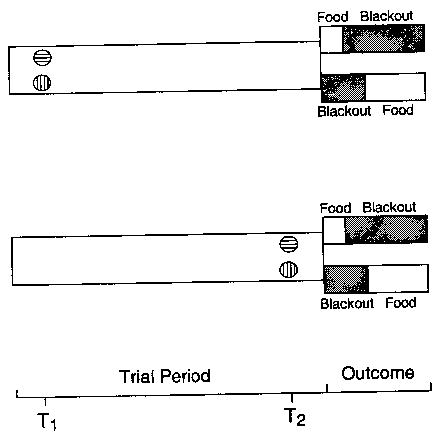

For example, in an earlier study by Green, Fisher, Perlow, and Sherman (1981), pigeons were studied with a procedure like that shown schematically in Figure 2. The pigeons chose between two alternatives, a smaller, sooner reward and a larger, later reward, by pecking at one of two illuminated response keys. In different conditions, the choice was presented at different points in time within the 30-s trial period. For example, in one condition, the choice was presented 28 s before the outcome period (see the top diagram in Figure 2), whereas in another condition, the choice was presented 2 s before the outcome period (see the bottom diagram in Figure 2).

Figure 2.

Experimental procedure in Green et al. (1981). The top diagram represents a trial in which pigeons made a choice at Time 1 (T1), 28 s before the outcome period, between a smaller, sooner reward and a larger, later reward by pecking one of two keys (indicated by the horizontally and vertically striped circles, respectively). The bottom diagram represents a trial in which pigeons made their choice at Time 2 (T2), 2 s before the outcome period.

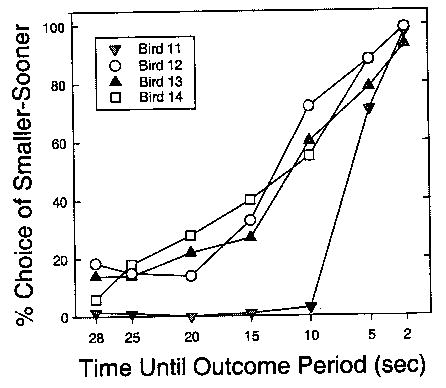

The results of the Green et al. (1981) experiment are shown in Figure 3. As may be seen, when the choice was offered further in advance of the outcome period, analogous to Time 1 in Figures 1 and 2, each pigeon strongly preferred the larger, later reward. When the choice was offered shortly before the outcome period, analogous to Time 2, each pigeon showed a reversal in preference, now strongly preferring the smaller, sooner reward.

Figure 3.

Percentage of choice of the smaller, sooner reward plotted as a function of the time from the choice until the outcome period. Data are reprinted from Behaviour Analysis Letters, 1, L. Green, E. B. Fisher Jr., S. Perlow, and L. Sherman, “Preference Reversal and Self-Control: Choice as a Function of Reward Amount and Delay,” pp. 43–51, 1981, with permission from Elsevier.

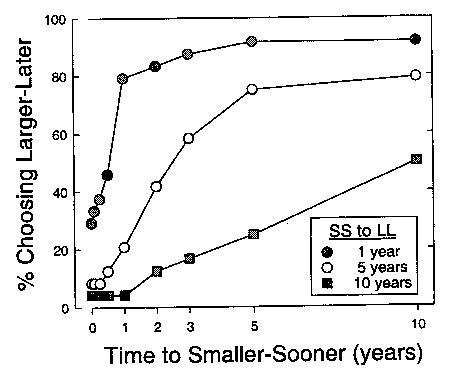

Preference reversals were also observed in humans by Green, Fristoe, and Myerson (1994), who used a procedure analogous to that used in the pigeon study but with hypothetical monetary rewards. In one condition, for example, people were asked whether they would prefer $20 now (smaller, sooner) or $50 in 1 year (larger, later). Under these circumstances, most people said they would prefer $20 now. Equivalent delays then were added to both alternatives, and preferences were reassessed. This procedure was repeated adding further delays so that, for example, people were asked whether they would prefer $20 in 1 month or $50 in 1 year plus 1 month and also whether they would prefer $20 in 1 year or $50 in 2 years, and so on. As may be seen in Figure 4, as the delay added to the two alternatives was increased while the interval between the two alternatives (smaller, sooner to larger, later) was held constant (e.g., at 1 year), the percentage of participants who reversed their preference and chose the later, $50 reward increased, as predicted by the discounting account. A similar pattern of increasing preference for the larger, more delayed reward was obtained with other time intervals between the smaller and larger rewards (e.g., 5 years and 10 years; see Figure 4) and using other pairs of amounts (i.e., $100 vs. $250 and $500 vs. $1,250).

Figure 4.

Percentage of participants choosing the larger, later (LL) reward plotted as a function of the time until the smaller, sooner (SS) alternative. Data represent results from three conditions, each with a different interval between the SS and the LL reward. Data are from “Temporal Discounting and Preference Reversals in Choice Between Delayed Outcomes,” by L. Green, N. Fristoe, and J. Myerson, 1994, Psychonomic Bulletin & Review, 1, p. 386. Copyright 1994 by the Psychonomic Society. Reprinted with permission.

In addition to the results obtained with the type of procedure just described, studies of both human and nonhuman subjects have obtained evidence of preference reversals with other types of procedures (Ainslie & Haendel, 1983; Ainslie & Herrnstein, 1981; Green & Estle, 2003; Kirby & Herrnstein, 1995; Mazur, 1987; Rachlin & Green, 1972; Rodriguez & Logue, 1988). According to the discounting account represented in Figure 1, preference reversals occur because the subjective value of the larger, later reward decreases more slowly as its receipt becomes more delayed than does the subjective value of the smaller, sooner reward. However, this description of the discounting of smaller and larger rewards does not greatly constrain the form of the discounting function, even though the shape of this function is assumed to underlie the preference reversal phenomenon. Recent efforts to determine the actual form of the temporal discounting function have been motivated by a desire to better understand preference reversals, as well as by the fact that different functional forms have different theoretical implications for the discounting process.

Mathematical Descriptions of Temporal Discounting

Economists and researchers in decision analysis typically assume that temporal discounting is exponential (i.e., subjective value decreases by a constant percentage per unit time). An exponential discounting function has the form

$$V = A e^{-bD} \tag{1}$$

where V is the subjective value of a future reward, A is its amount, D is the delay to its receipt, and b is a parameter that governs the rate of discounting. One of the common criticisms of Equation 1 is that it does not, by itself, predict preference reversals. Indeed, preference reversals violate the stationarity assumption that is part of standard economic theory (e.g., Koopmans, 1960; Koopmans et al., 1964). As Green and Myerson (1993) noted, however, Equation 1 does predict preference reversals if the discounting rate for a larger amount is assumed to be lower than the discounting rate for a smaller amount.
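The point about Equation 1 can be checked numerically. The minimal sketch below uses the $100-now versus $120-in-1-month example from the preceding section; the rate values (per month) are hypothetical, chosen only for illustration. With a single rate b, adding a common delay multiplies both values by the same factor, so preference cannot change; with a lower rate for the larger amount, it can.

```python
import math

def exponential_value(amount, delay, b):
    """Equation 1: V = A * exp(-b * D)."""
    return amount * math.exp(-b * delay)

def prefers_larger_later(ss_amount, ll_amount, front_delay, gap, b_ss, b_ll):
    """True if the larger, later (LL) reward has the higher discounted value.

    front_delay: delay until the smaller, sooner (SS) reward.
    gap: additional delay of the LL reward beyond the SS reward.
    b_ss, b_ll: rate parameters applied to the SS and LL amounts.
    """
    v_ss = exponential_value(ss_amount, front_delay, b_ss)
    v_ll = exponential_value(ll_amount, front_delay + gap, b_ll)
    return v_ll > v_ss

# Hypothetical rates per month (not estimates from any study).
# Same b for both amounts: the ratio of values is constant, so no reversal.
same_b = [prefers_larger_later(100, 120, d, 1, 0.3, 0.3) for d in (0, 12)]
# Lower b for the larger amount: preference reverses as the common delay grows.
amount_dependent = [prefers_larger_later(100, 120, d, 1, 0.3, 0.25) for d in (0, 12)]
print(same_b, amount_dependent)  # [False, False] [False, True]
```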

Alternatives to exponential discounting have been proposed by psychologists, behavioral ecologists, and behavioral economists. One major alternative proposal is that the discounting function is a hyperbola (e.g., Mazur, 1987):

$$V = \frac{A}{1 + kD} \tag{2}$$

where k is a parameter governing the rate of decrease in value and, as in Equation 1, V is the subjective value of a future reward, A is its amount, and D is the delay until its receipt.
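Unlike Equation 1 with a single rate, Equation 2 predicts the preference reversal described earlier even when the same k applies to both amounts. A minimal sketch, assuming a hypothetical k of 0.5 per month:

```python
def hyperbolic_value(amount, delay, k):
    """Equation 2: V = A / (1 + k * D)."""
    return amount / (1 + k * delay)

K = 0.5  # hypothetical discounting rate per month

def preferred(front_delay):
    """Choice between $100 after front_delay months and $120 one month later."""
    v_ss = hyperbolic_value(100, front_delay, K)
    v_ll = hyperbolic_value(120, front_delay + 1, K)
    return "$120 later" if v_ll > v_ss else "$100 sooner"

for front in (0, 12):
    print(front, preferred(front))
# 0 $100 sooner    (100 vs. 120 / 1.5 = 80)
# 12 $120 later    (100 / 7 = 14.29 vs. 120 / 7.5 = 16)
```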

A hyperbola-like discounting function in which the denominator of the hyperbola is raised to a power, s, has also been proposed (Green, Fry, & Myerson, 1994):

$$V = \frac{A}{(1 + kD)^{s}} \tag{3}$$

The parameter s may represent the nonlinear scaling of amount and/or time and is generally equal to or less than 1.0 (Myerson & Green, 1995). Notice that in the special case when s equals 1.0, Equation 3 reduces to Equation 2. Loewenstein and Prelec (1992) have proposed a discounting function that is similar in form to Equation 3 except that in their formulation the exponent is assumed to be inversely proportional to the rate parameter.
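A short sketch of Equation 3 makes the special case explicit. The amount, delay, and k below are hypothetical. With s = 1 the function coincides with Equation 2; with s below 1 and the same k, the reward at a given delay retains more of its value.

```python
import math

def hyperboloid_value(amount, delay, k, s=1.0):
    """Equation 3: V = A / (1 + k * D) ** s."""
    return amount / (1 + k * delay) ** s

def hyperbolic_value(amount, delay, k):
    """Equation 2: V = A / (1 + k * D)."""
    return amount / (1 + k * delay)

# Hypothetical case: $1,000 delayed 12 months, k = 0.1 per month.
same_as_hyperbola = math.isclose(hyperboloid_value(1000, 12, 0.1, s=1.0),
                                 hyperbolic_value(1000, 12, 0.1))
flatter = hyperboloid_value(1000, 12, 0.1, s=0.5)
print(same_as_hyperbola, round(flatter, 1), round(hyperbolic_value(1000, 12, 0.1), 1))
```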

Why is it important to determine what form of discounting function best describes the data? One reason is that different equations instantiate different assumptions regarding some fundamental aspects of the choice process. For example, if Equation 1 were to provide the best description, such a finding would be consistent with the assumptions that waiting for a delayed reward involves risk (Kagel, Green, & Caraco, 1986) and that with each additional unit of waiting time there is a constant probability that something will happen to prevent the reward’s receipt. Preference reversals might then be explained by assuming further that waiting for smaller delayed rewards is riskier than waiting for larger rewards (i.e., if the rate parameter of the exponential, b, decreased with amount of reward; Green & Myerson, 1993). Another account of exponential discounting assumes that there is an “opportunity cost” in waiting for delayed rewards (Keeney & Raiffa, 1993).
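The constant-risk interpretation can be written out as a short derivation, assuming (for illustration; the symbol p is introduced here) that each unit of waiting carries the same probability p, with 0 < p < 1, that the reward is lost:

$$
\begin{aligned}
\Pr(\text{reward still available after } D \text{ units}) &= (1-p)^{D} = e^{D\ln(1-p)} = e^{-bD}, \qquad b = -\ln(1-p) > 0,\\
\text{expected value} &= A\,e^{-bD},
\end{aligned}
$$

which has the form of Equation 1. On this reading, a smaller b for larger amounts corresponds to a smaller per-unit risk p when waiting for larger rewards.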

Alternatively, if Equation 2 or 3 were to provide the best description, such a finding would be consistent with the view that choices between rewards available at different times are really choices between different rates of reward. As Myerson and Green (1995) showed, if one assumes that value is directly proportional to rate of reward (Rachlin, 1971), then discounting will have the form of Equation 2.

This may be shown as follows. We assume, first, that $V = bA/t$, where $b$ is the proportionality constant and $t$ is the actual delay, and, second, that even an immediate reward involves some minimal delay, $m$, such that $t = D + m$, where $D$ is the stated or programmed delay. These assumptions may be combined to yield $V = bA/(D + m)$. Next, consider the case in which there are two alternatives: an immediate reward of amount $A_i$, for which the actual delay is $t_i = m$, and a delayed reward of amount $A_d$, for which $t_d = D + m$ (the subscripts $i$ and $d$ refer to the immediate and delayed rewards, respectively). Setting $V_i = V_d$ gives $bA_i/m = bA_d/(D + m)$, so the amount of an immediate reward that is judged equal in value to the delayed reward is $A_i = A_d/(1 + D/m)$. Note that this equation is equivalent to Equation 2 with $k = 1/m$ (for a more detailed derivation, see Myerson & Green, 1995).
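The derivation can be checked numerically. In this minimal sketch the minimal delay m, the delayed amount, and the delay are hypothetical values; the proportionality constant cancels, so it is set to 1.

```python
import math

def rate_value(amount, actual_delay, b=1.0):
    """Value proportional to reward rate: V = b * A / t."""
    return b * amount / actual_delay

def indifference_amount(a_delayed, d, m):
    """Closed form from the derivation: A_i = A_d / (1 + D/m)."""
    return a_delayed / (1 + d / m)

m = 0.5                 # hypothetical minimal delay (same units as D)
a_d, d = 1000.0, 6.0    # hypothetical delayed amount and programmed delay
a_i = indifference_amount(a_d, d, m)

# At indifference, the immediate (t = m) and delayed (t = D + m) values match.
values_match = math.isclose(rate_value(a_i, m), rate_value(a_d, d + m))
# The same amount follows from Equation 2 with k = 1/m.
matches_eq2 = math.isclose(a_i, a_d / (1 + (1 / m) * d))
print(round(a_i, 2), values_match, matches_eq2)
```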

The assumption of direct proportionality between value and rate of reward, however, is probably too simple. Stevens (1957) demonstrated that for a variety of stimulus dimensions, the relationship between the perceived magnitude of a stimulus and the physical magnitude of the stimulus can be described by a power function. Power functions have been shown repeatedly to provide a good description of the relation between perceived and physical magnitudes, although there may be systematic deviations with small stimulus magnitudes, and in some cases, the parameters of the power function may depend on how the physical magnitude is measured (Luce & Krumhansl, 1988).

Myerson and Green (1995), therefore, assumed that the perceived rate would be equal to the perceived amount divided by the perceived delay, where the perceived magnitude of each variable (amount and delay) would be a power function of the actual magnitude. That is, the perceived amount equals $cA^r$ and the perceived delay equals $a(D + m)^q$, and thus value, which depends on perceived rate, equals $cA^r/[a(D + m)^q]$. It follows mathematically that the amount of an immediate reward that is judged equal in value to a delayed reward is given by the expression $A_d/(1 + D/m)^{q/r}$. This is equivalent to Equation 3 with $k = 1/m$ and $s = q/r$ (Myerson & Green, 1995). Thus, both Equations 2 and 3 are consistent with the view that choices between rewards available at different times are really choices between different rates of reward.
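The same kind of numerical check applies to the power-function version. All parameter values below (c, r, a, q, m) are hypothetical and serve only to confirm that the stated indifference amount equates the two perceived-rate values and matches Equation 3 with k = 1/m and s = q/r.

```python
import math

def perceived_rate_value(amount, actual_delay, c, r, a, q):
    """Value as perceived rate: c * A**r / (a * t**q)."""
    return c * amount ** r / (a * actual_delay ** q)

def indifference_amount(a_delayed, d, m, q, r):
    """Closed form from the text: A_i = A_d / (1 + D/m) ** (q/r)."""
    return a_delayed / (1 + d / m) ** (q / r)

# Hypothetical parameter values chosen only for illustration.
c, r, a, q, m = 2.0, 0.8, 1.5, 0.6, 0.5
a_d, d = 1000.0, 6.0
a_i = indifference_amount(a_d, d, m, q, r)

immediate = perceived_rate_value(a_i, m, c, r, a, q)    # actual delay t = m
delayed = perceived_rate_value(a_d, d + m, c, r, a, q)  # actual delay t = D + m
print(math.isclose(immediate, delayed))
# Equation 3 with k = 1/m and s = q/r gives the same amount.
print(math.isclose(a_i, a_d / (1 + (1 / m) * d) ** (q / r)))
```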

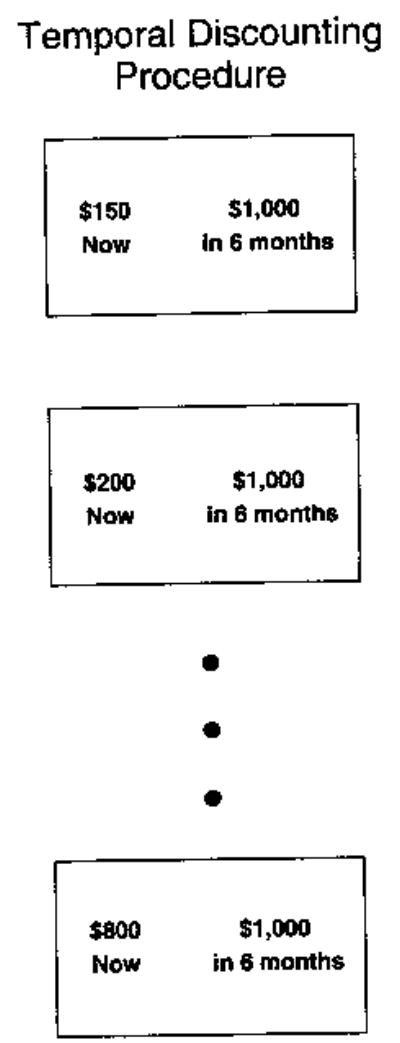

Rachlin et al. (1991) developed a psychophysical procedure to evaluate empirically different discounting equations. An example of this type of procedure is shown in Figure 5. In the example, participants choose between hypothetical monetary rewards: a small amount of money available immediately and a larger amount available after a delay (e.g., $150 now vs. $1,000 in 6 months). If they choose the delayed reward, then the amount of the immediate reward is increased (e.g., $200 now), and they are asked to choose again. This process is repeated until the immediate reward is preferred to the delayed reward. To control for order effects, the point at which preference reverses is redetermined, this time beginning with an amount of the immediate reward similar to that of the delayed reward so that the immediate reward is preferred. The amount of immediate reward is then successively decreased until the delayed reward is preferred. The average of the two amounts at which preference reversed is taken as an estimate of the subjective value of the delayed reward (i.e., the amount of an immediate reward that is judged equal in value to the delayed reward).
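The ascending and descending series can be sketched as a small simulation. Everything here is an assumption for illustration: the deterministic chooser that follows Equation 2 with a hypothetical k, and the $10-step ladder of immediate amounts. Real participants respond with some variability, and actual studies used their own amount lists.

```python
def simulated_prefers_immediate(immediate, delayed_amount, delay, k):
    """Hypothetical chooser: picks the immediate amount if it exceeds
    the Equation 2 value of the delayed reward."""
    return immediate > delayed_amount / (1 + k * delay)

def titrate(delayed_amount, delay, k, ladder):
    """Average of the switch points from an ascending and a descending series.

    Assumes the delayed reward's value lies within the ladder's range
    (otherwise next() raises StopIteration).
    """
    # Ascending: raise the immediate amount until it is preferred.
    ascending = next(x for x in ladder
                     if simulated_prefers_immediate(x, delayed_amount, delay, k))
    # Descending: start near the delayed amount and lower the immediate
    # amount until the delayed reward is preferred.
    descending = next(x for x in reversed(ladder)
                      if not simulated_prefers_immediate(x, delayed_amount, delay, k))
    return (ascending + descending) / 2

ladder = list(range(10, 1001, 10))  # hypothetical immediate amounts: $10 to $1,000
# $1,000 in 6 months with k = 0.1 per month has Equation 2 value 625.
print(titrate(1000, 6, 0.1, ladder))  # 625.0 (switch points 630 and 620)
```

Averaging the two switch points is what cancels the direction-of-approach bias: with a $10 ladder, either series alone would be off by up to $10 in opposite directions.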

Figure 5.

Procedure for studying temporal discounting. Each of the rectangles represents one of a series of successive choices between a smaller, sooner and a larger, later reward in which the amount of the smaller reward is increased until it is preferred to the larger reward.

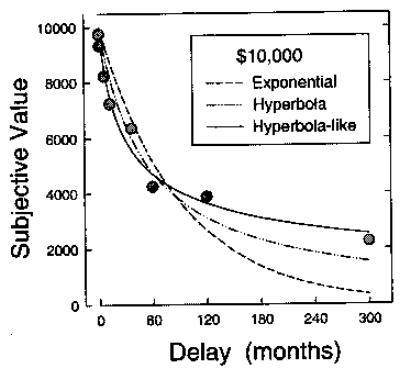

To map out a discounting function, subjective value is estimated at a number of different delays. Figure 6 shows representative data (group medians) for delayed hypothetical $10,000 rewards obtained by Green, Fry, and Myerson (1994) using this psychophysical method and subsequently fit with different equations by Myerson and Green (1995). As may be seen, the exponential (Equation 1; dashed curve) provided the poorest fit, systematically overpredicting the subjective values at briefer delays and underpredicting the subjective values at longer delays. The hyperbola (Equation 2; dashed-and-dotted curve) provided a much better fit, although it, too, tended to systematically overpredict the subjective values at briefer delays and underpredict the subjective values at longer delays.
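The fitting comparison can be illustrated with a minimal sketch. The "data" below are synthetic and noise-free, generated from Equation 3 with hypothetical parameters (k = 0.05 per month, s = 0.6); they are not the Figure 6 data. A crude grid search stands in for the nonlinear least-squares routines used in practice, and R² is computed as 1 − SSE/SST. The residual-sign string shows the systematic pattern of over- and underprediction described in the text.

```python
import math
from itertools import product

# Synthetic, noise-free values from Equation 3 (k = 0.05, s = 0.6); NOT real data.
DELAYS = [1, 6, 12, 24, 60, 120, 300]          # months
OBSERVED = [1 / (1 + 0.05 * d) ** 0.6 for d in DELAYS]  # V / A

def exponential(d, b):      # Equation 1 with A = 1
    return math.exp(-b * d)

def hyperbola(d, k):        # Equation 2 with A = 1
    return 1 / (1 + k * d)

def hyperboloid(d, k, s):   # Equation 3 with A = 1
    return 1 / (1 + k * d) ** s

def sse(pred):
    """Sum of squared errors between OBSERVED and predicted values."""
    return sum((o - p) ** 2 for o, p in zip(OBSERVED, pred))

def r_squared(pred):
    """Proportion of variance accounted for: 1 - SSE / SST."""
    mean = sum(OBSERVED) / len(OBSERVED)
    sst = sum((o - mean) ** 2 for o in OBSERVED)
    return 1 - sse(pred) / sst

# Crude grid search standing in for a proper nonlinear least-squares fit.
RATE_GRID = [10 ** (-4 + 5 * i / 400) for i in range(401)]  # 1e-4 to 10, log-spaced
S_GRID = [0.05 + 0.01 * j for j in range(196)]               # 0.05 to 2.0

def best_fit(model, *grids):
    """Return (parameters, R^2) for the grid point with the smallest SSE."""
    params = min(product(*grids),
                 key=lambda ps: sse([model(d, *ps) for d in DELAYS]))
    return params, r_squared([model(d, *params) for d in DELAYS])

MODELS = [("Equation 1", exponential, (RATE_GRID,)),
          ("Equation 2", hyperbola, (RATE_GRID,)),
          ("Equation 3", hyperboloid, (RATE_GRID, S_GRID))]

RESULTS = {}
for name, model, grids in MODELS:
    params, r2 = best_fit(model, *grids)
    RESULTS[name] = r2
    # "-" = model overpredicts at that delay, "+" = model underpredicts.
    signs = "".join("+" if o > model(d, *params) else "-"
                    for d, o in zip(DELAYS, OBSERVED))
    print(name, [round(p, 4) for p in params], round(r2, 4), signs)
```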

Figure 6.

The subjective value of a delayed $10,000 reward plotted as a function of the time until its receipt. The curved lines represent alternative forms of the temporal discounting function (Equations 1, 2, and 3) fit to the data. Data are from “Discounting of Delayed Rewards: A Life-Span Comparison,” by L. Green, A. F. Fry, and J. Myerson, 1994, Psychological Science, 5, p. 35. Copyright 1994 by Blackwell Publishers, Limited. Reprinted with permission.

The finding that temporal discounting is better described by a hyperbola than by an exponential has been replicated in study after study (e.g., Green, Myerson, & McFadden, 1997; Kirby, 1997; Kirby & Maraković, 1995; Kirby & Santiesteban, 2003; Rachlin et al., 1991; Simpson & Vuchinich, 2000). Although the difference in the proportion of variance accounted for (R²) is small in some of these studies (e.g., Kirby & Maraković, 1995), it should be noted that in others the difference in the proportion of variance accounted for is substantial. In Figure 6, for example, the exponential (Equation 1) accounts for 81.5% of the variance, whereas the hyperbola (Equation 2) accounts for 93.7% (for another particularly striking example, see Rachlin et al., 1991, Figure 7).

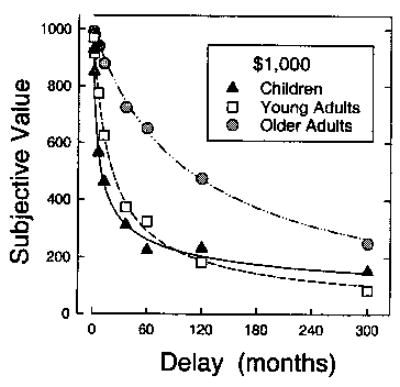

Figure 7.

The subjective value of a delayed $1,000 reward plotted as a function of the time until its receipt for three age groups (children, young adults, and older adults). The curved lines represent the hyperbola-like discounting function (Equation 3) fit to the data for each group. Data are from Behavioural Processes, 46, L. Green, J. Myerson, and P. Ostaszewski, “Discounting of Delayed Rewards Across the Life Span: Age Differences in Individual Discounting Functions,” pp. 89–96. Copyright 1999 by Elsevier. Printed with permission.

It can be seen that Equation 3 (solid curve) provided the best fit to the data in Figure 6 (R² = .977). It is well known, of course, that simply adding a free parameter to a model tends to increase the proportion of variance accounted for. Therefore, to make the case that Equation 3 provides a better model of discounting, one must take into account considerations in addition to the increase in the value of R² relative to a simple hyperbola or exponential function. One of the most fundamental of these considerations is whether the differences in the proportion of variance accounted for are statistically significant. This question may be addressed by using a statistical approach analogous to the comparison of linear regression models. That is, one may compare a reduced model (a hyperbola without the exponent parameter) with a full model (one that includes the exponent parameter).
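The full-versus-reduced comparison can be sketched with the standard nested-model F statistic computed from the two R² values; for nonlinear models this is an approximation. The R² values below are the Figure 6 values given in the text, but the number of delays (n = 8) is an assumption made only for this illustration, so the resulting F is not a reported result.

```python
def nested_f(r2_reduced, r2_full, n, p_reduced, p_full):
    """Approximate F statistic for a reduced model nested in a full model.

    n: number of data points; p_reduced, p_full: numbers of free parameters.
    Degrees of freedom are (p_full - p_reduced, n - p_full).
    """
    numerator = (r2_full - r2_reduced) / (p_full - p_reduced)
    denominator = (1 - r2_full) / (n - p_full)
    return numerator / denominator

# Hyperbola (1 parameter, R^2 = .937) vs. Equation 3 (2 parameters, R^2 = .977);
# n = 8 delays is a hypothetical value for this illustration.
f_stat = nested_f(0.937, 0.977, n=8, p_reduced=1, p_full=2)
print(round(f_stat, 2))  # 10.43
# For (1, 6) degrees of freedom, the critical F at alpha = .05 is about 5.99.
```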

With respect to the group median data depicted in Figure 6, Myerson and Green (1995) reported that adding an exponent produced a statistically significant increase in the proportion of variance accounted for. This finding has subsequently been replicated in further studies from our laboratory (Green et al., 1999a; Ostaszewski, Green, & Myerson, 1998) as well as in reanalyses of data from other laboratories described in subsequent sections (Bickel, Odum, & Madden, 1999; Madden, Petry, Badger, & Bickel, 1997; Murphy, Vuchinich, & Simpson, 2001; Raineri & Rachlin, 1993). Thus, the increase in the proportion of variance explained by Equation 3 is significantly greater than would be expected simply on the basis of the fact that it has two free parameters whereas Equations 1 and 2 each have only one.

Of course, for certain purposes one might prefer a simpler model even when a more complicated one provides a more accurate description of the data. For example, if the more complicated model were associated with considerable loss in mathematical tractability, one might choose to work with the simpler model because predictions would be easier to derive. Moreover, reliance on the simpler model would be more justified if the accuracy of the empirical descriptions provided by the two models did not differ substantially (even if they differed significantly). That is, the underlying issue in such decisions is often the trade-off between fit and parsimony (Harless & Camerer, 1994). Nevertheless, it would seem important at least to know which type of model provides the better description (and whether the fit is significantly better), and on this point the results seem clear: Although the size of the increase in the proportion of explained variance may vary, at the group level a hyperbola-like function provides significantly better fits to discounting data than the simple hyperbola.

A good psychological model should be able to describe not only group data but also data from individual participants. In the two studies that have compared fits of the three discounting functions to individual data, the hyperbola-like discounting function provided statistically adequate fits to the data from all the participants, whereas both the exponential and the hyperbola failed to describe some of the individual data (Myerson & Green, 1995; Simpson & Vuchinich, 2000). The finding that the same hyperbola-like function, Equation 3, describes temporal discounting in all individual participants is theoretically significant because it suggests that individual differences in discounting are primarily quantitative and may reflect variations on fundamentally similar choice processes.

The issue of whether adding a free parameter significantly improves the fit also needs to be addressed at the individual level, and the same statistical approach described previously (i.e., comparing full and reduced models) may be used. Myerson and Green (1995) showed that adding an exponent significantly improved the fit to temporal discounting data from individual young adults in the majority of cases (i.e., Equation 3 fit significantly better than Equation 2). This same pattern of results was reported by Simpson and Vuchinich (2000). In addition, Green, Myerson, and Ostaszewski (1999b) showed that Equation 3 provided significantly better fits to individual discounting data from a majority of children (12-year-olds) as well as to individual data from a substantial proportion of older adults.

Amount of variance accounted for is not the only, nor necessarily the best, basis on which to evaluate a mathematical model (Roberts & Pashler, 2000), although it may play an important role in choosing between models (Rodgers & Rowe, 2002). A good model not only accounts for a large proportion of the variance but also provides unbiased predictions. In this regard, it should be noted that both the exponential and simple hyperbola systematically overpredict subjective values at briefer delays and underpredict subjective values at longer delays. In contrast, the fits of Equation 3 reveal no such systematic bias (see Figure 6 for an example). Thus, in terms of both the ability of the model to capture accurately the pattern of the relation between subjective value and delay as well as the amount of variance accounted for both at the group level and at the individual level, the hyperbola-like discounting model provides a better description than both exponential and simple hyperbola models.

How General Is the Form of the Temporal Discounting Function?

Although Figure 6 reveals that Equation 3 accurately describes temporal discounting in a typical population of participants in psychology experiments (i.e., American undergraduates), a good model of temporal discounting obviously must generalize to other populations. One issue is whether the present discounting function applies to different age groups. In this regard, it is important not only to assess how well the function fits the data but also to determine whether the parameters of the function change in a way that is developmentally meaningful.

Green et al. (1999b) examined fits of Equation 3 to data from children (mean age = 12.1 years), young adults (mean age = 20.3 years), and older adults (mean age = 69.7 years). Figure 7 shows the discounting of delayed hypothetical $1,000 rewards by each of the three age groups. The hyperbola-like discounting function accurately described the group median data in each case (all R²s > .990). At the individual level, the median proportion of variance accounted for was greater than .910 in all three groups. These findings demonstrate that the hyperbola-like discounting function (Equation 3) is extremely general in that it describes temporal discounting in individuals from childhood to old age.

With age, there was a developmental decrease in how steeply delayed rewards were discounted, as indicated by decreases in the value of the k parameter with age, as well as a systematic increase in the value of the s parameter with age, suggesting developmental changes in the way that time and amount are scaled. Although these results must be interpreted cautiously because of the small sample sizes and the lack of independence in the estimates of the k and s parameters (Myerson, Green, & Warusawitharana, 2001), these systematic (and meaningful) changes in the parameters with age suggest that across this age range, differences in temporal discounting are primarily quantitative in nature and do not reflect qualitative changes of the sort that might reflect developmental stages.

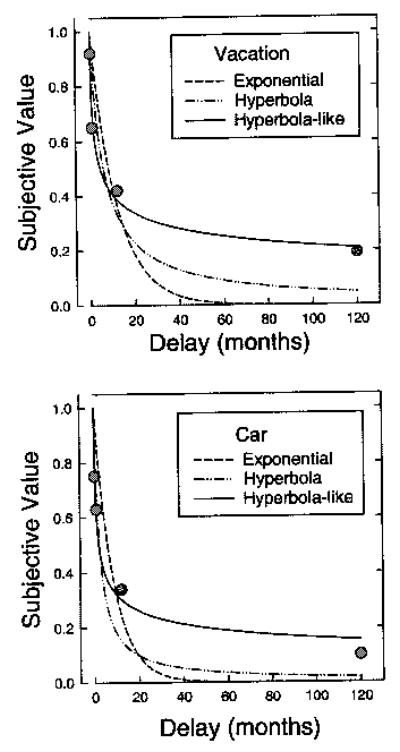

Raineri and Rachlin (1993) have shown that choice involving commodities other than money (i.e., vacations and cars) also can be analyzed in terms of discounting. In their Experiment 4, participants made a series of choices between two hypothetical outcomes: an immediate monetary reward and a delayed nonmonetary reward. For one group, the delayed reward was an all-expenses-paid vacation trip; for a second group, the delayed reward was a future time period in which they would have free use of an economy car. A procedure analogous to that shown in Figure 5 was used to determine the immediate amount judged equal in value to the delayed reward, and the duration of the reward was varied in different conditions.

The top graph in Figure 8 presents data for the condition in which the reward was 1 year of vacation. Subjective value was calculated as the amount of money judged equal in value to the delayed commodity divided by the amount of money judged equal in value to the same commodity available immediately. This made it possible to compare discounting of different commodities (e.g., vacations and cars) on a common scale. Although Raineri and Rachlin (1993) fit the data using an equation derived to model continuous consumption of an extended reward, it is clear from Figure 8 that the data are very well described by a hyperbola-like discounting function (Equation 3; solid curve; R² = .977). As was the case with discounting of delayed monetary rewards, Equation 3 provided a significantly better fit to the data than either Equation 1 (dashed curve; R² = .607) or Equation 2 (dashed-and-dotted curve; R² = .754).
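The model comparison described above can be sketched in code. This is a minimal sketch, not the fitting procedure used by Raineri and Rachlin (1993) or in the present analyses. It assumes the standard forms of the three functions (Equation 1, V = Ae^(−kD); Equation 2, V = A/(1 + kD); Equation 3, V = A/(1 + kD)^s), uses hypothetical noiseless data generated from Equation 3 with k = 0.1 and s = 0.5, and substitutes an exhaustive grid search for nonlinear least-squares regression. As in Figure 8, subjective values are expressed as proportions of the nominal amount.

```python
import math

# Hypothetical delays (months) and noiseless proportions generated from
# Equation 3 with k = 0.1 and s = 0.5. Illustrative only, not study data.
DELAYS = [1, 6, 12, 36, 60, 120, 300]
VALUES = [1.0 / (1.0 + 0.1 * d) ** 0.5 for d in DELAYS]
MEAN = sum(VALUES) / len(VALUES)
SS_TOTAL = sum((v - MEAN) ** 2 for v in VALUES)


def eq1(d, k, s):
    """Exponential, V/A = exp(-kD); s is ignored."""
    return math.exp(-k * d)


def eq2(d, k, s):
    """Hyperbola, V/A = 1/(1 + kD); s is ignored."""
    return 1.0 / (1.0 + k * d)


def eq3(d, k, s):
    """Hyperbola-like, V/A = 1/(1 + kD)^s."""
    return 1.0 / (1.0 + k * d) ** s


def r_squared(model, k, s):
    """Proportion of variance in VALUES accounted for by the model."""
    ss_resid = sum((v - model(d, k, s)) ** 2 for d, v in zip(DELAYS, VALUES))
    return 1.0 - ss_resid / SS_TOTAL


def grid_fit(model, s_grid):
    """Least-squares fit by exhaustive grid search; returns (R^2, k, s)."""
    k_grid = [i / 1000 for i in range(1, 501)]  # k from 0.001 to 0.5
    return max(((r_squared(model, k, s), k, s) for k in k_grid for s in s_grid),
               key=lambda fit: fit[0])


S_GRID = [i / 100 for i in range(1, 201)]  # s from 0.01 to 2.0
fits = {
    "Equation 1": grid_fit(eq1, [1.0]),
    "Equation 2": grid_fit(eq2, [1.0]),
    "Equation 3": grid_fit(eq3, S_GRID),
}
for name, (r2, k, s) in fits.items():
    print(f"{name}: R^2 = {r2:.3f}, k = {k:.3f}, s = {s:.2f}")
```

Because the synthetic data were generated from Equation 3, its fit is perfect by construction. With real data, the question is whether the additional parameter s improves the fit by more than would be expected by chance.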

Figure 8.

The subjective value of a delayed reward of a year-long vacation plotted as a function of the time until the beginning of the vacation (upper graph), and the subjective value of a delayed reward of 1 year’s free use of a car plotted as a function of the time until use of the car was available (lower graph). For both graphs, subjective value was calculated as a proportion of the amount of money that participants judged equal in value to the reward (vacation or car) when it was available without a delay. The curved lines represent fits of Equations 1, 2, and 3 to the data. Data are from Experiment 4 of “The Effect of Temporal Constraints on the Value of Money and Other Commodities,” by A. Raineri and H. Rachlin, 1993, Journal of Behavioral Decision Making, 6, p. 92. Copyright 1993 by John Wiley & Sons Limited. Reproduced with permission.

Similar results were obtained with the second group of participants in Experiment 4 of the Raineri and Rachlin (1993) study. The bottom graph in Figure 8 presents data for the condition in which the delayed reward was 1 year's free use of an economy car. Again, Equation 3 (R² = .971) provided a significantly better fit to the data than either Equation 1 (R² = .474) or Equation 2 (R² = .742). Thus, a hyperbola-like discounting function describes the discounting of different commodities as well as discounting of monetary rewards by different age groups. Taken together, these results suggest that Equation 3 provides a fairly general description of the decrease in subjective value with increases in delay.

Are These Procedures Realistic?

Most previous studies of temporal discounting have used procedures in which the delayed rewards were hypothetical. It should be noted, however, that the results of those studies that have used real rewards (e.g., Baker, Johnson, & Bickel, 2003; Johnson & Bickel, 2002; Kirby, 1997; Kirby & Maraković, 1995, 1996; Madden, Begotka, Raiff, & Kastern, 2003; Rodriguez & Logue, 1988) also are consistent with discounting. For example, in a study by Kirby and Maraković (1995, Experiment 1), participants knew that both the amount of money they would receive and when they would receive it depended on the choices they made. Specifically, participants estimated how much money they would be willing to accept at the end of the experimental session in exchange for a monetary reward due at a specified later time. The amounts of the delayed rewards ranged from $14.75 to $28.50, and the delays ranged from 3 to 29 days. For each participant, one choice trial was chosen at random at the end of the session. The participant then either received the amount of money judged equal in value to the delayed reward or received the delayed reward in cash on the day that it came due.

Kirby and Maraković (1995, Experiment 1) fit discounting functions to the data from each individual participant. As has been observed with hypothetical rewards, hyperbolic functions (Equation 2) fit the data better than exponential functions (Equation 1). This was true for at least 19 of their 21 participants at each of the delayed amounts studied. Kirby (1997) also compared the fits of Equations 1 and 2 to data obtained with a procedure using real rewards similar to that used in Kirby and Maraković (1995, Experiment 1) and again found that Equation 2 accounted for more of the variance. Equation 3 was not evaluated in either study.

Until a recent study by Johnson and Bickel (2002), however, no experiment directly compared the temporal discounting of real and hypothetical rewards using an experimental design in which the same individuals were studied in both real and hypothetical reward conditions. In addition, Johnson and Bickel examined a much larger range of amounts ($10, $25, $100, and $250) than had been used in previous studies with real rewards. As in the Kirby studies (Kirby, 1997; Kirby & Maraković, 1995), the real reward condition involved rewards that were not only delayed but also probabilistic. That is, participants were told that for each amount, one of their choice trials would be randomly selected, and they would actually receive the amount, immediate or delayed, that they had chosen.

Johnson and Bickel (2002) reported that for 5 of the 6 participants, there were no systematic differences in discount rate between the real reward and hypothetical reward conditions, although 1 participant did show reliably steeper discounting of the real rewards. For the 4 participants who were tested with hypothetical rewards before real rewards, a hyperbolic discounting function (Equation 2) generally provided good fits (although 1 participant’s data were better fit by the exponential function, Equation 1). The 2 participants who were tested with the real rewards first, however, showed very little discounting of either real or hypothetical rewards, suggesting the possibility of an order effect (although the sample size was too small to evaluate this statistically). Moreover, perhaps as a result of the lack of discounting by these 2 participants, neither equation provided an adequate description of their data.

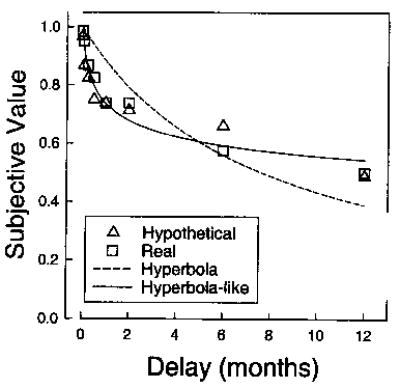

A more recent study by Madden et al. (2003) also used a within-subject design to examine discounting with real and hypothetical monetary rewards. There were 20 participants, half of whom were tested first with hypothetical rewards and then with real rewards; the other half were tested first with real rewards and then with hypothetical rewards. In all cases, the amount of reward was $10. As may be seen in Figure 9, there was no significant difference in the extent to which participants discounted the real and hypothetical rewards (represented by squares and triangles, respectively). Madden et al. (2003) also reported no significant effect of order. They further reported that a simple hyperbola (Equation 2) described the data better than an exponential (Equation 1), but they did not evaluate the hyperbola-like discounting function (Equation 3). Our analysis of the group median data from the real and hypothetical reward conditions combined revealed that the hyperbola-like discounting function (solid curve in Figure 9) accounted for more than 93% of the variance and provided a significantly better fit than a simple hyperbola (dashed curve). Thus, the same form of function that describes discounting with hypothetical rewards also describes discounting with real rewards.

Figure 9.

Subjective value of delayed real and hypothetical rewards (expressed as a proportion of their nominal value: $10) plotted as a function of the time until their receipt. The curved lines represent fits of Equations 2 and 3 to the combined data from the real and hypothetical rewards. Data are from “Delay Discounting of Real and Hypothetical Rewards,” by G. J. Madden, A. M. Begotka, B. R. Raiff, and L. L. Kastern, 2003, Experimental and Clinical Psychopharmacology, 11, p. 142. Copyright 2003 by the American Psychological Association. Reprinted with permission of the first author.

There may be, however, other differences between the discounting of the two types of rewards. Comparing the results of earlier studies that examined discounting of real or hypothetical rewards, Kirby (1997) noted that the studies using real rewards reported steeper discounting rates than those studies using hypothetical rewards. He suggested that there may be real differences in discounting the two types of reward, although he also pointed out that the observed difference in discounting rates might reflect the fact that studies using real rewards tend to use smaller amounts than the studies using hypothetical rewards. Indeed, Johnson and Bickel (2002) and Madden et al. (2003), who tested the same participants with both real and hypothetical monetary rewards of the same amount, found no systematic differences in the rate of discounting.

Thus, at present the data support the idea that the discounting of real and hypothetical rewards is at least qualitatively similar (see also Frederick et al., 2002). This conclusion is similar to that reached by Camerer and Hogarth (1999) after an extensive review of the literature on financial incentives in experimental economics more generally. They concluded that methods involving hypothetical choices and those involving real consequences typically yield qualitatively similar results. Moreover, the few studies comparing discounting of real and hypothetical rewards in the same participants (Baker et al., 2003; Johnson & Bickel, 2002; Madden et al., 2003) have reported no significant quantitative differences in discounting rate, although it may be too early to reach a firm conclusion on this point.

Another issue that arises with respect to the validity of laboratory discounting procedures and their generalizability to natural settings concerns the length of the delays that are sometimes used in experiments. That is, one might question whether individuals are capable of making reliable or valid decisions regarding the value of events that will not occur for a number of years. Clearly, however, individuals often do make decisions about very delayed events in the world outside the laboratory. Decisions regarding financial investments, such as deciding how much money to spend now and how much to save for retirement, constitute an obvious real-world example involving very delayed outcomes. Thus, the range of delays that have been examined in laboratory experiments seems appropriate for the study of temporal discounting.

Although some studies have found little or no relationship between discounting measures based on hypothetical scenarios and real-world health behaviors (e.g., Chapman et al., 2001), other studies have found a relation between laboratory measures of delay of gratification and real-world behaviors outside the health domain (e.g., Mischel, Shoda, & Rodriguez, 1989). Notably, a number of recent studies have demonstrated that the rate of discounting hypothetical monetary rewards discriminates substance abusers from non-substance abusers. For example, people addicted to heroin, smokers, and problem drinkers all show reliably steeper discounting than control groups (e.g., Madden et al., 1997; Mitchell, 1999; Vuchinich & Simpson, 1998). The issue of group differences in discounting, which has obvious relevance for the relationship between laboratory discounting measures and real-world behavior, is discussed in detail in the Applications to Group Differences section.

Discounting and Choice Between Probabilistic Rewards

Choice between probabilistic rewards, like choice between delayed rewards, typically involves outcomes that differ on more than one dimension. That is, just as the latter may involve choosing between a smaller, sooner reward and a larger, later reward, the former may involve choosing between a smaller, less risky reward and a larger, more risky reward. Moreover, just as an individual may choose the larger, later reward when the alternatives are both well in the future, so too, an individual may choose the larger, more risky alternative when the probabilities of receiving either reward are very low. And just as preference may reverse as the time until both alternatives decreases, so too, preference may reverse as risk decreases. That is, an individual who chooses the larger, more risky alternative when the probabilities of receiving either reward are very low may choose the smaller, less risky reward when the probabilities of receiving either reward are increased proportionally (e.g., Rachlin, Castrogiovanni, & Cross, 1987). This kind of reversal in preference is similar to what is known in economics as the Allais paradox—after Maurice Allais (1953), who first reported it—and to the certainty effect (Kahneman & Tversky, 1979). Such preference reversals represent a violation of the independence axiom of expected utility theory. Note that this violation of the independence axiom with respect to probabilistic rewards is similar to the violation of the stationarity assumption with respect to delayed rewards described previously.
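The proportional-scaling reversal just described can be illustrated with the hyperbola-like probability discounting function presented below as Equation 5, V = A/(1 + hΘ)^s, where Θ = (1 − p)/p is the odds against receipt. The sketch assumes hypothetical parameter values (h = 4, s = 0.5) and illustrative amounts. It shows only that a function of this form can produce the reversal, not that these parameters describe any particular group of participants.

```python
# Hypothetical Equation 5 parameters (illustrative, not estimates from any study).
H, S = 4.0, 0.5


def subjective_value(amount, p, h=H, s=S):
    """Equation 5: V = A / (1 + h*theta)^s, with theta = (1 - p)/p."""
    theta = (1.0 - p) / p
    return amount / (1.0 + h * theta) ** s


def preferred(option_a, option_b):
    """Each option is (amount, probability); return the option with the higher value."""
    if subjective_value(*option_a) >= subjective_value(*option_b):
        return option_a
    return option_b


# Smaller, more certain reward vs. larger, riskier reward.
high = preferred((3000, 1.0), (4000, 0.80))
# The same pair after multiplying both probabilities by 1/4.
low = preferred((3000, 0.25), (4000, 0.20))
print(high, low)
```

With these values, the certain $3,000 is preferred in the first pair, but the larger reward is preferred once both probabilities are reduced by the same factor.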

Preference reversals that violate the independence axiom have been studied extensively in humans and, along with other findings, have prompted the development of generalized expected utility theories as well as non-expected utility theories and other theoretical approaches. (For recent reviews of theory and research on choice under risk and uncertainty, see Camerer, 1995; Starmer, 2000.) Most of these theories do not lead to specific predictions regarding the mathematical form of the probability discounting function (for examples, see Camerer, 1995) and fewer still have been generalized to temporal discounting (but see Prelec & Loewenstein, 1991).

Prospect theory (Kahneman & Tversky, 1979; Tversky & Kahneman, 1992) is perhaps the most influential theoretical account of choice under uncertainty. Prospect theory assumes that in evaluating probabilistic outcomes, individuals transform both the amounts and the probabilities of the alternatives. (In many choice situations, individuals first may go through an editing process to simplify the alternatives, but experiments on probability discounting usually bypass the editing phase and present participants with simple alternatives such as choice between a larger probabilistic reward and a smaller certain reward.) Amounts are transformed according to an S-shaped value function that is steeper for losses than for gains, and probabilities are transformed according to a weighting function that overweights lower probabilities and underweights higher probabilities. The utility of a probabilistic outcome equals the product of its value and its weight, and choices between outcomes are made on the basis of their utilities. Although prospect theory accounts for many important findings of choice under uncertainty (e.g., risk seeking and loss aversion), it is not directly generalizable to intertemporal choice (i.e., choice involving delayed outcomes).
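As a sketch of the two transformations, the code below uses the parametric value and weighting functions that Tversky and Kahneman (1992) proposed for cumulative prospect theory, together with their median parameter estimates (α = 0.88, λ = 2.25, γ = 0.61). These forms and numbers are an assumption imported for illustration; the text above specifies only their qualitative shapes. The example covers a simple gamble that pays a single gain with probability p and nothing otherwise, so neither the editing phase nor the separate weighting function for losses is modeled.

```python
# Parametric forms and median estimates from Tversky & Kahneman (1992);
# an assumption for illustration, not values reported in this article.
ALPHA = 0.88    # curvature of the value function (gains and losses)
LAMBDA = 2.25   # loss-aversion coefficient: losses loom larger than gains
GAMMA = 0.61    # curvature of the probability-weighting function for gains


def value(x):
    """S-shaped value function: concave for gains, convex and steeper for losses."""
    return x ** ALPHA if x >= 0 else -LAMBDA * (-x) ** ALPHA


def weight(p):
    """Inverse-S weighting for gains: overweights low p, underweights high p."""
    return p ** GAMMA / (p ** GAMMA + (1 - p) ** GAMMA) ** (1 / GAMMA)


def prospect_utility(gain, p):
    """Utility of a gamble paying `gain` (>= 0) with probability p, else nothing."""
    return weight(p) * value(gain)


for p in (0.01, 0.10, 0.50, 0.90):
    print(f"p = {p:.2f}: w(p) = {weight(p):.3f}")
```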

The discounting framework advocated in the present article is unusual in that the approach to understanding choice involving probabilistic outcomes is similar to the approach taken to understanding choice involving delayed outcomes (see Prelec & Loewenstein, 1991, and Rachlin et al., 1991, for pioneering efforts). The discounting framework not only views choices involving delayed and probabilistic outcomes as being similar conceptually but also emphasizes the use of parallel experimental procedures and mathematical modeling techniques to rigorously evaluate the case for similarities between temporal and probability discounting. We emphasize that the discounting framework, through its use of parallel approaches, also is well suited to reveal theoretically meaningful differences between the two types of discounting. When different procedures and analytical techniques are used, as is typically the case, any apparent differences observed between temporal and probability discounting could be due to the procedural and analytic approaches rather than to true differences in the underlying processes.

The conceptual similarity of discounting involving delayed and probabilistic rewards may be seen by considering reversals in preference. Recall that it was argued previously that preference reversals with delayed rewards occur because the subjective value of the smaller, sooner reward increases more than the subjective value of the larger, later reward as the delays to both are decreased equally. Similarly, preference reversals with probabilistic rewards might be explained by assuming that the subjective value of the smaller, less risky reward decreases more than the subjective value of the larger, more risky reward if the probabilities of winning decrease. As was true for temporal discounting, however, preference reversals with risky rewards do not greatly constrain the mathematical form of the probability discounting function (although they do preclude a simple expected value model of risky choice).
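The delayed-reward case can be checked numerically. In this sketch, which uses a hypothetical parameter (k = 0.2 per day) and illustrative amounts ($50 after a front-end delay vs. $100 ten days after that), a simple hyperbola (Equation 2, V = A/(1 + kD)) reverses preference when a common delay is added to both rewards. An exponential (Equation 1, V = Ae^(−kD)) cannot do so, because the ratio of the two exponentially discounted values is the same at every front-end delay.

```python
import math

K = 0.2  # hypothetical discounting parameter (per day)


def hyperbolic(amount, delay, k=K):
    """Equation 2: V = A / (1 + kD)."""
    return amount / (1.0 + k * delay)


def exponential(amount, delay, k=K):
    """Equation 1: V = A * exp(-kD)."""
    return amount * math.exp(-k * delay)


def choose(discount, front_end_delay):
    """Choose between $50 after the front-end delay and $100 ten days later."""
    sooner = discount(50.0, front_end_delay)
    later = discount(100.0, front_end_delay + 10)
    return "smaller, sooner" if sooner > later else "larger, later"


for delay in (0, 30):
    print(delay, choose(hyperbolic, delay), choose(exponential, delay))
```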

Given what is now known about the form of the temporal discounting function, the question becomes whether a similar hyperbola-like mathematical function also describes the discounting of probabilistic rewards. Recent evidence suggests that, indeed, this is the case. Moreover, the fact that the same mathematical function describes both temporal and probability discounting has generated suggestions that the same (or similar) underlying processes might account for both probability and temporal discounting (e.g., Green & Myerson, 1996; Prelec & Loewenstein, 1991; Rachlin et al., 1986, 1994; Stevenson, 1986). After presenting two proposed probability discounting functions that have the same mathematical forms as the temporal discounting functions discussed previously, we evaluate such single-process accounts.

Mathematical Descriptions of Probability Discounting

Rachlin et al. (1991) proposed that the value of probabilistic rewards may be described by a discounting function of the same form (i.e., a hyperbola) as that which they used to describe delayed rewards:

$$V = \frac{A}{1 + h\Theta} \qquad (4)$$

where V represents the subjective value of a probabilistic reward of amount A, h is a parameter (analogous to k in Equation 2) that reflects the rate of decrease in subjective value, and Θ represents the odds against receipt of a probabilistic reward (i.e., Θ = [1 − p]/p, where p is the probability of receipt). When h is greater than 1.0, choice is always risk averse; when h is less than 1.0, choice is always risk seeking; and when h equals 1.0, the subjective value predicted by Equation 4 is equivalent to the expected value.
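The claim that h = 1.0 reproduces expected value follows from 1 + Θ = 1/p: substituting h = 1 into Equation 4, V = A/(1 + hΘ), gives V = A/(1 + Θ) = Ap. The short sketch below checks this, along with the direction of risk attitude for h above and below 1.0, for an illustrative $100 reward with a 25% chance of receipt (Θ = 3).

```python
def odds_against(p):
    """Theta = (1 - p) / p."""
    return (1.0 - p) / p


def eq4_value(amount, p, h):
    """Equation 4: V = A / (1 + h * Theta)."""
    return amount / (1.0 + h * odds_against(p))


def describe(v, expected, tol=1e-9):
    """Classify a subjective value relative to expected value."""
    if abs(v - expected) < tol:
        return "equals expected value"
    return "risk averse" if v < expected else "risk seeking"


amount, p = 100.0, 0.25        # illustrative: $100 with a 25% chance (Theta = 3)
expected = amount * p          # expected value = $25
for h in (0.5, 1.0, 2.0):
    v = eq4_value(amount, p, h)
    print(f"h = {h}: V = {v:.2f} ({describe(v, expected)})")
```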

Alternatively, Ostaszewski et al. (1998) suggested that the discounting of probabilistic rewards may be better described by a hyperbola-like form analogous to that for delayed rewards (i.e., Equation 3):

$$V = \frac{A}{(1 + h\Theta)^{s}} \qquad (5)$$

The parameter s may represent the nonlinear scaling of amount and/or odds against and is usually less than 1.0 (Green et al., 1999a). This is analogous to the role of the exponent in Equation 3, which may represent the nonlinear scaling of amount and/or delay. It is to be noted that the hyperbola (Equation 4) proposed by Rachlin et al. (1991) represents the special case of Equation 5 in which s is equal to 1.0. Prelec and Loewenstein (1991) have proposed a similar form for the weighting of probabilistic outcomes, in which the weight is equal to $1/[1 - a\log(p)]^{a/b}$, which is mathematically equivalent to $1/[1 + a\log(1 + \Theta)]^{a/b}$ because 1 + Θ = 1/p and hence log(1 + Θ) = −log(p). Note that this weighting function is similar in form to Equation 5 except that the independent variable is the logarithm of 1 + Θ, and the exponent is directly proportional to a (which corresponds to the discounting parameter h in Equation 5).
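The equivalence of the two ways of writing the Prelec and Loewenstein (1991) weight, and the reduction of Equation 5, V = A/(1 + hΘ)^s, to Equation 4, V = A/(1 + hΘ), when s = 1.0, can be verified numerically. The sketch writes the weight in terms of p as 1/[1 − a log(p)]^(a/b), the sign required for equivalence because log(1 + Θ) = log(1/p) = −log(p). It uses natural logarithms (the equivalence holds for any base) and arbitrary illustrative values of a, b, and h.

```python
import math


def eq4_value(amount, p, h):
    """Equation 4: V = A / (1 + h*Theta)."""
    theta = (1.0 - p) / p
    return amount / (1.0 + h * theta)


def eq5_value(amount, p, h, s):
    """Equation 5: V = A / (1 + h*Theta)^s."""
    theta = (1.0 - p) / p
    return amount / (1.0 + h * theta) ** s


def pl_weight_from_p(p, a, b):
    """Weight written in terms of probability: 1 / [1 - a*log(p)]^(a/b)."""
    return 1.0 / (1.0 - a * math.log(p)) ** (a / b)


def pl_weight_from_odds(p, a, b):
    """Same weight written in terms of odds against: 1 / [1 + a*log(1 + Theta)]^(a/b)."""
    theta = (1.0 - p) / p
    return 1.0 / (1.0 + a * math.log(1.0 + theta)) ** (a / b)


a, b, h = 0.8, 1.5, 2.0   # arbitrary illustrative parameter values
for p in (0.1, 0.5, 0.9):
    print(p, pl_weight_from_p(p, a, b), pl_weight_from_odds(p, a, b),
          eq5_value(500.0, p, h, 1.0), eq4_value(500.0, p, h))
```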

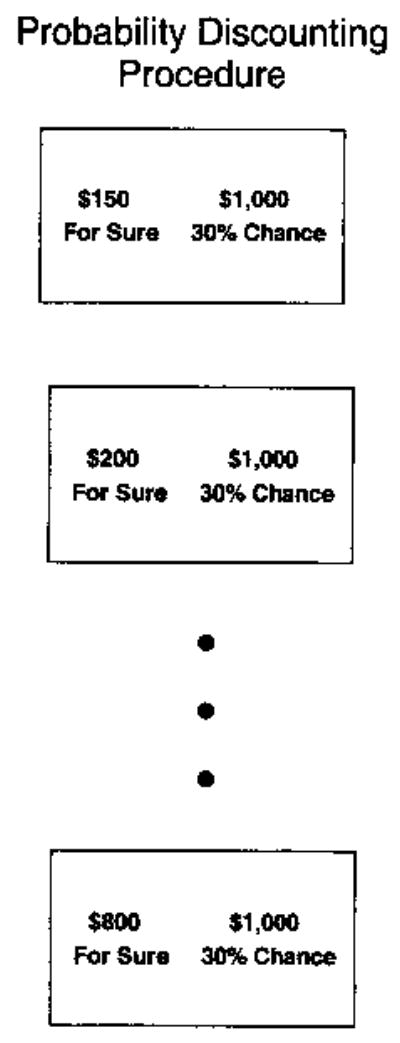

The two proposed probability discounting functions (Equations 4 and 5) have been evaluated with psychophysical procedures similar to those used to study the discounting of delayed rewards. An example of this type of procedure is shown in Figure 10. In the example, participants choose between a small but certain hypothetical amount of money and a larger, probabilistic amount (e.g., $150 for sure vs. $1,000 with a 30% chance). If they choose the probabilistic reward, then the amount of the certain reward is increased (e.g., $200 for sure), and they are asked to choose again. This process is repeated until the certain reward is preferred to the probabilistic reward. As in the temporal discounting procedure, order effects may be controlled for by redetermining the point at which preference reverses, this time beginning with an amount of the certain reward equal to that of the probabilistic reward. The amount of the certain reward is then successively decreased until the probabilistic reward is preferred. The average of the two amounts at which preference reversed is taken as an estimate of the subjective value of the probabilistic reward (i.e., the amount of a certain reward that is judged equal in value to the probabilistic reward).
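The titration logic of this procedure can be simulated with a hypothetical participant whose choices follow Equation 5, V = A/(1 + hΘ)^s. The sketch assumes a deterministic chooser, $10 steps for the certain amount, and illustrative parameter values; real participants choose with some variability, and the sequence of certain amounts used in the actual studies may differ. Because the ascending switch point lies just above the participant's subjective value and the descending switch point lies at or just below it, their average falls within half a step of that value.

```python
def eq5_value(amount, p, h, s):
    """Equation 5: V = A / (1 + h*Theta)^s, Theta = (1 - p)/p."""
    theta = (1.0 - p) / p
    return amount / (1.0 + h * theta) ** s


def prefers_certain(certain, amount, p, h, s):
    """Hypothetical deterministic chooser: take the certain reward only if it
    exceeds the Equation 5 value of the probabilistic reward."""
    return certain > eq5_value(amount, p, h, s)


def estimate_subjective_value(amount, p, h, s, step=10):
    certain_amounts = list(range(step, amount + 1, step))  # $10, $20, ..., amount
    # Ascending series: raise the certain amount until it is preferred.
    ascending = next((c for c in certain_amounts
                      if prefers_certain(c, amount, p, h, s)), amount)
    # Descending series: start at the probabilistic amount and lower the
    # certain amount until the probabilistic reward is preferred.
    descending = next((c for c in reversed(certain_amounts)
                       if not prefers_certain(c, amount, p, h, s)), 0)
    # Average of the two amounts at which preference reversed.
    return (ascending + descending) / 2.0


estimate = estimate_subjective_value(1000, 0.30, h=2.0, s=0.8)
print(estimate, round(eq5_value(1000, 0.30, 2.0, 0.8), 2))
```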

Figure 10.

Procedure for studying probability discounting. Each of the rectangles represents one of a series of successive choices between a smaller, certain, and a larger, probabilistic, reward in which the amount of the smaller reward is increased until it is preferred to the larger reward.

Rachlin et al. (1991) showed that a hyperbola (Equation 4) described the discounting of probabilistic rewards more accurately than an exponential function. Ostaszewski et al. (1998) evaluated Equations 4 and 5, and they showed that including an exponent in the discounting function significantly improved the fit (i.e., Equation 5 accounted for significantly more of the variance than Equation 4). These findings were extended and replicated by Green et al. (1999a).

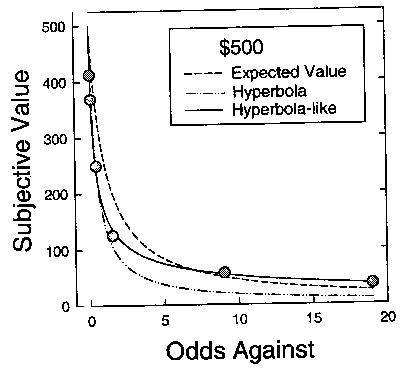

Figure 11 shows the results from one condition of Experiment 1 from Green et al. (1999a). As may be seen, the hyperbola-like function (Equation 5; solid curve) fit the data better than the hyperbola (Equation 4; dashed-dotted curve). Similar results were observed at the individual level. When Equation 5 was fit to the individual data from the 68 participants in Experiment 1, the estimated value of s was less than 1.0 in 87% of the cases, significantly more than would be expected by chance if the exponent were actually 1.0 (i.e., if the probability discounting function were a hyperbola, Equation 4). This finding was replicated in Green et al.'s (1999a) Experiment 2, in which 77% of the 30 participants had estimated values of s less than 1.0. These results strongly suggest that the form of the probability discounting function, like the form of the temporal discounting function, is hyperbola-like (i.e., similar to a hyperbola but with the denominator raised to a power).
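One way to check the claim that the proportion of estimates below 1.0 exceeds what chance would produce is an exact one-tailed sign (binomial) test against a probability of .5. The sketch assumes that the reported percentages correspond to 59 of 68 and 23 of 30 participants (59/68 ≈ 87%, 23/30 ≈ 77%); the text does not say which test Green et al. (1999a) actually used.

```python
from math import comb


def sign_test_upper_tail(successes, n, p=0.5):
    """Exact one-tailed binomial probability P(X >= successes) for X ~ Bin(n, p)."""
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k)
               for k in range(successes, n + 1))


# Assumed counts of participants with estimated s < 1.0.
p_experiment_1 = sign_test_upper_tail(59, 68)   # Experiment 1
p_experiment_2 = sign_test_upper_tail(23, 30)   # Experiment 2
print(f"Experiment 1: p = {p_experiment_1:.1e}")
print(f"Experiment 2: p = {p_experiment_2:.4f}")
```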

Figure 11.

The subjective value of a probabilistic $500 reward plotted as a function of the odds against its receipt. The curved lines represent the expected value and the alternative forms of the probability discounting function (Equations 4 and 5) fit to the data. Data are from Experiment 1 of “Amount of Reward Has Opposite Effects on the Discounting of Delayed and Probabilistic Outcomes,” by L. Green, J. Myerson, and P. Ostaszewski, 1999a, Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, p. 421. Copyright 1999 by the American Psychological Association.

As may be seen in Figure 11, the subjective value of the hypothetical $500 reward deviates systematically from its expected value (i.e., the product of probability and amount, indicated by the dashed curve). This systematic deviation is of some theoretical significance. First, it represents an anomaly from the standpoint of standard microeconomic theory, which assumes a rational decision maker. Second, the nature of the deviation is such that behavior is risk averse when the odds against receiving a reward are low (i.e., when the probability of reward is high) but risk seeking when the odds against are high (i.e., when the probability of reward is low). This finding, which is predicted by prospect theory (Kahneman & Tversky, 1979), is also predicted by the hyperbola-like form of the probability discounting function when the exponent is less than 1.0. The observed change from risk aversion to risk seeking as the likelihood of reward decreases may explain the extraordinary popularity of state lotteries, for which the expected value of a $1 ticket may be appreciably less than its $1 nominal value. For example, Lyons and Ghezzi (1995) reported that the expected value of $1 lottery tickets in Arizona and Oregon averaged $0.54 and $0.65, respectively, over the several years examined.
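The shift from risk aversion to risk seeking can be made concrete. Expressed as a proportion of amount, expected value is 1/(1 + Θ) = p, which falls off roughly as 1/Θ at large odds against, whereas Equation 5, V = A/(1 + hΘ)^s, falls off roughly as Θ^(−s), which is slower when s < 1.0; the hyperbola-like curve therefore eventually lies above expected value. With the hypothetical values h = 4 and s = 0.5 used in this sketch, it also starts below expected value, and the two curves cross where (1 + 4Θ)^0.5 = 1 + Θ, that is, at Θ = 2 (p = 1/3).

```python
H, S = 4.0, 0.5  # hypothetical Equation 5 parameters (s < 1)


def eq5_proportion(theta, h=H, s=S):
    """Equation 5 as a proportion of amount: 1 / (1 + h*theta)^s."""
    return 1.0 / (1.0 + h * theta) ** s


def expected_proportion(theta):
    """Expected value as a proportion of amount: 1 / (1 + theta) = p."""
    return 1.0 / (1.0 + theta)


def risk_attitude(theta, tol=1e-12):
    """Compare the discounted value with expected value at a given odds against."""
    v, ev = eq5_proportion(theta), expected_proportion(theta)
    if abs(v - ev) < tol:
        return "risk neutral"
    return "risk averse" if v < ev else "risk seeking"


for theta in (0.25, 1.0, 2.0, 9.0, 99.0):
    print(f"odds against = {theta:>5}: {risk_attitude(theta)}")
```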

Do Probability and Temporal Discounting Reflect the Same Underlying Process?

The finding that the same form of mathematical function describes both temporal and probability discounting raises the possibility (although it does not prove) that they both reflect a single discounting process (Green & Myerson, 1996; Rachlin et al., 1986, 1994; Stevenson, 1986). Even if a single process does underlie both types of discounting, however, the similar form of the temporal and probability discounting functions does not indicate whether one type of discounting is more fundamental. For example, Rachlin et al. (1991) argued that temporal discounting is the more fundamental process. They suggested that probability discounting arises because probabilistic rewards are experienced as repeated gambles in which the lower the probability, the more times, on average, the gamble has to be repeated before a win occurs, and thus, the longer one has to wait for a reward. It also has been suggested (e.g., Myerson & Green, 1995), however, that probability discounting could be the fundamental process, and that temporal discounting arises because with longer delays there could be a greater risk that the expected or promised reward will not actually be received (see also Fehr, 2002; Stevenson, 1986). This view is common in the behavioral ecology literature, in which the relevant risks include losing food items to competitors or having foraging interrupted by a predator (e.g., Houston, Kacelnik, & McNamara, 1982).
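The repeated-gambles argument of Rachlin et al. (1991) rests on a simple property of independent gambles: if each play wins with probability p, the number of losing plays before the first win follows a geometric distribution with mean (1 − p)/p, which is exactly the odds against, Θ. If each play takes a fixed time t (a hypothetical assumption), the expected wait is tΘ, and substituting that wait into Equation 2 gives V = A/(1 + ktΘ), which has the form of Equation 4, V = A/(1 + hΘ), with h = kt. Using the mean wait rather than averaging over the whole distribution of waits is itself a simplification. The simulation below checks only the mean-number-of-losses property.

```python
import random


def mean_losses_before_win(p, sequences=100_000, seed=1):
    """Simulate sequences of independent gambles that each win with probability p
    and return the average number of losing plays before the first win."""
    rng = random.Random(seed)
    total_losses = 0
    for _ in range(sequences):
        losses = 0
        while rng.random() >= p:  # rng.random() < p counts as a win
            losses += 1
        total_losses += losses
    return total_losses / sequences


for p in (0.5, 0.25, 0.1):
    theta = (1.0 - p) / p
    print(f"p = {p}: odds against = {theta:.2f}, "
          f"simulated losses = {mean_losses_before_win(p):.2f}")
```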

Prelec and Loewenstein (1991) have proposed an account of discounting and related phenomena that highlights the parallels between the discounting of delayed and probabilistic rewards. As in prospect theory (Kahneman & Tversky, 1979), the process underlying evaluation of multiattribute outcomes is decomposed into two subprocesses, one of which transforms the amount according to a value function and the other of which describes the weight given to other attributes (e.g., probability or delay), with the utility of the outcome being equal to the product of its value and the weight applied to its other attributes. Also following prospect theory, Prelec and Loewenstein assumed an asymmetry between the value functions for gains and losses. They termed this property loss amplification and, going beyond prospect theory, they applied it to the evaluation of delayed as well as probabilistic outcomes.

Prelec and Loewenstein (1991) assumed separate hyperbola-like weighting functions for probability and for delay. The similar forms of these weighting functions follow from the fact that they are assumed to share two fundamental properties that Prelec and Loewenstein termed decreasing absolute sensitivity and increasing proportional sensitivity. In a two-alternative situation, decreasing absolute sensitivity refers to a decrease in the weight given an attribute (e.g., delay) when a constant is added to the values of that attribute for both outcomes. For example, when the delay to both outcomes is increased equally, the weight given to delay decreases and amount plays a larger role in determining preference. Preference reversals in choice between delayed rewards (e.g., Green et al., 1981; Green, Fristoe, & Myerson, 1994) may represent an example of decreasing absolute sensitivity. Hyperbola-like discounting functions predict decreasing absolute sensitivity. Increasing proportional sensitivity refers to an increase in the weight given an attribute when the values of that attribute are multiplied by a constant. The Allais paradox and the certainty effect represent examples of increasing proportional sensitivity.

A single-process view (e.g., Rachlin et al., 1991) that assumes that the same psychological processes underlie discounting of both delayed and probabilistic rewards and a view that emphasizes the strong similarities between temporal and probability discounting (e.g., Prelec & Loewenstein, 1991) both would predict that variables that affect temporal discounting should have a similar effect on probability discounting, and vice versa. Recently, however, evidence has been accumulating that despite the similarity in the mathematical form of the discounting functions, a number of variables have different effects on temporal and probability discounting. The most notable case to emerge to date concerns the opposite effect that amount of reward has on the rate at which delayed and probabilistic rewards are discounted (e.g., Christensen, Parker, Silberberg, & Hursh, 1998; Green et al., 1999a).

Does Amount Have Similar Effects on Probability and Temporal Discounting?

Standard microeconomic accounts assume that the rate of discounting is independent of the amount being discounted, and until recently, most psychological accounts had ignored this issue. There is now a substantial body of evidence, however, that smaller delayed rewards are discounted more steeply than larger delayed rewards. The effect of amount on rate of temporal discounting (often referred to as the magnitude effect) has been shown in numerous studies of human decision making involving both real and hypothetical monetary rewards (e.g., Benzion, Rapoport, & Yagil, 1989; Green, Fristoe, & Myerson, 1994; Green, Fry, & Myerson, 1994; Green et al., 1997; Johnson & Bickel, 2002; Kirby, 1997; Kirby & Maraković, 1996; Myerson & Green, 1995; Raineri & Rachlin, 1993; Thaler, 1981), as well as in studies involving commodities as diverse as medical treatments, vacation trips, and job choices (Baker et al., 2003; Chapman, 1996; Chapman & Elstein, 1995; Raineri & Rachlin, 1993; Schoenfelder & Hantula, 2003).

In contrast to the general consensus regarding the effect of amount on human temporal discounting, the two studies of animals to examine the effect of amount on discounting curves both reported the absence of a magnitude effect (Green, Myerson, Holt, Slevin, & Estle, 2004; Richards, Mitchell, de Wit, & Seiden, 1997; see also Grace, 1999, who reached a similar conclusion using a concurrent-chains procedure). Richards et al. (1997) found that rats discounted larger and smaller amounts of delayed water reward at comparable rates. Green et al. (2004) reported that neither pigeons nor rats showed a magnitude effect when delayed food rewards were used.

A possible reason for the discrepancy between the human and the animal data may be that studies of temporal discounting in humans typically manipulate amount of reward over a much broader range. For the pigeons in the Green et al. (2004) study, the range of amounts studied was sixfold, and for rats the range was fourfold. In Richards et al. (1997), the range of water amounts studied with rats was only twofold. In contrast, the range of delayed reward amounts examined in humans has often been much greater. To take an extreme example, Raineri and Rachlin (1993) studied hypothetical monetary rewards ranging from $100 to $1 million. It is important to note, however, that differences in discounting rate have been obtained in humans with much smaller differences in amount (e.g., $10 vs. $20; Kirby, 1997) that are more comparable in proportional terms to the range of amounts examined in pigeons and rats. More animal studies dealing with this issue are clearly needed, but the data currently available support the view that although rats and pigeons might not show magnitude effects, similarly shaped temporal discounting curves are observed in all three species studied to date.

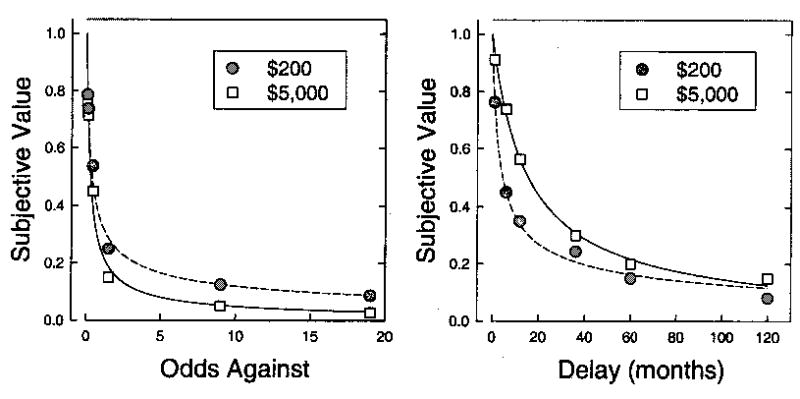

There has been much less research on the effect of amount on probability discounting than on temporal discounting, but the effect of amount on discounting probabilistic rewards in humans appears to be opposite in direction to that observed with delayed rewards (the effect of amount on probability discounting in animals has not yet been studied). That is, humans discount smaller probabilistic rewards less steeply than larger probabilistic rewards (Christensen et al., 1998; Du, Green, & Myerson, 2002; Green et al., 1999a; Myerson et al., 2003). This may be seen in the left graph in Figure 12, which depicts data from the $200 and $5,000 probabilistic reward conditions of Experiment 2 of Green et al. (1999a). In contrast, the right graph depicts the data from the corresponding delayed reward conditions of this experiment in which, as may be seen, the smaller reward was discounted more steeply than the larger reward. Green et al. (1999a) also examined discounting of three other hypothetical reward amounts and showed that as amount increased from $200 to $100,000, probabilistic rewards were discounted more and more steeply, whereas for delayed rewards, the degree of discounting decreased from $200 to $10,000, with little systematic change when amount was increased further.

Figure 12.

The subjective value of probabilistic $200 and $5,000 rewards (expressed as a proportion of their nominal amounts) plotted as a function of the odds against their receipt (left graph), and the subjective value of delayed $200 and $5,000 rewards as a function of the time until their receipt (right graph). The curved lines represent the hyperbola-like discounting function (Equation 5 in the left graph and Equation 3 in the right graph) fit to the data. Data are from Experiment 2 of “Amount of Reward Has Opposite Effects on the Discounting of Delayed and Probabilistic Outcomes,” by L. Green, J. Myerson, and P. Ostaszewski, 1999a, Journal of Experimental Psychology: Learning, Memory, and Cognition, 25, p. 423. Copyright 1999 by the American Psychological Association.
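
To make the two axes of Figure 12 concrete, the following minimal sketch computes subjective value as a proportion of the nominal amount under both discounting functions. It assumes that Equation 3 has the form V = A/(1 + kD)^s and Equation 5 the form V = A/(1 + hΘ)^s, with Θ = (1 - p)/p the odds against receipt. The function names and parameter values are hypothetical and are not the fitted values from Green et al. (1999a).

```python
def odds_against(p: float) -> float:
    """Convert a probability of receipt p (0 < p <= 1) into odds against, (1 - p) / p."""
    if not 0 < p <= 1:
        raise ValueError("p must lie in (0, 1]")
    return (1 - p) / p


def temporal_value(amount: float, delay: float, k: float, s: float) -> float:
    """Assumed form of Equation 3: V = A / (1 + k*D)**s."""
    return amount / (1 + k * delay) ** s


def probability_value(amount: float, p: float, h: float, s: float) -> float:
    """Assumed form of Equation 5: V = A / (1 + h*theta)**s, theta = odds against."""
    return amount / (1 + h * odds_against(p)) ** s


# Hypothetical parameters; values are printed as proportions of the nominal amount,
# which is how both panels of Figure 12 express subjective value.
for p in (0.9, 0.5, 0.1):
    print(f"p={p}: theta={odds_against(p):.2f}, "
          f"V/A={probability_value(200, p, h=1.5, s=0.8) / 200:.3f}")
for months in (1, 12, 60):
    print(f"D={months}: V/A={temporal_value(200, months, k=0.05, s=0.8) / 200:.3f}")
```

With h = 1 and s = 1, the assumed Equation 5 form reduces to V = pA, the expected value, which gives a convenient check on any implementation.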

Results consistent with those of Green et al. (1999a) have been obtained by Christensen et al. (1998) using a very different procedure. Both studies involved choices between a smaller, certain reward and a larger, probabilistic reward and also between a smaller, immediate reward and a larger, delayed reward. However, Christensen et al. adjusted the probability of the larger reward on the basis of a participant’s choice on the previous trial in the probability discounting conditions, whereas Green et al. (1999a) adjusted the amount of the smaller, certain reward. In addition, Christensen et al. adjusted the delay to the larger reward on the basis of a participant’s choice on the previous trial in the temporal discounting conditions, whereas Green et al. (1999a) adjusted the amount of the smaller, immediate reward. Despite these procedural differences, both studies found the same opposite effects of amount on temporal and probability discounting rates. Christensen et al. also reported that the pattern of results observed when participants had the possibility of receiving real monetary rewards was similar to that when participants made choices involving purely hypothetical rewards.
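
The adjusting-amount logic can be sketched as a titration: the smaller, immediate amount is raised after a choice of the delayed reward and lowered after a choice of the immediate one, and the value it settles on estimates the subjective value of the delayed reward. The starting point at half the delayed amount, the halving step rule, and the simulated chooser (a simple hyperbola with a hypothetical k) are assumptions for illustration, not the published protocol of either study.

```python
from typing import Callable

# A chooser takes (immediate_amount, delayed_amount, delay) and returns True
# if the immediate option is chosen.
Chooser = Callable[[float, float, float], bool]


def titrate_indifference(amount: float, delay: float, chooser: Chooser,
                         n_trials: int = 6) -> float:
    """Estimate the immediate amount judged equal to `amount` received after `delay`.

    Starts at half the delayed amount and moves by a step that halves every trial,
    so the estimate stays within two current steps of the true indifference point.
    """
    immediate = amount / 2
    step = amount / 4
    for _ in range(n_trials):
        if chooser(immediate, amount, delay):
            immediate -= step  # immediate option chosen: make it less attractive
        else:
            immediate += step  # delayed option chosen: make the immediate offer better
        step /= 2
    return immediate


def hyperbolic_chooser(k: float) -> Chooser:
    """Hypothetical participant who values a delayed reward at A / (1 + k*D)."""
    def choose(immediate: float, amount: float, delay: float) -> bool:
        return immediate >= amount / (1 + k * delay)
    return choose


estimate = titrate_indifference(1000, 12, hyperbolic_chooser(k=0.1))
print(f"estimated subjective value: {estimate:.1f} (true value {1000 / 2.2:.1f})")
```

The same loop could be adapted to the probability conditions by replacing the delayed reward with a probabilistic one; a Christensen et al. style variant would instead adjust the delay or probability of the larger reward.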

A recent study (Myerson et al., 2003) examined whether amount of hypothetical reward has different effects on the exponents of the temporal and probability discounting functions (Equations 3 and 5, respectively). Amount of reward had no significant effect on the exponent of the temporal discounting function in either of two relatively large samples (both Ns > 100). In contrast, the exponent of the probability discounting function increased significantly with the amount of reward in both samples. Moreover, subsequent analyses revealed that although the temporal discounting rate parameter, k, decreased significantly with amount of reward, there was no significant change in h, the probability discounting rate parameter. Thus, despite the similarity in the mathematical form of the temporal and probability discounting functions, the finding that amount of reward differentially affects the parameters of these functions suggests that separate processes may underlie the discounting of delayed and probabilistic rewards.
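
The parameter pattern reported by Myerson et al. (2003) can be illustrated numerically. This sketch assumes the Equation 3 and Equation 5 forms V/A = 1/(1 + kD)^s and V/A = 1/(1 + hΘ)^s and uses hypothetical parameter values chosen only to mimic the qualitative result: for delayed rewards, k falls with amount while s is held fixed; for probabilistic rewards, h is held fixed while s rises with amount.

```python
def temporal_proportion(delay: float, k: float, s: float) -> float:
    """Assumed Equation 3 form, as a proportion of the nominal amount."""
    return 1 / (1 + k * delay) ** s


def probability_proportion(theta: float, h: float, s: float) -> float:
    """Assumed Equation 5 form; theta is the odds against receipt."""
    return 1 / (1 + h * theta) ** s


# Hypothetical parameters (not fitted values) for a small and a large amount.
temporal_params = {"small": {"k": 0.05, "s": 0.8}, "large": {"k": 0.01, "s": 0.8}}
probability_params = {"small": {"h": 1.5, "s": 0.6}, "large": {"h": 1.5, "s": 0.9}}

DELAY = 24   # months
THETA = 3.0  # odds against, i.e., p = .25

for size in ("small", "large"):
    tv = temporal_proportion(DELAY, **temporal_params[size])
    pv = probability_proportion(THETA, **probability_params[size])
    print(f"{size}: delayed V/A = {tv:.3f}, probabilistic V/A = {pv:.3f}")
```

Raising the amount increases the delayed proportion through k but decreases the probabilistic proportion through s, which is the dissociation described in the text.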

The opposite effects of amount on temporal and probability discounting pose problems for approaches that decompose the discounting function into value and weighting functions and then localize, either explicitly or implicitly, the effect of amount in the value function. Because the same value function applies in both temporal and probability discounting, such approaches must predict similar magnitude effects in both domains. For example, Prelec and Loewenstein’s (1991) model localizes the effect of amount in the value function, which enables them to correctly predict the magnitude effect in choices involving delayed rewards. As applied to reward amount, increasing proportional sensitivity predicts that multiplying the amounts involved by a constant will increase the weight given to the amount attribute. Consider the case in which an individual must choose between $10 now and $20 in a year and also between $10,000 now and $20,000 in a year. Increasing proportional sensitivity predicts that an individual who chooses the immediate $10 option in the first situation might well choose the delayed $20,000 option in the second situation. This is consistent with the finding that larger delayed amounts are discounted less steeply than smaller amounts.
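
The choice pattern in this example, switching from the immediate $10 to the delayed $20,000, can be reproduced with a simple hyperbola whose rate parameter k is smaller for larger amounts. Note that this sketch places the amount effect in k, that is, in the weighting of delay, and not in a value function as Prelec and Loewenstein’s (1991) model does. The per-month k values and the amount threshold are hypothetical.

```python
def hyperbolic_value(amount: float, delay_months: float, k: float) -> float:
    """Simple hyperbola V = A / (1 + k*D), with D in months."""
    return amount / (1 + k * delay_months)


def k_for_amount(amount: float) -> float:
    """Hypothetical magnitude effect: larger amounts get a smaller rate parameter."""
    return 0.15 if amount < 1_000 else 0.05


def choose(immediate: float, delayed: float, delay_months: float) -> str:
    v_delayed = hyperbolic_value(delayed, delay_months, k_for_amount(delayed))
    return "immediate" if immediate > v_delayed else "delayed"


print(choose(10, 20, 12))          # $10 now vs $20 in a year -> immediate
print(choose(10_000, 20_000, 12))  # $10,000 now vs $20,000 in a year -> delayed
```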

Now consider the case in which an individual must choose between $10 for sure and a 50% chance of getting $20 and also between $10,000 for sure and a 50% chance of getting $20,000. Increasing proportional sensitivity predicts that an individual who chooses the $10 sure thing might well choose to gamble on getting $20,000 (note that this prediction is analogous to that involving delayed rewards). However, Prelec and Loewenstein (1991) pointed out the implausibility of this prediction. That is, it seems more likely that an individual who is risk averse with small amounts will be even more risk averse with large amounts. Indeed, in recent studies we have demonstrated empirically that risk aversion, as revealed by steeper probability discounting, increases with the amounts involved (Du et al., 2002; Green et al., 1999a; Myerson et al., 2003), contrary to the prediction of increasing proportional sensitivity.
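
The empirically observed pattern runs the other way. With a 50% chance the odds against are Θ = 1, and if the exponent s of the probability discounting function rises with amount, the gamble on $20 can be worth more than a sure $10 while the gamble on $20,000 is worth less than a sure $10,000. This sketch assumes the Equation 5 form V = A/(1 + hΘ)^s; the h and s values and the amount threshold are hypothetical.

```python
def probability_value(amount: float, p: float, h: float, s: float) -> float:
    """Assumed Equation 5 form: V = A / (1 + h*theta)**s, theta = (1 - p) / p."""
    theta = (1 - p) / p
    return amount / (1 + h * theta) ** s


def s_for_amount(amount: float) -> float:
    """Hypothetical: the exponent grows with amount, as Myerson et al. (2003) reported."""
    return 0.8 if amount < 1_000 else 1.2


def choose(sure: float, prize: float, p: float, h: float = 1.0) -> str:
    v_gamble = probability_value(prize, p, h, s_for_amount(prize))
    return "gamble" if v_gamble > sure else "sure thing"


print(choose(10, 20, 0.5))          # $10 for sure vs 50% chance of $20 -> gamble
print(choose(10_000, 20_000, 0.5))  # $10,000 for sure vs 50% of $20,000 -> sure thing
```

Multiplying both amounts by 1,000 thus increases risk aversion, the opposite of what increasing proportional sensitivity predicts.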

These problems may be avoided by localizing magnitude effects, not in the value function, but rather in the weighting functions for probabilistic and delayed outcomes and by assuming that amount affects these weighting functions in different ways. Such an approach would be consistent with the view, expressed earlier, that different processes underlie the discounting of delayed and probabilistic outcomes. For example, the derivation of the hyperbola-like temporal discounting function (Equation 3) proposed by Myerson and Green (1995) suggests that the discounting function may be decomposed into value and weighting functions along the lines proposed by Kahneman and Tversky (1979). That is, the derivation of Equation 3 may be thought of as assuming that the value of a given amount of money is a power function ($cA^r$) of the amount, and that this value is multiplied by (i.e., weighted by) $1/[a(D + m)^q]$ to obtain the utility (which Myerson & Green, 1995, termed the subjective value) of a delayed reward (see the discussion of Equation 3 presented earlier). Because this derivation is based on the assumption that individuals making choices involving delayed rewards are actually evaluating rates of reward, Myerson and Green suggested that m may reflect, not only the minimum delay to any reward, but also the time between choice opportunities. If opportunities involving larger amounts occur less frequently, then such amounts will be discounted less steeply. This would account for the magnitude effect with delayed rewards.
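
The algebra behind this decomposition can be checked directly. Assuming subjective value is rescaled so that an immediate reward (D = 0) is worth its nominal amount A (a normalization chosen for this sketch), cA^r/[a(D + m)^q] reduces to A/(1 + D/m)^q. That is the assumed Equation 3 form V = A/(1 + kD)^s with k = 1/m and s = q; the constants c, r, and a cancel. The parameter values below are arbitrary.

```python
def rate_based_value(amount, delay, c, r, a, m, q):
    """Decomposition described in the text: value c*A**r weighted by 1 / (a*(D + m)**q)."""
    return c * amount ** r / (a * (delay + m) ** q)


def normalized_value(amount, delay, **params):
    """Rescale so that a reward available now (D = 0) is worth its nominal amount."""
    return amount * rate_based_value(amount, delay, **params) / rate_based_value(amount, 0, **params)


def equation3(amount, delay, k, s):
    """Assumed Equation 3 form: V = A / (1 + k*D)**s."""
    return amount / (1 + k * delay) ** s


params = {"c": 2.0, "r": 0.7, "a": 0.5, "m": 4.0, "q": 0.9}  # arbitrary values
for delay in (0, 1, 6, 24):
    lhs = normalized_value(1000, delay, **params)
    rhs = equation3(1000, delay, k=1 / params["m"], s=params["q"])
    print(delay, round(lhs, 2), round(rhs, 2))

# A longer interval between choice opportunities (larger m) means a smaller k = 1/m,
# so the same delay costs less value: shallower discounting.
sparse = dict(params, m=12.0)
print(normalized_value(1000, 24, **sparse) > normalized_value(1000, 24, **params))
```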

Similarly, in the case of probability discounting, the parameter h may reflect the extent to which individuals distrust the stated odds against receiving the reward. If individuals place less trust in the stated odds for larger than for smaller amounts (i.e., they consider the odds against to be greater than they are told, which is reflected in larger h values), then this would lead to the steeper discounting (and greater risk aversion) observed with larger probabilistic rewards. Admittedly, these speculations concerning the role of amount in the weighting functions for delayed and probabilistic rewards are post hoc. Nevertheless, they illustrate an approach to explaining the opposite effects that amount of reward has on temporal and probability discounting. Because this approach localizes these effects in the weighting functions, it allows for the differing magnitude effects observed with delayed and probabilistic rewards, whereas an approach in which amount only affects the value function (e.g., Prelec & Loewenstein, 1991) has difficulty accounting for such findings.
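
One way to read h as distrust is as a multiplier on the stated odds against: the reward is discounted as if the odds against were hΘ rather than Θ. This sketch assumes the Equation 5 form V/A = 1/(1 + hΘ)^s with s = 1 and uses hypothetical h values for a small and a large amount; it illustrates the speculation in the text, not a fitted result.

```python
def perceived_odds(p: float, h: float) -> float:
    """Stated odds against, (1 - p) / p, scaled by a hypothetical distrust factor h."""
    return h * (1 - p) / p


def probability_proportion(p: float, h: float, s: float = 1.0) -> float:
    """Assumed Equation 5 form, as a proportion of the nominal amount."""
    return 1 / (1 + perceived_odds(p, h)) ** s


H_SMALL, H_LARGE = 1.0, 2.0  # hypothetical: more distrust when more is at stake
for p in (0.9, 0.5, 0.25):
    print(f"p={p}: small V/A={probability_proportion(p, H_SMALL):.3f}, "
          f"large V/A={probability_proportion(p, H_LARGE):.3f}")
```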

Do Other Variables Have Different Effects on Probability and Temporal Discounting?

In addition to amount of reward, there appear to be other variables that influence the discounting of delayed and probabilistic rewards in different ways. For example, Ostaszewski et al. (1998) showed that inflation affects temporal discounting but does not affect probability discounting. Ostaszewski et al. (Experiments 1 and 2) studied discounting during a period of extremely high inflation in Poland and examined participants’ choices involving both Polish zlotys and U.S. dollars. Hypothetical rewards in the two currencies were of equivalent worth according to the then-current exchange rates. For probabilistic rewards, the rates of discounting were the same for both dollars and zlotys. In contrast, for delayed rewards, discounting was much steeper when amounts were expressed in terms of zlotys than when they were expressed in terms of their dollar equivalents.

Selective effects, like that of inflation on temporal discounting, present a challenge for single-process theories of discounting. If probabilistic rewards are discounted because of the inherent delay involved in waiting for repeated gambles to pay off (Rachlin et al., 1991), then inflation’s effect on the value of delayed rewards should be mimicked in its effect on probabilistic rewards. A similar prediction follows from the hypothesis that temporal discounting reflects the risk inherent in waiting for delayed rewards. Thus, Ostaszewski et al.’s (1998) findings are inconsistent with both forms of single-process accounts.
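
The repeated-gambles account rests on a simple fact about independent gambles: if each gamble pays off with probability p, the expected number of losing gambles before the first win is (1 - p)/p, exactly the odds against. If gambles occur at a fixed pace, the expected wait for a win is therefore proportional to Θ. The simulation below checks this; the seed, number of simulated runs, and probabilities are arbitrary choices.

```python
import random


def losses_before_win(p: float, rng: random.Random) -> int:
    """Play independent gambles with win probability p; count losses before the first win."""
    losses = 0
    while rng.random() >= p:  # rng.random() < p counts as a win
        losses += 1
    return losses


def mean_losses(p: float, n: int = 100_000, seed: int = 1) -> float:
    """Average number of losses before a win over n simulated runs."""
    rng = random.Random(seed)
    return sum(losses_before_win(p, rng) for _ in range(n)) / n


for p in (0.5, 0.25, 0.1):
    print(f"p={p}: simulated {mean_losses(p):.2f}, odds against {(1 - p) / p:.2f}")
```

Under this account, anything that changes how delay is valued, such as inflation, should change probability discounting as well, which is the prediction that Ostaszewski et al.’s (1998) data contradict.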

The results of a recent study examining cultural similarities and differences (Du et al., 2002) are also inconsistent with single-process accounts of the discounting of delayed and probabilistic rewards. Such accounts would predict that people raised in a culture in which probabilistic rewards are discounted very steeply would also discount delayed rewards very steeply, and vice versa. To assess cultural differences in discounting, Du et al. (2002) compared the performance of American, Chinese, and Japanese graduate students on temporal and probability discounting tasks. The Americans showed relatively steep discounting on both tasks. More relevant to the question of whether a single process is involved in both, the Chinese discounted delayed rewards more steeply than the Japanese, but the Japanese discounted probabilistic rewards more steeply than the Chinese.

These results suggest that temporal and probability discounting are dissociable by cultural processes, contrary to the predictions of single-process theories. It also is noteworthy that despite the cultural differences, Equations 3 and 5 provided accurate descriptions of the discounting of all three groups: For each group, the median proportion of variance accounted for by fits to individual data was greater than .930 on both tasks. In addition, all three groups showed opposite effects of amount on the discounting of delayed and probabilistic rewards. That is, in all three groups the rate at which delayed rewards were discounted was higher for the smaller amount, whereas the rate at which probabilistic rewards were discounted was lower for the smaller amount. Taken together, these findings suggest that, despite the cultural differences in discounting rates, there are significant commonalities among the different cultures with respect to the processes underlying evaluation of delayed and probabilistic rewards (Du et al., 2002).
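
The proportion of variance accounted for by a fit is computed in the usual way as R^2 = 1 - SS_residual/SS_total. This sketch fits the assumed Equation 3 form, V/A = 1/(1 + kD)^s, to one hypothetical participant’s indifference points by a coarse grid search over k and s (a deliberately simple stand-in for a nonlinear least-squares routine) and reports R^2. The data and grid ranges are invented for illustration.

```python
def equation3_proportion(delay: float, k: float, s: float) -> float:
    """Assumed Equation 3 form, as a proportion of the nominal amount."""
    return 1 / (1 + k * delay) ** s


def r_squared(observed: list[float], predicted: list[float]) -> float:
    """Proportion of variance accounted for: 1 - SS_residual / SS_total."""
    mean = sum(observed) / len(observed)
    ss_total = sum((y - mean) ** 2 for y in observed)
    ss_resid = sum((y - f) ** 2 for y, f in zip(observed, predicted))
    return 1 - ss_resid / ss_total


def fit_grid(delays: list[float], observed: list[float]):
    """Coarse grid search over k and s minimizing squared error (illustrative only)."""
    best = None
    for i in range(50):
        k = 0.001 * 1.15 ** i  # k from 0.001 up to roughly 0.94
        for j in range(1, 16):
            s = 0.1 * j  # s from 0.1 to 1.5
            pred = [equation3_proportion(d, k, s) for d in delays]
            sse = sum((y - f) ** 2 for y, f in zip(observed, pred))
            if best is None or sse < best[0]:
                best = (sse, k, s, pred)
    _, k, s, pred = best
    return k, s, r_squared(observed, pred)


# Hypothetical indifference points (proportions of the delayed amount) for one participant.
delays = [1, 6, 12, 24, 60, 120]  # months
observed = [0.95, 0.80, 0.70, 0.55, 0.38, 0.27]
k, s, r2 = fit_grid(delays, observed)
print(f"k = {k:.3f}, s = {s:.1f}, R^2 = {r2:.3f}")
```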

Applications to Group Differences

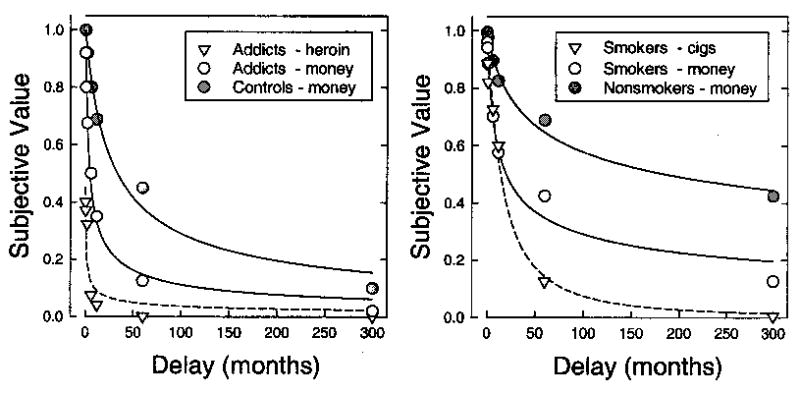

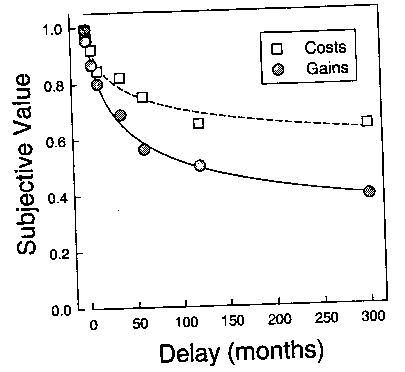

The Du et al. (2002) study of cultural differences exemplifies a growing body of research concerned with group differences in discounting. One of the first studies to take this approach was the Green, Fry, and Myerson (1994) study of age differences. In a follow-up study, Green, Myerson, Lichtman, Rosen, and Fry (1996) examined the effects of income on temporal discounting in older adults (mean age = 71 years), comparing a lower income group (median income less than $10,000 per year and living in subsidized housing) with a higher income group (median income approximately $50,000 per year). The two groups were approximately equal in years of education. Green et al. (1996) observed that the data from both groups were well described by a hyperbola (Equation 2), but the lower income older adults discounted delayed rewards much more steeply than the higher income older group. A younger group (mean age = 33 years) of upper income adults was also tested, and no age difference was observed between the two upper income groups. The relative stability of discounting in adults between the ages of 30 and 70 parallels previous findings of the stability of personality traits over this age range (Costa & McCrae, 1989) and suggests that in adults, income comes to play a larger role than age in discounting.