Abstract

Performance feedback has facilitated the acquisition and maintenance of a wide range of behaviors (e.g., health-care routines, seat-belt use). Most researchers have attributed the effectiveness of performance feedback to (a) its discriminative functions, (b) its reinforcing functions, or (c) the combination of the two. In this study, we attempted to evaluate the relative contributions of the discriminative and reinforcing functions of performance feedback by comparing a condition in which the discriminative functions were maximized and the reinforcing functions were minimized (i.e., performance-specific instructions without contingent money) with one in which the reinforcing functions were maximized and the discriminative functions were minimized (i.e., contingent money with no performance-specific instructions). We compared the effects of these two conditions on the acquisition of skills involved in conducting two commonly used preference assessments. Results showed that acquisition of these skills occurred primarily in the condition with performance-specific instructions without contingent money, suggesting that the delivery of performance-specific instructions was critical to skill acquisition, whereas the delivery of contingent money had little effect.

Keywords: behavioral skills training, feedback, organizational behavior management, preference assessments, staff training

Feedback has facilitated the acquisition and maintenance of a wide range of behaviors including customer service (Crowell, Anderson, Abel, & Sergio, 1988), academic behavior (Van Houten, Morrison, Jarvis, & McDonald, 1974), health-related behavior (Alavosius & Sulzer-Azaroff, 1990), staff training (Parsons & Reid, 1995), treatment integrity (Witt, Noell, LaFleur, & Mortenson, 1997), and implementation of functional analyses (Iwata et al., 2000). Feedback has also been effective in reducing various problem behaviors, including phobic behavior (Leitenberg, Agras, Thompson, & Wright, 1968), activity level of children (Schulman, Suran, Stevens, & Kupst, 1979), and safety hazards (Sulzer-Azaroff & de Santamaria, 1980).

Feedback has been widely used in organizational and industrial settings (Ford, 1980) and is the most frequently used method to change behavior in organizations (Prue & Fairbank, 1981). In a review of the feedback literature, Balcazar, Hopkins, and Suarez (1985) found that feedback alone resulted in consistent performance effects in 28% of the articles reviewed and that contingent reinforcement resulted in more consistent effects. In a more recent review, Alvero, Bucklin, and Austin (2001) also found that feedback combined with consequences (e.g., differential reinforcement) increased the efficacy of feedback. Alvero et al. noted that the lack of consistency in the use of the term feedback made it difficult to identify articles that used feedback as an independent variable. The authors also stated that it is important to assess the functional mechanisms of feedback. In a review that examined contingent money (also referred to as monetary incentive systems), Bucklin and Dickinson (2001) found that contingent money plus feedback improved performance relative to noncontingent money (hourly pay) plus feedback. In addition, performance under different performance-pay arrangements (e.g., schedule of money delivery, percentage of base pay earned) was similar. The authors noted that one explanation for similar outcomes across varying pay arrangements might have been the inclusion of feedback. The authors also stated, “it is important to isolate the effects of monetary incentives from those of feedback” (p. 128).

Although several studies have demonstrated the effectiveness of feedback, the definitions and components used to implement feedback have not been consistent. For example, feedback has been defined as “delivering praise for successful performance in a behavioral rehearsal and instruction on ways to improve the performance in the future” (Miltenberger, 2001, p. 492). Mazur (1998) defined feedback as giving the subject information about the accuracy of each response. Catania (1998) defined feedback as “a stimulus or stimulus property correlated with or produced by the organism's own behavior. The stimulus may in turn change the behavior” (p. 390). Sulzer-Azaroff and Mayer (1991) defined feedback as “information transmitted back to the responder following a particular performance: seeing or hearing about specific features of the results” (p. 590). Based on these definitions, the term feedback encompasses two components: one involves the delivery of a potential reinforcer (e.g., praise, money), and the other involves the delivery of information about correct or incorrect performance. Other authors have noted that the feedback literature has not addressed the potential behavioral mechanisms that are responsible for its effects (Duncan & Bruwelheide, 1986). Peterson (1982) noted that feedback may serve multiple functions and that few researchers have analyzed its effects in terms of basic principles of behavior.

Most of the studies that have examined the effectiveness of feedback have delivered information about performance and potentially reinforcing components simultaneously. For example, Alavosius and Sulzer-Azaroff (1990) used this type of feedback when they assessed the effects of feedback on the acquisition and maintenance of health-care routines. During the feedback sessions, experimenters reviewed with each participant whether he or she had performed each component of the task correctly and offered specific suggestions for improving subsequent performance. In addition, during these sessions, the experimenter delivered “approval of increasingly correct performance” (p. 155). Results indicated that feedback was highly effective in increasing correct performance. Austin, Kessler, Riccobono, and Bailey (1996) assessed the effects of feedback and reinforcement (contingent food and money) for improving the performance of a roofing crew. Results showed that this intervention reduced labor costs because the job was completed early. Because both information and potential reinforcers (praise, food, or money) were used simultaneously, it is unclear whether the information about performance, the potential reinforcers, or both were necessary for task acquisition.

A number of researchers have evaluated the informational component of feedback alone, without approval or another potential reinforcer. For example, O'Brien and Azrin (1970) demonstrated that delivery of informational feedback, in the form of a mild vibrotactile stimulus, decreased participants' slouching behavior. To ensure that the stimulus did not function as a punisher, the authors conducted a control condition in which the participants were instructed to slouch; participants' slouching increased during this condition. More recently, Kuhn, Lerman, and Vorndran (2003) illustrated the effects of a pyramidal program to train family members of children with behavior disorders to implement individualized treatments for problem behavior and to train other family members to implement the program. Training consisted of feedback in the form of statements about incorrect implementation of treatment and instructions describing the correct procedure. Results indicated that the training procedure was effective in increasing caregivers' correct implementation of the intervention.

Some authors have compared the effects of feedback alone with the effects of feedback plus praise. For example, Leitenberg et al. (1968) compared the effects of feedback alone with feedback and praise for decreasing phobic behavior (by increasing the amount of time the participant exposed him- or herself to the phobic item). During feedback, the participant was given a stopwatch and was told to record the time for each trial; no praise was delivered for improved performance during this condition. During a subsequent feedback and reinforcement condition, praise was delivered. Results indicated substantial improvements during the feedback condition; however, no additional improvement was observed as a result of including praise. Cossairt, Hall, and Hopkins (1973) assessed the effects of feedback alone for increasing teacher praise on student attending. Feedback (consisting of information about how often the teachers praised the students' attending) did not increase teacher praise, whereas when the teachers received praise for their delivery of praise to students, the teachers' use of praise increased. Because the effects of contingent praise were never examined in isolation, it remains unclear whether reinforcement alone would have increased teacher praise. Gaetani, Hoxeng, and Austin (1985) evaluated the effects of feedback with and without contingent monetary commissions for increasing daily productivity (in dollars billed) of 2 machinists. Results showed increases in performance for feedback alone; however, greater increases in production were observed when contingent money was combined with feedback. Thus, these three studies produced differing findings regarding whether feedback alone and feedback with reinforcement improved performance. In none of the studies was reinforcement assessed independently, precluding a direct comparison of these two training components.

Few studies have assessed the effects of contingent reinforcement with and without feedback. Smoot and Duncan (1997) compared the effects of contingent money with and without performance feedback for increasing worker performance on a simple production task. Experimental control was demonstrated using within-subject and between-groups comparisons. Following baseline, groups of two were exposed to three different pay arrangements (linear piece-rate pay, accelerating piece-rate pay, and decelerating piece-rate pay) with feedback. During the final phase, the feedback component was removed for one of the groups. Results showed high levels of productivity when contingent money was combined with feedback, and performance did not deteriorate when feedback was removed, suggesting that feedback did not enhance the effects of contingent money. The authors noted, however, that the effects of feedback in the contingent-money-plus-feedback condition could have carried over to the contingent-money-without-feedback condition. Bucklin, McGee, and Dickinson (2003) evaluated the effects of contingent money with and without feedback. Seven college students served as participants, and their correct performance on a computer-based task was measured. The authors compared three conditions: contingent money without feedback, contingent money with feedback, and hourly pay (noncontingent money) with feedback. Results showed that feedback enhanced the effects of contingent money. However, because performance did not reverse during the return to contingent money only, these results must be interpreted with caution. The authors noted that performance increases observed during the contingent-money-plus-feedback condition might have carried over to the subsequent contingent-money condition. The authors recommended that an alternative to the within-subject reversal design be used to evaluate the effects of these variables in the future.

We have found only one study that assessed the independent effects of feedback and reinforcement (Wincze, Leitenberg, & Agras, 1972). The authors assessed the independent effects of feedback (vocally telling participants when their verbalizations were correct or incorrect and also providing the correct response) and reinforcement (tokens that could be exchanged for preferred activities or items) for decreasing the delusional talk of 10 individuals with schizophrenia. Results indicated that feedback alone was ineffective, whereas reinforcement alone was effective. However, a number of factors may have limited the generality of these findings: The dependent variable was based on nurses' inferences, participants' inappropriate vocal behavior may have been maintained by social reinforcers (i.e., feedback may have functioned as social reinforcement), and individual histories may have affected the results.

The purpose of the current study was to extend this line of research by conducting an investigation that would further delineate the relative roles of feedback and reinforcement in staff training, an activity that routinely involves feedback. To this end, we compared the relative efficacy of a treatment component that maximized the discriminative properties of feedback and minimized the reinforcement properties (a feedback-only condition) versus a component that maximized the reinforcing properties and minimized the discriminative properties (a contingent-money condition).

To evaluate the effects of feedback, we chose two commonly used stimulus-preference assessment procedures, the paired-stimulus preference assessment (Fisher et al., 1992) and the multiple-stimulus-without-replacement (MSWO) assessment (DeLeon & Iwata, 1996). Because correct implementation of preference assessments requires a chain of responses that are different but are of similar complexity across methods, this experimental task allowed comparison of training components applied to each of these methods. In addition, we selected this task because only one study to date has evaluated the efficacy of staff training for increasing staff implementation of preference assessments (Lavie & Sturmey, 2002).

General Method

Participants and Setting

There were three types of participants in this investigation: adults who were being trained to conduct preference assessments (henceforth referred to as “trainees”), adults playing the role of a client whose preferences were being assessed (henceforth referred to as “simulated clients”), and children who were receiving services in an outpatient clinic who needed preference assessments (henceforth referred to as “real clients”).

Trainees

Four individuals who had little or no experience in conducting stimulus preference assessments participated in this study. All trainees were female. All held a bachelor's degree and had received no formal training in conducting stimulus preference assessments. All trainees provided written informed consent for the use of their data as part of a research project. They were informed that their performance in this study would not be used to evaluate their job performance.

Simulated Clients

To keep the level of difficulty constant across the trainees, individuals who had worked in an outpatient behavior program for at least 1 month played the role of simulated clients throughout the study. During sessions, these simulated clients had access to scripts (see below) indicating how to respond during each session.

Real Clients

To assess the generality of each of the training components, probe sessions were conducted in the therapy room. These probe sessions were procedurally identical to sessions with simulated clients, except that 2 real clients (Sam and James) participated in their natural setting without the use of scripts. Sam was a 4-year-old boy with autism who had been referred for the assessment and treatment of severe self-injurious behavior. He did not speak. James was a 12-year-old boy with profound mental retardation who had been referred for the assessment and treatment of aggression. He spoke using single-word utterances. These clients were selected because they frequently emitted problem behavior, which is representative of some of the natural challenges encountered by therapists who conduct preference assessments in a natural setting.

Setting and Materials

Training sessions were conducted in an office or session room at a day-treatment program for individuals with developmental disabilities. Items necessary for conducting a preference assessment (e.g., table and chairs, leisure materials, paper, pen, stopwatch, and calculator) were provided. A videocamera was always present, and all sessions were videotaped for subsequent data collection (if needed) and for use during the feedback condition (see below).

Preference Assessments for Trainees

A brief preference assessment was conducted with the trainees prior to any baseline or treatment sessions. The purpose of this assessment was to identify items that may function as reinforcers that could be used during subsequent training conditions. A list containing 10 different putative preferred items or activities (e.g., money, gift certificates, time off work) was handed to each trainee, and she was instructed to rank each item from 1 (most preferred) to 10 (least preferred). All trainees chose money (a maximum of $10) as the most preferred item; this item was delivered during the contingent-money component of training (see below).

Preference Assessments for Simulated and Real Clients

Two commonly used preference assessment methods, the paired-stimulus method and the MSWO method, based on procedures described by Fisher et al. (1992) and DeLeon and Iwata (1996), respectively, were used as the experimental tasks. The trainees were trained to conduct these two preference assessments with the simulated and real clients. Slight modifications to the procedures of the paired-stimulus and MSWO methods were included in the current investigation to specify correct responding for specific client distracter behaviors that were not described by Fisher et al. and DeLeon and Iwata. For example, Fisher et al. did not indicate how the therapist should respond if a client selects two items sequentially (i.e., selects two items in rapid succession) during the paired-stimulus method; thus, we developed a rule for trainees to follow if the client responded in this manner (i.e., remove the stimuli and reinitiate the trial with those items). DeLeon and Iwata did not indicate how the therapist should respond when the client selected two items simultaneously during the MSWO method; thus, we developed a rule for trainees to follow if the client responded in this manner (i.e., the client was not permitted access to either item, the stimuli were rotated, and the trial was reinitiated). Correct implementation of the preference assessments involved the correct presentation of materials and prompts and the delivery of consequences by a trainee. Below are descriptions indicating the correct implementation of each preference assessment method. Note that if a trainee emitted all of the responses described below in a given session, she would obtain a score of 100% correct for that session. Also note that we use the term therapist (rather than trainee) in this next section because we are specifying the correct responses for implementing the paired-stimulus and MSWO procedures (which the trainees may or may not emit).

During the paired-stimulus assessment, leisure items were randomly presented in pairs. During each trial, the therapist placed two items in front of the client, spaced 30.5 cm apart, and instructed him or her to “pick one.” If the client selected an item within 5 s, the therapist immediately permitted access to the item selected and removed the other item. After 5 s, the item selected was removed, and a new trial with two different stimuli was initiated. If the client selected both items simultaneously or sequentially (selected each item in rapid succession), the therapist immediately removed both stimuli (withdrew them from the table) and re-presented them (placed them back on the table in front of the client, spaced 30.5 cm apart, and instructed the client to “pick one”). If the client did not select an item within 5 s, the therapist immediately removed both stimuli, physically prompted the client to sample each of the stimuli (by sequentially placing each of the items in the client's hand for 5 s), and re-presented them (as described above). If the client did not select an item within 5 s or selected both items simultaneously or sequentially following re-presentation, both items were removed, and the therapist continued to the next trial (i.e., she presented two new stimuli). If the client grabbed an item other than those presented, the therapist immediately blocked access to or removed the grabbed item and continued with the current trial. The therapist recorded client responses for each trial by circling the corresponding symbol on the data sheet (e.g., circling 1 if the client selected Item 1). The therapist summarized client data by adding the total number of selections for each item and dividing this number by the total number of presentations for that item and multiplying by 100% to yield a percentage selection measure for each item in the array.
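The summary calculation described above (selections divided by presentations, multiplied by 100%) can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' procedure: the trial record format (the two presented items plus the selected item, or None when no single item was selected) and the function names are hypothetical, and each recorded trial is assumed to count as one presentation of each item in the pair.

```python
from collections import Counter

def percentage_selection(trials):
    """Percentage selection per item from paired-stimulus trials.

    Each trial is a hypothetical (item_a, item_b, selected) tuple, where
    `selected` is the chosen item or None if no single item was selected.
    """
    presentations = Counter()
    selections = Counter()
    for item_a, item_b, selected in trials:
        # Each recorded trial counts as one presentation of both items (assumption).
        presentations[item_a] += 1
        presentations[item_b] += 1
        if selected is not None:
            selections[selected] += 1
    return {item: 100.0 * selections[item] / presentations[item]
            for item in presentations}

def rank_items(percentages):
    """Order items from most to least preferred; ties keep their original order."""
    return sorted(percentages, key=percentages.get, reverse=True)

# Hypothetical data: four trials with three items.
trials = [("A", "B", "A"), ("A", "C", "C"), ("B", "C", "C"), ("A", "B", None)]
percentages = percentage_selection(trials)
hierarchy = rank_items(percentages)
```

With these made-up trials, Item C is selected on both of its presentations, Item A on one of three, and Item B on none, so the resulting hierarchy is C, A, B.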

During the MSWO preference assessment, prior to the start of the first session, the therapist presented each of the seven items, one at a time, for 30 s. Next, the therapist simultaneously presented seven leisure items by placing all items in a straight line or arc in front of the client and instructed him or her to “pick one.” If the client selected an item within 30 s, the therapist immediately permitted access to that item for 30 s. After 30 s, the item was removed from the client, that item was not put back into the array, and the therapist rotated the positions of the remaining items. If the client selected two items in a sequential fashion, the client was permitted access to the item selected first for 30 s. After 30 s, the item was removed from the array. If the client selected two items simultaneously, the client was prevented from obtaining either of the items, and the therapist reinitiated the trial by rotating the items and saying “pick one.” If the client simultaneously selected two items a second consecutive time, all stimuli were removed, and a new session (i.e., all seven items were presented) was initiated. If the client did not select an item within 30 s, all stimuli were removed, and a new session (i.e., all seven items were presented) was initiated. If the client grabbed an item other than those presented, the therapist immediately blocked access to or removed the grabbed item and continued with the current trial. Therapists recorded client responses for each trial by circling the corresponding symbol on the data sheet. The therapist summarized client data by adding the total number of selections for each item and dividing that number by the total number of presentations for that item and multiplying by 100% to yield a percentage selection measure for each item in the array.
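The MSWO rules above amount to a mapping from the client's response on a trial to the correct therapist action. The sketch below encodes that mapping in Python as an illustration only; the response labels, the action wording, and the function name are hypothetical shorthand for the procedures described in the text.

```python
def mswo_action(response, prior_simultaneous=False):
    """Correct therapist action for one MSWO trial (hypothetical labels).

    response: "single", "sequential", "simultaneous", "none", or "grab_other".
    prior_simultaneous: True if the previous attempt at this trial was also a
    simultaneous selection of two items.
    """
    restart = "remove all stimuli, start new session with all seven items"
    if response == "single":
        return "allow access 30 s, remove item from array, rotate remaining items"
    if response == "sequential":
        # Only the first item selected is delivered; it then leaves the array.
        return "allow access to first item 30 s, remove it from array"
    if response == "simultaneous":
        # A second consecutive simultaneous selection restarts the session.
        return restart if prior_simultaneous else "block both items, rotate items, say 'pick one'"
    if response == "none":
        # No selection within 30 s also restarts the session.
        return restart
    if response == "grab_other":
        return "block or remove grabbed item, continue current trial"
    raise ValueError(f"unknown response: {response!r}")
```

Writing the rules this way makes explicit that a missed selection and a second consecutive simultaneous selection lead to the same outcome: a new session with all seven items.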

Assessment Scripts

Six scripts (three for each type of preference assessment) were randomly rotated across sessions for each preference assessment. Each script specified one or more client responses for each trial across a 16-trial simulated preference assessment. If the trainee conducted more than 16 trials, the simulated client continued using the same script, starting with the response specified for the first trial. However, only the trainees' performance on the first 16 trials was recorded. In addition, if the trainee attempted to terminate the paired-stimulus or MSWO assessment prematurely (i.e., before 16 trials were completed), the simulated client prompted the trainee to continue with the assessment to ensure equivalent opportunities for correct and incorrect responses across experimental phases. Each script contained 11 distracter trials (specified challenging behaviors) and five standard trials (specified less challenging behaviors); however, the sequence of these behaviors varied across scripts. The distracter behaviors exhibited by simulated clients included (a) simultaneously selecting two stimuli, (b) selecting two stimuli sequentially in quick succession, (c) grabbing a stimulus that was not in the array, and (d) not selecting a stimulus within the appropriate time period. Examples of script instructions included “During Trial 1, select Item 1 s after it is presented, interact with that item until it is removed” and “During Trial 2, select 1 item and then quickly select another item. If the items are re-presented, select only 1 item.” Note that Trial 1 contained a standard client response, whereas Trial 2 contained a more challenging response.
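The script rules above (16 trials per script, 11 distracter and 5 standard trials, wrapping back to Trial 1 after Trial 16, and scoring only the first 16 trials) can be sketched in Python. The representation of a script as a list of (kind, instruction) pairs, the example instructions, and all function names are hypothetical.

```python
SCRIPT_LENGTH = 16   # trials specified by each script
N_DISTRACTER = 11    # challenging client responses per script
N_STANDARD = 5       # less challenging client responses per script

def validate_script(script):
    """Check that a script specifies 11 distracter and 5 standard trials."""
    if len(script) != SCRIPT_LENGTH:
        raise ValueError(f"expected {SCRIPT_LENGTH} trials, got {len(script)}")
    kinds = [kind for kind, _instruction in script]
    if kinds.count("distracter") != N_DISTRACTER or kinds.count("standard") != N_STANDARD:
        raise ValueError("expected 11 distracter and 5 standard trials")

def scripted_response(script, trial_number):
    """Client response for a 1-indexed trial; wraps to Trial 1 after Trial 16."""
    return script[(trial_number - 1) % SCRIPT_LENGTH]

def scored(trainee_trials):
    """Only the trainee's first 16 trials are scored."""
    return trainee_trials[:SCRIPT_LENGTH]

# Hypothetical script; the order of trial types varied across the real scripts.
example_script = (
    [("standard", "select Item 1, interact until removed")]
    + [("distracter", f"distracter response {i}") for i in range(N_DISTRACTER)]
    + [("standard", f"standard response {i}") for i in range(N_STANDARD - 1)]
)
validate_script(example_script)
```

For example, a trainee's 17th trial would receive the same client response as Trial 1, but that trial would not be scored.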

Trainee Target Behaviors

The dependent variable of interest was the behavior of the trainees, who served in the role of therapist. For each trial, there were a number of specified antecedent events (always two for both assessments) and consequent events (one to three, depending on scripted client responses) that should have been presented. Two additional trainee responses were measured: (a) circling each item selected in the appropriate box (applicable for all conditions except baseline) and (b) correctly calculating the percentage of trials in which each item was selected and ranking the items from most to least preferred (applicable for all conditions). The trainee received a correct or incorrect score for each of these responses. For example, for each MSWO assessment, the two antecedent events were as follows: (a) The trainee had to present the appropriate number of stimuli (seven or fewer depending on client performance during the previous trial) in the appropriate manner (i.e., in front of the client in a line or arc), and (b) the trainee was required to instruct the client to “pick one.” Thus, for each MSWO trial, these two trainee responses were scored. An example of a trial requiring three correct consequent events during the paired-stimulus assessment is as follows: If the client selected two stimuli simultaneously, the correct trainee response was to re-present the stimuli (Response 1). If the client then selected an item within 5 s, the correct trainee response was to allow access to that item and remove the nonselected item (Response 2) and remove the selected item after 5 s (Response 3). Based on the client's behavior, three correct or incorrect responses would have been scored during this trial. Also, when a specified duration was noted as the correct trainee behavior (e.g., remove item after 5 s), a window of plus or minus 2 s was permitted.
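The ±2 s tolerance for timed responses can be expressed as a simple comparison. The Python sketch below scores the three consequent responses from the paired-stimulus example above; the function name and the observed removal latency of 6.5 s are hypothetical values chosen for illustration.

```python
TOLERANCE_S = 2.0  # plus or minus 2 s window for timed trainee responses

def timed_response_correct(observed_s, target_s, tolerance_s=TOLERANCE_S):
    """Score a timed response (e.g., removing an item after 5 s) as correct
    if it occurs within target_s plus or minus tolerance_s."""
    return abs(observed_s - target_s) <= tolerance_s

# Consequent responses for the paired-stimulus example in the text.
# The 6.5 s removal latency is a made-up value for illustration.
consequent_scores = [
    True,                               # Response 1: re-presented the stimuli
    True,                               # Response 2: allowed access, removed nonselected item
    timed_response_correct(6.5, 5.0),   # Response 3: removed selected item after about 5 s
]
```

Under this rule, removing the item at 7.0 s would still be scored correct, whereas removing it at 7.5 s would be scored incorrect.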

Response Measurement and Reliability

Two sessions (one using the MSWO method and one using the paired-stimulus method) were conducted each day, 2 to 4 days per week. Sessions varied in duration depending on the number of trainee-initiated trials; however, a minimum of 16 trials was required for all sessions. All sessions were videotaped and scored by a postdoctoral fellow or graduate student research assistants. Correct and incorrect responses were scored for the first 16 trials observed during each session, and the total number of correct responses was divided by the total number of correct and incorrect responses and multiplied by 100% to yield a percentage correct measure for each session. A second observer independently collected data on 40% of the videotaped sessions using the point-by-point agreement ratio method (Kazdin, 1982, pp. 53–56). Observers' records were compared for each trial in which a response was recorded by one of the observers, and an agreement was defined as both observers scoring the same response (either correct or incorrect) for each recorded trial. The number of agreements was divided by the number of agreements plus disagreements, and this number was multiplied by 100% to obtain a percentage agreement score. The mean agreement scores for trainee behaviors were 94% (range, 81% to 100%) during Experiment 1 and 94% (range, 80% to 100%) during Experiment 2.
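Both session measures described above reduce to simple ratios: percentage correct is correct responses divided by all scored responses, and point-by-point agreement is agreements divided by agreements plus disagreements, each multiplied by 100%. The Python sketch below is illustrative only; keying each observer's record by a (trial, response) identifier is an assumption, as is counting a response recorded by only one observer as a disagreement.

```python
def percent_correct(scores):
    """scores: True (correct) or False (incorrect) for each scored response."""
    if not scores:
        raise ValueError("no scored responses")
    return 100.0 * sum(scores) / len(scores)

def point_by_point_agreement(obs1, obs2):
    """Point-by-point agreement between two observers' records.

    obs1, obs2: dicts mapping a hypothetical (trial, response) key to
    "correct" or "incorrect". Every response recorded by at least one
    observer is compared; one observer's missing record is a disagreement.
    """
    keys = set(obs1) | set(obs2)
    if not keys:
        raise ValueError("no recorded responses to compare")
    agreements = sum(1 for key in keys if obs1.get(key) == obs2.get(key))
    disagreements = len(keys) - agreements
    return 100.0 * agreements / (agreements + disagreements)

# Hypothetical records for two trials with two scored responses each.
observer_1 = {(1, "present"): "correct", (1, "prompt"): "incorrect",
              (2, "present"): "correct", (2, "prompt"): "correct"}
observer_2 = {(1, "present"): "correct", (1, "prompt"): "correct",
              (2, "present"): "correct", (2, "prompt"): "correct"}
agreement = point_by_point_agreement(observer_1, observer_2)
```

Here the observers disagree only on the Trial 1 prompt, so agreement is 3 of 4 responses (75%).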

Experimental Design

During all conditions, trainees were instructed to identify a hierarchy of client preference (i.e., generate a list of the client's most preferred item to his or her least preferred item). Across all phases, the trainees were asked to conduct a paired-stimulus assessment in one condition and an MSWO assessment in the other condition (in accordance with a multielement design). During each phase, a natural probe was conducted for each of the assessment methods. During the first phase (i.e., baseline) the baseline procedures (e.g., instruction given to the trainees) were the same in both the paired-stimulus and MSWO conditions. Similarly, when the written instruction condition was introduced in the second phase (according to a multiple baseline design), the procedures were the same in both the paired-stimulus and MSWO conditions. However, in the third phase (feedback vs. contingent money), the paired-stimulus condition was exposed to the feedback training procedures for Beth and Liz and the MSWO condition was exposed to the contingent-money training procedures for these 2 trainees. For Dianne, the paired-stimulus condition was exposed to the contingent-money training procedures and the MSWO condition was exposed to the feedback training procedures.

Assigning each stimulus preference condition to a different training method (feedback or contingent money) was not done with Kerry, because she showed considerably better performance in the MSWO condition than in the paired-stimulus condition in Phase 2, when both conditions were exposed to written instruction. Therefore, for Kerry, both preference assessments were first exposed to the contingent-money training procedures in the third phase, and then both preference assessments were exposed to the feedback training procedures in the fourth phase.

Procedure

Baseline

Because teachers, parents, and other caregivers are often asked to identify preferred stimuli for clients without any formal training (e.g., without reading published material on the topic or receiving structured practice with feedback), the baseline was designed to approximate this situation. Trainees were asked to serve as therapists while conducting an MSWO or paired-stimulus preference assessment with research assistants playing the role of clients (i.e., simulated clients). Trainees were given no further instructions (other than those noted above), prompts, reading materials, or data sheets. Trainees were given a blank sheet of paper to write on (with the label “MSWO method” or “paired-stimulus method” for each condition), a pen, a stopwatch, and the appropriate number of leisure items the assessment required. Instructions, feedback, and reinforcement were not delivered during this condition.

Written Instruction

Prior to the start of the first session for each preference assessment condition in this phase, trainees were given brief summaries of key points included in the method sections of the Fisher et al. (1992) and DeLeon and Iwata (1996) articles for the paired-stimulus and MSWO assessments, respectively. (Descriptions used for each assessment are available from the authors on request.) Trainees had 30 min to review the methods prior to the start of the first session of each phase. Trainees were not permitted to refer to the written instructions while they were conducting the assessments; however, they had unlimited access to the written instructions outside the sessions. Data sheets specifically designed for the paired-stimulus and MSWO assessments were provided to the trainees during this condition.

Feedback Condition

Experimenters scored each session from videotape, and feedback based on a trainee's performance in the preceding session was delivered immediately before the start of her next session. Sessions were 5 to 10 min long. Immediately prior to each session, the experimenter played the videotape and handed the trainee the relevant data sheet from the preceding session (the last baseline session was used for the first feedback session). The experimenter provided feedback on each performance type (see Trainee Target Behaviors for specific examples). Thus, the number of feedback statements was held constant across sessions. For each performance type, the experimenter stated whether the target behavior was performed correctly or incorrectly while pointing to the target behavior on the data sheet and videotape. For responses performed incorrectly, the experimenter noted why the response was incorrect. For example, if the trainee's performance was scored incorrect on Trial 4, the experimenter said, in a neutral tone, “Trial 4 was incorrect because you did not deliver a prompt to ‘pick one’ when presenting the stimuli,” while pointing to the relevant trial on the data sheet and showing the error on the videotape. To reduce the potential influence of social reinforcers, the experimenter delivered feedback using a neutral tone and only vocalized information necessary for communicating which responses were correct or incorrect and why they were correct or incorrect. No additional comments, such as “good job” or “that was good,” were given.

Contingent Money

During this condition, the experimenter delivered, contingent on performance, the most preferred stimulus identified during the trainee's preference assessment (money, up to a maximum of $10). The amount delivered was proportional to the percentage of correct responses the trainee exhibited during her preceding session. For example, if the trainee exhibited 50% correct performance during her preceding session, she received 50% of the available money ($5 of the potential $10). The experimenter handed the trainee a slip indicating the amount she had earned for her performance in the previous session but did not disclose any other information about her performance (i.e., which specific behaviors were correct or incorrect, or why). In addition, trainees were not shown the videotape or data sheet associated with their performance.
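
The proportional rule amounts to payment = $10 × (percentage correct / 100). A minimal Python sketch follows; the function name is hypothetical, and rounding to whole cents is an assumption, since the text does not say how fractional amounts were handled.

```python
def contingent_payment(percent_correct: float, max_amount: float = 10.0) -> float:
    """Dollars earned for a session, proportional to its percentage correct.

    Assumption: amounts are rounded to whole cents; the procedure does not
    specify how fractional cents were handled.
    """
    if not 0.0 <= percent_correct <= 100.0:
        raise ValueError("percent_correct must be between 0 and 100")
    return round(max_amount * percent_correct / 100.0, 2)


print(contingent_payment(50))  # 5.0
print(contingent_payment(75))  # 7.5
```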

Feedback Plus Contingent Money

This condition was implemented only if the feedback or contingent-money condition, implemented alone, did not produce 90% or higher correct performance across three consecutive sessions (our mastery criterion). In this condition, the components of the feedback and contingent-money conditions described above were combined.
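
The mastery criterion (90% or higher correct across three consecutive sessions) can be checked with a simple streak counter. The sketch below is hypothetical: the function name and the session values are invented for illustration, and it assumes that any qualifying run of three sessions satisfies the criterion.

```python
from statistics import mean
from typing import Sequence


def met_mastery(percent_correct: Sequence[float],
                threshold: float = 90.0,
                run_length: int = 3) -> bool:
    """Return True once `run_length` consecutive sessions are each >= `threshold`."""
    streak = 0
    for pct in percent_correct:
        # Extend the streak on a qualifying session; otherwise start over.
        streak = streak + 1 if pct >= threshold else 0
        if streak >= run_length:
            return True
    return False


# Hypothetical session data (not from the study).
sessions = [70, 80, 92, 95, 96]
print(met_mastery(sessions))  # True
print(mean(sessions))         # 86.6
```

In this made-up series the criterion is met even though the condition mean is 86.6%, which illustrates how a condition mean below 90% (such as Laura's 88% in the feedback condition) can accompany a met criterion when early sessions are lower.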

Results

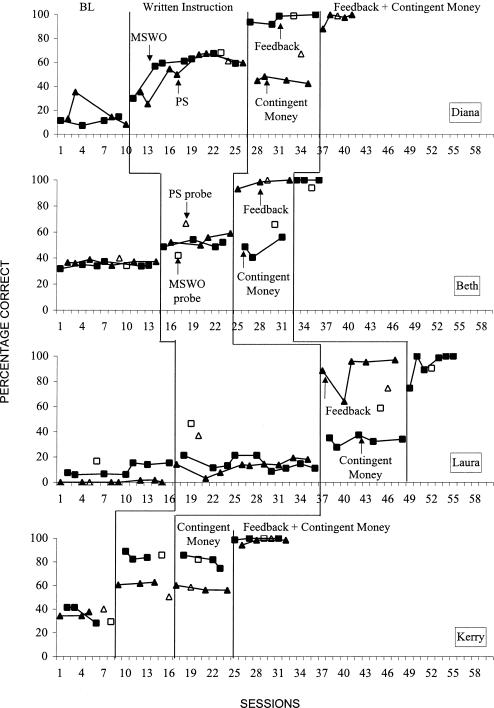

Figure 1 shows trainees' performance during the preference assessment sessions, expressed as the percentage of correct trainee responses. During baseline, all trainees exhibited low percentages of correct performance during both MSWO and paired-stimulus assessments: Diana (MSWO M = 15%; paired-stimulus M = 21%); Beth (MSWO M = 34%; paired-stimulus M = 37%); Laura (MSWO M = 10%; paired-stimulus M = 0.6%); and Kerry (MSWO M = 37%; paired-stimulus M = 36%). During the written instruction condition, correct performances increased to moderate levels for Diana (MSWO M = 62%; paired-stimulus M = 54%) and Beth (MSWO M = 51%; paired-stimulus M = 54%), and only slightly for Laura (MSWO M = 15%; paired-stimulus M = 13%). By contrast, Kerry's correct performance increased to moderate levels in the paired-stimulus condition (M = 62%) and to high levels in the MSWO condition (M = 85%).

Figure 1. The effects of written instruction, feedback, and contingent money on the percentage of correct responses when implementing the paired-stimulus (PS) and multiple-stimuli-without-replacement (MSWO) preference assessments.
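
The percentages of correct responses reported here can be computed per session and then averaged within a condition. A minimal sketch with hypothetical function names and data, assuming each session is weighted equally when computing a condition mean (M) rather than pooling all responses across sessions:

```python
from statistics import mean
from typing import Sequence


def percent_correct(scores: Sequence[bool]) -> float:
    """Percentage of scored responses in one session that were marked correct."""
    if not scores:
        raise ValueError("session has no scored responses")
    return 100 * sum(scores) / len(scores)


def condition_mean(sessions: Sequence[Sequence[bool]]) -> float:
    """Mean (M) of per-session percentages across a condition's sessions.

    Assumption: each session is weighted equally; responses are not pooled.
    """
    return mean(percent_correct(s) for s in sessions)


# Hypothetical scoring of two sessions (True = response scored correct).
s1 = [True, False]              # 50% correct
s2 = [True, True, True, False]  # 75% correct
print(percent_correct(s2))      # 75.0
print(condition_mean([s1, s2])) # 62.5
```

Pooling the same six responses would instead give 4/6, or about 66.7%, so the weighting choice matters when sessions contain different numbers of scored responses.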

During the feedback versus contingent-money phase (Diana, Beth, and Laura) or the contingent-money phase (Kerry), contingent money did not produce substantial increases in correct performance for any trainee, irrespective of the preference assessment being trained. Similar findings were obtained for the MSWO (M = 48% for Beth, M = 33% for Laura, and M = 81% for Kerry) and paired-stimulus assessments (M = 45% for Diana and M = 58% for Kerry). By contrast, the feedback condition produced criterion levels of correct performance (i.e., 90% or higher across three consecutive sessions), whether it was used to train the MSWO method (M = 96% for Diana) or the paired-stimulus method (M = 97% for Beth and M = 88% for Laura). When feedback was added to the condition previously trained with contingent money alone, performance met criterion for all trainees: Diana (paired-stimulus M = 96%), Beth (MSWO M = 100%), Laura (MSWO M = 94%), and Kerry (MSWO M = 99% and paired-stimulus M = 97%).

Performance during probe sessions usually matched or approximated that observed during simulated conditions across all training components: baseline (MSWO M = 27%, range, 17% to 34%; paired-stimulus M = 27%, range, 0% to 40%), written instruction (MSWO M = 61%, range, 42% to 86%; paired-stimulus M = 54%, range, 37% to 67%), contingent money (MSWO M = 69%, range, 58% to 82%; paired-stimulus M = 63%, range, 59% to 67%), feedback (MSWO M = 99%, Diana only; paired-stimulus M = 87%, range, 75% to 100%), and feedback plus contingent money (MSWO M = 95%, range, 90% to 100%; paired-stimulus M = 99%, range, 99% to 100%). However, probe performance during feedback met the 90% criterion only for Diana and Beth. Although Laura's probe performance during feedback increased relative to the previous condition, it remained below criterion at 75% correct. Notably, when probe performance deviated from that observed under simulated conditions, it was often higher, suggesting that our simulated conditions were no less difficult than conditions that may be encountered in the natural environment.

These results indicate that written instruction produced small or inconsistent increases in performance, contingent money produced no appreciable effect, and feedback produced large and consistent improvements. These findings extend previous feedback research by suggesting that, for task acquisition, conditions that maximize the discriminative aspects of feedback may be more critical than those that emphasize its reinforcing aspects.

Discussion

Numerous studies have demonstrated the effectiveness of feedback for increasing a wide range of behaviors. Although it is commonly assumed that feedback involves a combination of discriminative and reinforcing properties, few investigations have examined their relative contributions to feedback effects. In the present study, we evaluated the relative effects of the discriminative and reinforcing properties of feedback by implementing and comparing two conditions. In one condition (feedback), we maximized the discriminative properties and minimized the reinforcing properties of feedback; in the other condition (contingent money), we maximized the reinforcing properties and minimized the discriminative properties of feedback. Each training condition (feedback, contingent money) was used to train novice staff members to conduct two commonly used stimulus preference assessments (MSWO, paired stimulus). The results demonstrated that the feedback condition was both necessary and sufficient to produce rapid and large increases in correct implementation of the two stimulus preference assessments, whereas providing money contingent on correct responding did not affect performance.

These findings suggest that the trainees came to the experiment sufficiently motivated to implement the preference assessments accurately but lacked the skills needed to do so. That is, presenting a monetary consequence for correct performance in the contingent-money condition had no effect on performance (i.e., the money did not function as reinforcement for correct responding). By contrast, providing the trainees with clear vocal and videotaped information on the specific errors they made and on how to correct them (i.e., the discriminative function of feedback) produced immediate and dramatic improvements in performance.

When evaluating the importance of this study, it is useful to specify how the feedback and contingent-money conditions differed and whether they were functionally different in the manner intended (i.e., whether the feedback condition had a primarily discriminative function and the contingent-money condition a primarily reinforcing function). The finding that the contingent-money condition did not increase correct responding suggests that it had neither a discriminative nor a reinforcing function. Contingent delivery of money often functions as reinforcement, but it did not in the current investigation. One possible reason is that the money was delivered with a delay (about 24 hr after sessions); however, contingent money is often delayed in organizational settings, and several studies have demonstrated increases in performance with contingent money delivered after even longer delays (Bucklin & Dickinson, 2001).

One explanation for the trainees' failure to reach high levels of correct performance during the contingent-money condition is that they lacked the discriminative information necessary to improve. In hindsight, it would have been useful to include a brief reinforcer assessment (e.g., Fisher et al., 1992) with a simple target response already in each trainee's repertoire (e.g., switch pressing) to demonstrate that the contingency used (money delivered in proportion to the percentage of correct responses, up to $10) would function as reinforcement under some conditions. Future investigations attempting to evaluate the relative effects of the discriminative and reinforcing functions of feedback should include methods for establishing the reinforcing value of the contingency used in the reinforcement condition.

Although it is reasonably clear that the contingency used in the contingent-money condition had neither a discriminative nor a reinforcing function, it may be more important to examine whether the feedback condition had a primarily discriminative function. It is possible that feedback on the trainees' performances also functioned as social reinforcement. We attempted to minimize this possibility by delivering the verbal feedback in a neutral tone with no praise or evaluative comments (e.g., specifically avoiding statements such as “that was perfect”). Nevertheless, subtle unprogrammed reinforcers may have affected behavior during this condition. For example, statements such as “correct” and “incorrect” may have functioned as conditioned reinforcers and punishers, respectively, if they had been paired with primary reinforcers or punishers before the study. In addition, seeing instances of success on the videotapes may have functioned as reinforcement, and a reduction in observed failures may have functioned as negative reinforcement.

Information on level of performance in each session was provided to trainees in both the feedback and the contingent-money conditions. In the contingent-money condition, the amount of money earned in a session was directly related to the level of performance of the trainee in that session (e.g., 50% correct performance produced $5; 75% correct produced $7.50). However, this type of information did not increase correct responding in the contingent-money condition (i.e., it did not produce a reinforcement effect). If this type of information did not function as social reinforcement in the contingent-money condition, it is unlikely that it did so in the feedback condition. The primary difference between the feedback and contingent-money conditions was not that information on level of performance was available to the trainee in only one condition; these potential sources of social reinforcement were available in both the feedback and contingent-money conditions. The primary difference between the two conditions was that detailed and clear discriminative information on the type of errors trainees made and how they could correct them was available in the feedback condition but not in the contingent-money condition. This suggests that feedback produced improvements in correct responding primarily through its discriminative function.

Our findings differ from those of previous studies in which feedback alone was ineffective. For example, Kohlenberg, Phillips, and Proctor (1976) used feedback to reduce high electrical-energy expenditures in three volunteer families by installing a continuous data-collection system in each home to monitor energy consumption. Feedback alone was minimally effective; monetary incentives were necessary to produce adequate reductions. In a more recent example, Nau, Van Houten, Rolider, and Jonah (1993) examined the effects of feedback on alcohol consumption before driving. Feedback consisted of giving bar patrons cards to help them pace their drinking and information on their blood alcohol concentration. Feedback did not reduce the percentage of impaired drivers leaving the tavern; the addition of a police enforcement program was necessary to reduce impaired driving.

It is not clear why feedback alone has been ineffective in some circumstances (e.g., Kohlenberg et al., 1976; Nau et al., 1993) and effective in others (e.g., the current results). One potentially relevant variable is the way feedback is arranged. In the studies noted above, feedback consisted of general information about the total amount of behavior exhibited (e.g., energy consumption) or about successful completion of a goal. In the present study, feedback specified how to respond differently in the future and was delivered both verbally and visually (via videotapes). This type of feedback may be more likely to serve as an effective discriminative stimulus.

Feedback alone may also have been highly effective in the current study because the participants came to the experiment strongly motivated to perform the target response well (they were bachelor-level employees seeking clinical experience before pursuing graduate training in applied behavior analysis). By contrast, feedback alone may not be effective when participants come to the experiment strongly motivated to maintain the target response (e.g., individuals who drink excessively in bars often do so because, for them, alcohol is a potent reinforcer; Nau et al., 1993). Thus, feedback alone may be most effective when participants are already highly motivated to change the target response.

Contingent money may have been more effective in previous studies because participants already had the discriminative information necessary to perform the target response correctly. Feedback may have had a primarily discriminative function in our study because the trainees had not received sufficient instruction on how to perform the task correctly before the feedback and reinforcement conditions were introduced. They read about how to implement the stimulus preference assessments during the written instruction phase, but this was not sufficient to alter their behavior. During the feedback condition, they watched themselves perform the target responses on videotape and received clear verbal descriptions from the experimenter of their errors and how to correct them; this discriminative information appeared to be critical to correct performance.

Feedback will not function as a discriminative stimulus in the absence of an effective consequence, and reinforcement will not influence behavior in the absence of stimulus conditions that are necessary to occasion behavior. For this reason, it is important to arrange feedback in a manner that will maximize both of these functions.

References

- Alavosius M.P, Sulzer-Azaroff B. Acquisition and maintenance of health-care routines as a function of feedback density. Journal of Applied Behavior Analysis. 1990;23:151–162. doi: 10.1901/jaba.1990.23-151.

- Alvero A.M, Bucklin B.R, Austin J. An objective review of the effectiveness and essential characteristics of performance feedback in organizational settings (1985–1998). Journal of Organizational Behavior Management. 2001;21:3–29.

- Austin J, Kessler M.L, Riccobono J.E, Bailey J.S. Using feedback and reinforcement to improve the performance and safety of a roofing crew. Journal of Organizational Behavior Management. 1996;16:49–75.

- Balcazar F.E, Hopkins B, Suarez Y. A critical objective review of performance feedback. Journal of Organizational Behavior Management. 1985;7:65–89.

- Bucklin B.R, Dickinson A.M. Individual monetary incentives: A review of different types of arrangements between performance and pay. Journal of Organizational Behavior Management. 2001;21:45–137.

- Bucklin B.R, McGee H.M, Dickinson A.M. The effects of individual monetary incentives with and without feedback. Journal of Organizational Behavior Management. 2003;23:65–93.

- Catania A.C. Learning (4th ed.). Englewood Cliffs, NJ: Prentice Hall; 1998.

- Cossairt A, Hall R.V, Hopkins B.L. The effects of experimenter's instructions, feedback, and praise on teacher praise and student attending behavior. Journal of Applied Behavior Analysis. 1973;6:89–100. doi: 10.1901/jaba.1973.6-89.

- Crowell C.R, Anderson D.C, Abel D.M, Sergio J.P. Task clarification, performance feedback, and social praise: Procedures for improving the customer service of bank tellers. Journal of Applied Behavior Analysis. 1988;21:65–71. doi: 10.1901/jaba.1988.21-65.

- DeLeon I.G, Iwata B.A. Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis. 1996;29:519–532. doi: 10.1901/jaba.1996.29-519.

- Duncan P.K, Bruwelheide L.R. Feedback: Use and possible behavioral functions. Journal of Organizational Behavior Management. 1985;7:91–114.

- Fisher W, Piazza C.C, Bowman L.G, Hagopian L.P, Owens J.C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Ford J.E. A classification system for feedback procedures. Journal of Organizational Behavior Management. 1980;2:183–191.

- Gaetani J.J, Hoxeng D.D, Austin J.T. Engineering compensation systems: Effects of commissioned versus wage payment. Journal of Organizational Behavior Management. 1985;7:51–63.

- Iwata B.A, Wallace M.D, Kahng S, Lindberg J.S, Roscoe E.M, Conners J, et al. Skill acquisition in the implementation of functional analysis methodology. Journal of Applied Behavior Analysis. 2000;33:181–194. doi: 10.1901/jaba.2000.33-181.

- Kazdin A.E. Single-case research designs: Methods for clinical and applied settings. New York: Oxford University Press; 1982.

- Kohlenberg R, Phillips T, Proctor W. A behavioral analysis of peaking in residential electrical-energy consumers. Journal of Applied Behavior Analysis. 1976;9:13–18. doi: 10.1901/jaba.1976.9-13.

- Kuhn S.A.C, Lerman D.C, Vorndran C.M. Pyramidal training for families of children with problem behavior. Journal of Applied Behavior Analysis. 2003;36:77–88. doi: 10.1901/jaba.2003.36-77.

- Lavie T, Sturmey P. Training staff to conduct a paired-stimulus preference assessment. Journal of Applied Behavior Analysis. 2002;35:209–211. doi: 10.1901/jaba.2002.35-209.

- Leitenberg H, Agras W.S, Thompson L.E, Wright D.E. Feedback in behavior modification: An experimental analysis in two phobic cases. Journal of Applied Behavior Analysis. 1968;1:131–137. doi: 10.1901/jaba.1968.1-131.

- Mazur J.E. Learning and behavior (4th ed.). Englewood Cliffs, NJ: Prentice Hall; 1998.

- Miltenberger R. Behavior modification: Principles and procedures (2nd ed.). Belmont, CA: Wadsworth; 2001.

- Nau P.A, Van Houten R, Rolider A, Jonah B.A. The failure of feedback on alcohol impairment to reduce impaired driving. Journal of Applied Behavior Analysis. 1993;26:361–367. doi: 10.1901/jaba.1993.26-361.

- O'Brien F, Azrin N.H. Behavioral engineering: Control of posture by informational feedback. Journal of Applied Behavior Analysis. 1970;3:235–240. doi: 10.1901/jaba.1970.3-235.

- Parsons M.B, Reid D.H. Training residential supervisors to provide feedback for maintaining staff teaching skills with people who have severe disabilities. Journal of Applied Behavior Analysis. 1995;28:317–322. doi: 10.1901/jaba.1995.28-317.

- Peterson N. Feedback is not a new principle of behavior. The Behavior Analyst. 1982;5:101–102. doi: 10.1007/BF03393144.

- Prue D.M, Fairbank J.A. Performance feedback in organizational behavior management: A review. Journal of Organizational Behavior Management. 1981;3:1–16.

- Schulman J.L, Suran B.G, Stevens T.M, Kupst M.J. Instructions, feedback, and reinforcement in reducing activity levels in the classroom. Journal of Applied Behavior Analysis. 1979;12:441–447. doi: 10.1901/jaba.1979.12-441.

- Smoot D.A, Duncan P.K. The search for the optimum individual monetary incentive pay system: A comparison of the effects of flat pay and linear and non-linear incentive pay systems on worker productivity. Journal of Organizational Behavior Management. 1997;17:5–75.

- Sulzer-Azaroff B, de Santamaria M.C. Industrial safety hazard reduction through performance feedback. Journal of Applied Behavior Analysis. 1980;13:287–295. doi: 10.1901/jaba.1980.13-287.

- Sulzer-Azaroff B, Mayer G.R. Behavior analysis for lasting change. Fort Worth, TX: Holt, Rinehart, and Winston; 1991.

- Van Houten R, Morrison E, Jarvis R, McDonald M. The effects of explicit timing and feedback on compositional response rate in elementary school children. Journal of Applied Behavior Analysis. 1974;7:547–555. doi: 10.1901/jaba.1974.7-547.

- Wincze J.P, Leitenberg H, Agras W.S. The effects of token reinforcement and feedback on the delusional verbal behavior of chronic paranoid schizophrenics. Journal of Applied Behavior Analysis. 1972;5:247–262. doi: 10.1901/jaba.1972.5-247.

- Witt J.C, Noell G.H, LaFleur L.H, Mortenson B.P. Teacher use of interventions in general education settings: Measurement and analysis of the independent variable. Journal of Applied Behavior Analysis. 1997;30:693–696.