Abstract

Neuroscientific approaches to drug addiction traditionally have been based on the premise that addiction is a process that results from brain changes that in turn result from chronic administration of drugs of abuse. An alternative approach views drug addiction as a behavioral disorder in which drugs function as preeminent reinforcers. Although there is a fundamental discrepancy between these two approaches, the emerging neuroscience of reinforcement and choice behavior eventually may shed light on the brain mechanisms involved in excessive drug use. Behavioral scientists could assist in this understanding by devoting more attention to the assessment of differences in the reinforcing strength of drugs and by attempting to develop and validate behavioral models of addiction.

Keywords: Drug addiction, neuroscience

Drug addiction continues to take a massive toll in terms of economic loss and human misery. For the purpose of this article, we define drug addiction as the final outcome of a process that begins with occasional drug taking and ends with the consumption of excessive amounts of drug to the detriment of both the individual and society. Drug addiction is a chronic, relapsing disorder that provides research and treatment challenges to scientists from widely ranging disciplines. Among these, geneticists and epidemiologists are particularly intrigued by the fact that drug-taking behavior exists on a continuum in humans: some people engage in it to excess, most in moderation, and many not at all.

Clearly, genetic differences and specific societal-environmental conditions can play a role in the development of drug abuse. Psychiatrists and clinical psychologists attend more to the individual characteristics of the drug abuser, and consider how co-occurring psychiatric conditions, such as anxiety or depression, contribute to the development and maintenance of drug abuse and addiction. Pharmacologists tend to focus on the drugs themselves, studying their mechanisms of action and attempting to develop potential drug antagonists that might be useful in the treatment of drug abuse. And behavioral pharmacologists look for clues to the etiology and control of drug abuse in the effects of drugs on the behavior of humans or animals under controlled experimental conditions.

Neuroscience, because it searches for relationships between brain function and behavior, is in an especially appropriate position to study the neural correlates of the behavior of drug abuse, and neuroscientists have contributed a tremendous amount to our understanding of the effects of drugs of abuse on the brain and nervous system. This article will address some of the neuroscience research on the problem of drug abuse, but will touch only on limited aspects of what is a massive area of scientific inquiry.

The first section on brain reward circuitry is included because this is the neuroanatomical basis for virtually all hypotheses and research on the neuroscience of drug abuse. The next section describes some recent research from a few of the many neuroscientists who have concentrated their efforts on drug addiction. What is interesting, and somewhat distressing for behavioral scientists, is how little attention much of this research pays to behavior.

Because drug addiction generally is conceived as a process caused directly by chronic administration of drugs of abuse, the investigative effort is concentrated on a search for changes in the morphology or molecular biology in relevant parts of the brain as a function of chronic drug administration. The neuroscience skills and techniques used by these investigators are formidable, and interesting neuronal changes have been observed following chronic drug administration. Even more technically fascinating is the possibility of recreating these localized neurobiological changes in the absence of drug administration, in effect producing an addicted brain without giving the addicting substance. Animals so treated then can be evaluated behaviorally to determine whether they are, in fact, addicted. Unfortunately, the behavioral measures used in these types of studies often are weak and not validated, bringing the conclusions of much of this work into question.

Our generally negative critique of much of the most prominent work on the neuroscience of drug abuse is followed by a description of a strictly behavioral approach to drug abuse. This behavioral approach is presented as an additional avenue to be explored by neuroscientists and others investigating drug abuse. We argue that drug addiction involves the excessive choice of a drug over other environmental stimuli, perhaps because the drug is a more potent reinforcer relative to competing reinforcers in the addict's life. This section serves two purposes: one is to present an alternative to the neuroscience approach and to suggest how addiction can be described more appropriately; the other is to prepare for the next section, which returns to neuroscience, but in a more behavioral context.

In this next section, we describe some experiments that indicate that neuroscience embedded in a more behavioral context potentially can identify the neurological correlates of behavioral constructs, such as reinforcer strength. For example, investigations of the brain correlates of choice eventually may clarify the regions in the brain and the patterns of brain activity that are correlated with preference for higher magnitude reinforcers. Although the work described in this section does not involve studies of drugs as reinforcers per se, it easily could be extended to complement a behavioral model of addiction.

The behavioral approach to drug addiction presupposes that drugs are not qualitatively different from non-drug reinforcers. The last section of this article describes recent neuroscientific studies that assess the validity of this assumption. For example, do the brain changes that accompany reinforcement differ if the stimulus is cocaine compared with other non-drug stimuli?

Finally, the perspectives offered in this paper are limited by both space and time. A review of what is known about the neuroanatomy and neurophysiology of Pavlovian conditioning would add useful knowledge (e.g., Cardinal & Everitt, 2004; LeDoux, 2000). Also useful would be a discussion of several psychological theories that have been posited to explain drug addiction, many of which were put forward by neuroscientists and based on hypothesized or observed drug-induced brain changes (e.g., Koob & Le Moal, 1997; Lubman, Yucel, & Pantelis, 2004; Robinson & Berridge, 1993, 2000). However, our interest in data rather than theories led us to exclude these theoretical treatments of drug addiction and neuroscience.

Neuroanatomy of Reward

In a simplified view, the brain can be thought of as the locus for the reception, processing, and storage of information transmitted from the environment via neurons. The brain also activates motor, autonomic, and/or endocrine output in response to both the current and historical environment. Quite a bit is known about the location of the specific sensory input and motor output areas of the brain, but less is understood about the brain structures and processes involved in the formation of constructs such as values, emotions, and memories (see review by Glimcher, 2003). The current general concept of input and output anatomy within the brain involves parallel sensory and motor circuits that can be described as cortical-striatal-pallidal-thalamic-cortical (Heimer, 2003). These circuits carry information from either the somatosensory cortex or the motor cortex to related areas in the putamen. Circuits run medially from the putamen to the globus pallidus or to the substantia nigra, and the globus pallidus sends projections to specific areas of the thalamus. The thalamus closes the loop by returning information to local areas of the cortex. The key concept in these circuits is topographical organization. Different parts of each of these anatomical areas are activated depending on the nature (e.g., visual, auditory, nociceptive) and location (e.g., finger, arm, head) of the information being received and conveyed.

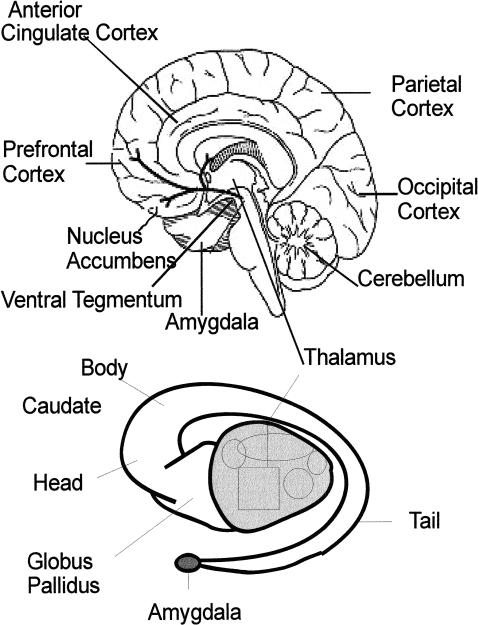

The association areas of the brain also may be connected in several circuits that run somewhat parallel to the input and output circuits. One such circuit, called the anterior cingulate circuit (Alexander, DeLong, & Strick, 1986), incorporates much of what is thought to be involved in the brain's motivational pathways (Kalivas, Churchill, & Klitenick, 1993). A locus of particular importance in this pathway is the ventral striatum, which includes the nucleus accumbens. Dopamine release in this area has been considered a critical mediator of the reinforcing effects of stimuli, including drugs of abuse (Koob & Le Moal, 1997; Robbins & Everitt, 1996), although some investigators suggest a more general activating effect of dopamine (Horvitz, 2000). Dopamine is released into the ventral striatum via dopaminergic neurons that project from the ventral tegmentum (Figure 1). The ventral tegmentum also sends dopaminergic projections to dorsal striatal and cortical areas. The ventral striatum receives non-dopaminergic, primarily glutamatergic, input from the amygdala and hippocampus, which are involved in memory and emotional processing. Many of these pathways are reciprocal, with information passing back to the structure that originated the stimulation.

Figure 1. (Top) Structures related to the reward pathway visible on the midline of the brain. Dark lines indicate dopamine pathways. (Bottom) Relation of the thalamus (with lateral thalamic nuclei) to the more lateral caudate nucleus and globus pallidus.

The cortical areas involved in reward circuitry are thought to include the entorhinal and perirhinal cortices, the anterior cingulate cortex, the temporal lobe and the posterior area of the medial orbital frontal cortex. Projections from these cortical areas travel to the ventral striatum as well. In the ventral striatum there is close interdigitation with pallidal structures, and there are neuronal connections from the striatum to the ventral pallidum, the internal globus pallidus, and down to the substantia nigra pars reticulata. The medial dorsal thalamus is innervated by fibers from the pallidal areas, and thalamic projections complete the circuit by traveling to the anterior cingulate cortex.

Thus, a cortical-striatal-pallidal-thalamic-cortical circuit can be described in the emotional areas of the brain. Its cortical areas differ from those involved in motor control and sensory input, its striatal and pallidal areas typically are located more ventrally and receive considerable non-cortical input, and it involves a distinct thalamic nucleus. Nevertheless, this circuit has parallels with other primary circuits in the brain (Alexander et al., 1986; Heimer, 2003).

What clearly is missing is how the various circuits are interconnected to integrate the sensory input with the motor output. This question stimulates much of the research on the neurocircuitry of reward. Although the parallel circuitry suggests that integration is possible at many cortical and subcortical levels, the precise pathways whereby sensory input is evaluated, modified by memory, emotional, and motivational factors, and converted into motor output have not been delineated clearly. It has been thought that the nucleus accumbens, with its shell and core components, as well as its strong connection to various amygdaloid nuclei, is critical to emotional coordination of behavioral output.

The extended amygdala is a fairly recently described structure that is an important part of this system; it consists of two components. One includes the central amygdaloid nucleus and the lateral bed nucleus of the stria terminalis, and carves a sweeping circuit beneath the main body of the striatum and the globus pallidus. The other consists of the medial amygdaloid nucleus and the medial bed nucleus of the stria terminalis, and travels along with the central component under the lenticular nuclei (Alheid, 2003). The central and medial nuclei of the amygdala receive substantial input from the basolateral nucleus of the amygdala and send many projections to the cortex and to autonomic and endocrine areas in the hypothalamus and brain stem. As noted by Heimer (2003), “Thus, [the extended amygdala] represents a strategically placed ring formation capable of coordinating activities in regions of the multiple limbic lobe forebrain for the development of coherent behavioral responses through the referenced output channels” (p. 1735).

It is in the various parts of this reward circuitry, and in its connections with motor circuitry, that neuroscientists look for brain changes that reflect reinforced behavior.

Neuroscientists Look for Drug-Related Brain Changes

Neuroscientists primarily interested in drug abuse typically search for changes in some aspect of the reward circuitry that accompany the development of drug addiction. This presupposes a consensus on what addiction is, and most neuroscientists agree that drug addiction is a behavioral disorder. They usually define addiction in humans as a change over time from occasional drug taking to more “compulsive” drug taking, and a “loss of control” over the amount of drug taken. Addiction is further viewed as a chronic disorder that persists as a sustained susceptibility to relapse (“craving”) even if an individual has abstained from drug use for an extended period of time.

Despite a common definition of the disorder, and despite the fact that there are some models that might reflect compulsive drug taking (Ahmed & Koob, 1998; Ahmed, Walker, & Koob, 2000; Deneau, Yanagita, & Seevers, 1969), few of these neuroscientists use or describe an animal model of “compulsive” or “uncontrolled” drug taking as part of their evaluation of accompanying brain changes. Measures of the reinforcing effects of drugs (drug self-administration) are used by several neuroscientists as indicators of the development of addiction. Even more popular are measures of sensitization (an increase over time in the locomotor stimulating effects of a drug as a consequence of repeated administration of the drug), conditioned place preference (a selection by the animal of an environment where it previously experienced the effect of a drug), and reinstatement (an increase in extinguished, drug-maintained responding as a consequence of non-contingent drug administration, stress, or other intervention). However, as indices of addiction, these measures leave much to be desired.

Although drug self-administration procedures seem to reflect the subjective drug effects reported by human drug addicts (Griffiths & Balster, 1979), the relation between self-administration and the critical outcome (the excessive behavior that is the hallmark of addiction) has not been determined.

Sensitization has only the fact that it is produced by repeated administration of a drug, and is maintained for several weeks or months following drug withdrawal, to recommend it as a model of addiction. This drug-induced increase in locomotion lacks even face validity as an indicator of compulsive drug taking or loss of control of drug-taking behavior. Conditioned place preference probably measures a different process from either reinforcement or sensitization (Bardo & Bevins, 2000), but there is no evidence that this is related to addiction. Reinstatement has good face validity, but has not been shown to have predictive validity as a model of relapse (Katz & Higgins, 2003).

It can be argued that the lack of a validated behavioral measure of addiction is the biggest impediment for neuroscientists who focus on brain changes associated with chronic drug administration. Despite these challenges, the actual neuroscience is typically innovative and productive. One of the most provocative neural changes that occurs as a consequence of drug administration involves drug-induced alterations in gene expression. Alterations in gene expression, with their consequent changes in protein synthesis, are considered to be a neural aspect of learning and memory, or “plasticity” as it is referred to by neuroscientists. Learning and memory may have neural mechanisms in common with drug abuse because both reflect changes in reinforced behavior over extended time periods. Neuroscientists, stating that drug addiction is a relatively stable condition, are especially interested in gene expression changes that have an extended duration.

There are many places where gene expression can be modified. The group headed by Eric Nestler has emphasized long-term, drug-induced changes in transcription factors as a potentially critical aspect of gene expression that might be related to drug addiction. Transcription factors are proteins that bind to regulatory regions of DNA and thereby control the rate at which that DNA is transcribed into RNA and, in turn, the rate at which specific proteins are synthesized. A number of neurotransmitters act through their membrane receptors to alter levels of transcription factors. Because drugs of abuse can directly affect the action of some neurotransmitters on their receptors, this is a logical place to begin to look for the effects of drugs on protein synthesis.

A recent review (Nestler, 2004) describes two transcription factors that Nestler's laboratory has evaluated and that meet some of the group's criteria as important factors in drug addiction. Both of these, CREB (cAMP response element binding protein) and deltaFos B, are increased in the nucleus accumbens following chronic administration of cocaine. Elevated levels of CREB are fairly short lasting, declining within several days after cocaine administration has been stopped. They also are associated with decreased sensitivity to a wide variety of stimuli, including drugs of abuse (Barrot et al., 2002; McClung & Nestler, 2003), and seem unlikely to be strongly related to drug addiction. DeltaFos B, in contrast, increases a small amount with each injection of cocaine and is unusually stable in the brain, remaining at elevated levels for several weeks following termination of cocaine administration (McClung & Nestler, 2003). It is therefore a more interesting candidate as a correlate of drug addiction.

Levels of deltaFos B can be modified in the nucleus accumbens and the dorsal striatum of mice (the same areas in which they are increased by cocaine administration) using technically sophisticated procedures that do not involve cocaine administration. If elevated striatal deltaFos B levels play a role in drug addiction, mice with enhanced striatal deltaFos B levels in the absence of cocaine exposure should respond differently to cocaine than mice with normal striatal deltaFos B levels. A variety of behavioral indices support this hypothesis. Mice with elevated striatal deltaFos B levels exhibited greater preference for the cocaine-paired compartment in a conditioned place preference study, relative to controls (McClung & Nestler, 2003). In addition, these mice self-administered cocaine at lower doses than did controls and completed higher ratios than control mice on progressive-ratio schedules (Colby, Whisler, Steffen, Nestler, & Self, 2003).
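Because several of these findings rest on progressive-ratio performance, a minimal sketch of the schedule logic may help readers outside behavioral pharmacology. Under a progressive-ratio schedule, the number of responses required for each successive reinforcer increases, and the largest ratio completed before responding stops (the breakpoint) is taken as an index of reinforcing strength. The arithmetic progression, its parameters, and the `max_ratio_tolerated` stand-in for the point at which a subject quits are hypothetical simplifications; published studies use a variety of progressions and session-ending criteria.

```python
from itertools import count
from typing import Iterator


def progressive_ratios(start: int = 1, step: int = 2) -> Iterator[int]:
    """Yield successive response requirements (a hypothetical arithmetic progression)."""
    if start < 1 or step < 1:
        raise ValueError("start and step must be positive")
    for i in count():
        yield start + i * step


def pr_breakpoint(max_ratio_tolerated: int, start: int = 1, step: int = 2) -> int:
    """Return the last ratio completed before responding stops.

    `max_ratio_tolerated` is a hypothetical stand-in for the point at which the
    subject fails to complete a ratio; 0 means no ratio is ever completed.
    """
    last_completed = 0
    for ratio in progressive_ratios(start, step):
        if ratio > max_ratio_tolerated:
            return last_completed  # the session would end on this unfinished ratio
        last_completed = ratio
    return last_completed  # not reached: the generator is infinite


if __name__ == "__main__":
    # Two hypothetical subjects that differ only in how far they will work.
    print(pr_breakpoint(10), pr_breakpoint(20))
```

In this toy model, a subject that will complete ratios up to 10 reaches a breakpoint of 9, whereas one that will complete ratios up to 20 reaches 19; the higher breakpoint would be read as a stronger reinforcing effect of the drug.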

Interestingly, these effects appear to be somewhat specific to cocaine; the two groups did not differ in terms of food-maintained responding. These results suggest that higher striatal levels of deltaFos B may be correlated with enhanced sensitivity to the incentive properties of cocaine. However, an important comparison that was not made was to determine whether mice with histories of chronic cocaine self-administration, and presumably cocaine-induced increases in deltaFos B, also showed patterns of responding similar to those of mice with artificially increased deltaFos B expression.

A driving concern of neuroscientists is identification of the proteins that are synthesized in increased amounts as a result of deltaFos B and CREB overexpression. Several candidates have been suggested, among them a gut and brain peptide, cholecystokinin, and an endogenous kappa opioid receptor ligand, dynorphin (McClung & Nestler, 2003; Nestler, 2004). A subunit of a glutamate receptor also has been found to increase with overexpression of deltaFos B (Nestler, 2004). In addition, the protein kinase Cdk5 (cyclin-dependent kinase 5) is increased in animals that overexpress deltaFos B, and Cdk5 gene expression also is increased by chronic cocaine administration. Cdk5 may effectively reduce the effects of dopamine that are produced through the D1-like receptors (Benavides & Bibb, 2004). These receptors have some actions that oppose those of the D2-like receptors, and it is the D2-like receptors that have been tied more closely to the stimulating and addictive effects of cocaine.

Research in the laboratory of Peter Kalivas has emphasized another protein, Homer, as being particularly important in the development of cocaine addiction. This research group has studied the importance of glutamate, the excitatory neurotransmitter that is released by the neural connections that travel from the prefrontal cortex and amygdala to the nucleus accumbens and ventral tegmental area, in mediating the neuronal plasticity involved in drug addiction. They suggest that dopamine release may underlie the development of addiction, but that the permanent changes resulting in sensitization and reinstatement involve protein changes that regulate glutamate transmission (Kalivas, 2004).

The Homer protein is associated with glutamate receptors, where it acts as a scaffold to keep relevant proteins in association with each other. Mice that had one of three Homer genes knocked out developed a conditioned place preference to a smaller dose of cocaine than did normal mice and, at larger cocaine doses, spent more time in the cocaine-associated area of the apparatus than did normal mice. These knock-out mice also showed a greater sensitization to cocaine than did wild-type mice, and learned to self-administer a small dose of cocaine more rapidly than wild-type littermates. In addition, the knock-out mice had reduced levels of accumbens glutamate, similar to what had been found in mice that had been given cocaine chronically and then withdrawn. The similar effects of Homer deletion and cocaine withdrawal on accumbens biochemistry, as well as the finding that the Homer knock-out mice showed sensitization to the effects of cocaine without having received prior injections of cocaine, suggested that Homer proteins, or the proteins that are held together by Homer, are important in the development of cocaine addiction (Szumlinski et al., 2004).

Terry Robinson has maintained an interest in brain changes that accompany the development of sensitization to drugs of abuse. The incentive-sensitization theory developed by Robinson and Berridge (1993, 2000) posits that the neural changes accompanying drug administration are directly responsible for both sensitization and the development of compulsive drug-taking behavior. Tests of this theory have found increases in the length of neuronal dendrites, the number of dendritic branches, and/or the density of dendritic spines in rats following either experimenter-administered or self-administered amphetamine. Cocaine and nicotine produced similar increases in dendritic branching and spine density in the accumbens and prefrontal cortex, but, interestingly, morphine produced significant decreases in these measures. The changes in dendritic morphology were relatively long lasting, running in close parallel with the duration of sensitization (Robinson & Kolb, 2004), and the brain structures in which these changes were greatest differed depending on the drugs (cocaine vs. amphetamine) given and whether the drugs were given passively or taken by the animals.

These studies of the molecular, biological, and morphological changes that accompany administration of drugs of abuse make the general point that such changes can, in fact, be measured. The neuroscientists who are finding these drug-induced brain changes have not claimed that these are necessarily the essential components of drug addiction; the brain is sufficiently complex, and there are so many aspects of its activity that can be modified, that the search for correlates of addiction is necessarily a long-term one. These investigators, however, do hold to the debatable position that addiction consists of drug-induced changes in the brain.

We would like to propose an alternative to this drug-focused approach to drug addiction by treating drugs as reinforcing stimuli that may come to dominate the behavioral repertoire of some individuals. This domination may occur as the result of the relative efficacy of certain drugs as reinforcers; that is, the strength of these reinforcers relative to other concurrently available reinforcers in the environment. This approach is one we favor and is described in more detail in the following section.

Drug Addiction as a Behavioral Disorder

Behavioral scientists generally regard drug addiction as a behavioral disorder that results when drug reinforcers assume control over a substantial portion of an individual's behavioral repertoire (Higgins, Heil, & Lussier, 2004). As such, addiction to drugs can be considered a form of excessive behavior, occurring when other activities are expected and appropriate. Overeating and excessive gambling are other examples of inappropriate and excessive behaviors often attributed to an addiction of some kind, but they do not involve drug administration. A characteristic of each is that initial exposure to a reinforcing stimulus (e.g., euphoria, food, money) is followed by a progressive escalation in the behavior that produced it. Behavior that results in the availability of these reinforcers may eventually dominate the behavioral repertoire simply because these stimuli function as more potent reinforcers than others available in an individual's environment. This may be due, in part, to genetic predispositions or, more likely, to particular learning histories combined with relatively easy access to these reinforcers (i.e., a high rate of reinforcement) and insufficient contact with alternative sources of reinforcement.

One advantage of a behavioral approach to drug abuse is that, unlike the drug-based neuroscience theories, it accounts not only for excessive behavior that does not involve drugs but also for situations in which repeated exposure to drugs is not followed by addiction. For example, people who use drugs to excess while they are young are likely to stop using drugs when they get older, a process called maturing out (Chen & Kandel, 1995). When a young person is exposed to reinforcers that are incompatible with drug taking, such as those associated with marriage, family, and employment, the relative reinforcing functions of drugs usually decrease to the point where they no longer maintain the drug-taking behavior. People who do not mature out of their excessive drug taking may not have these other reinforcers available, may not seek them out, or may not find them to be superior to the drugs they are taking due to particular learning histories and/or genetic predispositions.

As a second example of repeated drug use not leading inevitably to addiction, consider that soldiers who used heroin to excess while in combat situations in Vietnam typically did not continue this use when they returned home (Robins, 1994). A third situation occurs in patients who self-administer opioids for the treatment of pain but have no inclination to continue to use the drug following recovery. The reinforcing effect of the drug in this case is related to its ability to reduce pain, and following recovery there is no reason to continue to use the drug. There is also the fact that a great many people have successfully stopped smoking cigarettes, at least in part because the health risks became overwhelmingly obvious (Centers for Disease Control and Prevention, 2004). It clearly is not the case that simple exposure to drugs, even in the context of their strong reinforcing effects, necessarily leads to a permanent state of drug addiction.

A behavioral approach also is much more hopeful about the potential for treating drug addiction (Higgins et al., 2004). Theories that attribute addiction to drug-induced changes in the nervous system present bleaker scenarios (once-a-drug-addict-always-a-drug-addict) that offer fewer treatment possibilities. Behavioral management of drug use, however, is one of the most successful intervention strategies, particularly for cocaine abuse, for which no pharmacological treatment is yet available. Contingency management procedures typically involve giving patients vouchers if they provide drug-free urine samples on a regular basis. The vouchers can be exchanged for various goods and services. Some contingency management therapies increase the value of the voucher over time, as long as the client remains drug free, and reset the value if cocaine use is detected or if the client refuses to submit a urine sample. These procedures were far superior to standard therapy in producing drug-free clients and retaining them in treatment over a 24-week study (Higgins et al., 1993). At this point, a behavioral approach is uniquely able to generate successful strategies for prevention and treatment.
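The escalating-and-resetting voucher contingency can be sketched as a simple rule applied to each scheduled urine sample. The dollar amounts (`base`, `increment`) and the three outcome labels are hypothetical placeholders chosen for illustration, not the values used by Higgins et al. (1993); a refused sample is treated the same as a cocaine-positive one, as the procedure describes.

```python
from typing import List


def voucher_values(samples: List[str], base: float = 2.50, increment: float = 1.25) -> List[float]:
    """Return the voucher value earned for each urine sample, in order.

    Each sample is "negative" (drug free), "positive" (cocaine detected), or
    "refused" (no sample submitted). Consecutive negative samples earn vouchers
    of escalating value; a positive or refused sample earns nothing and resets
    the next voucher to `base`. All amounts are hypothetical.
    """
    values = []
    consecutive_negatives = 0
    for result in samples:
        if result == "negative":
            values.append(base + increment * consecutive_negatives)
            consecutive_negatives += 1
        elif result in ("positive", "refused"):
            values.append(0.0)
            consecutive_negatives = 0  # reset the escalating schedule
        else:
            raise ValueError(f"unknown sample result: {result!r}")
    return values


if __name__ == "__main__":
    samples = ["negative", "negative", "negative", "positive", "negative"]
    print(voucher_values(samples))  # [2.5, 3.75, 5.0, 0.0, 2.5]
```

The reset is what makes continuous abstinence pay more than intermittent abstinence: in this toy run, the fifth sample earns only the base value even though it is drug free, because the preceding positive sample restarted the schedule.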

If one finds a behavior/reinforcement approach to drug abuse and addiction more satisfying than the notion that drugs themselves are responsible for drug addiction, does that make neuroscience an irrelevant perspective on drug addiction? Certainly not. But it does mean that a rather different type of neuroscience should be evaluated for what it might contribute to our understanding of addictive behavior. For example, neuroscientists are beginning to look at activity in the brain that accompanies choices between reinforcing stimuli that differ in magnitude or frequency, as described below (e.g., Cromwell & Schultz, 2003; Platt & Glimcher, 1999). Although drugs have yet to be evaluated in this type of work, the work could easily progress to this point in the future, and understanding the neurological correlates of the reinforcing strength of drugs may lead to a more unified account of drug addiction.

Electrophysiological Correlates of Reinforcers of Different Magnitude

Can neurophysiological and neurobiochemical studies provide indicators of the relative reinforcing effects of various stimuli? If so, knowing the neurological correlates of the relative reinforcing effects of stimuli might allow us to predict choice at the behavioral level. In addition, if addiction is accompanied by an increase in the reinforcing effects of drugs relative to other stimuli, this could be reflected in such neurological measures. Neuronal changes have been measured in various parts of the brain upon the delivery of a reinforcer. Experiments assessing brain responses to reinforcers of different magnitude often involve the use of a discrete-trial delayed reaction task (Cromwell & Schultz, 2003; Hassani, Cromwell, & Schultz, 2001; Platt & Glimcher, 1999; Tremblay & Schultz, 1999; Watanabe, Hikosaka, Sakagami, & Shirakawa, 2002). In a typical procedure, a monkey learned to emit a response, either holding a lever or fixating on a visual stimulus, and to maintain that response for several seconds. This resulted in the presentation of a discriminative stimulus (signaled delay) for a few seconds, followed by the presentation of another discriminative stimulus (trigger) that signaled the availability of a reinforcer contingent upon a manual response or a saccadic eye movement. In some studies, it was necessary for the monkey to withhold responding following the presentation of one cue and to respond following presentation of another cue (go-no go task) either to earn a reinforcer or to advance to the next trial. In other studies, a discriminative stimulus indicated whether or not a reinforcer would be delivered upon the emission of the response. In a variation of the task, the magnitude or type (preferred vs. nonpreferred) of reinforcer was signaled. Neurons that fired during various aspects of this task were recorded and described.
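The sequence of events in a single trial of the delayed reaction task, including the go-no go variant, can be laid out as a short sketch. The event labels, the `Trial` fields, and the `reinforce_no_go` switch (whether correct withholding earns a reinforcer or merely advances to the next trial) are hypothetical simplifications of the procedures summarized above; timing parameters, which differ across studies, are omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    cue: str                      # hypothetical label for the signaled reinforcer, e.g. "large"
    go: bool = True               # go-no go variant: does the trigger call for a response?
    reinforce_no_go: bool = True  # correct withholding earns a reinforcer (True) or only advances (False)


def run_trial(trial: Trial, held: bool, responded: bool) -> List[str]:
    """Return the ordered events of one trial given the subject's behavior."""
    events = ["initial response (lever hold or fixation)"]
    if not held:
        events.append("trial aborted")  # releasing early ends the trial
        return events
    # The delay epoch after this cue is where firing rates are compared across cues.
    events.append(f"discriminative stimulus: {trial.cue} (signaled delay)")
    events.append("trigger stimulus")
    events.append("response" if responded else "response withheld")
    correct = responded == trial.go
    if correct and (trial.go or trial.reinforce_no_go):
        events.append(f"reinforcer delivered ({trial.cue})")
    elif correct:
        events.append("advance to next trial")
    else:
        events.append("no reinforcer")
    return events


if __name__ == "__main__":
    for line in run_trial(Trial("large"), held=True, responded=True):
        print(line)
```

Separating the subject's behavior (`held`, `responded`) from the trial's programmed contingencies keeps the sketch close to how the procedures are described: the cue determines what is at stake, and the response determines what is delivered.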

The activity of single neurons in a number of brain structures has been measured during the delay between the presentation of the discriminative stimulus and the reinforced response. Neurons in the ventral tegmentum, the ventral striatum, the caudate and putamen (striatum), the orbitofrontal cortex, and various areas of the parietal and prefrontal cortex have been studied most frequently. In general, neurons in all of the tested areas fired more rapidly during the delay when a larger reinforcer, as opposed to a smaller reinforcer, was signaled. Platt and Glimcher (1999), using a visual saccade task, reported this differential response from neurons in the lateral intraparietal area of the posterior parietal cortex as well as in the superior colliculus, a part of the visual pathway. In several studies, a smaller proportion of neurons was identified that fired in the opposite pattern, showing greater activity when the smaller reinforcer was signaled. Tremblay and Schultz (1999) found this pattern of differentiation using recordings from the six-layer area of the orbitofrontal cortex of primates; in addition, a subset of orbitofrontal cells coded relative preference, firing more in response to cues predicting reinforcer A than to cues predicting reinforcer B when A was preferred, and more to B than to C when B was preferred. A similar pattern of differentiation was found in the anterior parts of the caudate nucleus, putamen, and ventral striatum (Cromwell & Schultz, 2003; Hassani et al., 2001).

The lateral prefrontal cortex also appeared to code the significance of stimuli and reinforcement. Some neurons in this area were particularly active during the delay that followed the onset of a stimulus correlated with reinforcement. Others were more active following the presentation of a stimulus correlated with unreinforced trials. Some lateral prefrontal neurons fired more rapidly during blocks of trials in which a preferred food (e.g., apple rather than potato) or fluid maintained responding. In addition, neurons that were more responsive during unreinforced trials also were more active when the less-preferred reinforcer was available. These neurons, according to the authors, coded not only the absence of reinforcement but also which reinforcer would be absent. A small percentage of neurons fired according to a more complex pattern: The rate of firing for some of these neurons was highest during reinforced trials, before preferred reinforcers, and also during omissions of the less-preferred reinforcer relative to omissions of the more-preferred reinforcer (Watanabe et al., 2002). The frequent finding that some neurons fired at a higher rate before a signaled larger reinforcer while other neurons showed the opposite pattern was a critical aspect of Gold and Shadlen's (2001) description of brain decision-making based on sensory input.

Behavioral measures in these types of studies also showed that responses to the larger or more-preferred reinforcer differed from those to the smaller or less-preferred reinforcer (e.g., shorter latencies or fewer breaks in visual fixation; Watanabe et al., 2001). The possibility that these behavioral differences were responsible for the different rates of neuronal firing has been considered. Although some research found reinforcer magnitude-related differences in neuronal firing in the association cortex and none in motor-related areas (Leon & Shadlen, 1999), others observed that more precise recordings in the motor cortex clearly reflected the magnitude of the reinforcer (Roesch & Olson, 2003). The latter investigators subsequently reported that the orbitofrontal cortex seemed to code the magnitude of the reinforcer, firing most rapidly immediately after presentation of the larger-reinforcer cue. Activity in the premotor cortex, in contrast, was more closely related to motor preparation, arousal, and attention: Premotor neurons fired more rapidly just before the trigger stimulus was presented and when the reinforcer was delivered (Roesch & Olson, 2004).

Taken together, these studies indicate that several areas of the brain reward circuit contain neurons that respond to the magnitude or value of signaled reinforcers. Investigators have taken different positions on the significance of these findings: Some suggest that these areas code reinforcer magnitude, whereas others propose that the differential firing rates reflect consummatory or motor responses that are correlated with reinforcer magnitude. All seem to agree, however, that these simple firing differences are not sufficient to explain the formation of discriminations among reinforcers of different magnitude or type, or to clarify how animals choose among reinforcers. Nonetheless, this research represents an important first step toward understanding where and how decisions are made within the brain. Techniques for single-neuron recording eventually should permit simultaneous measurements from a number of brain areas, making it possible to track how incoming stimuli pass through the brain. Measurements that follow the neuroanatomical description of the cortical-striatal-pallidal-thalamic-cortical circuit eventually may lend physiological support to this picture of stimulus flow, or may reveal a much more complicated interaction. Finally, theories such as those of Gold and Shadlen (2001, 2002), who proposed mathematical rules of brain decision-making, will direct increasingly sophisticated research in this area.
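
One rule of the kind Gold and Shadlen discuss is the sequential probability ratio test, in which a decision variable accumulates the log-likelihood ratio of successive pieces of evidence until it reaches one of two bounds. The Python sketch below is an illustration under stated assumptions, not their model: it assumes Gaussian evidence samples with equal variance under the two hypotheses, and the names `log_likelihood_ratio` and `sprt_decide`, the means, and the bound are hypothetical. The opposite-signed firing patterns noted above correspond only loosely to evidence pushing the running total toward one bound or the other.

```python
import random


def log_likelihood_ratio(x, mu_a, mu_b, sigma):
    """log[p(x | A) / p(x | B)] for one Gaussian sample.

    Assumes equal variance sigma**2 under both hypotheses (an illustrative choice).
    """
    return ((x - mu_b) ** 2 - (x - mu_a) ** 2) / (2.0 * sigma ** 2)


def sprt_decide(samples, mu_a, mu_b, sigma, bound):
    """Sequential probability ratio test.

    Adds each sample's log-likelihood ratio to a running decision variable and
    stops when it reaches +bound (choose "A") or -bound (choose "B").
    Returns (choice, samples_used); choice is None if the evidence runs out first.
    """
    total = 0.0
    for n, x in enumerate(samples, start=1):
        total += log_likelihood_ratio(x, mu_a, mu_b, sigma)
        if total >= bound:
            return "A", n
        if total <= -bound:
            return "B", n
    return None, len(samples)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical evidence stream generated under hypothesis A (mean 1.0).
    stream = [rng.gauss(1.0, 1.0) for _ in range(200)]
    print(sprt_decide(stream, mu_a=1.0, mu_b=-1.0, sigma=1.0, bound=4.6))
```

Raising `bound` trades speed for accuracy: the decision takes more samples on average but is wrong less often.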

There have been two recent reports of neuronal activity during more complex behavioral tasks involving choice. Sugrue, Corrado, and Newsome (2004) used a choice procedure in which the relative rate of reinforcement on two concurrent variable-interval schedules changed several times over the course of a session. Monkeys shifted their allocation of responses (visual saccades to one of two colored targets) quite rapidly within the session in accordance with the matching law (Herrnstein, 1961). To account for this rapid sensitivity to local shifts in relative reinforcement rate, Sugrue et al. proposed a modification of the matching law in which the influence of past reinforcers decays over time, as in a “leaky” integrator, so that recent reinforcers weigh more heavily than earlier ones (for a competing model of dynamic choice, see Davison & Baum, 2000).
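
The strict matching law states that the proportion of responses allocated to an alternative equals the proportion of reinforcers obtained from it, B1/(B1 + B2) = R1/(R1 + R2) (Herrnstein, 1961). A minimal Python sketch of the local version follows. It assumes that reinforcers are recorded trial by trial as 0 or 1 and that each option's recent income is an exponentially weighted (leaky) sum of its past reinforcers with a time constant `tau` measured in trials. The function names and this parameterization are illustrative assumptions; the sketch follows the general idea of Sugrue et al.'s proposal, not their fitted model.

```python
import math


def matching_proportion(r1, r2):
    """Strict matching law: predicted share of responses to option 1,
    equal to option 1's share of obtained reinforcers."""
    return r1 / (r1 + r2)


def leaky_income(reinforcers, tau):
    """Leaky integrator over a 0/1 reinforcer sequence for one option.

    On each trial the running estimate decays by exp(-1/tau) and the current
    reinforcer is added, so recent reinforcers weigh more than older ones.
    Returns the estimate after every trial.
    """
    decay = math.exp(-1.0 / tau)
    income, trace = 0.0, []
    for r in reinforcers:
        income = decay * income + r
        trace.append(income)
    return trace


def local_choice_probability(income_1, income_2):
    """Local matching: probability of choosing option 1 equals its share of
    current leaky income (0.5 when neither option has paid off yet)."""
    total = income_1 + income_2
    return 0.5 if total == 0 else income_1 / total


if __name__ == "__main__":
    # Hypothetical session: the red target pays off early, the green one late.
    red = [1, 1, 0, 1, 1, 0, 0, 0, 0, 0]
    green = [0, 0, 1, 0, 0, 1, 1, 0, 1, 1]
    red_inc = leaky_income(red, tau=3.0)
    green_inc = leaky_income(green, tau=3.0)
    for t, (a, b) in enumerate(zip(red_inc, green_inc), start=1):
        print(t, round(local_choice_probability(a, b), 2))
```

Smaller values of `tau` make the estimate forget faster, which is what allows predicted choice to track within-session changes in relative reinforcement rate; in the example, the predicted probability of choosing red falls once the payoffs shift to green.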

Sugrue et al. (2004) also recorded the activity of single neurons in the lateral intraparietal (LIP) area of the posterior parietal cortex, an area shown by Platt and Glimcher (1999) to be responsive to reinforcer magnitude when oculomotor decisions were required, and perhaps involved in spatial memory and motor planning (e.g., Colby & Goldberg, 1999). Interestingly, the authors reported that neurons in this region fired at a rate proportional to the relative reinforcement rate of the alternative whose target was currently in the cell's response field. As a population, the neurons did not reflect relative reinforcement rate immediately when the visual stimuli were presented; the difference in firing rate as a function of local reinforcement rate grew over the course of the stimulus presentation and “reset” after reinforcer delivery. The authors cited this “reset effect” as one reason to speculate that the LIP is not the primary locus for computing reinforcement rate, because the primary locus that encodes reinforcement rate should maintain that representation across the time scale appropriate to the task. The LIP, the authors reasoned, may instead be involved in transforming the color-based representation of reinforcement rate into a spatial eye-movement plan. This conforms to the notion of the LIP as an area that guides eye movements and shifts in visual attention based on incoming information.

Barraclough, Conroy, and Lee (2004) observed neuronal activity in the dorsolateral prefrontal cortex that apparently was related to the outcome of a previous trial. Monkeys were trained to make saccades to one of two targets under one of four conditions. In one, the two targets were different colors, and a response to one of the colors was reinforced 50% of the time. In the other three, the computer engaged the monkeys in a zero-sum game using one of three algorithms. Under the first algorithm, the computer selected the target to be reinforced at random, and the monkeys developed a side bias. Under the second, the computer took the animals' previous choices into account, and the monkeys responded primarily in a win-stay-lose-switch manner. Under the third, the computer took both choice and reinforcement history into account, and the monkeys responded in a fairly random manner. In each case, the monkeys behaved optimally, obtaining the reinforcement probability of 0.5 that the optimal strategy yields.
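
Why a side bias costs nothing under the first algorithm but would be costly under the others can be shown with a small simulation. The Python sketch below assumes that the monkey is reinforced when its choice matches the computer's target, and it replaces the published algorithms with deliberately simplified, hypothetical opponents: `random_computer` picks each target with probability 0.5, and `bias_exploiting_computer` predicts the player's more frequent past choice and picks the other target. It illustrates the logic of the game; it is not a reconstruction of Barraclough et al.'s procedure.

```python
import random


def play(player, computer, n_trials, seed=0):
    """Simulate a matching-pennies session and return the proportion of
    reinforced trials. The player is reinforced when its choice ("L" or "R")
    matches the computer's target; the computer sees only past trials."""
    rng = random.Random(seed)
    history = []  # (player_choice, reinforced) for each completed trial
    reinforced_count = 0
    for _ in range(n_trials):
        target = computer(history, rng)
        choice = player(history, rng)
        reinforced = choice == target
        reinforced_count += reinforced
        history.append((choice, reinforced))
    return reinforced_count / n_trials


def biased_player(history, rng, p_left=0.8):
    """Hypothetical player with a fixed side bias toward the left target."""
    return "L" if rng.random() < p_left else "R"


def win_stay_lose_switch(history, rng):
    """Repeat a reinforced choice; switch after a nonreinforced one."""
    if not history:
        return rng.choice("LR")
    last_choice, last_reinforced = history[-1]
    if last_reinforced:
        return last_choice
    return "R" if last_choice == "L" else "L"


def random_computer(history, rng):
    """Opponent that picks each target with probability 0.5."""
    return rng.choice("LR")


def bias_exploiting_computer(history, rng):
    """Hypothetical simplified opponent: predict the player's more frequent
    past choice and pick the other target."""
    lefts = sum(1 for choice, _ in history if choice == "L")
    rights = len(history) - lefts
    if lefts == rights:
        return rng.choice("LR")
    return "R" if lefts > rights else "L"


if __name__ == "__main__":
    print("biased vs random:", play(biased_player, random_computer, 10_000))
    print("biased vs exploiter:", play(biased_player, bias_exploiting_computer, 10_000))
    print("WSLS vs random:", play(win_stay_lose_switch, random_computer, 10_000))
```

With these opponents, an 80%-left player is reinforced on about half of the trials against `random_computer` but on only about 20% against `bias_exploiting_computer`. A win-stay-lose-switch player is fully predictable from choice and reinforcement history, so an opponent that tracks both could exploit it in turn.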

Single-neuron recordings taken from the dorsolateral prefrontal cortex during responding maintained under the third algorithm indicated that some neurons in this area were responsive to the choice and outcome of the previous trial. For example, when a monkey selected a target, one neuron fired differentially depending on whether selection of that target had been reinforced on the previous trial; its firing rate was higher if the previous selection had not been reinforced. Barraclough et al. (2004) concluded that the dorsolateral prefrontal cortex might be the locus at which stimuli related to past choices and their outcomes are temporally integrated to influence current choice.

In an excellent recent review of much of this work, Schultz (2004) emphasized game theory and microeconomics as important tools for neuroscientists involved in understanding decision-making processes at a neural level. He found reinforcement theory to be less helpful because it “does not produce optimal learning and performance” (p. 144). This is an unfortunate criticism, not only because it is incorrect but also because it may reflect a bias that could impede collaboration between neuroscientists and behavior analysts. Scientists who analyze behavior are in a unique position to contribute to this methodology, and their input is critical to the ultimate success of a neuroscientific analysis of drug abuse (Skinner, 1938/1991).

In summary, electrophysiologists are becoming more interested in searching for neural correlates of choice behavior, and their experimental designs and techniques are becoming more sophisticated. Given the questions left unanswered by these initial studies of the neuroscience of choice, it may be too soon to assume that a consensus eventually will be reached on where and how the brain assigns value to different stimuli and directs a choice between them. Ultimately, however, questions of how various drugs of abuse compare with each other and with non-drug reinforcers might be answered at a neuronal level, as might questions about whether these relationships change as addiction progresses.

These questions about neuronal correlates of reinforcement are closely tied to the issues addressed in the next section: Do drugs function differently from non-drug reinforcers at the neuronal level? If there is a qualitative difference between drug and non-drug reinforcers, this might support the notion of many neuroscientists that drugs “hijack” the reinforcement pathway (e.g., Lubman et al., 2004). If no clear differences are observed, then it becomes more reasonable not to place drugs in a “special” category of reinforcers, but to study and compare them in traditional ways.

Electrophysiological and Neurochemical Correlates of Drug and Non-Drug Reinforcement

There have been a few studies of electrical activity in the brain during drug-reinforced responding. Of particular interest are those from Deadwyler and colleagues. Deadwyler, Hayashizaki, Cheer, and Hampson (2004) recorded activity from one to four neurons in the nucleus accumbens of rats that responded for either cocaine or water reinforcers. Throughout the session, the two reinforcers were associated with distinct levers, only one of which was available at a time. Deadwyler et al. found that the firing patterns of neurons in the nucleus accumbens were similar regardless of whether the reinforcer was water or cocaine. These neurons displayed one of three firing patterns, each characterized by a brief, profound decrease in activity at the time the operant response was made. Some neurons showed increased activity before the response or any overt movement toward the lever, others showed the inverse pattern of increased activity following the response, and the third type showed increased activity both before and after the response.

The firing patterns in these neurons were similar for both cocaine- and water-maintained responding, suggesting that drugs of abuse function as reinforcers through the same neural mechanisms and firing patterns as other reinforcers. Interestingly, the individual neurons activated by water and by cocaine were completely different, and the two populations fired in a reciprocal manner. Thus, cells that showed activity related to cocaine reinforcement were quiescent when water-maintained responding was occurring, and cells that showed activity related to water delivery were quiescent when cocaine-maintained responding was occurring. Although these neurons could be adjacent to one another, none of the neurons of interest fired in relation to both reinforcers. “Rather, it appears that different reinforcers ‘sculpt out’ different connections within a pool of common [nucleus accumbens] neurons, such that individual circuits with the same firing characteristics will be independently activated under a restricted set of conditions dependent upon both the type and magnitude of reward.” (Deadwyler et al., 2004, p. 706).

Similar studies reviewed by Carelli (2002) demonstrated that a subset of cells in the nucleus accumbens showed phasic activity following a response reinforced by food, water, or sucrose. Cells showed a similar pattern of activity when cocaine was response-contingent, but these cells belonged to an entirely different population. The apparent magnitude of reward (water vs. sucrose) was not reflected in accumbens firing rate. Chang, Janak, and Woodward (1998) likewise found that different cells in the nucleus accumbens were responsive to cocaine- as compared with heroin-reinforced behavior.

These studies suggest that the nucleus accumbens codes different reinforcers in a similar way, but on different neurons. There may be enough accumbens neurons to account for the variety of reinforcing stimuli in the environment, but it would be interesting to evaluate activity in this nucleus following delivery of a wider variety of reinforcers. It also is of considerable interest to the neuroscience of drug abuse to evaluate neural responses to drug reward in other brain regions. For example, although the accumbens appears not to play a role in distinguishing reinforcer magnitude, regions such as the orbitofrontal and posterior parietal cortex, as shown in the previous section, seem to reflect these differences. How do these areas respond in animals given access to different doses of cocaine, or to food versus cocaine? Would responses to cocaine change during the development of cocaine addiction? Questions of this type may be more useful in approaching the neuroscience of drug abuse than research on how drug administration alters brain molecular biology.

Dopamine has long been considered to be involved in the mediation of reinforcement. Schultz (1998), for example, measured electrophysiological activity from dopamine cells in monkey midbrain and found that these cells fired when unsignaled (unpredictable) food was delivered to the animals. When food delivery was signaled, the dopamine cells ceased to respond to the food delivery and responded instead to the predicting signal.
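
These findings are often formalized as a reward prediction error, the difference between obtained and predicted reward, although that interpretation is not developed in this article. The Python sketch below is a deliberately simplified, hypothetical two-event version of such a model. It assumes that cue onset is itself unpredictable (so the prediction just before the cue is zero), that the cue's learned value is updated with a fixed learning rate `alpha`, and that reward magnitude is 1. The function name and parameters are illustrative only.

```python
def prediction_errors(n_trials, signaled=True, alpha=0.2, reward=1.0):
    """Return (error_at_cue, error_at_food) for each trial.

    Simplifying assumptions: cue onset is never predicted, the cue's value
    v_cue is learned with rate alpha, and unsignaled trials have no cue.
    """
    v_cue = 0.0
    errors = []
    for _ in range(n_trials):
        if signaled:
            error_at_cue = v_cue             # unexpected cue carries its learned value
            error_at_food = reward - v_cue   # food surprises only to the extent it was not predicted
            v_cue += alpha * error_at_food   # prediction moves toward the obtained reward
        else:
            error_at_cue = 0.0               # no cue is presented
            error_at_food = reward           # food is never predicted
        errors.append((error_at_cue, error_at_food))
    return errors


if __name__ == "__main__":
    signaled = prediction_errors(50)
    print("first signaled trial:", signaled[0])
    print("last signaled trial:", tuple(round(e, 3) for e in signaled[-1]))
    print("last unsignaled trial:", prediction_errors(50, signaled=False)[-1])
```

Early in training the error, like the dopamine response Schultz described, occurs at food delivery; after training it occurs at the signal, whereas unsignaled food continues to produce an error at delivery.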

A relation between dopamine release in the nucleus accumbens and administration of drugs of abuse was reported some years ago (Di Chiara & Imperato, 1988) and has been followed up by several investigators. This relation was based on measurements of extracellular dopamine levels sampled over several minutes following drug delivery. The recent development of fast-scan cyclic voltammetry, a technique that measures extracellular dopamine in neural tissue on a subsecond time scale, has made it possible to relate the temporal pattern of dopamine changes much more closely to ongoing operant behavior. Because changes in extracellular dopamine can now be monitored on nearly the same time scale as the firing rates of dopamine neurons, interesting comparisons between the two measures eventually should be possible. Although only a few studies to date have examined dopamine release using fast-scan cyclic voltammetry during ongoing operant behavior, more will soon be known about how dopamine and other neurochemicals change during various types of ongoing behavior.

Roitman, Stuber, Phillips, Wightman, and Carelli (2004) described dopamine release in the ventral striatum of rats when each lever press resulted in intraoral sucrose delivery. Each time the lever and cue light were presented, dopamine levels in the ventral striatum began to increase, peaking at the time of the lever press. Dopamine levels then declined quickly to baseline during delivery of the sucrose solution. By comparison, these cues did not evoke dopamine increases in animals that had not learned the response-sucrose contingency, and the light-tone stimulus alone also did not change dopamine levels in untrained rats. Interestingly, although rats typically responded immediately after the lever was presented, on some occasions they paused for nearly 30 s before responding. On these occasions, the cue-induced increase in dopamine was smaller than when the latency to respond was short. However, dopamine levels again increased just before the press, peaking at the time of the response, in a pattern similar to that obtained when latencies were short. This suggested that “phasic dopamine is time-locked to approach behavior,” and that it may have a specific role in selecting the motor output related to the cue.
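
The inference that dopamine is time-locked to the response rather than to the cue rests on comparing averages aligned to different events. The Python sketch below uses entirely synthetic traces, not data or analysis code from Roitman et al. (2004). It assumes that every trial contains a single Gaussian-shaped transient peaking exactly at the lever press, with response latencies that vary across trials. The function names, transient width, and analysis window are hypothetical choices.

```python
import math


def transient(t, peak_time, width=0.5):
    """Hypothetical Gaussian-shaped dopamine transient centered on peak_time (s)."""
    return math.exp(-((t - peak_time) ** 2) / (2 * width ** 2))


def event_aligned_average(latencies, align_to, window=(-5.0, 5.0), dt=0.1):
    """Average synthetic traces aligned to cue onset ("cue") or to the lever
    press ("press"). On each trial the cue occurs at t = 0, the press at
    t = latency, and the transient peaks at the press.
    Returns (times, mean_trace), with times relative to the alignment event."""
    if align_to not in ("cue", "press"):
        raise ValueError("align_to must be 'cue' or 'press'")
    n_bins = int(round((window[1] - window[0]) / dt)) + 1
    times = [window[0] + i * dt for i in range(n_bins)]
    mean = [0.0] * n_bins
    for lat in latencies:
        offset = 0.0 if align_to == "cue" else lat  # event time on this trial
        for i, t_rel in enumerate(times):
            mean[i] += transient(t_rel + offset, peak_time=lat)
    return times, [m / len(latencies) for m in mean]


if __name__ == "__main__":
    latencies = [0.5, 1.0, 1.5, 30.0]  # one long pause, as on some trials
    _, cue_avg = event_aligned_average(latencies, "cue")
    _, press_avg = event_aligned_average(latencies, "press")
    print("peak of cue-aligned average:", round(max(cue_avg), 3))
    print("peak of press-aligned average:", round(max(press_avg), 3))
```

In this toy example the long-latency trial contributes nothing to the cue-aligned average within the window but contributes fully to the press-aligned one, so the press-aligned peak is sharper and larger, which is the signature of a response-locked signal.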

This same group (Phillips, Stuber, Heien, Wightman, & Carelli, 2003) evaluated subsecond dopamine release in the ventral striatum during cocaine self-administration sessions in rats. As with sucrose-reinforced responding, dopamine began to increase as the rat approached the lever and peaked just after the response was made. This finding, like the results obtained with sucrose-reinforced responding, suggests that accumbens dopamine release has an important motor component. Because the dopamine increase consistently preceded the motor response, the investigators attempted to reproduce this pattern of release in the ventral striatum by electrically stimulating the ventral tegmentum every 120 s throughout an otherwise normal cocaine self-administration session. Ventral tegmental stimulation was followed by a rapid increase in accumbens dopamine and by behavioral sequences that typically ended in a lever press.

These latter two studies of dopamine release in the nucleus accumbens during behavior reinforced either by food or by cocaine appear inconsistent with earlier studies of the electrophysiological responses of dopamine cells to signaled and unsignaled food delivery. In Schultz's studies (e.g., Schultz, 1998), there was no response contingency, and dopamine cells fired in response to the signal when food delivery was signaled or, if no signal was given, in response to the food delivery itself. In the studies by Carelli and colleagues (Phillips et al., 2003; Roitman et al., 2004), a response contingency was trained, and dopamine release in the accumbens appeared to accompany the motor response rather than the delivery of the sucrose or cocaine reinforcer. Interestingly, neurochemical activity, like electrical activity, appears to be similar regardless of whether drug or non-drug reinforcers are delivered. Although this similarity may result from an identical response requirement, it remains a provocative finding supporting the notion that neural systems may not treat drugs differently from other reinforcers, at least in animals with rather limited drug exposure. Research of this type thus might eventually help to test a behavioral approach to drug addiction.

Conclusions

The techniques developed by and available to neuroscientists are truly awesome and will certainly become even more so in coming years. The ability to detect or modify gene expression in local brain areas at specific times in development, to measure real-time changes in neurotransmitter levels, and to watch the electrical responses of single neurons in behaving animals has begun to make inroads into our understanding of an extremely complex nervous system. Neuroscientists almost always attempt to relate their findings to behavior, and collaboration with behavioral scientists could only benefit both disciplines. The study in which a correlation was observed between brain activity and monkeys' responding on concurrent VI schedules (Sugrue et al., 2004) provides an excellent example of such a collaboration, in which sophisticated operant techniques were used to examine reward circuitry in the brain. This and similar studies of the neuroscience of reinforcement and choice seem especially promising for determining how the brain changes during the process of drug addiction. This area is still in its infancy and faces important challenges. For example, is observed brain activity associated with reinforcement or with the motor response that accompanies reinforcement? Measuring neurological correlates of reinforcement with minimal or no overt behavior is another challenge to which behavioral scientists must rise.

Unfortunately, there has been limited interaction between behavioral scientists and most of the laboratories directly involved in the neuroscience of drug addiction. This may be the result of fundamental differences in the constructs invoked to describe the development of addiction. Behaviorists reject the prevalent neuroscientific notion that drugs themselves are responsible for the development of addiction, and see addiction not primarily as a “brain disease,” but as a behavioral disorder that cannot be separated from the prevailing and historical contingencies of reinforcement.

In addition to the differences in the approach to addiction, the absence of collaboration between these groups of scientists also can be attributed to the fact that behavioral scientists, even those of us interested in drug-related behavior, have not established and validated a model of addiction that could be used by neuroscientists to test, extend, and perhaps refute their hypotheses. Such a model might involve reliable measures of reinforcer efficacy or strength (e.g., of a drug reinforcer) or of reinforcer preference (e.g., between food and drug), and of how these might change as a consequence of chronic drug administration or drug self-administration.

Behavioral scientists have much to offer neuroscientists, and drug addiction might be an appropriate place for these two disciplines to meet. In addition, neuroscientific data ultimately could be of interest to behavior analysts for more fundamental reasons, such as closing temporal gaps in demonstrated functional relations between historical variables and current behavior (Skinner, 1974), and relating current behavior to events at the neurological level when information about the historical environment is inaccessible. If we behavioral scientists can rise to the challenge that neuroscience presents, and if neuroscientists can appreciate the improvements we might be able to offer them, there is clearly much to be gained in both of these areas of scientific endeavor.

Acknowledgments

Preparation of this review was supported by USPHS grants AA 013713 and DA 015449.

References

- Ahmed S.H, Koob G.F. Transition from moderate to excessive drug intake: Change in hedonic set point. Science. 1998;282:298–300. doi: 10.1126/science.282.5387.298.

- Ahmed S.H, Walker J.R, Koob G.F. Persistent increase in the motivation to take heroin in rats with a history of drug escalation. Neuropsychopharmacology. 2000;22:413–421. doi: 10.1016/S0893-133X(99)00133-5.

- Alexander G.E, DeLong M.R, Strick P.L. Parallel organization of functionally segregated circuits linking basal ganglia and cortex. Annual Review of Neuroscience. 1986;9:357–381. doi: 10.1146/annurev.ne.09.030186.002041.

- Alheid G.F. Extended amygdala and basal forebrain. Annals of the New York Academy of Sciences. 2003;985:185–205. doi: 10.1111/j.1749-6632.2003.tb07082.x.

- Bardo M.T, Bevins R.A. Conditioned place preference: what does it add to our preclinical understanding of drug reward? Psychopharmacology. 2000;153:31–43. doi: 10.1007/s002130000569.

- Barraclough D.J, Conroy M.L, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nature Neuroscience. 2004;7:404–410. doi: 10.1038/nn1209.

- Barrot M, Olivier J.D, Perrotti L.I, DiLeone R.J, Berton O, Eisch A.J, Impey S, Storm D.R, Neve R.L, Yin J.C, Zachariou V, Nestler E.J. CREB activity in the nucleus accumbens shell controls gating of behavioral responses to emotional stimuli. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:11435–11440. doi: 10.1073/pnas.172091899.

- Cardinal R.N, Everitt B.J. Neural and psychological mechanisms underlying appetitive learning: Links to drug addiction. Current Opinion in Neurobiology. 2004;14:156–162. doi: 10.1016/j.conb.2004.03.004.

- Carelli R.M. Nucleus accumbens cell firing during goal-directed behaviors for cocaine vs “natural” reinforcement. Physiology and Behavior. 2002;76:379–387. doi: 10.1016/s0031-9384(02)00760-6.

- Centers for Disease Control and Prevention. The Great American Smokeout: November 18, 2004. MMWR. 2004;53:1035–1036.

- Chang J.Y, Janak P.H, Woodward D.J. Comparison of mesocorticolimbic neuronal responses during cocaine and heroin self-administration in freely moving rats. Journal of Neuroscience. 1998;18:3098–3115. doi: 10.1523/JNEUROSCI.18-08-03098.1998.

- Chen K, Kandel D.B. The natural history of drug use from adolescence to the mid-thirties in a general population sample. American Journal of Public Health. 1995;85:41–47. doi: 10.2105/ajph.85.1.41.

- Colby C.R, Whisler K, Steffen C, Nestler E.J, Self D.W. Striatal cell type-specific overexpression of ΔFosB enhances incentive for cocaine. Journal of Neuroscience. 2003;23:2488–2493. doi: 10.1523/JNEUROSCI.23-06-02488.2003.

- Colby C.L, Goldberg M.E. Space and attention in parietal cortex. Annual Review of Neuroscience. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Cromwell H.C, Schultz W. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. Journal of Neurophysiology. 2003;89:2823–2838. doi: 10.1152/jn.01014.2002.

- Davison M, Baum W.M. Choice in a variable environment: Every reinforcer counts. Journal of the Experimental Analysis of Behavior. 2000;74:1–24. doi: 10.1901/jeab.2000.74-1.

- Deadwyler S.A, Hayashizaki S, Cheer J, Hampson R.E. Reward, memory and substance abuse: Functional neuronal circuits in the nucleus accumbens. Neuroscience and Biobehavioral Reviews. 2004;27:703–711. doi: 10.1016/j.neubiorev.2003.11.011.

- Deneau G.A, Yanagita T, Seevers M.H. Self-administration of psychoactive substances by the monkey. Psychopharmacologia. 1969;16:30–48. doi: 10.1007/BF00405254.

- Di Chiara G, Imperato A. Drugs abused by humans preferentially increase synaptic dopamine concentrations in the mesolimbic system of freely moving rats. Proceedings of the National Academy of Sciences of the United States of America. 1988;85:5274–5278. doi: 10.1073/pnas.85.14.5274.

- Glimcher P.W. The neurobiology of visual-saccadic decision making. Annual Review of Neuroscience. 2003;26:133–179. doi: 10.1146/annurev.neuro.26.010302.081134.

- Gold J.I, Shadlen M.N. Neural computations that underlie decisions about sensory stimuli. Trends in Cognitive Sciences. 2001;5:10–16. doi: 10.1016/s1364-6613(00)01567-9.

- Gold J.I, Shadlen M.N. Banburismus and the brain: Decoding the relationship between sensory stimuli, decisions, and reward. Neuron. 2002;36:299–308. doi: 10.1016/s0896-6273(02)00971-6.

- Griffiths R.R, Balster R.L. Opioids: Similarity between evaluations of subjective effects and animal self-administration results. Clinical Pharmacology & Therapeutics. 1979;25:611–617. doi: 10.1002/cpt1979255part1611.

- Hassani O.K, Cromwell H.C, Schultz W. Influence of expectation of different rewards on behavior-related neuronal activity in the striatum. Journal of Neurophysiology. 2001;85:2477–2489. doi: 10.1152/jn.2001.85.6.2477.

- Heimer L. A new anatomical framework for neuropsychiatric disorders and drug abuse. American Journal of Psychiatry. 2003;160:1726–1739. doi: 10.1176/appi.ajp.160.10.1726.

- Herrnstein R.J. Relative and absolute strength of response as a function of frequency of reinforcement. Journal of the Experimental Analysis of Behavior. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Higgins S.T, Budney A.J, Bickel W.K, Hughes J.R, Foerg F, Badger G. Achieving cocaine abstinence with a behavioral approach. American Journal of Psychiatry. 1993;150:763–769. doi: 10.1176/ajp.150.5.763.

- Higgins S.T, Heil S.H, Lussier J.P. Clinical implications of reinforcement as a determinant of substance use disorders. Annual Review of Psychology. 2004;55:431–461. doi: 10.1146/annurev.psych.55.090902.142033.

- Horvitz J.C. Mesolimbocortical and nigrostriatal dopamine responses to salient non-reward events. Neuroscience. 2000;96:651–656. doi: 10.1016/s0306-4522(00)00019-1.

- Kalivas P.W. Recent understanding in the mechanisms of addiction. Current Psychiatry Reports. 2004;6:347–351. doi: 10.1007/s11920-004-0021-0.

- Kalivas P.W, Churchill L, Klitenick M.A. The circuitry mediating the translation of motivational stimuli into adaptive motor responses. In: Kalivas P.W, Barnes C.D, editors. Limbic motor circuits and neuropsychiatry. Boca Raton, FL: CRC Press; 1993. pp. 237–287.

- Katz J.L, Higgins S.T. The validity of the reinstatement model of craving and relapse to drug use. Psychopharmacology. 2003;168:21–30. doi: 10.1007/s00213-003-1441-y.

- Koob G.F, Le Moal M. Drug abuse: Hedonic homeostatic dysregulation. Science. 1997;278:52–58. doi: 10.1126/science.278.5335.52.

- LeDoux J.E. Emotion circuits in the brain. Annual Review of Neuroscience. 2000;23:155–184. doi: 10.1146/annurev.neuro.23.1.155.

- Leon M.I, Shadlen M.N. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24:415–425. doi: 10.1016/s0896-6273(00)80854-5.

- Lubman D.I, Yucel M, Pantelis C. Addiction, a condition of compulsive behavior? Neuroimaging and neuropsychological evidence of inhibitory dysregulation. Addiction. 2004;99:1491–1502. doi: 10.1111/j.1360-0443.2004.00808.x.

- McClung C.A, Nestler E.J. Regulation of gene expression and cocaine reward by CREB and ΔFosB. Nature Neuroscience. 2003;6:1208–1215. doi: 10.1038/nn1143.

- Nestler E.J. Historical review: Molecular and cellular mechanisms of opiate and cocaine addiction. Trends in Pharmacological Sciences. 2004;25:210–218. doi: 10.1016/j.tips.2004.02.005.

- Phillips P.E.M, Stuber G.D, Heien M.L, Wightman R.M, Carelli R.M. Subsecond dopamine release promotes cocaine seeking. Nature. 2003;422:614–618. doi: 10.1038/nature01476.

- Platt M.L, Glimcher P.W. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Robbins T.W, Everitt B.J. Neurobehavioural mechanisms of reward and motivation. Current Opinion in Neurobiology. 1996;6:228–236. doi: 10.1016/s0959-4388(96)80077-8.

- Robins L.N. The Nathan B. Eddy Lecture: Challenging conventional wisdom about drug abuse. NIDA Research Monograph. 1994;140:30–45.

- Robinson T.E, Berridge K.C. The neural basis of drug craving: An incentive-sensitization theory of addiction. Brain Research Reviews. 1993;18:247–291. doi: 10.1016/0165-0173(93)90013-p.

- Robinson T.E, Berridge K.C. The psychology and neurobiology of addiction: An incentive-sensitization view. Addiction. 2000;95:S91–S117. doi: 10.1080/09652140050111681.

- Robinson T.E, Kolb B. Structural plasticity associated with exposure to drugs of abuse. Neuropharmacology. 2004;47(Suppl. 1):33–46. doi: 10.1016/j.neuropharm.2004.06.025.

- Roesch M.R, Olson C.R. Impact of expected reward on neuronal activity in prefrontal cortex, frontal and supplementary eye fields and premotor cortex. Journal of Neurophysiology. 2003;90:1766–1789. doi: 10.1152/jn.00019.2003.

- Roesch M.R, Olson C.R. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- Roitman M.F, Stuber G.D, Phillips P.E.M, Wightman R.M, Carelli R.M. Dopamine operates as a subsecond modulator of food seeking. Journal of Neuroscience. 2004;24:1265–1271. doi: 10.1523/JNEUROSCI.3823-03.2004.

- Schultz W. Predictive reward signal of dopamine neurons. Journal of Neurophysiology. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Schultz W. Neural coding of basic reward terms of animal learning theory, game theory, microeconomics and behavioural ecology. Current Opinion in Neurobiology. 2004;14:139–147. doi: 10.1016/j.conb.2004.03.017.

- Skinner B.F. About behaviorism. New York: Alfred A. Knopf; 1974.

- Skinner B.F. The behavior of organisms: An experimental analysis. Acton, MA: Copley Publishing Group; 1991. (Original work published 1938.)

- Sugrue L.P, Corrado G.S, Newsome W.T. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- Szumlinski K.K, Dehoff M.H, Kang S.H, Frys K.A, Lominac K.D, Klugmann M, et al. Homer proteins regulate sensitivity to cocaine. Neuron. 2004;43:401–413. doi: 10.1016/j.neuron.2004.07.019.

- Tremblay L, Schultz W. Relative reward preference in primate orbital frontal cortex. Nature. 1999;398:704–708. doi: 10.1038/19525.

- Watanabe M, Cromwell H.C, Tremblay L, Hollerman J.R, Hikosaka K, Schultz W. Behavioral reactions reflecting differential reward expectations in monkeys. Experimental Brain Research. 2001;140:511–518. doi: 10.1007/s002210100856.

- Watanabe M, Hikosaka K, Sakagami M, Shirakawa S. Coding and monitoring of motivational context in the primate prefrontal cortex. Journal of Neuroscience. 2002;22:2391–2400. doi: 10.1523/JNEUROSCI.22-06-02391.2002.