Abstract

Objective To investigate if published studies tend to report favourable cost effectiveness ratios (below $20 000, $50 000, and $100 000 per quality adjusted life year (QALY) gained) and evaluate study characteristics associated with this phenomenon.

Design Systematic review.

Studies reviewed 494 English language studies, published up to December 2001, that measured health effects in QALYs; studies were identified from the Medline, HealthSTAR, CancerLit, Current Contents, and EconLit databases.

Main outcome measures Incremental cost effectiveness ratios, expressed in US dollars of the year of publication.

Results Approximately half the reported incremental cost effectiveness ratios (712 of 1433) were below $20 000/QALY. Studies funded by industry were more likely to report cost effectiveness ratios below $20 000/QALY (adjusted odds ratio 2.1, 95% confidence interval 1.3 to 3.3), $50 000/QALY (3.2, 1.8 to 5.7), and $100 000/QALY (3.3, 1.6 to 6.8). Studies of higher methodological quality (adjusted odds ratio 0.58, 0.37 to 0.91) and those conducted in Europe (0.59, 0.33 to 1.1) and the United States (0.44, 0.26 to 0.76) rather than elsewhere were less likely to report ratios below $20 000/QALY.

Conclusion Most published analyses report favourable incremental cost effectiveness ratios. Studies funded by industry were more likely to report ratios below the three thresholds. Studies of higher methodological quality and those conducted in Europe and the US rather than elsewhere were less likely to report ratios below $20 000/QALY.

Introduction

Cost effectiveness analysis can help inform policy makers about better ways to allocate limited resources.1-3 Some form of cost effectiveness analysis is now required by many insurers before health interventions are covered.1,4,5 The quality adjusted life year (QALY) allows the effectiveness of a wide range of interventions to be compared on a common scale. Cost effectiveness analysis produces a numerical ratio, the incremental cost effectiveness ratio, expressed in dollars per QALY. This ratio is the difference in cost between a new diagnostic test or treatment and the current one, divided by the difference in QALYs gained.
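The incremental cost effectiveness ratio is simple arithmetic: incremental cost divided by incremental QALYs. The minimal Python sketch below illustrates it; the function name and the cost and QALY figures are hypothetical and serve only to show the calculation.

```python
def icer(cost_new: float, cost_current: float, qaly_new: float, qaly_current: float) -> float:
    """Incremental cost effectiveness ratio: extra dollars spent per extra QALY gained."""
    delta_cost = cost_new - cost_current
    delta_qaly = qaly_new - qaly_current
    if delta_qaly == 0:
        # The ratio is undefined when both strategies yield the same health outcome.
        raise ValueError("incremental QALYs are zero; the ratio is undefined")
    return delta_cost / delta_qaly


# Hypothetical example: the new treatment costs $12 000 more and adds 0.5 QALY.
print(icer(cost_new=30_000, cost_current=18_000, qaly_new=2.5, qaly_current=2.0))  # 24000.0
```

A ratio of $24 000/QALY would lie between the $20 000/QALY and $50 000/QALY thresholds that are commonly proposed.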

Interpreting the results of cost effectiveness analysis can be problematic, making it difficult to decide whether to adopt a diagnostic test or treatment. The threshold for adoption is thought to be somewhere between $20 000 (£11 300, €16 500)/QALY and $100 000/QALY, with thresholds of $50-60 000/QALY frequently proposed.6-9

Regardless of the true value of the willingness of society to pay, studies of healthcare interventions would be expected to report a wide range of incremental cost effectiveness ratios. When published ratios cluster around a proposed threshold, bias may exist, and health policies based on their values may be flawed.

To describe the distribution of reported incremental cost effectiveness ratios and characteristics of studies associated with favourable ratios, we systematically reviewed cost effectiveness studies in health care that used QALYs as an outcome measure. We hypothesised that authors tend to report favourable incremental cost effectiveness ratios, such as those below $50 000 per QALY.

Methods

We conducted a systematic literature search of the Medline, HealthSTAR, CancerLit, Current Contents Connect (all editions), and EconLit databases for all original cost effectiveness analyses published in English between 1976 and 2001 that expressed health outcomes in QALYs.10-13 The results of such analyses are expressed as incremental cost per QALY gained.1 We used a standard data collection form, and two reviewers independently evaluated each study and abstracted the data. Disagreements were resolved by consensus. Details of the Tufts-NEMC CEA Registry (formerly the Harvard School of Public Health CEA Registry) are available online (http://tufts-nemc.org/cearegistry).

For each article, we documented the name of the journal, the year of publication, the disease category, and the country where the study was carried out. We used the Science Citation Index database to assign each journal its impact factor for the year before publication. The sources of funding were classified as “industry” (partial or complete funding by a pharmaceutical or medical device company indicated in the manuscript) or “non-industry.” Studies for which a funding source was not listed were classified as “not specified.” We also assigned a quality score to each article, ranging from 1 (low) to 7 (high), based on the overall quality of the study methods, assumptions, and reporting practices.10
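The coding rules in this paragraph can be expressed as two small functions. This is a hypothetical sketch, not the authors' data collection form: it assumes that each reviewer assigns a whole-number quality score from 1 to 7, so the mean of two reviewers falls on half points, and it uses the score bands reported in table 1.

```python
from typing import Optional


def funding_category(industry_funded: Optional[bool]) -> str:
    """Map a manuscript's funding statement to the three categories used in this review.

    industry_funded is True for partial or complete funding by a pharmaceutical or
    medical device company, False for other declared sources, and None if no
    funding source is listed.
    """
    if industry_funded is None:
        return "not specified"
    return "industry" if industry_funded else "non-industry"


def quality_band(score_reviewer_a: int, score_reviewer_b: int) -> str:
    """Average two reviewers' 1-7 quality scores and return the table 1 band."""
    for score in (score_reviewer_a, score_reviewer_b):
        if not 1 <= score <= 7:
            raise ValueError("quality scores range from 1 (low) to 7 (high)")
    mean_score = (score_reviewer_a + score_reviewer_b) / 2
    if mean_score <= 4.0:
        return "1.0-4.0"
    if mean_score <= 5.0:
        return "4.5-5.0"
    return "5.5-7.0"


print(funding_category(None), quality_band(5, 6))  # not specified 5.5-7.0
```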

Because cost effectiveness analyses often compare several programmes and include scenarios specific to patient subgroups or settings, each study may have contributed more than one cost effectiveness ratio. All cost effectiveness ratios were converted to US dollars at the exchange rate prevailing in the year of publication.14 Because we wanted to test whether the ratios targeted particular thresholds of society's willingness to pay, such as $50 000/QALY, we did not adjust the ratios to constant dollars but kept them in the dollars of the year of publication.
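The conversion rule (exchange rate of the publication year, no inflation adjustment) can be sketched as follows. The function name, the rate table, and the figure of 1.5 US dollars per pound are hypothetical placeholders, not historical rates; the review itself took its rates from the Federal Reserve Bank of St Louis series.14

```python
def to_publication_year_usd(ratio: float, currency: str, pub_year: int,
                            usd_per_unit: dict) -> float:
    """Convert a cost per QALY to US dollars at the publication year's exchange rate.

    No inflation adjustment is applied, so the result stays in the nominal
    dollars of the publication year rather than in constant dollars.
    usd_per_unit maps (currency code, year) to US dollars per unit of that currency.
    """
    if currency == "USD":
        return ratio
    try:
        rate = usd_per_unit[(currency, pub_year)]
    except KeyError:
        raise KeyError(f"no exchange rate for {currency} in {pub_year}") from None
    return ratio * rate


# Hypothetical rate table (illustrative value only, not a real historical rate).
rates = {("GBP", 1999): 1.5}
print(to_publication_year_usd(10_000, "GBP", 1999, rates))  # 15000.0
```

Keeping nominal dollars matches the stated aim of the review: to test whether ratios cluster below fixed thresholds such as $50 000/QALY.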

Statistical analysis

We analysed the distribution of all incremental cost effectiveness ratios and of the smallest and largest ratios from each study. We excluded nine ratios for which both the incremental cost and the incremental QALYs were negative (that is, interventions that saved money but reduced health). Although such interventions may be economically efficient, decision makers might not want to adopt interventions associated with reduced health.15
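The categories reported in the results (cost saving, dominated, and increased cost with improved health), together with the excluded cases described here, follow from the signs of the incremental cost and the incremental QALYs. The paper does not state how comparisons with a difference of exactly zero were handled, so the boundary choices in this minimal sketch are assumptions, and the function name is hypothetical.

```python
def classify_comparison(delta_cost: float, delta_qaly: float) -> str:
    """Classify a comparison by the signs of incremental cost and incremental QALYs."""
    if delta_cost < 0 and delta_qaly < 0:
        # Saves money but loses health: excluded from the analysis in this review.
        return "excluded"
    if delta_cost <= 0 and delta_qaly >= 0:
        # Saves money (or is cost neutral) without loss of health; zero boundary assumed.
        return "cost saving"
    if delta_cost >= 0 and delta_qaly <= 0:
        # Costs at least as much and yields no more health than the comparator.
        return "dominated"
    # Only remaining case: delta_cost > 0 and delta_qaly > 0, where a ratio is reported.
    return "increased cost, improved health"


for delta_cost, delta_qaly in [(-500, 0.1), (800, -0.2), (12_000, 0.5), (-300, -0.05)]:
    print(classify_comparison(delta_cost, delta_qaly))
```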

Generalised estimating equations were used to evaluate study characteristics associated with incremental cost effectiveness ratios below the previously proposed threshold values of $20 000, $50 000, and $100 000/QALY.6,8,9 We used these equations because they take into account the correlation between cost effectiveness ratios derived from the same study.16 We estimated odds ratios for associations between study characteristics and the presence of a favourable cost effectiveness ratio. Adjusted odds ratios were estimated by fitting a non-parsimonious model that included all predictor variables specified a priori.
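The paper states only that generalised estimating equations were fitted in SAS; it does not report the working correlation structure. The sketch below is a minimal NumPy version of a logistic GEE with an independence working correlation. It gives ordinary logistic regression point estimates with robust (sandwich) standard errors, which allow ratios from the same study to be correlated. The function name, variable names, and toy data are hypothetical.

```python
import numpy as np


def gee_logistic_independence(X, y, study_id, max_iter=50, tol=1e-10):
    """Logistic GEE with an independence working correlation.

    X: (n, p) design matrix including an intercept column.
    y: (n,) 1 if a ratio is below the threshold, else 0.
    study_id: (n,) label of the study each ratio came from (the cluster).
    Returns the coefficients (log odds ratios) and robust standard errors.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    study_id = np.asarray(study_id)
    beta = np.zeros(X.shape[1])
    for _ in range(max_iter):
        mu = 1.0 / (1.0 + np.exp(-X @ beta))
        info = X.T @ (X * (mu * (1.0 - mu))[:, None])
        step = np.linalg.solve(info, X.T @ (y - mu))  # Fisher scoring update
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    mu = 1.0 / (1.0 + np.exp(-X @ beta))
    bread = np.linalg.inv(X.T @ (X * (mu * (1.0 - mu))[:, None]))
    meat = np.zeros_like(bread)
    for sid in np.unique(study_id):
        rows = study_id == sid
        u = X[rows].T @ (y[rows] - mu[rows])  # summed score of one study's ratios
        meat += np.outer(u, u)
    robust_cov = bread @ meat @ bread  # sandwich estimator
    return beta, np.sqrt(np.diag(robust_cov))


# Hypothetical toy data: 18 ratios from 7 studies; column 1 flags industry funding.
study_id = list("AAABBBCCDD") + list("EEEFFFGG")
industry = [0] * 10 + [1] * 8
below_20k = [1, 0, 0, 1, 1, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 1, 1, 0]
X = np.column_stack([np.ones(len(industry)), industry])

beta, se = gee_logistic_independence(X, below_20k, study_id)
odds_ratio = np.exp(beta[1])  # equals the crude odds ratio (6/2) / (4/6) = 4.5
ci = np.exp(beta[1] + np.array([-1.96, 1.96]) * se[1])
print(f"OR {odds_ratio:.2f} (95% CI {ci[0]:.2f} to {ci[1]:.2f})")
```

With an exchangeable or other working correlation, the point estimates would generally differ somewhat. The `GEE` class in statsmodels supports such structures if a library implementation is preferred.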

We used SAS statistical software version 8.2 for all analyses. Two sided P values less than 0.05 were considered significant.

Results

We screened more than 3300 study abstracts and identified 533 original cost-utility analyses. Thirty nine studies were excluded because they did not report numerical incremental cost effectiveness ratios. The remaining 494 studies reported a total of 1433 cost effectiveness ratios, with a median of 2.0 (interquartile range 1-3) and a range of 1-20 ratios per study. Overall, 130 incremental cost effectiveness ratios (9%) were reported as cost saving (they saved money and improved health simultaneously), 124 (9%) were dominated by their comparators (had worse health outcomes and increased costs), and 1179 (82%) increased costs but improved health outcomes.

Most studies were published in the 1990s (table 1). The citation impact factor in the year before publication was available for 449 studies (91%). Cardiovascular and infectious disease interventions were the most commonly studied. Most studies were from the United States. About 18% were sponsored by industry, almost half were sponsored by non-industry sources, and sponsorship could not be determined in 34% of studies.

Table 1.

Characteristics of 494 cost-utility analyses of health interventions published between 1976 and 2001

| Study characteristic | No. (%) |
|---|---|
| Publication year | |
| 1976-91 | 47 (9) |
| 1992-6 | 125 (25) |
| 1997-2001 | 322 (65) |
| Journal impact factor* | |
| <2 | 157 (32) |
| 2-4 | 137 (28) |
| >4 | 155 (31) |
| Not available | 45 (9) |
| Disease category | |
| Cardiovascular | 110 (22) |
| Endocrine | 30 (6) |
| Infectious | 94 (19) |
| Musculoskeletal | 21 (4) |
| Neoplastic | 76 (15) |
| Neurological or psychiatric | 43 (9) |
| Other | 120 (24) |
| Sponsorship or funding source | |
| Non-industry | 240 (49) |
| Industry† | 88 (18) |
| Not specified | 166 (34) |
| Region of study | |
| Europe | 118 (24) |
| United States | 306 (62) |
| Other‡ | 70 (14) |
| Methodological quality§ | |
| 1.0-4.0 | 214 (43) |
| 4.5-5.0 | 159 (32) |
| 5.5-7.0 | 121 (25) |

*Impact factor for that journal in the year before publication of the study.

†Funding by a pharmaceutical or medical device manufacturer.

‡Canada 41 (59%), Australia 18 (26%), Japan 2 (3%), New Zealand 2 (3%), South Africa 2 (3%), other 5 (7%).

§Mean quality scores for the two reviewers.

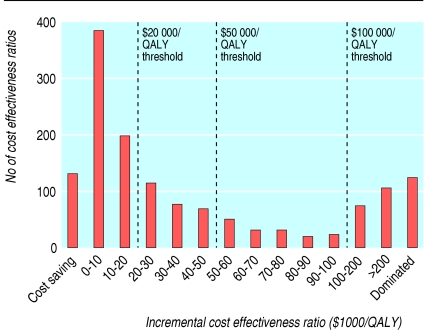

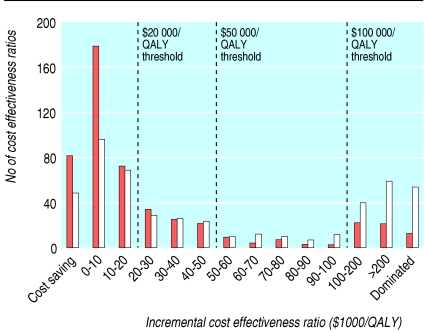

Figure 1 shows the frequency distribution of all 1433 incremental cost effectiveness ratios. The median (interquartile range) ratio per QALY was $20 133 ($4520-74 400). Approximately half of the ratios (712; 50%) were below $20 000/QALY, two thirds (974; 68%) were below $50 000/QALY, and more than three quarters (1129; 79%) were below $100 000/QALY. When analysed according to study sponsorship, median (interquartile range) ratios per QALY were $13 083 ($3600-33 000) for studies sponsored by industry and $27 400 ($4600-96 600) for those with non-industry sponsors. The median (interquartile range) ratio per QALY for studies with unknown sponsorship was $18 900 ($4960-64 300). Restricting the analysis to the lowest and highest ratios reported by each study yielded median ratios of $8784/QALY and $31 104/QALY (fig 2).
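The median and the share of ratios below each threshold, as summarised in this paragraph, can be computed as shown below. This is a sketch with hypothetical data, and the function name is illustrative. The paper does not say how cost saving and dominated comparisons entered these summaries, so the convention used here (cost saving coded as $0/QALY, dominated coded as infinitely costly) is an assumption.

```python
import math
from statistics import median

THRESHOLDS = (20_000, 50_000, 100_000)  # dollars per QALY


def summarise_ratios(ratios):
    """Return the median ratio and the share of ratios below each threshold."""
    shares = {t: sum(r < t for r in ratios) / len(ratios) for t in THRESHOLDS}
    return median(ratios), shares


# Hypothetical ratios: one cost saving (coded 0) and one dominated (coded inf).
example = [0.0, 5_000, 15_000, 30_000, 80_000, 150_000, math.inf]
med, shares = summarise_ratios(example)
print(med, shares)

# Check of the published percentages: 712, 974 and 1129 of 1433 ratios.
print([round(100 * k / 1433) for k in (712, 974, 1129)])  # [50, 68, 79]
```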

Fig 1.

Frequency distribution of 1433 incremental cost effectiveness ratios for health interventions

Fig 2.

Frequency distribution of lowest (brown) and highest (white) incremental cost effectiveness ratios in each study

Several study characteristics were associated with reporting incremental cost effectiveness ratios below one or more of the three thresholds (table 2). Journals with a citation impact factor above 4 were less likely to publish ratios below $20 000/QALY (crude odds ratio 0.60, 95% confidence interval 0.42 to 0.86) or $50 000/QALY (crude 0.56, 0.38 to 0.82) than journals with an impact factor below 2. However, these associations were not significant in the multivariable model (table 2).

Table 2.

Characteristics of studies associated with favourable incremental cost effectiveness ratios according to three threshold values. Values are odds ratios (95% confidence intervals)

| Study characteristic | Crude OR (95% CI), <$20 000/QALY | Crude OR (95% CI), <$50 000/QALY | Crude OR (95% CI), <$100 000/QALY | Adjusted OR (95% CI)*, <$20 000/QALY | Adjusted OR (95% CI)*, <$50 000/QALY | Adjusted OR (95% CI)*, <$100 000/QALY |
|---|---|---|---|---|---|---|
| Publication year | | | | | | |
| 1976-91 | 1.6 (0.98 to 2.7) | 1.4 (0.80 to 2.4) | 1.2 (0.67 to 2.3) | 1.6 (0.96 to 2.7) | 1.3 (0.76 to 2.3) | 1.2 (0.61 to 2.2) |
| 1992-6 | 1.3 (0.94 to 1.9) | 1.4 (0.93 to 2.3) | 1.1 (0.68 to 1.6) | 1.3 (0.87 to 1.8) | 1.3 (0.87 to 1.9) | 1.0 (0.64 to 1.6) |
| 1997-2001 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| Journal impact factor† | | | | | | |
| <2 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| 2-4 | 0.62 (0.42 to 0.91) | 0.62 (0.41 to 0.94) | 0.59 (0.38 to 0.94) | 0.75 (0.50 to 1.1) | 0.82 (0.53 to 1.3) | 0.77 (0.47 to 1.2) |
| >4 | 0.60 (0.42 to 0.86) | 0.56 (0.38 to 0.82) | 0.83 (0.53 to 1.3) | 0.95 (0.63 to 1.4) | 0.81 (0.52 to 1.3) | 1.1 (0.66 to 1.9) |
| Disease category | | | | | | |
| Cardiovascular | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| Endocrine | 1.3 (0.68 to 2.6) | 1.2 (0.58 to 2.5) | 1.3 (0.58 to 3.0) | 1.2 (0.56 to 2.4) | 1.1 (0.52 to 2.3) | 1.2 (0.53 to 2.7) |
| Infectious | 1.1 (0.66 to 1.7) | 0.79 (0.48 to 1.3) | 0.74 (0.43 to 1.3) | 1.0 (0.64 to 1.7) | 0.75 (0.44 to 1.3) | 0.71 (0.39 to 1.3) |
| Musculoskeletal | 1.4 (0.60 to 3.3) | 1.3 (0.51 to 3.1) | 1.4 (0.50 to 3.7) | 1.1 (0.43 to 2.7) | 0.89 (0.34 to 2.3) | 1.1 (0.37 to 3.1) |
| Neoplastic | 0.91 (0.56 to 1.5) | 0.79 (0.46 to 1.3) | 0.77 (0.42 to 1.4) | 0.78 (0.47 to 1.3) | 0.64 (0.37 to 1.1) | 0.69 (0.36 to 1.3) |
| Neurological/psychiatric | 0.76 (0.40 to 1.5) | 0.78 (0.40 to 1.5) | 0.66 (0.31 to 1.4) | 0.75 (0.39 to 1.4) | 0.70 (0.34 to 1.4) | 0.61 (0.27 to 1.4) |
| Other | 1.2 (0.75 to 1.8) | 0.67 (0.42 to 1.1) | 0.52 (0.31 to 0.88) | 1.0 (0.63 to 1.6) | 0.53 (0.31 to 0.88) | 0.49 (0.27 to 0.86) |
| Study funding source‡ | | | | | | |
| Non-industry | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| Industry | 2.2 (1.4 to 3.4) | 3.5 (2.0 to 6.1) | 3.4 (1.6 to 7.0) | 2.1 (1.3 to 3.3) | 3.2 (1.8 to 5.7) | 3.3 (1.6 to 6.8) |
| Not specified | 1.3 (0.95 to 1.9) | 1.5 (1.1 to 2.2) | 1.4 (0.93 to 2.1) | 1.3 (0.89 to 1.8) | 1.5 (1.0 to 2.1) | 1.5 (0.97 to 2.2) |
| Region of study | | | | | | |
| Europe | 0.50 (0.28 to 0.89) | 0.43 (0.21 to 0.87) | 0.46 (0.21 to 1.0) | 0.59 (0.33 to 1.1) | 0.42 (0.21 to 0.86) | 0.43 (0.19 to 0.96) |
| United States | 0.35 (0.21 to 0.57) | 0.29 (0.16 to 0.55) | 0.33 (0.16 to 0.66) | 0.44 (0.26 to 0.76) | 0.35 (0.18 to 0.67) | 0.33 (0.16 to 0.68) |
| Other§ | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| Methodological quality¶ | | | | | | |
| 1.0-4.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 |
| 4.5-5.0 | 0.92 (0.64 to 1.3) | 0.95 (0.64 to 1.4) | 0.96 (0.62 to 1.5) | 1.0 (0.70 to 1.5) | 1.1 (0.70 to 1.6) | 1.0 (0.63 to 1.6) |
| 5.5-7.0 | 0.48 (0.33 to 0.70) | 0.57 (0.39 to 0.83) | 0.82 (0.52 to 1.3) | 0.58 (0.37 to 0.91) | 0.72 (0.45 to 1.2) | 0.90 (0.51 to 1.6) |

QALY, quality adjusted life year; OR, odds ratio; CI, confidence interval.

*Adjusted for all other study characteristics.

†Impact factor in the year before publication.

‡Funding by a pharmaceutical or medical device manufacturer.

§Canada 41 (59%), Australia 18 (26%), Japan 2 (3%), New Zealand 2 (3%), South Africa 2 (3%), other 5 (7%).

¶Mean score from two reviewers.

Studies funded by industry were more likely to report cost effectiveness ratios below $20 000/QALY (adjusted odds ratio 2.1, 1.3 to 3.3), $50 000/QALY (3.2, 1.8 to 5.7), or $100 000/QALY (3.3, 1.6 to 6.8) than studies funded by non-industry sources (table 2). Studies carried out in the US and Europe were significantly less likely to find favourable incremental cost effectiveness ratios than studies carried out elsewhere. Studies with methodological quality scores of 5.5 or above were significantly less likely to report ratios below $20 000/QALY (crude odds ratio 0.48, 0.33 to 0.70) and $50 000/QALY (crude 0.57, 0.39 to 0.83). Within the multivariable model, the association with quality remained significant only for cost effectiveness ratios below $20 000/QALY (adjusted odds ratio 0.58, 0.37 to 0.91; table 2).
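A reader can recover the approximate standard error and P value behind any odds ratio in table 2 from its 95% confidence interval. The sketch below assumes that the intervals are Wald intervals on the log odds scale, which is typical of generalised estimating equation output but is not stated in the paper. Because the published values are rounded, the recovered quantities are approximate; the function names are hypothetical.

```python
import math

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal distribution


def se_from_ci(ci_low: float, ci_high: float) -> float:
    """Standard error of log(OR) implied by a Wald 95% confidence interval."""
    return (math.log(ci_high) - math.log(ci_low)) / (2 * Z_975)


def two_sided_p(odds_ratio: float, se_log_or: float) -> float:
    """Two sided Wald P value for the null hypothesis OR = 1."""
    z = abs(math.log(odds_ratio)) / se_log_or
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))


# Adjusted odds ratios from table 2 for ratios below $20 000/QALY.
for label, or_, low, high in [("Industry", 2.1, 1.3, 3.3), ("Europe", 0.59, 0.33, 1.1)]:
    se = se_from_ci(low, high)
    print(f"{label}: SE(log OR) = {se:.3f}, P = {two_sided_p(or_, se):.3f}")
```

The industry estimate gives P of roughly 0.002. The Europe estimate, whose interval includes 1, gives P above 0.05.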

Discussion

About half of all incremental cost effectiveness ratios published over a 25 year period were highly favourable, at less than $20 000/QALY. More than half of the highest ratios reported by each study were below $50 000/QALY. In multivariable analysis, location of the study, methodological quality, and sponsorship were associated with a favourable cost effectiveness ratio. Compared with studies sponsored by non-industry sources, studies sponsored by industry had more than twice the odds of reporting ratios below $20 000/QALY and more than three times the odds of reporting ratios below $50 000/QALY or $100 000/QALY. Studies sponsored by industry were also more likely to be of lower methodological quality and to be published in journals with lower impact factors.

Few studies have described the distribution of reported incremental cost effectiveness ratios.12,17 We reviewed many studies, but we restricted our analysis to those that measured health outcomes with QALYs. Although the QALY remains controversial, it has been endorsed by authoritative bodies, and it potentially allows cost effectiveness analysis to assess both allocative and technical efficiency.1,18,19 It would be interesting to examine whether the use of alternative measures of health outcome results in more or less favourable cost effectiveness ratios. A limitation of our analysis is that some cost effectiveness analyses used in decision making may not have been published.5 However, evidence from unpublished submissions points in the same direction: a study of submissions to the National Institute for Health and Clinical Excellence (NICE) in the United Kingdom found that cost effectiveness ratios submitted by manufacturers were significantly lower than those estimated by assessors from an academic centre for the same technologies.20

Publication bias

We found relatively few published incremental cost effectiveness ratios between $50 000/QALY and $100 000/QALY; many were below $20 000/QALY and some were above $100 000/QALY. This suggests that cost effectiveness analyses tend to report clearly “positive” or “negative” results rather than intermediate ones. There are three possible explanations for these findings. Firstly, they may reflect the true distribution of cost effectiveness ratios for healthcare interventions; perhaps interventions that manufacturers deem economically unattractive are not brought to market.21 Secondly, analysts may not be interested in studying interventions with mid-range cost effectiveness ratios, or some journals may not want to publish such studies. Thirdly, some cost effectiveness analyses may be modelled to yield favourable ratios, or studies with unfavourable ratios may be suppressed. These findings do not tell us whether authors (and their sponsors) fail to report undesirable cost effectiveness results while reporting those that are positive. It is unclear whether publication bias occurs at a conscious or unconscious level. In any case, our results support concerns about the presence of significant and persistent bias in both the conduct and reporting of cost effectiveness analyses.22,23 It could be argued that all cost effectiveness analyses should be registered before they start, as for randomised clinical trials, but this may be unrealistic given the way they are currently conducted.24

If bias is an important explanation for our findings, our results suggest that the “target” cost effectiveness ratio for a healthcare intervention lies between $20 000/QALY and $50 000/QALY. The true willingness of society to pay for an extra QALY is unknown, although some have tried to derive it from economic principles or to infer it from policy decisions.25-27 In the US, the threshold most often used is $50 000/QALY, based on Medicare's coverage of dialysis, although the criteria for selecting this value have been criticised.8,26 Furthermore, interventions may be adopted despite having an unfavourable ratio if other considerations, such as disease burden and health equity, favour their adoption.25

Recent attempts to standardise the conduct and reporting of economic analyses and modelling studies may help prevent the manipulation of studies.1,18,28-30 Such guidelines offer the producers of cost effectiveness analyses, reviewers, readers, and journal editors a framework against which they can assess analyses, although the complexity of models and publication bias remain important issues.5,8,22 Electronic publishing could enhance transparency in modelling by making technical appendices available and improving the presentation of results.3,23 Furthermore, distribution of the underlying decision analysis models to the public should be considered.

Industry sponsorship and the location of the study do not fully explain the tendency for incremental cost effectiveness ratios to fall below desirable thresholds. Fewer than 20% of the studies reviewed were funded by industry, and another 34% did not specify their source of funding. Moreover, the median ratio for studies not sponsored by industry was well below the $50 000/QALY threshold. The higher ratios found in US studies cannot be fully accounted for by foreign exchange rates and inflation, because the US results were similar to those found in European studies. The methodological quality of studies is another factor that should be explored in future research.

Journal editors and reviewers can help reduce publication bias.23,31-33 Potential conflicts of interest of study sponsors and authors need to be scrutinised.23,34-36 One approach, adopted by the New England Journal of Medicine in 1994, is to restrict the publication of cost effectiveness analyses funded by industry if at least one author has direct financial ties with the sponsoring company.37 Journal editors may also show bias by publishing studies with positive results but not those with negative results, although this practice may not be common.33,38-41 One study of economic analyses of antidepressants concluded that differences between sponsored and other analyses could not be explained by selective submission or editorial selection bias alone and probably reflected a more fundamental difference in the studies.42

What is already known on this topic

Cost effectiveness analysis is widely used to inform policy makers about the efficient allocation of resources

Various thresholds for cost effectiveness ratios have been proposed to identify good value, but the distribution of published ratios with respect to these thresholds has not been investigated

What this study adds

Two thirds of published cost effectiveness ratios were below $50 000 per quality adjusted life year (QALY) and only 21% were above $100 000/QALY

Published cost effectiveness analyses are of limited use in identifying health interventions that do not meet popular standards of “cost effectiveness”

Conclusions

More rigour and openness are needed in the discipline of health economics before decision makers and the public can be confident that cost effectiveness analyses are conducted and published in an unbiased manner. Such confidence is a prerequisite for using these analyses to compare health management strategies. A heightened awareness of the limitations of cost effectiveness analyses, and of the factors that may influence their results, may help users to interpret their conclusions.

The paper was presented in abstract form at the Fifth International Congress on Peer Review and Biomedical Publication in Chicago, IL, 16-18 September 2005.

Contributors: All authors contributed to the conception and design of the project, revised the article critically for important intellectual content, and approved the final version. CMB, DRU, JGR, and AB analysed and interpreted the data and helped to draft the article. CMB had access to all of the data and takes responsibility for the integrity of the data and the accuracy of the data analysis. CMB and PJN are guarantors.

Funding: Agency for Healthcare Research and Quality (R01 HS10919). CMB and JGR are recipients of a phase 2 clinician scientist award and a new investigator award, both from the Canadian Institutes of Health Research. DRU holds a career scientist award from the Ontario Ministry of Health.

Competing interests: None declared.

References

1. Gold MR, Siegel JE, Russell LB, Weinstein MC. Cost-effectiveness in health and medicine. New York: Oxford University Press, 1996.

2. Granata AV, Hillman AL. Competing practice guidelines: using cost-effectiveness analysis to make optimal decisions. Ann Intern Med 1998;128:56-63.

3. Pignone M, Saha S, Hoerger T, Lohr KN, Teutsch S, Mandelblatt J. Challenges in systematic reviews of economic analyses. Ann Intern Med 2005;142:1073-9.

4. Laupacis A. Incorporating economic evaluations into decision-making: the Ontario experience. Med Care 2005;43(suppl 7):15-9.

5. Hill SR, Mitchell AS, Henry DA. Problems with the interpretation of pharmacoeconomic analyses: a review of submissions to the Australian pharmaceutical benefits scheme. JAMA 2000;283:2116-21.

6. Laupacis A, Feeny D, Detsky AS, Tugwell PX. How attractive does a new technology have to be to warrant adoption and utilization? Tentative guidelines for using clinical and economic evaluations. CMAJ 1992;146:473-81.

7. Owens DK. Interpretation of cost-effectiveness analyses. J Gen Intern Med 1998;13:716-7.

8. Evans C, Tavakoli M, Crawford B. Use of quality adjusted life years and life years gained as benchmarks in economic evaluations: a critical appraisal. Health Care Manage Sci 2004;7:43-9.

9. Eichler HG, Kong SX, Gerth WC, Mavros P, Jonsson B. Use of cost-effectiveness analysis in health-care resource allocation decision-making: how are cost-effectiveness thresholds expected to emerge? Value Health 2004;7:518-28.

10. Neumann PJ, Stone PW, Chapman RH, Sandberg EA, Bell CM. The quality of reporting in published cost-utility analyses, 1976-1997. Ann Intern Med 2000;132:964-72.

11. Neumann PJ, Greenberg D, Olchanski NV, Stone PW, Rosen AB. Growth and quality of the cost utility literature, 1976-2001. Value Health 2005;8:3-9.

12. Chapman RH, Stone PW, Sandberg EA, Bell C, Neumann PJ. A comprehensive league table of cost-utility ratios and a sub-table of “panel-worthy” studies. Med Decis Making 2000;20:451-67.

13. Greenberg D, Rosen AB, Olchanski NV, Stone PW, Nadai J, Neumann PJ. Delays in publication of cost utility analyses conducted alongside clinical trials: registry analysis. BMJ 2004;328:1536-7.

14. Monthly exchange rates series. Federal Reserve Bank of St Louis. http://research.stlouisfed.org/fred2/categories/15 (accessed 9 Feb 2006).

15. O'Brien BJ, Gertsen K, Willan AR, Faulkner LA. Is there a kink in consumers' threshold value for cost-effectiveness in health care? Health Econ 2002;11:175-80.

16. Zeger SL, Liang KY, Albert PS. Models for longitudinal data: a generalized estimating equation approach. Biometrics 1988;44:1049-60.

17. Tengs TO, Adams ME, Pliskin JS, Safran DG, Siegel JE, Weinstein MC, et al. Five-hundred life-saving interventions and their cost-effectiveness. Risk Anal 1995;15:369-90.

18. Canadian Coordinating Office for Health Technology Assessment. Common drug review submission guidelines. Ottawa: Canadian Coordinating Office for Health Technology Assessment (CCOHTA), 2005. www.ccohta.ca/CDR/cdr_pdf/cdr_submiss_guide.pdf (accessed 9 Feb 2006).

19. Oliver A, Healey A, Donaldson C. Choosing the method to match the perspective: economic assessment and its implications for health-services efficiency. Lancet 2002;359:1771-4.

20. Miners AH, Garau M, Fidan D, Fischer AJ. Comparing estimates of cost effectiveness submitted to the National Institute for Clinical Excellence (NICE) by different organisations: retrospective study. BMJ 2005;330:65.

21. Neumann PJ, Sandberg EA, Bell CM, Stone PW, Chapman RH. Are pharmaceuticals cost-effective? A review of the evidence. Health Aff (Millwood) 2000;19:92-109.

22. Freemantle N, Mason J. Publication bias in clinical trials and economic analyses. Pharmacoeconomics 1997;12:10-6.

23. Hillman AL, Eisenberg JM, Pauly MV, Bloom BS, Glick H, Kinosian B, et al. Avoiding bias in the conduct and reporting of cost-effectiveness research sponsored by pharmaceutical companies. N Engl J Med 1991;324:1362-5.

24. Rennie D. Peer review in Prague. JAMA 1998;280:214-5.

25. Devlin N, Parkin D. Does NICE have a cost-effectiveness threshold and what other factors influence its decisions? A binary choice analysis. Health Econ 2004;13:437-52.

26. Hirth RA, Chernew ME, Miller E, Fendrick AM, Weissert WG. Willingness to pay for a quality-adjusted life year: in search of a standard. Med Decis Making 2000;20:332-42.

27. Garber AM, Phelps CE. Economic foundations of cost-effectiveness analysis. J Health Econ 1997;16:1-31.

28. Sculpher M, Fenwick E, Claxton K. Assessing quality in decision analytic cost-effectiveness models. A suggested framework and example of application. Pharmacoeconomics 2000;17:461-77.

29. Philips Z, Ginnelly L, Sculpher M, Claxton K, Golder S, Riemsma R, et al. Review of guidelines for good practice in decision-analytic modelling in health technology assessment. Health Technol Assess 2004;8:1-172.

30. Weinstein MC, O'Brien B, Hornberger J, Jackson J, Johannesson M, McCabe C, et al. Principles of good practice for decision analytic modeling in health-care evaluation: report of the ISPOR task force on good research practices: modeling studies. Value Health 2003;6:9-17.

31. Sharp DW. What can and should be done to reduce publication bias? The perspective of an editor. JAMA 1990;263:1390-1.

32. Chalmers TC, Frank CS, Reitman D. Minimizing the three stages of publication bias. JAMA 1990;263:1392-5.

33. Dickersin K. The existence of publication bias and risk factors for its occurrence. JAMA 1990;263:1385-9.

34. Haivas I, Schroter S, Waechter F, Smith R. Editors' declaration of their own conflicts of interest. CMAJ 2004;171:475-6.

35. Lexchin J, Bero LA, Djulbegovic B, Clark O. Pharmaceutical industry sponsorship and research outcome and quality: systematic review. BMJ 2003;326:1167-70.

36. Friedberg M, Saffran B, Stinson TJ, Nelson W, Bennett CL. Evaluation of conflict of interest in economic analyses of new drugs used in oncology. JAMA 1999;282:1453-7.

37. Kassirer JP, Angell M. The journal's policy on cost-effectiveness analyses. N Engl J Med 1994;331:669-70.

38. Ray JG. Judging the judges: the role of journal editors. QJM 2002;95:769-74.

39. Murray MD, Birt JA, Manatunga AK, Darnell JC. Medication compliance in elderly out-patients using twice-daily dosing and unit-of-use packaging. Ann Pharmacother 1993;27:616-21.

40. Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H Jr. Publication bias and clinical trials. Control Clin Trials 1987;8:343-53.

41. Olson CM, Rennie D, Cook D, Dickersin K, Flanagin A, Hogan JW, et al. Publication bias in editorial decision making. JAMA 2002;287:2825-8.

42. Baker CB, Johnsrud MT, Crismon ML, Rosenheck RA, Woods SW. Quantitative analysis of sponsorship bias in economic studies of antidepressants. Br J Psychiatry 2003;183:498-506.