Several years ago, a young faculty member at a major university told me that her department chair had mandated that any faculty member seeking tenure publish manuscripts only in journals with an impact factor of 5.0 or greater. As the publisher of a large number of scientific journals, I was offended by the chair's attempt to equate the impact factor of a journal with the impact, or excellence, of an individual faculty member's research. The chair apparently did not realize that the impact factor, a bibliometric indicator developed by ISI, is not a measure of scientific quality. It would have been more relevant to evaluate the work of individual scientists by the actual citation frequency of their papers.

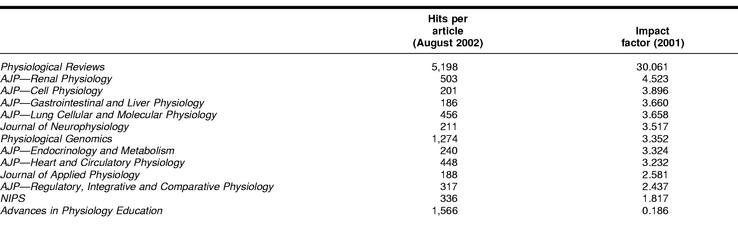

The question facing the scientific community in the digital age is whether impact factors have any relevance in today's environment. Libraries use impact factors as one of several factors in their subscription decisions, but they also use shelving data, that is, the number of times that a given journal is removed from the shelf by a user and reshelved by a library employee. This measure is inexact, because some users reshelve journals themselves. In a similar vein, articles are read many more times than they are cited. For that reason, the American Physiological Society (APS) has been tracking the number of hits received by articles in its online journals and comparing those figures with the impact factors calculated by ISI.

As shown in Table 1, the number of hits per article online does not necessarily correlate well with a journal's impact factor. As expected, a review journal like Physiological Reviews, with an impact factor of 30.061, also has the greatest number of hits per article online (5,198). The correlation is weaker, however, for the various sections of the American Journal of Physiology (AJP). While the section receiving the most hits per article online, AJP—Renal Physiology, also has the highest impact factor of the sections, the two sections with the next highest impact factors, AJP—Cell Physiology and AJP—Gastrointestinal and Liver Physiology, have the lowest numbers of hits per article online. Similarly, Advances in Physiology Education, the APS journal with the lowest impact factor (0.186), receives 1,566 hits per article online, ranking second among the APS journals. The question facing publishers, libraries, and end users is whether impact factors or hit rates are the better measure of a journal.

Table 1 A comparison of hits per article online versus 2001 impact factor for the journals of the American Physiological Society

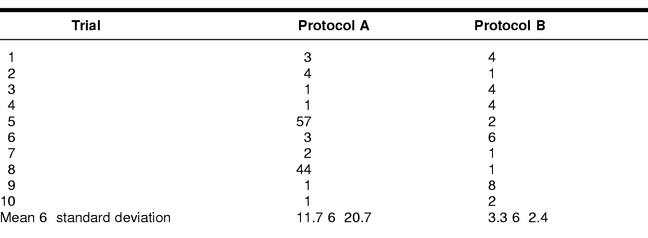

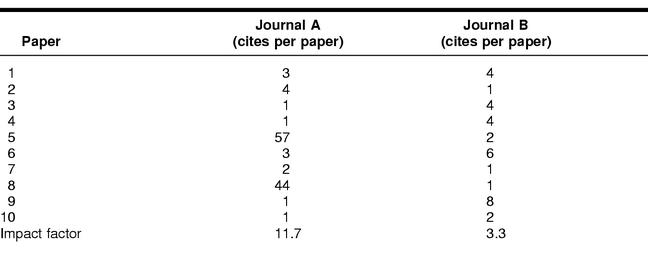
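Whether hit counts and impact factors track each other can be summarized with a single rank correlation. The sketch below computes Spearman's rank correlation coefficient in plain Python. The function names are hypothetical, and the example input is a toy pair of lists rather than the Table 1 figures, which are not reproduced in the text.

```python
import math
import statistics


def average_ranks(values: list[float]) -> list[float]:
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next value ties the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared_rank
        start = end + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least two items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = statistics.mean(rx), statistics.mean(ry)
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("a sample with all-equal values has no rank order")
    return sxy / math.sqrt(sxx * syy)


# Toy input only, not Table 1 data: the second list reverses the order of the first.
print(spearman([1, 2, 3, 4], [40, 30, 20, 10]))  # -1.0
```

Passing the impact-factor column and the hits-per-article column of Table 1 would give one coefficient for the whole table: a value near +1 would mean that the two measures rank the journals alike, while the mixed pattern described above for the AJP sections would pull it toward zero.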

In making impact factors the de facto measure of quality, the scientific community has taken a bibliometric measure developed at ISI in 1963 [1] and turned it into an absolute measure of merit. In essence, it has taken a statistic familiar to each of us from our own experiments, the mean, and stopped there. An impact factor is a simple ratio of citations to papers. The numerator is the number of citations made in the current year (e.g., 2001) to all items published by a given journal in the previous two years (that is, 1999 and 2000). The denominator is the total number of articles the journal published in 1999 and 2000. In that respect, an impact factor is not unlike the result of an experiment in which a series of trials is tabulated to determine the effectiveness of a protocol. As shown in Table 2, a protocol incorporating ten trials can be compared with another protocol by calculating the mean and standard deviation of each and performing a statistical test to determine whether protocol B differs significantly from protocol A. In the example in Table 2, the means differ, but the results from the two protocols are not statistically different. As good scientists, we would each declare such results unworthy of publication, even though they might give the experimenter some useful insights. Yet when we use a similar analysis to measure the impact of a journal, we tend to ignore all that we learned in elementary statistics. Converting protocols to journals and trials to papers (Table 3) shows that impact factors use only the mean and ignore the statistical tests that we apply to our own data.
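The two-year ratio can be written out directly. The following is a minimal sketch of the calculation as described above; the function name and the journal counts in the usage line are hypothetical.

```python
def two_year_impact_factor(
    census_year: int,
    citations_by_cited_year: dict[int, int],
    articles_by_year: dict[int, int],
) -> float:
    """Citations made in census_year to items from the two preceding years,
    divided by the articles the journal published in those two years."""
    window = (census_year - 2, census_year - 1)  # e.g., 1999 and 2000 for 2001
    citations = sum(citations_by_cited_year.get(y, 0) for y in window)
    articles = sum(articles_by_year.get(y, 0) for y in window)
    if articles == 0:
        raise ValueError("no articles published in the two-year window")
    return citations / articles


# Hypothetical journal: 200 articles in 1999 and 250 in 2000,
# cited 400 and 500 times, respectively, during 2001.
print(two_year_impact_factor(2001, {1999: 400, 2000: 500}, {1999: 200, 2000: 250}))  # 2.0
```

The result is a single ratio, effectively an average per article: nothing in it reports how the 900 citations are spread across the 450 articles.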

Table 2 Comparison of experimental protocols A and B

Table 3 Comparison of the impact factors for journals A and B
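The statistical comparison that the impact factor skips can be run on per-article citation counts, just as on experimental trials. Because the values in Tables 2 and 3 are not reproduced here, the sketch below uses made-up counts. It also swaps in a permutation test for a difference in means (rather than, say, a t-test) so that it needs only the Python standard library; the function name, the seed, and the number of permutations are arbitrary choices.

```python
import random
import statistics


def permutation_p_value(a: list[float], b: list[float],
                        n_permutations: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means between a and b."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(a) - statistics.mean(b))
    pooled = list(a) + list(b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        # Relabel the pooled values at random and recompute the gap in means.
        diff = abs(statistics.mean(pooled[:len(a)]) - statistics.mean(pooled[len(a):]))
        if diff >= observed:
            at_least_as_extreme += 1
    # The +1 terms count the observed labeling itself, so p is never exactly 0.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Made-up citation counts for ten papers in each of two journals.
journal_a = [0, 1, 1, 2, 3, 3, 5, 8, 12, 25]  # mean 6.0
journal_b = [0, 0, 1, 1, 2, 2, 3, 4, 6, 21]   # mean 4.0
print(permutation_p_value(journal_a, journal_b))
```

The two "impact factors" here differ by 50% (6.0 versus 4.0), yet with counts this skewed the p-value comes out far above the conventional 0.05 threshold, which is the situation Tables 2 and 3 describe.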

It is unfortunate that the scientific community and university administrators have equated impact factors with excellence without fully understanding how they are calculated. As noted earlier, the denominator is the number of articles published in the journal during the previous two years. ISI classifies an item as an article (that is, a research or review article) on the basis of the number of authors, references, page length, page overlap, and inclusion of author addresses [2]. The count excludes marginalia such as letters, news articles, book reviews, and abstracts that may also appear in a journal. According to Pendlebury [3], about 27% of the items indexed in the Science Citation Index are such marginalia. Yet the numerator does include citations to these items, which inflates the impact factor of some journals. Seglen [4] has also shown that about 15% of the articles in a typical journal account for half of the citations the journal receives. This suggests that most articles in a high-impact journal are cited no more frequently than papers published in lower-impact journals. In a study of journals covered by the Science Citation Index, Moed and Van Leeuwen [5] showed that about 7% of all references are cited incorrectly and that such errors are even more prevalent for journals with dual volume-numbering systems. This latter point may help explain the citation rates recorded for articles published in the American Journal of Physiology, which used such a system. Hamilton [6] has reported that 41.3% of the biological sciences papers and 46.4% of the medicine papers published in journals covered by ISI's citation database did not receive a single citation in the five years after they were published.
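The mismatch between numerator and denominator is easy to quantify. In this minimal sketch (the function name and all counts are hypothetical), citations to letters, news items, and other marginalia are added to the numerator while the denominator counts only articles, as described above.

```python
def impact_factor_with_marginalia(citations_to_articles: int,
                                  citations_to_marginalia: int,
                                  articles: int) -> tuple[float, float, float]:
    """Return (reported, articles_only, relative_inflation).

    reported: all citations divided by articles, as in the ISI ratio.
    articles_only: citations to articles alone divided by articles.
    relative_inflation: how much larger reported is, as a fraction
    (infinity when the articles themselves drew no citations).
    """
    if articles <= 0:
        raise ValueError("articles must be positive")
    reported = (citations_to_articles + citations_to_marginalia) / articles
    articles_only = citations_to_articles / articles
    inflation = reported / articles_only - 1 if articles_only else float("inf")
    return reported, articles_only, inflation


# Hypothetical journal: 400 articles drew 800 citations, and its letters and
# news items drew another 100 that are counted in the numerator only.
print(impact_factor_with_marginalia(800, 100, 400))  # (2.25, 2.0, 0.125)
```

In this invented case, 100 citations to uncounted items raise the reported figure by 12.5% without any change in how often the journal's articles are cited.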

Even though impact factors do not equate to excellence, universities in several European countries use impact factors to help determine institutional funding. Additionally, many European investigators regularly list journal impact factors alongside the articles on their curricula vitae. In most cases, the impact factor provided is for the current year, not the year the article was published. Similarly, as the experience of the young faculty member described earlier shows, promotion and appointment committees are increasingly using impact factors to assess the quality of candidates.

The impact factor calculation developed by Eugene Garfield of ISI was initially used to evaluate and select journals for listing in Current Contents. It covered a two-year window and did not take into account whether a journal's field was growing rapidly or was stable. As a result, the impact factor measures the influence of an article only during the first two years after publication. In more stable fields, the bulk of the citations often occurs after the initial two years, giving articles in those journals a longer citation half-life. Garfield has noted that the half-life is longer for journals publishing physiology articles than for those publishing molecular biology articles. Consequently, the overall ranking of physiology journals improved significantly as the number of years in the calculation increased, although the rankings within the group of physiology journals did not change significantly. Table 4 compares the two-year impact rankings of the APS's three main research journals with their fifteen-year and seven-year rankings [7]. For example, the American Journal of Physiology ranked 101 on the two-year measure in 1983, compared with 60 on the fifteen-year measure. In 1991, AJP ranked 124 on the two-year measure, compared with 64 on the seven-year measure. The Journal of Applied Physiology showed an even more pronounced shift, moving from a two-year rank of 376 to a seven-year rank of 96.

Table 4 Long-term versus short-term journal impact
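The effect of the citation window can be illustrated by generalizing the two-year ratio to an n-year window. This is a sketch under a stated assumption: it widens the window in the simplest way (citations in one census year to items from the preceding n years), which is not necessarily how Garfield computed the fifteen- and seven-year figures in Table 4. The function name, both journals, and all counts are hypothetical.

```python
def window_impact_factor(census_year: int, window: int,
                         citations_by_cited_year: dict[int, int],
                         articles_by_year: dict[int, int]) -> float:
    """Citations in census_year to items from the preceding `window` years,
    divided by the articles published in those years (assumed definition)."""
    years = range(census_year - window, census_year)
    citations = sum(citations_by_cited_year.get(y, 0) for y in years)
    articles = sum(articles_by_year.get(y, 0) for y in years)
    if articles == 0:
        raise ValueError("no articles published in the window")
    return citations / articles


articles = {year: 100 for year in range(1994, 2001)}  # 100 articles per year
# Hypothetical 2001 citations, keyed by the publication year of the cited article.
fast_field = {2000: 250, 1999: 250, 1998: 100, 1997: 50, 1996: 30, 1995: 20, 1994: 0}
slow_field = {2000: 50, 1999: 150, 1998: 200, 1997: 200, 1996: 180, 1995: 150, 1994: 120}

for name, cites in (("fast", fast_field), ("slow", slow_field)):
    print(name, window_impact_factor(2001, 2, cites, articles),
          window_impact_factor(2001, 7, cites, articles))
# fast 2.5 1.0
# slow 1.0 1.5
```

The journal whose citations accumulate slowly scores lower on the two-year measure but higher on the seven-year one, the same direction of shift described above for physiology journals.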

Because of the dual citation format of the American Journal of Physiology, it was not until 2000 that the APS was able to get ISI to disaggregate the sections of AJP and calculate impact factors for its component journals. The APS's dual referencing format had created problems of citation recognition for ISI. After an extended meeting with ISI in 1999, however, an effort was made to include impact factors for the individual AJP journals in ISI's Journal Citation Reports. Before such data were available, the APS had contracted directly with ISI for a special citation analysis comparing the ten-year citation statistics of the AJP journals with those of their competitor journals [8]. The results were consistent with those in Table 4, demonstrating that the long half-life of the physiology journals significantly improved their status and ranking relative to competitor journals.

It is clear from an analysis of the information available from ISI that the impact factor cannot and should not be treated as a measure of the quality of both a journal and its authors. As discussed by Saha et al. [9], the impact factor may serve as a reasonable indicator of quality for general medical journals. However, it is not a good indicator of the quality of an author's work. The impact factor tells users the average number of citations received by articles published in a journal during the previous two years. An impact factor of 10 implies that articles published in 1999 and 2000 received, on average, ten citations each in 2001. However, because 15% of the articles receive half of the citations, an individual article published in a journal with an impact factor of 10 may well have received only one or two citations. The best way to measure the quality of an author's work is to determine the number of citations received by each of his or her papers. To paraphrase a well-known saying, read the articles and “don't judge an author by the journal's impact factor!”
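The gap between a journal-level average and the fate of a typical article can be shown with a skewed set of citation counts. The twenty counts below are invented for illustration; they were chosen so that the mean is 10 and the top 15% of articles hold half of the citations, mirroring the figures cited above. The function name is hypothetical.

```python
import statistics


def citation_summary(counts: list[int], top_fraction: float = 0.15) -> dict[str, float]:
    """Summarize per-article citation counts for one journal."""
    if not counts:
        raise ValueError("counts must not be empty")
    ranked = sorted(counts, reverse=True)
    top_n = max(1, round(top_fraction * len(ranked)))  # e.g., 3 of 20 articles
    total = sum(ranked)
    return {
        "mean": statistics.fmean(ranked),     # what an impact factor reports
        "median": statistics.median(ranked),  # the typical article
        "top_share": sum(ranked[:top_n]) / total if total else 0.0,
        "uncited_share": ranked.count(0) / len(ranked),
    }


counts = [50, 30, 20, 18, 16, 14, 12, 10, 8, 6, 5, 4, 3, 2, 1, 1, 0, 0, 0, 0]
print(citation_summary(counts))
# {'mean': 10.0, 'median': 5.5, 'top_share': 0.5, 'uncited_share': 0.2}
```

With a mean of 10, the median article in this invented journal has 5.5 citations, and seven of the twenty articles have two or fewer.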

Footnotes

* Based on an article that was originally published in The Physiologist 2002 Aug;45(4):18–3.

† Copyright 2002, American Physiological Society.

References

- Garfield E, Sher IH. New factors in the evaluation of scientific literature through citation indexing. American Documentation. 1963 Jul; 14(3):195–201.

- Garfield E. Which medical journals have the greatest impact. Ann Intern Med. 1986 Aug; 105(2):313–20.

- Pendlebury DA. Science, citation, and funding. Science. 1991 Mar 22; 251(5000):1410–1.

- Seglen PO. Why the impact factor of journals should not be used for evaluating research. BMJ. 1997 Feb 15; 314(7079):498–502.

- Moed HF, Van Leeuwen TN. Impact factors can mislead. Nature. 1996 May 16; 381(6579):186.

- Hamilton DP. Research papers: who's uncited now? Science. 1991 Jan 4; 251(4989):25.

- Garfield E. Long-term vs. short-term journal impact: does it matter? The Scientist. 1998 Feb 2; 12(3):11–2.

- Rauner B. Citation statistics for the individual journals of the American Journal of Physiology. The Physiologist. 1998 Jun; 41(3):109, 111–2.

- Saha S, Saint S, Christakis DA. Impact factor: a valid measure of journal quality? J Med Libr Assoc. 2003 Jan; 91(1):42–6.