Abstract

Objective

To determine whether a recent decrease in the rate of acute rejection after kidney transplantation was associated with a decrease in the rate of chronic rejection.

Summary Background Data

Single-institution and multicenter retrospective analyses have identified acute rejection episodes as the major risk factor for chronic rejection after kidney transplantation. However, to date, no study has shown that a decrease in the rate of acute rejection leads to a decrease in the rate of chronic rejection.

Methods

The authors studied patient populations who underwent transplants at a single center during two eras (1984–1987 and 1991–1994) to determine the rate of biopsy-proven acute rejection, the rate of biopsy-proven chronic rejection, and the graft half-life.

Results

Recipients who underwent transplantation in era 2 had a decreased rate of biopsy-proven acute rejection compared with era 1 (p < 0.05). This decrease was associated with a decreased rate of biopsy-proven chronic rejection for both cadaver (p = 0.0001) and living donor (p = 0.08) recipients. A trend was observed toward increased graft half-life in era 2 (p = NS).

Conclusions

Development of immunosuppressive protocols that decrease the rate of acute rejection should lower the rate of chronic rejection and improve long-term graft survival.

Chronic deterioration of graft function and subsequent graft loss have emerged as major problems for kidney transplant recipients. 1 The pathogenesis is controversial. Both immunologic (previous acute rejection episodes) and nonimmunologic (limited nephron mass) factors have been implicated; both may play a role. 2–5 Because the pathogenesis is unclear, terminology has become inconsistent. Some authors, acknowledging the importance of immunologic factors, continue to call the process “chronic rejection.” 2,4 Others, suggesting a multifactorial pathogenesis, use “chronic graft dysfunction” or “chronic allograft nephropathy.” 3,5

In our clinical series, a previous acute rejection episode has been the major risk factor for development of biopsy-proven chronic rejection and decreased long-term graft survival. 2,6–9 Recently, the rate of acute rejection has decreased. Therefore, a major question is whether this decreased rate of acute rejection is associated with a decreased rate of chronic rejection and with improved long-term graft survival. To answer this question, we compared two eras for the incidence of biopsy-proven acute and chronic rejection and for the graft half-life (t½), defined as the time it takes for half of the grafts functioning at 1 year to subsequently fail.

METHODS

Era 1

Between January 1, 1984, and December 31, 1987, we performed 493 primary kidney transplants in adults at the University of Minnesota. Of these, 240 were living donor and 253 were cadaver transplants. Preoperative evaluation, surgical technique, and immunosuppressive protocols have been described in detail. 10 In brief, immunosuppression for living donor recipients was with cyclosporine (4 mg/kg b.i.d. starting 2 days before surgery), azathioprine (5 mg/kg/day rapidly tapered to 2.5 mg/kg/day), and prednisone (1 mg/kg/day tapered to 0.4 mg/kg/day by 1 month and 0.15 mg/kg/day by 1 year). Immunosuppression for cadaver recipients was with polyclonal antibody (Minnesota antilymphocyte globulin) (20 mg/kg/day for 7 to 14 days), prednisone, and azathioprine, with delayed introduction of cyclosporine. Cyclosporine blood levels were measured by high-pressure liquid chromatography and maintained at >100 ng/ml for the first 3 months after the transplant. For recipients >3 months after the transplant who were clinically well, there was no attempt to maintain cyclosporine levels above any threshold.

Suspected acute rejection episodes were confirmed by percutaneous allograft biopsy. Documented episodes were treated by recycling the prednisone taper; steroid-resistant episodes were treated with antibody. Chronic rejection was suspected in recipients with chronic deterioration of graft function and was usually confirmed by percutaneous allograft biopsy or at nephrectomy.

Era 2

Between January 1, 1991, and December 31, 1994, we performed 563 primary kidney transplants in adults (316 living donor, 247 cadaver). Preoperative evaluation and surgical technique were similar to those in era 1.

Immunosuppressive drugs for living donor recipients were the same as in era 1—cyclosporine, prednisone, and azathioprine. A major change in era 2 was our perception that maintaining higher cyclosporine blood levels was important to minimize the incidence of acute rejection. 11 Therefore, we tried more aggressively to achieve cyclosporine trough levels of >150 ng/ml early after the transplant and to maintain levels at >150 ng/ml for the first 3 months after the transplant. In addition, our retrospective analyses suggested that low cyclosporine levels late after the transplant were a risk factor for late acute rejection episodes and for chronic rejection. 7,12 Therefore, we maintained trough levels >100 ng/ml late after the transplant.

Immunosuppression for cadaver recipients changed during era 2. After August 1992, Minnesota antilymphocyte globulin was not available. Recipients who underwent transplantation before then received Minnesota antilymphocyte globulin, azathioprine, and prednisone, with delayed introduction of cyclosporine. After August 1992, however, ATGAM (Upjohn, Kalamazoo, MI) replaced Minnesota antilymphocyte globulin. In addition, as for living donor recipients, we tried more aggressively to achieve cyclosporine trough levels of >150 ng/ml early after the transplant, to maintain levels at >150 ng/ml for the first 3 months after the transplant, and to maintain levels at >100 ng/ml late after the transplant.
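The cyclosporine trough targets described for the two eras can be summarized as a small lookup. The sketch below is a hypothetical Python encoding: the function names and the 90-day approximation of "the first 3 months" are our assumptions, not the authors' software. It only restates the thresholds given in the text.

```python
from typing import Optional

# Hypothetical encoding of the trough-level floors described in Methods (ng/ml).
# "Early" (the first 3 months) is approximated as 90 days; this is an assumption.
EARLY_WINDOW_DAYS = 90


def trough_floor(era: int, days_post_tx: int) -> Optional[float]:
    """Return the trough level the protocol aimed to stay above, or None if no floor."""
    if era not in (1, 2):
        raise ValueError("era must be 1 or 2")
    early = days_post_tx <= EARLY_WINDOW_DAYS
    if era == 1:
        # Era 1: >100 ng/ml for the first 3 months; afterwards no threshold
        # was pursued for clinically well recipients.
        return 100.0 if early else None
    # Era 2: >150 ng/ml for the first 3 months, >100 ng/ml late after transplant.
    return 150.0 if early else 100.0


def below_target(era: int, days_post_tx: int, trough: float) -> bool:
    """True if a measured trough is at or below the era's target floor."""
    floor = trough_floor(era, days_post_tx)
    return floor is not None and trough <= floor
```

For example, a late trough of 120 ng/ml meets the era 2 floor of 100 ng/ml, whereas an early trough of 140 ng/ml falls below the era 2 early floor of 150 ng/ml. In era 1, a late level of 60 ng/ml would not have been flagged at all, which is the main practical difference between the two protocols.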

Acute rejection episodes were confirmed with biopsy and were treated by recycling the prednisone taper. Steroid-resistant episodes were treated with antibody. In addition, for recipients with acute rejection and cyclosporine blood levels of <100 ng/ml, the cyclosporine dosage was adjusted to achieve levels of >100 ng/ml. Chronic rejection was proven by either biopsy or nephrectomy.

Data Analysis

Recipient information was stored on a microcomputer database. For era 1 versus era 2, we compared patient demographics, cyclosporine levels, the incidence of biopsy-proven acute rejection, the incidence of biopsy-proven or nephrectomy-proven chronic rejection, and t½. An advantage of studying t½ is that it starts with 1-year survivors and thus eliminates from the analysis early graft loss from technical factors, acute rejection, recurrent disease, or death with function. 13,14 We calculated t½ with and without censoring for death with a functioning graft.
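The t½ cohort and its two censoring variants can be sketched as follows. This is a minimal illustration that assumes a hypothetical record layout (follow-up in years from transplant plus an outcome code) and the exponential estimator described in Data Analysis: total graft-years accrued beyond year 1, multiplied by ln 2, divided by the number of graft losses beyond year 1. Censoring for death with function means such a death contributes follow-up time but is not counted as a graft loss.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Graft:
    years: float  # follow-up from transplant to last contact, graft loss, or death
    outcome: str  # hypothetical codes: "functioning", "loss", "death_with_function"


def half_life(grafts: Iterable[Graft], censor_death: bool) -> float:
    """Exponential-model t1/2 (years) among grafts still functioning at 1 year."""
    exposure = 0.0  # graft-years accumulated beyond year 1
    losses = 0
    for g in grafts:
        if g.years <= 1.0:
            continue  # not a 1-year survivor, so excluded from the t1/2 cohort
        exposure += g.years - 1.0
        if g.outcome == "loss":
            losses += 1
        elif g.outcome == "death_with_function" and not censor_death:
            losses += 1  # uncensored: death with function counts as graft failure
    if losses == 0:
        raise ValueError("no graft losses beyond 1 year; t1/2 is undefined")
    return math.log(2) * exposure / losses


# Toy data: 12 graft-years beyond year 1 among the three 1-year survivors.
sample = [Graft(5.0, "loss"), Graft(3.0, "death_with_function"),
          Graft(7.0, "functioning"), Graft(0.5, "loss")]
uncensored = half_life(sample, censor_death=False)  # 2 events
censored = half_life(sample, censor_death=True)     # 1 event
```

Because censoring removes events without removing follow-up time, the censored t½ can never be shorter than the uncensored one, which is the pattern reported for both eras.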

Patient demographics were compared between eras using the Fisher exact, chi-square, and Student t tests. Rejection incidences and graft and patient survival rates were computed using the Kaplan-Meier method and compared between eras using a generalized Wilcoxon test. t½ was estimated by computing the cumulative graft survival time (total graft-years of follow-up) beyond 1 year after the transplant, multiplying by ln 2, and dividing by the number of graft losses beyond 1 year. We compared t½ across eras using the methods described in Cho and Terasaki. 15
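Rejection incidences were computed with the Kaplan-Meier method. As a hedged illustration, the product-limit estimator can be written in a few lines of Python; the function name and inputs are hypothetical, and the generalized Wilcoxon comparison between eras is not shown. The cumulative incidence of rejection by time t is 1 - S(t), so a curve such as Fig. 1 plots S(t) as the percentage of recipients free of chronic rejection.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], events: List[bool]) -> List[Tuple[float, float]]:
    """Product-limit estimate S(t) of remaining event-free.

    times:  follow-up time per recipient (e.g., years after transplant)
    events: True if the event (e.g., biopsy-proven rejection) occurred at that
            time, False if the recipient was censored then.
    Returns (time, S(t)) pairs at each distinct event time.
    """
    if len(times) != len(events):
        raise ValueError("times and events must have the same length")
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        n_events = 0
        removed = 0
        # Handle ties together; recipients censored at t still count as at risk at t.
        while i < len(data) and data[i][0] == t:
            n_events += data[i][1]
            removed += 1
            i += 1
        if n_events:
            surv *= 1.0 - n_events / at_risk
            curve.append((t, surv))
        at_risk -= removed
    return curve


curve = kaplan_meier([1, 2, 2, 3, 4], [True, True, False, True, False])
```

In this toy example S(t) steps down to 0.8, 0.6, and 0.3 at times 1, 2, and 3; the recipient censored at time 2 leaves the risk set without producing a step.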

RESULTS

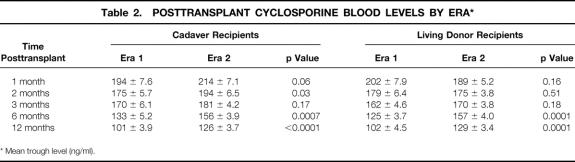

Recipient demographics are shown in Table 1. For both living donor and cadaver recipients, mean donor age and mean recipient age were higher in era 2. In addition, in era 2, for cadaver recipients, a smaller percentage were diabetic and the number of human leukocyte antigen (HLA) mismatches was lower. A higher proportion of living donor recipients in era 2 were unrelated to their donor.

Table 1. RECIPIENT DEMOGRAPHICS BY ERA

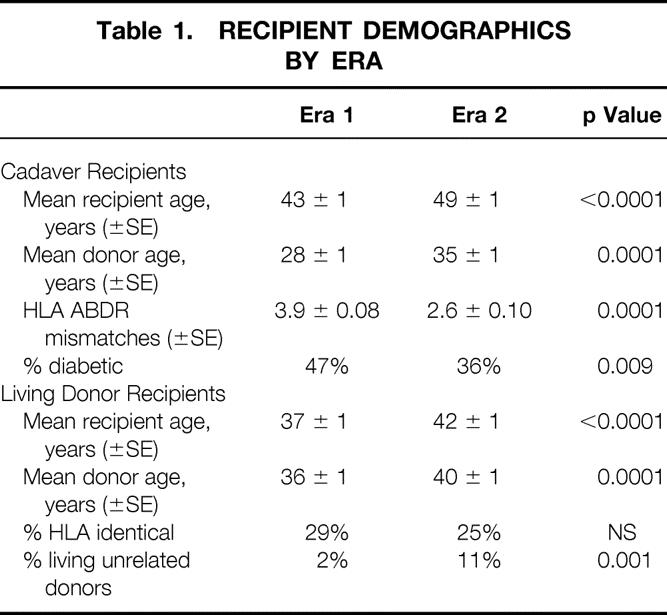

Mean (±SE) cyclosporine blood levels for the two eras are shown in Table 2. For cadaver recipients in era 2, levels were significantly higher at 1 month, 2 months, 6 months, and 1 year compared with era 1; for living donor recipients, levels were significantly higher at 6 months and 1 year.

Table 2. POSTTRANSPLANT CYCLOSPORINE BLOOD LEVELS BY ERA*

* Mean trough level (ng/ml).

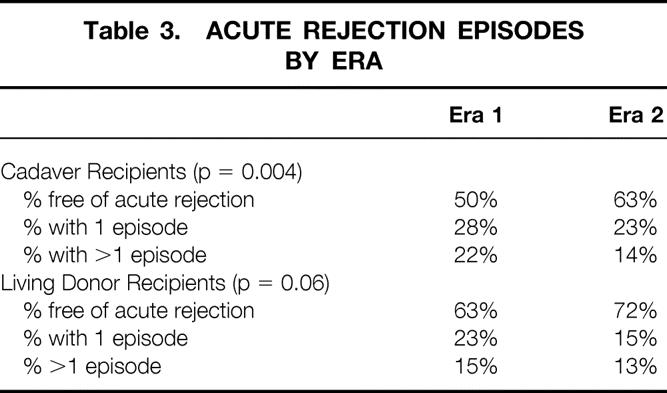

The incidence of acute rejection in each era is shown in Table 3. More living donor and cadaver recipients in era 2 were completely free of acute rejection than in era 1. In addition, significantly fewer recipients in era 2 had more than one acute rejection episode.

Table 3. ACUTE REJECTION EPISODES BY ERA

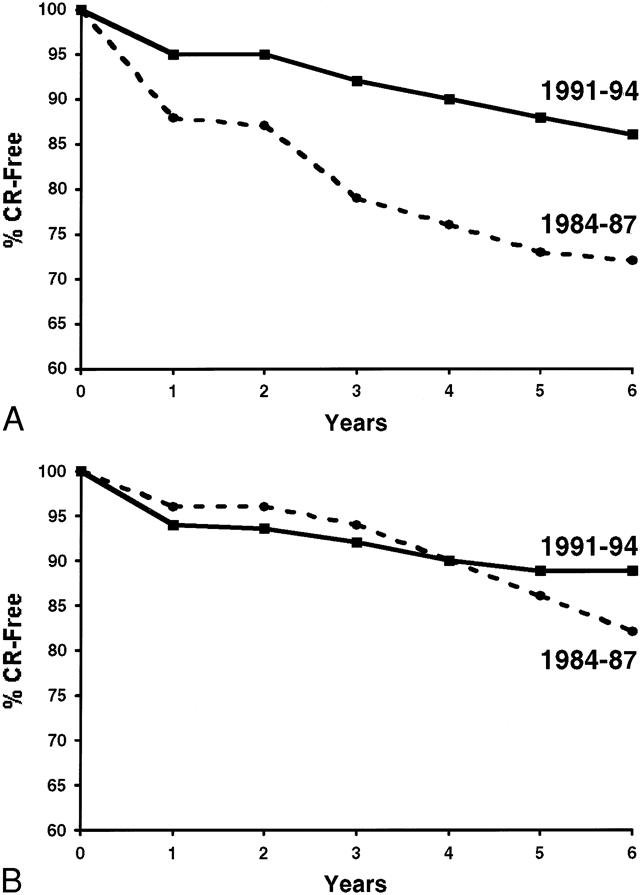

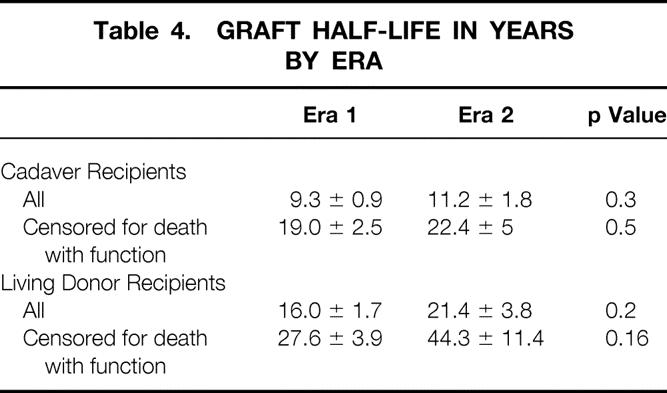

The decreased rate of acute rejection in era 2 was associated with a decreased rate of biopsy-proven chronic rejection for both cadaver (p = 0.001) and living donor (p = 0.08) recipients (Fig. 1). At 6 years after the transplant, only 14% of cadaver recipients in era 2 had chronic rejection, compared with 31% in era 1. Similarly, at 6 years after the transplant, 11% of living donor recipients in era 2 and 18% in era 1 had chronic rejection. In addition, there was a trend toward improved t½ in era 2 (Table 4). For cadaver recipients, t½ increased from 9.3 ± 0.9 years in era 1 to 11.2 ± 1.8 years in era 2 (when death with function was censored, from 19.0 ± 2.5 years in era 1 to 22.4 ± 5 years in era 2). For living donor recipients, t½ increased from 16.0 ± 1.7 years in era 1 to 21.4 ± 3.8 years in era 2 (when death with function was censored, from 27.6 ± 3.9 years in era 1 to 44.3 ± 11.4 years in era 2).

Figure 1. Percentage of recipients free of chronic rejection (CR) in era 1 vs. era 2. For both cadaver (A) and living (B) donor recipients, the rate of chronic rejection decreased in era 2.

Table 4. GRAFT HALF-LIFE IN YEARS BY ERA

DISCUSSION

In the 1960s, only 50% of living donor and 30% of cadaver recipients at our institution had functioning grafts at 1 year after the transplant. Acute rejection was a major cause of graft loss. Since that time, more potent immunosuppressive protocols have evolved, and acute rejection is now only a rare cause of graft loss. 1 Chronic rejection and death with function have instead become the major impediments to long-term graft function.

The pathogenesis of chronic rejection, as noted above, is controversial. One hypothesis suggests that chronic rejection is the result of immunologic injury—either an immune response or its consequences lead to ongoing deterioration of graft function. Numerous single-institution and multicenter studies have demonstrated an association between acute rejection episodes and subsequent development of chronic rejection and late graft failure. Recipients with multiple acute rejection episodes or with acute rejection episodes >1 year after the transplant are at markedly increased risk. 2

A second hypothesis holds that chronic rejection is the result of a process of hyperfiltration that develops when the number of nephrons is limited relative to the recipient’s metabolic needs; the hyperfiltration then leads to ongoing damage of the remaining nephrons.

In fact, these two hypotheses may not be mutually exclusive. Immunologic injury could lead to a limited number of nephrons and result in hyperfiltration. Alternatively, a kidney with limited nephrons (e.g., because of the donor’s history) may do well in the absence of rejection but may not tolerate any additional immunologic injury. Recently, Halloran et al 5 presented a model uniting these concepts. They hypothesized that the cumulative burden of injury and age exhausts the ability of key cells in the endothelium and epithelium to repair and remodel. The result is fibrosis.

We previously studied the relative importance of immunologic and nonimmunologic factors for long-term graft survival. 8 We calculated survival for a cohort of kidney transplant recipients (n = 1987) without recurrent disease, technical failure, or death with function. The 10-year graft survival rate was 72%. From this cohort, we then eliminated from our analysis those with a previous acute rejection episode. Graft loss in the remaining subgroup (n = 1128) was likely the result of nonimmunologic causes. The 10-year graft survival rate in the subgroup was 92%, suggesting that only a limited percentage of late graft loss is the result of nonimmunologic causes. In a separate multivariate analysis, we studied the relative importance of both immunologic and nonimmunologic factors for decreased long-term graft survival. 9 Results differed for living donor and cadaver recipients. For living donor recipients, only a previous acute rejection episode was associated with worse outcome (p < 0.0001); nonimmunologic factors were not significant. For cadaver recipients, however, a previous acute rejection episode (p < 0.0001), black recipient race (p < 0.02), and older donor age (p = 0.0002) were all important. The different impact of older donor age in living donor and cadaver recipients may reflect the more thorough evaluation possible for living donors.

Immunologic and nonimmunologic factors may be additive. Knight and Burrows reported on the interaction of donor age and acute rejection. 16 In their series, graft survival was decreased in recipients with acute rejection (vs. no rejection). However, in recipients with acute rejection, the 3-year graft survival rate was 82% if the donor was 50 years or younger and 33% if the donor was older than 50 years. Troppmann et al reported a similar interaction of delayed graft function (defined by the need for dialysis in the first week after the transplant) and acute rejection. 17 Delayed graft function alone had little impact on graft survival; acute rejection alone was associated with significantly decreased graft survival. However, recipients with delayed graft function and subsequent acute rejection had a markedly worse outcome. Humar et al extended these findings by showing that the combination of slow graft function (slow fall in serum creatinine level after the transplant, but no need for dialysis) and acute rejection significantly worsened survival compared with slow graft function alone or acute rejection alone. 18

A major criticism of the hypothesis that immunologic factors predominate in the pathogenesis of chronic graft dysfunction is that recent decreases in the rate of acute rejection have not been associated with reported decreases in the rate of chronic rejection or with increases in long-term graft survival. 19 However, data to support the lack of impact come from multicenter registries or from trials of new immunosuppressive agents with relatively short follow-up (1 to 3 years). There are many problems with such data. First, in registry reports, only acute rejection episodes occurring during the hospital admission or in the first year after the transplant are considered. As described above, the subgroups with late acute rejection or with multiple acute rejection episodes are the ones at markedly increased risk for biopsy-proven chronic rejection. Second, some of the data have not been censored for death with a functioning graft. 13 If the numbers in the groups being compared are depleted by patient deaths, it would be hard to show a difference between groups. Third, with limited numbers and short follow-up in some studies, a true difference between groups may not yet be apparent. Fourth, new immunosuppressive agents may reduce clinical episodes of acute rejection without reducing subclinical episodes (i.e., those detected by biopsy but with no significant change in serum creatinine level). Legendre et al reported that subclinical acute rejection, diagnosed by protocol biopsy at 3 months, was a risk factor for chronic transplant nephropathy at 2 years. 20 Similarly, Nickerson et al recently reported that subclinical acute rejection diagnosed by protocol biopsy at 6 months was associated with a higher serum creatinine level at 2 years. 21

Another factor that needs to be emphasized is the effect of medication noncompliance on long-term outcome. Clearly, immunosuppressive protocols that decrease the rate of acute rejection will not affect long-term outcome if the recipient subsequently becomes noncompliant. For solid organ transplant recipients, noncompliance has been associated with an increased risk of acute rejection, of late acute rejection, and of late graft loss. 22 If a significant proportion of patients in two groups being compared are noncompliant, detecting any real difference between groups will be difficult. Gaston et al suggested including noncompliance as a third factor to be addressed (besides chronic rejection and death with function) to improve long-term transplant results. 23

In our current single-center analysis, we have shown that decreasing the rate of acute rejection is associated with a decreased rate of chronic rejection and with a trend toward improved t½. Of importance, t½ in era 2 increased compared with era 1 even when death with function was censored. We have previously noted the importance of censoring for patient death when studying long-term transplant results. 13,14

Although t½ increased in era 2, the difference was not statistically significant; this may in part be the result of small numbers. In our series, t½ increased for cadaver recipients from 9.3 ± 0.9 years in era 1 to 11.2 ± 1.8 years in era 2 (see Table 4) and for living donor recipients from 16.0 ± 1.7 years to 21.4 ± 3.8 years. Similar changes in t½ were statistically significant in the United Network for Organ Sharing (UNOS) Kidney Registry (which follows larger numbers of recipients). In the UNOS registry, t½ for cadaver recipients increased from 7.6 years in 1988 to 9.5 years in 1993 (p < 0.001) and for living donor recipients from 12.5 years in 1988 to 17 years in 1993. 24 Of importance to our hypothesis, the improvement in t½ noted in the UNOS registry was also in a cohort that had a reduced rate of acute rejection in the first 6 months after the transplant. In the UNOS registry, >50% of the recipients who underwent transplantation in 1988 had one or more acute rejection episodes in the first 6 months after the transplant. By 1993, this percentage had decreased to 40% and by 1996 to 24%. 25
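To see why differences of this size may not reach significance, a rough check is a Wald z-test on the difference of two independent estimates using the reported standard errors. This is an illustrative approximation that assumes each t½ estimate is independent and approximately normal; it is not the Cho and Terasaki method the authors used.

```python
import math
from typing import Tuple


def wald_z(est1: float, se1: float, est2: float, se2: float) -> Tuple[float, float]:
    """Two-sided Wald z-test for the difference between two independent estimates."""
    z = (est2 - est1) / math.sqrt(se1 ** 2 + se2 ** 2)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p value
    return z, p


# Reported t1/2 (years, estimate and SE), era 1 vs. era 2, death with function not censored.
cadaver = wald_z(9.3, 0.9, 11.2, 1.8)
living = wald_z(16.0, 1.7, 21.4, 3.8)
```

With standard errors this large relative to the differences (1.9 and 5.4 years), neither z statistic exceeds 1.96, consistent with the small-numbers explanation above.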

There are two caveats to interpreting our data. First, the recipient populations in eras 1 and 2 were not identical. Recipients in era 2 were older and received older donor kidneys, factors that could be associated with worse outcome. On the other hand, cadaver recipients in era 2 also had fewer HLA-A, -B, and -DR mismatches, a factor associated with better outcome. Still, it is unlikely that the 17-percentage-point difference in the incidence of chronic rejection at 6 years (31% vs. 14% for cadaver recipients) was solely the result of fewer mismatches. The second caveat is that cyclosporine blood levels differed between the eras. For living donor recipients, cyclosporine levels were significantly higher only in the late posttransplant period (6 and 12 months). It can be argued that the decreased rate of chronic rejection in era 2 was the result of the higher cyclosporine blood levels. Even if this is the case, however, our basic premise is unchanged: immunosuppressive protocols associated with decreased acute rejection will also be associated with decreased chronic rejection.

Our analysis is limited to clinical rejection episodes, those with an elevated serum creatinine level leading to a biopsy-confirmed diagnosis. Rush et al recently described the value of treating early subclinical rejection episodes. 26,27 In their series, patients were randomized to undergo protocol biopsies at 1, 2, 3, 6, and 12 months after the transplant versus only at 6 and 12 months. Subclinical rejection detected in the early protocol biopsies (defined by histologic features of rejection without a rise in serum creatinine level) was treated with high-dose corticosteroids. Subclinical episodes were diagnosed in 43% of recipients at 1 month, 32% at 2 months, and 27% at 3 months. Recipients randomized to the protocol biopsy group had reduced chronic changes at 6 months, better graft survival at 2 years (97% vs. 83%), and a significantly lower mean serum creatinine level at 2 years (133 ± 14 μmol/L vs. 183 ± 22 μmol/L) (p = 0.05).

Finally, our data do not prove that acute rejection is the predominant cause of chronic rejection. Our single-center series demonstrated a decreased rate of acute rejection in era 2. This decrease was associated with a decreased rate of chronic rejection and with improved long-term graft survival. Development of immunosuppressive protocols that further decrease the rate of acute rejection should also decrease the rate of chronic rejection; additional efforts are warranted to develop safe immunosuppressive protocols that eliminate posttransplant acute rejection.

Acknowledgments

The authors thank Mary Knatterud for editorial assistance and Stephanie Daily for preparation of the manuscript.

Discussion

Dr. Clyde F. Barker (Philadelphia, Pennsylvania): For three decades or more, Dr. Najarian’s huge transplant program and its carefully maintained database have been almost a national resource. It has allowed analysis of changes in immunosuppression and other aspects of patient care and improvements brought about by these that couldn’t be accomplished by the reports of multicenter registries or smaller single-center experiences.

In today’s report, Dr. Matas has used the power of this unique database to confirm a hypothesis which most transplant surgeons have favored for many years, that is, that recipients spared acute rejection episodes are less likely to be subject later to the effects of chronic rejection, that acute rejection would predispose to later chronic rejection and graft loss. This hypothesis would seem self-evident, but has not been easy to substantiate.

In fact, for some years we have been confronted by the seeming paradox that agents such as FK506, which gained its initial favor by virtual prevention of acute rejection, or even reversal of it, were not clearly associated with improvement in kidney graft survival, at least in the initial years.

Dr. Matas’s manuscript does not contain the figures for 1-, 2-, or 5-year graft or patient survival comparing era 1 and era 2 in the conventional sense in which we see these data reported. I would be interested to know whether graft survival rates presented in these conventional terms are different or whether the improvement can be seen only by studying graft half-life, which may indeed be a better index of success.

While I am convinced that acute rejection and therefore chronic rejection were reduced in era 2, I am not convinced that Dr. Matas has explained to us why this is. He is inclined to believe that the increasing levels of cyclosporine in the blood have accounted for the change. One hopes parenthetically that the overall trend to improving graft survival will not depend on increasing immunosuppression, since in an earlier era we went through this, with its risks of infection and tumor.

I am inclined to believe actually that some other difference, such as better donor–recipient matching (especially by interregional sharing of 6-antigen matches, which is now mandated by UNOS) or the decreased number of diabetics, might account for the improvement in era 2. And I would like to know how many of the transplants in era 2 were 6-antigen matches, categorically 6-antigen matches.

Era 2 patients had several disadvantages such as older age and older age of the donor. I would like to ask if other factors which may have been of possible importance in the other direction were also studied, such as lesser incidence of acute tubular necrosis (ATN) or cytomegalovirus (CMV) infections. It is even possible that the change in the latter part of era 2 in the use of different antilymphocytic agents may have been another important difference, although I believe that Minnesota ALG was a very fine agent. In short, I don’t know why era 2 was better than era 1, but I hope it represents a continuing trend to better results in transplantation, a trend in which Dr. Najarian’s group has led the way.

One additional question for Dr. Matas. Since humoral factors have been blamed for chronic rejection, were any parameters of humoral immunity assessed and compared in era 1 versus era 2, such as preformed antibodies in the recipient against the panel of donors?

Presenter Dr. Arthur J. Matas (Minneapolis, Minnesota): I don’t have specific figures, but graft survival has been steadily improving in our program over the years. And I believe that it really is due to more sophistication in the use of immunosuppression, although the agents haven’t changed. So that in era 2, the graft survival was significantly better than in era 1.

You brought up a number of factors that affect graft survival, including CMV, ATN, and matching. It is hard to sort out each of these as an individual factor in terms of our data analysis. One of the advantages of single-center studies is that you can do analyses like this. One of the disadvantages, of course, is that the numbers required for multivariate analyses, looking at multiple factors at the same time, are not necessarily there.

When you subdivide our recipients into those who are both CMV-free and rejection-free, and those who are rejection-free but have CMV, there is no difference in the incidence of chronic rejection. This finding suggests that CMV alone does not play a role in the development of chronic rejection.

You asked about ATN as another factor. Its impact is again confounded, because patients with ATN have a higher incidence of acute rejection. However, ATN alone in our series is not associated with either decreased graft survival or an increased incidence of chronic rejection. It is only when ATN is combined with acute rejection that we see an impact. We believe that impact is due to the acute rejection episode.

You asked about 6-antigen matches. There were more 6-antigen–matched transplants in era 2; I can’t give you the specific number, but it was less than 10%.

Finally, we did not study humoral immunity.

Dr. Arnold G. Diethelm (Birmingham, Alabama): I think the paper is most interesting. It is a follow-up of a previous study published 5 or 6 years ago and it addresses the single most difficult question in renal transplantation, and that is chronic rejection. The recent development of immunosuppressive therapy has helped us tremendously with acute rejection, but the problem with chronic rejection persists.

There is another interesting observation that Dr. Matas mentioned, which is that early acute rejection doesn’t carry necessarily the same serious outcome in proceeding to chronic rejection. Perhaps there are two types of acute rejection episodes, or else it is the same rejection episode, but with time, chronic rejection occurs if the rejection episode is, say, after 6 months.

There is another comment that is worth thinking about. Once chronic rejection begins, it always ends in graft failure. But the temporal relationship of the rate of decline of renal function varies and cannot always be predicted at the onset of chronic rejection.

I have a few questions, Dr. Matas.

First, you didn’t mention the role of noncompliance. Many of us have problems with patient noncompliance, and I think there may be a geographical component to this. But nonetheless it is there, it is real. And noncompliance is often a problem, causing late rejection that goes on to chronic rejection.

The second question that I would like to bring up is the difficulty in separating recurrent disease at times from chronic rejection. And if you look at the results in polycystic kidney patients where the renal disease is of genetic origin, graft survival is better and chronic rejection is often less frequent. So I wonder if at times perhaps long-term follow-up studies confuse recurrent disease and chronic rejection.

The last question that I would like to bring up relates to whether or not, in your mind, chronic rejection is an immunologic event or is some other event that we don’t understand? I think we have always felt that chronic rejection was a result of acute rejection. But we all know patients who never have acute rejection and 3 years later have a slow insidious onset of chronic rejection, and their temporal relationship of that course to graft failure may be 2 to 5 years.

Dr. Matas: I think the role of noncompliance is critically important in transplantation. The data I presented suggest that a late rejection episode is a major risk factor for chronic rejection. Clearly, patients who have done well initially and then have a first late acute rejection episode are often noncompliant.

The pathogenesis of chronic graft loss should include chronic rejection, death with function, and noncompliance as the three major causes. I would suggest that noncompliance is related to chronic graft loss via its impact on the rate of acute rejection episodes.

In terms of your comment on recurrent disease versus chronic rejection, our data are on biopsy- or nephrectomy-proven chronic rejection. In the end-stage kidney, it may be difficult in some cases to separate these out.

The impact on patients with polycystic kidneys is more difficult to determine because polycystic kidney recipients are older. As Dr. Najarian has reported at this meeting previously, recipients who are older have a decreased rate of acute rejection; therefore, if our hypothesis is correct, they will also have a decreased rate of chronic rejection.

Finally, you asked my thinking as to whether chronic rejection is an immunologic event. In our data analyses, everything points to it being an immunologic event. If we eliminate from our analyses first, patients with graft loss to recurrent disease, death with function, or technical failures, and second, patients with an acute rejection episode, we are left with about 1,200 patients who have a 92% 10-year graft survival rate. Thus, if you can get rid of acute rejection in a patient population, you really will make a huge impact on long-term graft survival.

I suspect that the patients we have all seen who have chronic rejection without ever having a well-defined acute rejection episode probably do have some smoldering acute rejection. There are data to support this possibility from the Winnipeg series, where they have done protocol biopsies at 1, 2, 3, 6, and 12 months posttransplant. These are biopsies done in the absence of clinical acute rejection episodes.

The Winnipeg series investigators randomized patients to those with and without protocol biopsies. The patients with protocol biopsies that show evidence of rejection are treated with antirejection therapy, whereas obviously the patients without protocol biopsies don’t undergo treatment. According to a recent publication, the patients with protocol biopsies who are treated for these subclinical rejection episodes have improved creatinine at 2 years posttransplant and fewer chronic changes on biopsies done at 2 years. This result suggests that, at least in some patients, we are missing an overt clinical episode, but there is a subclinical episode that may explain the subsequent chronic rejection.

Dr. Robert J. Corry (Pittsburgh, Pennsylvania): Very nice report, Dr. Matas. You have just answered my question, because I was going to ask whether or not the rejections with normal creatinine should be treated. As I mentioned, we have been treating them with creatinines of 1.0 in the kidney/pancreas group, and I was wondering whether you thought that was advantageous. And you have just mentioned that you did think it was advantageous. Very nice report.

Footnotes

Correspondence: Arthur J. Matas, MD, Dept. of Surgery, University of Minnesota, Box 328 Mayo, 420 Delaware St. SE, Minneapolis, MN 55455.

Presented at the 119th Annual Meeting of the American Surgical Association, April 15–17, 1999, Hyatt Regency Hotel, San Diego, California.

Supported by NIH grant DK-13083.

Accepted for publication April 1999.

References

- 1.Schweitzer EJ, Matas AJ, Gillingham KJ, et al. Causes of renal allograft loss:: progress in the 1980s, challenges for the 1990s. Ann Surg 1991; 214: 679–88. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Matas AJ. Risk factors for chronic rejection—a clinical perspective. Transplant Immunol 1998; 6: 1–11.

- 3. Waaga AM, Rocha AM, Tilney NL. Early risk factors contributing to the evolution of long-term allograft dysfunction. Transplant Rev 1997; 11(4): 208–216.

- 4. Tullius SG, Tilney NL. Both alloantigen-dependent and -independent factors influence chronic allograft rejection. Transplantation 1995; 59: 313–318.

- 5. Halloran PF, Melk A, Barth C. Rethinking chronic allograft nephropathy: the concept of accelerated senescence. J Am Soc Nephrol 1999; 10: 167–181.

- 6. Basadonna GP, Matas AJ, Gillingham KJ, et al. Early versus late acute renal allograft rejection: impact on chronic rejection. Transplantation 1993; 55: 993–95.

- 7. Almond PS, Matas A, Gillingham K, et al. Risk factors for chronic rejection in renal allograft recipients. Transplantation 1993; 55: 752–57.

- 8. Humar A, Kandaswamy R, Hassoun A, Matas AJ. Immunologic factors—the major risk for long-term renal allograft survival. Transplantation (in press).

- 9. Matas AJ, Gillingham K, Humar A, et al. Immunologic and nonimmunologic factors—different risks in living and cadaver donor transplantation. Clin Transplant (in press).

- 10. Perez RV, Matas AJ, Gillingham KJ, et al. Lessons learned and future hopes: three thousand renal transplants at the University of Minnesota. In: Terasaki PI, ed. Clinical transplants 1990. Los Angeles: UCLA Tissue Typing Laboratory; 1991: 217–231.

- 11. Johnson EM, Canafax DM, Gillingham KJ, et al. Effect of early cyclosporine levels on kidney allograft rejection. Clin Transplant 1997; 11: 552–557.

- 12. Wrenshall LE, Matas AJ, Canafax DM, et al. An increased incidence of late acute rejection episodes in recipients of cadaver renal allografts given azathioprine, cyclosporine and prednisone. Transplantation 1990; 50: 233–237.

- 13. Matas AJ, Gillingham KJ, Sutherland DER. Half-life and risk factors for kidney transplant outcome—importance of death with function. Transplantation 1993; 55: 757–60.

- 14. West M, Sutherland DER, Matas AJ. Kidney transplant recipients who die with functioning grafts: serum creatinine level and cause of death. Transplantation 1996; 62: 1029–1030.

- 15. Cho YW, Terasaki PI. Long-term survival. In: Terasaki PI, ed. Clinical transplants 1988. Los Angeles: UCLA Tissue Typing Laboratory; 1988: 277.

- 16. Knight RJ, Burrows L. The combined impact of donor age and acute rejection on long-term cadaver renal allograft survival [abstract]. 23rd Annual Meeting of the American Society of Transplant Surgeons, Chicago, May 1997: 100.

- 17. Troppmann C, Gillingham K, Benedetti E, et al. Delayed graft function, acute rejection, and outcome after cadaver renal transplantation—a multivariate analysis. Transplantation 1995; 59: 962–68.

- 18. Humar A, Johnson EM, Payne WD, et al. Effect of initial slow graft function on renal allograft rejection and survival. Clin Transplant 1997; 11: 623–627.

- 19. Hunsicker LG, Bennett LE. Design of trials of methods to reduce late renal allograft loss: the price of success. Kidney Int 1995; 48(suppl 52): S120–123.

- 20. Legendre C, Thervet E, Skhiri H, et al. Histologic features of chronic allograft nephropathy revealed by protocol biopsies in kidney transplant recipients. Transplantation 1998; 65: 1506–1509.

- 21. Nickerson P, Jeffery J, Gough J, et al. Identification of clinical and histopathologic risk factors for diminished renal function 2 years posttransplant. J Am Soc Nephrol 1998; 9: 482–487.

- 22. Matas AJ. Noncompliance and late graft loss: implications for clinical studies. Transplant Rev 1999; 13: 78–82.

- 23. Gaston RS, Hudson SL, Ward M, et al. The impact of patient compliance on late allograft loss in renal transplantation. Transplant Proc (in press).

- 24. Cecka JM. The UNOS Scientific Renal Transplant Registry. In: Cecka JM, Terasaki PI, eds. Clinical transplants 1996. Los Angeles: UCLA Tissue Typing Laboratory; 1997: 1–14.

- 25. Cecka JM. The UNOS Scientific Renal Transplant Registry—Ten years of kidney transplants. In: Cecka JM, Terasaki PI, eds. Clinical transplants 1997. Los Angeles: UCLA Tissue Typing Laboratory; 1998: 1–14.

- 26. Rush D, Nickerson P, Gough J, et al. Beneficial effects of treatment of early subclinical rejection: a randomized study. J Am Soc Nephrol 1998; 9: 2129–2134.

- 27. Rush DN, Nickerson P, Jeffery JR, et al. Protocol biopsies in renal transplantation: research tool or clinically useful? Curr Opin Nephrol Hypertens 1998; 6: 691–694.