Abstract

Objective

To describe initial testing of the Assessment of Chronic Illness Care (ACIC), a practical quality-improvement tool to help organizations evaluate the strengths and weaknesses of their delivery of care for chronic illness in six areas: community linkages, self-management support, decision support, delivery system design, information systems, and organization of care.

Data Sources

(1) Pre-post, self-report ACIC data from organizational teams enrolled in 13-month quality-improvement collaboratives focused on care for chronic illness; (2) independent faculty ratings of team progress at the end of the collaborative.

Study design

Teams completed the ACIC at the beginning and end of the collaborative using a consensus format that produced average ratings of their system's approach to delivering care for the targeted chronic condition. Average ACIC subscale scores (ranging from 0 to 11, with 11 representing optimal care) for teams across all four collaboratives were obtained to indicate how teams rated their care for chronic illness before beginning improvement work. Paired t-tests were used to evaluate the sensitivity of the ACIC to detect system improvements for teams in two (of four) collaboratives focused on care for diabetes and congestive heart failure (CHF). Pearson correlations between the ACIC subscale scores and a faculty rating of team performance were also obtained.

Results

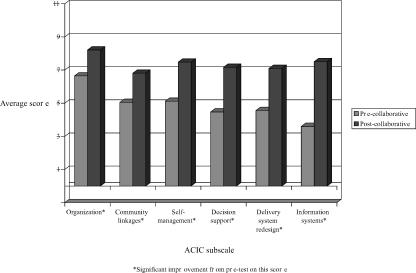

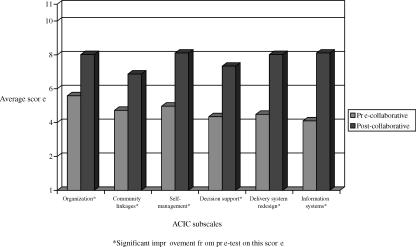

Average baseline scores across all teams enrolled at the beginning of the collaboratives ranged from 4.36 (information systems) to 6.42 (organization of care), indicating basic to good care for chronic illness. All six ACIC subscale scores were responsive to system improvements diabetes and CHF teams made over the course of the collaboratives. The most substantial improvements were seen in decision support, delivery system design, and information systems. CHF teams had particularly high scores in self-management support at the completion of the collaborative. Pearson correlations between the ACIC subscales and the faculty rating ranged from .28 to .52.

Conclusion

These results and feedback from teams suggest that the ACIC is responsive to health care quality-improvement efforts and may be a useful tool to guide quality improvement in chronic illness care and to track progress over time.

Keywords: Measurement, quality, chronic illness, health care, systems

The prevalence of individuals with chronic illness is growing at an astonishing rate because of the rapid aging of the population and the greater longevity of individuals with chronic illness (U.S. Department of Health and Human Services 2000). This growth has taxed health-care systems and revealed deficiencies in the organization and delivery of care to patients with chronic illness (Ray et al. 2000; MMWR 1997; Jacobs 1998; Desai et al. 1999). There is a growing literature, however, describing effective interventions that improve systems of care in which persons with chronic illness are treated (McCulloch et al. 2000; Lorig et al. 1999; Weinberger et al. 1989, 1991; VonKorff 1997; Wagner et al. 1996a, b). This literature strongly suggests that changing processes and outcomes in chronic illness requires multicomponent interventions that change the prevailing clinical system of care (Wagner et al. 1996, 1999).

Unfortunately, organizational teams often struggle to define the deficiencies in their systems of care for chronic illness and to judge the extent to which the changes they make result in sustained improvement. Existing measures for evaluating quality, such as the Joint Commission on Accreditation of Healthcare Organizations' quality scores and the NCQA's Health Plan Employer Data and Information Set (HEDIS) measures (Sennett 1998; O'Malley 1997), neglect important process measures (Sewell 1997), such as whether providers use evidence-based guidelines to direct clinical care. Moreover, they provide little guidance on what system changes organizational teams must make in order to improve processes and outcomes of care. Thus, practical assessment tools that help organizational teams guide quality improvement efforts and evaluate changes in chronic illness care processes are needed.

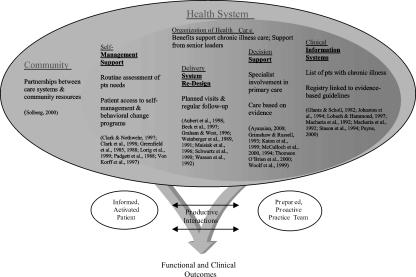

The Assessment of Chronic Illness Care (ACIC) was developed to help organizational teams identify areas for improvement in their care for chronic illnesses, and to evaluate the level and nature of improvements made in their system (Bonomi et al. 2000). The ACIC is based on six areas of system change suggested by the Chronic Care Model (CCM) that have been shown to influence quality of care (linkages to community resources, self-management support, decision support, delivery system design, clinical information systems, and organization of the health system) and on promising interventions within these areas associated with better outcomes (Wagner et al. 1996, 1999). The Chronic Care Model was derived from a survey of best practices, expert opinion, the more promising interventions in the literature, and our quality improvement work in diabetes, depression, and cardiovascular disease at Group Health Cooperative, the sixth largest health maintenance organization in the United States (Wagner et al. 1999). Figure 1 shows how system changes in the six areas of the Chronic Care Model noted above influence interactions between patients and providers to produce better care and improved outcomes.

Figure 1.

Chronic Care Model

The ACIC is one of the first comprehensive tools specifically focused on organization of care for chronic illness, rather than traditional outcome measures (e.g., HbA1c levels), “productivity” measures (e.g., number of patients seen), or process indicators (e.g., percent of diabetic patients receiving foot exams). The ACIC items attempt to represent poor to optimal organization and support of care for chronic illness in the Chronic Care Model areas. Basic support for chronic illness includes, for example, facilitating access to evidence-based guidelines and self-management programs. More advanced support for chronic illness care would include access to automated patient registries that are tied to guidelines and reminders, and self-management programs that are integrated into routine clinical care. This paper describes the initial development and testing of the ACIC in health care systems participating in quality improvement initiatives focused on chronic illness care.

Methods

Sample and Data Collection

The sample consisted of 108 organizational teams from health care systems across the United States who were active participants in one of four national or regional “Breakthrough Series” (Sperl-Hillen et al. 2000; Berwick 1989, 1998; Kilo 1998; Leape et al. 2000) quality improvement collaboratives focused on chronic illness care. The chronic care Breakthrough Series collaboratives were organized by process improvement experts at Improving Chronic Illness Care (ICIC) (Seattle, WA), the Institute for Healthcare Improvement (Boston, MA), Associates for Process Improvement (Austin, TX), and in the case of the regional collaborative, by the Washington State Department of Health and PRO-West. Collaboratives were designed to: (a) bring together expertise in clinical issues, processes for rapid organizational change, and principles of chronic illness care, and (b) facilitate and structure sharing and learning interactions among teams as well as with faculty. Each collaborative was 13 months in duration and consisted of three two-day learning sessions alternating with action periods, during which teams developed and tested interventions (“plan-do-study-act” or “PDSA” cycles) in their own systems of care for chronically ill patients. The first collaborative commenced in September 1998 and ended in October 1999; the most recent collaborative will be completed in 2001. Details of the chronic illness Breakthrough Series collaborative process are described elsewhere (Wagner et al. 2001).

Collaborative faculty included both academic and nonacademic experts in the management of the clinical condition and in chronic disease quality improvement. They included physicians (e.g., diabetologists, cardiologists), nurses, behavioral scientists, and managers. Faculty (usually four to seven per collaborative) were involved on a routine basis with teams, both as instructors at the learning sessions and as coaches during the action periods, to encourage implementation of system changes consistent with all six elements of the Chronic Care Model. The faculty had multiple roles: (1) they instructed Breakthrough Series (BTS) participants in implementing aspects of the Chronic Care Model (e.g., they presented studies supporting the Chronic Care Model elements that demonstrated effective practice change, and they demonstrated how to set up a registry); (2) they helped teams with planning and problem solving; (3) they assisted teams in identifying appropriate process and outcome measures; and (4) they charted and provided feedback on teams' progress over the course of the collaborative, using input from the teams about the change cycles they had implemented as well as progress reports about outcome measures. All faculty were specifically trained in the Chronic Care Model, and many of the clinical faculty experts were already familiar with implementing CCM-based interventions in their own practices.

Table 1 presents a breakdown of the number of teams and the chronic conditions represented in each of the four collaboratives. Each participating team of three members (generally an administrative decision maker; physician and opinion leader; and nurse manager/coordinator) selected the chronic condition within their collaborative on which they were most interested in focusing their improvement efforts. The conditions studied in the four collaboratives were as follows: Chronic #1 (diabetes and frailty in the elderly), Chronic #2 (diabetes and congestive heart failure), Washington State (diabetes), and Chronic #3 (asthma and depression). Teams represented diverse health-care systems and areas of the United States. Eighty-one percent of teams in Chronic #3 represented safety-net systems. The average pilot patient panel size was 379, although there were a few outliers that influenced the overall average. These outliers were teams who had either just begun building their pilot population (particularly in Chronic #3) or teams representing very large health care systems with resources to impact a large number of patients.

Table 1.

Teams & Chronic Conditions Represented in the Collaboratives

| | Chronic #1 | Chronic #2 | Washington State | Chronic #3 | TOTAL |
|---|---|---|---|---|---|
| Number of teams & conditions studied | 23 diabetes* | 13 CHF; 7 diabetes | 17 diabetes | 26 asthma; 22 depression | 108 teams |
| Average target pilot population size | 615 | 140 | 357 | 196 | 379 |
| Range of pilot population size | 42–2926 | 33–485 | 70–2533 | 12–815 | 12–2926 |
| Region of U.S. represented % | | | | | |
| West | 22% (5/23)** | – | 100% (17/17) | 17% (8/48) | 28% (30/108) |
| Southwest | – | – | – | 10% (5/48) | 5% (5/108) |
| Mountain West | 9% (2/23) | – | – | 8% (4/48) | 6% (6/108) |
| Mid West | 35% (8/23) | 35% (7/20) | – | 25% (12/48) | 25% (27/108) |
| Southeast | 17% (4/23) | 25% (5/20) | – | 17% (8/48)*** | 16% (17/108) |
| Northeast | 17% (4/23) | 40% (8/20) | – | 23% (11/48) | 21% (23/108) |
| Type of organization % | | | | | |
| Academic Organizational Center | 17% (4/23) | 10% (2/20) | 6% (1/17) | 2% (1/48) | 7% (8/108) |
| Community/Specialty Clinic | 4% (1/23) | – | 18% (3/17) | 4% (2/48) | 6% (6/108) |
| Hospital System | 13% (3/23) | 35% (7/20) | 12% (2/17) | 2% (1/48) | 12% (13/108) |
| Managed Care Organization | 22% (5/23) | 20% (4/20) | 47% (8/17) | 8% (4/48) | 19% (21/108) |
| Safety Net Provider/School | 30% (7/23) | 30% (6/20) | 18% (3/17) | 81% (39/48) | 51% (55/108) |
| Other | 13% (3/23) | 5% (1/20) | – | 2% (1/48) | 5% (5/108) |

\* Six teams (in addition to the 23 diabetes teams) worked on frailty in the elderly, but numbers (and data submitted) were not sufficient to include in the analysis.

\*\* Includes Victoria, British Columbia.

\*\*\* Includes Cidra, Puerto Rico.

Teams were encouraged to implement interventions (plan-do-study-act or “PDSA” cycles) in each of the six areas of the Chronic Care Model. These were not large-scale interventions but rather small practice-change interventions, such as attempting telephone follow-up with a few patients or providing self-management tools (e.g., scales for CHF patients). Table 2 presents sample PDSA interventions in several of the CCM areas implemented by teams in Collaboratives #1 and #2. Based on preliminary examination of data from Collaborative #1, the most successful cycles were those that either helped change important attitudes or helped create more effective systems (e.g., development of registries linked to practice guidelines and follow-up) (Wagner et al. 2001). Less successful cycles involved traditional approaches to changing provider behavior (e.g., seminars, distributing guidelines).

Table 2.

Sample Interventions across Several Chronic Care Model Elements

| Self-Management Support | |
|---|---|
| Diabetes Teams | CHF Teams |
| • Created self-management tool kit which included tracking forms, posters, calendars, etc. | • Collaborative goals set with patients for weight, medications, diet, blood pressure, etc. |
| • Held peer support groups • Televised self-management course to six counties | • Distributed logs and calendars for self-monitoring, e.g., salt intake • Provided scales to patients in need |
| • Linked individual patient goal-setting to the registry | • Developed scripts to teach patients how to raise issues with their physicians |
| Delivery System Re-Design | |
| Diabetes Teams | CHF Teams |
| • Implemented planned visits, group visits and/or chronic disease visits | • Routine telephone follow-up with patients • Added family practice MD to team for continual PCP input |
| • Posted notices in exam rooms for patients with diabetes to remove their shoes | • Prospective identification of CHF patients with appointment “today” |
| • Used registry reports & pop-up reminders for follow-up and care planning | |
| Decision Support | |
| Diabetes Teams | CHF Teams |
| • Posted guidelines on the Internet | • Formal medication “pre-fill” protocol developed |
| • Generated feedback for clinical teams on guideline compliance using registry data | • Cardiologists offer educational classes to PCPs to relate guidelines |
| • Requested electronic chart review & feedback from endocrinologist | • Integrated CHF protocols into routine practice |
| • Held routine meetings with social workers to discuss more challenging patients | • Evaluated best practices approach for treating CHF patients across medical community |

The intent of this manuscript is to describe initial use of the ACIC, not to evaluate the collaboratives. (The RAND Corporation, in conjunction with the University of California-Berkeley, is conducting an evaluation of two of the three collaboratives mentioned in this manuscript.) Because the ACIC data were collected in a real-world quality improvement effort rather than a formal research project, we did not pressure teams to return surveys, and we did not follow up with nonrespondents for data. With this in mind, complete baseline data were available for 83 percent of teams (90/108), and baseline and follow-up data were available for 72 percent (31/43) of teams enrolled in the first two collaboratives (Table 3). (The other two collaboratives were not yet completed at the time of this analysis.)

Table 3.

Percent of Teams with Complete ACIC Data

| Collaborative | % Complete Baseline | % Complete Baseline & Follow-up |
|---|---|---|
| Chronic #1: Diabetes | 83% (19/23) | 57% (13/23) |
| Chronic #2: CHF & Diabetes | 95% (19/20) | 90% (18/20) |
| Wash. State: Diabetes | 35% (6/17) | – |
| Chronic #3: Asthma & Depression | 96% (46/48) | – |
| TOTAL | 83% (90/108) | 72% (31/43) |
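The totals in Table 3 can be recomputed from the per-collaborative counts. The short Python sketch below does this arithmetic; the dictionary layout and the function name `completion_rates` are illustrative choices, not part of the original analysis, and only the counts themselves come from the table.

```python
# Counts copied from Table 3: (complete baseline, complete baseline + follow-up, enrolled).
# None marks collaboratives that had not finished at the time of analysis.
TABLE_3 = {
    "Chronic #1: Diabetes": (19, 13, 23),
    "Chronic #2: CHF & Diabetes": (19, 18, 20),
    "Wash. State: Diabetes": (6, None, 17),
    "Chronic #3: Asthma & Depression": (46, None, 48),
}


def completion_rates(table):
    """Return ((baseline, enrolled), (follow-up, enrolled in finished collaboratives))."""
    rows = list(table.values())
    baseline = sum(b for b, _, _ in rows)
    enrolled = sum(n for _, _, n in rows)
    # Follow-up rates only make sense for collaboratives that reported follow-up data.
    finished = [(f, n) for _, f, n in rows if f is not None]
    followup = sum(f for f, _ in finished)
    followup_enrolled = sum(n for _, n in finished)
    return (baseline, enrolled), (followup, followup_enrolled)


if __name__ == "__main__":
    (b, n), (f, m) = completion_rates(TABLE_3)
    print(f"Baseline: {b}/{n} = {100 * b / n:.0f}%")
    print(f"Baseline and follow-up: {f}/{m} = {100 * f / m:.0f}%")
```

The follow-up denominator counts only the two completed collaboratives, which is how the 31/43 figure in the table arises.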

Measures

Assessment of Chronic Illness Care

Each team completed the ACIC (see Appendix) to evaluate their organization's support of care for the targeted chronic condition before the collaborative (at “baseline”) and again at the end of the collaborative (approximately 13 months later). Teams were instructed to complete the ACIC as a “group” using input from each team member to arrive at a consensus rating for each item. This encouraged input from all team members in assessing their system's approach to chronic illness care. Seventy-four percent of ACIC completion events were undertaken in the group consensus format, 8 percent using input from only one team member, and 18 percent were unknown.

The ACIC was modeled after a tool developed by the Indian Health Service (IHS) that enabled IHS clinical sites to evaluate their diabetic services on a continuum ranging from basic services to advanced, comprehensive services (Acton et al. 1993, 1995). The content of the ACIC was based on specific interventions and concepts within the Chronic Care Model:

Self-management support (e.g., integration of self-management support into routine care) (Clark and Nothwehr 1997; Clark et al. 1998; Greenfield et al. 1985, 1988; Lorig and Holman 1989; Lorig et al. 1999; Padgett et al. 1988; VonKorff et al. 1997);

Decision support (e.g., linkages between primary and specialty care; integration of clinical guidelines) (Ayanian 2000; Grimshaw and Russell 1993; Johnston et al. 1994; Katon et al. 1999; McCulloch et al. 2000; Thomson et al. 2000; Woolf et al. 1999);

Delivery system redesign (e.g., use of telephone follow-up and reminders; nurse case manager support) (Aubert et al. 1998; Beck et al. 1997; Grahame and West 1996; Weinberger et al. 1989, 1991; Maisiak et al. 1996; Schwartz et al. 1990; Wasson et al. 1992);

Clinical information systems (e.g., chronic illness registry, reminders) (Glanz and Scholl 1982; Johnston et al. 1994; Lobach and Hammond 1997; Macharia et al. 1992; Payne 2000; Stason et al. 1994);

Linkages to community resources (e.g., community-based self-management programs) (Le Fort et al. 1998; Lorig et al. 1999; Leveille et al. 1998); and

Organization of care (e.g., leadership, incentives) (Solberg 2000).

Although there are few research studies in the literature documenting the importance of larger organizational factors in chronic illness care management (e.g., support from senior leaders to conduct quality-improvement work), our expert panel, when reviewing the concept for the Chronic Care Model, felt that these were important for improving chronic illness care. The Malcolm Baldrige National Quality Award Criteria, the standard for organizational excellence in other industries, include leadership as a central component of effective organizations (U.S. Chamber of Commerce 1993). The Baldrige criteria have since been adapted for use with health-care systems (Shortell et al. 1995).

Twenty-one items were developed for the original version of the ACIC, with at least one item for each intervention. Seven items were subsequently added as we gained practical experience in the collaborative. The current version of the ACIC consists of 28 items covering the six areas of the Chronic Care Model: health care organization (6 items); community linkages (3 items); self-management support (4 items); delivery system design (6 items); decision support (4 items); and clinical information systems (5 items) (Bonomi et al. 2000).

Responses to each item in the ACIC fall within four descriptive levels of implementation ranging from “little or none” to a “fully-implemented intervention” (e.g., evidence-based guidelines are available and supported by provider education). Within each of the four levels, respondents are asked to choose one of three ratings of the degree to which that description applies. The result is a 0–11 scale, with categories within this defined as follows: 0–2 (little or no support for chronic illness care); 3–5 (basic or intermediate support for chronic illness care); 6–8 (advanced support); and 9–11 (optimal, or comprehensive, integrated care for chronic illness). Subscale scores for the six areas are derived by summing the response choices for items in that subsection and dividing by the corresponding number of items.
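The scoring rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' scoring software: the function names are hypothetical, and the numbering of levels as 0 to 3 and of within-level ratings as 0 to 2 is an assumption chosen so that the four levels of three ratings each map onto the 0–11 scale described in the text.

```python
# Labels for the four score bands given in the text (0-2, 3-5, 6-8, 9-11).
BAND_LABELS = [
    "little or no support",
    "basic or intermediate support",
    "advanced support",
    "optimal, comprehensive, integrated care",
]


def item_score(level, rating):
    """Combine a descriptive level (assumed 0-3) and a within-level rating (assumed 0-2)."""
    if not (0 <= level <= 3 and 0 <= rating <= 2):
        raise ValueError("level must be 0-3 and rating must be 0-2")
    return level * 3 + rating


def band(score):
    """Return the descriptive band for an integer item score on the 0-11 scale."""
    if not (isinstance(score, int) and 0 <= score <= 11):
        raise ValueError("score must be an integer from 0 to 11")
    return BAND_LABELS[score // 3]


def subscale_score(item_scores):
    """Sum the item responses in a subsection and divide by the number of items."""
    if not item_scores:
        raise ValueError("a subscale needs at least one item")
    if any(not 0 <= s <= 11 for s in item_scores):
        raise ValueError("item scores must lie between 0 and 11")
    return sum(item_scores) / len(item_scores)


if __name__ == "__main__":
    # Hypothetical responses to the four self-management support items.
    responses = [6, 7, 5, 8]
    print(subscale_score(responses))
    print(band(7))
```

The band lookup is applied to integer item scores only, because the text does not say how fractional subscale averages falling between two bands should be labeled.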

Faculty rating

Each month during the collaborative, two faculty members (trained in system improvement and the Chronic Care Model) independently assessed the progress of each team using a single, five-point rating. The faculty ratings were based on cumulative monthly reports prepared by teams, which included both process and outcomes data (e.g., chart review data). Faculty members were the most appropriate raters of teams' progress because of their close association with teams and their familiarity with content in the senior leader (progress) reports. The faculty ratings were defined as follows: (1) Non-starter: “Team has been formed and population identified. An aim focused on a chronic illness has been agreed upon and baseline measures collected”; (2) Activity but no changes: “Team is actively engaged in the project and understands the CCM. But team has not yet begun to implement change”; (3) Modest improvement: “PDSA cycles have been implemented for some CCM elements. Evidence of improvement in process measures related to team's aim”; (4) Significant progress: “The CCM has been implemented and improved outcomes are evident. Team is at least halfway toward accomplishing goals stated in their aim”; (5) Outstanding sustainable results: “All CCM elements successfully implemented and goals accomplished. Outcome measures appropriate to chronic condition are at national benchmark levels. Work to spread the changes to other patient populations is underway.”

Analyses

Descriptive data (means, percentages, standard deviations, and ranges) were calculated for all teams at the start of the collaboratives. A chi-square test was conducted to determine whether characteristics—population size, organization type, geographic location, and baseline faculty rating—differed for teams with complete (both baseline and follow-up) or incomplete (baseline only) ACIC data. Baseline ACIC subscale scores were averaged across collaboratives to indicate where teams started. Where several teams (clinics) from a single organization were involved, scores were averaged across teams to obtain an average rating for that institution.
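The within-organization averaging described above can be illustrated with a short Python sketch. The function name and the input layout (pairs of organization label and subscale score) are hypothetical; the paper does not describe how the data were stored.

```python
from collections import defaultdict


def average_by_organization(team_scores):
    """Average a subscale score across teams that belong to the same organization.

    team_scores is a list of (organization, score) pairs, one pair per team.
    """
    grouped = defaultdict(list)
    for organization, score in team_scores:
        grouped[organization].append(score)
    return {org: sum(scores) / len(scores) for org, scores in grouped.items()}


if __name__ == "__main__":
    # Hypothetical data: two clinics from organization "A", one from "B".
    teams = [("A", 4.0), ("A", 6.0), ("B", 5.5)]
    print(average_by_organization(teams))
```

Collapsing clinics to one value per institution keeps an organization that enrolled several teams from being counted more than once in the baseline averages.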

Paired t-tests were used to evaluate the responsiveness of the ACIC (change in subscale scores) in relation to the quality improvement efforts implemented by diabetes teams from Chronic #1 and #2, and CHF teams from Chronic #2. Pre-post data were combined for the diabetes teams from both collaboratives to provide more stable change estimates. Teams that focused on frailty in the elderly in Chronic #1 were not included in the analysis, as there were insufficient data from these teams. In general, we expected to see improvement from the beginning to the end of the collaborative for all ACIC dimensions given the focus of the collaboratives on making changes in all aspects of the Chronic Care Model.
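For readers unfamiliar with the paired t-test, the sketch below computes the test statistic from baseline and follow-up subscale scores for the same teams. The scores are invented for illustration and the function name is hypothetical. Obtaining a p-value additionally requires the t distribution with n − 1 degrees of freedom, for which a statistics package (for example, `scipy.stats.ttest_rel`) could be used.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired pre/post scores."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same teams")
    if len(pre) < 2:
        raise ValueError("at least two pairs are required")
    # Work with within-team change scores (follow-up minus baseline).
    diffs = [after - before for before, after in zip(pre, post)]
    sd = stdev(diffs)  # sample standard deviation of the changes
    if sd == 0:
        raise ValueError("all change scores are identical; t is undefined")
    standard_error = sd / math.sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


if __name__ == "__main__":
    # Hypothetical subscale scores for four teams, not data from the study.
    baseline = [4.0, 5.0, 3.0, 6.0]
    follow_up = [6.0, 6.0, 5.0, 9.0]
    t, df = paired_t(baseline, follow_up)
    print(f"t = {t:.3f}, df = {df}")
```

Because each team serves as its own control, the test is driven by the spread of the change scores and not by the spread of the raw scores across teams.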

Pearson correlation coefficients were used to evaluate the relationship between the ACIC scores at “follow-up” (end of collaborative) for teams in Chronic #1 and #2 and the faculty rating made at the end of the collaborative. Although the faculty rating primarily focused on evaluating teams' absolute outcome and system redesign goal achievement, it was a reasonable measure against which to compare the ACIC. Moderate to high correlations (r > .30) were hypothesized.
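The Pearson coefficient used for this comparison can be written out directly. The sketch below is a plain-Python illustration with a hypothetical function name and invented data; it is not the software used in the study.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    if len(x) < 2:
        raise ValueError("at least two observations are required")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sums of cross-products and squared deviations about the means.
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    # Hypothetical follow-up subscale scores and five-point faculty ratings.
    acic_scores = [5.5, 7.0, 8.2, 6.1, 9.0]
    faculty_ratings = [2, 3, 4, 3, 5]
    print(round(pearson_r(acic_scores, faculty_ratings), 2))
```

In this setting one value of r would be computed per ACIC subscale, pairing each team's follow-up subscale score with its end-of-collaborative faculty rating.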

Results

There were no significant baseline differences between teams with complete ACIC data and those with incomplete data on the dimensions of pilot population size, geographic location, and faculty rating. There was a significant overall effect for type of organization: teams from hospital-based programs were more likely to submit complete data than teams from other organizations.

Table 4 presents average ACIC scores for teams beginning the chronic care collaboratives. Overall, collaborative-wide subscale scores at baseline ranged from 4.36 (information systems) to 6.42 (organization of care). This indicates that most teams had basic to good support for chronic illness care. However, scores varied, and some teams within a collaborative started at a very low level of functioning, which would be expected before beginning quality improvement work. In general, teams indicated that the areas best developed at the onset of the collaboratives were organization of care and community linkages. The area least developed was information systems, given that most teams did not have a registry in place at the start of the collaborative.

Table 4.

Average ACIC Scores at Start of Chronic Care Collaboratives

| | ACIC Subscale Scores | | | | | |
|---|---|---|---|---|---|---|
| Teams | Organization | Community linkages | Self-management | Decision support | Delivery system redesign | Information systems |
| Collaborative #1 (Diabetes + Frail Elderly) n = 19 | 7.00 (1.27)† | 6.21 (2.17) | 5.41 (1.34) | 5.12 (2.07) | 5.23 (2.07) | 4.11 (2.61) |
| Collaborative #2 (Diabetes + CHF) n = 19 | 5.84 (2.11) | 4.50 (2.51) | 4.88 (2.09) | 4.35 (2.06) | 4.51 (2.14) | 4.21 (2.35) |
| Collaborative #3 (Depression + Asthma) n = 46 | 6.64 (1.74) | 6.53 (2.00) | 5.80 (2.21) | 5.03 (1.99) | 6.10 (2.17) | 4.72 (1.93) |
| Wash. State Diabetes Collaborative n = 6 | 4.70 (1.83) | 4.50 (2.25) | 4.05 (0.85) | 3.50 (0.59) | 3.46 (1.40) | 3.16 (1.88) |
| Overall (combined across collaboratives) n = 90 | 6.42 (1.82) | 5.90 (2.30) | 5.41 (2.00) | 4.80 (1.99) | 5.40 (2.23) | 4.36 (2.19) |

† Standard deviation in parentheses.

Figures 2 and 3 present average improvement by Chronic Care Model area for diabetes and CHF teams respectively, from the start to the end of the collaborative. Significant improvement (p<.05) was observed in all six ACIC subscale scores for both diabetes and CHF teams. The largest improvements were seen in decision support, delivery system redesign, and information systems. Postcollaborative ACIC subscale scores for both diabetes and CHF teams were particularly high for organization of care (scores above 8.0) and information systems (scores above 7.5). CHF teams had higher postcollaborative ACIC subscale scores in self-management support (8.1), delivery system redesign (8.0), and information systems (8.1).

Figure 2.

Average Improvement for Diabetes Teams

Figure 3.

Average Improvement for CHF Teams

Table 5 reveals moderately strong and positive correlations between the ACIC and the faculty rating of outcomes, with the exception of community linkages (.28), a traditionally challenging area for teams to implement change. The correlations between the ACIC change scores and faculty ratings at the end of the collaborative were as follows: organization (.36), community linkages (.32), self-management (.39), decision support (.24), delivery system redesign (.41), and clinical information systems (.34).

Table 5.

Correlations between ACIC Scores and Faculty Rating*

| | Post-collaborative ACIC scores (at 12 months) | | | | | |
|---|---|---|---|---|---|---|
| | Organization | Community linkages | Self-management | Decision support | Delivery system redesign | Information systems |
| Faculty Rating (at 12 months) | .35 | .31 | .39 | .23 | .41 | .29 |

\* Based on data from 31 teams and 2 faculty members at the completion of two national collaboratives.

Discussion

This paper describes initial testing of an instrument to evaluate key elements of structure and processes of care for chronic illness. Our results and feedback from teams suggest that the ACIC is responsive to changes resulting from quality improvement efforts in health-care settings. Baseline scores were generally similar across teams addressing different chronic illnesses, and consistently showed improvement after intervention across Chronic Care Model elements. Moreover, the ACIC correlates positively with ratings of teams' performance outcomes by faculty experts leading the quality improvement collaboratives. Feedback from participating teams suggests that they find the ACIC extremely useful for identifying areas in which they need to focus improvement efforts, and in tracking progress over time. Initial experience suggests that the ACIC is very applicable across different types of health-care systems (e.g., for profit, IPA, community health centers, hospital-based programs) and chronic illnesses. The collaboratives asked teams to focus their activities on a small number of practices (e.g., 1–3), which was the focus for the response to the ACIC.

The ACIC typically requires 15–30 minutes to complete, including time spent reaching agreement on ratings. Discussions of areas and specific improvement strategies are prompted by the item anchors provided and follow naturally from completion of the ratings. These discussions typically assist teams in identifying areas for improvement in chronic illness care. In most cases, improvement is needed in all six areas of the ACIC: organization of care, formal links to community resources, formal supports for self-management, decision support, delivery system design, and information systems. One of the advantages of the ACIC is that the most advanced category (the highest possible score for each item) describes optimal practice. Thus, teams have guidance as to what comprises the best care for chronic illness. To further assist teams in translating their scores into practical terms for focusing their improvement efforts, we are developing and formally testing a feedback form that will be used in future chronic care collaboratives.

There are limitations to the evidence presented to date. The ACIC was developed to supplement our quality-improvement work with health-care delivery systems. Thus, it was not subject to the typical instrument development process involving a complete review of the literature to identify content for items. The development of the Chronic Care Model, the conceptual framework for the ACIC, has been described in a previous article (Wagner et al. 1999). It was derived from a combination of surveys of best practices, quality improvement activities, expert opinion, and consistency with the more promising interventions in the literature. It was not intended to be a representation of available evidence, but a heuristic, practical tool that would be changed in light of new evidence. We intend to add evidence for the domains of the Chronic Care Model and ACIC in a formal literature review as it becomes available. For example, although there are limited research studies that examine the impact of leadership on practice redesign in chronic illness care, there is evidence in other industries that leadership support is important in making organizational changes. We will continue to monitor the literature on studies that address this issue in relation to chronic illness care improvement.

The analyses on the ACIC were undertaken as a secondary objective of our quality improvement work, rather than as part of a formal research study. Thus, we did not impose a formal study design or select teams to be involved in the collaboratives. The involved organizations were relatively small in number and highly motivated, which may not be representative of the average health care organization. We would like to have had complete data from all teams to more fully depict improvement across the collaboratives. Future chronic care collaboratives will involve a more rigorous data collection strategy to minimize missing data. Moreover, additional data need to be collected by other research groups to further document the reliability and validity of the ACIC.

Although the ACIC was responsive to improvement efforts, the presence of a control group (or control “sites”) would have strengthened the conclusions. While it is possible that simply completing the ACIC could itself act as an intervention, through the “education” teams receive in completing the survey, we do not think this likely given the difficulty of producing organizational change. Nevertheless, the RAND–UC-Berkeley evaluation of the collaboratives will include a formal comparison of enrolled teams to “control groups,” that is, clinics not actively participating in the quality improvement effort, to determine whether differences in process measures (e.g., ACIC scores) and outcomes exist. At this writing, it is not possible to evaluate whether changes in the ACIC represent verifiable changes teams made in their systems or whether teams focused organizational change on all six aspects of the Chronic Care Model. As part of the official evaluation of the collaboratives, each of the interventions (“PDSA” cycles) teams make in their system will be coded according to Chronic Care Model elements. This will allow us to examine whether changes on the ACIC are related to the number and/or intensity of changes teams make in different areas, and whether certain aspects of the Chronic Care Model (e.g., self-management support) appear to be more important in improving outcomes.

In conclusion, preliminary data suggest that the ACIC is a useful quality improvement tool. While additional research is clearly indicated, the instrument appears sensitive to intervention changes across different chronic illnesses and helps teams focus their efforts on adopting evidence-based chronic care changes.

Appendix

Assessment of Chronic Illness Care

Part 1.

Organization of the Health Care Delivery System. Chronic illness management programs can be more effective if the overall system (organization) in which care is provided is oriented and led in a manner that allows for a focus on chronic illness care.

| Components | Little support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Overall Organizational Leadership in Chronic Illness Care | … does not exist or there is little interest. | … is reflected in vision statements and business plans, but no resources are specifically earmarked to execute the work. | … is reflected by senior leadership and specific dedicated resources (dollars and personnel). | … is part of the system's long term planning strategy, receive necessary resources, and specific people are held accountable. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Organizational Goals for Chronic Care | … do not exist or are limited to one condition. | … exist but are not actively reviewed. | … are measurable and reviewed. | … are measurable, reviewed routinely, and are incorporated into plans for improvement. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Improvement Strategies for Chronic Illness Care | … are ad hoc and not organized or supported consistently. | … utilize ad hoc approaches for targeted problems as they emerge. | … utilize a proven improvement strategy for targeted problems. | … include a proven improvement strategy and are used proactively in meeting organizational goals. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Incentives and Regulations for Chronic Illness Care | … are not used to influence clinical performance goals. | … are used to influence utilization and costs of chronic illness care. | … are used to support patient care goals. | … are used to motivate and empower providers to support patient care goals. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Senior Leaders | … discourage enrollment of the chronically ill. | … do not make improvements to chronic illness care a priority. | … encourage improvement efforts in chronic care. | … visibly participate in improvement efforts in chronic care. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Benefits | … discourage patient self-management or system changes. | … neither encourage nor discourage patient self-management or system changes. | … encourage patient self-management or system changes. | … are specifically designed to promote better chronic illness care. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |

Part 2.

Community Linkages. Linkages between the health system and community resources play important roles in chronic illness management.

| Components | Little support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Linking Patients to Outside Resources | … is not done systematically. | … is limited to a list of identified community resources in an accessible format. | … is accomplished through a designated staff person or resource responsible for ensuring providers and patients make maximum use of community resources. | … is accomplished through active coordination between the health system, community service agencies, and patients. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Partnerships with Community Organizations | … do not exist. | … are being considered but have not yet been implemented. | … are formed to develop supportive programs and policies. | … are actively sought to develop formal supportive programs and policies across the entire system. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Regional Health Plans | … do not coordinate chronic illness guidelines, measures, or care resources at the practice level. | … would consider some degree of coordination of guidelines, measures or care resources at the practice level but have not yet implemented changes. | … currently coordinate guidelines, measures, or care resources in one or two chronic illness areas. | … currently coordinate chronic illness guidelines, measures, and resources at the practice level for most chronic illnesses. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |

Part 3.

Self-Management Support. Effective self-management support can help patients and families cope with the challenges of living with and treating chronic illness and reduce complications and symptoms.

| Components | Limited support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Assessment and Documentation of Self-Management Needs and Activities | … are not done. | … are expected. | … are completed in a standardized manner. | … are regularly assessed and recorded in standardized form linked to a treatment plan available to practice and patients. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Self-Management Support | … is limited to the distribution of information (pamphlets, booklets). | … is available by referral to self-management classes or educators. | … is provided by trained clinical educators who are designated to do self-management support, are affiliated with each practice, and see patients on referral. | … is provided by clinical educators affiliated with each practice, trained in patient empowerment and problem-solving methodologies, and see most patients with chronic illness. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Addressing Concerns of Patients and Families | … is not consistently done. | … is provided for specific patients and families through referral. | … is encouraged, and peer support, groups, and mentoring programs are available. | … is an integral part of care and includes systematic assessment and routine involvement in peer support, groups, or mentoring programs. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Effective Behavior Change Interventions and Peer Support | … are not available. | … are limited to the distribution of pamphlets, booklets, or other written information. | … are available only by referral to specialized centers staffed by trained personnel. | … are readily available and an integral part of routine care. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |

Part 4.

Decision Support. Effective chronic illness management programs assure that providers have access to the evidence-based information necessary to care for patients, that is, decision support. This includes evidence-based practice guidelines or protocols, specialty consultation, provider education, and activating patients to make provider teams aware of effective therapies.

| Components | Limited support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Evidence-Based Guidelines | … are not available. | … are available but are not integrated into care delivery. | … are available and supported by provider education. | … are available, supported by provider education and integrated into care through reminders and other proven provider behavior change methods. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Involvement of Specialists in Improving Primary Care | … is primarily through traditional referral. | … is achieved through specialist leadership to enhance the capacity of the overall system to routinely implement guidelines. | … includes specialist leadership and designated specialists who provide primary care team training. | … includes specialist leadership and specialist involvement in improving the care of primary care patients. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Provider Education for Chronic Illness Care | … is provided sporadically. | … is provided systematically through traditional methods. | … is provided using optimal methods (e.g. academic detailing). | … includes training all practice teams in chronic illness care methods such as population-based management, and self-management support. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Informing Patients about Guidelines | … is not done. | … happens on request or through system publications. | … is done through specific patient education materials for each guideline. | … includes specific materials developed for patients which describe their role in achieving guideline adherence. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |

Part 5.

Delivery System Design. Evidence suggests that effective chronic illness management involves more than simply adding additional interventions to a current system focused on acute care. It may necessitate changes to the organization of practice that impact provision of care.

| Components | Limited support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Practice Team Functioning | … is not addressed. | … is addressed by assuring the availability of individuals with appropriate training in key elements of chronic illness care. | … is assured by regular team meetings to address guidelines, roles and accountability, and problems in chronic illness care. | … is assured by teams who meet regularly and have clearly defined roles including patient self-management education, proactive follow-up, and resource coordination and other skills in chronic illness care. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Practice Team Leadership | … is not recognized locally or by the system. | … is assumed by the organization to reside in specific organizational roles. | … is assured by the appointment of a team leader but the role in chronic illness is not defined. | … is guaranteed by the appointment of a team leader who assures that roles and responsibilities for chronic illness care are clearly defined. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Appointment System | … can be used to schedule acute care visits, follow-up and preventive visits. | … assures scheduled follow-up with chronically ill patients. | … is flexible and can accommodate innovations such as customized visit length or group visits. | … includes organization of care that facilitates the patient seeing multiple providers in a single visit. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Follow-up | … is scheduled by patients or providers in an ad hoc fashion. | … is scheduled by the practice in accordance with guidelines. | … is assured by the practice team by monitoring patient utilization. | … is customized to patient needs, varies in intensity and methodology (phone, in person, e-mail) and assures guideline follow-up. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Planned Visits for Chronic Illness Care | … are not used. | … are occasionally used for complicated patients. | … are an option for interested patients. | … are used for all patients and include regular assessment, preventive interventions, and attention to self-management support. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Continuity of Care | … is not a priority. | … depends on written communication between primary care providers and specialists, case managers, or disease management companies. | … between primary care providers and specialists and other relevant providers is a priority but not implemented systematically. | … is a high priority and all chronic disease interventions include active coordination between primary care, specialists, and other relevant groups. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |

Part 6.

Clinical Information Systems. Timely, useful information about individual patients and populations of patients with chronic conditions is a critical feature of effective programs, especially those that employ population-based approaches.

| Components | Limited support | Basic support | Good support | Full support |
|---|---|---|---|---|
| Registry (list of patients with specific conditions) | … is not available. | … includes name, diagnosis, contact information, and date of last contact either on paper or in a computer database. | … allows queries to sort sub-populations by clinical priorities. | … is tied to guidelines which provide prompts and reminders about needed services. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Reminders to Providers | … are not available. | … include general notification of the existence of a chronic illness, but do not describe needed services at time of encounter. | … include indications of needed service for populations of patients through periodic reporting. | … include specific information for the team about guideline adherence at the time of individual patient encounters. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Feedback | … is not available or is non-specific to the team. | … is provided at infrequent intervals and is delivered impersonally. | … occurs at frequent enough intervals to monitor performance and is specific to the team's population. | … is timely, specific to the team, routine, and personally delivered by a respected opinion leader to improve team performance. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Information about Relevant Subgroups of Patients Needing Services | … is not available. | … can only be obtained with special efforts or additional programming. | … can be obtained upon request but is not routinely available. | … is provided routinely to providers to help them deliver planned care. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
| Patient Treatment Plans | … are not expected. | … are achieved through a standardized approach. | … are established collaboratively and include self-management as well as clinical goals. | … are established collaboratively and include self management as well as clinical management. Follow-up occurs and guides care at every point of service. |
| Score | 0 1 2 | 3 4 5 | 6 7 8 | 9 10 11 |
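
As an illustration of how the six parts above might be scored, the following Python sketch averages a team's consensus item ratings within one part and maps the result to a support level. It is a minimal sketch under stated assumptions, not the authors' scoring procedure: the function names and part keys are hypothetical, the subscale score is assumed to be the simple mean of the item scores in that part, and the bands (0 to 2, 3 to 5, 6 to 8, 9 to 11) are inferred from the table layout, in which each support level spans three score points. How a non-integer average falling between bands is labeled is also an assumption.

```python
from statistics import mean

# Number of items in each part, counted from the appendix tables.
# The dictionary keys are hypothetical labels chosen for this sketch.
ITEMS_PER_PART = {
    "organization_of_care": 6,
    "community_linkages": 3,
    "self_management_support": 4,
    "decision_support": 4,
    "delivery_system_design": 6,
    "clinical_information_systems": 5,
}


def subscale_score(part, item_scores):
    """Average the item ratings (integers from 0 to 11) for one ACIC part.

    Assumption: the subscale score is the unweighted mean of its items.
    """
    expected = ITEMS_PER_PART[part]
    if len(item_scores) != expected:
        raise ValueError(f"{part} expects {expected} item scores")
    for score in item_scores:
        if not 0 <= score <= 11:
            raise ValueError("item scores must lie between 0 and 11")
    return mean(item_scores)


def support_level(score):
    """Map a score on the 0-11 scale to a support level.

    Assumption: bands follow the table layout (three points per level);
    averages between bands are assigned to the lower band.
    """
    if not 0 <= score <= 11:
        raise ValueError("score must lie between 0 and 11")
    if score < 3:
        return "little or limited"
    if score < 6:
        return "basic"
    if score < 9:
        return "good"
    return "full"


if __name__ == "__main__":
    # Hypothetical consensus ratings for Part 3 (four items).
    ratings = [5, 7, 4, 6]
    score = subscale_score("self_management_support", ratings)
    print(score, support_level(score))
```

Under these assumed bands, the baseline averages reported in the abstract (4.36 for information systems and 6.42 for organization of care) fall in the basic and good ranges, respectively.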

Notes

As a comparison, we also obtained Pearson correlations between the average ACIC change scores (pre- to postcollaborative) and the faculty rating.
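
The comparison described in this note can be sketched in Python as follows. This is a minimal sketch, not the analysis code used for the paper: the helper names are hypothetical, the data in the usage example are invented for illustration only, and the scale of the faculty rating is not assumed here beyond its being numeric.

```python
from math import sqrt


def change_scores(pre, post):
    """Return post minus pre for each team (same team order in both lists)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must cover the same teams")
    return [after - before for before, after in zip(pre, post)]


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(ss_x * ss_y)


if __name__ == "__main__":
    # Invented values for five hypothetical teams, for illustration only.
    pre_acic = [4.0, 5.5, 3.0, 6.0, 4.5]
    post_acic = [6.5, 7.0, 6.0, 7.5, 5.0]
    faculty_rating = [4, 3, 5, 3, 2]
    deltas = change_scores(pre_acic, post_acic)
    print(round(pearson_r(deltas, faculty_rating), 2))
```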

This paper was supported by grant no. 0347984 from The Robert Wood Johnson Foundation to Group Health Cooperative of Puget Sound. The authors gratefully acknowledge Nirmala Sandhu, M.P.H., for her assistance with data analysis; Jane Altemose for her input on tables presented in this manuscript; and Brian Austin, Connie Davis, M.N., Lou Grothaus, M.S., Mike Hindmarsh, M.A., George Jackson, Ph.D. (candidate, University of North Carolina, Charlotte), Paula Lozano, M.D., M.P.H., and Judith Schaefer, M.P.H., for their input on the content of the Assessment of Chronic Illness Care.

References

- Acton K, Valway S, Helgerson S, Huy JB, Smith K, Chapman V, Gohdes D. “Improving Diabetes Care for American Indians.” Diabetes Care. 1993;16(1):372–5. doi: 10.2337/diacare.16.1.372.

- Acton K, Bochenski C, Broussard B, Gohdes D, Hosey G, Rith-Najarian S, Stahn R, Stacqualursi F. Putting Integrated Care and Education to Work for American Indians/Alaska Natives Manual of the Indian Health Services Diabetes Program. Albuquerque NM: Department of Health and Human Services; 1995.

- Aubert RE, Herman WH, Waters J, Moore W, Sutton D, Peterson BL, Bailey CM, Kaplan JP. “Nurse Case Management to Improve Glycemic Control in Diabetic Patients in a Health Maintenance Organization: A Randomized Controlled Trial.” Annals of Internal Medicine. 1998;129:605–12. doi: 10.7326/0003-4819-129-8-199810150-00004.

- Ayanian JZ. “Generalists and Specialists Caring for Patients with Heart Disease: United We Stand Divided We Fall.” American Journal of Medicine. 2000;108:216–26. doi: 10.1016/s0002-9343(99)00455-6.

- Beck A, Scott J, Williams P, Robertson B, Jackson D, Gade G, Cowan P. “A Randomized Trial of Group Outpatient Visits for Chronically Ill Older HMO Members: The Cooperative Health Care Clinic.” Journal of the American Geriatric Society. 1997;45:543–9. doi: 10.1111/j.1532-5415.1997.tb03085.x.

- Berwick DM. “Continuous Improvement as an Ideal in Health Care.” New England Journal of Medicine. 1989;320(1):53–6. doi: 10.1056/NEJM198901053200110.

- Berwick DM. “Developing and Testing Change in Delivery of Care.” Annals of Internal Medicine. 1998;128(8):651–6. doi: 10.7326/0003-4819-128-8-199804150-00009.

- Bonomi AE, Glasgow R, Wagner EH, Davis C, Sandhu N. “Assessment of Chronic Illness Care: How Well Does Your Organization Provide Care for Chronic Illness?” Paper presented at the Institute for Healthcare Improvement National Congress, Seattle, Wash., June 2000.

- Clark NM, Baile WC, Rand C. “Advances in Prevention and Education and Lung Disease.” American Journal of Respiratory Critical Care Medicine. 1998;157:155–67. doi: 10.1164/ajrccm.157.4.nhlbi-14.

- Clark NM, Nothwehr F. “Self-management of Asthma by Adult Patients.” Patient Education Counsel. 1997;32(1):S5-20. doi: 10.1016/s0738-3991(97)00092-x.

- Desai MM, Zhang P, Hennessy CH. “Surveillance for Morbidity and Mortality among Older Adults—United States 1995–1996.” Morbidity and Mortality Weekly Reports. 1999;48(8):7–25.

- Glanz K, Scholl TO. “Intervention Strategies to Improve Adherence among Hypertensives: Review and Recommendations.” Patient Counsel Health Education. 1982;4:14–28. doi: 10.1016/s0738-3991(82)80031-1.

- Grahame R, West J. “The Role of the Rheumatology Nurse Practitioner in Primary Care: An Experiment in the Further Education of the Practice Nurse.” British Journal of Rheumatology. 1996;35(6):581–8. doi: 10.1093/rheumatology/35.6.581.

- Greenfield S, Kaplan SH, Ware JE. “Expanding Patient Involvement in Care: Effects of Patient Outcomes.” Annals of Internal Medicine. 1985;102(4):520–8. doi: 10.7326/0003-4819-102-4-520.

- Greenfield S, Kaplan SH, Ware JE, Yano EM, Frank HJ. “Patients' Participation in Medical Care: Effects on Blood Sugar Control and Quality of Life in Diabetes.” Journal of General Internal Medicine. 1988;3(5):448–57. doi: 10.1007/BF02595921.

- Grimshaw JM, Russell IT. “Effect of Guidelines on Medical Practice: A Systematic Review of Rigorous Evaluations.” Lancet. 1993;342:1317–22. doi: 10.1016/0140-6736(93)92244-n.

- Isenberg SF, Stewart MG. “Utilizing Patient Satisfaction Data to Assess Quality Improvement in Community-based Organizational Practices.” American Journal of Medical Quality. 1998;13(4):188–94. doi: 10.1177/106286069801300404.

- Jacobs RP. “Hypertension and Managed Care.” American Journal of Managed Care. 1998;4(12):S749–52.

- Johnston ME, Langton KB, Haynes RB, Mathieu A. “Effects of Computer-Based Clinical Decision Support Systems on Clinical Performance and Patient Outcomes: A Critical Appraisal of Research.” Annals of Internal Medicine. 1994;120(2):135–42. doi: 10.7326/0003-4819-120-2-199401150-00007.

- Katon W, VonKorff M, Lin E, Simon G, Walker E, Unutzer J, Bush T, Russo J, Ludman E. “Stepped Collaborative Care for Primary Care Patients with Persistent Symptoms of Depression: A Randomized Trial.” Archives of General Psychiatry. 1999;56:1109–15. doi: 10.1001/archpsyc.56.12.1109.

- Kilo CM. “A Framework for Collaborative Improvement: Lessons from the Institute for Healthcare Improvement's Breakthrough Series.” Quality Managed Health Care. 1998;6(4):1–13. doi: 10.1097/00019514-199806040-00001.

- Leape LL, Kabcenell AI, Gandhi TK, Carver P, Nolan TW, Berwick DM. “Reducing Adverse Drug Events: Lessons from a Breakthrough Series Collaborative.” Joint Commission Journal on Quality Improvement. 2000;26(6):321–31. doi: 10.1016/s1070-3241(00)26026-4.

- Le Fort SM, Gray-Donald K, Rowat KM, Jeans ME. “Randomized Controlled Trial of a Community-based Psychoeducation Program for the Self-management of Chronic Pain.” Pain. 1998;74:297–306. doi: 10.1016/s0304-3959(97)00190-5.

- Leveille SG, Wagner EH, Davis C, Grothaus L, Wallace J, LoGerfo M, Kent D. “Preventing Disability and Managing Chronic Illness in Frail Older Adults: A Randomized Trial of a Community-based Partnership with Primary Care.” Journal of the American Geriatric Society. 1998;46(10):1191–8. doi: 10.1111/j.1532-5415.1998.tb04533.x.

- Lobach DF, Hammond WE. “Computerized Decision Support Based on Clinical Practice Guidelines Improves Compliance with Care Standards.” American Journal of Medicine. 1997;102:89–98. doi: 10.1016/s0002-9343(96)00382-8.

- Lorig KR, Sobel DS, Stewart AL, Brown BW, Bandura A, Ritter P, Gonzalez VM, Laurent DD, Holman HR. “Evidence Suggesting That a Chronic Disease Self-management Program Can Improve Health Status While Reducing Hospitalization: A Randomized Trial.” Medical Care. 1999;37(1):5–14. doi: 10.1097/00005650-199901000-00003.

- Lorig K, Holman HR. “Long-term Outcomes of an Arthritis Self-management Study: Effects of Reinforcement Efforts.” Social Science Medicine. 1989;29(2):221–4. doi: 10.1016/0277-9536(89)90170-6.

- Macharia WM, Leon G, Rowe BH, Stephenson BJ, Haynes RB. “An Overview of Interventions to Improve Compliance with Appointment Keeping for Medical Services.” Journal of the American Medical Association. 1992;267(13):1813–7.

- Maisiak R, Austin J, Heck L. “Health Outcomes of Two Telephone Interventions for Patients with Rheumatoid Arthritis or Osteoarthritis.” Arthritis Rheumatism. 1996;39(8):1391–9. doi: 10.1002/art.1780390818.

- Mazzuca SA, Brandt KD, Katz BP, Musick BS, Katz BP. “The Therapeutic Approaches of Community Based Primary Care Practitioners to Osteoarthritis of the Hip in Elderly Patients.” Journal of Rheumatology. 1991;18(10):1593–1600.

- McCulloch DK, Price MJ, Hindmarsh M, Wagner EH. “Improvement in Diabetes Care Using an Integrated Population-based Approach in a Primary Care Setting.” Disease Management. 2000;3(2):75–82.

- McCulloch D, Glasgow RE, Hampson SE, Wagner E. “A Systematic Approach to Diabetes Management in the Post-DCCT Era.” Diabetes Care. 1994;19(7):1–5. doi: 10.2337/diacare.17.7.765.

- MMWR. “Resources and Priorities for Chronic Disease Prevention and Control 1994.” Morbidity and Mortality Weekly Reports. 1997;46(13):286–7.

- O'Malley C. “Quality Measurement for Health Systems: Accreditation and Report Cards.” American Journal of Health System Pharmacology. 1997;54(13):1528–35. doi: 10.1093/ajhp/54.13.1528.

- Ornstein SM, Jenkins RG. “Quality of Care for Chronic Illness in Primary Care: Opportunity for Improvement in Process and Outcome Measures.” American Journal of Managed Care. 1999;5(5):621–7.

- Padgett D, Mumford E, Hynes M, Carter R. “Meta-analysis of the Effects of Educational and Psychosocial Interventions on Management of Diabetes Mellitus.” Journal of Clinical Epidemiology. 1988;41:1007–30. doi: 10.1016/0895-4356(88)90040-6.

- Payne TH. “Computer Decision Support Systems.” Chest. 2000;118(2):47S–52S. doi: 10.1378/chest.118.2_suppl.47s.

- Rubin HR, Gandek B, Rogers WH, Kosinski M, McHorney CA, Ware Jr JE. “Patients' Ratings of Outpatient Visits in Different Practice Settings: Results from the Organizational Outcomes Study.” Journal of the American Medical Association. 1993;270(7):835–40.

- Sawacki PT, Jhuhlhausen I, Didjurgeit U, Berger M. “Improvement of Hypertension Care by a Structured Treatment and Teaching Programme.” Journal of Human Hypertension. 1993;7:571–3.

- Schwartz LL, Raymer JM, Nash CA, Hanson IA, Muenter DT. “Hypertension: The Role of the Nurse-therapist.” Mayo Clinic Proc. 1990;65:67–72. doi: 10.1016/s0025-6196(12)62111-9.

- Sennett C. “An Introduction to the National Committee on Quality Assurance.” Pediatric Annual. 1998;27(4):210–4. doi: 10.3928/0090-4481-19980401-09.

- Sewell N. “Continuous Quality Improvement in Acute Health Care: Creating a Holistic and Integrated Approach.” International Journal of Health Care Quality Assurance. 1997;10(1):20–6. doi: 10.1108/09526869710159598.

- Shortell SM, O'Brien JL, Carman JM, Foster RW, Hughes EF, Boerstler H, O'Connor EJ. “Assessing the Impact of Continuous Quality Improvement/Total Quality Management: Concept versus Implementation.” Health Services Research. 1995;30(2):377–401.

- Solberg LI. “Guideline Implementation: What the Literature Doesn't Tell Us.” Joint Commission Journal on Quality Improvement. 2000;26:525–37. doi: 10.1016/s1070-3241(00)26044-6.

- Sperl-Hillen J, O'Connor PJ, Carlson RR, Lawson TB, Haestenson C, Crawson T, Wuorenma J. “Improving Diabetes Care in a Large Health Care System: An Enhanced Primary Care Approach.” Joint Commission Journal on Quality Improvement. 2000;26(10):615–22. doi: 10.1016/s1070-3241(00)26052-5.

- Stason WB, Shepard DS, Perry HM, Carmen BM, Nagurney JT, Rosner B, Meyer G. “Effectiveness and Costs of Veterans Affairs Hypertension Clinic.” Medical Care. 1994;32(12):1197–215. doi: 10.1097/00005650-199412000-00004.

- Stuart ME, Wallach RW, Penna PM, Stergachis A. “Successful Implementation of a Guideline Program for the Rational Use of Lipid-lowering Drugs.” HMO Practice. 1991;5:198–204.

- Thomson O'Brien MA, Oxman AD, Davis DA, Haynes RB, Freemantle N, Harvey EL. “Education Outreach Visits: Effects on Professional Practice and Health Care Outcomes.” Cochrane Database System Review. 2000. doi: 10.1002/14651858.CD000409.

- U.S. Chamber of Commerce. The Malcolm Baldridge Award. 1993.

- U.S. Department of Health and Human Services. Healthy People 2010: Understanding and Improving Health. Washington DC: U.S. Department of Health and Human Services; 2000.

- VonKorff M, Gruman J, Schaefer J, Curry SJ, Wagner EH. “Collaborative Management of Chronic Illness.” Annals of Internal Medicine. 1997;127:1097–102. doi: 10.7326/0003-4819-127-12-199712150-00008.

- Wagner EH, Austin BT, Von Korff M. “Improving Outcomes in Chronic Illness.” Managed Care Quarterly. 1996;4(2):12–25.

- Wagner EH, Austin BT, Von Korff M. “Organizing Care for Patients with Chronic Illness.” Milbank Quarterly. 1996;74:511–44.

- Wagner EH, Davis C, Schaefer J, Von Korff M, Austin B. “A Survey of Leading Chronic Disease Management Programs: Are They Consistent with the Literature?” Managed Care Quarterly. 1999;7(3):56–66.

- Wagner EH, Glasgow RE, Davis C, Bonomi AE, Provost L, McCulloch D, Carver P, Sixta C. “Quality Improvement in Chronic Illness Care: A Collaborative Approach.” Joint Commission Journal on Quality Improvement. 2001;27:63–80. doi: 10.1016/s1070-3241(01)27007-2.

- Wasson J, Gaudette C, Whaley F, Sauvigne A, Baribeau P, Welch HG. “Telephone Care As a Substitute for Routine Clinical Follow-up.” Journal of the American Medical Association. 1992;267:1788–93.

- Weinberger M, Tierney WM, Booher P, Katz BP. “Can the Provision of Information to Patients with Osteoarthritis Improve Functional Status: A Randomized Controlled Trial.” Arthritis Rheumatism. 1989;32(12):1577–83. doi: 10.1002/anr.1780321212.

- Weinberger M, Tierney WM, Booher P, Katz BP. “The Impact of Increased Contact on Psychosocial Outcomes in Patients with Osteoarthritis: A Randomized Controlled Trial.” Journal of Rheumatology. 1991;18(6):849–54.

- Woolf SH, Grol R, Hutchinson A, Eccles M, Grimshaw J. “Clinical Guidelines: Potential Benefits Limitations and Harms of Clinical Guidelines.” British Medical Journal. 1999;318:527–30. doi: 10.1136/bmj.318.7182.527.