Abstract

Objective: In the context of an inpatient care provider order entry (CPOE) system, to evaluate the impact of a decision support tool on integration of cardiology “best of care” order sets into clinicians' admission workflow, and on quality measures for the management of acute myocardial infarction (AMI) patients.

Design: A before-and-after study of physician orders evaluated (1) per-patient use rates of standardized acute coronary syndrome (ACS) order set and (2) patient-level compliance with two individual recommendations: early aspirin ordering and beta-blocker ordering.

Measurements: The effectiveness of the intervention was evaluated for (1) all patients with ACS (suspected for AMI at the time of admission) (N = 540) and (2) the subset of the ACS patients with confirmed discharge diagnosis of AMI (n = 180) who comprise the recommended target population who should receive aspirin and/or beta-blockers. Compliance rates for use of the ACS order set, aspirin ordering, and beta-blocker ordering were calculated as the percentages of patients who had each action performed within 24 hours of admission.

Results: For all ACS admissions, the decision support tool significantly increased use of the ACS order set (p = 0.009). Use of the ACS order set led, within the first 24 hours of hospitalization, to a significant increase in the number of patients who received aspirin (p = 0.001) and a nonsignificant increase in the number of patients who received beta-blockers (p = 0.07). Results for confirmed AMI cases demonstrated similar increases, but did not reach statistical significance.

Conclusion: The decision support tool increased optional use of the ACS order set, but room for additional improvement exists.

Despite considerable emphasis on development and dissemination of practice guidelines, remarkable variation persists in the provision of health care.1 The recent Institute of Medicine2 report “Crossing the Quality Chasm” cites more than 70 significant peer-reviewed studies documenting the inconsistent quality of care in the United States. Clinical practice variability exists even in areas where strong scientific evidence and a high degree of expert consensus have defined best practices. American Heart Association (AHA) guidelines for acute myocardial infarction (AMI) recommend that clinicians prescribe aspirin and beta-blockers within the first 24 hours of admission. The decision to prescribe each is clinically independent; aspirin and beta-blockers have different indications, different contraindications, and different effects. For example, a patient with AMI who received thrombolytic therapy initiated in the emergency department would not receive aspirin, but should receive beta-blockers within the first 24 hours (unless a contraindication existed). Both the Centers for Medicare and Medicaid Services (CMS) and the Joint Commission on Accreditation of Healthcare Organizations (JCAHO) mandate the use of these measures to assess and monitor quality of care for AMI patients. Despite the strong evidence that independently administering aspirin and/or beta-blockers soon after the onset of a patient's symptoms prolongs life, studies demonstrate that adherence to these recommended evidence-based therapies is still far from optimal, with substantial regional variation.3,4,5 Recent successful quality improvement initiatives targeting the acute coronary syndrome (ACS)1 patient population, which includes patients with suspected AMI, recommend standardized order sets to increase compliance with key evidence-based therapies. These studies have demonstrated significant improvement in compliance with early aspirin and beta-blocker use for ACS patients with standardized order set use.6,7,8 However, the underlying processes were paper based: standardized order sets were used at the discretion of the clinicians with no automated decision support.

Grimshaw and Russell5 reviewed 59 published evaluations of clinical guidelines and found that the most effective guideline implementation strategies delivered patient-specific advice at the time and place of consultation. Care provider order entry (CPOE) provides a useful platform for integrating knowledge into clinicians' workflow by presenting just-in-time treatment advice tailored to the needs of individual patients.9

Care provider order entry systems with decision support have been recognized for promoting safe use of medications. Such safety features include (1) patient-specific dosing suggestions,10 (2) reminders/prompts for appropriate drug selection and administration,11,12 (3) drug–allergy and drug–drug interaction checks,13 and (4) standardized “best of care” order sets constructed to guide clinicians.14,15 In addition, CPOE systems with decision support have been touted as effective tools in helping physicians improve preventive care,16,17,18,19 guideline compliance,20,21 and resource use.22,23,24,25

Although many studies have demonstrated that CPOE-based interventions that prompt physicians toward individual treatment options yield increased compliance, there is little experience with their utility in facilitating the use of standardized order sets early in the hospital course. In this study, we developed a CPOE-based decision support tool to improve admission practices by facilitating use of standardized “best of care” order sets at the time of admission. We evaluated the effectiveness of the intervention for the management of (1) all patients with ACS (suspected for AMI at the time of admission) and (2) a subset of the ACS patients who had a confirmed discharge diagnosis of AMI (because those patients are specifically the target of the AHA guidelines and the population to which JCAHO quality measures are applied), with respect to early use of the standardized ACS order set and to the association of ACS order set use with early aspirin and beta-blocker ordering behaviors.

Methods

Setting

The study was conducted at Vanderbilt University Medical Center (VUMC), which includes Vanderbilt University Hospital (VUH), a 630-bed academic tertiary care teaching facility with approximately 31,000 admissions per year. Approximately 300 VUH patients are hospitalized with AMI annually. Most patients with AMI are admitted to cardiology units (cardiology step-down or coronary care unit) where they are cared for by cardiology attending physicians, fellows, and house staff. If not admitted to cardiology units, these patients are cared for on “monitored” units by noncardiology services consisting of general medicine attending physicians and house staff.

WizOrder is VUMC's CPOE system with integrated decision support.26,27,28,29,30 Currently, all inpatient orders are entered using WizOrder. Approximately 15,000 orders are entered into WizOrder per day with 75% of them being directly entered by physician staff including attending physicians, interns, residents, and fellows. The rest are entered by other clinical staff (including nurses, pharmacists, and others) after clinicians generate verbal or written orders. During the study period, the Emergency Department at VUH had not yet implemented the CPOE system (this was done during 2004), so that orders written and carried out in the Emergency Department were not analyzed in the current study.

Baseline CPOE system features at the time of study included (1) drug–allergy and drug–drug interaction checks, (2) interventions to promote cost-effective care, (3) more than 1,000 order sets encapsulating “best of care” practices, (4) linked patient-specific access to educational resources and biomedical literature, and (5) a programmable rules engine used to deliver Web-based decision support modules for the implementation of guidelines.28

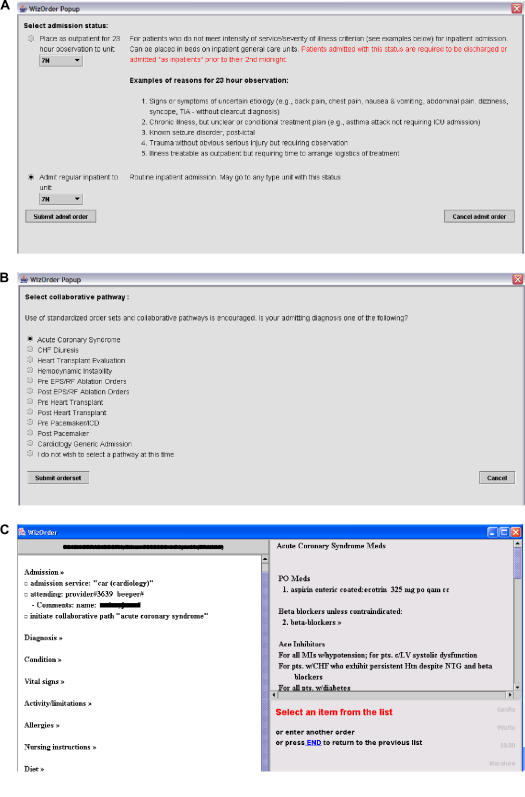

Intervention: Admission Advisor

Within WizOrder, order sets are typically invoked voluntarily, at the user's discretion, for specific patient-related indications. Prior to this project, no effective tools were available to directly facilitate appropriate order set use within the CPOE system. Rather than searching for an optimal order set to address the particular case at hand, busy clinicians frequently composed orders in an ad hoc manner based on their knowledge, recall, and judgment about the case. Under such circumstances, clinician-users constructed all orders one by one, rather than from evidence-based templates. In an effort to promote order set use early in the hospital stay, the authors developed the Admission Advisor, a decision support module that alerts physicians to relevant diagnosis/procedure-specific order sets for a patient upon admission. The Admission Advisor directed admitting physicians, including attending physicians and house staff, to service-based diagnosis/procedure-specific order sets (after entry of an admission order placing the patient on one of the cardiology units) using the CPOE system programmable rules engine.28 If the admitting physician indicated that one of the recommended order sets should be initiated, the Admission Advisor logic immediately displayed the order set template for selection of appropriate orders. Upon initiation, the selected order set became the patient-specific (default) order set and was displayed on the screen every subsequent time a clinician initiated an order entry session for the same patient. If the admitting physician rejected the recommendation, the CPOE system allowed individual order entry as described above. Figure 1 illustrates the steps generated by the Admission Advisor.

Figure 1.

(A) Selection of the admission unit. (B) Diagnosis/procedure-specific order sets. (C) Acute coronary syndrome (ACS) order set.

Prior to the implementation of the Admission Advisor, order sets relevant to care of ACS/AMI patients were reviewed and revised by the cardiology clinical team (including attending faculty and case managers) for content, completeness, and accuracy. At the beginning of each monthly rotation, house staff rotating through cardiology were introduced to the existence of and value of using the ACS order set during their cardiology service orientation. Within the ACS order set, actions to select and order aspirin or beta-blockers were separate, i.e., it was not possible to order both with a single mouse click. Ordering of each represented independent judgments.

Study Design

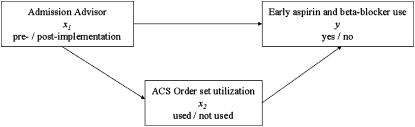

The study used a before-and-after design with respect to the implementation of the Admission Advisor. The conceptual framework that illustrates the hypothesized associations among study variables is provided in Figure 2. As illustrated in this diagram, we hypothesized that implementation of the Admission Advisor influences ACS order set use, and that ACS order set use in turn influences early aspirin and beta-blocker ordering behavior. ACS order set use thus serves as a mediating variable between the intervention and compliance with early aspirin and beta-blocker use.

Figure 2.

Conceptual framework with the hypothesized associations among study variables.

The preintervention period was 32 weeks (August 1, 2002–March 31, 2003) followed by a 20-week postintervention period (May 1, 2003–September 30, 2003). Data from April 2003 were excluded from the study since the intervention was activated in the middle of that month (on April 14, 2003). The study was approved by Vanderbilt University Institutional Review Board (IRB) (IRB 990007, 07/25/2003). Consent was not required of participating patients or physicians per VUMC IRB committee.
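The period assignment described above can be sketched as a small helper. This is an illustrative sketch only: the function name `study_period` and the inclusive treatment of the boundary dates are assumptions, not details taken from the study's analysis code.

```python
from datetime import date
from typing import Optional

# Period boundaries as stated in the text (treated as inclusive; an assumption).
PRE_START, PRE_END = date(2002, 8, 1), date(2003, 3, 31)
POST_START, POST_END = date(2003, 5, 1), date(2003, 9, 30)


def study_period(admit_date: date) -> Optional[str]:
    """Return 'pre', 'post', or None for admissions outside both periods.

    April 2003 falls between the two periods and is therefore excluded,
    matching the exclusion of the month in which the tool was activated.
    """
    if PRE_START <= admit_date <= PRE_END:
        return "pre"
    if POST_START <= admit_date <= POST_END:
        return "post"
    return None


print(study_period(date(2003, 4, 14)))  # activation date, excluded
```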

We adopted JCAHO patient inclusion guidelines established for the evaluation of compliance with AMI quality-of-care measures to define the AMI population considered in this study.31 The AMI patient population included all cases older than the age of 18 years who were discharged from VUH with a principal discharge diagnosis of AMI (as determined by the billing ICD-9 codes 410.xx) between August 1, 2002, and September 30, 2003. In addition, we devised a criterion to identify admissions with suspected AMI based on the presence of a set of AMI diagnostic orders entered within the first 24 hours of admission. The presence of an electrocardiogram (EKG) order in conjunction with a troponin or a creatine kinase isoenzyme MB (CKMB) order entered within the first 24 hours of admission was employed to identify a set of patients with expected high sensitivity for suspected AMI at the time of admission (“sensitive for AMI” set).
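As a minimal sketch of the “sensitive for AMI” criterion, the rule can be written as a predicate over a patient's early orders. The `Order` record, the normalized order names (`"EKG"`, `"TROPONIN"`, `"CKMB"`), and the inclusive 24-hour boundary are hypothetical choices made for illustration; the actual CPOE log format is not specified at this level of detail.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable


@dataclass(frozen=True)
class Order:
    name: str             # hypothetical normalized order name
    ordered_at: datetime  # time the order was entered


EARLY_WINDOW = timedelta(hours=24)
CARDIAC_MARKERS = {"TROPONIN", "CKMB"}


def is_suspected_ami(admitted_at: datetime, orders: Iterable[Order]) -> bool:
    """True if an EKG order and a troponin or CKMB order were both
    entered within the first 24 hours of admission."""
    cutoff = admitted_at + EARLY_WINDOW
    early_names = {
        o.name for o in orders if admitted_at <= o.ordered_at <= cutoff
    }
    return "EKG" in early_names and bool(early_names & CARDIAC_MARKERS)
```

A patient whose only cardiac marker order was entered after the 24-hour window would not be included under this rule.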

The evaluation considered in this paper aims to (1) compare pre- and postintervention cohorts with respect to ACS order set use, (2) compare early aspirin and beta-blocker use with respect to ACS order set use status, and (3) compare pre- and postintervention cohorts with respect to early aspirin and beta-blocker use.

The evaluation steps listed above were repeated for two separate patient populations. The first population included the previously defined “sensitive for AMI” set (i.e., all patients who had an EKG order in conjunction with a troponin or a CKMB order entered within the first 24 hours of admission) admitted to VUH cardiology units. This patient population was considered to test the effectiveness of the intervention for all patients suspected for AMI at the time of admission. The second population considered was a subset of the “sensitive for AMI” set with a confirmed discharge diagnosis of AMI. This population included all suspected AMI admissions to VUH cardiology units, who were later discharged with a primary diagnosis of AMI. This group represents the confirmed AMI cases that also satisfied the criterion for suspected AMI at the time of admission. It is important to note that the VUH Cardiology Service receives a number of AMI transfers from other care facilities around middle Tennessee. Some of these transferred AMI patients do not satisfy the admission criterion for the “sensitive for AMI” set because their symptoms might have resolved and their diagnostic tests were performed prior to their arrival at VUH. The second patient population described above excludes patients with a primary discharge diagnosis of AMI who do not satisfy the admission criterion for the “sensitive for AMI” set.

Evaluation of the intervention in this before-and-after design was complicated by a coincidental event, the introduction of a CPOE-based discharge-planning tool.32 The discharge-planning tool was introduced in August 2002, at the beginning of the preintervention period of this study, and was in place throughout both the pre- and postintervention periods. It is a decision-support module that implements AMI discharge guidelines via point-of-care reminders to improve quality of care for AMI patients, including the prescription of aspirin and beta-blockers at the time of discharge. Its relationship to this study is considered further in the Discussion section.

Outcome Measures

The primary outcome for this study was the rate of ACS order set use. The secondary outcome was the association of the ACS order set use with recommended early aspirin and/or beta-blocker use. The compliance rate for ACS order set use was calculated as the percentage of study patients who had at least one order entered via the ACS order set within 24 hours of admission. Compliance rates for early aspirin and beta-blocker use were individually calculated as the percentage of study patients who had orders to begin each medication within the first 24 hours of admission.
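The three compliance rates defined above share one shape: the percentage of patients with at least one qualifying order within 24 hours of admission. The sketch below illustrates that calculation under assumed names; the `Order` and `Admission` records and the labels `"ACS"`, `"ASPIRIN"`, and `"BETA_BLOCKER"` are hypothetical stand-ins, not fields of the WizOrder logs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, List, Optional


@dataclass
class Order:
    name: str                        # hypothetical normalized order name
    ordered_at: datetime
    order_set: Optional[str] = None  # order set the order came from, if any


@dataclass
class Admission:
    admitted_at: datetime
    orders: List[Order] = field(default_factory=list)


WINDOW = timedelta(hours=24)


def has_early_order(adm: Admission, matches: Callable[[Order], bool]) -> bool:
    """True if any matching order was entered within 24 hours of admission."""
    cutoff = adm.admitted_at + WINDOW
    return any(
        adm.admitted_at <= o.ordered_at <= cutoff and matches(o)
        for o in adm.orders
    )


def compliance_rate(admissions: List[Admission],
                    matches: Callable[[Order], bool]) -> float:
    """Percentage of admissions with at least one early matching order."""
    if not admissions:
        raise ValueError("no admissions to evaluate")
    hits = sum(has_early_order(a, matches) for a in admissions)
    return 100.0 * hits / len(admissions)


def used_acs_order_set(o: Order) -> bool:
    return o.order_set == "ACS"


def is_aspirin(o: Order) -> bool:
    return o.name == "ASPIRIN"


def is_beta_blocker(o: Order) -> bool:
    return o.name == "BETA_BLOCKER"
```

Because each rate is computed with a separate predicate, aspirin and beta-blocker compliance stay independent of one another, in line with the independent clinical judgments described earlier.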

Data Sources

Every order placed by clinicians in the CPOE system is stored in its log files, which, for system monitoring and quality control purposes, contain all the information related to an order including order identification number, order name, order type (pharmacy, nutrition, nursing, etc.), order date and time, order component fields (e.g., frequency, dose, route, etc.), patient medical record number for the order written, ordering physician, hospital unit where the order was entered, and the order set (if any) from which the order was selected. Order set use and compliance analyses were conducted by parsing the CPOE system log files generated during the study period. For each study patient's medical record number, the CPOE system log files generated during the patient's hospital stay were analyzed to identify the matches for the evidence-based quality-of-care indicators considered in this study.

Statistical Analyses

The unit of analysis for the assessment of compliance with both ACS order set use and recommended early aspirin and beta-blocker ordering was the patient. Hypothesized associations between the variables considered in the conceptual framework are provided in Figure 2. All the variables considered in the framework (implementation of the Admission Advisor, ACS order set use status, early use of evidence-based quality-of-care indicators for AMI) were dichotomous, taking values “0” or “1.” Therefore, the influence of the implementation of the Admission Advisor (x1) on the use of the ACS order set (x2), of x1 on early aspirin and beta-blocker use (y), and of x2 on y were evaluated by examining a set of univariate and multivariate generalized estimating equations (GEEs) to adjust for the dependency over repeated patient-level observations (e.g., order set use, aspirin use, beta-blocker use) for each admitting physician. The degrees of association between the dependent and the independent variables are represented as odds ratios.
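For readers who want the model form, a generic single-predictor logistic GEE can be written as follows. The indices are assumptions introduced here for illustration (i for the admitting physician, who defines the cluster, and j for that physician's patients); the text does not state the working correlation structure, so none is assumed.

```latex
\mathrm{logit}\,\Pr(y_{ij} = 1) = \beta_0 + \beta_1 x_{ij},
\qquad
\mathrm{OR} = e^{\beta_1}
```

Here x stands for whichever dichotomous predictor is being examined (x1 or x2), and the outcome is either x2 or y, depending on the association under study.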

Our analyses did not control for every ordering provider since there was not a simple one-to-one relationship between ordering providers and patients as presented later in the Results section.

Pre- and postintervention patient demographics were compared with the use of two-sided t-test and χ2 test. Finally, two-sided χ2 test was employed to compare the proportions of recommended early aspirin and beta-blocker use before and after the implementation of the CPOE-based discharge-planning tool and prior to the implementation of the Admission Advisor. For all analyses, traditional significance level (α = 0.05) was used to declare a significant association.
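To make the two-sided χ2 comparison of two proportions concrete, the following is a minimal sketch of a Pearson χ2 test on a 2×2 table without continuity correction. Whether the original analysis applied a continuity correction is not stated, so that choice is an assumption, as is the function name `chi_square_2x2`. The example counts are taken from the “Combined” columns of Table 4 purely as sample input; that comparison was analyzed with GEE in the study, so the output is not expected to reproduce any reported p-value.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]].

    Returns (statistic, two-sided p-value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2, col1, col2 = a + b, c + d, a + c, b + d
    if min(row1, row2, col1, col2) == 0:
        raise ValueError("every row and column must have a nonzero total")
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, P(X > stat) equals erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Sample input: patients without an early aspirin order, suspected AMI cohort
# (44 of 313 pre-intervention, 29 of 227 post-intervention).
stat, p = chi_square_2x2(44, 313 - 44, 29, 227 - 29)
print(f"chi2 = {stat:.3f}, p = {p:.3f}")
```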

Results

Description of the Study Populations

During the study period, 540 patients admitted to VUH cardiology units had an EKG order in conjunction with a troponin or a CKMB order entered within the first 24 hours of admission, satisfying the criterion for a suspected AMI (“sensitive for AMI” set). Of these, 313 were admitted during the preintervention period and 227 were admitted during the postintervention period. Of the 540 suspected AMI admissions, 180 were discharged with a primary hospital-coded discharge diagnosis of AMI (ICD-9 code 410.xx). Of the 180 patients with confirmed AMI, 105 were admitted during the preintervention period and 75 were admitted during the postintervention period.

A total of 135 physicians admitted 540 suspected AMI patients to VUH cardiology units (a mean of 4 ± 3.92 patients per provider; median, 2; range, 1–20). Of these, 84 physicians admitted 313 patients during the preintervention period, and 68 physicians admitted 227 patients during the postintervention period. Of the 135 admitting physicians, 17 overlapped between the pre- and postintervention periods. Analysis of the structure of only the confirmed AMI discharges demonstrated that a total of 88 physicians admitted 180 confirmed AMI patients to VUH cardiology units (a mean of 2 ± 1.67 patients per provider; median, 1.5; range, 1–11). Of these, 53 physicians admitted 105 patients during the preintervention period, and 43 physicians admitted 75 patients during the postintervention period. Of the 88 admitting physicians, eight overlapped between the pre- and postintervention periods.

Although the Admission Advisor was directed at the admitting physician (i.e., only the admitting physician is exposed to the intervention), a certain proportion of individual treatment orders, including aspirin and beta-blockers, was entered by different providers throughout the hospital stay. For example, among 467 suspected AMI patients who had an aspirin order placed within the first 24 hours of admission, 40 (8.7%) patients had aspirin ordered by a provider different from the admitting physician. Similarly, 37 of the 396 patients (9.3%) with a beta-blocker order placed within the first 24 hours of admission received the order from a provider different from the admitting physician. Also, among 168 confirmed AMI patients who had an aspirin order placed within the first 24 hours of admission, 21 (12.5%) patients had aspirin ordered by a provider different from the admitting physician. Similarly, 28 of the 152 patients (18.4%) with a beta-blocker order placed within the first 24 hours of admission received the order from a provider different from the admitting physician.

Table 1 illustrates the characteristics of the study cohorts. A total of 313 suspected AMI patients admitted during the preintervention period had a mean age of 62.27 ± 15.13; 60% were male and 70% were white. The mean age of the 227 suspected AMI patients admitted during the postintervention period was 60.75 ± 14.02; 63% were male and 72% were white. Similarly, 105 confirmed AMI patients admitted during the preintervention period had a mean age of 63.60 ± 14.06; 68% were male and 70% were white. The mean age of the 75 AMI patients admitted during the postintervention period was 65.28 ± 14.07; 68% were male and 68% were white. There were no significant demographic differences between patient cohorts considered for the pre- and postintervention periods.

Table 1.

Description of the Study Population

| | ACS: Pre-intervention | ACS: Post-intervention | ACS: p-Value | AMI: Pre-intervention | AMI: Post-intervention | AMI: p-Value |
|---|---|---|---|---|---|---|
| No. of AMI admissions | 313 | 227 | | 105 | 75 | |
| Mean age, yr ± SD | 62.27 ± 15.13 | 60.75 ± 14.02 | 0.23 | 63.60 ± 14.06 | 65.28 ± 14.07 | 0.27 |
| Sex, % male | 60 | 63 | 0.55 | 68 | 68 | 0.95 |
| Race, % white | 70 | 72 | 0.64 | 70 | 68 | 0.72 |

ACS = acute coronary syndrome; AMI = acute myocardial infarction.

Acute Coronary Syndrome Order Set Use

As illustrated in Table 2, for all suspected AMI admissions to VUH cardiology units, there was a significant increase in ACS order set use after the implementation of the Admission Advisor. During the preintervention period, the ACS order set was used for 60% (189 of 313) of the suspected AMI admissions to VUH cardiology units. During the postintervention period, the ACS order set use increased to 70% (161 of 227) for the same patient cohort (odds ratio [OR] = 2.85; 95% confidence interval [CI] = 1.29–6.29; p = 0.009). Although the pre- and postintervention difference in ACS order set use showed a similar strong trend for the confirmed AMI cases, the results were not significant (OR = 2.02; 95% CI = 0.92–4.41; p = 0.07).

Table 2.

Summary of Failures to Use ACS Order Set

| | Pre-intervention: No. of Patients without ACS Order Set Use (%) | Post-intervention: No. of Patients without ACS Order Set Use (%) | Significance*: OR | Significance*: p-Value | Significance*: 95% CI |
|---|---|---|---|---|---|
| Suspected AMI admissions | 124/313 (40) | 66/227 (30) | 2.85 | 0.009 | 1.29–6.29 |
| Suspected AMI admissions with confirmed AMI | 56/105 (54) | 27/75 (36) | 2.02 | 0.07 | 0.92–4.41 |

ACS = acute coronary syndrome; AMI = acute myocardial infarction.

Generalized estimating equation analyses: OR (odds ratio), CI (confidence interval).

Compliance with the Evidence-based Quality-of-care Metrics

Table 3 illustrates the association of ACS order set use with recommended early aspirin and beta-blocker ordering behavior. For all suspected AMI admissions to VUH cardiology units, ACS order set use was associated with a significant increase in early aspirin ordering (OR = 3.01; 95% CI = 1.59–5.70; p = 0.001) and with an increase in beta-blocker ordering that showed a trend toward significance (OR = 1.44; 95% CI = 0.96–2.15; p = 0.07). There was a similar trend in aspirin and beta-blocker ordering behavior associated with ACS order set use for all suspected AMI admissions that were later discharged with a confirmed diagnosis of AMI. However, the associations were not significant for either aspirin (OR = 3.40; 95% CI = 0.86–13.47; p = 0.08) or beta-blockers (OR = 1.67; 95% CI = 0.77–3.62; p = 0.18).

Table 3.

Failure to Order Aspirin or Beta-blockers Categorized by ACS Order Set Utilization for AMI Admissions to Cardiology Units

| No. of Eligible Patients Not Receiving Therapy (%) | ACS Order Set Used | ACS Order Set Not Used | Significance*: OR | Significance*: p-Value | Significance*: 95% CI |
|---|---|---|---|---|---|
| Suspected AMI admissions | | | | | |
| Aspirin | 31/350 (8.8) | 42/190 (22.1) | 3.01 | 0.001 | 1.59–5.70 |
| Beta-blocker | 86/350 (24.5) | 58/190 (30.5) | 1.44 | 0.07 | 0.96–2.15 |
| Suspected AMI admissions with confirmed AMI | | | | | |
| Aspirin | 3/97 (3) | 9/83 (10.8) | 3.40 | 0.08 | 0.86–13.47 |
| Beta-blocker | 12/97 (12.3) | 16/83 (19.2) | 1.67 | 0.18 | 0.77–3.62 |

ACS = acute coronary syndrome; AMI = acute myocardial infarction.

Generalized estimating equation analyses: OR (odds ratio), CI (confidence interval).
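As a worked illustration of how an odds ratio relates to the counts in Table 3, the sketch below computes a crude (unadjusted) odds ratio from the aspirin row for suspected AMI admissions. The function name `odds_ratio` is hypothetical. Because the reported values come from GEE models that account for clustering by admitting physician, a crude ratio from the raw counts is only an approximation and is not expected to match the tabulated OR exactly.

```python
def odds_ratio(events_a: int, total_a: int, events_b: int, total_b: int) -> float:
    """Odds of the event in group A divided by the odds in group B."""
    non_a = total_a - events_a
    non_b = total_b - events_b
    if 0 in (events_a, non_a, events_b, non_b):
        raise ValueError("odds ratio is undefined when any cell is zero")
    return (events_a / non_a) / (events_b / non_b)


# Event = no early aspirin order (suspected AMI admissions, Table 3).
# Group A: ACS order set not used (42 of 190).
# Group B: ACS order set used (31 of 350).
crude_or = odds_ratio(42, 190, 31, 350)
print(f"crude OR = {crude_or:.2f}")  # GEE-adjusted value reported in Table 3: 3.01
```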

Results of analyses comparing recommended early aspirin and beta-blocker ordering before and after the implementation of the Admission Advisor are illustrated in Table 4. Implementation of the Admission Advisor did not yield a significant increase in early aspirin or beta-blocker ordering for either patient cohort.

Table 4.

Failure to Order Aspirin or Beta-blockers before and after the Implementation of the Admission Advisor

| No. of Eligible Patients Not Receiving Therapy (%) | Pre-intervention: ACS Order Set (Used) | Pre-intervention: ACS Order Set (Not Used) | Pre-intervention: Combined | Post-intervention: ACS Order Set (Used) | Post-intervention: ACS Order Set (Not Used) | Post-intervention: Combined | Significance*: OR, p-Value (95% CI) |
|---|---|---|---|---|---|---|---|
| Suspected AMI admissions | | | | | | | |
| Aspirin | 16/189 (8.4) | 28/124 (22.5) | 44/313 (14) | 15/161 (9.3) | 14/66 (21.2) | 29/227 (12.7) | 1.17, 0.58 (0.66–2.07) |
| Beta-blocker | 56/189 (29.6) | 38/124 (30.6) | 94/313 (30) | 30/161 (18.6) | 20/66 (30.3) | 50/227 (22) | 1.44, 0.11 (0.91–2.30) |
| Suspected AMI admissions with confirmed AMI | | | | | | | |
| Aspirin | 2/49 (4) | 7/56 (12.5) | 9/105 (8.5) | 1/48 (2) | 2/27 (7.4) | 3/75 (4) | 2.27, 0.27 (0.52–9.85) |
| Beta-blocker | 5/49 (10.2) | 11/56 (19.6) | 16/105 (15.2) | 7/48 (14.5) | 5/27 (18.5) | 12/75 (16) | 0.95, 0.9 (0.41–2.20) |

ACS = acute coronary syndrome; AMI = acute myocardial infarction.

Generalized estimating equation analyses: OR (odds ratio), CI (confidence interval).

Finally, analyses comparing compliance with evidence-based quality-of-care indicators for the AMI patients admitted prior to the implementation of the Admission Advisor were conducted to investigate the effect of the CPOE-based discharge-planning tool on the current study results. A comparison of early aspirin and beta-blocker use before and after the implementation of the discharge-planning tool, restricted to AMI patients admitted before the implementation of the Admission Advisor, indicates that implementation of the discharge-planning tool yielded a significant increase in beta-blocker use (p = 0.003 by χ2 test) within the first 24 hours of admission.

Discussion

Leape33 previously identified important steps that can significantly reduce the risk of medical errors. These include reducing reliance on memory, improving access to information, standardizing care, and training. Care provider order entry systems offer a good platform on which to develop interventions that implement these steps and reduce errors. The AHA guidelines for AMI recommend the use of aspirin and beta-blockers within the first 24 hours of admission. Moreover, early aspirin and beta-blocker prescription has been mandated by JCAHO as part of its AMI core quality measures unless it is contraindicated (e.g., active peptic ulcer disease or allergy for aspirin; severe asthma or chronic obstructive pulmonary disease for beta-blockers). In an effort to standardize care and prevent errors of omission, we instituted a CPOE-based decision-support tool that improves admission practices by integrating best of care procedure/diagnosis-specific order sets into the admission workflow. In this study, we evaluated the effectiveness of this intervention on the use of the ACS order set and the association of its use with adherence to two quality-of-care indicators for AMI: aspirin use and beta-blocker use within 24 hours of admission.

The implementation of the Admission Advisor yielded a significant increase in ACS order set use for patients who satisfied the criterion for suspected AMI at the time of admission to VUH cardiology units. Furthermore, use of the ACS order set was associated with a significant increase in recommended early aspirin ordering and a nonsignificant increase in early beta-blocker ordering. For confirmed AMI discharges that satisfied the criterion for suspected AMI at the time of admission, there was a nonsignificant increase in ACS order set use and a nonsignificant increase, with a trend toward significance, in early aspirin ordering associated with ACS order set use. It is possible that the increases observed for these parameters did not achieve significance due to the relatively small cohort sizes in the current study.

These results suggest that the intervention had a significant impact on all admissions that satisfied the criteria for a suspected AMI. Although the results show similar trends for the subpopulation of suspected AMI patients who were later discharged with confirmed AMI, the overall impact was not significant, possibly because the sample size for suspected AMI admissions with confirmed discharge diagnosis of AMI was too small to detect a significant effect.

Even though implementation of the Admission Advisor yielded a significant increase in ACS order set use, pre- and postintervention differences with respect to early therapy were not significant for either aspirin or beta-blockers in either cohort considered in this study. The intervention evaluated in this study did not have a direct measurable effect on compliance with recommended early aspirin and beta-blocker ordering behavior. We suspect these results were partly due to the relatively low rates of ACS order set use even after the implementation of the Admission Advisor and to the high rates of compliance with aspirin and beta-blockers before the implementation of the Admission Advisor. Although the use of the ACS order set was significantly increased after the implementation of the Admission Advisor, postintervention ACS order set use rates remained relatively low (64% to 70%). Given the existing opportunity to further improve ACS order set use, we are currently investigating some alternative strategies to improve the effectiveness of the Admission Advisor. One of these strategies is to capture the significant diagnoses and conditions of the admitted patients (e.g., problem list) in a codified format, to monitor aspirin and beta-blocker use for all eligible patients, and to periodically prompt the physicians during order entry based on the assessed status of compliance with aspirin and beta-blockers for the identified patients.

A number of paper-based quality improvement studies for AMI evaluated the impact of incorporating guidelines into care processes by creating tools that reinforced adherence to evidence-based practices.6,7,8 Mehta et al.6,7 instituted an AMI quality improvement tool that targeted collaborative care focusing on physicians and nurses. They reported that use of AMI standard admission orders was correlated with a significant improvement in early administration of aspirin and beta-blockers and early measurement of lipids. In another study, Biviano et al.8 demonstrated increased adherence to AMI quality-of-care indicators after the implementation of a paper-based chest pain protocol accompanied by a set of standardized orders for patients presenting with ACS. These tools were all paper based and included a preprinted standardized AMI admission orders sheet as part of the quality improvement initiative.

Additional studies have employed template-based approaches similar to the present study to increase compliance with guidelines.21,34 Lobach et al.21 integrated clinical guidelines for the long-term management of diabetes via a computer-generated patient encounter form and demonstrated an increase in physician compliance with the targeted guidelines. Henry et al.34 incorporated clinical guidelines into care processes by representing guidelines as structured encoded text organized into the online patient encounter template. Template-based approaches require the clinician to take extra cognitive steps in applying a particular guideline to the current situation. Therefore, they can be applied to complex guidelines for which automated versions are very difficult to implement.35 In a CPOE-based intervention that targeted the increased use of preventive care for hospitalized patients, Dexter et al.16 found that automated reminders increased ordering rates for prophylactic aspirin at the time of discharge for patients with coronary artery disease.

This study has several limitations. The before-and-after design has the flaws inherent to any study design with historical controls. Also, even though the GEE analyses adjusted for the dependency among repeated patient-level outcome measures for each admitting physician, the lack of a one-to-one mapping between physicians and patients in an inpatient environment did not allow adjusting for every physician who entered orders during the study period. A team of physicians, including interns, residents, fellows, and attending physicians, enters orders for an individual patient during the hospital stay. For example, even though it is the admitting physician who is exposed to the intervention (i.e., only one physician admits the patient), it is not always the admitting physician who enters aspirin and beta-blocker orders later during the hospital stay. However, the design of the Admission Advisor allows the admitting physician's choice of order set to have a downstream effect on subsequent users by setting the selected order set as the default order set. Therefore, we expect that the action of the admitting physician will influence the behavior of the other physicians who subsequently enter orders for the same patient, which supports GEE adjustment for the admitting physician as a valid model for the analysis of the data. Another limitation of this study is that the postintervention period included July, the month when new house staff members enter the residency program. Finally, cardiology unit-based case managers assign all cardiology patients to a diagnosis/procedure-specific pathway and ensure that the care delivered to the patients complies with the interventions outlined for the pathway in a timely and cost-effective fashion. Therefore, it is possible that their actions decreased the relative effect of the intervention on compliance with the quality measures considered in this study.

Another factor that we believe contributed to the absence of a significant pre/post difference in early aspirin and beta-blocker use is the institutional implementation of an AMI discharge-planning tool, overlapping in scope with the Admission Advisor, eight months before the Admission Advisor was implemented. The discharge-planning tool was designed both to improve discharge processes and to document the level of compliance with JCAHO-mandated discharge quality measures for AMI patients. It addressed therapeutic actions related to those targeted by the Admission Advisor, including the promotion, when appropriate, of aspirin and beta-blocker prescription at discharge. A comparison of early aspirin and beta-blocker ordering before and after implementation of the discharge-planning tool demonstrated significantly higher early beta-blocker ordering after its implementation. Aspirin ordering within the first 24 hours of admission was high in the period before the discharge-planning tool and remained so afterward. Given these high compliance rates during the preintervention period of our study, a much larger patient population would be required to demonstrate significant pre/post differences in early aspirin and beta-blocker use.
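The sample-size argument above can be illustrated with a rough calculation. The following is a minimal sketch, not the analysis used in this study: it applies the standard normal-approximation formula for comparing two independent proportions, and the function name `patients_per_group` and all compliance rates shown are hypothetical values chosen only for illustration. It also ignores clustering by admitting physician, so it likely understates what a GEE-based analysis would need.

```python
from math import ceil, sqrt
from statistics import NormalDist


def patients_per_group(p_before: float, p_after: float,
                       alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate patients needed in each period (pre and post).

    Normal-approximation formula for a two-sided test comparing two
    independent proportions. Clustering by physician is ignored.
    """
    if p_before == p_after:
        raise ValueError("the two proportions must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    p_bar = (p_before + p_after) / 2               # pooled proportion
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_before * (1 - p_before) + p_after * (1 - p_after))
    ) ** 2
    return ceil(numerator / (p_before - p_after) ** 2)


# Hypothetical compliance rates (assumptions, not study data).
low_baseline = patients_per_group(0.60, 0.80)   # ample room to improve
high_baseline = patients_per_group(0.85, 0.90)  # little room to improve
print(low_baseline, high_baseline)
```

Under these assumed rates, the high-baseline scenario needs several times as many patients per period as the low-baseline scenario, because a high preintervention rate leaves only a small achievable difference to detect.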

A final consideration influencing the current study is the lack of use of the CPOE system in the Emergency Department at the time of the study. This affects reported study results in two ways. First, the time the patient spends in the Emergency Department represents the earliest phase of hospital care, and, ideally, the Admission Advisor interventions should have been available during that time but were not. Second, it is possible that patients who had “obvious” clinical and laboratory signs of AMI while in the Emergency Department received aspirin there and that clinicians on the admitting cardiology ward were aware of this and did not give additional aspirin until at least 24 hours had elapsed (making their behavior appear to be “noncompliant”). The latter situation would artificially decrease the reported rates of aspirin administration in the current study.

In summary, a CPOE-based intervention designed to improve admission practices by prompting physicians to initiate disease/diagnosis-specific order sets at the time of admission was evaluated. It resulted in a significant increase in ACS order set use for all suspected AMI admissions and a nonsignificant increase for the confirmed AMI patients. Moreover, when the ACS order set was used, patients had a higher chance of receiving aspirin and beta-blockers within the first 24 hours of admission. The before-and-after evaluation, however, did not show a direct measurable impact of the intervention on early therapy. Provision of just-in-time information at the time and place that it is needed has been among the most useful applications of computerized decision support. Such applications result in enhanced safety and increased efficiency by significantly reducing the chance of error caused by reliance on memory, limited access to information, and a lack of care standardization and training. Despite the proven effectiveness of computerized decision support, its acceptance by providers for optimal compliance remains a challenge.

This research was supported by VUMC, the Agency for Healthcare Research and Quality, the Centers for Education and Research in Therapeutics cooperative agreement (grant no. HS 1-0384), and the National Library of Medicine (grant nos. R01LM06920 and R01LM07995).

The authors acknowledge the technical assistance provided by Ty Webb, Grace Brennan, Martha Newton, Rick Stotler, and Dario Guise. They thank Irene Feurer, Rafe Donahue, and Dominik Aronsky for their insightful suggestions for data analyses and are also grateful to Penny Vaughan, Jeannie Byrd, and Janis Smith for their assistance with the implementation.

Disclosure: The WizOrder Care Provider Order Entry (CPOE) system described in this manuscript was developed by Vanderbilt University Medical Center faculty and staff within the School of Medicine and Informatics Center beginning in 1994. In May 2001, Vanderbilt University licensed the product to a commercial vendor who is modifying the software. Drs. Miller and Waitman have been recognized by Vanderbilt as contributing to the authorship of the WizOrder software and have received and will continue to receive royalties from Vanderbilt under the University's intellectual property policies. While these involvements could potentially be viewed as a conflict of interest with respect to the submitted manuscript, the authors have taken a number of concerted steps to avoid an actual conflict, and those steps have been disclosed to the Journal during the editorial review.

Footnotes

Acute coronary syndrome (ACS) is the clinical term that covers a group of clinical conditions associated with acute myocardial ischemia (substernal chest pain suggestive of insufficient blood supply to the heart muscle). The spectrum of clinical conditions covered under ACS ranges from unstable angina to AMI. The initial presentation of both is similar, and additional tests are required to determine which patients had AMI.

References

- 1. Park RE, Brook RH, Kosecoff J, et al. Explaining variations in hospital death rates. Randomness, severity of illness, quality of care. JAMA. 1990;264:484–90.

- 2. Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: National Academy Press, 2001.

- 3. Critical pathways for management of patients with acute coronary syndromes: an assessment by the National Heart Attack Alert Program. Am Heart J. 2002;143:777–89.

- 4. Pearson SD, Goulart-Fisher D, Lee TH. Critical pathways as a strategy for improving care: problems and potential. Ann Intern Med. 1995;123:941–8.

- 5. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342:1317–22.

- 6. Mehta RH, Montoye CK, Gallogly M, et al. Improving quality of care for acute myocardial infarction: the Guidelines Applied in Practice (GAP) Initiative. JAMA. 2002;287:1269–76.

- 7. Mehta RH, Das S, Tsai TT, Nolan E, Kearly G, Eagle KA. Quality improvement initiative and its impact on the management of patients with acute myocardial infarction. Arch Intern Med. 2000;160:3057–62.

- 8. Biviano AB, Rabbani LE, Paultre F, et al. Usefulness of an acute coronary syndrome pathway to improve adherence to secondary prevention guidelines. Am J Cardiol. 2003;91:1248–50.

- 9. Kuperman GJ, Gibson RF. Computer physician order entry: benefits, costs, and issues. Ann Intern Med. 2003;139:31–9.

- 10. Chertow GM, Lee J, Kuperman GJ, et al. Guided medication dosing for inpatients with renal insufficiency. JAMA. 2001;286:2839–44.

- 11. Overhage JM, Tierney WM, Zhou X-HA, McDonald CJ. A randomized trial of “corollary orders” to prevent errors of omission. J Am Med Inform Assoc. 1997;4:364–75.

- 12. Evans RS, Pestotnik SL, Classen DC, et al. A computer-assisted management program for antibiotics and other antiinfective agents. N Engl J Med. 1998;338:232–8.

- 13. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280:1311–6.

- 14. Payne TH, Hoey PJ, Nichol P, Lovis C. Preparation and use of preconstructed orders, order sets, and order menus in a computerized provider order entry system. J Am Med Inform Assoc. 2003;10:322–9.

- 15. Achtmeyer CE, Payne TH, Anawalt BD. Computer order entry system decreased use of sliding scale insulin regimens. Methods Inf Med. 2002;41:277–81.

- 16. Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001;345:965–70.

- 17. McDonald CJ, Hui SL, Tierney WM. Effects of computer reminders for influenza vaccination on morbidity during influenza epidemics. MD Comput. 1992;9:304–12.

- 18. Overhage JM, Tierney WM, McDonald CJ. Computer reminders to implement preventive care guidelines for hospitalized patients. Arch Intern Med. 1996;156:1551–6.

- 19. Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc. 1996;3:399–409.

- 20. Pestotnik SL, Classen DC, Evans RS, Burke JP. Implementing antibiotic practice guidelines through computer-assisted decision support: clinical and financial outcomes. Ann Intern Med. 1996;124:884–90.

- 21. Lobach DF, Hammond WE. Computerized decision support based on a clinical practice guideline improves compliance with care standards. Am J Med. 1997;102:89–98.

- 22. Tierney WM, McDonald CJ, Martin DK, Rogers MP. Computerized display of past test results. Effect on outpatient testing. Ann Intern Med. 1987;107:569–74.

- 23. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. N Engl J Med. 1990;322:1499–504.

- 24. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA. 1993;269:379–83.

- 25. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106:144–50.

- 26. Geissbuhler A, Miller RA. A new approach to the implementation of direct care-provider order entry. Proc AMIA Annu Fall Symp. 1996:689–93.

- 27. Bansal P, Aronsky D, Talbert D, Miller RA. A computer based intervention on the appropriate use of arterial blood gas. Proc AMIA Symp. 2001:32–6.

- 28. Heusinkveld J, Geissbuhler A, Sheshelidze D, Miller R. A programmable rules engine to provide clinical decision support using HTML forms. Proc AMIA Symp. 1999:800–3.

- 29. Starmer JM, Talbert DA, Miller RA. Experience using a programmable rules engine to implement a complex medical protocol during order entry. Proc AMIA Symp. 2000:829–32.

- 30. Sanders DL, Miller RA. The effects on clinician ordering patterns of a computerized decision support system for neuroradiology imaging studies. Proc AMIA Symp. 2001:583–7.

- 31. Joint Commission on Accreditation of Healthcare Organizations (JCAHO) hospital core measures for Acute Myocardial Infarction. Available at: http://www.jcaho.org/pms/core+measures/information+on+final+specifications.htm/. Accessed March 22, 2004.

- 32. Butler J, Speroff T, Arbogast P, et al. Improved compliance with quality measures at hospital discharge with a computerized provider order entry system. Am Heart J. In press.

- 33. Leape LL. Error in medicine. JAMA. 1994;272:1851–7.

- 34. Henry SB, Douglas K, Galzagorry G, Lahey A, Holzemer WL. A template-based approach to support utilization of clinical practice guidelines within an electronic health record. J Am Med Inform Assoc. 1998;5:237–44.

- 35. Tierney W, Overhage J, Takesue B, et al. Computerizing guidelines to improve care and patient outcomes: the example of heart failure. J Am Med Inform Assoc. 1995;2:316–22.