Abstract

The Multi-Country Evaluation of the Integrated Management of Childhood Illness (IMCI) includes studies of the effectiveness, cost, and impact of the IMCI strategy in Bangladesh, Brazil, Peru, Tanzania, and Uganda.

Seven questions were addressed when the evaluation was designed: who would be in charge, through what mechanisms IMCI could affect child health, whether the focus would be efficacy or effectiveness, what indicators would be measured, what types of inference would be made, how costs would be incorporated, and what elements would constitute the plan of analysis.

We describe how these questions were answered, the challenges encountered in implementing the evaluation, and the 5 study designs. The methodological insights gained can improve future evaluations of public health programs.

Integrated Management of Childhood Illness (IMCI) is a strategy for improving child health and development. The IMCI strategy was developed in a stepwise fashion. It began with a set of case-management guidelines for sick children seen in first-level health facilities. Over time, the strategy expanded to include a range of guidelines and interventions addressing child health needs at household, community, and referral levels. A detailed review of the development and evaluation of the case-management guidelines is available elsewhere.1–3

IMCI has 3 components, each of which is adapted in countries on the basis of local epidemiology, health system characteristics, and culture. One component focuses on improving the skills of health workers through training and reinforcement of correct performance. Training is based on a set of adapted algorithms that guide the health worker through a process of assessing signs and symptoms, classifying the illness on the basis of treatment needs, and providing appropriate treatment and education of the child’s caregiver. The IMCI guidelines include identifying malnutrition and anemia, checking vaccination status, providing nutritional counseling, and communicating effectively with mothers. A second component of IMCI aims to improve health system supports for child health service delivery, including the availability of drugs, effective supervision, and the use of monitoring and health information system data. The third component focuses on a set of family practices that are important for child health and development and encourages the development and implementation of community- and household-based interventions to increase the proportions of children exposed to these practices.

The ministries of health in Tanzania and Uganda began implementing IMCI in 1996. In the 8 years since then, over 80 additional countries have adopted the strategy and gained significant experience in its implementation.1,4

Evaluation received special attention throughout the development and introduction of IMCI. The strategy includes numerous specific interventions, most of which have been rigorously tested in controlled trials.3 Examples include antibiotic treatment for pneumonia, oral rehydration therapy for diarrhea, antimalarials, immunizations, breastfeeding counseling, anemia diagnosis and treatment, and vitamin A supplementation. Nevertheless, there was a need to evaluate the strategy as a whole as an approach to the delivery of these proven child health interventions.

Planning for the Multi-Country Evaluation of IMCI Effectiveness, Cost and Impact (MCE) began in 1997. The objectives were to:

evaluate the impact of the IMCI strategy as a whole on child health, including child mortality, child nutritional status, and family behaviors

evaluate the cost-effectiveness of the IMCI strategy

document the process and intermediate outcomes of IMCI implementation, as a basis for improved planning and implementation of child health programs

The Department of Child and Adolescent Health and Development of the World Health Organization (WHO) coordinates the evaluation.

This article has 3 aims. In the first part, we describe the early design decisions as well as their implications for study implementation. In the second part, we explain the challenges encountered in implementing the evaluation, how each was addressed, and the 5 study designs currently being implemented. The conclusions section summarizes the implications of this work for the design of large-scale evaluations of public health programs.

We have used the simple past tense throughout to improve readability. In fact, many of the MCE activities described have already been completed, some are under way, and the remainder are planned for the future.

EARLY DESIGN DECISIONS

We addressed 7 basic questions when designing the evaluation: (1) Who will be in charge? (2) Through what mechanisms will IMCI affect child health? (3) Will the evaluation focus on efficacy, effectiveness, or something in between? (4) What indicators will be measured? (5) What type of inference is required? (6) How will costs be incorporated into the design? and finally (7) What will be the key elements in the MCE plan of analysis? This section describes how the MCE responded to each of these questions.

Who Will Be in Charge of the Evaluation?

As described above, there was broad consensus on the need for an impact evaluation of IMCI. From the outset, however, the need for a clear division of roles between those responsible for developing and implementing IMCI and those responsible for evaluating it was recognized. WHO therefore established an external MCE Technical Advisory Group and charged it with making recommendations on evaluation design, implementation, and the analysis and interpretation of results. All of the MCE advisers who are authors of this article (C. G. V., J.-P. H., P. V., R. E. B.) were independent of IMCI implementation in the study sites; 3 other individuals who subsequently joined the Technical Advisory Group (D. de Savigny, L. Mgalula, J. Armstrong Schellenberg) had limited (and indirect) involvement in the implementation of IMCI in Tanzania and were therefore able to contribute in important ways to the measurement and interpretation of contextual factors and the analysis of the intermediate results.

In all sites, principal investigators were national scientists. In 2 of the 5 sites, the MCE selected principal investigators on a competitive basis, with input from the ministry of health. In the other 3 sites, the principal investigators were senior researchers with previous public health research experience. An MCE publications and data use team was established, and guidelines were developed for review, clearance, and responses to requests for specific data and analyses. MCE principal investigators had final authority over the publication of the evaluation results, but consultation with the ministry of health, WHO, and the MCE technical advisers was strongly recommended to ensure that perspectives arising from experience in IMCI implementation and the broader MCE evaluation were incorporated appropriately.

Advisers and MCE investigators worked together to develop mechanisms that would ensure substantive involvement by those responsible for implementing IMCI. This involvement increased the likelihood that MCE results would be relevant to the needs of program decisionmakers and that they would be understood, accepted, and acted on by child health staff. Ministries of health and the staff of WHO, UNICEF, and bilateral agencies supporting IMCI implementation in the MCE sites (e.g., the US Agency for International Development and the Department for International Development, UK) at country, regional, and headquarters levels were involved in planning the evaluation, selecting sites and investigators, commenting on study design and instruments, reviewing preliminary results and participating in their interpretation, and developing feedback mechanisms for those involved in implementing IMCI. A list of implementation partners for each of the MCE sites is presented in the next section.

In general, this approach worked well. There were numerous instances in which the guidelines on roles and responsibilities were used as the basis for resolving tensions between program staff and evaluators, both within sites and for the MCE as a whole. Intermediate MCE results have been used by child health staff in concrete ways to improve their policies and program delivery strategies. At the same time, the MCE was designed and carried out by evaluators not involved in IMCI implementation to ensure that the resulting reports and publications were objective.

Through What Mechanisms Will IMCI Affect Child Health?

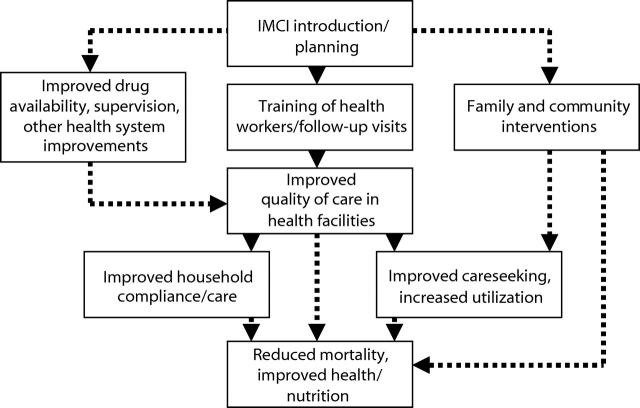

IMCI is a complex strategy, incorporating numerous interventions that affect child health through a variety of pathways. Designing the evaluation required the development of a model that defined how the introduction of IMCI was expected to lead to changes in child mortality, health, and nutrition (these changes are referred to as “impact” within the MCE). Figure 1 shows a simplified version of the model; the full model expands each of the boxes to provide details about expected changes. Further information is available at the MCE Web site.5 This conceptual model was used as the basis for establishing site-selection criteria, defining the indicators to be measured, developing the data collection tools, and estimating the magnitude of expected impact and the associated sample sizes.

FIGURE 1—

Outline of the Integrated Management of Childhood Illness (IMCI) impact model.

Efficacy, Effectiveness, or Something in Between?

Evaluations of large-scale interventions may involve different degrees of control by the research team.6 Evaluations of program efficacy are conducted when interventions are delivered through health services in relatively restricted areas, under close supervision. They answer the question of whether—given ideal circumstances—the intervention has an effect. On the other hand, evaluations of program effectiveness assess whether the interventions have an effect under the “real-life” circumstances faced by health services. Few public health programs are implemented in ways that can support evaluations that are either entirely “efficacy” or entirely “effectiveness.” This dimension of program evaluation should therefore be considered as a continuum.

Within the MCE, the advisers agreed to focus on sites where ministries of health were implementing IMCI under routine conditions, evaluating the effectiveness of the strategy rather than its efficacy. They also agreed, however, to include in the MCE design one site in which program implementation fell closer to the efficacy end of the continuum. Effectiveness was emphasized because earlier studies had demonstrated the efficacy of the individual interventions within IMCI. In addition, decisionmakers’ questions about IMCI tended to focus on the extent to which IMCI could be implemented given the health system constraints in most low- and middle-income countries and its effectiveness under those conditions.

What Indicators Will Be Measured?

The stepwise approach proposed by Habicht et al.7 was used to guide the evaluation design. Table 1 shows the main categories of indicators used in the MCE.

TABLE 1—

A Stepwise Approach to Evaluation Indicators Used in the Multi-Country Evaluation of IMCI Effectiveness, Cost and Impact (MCE)

| Indicator category | Question | Example of indicators |
| --- | --- | --- |
| Provision | Are the services available? | Number of health facilities with health workers trained in IMCI per 100 000 population |
| | Are they accessible? | Proportion of the population within 30 minutes’ travel time of a health facility with IMCI activities |
| | Is their quality satisfactory? | Proportion of health workers with appropriate case-management skills |
| Utilization | Are services being used? | Number of attendances of under-5s per 1000 children |
| Coverage | Is the target population being reached? | Proportion of under-5s in population who were seen by a trained health worker |
| Impact | Were there improvements in disease patterns or health-related behaviors? | Time trends in childhood deaths |
| | | Improvements in breastfeeding indicators or in health care–seeking behaviors |

Note. IMCI = Integrated Management of Childhood Illness; under-5 = child younger than 5 years old.
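To make the units in Table 1 concrete, the sketch below computes the example provision, utilization, and coverage indicators from district-level counts. The `DistrictCounts` fields and `table1_indicators` function are hypothetical names chosen for illustration, not MCE data structures, and the numbers in the usage note are invented.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class DistrictCounts:
    """Hypothetical counts for one district; field names are illustrative."""
    population: int                     # total population
    under5_population: int              # children younger than 5 years
    facilities_with_imci_staff: int     # facilities with >= 1 IMCI-trained worker
    population_within_30_min: int       # people within 30 min of an IMCI facility
    workers_assessed: int               # health workers observed in a facility survey
    workers_adequate: int               # ...of whom had appropriate case-management skills
    under5_attendances: int             # under-5 attendances in the reference period
    under5_seen_by_trained_worker: int  # under-5s seen by a trained health worker


def table1_indicators(d: DistrictCounts) -> Dict[str, float]:
    """Example indicators from Table 1, ordered provision -> utilization -> coverage."""
    return {
        # Provision: availability, accessibility, quality
        "facilities_per_100000": d.facilities_with_imci_staff * 100_000 / d.population,
        "prop_within_30_min": d.population_within_30_min / d.population,
        "prop_workers_adequate": d.workers_adequate / d.workers_assessed,
        # Utilization
        "attendances_per_1000_under5": d.under5_attendances * 1000 / d.under5_population,
        # Coverage
        "prop_under5_seen_by_trained": d.under5_seen_by_trained_worker / d.under5_population,
    }
```

For a hypothetical district of 200 000 people with 12 facilities staffed by IMCI-trained workers, the first indicator is 6 facilities per 100 000 population.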

A logical order leads from provision to impact indicators. Adequate provision means that the services are available and accessible to the target population and that the quality of services is appropriate. Once services are available, the population makes use of them, in this case by bringing their children to health care services. Utilization then results in a specific level of population coverage. Finally, the achieved population coverage may lead to changes in behaviors or an impact on health. Any important shortcomings at the early stages of this chain will result in failures in the later indicators.

The MCE emphasized the assessment of IMCI impact. Nonetheless, WHO and the advisers agreed from the start that the evaluation should also assess provision, utilization, and population coverage indicators. If an impact was demonstrated, this approach would document the underlying steps that led to success and contribute to the adoption and successful implementation of IMCI in other settings. If no impact was documented, the stepwise approach would reveal where and why IMCI failed and identify problems that needed to be addressed.

The stepwise approach was also cost-effective. Complex and costly impact evaluations were carried out within an MCE site only if simpler evaluations of the preceding steps showed that IMCI implementation was progressing well and was associated with the expected intermediate outcomes (Figure 1). Provision and utilization were assessed by using routine information systems or by surveying health facilities, whereas population coverage and impact usually required field data collection with important cost implications. The stepwise approach therefore resulted in substantial savings: where relatively simple evaluations showed that more time was needed to expand the provision of IMCI interventions, the more costly population coverage or impact studies were deferred.
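The gating logic described above can be sketched as a simple sequential check. This is a hypothetical helper, not an MCE tool: each step's adequacy judgment is assumed to be supplied by the evaluators, and the function only reports which step should be assessed or strengthened next.

```python
from typing import Dict, Optional

# Order of steps in the stepwise approach (Table 1).
STEPS = ("provision", "utilization", "coverage", "impact")


def next_evaluation_step(results: Dict[str, Optional[bool]]) -> str:
    """Return the next action given per-step judgments.

    `results` maps a step to True (judged adequate), False (judged
    inadequate), or None/missing (not yet assessed). A later, costlier
    step is only recommended once every earlier step is adequate.
    """
    for step in STEPS:
        status = results.get(step)
        if status is None:
            return f"assess {step}"
        if status is False:
            return f"strengthen {step} before assessing later steps"
    return "all steps adequate"
```

For example, a site where provision is adequate but utilization is not would be told to strengthen utilization rather than fund a coverage survey.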

What Type of Inference Is Required?

This question refers to the types of data and level of certainty that decisionmakers need to act on evaluation findings.7,8 Adequacy evaluations ask whether changes in indicators (be they provision, utilization, population coverage, or impact indicators) met the initial goals for introduction of the intervention. If there were no explicit goals, the question is whether trends moved in the expected direction and were of the expected magnitude. Plausibility evaluations go a step further to ask whether the observed changes are likely to be due to the intervention. These require a control group and the ability to rule out alternative explanations for the trends. Finally, probability evaluations use randomized designs to determine the effect of the intervention. For the reasons discussed in our companion article,8 the MCE emphasized plausibility-type evaluations, but the advisers agreed that 1 study with a probability design should be included.
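A minimal sketch of the two inference types most used in the MCE follows. The adequacy check mirrors the definition above. For the plausibility contrast we use a difference-in-differences comparison between IMCI and comparison areas; that is our illustrative choice of contrast, not necessarily the MCE's analytic method, and it does not by itself rule out the alternative explanations a plausibility evaluation must address.

```python
from typing import Optional


def adequacy_met(baseline: float, followup: float, goal: Optional[float] = None,
                 expected_change: float = 0.0, higher_is_better: bool = True) -> bool:
    """Adequacy: was the goal met? With no explicit goal, did the indicator
    move in the expected direction by at least the expected magnitude?"""
    if goal is not None:
        return followup >= goal if higher_is_better else followup <= goal
    change = followup - baseline if higher_is_better else baseline - followup
    return change > 0 and change >= expected_change


def difference_in_differences(int_before: float, int_after: float,
                              cmp_before: float, cmp_after: float) -> float:
    """Plausibility-style contrast: change in IMCI areas minus change in
    comparison areas. For a mortality indicator, a negative value means a
    larger decline in the IMCI areas."""
    return (int_after - int_before) - (cmp_after - cmp_before)
```

With hypothetical under-5 mortality falling from 150 to 120 per 1000 in IMCI areas and from 150 to 140 in comparison areas, the contrast is -20 per 1000.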

An essential element in the MCE design was the focus on demonstrating adequacy in each site, even when the design could also support plausibility or probability inferences. This was because the adequacy approach answered the question of whether goals for IMCI had been achieved, while plausibility and probability approaches were concerned with the existence of an effect of IMCI. Child health decisionmakers were posing both types of questions at the time the MCE was designed: (1) How well is IMCI being implemented? and (2) Where IMCI is implemented, what is its effectiveness, and at what cost? The relative emphasis on these 2 questions varied, both over time and across the intended audiences for the evaluation results.

How Will Costs Be Incorporated Into the Design?

Decisionmakers were demanding information on the costs and cost-effectiveness of IMCI. The advisers and WHO were faced with 2 major decisions. First, should the MCE measure only the costs of health services provision or all associated costs, including those incurred by society broadly and households specifically? Second, cost-effectiveness relative to what? No child health services? Existing services? “High-quality” services based on existing disease-specific programs such as those targeting diarrhea and acute respiratory infections? The MCE results and conclusions would vary widely depending on how the evaluation addressed these 2 questions. The final MCE design adopted an economic methodology that measured all costs associated with child health in districts implementing the IMCI strategy. These costs were compared with all costs associated with child health in districts that were not implementing the IMCI strategy at the time of the evaluation. Details of the economic evaluation are available elsewhere.9

The overall objectives of the MCE costing methodology were defined as follows:

To estimate the total costs of providing IMCI in a district; that is, the full costs of services to children aged younger than 5 years using IMCI. These costs were estimated from the perspective of society as a whole. All costs were included, regardless of the source. This allowed a generalized cost-effectiveness analysis.

To estimate the additional (incremental) costs of introducing and running IMCI from the societal perspective, that is, the resources required in addition to those already used to provide child health care in each setting. This allowed a traditional incremental cost-effectiveness analysis, asking whether the additional benefits over current practice justified the additional resources.

To provide the MCE sites with information about the financial expenditures involved in introducing and delivering IMCI services in their settings.
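The first two objectives correspond to two standard ratios, sketched below with hypothetical names and invented figures. The generalized analysis divides the total societal cost by the total effect (with effect measured against a null scenario), while the incremental ratio divides the difference in costs by the difference in effects between IMCI and comparison districts.

```python
def average_cost_effectiveness(total_cost: float, total_effect: float) -> float:
    """Generalized CEA: total societal cost of child health services in an
    IMCI district per unit of effect (effect measured against a null scenario)."""
    return total_cost / total_effect


def incremental_cost_effectiveness(cost_imci: float, cost_comparison: float,
                                   effect_imci: float, effect_comparison: float) -> float:
    """ICER: additional cost per additional unit of effect versus current practice."""
    delta_effect = effect_imci - effect_comparison
    if delta_effect == 0:
        raise ValueError("no incremental effect; the ICER is undefined")
    return (cost_imci - cost_comparison) / delta_effect
```

If, hypothetically, an IMCI district cost 1 200 000 currency units and averted 400 deaths, while a comparison district cost 1 000 000 and averted 300, the average ratio would be 3000 per death averted and the incremental ratio 2000 per additional death averted.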

What Will Be the Central Elements of the MCE Plan of Analysis?

A central challenge in designing the MCE was anticipating the various types and levels of analysis that would be required to achieve the objectives. The initial proposals from each site included a plan of analysis tailored to its specific design and incorporating plans for the measurement of the standard MCE indicators. The results generated from these site-specific plans formed the basis for qualitative cross-site analyses designed to shed further light on the cost-effectiveness and impact of IMCI.

The core analyses involved the development of analytic methods and approaches for the evaluation of costs and cost-effectiveness, quality of care, and equity. Small technical working groups, each involving at least 1 MCE technical adviser with pertinent expertise and 1 MCE site investigator, were established to address each of these areas. For every area except equity, the MCE team based at WHO recruited a technical consultant whose primary responsibility was to coordinate the work in that area and to provide ongoing assistance to the site investigators. Once relevant data had been collected and preliminary analyses completed, workshops were held to apply various analytic approaches and agree on a standard plan of analysis.

Supplemental analyses, defined as important but not directly related to the original objectives of the MCE, developed over time. These included analyses driven by the needs of decisionmakers (e.g., the financial costs of implementing IMCI in Tanzania),10 analyses to address specific methodological questions (e.g., application of various methods of constructing wealth indices11–13 based on data about household possessions and characteristics of a family’s house available from the MCE household surveys), and other types of opportunistic analyses responding to questions that could be addressed through the MCE data sets (e.g., improving the correct use of antimicrobials through IMCI case-management training).14
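As an illustration of how a wealth index can be built from household possession data, the sketch below scores households on the first principal component of standardized 0/1 asset indicators, computed by power iteration on the correlation matrix. Principal components analysis is one widely used approach; it is our illustrative choice here, not necessarily one of the methods the MCE compared, and all names are hypothetical.

```python
import math
from typing import List


def standardize(columns: List[List[float]]) -> List[List[float]]:
    """Z-score each asset column; constant columns carry no information and are dropped."""
    out = []
    for col in columns:
        n = len(col)
        mean = sum(col) / n
        sd = math.sqrt(sum((x - mean) ** 2 for x in col) / n)
        if sd > 0:
            out.append([(x - mean) / sd for x in col])
    return out


def first_component(z: List[List[float]], iterations: int = 200) -> List[float]:
    """Leading eigenvector of the correlation matrix, found by power iteration."""
    k, n = len(z), len(z[0])
    corr = [[sum(a * b for a, b in zip(z[i], z[j])) / n for j in range(k)] for i in range(k)]
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(corr[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # The sign of an eigenvector is arbitrary; orient it so more assets means a higher score.
    if sum(v) < 0:
        v = [-x for x in v]
    return v


def wealth_scores(asset_matrix: List[List[int]]) -> List[float]:
    """asset_matrix: one row per household, one 0/1 column per possession or housing trait."""
    z = standardize([list(col) for col in zip(*asset_matrix)])
    v = first_component(z)
    return [sum(v[j] * z[j][h] for j in range(len(z))) for h in range(len(asset_matrix))]
```

Households can then be ranked by score and grouped, for example, into quintiles for equity analyses.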

IMPLEMENTATION OF THE EVALUATION

Guided by the design decisions described above, the MCE consisted of a series of independent studies with compatible designs, each tailored to the stage and characteristics of IMCI implementation in the participating country. The set of site-specific studies included those with prospective, retrospective, and mixed designs. They reflected a continuum from efficacy to effectiveness, with variable degrees of influence from the evaluation team on program implementation. Each study included a plausibility-type evaluation, regardless of whether a probability design was also present. All studies measured an identical set of indicators and, with few exceptions, used identical data collection tools. Investigators also added a limited number of site-specific indicators to respond to local characteristics and questions. Assessments of costs to providers and clients were included as an essential aspect of the evaluation. In this section, we describe how the MCE was implemented.

Selecting Countries for the MCE

Four categories of criteria were used to choose the countries for inclusion in the MCE.

Characteristics that should be present in all sites.

(1) Adequate IMCI implementation covering the 3 components of IMCI (health worker training, interventions targeting important family practices, health systems support); (2) timely IMCI implementation compatible with the time frame of the evaluation (see “Defining the Time Frame for the Evaluation” below); (3) sufficient population size covered by IMCI interventions to provide required sample sizes for the MCE; (4) availability of partners including the ministry of health, researchers, and funding agencies to support both IMCI implementation and the evaluation activities.

Characteristics that facilitated mortality measurement.

A high mortality level, to increase the likelihood of a measurable impact. Studies were also to be carried out in intermediate-mortality settings, however, where improvements in intermediate outcomes and reductions in cost could be examined even though no large effect on mortality was expected.

Characteristic important for specific study designs.

Political stability is important for prospective designs to ensure full implementation.

Characteristics in which diversity was sought among the set of studies.

(1) Region of the world—ideally, at least one study in each major region of the world; (2) Malaria prevalence—studies both in high- and low-risk areas for malaria; (3) Level of existing services and programs—studies in areas with different levels of development of health services; (4) Community organization—studies in areas where communities were poorly organized and areas with strong community programs.

Selection of countries for the MCE was more challenging and time-consuming than expected. Discussions with staff at WHO central and regional offices resulted in a rank-ordered short list of countries where the above conditions were likely to be met. Teams of MCE advisers and WHO staff made at least 1 visit to each of 12 countries. For many countries, more than 1 site visit was needed because information on eligibility criteria was not readily available. On a few occasions, the MCE commissioned small studies to gather these data. Many important lessons regarding IMCI implementation were learned in the process of conducting these country reviews.15

Defining the Time Frame for the Evaluation

Prospective evaluations must ensure that sufficient time is allowed for the intervention to affect the impact indicators. The design must take into account the time required for (a) achievement of full implementation with high population coverage, (b) the intervention to have a biological effect, and (c) measurement of the final impact indicators.

On the basis of the MCE conceptual model, Table 2 presents the assumptions used in developing the MCE studies. It suggests, for example, that at least 1 to 2 years of implementation work would be needed at the country level to move from the introduction of IMCI to population coverage with all 3 IMCI components (Figure 1) in the study area. Once population coverage was achieved, a part of the biological impact of IMCI on mortality would occur relatively quickly, but other effects (and especially those that require changes in family behaviors) might not be realized for years. Finally, sufficient time was needed to allow measurement of the impact indicators.

TABLE 2—

Time Required for Evaluating IMCI Impact on Child Mortality

| Component | Issue | Time required |
| --- | --- | --- |
| Implementation | Time required for reaching high coverage in a geographic area | No less than 1–2 years for training health workers and increasing utilization rates. |
| Biological effect | Time needed for mortality reduction | Improved case management of severe infections may lead to immediate mortality reduction. Improving care-seeking and changing behaviors related to nutrition (breastfeeding, complementary feeding) will take longer. At least 2 years should be allowed for the full impact of IMCI to be detected. |
| Impact measurement | Time needed for measuring the impact indicator | At least 1 year, if mortality surveillance is used; up to 2 years for retrospective mortality surveys. |

Note. IMCI = Integrated Management of Childhood Illness.
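Adding the phases in Table 2 gives a rough lower bound on the length of a prospective impact evaluation. In the sketch below, reading "no less than 1–2 years" as the range 1 to 2 years and treating the biological effect as a flat 2 years are our assumptions; because every entry in Table 2 is a minimum, the totals are minimum durations, not expected ones.

```python
from typing import Dict, Tuple

# (shorter reading, longer reading) in years for each phase of Table 2.
# Every value is a minimum; the readings themselves are assumptions.
PHASES: Dict[str, Tuple[int, int]] = {
    "implementation": (1, 2),
    "biological_effect": (2, 2),
    "impact_measurement": (1, 2),  # 1 with surveillance, up to 2 with retrospective surveys
}


def minimum_total_years(phases: Dict[str, Tuple[int, int]] = PHASES) -> Tuple[int, int]:
    """Sum the phase minima under the shorter and the longer reading."""
    shorter = sum(lo for lo, _ in phases.values())
    longer = sum(hi for _, hi in phases.values())
    return shorter, longer


def earliest_results(start_year: int) -> Tuple[int, int]:
    """Earliest year impact results could be available if IMCI introduction began in start_year."""
    shorter, longer = minimum_total_years()
    return start_year + shorter, start_year + longer
```

Under these readings, a study area where IMCI introduction began in 1999 would not yield impact results before 2003 to 2005.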

A larger temporal frame bounds the MCE as a whole. Decisionmakers were already posing questions about the cost-effectiveness and impact of IMCI at the time the MCE was designed. The end of 2005, a somewhat arbitrary date, was therefore set as the deadline by which all impact and cost-effectiveness results would be available. This reflects a compromise between the increased validity offered by longer-term studies and the need to support sound decisionmaking about child health intervention priorities.

Collecting Data of Different Types and at Different Levels

For IMCI to have an impact on child health, changes were needed at several different levels (Figure 1), starting at the national level and moving down to the household. The MCE needed to document IMCI implementation at all levels to understand the evaluation results on IMCI impact and cost-effectiveness.

A list of indicators was prepared that addressed essential variables at each level. At the district and national levels, MCE staff interviewed health managers and reviewed records to document IMCI implementation activities. These activities included the training of health workers, supervision, drug and vaccine supplies, equipment, and related issues. Data on district-level costs were also collected.

Documenting the quality of care at the health facility level required the development of a health facility survey tool. This tool was developed in collaboration with an interagency Working Group on IMCI Monitoring and Evaluation and was then adapted for use in the MCE. Further information about the survey tools for routine use is available at http://www.who.int/child-adolescent-health. Four of the 5 MCE sites conducted health facility surveys, involving samples of no fewer than 20 facilities. A survey team spent at least 1 day per health facility and observed health workers managing an average of 6 sick children. The performance of the local health worker was assessed by having a trained supervisor reexamine the same children; the supervisor’s findings served as the “gold standard” for IMCI case management.

The MCE developed and tested several quality of care indices for the statistical analysis of health worker performance. Surveyors interviewed mothers as they left the health facility to assess their understanding of how to continue the care of the child at home. Information was also obtained on the facility’s physical structure, equipment, drug and vaccine supplies, utilization statistics, and costs. Data on other supports for health worker performance, such as IMCI follow-up visits after training, supervision, and supervision quality (as reflected in whether the supervisory visit included the observation of health worker performance with feedback to the health worker), were collected both through interviews with the health workers and reviews of national, district, and facility records. Continuous rounds of monitoring at the health facility level were included in the MCE designs in Uganda and Bangladesh and used to support analyses of health worker performance over time and its correlates.
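One simple family of quality-of-care measures compares each health worker's management of an observed child with the supervisor's gold-standard reexamination. The sketch below computes the share of children correctly classified and correctly treated, overall and per worker. The `Observation` fields and function names are hypothetical; they are not the MCE's actual quality-of-care indices.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Observation:
    """One sick child observed in a health facility survey (hypothetical fields)."""
    worker_id: str
    worker_classification: str
    supervisor_classification: str  # gold standard from the reexamination
    worker_treatment: str
    supervisor_treatment: str


def agreement(observations: List[Observation]) -> Dict[str, float]:
    """Share of observed children whose classification and treatment matched the gold standard."""
    n = len(observations)
    classified = sum(o.worker_classification == o.supervisor_classification for o in observations)
    treated = sum(o.worker_treatment == o.supervisor_treatment for o in observations)
    return {"correct_classification": classified / n, "correct_treatment": treated / n}


def agreement_by_worker(observations: List[Observation]) -> Dict[str, Dict[str, float]]:
    """The same shares computed separately for each health worker."""
    groups: Dict[str, List[Observation]] = defaultdict(list)
    for o in observations:
        groups[o.worker_id].append(o)
    return {worker: agreement(obs) for worker, obs in groups.items()}
```

Per-worker shares of this kind could then be related to supports such as follow-up visits after training or supervision quality.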

Sample surveys of households were used to measure the MCE indicators related to care-seeking behavior, population coverage levels for selected interventions (including vaccinations, micronutrient supplementation, use of insecticide-treated bed nets to prevent malaria, and oral rehydration therapy), use of outpatient and inpatient health services, and compliance with health workers’ recommendations. If the child had used health services recently, surveyors obtained detailed information on direct and indirect costs. Demographic, socioeconomic, and environmental characteristics of the family and household were also recorded.

The household survey also collected information on recent morbidity, feeding practices (breastfeeding and use of complementary foods), and anthropometry (weight and height). In some countries, children were tested for anemia with portable hemoglobinometers.

The plausibility approach used in the MCE required collecting data on external (non-IMCI) factors that might account for observed changes in child health. The description of these contextual factors was particularly important at the time of this evaluation, when rapid changes were under way in many national health services because of political, economic, and structural reforms. The MCE therefore developed tools for the collection of information on changes in socioeconomic, demographic, environmental, and other relevant factors over the course of the evaluation. MCE investigators also documented the delivery of other child health interventions in the study area and their population coverage.

Measuring Mortality

Mortality impact was the ultimate indicator in the MCE design. Most of the impact of IMCI on mortality was expected to occur through reductions in deaths from 5 causes: pneumonia, diarrhea, malaria, measles, and malnutrition. IMCI was not likely to have an impact on early neonatal mortality because at the time of the evaluation there were no specific IMCI interventions directed at the major causes of death among children aged younger than 7 days. The 2 main mortality indicators in the study were therefore the under-5 mortality rate (probability of dying between birth and exactly 5 years of age) and the post–early-neonatal under-5 mortality rate (probability of dying between 7 days and exactly 5 years of age). Investigators also collected information on cause of death to support cause-specific analyses.
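Both indicators are probabilities, so they can be built by chaining the probabilities of surviving successive age intervals. The sketch below uses invented interval probabilities. Reading the post-early-neonatal rate as conditional on surviving the first 7 days is our assumption about the definition.

```python
from math import prod
from typing import List, Tuple

# Hypothetical probabilities of dying within each age interval, each conditional
# on being alive at the start of the interval. The first interval must be 0-6 days.
INTERVALS: List[Tuple[str, float]] = [
    ("0-6 days", 0.02),
    ("7 days to <1 year", 0.05),
    ("1 to <5 years", 0.04),
]


def under5_mortality(intervals: List[Tuple[str, float]]) -> float:
    """5q0: probability of dying between birth and exactly 5 years of age."""
    return 1 - prod(1 - q for _, q in intervals)


def post_early_neonatal_mortality(intervals: List[Tuple[str, float]]) -> float:
    """Probability of dying between 7 days and exactly 5 years of age, read here
    as conditional on surviving the first 7 days (an assumption)."""
    return 1 - prod(1 - q for _, q in intervals[1:])
```

With these figures, 5q0 is 1 − 0.98 × 0.95 × 0.96 ≈ 0.106, and the post-early-neonatal probability is 1 − 0.95 × 0.96 = 0.088.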

Countries involved in the MCE used 3 alternative methodologies for mortality measurement. The choice of a method was largely based on local data availability and quality. These included mortality surveys, demographic surveillance, and calculation of mortality ratios based on vital statistics.

Mortality surveys.

Probability samples of women of reproductive age (usually 15–49 years) living in the study area were asked to provide information on births and deaths among their children in recent years. Mortality rates were calculated retrospectively for different time periods before the survey date. Information on causes of death was obtained by asking respondents to provide a detailed description of the child’s condition prior to death (verbal autopsy).

Demographic surveillance.

Two of the MCE study areas had continuous demographic surveillance systems with records of all births, deaths, pregnancies, and changes in residence into or out of the surveillance area. These allowed the calculation of under-5 mortality rates on an annual basis. Verbal autopsies provided information on causes of death.
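Surveillance data yield deaths and person-years at risk, which give death rates rather than probabilities. One common way to obtain an annual under-5 mortality probability is to convert each age-specific rate with the constant-hazard formula nqx = 1 − exp(−n · nMx) and chain the results. This conversion is our illustrative choice, not necessarily the one used in the MCE sites, and the counts in the usage note are invented.

```python
import math
from typing import Iterable, Tuple


def rate_to_probability(deaths: int, person_years: float, width_years: float) -> float:
    """nqx = 1 - exp(-n * nMx), assuming a constant death rate within the interval."""
    m = deaths / person_years
    return 1 - math.exp(-width_years * m)


def annual_under5_mortality(age_groups: Iterable[Tuple[int, float, float]]) -> float:
    """age_groups: (deaths, person-years, interval width in years), covering birth to age 5."""
    survival = 1.0
    for deaths, person_years, width in age_groups:
        survival *= 1 - rate_to_probability(deaths, person_years, width)
    return 1 - survival
```

For example, 60 infant deaths in 1000 person-years plus 40 deaths at ages 1 to 4 in 4000 person-years give 1 − exp(−0.06 − 0.04) ≈ 0.095.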

Vital statistics.

In countries where official death registration systems were reasonably reliable, the ratios of infant and of under-5 deaths to all deaths (age-specific proportionate mortality ratios) based on vital statistics were calculated. Although mortality registration was incomplete in some sites, the use of age-specific proportionate mortality ratios reduced possible biases, because underregistration affects the numerator and the denominator of each ratio in similar ways as long as completeness does not differ greatly by age. The proportions of infant and under-5 deaths due to selected conditions targeted by IMCI (diarrhea, pneumonia, malaria, measles, and malnutrition; hereafter referred to as cause-specific mortality ratios) were also calculated. Validation exercises were carried out to check data accuracy, for example, by comparing MCE indicator results with those from demographic and health surveys.
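The ratios just described can be computed directly from registered deaths. In the sketch below, the input format (age at death in years paired with a registered cause) and the function name are hypothetical; the list of target causes is taken from the text.

```python
from typing import Dict, Iterable, Tuple

# Conditions targeted by IMCI, as listed in the text.
IMCI_TARGET_CAUSES = {"diarrhea", "pneumonia", "malaria", "measles", "malnutrition"}


def proportionate_mortality(deaths: Iterable[Tuple[float, str]]) -> Dict[str, float]:
    """deaths: (age at death in years, registered cause) for every registered death."""
    deaths = list(deaths)
    infant = [cause for age, cause in deaths if age < 1]
    under5 = [cause for age, cause in deaths if age < 5]
    return {
        # Age-specific proportionate mortality ratios (denominator: all deaths)
        "infant_pmr": len(infant) / len(deaths),
        "under5_pmr": len(under5) / len(deaths),
        # Cause-specific mortality ratios (denominator: infant or under-5 deaths)
        "infant_csmr": sum(c in IMCI_TARGET_CAUSES for c in infant) / len(infant),
        "under5_csmr": sum(c in IMCI_TARGET_CAUSES for c in under5) / len(under5),
    }
```

Because each ratio divides one subset of registered deaths by a larger set, it can be tracked over time even where absolute death counts are incomplete.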

The use of multiple methods for mortality assessment is consistent with the flexibility of the MCE design. In each country, existing sources of mortality data—including vital statistics and established demographic surveillance systems—were assessed during the planning of the study. If data from these sources were not available or were judged unreliable, the more costly option of a demographic survey was considered. Despite the use of different methods, consistent and reliable information on mortality was produced.

Calculating Sample Sizes

Mortality reduction was the primary impact measure within the MCE. A computer simulation model based on the work of Becker and Black16 was used to estimate the magnitude of mortality reduction that could be expected from introducing IMCI in different settings. These simulations led to the assumption that IMCI implementation in the types of settings where the MCE was being introduced was likely to reduce under-5 mortality by no less than 20%. In each site, sample sizes were thus calculated to allow detection of reductions of at least 20%. Sample size calculations took into account the number of areas in which IMCI was being introduced, the clustered nature of the data, and the type of mortality measurement approach (survey, surveillance, or vital statistics).
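The MCE's own calculations are not reproduced here. As a rough illustration only, the sketch below uses the textbook formula for comparing 2 proportions, inflated by a design effect for clustering, to estimate the births needed per arm to detect a 20% reduction. The baseline of 141 per 1000 is the Uganda value in Table 4. The significance level, power, and design effect of 2 are assumptions, and treating under-5 mortality as a simple binomial proportion ignores the pre–post and surveillance structures some sites used.

```python
from math import ceil
from statistics import NormalDist


def births_per_arm(baseline_risk, reduction, alpha=0.05, power=0.80, deff=1.0):
    """Births per arm to detect a relative reduction in a mortality risk.

    Two-sided comparison of 2 independent proportions, multiplied by a
    design effect (deff) to allow for cluster sampling.
    """
    p1 = baseline_risk
    p2 = baseline_risk * (1 - reduction)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    n_srs = (z_alpha + z_beta) ** 2 * (p1 * (1 - p1) + p2 * (1 - p2)) / (p1 - p2) ** 2
    return ceil(n_srs * deff)


baseline = 141 / 1000  # Uganda baseline from Table 4, as a probability
print(births_per_arm(baseline, 0.20))            # simple random sampling
print(births_per_arm(baseline, 0.20, deff=2.0))  # hypothetical design effect
print(births_per_arm(58 / 1000, 0.20))           # lower baseline (Peru value)
```

The last line shows why low-mortality settings are harder: the same 20% relative reduction is a smaller absolute difference, so more births are needed.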

Certifying Adequacy of IMCI Implementation for the MCE

Consistent with the stepwise approach described above, MCE advisers, investigators, and their IMCI counterparts from ministries of health and WHO agreed that measuring impact in the absence of a reasonable level of implementation was not warranted. Results from the computer simulation model and discussions with those implementing IMCI were used to estimate the level of intensity of IMCI implementation needed to achieve a reduction of at least 20% in all-cause under-5 mortality within 2 years in the MCE sites (this is the time required for implemented interventions to lead to mortality reduction, shown as the “biological effect” in Table 2). This group then agreed on a set of 11 criteria to be used in “starting the evaluation clock,” that is, for determining the date at which this level of implementation was achieved (Table 3). No specific cutoff levels were set for these criteria because of broad variations in IMCI implementation approaches and contextual factors among the MCE sites. Instead, site-specific levels for these criteria were used to arrive at an overall judgment about whether the level of IMCI implementation was sufficient to result in a decrease in under-5 mortality of at least 20% in the MCE intervention area within 2 years of the start date.

TABLE 3—Criteria for Certifying the Adequacy of IMCI Implementation for Evaluation Purposes

| Indicator | Description |
|---|---|
| Training coverage | Proportion of first-level health facilities with at least 60% of health workers managing children trained in IMCI. |
| Supervision | Proportion of health facilities that received at least 1 visit of routine supervision that included the observation of case management during the previous 6 months. |
| Quality of care | An aggregate index measuring the quality of the treatment and counseling received by sick children observed during the health facility survey. |
| Drug availability | Index of availability of essential oral treatments, based on the health facility survey. |
| Vaccine availability | Index of availability of 4 vaccines (polio, DPT, measles, and tuberculosis), based on the health facility survey. |
| Equipment | Availability of 6 essential items of equipment and supplies needed to provide IMCI case management, based on the health facility survey. |
| Utilization | Mean annual number of health facility attendances per under-5 child. |
| Care-seeking behavior | Documentation of delivery of messages to the community at a level of intensity capable of reaching a high coverage in the target population on a sustainable basis. |
| Home disease management | Presence of mechanisms to deliver interventions for improving the home management of disease at a level of intensity capable of reaching a high coverage in the target population on a sustainable basis. |
| Nutrition counseling | Presence of mechanisms to deliver interventions for improving child nutrition at a level of intensity capable of reaching a high coverage in the target population on a sustainable basis. |
| Insecticide-treated bed nets | Documentation of the existence of a sales network for nets and insecticides covering a large proportion of villages or similar administrative units. |

Note. IMCI = Integrated Management of Childhood Illness; under-5 child = child younger than 5 years old; DPT = diphtheria–pertussis–tetanus.
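The training-coverage criterion in Table 3 is the most directly computable one. A sketch, assuming facility records that list how many health workers managing children are IMCI-trained; the record layout and the exclusion of facilities with no such workers are our assumptions:

```python
def training_coverage(records, threshold=0.60):
    """Proportion of facilities where at least `threshold` of the health
    workers managing children are IMCI-trained.

    records maps facility -> (trained_workers, workers_managing_children).
    Assumption: facilities with no workers managing children are excluded.
    """
    eligible = {name: counts for name, counts in records.items() if counts[1] > 0}
    if not eligible:
        raise ValueError("no eligible facilities")
    meeting = sum(1 for trained, total in eligible.values() if trained / total >= threshold)
    return meeting / len(eligible)


# Hypothetical facility records (not MCE data).
facilities = {
    "facility_A": (3, 4),  # 75% trained: meets the 60% cutoff
    "facility_B": (1, 3),  # 33%: does not
    "facility_C": (2, 2),  # 100%: meets
    "facility_D": (0, 5),  # 0%: does not
    "facility_E": (0, 0),  # no workers managing children: excluded
}
print(training_coverage(facilities))  # 0.5
```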

As the first step in certifying the adequacy of IMCI implementation for purposes of the evaluation, investigators and their ministry of health counterparts within an MCE site reviewed available data and decided that the strength of IMCI implementation met the above criteria. At their request, the MCE then convened an independent panel composed of MCE technical advisers, WHO and ministry of health staff members, and the principal investigator from another MCE site. This panel reviewed the available evidence and reached a decision about whether IMCI implementation was adequate. In some cases, the evaluation clock was set back to the day when IMCI implementation in the study area first began to meet the criteria.
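The clock-setting logic can be sketched as a scan over dated reviews. This is a simplification: in the MCE the judgment was made by investigators and an independent panel using site-specific levels, so the booleans below merely stand in for those judgments, and the rule that the criteria must remain met at every later review is our assumption. The 11 criterion names come from Table 3 and the 2-year biological-effect interval from the text; the review dates are hypothetical.

```python
from datetime import date

CRITERIA = (
    "Training coverage", "Supervision", "Quality of care", "Drug availability",
    "Vaccine availability", "Equipment", "Utilization", "Care-seeking behavior",
    "Home disease management", "Nutrition counseling", "Insecticide-treated bed nets",
)
BIOLOGICAL_EFFECT_YEARS = 2  # time for implementation to reduce mortality


def add_years(d, years):
    """Shift a date by whole years, mapping 29 February to 28 February if needed."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def evaluation_clock_start(reviews):
    """Earliest review date from which every criterion was judged adequate at
    that review and all later ones; None if that point is never reached.

    reviews: iterable of (date, {criterion: bool}) pairs, in any order.
    """
    start = None
    for when, judged in sorted(reviews, key=lambda r: r[0]):
        if all(judged.get(c, False) for c in CRITERIA):
            if start is None:
                start = when
        else:
            start = None  # a later shortfall resets the clock
    return start


all_met = dict.fromkeys(CRITERIA, True)
reviews = [
    (date(2001, 1, 15), {**all_met, "Supervision": False}),
    (date(2000, 7, 1), {**all_met, "Training coverage": False}),
    (date(2001, 6, 30), all_met),
    (date(2001, 12, 1), all_met),
]
start = evaluation_clock_start(reviews)
print(start, add_years(start, BIOLOGICAL_EFFECT_YEARS))  # 2001-06-30 2003-06-30
```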

Accounting for Contextual Factors

Information on factors and constraints external to IMCI was needed to interpret the results of the plausibility evaluations. For example, recent research in Bangladesh has shown significant reductions in child mortality owing to women-focused poverty alleviation programs.17 Such effects, while welcome, can obscure or mimic the effects of IMCI and must be taken into account. At all sites, MCE investigators collected data on levels and trends in 4 areas:

- socioeconomic factors, including family income, parental education and occupation, unemployment, land tenure, and the existence of economic crises (inflation rates, structural adjustment, etc.);

- environmental factors, including water supply, sanitation, housing, and environmental pollution;

- demographic factors, including fertility patterns and family size; and

- health services–related factors, including structure of health services, health manpower, health worker pay, drug supply, availability of referral services, and presence of other major health initiatives.

These characteristics, as well as other locally relevant factors, were taken into account in the plausibility analyses. The techniques used to adjust for external factors included both simulation and multivariate analyses. In the Peru study, for example, ecological analyses of the impact of IMCI implementation on child mortality and nutrition outcomes included several contextual factors as covariates.
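As a generic illustration of multivariate adjustment in an ecological analysis (not the Peru model itself), the sketch below fits ordinary least squares with NumPy to hypothetical area-level data in which poorer areas implemented less IMCI, so that poverty confounds the crude association. The data are deliberately noise-free, so the adjusted coefficient recovers the built-in effect; all variable names and values are invented.

```python
import numpy as np


def crude_effect(outcome, imci):
    """OLS slope of the outcome on IMCI intensity, with no adjustment."""
    X = np.column_stack([np.ones(len(outcome)), imci])
    coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return coef[1]


def adjusted_effect(outcome, imci, covariates):
    """OLS coefficient on IMCI intensity, adjusting for contextual covariates.

    covariates may be 1-D (one factor) or 2-D (areas x factors).
    """
    X = np.column_stack([np.ones(len(outcome)), imci, covariates])
    coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return coef[1]


# Hypothetical, noise-free area-level data for 25 areas.
rng = np.random.default_rng(0)
poverty = rng.uniform(20, 60, size=25)  # % of households poor
imci = np.clip(1.2 - poverty / 60 + rng.uniform(-0.2, 0.2, size=25), 0, 1)
stunting = 10 + 0.5 * poverty - 8 * imci  # built-in IMCI effect: -8 points

print(round(crude_effect(stunting, imci), 2))              # exaggerated by confounding
print(round(adjusted_effect(stunting, imci, poverty), 2))  # -8.0
```

With real data the adjusted estimate is only as good as the list of measured contextual factors, which is why the MCE documented them at every site.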

Collecting Data on Costs

Cost data were collected from all levels of the system involved in introducing or supporting IMCI. These typically included first-level facilities providing primary services to children, higher-level facilities providing referral care to children, district-level (or regional) administration supporting IMCI implementation, national-level administration supporting IMCI, and households incurring costs for seeking and obtaining treatment.

Tools and methods for collecting these data were developed, adapted to the circumstances of each MCE site, and field-tested before use. Standardized guidelines and templates were developed for use in summarizing and analyzing the cost data. The basic analysis of both costs and effects generated cost-effectiveness ratios in terms of total and incremental under-5 deaths averted or years of life saved.
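The cost-effectiveness arithmetic can be sketched as follows, assuming costs have already been summarized by level of the system for an IMCI arm and a comparison arm. The level names mirror the list above; the cost figures, death counts, and the life-years-per-death conversion are hypothetical.

```python
LEVELS = ("first_level", "referral", "district", "national", "household")


def total_cost(costs_by_level):
    """Sum costs across all levels of the system (missing levels count as 0)."""
    return sum(costs_by_level.get(level, 0) for level in LEVELS)


def incremental_cost_per_death_averted(cost_imci, cost_comparison,
                                       deaths_imci, deaths_comparison):
    """Extra cost of IMCI divided by the extra under-5 deaths it averts."""
    deaths_averted = deaths_comparison - deaths_imci
    if deaths_averted <= 0:
        raise ValueError("no deaths averted; the ratio is not meaningful")
    return (cost_imci - cost_comparison) / deaths_averted


# Hypothetical annual costs (US$) for two populations of similar size.
imci_arm = {"first_level": 120_000, "referral": 30_000, "district": 25_000,
            "national": 15_000, "household": 10_000}
comparison_arm = {"first_level": 90_000, "referral": 30_000, "district": 10_000,
                  "national": 10_000, "household": 10_000}

c_imci = total_cost(imci_arm)              # 200000
c_comparison = total_cost(comparison_arm)  # 150000
per_death = incremental_cost_per_death_averted(
    c_imci, c_comparison, deaths_imci=400, deaths_comparison=450)
print(per_death)  # 1000.0

LIFE_YEARS_PER_DEATH_AVERTED = 30  # hypothetical (e.g., discounted) conversion
print(per_death / LIFE_YEARS_PER_DEATH_AVERTED)  # cost per year of life saved
```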

Outline of the MCE Designs in Each Site

Table 4 ▶ provides basic information about the 5 MCE sites, including a list of collaborators in IMCI implementation and the MCE. A brief summary of the design in each site is provided in the next sections. Further information is available at http://www.who.int/imci-mce.

TABLE 4—Characteristics of Study Sites in the Multi-Country Evaluation of IMCI

| | Tanzania | Uganda | Bangladesh | Peru | Brazil |
|---|---|---|---|---|---|
| Design | Pre–post comparison of 2 IMCI and 2 non-IMCI districts | Comparison of 10 districts with different levels of IMCI implementation | Randomized trial of 10 health facilities with IMCI and 10 without IMCI | Comparison of 25 departments with different levels of IMCI implementation | Comparison of 32 IMCI and 64 non-IMCI municipalities |
| Baseline under-5 mortality (per 1000 live births) | 160–180 | 141 | 96 | 58 | 70 |
| Mortality assessment | DSS | Surveys | DSS + survey | Vital statistics | Surveys |
| Household coverage surveys | 1999 (baseline); 2004 (planned) | 2000 (baseline); 2004 (planned) | 2000 (baseline); 2004 (planned) | Not planned for Phase 1 | 2004 (planned) |
| Health facility assessments | 2000 (midway) | Ongoing rolling sample | 2000 (baseline); 2004 (planned) | 1999 (pilot study in selected departments) | 2002 (midway) |
| Cost assessments | Included in survey tools | Included in survey tools | Included in survey tools | Not included in Phase 1 | Included in survey tools |
| Randomization | None | None | Yes (2001) | None | None |
| Type of inference | Plausibility | Plausibility | Probability | Plausibility | Plausibility |
| Malaria | Yes | Yes | None | Variable | None |
| Partnerships | Ifakara Centre, TEHIP, MOH | Johns Hopkins, Makerere University, MOH, USAID | ICDDR,B, MOH, USAID | Instituto del Niño, MOH | Ceará University, MOH |

Note. IMCI = Integrated Management of Childhood Illness; DSS = Demographic Surveillance System; TEHIP = Tanzania Essential Health Interventions Project; ICDDR,B = International Centre for Diarrhoeal Disease Research, Bangladesh; MOH = ministry of health; USAID = US Agency for International Development.

Tanzania.

The Tanzania Ministry of Health, and specifically the district health management teams, implemented IMCI in 2 pioneer districts (Morogoro Rural and Rufiji). The Tanzania Essential Health Interventions Project also supported the teams in these 2 districts in the use of simple management tools for priority setting, monitoring, and mapping. Two similar, geographically contiguous districts where neither IMCI nor management tools were yet implemented (Kilombero and Ulanga) served as comparison areas. Areas within each of these 4 districts were under continuous mortality surveillance, allowing a study of the impact of IMCI on child mortality. A baseline survey was conducted in 1999 in about 2400 households and a health facility survey in 2000 covering 70 facilities. Results from these surveys were shared with the district health management teams to reinforce implementation of child health services. Information on costs and documentation of IMCI-related activities were collected simultaneously. The final household survey to assess utilization and population coverage was conducted in late 2002.

Uganda.

This study, an integral part of the MCE, was carried out by Johns Hopkins University and Makerere University with funding from the US Agency for International Development (USAID). IMCI implementation was spearheaded by the Uganda Ministry of Health with support from WHO, USAID, and other donors. Six IMCI intervention districts were selected randomly from those considered by the Ministry of Health to be likely to implement IMCI soon and fully. Four districts judged unlikely to implement IMCI in the near future were matched on a summary measure reflecting demographic and health system characteristics to serve as comparison districts. A baseline survey was carried out in 14 000 households in 2000 to assess demographic and health indicators in all 10 districts. Continuous monitoring was used to track IMCI implementation and intermediate outcomes at district, health facility, and household levels. In 2002, monitoring results indicated that considerable crossover had occurred between the IMCI and comparison districts, and an alternative analysis plan using a “dose–response” approach was adopted. A cost assessment study was carried out simultaneously to determine the costs of introducing IMCI and to compare them with alternative uses of the same resources.

Bangladesh.

A randomized design was developed to evaluate IMCI efficacy. A baseline demographic survey covering 80 000 households was carried out in the first half of 2000 in the areas of Matlab district served by regular government health services (population 400 000; this excludes the villages that receive special health services from the International Centre for Diarrhoeal Disease Research, Bangladesh [ICDDR,B]); an in-depth household survey was conducted concurrently in 5% of households. In the second half of 2000, a health facility survey was carried out in 20 health facilities in the same geographic area. The facilities were then divided into pairs on the basis of demographic and health system characteristics, and one facility in each pair was randomly selected for the intervention group. In collaboration with the Government of Bangladesh, best-possible implementation of IMCI was introduced in the 10 intervention facilities and their catchment areas, including training health workers, upgrading health facilities, ensuring needed support, and implementing community-level activities targeting high-impact family practices. The demographic survey will be repeated at the end of the study to assess mortality changes associated with IMCI implementation. The in-depth survey of 5% of households and the health facility survey will be repeated in 2004 to assess midterm changes. Data on costs are being collected in all surveys.
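The pair-matched allocation described above can be sketched in a few lines. The text states only that pairs were formed on demographic and health system characteristics; collapsing these into a single composite score and pairing facilities with adjacent scores are our assumptions, and the facility names and scores are made up.

```python
import random


def pair_and_randomize(scores, seed=None):
    """Pair facilities with adjacent matching scores, then randomly assign
    one facility in each pair to IMCI and the other to comparison.

    scores maps facility -> composite matching score (hypothetical).
    """
    if len(scores) % 2:
        raise ValueError("an even number of facilities is required")
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)
    pairs = [tuple(ranked[i:i + 2]) for i in range(0, len(ranked), 2)]
    arm = {}
    for pair in pairs:
        treated = rng.choice(pair)
        for facility in pair:
            arm[facility] = "IMCI" if facility == treated else "comparison"
    return pairs, arm


# 20 hypothetical facilities with made-up scores.
scores = {f"HF{i:02d}": s for i, s in enumerate(
    [3.1, 7.4, 5.5, 2.2, 9.0, 6.8, 4.4, 1.9, 8.3, 5.0,
     6.1, 3.7, 7.9, 2.8, 4.9, 8.8, 1.2, 6.5, 5.8, 3.3], start=1)}
pairs, arm = pair_and_randomize(scores, seed=1)
for a, b in pairs:
    print(a, arm[a], "|", b, arm[b])
```

Fixing the seed makes the allocation reproducible and auditable, which matters when the assignment must be documented for a probability evaluation.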

Peru.

The Peruvian MCE took advantage of the large amount of child health data available for the country’s 34 health districts. Data sources included the 1996 and 2000 demographic and health surveys as well as one planned for 2004, official vital statistics, and Ministry of Health data on health services provision, utilization, and population coverage. The relatively low mortality levels suggested that it would be difficult to document an impact of IMCI on mortality, but the design could support measurement of a possible impact on nutrition, because growth stunting due to poor nutrition is highly prevalent among Peruvian children. In addition, the evaluation provided a wealth of data on the evolution of several process indicators. The design involved a nationwide analysis of existing data on health and related variables, including a mixed (retrospective and prospective) ecological analysis of the impact of IMCI. All 34 health districts were visited in 2001 to collect detailed data on implementation, utilization, population coverage, and impact indicators, and this monitoring is continuing. All steps in the evaluation were carried out in close coordination with the Ministry of Health.

Brazil.

The MCE study area is in the northeast, the region of Brazil with the highest mortality. In each of 3 states, 8 municipalities reported by the Ministry of Health to have had strong IMCI implementation since 2000 were selected. For each of these, a comparison municipality was chosen where IMCI had not yet been implemented. A health facility survey was carried out in these municipalities in 2002. A demographic survey is planned for 2004 to assess mortality retrospectively in IMCI and non-IMCI municipalities, matched on the basis of geographic location, socioeconomic factors, and health services infrastructure.
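Matching comparison municipalities on several characteristics can be sketched as greedy nearest-neighbor matching on standardized covariates. This is one common technique, not necessarily the one used in Brazil; the 2 covariates and all values are hypothetical, and geographic matching could be approximated by running the function separately within each state.

```python
from math import dist
from statistics import mean, pstdev


def standardize(rows):
    """Convert each covariate to z-scores across all municipalities."""
    columns = list(zip(*rows.values()))
    means = [mean(col) for col in columns]
    sds = [pstdev(col) or 1.0 for col in columns]  # guard against zero spread
    return {name: tuple((v - m) / s for v, m, s in zip(vals, means, sds))
            for name, vals in rows.items()}


def greedy_match(imci, candidates):
    """Match each IMCI municipality, in order, to its nearest unused candidate."""
    z = standardize({**imci, **candidates})
    available = sorted(candidates)  # sorted for deterministic tie-breaking
    matches = {}
    for name in imci:
        best = min(available, key=lambda c: dist(z[name], z[c]))
        matches[name] = best
        available.remove(best)
    return matches


# Hypothetical covariates: (socioeconomic index, health services index).
imci = {"IMCI_1": (0.40, 12.0), "IMCI_2": (0.70, 5.0)}
candidates = {"CMP_1": (0.42, 11.5), "CMP_2": (0.69, 5.5), "CMP_3": (0.10, 20.0)}
print(greedy_match(imci, candidates))  # {'IMCI_1': 'CMP_1', 'IMCI_2': 'CMP_2'}
```

Greedy matching depends on the order in which IMCI municipalities are processed; an optimal assignment method would avoid that, at the cost of more code.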

CONCLUSIONS

The MCE is breaking new ground in evaluating the impact of child health strategies. The evaluation is under way in 5 countries in 3 regions of the world. The evaluation designs include large-scale measurement surveys as well as assessments of equity, the quality of care in health facilities, and the comprehensive measurement of costs. Studies within the MCE include prospective, retrospective, and mixed designs.

The MCE will contribute to a rigorous assessment of the strengths and weaknesses of IMCI and other child health strategies. It has already led to improvements in child health policies, interventions, and service delivery strategies in participating countries and elsewhere through the dissemination of intermediate results.18–22

The MCE can also contribute to the development and refinement of methods for conducting large-scale evaluations of intervention effectiveness. Among the key issues addressed in this report are (1) the importance of a conceptual framework in guiding the evaluation design, the choice of indicators, the timing of measurements, and the interpretation of the results; (2) the need to provide feedback to program managers despite the fact that this leads to changes in the intervention under study; (3) the difficulty of evaluating a strategy that is locally adapted, still in the process of development, and for which the time needed for full implementation is not yet known; and (4) the extensive efforts needed to document contextual factors and develop plausible interpretations of intervention effects in settings of rapid health system change.

Owing to space limitations, several important issues could not be addressed in this first methodological report drawing on the MCE experience. For example, a description of the time and resources needed to carry out an evaluation of this nature and scale would undoubtedly be useful for those interested in embarking on large-scale effectiveness evaluations. Detailed descriptions of the composition of the research teams at both international and country levels, and special efforts made to expand local capacity, could also be helpful to those seeking to expand research capacity in developing countries. Finally, more detailed descriptions of methodological and analytic approaches developed to address specific issues need to be provided. The MCE will continue to elucidate these and other methodological challenges in future articles.

Acknowledgments

The IMCI-MCE is supported by the Bill and Melinda Gates Foundation and the US Agency for International Development and is coordinated by the Department of Child and Adolescent Health and Development of the World Health Organization.

The authors thank those responsible for the MCE studies and IMCI implementation in Bangladesh, Brazil, Peru, Tanzania, and Uganda, including Ministry of Health personnel in each site. Full lists of investigators are available on the MCE Web site (http://www.who.int/imci-mce). In addition, Taghreed Adam, Eleanor Gouws, David Evans, Saul Morris, and Don de Savigny made important technical contributions to the design and implementation of the MCE.

Contributors

All authors participated fully in the conceptualization and design of the Multi-Country Evaluation (MCE) and helped to generate ideas, interpret findings, and review the article. J. Bryce was responsible for the project on behalf of the World Health Organization and cowrote the first draft with C. G. Victora, who is the senior technical adviser for the MCE. J.-P. Habicht, P. Vaughan, and R. E. Black are technical advisers to the MCE and provided continuous technical oversight throughout its implementation.

Peer Reviewed

References

- 1. Child and adolescent health and development. Available at: http://www.who.int/child-adolescent-health. Accessed December 11, 2003.

- 2. Tulloch J. Integrated approach to child health in developing countries. Lancet. 1999;354(suppl 2):SII16–SII20.

- 3. Gove S. Integrated management of childhood illness by outpatient health workers. Technical basis and overview. The WHO Working Group on Guidelines for Integrated Management of the Sick Child. Bull World Health Organ. 1997;75(suppl 1):7–24.

- 4. Lambrechts T, Bryce J, Orinda V. Integrated management of childhood illness: a summary of first experiences. Bull World Health Organ. 1999;77:582–594.

- 5. World Health Organization Multi-Country Evaluation. Available at: http://www.who.int/imci-mce. Accessed December 11, 2003.

- 6. Habicht J-P, Mason JB, Tabatabai H. Basic concepts for the design of evaluation during program implementation. In: Sahn DE, Lockwood R, Scrimshaw NS, eds. Methods for the Evaluation of the Impact of Food and Nutrition Programs. Tokyo, Japan: United Nations University; 1984:1–25.

- 7. Habicht J-P, Victora CG, Vaughan JP. Evaluation designs for adequacy, plausibility and probability of public health programme performance and impact. Int J Epidemiol. 1999;28:10–18.

- 8. Victora CG, Habicht J-P, Bryce J. Evidence-based public health: moving beyond randomized trials. Am J Public Health. 2004;94:400–405.

- 9. Adam T, Bishai D, Khan M, Evans D. Methods for the costing component of the Multi-Country Evaluation of IMCI. Available at: http://www.who.int/imci-mce. Accessed December 17, 2003.

- 10. Adam T, Manzi F. Costs of under-five care using IMCI in Tanzania—preliminary results. Paper presented at: Multilateral Initiative on Malaria, Pan-African Malaria Conference; November 18–22, 2002; Arusha, Tanzania. Available at: http://www.who.int/imci-mce. Accessed December 17, 2003.

- 11. Filmer D, Pritchett L. Estimating wealth effects without expenditure data or tears: an application to educational enrollments in states of India. Demography. 2001;38:115–132.

- 12. Morris SS, Carletto C, Hoddinott J, Christiaensen LJ. Validity of rapid estimates of household wealth and income for health surveys in rural Africa. J Epidemiol Community Health. 2000;54:381–387.

- 13. Paul K. A Guttman scale model of Tahitian consumer behavior. Southwestern J Anthropol. 1964;20:160–167.

- 14. Gouws E, Bryce J, Habicht J-P, et al. Improving the use of antimicrobials through IMCI case management training. Bull World Health Organ. In press.

- 15. The Multi-Country Evaluation of IMCI Effectiveness, Cost and Impact (MCE). Progress Report, May 2001–April 2002. Geneva, Switzerland: Dept of Child and Adolescent Health and Development, World Health Organization; 2002. WHO document WHO/FCH/CAH/02.16. Available at: http://www.who.int/child-adolescent-health. Accessed December 17, 2003.

- 16. Becker S, Black RE. A model of child morbidity, mortality and health interventions. Popul Dev Rev. 1996;22:431–456.

- 17. Bhuiya A, Chowdhury M. Beneficial effects of a woman-focused development programme on child survival: evidence from rural Bangladesh. Soc Sci Med. 2002;55:1553–1560.

- 18. Bryce J, el Arifeen S, Pariyo G, et al. Reducing child mortality: can public health deliver? Lancet. 2003;362:159–164.

- 19. Schellenberg JA, Victora CG, Mushi A, et al. Inequities among the very poor: health care for children in rural southern Tanzania. Lancet. 2003;361:561–566.

- 20. Victora CG, Wagstaff A, Schellenberg JA, Gwatkin D, Claeson M, Habicht J-P. Applying an equity lens to child health and mortality: more of the same is not enough. Lancet. 2003;362:233–241.

- 21. Tanzania IMCI Multi-Country Evaluation Health Facility Survey Study Group. Health care for under-fives in rural Tanzania: effect of Integrated Management of Childhood Illness on observed quality of care. Health Policy Plan. In press.

- 22. Bellagio Study Group on Child Survival. Knowledge into action for child survival. Lancet. 2003;362:323–327.