Abstract

Objective:

To evaluate the effectiveness of surgical simulation compared with other methods of surgical training.

Summary Background Data:

Surgical simulation (with or without computers) is attractive because it avoids the use of patients for skills practice and provides relevant technical training for trainees before they operate on humans.

Methods:

Studies were identified through searches of MEDLINE, EMBASE, the Cochrane Library, and other databases until April 2005. Studies were eligible if they were randomized controlled trials (RCTs) assessing any training technique that used at least some elements of surgical simulation and that reported measures of surgical task performance.

Results:

Thirty RCTs with 760 participants were included, although the quality of the RCTs was often poor. Computer simulation generally showed better results than no training at all (and than physical trainer/model training in one RCT), but was not convincingly superior to standard training (such as surgical drills) or video simulation (particularly when assessed by operative performance). Video simulation did not show consistently better results than groups with no training at all, and there were not enough data to determine if video simulation was better than standard training or the use of models. Model simulation may have been better than standard training, and cadaver training may have been better than model training.

Conclusions:

While there may be compelling reasons to reduce reliance on patients, cadavers, and animals for surgical training, none of the methods of simulated training has yet been shown to be better than other forms of surgical training.

In a systematic review of the 30 relevant randomized controlled trials, simulated training (including computer simulation) was not shown to be superior to other forms of surgical training.

Simulation is particularly attractive in the field of surgery because it avoids the use of patients for skills practice and ensures that trainees have had some practice before treating humans.1 Surgical simulation may or may not involve the use of computers.

One of the barriers to development of virtual reality surgical simulation has been the large amount of computing capacity that has been required to remove delays in signal processing, but this is being addressed by systems that break down the tasks by concentrating on chains of behavior.2 Designers of surgical simulators attempt to balance visual fidelity, real-time response and computing power, and cost.3

What Currently Happens in Surgical Training?

Surgical training consists of developing cognitive, clinical, and technical skills, the latter being traditionally acquired through mentoring.4 Fewer mentoring opportunities5 have led to the use of models, cadavers, and animals to replicate surgical situations and, more recently, to development of surgical skills centers or laboratories. However, the effectiveness of skills laboratories in teaching basic surgical skills (eg, instrument handling, knot tying, and suturing) is not yet proven.6,7 Reznick8 maintains that the teaching and testing of technical skills have been the least systematic or standardized component of surgical education.

Is Simulation an Effective Method of Training?

This review attempts to gauge the instructional effectiveness of surgical simulation9 (that repeated use improves performance), as well as construct validity (that the simulator measures the skill it is designed to measure). Validity studies of surgical simulation (mostly computer simulation) have shown mixed results for construct validity while other important aspects of validity (such as predictive validity) and reliability are frequently not tested.3,9 The ultimate validation is for simulation training to show a positive influence on patient outcomes (adapted from Berg et al9).

This review focuses on the use of surgical simulation for training, but simulation is also being used to assess surgeons. For example, the U.K. General Medical Council is using it to assess poorly performing surgeons referred to the Council.10

Costs of Surgical Training

While the costs of simulation systems can be high, ranging from about U.S. $5000 for most laparoscopic simulators to up to U.S. $200,000 for highly sophisticated anesthesia simulators,1 traditional Halstedian training is not without cost either. Bridges and Diamond11 calculated that the cost of training a surgical resident in the operating room for 4 years was nearly U.S. $50,000 (measured by the additional time that the resident took to complete procedures). Other providers of surgical training are making substantial investments in surgical skills centers.

MATERIALS AND METHODS

This review is a summary and an update of an assessment carried out for ASERNIP-S (the Australian Safety and Efficacy Register of New Interventional Procedures): www.surgeons.org/asernip-s/.

Inclusion Criteria

Randomized controlled trials (RCTs) of any training technique using at least some elements of surgical simulation, compared with any other method of surgical training or with no surgical training, were included for review. Participants in the RCTs could have been surgeons, surgical trainees (residents), medical students, or other groups. Included studies must have contained information on measures of surgical task performance (whether objective or subjective), measures of satisfaction with training techniques, or both.

Search Strategy

Studies were identified by searching MEDLINE, EMBASE, PreMEDLINE, Current Contents, The Cochrane Library (Issue 2, 2005), scholar.google.com, metaRegister of Controlled Trials, National Research Register (U.K.), NHS Health Technology Assessment (U.K.), and the NHS Centre for Reviews and Dissemination databases (last searched April 2005). PsycINFO, CINAHL, and Science Citation Index were searched on March 25, 2003. Search terms used were surgical simulation or surg* AND (simulat* OR virtual realit*). Additional articles were identified through the reference sections of the studies retrieved.

Articles were retrieved when they were judged to possibly meet the inclusion criteria. Two reviewers then independently applied the inclusion criteria to these retrieved articles. Any differences were resolved by discussion.

Data Extraction and Analysis

The ASERNIP-S reviewer extracted data onto data extraction sheets designed for this review, and a second reviewer checked the data extraction.

It was not considered appropriate to pool results across studies, because outcomes were not comparable. Relative risks (RR) for dichotomous outcome measures, or weighted mean differences (WMD) for continuous outcome measures, were calculated with 95% confidence intervals (CI) for some outcomes in individual RCTs where it was thought that this would aid in the interpretation of results. Calculations were made using RevMan 4.2 (Cochrane Collaboration, 2003). Some of the calculations of continuous measures showed skew (the standard deviation was more than twice the mean), indicating that some of the results were not normally distributed. This usually indicates that there is a strong “floor” or “ceiling” effect for performance of a particular task.12 Study quality was assessed on a number of parameters such as the quality of methodologic reporting, methods of randomization and allocation concealment, blinding of outcomes assessors, attempts made to minimize bias, sample sizes, and ability to measure “true effect.”
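As an illustration of the per-study effect measures described above, the following is a minimal sketch of how a relative risk and a mean difference with 95% confidence intervals might be computed for a single two-arm RCT, together with the skew rule of thumb the text describes (standard deviation more than twice the mean). It uses the usual large-sample normal approximations and is not RevMan's own implementation; the function names and the numbers in the usage lines are hypothetical and do not come from any included study.

```python
import math

Z95 = 1.959964  # two-sided 95% quantile of the standard normal distribution


def relative_risk(events_a, n_a, events_b, n_b):
    """Relative risk of group A versus group B with a 95% CI (log scale)."""
    rr = (events_a / n_a) / (events_b / n_b)
    # Standard error of log(RR) for two independent binomial samples.
    se_log = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - Z95 * se_log)
    upper = math.exp(math.log(rr) + Z95 * se_log)
    return rr, lower, upper


def mean_difference(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Difference in means (A minus B) with a 95% CI.

    For a single study, a weighted mean difference reduces to this
    unweighted difference between the two arms.
    """
    diff = mean_a - mean_b
    se = math.sqrt(sd_a ** 2 / n_a + sd_b ** 2 / n_b)
    return diff, diff - Z95 * se, diff + Z95 * se


def looks_skewed(mean, sd):
    """Rule of thumb from the text: SD more than twice the mean."""
    return sd > 2 * mean


# Hypothetical numbers, for illustration only.
rr, rr_lo, rr_hi = relative_risk(10, 20, 5, 20)
md, md_lo, md_hi = mean_difference(10.0, 2.0, 16, 8.0, 2.0, 16)
print(f"RR = {rr:.2f} (95% CI {rr_lo:.2f} to {rr_hi:.2f})")
print(f"MD = {md:.2f} (95% CI {md_lo:.2f} to {md_hi:.2f})")
print("skewed:", looks_skewed(mean=5.0, sd=12.0))
```

A confidence interval for the RR that spans 1, or for the mean difference that spans 0, indicates that the single study cannot distinguish the two training methods at the 5% level.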

Details of RCTs

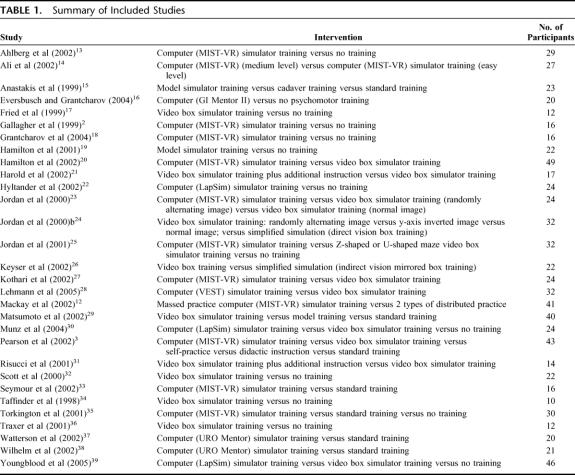

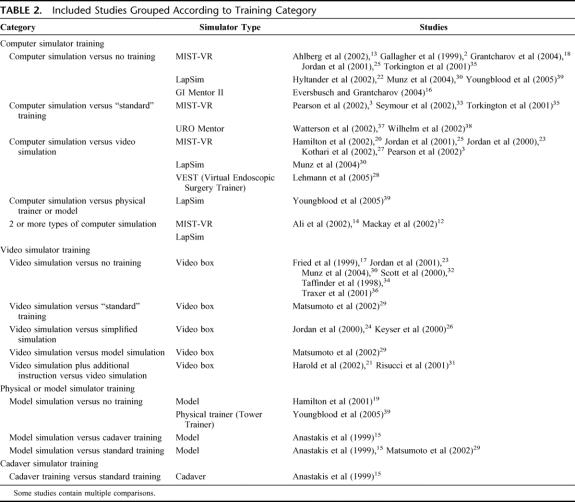

A total of 30 relevant RCTs with 760 participants overall were included (Tables 1 and 2).2,3,12–39

TABLE 1. Summary of Included Studies

TABLE 2. Included Studies Grouped According to Training Category

The length of time devoted to training varied greatly between the studies, with the shortest reported time being 10 minutes37 and the longest being 10 hours.22 In the study by Harold et al,21 both training groups were given 1 week to practice using the video box trainer, with the trained group receiving an additional 60-minute course on laparoscopic suturing. In Lehmann et al,28 each group carried out a defined training program over 4 days and then switched to the other group on the fifth day. Fifteen studies did not report the length of training but rather the number of sessions or the number of tasks that were practiced.2,3,14,16,17,20,23,25–27,31,34,35 The previous experience of participants varied greatly between the studies, ranging from high school students14 to third, fourth, and fifth year urology residents32 to expert surgeons.16,28

Quality of Studies

Only 3 studies16,18,25 are likely to have demonstrated adequate allocation concealment, with use of sealed or closed envelopes. It was not possible to blind participants for any of the interventions in the included studies. Outcome assessors were blinded in 15 RCTs.13,15,18–22,29–33,36,37,39 One of the 2 outcome assessors was blinded in Wilhelm et al.38 In 14 RCTs, it was not stated whether the outcome assessors were blinded.2,3,12,14,16,17,23–28,30,31 Because the study durations were generally short, most studies had no losses to follow-up.

RESULTS

The 30 included studies have been categorized into 4 types of simulation (computer, video, model, and cadaver) and compared with no training and standard training, as well as with each other.

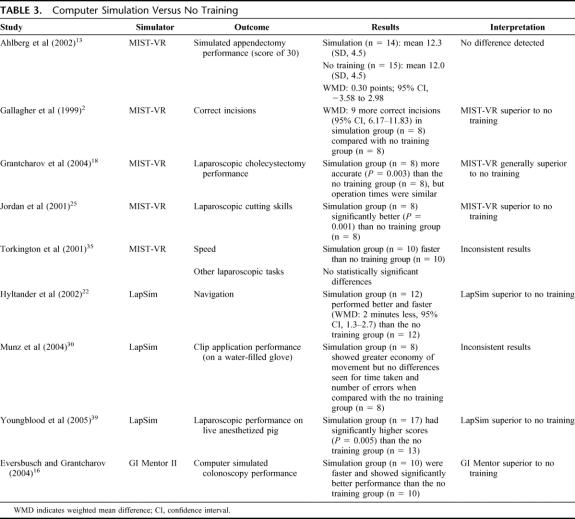

Computer Simulation Versus No Training (9 Studies) (Table 3)

TABLE 3. Computer Simulation Versus No Training

As may be expected, those trained on computer simulators performed better than those who received no training at all. However, the improvement was not universal.

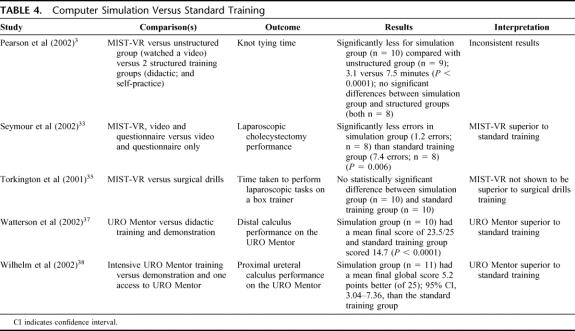

Computer Simulation Versus Standard Training (5 Studies) (Table 4)

TABLE 4. Computer Simulation Versus Standard Training

When computer simulator trained students were compared with students who received standard training, the superiority of computer simulation was less pronounced than for “no training” comparisons. The computer simulation versus “standard” training comparisons varied, potentially confounded by the different components of “standard” training, as well as by the different intensities of time allowed on the simulator in the computer simulation groups.

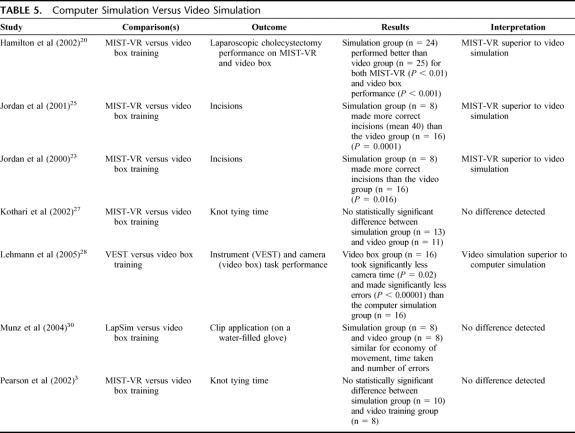

Computer Simulation Versus Video Simulation (7 Studies) (Table 5)

TABLE 5. Computer Simulation Versus Video Simulation

Computer simulation showed mixed results: it was superior to video simulation in some studies but not in others, and was inferior to video simulation in one study. This may have depended on the types of tasks, with computer simulation producing better results for tasks such as incisions, but not for knot tying times. However, there were too few studies to determine this.

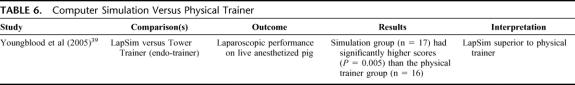

Computer Simulation Versus Physical Trainer or Model (1 Study)39 (Table 6)

TABLE 6. Computer Simulation Versus Physical Trainer

One study showed computer simulation training to be superior to training on a physical trainer.

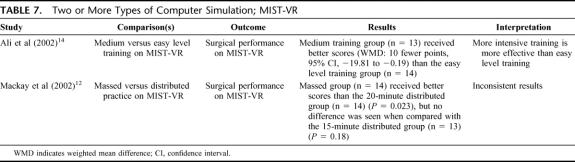

Two or More Types of Computer Simulation; MIST-VR (2 Studies) (Table 7)

TABLE 7. Two or More Types of Computer Simulation; MIST-VR

One RCT14 showed that more demanding training may lead to better performance of surgical tasks on MIST-VR. Another RCT12 failed to show clear differences between massed and distributed practice on MIST-VR.

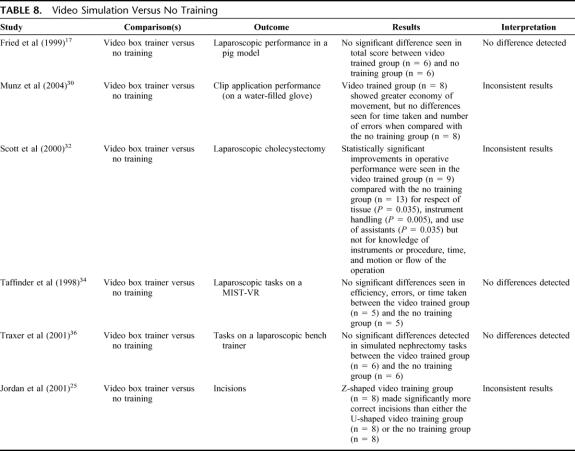

Video Simulation Versus No Training (6 Studies) (Table 8)

TABLE 8. Video Simulation Versus No Training

Video simulation groups did not show consistently better results than groups who did not receive training.

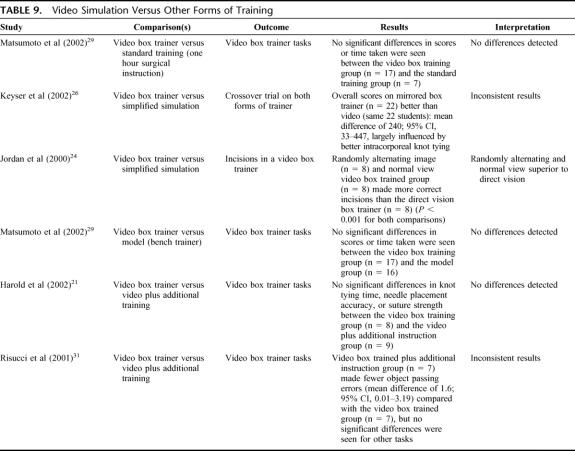

Video Simulation Versus Other Forms of Training (5 Studies) (Table 9)

TABLE 9. Video Simulation Versus Other Forms of Training

Generally, no differences were seen between video box training and other forms of training such as bench models or standard training.

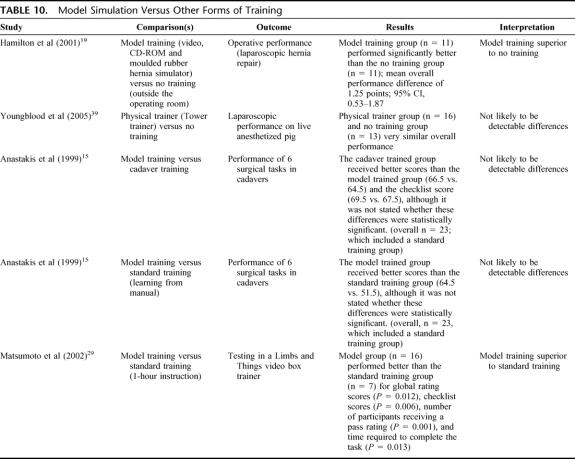

Physical or Model Simulation Versus Other Forms of Training, Including No Training (4 Studies) (Table 10)

TABLE 10. Model Simulation Versus Other Forms of Training

While these comparisons showed mixed results, model training may be better than no training and standard training such as instruction from mentors or manuals.

Cadaver Training Versus Standard Training (1 Study)

In Anastakis et al,15 the cadaver trained group received better scores than the standard training group, which learned independently from the manuals, for the global assessment of operative performance on cadavers (66.5 versus 51.5) and the checklist score (69.5 versus 60.5), although it was not stated whether these differences were statistically significant.

DISCUSSION

Computer simulation generally showed better results than no training at all but was not convincingly superior to standard training (such as surgical drills) or video simulation (particularly when assessed by operative performance). Video simulation did not show consistently better results than groups with no training at all, and there were not enough data to determine if video simulation was better than standard training or the use of models. Model simulation may have been better than standard training, and cadaver training may have been better than model training. Only one RCT39 made a comparison between computer simulation and model training, which showed LapSim to be superior to training on a physical Tower trainer.

With 30 RCTs testing the effect of surgical simulation for training, a clearer result might have been expected. Possible reasons for failing to see a clear benefit for surgical simulation include the following:

Small sample sizes. There were only 760 participants in total across all the RCTs, meaning that the ability to detect differences between different forms of training was quite limited.

Multiple and confounding comparisons. The power to detect any differences was further diluted by the large number of different comparisons, both within and between studies, and the comparisons may have been confounded by factors such as mentoring, which could have swamped any effect of simulation.

Disparate interventions. The component tasks of surgical simulation varied considerably between studies, which may have obscured results for individual tasks such as knot tying. On the other hand, it may be more realistic to test a “package” of surgical skills, but there did not appear to be a consensus as to what might constitute a core set or package of surgical skills.

The comparators were not standardized. “Standard” training covered a wide range of activities, some of which were of very minimal intensity (eg, reading a manual, watching a video). Even so, the simulated groups did not always show superiority even over groups with minimal training.

The surgical simulations may not have been intensive or long enough to show an effect on training. While some simulator interventions were very brief, a “practice” effect with longer simulator exposure was not generally evident, although such a practice effect may only be apparent for quite high levels of simulator use. However, most simulator interventions (and comparators) showed significant improvements pre and post intervention (or comparator).

Ideally, outcome assessors would have been blinded to group allocation because many of the measurements can be quite subjective (eg, global scores). However, such blinding was not routinely used in the studies included in this review.

Most studies measured simulator, and not actual, performance. Unless the simulator has previously been validated by assessing operative performance, or the study assesses skills gained on the simulator by assessing operative performance, we cannot be sure that observed improvements on the simulator will translate into improved operative performance. The failure to translate simulator performance into actual operative performance (concurrent validity) was illustrated in a validity study by Paisley et al,10 which also showed that simulator performance did not discriminate between naive and experienced surgeons (construct validity). The reasons for this apparent lack of concurrent validity cannot be ascertained from this review, but the relatively new nature of simulation (particularly computer simulation and virtual reality) may be a significant cause. Perhaps surgical simulation is not yet realistic enough in its representation of anatomy, or perhaps haptics are simulated inadequately. Perhaps the simulated tasks are isolated or not presented in the right order, or perhaps they are not even the most appropriate tasks.
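The point about small sample sizes in the list above can be made concrete with a rough power calculation. The sketch below uses a normal approximation for a two-sided comparison of two group means; it assumes two equal arms per trial (many included RCTs had more arms, which would make matters worse), and the standardized effect sizes of 0.5 and 0.8 are conventional illustrative values, not estimates taken from the included studies. The function name `approx_power` is hypothetical.

```python
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample comparison of means.

    effect_size is the standardized difference between group means
    (difference divided by the common standard deviation).
    """
    nd = NormalDist()
    z_crit = nd.inv_cdf(1 - alpha / 2)
    # Expected value of the test statistic under the alternative.
    shift = effect_size * (n_per_group / 2) ** 0.5
    return nd.cdf(shift - z_crit) + nd.cdf(-shift - z_crit)


# 760 participants over 30 RCTs is about 25 per trial,
# or roughly 13 per arm if each trial had two equal arms.
n_per_arm = round(760 / 30 / 2)

for d in (0.5, 0.8):
    print(f"n per arm = {n_per_arm}, effect size = {d}: "
          f"power = {approx_power(d, n_per_arm):.2f}")
```

Under these assumptions a typical trial of this size would have only a modest chance of detecting even a moderate true difference between training methods, which is consistent with the mixed results reported above.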

Although it is very difficult to show equivalence (particularly with the small sample sizes and disparate comparisons), a more optimistic interpretation of the findings of this review would be that surgical simulation may be just as good as other forms of surgical training. In other words, can surgical simulation provide an alternative to standard surgical training? Most of the RCTs in this review showed that both simulator and standard training groups improved significantly from baseline, but that participants' final scores usually did not show differences between the simulator and other groups.

Apart from the obvious benefits of avoiding experimentation on human patients and the use of cadavers or animals, surgical simulation would enable surgical experience earlier in training, make more cases available, give exposure to a wider range of pathologies in a compressed period (allowing acceleration of the learning curve), and give exposure to new procedures and technologies.40 Surgical simulation is claimed to be able to save money, although this does not appear to have been substantiated yet. Computer simulation and virtual reality have received much attention, which has eclipsed investigation of other forms of simulation such as models, which may be cheaper and as effective.

Unfortunately, many of the studies in surgical simulation have been of poor experimental design, leading Champion and Gallagher41 to state that “bad science in the field of medical simulation has become all too common.” Even though this review included only RCTs, potentially the most rigorous study design for intervention studies, there were generally significant problems, in particular with small sample sizes and with the validity and reliability of outcome measurements. The studies by Grantcharov et al,18 Scott et al,32 and Seymour et al33 stand out as higher-quality studies since they measured outcomes by actual operative performance, although only some elements were measured in Grantcharov et al.18

CONCLUSION

While there may be compelling reasons to reduce reliance on patients, cadavers, and animals for surgical training, none of the methods of simulated training (including computer simulation) has yet been shown to be better than other forms of surgical training. In addition, little is known about the real costs (including adverse outcomes in patients) of either simulated or standard surgical training.

Adequately powered, well-designed, and unconfounded RCTs (preferably multicenter with similar protocols) are needed, and outcome assessors need to be blinded. Outcomes need to be tested in actual operative circumstances (or on validated systems). In particular, model simulation needs to be further tested against computer simulation. Studies of cost comparisons also need to be done. The RCTs dealt exclusively with technical skills, although other skills such as cognitive skills and communication skills are clearly integral parts of surgical performance.

Footnotes

Reprints: Guy Maddern, MD, PhD, ASERNIP-S, PO Box 553, Stepney, South Australia 5069, Australia. E-mail: college.asernip@surgeons.org.

REFERENCES

- 1. Issenberg SB, McGaghie WC, Hart IR, et al. Simulation technology for health care professional skills training and assessment. JAMA. 1999;282:861–866.

- 2. Gallagher AG, McClure N, McGuigan J, et al. Virtual reality training in laparoscopic surgery: a preliminary assessment of Minimally Invasive Surgical Trainer Virtual Reality (MIST VR). Endoscopy. 1999;31:310–313.

- 3. Pearson AM, Gallagher AG, Rosser JC, et al. Evaluation of structured and quantitative training methods for teaching intracorporeal knot tying. Surg Endosc. 2002;16:130–137.

- 4. Satava RM, Gallagher AG, Pellegrini CA. Surgical competence and surgical proficiency: definitions, taxonomy, and metrics. J Am Coll Surg. 2003;196:933–937.

- 5. Anastakis DJ, Wanzel KR, Brown MH, et al. Evaluating the effectiveness of a 2-year curriculum in a surgical skills center. Am J Surg. 2003;185:378–385.

- 6. Kuppersmith RB, Johnston R, Jones SB, et al. Virtual reality surgical simulation and otolaryngology. Arch Otolaryngol Head Neck Surg. 1996;122:1297–1298.

- 7. Rogers DA, Elstein AS, Bordage G. Improving continuing medical education for surgical techniques: applying the lessons learned in the first decade of minimal access surgery. Ann Surg. 2001;233:159–166.

- 8. Reznick RK. Teaching and testing technical skills. Am J Surg. 1993;165:358–361.

- 9. Berg D, Raugi G, Gladstone H, et al. Virtual reality simulators for dermatologic surgery: measuring their validity as a teaching tool. Dermatol Surg. 2001;27:370–374.

- 10. Paisley AM, Baldwin PJ, Paterson-Brown S. Validity of surgical simulation for the assessment of operative skill. Br J Surg. 2001;88:1525–1532.

- 11. Bridges M, Diamond DL. The financial impact of teaching surgical residents in the operating room. Am J Surg. 1999;177:28–32.

- 12. Mackay S, Morgan P, Datta V, et al. Practice distribution in procedural skills training: a randomized controlled trial. Surg Endosc. 2002;16:957–961.

- 13. Ahlberg G, Heikkinen T, Iselius L, et al. Does training in a virtual reality simulator improve surgical performance? Surg Endosc. 2002;16:126–129.

- 14. Ali MR, Mowery Y, Kaplan B, et al. Training the novice in laparoscopy: more challenge is better. Surg Endosc. 2002;16:1732–1736.

- 15. Anastakis DJ, Regehr G, Reznick RK, et al. Assessment of technical skills transfer from the bench training model to the human model. Am J Surg. 1999;177:67–70.

- 16. Eversbusch A, Grantcharov TP. Learning curves and impact of psychomotor training on performance in simulated colonoscopy: a randomized trial using a virtual reality endoscopic trainer. Surg Endosc. 2004;18:1514–1518.

- 17. Fried GM, Derossis AM, Bothwell J, et al. Comparison of laparoscopic performance in vivo with performance measured in a laparoscopic simulator. Surg Endosc. 1999;13:1077–1081.

- 18. Grantcharov TP, Kristiansen VB, Bendix J, et al. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91:146–150.

- 19. Hamilton EC, Scott DJ, Kapoor A, et al. Improving operative performance using a laparoscopic hernia simulator. Am J Surg. 2001;182:725–728.

- 20. Hamilton EC, Scott DJ, Fleming JB, et al. Comparison of video trainer and virtual reality training systems on acquisition of laparoscopic skills. Surg Endosc. 2002;16:406–411.

- 21. Harold KL, Matthews BD, Backus CL, et al. Prospective randomized evaluation of surgical resident proficiency with laparoscopic suturing after course instruction. Surg Endosc. 2002;16:1729–1731.

- 22. Hyltander A, Liljegren E, Rhodin PH, et al. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16:1324–1328.

- 23. Jordan JA, Gallagher AG, McGuigan J, et al. A comparison between randomly alternating imaging, normal laparoscopic imaging, and virtual reality training in laparoscopic psychomotor skill acquisition. Am J Surg. 2000;180:208–211.

- 24. Jordan JA, Gallagher AG, McGuigan J, et al. Randomly alternating image presentation during laparoscopic training leads to faster automation to the ‘fulcrum effect’. Endoscopy. 2000;32:317–321.

- 25. Jordan JA, Gallagher AG, McGuigan J, et al. Virtual reality training leads to faster adaptation to the novel psychomotor restrictions encountered by laparoscopic surgeons. Surg Endosc. 2001;15:1080–1084.

- 26. Keyser EJ, Derossis AM, Antoniuk M, et al. A simplified simulator for the training and evaluation of laparoscopic skills. Surg Endosc. 2000;14:149–153.

- 27. Kothari SN, Kaplan BJ, DeMaria EJ, et al. Training in laparoscopic suturing skills using a new computer-based virtual reality simulator (MIST-VR) provides results comparable to those with an established pelvic trainer system. J Laparoendosc Adv Surg Tech. 2002;12:167–173.

- 28. Lehmann KS, Ritz JP, Maass H, et al. A prospective randomized study to test the transfer of basic psychomotor skills from virtual reality to physical reality in a comparable training setting. Ann Surg. 2005;241:442–449.

- 29. Matsumoto ED, Hamstra SJ, Radomski SB, et al. The effect of bench model fidelity on endourological skills: a randomized controlled study. J Urol. 2002;167:1243–1247.

- 30. Munz Y, Kumar BD, Moorthy K, et al. Laparoscopic virtual reality and box trainers. Surg Endosc. 2004;18:485–494.

- 31. Risucci D, Cohen JA, Garbus JE, et al. The effects of practice and instruction on speed and accuracy during resident acquisition of simulated laparoscopic skills. Curr Surg. 2001;58:230–235.

- 32. Scott DJ, Bergen PC, Rege RV, et al. Laparoscopic training on bench models: better and more cost effective than operating room experience? J Am Coll Surg. 2000;191:272–283.

- 33. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458–464.

- 34. Taffinder N, Sutton C, Fishwick RJ, et al. Validation of virtual reality to teach and assess psychomotor skills in laparoscopic surgery: results from randomised controlled studies using the MIST VR laparoscopic simulator. Stud Health Technol Inform. 1998;50:124–130.

- 35. Torkington J, Smith SGT, Rees BI, et al. Skill transfer from virtual reality to a real laparoscopic task. Surg Endosc. 2001;15:1076–1079.

- 36. Traxer O, Gettman MT, Napper CA, et al. The impact of intense laparoscopic skills training on the operative performance of urology residents. J Urol. 2001;166:1658–1661.

- 37. Watterson JD, Beiko DT, Kuan JK, et al. Randomized prospective blinded study validating acquisition of ureteroscopy skills using computer based virtual reality endourological simulator. J Urol. 2002;168:1928–1932.

- 38. Wilhelm DM, Ogan K, Roehrborn CG, et al. Assessment of basic endoscopic performance using a virtual reality simulator. J Am Coll Surg. 2002;195:675–681.

- 39. Youngblood PL, Srivastava S, Curet M, et al. Comparison of training on two laparoscopic simulators and assessment of skills transfer to surgical performance. J Am Coll Surg. 2005;200:546–551.

- 40. Edmond CV. Virtual reality and surgical simulation. In: Citardi MJ, ed. Computer-Aided Otorhinolaryngology-Head and Neck Surgery. New York: Marcel Dekker; 2002.

- 41. Champion HR, Gallagher AG. Surgical simulation: a ‘good idea whose time has come’. Br J Surg. 2003;90:767–768.