Abstract

Goal-directed self-motion through space is anything but a trivial task. What we take for granted in everyday life requires the complex interplay of different sensory and motor systems. On the sensory side most importantly a target of interest has to be localized relative to one's own position in space. On the motor side the most critical step in neural processing is to define and perform a movement towards the target as well as the avoidance of obstacles. Furthermore, the multisensory (visual, tactile and auditory) motion signals as induced by one's own movement have to be identified and differentiated from the real motion of visual, tactile or auditory objects in the outside world. In a number of experimental studies performed in recent years we and others have functionally characterized a subregion within monkey posterior parietal cortex (PPC) that appears to be well suited to contribute to such multisensory encoding of spatial and motion information. In this review I will summarize the most important experimental findings on the functional properties of this very region in monkey PPC, i.e. the ventral intraparietal area.

General introduction

Navigation in space generates a massive flow of sensory information that has to be analysed in order to move towards a target or to avoid obstacles. This task is anything but trivial. Signals arising from the different senses have to be synthesized into a coherent framework. Initially, all three sensory subsystems are organized in parallel and their respective information is encoded topographically at the first cortical stages. Yet, according to the different receptor epithelia these topographical maps are organized in different coordinate systems: visual information is represented retinocentrically in striate cortex, with a large over-representation of the foveal part of the retina. The whole body surface is represented in primary somatosensory cortex, yet here also the size of the representation of each body part is not homogeneous but rather reflects its functional significance. Finally, auditory information is represented tonotopically in primary auditory cortex. Accordingly, synthesizing all three different signals in order to generate a single and coherent representation of the outside world requires massive computational effort. Nevertheless, responses to signals from all three modalities are found in single cells in monkey parietal cortex. This review describes the latest findings on how these signals are combined and how they are used to construct a multisensory representation of spatial and motion information. More specifically, I will concentrate on the description of response properties of neurones within one specific subregion of posterior parietal cortex, i.e. the ventral intraparietal area (VIP) of the macaque.

Localization of targets and object avoidance

Movement towards a target in space requires complex sensorimotor processing. First of all a target has to be localized in space. This is not trivial given that the dominant sensory signal, i.e. the visual information, is initially encoded in retinal coordinates. Yet, during active exploration we constantly move our eyes. Hence an object's image on the retina shifts while the object itself might be stable in the outside world. As a consequence, our movements have to be planned and performed with respect to our body (egocentrically) or even with respect to the surroundings (allocentrically) rather than with respect to the fovea. Obviously, this requires a transformation of the visual signals from retinal to body(-part) or world coordinates. Andersen and colleagues performed the most influential studies related to this issue in the early 1980s. In a first experimental study Andersen and Mountcastle demonstrated an influence of the angle of gaze on the visual responses of neurones in the posterior parietal cortex of the monkey (Andersen & Mountcastle, 1983). In their experiments, they presented optimal visual stimuli at identical retinal locations while the monkey gazed in different directions. The authors showed that, although the stimulus was identical in all cases, the neuronal discharge changed systematically as a function of eye position. In most cases, the neuronal discharge increased or decreased linearly with varying gaze.

In a combined experimental and theoretical follow-up study Zipser and Andersen showed that this eye position signal can be used to transform visual signals from an eye-centred into a head-centred representation (Zipser & Andersen, 1988). They had trained a back-propagation network to represent visual stimuli in head-centred coordinates. The retinal location of the visual stimulus and the information on gaze direction served as input signal. After training, the units in the hidden layer revealed response properties identical to those previously recorded by the same authors from area 7a in posterior parietal cortex (PPC). This was taken as strong evidence for an ongoing coordinate transformation of visual signals within monkey PPC. Furthermore, this finding fitted nicely with observations from neuropsychological studies on parietal patients who, after lesion of their (mostly right hemispheric) PPC, could no longer orientate and navigate within space.
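The idea that retinal tuning combined with a linear gaze modulation suffices for a head-centred read-out can be illustrated with a minimal sketch. It is not the Zipser and Andersen network: there is no back-propagation, space is reduced to one dimension, and the Gaussian tuning width, the gain slopes, the number of units and the least-squares read-out are all assumptions chosen for illustration.

```python
import numpy as np

def gain_field_response(retinal_pos, eye_pos, rf_centre, gain_slope,
                        rf_width=10.0, gain_offset=1.0):
    """Hypothetical unit: Gaussian retinal tuning scaled by a linear gaze gain."""
    tuning = np.exp(-0.5 * ((retinal_pos - rf_centre) / rf_width) ** 2)
    gain = gain_offset + gain_slope * eye_pos  # discharge varies linearly with gaze
    return tuning * gain

# Assumed population: RF centres tile the retina (deg); each centre occurs once
# with a gain that rises and once with a gain that falls with rightward gaze.
rf_centres = np.repeat(np.arange(-40.0, 41.0, 5.0), 2)
gain_slopes = np.tile([0.02, -0.02], rf_centres.size // 2)

def population_response(retinal_pos, eye_pos):
    """Responses of all units, shape (n_samples, n_units)."""
    r = np.atleast_1d(retinal_pos)[:, None]
    e = np.atleast_1d(eye_pos)[:, None]
    return gain_field_response(r, e, rf_centres[None, :], gain_slopes[None, :])

# Fit a linear read-out of head-centred position (retinal position + eye position).
grid = np.linspace(-20.0, 20.0, 21)
train_r, train_e = [a.ravel() for a in np.meshgrid(grid, grid)]
weights = np.linalg.lstsq(population_response(train_r, train_e),
                          train_r + train_e, rcond=1e-8)[0]

# Evaluate the read-out on stimulus/gaze combinations not used for fitting.
rng = np.random.default_rng(0)
test_r = rng.uniform(-20.0, 20.0, 200)
test_e = rng.uniform(-20.0, 20.0, 200)
decoded = population_response(test_r, test_e) @ weights
mean_abs_error = np.mean(np.abs(decoded - (test_r + test_e)))

# Identical retinal stimulus at three gaze directions: the rate changes linearly.
rates = [gain_field_response(0.0, e, rf_centre=0.0, gain_slope=0.02)
         for e in (-10.0, 0.0, 10.0)]
print(f"mean decoding error: {mean_abs_error:.3f} deg, single-unit rates: {rates}")
```

In this toy population no single unit is head-centred; the head-centred position is only available from the weighted sum across units, which is the sense in which gaze-modulated responses can support a population code.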

Since then, we and others have shown that such eye position effects exist not only in parietal cortex but are far more widespread and probably can be found in the whole visual system, starting from striate cortex (area V1) up to area 7a along the dorsal stream (Bremmer et al. 1997a, c, 1998, 1999a; Trotter & Celebrini, 1999) and via area V4 up to area inferotemporal cortex (TE) and the hippocampal formation along the ventral stream (Nowicka & Ringo, 2000; Bremmer, 2000; Rosenbluth & Allman, 2002). Furthermore, eye position effects with similar response properties are also found in premotor cortex (Boussaoud et al. 1998; Boussaoud & Bremmer, 1999) and even subcortically (Van Opstal et al. 1995).

In our studies we could show that eye position effects in all areas fulfil the prerequisites for a population coding of visual information in a head-centred frame of reference. Accordingly, the behavioural deficits as observed specifically after damage to the PPC cannot be due to lesioned neurones carrying such a gaze signal. Rather this deficit must be related to specific neural processing within PPC and its specific anatomical connections to other sensorimotor regions of the brain.

One such specific neural processing step seems to be performed in area VIP and relates to the reference frame of visual information. Inspired by the work of Andersen and colleagues we asked whether, at the single cell level, visual information in area VIP might be encoded in a coordinate system other than a retinal one (Duhamel et al. 1997). At this stage it is important to mention that the experiments in this and all our other studies mentioned in this review were conducted according to contemporary welfare standards. To be more precise, all animal procedures were carried out in accordance with national French, German and international published guidelines on the use of animals in research (European Communities Council Directive 86/609/EEC) and were approved by the local ethics committees.

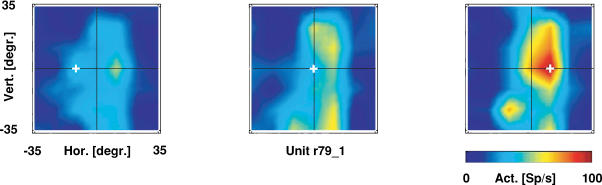

In our experiments, we mapped the location of the visual receptive field (RF) of single VIP neurones while the monkey fixated at different locations on a screen. It turned out that for many neurones the location of the visual RF was independent of gaze direction. One such example is shown in Fig. 1 for varying horizontal gaze. In this figure each colour-coded map shows the RF during fixation at a different location along the horizontal meridian. It becomes obvious that the location of the RF remains stable while the gaze changes from left (left map) to right (right map) from the vertical meridian. In other words, this single VIP neurone encodes visual information in a head-centred reference frame. In addition, response strength increases from left to right. Therefore, even neurones coding visual information in head-centred coordinates might carry an eye position signal.

Figure 1. Receptive field maps for varying eye positions.

The position of each colour-coded map (red: high neural activity (spikes s−1); blue: low neural activity) represents the fixation location (given by the ‘+’ sign) during receptive field (RF) mapping. Maps are shown in screen coordinates. While the gaze shifts from left to right, the RF stays stationary on the screen, i.e. this neurone encodes visual information along the horizontal axis in head-centred coordinates. In addition, response strength increases for more rightward fixation locations.

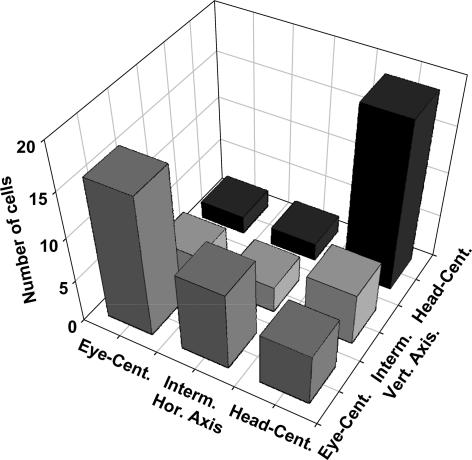

We quantified the stability of this signal transformation by determining the shift in the RF location associated with a given shift in eye position by computing a 2-D cross-correlation analysis. This computation was performed independently for the horizontal and vertical axis since eye positions also varied along these two axes. This analysis allowed us to define a shift index SI = (ΔRF/ΔEye). Accordingly, a shift index of SI = 1.0 represents an eye-centred encoding (the RF shifts by the same amount (ΔRF) as the underlying shift in gaze direction (ΔEye)), while SI = 0.0 indicates a complete head-centred encoding. Figure 2 shows the distribution of shift indices as observed in our study. It becomes obvious that the majority of cells encodes either in an eye- or a head-centred reference frame. Nevertheless, some neurones encode visual information in an intermediate frame of reference. We suggest that this intermediate type of encoding reflects the way in which the brain represents spatial information. Recent theoretical studies have given evidence as to why such an encoding might be of computational advantage for the sensorimotor system. Due to limited space in this review we refer to the original work of Pouget et al. for more on this issue (e.g. Pouget et al. 2002; Deneve & Pouget, 2004).

Figure 2. Distribution of spatial reference frames.

As described in more detail in the main text we determined for each individual neurone its reference frame for visual spatial information along the horizontal and vertical axis (see Fig. 1). Neurones whose visual RF shifted completely with the eye were considered eye-centred. Those neurones whose visual RF did not shift at all with the eye were considered head-centred. Interestingly, a number of neurones fell in an intermediate class that is neither eye- nor head-centred.
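The shift index SI = ΔRF/ΔEye introduced above can be made concrete with a small sketch. This is not the analysis code of the original study: the RF maps are synthetic Gaussians, the map resolution and the search range are assumed values, and the displacement is taken at the peak of a Pearson correlation computed over the overlapping part of the two maps, which is one possible way to implement a 2-D cross-correlation. All function names are hypothetical.

```python
import numpy as np

BIN_DEG = 1.0                                # assumed map resolution (deg per bin)
axis_deg = np.arange(-30.0, 31.0, BIN_DEG)   # screen coordinates of the map bins
grid_x, grid_y = np.meshgrid(axis_deg, axis_deg)

def gaussian_rf(centre_x, centre_y, gain=1.0, width=8.0):
    """Synthetic RF map in screen coordinates (a stand-in for recorded data)."""
    return gain * np.exp(-((grid_x - centre_x) ** 2 + (grid_y - centre_y) ** 2)
                         / (2.0 * width ** 2))

def rf_displacement(map_a, map_b, max_shift=15):
    """Displacement (dx, dy) in bins of map_b relative to map_a at peak correlation."""
    n_rows, n_cols = map_a.shape
    best_r, best_shift = -np.inf, (0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            # Pair bin (i, j) of map_a with bin (i + dy, j + dx) of map_b.
            part_a = map_a[max(0, -dy):n_rows - max(0, dy),
                           max(0, -dx):n_cols - max(0, dx)]
            part_b = map_b[max(0, dy):n_rows - max(0, -dy),
                           max(0, dx):n_cols - max(0, -dx)]
            r = np.corrcoef(part_a.ravel(), part_b.ravel())[0, 1]
            if r > best_r:
                best_r, best_shift = r, (dx, dy)
    return best_shift

def shift_index(map_a, map_b, delta_eye_deg):
    """Horizontal shift index SI = dRF / dEye for a horizontal gaze shift."""
    dx_bins, _ = rf_displacement(map_a, map_b)
    return dx_bins * BIN_DEG / delta_eye_deg

delta_eye = 10.0                     # gaze moves 10 deg to the right
reference = gaussian_rf(-5.0, 0.0)   # RF map at the first fixation location

# RF stays put on the screen (only the response strength changes): head-centred.
si_head = shift_index(reference, gaussian_rf(-5.0, 0.0, gain=1.5), delta_eye)
# RF moves on the screen by the full gaze shift: eye-centred.
si_eye = shift_index(reference, gaussian_rf(5.0, 0.0), delta_eye)
# RF moves by half the gaze shift: intermediate reference frame.
si_mid = shift_index(reference, gaussian_rf(0.0, 0.0), delta_eye)
print(si_head, si_eye, si_mid)
```

Because the correlation coefficient is insensitive to overall response strength, a gaze-dependent gain change such as the one in Fig. 1 does not by itself alter the shift index in this sketch.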

To summarize this first part of my review: I have shown that area VIP carries signals relevant for localizing targets in space. Some neurones encode visual information in eye-centred coordinates. The combination of this information with gaze signals allows for a population code of visual information in non-retinal coordinates. In addition, even single neurones encode visual information in head-centred (or even body-centred: Prevosto et al. 2004) coordinates.

Such a representation of visual information is suited to localize targets in space and even to avoid obstacles on the way towards these targets. Indeed, evidence for a putative role of area VIP in object avoidance comes from a recent study by Graziano and colleagues. These authors could show that electrical microstimulation of area VIP results in defensive movements such as those observed during avoidance of (approaching) objects (Cooke et al. 2003).

Multisensory encoding of self and object motion

The visual domain

In an initial study on visual response properties Colby et al. (1993) showed that VIP neurones are selective for the direction and speed of visual stimulus motion. In a follow-up study we and others aimed at characterizing these response characteristics in greater detail. There was reason to assume that VIP neurones would respond selectively to visual self-motion stimuli: firstly, area VIP, like the medial superior temporal area (MST), receives its dominant sensory input from the middle temporal area (area MT), and many studies have described visual self-motion responses in area MST (e.g. Duffy & Wurtz 1991a, 1991b, 1995; Lappe et al. 1996). Hence, it could be the case that VIP neurones would also respond to optic flow stimuli. Secondly, Hilgetag et al. (1996) showed in a theoretical study that both areas (MST and VIP) are at the same hierarchical level of the dorsal visual processing stream. Therefore, again, it could be assumed that both areas might also share specific response features.

Accordingly, for our experiments, awake behaving monkeys were sitting in front of a large tangential screen while fixating a central target (Bremmer et al. 1997b, 2002a). During fixation we presented visual stimuli simulating either a forward (expansion) or backward (contraction) motion, with gaze direction and movement direction either being co-aligned (expansion) or anti-aligned (contraction). In such cases (translational movement with fixed gaze), the heading direction is given by the singularity of the optic flow (SOF), i.e. the very point in the visual field without any net motion (Gibson, 1950).
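The relation between heading and the singularity of the optic flow can be sketched with the standard pinhole-projection equations for pure observer translation. This is an illustration only and not the stimulus code of the experiments: the dot positions, the depth range, the focal length of 1 and the least-squares recovery of the singularity are assumptions, and the function names are hypothetical.

```python
import numpy as np

def translational_flow(x, y, depth, translation, focal_length=1.0):
    """Image velocity of static dots for an observer translating with fixed gaze."""
    tx, ty, tz = translation
    u = (x * tz - focal_length * tx) / depth
    v = (y * tz - focal_length * ty) / depth
    return u, v

def estimate_singularity(x, y, u, v):
    """Least-squares location (x0, y0) of the point from which the flow radiates."""
    # Every flow vector lies on the line through its dot and the singularity:
    # u * (y - y0) - v * (x - x0) = 0   ->   v * x0 - u * y0 = v * x - u * y
    design = np.column_stack([v, -u])
    target = v * x - u * y
    x0, y0 = np.linalg.lstsq(design, target, rcond=None)[0]
    return x0, y0

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 200)       # dot positions on the image plane
y = rng.uniform(-1.0, 1.0, 200)
depth = rng.uniform(1.0, 5.0, 200)    # assumed distances of the dots

# Forward motion with the heading 25 deg to the right of the line of sight.
heading_deg = 25.0
forward = (np.tan(np.radians(heading_deg)), 0.0, 1.0)
u, v = translational_flow(x, y, depth, forward)
x0, y0 = estimate_singularity(x, y, u, v)
recovered_heading_deg = np.degrees(np.arctan2(x0, 1.0))

# Backward motion along the line of sight: every dot moves towards the centre.
u_back, v_back = translational_flow(x, y, depth, (0.0, 0.0, -1.0))
print(recovered_heading_deg, np.all(u_back * x + v_back * y < 0))
```

Although the speed of each dot depends on its distance, the direction of every flow vector points away from (expansion) or towards (contraction) the same image location, which is why the singularity specifies the heading under these conditions.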

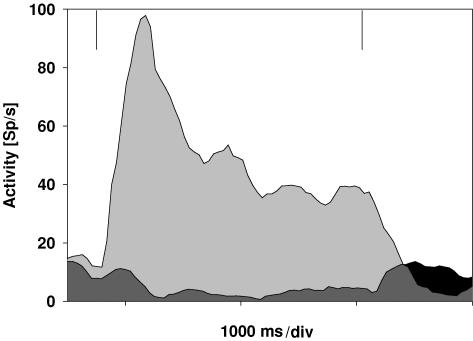

About 75% (67/90) of the neurones in area VIP responded selectively to optic flow stimuli with a majority preferring expansion to contraction stimuli. One such example is shown in Fig. 3. The two spike density curves show the responses of a cell for simulated forward motion (expansion, light grey) and simulated backward motion (contraction, dark grey). The cell reveals a clear preference for forward motion. For contraction, the discharge is even inhibited and reveals a release from inhibition after motion offset.

Figure 3. Neuronal responses for expansion and contraction stimuli.

The spike density curves show the data for testing a cell with stimuli simulating forward (expansion; light grey) and backward (contraction; dark grey and black) motion. The vertical lines indicate stimulus on- and offset. The cell clearly preferred simulated forward motion.

At the population level, the average response for an expansion stimulus was significantly stronger compared to the response for a contraction stimulus (rank sum test, P < 0.01). Our results are in accordance with data obtained by Duysens and colleagues (Schaafsma & Duysens, 1996).

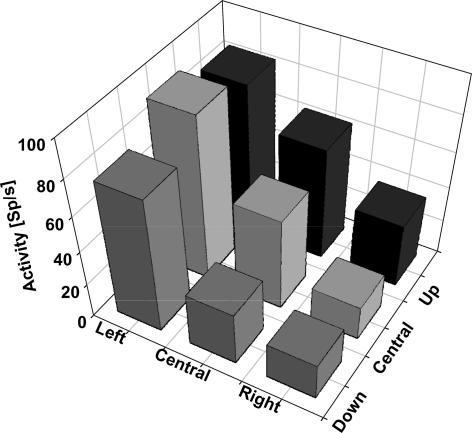

As a second step we were interested in the question of whether the neuronal response strength might be influenced by the location of the singularity of the optic flow, i.e. whether cells are tuned for different heading directions. Hence, we tested neurones for their responses to expansion stimuli with the singularity at one of nine possible focus locations. Possible focus locations were the screen centre or one of eight foci shifted 25 deg into the periphery. The vast majority of neurones (95%) were indeed tuned for heading direction. An example is shown in Fig. 4. Mean discharges for the nine different focus locations are shown in a 3-D plot. Variation of the focus location had a significant influence on the neuronal discharges (P < 0.001).

Figure 4. Tuning for heading direction at the single cell level.

Each column indicates the mean response for a stimulus with the singularity (i.e. heading direction) in a given part of the visual field. Heading directions were either straight ahead (central column) or 25 deg in the periphery. This cell clearly preferred heading to the left.

At the population level, the modulatory influence of the SOF location on the discharge was balanced out. Hence, all heading directions are encoded equally strongly. This result is similar to data previously obtained from single cell recordings in area MST (Lappe et al. 1996). In general, this response characteristic can be considered a prerequisite for a population code of the current heading direction. We could indeed show that a population of VIP neurones is capable of encoding the location of the SOF within the visual field (Bremmer et al. 2002a). In this approach, we applied our previously developed encoding scheme (isofrequency encoding, Boussaoud & Bremmer, 1999) to the responses obtained from a sample of n = 54 neurones from two animals. Heading direction could be predicted from the neuronal discharges with an error of less than 5 deg; a reasonably good value given the relatively small sample size. Yet, for this computation the whole response length had to be considered. To be biologically plausible, heading direction computation must be much faster. We hence tested the dynamics of heading direction encoding based on our isofrequency algorithm. We could show that heading direction is determined already after about t = 400 ms. This processing time is well in line with psychophysical studies on the dynamics of the perception of heading direction in humans (Hooge et al. 1999).

The results described so far imply that area VIP in the macaque is involved in the encoding of self-motion. Yet, motion on the retina can result from real object motion in the outside world as well. We hence asked whether neurones in area VIP are also activated during the tracking of real moving objects and, if so, how the two motion signals would relate to each other (Schlack et al. 2003). In this experimental series monkeys had to track small targets moving in one of four possible directions along the cardinal axes. Our recordings showed that more than half of the VIP neurones respond in a directionally selective manner during smooth pursuit eye movements. Interestingly, the preferred directions for smooth pursuit eye movements and for translational motion were identical only in a minority of cases. For about half of the cells the directional difference between both motion signals was in the range of 180 deg. Hence, such cells could be used to differentiate between retinal motion due to self-motion and retinal motion due to object motion.

The vestibular domain

So far, we have shown that neurones in area VIP are capable of encoding object motion and visually simulated self-motion. An area with a putative role in the encoding of movement in space, however, is supposed to represent not only simulated but rather real motion signals such as those arising from the vestibular organ. Stimulation of this organ, i.e. of the semicircular canals and the otoliths, occurs during rotational and translational self-motion, respectively. In recent studies we have been able to show that many neurones in area VIP indeed carry such self-motion signals (Bremmer et al. 2002b; Schlack et al. 2002).

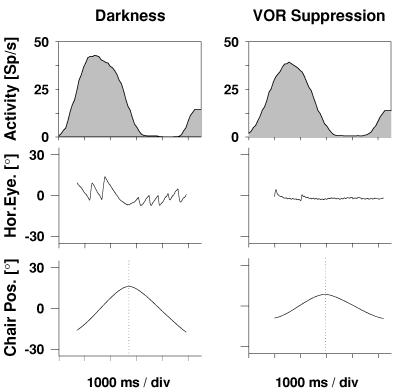

In an initial study, neurones were tested for rotational vestibular responsiveness. During such stimulation, animals (like humans) perform reflexive, compensatory eye movements. In order to exclude the possibility that these compensatory eye movements would drive the neurones, some were tested during suppression of the vestibulo-ocular reflex (VOR) (see Fig. 5). It turned out that all neurones kept their selectivity for vestibular stimulation across the different experimental conditions. Thus, area VIP represents real rotational self-motion signals. This finding was confirmed in a recent study by Klam and Graf showing that VIP neurones are also often active during active compared to passive head movements (Klam & Graf, 2003).

Figure 5. Responses to rotational vestibular stimulation.

The upper two spike density curves show the responses of a single cell to rotational vestibular stimulation in darkness with free eye movements (left) and during VOR suppression (right). The middle panels show sample horizontal eye position traces. The bottom panels depict the position of the horizontal turntable. This cell responded to rightward motion, irrespective of whether the eye movements were suppressed or not.

All neurones with vestibular responses also showed directionally selective visual responses. Interestingly, preferred directions for visual and for vestibular stimulation were always co-directional. Considering geometrical properties, this can be taken as a hint for the role of area VIP in the coding of motion in near-extrapersonal space. Indeed, in a parallel study we could show that the vast majority of VIP neurones preferred near-moving over far-moving stimuli. In this study we presented dichoptically visual stimuli moving on a circular pathway (Schoppmann & Hoffmann, 1976) at different virtual depths. Stimulus speed was adjusted so that the retinal speed was identical across the different disparity levels. Our recordings revealed that the vast majority of cells preferred stimuli moving in near space, i.e. between the animal's eyes and the plane of fixation. This result is in accordance with data from a previous study using monocular stimulation in near and ultra-near space (Colby et al. 1993). This result, however, is different from that reported for the dorsal part of area MST (MSTd). Roy and colleagues (Roy & Wurtz, 1990; Roy et al. 1992) showed that about the same proportion of cells is tuned for near and for far space. Furthermore, about 40% of the MSTd cells reverse their directional tuning dependent on whether motion occurs in near or far space. However, we could not observe such a reversal of the preferred direction of visual stimulus motion in area VIP (Bremmer & Kubischik, 1999).

In a further study, we tested VIP neurones for their responsiveness to linear translational motion (Schlack et al. 2002). In this experimental series, monkeys were moved sinusoidally on a parallel swing. More than 70% of the neurones responded to pure vestibular stimulation, i.e. linear translation in darkness. Again, like the responses to simulated self-motion, these results are very similar to data obtained from area MST (Duffy, 1998; Bremmer et al. 1999b).

Responses of VIP neurones were often tuned to the direction of the swing movement as well as of visual motion. Yet, preferred directions for the vestibular stimulation coincided with the preferred direction for visually simulated self-motion only in about half of the cases. For those cells with opposite visual and vestibular on-directions, preferred directions during bimodal stimulation were dominated equally often by the visual and vestibular modality. We conclude that, as for the preferred directions of smooth pursuit and translational motion, these non-synergistic response preferences could be used to differentiate between self-induced motion and external object motion.

The somatosensory and auditory domain

In addition to visual and vestibular information, somatosensory and auditory signals can also be used to signal self-motion. Previous studies have shown that many VIP neurones respond to tactile stimulation (Colby et al. 1993; Duhamel et al. 1998). Somatosensory receptive fields typically cover portions of the head, with the upper and lower facial areas being represented in an approximately equivalent manner. Interestingly, somatosensory and visual receptive fields tend to be spatially congruent. As an example, a visual receptive field in the upper left quadrant is often accompanied by a tactile RF of the very same cell on the left forehead. In addition, tactile responses are often directionally selective with preferred directions in both the visual and somatosensory domains being very similar. We suggest that this type of spatial congruency might be used for a congruent supramodal encoding of motion.

Finally, in a recent study we demonstrated for the first time responses to auditory stimuli (Schlack et al. 2005). In this experimental series, single unit activity was recorded during exposure to auditory and visual stimuli. For auditory stimulation we employed stationary white noise bursts presented via calibrated headphones. Signals were filtered with individually measured head-related transfer functions. The stimuli were positioned at various distinct virtual positions in the environment. The visual receptive fields (RFs) were mapped with stimulation patches presented at non-overlapping locations on a projection screen. With this experimental setup it was possible to measure both the auditory and the visual RFs and compare their spatial locations. Reliable visual and auditory RFs could be measured for more than two-thirds of the neurones. More than 60% of these responsive cells revealed a similar response pattern, i.e. RFs in the two sensory domains were spatially congruent.

Summary

I have shown in this review that the ventral intraparietal area (VIP) is a likely candidate for the multisensory encoding of spatial and motion information as required for goal-directed movements in external space. Single cells as well as populations of neurones are capable of signalling visual spatial locations in a non-retinocentric frame of reference. Many neurones are multisensory, i.e. they respond to visual, vestibular, tactile and auditory stimulation. These multimodal responses might be used to differentiate between self-induced motion signals and signals arising from moving objects in the outside world. Further experiments, however, are needed to establish the specific functional role of area VIP for navigation in space.

Acknowledgments

This work was supported by the DFG (SFB 509/B7; Br 2282/1-1), the European Union (hcm: chrxct930267; EUROKINESIS QLK6-CT-2002-00151), and the Human Frontier Science Program (rg71/96b; RG0149/1999B).

References

- Andersen RA, Mountcastle VB. The influence of the angle of gaze upon the excitability of the light-sensitive neurons of the posterior parietal cortex. J Neurosci. 1983;3:532–548. doi: 10.1523/JNEUROSCI.03-03-00532.1983.

- Boussaoud D, Bremmer F. Gaze effects in the cerebral cortex: reference frames for space coding and action. Exp Brain Res. 1999;128:170–180. doi: 10.1007/s002210050832.

- Boussaoud D, Jouffrais C, Bremmer F. Eye position effects on the neuronal activity of dorsal premotor cortex in the macaque monkey. J Neurophysiol. 1998;80:1132–1150. doi: 10.1152/jn.1998.80.3.1132.

- Bremmer F. Eye position effects in macaque area V4. Neuroreport. 2000;11:1277–1283. doi: 10.1097/00001756-200004270-00027.

- Bremmer F, Distler C, Hoffmann KP. Eye position effects in monkey cortex. II. Pursuit- and fixation-related activity in posterior parietal areas LIP and 7A. J Neurophysiol. 1997a;77:962–977. doi: 10.1152/jn.1997.77.2.962.

- Bremmer F, Duhamel J-R, Ben Hamed S, Graf W. The representation of movement in near extra-personal space in the macaque ventral intraparietal area (VIP). In: Thier P, Karnath H-O, editors. Parietal Lobe Contributions to Orientation in 3D Space. Heidelberg: Springer Verlag; 1997b. pp. 619–630.

- Bremmer F, Duhamel J-R, Ben Hamed S, Graf W. Heading encoding in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002a;16:1554–1568. doi: 10.1046/j.1460-9568.2002.02207.x.

- Bremmer F, Graf W, Ben Hamed S, Duhamel JR. Eye position encoding in the macaque ventral intraparietal area (VIP). Neuroreport. 1999a;10:873–878. doi: 10.1097/00001756-199903170-00037.

- Bremmer F, Ilg UJ, Thiele A, Distler C, Hoffmann KP. Eye position effects in monkey cortex. I. Visual and pursuit-related activity in extrastriate areas MT and MST. J Neurophysiol. 1997c;77:944–961. doi: 10.1152/jn.1997.77.2.944.

- Bremmer F, Klam F, Duhamel J-R, Ben Hamed S, Graf W. Visual-vestibular interactive responses in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002b;16:1568–1586. doi: 10.1046/j.1460-9568.2002.02206.x.

- Bremmer F, Kubischik M. Representation of near extrapersonal space in the macaque ventral intraparietal area (VIP). Abstr Soc Neurosci. 1999;25:1164.

- Bremmer F, Kubischik M, Pekel M, Lappe M, Hoffmann KP. Linear vestibular self-motion signals in monkey medial superior temporal area. Ann N Y Acad Sci. 1999b;871:272–281. doi: 10.1111/j.1749-6632.1999.tb09191.x.

- Bremmer F, Pouget A, Hoffmann KP. Eye position encoding in the macaque posterior parietal cortex. Eur J Neurosci. 1998;10:153–160. doi: 10.1046/j.1460-9568.1998.00010.x.

- Colby CL, Duhamel J-R, Goldberg ME. Ventral intraparietal area of the macaque: anatomical location and visual response properties. J Neurophysiol. 1993;69:902–914. doi: 10.1152/jn.1993.69.3.902.

- Cooke DF, Taylor CS, Moore T, Graziano MS. Complex movements evoked by microstimulation of the ventral intraparietal area. Proc Natl Acad Sci U S A. 2003;100:6163–6168. doi: 10.1073/pnas.1031751100.

- Deneve S, Pouget A. Bayesian multisensory integration and cross-modal spatial links. J Physiol Paris. 2004;98:249–258. doi: 10.1016/j.jphysparis.2004.03.011.

- Duffy CJ. MST neurons respond to optic flow and translational movement. J Neurophysiol. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. I: a continuum of response selectivity to large-field stimuli. J Neurophysiol. 1991a;65:1329–1345. doi: 10.1152/jn.1991.65.6.1329.

- Duffy CJ, Wurtz RH. Sensitivity of MST neurons to optic flow stimuli. II: mechanisms of response selectivity revealed by small-field stimuli. J Neurophysiol. 1991b;65:1346–1359. doi: 10.1152/jn.1991.65.6.1346.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. J Neurosci. 1995;15:5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995.

- Duhamel JR, Bremmer F, BenHamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- Duhamel JR, Colby CL, Goldberg ME. Ventral intraparietal area of the macaque: congruent visual and somatic response properties. J Neurophysiol. 1998;79:126–136. doi: 10.1152/jn.1998.79.1.126.

- Gibson JJ. The Perception of the Visual World. Boston: Houghton Mifflin; 1950.

- Hilgetag CC, O'Neill MA, Young MP. Indeterminate organization of the visual system. Science. 1996;271:776–777. doi: 10.1126/science.271.5250.776.

- Hooge IT, Beintema JA, Van den Berg AV. Visual search of heading direction. Exp Brain Res. 1999;129:615–628. doi: 10.1007/s002210050931.

- Klam F, Graf W. Vestibular signals of posterior parietal cortex neurons during active and passive head movements in macaque monkeys. Ann N Y Acad Sci. 2003;1004:271–282. doi: 10.1196/annals.1303.024.

- Lappe M, Bremmer F, Pekel M, Thiele A, Hoffmann KP. Optic flow processing in monkey STS: a theoretical and experimental approach. J Neurosci. 1996;16:6265–6285. doi: 10.1523/JNEUROSCI.16-19-06265.1996.

- Nowicka A, Ringo JL. Eye position-sensitive units in hippocampal formation and in inferotemporal cortex of the macaque monkey. Eur J Neurosci. 2000;12:751–759. doi: 10.1046/j.1460-9568.2000.00943.x.

- Pouget A, Deneve S, Duhamel JR. A computational perspective on the neural basis of multisensory spatial representations. Nat Rev Neurosci. 2002;3:741–747. doi: 10.1038/nrn914.

- Prevosto V, Klam F, Graf W. Visual receptive field organization and spatial reference transformation in intraparietal neurons (area VIP and LIP) of macaque monkeys. Program No. 487.8. 2004 Abstract Viewer/Itinerary Planner. Washington, DC: Society for Neuroscience; 2004.

- Rosenbluth D, Allman JM. The effect of gaze angle and fixation distance on the responses of neurons in V1, V2, and V4. Neuron. 2002;33:143–149. doi: 10.1016/s0896-6273(01)00559-1.

- Roy J-P, Komatsu H, Wurtz RH. Disparity sensitivity of neurons in monkey extrastriate area MST. J Neurosci. 1992;12:2478–2492. doi: 10.1523/JNEUROSCI.12-07-02478.1992.

- Roy J-P, Wurtz RH. The role of disparity-sensitive cortical neurons in signalling the direction of self-motion. Nature. 1990;348:160–162. doi: 10.1038/348160a0.

- Schaafsma SJ, Duysens J. Neurons in the ventral intraparietal area of awake macaque monkey closely resemble neurons in the dorsal part of the medial superior temporal area in their responses to optic flow patterns. J Neurophysiol. 1996;76:4056–4068. doi: 10.1152/jn.1996.76.6.4056.

- Schlack A, Hoffmann KP, Bremmer F. Interaction of linear vestibular and visual stimulation in the macaque ventral intraparietal area (VIP). Eur J Neurosci. 2002;16:1877–1886. doi: 10.1046/j.1460-9568.2002.02251.x.

- Schlack A, Hoffmann KP, Bremmer F. Selectivity of macaque ventral intraparietal area (area VIP) for smooth pursuit eye movements. J Physiol. 2003;551:551–561. doi: 10.1113/jphysiol.2003.042994.

- Schlack A, Sterbing S, Hartung K, Hoffmann K-P, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25:4616–4625. doi: 10.1523/JNEUROSCI.0455-05.2005.

- Schoppmann A, Hoffmann K-P. Continuous mapping of direction selectivity in the cat's visual cortex. Neurosci Lett. 1976;2:177–181. doi: 10.1016/0304-3940(76)90011-2.

- Trotter Y, Celebrini S. Gaze direction controls response gain in primary visual-cortex neurons. Nature. 1999;398:239–242. doi: 10.1038/18444.

- Van Opstal AJ, Hepp K, Suzuki Y, Henn V. Influence of eye position on activity in monkey superior colliculus. J Neurophysiol. 1995;74:1593–1610. doi: 10.1152/jn.1995.74.4.1593.

- Zipser D, Andersen RA. A back-propagation programmed network that simulates response properties of a subset of posterior parietal neurons. Nature. 1988;331:679–684. doi: 10.1038/331679a0.