Abstract

Bottlenose dolphins (Tursiops truncatus) develop individually distinctive signature whistles that they use to maintain group cohesion. Unlike the development of identification signals in most other species, signature whistle development is strongly influenced by vocal learning. This learning ability is maintained throughout life, and dolphins frequently copy each other’s whistles in the wild. It has been hypothesized that signature whistles can be used as referential signals among conspecifics, because captive bottlenose dolphins can be trained to use novel, learned signals to label objects. For this labeling to occur, signature whistles would have to convey identity information independent of the caller’s voice features. However, experimental proof for this hypothesis has been lacking. This study demonstrates that bottlenose dolphins extract identity information from signature whistles even after all voice features have been removed from the signal. Thus, dolphins are the only animals other than humans that have been shown to transmit identity information independent of the caller’s voice or location.

Keywords: animal communication, individual recognition, Tursiops truncatus

Identity information is encoded in most animal calls, and can give details of species, population, group, family line, and/or individual identity. Although most animals encode some identity information in their calls, comparatively few studies have demonstrated individual discrimination or even recognition. If it occurs, it is usually based on general voice features that affect all call types produced by an individual and allow individual discrimination even if different animals produce the same call type (1–4). However, voice features are subtle and can be masked by background noise or lost over long transmission distances (5–7). Some species use specific cohesion calls in contexts of social separation. There are two types of such calls. The most common scenario is the use of an isolation or cohesion call type that is shared among all members of a species. Here, the identity information is again encoded in individual voice features that affect selected features of the call without changing its overall gestalt. These calls are common in mobile species that live in high-background-noise environments where group cohesion depends on vocal recognition. Penguins, for example, display tremendous interindividual variation in their cohesion calls, but all individuals of a species use the same type of call to maintain cohesion (8). The other strategy to increase distinctiveness of identity information is the use of an individually distinctive and unique call type by each individual. The assumption here is that the identity information is encoded in the call type, i.e., the pattern of frequency modulation over time that gives a spectrographic contour its distinctive shape, rather than in more subtle voice features of the individual. This strategy appears to be less common in animals. Several bird species develop individually specific elements or song types as part of their song displays, and these can be used to identify individuals (9, 10). 
Humans develop names to facilitate identification, and bottlenose dolphins (Tursiops truncatus) are thought to have a similar system based on the tremendous amount of interindividual variability in their signature whistles (11, 12) but not in other whistle types (13–15). Although theoretically the transition from subtle variations within a call type to different call types is a continuous one, there is a noticeable distinction between species that use either end of the spectrum. Developing individually distinctive call types appears to require vocal learning (9, 16–18), which may be one reason why such calls are rare in animals.

Observations of individually distinctive call types suggest that the identity information is encoded in the type difference rather than in voice cues, but few studies have confirmed this hypothesis. Humans and dolphins (11, 19) copy individually distinctive call types in vocal interactions, and at least humans are capable of using them as descriptive labels in referential communication. Bottlenose dolphins can be taught to use artificial signals to refer to objects (20). If this is a skill used with signature whistles, it may be a rare case of referential communication with learned signals in nonhumans. However, it is not known whether signature whistle shape carries identity information. Sayigh et al. (21) conducted a playback study in which they demonstrated that dolphins recognize related conspecifics, but this discrimination could have been based on voice features because original recordings of whistles were used. In this study, we address the question of whether individual discrimination through signature whistles is independent of such voice features, as it is in human naming. To test this question, we produced synthetic whistles that had the same frequency modulation but none of the voice features of known signature whistles. We conducted playback experiments to known individuals, testing their responses to synthetic signature whistles that resembled those of familiar related and unrelated individuals. We hypothesized that, as in the study of Sayigh et al. (21), animals would turn more often toward the speaker if they heard a whistle resembling that of a related individual.

Results

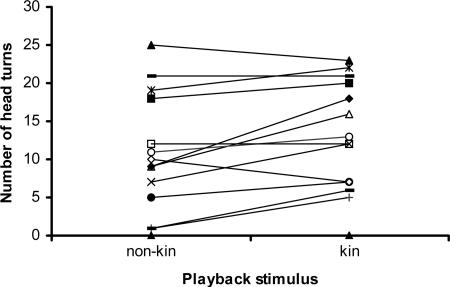

Individuals turned more toward the speaker if the playback was a synthetic signature whistle of a close relative than if it was a synthetic signature whistle of a known unrelated individual matched for age and sex (Z = 2.236, P = 0.025) (see Figs. 1 and 2). This effect was found for 9 of 14 animals; three animals showed no preference, and two turned more toward the stimulus that resembled an unrelated individual. This result demonstrates that the whistle modulation contour carries identity information that is used by receivers. We found no significant difference between the two stimulus classes in head turns away from the speaker (Z = 0.267, P = 0.789) or in whistle rates in response to playbacks (Z = 0.84, P = 0.401). To test whether kin discrimination or a response based on similarity to the target dolphin’s own whistle could explain our results, we cross-correlated spectrograms of the target animals’ whistles with those of the stimuli played to them, using the correlator in Avisoft-SASLab Pro (Avisoft Bioacoustics, Berlin). The whistles of target animals were not significantly more similar to those of related than to those of unrelated individuals (Z = 1.352, P = 0.176). We also tested whether target individuals responded more strongly to stimuli that were significantly more or less similar to their own whistle, independent of kin relationship, and again found no significant difference (Z = 1.406, P = 0.16). Thus, our results demonstrate individual rather than kin discrimination, based on the frequency modulation pattern of whistles.
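The similarity comparison above relied on Avisoft’s spectrogram correlator. As a rough illustration of what spectrogram cross-correlation measures, the Python sketch below computes a Hann-windowed magnitude spectrogram with NumPy and takes the maximum Pearson correlation over time lags. It is a stand-in under stated assumptions, not Avisoft’s algorithm: the FFT size, the hop size, the 48-kHz sample rate, and the restriction to lags at which the shorter spectrogram fully overlaps the longer one are illustrative choices, and the function names are hypothetical.

```python
import numpy as np


def spectrogram(x, n_fft=512, hop=128):
    """Magnitude spectrogram (frequency bins x time frames), Hann-windowed STFT."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack(
        [x[i * hop:i * hop + n_fft] * window for i in range(n_frames)], axis=1
    )
    return np.abs(np.fft.rfft(frames, axis=0))


def peak_correlation(s1, s2):
    """Maximum Pearson correlation between two spectrograms over time lags.

    The shorter spectrogram is slid along the longer one; only lags with full
    overlap are considered (a simplification). Both must share frequency bins.
    """
    if s1.shape[1] > s2.shape[1]:
        s1, s2 = s2, s1
    n = s1.shape[1]
    a = (s1 - s1.mean()) / s1.std()
    best = -1.0
    for lag in range(s2.shape[1] - n + 1):
        seg = s2[:, lag:lag + n]
        b = (seg - seg.mean()) / seg.std()
        # Mean of the product of z-scores equals Pearson r over all cells.
        best = max(best, float(np.mean(a * b)))
    return best


# Illustrative 0.5-s sweeps at an assumed 48-kHz sample rate (not study data).
fs = 48_000
t = np.arange(int(0.5 * fs)) / fs
upsweep = np.sin(2 * np.pi * (5_000 * t + 10_000 * t**2))     # 5 -> 15 kHz
downsweep = np.sin(2 * np.pi * (15_000 * t - 10_000 * t**2))  # 15 -> 5 kHz
print(peak_correlation(spectrogram(upsweep), spectrogram(upsweep)))
print(peak_correlation(spectrogram(upsweep), spectrogram(downsweep)))
```

Identical contours score 1.0, and a time-shifted copy still scores 1.0 at the matching lag; contours with different modulation patterns over the same band, such as an upsweep and a downsweep, score much lower.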

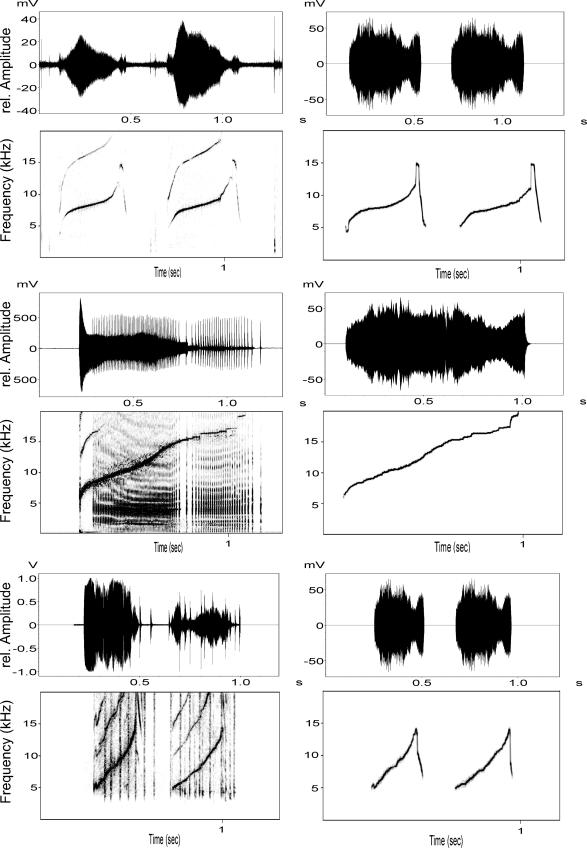

Fig. 1.

Spectrograms and waveforms of original whistles (Left) and their synthetic versions (Right) for three individuals. The first whistle is from a target animal, the second is its kin stimulus, and the third is its nonkin stimulus. This animal reacted more to its kin stimulus even though its own whistle was more similar to the nonkin stimulus. Synthetic whistles in this figure represent the input to the speaker system.

Fig. 2.

Turning responses toward the kin and nonkin synthetic whistle stimuli. Data for the same individual are connected by lines.

Discussion

This study demonstrates that, in the natural communication system of a nonhuman animal, individual identity information can be encoded independently of the signaler’s voice or location. We found not only that the frequency modulation pattern of signature whistles carries sufficient information for individual discrimination but also that receivers use this information to identify individuals. This finding does not mean that dolphins lack individually specific voice features. However, sound production is greatly affected by changing water pressure (22), which would make voice recognition more difficult underwater than in air. Bottlenose dolphins live in a three-dimensional environment with few landmarks or other visual cues that could aid group cohesion (23). In such conditions, signature whistles can facilitate continued contact between individuals. This is reflected in the fact that ≈50% of bottlenose dolphin whistles produced in the wild are signature whistles (24). Although variation is not unexpected in a biological system, we investigated why some individuals did not react in the expected way, either showing no difference in reactions (three animals) or turning more toward the stimulus resembling the unrelated individual (two animals). However, no pattern in our data provided an explanation. We suspect that this is a motivational issue that may relate to the exact nature of the relationship between our target animals and the animals whose whistles we used to create the test stimuli. Sayigh et al. (21) found similar individual variability in responses to playbacks of natural signature whistles.

Our results also suggest that animals recognize each other’s whistles individually rather than just discriminate between them. We define recognition as perceiving something to be identical with something previously known. Discrimination can use but does not require such previous knowledge if it is solely based on the comparison of distinctive features. The animals clearly discriminated between stimuli because they showed a preference for one class of stimuli. The question then is why they would develop such a preference. We see two possible explanations for such a preference. One is a bias toward kin based on a preference for an underlying feature in the modulation pattern used by related individuals. However, we did not find any evidence for a systematic bias in the stimuli that resembled those of a particular kin group. The other explanation, which our results strongly support, is that the animals recognized the preferred stimulus individually as one they had encountered before. This finding should clarify previous confusion around the role or even existence of signature whistles (14, 25, 26). The fact that individuals clearly react to identity information in synthetic signature whistles that had all voice information removed demonstrates that the contour carries such signature information and that this information is used by the receiver.

Janik and Slater (27) argued that the need for individual recognition and group cohesion was the most likely selection pressure for the evolution of vocal learning in dolphins. Vocal learning allows increasing interindividual variability of signature whistles while maintaining potential group, population, or species features in the signal. In signature whistle development, an infant appears to copy a whistle that it has heard only rarely and then uses a slightly modified version as its own signature whistle (16–18). This process leads to individually distinctive signature whistles but also may lead to geographic variation in whistle parameters over longer distances (28). Currently, we do not know to what extent bottlenose dolphins may use this group or community information, if present. It is known, however, that the largest dolphin species, the killer whale (Orcinus orca), uses group-specific dialects in its communication system (29). An investigation of more species will help to clarify the role of vocal learning in the development of dolphin signals.

Although vocal learning may have evolved in one particular context, it can be used for other purposes once it is established. For example, learning also allows copying of signals in direct social interactions. Dolphins frequently copy each other’s whistles in the wild (30). The fact that signature whistle shape carries identity information independent of voice features raises the possibility of using these whistles as referential signals, either addressing individuals or referring to them, similar to the use of names in humans. Given the cognitive abilities of bottlenose dolphins (31), their vocal learning and copying skills (32), and their fission–fusion social structure (33), this possibility is an intriguing one that demands further investigation.

Materials and Methods

Our study was conducted on the bottlenose dolphin community of Sarasota Bay, FL (34). Capture–release projects of the dolphins in this community since 1975 have provided us with recordings of signature whistles for most individuals (35). To capture animals, a net was deployed from a small outboard vessel in water of <3-m depth. This net was 500 × 4 m in size. Once deployed, it created a net corral that was used to keep the animals for short (1–2 h) periods of time, after which they were released back into the wild. Since 1984, recordings have been made with suction cup hydrophones placed on the melon of the animals while they were being held in the net corral or examined out of the water.

Sayigh et al. (21) demonstrated that bottlenose dolphins from Sarasota can recognize signature whistles of individuals by playing back original whistles to dolphins and analyzing their turning responses. However, their study did not determine whether this recognition was through voice features or through the distinctive frequency modulation pattern that signature whistles are known for and that can be copied by other individuals. We used the same paradigm as Sayigh et al. (21) but played synthetic whistles instead of natural dolphin whistles. We used SIGNAL 3.1 (Engineering Design, Berkeley, CA) to synthesize whistles; a brief description of this process follows. Every tonal sound can be represented by a frequency function F(t) and an amplitude function A(t). We produced synthetic signature whistles by tracing F(t), the frequency modulation pattern of the fundamental frequency, of each available bottlenose dolphin signature whistle in the Sarasota dolphin community (13) and then synthesizing tonal signals from F(t) and an average whistle amplitude envelope, A(t)average, which represented the sound’s time-varying intensity (36). In each synthesis, F(t) was taken from the individual signature whistle that we wanted to produce, whereas A(t)average was the same for all synthesized whistles. A(t)average was derived from 20 signature and 20 nonsignature whistles, which were first all warped to the same length and then added together to create an average whistle. We then calculated A(t)average by applying the SIGNAL 3.1 ENV command, with a 5-msec decay time, to this average whistle. This command detected the time-varying amplitude envelope of the waveform: it first rectified the waveform and then traced the rectified waveform, following it when it increased and decaying exponentially, with a time constant of 5 msec, when it decreased.
Thus, a decrease was either followed closely, or the envelope decayed exponentially if the decrease was too rapid. A(t)average was then smoothed with a 2-msec rectangular averaging window to avoid adding noise during synthesis. Some individuals produced multiloop whistles, in which the same contour pattern was produced at least twice whenever the dolphin used the whistle. Each repetition is referred to as a “loop,” and such repeated whistles were considered to be one unit. Multiloop whistles were synthesized by applying the standardized A(t)average to each loop of the whistle (Fig. 1). Our whistle synthesis provided us with 121 synthetic signature whistles that all shared the same amplitude envelope A(t)average and differed only in their frequency modulation patterns. We used a subset of these stimuli in playbacks to 14 temporarily captured adult animals (7 male and 7 female).
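The envelope and synthesis steps described above can be approximated in a few lines of NumPy. This is a sketch under stated assumptions, not SIGNAL’s ENV implementation. It models ENV as full-wave rectification followed by a peak follower that tracks rises immediately and falls no faster than an exponential decay with a 5-msec time constant, smooths with a 2-msec boxcar, and linearly time-warps A(t)average to each loop’s length. Each loop is then synthesized as A(t)·sin(2π∫F(τ)dτ), with the integral approximated by a cumulative sum of the sampled F(t). The warping and summing of the 40 source whistles is not shown; a single waveform stands in for the averaged whistle. All function names and the 48-kHz sample rate are hypothetical.

```python
import numpy as np

FS = 48_000  # assumed sample rate (Hz)


def envelope_follower(x, fs=FS, tau=0.005):
    """Rectify x, then follow rises instantly and decay exponentially on falls."""
    decay = np.exp(-1.0 / (tau * fs))  # per-sample decay for time constant tau
    env = np.empty(len(x))
    level = 0.0
    for i, v in enumerate(np.abs(x)):  # full-wave rectification
        # Track the signal if it falls slower than the exponential, else decay.
        level = max(v, level * decay)
        env[i] = level
    return env


def smooth(env, fs=FS, width=0.002):
    """Rectangular (boxcar) moving average over `width` seconds."""
    n = max(1, int(round(width * fs)))
    return np.convolve(env, np.ones(n) / n, mode="same")


def warp(env, n):
    """Linearly time-warp an envelope to n samples."""
    return np.interp(np.linspace(0, 1, n), np.linspace(0, 1, len(env)), env)


def synthesize_loop(freq_hz, amp, fs=FS):
    """Tonal signal A(t) * sin(2*pi * integral of F), integral by cumulative sum."""
    phase = 2 * np.pi * np.cumsum(freq_hz) / fs
    return amp * np.sin(phase)


def synthesize_whistle(loops, env_avg, fs=FS):
    """Concatenate loops, each shaped by the same standardized envelope."""
    return np.concatenate(
        [synthesize_loop(f, warp(env_avg, len(f)), fs) for f in loops]
    )


# Hypothetical inputs: a stand-in "average whistle" and a two-loop contour.
t = np.arange(int(0.4 * FS)) / FS
avg_whistle = np.sin(np.pi * t / t[-1]) * np.sin(2 * np.pi * 8_000 * t)
env_avg = smooth(envelope_follower(avg_whistle))
loop = np.linspace(6_000, 14_000, int(0.3 * FS))  # traced F(t) in Hz
whistle = synthesize_whistle([loop, loop], env_avg)
```

Because every loop reuses the same `env_avg`, two synthetic whistles built this way differ only in the F(t) arrays passed in, which mirrors the design goal of removing voice-related amplitude cues.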

Playbacks were conducted during four capture seasons between June 2002 and February 2005. We used a LL9162 speaker (Lubell Labs, Columbus, OH) connected to a car power amplifier to project sounds to dolphins. Sound files were played either from a laptop computer or from a SL-S125 CD player (Panasonic, Secaucus, NJ). The frequency response for the combined system was 240 Hz to 20 kHz ± 3 dB. All stimulus pairs were played at the same source level, generating a received level at 2-m distance from the speaker (the location of our experimental animal) that approximated the received level of whistles produced by a nearby dolphin (as judged by the experimenters). Playbacks were monitored with a SSQ94 hydrophone (High Tech, Inc., Gulfport, MS) next to the speaker. Whistle rates were recorded by a custom-built suction cup hydrophone that was attached to the melon of the target dolphin. If there were other animals in the water during a playback (five cases), their whistles also were recorded with a suction cup hydrophone. All recordings were made with a Panasonic AG-7400 video recorder. The frequency response of the recording equipment was 50 Hz to 20 kHz ± 3 dB. Playback sessions were recorded on a Sony DCRTRN 320 digital video camera from an overhead platform on a boat ≈2 m above the water surface at the speaker position (see figure 1 in ref. 21).

Each animal was held in the net corral by several people, with its head free to move from side to side, for at least 15 min before it was used for a playback. It was then moved into position next to the boat and the speaker and was held there for another 2-min pretrial phase before the playbacks started. In the setup, the speaker was facing the dolphin and was placed 2 m to one side of the target animal. The boat was anchored in shallow areas and always faced into the current. There always was open water behind the speaker. We recorded all background noise continuously and aborted the playback if there was loud boat noise or if other dolphins were heard or seen in the area outside the net corral. Animals that were in aborted trials were not used again in this experiment. All people holding the animal were blind to the playback sequence and had no prior experience with the whistles of this dolphin community; we took this precaution even though the stimulus was only rarely audible in air. In three cases there were two other animals held in the net corral at the time of the experiment, and in two cases there was one other animal held in the water. In all but one of these cases the other animals were positioned behind the experimental animal so that it could not see their reactions. In one case the dependent calf of an experimental animal was held to her right, while the speaker was on her left. This female turned toward both stimuli (i.e., away from the calf) and more so in response to the stimulus resembling a related individual.

Each animal listened to a sequence of synthetic whistles resembling the signature whistle of a related individual (as determined through long-term observations and confirmed through genetic testing) and a sequence of synthetic whistles resembling that of a known, unrelated individual that had been seen with the target animal in the previous 3 years. Depending on stimulus availability, kin whistles that were played back were either of mothers (if target animals were independent offspring) or of independent offspring (if target animals were mothers). Unrelated individuals whose synthetic signature whistles were played were of the same sex and approximate age as the related individual. Simple ratio coefficients of association (CoAs) (37) calculated from photoidentification sighting data were used to ensure that the target animal had spent at least some time with both individuals in the previous 3 years, and CoAs between the target animal and each stimulus animal for this period were matched as closely as possible. Each playback consisted of several repetitions of the stimulus and lasted 30 sec. Playbacks were separated by a quiet period of 5 min. Playback stimuli were presented in a balanced design, with the kin stimulus played first in seven trials and the nonkin stimulus played first in the other seven. We also balanced the number of whistles played and the total duration of sound played in each 30-sec playback, so that there was no bias between kin and nonkin stimuli (see below). Following the methods of Sayigh et al. (21), we counted head turns of >20° toward or away from the playback speaker within a 5-min period from the start of the playback as responses. Turns of <20° were not counted because animals frequently moved back and forth within this range. We also recorded whistle rates of the target animals.
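The association matching above can be illustrated with the simple ratio index of ref. 37 in its common photoidentification form, X/(X + Y_A + Y_B). Here X is the number of sightings with both animals in the same group, and Y_A and Y_B are sightings of only one of them; equivalently, n_AB/(n_A + n_B - n_AB). The sketch omits the term that the full index includes for both animals being sighted separately within the same sampling period. The matching helper, which picks the unrelated candidate whose CoA is closest to the kin animal’s, is a hypothetical illustration of “matched as closely as possible”, not the authors’ procedure. All IDs and counts are invented.

```python
def simple_ratio_coa(n_a, n_b, n_ab):
    """Simple ratio coefficient of association.

    n_a, n_b: sightings that include animal A (or B), with or without the other
    n_ab:     sightings in which A and B were in the same group
    """
    if not 0 <= n_ab <= min(n_a, n_b):
        raise ValueError("n_ab must lie between 0 and min(n_a, n_b)")
    denom = n_a + n_b - n_ab  # equals X + Y_A + Y_B
    return n_ab / denom if denom else 0.0


def closest_match(target_coa, candidates):
    """Pick the (id, coa) candidate whose CoA is closest to target_coa."""
    return min(candidates, key=lambda item: abs(item[1] - target_coa))


# Hypothetical sighting counts over a 3-year window (not study data).
kin_coa = simple_ratio_coa(n_a=40, n_b=30, n_ab=10)  # target vs. relative
candidates = [
    ("unrelated_1", simple_ratio_coa(40, 25, 2)),
    ("unrelated_2", simple_ratio_coa(40, 20, 8)),
    ("unrelated_3", simple_ratio_coa(40, 50, 30)),
]
print(kin_coa, closest_match(kin_coa, candidates))
```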
All analyses, including the scoring of reactions, were conducted blind to the stimulus, using video recordings of the playback sessions. All data were tested by using Wilcoxon signed-rank tests.
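The comparisons in this study are paired, with one pair of values per animal (for example, turns toward the speaker after the kin versus the nonkin stimulus). The sketch below shows a Wilcoxon signed-rank test with the large-sample normal approximation that yields a Z statistic. It drops zero differences, assigns average ranks to tied absolute differences, applies the standard tie correction to the variance, and uses no continuity correction. These handling choices are assumptions, because the text does not state which software or options were used, so the sketch need not reproduce the reported Z and P values. The function name is hypothetical and the paired counts are invented.

```python
import math


def wilcoxon_signed_rank_z(x, y):
    """Paired Wilcoxon signed-rank test using the normal approximation.

    Returns (W_plus, z, two_sided_p). Zero differences are dropped, tied
    absolute differences get average ranks, the variance is tie-corrected,
    and no continuity correction is applied.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j + 2) / 2  # 1-based ranks i+1 .. j+1, averaged
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p


# Hypothetical per-animal turn counts (kin vs. nonkin), not study data.
kin_turns = [3, 5, 2, 4, 6]
nonkin_turns = [1, 2, 2, 1, 2]
print(wilcoxon_signed_rank_z(kin_turns, nonkin_turns))
```

Some packages report Z computed from the smaller rank sum, which flips the sign but not the magnitude. For production use, SciPy’s `scipy.stats.wilcoxon` is a maintained alternative; its handling of zeros, ties, and small samples depends on its options, so its results may differ slightly from this sketch.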

Because dolphin whistles vary in duration and loop number, it is difficult to balance a playback in which whistles of different individuals are being used. Whistles were played back with alternating interwhistle intervals of 4 and 1 sec. We did not play any whistles outside of our 30-sec playback window. Depending on the length of the whistle, this technique resulted in 4–9 whistles being played back in each 30-sec playback interval. Stimulus whistles had between 1 and 4 loops, and the total duration of sound played varied from 2.9 to 11.2 sec. However, there was no bias in whistle number (Z = 0.368, P = 0.713), loop number (Z = 0, P = 1), or total sound duration (Z = 0.596, P = 0.551) for kin or nonkin whistles.
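The balancing rule above can be made concrete with a small scheduling function. Because the text does not say, the sketch assumes that the 4- and 1-sec intervals are silent gaps measured from the end of one whistle to the start of the next, that the first whistle starts at time zero, and that the first gap is the 4-sec one. A whistle is included only if it ends within the 30-sec window. The function name and the example durations are hypothetical.

```python
def playback_schedule(whistle_dur, window=30.0, gaps=(4.0, 1.0)):
    """Onset times (s) of whistles that fit entirely inside the playback window.

    whistle_dur: duration of one stimulus whistle, all loops included (s)
    gaps:        silent intervals cycled between consecutive whistles (s)
    """
    onsets = []
    t, k = 0.0, 0
    while t + whistle_dur <= window:
        onsets.append(t)
        t += whistle_dur + gaps[k % len(gaps)]
        k += 1
    return onsets


for dur in (1.0, 1.5, 2.0):  # illustrative durations, not the study's stimuli
    onsets = playback_schedule(dur)
    print(dur, len(onsets), len(onsets) * dur)  # whistle count, total sound (s)
```

Longer whistles fit fewer times into the fixed window, which is why whistle count and total sound duration had to be checked for kin/nonkin bias rather than held constant.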

Acknowledgments

We thank all of the Earthwatch Institute and other volunteers who helped with various aspects of the experiments over the years. We especially thank A. Blair Irvine, Michael D. Scott, H. Carter Esch, Kim Fleming, Lynne Williams, Alessandro Bocconcelli, Mandy Cook, Sue Hofmann, Kim Hull, Stephanie Nowacek, and Kim Urian. This work was supported by Dolphin Quest, National Oceanic and Atmospheric Administration (NOAA) Fisheries Service, Disney’s Animal Programs and Mote Marine Laboratory (R.S.W.), Harbor Branch Oceanographic Institute (L.S.S. and R.S.W.), and a Royal Society University Research Fellowship (to V.M.J.). Work was conducted under NOAA Fisheries Service Scientific Research Permits 417, 655, 945, and 522-1569 (to R.S.W.).

Footnotes

Conflict of interest statement: No conflicts declared.

This paper was submitted directly (Track II) to the PNAS office.

References

- 1. Weary D. M., Krebs J. R. Anim. Behav. 1992;43:283–287.

- 2. Rendall D., Rodman P. S., Emond R. E. Anim. Behav. 1996;51:1007–1015.

- 3. Lind H., Dabelsteen T., McGregor P. K. Anim. Behav. 1996;52:667–671.

- 4. Cheney D. L., Seyfarth R. M. Anim. Behav. 1988;36:477–486.

- 5. Aubin T., Jouventin P. Proc. R. Soc. London Ser. B. 1998, pp. 1665–1673.

- 6. Drullman R., Bronkhorst A. W. J. Acoust. Soc. Am. 2000;107:2224–2235. doi: 10.1121/1.428503.

- 7. Pollack I., Pickett J. M., Sumby W. H. J. Acoust. Soc. Am. 1954;26:403–406.

- 8. Aubin T., Jouventin P. Adv. Study Behav. 2002;31:243–277.

- 9. Eens M. Adv. Study Behav. 1997;26:355–434.

- 10. Stoddard P. K. In: Ecology and Evolution of Acoustic Communication in Birds. Kroodsma D. E., Miller E. H., editors. Ithaca, NY: Comstock; 1996. pp. 356–374.

- 11. Janik V. M., Slater P. J. B. Anim. Behav. 1998;56:829–838. doi: 10.1006/anbe.1998.0881.

- 12. Caldwell M. C., Caldwell D. K., Tyack P. L. In: The Bottlenose Dolphin. Leatherwood S., Reeves R. R., editors. San Diego: Academic; 1990. pp. 199–234.

- 13. Janik V. M., Dehnhardt G., Todt D. Behav. Ecol. Sociobiol. 1994;35:243–248.

- 14. Janik V. M. Anim. Behav. 1999;57:133–143. doi: 10.1006/anbe.1998.0923.

- 15. Watwood S. L., Owen E. C. G., Tyack P. L., Wells R. S. Anim. Behav. 2005;69:1373–1386.

- 16. Fripp D., Owen C., Quintana-Rizzo E., Shapiro A., Buckstaff K., Jankowski K., Wells R., Tyack P. Anim. Cognit. 2005;8:17–26. doi: 10.1007/s10071-004-0225-z.

- 17. Miksis J. L., Tyack P. L., Buck J. R. J. Acoust. Soc. Am. 2002;112:728–739. doi: 10.1121/1.1496079.

- 18. Tyack P. L. Bioacoustics. 1997;8:21–46.

- 19. Tyack P. Behav. Ecol. Sociobiol. 1986;18:251–257.

- 20. Richards D. G., Wolz J. P., Herman L. M. J. Comp. Psychol. 1984;98:10–28.

- 21. Sayigh L. S., Tyack P. L., Wells R. S., Solow A. R., Scott M. D., Irvine A. B. Anim. Behav. 1999;57:41–50. doi: 10.1006/anbe.1998.0961.

- 22. Ridgway S. H., Carder D. A., Kamolnick T., Smith R. R., Schlundt C. E., Elsberry W. R. J. Exp. Biol. 2001;204:3829–3841. doi: 10.1242/jeb.204.22.3829.

- 23. Janik V. M. In: Mammalian Social Learning: Comparative and Ecological Perspectives. Box H. O., Gibson K. R., editors. Cambridge, U.K.: Cambridge Univ. Press; 1999. pp. 308–326.

- 24. Cook M. L. H., Sayigh L. S., Blum J. E., Wells R. S. Proc. R. Soc. London Ser. B. 2004;271:1043–1049. doi: 10.1098/rspb.2003.2610.

- 25. Tyack P. L. In: Cetacean Societies: Field Studies of Dolphins and Whales. Mann J., Connor R. C., Tyack P. L., Whitehead H., editors. Chicago: Univ. of Chicago Press; 2000. pp. 270–307.

- 26. McCowan B., Reiss D. Anim. Behav. 2001;62:1151–1162.

- 27. Janik V. M., Slater P. J. B. Adv. Study Behav. 1997;26:59–99.

- 28. Wang D., Würsig B., Evans W. E. Aquat. Mamm. 1995;21:65–77.

- 29. Ford J. K. B., Fisher H. D. In: Communication and Behavior of Whales. Payne R., editor. Boulder, CO: Westview; 1983. pp. 129–161.

- 30. Janik V. M. Science. 2000;289:1355–1357. doi: 10.1126/science.289.5483.1355.

- 31. Herman L. M., Pack A. A., Morrel-Samuels P. In: Language and Communication: Comparative Perspectives. Roitblat H. L., Herman L. M., Nachtigall P. E., editors. Hillsdale, NJ: Lawrence Erlbaum Associates; 1993. pp. 403–442.

- 32. Herman L. M. In: Imitation in Animals and Artifacts. Dautenhahn K., Nehaniv C. L., editors. Cambridge, MA: MIT Press; 2002. pp. 63–108.

- 33. Wells R. S., Scott M. D., Irvine A. B. In: Current Mammalogy. Genoways H. H., editor. Vol. 1. New York: Plenum; 1987. pp. 247–305.

- 34. Wells R. S. In: Animal Social Complexity: Intelligence, Culture, and Individualized Societies. de Waal F. B. M., Tyack P. L., editors. Cambridge, MA: Harvard Univ. Press; 2003. pp. 32–56.

- 35. Sayigh L. S., Tyack P. L., Wells R. S., Scott M. D. Behav. Ecol. Sociobiol. 1990;26:247–260.

- 36. Beeman K. In: Animal Acoustic Communication: Sound Analysis and Research Methods. Hopp S. L., Owren M. J., Evans C. S., editors. Berlin: Springer; 1998. pp. 59–103.

- 37. Ginsberg J. R., Young T. P. Anim. Behav. 1992;44:377–379.