Background

Systematic reviews in various health care settings have demonstrated that different implementation interventions have varying effects[1,2]. Most interventions to implement clinical guidelines have focused on changing professional behaviour, but there is increasing awareness that factors related to the social, organisational and economic context can also be important determinants of guideline implementation[3]. For instance, a recent study on the implementation of screening guidelines in ambulatory settings has confirmed the influence of a number of organisational factors, such as mission, capacity and professionalism[4]. Despite increasing attention to organisational determinants of guideline implementation, research evidence on the relevance of specific factors is still limited. Insight into these factors is important because it allows interventions to be tailored to local circumstances, which can improve their effectiveness. For example, different interventions may be more effective at academic hospitals than at community hospitals.

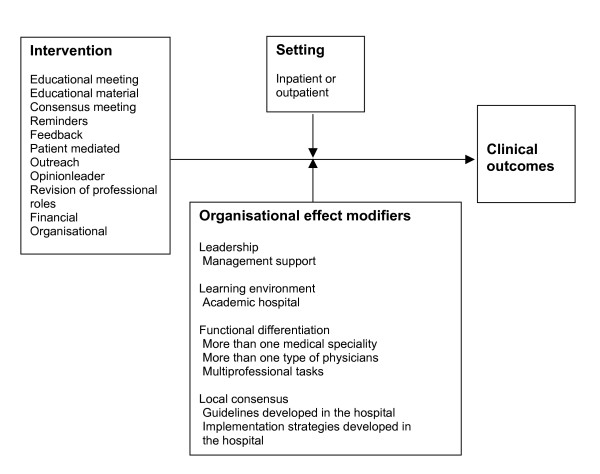

Most reviews on guideline implementation were conducted on implementation across settings, or on implementation in primary care settings[5]. The literature on guideline implementation in hospital settings has not yet been reviewed separately. Therefore, we reviewed the effect of different intervention strategies to implement clinical guidelines at hospitals, and explored the impact of specific organisational factors on the effectiveness of these interventions. Hospitals are complex organisational systems whose primary aim is to deliver clinical care to individual patients. Management theories on change and innovation were analysed to derive specific factors for this explorative study. We identified the following factors that might modify the effects of interventions: sufficient management support, appropriate learning environment, functional differentiation and local consensus on the intended changes (figure 1).

Figure 1.

Conceptual framework to assess the relationship between organisational or implementation aspects and clinical outcomes.

Theories on leadership and on quality management have suggested that support for an innovation from hospital management has a positive impact on its adoption [7-9]. The impact of management support may be based on power, incentives or facilitation. Hospital managers may also act as role models by implementing the innovation. Thus we hypothesized that implementation interventions are more effective if the effort is clearly supported by the local leaders.

The learning environment comprises a second set of factors. The underlying mechanism is that the availability of knowledge in the organisation enhances the adoption of innovations. This is consistent with existing theory on organisational learning, which suggests that an organisation's capacity to learn as an organisation is a crucial feature[9]. Teaching hospitals create a specific learning environment for trainers and trainees. Therefore we expected that implementation interventions are more effective in teaching hospitals than in non-teaching hospitals.

Functional differentiation is another factor that is expected to influence the uptake of new information or procedures in practice[10]. A higher level of specialisation and a higher level of technical expertise in the organisation may enhance implementation. The level and diversity of knowledge may be larger in settings with a range of medical disciplines, in which there is involvement of consultants, other physicians and non-physician practitioners. We therefore hypothesized that higher functional differentiation is positively associated with the effectiveness of implementation interventions.

Finally, we expected that promoting ownership through local consensus about clinical guideline recommendations and implementation strategies may also be associated with better uptake[11]. Organisational learning theory suggests that information gathering, shared perceptions of performance gaps and an experimental mind-set are important factors for learning in organisations[9]. Specific group cultures at hospitals appear to be associated with patient outcomes[12]. Theory on complex adaptive systems suggests that innovations should not be specified in detail in order to promote ownership and that 'muddling-through' should steer the guideline implementation process[13], while theory on adult learning adds that implementation should be tailored to each individual's learning needs[14]. We hypothesized that guideline implementation interventions would be most effective when developed within a hospital rather than derived from sources outside a hospital.

This systematic literature review aimed to assess the effectiveness of implementation and quality improvement interventions in hospital settings and to test our hypotheses on the impact of organisational factors.

Methods

Inclusion/exclusion

Only studies with a concurrent control group of the following designs were included:

• Randomised controlled trials (RCTs), involving individual randomisation or cluster randomisation on the level of the hospital, ward or professional.

• Controlled clinical trials or controlled before-and-after studies.

Participants: the studies described the performance of medical health care professionals working at the hospitals. Medical centres, health centres or clinics without an inpatient department were excluded. Ambulatory departments and clinics that fell directly under hospital management were included.

Intervention: studies that evaluated interventions to implement guidelines were included. If the guidelines were aimed at multi-professional groups or other health care professionals, studies were only included if the results on medical health care professionals were reported separately, or medical health care professionals represented more than 50% of the target population. Studies that evaluated the introduction of guidelines targeted at undergraduate medical students were excluded.

Outcome: objective measures of provider behaviour, such as proportion of patients treated in accordance with guidelines. Only studies reporting dichotomous measures were included.

Literature search

Studies were identified from a systematic review of guideline dissemination and implementation strategies across all settings[2]. Details of the search strategies and their development are described elsewhere[2]. Briefly, electronic searches were made of the following databases: Medline (1966–1998), HEALTHSTAR (1975–1998), Cochrane controlled trial register (4th edition 1998), EMBASE (1980–1998), SIGLE (1980–1988) and the Cochrane Effective Practice and Organisation of Care group specialised register. For the review of interventions in all settings, over 150,000 hits were screened: 5000 were considered potentially relevant papers and the full text articles of 863 were retrieved for assessment. In total, 235 studies were included in the systematic review of strategies across all settings. These studies were screened to identify potentially relevant studies for the hospital-based review. We identified 108 studies conducted in hospital settings, of which 23 did not have a concurrent control group (they were interrupted time series designs) and a further 32 reported continuous measures. Therefore, 53 of the 108 studies met our inclusion criteria.
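The selection arithmetic in this paragraph can be checked with a few lines. This is only a bookkeeping sketch; the variable names are ours and the counts are those reported in the text.

```python
# Counts reported in the text for the hospital-based review.
hospital_studies = 108
no_concurrent_control = 23    # interrupted time series designs
continuous_measures = 32      # no dichotomous outcome measure

# Studies remaining after both exclusions.
included = hospital_studies - no_concurrent_control - continuous_measures
print(included)
```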

Data-extraction

The study followed the methods proposed by the Cochrane Effective Practice and Organisation of Care (EPOC) group[14]. Two independent reviewers extracted data on study design, methodological quality, participants, study settings, target behaviours, characteristics of interventions and study results, according to the EPOC checklist[15]. A second data extraction was done to assess potential organisational effect modifiers in hospital studies. Management support was regarded as positive if the manuscript gave information on direct support from the hospital management for the intervention, such as funding, or when the project was initiated by the hospital management or was set up as a result of hospital quality improvement strategies. "Academic hospital" was taken as the proxy for learning environment. Functional differentiation was operationalized by noting whether more than one specialty had been involved in the intervention, e.g. internal medicine and gynaecology, or whether more than one type of physician had been involved, e.g. specialists and residents, or when other professions, e.g. trained nurses, had been directly involved in the implementation process. Local consensus was regarded as being present when explicit information was given that the guidelines had been developed at the hospital or when major adaptations had been made to external guidelines before introduction at this hospital. Local consensus was also considered to be present when the implementation strategies had been developed at the hospital.

Analysis

Analysis was based on the theoretical framework depicted in figure 1. The effect of the different intervention strategies on clinical outcomes was expected to be influenced by the organisational effect modifiers listed under the headings leadership, learning environment, functional differentiation and local consensus. Effects and modifiers may have different influences on clinical outcomes in inpatient or outpatient settings (figure 1).

In each comparison, the primary process of care measure was extracted, as defined by the authors. If multiple process of care measures were reported and none of them were defined as being the primary variable, effect sizes were ranked and the median value was taken. Effect sizes were constructed so that treatment benefits were denoted positively.
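The selection rule described above can be sketched as follows. The function name `comparison_effect` and its arguments are hypothetical, and the sketch assumes that effect sizes have already been signed so that benefit is positive. The text does not say how an even number of measures was handled; `statistics.median` averages the two middle values, which is our assumption.

```python
from statistics import median


def comparison_effect(effects, primary_index=None):
    """Return the single effect size used for one comparison.

    effects: effect sizes for all process of care measures in the
        comparison, signed so that treatment benefit is positive.
    primary_index: position of the author-defined primary measure,
        or None if the authors did not define one.
    """
    if not effects:
        raise ValueError("at least one effect size is required")
    if primary_index is not None:
        # The authors named a primary measure: use it directly.
        return effects[primary_index]
    # No primary measure: rank the effect sizes and take the median.
    return median(effects)


print(comparison_effect([0.1, 0.5, 0.3]))                   # median of three
print(comparison_effect([0.1, 0.5, 0.3], primary_index=1))  # primary measure
```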

All statistical analyses were performed using the PROC MIXED procedure in SAS version 6.12. First, we estimated the treatment effect (log odds ratio) and its variance for each comparison, weighted for the variance within and between studies. These estimated effects were used as responses in a random effects meta-regression model, in which we corrected for multiple comparisons within a single study. In most studies a unit of analysis error was found and insufficient data were presented to calculate cluster sizes. We therefore first ignored the unit of analysis error so that all included studies could be analysed. We then performed a sensitivity analysis on the studies that reported sufficient data on the number of participants and professionals: we calculated the design effect from the cluster sizes in each study, assuming a constant and conservative intracluster correlation of 0.20[16], and re-ran the meta-regression model with and without a correction for the unit of analysis error.
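As an illustration of the quantities involved, the sketch below computes a log odds ratio with its large-sample (Woolf) variance from a 2×2 table, and the standard design effect 1 + (m − 1) × ICC for a mean cluster size m. It is not the SAS code used in this review. The function names and the example counts are hypothetical, and inflating the variance by the design effect is one common way to apply the correction, assumed here because the text does not specify the mechanics.

```python
import math


def log_odds_ratio(a, b, c, d):
    """Log odds ratio and its Woolf variance from a 2x2 table.

    a, b: intervention patients treated / not treated per guideline.
    c, d: control patients treated / not treated per guideline.
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive")
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def design_effect(mean_cluster_size, icc=0.20):
    """Design effect for clustered data: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def cluster_adjusted_variance(variance, mean_cluster_size, icc=0.20):
    """Inflate a variance by the design effect (assumed approach)."""
    return variance * design_effect(mean_cluster_size, icc)


# Hypothetical comparison: 60/100 compliant vs 40/100 compliant,
# with on average 11 patients per professional.
log_or, var = log_odds_ratio(60, 40, 40, 60)
adjusted = cluster_adjusted_variance(var, mean_cluster_size=11)
print(round(math.exp(log_or), 2), round(var, 4), round(adjusted, 4))
```

With an intracluster correlation of 0.20, even modest cluster sizes widen the confidence intervals noticeably, which is why the value is described as conservative.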

To measure the effect of each individual intervention strategy, effect sizes were adjusted for other intervention components that appeared in at least one third of the studies on each strategy. Adjustment for other interventions that appeared less frequently was not possible due to small numbers. To evaluate the relative effectiveness of different intervention components, covariates were included in the model.
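The idea of adjusting one component for another can be illustrated with a weighted regression of log odds ratios on indicator covariates. The sketch below is a deliberate simplification: it uses fixed inverse-variance weights and ignores both between-study variance and multiple comparisons within a study, unlike the random effects model fitted in SAS. The function `weighted_least_squares` and all data values are hypothetical.

```python
def weighted_least_squares(rows, y, weights):
    """Solve the weighted normal equations (X'WX) beta = X'Wy.

    rows: covariate rows, each starting with 1.0 for the intercept.
    y: log odds ratios, one per comparison.
    weights: inverse variances, one per comparison.
    """
    k = len(rows[0])
    # Augmented matrix [X'WX | X'Wy].
    aug = [[0.0] * (k + 1) for _ in range(k)]
    for x, yi, w in zip(rows, y, weights):
        for i in range(k):
            for j in range(k):
                aug[i][j] += w * x[i] * x[j]
            aug[i][k] += w * x[i] * yi
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for c in range(col, k + 1):
                    aug[r][c] -= factor * aug[col][c]
    return [aug[i][k] / aug[i][i] for i in range(k)]


# Hypothetical comparisons: columns are intercept, feedback, reminders.
rows = [[1.0, 0.0, 0.0], [1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
log_ors = [0.2, 0.7, 0.5, 1.0]      # made-up effects
weights = [10.0, 5.0, 8.0, 4.0]     # made-up inverse variances

beta = weighted_least_squares(rows, log_ors, weights)
print([round(b, 3) for b in beta])
```

The coefficient for feedback is then its estimated effect on the log odds ratio with reminders held constant, which is the sense in which effects are adjusted for other components.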

Results

The 53 trials yielded 81 comparisons. The appendix gives an overview of these studies and compares the intervention components of each comparison with the intervention components, if present, in the control group [see Additional file 1]. The trials consisted of 39 randomised controlled trials (of which 32 were cluster randomised controlled trials), 7 controlled clinical trials and 7 controlled before-and-after studies; 19 were inpatient studies [17-36], 28 were outpatient studies [37-64] and 6 had mixed settings [65-70]. Of the 81 comparisons, 22 involved a single intervention. The mean number of interventions per comparison was 2.5 (SD 1.3).

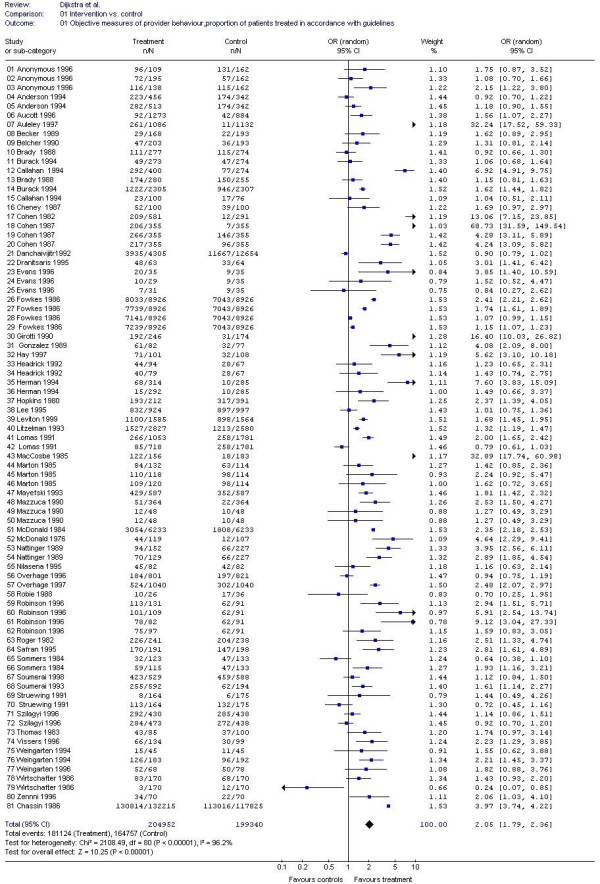

Table 1 shows the results of the meta-analysis on the effects of various intervention components. When all intervention strategies were taken together, the odds ratio was 2.13 (95% CI 1.72–2.65). These total results are visualised in a forest plot in figure 2. The odds ratio for the forest plot is slightly different because we could not correct for different interventions within studies. Single interventions consisted of reminders or feedback; no other intervention strategies were applied as a single intervention strategy. The overall odds ratio was 2.18 in single intervention studies, versus 1.77 in studies that had more than one intervention component, the so-called multifaceted intervention studies. The intervention components applied most frequently were reminders, feedback, educational meetings and educational material. Only a few studies included outreach visits, consensus meetings, financial interventions or the role of an opinion leader. With regard to the specific components of individual intervention strategies, we found that all components showed positive effects, except for consensus meetings, outreach and financial interventions, possibly due to small numbers.

Table 1.

Effectiveness of specific intervention components

| | n | Total comparisons OR (95% CI) | |
|---|---|---|---|
| Total effect | 81 | 2.13 (1.72–2.65) | |
| Single interventions | 22 | 2.18 (1.62–2.94) | |
| Multifaceted interventions | 59 | 1.77 (1.36–2.31) | |
| Single intervention strategies | | | |
| Reminders | 18 | 2.14 (1.49–3.07) | |
| Feedback | 4 | 2.33 (1.32–4.10) | |
| Components of multifaceted intervention strategies | n | Unadjusted odds ratios OR (95% CI) | Adjusted odds ratios# OR (95% CI) |
| Educational meeting (ab) | 34 | 1.98 (1.39–2.82) | 0.66 (0.25–1.76) |
| Educational material (bc) | 41 | 2.13 (1.60–2.84) | 1.84 (1.09–3.11) |
| Consensus meeting (ad) | 6 | 1.35 (0.73–2.49) | 0.76 (0.56–1.04) |
| Reminders (ac) | 54 | 2.10 (1.61–2.75) | 1.92 (1.39–2.65) |
| Feedback (ac) | 20 | 2.01 (1.28–3.18) | 2.50 (1.38–4.52) |
| Patient mediated (abc) | 12 | 2.00 (1.08–3.70) | 0.64 (0.16–2.54) |
| Outreach | 2 | 1.67 (0.70–4.01) | na |
| Opinion leader | 5 | 1.51 (1.13–2.02) | na |
| Revision of professional roles (abc) | 13 | 2.57 (1.13–5.87) | 9.78 (3.22–29.70) |
| Financial | 3 | 3.16 (0.40–24.9) | na |
| Organisational (abce) | 15 | 1.89 (1.12–3.20) | 8.41 (0.81–87.2) |

n: number of comparisons, OR: Odds ratios (95% confidence intervals) of intervention versus control

# adjusted for other intervention components if present in > 1/3 of the comparisons.

Adjusted for (a): educational material; (b): reminders; (c): educational meeting; (d): feedback; (e): revision of professional roles. na: not available due to small numbers.
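Readers who want to reuse the odds ratios in Table 1 can recover an approximate standard error on the log scale from each 95% confidence interval. The sketch below assumes the intervals are symmetric on the log scale and based on a normal approximation; the function name `log_scale_summary` is ours.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def log_scale_summary(odds_ratio, lower, upper):
    """Return the log odds ratio and its approximate standard error."""
    log_or = math.log(odds_ratio)
    se = (math.log(upper) - math.log(lower)) / (2 * Z_95)
    return log_or, se


# Total effect from Table 1: OR 2.13 (95% CI 1.72-2.65).
log_or, se = log_scale_summary(2.13, 1.72, 2.65)

# If the interval is symmetric on the log scale, the geometric mean of
# its limits should be close to the reported odds ratio.
midpoint = math.exp((math.log(1.72) + math.log(2.65)) / 2)
print(round(log_or, 3), round(se, 3), round(midpoint, 2))
```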

Figure 2.

Forest plot: total effect of guideline implementation in hospitals.

To learn more about the contribution of each intervention strategy to a multifaceted approach, we adjusted for co-occurring intervention components, in order to identify the unique contribution of a specific component when the other components remained constant. Although adjustments could only be made for components that co-occurred in at least one third of the comparisons, the results changed substantially. The effects of educational material, reminders and feedback remained statistically significant, while the effects of educational meetings and patient-mediated interventions disappeared. The effect of the latter might be explained by the other co-occurring intervention components. Furthermore, revision of professional roles appeared to be a strong component of the intervention strategies, as did organisational interventions, although the effect of the latter was not statistically significant. Sensitivity analysis showed that, within the 47 comparisons for which cluster sizes could be calculated, adjustment for clustering produced only small changes in the effect sizes. No effect size became non-significant after adjustment for the design effect.

Table 2 describes the effect of the different organisational factors on outcome measures. For most organisational effect modifiers, no significant differences were found in outcomes. Academic hospitals showed greater improvements than community general hospitals in inpatient care only.

Table 2.

Effects of the presence of organisational features to improve the quality of care at hospitals

| Organisational effect modifiers | Total comparisons: n | Total comparisons: OR (95% CI) | Inpatient: n | Inpatient: OR (95% CI) | Outpatient: n | Outpatient: OR (95% CI) |
|---|---|---|---|---|---|---|
| leadership | | | | | | |
| management support | 20 | 1.95 (1.26–3.03) | 5 | 2.77 (0.90–8.51) | 11 | 1.47 (0.83–2.62) |
| no management support | 61 | 2.20 (1.71–2.84) | 24 | 1.98 (1.21–3.24) | 30 | 2.66 (1.94–3.65) |
| learning environment | | | | | | |
| academic hospital | 44 | 2.15 (1.62–2.86) | 9 | 3.42 (2.25–5.19) | 32 | 1.91 (1.41–2.59) |
| non-academic hospital | 37 | 2.11 (1.49–2.98) | 20 | 1.44 (0.95–2.19)* | 9 | 3.90 (2.87–5.29)* |
| functional differentiation | | | | | | |
| >1 medical speciality involved | 23 | 1.57 (1.03–2.38) | 13 | 1.79 (0.90–3.54) | 5 | 1.33 (0.41–4.36) |
| 1 medical speciality involved | 58 | 2.39 (1.87–3.06) | 16 | 2.48 (1.39–4.41) | 36 | 2.43 (1.80–3.27) |
| more physician types | 38 | 2.06 (1.53–2.79) | 15 | 1.97 (1.15–3.37) | 21 | 2.35 (1.52–3.61) |
| one physician type | 43 | 2.22 (1.61–3.06) | 14 | 2.62 (1.20–5.76) | 20 | 2.21 (1.46–3.34) |
| multiprofessional task | 14 | 1.64 (0.94–2.88) | 3 | 1.46 (0.09–24.0) | 9 | 1.86 (0.93–3.71) |
| no multiprofessional task | 67 | 2.25 (1.78–2.85) | 26 | 2.25 (1.44–3.53) | 32 | 2.41 (1.74–3.34) |
| local consensus | | | | | | |
| own guideline | 45 | 1.99 (1.49–2.65) | 19 | 2.11 (1.24–3.60) | 23 | 2.08 (1.41–3.06) |
| external guideline | 36 | 2.35 (1.68–3.30) | 10 | 2.26 (0.99–5.18) | 18 | 2.57 (1.63–4.06) |
| own intervention | 59 | 1.78 (1.36–2.32) | 16 | 2.56 (1.56–4.21) | 36 | 1.85 (1.32–2.60) |
| external intervention | 22 | 4.62 (2.82–7.57)** | 13 | 1.37 (0.60–3.11) | 5 | 12.7 (4.99–32.2)** |

n: number of comparisons; OR: Odds ratios (95% confidence interval)

significant differences between presence or absence of possible moderators: * p < 0.05 ** p < 0.01

However, in outpatient studies, community hospitals showed significantly larger effects. Furthermore, interventions that had not been developed internally, but had originated from outside the hospital, led to better outcomes, especially in outpatient studies.
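A rough way to see how two subgroup odds ratios in Table 2 compare is a z-test on the difference of their logarithms. This is an approximation we add for illustration, not the authors' method: the significance markers in Table 2 come from the meta-regression model, whereas the sketch treats the two subgroups as independent and recovers standard errors from the reported confidence intervals, so its p-values will not reproduce the table exactly. The function names are hypothetical.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval


def se_from_ci(lower, upper):
    """Approximate standard error of a log odds ratio from its 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2 * Z_95)


def compare_odds_ratios(or1, ci1, or2, ci2):
    """Two-sided z-test for the difference between two log odds ratios."""
    diff = math.log(or1) - math.log(or2)
    se = math.sqrt(se_from_ci(*ci1) ** 2 + se_from_ci(*ci2) ** 2)
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return z, p


# Inpatient comparisons from Table 2: academic vs non-academic hospitals.
z, p = compare_odds_ratios(3.42, (2.25, 5.19), 1.44, (0.95, 2.19))
print(round(z, 2), p < 0.05)
```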

Discussion

This is the first systematic review to make an in-depth exploration of guideline implementation in relation to the organisational characteristics of hospitals. Not only multifaceted interventions but also single interventions seemed to be effective, contrary to our expectation that multifaceted interventions would prevail[69]. Single intervention strategies, particularly reminders, which are known to be effective in other settings, also appeared to be effective in hospitals. Although a multifaceted intervention that includes reminders may be effective, a single reminder strategy might provide a clearer or more consistent message and thus have more impact. Furthermore, educational material, reminders, feedback and revision of professional roles had more effect than other intervention strategies. We did not confirm our hypotheses on the influence of organisational factors, except for a learning environment in inpatient settings. Contrary to our expectations, effects were greater at community hospitals in outpatient studies. In some multi-centre studies, no difference was found between academic and community hospitals[27,28], or only a moderate positive effect was found for academic hospitals[70]. External factors, such as the origin of the intervention, seemed to have more impact than internal factors.

This review of the research literature has the limitation that it was an explorative retrospective study that may have suffered from publication bias. There may also have been reporting bias, because studies with different aims were brought together and compared, while their research question was not to measure the influence of contextual factors. Because organisational data within the studies were limited, the validity of the measurements of organisational effect modifiers can be challenged. Another limitation concerned the validity of the determinants, e.g. the use of teaching hospitals as a proxy for learning environment when no other valid measurements of learning environment could be found in the manuscripts. Other potential effect modifiers, for example organisational slack[9], had to be disregarded because no data were available. The number of studies was also limited, partly because studies were left out if they did not report dichotomous measures or did not have a concurrent control group (interrupted time series designs). Adding these studies might have given our analysis greater power and perhaps led to other conclusions. The analysis was not corrected for clustering effects, which may have led to an overestimation of the statistical significance of the effects. Finally, the study is limited by the fact that the exhaustive search strategy and data extraction inhibited the inclusion of studies published after 1998. An update of this review in a new study is recommended, including the possibility of exploring the inclusion of interrupted time series and studies with continuous measures.

Theories on organisational effect modifiers are mostly based on study results from a wide range of fields inside and outside health care and when they are based on health care, this mostly concerns primary care. Despite the pertinence of regarding organisational factors as being crucial for quality improvement, there is only limited research evidence for the claims made. The influence of possible organisational effect modifiers on the quality of care at hospitals needs more research attention and more evidence is needed from inside not outside hospitals for the development of theories in this field. Ongoing research should develop validated measures of potentially important organisational constructs and explore their influence on quality of care.

Conclusion

There is no 'magic bullet' in terms of the most effective strategy or organisational effect modifiers for the implementation of change within hospitals. On an organisational level, barriers against and facilitators for effective interventions are unclear. Depending on the management policy and other local factors, such as funds available and motivation of the health care personnel, hospitals might wish to focus on building a learning organisation[71] or on adopting proven, effective strategies from outside.

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

RD, MW, JB and RG drew up the design and framework of this manuscript. Data extraction on professional performance and intervention strategies was done by RT, JG, RD and MW. Data extraction on organisational features was done by RD and MW. Statistical analysis was done by RA. All authors read and approved the final manuscript.


Supplementary Material

Studies to implement guidelines at hospitals. The reviewed studies are listed with details on type of study, setting, target, number of patients, intervention strategies and post-study percentages on the primary outcome measure.

Acknowledgements

We are grateful to our colleagues involved in the systematic review of guideline dissemination and implementation strategies across all settings, especially Cynthia Fraser, Graeme MacLennan, Craig Ramsay, Paula Whitty, Martin Eccles, Lloyd Matowe and Liz Shirran. The systematic review of guideline dissemination and implementation strategies across all settings was funded by the UK NHS Health Technology Assessment Program. Dr Ruth Thomas is funded by a Wellcome Training Fellowship in Health Services Research (grant number GR063790MA). The Health Services Research Unit is funded by the Chief Scientists Office of the Scottish Executive Department of Health. Dr Jeremy Grimshaw holds a Canada Research Chair in Health Knowledge Transfer and Uptake. However, the views expressed are those of the authors and not necessarily those of the funders.

Contributor Information

Rob Dijkstra, Email: r.dijkstra@kwazo.umcn.nl.

Michel Wensing, Email: m.wensing@kwazo.umcn.nl.

Ruth Thomas, Email: ret@hsru.abdn.ac.uk.

Reinier Akkermans, Email: r.akkermans@ives.umcn.nl.

Joze Braspenning, Email: j.braspenning@kwazo.umcn.nl.

Jeremy Grimshaw, Email: jgrimshaw@ohri.ca.

Richard Grol, Email: r.grol@kwazo.umcn.nl.

References

- Grol R, Grimshaw JM. From best evidence to best practice: effective implementation of change in patients' care. Lancet. 2003;362:1225–1230. doi: 10.1016/S0140-6736(03)14546-1.

- Grimshaw JM, Thomas RE, MacLennan G, Fraser C, Ramsay CR, Vale L, Whitty P, Eccles MP, Matowe L, Shirran L, Wensing M, Dijkstra R, Donaldson C. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Ass. 2004;8. doi: 10.3310/hta8060.

- Di Blasi Z, Harkness E, Ernst E, Georgiou A, Kleijnen J. Influence of context effects on health outcomes: a systematic review. Lancet. 2001;357:757–762. doi: 10.1016/S0140-6736(00)04169-6.

- Vaughn TE, McCoy K, BootsMiller BJ, Woolson RF, Sorofman B, Tripp-Reimer T, Perlin J, Doebbeling BN. Organizational predictors of adherence to ambulatory care screening guidelines. Med Care. 2002;40:1172–1185. doi: 10.1097/00005650-200212000-00005.

- Grimshaw JM, Shirran L, Thomas R, Mowatt G, Fraser C, Bero L, Grilli R, Harvey E, Oxman A, O'Brien MA. Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001;39:2–45.

- Lipman T. Power and influence in clinical effectiveness and evidence-based medicine. Fam Pract. 2000;17:557–563. doi: 10.1093/fampra/17.6.557. [DOI] [PubMed] [Google Scholar]

- Gustafson DH, Hundt AS. Findings of innovation research applied to quality management principles for health care. Health Care Manage Rev. 1995;20:16–33. [PubMed] [Google Scholar]

- Weiner JP, Parente ST, Garnick DW, Fowles J, Lawthers AG, Palmer RH. Variation in office-based quality. A claims-based profile of care provided to Medicare patients with diabetes. JAMA. 1995;273:1503–1508. doi: 10.1001/jama.273.19.1503. [DOI] [PubMed] [Google Scholar]

- Nevis EC, Di Bella AJ, Gould JM. Understanding organizations as learning systems. Sloan Management Review. 1995;73:73–85. [Google Scholar]

- Damanpour F. Organizational innovation: a meta-analysis of effects of determinants and moderators. Acad Manage J. 1991;34:555–590. doi: 10.2307/256406. [DOI] [Google Scholar]

- Dickinson E. Using marketing principles for healthcare development. Qual Health Care. 1995;4:40–4. doi: 10.1136/qshc.4.1.40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shortell SM, Jones RH, Rademaker AW, Gillies RR, Dranove DS, Hughes EF, Budetti PP, Reynolds KS, Huang CF. Assessing the impact of total quality management and organizational culture on multiple outcomes of care for coronary artery bypass graft surgery patients. Med Care. 2000;38:207–217. doi: 10.1097/00005650-200002000-00010. [DOI] [PubMed] [Google Scholar]

- Plsek PE, Wilson T. Complexity, leadership, and management in healthcare organisations. BMJ. 2001;323:746–749. doi: 10.1136/bmj.323.7315.746. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tassone MR, Heck CS. Motivational orientations of allied health professionals participating in continuing education. J Contin Educ Health Prof. 1997;17:97–105. [Google Scholar]

- Bero L, Grilli R, Grimshaw JM, Mowatt G, Oxman A, Zwarenstein M. Cochrane effective practice and organization of care review group. the Cochrane Library. 2001.

- Campbell M, Grimshaw J, Steen N. Sample size calculations for cluster randomised trials. Changing Professional Practice in Europe Group. J Health Serv Res Policy. 2000;5:12–6. doi: 10.1177/135581960000500105. [DOI] [PubMed] [Google Scholar]

- Hay JA, Maldonado L, Weingarten SR, Ellrodt AG. Prospective evaluation of a clinical guideline recommending hospital length of stay in upper gastrointestinal tract hemorrhage. JAMA. 1997;278:2151–2156. doi: 10.1001/jama.278.24.2151. [DOI] [PubMed] [Google Scholar]

- Overhage JM, Tierney WM, Zhou XH, McDonald CJ. A randomized trial of "corollary orders" to prevent errors of omission. J Am Med Inform Assn. 1997;4:364–375. doi: 10.1136/jamia.1997.0040364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leviton LC, Goldenberg RL, Baker CS, Schwartz RM, Freda MC, Fish LJ, Cliver SP, Rouse DJ, Chazotte C, Merkatz IR, Raczynski JM. Methods to encourage the use of antenatal corticosteroid therapy for fetal maturation: a randomized controlled trial. JAMA. 1999;281:46–52. doi: 10.1001/jama.281.1.46. [DOI] [PubMed] [Google Scholar]

- Weingarten S, Riedinger MS, Conner L, Johnson B, Ellrodt AG. Reducing lengths of stay in the coronary care unit with a practice guideline for patients with congestive heart failure Insights from a controlled clinical trial. Med Care. 1994;32:1232–1243. doi: 10.1097/00005650-199412000-00006. [DOI] [PubMed] [Google Scholar]

- Weingarten S. Practice guidelines and reminders to reduce duration of hospital stay for patients with chest pain. An interventional trial. Ann Intern Med. 1994;120:257–263. doi: 10.7326/0003-4819-120-4-199402150-00001. [DOI] [PubMed] [Google Scholar]

- Kong GK, Belman MJ, Weingarten S. Reducing length of stay for patients hospitalized with exacerbation of COPD by using a practice guideline. Chest. 1997;111:89–94. doi: 10.1378/chest.111.1.89. [DOI] [PubMed] [Google Scholar]

- Weingarten S, Riedinger MS, Hobson P, Noah MS, Johnson B, Giugliano G, Norian J, Belman MJ, Ellrodt AG. Evaluation of a pneumonia practice guideline in an interventional trial. Am J Resp Crit Care. 1996;153:1110–1115. doi: 10.1164/ajrccm.153.3.8630553. [DOI] [PubMed] [Google Scholar]

- Fowkes FG, Davies ER, Evans KT, Green G, Hartley G, Hugh AE, Nolan DJ, Power AL, Roberts CJ, Roylance J. Multicentre trial of four strategies to reduce use of a radiological test. Lancet. 1986;1:367–370. doi: 10.1016/S0140-6736(86)92327-5. [DOI] [PubMed] [Google Scholar]

- Robinson MB, Thompson E, Black NA. Evaluation of the effectiveness of guidelines, audit and feedback: improving the use of intravenous thrombolysis in patients with suspected acute myocardial infarction. Int J Qual Health Care. 1996;8:211–222. doi: 10.1016/1353-4505(96)00033-6. [DOI] [PubMed] [Google Scholar]

- Soumerai SB, McLaughlin TJ, Gurwitz J, Guagagnoli E, Hauptman PL, Borbas C, Morris N, McLaughlin B, Gao X, Willison DJ, Asinger R, Gobel F. Effect of local medical opinion leaders on quality of care for acute myocardial infarction: a randomized controlled trial. JAMA. 1998;279:1358–1363. doi: 10.1001/jama.279.17.1358. [DOI] [PubMed] [Google Scholar]

- Wirtschafter DD, Sumners J, Jackson JR, Brooks CM, Turner M. Continuing medical education using clinical algorithms. A controlled-trial assessment of effect on neonatal care. Am J Dis Child. 1986;140:791–797. doi: 10.1001/archpedi.1986.02140220073036. [DOI] [PubMed] [Google Scholar]

- Soumerai SB, Salem-Schatz S, Avorn J, Casteris CS, Ross-degnan D, Popovsky MA. A controlled trial of educational outreach to improve blood transfusion practice. JAMA. 1993;270:961–966. doi: 10.1001/jama.270.8.961. [DOI] [PubMed] [Google Scholar]

- Anderson FA, Jr, Wheeler HB, Goldberg RJ, Hosmer DW, Forier A, Patwardhan NA. Changing clinical practice. Prospective study of the impact of continuing medical education and quality assurance programs on use of prophylaxis for venous thromboembolism. Arch Intern Med. 1994;154:669–677. doi: 10.1001/archinte.154.6.669. [DOI] [PubMed] [Google Scholar]

- Sommers LS, Sholtz R, Shepherd RM, Starkweather DB. Physician involvement in quality assurance. Med Care. 1984;22:1115–1138. doi: 10.1097/00005650-198412000-00006.

- Overhage JM, Tierney WM, McDonald CJ. Computer reminders to implement preventive care guidelines for hospitalized patients. Arch Intern Med. 1996;156:1551–1556. doi: 10.1001/archinte.156.14.1551.

- Lomas J, Enkin MW, Anderson GM, Hannah WJ, Vayda E, Singer J. Opinion leaders vs audit and feedback to implement practice guidelines. Delivery after previous cesarean section. JAMA. 1991;265:2202–2207. doi: 10.1001/jama.265.17.2202.

- Dranitsaris G, Warr D, Puodziunas A. A randomized trial of the effects of pharmacist intervention on the cost of antiemetic therapy with ondansetron. Supportive Care in Cancer. 1995;3:183–189. doi: 10.1007/BF00368888.

- Danchaivijitr S, Chokloikaew S, Tangtrakool T, Waitayapiches S. Does indication sheet reduce unnecessary urethral catheterization? J Med Assoc Thai. 1992;75:1–5.

- Girotti MJ, Fodoruk S, Irvine-Meek J, Rotstein OD. Antibiotic handbook and pre-printed perioperative order forms for surgical antibiotic prophylaxis: do they work? Can J Surg. 1990;33:385–388.

- MacCosbe PE, Gartenberg G. Modifying empiric antibiotic prescribing: experience with one strategy in a medical residency program. Hosp Formul. 1985;20:986–999.

- Struewing JP, Pape DM, Snow DA. Improving colorectal cancer screening in a medical residents' primary care clinic. Am J Prev Med. 1991;7:75–81.

- Aucott JN, Pelecanos E, Dombrowski R, Fuehrer SM, Laich J, Aron DC. Implementation of local guidelines for cost-effective management of hypertension. A trial of the firm system. J Gen Intern Med. 1996;11:139–146. doi: 10.1007/BF02600265.

- Cheney C, Ramsdell JW. Effect of medical records' checklists on implementation of periodic health measures. Am J Med. 1987;83:129–136. doi: 10.1016/0002-9343(87)90507-9.

- Cohen DI, Littenberg B, Wetzel C, Neuhauser D. Improving physician compliance with preventive medicine guidelines. Med Care. 1982;20:1040–1045. doi: 10.1097/00005650-198210000-00006.

- Cohen SJ, Christen AG, Katz BP, Drook CA, Davis BJ, Smith DM, Stookey GK. Counseling medical and dental patients about cigarette smoking: the impact of nicotine gum and chart reminders. Am J Public Health. 1987;77:313–316. doi: 10.2105/ajph.77.3.313.

- Litzelman DK, Dittus RS, Miller ME, Tierney WM. Requiring physicians to respond to computerized reminders improves their compliance with preventive care protocols. J Gen Intern Med. 1993;8:311–317. doi: 10.1007/BF02600144.

- McDonald CJ, Hui SL, Smith DM, Tierney WM, Cohen SJ, Weinberger M, McCabe GP. Reminders to physicians from an introspective computer medical record. A two-year randomized trial. Ann Intern Med. 1984;100:130–138. doi: 10.7326/0003-4819-100-1-130.

- Thomas JC, Moore A, Qualls PE. The effect on cost of medical care for patients treated with an automated clinical audit system. J Med Syst. 1983;7:307–313. doi: 10.1007/BF00993294.

- Herman CJ, Speroff T, Cebul RD. Improving compliance with immunization in the older adult: results of a randomized cohort study. J Am Geriatr Soc. 1994;42:1154–1159. doi: 10.1111/j.1532-5415.1994.tb06981.x.

- Mayefsky JH, Foye HR. Use of a chart audit: teaching well child care to paediatric house officers. Med Educ. 1993;27:170–174. doi: 10.1111/j.1365-2923.1993.tb00248.x.

- Robie PW. Improving and sustaining outpatient cancer screening by medicine residents. South Med J. 1988;81:902–905. doi: 10.1097/00007611-198807000-00022.

- Nattinger AB, Panzer RJ, Janus J. Improving the utilization of screening mammography in primary care practices. Arch Intern Med. 1989;149:2087–2092. doi: 10.1001/archinte.149.9.2087.

- Nilasena DS, Lincoln MJ. A computer-generated reminder system improves physician compliance with diabetes preventive care guidelines. Proc Annu Symp Comput Appl Med Care. 1995:640–645.

- Zenni EA, Robinson TN. Effects of structured encounter forms on pediatric house staff knowledge, parent satisfaction, and quality of care. A randomized, controlled trial. Arch Pediat Adol Med. 1996;150:975–980. doi: 10.1001/archpedi.1996.02170340089017.

- McDonald CJ. Use of a computer to detect and respond to clinical events: its effect on clinician behavior. Ann Intern Med. 1976;84:162–167. doi: 10.7326/0003-4819-84-2-162.

- Callahan CM, Hendrie HC, Dittus RS, Brater DC, Hui SL, Tierney WM. Improving treatment of late life depression in primary care: a randomized clinical trial. J Am Geriatr Soc. 1994;42:839–846. doi: 10.1111/j.1532-5415.1994.tb06555.x.

- Marton KI, Tul V, Sox HC, Jr. Modifying test-ordering behavior in the outpatient medical clinic. A controlled trial of two educational interventions. Arch Intern Med. 1985;145:816–821. doi: 10.1001/archinte.145.5.816.

- Mazzuca SA, Vinicor F, Einterz RM, Tierney WM, Norton JA, Kalasinski LS. Effects of the clinical environment on physicians' response to postgraduate medical education. American Educational Research Journal. 1990;27:473–488. doi: 10.2307/1162932.

- Becker DM, Gomez EB, Kaiser DL, Yoshihasi A, Hodge RH. Improving preventive care at a medical clinic: how can the patient help? Am J Prev Med. 1989;5:353–359.

- Rogers JL, Haring OM, Wortman PM, Watson RA, Goetz JP. Medical information systems: assessing impact in the areas of hypertension, obesity and renal disease. Med Care. 1982;20:63–74. doi: 10.1097/00005650-198201000-00005.

- Headrick LA, Speroff T, Pelecanos HI, Cebul RD. Efforts to improve compliance with the National Cholesterol Education Program guidelines. Results of a randomized controlled trial. Arch Intern Med. 1992;152:2490–2496. doi: 10.1001/archinte.152.12.2490.

- Belcher DW. Implementing preventive services. Success and failure in an outpatient trial. Arch Intern Med. 1990;150:2533–2541. doi: 10.1001/archinte.150.12.2533.

- Safran C, Rind DM, Davis RB, Ives D, Sands DZ, Currier J, Slack WV, Makadon HJ, Cotton DJ. Guidelines for management of HIV infection with computer-based patient's record. Lancet. 1995;346:341–346. doi: 10.1016/S0140-6736(95)92226-1.

- Gonzalez JJ, Ranney J, West J. Nurse-initiated health promotion prompting system in an internal medicine residents' clinic. South Med J. 1989;82:342–344. doi: 10.1097/00007611-198903000-00016.

- Brady WJ, Hissa DC, McConnell M, Wones R. Should physicians perform their own quality assurance audits? J Gen Intern Med. 1988;3:560–565. doi: 10.1007/BF02596100.

- Hopkins JA, Shoemaker WC, Greenfield S. Treatment of surgical emergencies with and without an algorithm. Arch Surg. 1980;115:745–750. doi: 10.1001/archsurg.1980.01380060043011.

- Vissers MC, Biert J, Linden CJ vd, Hasman A. Effects of a supportive protocol processing system (ProtoVIEW) on clinical behaviour of residents in the accident and emergency department. Comp Methods Programs Biomed. 1996;49:177–184. doi: 10.1016/0169-2607(95)01714-3.

- Auleley GR, Ravaud P, Giraudeau B, Kerboull L, Nizard R, Massin P, Garreau de Loubresse C, Vallee C, Durieux P. Implementation of the Ottawa ankle rules in France. A multicenter randomized controlled trial. JAMA. 1997;277:1935–1939. doi: 10.1001/jama.277.24.1935.

- Lee TH, Pearson SD, Johnson PA, Garcia TB, Weisberg MC, Guadagnoli E, Cook EF, Goldman L. Failure of information as an intervention to modify clinical management. A time-series trial in patients with acute chest pain. Ann Intern Med. 1995;122:434–437. doi: 10.7326/0003-4819-122-6-199503150-00006.

- Anonymous. In: CCQE-AHCPR guideline criteria project: develop, apply, and evaluate medical review criteria and educational outreach based upon practice guidelines: final project report. Jefferson St, editor. Rockville, MD: U.S. Dept. of Health and Human Services, Public Health Service, Agency for Health Care Policy and Research; 1996.

- Chassin MR. A randomized trial of medical quality assurance. Improving physicians' use of pelvimetry. JAMA. 1986;256:1012–1016. doi: 10.1001/jama.256.8.1012.

- Szilagyi PG, Rodewald LE, Humiston SG, Pollard L, Klossner K, Jones AM, Barth R, Woodin KA. Reducing missed opportunities for immunizations. Easier said than done. Arch Pediat Adol Med. 1996;150:1193–1200. doi: 10.1001/archpedi.1996.02170360083014.

- Burack RC, Gimotty PA, George J, Stengle W, Warbasse L, Moncrease A. Promoting screening mammography in inner-city settings: a randomized controlled trial of computerized reminders as a component of a program to facilitate mammography. Med Care. 1994;32:609–624. doi: 10.1097/00005650-199406000-00006.

- Evans AT, Rogers LQ, Peden JG, Jr, Seelig CB, Layne RD, Levine MA, Levin ML, Grossman RS, Darden PM, Jackson SM, Ammerman AS, Settle MB, Stritter FT, Fletcher SW. Teaching dietary counseling skills to residents: patient and physician outcomes. The CADRE Study Group. Am J Prev Med. 1996;12:259–265.

- Garside P. The learning organisation: a necessary setting for improving care? Qual Health Care. 1999;8:211. doi: 10.1136/qshc.8.4.211.

Supplementary Materials

Studies to implement guidelines at hospitals. The reviewed studies are listed with details on type of study, setting, target, number of patients, intervention strategies and post-study percentages on the primary outcome measure.