Abstract

This study extended the limited research on the utility of tactile prompts by examining the effects of a treatment package on instructional assistants' implementation of a token economy in a classroom for students with disabilities. During baseline, we measured how accurately the assistants implemented a classroom token economy on the basis of the routine training they had received through the school system. Baseline was followed by brief in-service training, which produced little or no consistent improvement in token-economy implementation by these recently hired instructional assistants. A treatment package of tactile prompting and self-monitoring with accuracy feedback was then introduced in a multiple baseline design across behaviors. The treatment package was successfully faded to a more manageable self-monitoring intervention. Visual inspection showed clear improvements for all participants during observation sessions.

Keywords: staff training, self-monitoring, tactile prompt, classroom management

Instructional assistants and other public school employees are not necessarily trained to work with students with disabilities. Standard approaches, such as classroom rules and reprimands, are sufficient to support the behavior of some students but may not be effective in controlling the behavior of others. Such uncontrolled problem behaviors can interfere with otherwise effective instruction (Gable, Quinn, Rutherford, Howell, & Hoffman, 1999). Thus, effective and efficient staff training methods are needed to train instructional assistants to manage classroom behavior problems. One strategy that has been used to promote desirable behaviors among children with disabilities involves the use of vibrating pagers to provide tactile prompts at times when it is appropriate for the child to emit the target response.

Three studies have evaluated the effectiveness of tactile prompts delivered via vibrating pagers among children with disabilities (Shabani et al., 2002; Taylor, Hughes, Richard, Hoch, & Coello, 2004; Taylor & Levin, 1998). Taylor and Levin used a vibrating pager to prompt 1 student with autism to make verbal initiations about play activities. In a multiphase multielement design, tactile prompts increased these initiations more than verbal prompts or no prompts did. Shabani et al. systematically replicated this finding with 3 students with autism: an ABAB design showed increased verbal initiations for all 3 participants and increased responding to peer initiations for 2 participants. Finally, Taylor et al. used a multiple baseline probe design to show that tactile prompts delivered via a vibrating pager to teenagers with autism increased their use of communication cards to request help. The literature on vibrating pagers as tactile prompts thus shows promise for participants with autism who lack sufficient social skills.

The present study extended this literature by using tactile prompts delivered via a vibrating pager to increase instructional assistants' accurate implementation of a classroom token economy. Self-monitoring was included as a component because it is a widely used and well-established intervention. Self-monitoring involves an individual's noting whether a target behavior has occurred, usually followed by self-recording of that behavior (Harris, 1986). It has been used successfully to improve the behavior of staff whose positions require interaction with persons with disabilities (Burgio et al., 1990; Richman, Riordan, Reiss, Pyles, & Bailey, 1988).

Method

Participants and Setting

Three female instructional assistants were selected because they had less than 1 year of experience in their current positions and worked in a classroom with behavioral support. None had ever knowingly participated in a research study or had contact with tactile-prompt or self-monitoring interventions. All participants worked in the same class for third through fifth graders who had been diagnosed with a variety of disabilities and who needed behavioral support.

The classroom in which the study was conducted was self-contained, with 11 students who had been referred for severe behavior problems. All students participated in a token-economy point system created by the behavioral support team. Under this system, students began each day with a new point sheet. Each sheet displayed a table with three columns titled “Polite words and gestures,” “Respecting classroom order,” and “Following directions.” The table was further divided into half-hour time frames of the school day. The numbers 5 through 1 were listed for every behavior category and time frame, representing the points students could earn. Students began each day with all of their points, and points were removed for inappropriate behavior throughout the day. A blank column was available for bonus points earned for good behavior. Points were exchanged weekly for prizes. (Please contact the first author for a more detailed description of the token economy and its implementation.)
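The point sheet described above can be pictured as a small data structure. The following Python sketch is illustrative only; the class and method names, the number of time frames, and the rule that a cell cannot drop below zero are assumptions for the example, not details of the system the behavioral support team built.

```python
from dataclasses import dataclass, field

# Column titles taken from the point sheet described in the text.
CATEGORIES = (
    "Polite words and gestures",
    "Respecting classroom order",
    "Following directions",
)
MAX_POINTS = 5  # each cell lists 5 through 1; students start with all points


@dataclass
class PointSheet:
    """Hypothetical model of one student's daily point sheet."""
    n_frames: int  # number of half-hour time frames (assumed; depends on the day)
    points: dict = field(init=False)
    bonus: list = field(init=False)

    def __post_init__(self):
        # Every category starts each time frame at the maximum.
        self.points = {c: [MAX_POINTS] * self.n_frames for c in CATEGORIES}
        # Separate bonus column: one tally per time frame.
        self.bonus = [0] * self.n_frames

    def remove_point(self, category: str, frame: int) -> None:
        """Remove one point for inappropriate behavior (assumed floor of 0)."""
        row = self.points[category]
        row[frame] = max(0, row[frame] - 1)

    def add_bonus(self, frame: int, n: int = 1) -> None:
        """Record bonus points earned for good behavior."""
        self.bonus[frame] += n

    def total(self) -> int:
        """Points on this sheet available for the weekly prize exchange."""
        return sum(sum(row) for row in self.points.values()) + sum(self.bonus)
```

For example, a sheet with two time frames starts at 30 points; removing one "Following directions" point leaves 29.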

The primary diagnosis for 7 of the students was emotionally handicapped; 2 had been diagnosed primarily as language impaired, 1 with Asperger's syndrome, and 1 as educable mentally handicapped. Nine students were African-American, and the other 2 were Caucasian. There were 9 boys and 2 girls whose ages ranged from 10 to 14 years.

Of the many people usually present in the class, only the instructional assistants' behaviors were recorded and targeted. Students were observed only because their behavior created opportunities for participants to perform the target behaviors; no individual student's behavior was recorded. The classroom teacher was asked to proceed as she normally did, although she occasionally had to be reminded to allow the assistants to perform the target behaviors. On occasions when she did not allow them the opportunity to respond, the experimenters did not score a missed opportunity. A behavior specialist who was assigned to the class left the room during experimental sessions.

Design and Procedure

The current study used a moving-treatments multiple baseline across behaviors design (Bailey & Burch, 2002). Baseline was followed by one session of training, and then target behavior was measured until it became relatively stable for all participants. Next, prompting, self-monitoring, and accuracy feedback were applied to the first dependent variable—managing disruptions. Once responding was stable for all participants, the prompting component of the intervention was removed from the first behavior. The entire treatment package (prompting, self-monitoring, and accuracy feedback) was then applied to the next dependent variable—bonus-point delivery. When visual inspection revealed that bonus-point delivery was consistently at or above the goal, the prompting component was then removed from bonus-point delivery and was applied to the final response—prompting appropriate behavior. Thus, the prompting component moved across behaviors but self-monitoring with accuracy feedback continued through the end of the study.

Baseline

Data were collected under normal classroom conditions. Participants were aware that they were being observed but not of the variables of interest. Observers sat as discreetly as possible and used a clipboard and data sheet to record opportunities for the participants to perform target behaviors.

Training

When visual analysis of the baseline data revealed low rates of the target behaviors, one training session was held to discuss the expectations for participants. The first author met with the participants in a small office connected to the classroom, where she introduced the goals, procedures, dependent variables, and expectations. She clarified the tasks and discussed and modeled example scenarios. Participants then took a written posttest in which they were asked to identify antecedents and appropriate responses. The session lasted approximately 30 min and ended when all participants had answered all posttest questions correctly.

After the training session, data were collected in a manner identical to that in the baseline condition. This phase continued until responding was relatively stable for all participants and dependent variables.

Prompting, Self-monitoring, and Accuracy Feedback

Immediately after the last session of the training phase, the first author met briefly with participants in the training room to demonstrate the use of the pager and the self-monitoring form. She gave the pager to each participant, who chose either to clip it to her pants or to put it in her pocket. Next, the experimenter used a remote controller to activate the pager. The experimenter explained that the vibrations would indicate an opportunity for the participant to manage a disruption and would later indicate the time to perform a different behavior (bonus-point delivery or prompting appropriate student behavior). The experimenter told the participants that they were welcome to perform these responses without being prompted, but that it was not “bad” if paging was required for them to perform the target behavior.

At the start of each session, participants were reminded of all responses the observers were recording, as well as the one that would be prompted. The vibrating pager was given to the participant to clip to her pants or keep in her pocket. The experimenter and, when applicable, a second observer sat in the corner with the remote controller. When observers noted an opportunity for the response of interest (first managing disruptions, then bonus-point delivery, and finally prompting appropriate behavior), they waited 3 to 5 s to allow the participant to notice the opportunity and respond appropriately. For managing disruptions, an opportunity occurred any time a student was disrupting someone in the class. For bonus-point delivery, the opportunity was the passage of 9 min without any bonus-point delivery (this allowed the participant 1 min to locate a student who was performing a positive social or academic behavior before the end of the session). For prompting appropriate student behavior, an opportunity was defined as any time a student was noticeably off task but was not disrupting others, such as resting his cheek on his desk and closing his eyes during a math lesson. When the participant did not respond to an opportunity, the experimenter activated the pager with the remote controller to prompt her to respond. If she did not respond to the pager or performed the behavior incorrectly, the opportunity was scored as missed. If she responded correctly to the prompt, the response was scored as correct. The experimenter and any other trained observers recorded the presence or absence of opportunities and correct responses. The three dependent variables are described in further detail below.
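The prompting rules above can be summarized as a scoring procedure for a single opportunity. The Python sketch below is a hypothetical illustration, not the procedure the observers used: it assumes a fixed 5-s prompt delay (the text gives a 3- to 5-s range), scores an unprompted incorrect response as a missed opportunity without a page, and treats the bonus-point opportunity as 9 min of a session elapsing with no bonus-point delivery. All function and constant names are invented for the example.

```python
from enum import Enum
from typing import Optional, Tuple

PROMPT_DELAY_S = 5.0          # assumed value within the 3- to 5-s range in the text
BONUS_OPPORTUNITY_S = 9 * 60  # 9 min into a 10-min session with no bonus-point delivery


class Outcome(Enum):
    CORRECT_UNPROMPTED = "correct without a page"
    CORRECT_PROMPTED = "correct after a page"
    MISSED = "missed opportunity"


def score_opportunity(latency_s: Optional[float], correct: bool) -> Tuple[Outcome, bool]:
    """Score one opportunity and report whether the pager was activated.

    latency_s: seconds from the onset of the opportunity to the participant's
        response, or None if she never responded (not even after a page).
    correct: whether that response was the correct target behavior.
    """
    if latency_s is not None and latency_s <= PROMPT_DELAY_S:
        # She responded on her own before the prompt delay ran out; no page.
        # Assumption: an incorrect unprompted response is scored as missed.
        return (Outcome.CORRECT_UNPROMPTED if correct else Outcome.MISSED), False
    # No response within the delay, so the observer activates the pager.
    if latency_s is None or not correct:
        return Outcome.MISSED, True
    return Outcome.CORRECT_PROMPTED, True


def is_bonus_opportunity(elapsed_s: float, bonus_delivered: bool) -> bool:
    """True once 9 min of the session pass with no bonus-point delivery."""
    return elapsed_s >= BONUS_OPPORTUNITY_S and not bonus_delivered
```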

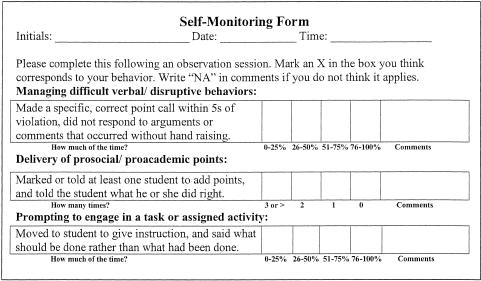

After the intervention was implemented for managing disruptions and its level reached 100% for three consecutive sessions, the intervention package was introduced for bonus-point delivery. At this time, the self-monitoring form that participants completed after sessions was extended to include bonus-point delivery. After the goal of one occasion of bonus-point delivery was met for three sessions, the intervention was introduced for prompting appropriate behavior. The tactile prompt was then made contingent on the opportunity to prompt student behavior, and the self-monitoring form was extended to include this behavior. Figure 1 displays the self-monitoring form given to participants when all three behaviors had been exposed to the intervention.

Figure 1. Self-monitoring form completed by participants during and after the prompting, self-monitoring, and accuracy feedback phase for prompting appropriate behavior.

Participants were given the form immediately after the session ended. The observer usually collected it within 5 min, although a maximum of 30 min was permitted. Longer delays occasionally occurred when a participant was called out of the classroom after a session, for example when students needed escorts or other staff needed help. On such occasions, the observer had the participant complete the form and delivered feedback as soon as the participant returned.

The form required the participant to put a check mark in a box corresponding to the percentage of opportunities to which she believed she had responded correctly. For the behavior being prompted, this meant recalling how well she had responded to the vibrating prompts. For behaviors no longer being prompted, this meant recording the percentage of times she felt she had identified the need to respond and had done so correctly. The experimenter assessed the accuracy of the self-monitoring form by comparing the participant's responses with the experimenter's observations. The experimenter then calculated the actual percentage, recorded it on the back of the form, and returned the form to the participant for review, discreetly describing any discrepancy. Typical feedback involved the experimenter pointing to the actual percentage correct while stating, “I would have marked this percentage for managing disruptive behavior, because you missed one opportunity when a child slammed his books on the desk.”

Self-monitoring with Accuracy Feedback

When participants responded appropriately to prompts 100% of the time and recorded this correctly 100% of the time for three consecutive sessions, the tactile prompt was removed from the current dependent variable and applied with self-monitoring and accuracy feedback to the next dependent variable. The self-monitoring form then included the target behaviors that were previously exposed to the intervention as well as the new one being targeted. Accuracy feedback was still delivered following completion of the form.
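The phase-change rule (100% correct responding and 100% accurate self-recording for three consecutive sessions) can be written as a simple check. This Python sketch is hypothetical; in particular, skipping sessions in which no opportunities occurred is an assumption, since the text does not say how such sessions were counted.

```python
from typing import Optional, Sequence, Tuple

# (% correct responding, % self-monitoring accuracy); None means no opportunities.
Session = Tuple[Optional[float], Optional[float]]


def meets_criterion(sessions: Sequence[Session], run_length: int = 3) -> bool:
    """True if the most recent `run_length` scored sessions all show 100% correct
    responding and 100% accurate self-recording.

    Sessions with no opportunities (None) are skipped; that handling is assumed.
    """
    scored = [s for s in sessions if s[0] is not None and s[1] is not None]
    if len(scored) < run_length:
        return False
    return all(pct == 100 and acc == 100 for pct, acc in scored[-run_length:])
```

Once the check returns True for the behavior currently being prompted, the tactile prompt would move to the next behavior in the sequence.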

Maintenance

During maintenance, session length was gradually increased from 10 to 60 min. The experimenter told the participants that data were still being collected and that sessions would become longer. Participants were also told that they would not be informed when data were being collected. The experimenter and other observers increased the amount of time they spent in the classroom. They did not spend the entire time collecting data; instead, they also helped with tasks around the classroom, graphed data, or helped students. When observers did collect data, they did so discreetly, with the data-collection forms beneath other paperwork on their clipboards. These procedures were intended to decrease the likelihood that the observers or their data sheets would function as discriminative stimuli for participants' target behaviors.

During maintenance, sessions were first extended to match academic lessons within the class. Such a lesson lasted about 30 min, so an observer collected data for the lesson and then approached the participant of interest during the transition between class activities. The observer wrote the time frame on the form and handed it to the participant while explaining the routine. An example of such an explanation was, “Fran, I just watched you during math. Will you please take a moment to mark how you think you did?” Sessions were then extended to 60 min. Because these sessions did not typically end with an academic period, the experimenter waited for a break in the lesson to tell the participant she had been observed and to ask her to complete the form. If a participant left the room for more than 5 min during one of these extended sessions, data were not collected during the absence and the entire session was repeated.

The form was the same during maintenance as with the other phases, with one exception. At this time, instead of asking the participant to mark the number of times she delivered bonus points, she was asked to mark how close to the goal she had been. Accuracy feedback was still delivered, although the bonus-points data were calculated and graphed as an average per 10 min.
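Averaging bonus-point deliveries per 10 min makes 30- and 60-min maintenance sessions comparable with the original 10-min sessions. The Python sketch below shows that arithmetic; the function names are hypothetical, and the goal check assumes the criterion of one delivery per 10 min described under Dependent Measures.

```python
GOAL_PER_10_MIN = 1  # at least one bonus-point delivery every 10 min


def bonus_rate_per_10_min(deliveries: int, session_min: float) -> float:
    """Average number of bonus-point deliveries per 10 min of observation."""
    if session_min <= 0:
        raise ValueError("session length must be positive")
    return deliveries * 10.0 / session_min


def meets_bonus_goal(deliveries: int, session_min: float) -> bool:
    """Hypothetical check of whether the averaged rate reaches the goal."""
    return bonus_rate_per_10_min(deliveries, session_min) >= GOAL_PER_10_MIN
```

An average of one delivery per 10 min can be reached even if some 10-min stretches contained no delivery, so the averaged measure is somewhat more lenient than the per-session criterion used in the 10-min sessions.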

Data Collection

Sessions occurred during academic times of normal school days. They lasted 10 min per participant and occurred three times per day at most. Data were recorded on paper data sheets or a laptop computer. Observers sat as unobtrusively as possible while collecting data. To decrease reactivity, observers were present in the class for several weeks before collecting the data used in the study.

Observers recorded on the data-collection form every opportunity for the participant to respond, as well as whether the response was correct or incorrect. A response was scored as correct if an opportunity to perform a target behavior was followed by the appropriate staff behavior. A response was scored as incorrect if an opportunity was followed by no staff behavior or by inappropriate staff behavior, or if staff performed a target response in the absence of an opportunity. At the end of each session, the percentage of correctly performed behaviors was calculated as the number of correct responses divided by the sum of correct and incorrect responses, multiplied by 100%. The exception was bonus-point delivery, which was recorded as frequency data. During intervention phases, observers also recorded the occurrence of the independent variables and whether they considered their presence (or absence) correct or incorrect.
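The percentage calculation above can be sketched in Python as follows. Returning no value when a session had no scored responses is an assumption chosen to mirror the sessions plotted without data points; bonus-point delivery, recorded as frequency, would not use this function.

```python
from typing import Optional


def percent_correct(correct: int, incorrect: int) -> Optional[float]:
    """Percentage of correctly performed behaviors in one session:
    correct / (correct + incorrect) * 100.

    Returns None when nothing was scored (assumed to correspond to a session
    plotted without a data point).
    """
    total = correct + incorrect
    if total == 0:
        return None
    return correct / total * 100.0
```

For example, three correct responses and one incorrect response give 75%.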

Dependent Measures

Dependent variables were identified on the basis of observations of an exemplar class and specific requests by the classroom teacher. Pilot observations revealed that all instructional assistants exhibited the behaviors of interest on occasion, but not with sufficient frequency or consistency. The staff behaviors all related to implementation of the token-economy point system.

Managing Disruptive Behavior

An opportunity to manage disruptive student behavior was identified when a student disrupted other people in the class either verbally or physically. A correct response to this opportunity was marked when the participant told the student to remove a point from the appropriate area (following directions, respecting classroom order, or polite words and gestures) of his or her recording sheet. Data were collected and graphed as a percentage of correct participant behavior.

Delivering Bonus Points and Praise

Bonus-point delivery was recorded as correct when the participant praised the student and marked, or told the student to mark, at least one bonus point every 10 min. Praise was defined as telling a student what he or she had done to earn the bonus point. The number of bonus points delivered per occasion was not collected or graphed. Instead, data were graphed as frequency of bonus-point deliveries per session.

Prompting Appropriate Behavior

Opportunities to prompt appropriate student behavior were identified when a student was not engaged in the expected activity for at least 5 s. At this time, instructional assistants were expected to redirect the student to behave appropriately. A correct response was recorded if the participant stood within 1 m of the student and told the student specifically what behavior he or she should perform. These data were represented as a percentage of correct participant behavior.

Interobserver Reliability and Treatment Integrity

Two independent observers recorded the presence or absence of all relevant variables so that agreement on the occurrence and nonoccurrence of the dependent and independent variables could be assessed. This procedure was used to evaluate the reliability of the data-collection methods and, when applicable, the integrity of the independent variables.

To assess interobserver reliability of the dependent variables, agreement on the presence or absence of correct responses was calculated with a tolerance of ±1 interval: the number of intervals on which the observers agreed (±1 interval) was divided by the total number of intervals and multiplied by 100%. Two independent observers scored a total of 45% of sessions (range, 26% to 55%), and mean agreement across all dependent variables and participants was 96% (range, 94% to 100%).
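Because the ±1-interval rule can be read in more than one way, the Python sketch below makes one interpretation explicit: an interval counts as an agreement if both observers scored it the same way, or if an occurrence scored by one observer is matched by an occurrence scored by the other within one interval. This interpretation, the representation of each record as a list of interval scores, and the function names are assumptions; the text does not state the interval length or the exact matching rule.

```python
from typing import Sequence


def _has_within(record: Sequence[bool], i: int, window: int) -> bool:
    """True if `record` scores an occurrence within `window` intervals of i."""
    lo, hi = max(0, i - window), min(len(record), i + window + 1)
    return any(record[lo:hi])


def ioa_plus_minus(obs_a: Sequence[bool], obs_b: Sequence[bool], window: int = 1) -> float:
    """Percentage of intervals on which two observers agree, allowing a
    +/- `window` interval tolerance for scored occurrences."""
    if len(obs_a) != len(obs_b) or not obs_a:
        raise ValueError("records must be non-empty and the same length")
    agreements = 0
    for i, (a, b) in enumerate(zip(obs_a, obs_b)):
        if a == b:
            agreements += 1  # exact agreement on occurrence or nonoccurrence
        elif a and _has_within(obs_b, i, window):
            agreements += 1  # A's occurrence matched by B within the window
        elif b and _has_within(obs_a, i, window):
            agreements += 1  # B's occurrence matched by A within the window
    return agreements / len(obs_a) * 100.0
```

Under this reading, a one-interval offset between observers counts as agreement, whereas setting `window=0` reduces the calculation to exact interval-by-interval agreement.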

Reliability of the independent variables was also measured throughout the study. During intervention sessions, all observers recorded the presence or absence of paging and form completion and assessed whether each followed the expected protocol. Total agreement for each independent variable was calculated as the number of correct implementations divided by the number of opportunities to implement, multiplied by 100%. Overall, 50% of intervention sessions (range, 29% to 87%) were evaluated for treatment integrity. The experimenter's correct implementation of paging and of ensuring that self-monitoring forms were completed averaged 98% (range, 86% to 100%).
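The treatment integrity calculation above reduces to a ratio per independent variable. In this hypothetical Python sketch, each independent variable maps to a count of correct implementations and a count of opportunities; the labels and the decision to omit variables with no opportunities in a session are assumptions for illustration.

```python
from typing import Dict, Tuple


def integrity_by_iv(tallies: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """Percentage of correct implementations for each independent variable.

    `tallies` maps a variable name to (correct implementations, opportunities).
    """
    result: Dict[str, float] = {}
    for iv, (correct, opportunities) in tallies.items():
        if opportunities == 0:
            continue  # assumed: no opportunity, so no integrity score for this variable
        if not 0 <= correct <= opportunities:
            raise ValueError(f"{iv}: correct count must be between 0 and {opportunities}")
        result[iv] = correct / opportunities * 100.0
    return result
```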

Social Validity

Participants anonymously completed questionnaires that asked how they felt about the independent variables, whether they felt the target behaviors were helpful, and how much their own behavior improved. They responded to each question using a 5-point Likert scale.

Results

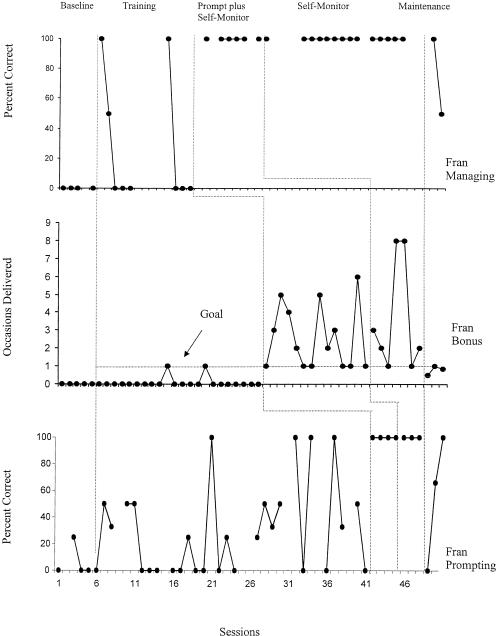

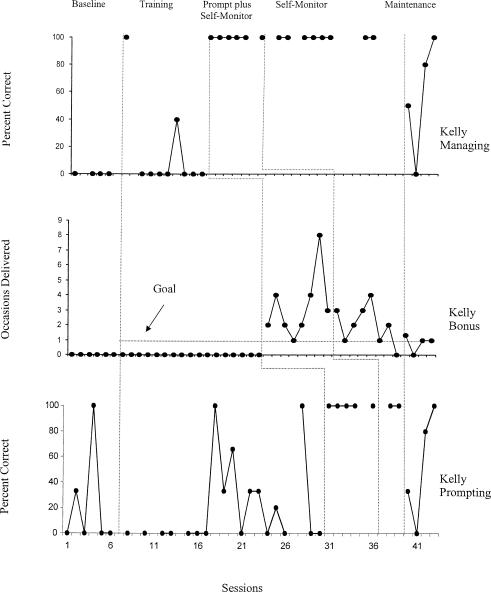

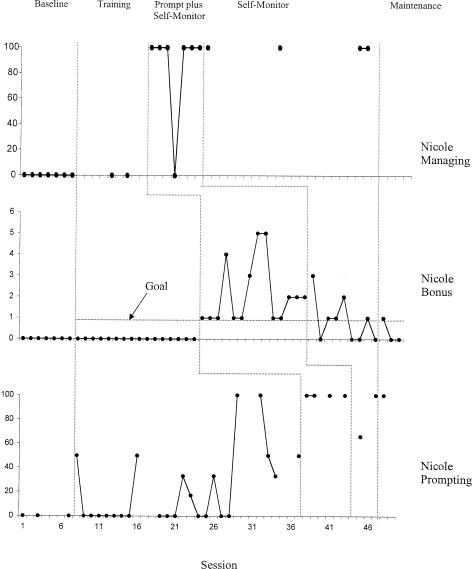

Figures 2, 3, and 4 display the data collected for Fran, Kelly, and Nicole, respectively. Those sessions without data points represent times when data were collected but no opportunities to perform that behavior were present (e.g., no student displayed a disruptive behavior so the participant did not have an opportunity to correct one).

Figure 2. Levels of managing disruptive student behavior, delivery of bonus points, and prompting appropriate student behavior during baseline and treatment phases for Fran.

Figure 3. Levels of managing disruptive student behavior, delivery of bonus points, and prompting appropriate student behavior during baseline and treatment phases for Kelly.

Figure 4. Levels of managing disruptive student behavior, delivery of bonus points, and prompting appropriate student behavior during baseline and treatment phases for Nicole.

Fran

During baseline, Fran displayed no occurrences of managing disruptive student behavior, delivered no bonus points, and prompted appropriate student behavior in only one session (Session 3). The initial training session produced little improvement in the delivery of bonus points and highly variable improvements in managing disruptive behavior and prompting appropriate student behavior. When prompting plus self-monitoring and accuracy feedback were sequentially introduced, each response showed clear increases that were maintained when the tactile prompts were discontinued and only self-monitoring with accuracy feedback remained in effect. Fran's performance of the three target behaviors was somewhat more variable during the maintenance phase, but responding remained higher than baseline levels for all three responses. However, when session length was increased to 60 min during maintenance, the behaviors decreased. Prompting appropriate behavior increased after this initial drop, although only three sessions of data were collected.

Kelly

During baseline, Kelly displayed no occurrences of managing disruptive student behavior, delivered no bonus points, and showed low but variable levels of prompting appropriate student behavior. Following the initial training session, Kelly showed no improvement in the delivery of bonus points and small, variable increases in the other two target responses. As with Fran, the introduction of tactile prompts plus self-monitoring with accuracy feedback produced rapid and clear increases in all three target responses. When the tactile prompts were removed, responding was maintained at 100% for managing disruptive behavior and prompting appropriate student behavior but decreased slightly for the delivery of bonus points. Responding was more variable when session length was extended in the maintenance phase.

Nicole

During baseline, Nicole displayed no occurrences of any of the three target responses. Following the initial training session, managing disruptive behavior and the delivery of bonus points remained at zero, whereas prompting appropriate behavior increased somewhat but was highly variable. As with the other participants, the introduction of tactile prompts plus self-monitoring produced rapid and clear increases in all three target responses. When the tactile prompts were removed, responding was maintained at 100% for managing disruptive behavior, decreased notably for the delivery of bonus points, and decreased slightly for prompting appropriate student behavior. The maintenance phase yielded very few opportunities for Nicole to respond, so these data should be interpreted cautiously. Bonus-point delivery decreased during this phase, and the single data point for prompting appropriate behavior suggests that this response remained high when the opportunity arose.

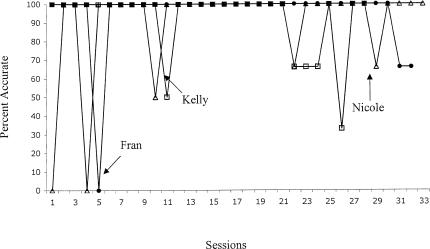

Accuracy Feedback

Figure 5 shows the percentage of correspondence between the data obtained by observers and those reported by participants (i.e., self-monitoring). For example, if a participant performed the target behaviors 25% of the time in a given session and recorded this correctly on the form, the accuracy data point would be plotted at 100% for that session. As can be seen, the participants were relatively accurate at recording their own behavior during the intervention phases. The average level of accuracy was 95% for Fran, 92% for Kelly, and 90% for Nicole.
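The correspondence measure can be sketched as follows, under stated assumptions. The text does not specify the check-box values on the self-monitoring form or how partial matches were scored, so this Python example assumes hypothetical boxes at 0, 25, 50, 75, and 100%, snaps each observer-calculated percentage to the nearest box, and scores each rated behavior as a match or mismatch. Bonus-point delivery, which was self-rated against a goal rather than as a percentage, is not covered by this sketch.

```python
from statistics import mean
from typing import Dict, Iterable, Optional

BOX_VALUES = (0, 25, 50, 75, 100)  # hypothetical check-box values on the form


def nearest_box(observed_pct: float) -> int:
    """Snap an observer-calculated percentage to the closest box value."""
    return min(BOX_VALUES, key=lambda v: abs(v - observed_pct))


def session_accuracy(self_report: Dict[str, int],
                     observed: Dict[str, Optional[float]]) -> Optional[float]:
    """Percentage of rated behaviors whose self-report matches the observer's value.

    Behaviors with no observed opportunities (None or missing) are not scored.
    """
    rated = [b for b in self_report if observed.get(b) is not None]
    if not rated:
        return None
    matches = sum(self_report[b] == nearest_box(observed[b]) for b in rated)
    return matches / len(rated) * 100.0


def mean_accuracy(sessions: Iterable[Optional[float]]) -> float:
    """Participant's average accuracy across sessions that could be scored."""
    return mean(a for a in sessions if a is not None)
```

As in the example in the text, a participant who responded correctly on 25% of opportunities and checked the 25% box would receive 100% accuracy for that behavior.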

Figure 5. Percentage of corresponding self-monitoring responses between observers and each participant. Closed circles represent Fran's percentage of accurate responses, open squares represent accuracy for Kelly, and open triangles depict accuracy for Nicole. Session 1 was the first session in which the intervention package was implemented.

Social Validity

All 3 participants reported that the study and its procedures were useful, and 1 rated them as extremely useful. This respondent also wrote that her participation in the study saved her job. All 3 participants rated every target behavior (managing disruptions, prompting appropriate student behavior, and bonus-point delivery) as important or extremely important. In addition, all stated that they would be willing to participate in the same or a similar study in the future.

Discussion

The use of a tactile prompt and self-monitoring with accuracy feedback improved token-economy implementation for all participants in this study. Managing disruptions, prompting appropriate student behavior, and bonus-point delivery all increased from near-zero levels to high levels beginning in the first session in which the intervention package was implemented. Because the first data point with the tactile prompt was high for all behaviors and participants (before participants had contacted the self-monitoring form), the antecedent prompting strategy, rather than the consequent self-monitoring procedure, was likely responsible for the initial improvement. This finding warrants evaluating the vibrating pager as an isolated intervention in future studies and suggests its potential as a tool to improve staff behavior.

During the training phase, participants were made aware of the student behaviors that could occasion target responses. However, sustained and clinically significant increases in the three target responses did not occur following the provision of this information. Large and consistent improvements occurred only after the intervention package was implemented.

These results suggest that the tactile prompts signaled the participants to attend to the students' behavior and to emit the appropriate target response. The fact that the participants emitted the correct target response (e.g., managed disruptive behavior following disruptive behavior) suggests that their responding was under the joint stimulus control of the tactile prompt and the students' behavior. If the participants were responding solely to the tactile prompt, many of their responses would have been errors (e.g., giving bonus points following disruptive behavior). The fact that high levels of the target behaviors were maintained after the tactile prompt was removed suggests that the participants learned to respond correctly solely to the students' behavior, which may have been facilitated by the self-monitoring component over time. Alternatively, it is possible that the self-monitoring component was responsible for both the improvement in and the maintenance of correct responding. However, this alternative explanation seems less likely because the improvements in the target responses occurred immediately, and occurred even in sessions in which the accuracy of self-monitoring was at zero (e.g., see the first and fourth sessions for Nicole).

Although correct responding remained high when the tactile prompt was removed, the target responses decreased slightly when session length was increased in the maintenance phase. Several factors could account for this decrease. First, during maintenance the participants no longer wore the pager. Wearing the pager may itself have prompted responding or functioned as a discriminative stimulus for appropriate responding. Second, session length may have been increased too rapidly. Third, during this phase participants were not told when a session began, so responding in earlier phases may have been elevated in part by reactivity. Finally, the maintenance phase may have been too brief to capture a possible return to the previously higher rates.

For Nicole and possibly Kelly, self-monitoring and accuracy feedback were not sufficient to maintain the change in bonus-point delivery. Future research could address this limitation by (a) reintroducing the tactile prompts and then fading them gradually or (b) adding a consequence for correct responding (other than self-monitoring).

The intervention package clearly improved the participants' implementation of the token economy, but more research is needed to determine the long-term utility of this treatment. In a replication, session length in the maintenance phase should be increased more slowly and eventually extended to entire school days and school weeks. Moreover, because the tactile prompts and self-monitoring were introduced together, their independent contributions to the effectiveness of the treatment package remain uncertain. Future research should evaluate the independent, and possibly interactive, effects of tactile prompts and self-monitoring, as well as the extent to which improvements in staff implementation of behavior programs (such as token economies) produce improvements in student behavior.

Acknowledgments

This study is based in part on a master's thesis submitted by the first author, under the supervision of the second author, in partial fulfillment of the requirements for the MS degree at Florida State University. Portions of this work were presented as a poster at the 31st annual meeting of the Association for Behavior Analysis, Chicago, May 2005, and in a paper session at the 25th annual meeting of the Florida Association of Behavior Analysis, Sarasota, Florida, September 2005. We thank Christopher Schatschneider and Ashby Plant for their recommendations on the conceptualization and summary of the study.

References

- Bailey J.S, Burch M.R. Research methods in applied behavior analysis. Thousand Oaks, CA: Sage; 2002.

- Burgio L.D, Engel B.T, Hawkins A, McCormick K, Schieve A, Jones L.T. A staff management system for maintaining improvements in continence with elderly nursing home residents. Journal of Applied Behavior Analysis. 1990;23:111–118. doi: 10.1901/jaba.1990.23-111.

- Gable R.A, Quinn M.M, Rutherford R.B, Jr, Howell K.W, Hoffman C.C. Addressing student problem behavior: Part 2. Conducting a functional behavioral assessment (3rd ed.). Washington, DC: Center for Effective Collaboration and Practice; 1999.

- Harris K.R. Self-monitoring of attentional behavior versus self-monitoring of productivity: Effects on on-task behavior and academic response rate among learning disabled children. Journal of Applied Behavior Analysis. 1986;19:417–423. doi: 10.1901/jaba.1986.19-417.

- Richman G.S, Riordan M.R, Reiss M.L, Pyles D.A.M, Bailey J.S. The effects of self-monitoring and supervisor feedback on staff performance in a residential setting. Journal of Applied Behavior Analysis. 1988;21:401–409. doi: 10.1901/jaba.1988.21-401.

- Shabani D.B, Katz R.C, Wilder D.A, Beauchamp K, Taylor C.R, Fisher K.J. Increasing social initiations in children with autism: Effects of a tactile prompt. Journal of Applied Behavior Analysis. 2002;35:79–83. doi: 10.1901/jaba.2002.35-79.

- Taylor B.A, Hughes C.E, Richard E, Hoch H, Coello A.R. Teaching teenagers with autism to seek assistance when lost. Journal of Applied Behavior Analysis. 2004;37:79–82. doi: 10.1901/jaba.2004.37-79.

- Taylor B.A, Levin L. Teaching a student with autism to make verbal initiations: Effects of a tactile prompt. Journal of Applied Behavior Analysis. 1998;31:651–654. doi: 10.1901/jaba.1998.31-651.