Abstract

In this paper, we present a new approach to clinical workplace computerization that departs from the window-based user interface paradigm. NOSTOS is an experimental computer-augmented work environment designed to support data capture and teamwork in an emergency room. NOSTOS combines multiple technologies, such as digital pens, walk-up displays, headsets, a smart desk, and sensors, to enhance an existing paper-based practice with computer power. The physical interfaces allow clinicians to retain mobile, paper-based collaborative routines and still benefit from computer technology. The requirements for the system were elicited from situated workplace studies. We discuss the advantages and disadvantages of augmenting a paper-based clinical work environment.

INTRODUCTION

Computer technologies promise benefits by supporting the management, execution, and follow-up of clinical care. However, taking full advantage of computers in this context requires effective user interfaces that support clinicians' routines [1].

The traditional desktop workstation design and the Graphical User Interfaces (GUIs) available today have several shortcomings. Many current hospital information systems impose unnecessary and costly organizational changes and lead to inflexible clinical workflows. Meanwhile, mobile data entry is an important and still largely unsolved problem in computer-based healthcare information systems [2]. These issues, together with the steep learning curves often associated with such systems, are a clear obstacle to realizing returns on investments in computer systems.

Researchers have discussed the importance of physical information objects, such as paper forms, folders, and sticky notes, in the workplace [2,3,4]. Although they acknowledge that paper technologies have many disadvantages compared to computers, they also point out that paper has subtle but powerful supporting features that are difficult to capture in a GUI. For example, paper forms offer unmatched display resolution as well as mobility, which makes them excellent tools for data capture. Moreover, paper documents encourage collaboration because they are easy to share and hand over. Also, the spatial arrangement of documents carries meaning specific to the local workplace and provides both an overview of information and a place to deposit it, which facilitates memory recall and the tracking of work processes. Nonetheless, current approaches in Medical Informatics have aimed at replacing physical information objects with their digital counterparts (e.g., the paperless clinic efforts).

We suggest an alternative approach to clinical workplace computerization. Our objective is not to replace, but instead to embrace, the established paper-based practices and enhance them with computer power. The NOSTOS environment offers a physical interface, consisting of digital pens, special paper forms, and a digital desk, to a Computer-Based Patient Record (CPR) system [5]. This approach combines the benefits of paper for mobile data capture and teamwork with the advantages of CPR technology for storing and accessing information. Furthermore, our approach imposes minimal changes on established workflows and local routines. The impetus for the design came from studies carried out in a healthcare setting, in which we observed how clinicians used paper-based records and other pervasive workplace objects to improve cognitive performance and promote collaboration [6]. The theoretical basis for the design was distributed cognition, a view that highlights the relationship among cognition, activity, and the supporting physical tools [7].

The aim of this paper is to provide a design rationale for medical ubiquitous computing environments with a specific focus on the digital paper interface approach.

UBIQUITOUS COMPUTING

Ubiquitous computing [8] is an approach that attempts to make computers available through the physical environment. Inspired by sociologists' work on how people interact with ordinary physical tools, researchers have endeavored to develop systems that blend into the work environment and offer more natural ways of using computers than the windows and buttons of GUIs. Tablet computing and early medical pen-based computing [9] were clearly part of these developments. Of special interest in our report are the efforts to amplify ordinary physical tools and environments with functionality from computer technology [10,11]. Several experiments have been conducted to enhance everyday tools, for example augmented paper [12] and digital desks [13].

Paper can be augmented in several ways. For example, a digital pen has been developed with a built-in camera that reads a unique pattern printed on the paper in order to capture pen strokes [14]. This approach enables active applications such as paper-based email and improved sticky notes whose contents can be sent directly to a computer.
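To make pen-stroke capture more concrete, the following is a minimal sketch of how a stream of pen reports might be grouped into strokes. It assumes, hypothetically, that the pen delivers timestamped samples carrying a page address (decoded from the printed pattern), x/y coordinates, and a pen-down flag. The `PenSample` and `Stroke` types are illustrative inventions, not the Anoto data format or API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class PenSample:
    """One pen report (hypothetical format, not the Anoto SDK's)."""
    t_ms: int        # timestamp in milliseconds
    page_id: str     # page address decoded from the printed pattern
    x: float         # position on the page, units assumed
    y: float
    pen_down: bool   # True while the pen tip touches the paper


@dataclass
class Stroke:
    page_id: str
    points: List[Tuple[float, float]] = field(default_factory=list)


def segment_strokes(samples: List[PenSample]) -> List[Stroke]:
    """Group consecutive pen-down samples into strokes.

    A stroke ends when the pen is lifted or the page address changes,
    so every stroke belongs to exactly one sheet of paper.
    """
    strokes: List[Stroke] = []
    current: Optional[Stroke] = None
    for s in sorted(samples, key=lambda sample: sample.t_ms):
        if not s.pen_down:
            current = None                      # pen lifted: close the stroke
            continue
        if current is None or current.page_id != s.page_id:
            current = Stroke(s.page_id)         # start a new stroke
            strokes.append(current)
        current.points.append((s.x, s.y))
    return strokes


samples = [
    PenSample(0, "form-17", 10.0, 10.0, True),
    PenSample(10, "form-17", 11.0, 12.0, True),
    PenSample(20, "form-17", 11.0, 12.0, False),
    PenSample(30, "form-17", 40.0, 10.0, True),
]
strokes = segment_strokes(samples)
print(len(strokes))  # 2
```

Because strokes are keyed by page address, a recognizer can later interpret each stroke against the layout of the specific form it was written on.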

Radio Frequency Identification (RFID) technology can be used to connect paper forms and folders to the electronic world [15]. RFID tags are small electronic chips that carry unique identifiers, which lets software address physical objects much as IP addresses identify networked computers. The tags can be read by an antenna at a short distance (usually about 10 cm), which enables tracking of tagged objects in physical space.

With these techniques, ordinary paper documents and folders can be activated and connected to computers, and can then be regarded as members of a class of physical interfaces called Tangible User Interfaces.

Tangible User Interfaces

Tangible User Interfaces (TUIs) [16] facilitate interaction between physical objects and computers. Traditional GUIs consist of virtual buttons and windows that are shown on a screen and manipulated with a mouse and keyboard. By comparison, TUIs convey the results of manipulating physical objects directly to computers. Fitzmaurice has described the properties of this class of physical interfaces as follows [17]:

“[TUIs] act as specialized input devices [to computers] which can serve as dedicated physical interface widgets, affording physical manipulation and spatial arrangements… By using physical objects, we not only allow users to employ a larger expressive range of gestures and grasping behaviors but also to leverage off a user’s innate spatial reasoning skills and everyday knowledge of object manipulations.”

Ullmer, Ishii, and Glas developed mediaBlocks, a TUI for handling online media [18], in which small wooden blocks serve as physical icons for the containment, transport, and manipulation of media. Closely related to our work is the TUI of Jacob and colleagues [19], in which users control computers by moving bricks on a grid surface. Different functions are assigned to different locations on the grid, and the position of a physical object on the grid determines the action taken by the computer.
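The grid principle can be illustrated with a small sketch in which cells of a surface are bound to functions, and placing a detected object on a cell triggers the function bound to that cell. The cell size, grid layout, and action names are hypothetical and are not taken from Jacob and colleagues' system.

```python
from typing import Callable, Dict, Tuple

Cell = Tuple[int, int]  # (column, row) on the grid


class GridSurface:
    """A surface divided into square cells, each optionally bound to an action."""

    def __init__(self, cell_size_cm: float) -> None:
        self.cell_size_cm = cell_size_cm                     # assumed cell size
        self.bindings: Dict[Cell, Callable[[str], str]] = {}

    def cell_at(self, x_cm: float, y_cm: float) -> Cell:
        # Map a physical position to the cell that contains it.
        return (int(x_cm // self.cell_size_cm), int(y_cm // self.cell_size_cm))

    def bind(self, cell: Cell, action: Callable[[str], str]) -> None:
        self.bindings[cell] = action

    def place(self, object_id: str, x_cm: float, y_cm: float) -> str:
        """Called when an object is detected at (x, y); runs the bound action, if any."""
        action = self.bindings.get(self.cell_at(x_cm, y_cm))
        return action(object_id) if action else f"{object_id}: no action"


# Hypothetical bindings: the location, not the object, selects the function.
grid = GridSurface(cell_size_cm=10.0)
grid.bind((0, 0), lambda obj: f"open {obj}")
grid.bind((1, 0), lambda obj: f"print {obj}")
print(grid.place("brick-A", 14.0, 3.0))  # print brick-A
```

The same object triggers different behavior depending on where it is put down, which is the property the NOSTOS desk exploits when folder positions encode clinical status.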

We have worked on supplementing paper documents, folders, and desks with digital pens and RFID tags. The objective is to allow paper to function both as an input device and as a physical icon that can be tracked on a desk. This work is described below.

REQUIREMENTS

To determine the requirements for the system, we studied how physicians, nurses, and nurses' aides used material objects in an emergency room (ER) at a 250-bed hospital in Sweden [6]. We used participant observation and followed these professionals during day and night shifts. Data were collected over a period totaling one person-month.

Paper-based collaborative practice

Administrative work and information processing in the ER were paper-based. For example, a folder was created for each patient that contained forms used to accumulate various types of information, such as records of administered drugs. To coordinate collaborative activities and maintain an overview of the tasks to be performed, clinicians spatially arranged the patient folders in two vertical rows on a desk. The specific position of a folder within the arrangement signified the clinical status of the patient, the priority of the case, and its progress through the different clinical activities. Furthermore, parts of the arrangement functioned as swapping stations, where physicians and nurses exchanged work tasks. For example, if a physician wanted a laboratory test, the request was attached to the folder, and the folder was then placed at a designated spot on the desk.

Such practices are common in healthcare organizations today, but it is difficult to capture physical collaborative activities in a traditional GUI. Accordingly, we wanted to determine whether it is possible to develop a system that maintains and supports the tangible dimension of the collaborative activities we had observed in the ER.

Requirements for computer augmentation

From a technical viewpoint, a bare-bones system for augmenting paper-based work should provide solutions to the following issues:

A method to electronically capture pen strokes written on paper forms,

An Optical Character Recognition (OCR) engine for interpreting pen strokes as text,

An approach to provide paper forms, folders, and sticky notes with unique identifiers (analogous to IP addresses),

A system for channeling feedback from the underlying OCR engine to users through devices in the physical environment. We considered this requirement particularly important because passive paper provides only limited feedback, and

A method to track which forms sticky notes are attached to.

THE NOSTOS ENVIRONMENT

NOSTOS is an experimental ubiquitous computing healthcare environment. Essentially, we replaced the traditional GUI of an existing CPR system [20] with a number of physical interfaces.

The idea was to develop a hybrid system that maintains dual representations (i.e., a digital and a physical representation) of each document, folder, and sticky note. The previously established routines were augmented by combining digital paper technology, walk-up displays, headsets, a smart desk, and sensor technology in a distributed software architecture that digitizes the ordinary paper forms, folders, and desks. Figure 1 shows NOSTOS.

Figure 1.

The NOSTOS ubiquitous computing healthcare environment with its walk–up display and interactive desk.

Digital Paper Interface

A fundamental feature of NOSTOS is the digital paper interface. Clinicians use a digital pen and special paper forms (i.e., the Anoto system) [14] to record medical data directly into the computer domain and to control system functions. Figure 2 shows part of an experimental paper form. The digital pen has a Bluetooth transceiver that wirelessly transmits the pen strokes to the computer domain for processing. In addition, every document, folder, and sticky note was integrated into the digital domain by attaching an RFID tag to it. We used 125 kHz EM Marin H4002 tags (about 3.5 cm in diameter), which can be read at a distance of about 15 cm with a small reader and a 10 cm antenna. Finally, NOSTOS provides a basic OCR engine that translates pen strokes into text. The OCR implementation was based on SATIN, a Java-based API and toolkit that provides functionality for pen-based applications [21].

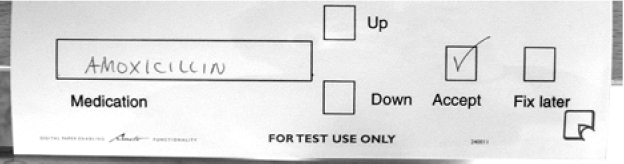

Figure 2.

Part of an experimental paper-based interface with special paper widgets. Users interact with NOSTOS by writing and checking boxes on the paper forms with a digital pen.
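The dual representation created by RFID tagging can be pictured as a registry that maps each tag identifier to the digital counterpart of the tagged paper object. The following is a minimal in-memory sketch under that assumption. The class names, the made-up tag IDs, and the way documents are filed into folders are illustrative and do not describe the actual NOSTOS data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DigitalObject:
    tag_id: str                      # identifier read from the object's RFID tag
    kind: str                        # "folder", "document" or "note"
    patient_id: Optional[str] = None
    contents: List[str] = field(default_factory=list)  # tag IDs of filed items


class TagRegistry:
    """Hypothetical registry mapping RFID tag IDs to digital twins of paper objects."""

    def __init__(self) -> None:
        self._objects: Dict[str, DigitalObject] = {}

    def register(self, obj: DigitalObject) -> None:
        if obj.tag_id in self._objects:
            raise ValueError(f"tag {obj.tag_id} is already registered")
        self._objects[obj.tag_id] = obj

    def lookup(self, tag_id: str) -> Optional[DigitalObject]:
        # Called whenever a reader (desk, walk-up display) reports a tag.
        return self._objects.get(tag_id)

    def file_into(self, item_tag: str, folder_tag: str) -> None:
        """Record that a document or note has been placed in a folder."""
        folder = self._objects[folder_tag]
        if folder.kind != "folder":
            raise ValueError(f"{folder_tag} is not a folder")
        if item_tag not in self._objects:
            raise KeyError(item_tag)
        folder.contents.append(item_tag)


reg = TagRegistry()
reg.register(DigitalObject("0A1B2C3D4E", "folder", patient_id="P-001"))
reg.register(DigitalObject("0A1B2C3D4F", "document", patient_id="P-001"))
reg.file_into("0A1B2C3D4F", "0A1B2C3D4E")
print(reg.lookup("0A1B2C3D4E").contents)  # ['0A1B2C3D4F']
```

Rejecting duplicate registrations keeps the one-to-one correspondence between a physical object and its digital representation.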

To interact with the system, users employ the digital pen to write and check boxes on the paper forms. The user must verify that the input has been correctly interpreted by the system to keep the electronic and physical documents consistent. NOSTOS provides feedback for this purpose in two ways: through walk-up displays in the physical environment and through radio-enabled headsets. For example, a user can walk up to a nearby display and verify that a medication written on the form has been correctly converted to text by NOSTOS. An RFID reader at the display identifies the paper form, and the display shows its digital representation. To accept the text input, the user simply checks the Accept box next to the medication field of the paper form with the digital pen. Similar interaction techniques are used to make corrections and to scroll through a list of alternatives. Figure 3 shows the interaction technique.

Figure 3.

Users interact with NOSTOS by means of digital pens and special paper applications. Walk-up displays and mobile headsets provide feedback from the system.

In the auditory approach, a Bluetooth-enabled mobile headset provides the feedback. The clinician writes the name of a medication on the form, and the system conveys its interpretation of the text to the earphone as an audio message. The user confirms by checking the Accept box, and the system confirms the selection once more through the earphone.
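The verify-and-accept loop, whether presented on a walk-up display or read aloud through the headset, can be modeled as a small state machine per form field. Recognition yields a ranked list of candidates, the user scrolls through them and accepts one with the pen, and only accepted values are committed. This is a minimal sketch. The box names (`next`, `accept`) and state names are hypothetical, since the text describes an Accept box and scrolling through alternatives but not the exact widgets.

```python
from enum import Enum, auto
from typing import List, Optional


class FieldState(Enum):
    PENDING = auto()    # text recognized but not yet confirmed by the user
    ACCEPTED = auto()   # user ticked Accept; value may be written to the record


class VerifiedField:
    """One handwritten field whose interpretation must be confirmed (hypothetical)."""

    def __init__(self, name: str, candidates: List[str]) -> None:
        if not candidates:
            raise ValueError("recognizer returned no candidates")
        self.name = name
        self.candidates = candidates   # best guess first
        self.index = 0
        self.state = FieldState.PENDING

    @property
    def shown(self) -> str:
        """Text currently presented on the display or read aloud."""
        return self.candidates[self.index]

    def on_box_checked(self, box: str) -> str:
        """Handle a pen tick in one of the field's paper boxes (assumed names)."""
        if self.state is FieldState.ACCEPTED:
            return f"{self.name} already accepted: {self.shown}"
        if box == "next":
            # Scroll to the next alternative, wrapping around at the end.
            self.index = (self.index + 1) % len(self.candidates)
            return f"{self.name}: {self.shown}?"
        if box == "accept":
            self.state = FieldState.ACCEPTED
            return f"{self.name} accepted: {self.shown}"
        raise ValueError(f"unknown box {box!r}")

    def committed_value(self) -> Optional[str]:
        # Only accepted input is committed, keeping paper and record consistent.
        return self.shown if self.state is FieldState.ACCEPTED else None


med = VerifiedField("medication", ["paracetamol", "penicillin"])
print(med.on_box_checked("next"))    # medication: penicillin?
print(med.on_box_checked("accept"))  # medication accepted: penicillin
```

The returned strings stand in for what would be shown on screen or synthesized as speech. Refusing to commit pending values is what prevents the paper form and the record from silently diverging.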

Sticky notes were often used in the ER. To support and augment this practice, we developed a symbolic language that enables the system to understand and locate notes. For example, to link a sticky note to a specific document, users write a special symbol ('attach') on the sticky note and on the document in succession. NOSTOS recognizes these actions and attaches the virtual representation of the sticky note to the virtual document, keeping the physical and virtual worlds consistent.

The SATIN toolkit was used to implement the symbolic language.
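One way to resolve the two-step 'attach' gesture is to remember the most recent attach symbol and pair it with the next one written on a sheet of the other kind, provided the two occur within a short time window. This is a minimal sketch under assumptions not stated in the text: the 30-second window, acceptance of either order, and all names are hypothetical. Symbol recognition itself (done with SATIN in NOSTOS) is outside the sketch.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class AttachGesture:
    t_s: float       # time the 'attach' symbol was recognized, in seconds
    page_id: str     # sheet the symbol was written on
    page_kind: str   # "note" or "document"


class AttachLinker:
    """Pairs an 'attach' symbol on a note with one on a document.

    The two symbols may be written in either order, but must be consecutive
    and no more than window_s seconds apart (an assumed rule).
    """

    def __init__(self, window_s: float = 30.0) -> None:
        self.window_s = window_s
        self._pending: Optional[AttachGesture] = None
        self.links: Dict[str, List[str]] = {}   # document id -> attached note ids

    def on_gesture(self, g: AttachGesture) -> Optional[Tuple[str, str]]:
        p = self._pending
        if (p is not None and p.page_kind != g.page_kind
                and g.t_s - p.t_s <= self.window_s):
            note, doc = (p, g) if p.page_kind == "note" else (g, p)
            self._pending = None
            self.links.setdefault(doc.page_id, []).append(note.page_id)
            return note.page_id, doc.page_id
        self._pending = g   # start (or restart) a pairing attempt
        return None


linker = AttachLinker()
linker.on_gesture(AttachGesture(0.0, "note-5", "note"))
print(linker.on_gesture(AttachGesture(4.0, "doc-17", "document")))  # ('note-5', 'doc-17')
```

The time window is a simple guard against linking a note to an unrelated document written on much later. A deployed system would probably need a way to cancel a pending attach as well.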

The interactive desk

We developed an interactive desk to support awareness and to compensate for imperfections in the physical world. The rationale for the design was based on our findings from the ER, where we observed how clinicians spatially arranged folders on a desk to display the shared work situation. However, this representational strategy became unreliable when folders were missing from the desk.

The desk tracks the positions of the patient folders. A projector mounted above the desk superimposes computer-generated imagery on the real desktop, so that virtual folders can be shown in place of missing ones. In this way, the desk maintains a reliable representation and display of the patients under treatment at the clinic. In addition, because the folders act as physical icons, the desk informs NOSTOS of what is going on at the clinic, such as the number of patients and their triage status.

The desk was built from a standard 125 kHz RFID reader (communicating over an RS-232 serial interface) and a moving antenna placed under the desk to track the tags attached to the folders. Figure 4 shows part of the interactive desk.

Figure 4.

The interactive desk of NOSTOS tracks the physical folders on it and can display virtual folders and sticky notes.
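The desk's two tasks, locating folders and standing in for missing ones, can be sketched as follows. The moving antenna visits a sequence of positions, each tag read is recorded with the position at which it was first read, and folders expected on the desk (for example, at their last known positions) but not found are returned so that virtual stand-ins can be projected. This is a minimal sketch under those assumptions. The `read_tags_at` callback is a hypothetical stand-in for the serial RFID reader, whose device-specific protocol is not shown, and the positions and IDs are made up.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple

Position = Tuple[int, int]   # antenna position under the desk (column, row)


def scan_desk(positions: Iterable[Position],
              read_tags_at: Callable[[Position], Set[str]]) -> Dict[str, Position]:
    """Move the antenna over each position and record where each tag was read.

    If a tag is read at several neighboring positions, the first one wins;
    a real system might instead pick the position with the strongest read.
    """
    located: Dict[str, Position] = {}
    for pos in positions:
        for tag in read_tags_at(pos):
            located.setdefault(tag, pos)
    return located


def virtual_folders(expected: Dict[str, Position],
                    located: Dict[str, Position]) -> List[Tuple[str, Position]]:
    """Folders that should be on the desk but were not read: project these."""
    return [(tag, pos) for tag, pos in sorted(expected.items()) if tag not in located]


# Simulated desk: folder F2 has been taken away by a clinician.
physical = {(0, 0): {"F1"}, (0, 1): set(), (1, 0): {"F3"}}
located = scan_desk(physical.keys(), lambda p: physical.get(p, set()))
expected = {"F1": (0, 0), "F2": (0, 1), "F3": (1, 0)}
print(virtual_folders(expected, located))  # [('F2', (0, 1))]
```

Keeping the scan and the comparison separate means the same comparison could be driven by a different sensing technique without changing the projection logic.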

Software architecture

The physical interfaces were developed to communicate with the CPR system. They use a distributed peer-to-peer architecture consisting of a set of thin clients and a central lookup service. The lookup service is responsible for bridging client requests from the physical interfaces to the appropriate CPR interface services in the network, such as text input. All communication is based on Java's remote procedure call (RPC) mechanism.

The thin clients are responsible for Bluetooth communication with the digital pens, reading RFID tags, and translating and interpreting pen strokes. In addition, a client is responsible for providing feedback through either the walk-up display or the headset.
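The lookup service's role can be illustrated with an in-process sketch. Services register under a name, and thin clients resolve a name to a service before calling it, so that clients do not need to know where a service runs. In NOSTOS the calls went over Java's remote procedure call mechanism. Here plain Python callables stand in for remote stubs, and the service name `text-input` and the stand-in CPR function are hypothetical.

```python
from typing import Callable, Dict, Tuple


class LookupService:
    """Central directory that bridges client requests to registered services."""

    def __init__(self) -> None:
        self._services: Dict[str, Callable[..., object]] = {}

    def register(self, name: str, service: Callable[..., object]) -> None:
        self._services[name] = service

    def resolve(self, name: str) -> Callable[..., object]:
        try:
            return self._services[name]
        except KeyError:
            raise LookupError(f"no service registered as {name!r}") from None


class PenClient:
    """A thin client that forwards recognized text to the 'text-input' service."""

    def __init__(self, lookup: LookupService) -> None:
        self.lookup = lookup

    def submit_text(self, form_id: str, field_name: str, text: str) -> object:
        text_input = self.lookup.resolve("text-input")   # hypothetical name
        return text_input(form_id, field_name, text)


record: Dict[Tuple[str, str], str] = {}


def cpr_text_input(form_id: str, field_name: str, text: str) -> str:
    # Stand-in for a CPR interface service; a real one would be a remote stub.
    record[(form_id, field_name)] = text
    return "stored"


directory = LookupService()
directory.register("text-input", cpr_text_input)
print(PenClient(directory).submit_text("form-17", "medication", "paracetamol"))  # stored
```

Because clients hold only the directory, a service can be replaced or moved by re-registering it, without touching the pen, reader, or feedback clients.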

DISCUSSION

The NOSTOS environment employs digital pens and sensor technologies to digitize established and functioning paper-based clinical administration practices. In essence, the approach allows clinicians to continue using familiar paper objects for data capture and collaboration. Furthermore, NOSTOS does not impose costly organizational changes, yet it still provides the advantages of CPR technology.

We found the augmentation approach robust and appealing in that it does not depend on electrical power to remain functional. In the case of a power outage or computer failure, only the digital augmentation is lost, not the entire system for managing patients.

Initially, we believed that we could develop a system that would leave the routines of the clinician intact. However, the design imposed some changes, the most notable of which is that the user has to verify text input to confirm that it has been correctly interpreted by NOSTOS. Manual verification is needed to ensure that a physical document and its digital counterpart contain the same information. This step is necessary because paper is a passive medium that provides limited feedback from the underlying systems, and because the OCR engine of the prototype was insufficient. Our solution to this problem was to provide feedback through walk-up displays and headsets. However, it might be more appropriate to use wearable displays that can give instant feedback, and to develop a better OCR engine. We believe that the robustness of the OCR can be significantly improved by integrating a controlled medical vocabulary such as the Unified Medical Language System (UMLS).
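The suggestion to constrain recognition with a controlled vocabulary can be sketched by snapping the recognizer's raw output to the closest terms in a term list. This also produces a ranked list of alternatives for the user to choose from. The sketch uses Python's `difflib.get_close_matches` as a simple string-similarity stand-in, not a method proposed in the text. The short medication list is hand-made for illustration and is not drawn from UMLS. A real system would load terms from a terminology source.

```python
import difflib
from typing import List, Sequence

# Illustrative, hand-made term list; not taken from any controlled vocabulary.
MEDICATIONS = ["paracetamol", "penicillin", "morphine", "metoprolol", "furosemide"]


def suggest_terms(raw_text: str, vocabulary: Sequence[str],
                  n: int = 3, cutoff: float = 0.6) -> List[str]:
    """Return up to n vocabulary terms similar to the recognized text, best first.

    An empty list means nothing in the vocabulary is close enough, so the
    field should be flagged for manual correction.
    """
    return difflib.get_close_matches(raw_text.strip().lower(), list(vocabulary),
                                     n=n, cutoff=cutoff)


print(suggest_terms("Paracetamoll", MEDICATIONS)[0])  # paracetamol
print(suggest_terms("xyz", MEDICATIONS))              # []
```

The `cutoff` parameter trades recall for precision. A lower value offers more alternatives, while a higher value flags more fields for correction.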

Regarding interaction, we found that auditory feedback through the headsets in our prototype was cumbersome because of long response times. The walk-up display is preferable in this context, although it is obviously less mobile than the auditory technique.

Active and communicating digital paper solutions may prove to be supportive in healthcare. In our opinion, such solutions require only basic functionality to offer real benefits in medical data capture. For example, they could be highly useful if they provide feedback and offer pop-up menus that support users' memory. In addition, medical ubiquitous computing environments could benefit from augmented folders that are aware of the documents they contain, the sticky notes attached to them, and their location in physical space. Our research group is currently investigating the possibility of creating such smart binders and folders.

CONCLUSION

We developed an experimental ubiquitous computing environment for data capture and collaboration based on a digital paper approach. In our view, the limited feedback of the paper interface can be tolerated in light of the rich interactional and collaborative advantages offered by paper materials. We developed two approaches that channel feedback through walk-up displays and mobile headsets, respectively. We conclude that the digital paper interface approach is promising, although more work is needed to improve feedback structures and achieve more robust OCR.

REFERENCES

1. Patel VL, Kushniruk AW. Interface design for health care environments: The role of cognitive science. In: Proc of the 1998 AMIA Fall Symposium; 1998 November 7–11; Orlando, FL.

2. Heath C, Luff P. Technology in Action. Cambridge (UK): Cambridge University Press; 2000.

3. Norman D. Cognitive Artifacts. In: Carroll JM, ed. Designing Interaction: Psychology at the Human-Computer Interface. Cambridge (UK): Cambridge University Press; 1991. p. 17–38.

4. Sellen A, Harper R. The Myth of the Paperless Office. Cambridge (MA): MIT Press; 2001.

5. Dick RS, Steen EB. The Computer-Based Patient Record: An Essential Technology for Health Care. Washington (DC): National Academy Press; 1991.

6. Bång M, Timpka T. Cognitive Tools in Medical Teamwork: The Spatial Arrangement of Patient Records. Methods Inf Med. In press.

7. Hutchins E. Cognition in the Wild. Cambridge (MA): MIT Press; 1995.

8. Weiser M. The Computer for the 21st Century. Sci Am. 1991;265(3):94–104.

9. Poon AD, Fagan LM, Shortliffe EH. The PEN-Ivory Project: Exploring User-interface Design for the Selection of Items from Large Controlled Vocabularies of Medicine. JAMIA. 1996;3(2):168–183. doi: 10.1136/jamia.1996.96236285.

10. Wellner P, Mackay W, Gold R. Computer Augmented Environments: Back to the Real World. Commun ACM. 1993;36(7):24–26.

11. McGee D, Cohen P, Wu L. Something from nothing: Augmenting a paper-based work practice with multimodal interaction. In: Proc of the Designing Augmented Reality Environments Conference (DARE'00); 2000 April 12–14; Helsingor, Denmark. Copenhagen: ACM Press; 2000. p. 71–80.

12. Mackay WE, Fayard A-L. Designing Interactive Paper: Lessons from Three Augmented Reality Projects. In: Proc of the International Workshop on Augmented Reality (IWAR'98). Natick (MA): A K Peters Ltd; 1999.

13. Wellner P. Interacting with paper on the DigitalDesk. Commun ACM. 1993;36(7):86–96.

14. Anoto AB. Available at: www.anoto.com. Accessed June 26, 2003.

15. Want R, Fishkin K, Gujar A, Harrison B. Bridging physical and virtual worlds with electronic tags. In: Proc of Human Factors in Computing Systems (CHI'99); Pittsburgh (PA). ACM Press; 1999.

16. Ullmer B, Ishii H. Emerging Frameworks for Tangible User Interfaces. IBM Systems Journal. 2000;39(3–4):915–931.

17. Fitzmaurice G. Graspable User Interfaces [Dissertation]. University of Toronto; 1996.

18. Ullmer B, Ishii H, Glas D. mediaBlocks: Physical Containers, Transports, and Controls for Online Media. In: Proc of Computer Graphics (SIGGRAPH'98); 1998 July 19–24. ACM Press; 1998. p. 379–386.

19. Jacob R, Ishii H, Pangaro G, Patten J. A Tangible Interface for Organizing Information Using a Grid. In: Proc of Human Factors in Computing Systems (CHI 2002). ACM Press; 2002. p. 339–346.

20. Bång M, Hagdahl A, Eriksson H, Timpka T. Groupware for Case Management and Inter-Organizational Collaboration: The Virtual Rehabilitation Team. In: Patel VL et al, eds. Proc of the 10th World Congress on Medical Informatics; 2001. Amsterdam: IOS Press; 2001.

21. Hong J, Landay J. SATIN: A Toolkit for Informal Ink-based Applications. CHI Letters. 2000;2(2):63–72.